1. Introduction

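Let $F: \mathbb{R}^n \to \mathbb{R}^n$ be locally Lipschitz continuous. Consider the nonlinear equation in the following simplest form:

$$F(x) = 0, \tag{1}$$

where $x \in \mathbb{R}^n$, $F(x) = (f_1(x), \dots, f_n(x))^T$ and $f_i: \mathbb{R}^n \to \mathbb{R}$, $i = 1, \dots, n$. Furthermore, we assume that there exists $x^* \in \mathbb{R}^n$ which is a solution to Equation (1) (i.e., $F(x^*) = 0$). It is worth recalling that Pang and Qi [1] presented significant motivations and a broad scope of applications for nonsmooth equations.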

A class of iterative exponential methods for solving one-dimensional nonlinear equations was proposed by Chen and Li [2]. One of the most interesting formulas, and at the same time the simplest one, is the method of the following form:

$$x_{k+1} = x_k \exp\left(-\frac{f(x_k)}{x_k f'(x_k)}\right). \tag{2}$$

This method reduces to the classic Newton method if we replace the expression $\exp\left(-f(x_k)/(x_k f'(x_k))\right)$ by its first-order Taylor expansion $e^t \approx 1 + t$. The method in Equation (2) has (at least) quadratic convergence if the function f is twice differentiable and $f'(x) \neq 0$ in a neighborhood of the solution. A substantial extension of the exponential method in Equation (2) to the nonsmooth case in $\mathbb{R}^n$ was presented in [3]. This locally and superlinearly convergent method can be written in a two-stage form (Equation (3)), where the matrix $V_k$ is arbitrarily taken from the B-differential $\partial_B F(x_k)$ of F at $x_k$.
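To make the relation between Equation (2) and Newton's method concrete, the following minimal sketch runs both iterations on the smooth test function $f(x) = x^2 - 2$ (our choice, not taken from [2]). The function names, the tolerance and the starting point are illustrative assumptions; the exponential update additionally requires $x_k \neq 0$.

```python
import math


def newton(f, df, x, tol=1e-12, max_iter=50):
    """Classic Newton iteration x_{k+1} = x_k - f(x_k) / f'(x_k)."""
    for k in range(max_iter):
        fx = f(x)
        if abs(fx) <= tol:
            return x, k
        x = x - fx / df(x)
    raise RuntimeError("Newton iteration did not converge")


def exponential_method(f, df, x, tol=1e-12, max_iter=50):
    """Exponential iteration x_{k+1} = x_k * exp(-f(x_k) / (x_k f'(x_k))).

    Replacing exp(t) by 1 + t turns this update into the Newton step above.
    Requires x_k != 0 in every iteration.
    """
    for k in range(max_iter):
        fx = f(x)
        if abs(fx) <= tol:
            return x, k
        x = x * math.exp(-fx / (x * df(x)))
    raise RuntimeError("exponential iteration did not converge")


f = lambda x: x * x - 2.0
df = lambda x: 2.0 * x

x_newton, k_newton = newton(f, df, 1.0)
x_exp, k_exp = exponential_method(f, df, 1.0)
print(x_newton, k_newton)
print(x_exp, k_exp)
```

Both iterations approach $\sqrt{2}$ within a handful of steps, which is consistent with the quadratic convergence stated above.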

On the other hand, one traditional approach for solving nonsmooth equations is the inexact generalized Newton method:

$$x_{k+1} = x_k + h_k, \quad \text{with} \quad \|F(x_k) + V_k h_k\| \le \theta_k \|F(x_k)\|, \tag{4}$$

where the matrix $V_k$ could be arbitrarily taken from some subdifferential of F at $x_k$ (not only from the generalized Jacobian or B-differential but also from, for example, the ∗-differential) and $0 \le \theta_k < 1$ for all $k$. Martínez and Qi [4] stated the local and superlinear convergence of the generalized Jacobian-based method in Equation (4) for solving equations with a semismooth function F. Other versions of such Newton-type methods for solving nonsmooth equations were also considered, among others, in [5,6,7]. The main concept of the inexact Newton-type method is to replace the standard Newton equation $V_k h_k = -F(x_k)$ with an inequality as in Equation (4), where the parameter $\theta_k$ is the forcing term. Then, the Newton step $h_k$ may be determined inexactly, for example, by any iterative method with acceptable inaccuracy, especially when the current approximation is far from the solution to Equation (1).
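The inexact step in Equation (4) can be sketched as follows. All concrete choices here are our own illustrative assumptions, not taken from [4]: the hypothetical piecewise linear system $F(x) = (3x_1 - |x_2| - 2,\ |x_1| + 3x_2 - 4)$ with solution $(1, 1)$, the constant forcing term $\theta_k = 0.1$, and Jacobi sweeps as the inner iterative solver. The inner loop stops as soon as $\|F(x_k) + V_k h_k\| \le \theta_k \|F(x_k)\|$.

```python
import math


def F(x):
    # Hypothetical piecewise linear test system with solution x* = (1, 1).
    return [3.0 * x[0] - abs(x[1]) - 2.0, abs(x[0]) + 3.0 * x[1] - 4.0]


def b_element(x):
    # An element of the B-differential; at a kink (x_i = 0) we take
    # the one-sided derivative +1 of |x_i|.
    s0 = 1.0 if x[0] >= 0.0 else -1.0
    s1 = 1.0 if x[1] >= 0.0 else -1.0
    return [[3.0, -s1], [s0, 3.0]]


def norm(v):
    return math.sqrt(sum(t * t for t in v))


def jacobi_inexact_step(V, Fx, theta, max_inner=200):
    """Jacobi sweeps for V h = -F(x), stopped when ||F(x) + V h|| <= theta ||F(x)||."""
    n = len(Fx)
    h = [0.0] * n
    for _ in range(max_inner):
        r = [Fx[i] + sum(V[i][j] * h[j] for j in range(n)) for i in range(n)]
        if norm(r) <= theta * norm(Fx):
            return h
        h = [(-Fx[i] - sum(V[i][j] * h[j] for j in range(n) if j != i)) / V[i][i]
             for i in range(n)]
    raise RuntimeError("inner solver did not reach the forcing tolerance")


def inexact_newton(x, theta=0.1, tol=1e-10, max_iter=100):
    for k in range(max_iter):
        Fx = F(x)
        if norm(Fx) <= tol:
            return x, k
        h = jacobi_inexact_step(b_element(x), Fx, theta)
        x = [xi + hi for xi, hi in zip(x, h)]  # x_{k+1} = x_k + h_k
    raise RuntimeError("outer iteration did not converge")


x_sol, iterations = inexact_newton([2.0, 3.0])
print(x_sol, iterations)
```

With a constant forcing term, the outer iteration converges only linearly; letting $\theta_k \to 0$ is what allows superlinear convergence.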

The main objective of this paper is to introduce an inexact version of the exponential method with a subdifferential for multidimensional nonsmooth problems. To this end, we present new iterative methods for solving nondifferentiable problems, which can be effective not only for nonsmooth equations but also for some important problems in nonsmooth optimization. The exponential method was introduced in [2] for finding simple roots of univariate nonlinear equations and was considered in [8] for solving unconstrained optimization problems. In both cases, the proposed formulas apply only to smooth, one-dimensional problems. We discuss an algorithm that is intended to solve both nonsmooth and multidimensional problems. Our method allows solving Equation (1) when the function is only locally Lipschitz continuous and need not be differentiable. A primary extension, shown in Equation (3), was introduced in [3], but this study focuses on the inexact approach. In this way, the step vector $h_k$ can be approximated in every iteration with some inaccuracy, which reduces the cost of determining it. However, all these methods are only locally convergent. The new approach also includes a backtracking procedure, and as a result, we obtain global convergence of the inexact exponential method.

This paper is organized as follows. We recall some important notions and properties in Section 2. In Section 3, we introduce an algorithm and prove its global and superlinear convergence. Section 4 presents the results of numerical tests. Finally, in Section 5, we give some conclusions.

2. Preliminaries

Throughout the paper, $\|\cdot\|$ denotes the Euclidean norm on $\mathbb{R}^n$, where we regard a vector from $\mathbb{R}^n$ as a column vector. However, none of the theoretical results depend on the choice of the norm.

We assume that the function F is locally Lipschitz continuous in the traditional sense. In other words, for every $x \in \mathbb{R}^n$, there exist a constant $L > 0$ and a neighborhood N of x such that

$$\|F(y) - F(z)\| \le L \|y - z\| \quad \text{for all } y, z \in N.$$

If F is differentiable at x, we denote the Jacobian matrix of F at x by $F'(x)$, and we denote the set of points where F is differentiable by $D_F$. The local Lipschitz continuity of F implies that it is differentiable almost everywhere, according to Rademacher's theorem [9]. Then, we have

$$\partial_B F(x) = \left\{ V \in \mathbb{R}^{n \times n} : V = \lim_{k \to \infty} F'(x_k),\ x_k \to x,\ x_k \in D_F \right\},$$

which is called the Bouligand subdifferential (B-differential for short) of F at x [10]. Furthermore, the generalized Jacobian of F at x (in the sense of Clarke [9]) is the convex hull of the Bouligand subdifferential:

$$\partial F(x) = \operatorname{conv} \partial_B F(x).$$

We say that the function F is BD-regular at x if F is locally Lipschitz continuous at x and all matrices V from $\partial_B F(x)$ are nonsingular. For BD-regular functions, Qi proved some important properties (see Lemma 2).
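The distinction between $\partial_B F(x)$ and $\partial F(x)$ matters for BD-regularity. The following sketch uses the hypothetical map $F(x) = (|x_1|, x_2)$ at $x = 0$. Approximating $\partial_B F(0)$ by Jacobians at nearby points of differentiability gives $\operatorname{diag}(-1, 1)$ and $\operatorname{diag}(1, 1)$, both nonsingular, so F is BD-regular at 0; their midpoint $\operatorname{diag}(0, 1)$ lies in $\partial F(0)$ and is singular. Sampling finitely many nearby points is only a heuristic stand-in for the limit in the definition, and all helper names are ours.

```python
import itertools


def jacobian(y):
    # Jacobian of the hypothetical map F(x) = (|x1|, x2), valid where x1 != 0.
    s = 1.0 if y[0] > 0.0 else -1.0
    return ((s, 0.0), (0.0, 1.0))


def det2(V):
    return V[0][0] * V[1][1] - V[0][1] * V[1][0]


def sampled_b_differential(x, radius=1e-8):
    """Collect Jacobians at nearby points with y1 != 0 (heuristic sampling)."""
    elements = set()
    for d1, d2 in itertools.product((-1.0, 1.0), repeat=2):
        y = (x[0] + radius * d1, x[1] + radius * d2)
        elements.add(jacobian(y))
    return elements


B = sampled_b_differential((0.0, 0.0))
bd_regular = all(det2(V) != 0.0 for V in B)

# The midpoint of the two B-elements belongs to the generalized Jacobian (convex hull).
V1, V2 = sorted(B)
mid = tuple(tuple(0.5 * (a + b) for a, b in zip(r1, r2)) for r1, r2 in zip(V1, V2))
print(sorted(B), bd_regular, det2(mid))
```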

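In turn, the set

$$\partial_b F(x) = \partial_B f_1(x) \times \partial_B f_2(x) \times \cdots \times \partial_B f_n(x)$$

is called the b-differential of F at x (defined by Sun and Han in [11]). It is easy to verify that in the one-dimensional case (i.e., when $n = 1$), the generalized Jacobian reduces to the Clarke generalized gradient and $\partial_B F(x) = \partial_b F(x)$ (i.e., the B-differential and b-differential coincide). Furthermore, a ∗-differential $\partial_* F(x)$ is a non-empty bounded set for any x such that

$$\partial_* F(x) \subseteq \partial f_1(x) \times \partial f_2(x) \times \cdots \times \partial f_n(x),$$

where $\partial f_i(x)$ denotes the generalized gradient of the component function $f_i$ at x. For a locally Lipschitz function, all previously mentioned differentials, namely $\partial_B F(x)$, $\partial F(x)$ and $\partial_b F(x)$, are ∗-differentials (see Gao [12]). Moreover, if all components $f_i$ of F are continuously differentiable at x, then $\partial_* F(x) = \{F'(x)\}$.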
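For $n > 1$, the inclusion $\partial_B F(x) \subseteq \partial_b F(x)$ can be strict, because the b-differential combines rows of the component B-differentials independently. A small sketch with the hypothetical map $F(x) = (|x_1|,\ |x_1| + x_2)$ at $x = 0$ shows this; limits are again approximated by gradients at nearby points of differentiability, and the helper names are ours.

```python
import itertools


def grad_f1(y):
    # Gradient of f1(x) = |x1| at a point with y1 != 0.
    return (1.0 if y[0] > 0 else -1.0, 0.0)


def grad_f2(y):
    # Gradient of f2(x) = |x1| + x2 at a point with y1 != 0.
    return (1.0 if y[0] > 0 else -1.0, 1.0)


def nearby_points(x, radius=1e-8):
    return [(x[0] + radius * a, x[1] + radius * b)
            for a, b in itertools.product((-1.0, 1.0), repeat=2)]


def bouligand_sampled(x):
    # B-differential: both rows of each Jacobian come from the SAME nearby point.
    return {(grad_f1(y), grad_f2(y)) for y in nearby_points(x)}


def b_differential_sampled(x):
    # b-differential: rows are chosen independently from each component's B-differential.
    rows1 = {grad_f1(y) for y in nearby_points(x)}
    rows2 = {grad_f2(y) for y in nearby_points(x)}
    return set(itertools.product(rows1, rows2))


big_B = bouligand_sampled((0.0, 0.0))
small_b = b_differential_sampled((0.0, 0.0))
print(len(big_B), len(small_b), big_B <= small_b)
```

Here $\partial_B F(0)$ has two elements, while $\partial_b F(0)$ has four, including mixed matrices such as $\begin{pmatrix} 1 & 0 \\ -1 & 1 \end{pmatrix}$.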

The notion of semismoothness was originally introduced by Mifflin [13] for functionals. Later, Qi and Sun [14] extended the original definition to nonlinear operators. A function F is semismooth at x if F is locally Lipschitz continuous at x and, for any $h \in \mathbb{R}^n$, the limit

$$\lim_{V \in \partial F(x + t h'),\ h' \to h,\ t \downarrow 0} V h'$$

exists. If F is semismooth at x, then F is directionally differentiable at x, and $F'(x; h)$ is equal to the above limit:

$$F'(x; h) = \lim_{V \in \partial F(x + t h'),\ h' \to h,\ t \downarrow 0} V h'.$$

Lemma 1 (Lemma 2.2 by Qi [15]). Suppose that the function $F: \mathbb{R}^n \to \mathbb{R}^n$ is directionally differentiable in a neighborhood of x. The following statements are equivalent:

- (1) F is semismooth at x;
- (2) $F'(\cdot\,; \cdot)$ is semicontinuous at x;
- (3) For any $V \in \partial F(x + h)$, $h \to 0$, the following is true:

$$V h - F'(x; h) = o(\|h\|).$$

Moreover, we say that the function F is p-order semismooth at x if, for any matrix V from the generalized Jacobian $\partial F(x + h)$, $h \to 0$, it holds that

$$V h - F'(x; h) = O(\|h\|^{1+p}),$$

where $0 < p \le 1$. If $p = 1$, then F is simply called strongly semismooth [16].

Remark 1.

- (i) Clearly, semismoothness of any order implies semismoothness.

- (ii) Every piecewise $C^2$ function is strongly semismooth.
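As a numerical illustration of the definition of p-order semismoothness with $p = 1$, consider the piecewise $C^2$ function $f(x) = |x| + x^2$ (our example) at $x = 0$. For $h \neq 0$, $\partial f(h) = \{\operatorname{sign}(h) + 2h\}$ and $f'(0; h) = |h|$, so $V h - f'(0; h) = 2h^2 = O(|h|^2)$. The sketch checks that the ratio $|V h - f'(0; h)| / h^2$ stays at 2.

```python
def df(y):
    # Derivative of f(x) = |x| + x**2 at y != 0 (the only element of the generalized gradient there).
    return (1.0 if y > 0 else -1.0) + 2.0 * y


def dir_deriv_at_zero(h):
    # Directional derivative f'(0; h) = |h|.
    return abs(h)


ratios = []
for h in (1e-1, -1e-2, 1e-3, -1e-4):
    V = df(0.0 + h)
    # Semismoothness residual V h - f'(0; h); in exact arithmetic it equals 2 h**2.
    ratios.append(abs(V * h - dir_deriv_at_zero(h)) / h ** 2)
print(ratios)
```

A ratio that stays bounded as $h \to 0$ is exactly the $O(\|h\|^{2})$ behavior required for strong semismoothness.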

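Lemma 2 (Lemma 2.6 by Qi [15]). If the function F is BD-regular at x, then there are a neighborhood N of x and a constant $C > 0$ such that for any $y \in N$ and any matrix $V \in \partial_B F(y)$, V is nonsingular and

$$\|V^{-1}\| \le C.$$

If the function F is also semismooth at $y \in N$, then for any $h \in \mathbb{R}^n$, the following is true:

$$\|h\| \le C \|F'(y; h)\|.$$

Qi and Sun showed in [14] that if a function F is semismooth at x, then

$$F(x + h) - F(x) - F'(x; h) = o(\|h\|).$$

Furthermore, if a function F is p-order semismooth at x, then

$$F(x + h) - F(x) - F'(x; h) = O(\|h\|^{1+p}).$$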

Throughout this paper, $B(x, r)$ denotes the closed ball with center x and radius r in $\mathbb{R}^n$ (if the center is known and the radius is not essential, just N is used).

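Furthermore, for a given diagonal matrix $D = \operatorname{diag}(d_1, \dots, d_n)$ with diagonal entries $d_1, \dots, d_n \in \mathbb{R}$, $\exp(D)$ denotes the so-called exponential matrix, which has the following form:

$$\exp(D) = \operatorname{diag}\left(e^{d_1}, \dots, e^{d_n}\right).$$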
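The exponential matrix of a diagonal matrix is the diagonal matrix of the scalar exponentials, because every power $D^m$ is again diagonal. The following sketch (helper names are ours) compares $\operatorname{diag}(e^{d_1}, \dots, e^{d_n})$ with a truncated power series $\sum_{m} D^m / m!$ for a sample diagonal D.

```python
import math


def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def expm_series(A, terms=40):
    """Truncated power series sum_{m < terms} A^m / m! for a small square matrix."""
    n = len(A)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for m in range(1, terms):
        term = [[v / m for v in row] for row in matmul(term, A)]  # now term = A^m / m!
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result


def exp_diag(d):
    """exp(D) for D = diag(d_1, ..., d_n), i.e. diag(e^{d_1}, ..., e^{d_n})."""
    n = len(d)
    return [[math.exp(d[i]) if i == j else 0.0 for j in range(n)] for i in range(n)]


d = [0.5, -1.0, 2.0]
D = [[d[i] if i == j else 0.0 for j in range(len(d))] for i in range(len(d))]
diff = max(abs(a - b)
           for ra, rb in zip(expm_series(D), exp_diag(d))
           for a, b in zip(ra, rb))
print(diff)
```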

3. The Algorithm and Its Properties

In this section, we introduce a new algorithm and discuss its convergence. For solving Equation (1), we can consider a method in a descriptive two-stage form, in which the matrix $V_k$ is taken from some subdifferential of F at $x_k$. We suggest an approach that combines two methods: the iterative exponential method and the inexact generalized Newton method with a decreasing function norm and backtracking. The proposed inexact approach is similar to the algorithm presented by Birgin et al. [17] for smooth equations, whereas the exponential formula was introduced in [3]. Because F is nondifferentiable, we use matrices from the B-differential instead of Jacobians as iteration matrices.

Remark 2.

- (i) The matrix $V_k$ may be chosen arbitrarily from the known elements of the Bouligand subdifferential $\partial_B F(x_k)$ (in Step 1 of Algorithm 1). In practice, appropriate matrices are usually suitable Jacobians of F.
- (ii) The backtracking approach used in Step 2 is a particular case of the minimum reduction algorithm.
- (iii) The formula in Equation (10) for obtaining $x_{k+1}$ could also be written in a componentwise form, which explicitly shows how the new approximation is generated from the components of the previous approximation $x_k$.
- (iv) Various strategies for choosing the forcing sequence $\{\theta_k\}$ can be found in [18], but only for inexact methods for solving smooth equations. Therefore, most of these strategies would need to be modified with respect to the nondifferentiability of F. For example, we could set $\theta_k$ to a small constant or use a rule for which Equation (8) holds with equality.

Before proving the convergence of Algorithm 1, we state an important assumption.

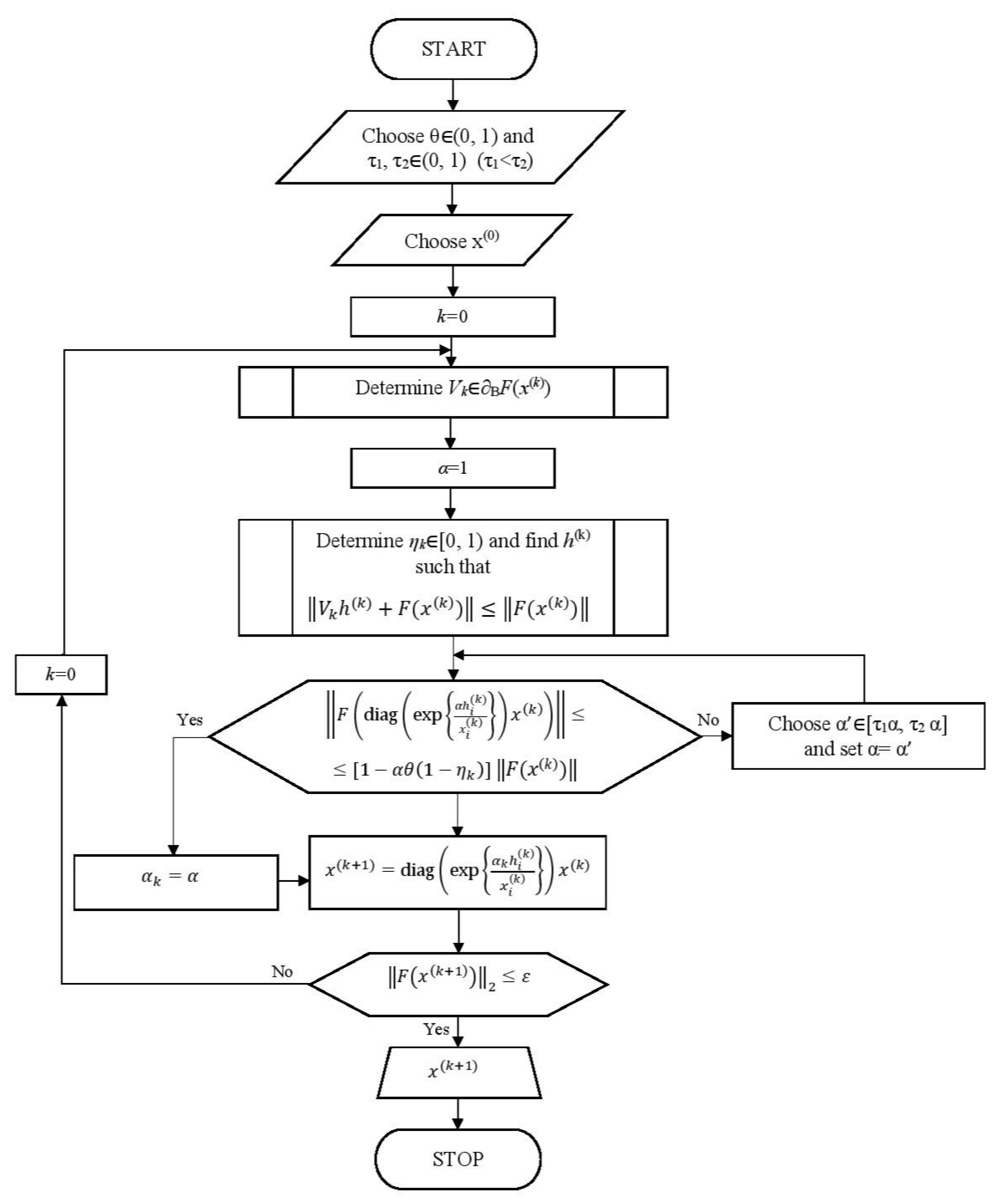

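Algorithm 1: Inexact exponential method (IEM).

Assume that the parameters of the method are fixed, and let $x_0 \in \mathbb{R}^n$ be an arbitrary starting approximation. The steps for obtaining $x_{k+1}$ from $x_k$ are as follows:

Step 1: Determine $V_k \in \partial_B F(x_k)$ and find a vector $h_k$ that satisfies the inexactness condition (8) with the forcing term $\theta_k$. Set $\alpha = 1$.

Step 2: If the decrease condition (9) holds, then set $\alpha_k = \alpha$ and compute $x_{k+1}$ by the exponential formula (10). Otherwise, choose a smaller step length $\alpha_{\text{new}} \in (0, \alpha)$, set $\alpha = \alpha_{\text{new}}$, and repeat Step 2.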
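A minimal Python sketch of Algorithm 1 follows. Since the displayed conditions (8), (9) and (10) are not reproduced in this text, the sketch rests on assumptions that should be checked against the original formulas: (8) is taken as $\|F(x_k) + V_k h_k\| \le \theta_k \|F(x_k)\|$, (9) as the sufficient decrease $\|F(x_{k+1})\| \le (1 - \sigma\alpha)\|F(x_k)\|$, and (10) as the componentwise update $x_{k+1,i} = x_{k,i} \exp(\alpha h_{k,i} / x_{k,i})$, the multidimensional analogue of Equation (2). The test system F, the forcing rule $\theta_k = \min(0.5, \|F(x_k)\|)$, the Jacobi inner solver, $\sigma = 10^{-4}$ and halving $\alpha$ are all hypothetical choices.

```python
import math


def F(x):
    # Hypothetical test system (not from the paper): kink at x2 = 3, solution (1, 2).
    return [x[0] ** 2 + abs(x[1] - 3.0) - 2.0, x[0] + x[1] ** 2 - 5.0]


def b_element(x):
    # One element of the B-differential of F at x (slope +1 of |x2 - 3| taken at the kink).
    s = 1.0 if x[1] >= 3.0 else -1.0
    return [[2.0 * x[0], s], [1.0, 2.0 * x[1]]]


def norm(v):
    return math.sqrt(sum(t * t for t in v))


def inexact_step(V, Fx, theta, max_inner=500):
    # Step 1 (assumed form of (8)): Jacobi sweeps on V h = -F(x)
    # until ||F(x) + V h|| <= theta * ||F(x)||.
    n = len(Fx)
    h = [0.0] * n
    for _ in range(max_inner):
        r = [Fx[i] + sum(V[i][j] * h[j] for j in range(n)) for i in range(n)]
        if norm(r) <= theta * norm(Fx):
            return h
        h = [(-Fx[i] - sum(V[i][j] * h[j] for j in range(n) if j != i)) / V[i][i]
             for i in range(n)]
    raise RuntimeError("inner solver failed")


def exp_update(x, h, alpha):
    # Assumed form of (10): x_i * exp(alpha * h_i / x_i); requires every x_i != 0.
    return [xi * math.exp(alpha * hi / xi) for xi, hi in zip(x, h)]


def iem(x, sigma=1e-4, tol=1e-10, max_iter=100):
    for k in range(max_iter):
        Fx = F(x)
        nF = norm(Fx)
        if nF <= tol:
            return x, k
        theta = min(0.5, nF)  # hypothetical forcing rule with theta_k -> 0
        h = inexact_step(b_element(x), Fx, theta)
        alpha = 1.0
        # Step 2 (assumed form of (9)): backtrack until sufficient decrease.
        while norm(F(exp_update(x, h, alpha))) > (1.0 - sigma * alpha) * nF:
            alpha *= 0.5  # halving is our choice of alpha_new
            if alpha < 1e-12:
                raise RuntimeError("backtracking failed")
        x = exp_update(x, h, alpha)
    raise RuntimeError("no convergence within max_iter")


x_sol, iterations = iem([1.5, 1.5])
print(x_sol, iterations)
```

Note that the exponential update preserves the sign of every component, so the starting point must lie in the same orthant as the desired solution.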

Assumption 1. Function F satisfies Assumption 1 at x if for any y from a neighborhood N of x and any matrix $V \in \partial_B F(y)$, it holds that

Furthermore, function F satisfies Assumption 1 at x with a degree ρ if the following holds:

Remark 3.

- (i) Functions that satisfy Assumption 1 are not difficult to find; we indicate three classes of them. Assumption 1 is implied not only by semismoothness but also by H-differentiability (introduced by Song et al. [19]) and by second-order C-differentiability (introduced by Qi [10]).
- (ii) If function F is BD-regular at x and satisfies Assumption 1 at x, then there are a neighborhood N of x and a constant $C > 0$ such that for any $y \in N$ and any matrix $V \in \partial_B F(y)$, the following is true:
- (iii)

Lemma 2, proven in [7], states that if function F is BD-regular at , satisfies Assumption 1 at and , where and , thenwhere N is a neighborhood of .

Now, we establish some theoretical foundations for Algorithm 1:

Lemma 3. Assume that Algorithm 1 is carried out with $\alpha_k$ determined in such a way that the inequality in Equation (9) holds. If the series $\sum_{k=0}^{\infty} \alpha_k$ is divergent, then $\lim_{k \to \infty} \|F(x_k)\| = 0$.

Proof of Lemma 3. Under Equation (9), the following is true:

Convergence to 0 then follows from the divergence of $\sum_{k=0}^{\infty} \alpha_k$. □

The divergence of the series $\sum_{k=0}^{\infty} \alpha_k$ implies a satisfactory decrease in $\|F(x_k)\|$ over all steps for which the inequality in Equation (9) holds.
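The mechanism behind Lemma 3 is the elementary fact that, for $0 \le \sigma\alpha_k < 1$, the product $\prod_k (1 - \sigma\alpha_k)$ tends to 0 exactly when $\sum_k \alpha_k$ diverges. Assuming, for illustration only, that (9) has the sufficient-decrease form $\|F(x_{k+1})\| \le (1 - \sigma\alpha_k)\|F(x_k)\|$, the sketch compares a divergent step-length series $\alpha_k = 1/(k+1)$ with a convergent one $\alpha_k = 1/(k+1)^2$; $\sigma = 0.5$ and the helper name are hypothetical.

```python
import math


def decrease_factor(alphas, sigma):
    # Upper bound on ||F(x_N)|| / ||F(x_0)|| if every step satisfies the
    # assumed condition ||F(x_{k+1})|| <= (1 - sigma * alpha_k) ||F(x_k)||.
    prod = 1.0
    for a in alphas:
        prod *= 1.0 - sigma * a
    return prod


sigma, N = 0.5, 10**6
divergent = [1.0 / (k + 1) for k in range(N)]         # sum of alpha_k diverges
convergent = [1.0 / (k + 1) ** 2 for k in range(N)]   # sum of alpha_k converges

p_div = decrease_factor(divergent, sigma)
p_conv = decrease_factor(convergent, sigma)
# Since 1 - t <= exp(-t), the product is bounded by exp(-sigma * sum(alpha_k)).
bound_div = math.exp(-sigma * math.fsum(divergent))
print(p_div, bound_div, p_conv)
```

With the divergent series the guaranteed reduction keeps improving as N grows, whereas with the convergent series the product stays bounded away from 0, so the same argument would not force $\|F(x_k)\| \to 0$.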

Lemma 4. Let and x be given, and assume that there is , which satisfies , where . If function F satisfies Assumption 1 at x, then there is such that for any , there exists h, which satisfies

where .

Proof of Lemma 4. Obviously, and .

Set

where

is sufficiently small for the following inequality to be satisfied:

whenever

. Under Assumption 1, such a value of

exists. Since

, then

.

Let

for any

. Then, we have

□

Lemma 4 implies that the inexact exponential step is well defined at the current approximation $x_k$, that is, suitable values of $h_k$ and $\alpha_k$ exist.

Now, we prove that the method described by Algorithm 1 is convergent. The first convergence theorem is analogous to the one presented in [20] for the inexact generalized Newton method for solving nonsmooth equations, albeit with some significant changes in the proof:

Theorem 1. Let $\{x_k\}$ be a sequence generated by Algorithm 1 with , and for each k, there is a constant such that the following holds:

and

for any matrix . If the function F satisfies Assumption 1 at , then every limiting point of the sequence $\{x_k\}$ is a solution to Equation (1), and

$$\lim_{k \to \infty} \|F(x_k)\| = 0.$$

Proof of Theorem 1. Suppose that K is a sequence of indices such that $\lim_{k \in K} x_k = x^*$. We have two cases, depending on the behavior of the sequence $\{\alpha_k\}_{k \in K}$:

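- (i) The sequence $\{\alpha_k\}_{k \in K}$ does not converge to 0.

Then, there are a sequence of indices $K_1 \subseteq K$ and a number $\bar{\alpha} > 0$ such that $\alpha_k \ge \bar{\alpha}$ for all $k \in K_1$. Clearly, for all $k \in K_1$, the following is true: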

In turn, according to Equation (9), we have

By adding all these inequalities side by side, we obtain the following inequality:

Therefore, . Hence, .

- (ii) The sequence $\{\alpha_k\}_{k \in K}$ converges to 0.

For sufficiently large k, under the choice

(according to Step 2 of Algorithm 1), there is

,

such that

and

Therefore, for some

such that Equation (8) is satisfied, it holds that

which implies that

and finally

Since is bounded, and tend toward zero, Assumption 1 and the local Lipschitz continuity of function F imply that the product on the right side of the above inequality tends toward zero for large enough values of k. Hence, and .

Let

be arbitrary. Let

be such that

. Note that

. Therefore, according to Equation (9), the following holds:

Therefore, if

, we have that

, and thus

□

The main theoretical result of this section is presented in the theorem below. Theorem 2 establishes sufficient conditions for the superlinear convergence of the inexact exponential method for solving nonsmooth equations:

Theorem 2. Let $\{x_k\}$ be a sequence generated by Algorithm 1 with $\theta_k \to 0$. If $x^*$ is a limiting point of $\{x_k\}$, and the function F is BD-regular at $x^*$ and satisfies Assumption 1 at $x^*$, then the sequence $\{x_k\}$ converges superlinearly to $x^*$.

Proof of Theorem 2. If the matrix $V_k$ is nonsingular, then by Equation (8), we have that

From the BD-regularity of the function F at

, there is a

such that for any

and any

, we have

. Therefore, we have

Thus, from Theorem 1, it follows that

and every limiting point of

is a solution to Equation (1). Furthermore, the construction of the new approximation $x_{k+1}$ in Algorithm 1 and Equation (12) imply that

Since the function F is BD-regular at

, the inverse function theorem guarantees that there is

such that

, provided

. Let

be arbitrary. Then, the set

cannot contain any solution. Therefore, it contains no limiting point of the sequence, and it can contain only finitely many approximations. Hence, there is

such that, for all

, we have

Let

be such that

Since

is a limiting point, there is

such that

, and hence

However, we have that

because

. Therefore, we also have that

for all integers

. Since this number is arbitrary, the sequence $\{x_k\}$ converges to a solution $x^*$ of Equation (1).

According to Equation (8) and Assumption 1, for sufficiently large k, we have

Since both

and

, we obtain

Thus, for sufficiently large k, we have

Therefore, the inequality in Equation (9) holds with

, and for sufficiently large k, we obtain

Now, under the local Lipschitz continuity and BD-regularity of the function F at

and Assumption 1, there exists

such that

for all x in a neighborhood of

(see Remark 3 (iii)). Then, according to Equation (14), we have

□

Remark 4.

- (i) It is not hard to check that Theorem 2 remains true if Algorithm 1 is used with one of the other subdifferentials mentioned in Section 1.
- (ii) The method is quadratically convergent if the function F satisfies Assumption 1 at $x^*$ with a degree of two.
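The superlinear rate in Theorem 2 can be observed in a simple one-dimensional setting. The sketch below applies formula (2) with $f'(x_k)$ replaced by an element $v_k \in \partial_B f(x_k)$, i.e., $x_{k+1} = x_k \exp(h_k / x_k)$ with $h_k = -f(x_k)/v_k$, to the hypothetical semismooth function $f(x) = x^2 + |x - 3| - 4$, whose positive root is the golden ratio $(1 + \sqrt{5})/2$. The starting point 4 lies beyond the kink at $x = 3$. Exact inner steps ($\theta_k = 0$) and the absence of backtracking are simplifying assumptions.

```python
import math

GOLDEN = (1.0 + math.sqrt(5.0)) / 2.0  # root of f below the kink at x = 3


def f(x):
    # Hypothetical semismooth test function; f(x) = x**2 - x - 1 for x < 3.
    return x * x + abs(x - 3.0) - 4.0


def b_derivative(x):
    # An element of the B-differential of f at x (slope +1 of |x - 3| taken at the kink).
    return 2.0 * x + (1.0 if x >= 3.0 else -1.0)


def exponential_iterates(x, tol=1e-13, max_iter=50):
    xs = [x]
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) <= tol:
            break
        h = -fx / b_derivative(x)   # exact inner step (theta_k = 0)
        x = x * math.exp(h / x)     # one-dimensional exponential update
        xs.append(x)
    return xs


xs = exponential_iterates(4.0)
errors = [abs(x - GOLDEN) for x in xs]
# Ratios of consecutive errors, ignoring errors at the rounding level.
ratios = [e1 / e0 for e0, e1 in zip(errors, errors[1:]) if e1 > 1e-13]
print(ratios)
```

The error ratios shrink towards 0 once the iterates pass the kink, which is the behavior that characterizes superlinear convergence.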

Some versions of inexact Newton methods converge superlinearly under an assumption called the local error bound condition. It requires that

$$\operatorname{dist}(x, X^*) \le c \|F(x)\| \quad \text{for all } x \in N$$

holds for a positive constant c in a neighborhood N of the solution to Equation (1), where $X^*$ denotes the solution set of Equation (1). A local error bound holds, for example, for a piecewise linear function with non-unique solutions.

In solving smooth equations, the local error bound condition is noticeably weaker than the nonsingularity of the Jacobian $F'(x^*)$ (see [21]). In our nonsmooth case, this condition can be used as a weaker assumption instead of BD-regularity. For our inexact exponential method, both BD-regularity and the local error bound play the same role as the nonsingularity of the Jacobian in the smooth case.
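To see that a local error bound can hold where BD-regularity fails, consider the hypothetical piecewise linear map $F(x) = (|x_1 + x_2|,\ x_1 + x_2)$, whose solutions form the line $x_1 + x_2 = 0$. Then $\operatorname{dist}(x, X^*) = |x_1 + x_2| / \sqrt{2}$ and $\|F(x)\| = \sqrt{2}\,|x_1 + x_2|$, so the error bound holds with $c = 1/2$, while every element of the B-differential, $\begin{pmatrix} \pm 1 & \pm 1 \\ 1 & 1 \end{pmatrix}$, is singular. The sketch checks both facts on random sample points.

```python
import math
import random


def F(x):
    # Hypothetical piecewise linear map with solution set X* = {x : x1 + x2 = 0}.
    s = x[0] + x[1]
    return (abs(s), s)


def dist_to_solution_set(x):
    # Euclidean distance from x to the line x1 + x2 = 0.
    return abs(x[0] + x[1]) / math.sqrt(2.0)


random.seed(0)
worst = 0.0
for _ in range(1000):
    x = (random.uniform(-1.0, 1.0), random.uniform(-1.0, 1.0))
    nF = math.hypot(*F(x))
    if nF > 0.0:
        worst = max(worst, dist_to_solution_set(x) / nF)

# Every B-element has the form [[s, s], [1, 1]] with s = +1 or -1, hence is singular.
b_elements = [((s, s), (1.0, 1.0)) for s in (-1.0, 1.0)]
dets = [V[0][0] * V[1][1] - V[0][1] * V[1][0] for V in b_elements]
print(worst, dets)
```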

4. Numerical Experiments

Now, we present some numerical results that help to confirm the theoretical properties of the algorithm. We solved several different problems. Algorithm 1 was implemented in C++ with double-precision arithmetic and run in Apache NetBeans. In all examples, the crucial parameters were set identically as follows:

,

for all

k and

. The stopping criterion was the condition

. A flowchart of Algorithm 1 is presented in Figure 1. In our opinion, the results of the numerical computations were satisfactory, as we now demonstrate.

In all examples,

is the starting approximation, and

is the number of iterations performed by Algorithm 1. To compare the efficiency of the new method, we also show the results obtained by the inexact generalized Newton method (IGM) presented in [20] (

), which also has superlinear convergence.

Example 1. As the first (double) test problem, we chose the usual Equation (1) with two different nonsmooth functions:

The equation with function has one solution , while the equation with function has two solutions: and . Both equations have one nondifferentiability point each: for and for .

Both functions and are locally Lipschitz at their solution points. is at because

Therefore, is also semismooth at . In turn, Qi and Sun proved in [14] that a function is semismooth at a point if and only if all of its component functions are semismooth at this point. The component functions of are semismooth as sums of semismooth functions (the local Lipschitz continuity of the absolute value function and of the quadratic function is easy to check). Moreover, the Bouligand subdifferential of at has the form

The equations can be solved by Algorithm 1. The only point of nondifferentiability of the function is not a solution, and function satisfies Assumption 1 (which follows from Remark 3(i)) and is BD-regular at . The exemplary results are shown in Table 1 and Table 2.

Example 2. The second test problem was Equation (1) with the function (used, among others, in [4]), defined by

with

Obviously, if , then function F is differentiable. Therefore, the value may be treated as the degree of nondifferentiability of function F [22]. Equation (1) has an infinite number of solutions , where are arbitrary. First, the starting approximation was . We used Algorithm 1 to solve one series of smooth problems (with ) and three series of nonsmooth problems with various parameters . Function F is semismooth as a composite of semismooth functions (as mentioned in Example 1), and all functions are semismooth as piecewise smooth functions (see [13]). In the smooth case, Algorithm 1 (IEM) stopped after six iterations, while Algorithm IGM generated seven successive approximations. The most interesting results we selected are presented in Table 3. In these tests, we noticed that the choice of starting point did not affect the number of iterations very much when Algorithm 1 generated a convergent sequence. Therefore, we omit the results for other starting points. The number of iterations was very similar for a small number of variables. However, Algorithm 1 showed its advantage for larger problems (i.e., when a system of nonlinear equations has at least a dozen unknowns).

Example 3. The last test problem was the Walrasian production–price problem [23] with the demand function ξ defined in the following form:

Here, is the price vector, measures the i-th consumer’s intensity of demand for commodity j, and is the i-th consumer’s elasticity of substitution for . Furthermore, the following

is a function of the price chosen in such a way as to satisfy the budget constraint for each unit. Let denote the technology, denote the total endowment of the economy prior to production and denote the production plan. Then, the Walrasian model can be written as the nonlinear complementarity problem (NCP) in the form

with the following functions:

where the operator “min” denotes the component-wise minimum of the vectors. Function is locally Lipschitzian, semismooth and BD-regular, so we can use Algorithm 1 to solve nonsmooth Equation (1). An approximate solution of such a problem (NCP) with , and is

We solved this problem using the inexact exponential method with various starting approximations from the neighborhood of in the form

where and . The exemplary results are shown in Table 4. The Broyden-like method (from [24]) generated roughly comparable results, which was not surprising since all these methods have the same order of convergence. The number of iterations was equal to 27, 9, −, 66 and 263 for the same initial points as in Table 4. In this test problem, the convergence depended significantly on the location of the starting point (parameters and ). Here, Algorithm 1 showed its advantage for initial points further from the solution.
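Example 3 relies on the min reformulation of an NCP: x solves the NCP with function G exactly when $\min(x, G(x)) = 0$ componentwise. The following sketch uses a hypothetical affine $G(x) = Mx + q$ rather than the Walrasian model and shows how an iteration matrix is assembled row by row: row i is the unit vector $e_i$ if $x_i < G_i(x)$ and $\nabla G_i(x)$ otherwise. Away from ties $x_i = G_i(x)$, F is differentiable and this matrix is its Jacobian. Because the solution has a zero component, and the exponential update keeps the sign of every component and needs $x_i \neq 0$, the sketch uses a plain generalized Newton step; it illustrates the reformulation only, not Algorithm 1.

```python
def G(x):
    # Hypothetical affine map G(x) = M x + q (not the Walrasian model).
    M = [[2.0, 1.0], [1.0, 2.0]]
    q = [-1.0, 1.0]
    return [sum(M[i][j] * x[j] for j in range(2)) + q[i] for i in range(2)], M


def F_min(x):
    g, _ = G(x)
    return [min(xi, gi) for xi, gi in zip(x, g)]


def iteration_matrix(x):
    # Row i is e_i if x_i < G_i(x), otherwise the gradient of G_i
    # (at a tie we take the gradient of G_i, one of the two one-sided choices).
    g, M = G(x)
    return [[1.0 if j == i else 0.0 for j in range(2)] if x[i] < g[i] else M[i][:]
            for i in range(2)]


def solve2(V, b):
    # Cramer's rule for the 2x2 system V h = b.
    det = V[0][0] * V[1][1] - V[0][1] * V[1][0]
    return [(b[0] * V[1][1] - V[0][1] * b[1]) / det,
            (V[0][0] * b[1] - b[0] * V[1][0]) / det]


def generalized_newton(x, tol=1e-12, max_iter=20):
    for k in range(max_iter):
        Fx = F_min(x)
        if max(abs(v) for v in Fx) <= tol:
            return x, k
        h = solve2(iteration_matrix(x), [-v for v in Fx])
        x = [xi + hi for xi, hi in zip(x, h)]
    raise RuntimeError("no convergence")


x_sol, iterations = generalized_newton([1.0, 1.0])
g_sol, _ = G(x_sol)
print(x_sol, iterations, g_sol)
```

For this piecewise linear F, the iteration terminates after finitely many steps at $x = (0.5, 0)$, where $x \ge 0$, $G(x) = (0, 1.5) \ge 0$ and $x^T G(x) = 0$.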