Eyes versus Eyebrows: A Comprehensive Evaluation Using the Multiscale Analysis and Curvature-Based Combination Methods in Partial Face Recognition

Abstract

1. Introduction

- We compare the eyes and eyebrows to determine which of the two is the more discriminative facial feature for a partial face recognition system.

- We evaluate the eye and eyebrow features using a combination of multiscale analysis and curvature-based methods. The combination aims to capture the details of these features at finer scales and to characterize them in depth through curvature. In our experiments, adding a curvature-based method improved the performance of the recognition system.

- We present a comprehensive evaluation of all the variables that arise from combining multiscale analysis and curvature-based methods.

- We compare the results of the proposed methods on a limited number of images with those on the whole dataset. We also compare our results with those of other works on the same datasets and achieve similarly high accuracy using only eye and eyebrow images.
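The curvature-based features evaluated throughout (Gaussian curvature K, mean curvature H, maximum principal curvature X, and minimum principal curvature N) can be illustrated with a minimal sketch that treats a grayscale eye or eyebrow crop as a surface z = I(x, y). The Monge-patch formulas are standard differential geometry; the finite-difference discretization with `np.gradient`, unit pixel spacing, and the sign convention for H are assumptions of this sketch, not necessarily the authors' implementation.

```python
import numpy as np


def surface_curvatures(img):
    """Curvature maps of the intensity surface z = I(x, y) (sketch, unit pixel spacing).

    Returns (K, H, X, N): Gaussian, mean, max principal and min principal curvature,
    each with the same shape as the input image.
    """
    f = np.asarray(img, dtype=float)
    fy, fx = np.gradient(f)             # axis 0 = rows (y), axis 1 = columns (x)
    fxx = np.gradient(fx, axis=1)
    fxy = np.gradient(fx, axis=0)
    fyy = np.gradient(fy, axis=0)
    g = 1.0 + fx ** 2 + fy ** 2         # 1 + |grad f|^2
    K = (fxx * fyy - fxy ** 2) / g ** 2
    H = ((1 + fx ** 2) * fyy - 2 * fx * fy * fxy + (1 + fy ** 2) * fxx) / (2 * g ** 1.5)
    # Principal curvatures are the roots H +/- sqrt(H^2 - K); clip round-off below zero.
    disc = np.sqrt(np.clip(H ** 2 - K, 0.0, None))
    return K, H, H + disc, H - disc
```

For a hypothetical grayscale array `eye_crop`, `K, H, X, N = surface_curvatures(eye_crop)` yields four maps of the same size that could be flattened into feature vectors for a classifier.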

2. Materials and Methods

2.1. Methods

2.1.1. The Curvature-Based Method

2.1.2. The Scale Space with a Curvature-Based Method

2.1.3. The Discrete Wavelet Transform with a Curvature-Based Method

2.2. Materials and Experimental Set-Ups

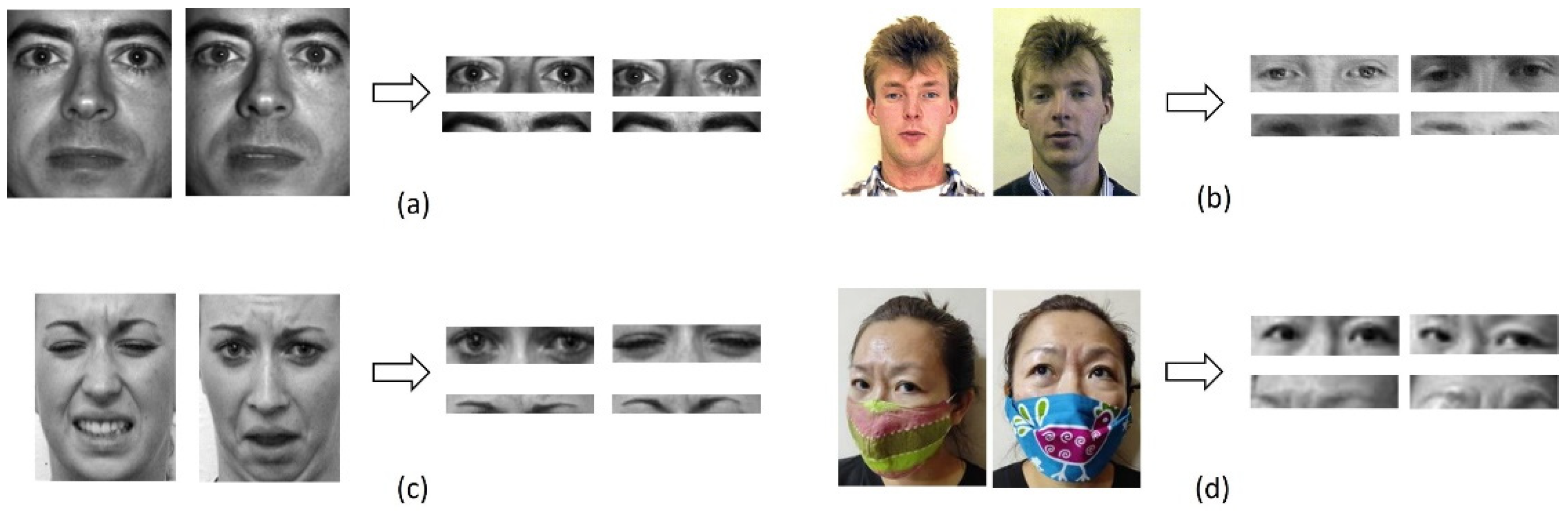

2.2.1. Datasets

- The Cropped Extended Yale Face Database B (EYB) [34,35] contains frontal face images of 38 respondents, each captured in 65 image variations (1 ambient + 64 illuminations). The original size of each image is 168 × 192 pixels in grayscale. The eyes and eyebrows occupy almost the same location in every image of the dataset. For this research, we first evaluated 434 cropped face images taken randomly from 7 respondents. Because the dataset contains extremely dark images, we used 62 images per respondent. We evaluated this part of the EYB dataset first to observe the performance of all the proposed methods. We then re-evaluated the whole dataset with the method that produced the highest recognition performance to observe the effect of limited versus larger data. This re-evaluation used 2242 images from 38 respondents, 59 images per respondent; the remaining images were excluded because of poor quality arising from the acquisition process.

- The Aberdeen dataset (ABD) [36] contains 687 color face images from 90 respondents, each of whom provided between 1 and 18 images. The dataset varies in lighting and viewpoint, and the original resolution ranges from 336 × 480 to 624 × 544 pixels. The images also differ in hairstyle, outfit, and facial expression. We randomly selected 84 face images from 21 respondents, 4 images per respondent with lighting and viewpoint variations. We also re-evaluated the whole dataset to observe the effect of limited versus larger data; this re-evaluation with the best method used 244 images from 61 respondents. To keep the dataset balanced, we did not use the images of all 90 respondents: only 61 respondents consistently had 4 images, while the other 29 had between 1 and 18 images each.

- The pain expression subset (PES) [36] contains 84 cropped images from the pain expression dataset: 7 expressions from each of 12 respondents. The eyes are at a fixed location in every image, and the original resolution is 181 × 241 pixels in grayscale. The shape of the eyes and eyebrows changes with the respondent's expression. We evaluated all images in this dataset.

- The real fabric face mask dataset (RFFMDS v.1.0) [32] contains 176 images from 8 respondents, 22 per respondent, covering 2 face masks and bare faces. The images vary in viewpoint and head pose angle and are 200 × 150 pixels in the RGB color space. We evaluated this dataset to compare recognition from eye and eyebrow images with recognition from full masked-face images. The final size of the cropped eye images was 49 × 13 pixels and that of the cropped eyebrow images was 67 × 20 pixels; both were in grayscale.
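The balanced selection described above (a fixed number of images per respondent, with respondents that cannot supply that many dropped, then a fixed number per respondent for testing and training) can be sketched as follows. The random sampling with a fixed seed, the `exact` flag, and the function name `balanced_split` are assumptions for illustration; the paper does not specify how images were drawn.

```python
import random
from collections import defaultdict


def balanced_split(labels, n_per_class, n_test, exact=False, seed=0):
    """Return (train_idx, test_idx) with the same number of images per class.

    labels      : class label (respondent id) of each image, indexed by image position
    n_per_class : images kept per class (e.g. 4 for ABD, 62 for the limited EYB subset)
    n_test      : how many of the kept images per class go to the test set
    exact       : if True, keep only classes with exactly n_per_class images
    """
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)

    rng = random.Random(seed)
    train, test = [], []
    for lab in sorted(by_class):
        idxs = by_class[lab]
        if (exact and len(idxs) != n_per_class) or len(idxs) < n_per_class:
            continue  # skip classes that cannot give a balanced sample
        chosen = rng.sample(idxs, n_per_class)
        test.extend(chosen[:n_test])
        train.extend(chosen[n_test:])
    return train, test
```

With a label list covering all ABD respondents, `balanced_split(labels, n_per_class=4, n_test=2, exact=True)` would keep only the respondents with exactly four images and split each 2/2, mirroring the (61/2/2) setting.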

2.2.2. Classification and Performance Calculation

3. Results and Discussion

3.1. The EYB Eyes Image Results

3.1.1. The Curvature-Based Method Results

3.1.2. The Scale Space with a Curvature-Based Method Results

3.1.3. The DWT with a Curvature-Based Method Results

3.1.4. Summary

3.2. The EYB Eyebrow Image Results

3.2.1. Curvature-Based Method Results

3.2.2. The Scale Space with Curvature-Based Method Results

3.2.3. The DWT with Curvature-Based Method Results

3.2.4. Summary of the EYB Eyebrow Image Results

3.3. Results Using Other Datasets

3.4. Comparison with Other Methods

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Peixoto, S.A.; Vasconcelos, F.F.; Guimarães, M.T.; Medeiros, A.G.; Rego, P.A.; Neto, A.V.L.; de Albuquerque, V.H.C.; Filho, P.P.R. A high-efficiency energy and storage approach for IoT applications of facial recognition. Image Vis. Comput. 2020, 96, 103899.

- Chen, L.W.; Ho, Y.F.; Tsai, M.F. Instant social networking with startup time minimization based on mobile cloud computing. Sustainability 2018, 10, 1195.

- Zeng, D.; Veldhuis, R.; Spreeuwers, L. A survey of face recognition techniques under occlusion. IET Biom. 2021, 10, 581–606.

- Zhang, L.; Verma, B.; Tjondronegoro, D.; Chandran, V. Facial expression analysis under partial occlusion: A survey. ACM Comput. Surv. 2018, 51, 1–49.

- Damer, N.; Grebe, J.H.; Chen, C.; Boutros, F.; Kirchbuchner, F.; Kuijper, A. The Effect of Wearing a Mask on Face Recognition Performance: An Exploratory Study. In Proceedings of the 2020 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 16–18 September 2020.

- Carragher, D.J.; Hancock, P.J.B. Surgical face masks impair human face matching performance for familiar and unfamiliar faces. Cogn. Res. Princ. Implic. 2020, 5, 59.

- Li, C.; Ge, S.; Zhang, D.; Li, J. Look Through Masks: Towards Masked Face Recognition with De-Occlusion Distillation. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

- Geng, M.; Peng, P.; Huang, Y.; Tian, Y. Masked Face Recognition with Generative Data Augmentation and Domain Constrained Ranking. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

- Ding, F.; Peng, P.; Huang, Y.; Geng, M.; Tian, Y. Masked Face Recognition with Latent Part Detection. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

- Li, Y.; Guo, K.; Lu, Y.; Liu, L. Cropping and attention based approach for masked face recognition. Appl. Intell. 2021, 51, 3012–3025.

- Ejaz, M.S.; Islam, M.R. Masked face recognition using convolutional neural network. In Proceedings of the 2019 International Conference on Sustainable Technologies for Industry 4.0 (STI), Dhaka, Bangladesh, 24–25 December 2019.

- Nguyen, J.; Duong, H. Anatomy, Head and Neck, Anterior, Common Carotid Arteries; StatPearls Publishing: Treasure Island, FL, USA, 2020.

- Kumari, P.; Seeja, K.R. Periocular biometrics: A survey. J. King Saud Univ. Comput. Inf. Sci. 2019, 34, 1086–1097.

- Zhao, Z.; Kumar, A. Improving periocular recognition by explicit attention to critical regions in deep neural network. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2937–2952.

- Karczmarek, P.; Pedrycz, W.; Kiersztyn, A.; Rutka, P. A study in facial features saliency in face recognition: An analytic hierarchy process approach. Soft Comput. 2017, 21, 7503–7517.

- Peterson, M.F.; Eckstein, M.P. Looking just below the eyes is optimal across face recognition tasks. Proc. Natl. Acad. Sci. USA 2012, 109, E3314–E3323.

- Tome, P.; Fierrez, J.; Vera-Rodriguez, R.; Ortega-Garcia, J. Combination of Face Regions in Forensic Scenarios. J. Forensic Sci. 2015, 60, 1046–1051.

- Abudarham, N.; Yovel, G. Reverse engineering the face space: Discovering the critical features for face identification. J. Vis. 2016, 16, 40.

- Abudarham, N.; Shkiller, L.; Yovel, G. Critical features for face recognition. Cognition 2019, 182, 73–83.

- Ding, L.; Martinez, A.M. Features versus context: An approach for precise and detailed detection and delineation of faces and facial features. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2022–2038.

- Biswas, R.; González-Castro, V.; Fidalgo, E.; Alegre, E. A new perceptual hashing method for verification and identity classification of occluded faces. Image Vis. Comput. 2021, 113, 104245.

- Wang, Y.; Li, Y.; Song, Y.; Rong, X. Facial expression recognition based on auxiliary models. Algorithms 2019, 12, 227.

- Bülthoff, I.; Jung, W.; Armann, R.G.M.; Wallraven, C. Predominance of eyes and surface information for face race categorization. Sci. Rep. 2021, 11, 2021.

- Fu, S.; He, H.; Hou, Z.G. Learning race from face: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2483–2509.

- Oztel, I.; Yolcu, G.; Öz, C.; Kazan, S.; Bunyak, F. iFER: Facial expression recognition using automatically selected geometric eye and eyebrow features. J. Electron. Imaging 2018, 27, 023003.

- García-Ramírez, J.; Olvera-López, J.A.; Olmos-Pineda, I.; Martín-Ortíz, M. Mouth and eyebrow segmentation for emotion recognition using interpolated polynomials. J. Intell. Fuzzy Syst. 2018, 34, 3119–3131.

- Sadr, J.; Jarudi, I.; Sinha, P. The role of eyebrows in face recognition. Perception 2003, 32, 285–293.

- Yujian, L.; Cuihua, F. Eyebrow Recognition: A New Biometric Technique. In Proceedings of the Ninth IASTED International Conference on Signal and Image Processing, Honolulu, HI, USA, 20–22 August 2007.

- Yujian, L.; Xingli, L. HMM based eyebrow recognition. In Proceedings of the Third International Conference on Intelligent Information Hiding and Multimedia Signal Processing (IIH-MSP 2007), Kaohsiung, Taiwan, 26–28 November 2007.

- Turkoglu, M.O.; Arican, T. Texture-Based Eyebrow Recognition. In Proceedings of the 2017 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 20–22 September 2017.

- Li, Y.; Li, H.; Cai, Z. Human eyebrow recognition in the matching-recognizing framework. Comput. Vis. Image Underst. 2013, 117, 170–181.

- Lionnie, R.; Apriono, C.; Gunawan, D. Face Mask Recognition with Realistic Fabric Face Mask Data Set: A Combination Using Surface Curvature and GLCM. In Proceedings of the 2021 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Toronto, ON, Canada, 21–24 April 2021.

- Lionnie, R.; Apriono, C.; Gunawan, D. A Study of Orthogonal and Biorthogonal Wavelet Best Basis for Periocular Recognition. ECTI-CIT 2022. submitted.

- Georghiades, A.S.; Belhumeur, P.N.; Kriegman, D.J. From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 643–660.

- Lee, K.C.; Ho, J.; Kriegman, D.J. Acquiring linear subspaces for face recognition under variable lighting. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 684–698.

- Aberdeen, I. Psychological Image Collection at Stirling (PICS). Available online: http://pics.psych.stir.ac.uk/ (accessed on 23 May 2022).

- Godinho, R.M.; Spikins, P.; O’Higgins, P. Supraorbital morphology and social dynamics in human evolution. Nat. Ecol. Evol. 2018, 2, 956–961.

- Tang, Y.; Li, H.; Sun, X.; Morvan, J.M.; Chen, L. Principal curvature measures estimation and application to 3D face recognition. J. Math. Imaging Vis. 2017, 59, 211–233.

- Emambakhsh, M.; Evans, A. Nasal patches and curves for expression-robust 3D face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 995–1007.

- Samad, M.D.; Iftekharuddin, K.M. Frenet frame-based generalized space curve representation for pose-invariant classification and recognition of 3-D face. IEEE Trans. Hum. Mach. Syst. 2016, 46, 522–533.

- Bærentzen, J.A. Guide to Computational Geometry Processing; Springer: London, UK, 2012.

- Callens, S.J.P.; Zadpoor, A.A. From flat sheets to curved geometries: Origami and kirigami approaches. Mater. Today 2018, 21, 241–264.

- Gray, A. Modern differential geometry of curves and surfaces with mathematica. Comput. Math. Appl. 1998, 36, 121.

- Lindeberg, T. Generalized Gaussian Scale-Space Axiomatics Comprising Linear Scale-Space, Affine Scale-Space and Spatio-Temporal Scale-Space. J. Math. Imaging Vis. 2011, 40, 36–81.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Burger, W.; Burge, M. Principles of Digital Image Processing: Advanced Methods; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

- Mokhtarian, F.; Mackworth, A.K. A theory of multiscale, curvature-based shape representation for planar curves. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 789–805.

- Mokhtarian, F.; Mackworth, A.K. Scale-Based Description and recognition of planar curves and two-dimensional shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 34–43.

- Hennig, M.; Mertsching, B. Box filtering for real-time curvature scale-space computation. J. Phys. Conf. Ser. 2021, 1958, 012020.

- Zeng, J.; Liu, M.; Fu, X.; Gu, R.; Leng, L. Curvature Bag of Words Model for Shape Recognition. IEEE Access 2019, 7, 57163–57171.

- Gong, Y.; Goksel, O. Weighted mean curvature. Signal Processing 2019, 164, 329–339.

- Tan, W.; Zhou, H.; Song, J.; Li, H.; Yu, Y.; Du, J. Infrared and visible image perceptive fusion through multi-level Gaussian curvature filtering image decomposition. Appl. Opt. 2019, 58, 3064.

- Mokhtarian, F.; Suomela, R. Robust image corner detection through curvature scale space. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1376–1381.

- Bakar, S.A.; Hitam, M.S.; Yussof, W.N.J.H.W.; Mukta, M.Y. Shape Corner Detection through Enhanced Curvature Properties. In Proceedings of the 2020 Emerging Technology in Computing, Communication and Electronics (ETCCE), Dhaka, Bangladesh, 21–22 December 2020.

- Sundararajan, D. Discrete Wavelet Transform: A Signal Processing Approach; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Xu, W.; Ding, K.; Liu, J.; Cao, M.; Radzieński, M.; Ostachowicz, W. Non-uniform crack identification in plate-like structures using wavelet 2D modal curvature under noisy conditions. Mech. Syst. Signal Processing 2019, 126, 469–489.

- Janeliukstis, R.; Rucevskis, S.; Wesolowski, M.; Chate, A. Experimental structural damage localization in beam structure using spatial continuous wavelet transform and mode shape curvature methods. Measurement 2017, 102, 253–270.

- Bao, L.; Cao, Y.; Zhang, X. Intelligent Identification of Structural Damage Based on the Curvature Mode and Wavelet Analysis Theory. Adv. Civ. Eng. 2021, 2021, 8847524.

- Teimoori, T.; Mahmoudi, M. Damage detection in connections of steel moment resisting frames using proper orthogonal decomposition and wavelet transform. Measurement 2020, 166, 108188.

- Karami, V.; Chenaghlou, M.R.; Gharabaghi, A.R.M. A combination of wavelet packet energy curvature difference and Richardson extrapolation for structural damage detection. Appl. Ocean Res. 2020, 101, 102224.

- Zhang, X.; Wu, J.; Meng, M. Small Target Recognition Using Dynamic Time Warping and Visual Attention. Comput. J. 2020, 65, 203–216.

- Li, J.; Ma, H.; Lv, Y.; Zhao, D.; Liu, Y. Finger vein feature extraction based on improved maximum curvature description. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019.

- Viola, P.; Jones, M. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Patel, S.P.; Upadhyay, S.H. Euclidean distance based feature ranking and subset selection for bearing fault diagnosis. Expert Syst. Appl. 2020, 154, 113400.

- Powers, D. Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness & Correlation. J. Mach. Learn. Technol. 2011, 2, 37–63.

- Rahmad, C.; Arai, K.; Asmara, R.A.; Ekojono, E.; Putra, D.R.H. Comparison of Geometric Features and Color Features for Face Recognition. Int. J. Intell. Eng. Syst. 2021, 14, 541–551.

- Huixian, Y.; Gan, W.; Chen, F.; Zeng, J. Cropped and Extended Patch Collaborative Representation Face Recognition for a Single Sample Per Person. Autom. Control Comput. Sci. 2019, 53, 550–559.

- Lin, J.; Te Chiu, C. Low-complexity face recognition using contour-based binary descriptor. IET Image Process. 2017, 11, 1179–1187.

- Phornchaicharoen, A.; Padungweang, P. Face recognition using transferred deep learning for feature extraction. In Proceedings of the 2019 Joint International Conference on Digital Arts, Media and Technology with ECTI Northern Section Conference on Electrical, Electronics, Computer and Telecommunications Engineering (ECTI DAMT-NCON), Nan, Thailand, 30 January–2 February 2019.

- Deng, W.; Hu, J.; Guo, J. Face Recognition via Collaborative Representation: Its Discriminant Nature and Superposed Representation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2513–2521.

- Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust Face Recognition via Sparse Representation. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 210–227.

| k-NN | Accuracy (%) | Training Time (s) |
|---|---|---|
| k = 1 | 91.00 | 13.47 |
| k = 3 | 89.20 | 17.02 |
| k = 5 | 85.50 | 17.31 |
| k = 7 | 84.10 | 14.69 |
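The k-NN rows above, and the 1-NN classifier used throughout the results, rely on nearest-neighbor matching of feature vectors. A minimal Euclidean-distance sketch follows, assuming the feature vectors are already extracted and that voting ties go to the smallest label; it is an illustration, not the authors' code, and it does not reproduce the reported training times.

```python
import numpy as np


def knn_predict(train_X, train_y, test_X, k=1):
    """Predict labels for test_X by majority vote among the k nearest training vectors."""
    # Squared Euclidean distance between every test vector and every training vector.
    d2 = ((test_X[:, None, :] - train_X[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1, kind="stable")[:, :k]
    votes = train_y[nearest]
    preds = []
    for row in votes:
        labels, counts = np.unique(row, return_counts=True)
        preds.append(labels[np.argmax(counts)])  # ties resolve to the smallest label
    return np.array(preds)


def accuracy_percent(y_true, y_pred):
    """Recognition accuracy in percent."""
    return 100.0 * np.mean(y_true == y_pred)
```

Setting `k=1` gives the 1-NN classifier; larger `k` values correspond to the other rows of the table.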

| Abbr. | Method |
|---|---|
| K | Gaussian curvature |
| H | mean curvature |
| X | max principal curvature |
| N | min principal curvature |
| SS + K/H/X/N | scale space with curvature (Gaussian, mean, max principal, and min principal) |
| O-L | octave level in scale space |
| A# | approximation coefficient in DWT from # (decomposition level) |
| Hr# | horizontal coefficient in DWT from # (decomposition level) |
| V# | vertical coefficient in DWT from # (decomposition level) |
| D# | diagonal coefficient in DWT from # (decomposition level) |
| Sym2 | Symlet wavelet with 2 vanishing moments |
| Db2 | Daubechies wavelet with 2 vanishing moments |
| Bior2.2 | biorthogonal wavelet with 2 vanishing moments for synthesis and analysis |
| 1-NN | k-nearest neighbor with k = 1 |
| SVM-1 | support vector machine with linear kernel |
| SVM-2 | support vector machine with polynomial kernel |

| Classifier | Base | K | H | X | N |
|---|---|---|---|---|---|
| 1-NN | 58.92 | 15.88 | 32.58 | 47.42 | 51.36 |
| SVM-1 | 66.36 | 31.80 | 51.61 | 59.91 | 58.99 |
| SVM-2 | 44.70 | 25.81 | 30.41 | 34.56 | 22.12 |

| Classifier | Method | O-L 0-1 | O-L 0-2 | O-L 0-3 | O-L 1-1 | O-L 1-2 | O-L 1-3 |
|---|---|---|---|---|---|---|---|
| 1-NN | SS | 54.84 | 54.10 | 50.41 | 48.36 | 45.90 | 43.32 |
|  | SS + K | 41.24 | 43.89 | 45.90 | 25.32 | 29.10 | 29.29 |
|  | SS + H | 63.20 | 64.49 | 63.32 | 53.78 | 52.35 | 51.89 |
|  | SS + X | 63.85 | 64.06 | 64.70 | 57.58 | 56.43 | 51.77 |
|  | SS + N | 66.41 | 65.35 | 65.46 | 46.38 | 45.88 | 42.86 |
| SVM-1 | SS | 69.59 | 69.59 | 69.12 | 68.20 | 67.28 | 66.82 |
|  | SS + K | 47.93 | 53.92 | 57.60 | 49.77 | 42.40 | 36.41 |
|  | SS + H | 64.06 | 64.52 | 65.44 | 74.65 | 64.98 | 60.37 |
|  | SS + X | 65.44 | 64.06 | 63.13 | 76.04 | 72.81 | 64.98 |
|  | SS + N | 67.74 | 66.82 | 66.82 | 60.83 | 50.23 | 45.16 |
| SVM-2 | SS | 49.31 | 40.55 | 40.55 | 24.42 | 25.81 | 18.89 |
|  | SS + K | 35.02 | 38.71 | 35.48 | 22.12 | 26.73 | 35.02 |
|  | SS + H | 43.78 | 59.91 | 54.38 | 35.48 | 32.26 | 26.27 |
|  | SS + X | 43.78 | 36.41 | 36.87 | 29.49 | 29.03 | 33.18 |
|  | SS + N | 53.00 | 52.53 | 48.85 | 33.18 | 33.64 | 33.64 |
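The octave-level (O-L) labels in the table above index a Gaussian scale space (octaves 0 and 1, levels 1 to 3). A simplified, SIFT-inspired sketch is shown below. The base sigma of 1.6, three levels per octave, the `sigma0 * 2 ** (l / n_levels)` schedule, and starting each new octave from the last level of the previous one downsampled by 2 are assumptions borrowed from common practice, not parameters confirmed by the paper. Each returned image would then be passed to a curvature function to obtain the SS + K/H/X/N features.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img, sigma):
    """Separable Gaussian blur; borders are handled by edge replication."""
    k = gaussian_kernel_1d(sigma)
    r = k.size // 2
    padded = np.pad(np.asarray(img, dtype=float), r, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def scale_space(img, n_octaves=2, n_levels=3, sigma0=1.6):
    """Return {(octave, level): blurred image}, keyed like the O-L labels 0-1 ... 1-3."""
    levels = {}
    base = np.asarray(img, dtype=float)
    for o in range(n_octaves):
        for l in range(1, n_levels + 1):
            levels[(o, l)] = gaussian_blur(base, sigma0 * 2 ** (l / n_levels))
        # Next octave: last level of this octave, downsampled by a factor of 2.
        base = levels[(o, n_levels)][::2, ::2]
    return levels
```

For a 13 × 49 eye crop, octave 0 keeps the full size while octave 1 works at 7 × 25, which is one plausible reason the finer-scale levels differ from the coarser ones in the table.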

| Classifier | DWT | A1 | V1 | Hr1 | D1 | A2 | V2 | Hr2 | D2 |
|---|---|---|---|---|---|---|---|---|---|
| 1-NN | Haar | 58.06 | 50.71 | 51.64 | 20.07 | 55.44 | 58.87 | 56.87 | 29.82 |
|  | Sym2 | 59.45 | 21.06 | 32.70 | 14.40 | 58.29 | 45.85 | 52.17 | 22.24 |
|  | Db2 | 59.29 | 21.08 | 32.86 | 14.82 | 58.04 | 45.55 | 51.71 | 22.33 |
|  | Bior2.2 | 57.24 | 18.39 | 27.60 | 14.95 | 58.27 | 31.24 | 43.80 | 17.65 |
| SVM-1 | Haar | 67.28 | 58.06 | 55.30 | 41.94 | 70.51 | 56.68 | 54.38 | 43.32 |
|  | Sym2 | 66.36 | 41.47 | 48.85 | 30.88 | 72.81 | 47.00 | 51.15 | 27.19 |
|  | Db2 | 66.36 | 41.47 | 48.85 | 30.88 | 72.81 | 47.00 | 51.15 | 27.19 |
|  | Bior2.2 | 69.12 | 40.55 | 47.00 | 26.27 | 71.43 | 39.17 | 42.86 | 23.50 |
| SVM-2 | Haar | 16.13 | 33.18 | 36.87 | 30.41 | 56.68 | 44.24 | 37.33 | 29.03 |
|  | Sym2 | 16.59 | 24.42 | 29.95 | 26.27 | 51.61 | 35.02 | 43.78 | 27.19 |
|  | Db2 | 16.59 | 24.42 | 29.95 | 26.27 | 51.61 | 35.02 | 43.78 | 27.19 |
|  | Bior2.2 | 20.74 | 28.11 | 28.57 | 20.74 | 41.47 | 26.73 | 29.95 | 23.96 |
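The coefficient labels A1 to D2 in the table above come from a two-level 2-D discrete wavelet transform. PyWavelets (`pywt.dwt2`, `pywt.wavedec2`) provides the Haar, Sym2, Db2, and Bior2.2 transforms; to stay dependency-free, the sketch below hand-codes only the single-level orthonormal Haar case and repeats it on the approximation to reach level 2. Its sign and orientation conventions and its edge padding for odd sizes are assumptions and may differ from PyWavelets' output.

```python
import numpy as np


def haar_dwt2(img):
    """One level of an orthonormal 2-D Haar DWT; returns (A, Hr, V, D)."""
    x = np.asarray(img, dtype=float)
    # Pad odd sizes by repeating the last row/column so 2x2 blocks tile the image.
    x = np.pad(x, ((0, x.shape[0] % 2), (0, x.shape[1] % 2)), mode="edge")
    a = x[0::2, 0::2]  # top-left of each 2x2 block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    A = (a + b + c + d) / 2.0   # approximation (low-low)
    Hr = (a + b - c - d) / 2.0  # horizontal detail: change between rows
    V = (a - b + c - d) / 2.0   # vertical detail: change between columns
    D = (a - b - c + d) / 2.0   # diagonal detail
    return A, Hr, V, D


def haar_wavedec2(img, levels=2):
    """Return {'A1', 'Hr1', 'V1', 'D1', 'A2', ...}; each level decomposes the previous A."""
    out = {}
    approx = img
    for lev in range(1, levels + 1):
        A, Hr, V, D = haar_dwt2(approx)
        out[f"A{lev}"], out[f"Hr{lev}"], out[f"V{lev}"], out[f"D{lev}"] = A, Hr, V, D
        approx = A
    return out
```

For a 49 × 13 eye crop (a 13 × 49 array), level 1 yields 7 × 25 subbands and level 2 yields 4 × 13 subbands, which shows how quickly the detail bands shrink on such small crops.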

| Classifier | DWT | A-K | A-H | A-X | A-N | V-K | V-H | V-X | V-N | Hr-K | Hr-H | Hr-X | Hr-N | D-K | D-H | D-X | D-N |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SVM-1 | Haar Lv1 | 20.28 | 70.51 | 64.06 | 56.22 | 21.66 | 51.61 | 44.24 | 47.47 | 22.12 | 53.92 | 51.15 | 50.69 | 24.88 | 43.78 | 38.25 | 39.63 |
|  | Haar Lv2 | 28.11 | 61.75 | 65.44 | 55.76 | 25.35 | 52.07 | 41.01 | 45.62 | 20.74 | 48.85 | 46.08 | 48.85 | 21.66 | 34.56 | 31.80 | 30.41 |
|  | Sym2 Lv1 | 22.58 | 74.65 | 64.06 | 69.12 | 22.58 | 46.54 | 44.24 | 43.78 | 26.73 | 45.16 | 38.71 | 42.40 | 29.95 | 28.57 | 37.33 | 32.72 |
|  | Sym2 Lv2 | 28.11 | 66.36 | 68.66 | 58.99 | 17.05 | 43.78 | 34.10 | 40.09 | 21.66 | 47.47 | 42.40 | 40.09 | 17.05 | 23.50 | 24.42 | 31.34 |
|  | Db2 Lv1 | 22.58 | 74.65 | 64.06 | 69.12 | 22.58 | 46.54 | 44.24 | 43.78 | 26.73 | 45.16 | 38.71 | 42.40 | 29.95 | 28.57 | 37.33 | 32.72 |
|  | Db2 Lv2 | 28.11 | 66.36 | 68.66 | 58.99 | 17.05 | 43.78 | 34.10 | 40.09 | 21.66 | 47.47 | 42.40 | 40.09 | 17.05 | 23.50 | 24.42 | 31.34 |
|  | Bior2.2 Lv1 | 23.50 | 70.97 | 65.90 | 68.66 | 22.58 | 44.70 | 44.24 | 38.25 | 25.35 | 45.16 | 46.08 | 41.01 | 26.27 | 25.81 | 32.72 | 35.48 |
|  | Bior2.2 Lv2 | 30.41 | 64.52 | 65.90 | 59.91 | 19.82 | 41.47 | 35.94 | 36.41 | 23.96 | 39.17 | 39.17 | 40.09 | 22.12 | 23.96 | 30.41 | 28.57 |

| Classifier | Base | K | H | X | N |
|---|---|---|---|---|---|
| 1-NN | 63.27 | 18.29 | 52.65 | 72.74 | 72.86 |
| SVM-1 | 92.63 | 56.68 | 86.64 | 86.64 | 91.24 |
| SVM-2 | 64.52 | 35.94 | 47.93 | 31.34 | 35.94 |

| Classifier | Method | O-L 0-1 | O-L 0-2 | O-L 0-3 | O-L 1-1 | O-L 1-2 | O-L 1-3 |
|---|---|---|---|---|---|---|---|
| 1-NN | SS | 57.76 | 56.36 | 55.30 | 52.24 | 49.95 | 45.18 |
|  | SS + K | 47.74 | 48.16 | 39.52 | 35.37 | 38.69 | 37.35 |
|  | SS + H | 82.70 | 83.11 | 83.25 | 76.82 | 76.04 | 70.90 |
|  | SS + X | 83.76 | 91.00 | 83.41 | 69.12 | 64.88 | 61.45 |
|  | SS + N | 84.22 | 83.00 | 82.53 | 79.26 | 74.72 | 67.12 |
| SVM-1 | SS | 87.56 | 88.48 | 87.10 | 85.25 | 86.64 | 86.18 |
|  | SS + K | 78.80 | 82.95 | 83.87 | 64.52 | 65.44 | 65.44 |
|  | SS + H | 93.55 | 94.47 | 89.86 | 94.93 | 93.55 | 85.71 |
|  | SS + X | 94.01 | 93.09 | 91.24 | 88.94 | 88.48 | 82.03 |
|  | SS + N | 93.55 | 94.93 | 93.09 | 93.55 | 89.40 | 83.41 |
| SVM-2 | SS | 66.36 | 47.47 | 55.30 | 29.49 | 22.58 | 23.04 |
|  | SS + K | 36.87 | 39.63 | 44.70 | 21.20 | 34.10 | 23.50 |
|  | SS + H | 63.59 | 57.14 | 38.25 | 31.34 | 32.26 | 33.64 |
|  | SS + X | 46.08 | 67.74 | 30.41 | 28.11 | 27.19 | 22.12 |
|  | SS + N | 62.21 | 55.76 | 57.60 | 38.71 | 40.09 | 33.64 |

| Classifier | DWT | A1 | V1 | Hr1 | D1 | A2 | V2 | Hr2 | D2 |
|---|---|---|---|---|---|---|---|---|---|
| 1-NN | Haar | 63.69 | 60.58 | 77.65 | 24.29 | 61.52 | 78.02 | 77.51 | 33.34 |
|  | Sym2 | 62.76 | 30.16 | 56.47 | 17.14 | 59.03 | 63.29 | 81.13 | 33.06 |
|  | Db2 | 63.02 | 29.26 | 52.67 | 17.10 | 60.00 | 57.93 | 81.50 | 34.06 |
|  | Bior2.2 | 64.03 | 25.53 | 46.38 | 17.26 | 62.26 | 46.96 | 75.23 | 25.46 |
| SVM-1 | Haar | 92.17 | 84.79 | 87.10 | 79.72 | 89.40 | 86.18 | 86.18 | 69.12 |
|  | Sym2 | 91.71 | 73.73 | 83.41 | 74.65 | 91.71 | 86.64 | 91.24 | 70.97 |
|  | Db2 | 91.71 | 73.73 | 83.41 | 74.65 | 91.71 | 86.64 | 91.24 | 70.97 |
|  | Bior2.2 | 91.71 | 76.96 | 83.87 | 69.59 | 90.78 | 80.18 | 87.56 | 57.60 |
| SVM-2 | Haar | 35.48 | 43.32 | 40.55 | 34.56 | 60.83 | 56.22 | 48.39 | 32.26 |
|  | Sym2 | 26.73 | 36.87 | 35.02 | 23.96 | 59.45 | 41.47 | 45.62 | 35.02 |
|  | Db2 | 26.73 | 36.87 | 35.02 | 23.96 | 59.45 | 41.47 | 45.62 | 35.02 |
|  | Bior2.2 | 29.03 | 37.79 | 35.94 | 31.80 | 56.68 | 32.26 | 44.24 | 29.49 |

| Classifier | DWT | A-K | A-H | A-X | A-N | V-K | V-H | V-X | V-N | Hr-K | Hr-H | Hr-X | Hr-N | D-K | D-H | D-X | D-N |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SVM-1 | Haar Lv1 | 38.25 | 98.61 | 95.39 | 96.77 | 37.79 | 90.78 | 87.56 | 89.86 | 40.09 | 92.63 | 92.63 | 91.71 | 47.93 | 80.65 | 82.95 | 82.95 |
|  | Haar Lv2 | 34.10 | 88.48 | 82.95 | 87.56 | 33.64 | 92.17 | 82.49 | 88.02 | 30.88 | 90.78 | 89.86 | 84.79 | 27.19 | 71.43 | 59.91 | 65.44 |
|  | Sym2 Lv1 | 43.78 | 98.16 | 96.31 | 97.24 | 43.32 | 88.48 | 84.79 | 85.25 | 42.40 | 94.01 | 94.01 | 91.24 | 48.39 | 72.81 | 76.96 | 69.59 |
|  | Sym2 Lv2 | 41.94 | 94.01 | 86.64 | 94.93 | 30.41 | 82.03 | 84.33 | 81.11 | 35.94 | 92.63 | 88.94 | 92.63 | 27.19 | 70.97 | 60.37 | 65.90 |
|  | Db2 Lv1 | 43.78 | 98.16 | 96.31 | 97.24 | 43.32 | 88.48 | 84.79 | 85.25 | 42.40 | 94.01 | 94.01 | 91.24 | 48.39 | 72.81 | 76.96 | 69.59 |
|  | Db2 Lv2 | 41.94 | 94.01 | 86.64 | 94.93 | 30.41 | 82.03 | 84.33 | 81.11 | 35.94 | 92.63 | 88.94 | 92.63 | 27.19 | 70.97 | 60.37 | 65.90 |
|  | Bior2.2 Lv1 | 34.56 | 96.77 | 96.77 | 97.24 | 40.09 | 87.10 | 79.26 | 83.41 | 35.94 | 93.09 | 90.78 | 93.09 | 44.70 | 68.20 | 70.97 | 67.74 |
|  | Bior2.2 Lv2 | 34.56 | 93.09 | 89.40 | 94.01 | 29.49 | 72.81 | 67.28 | 69.12 | 32.72 | 90.78 | 90.32 | 87.10 | 26.73 | 52.07 | 49.77 | 51.61 |

| Region | Method | Accuracy (%), Limited EYB (434 Images) | Accuracy (%), Whole EYB (2242 Images) |
|---|---|---|---|
| Eyes | base | 66.36 | 74.04 |
|  | SS + H | 74.65 | 85.37 |
|  | SS + X | 76.04 | 83.41 |
|  | Sym2(A) + H | 74.65 | 96.88 |
|  | Sym2(A) + X | 64.06 | 96.52 |
|  | Db2(A) + H | 74.65 | 96.88 |
|  | Db2(A) + X | 64.06 | 96.52 |
| Eyebrows | base | 92.63 | 79.30 |
|  | SS + H | 94.93 | 83.23 |
|  | SS + N | 94.93 | 83.59 |
|  | Sym2(A) + H | 98.16 | 83.59 |
|  | Sym2(A) + X | 96.31 | 93.22 |
|  | Db2(A) + H | 98.16 | 83.59 |
|  | Db2(A) + X | 96.31 | 93.22 |

| Dataset | Method | Accuracy (%) | Total Images (Classes/Testing per Class/Training per Class) |
|---|---|---|---|
| RFFMDS v1.0 | Eyes * | 81.25 | 160 (8/10/10) |
|  | Eyebrows * | 95.00 | 160 (8/10/10) |
|  | Lionnie [32] | 87.50 | 176 (8/2/20) |
| ABD | Eyes * | 69.05 | 84 (21/2/2) |
|  | Eyebrows * | 57.14 | 84 (21/2/2) |
|  | Eyes * | 39.34 | 244 (61/2/2) |
|  | Eyebrows * | 23.77 | 244 (61/2/2) |
|  | Rahmad [67] on SVM | 74.83 | 687 (90:10-CV) ** |
| EYB | Eyes * | 76.04 | 434 (7/31/31) |
|  | Eyebrows * | 98.61 | 434 (7/31/31) |
|  | Eyes * | 96.88 | 2242 (38/29/30) |
|  | Eyebrows * | 93.22 | 2242 (38/29/30) |
|  | Yang [68] | 93.96 | 70 (10/1/6) |
|  | Lin [69] | 67.42 | 2432 (38/1/63) |
|  | Phornchaicharoen [70] | 96.56 | 2404 (80:20) *** |
|  | Deng [71] on L-SVM | 97.10 | 2432 (38/32/32) |
|  | Wright [72] on SVM | 97.70 | 2432 (38/32/32) |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lionnie, R.; Apriono, C.; Gunawan, D. Eyes versus Eyebrows: A Comprehensive Evaluation Using the Multiscale Analysis and Curvature-Based Combination Methods in Partial Face Recognition. Algorithms 2022, 15, 208. https://doi.org/10.3390/a15060208