Precision-Based Weighted Blending Distributed Ensemble Model for Emotion Classification

Abstract

:1. Introduction

- A precision-based weighted blending distributed ensemble model for emotion classification is proposed and tested on three datasets, as well as on a combination of them.

- The suggested ensemble model can work in a distributed manner using the concepts of Spark’s resilient distributed datasets, which provide quick in-memory processing capabilities and also perform iterative computations in an effective way.

- The proposed ensemble model outperforms other approaches because not only does it consider the probabilities of each class, but also the precision value of each classifier, when generating the final prediction, thus giving greater weight to the classifier that performs well throughout each run.

2. Related Work

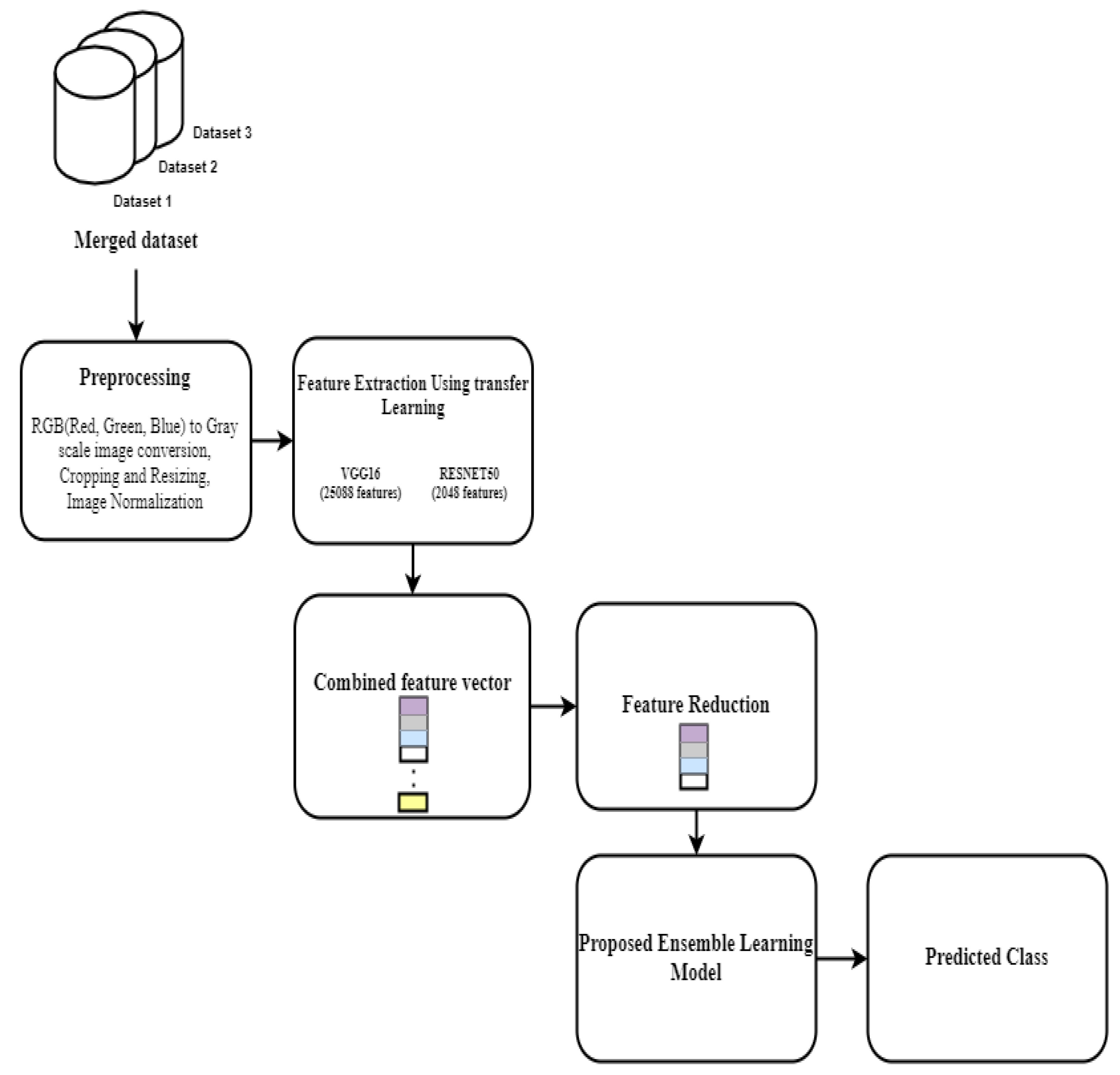

3. The Proposed Method

3.1. Dataset Description

3.2. Preprocessing

3.3. Extracting Features Using Transfer Learning

3.4. Feature Reduction

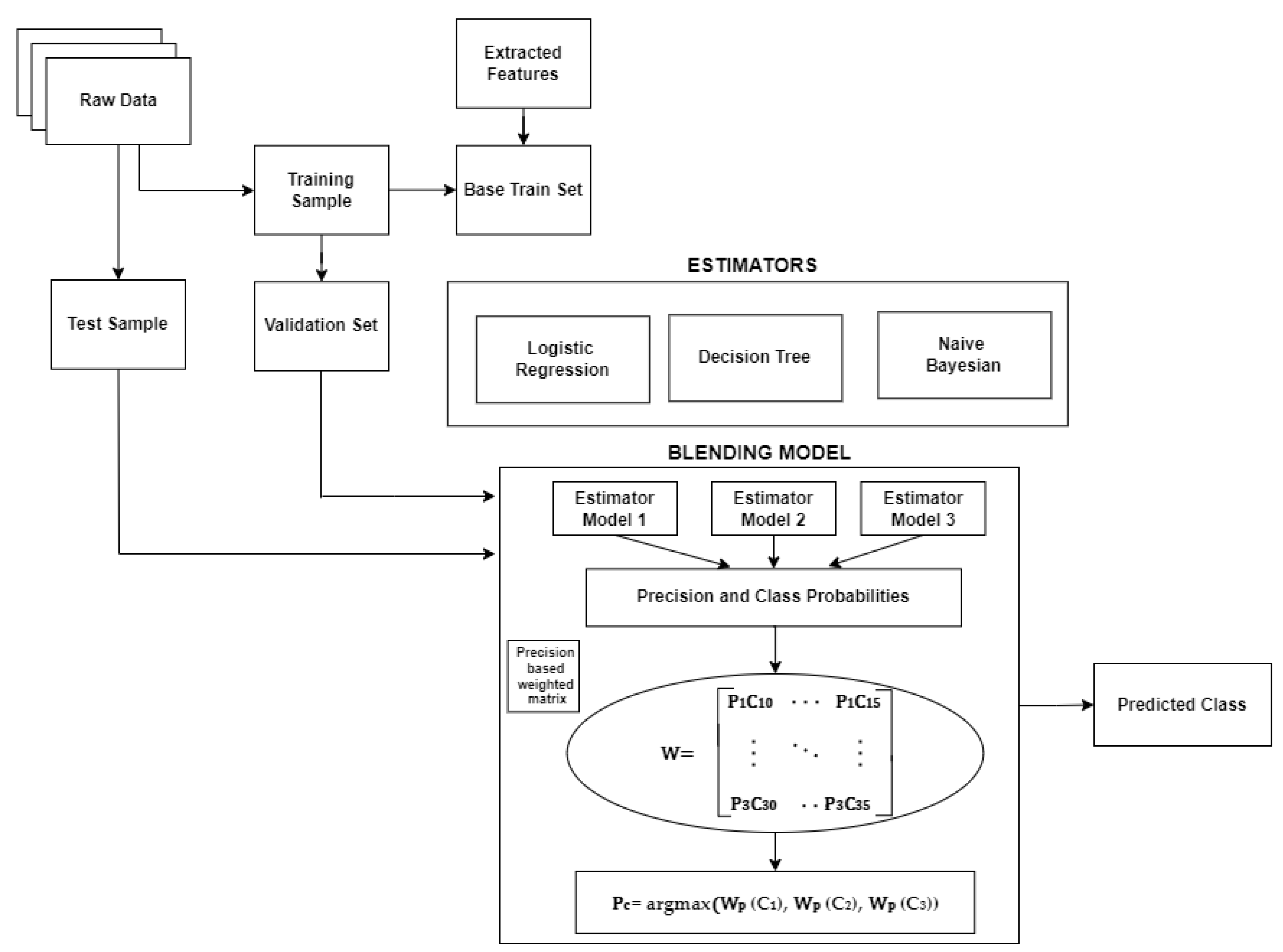

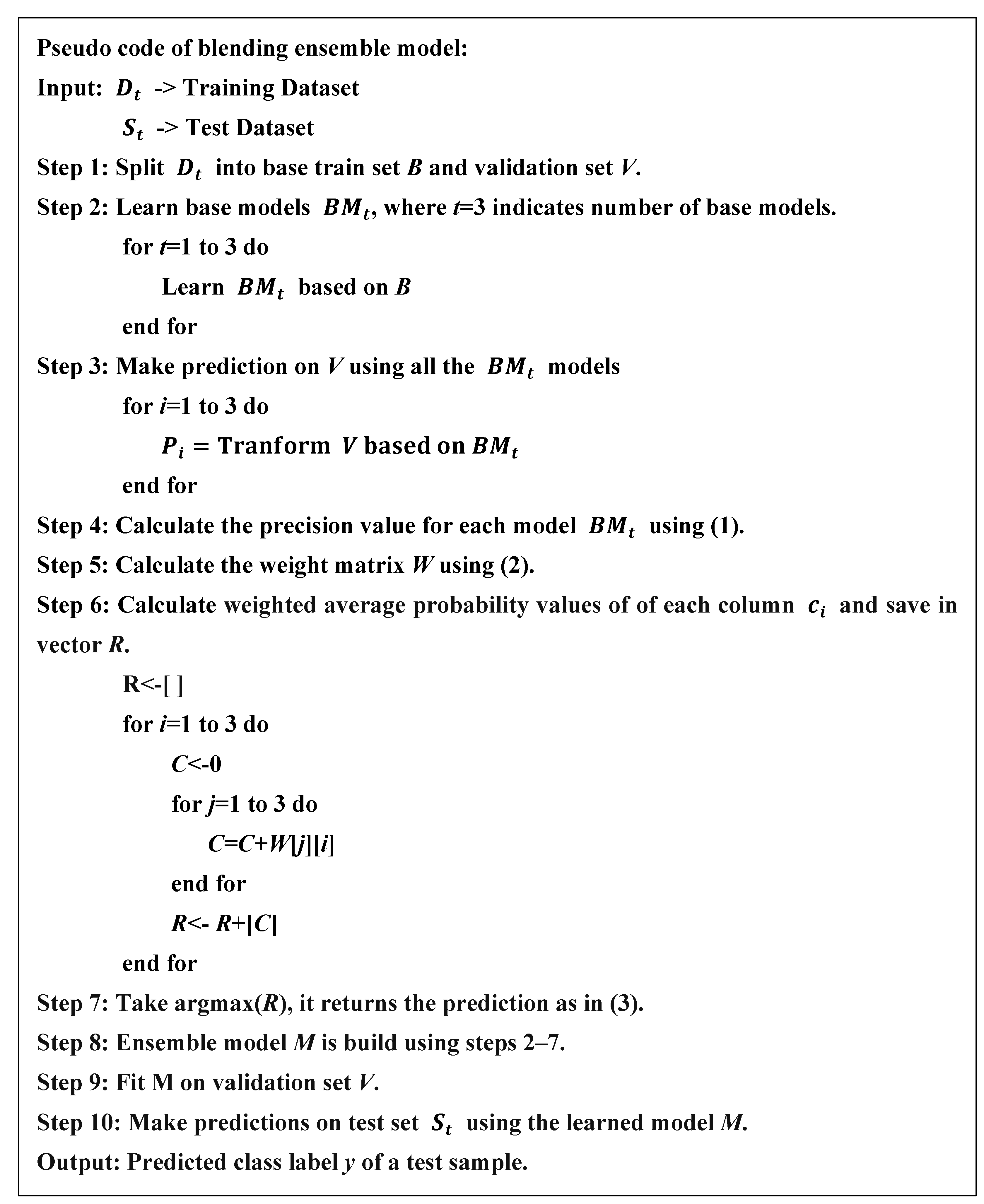

3.5. Precision Based Weighed Blending Ensemble Learning

3.6. Considerations to Explainability Issues of the Proposed Ensemble Model

4. Results

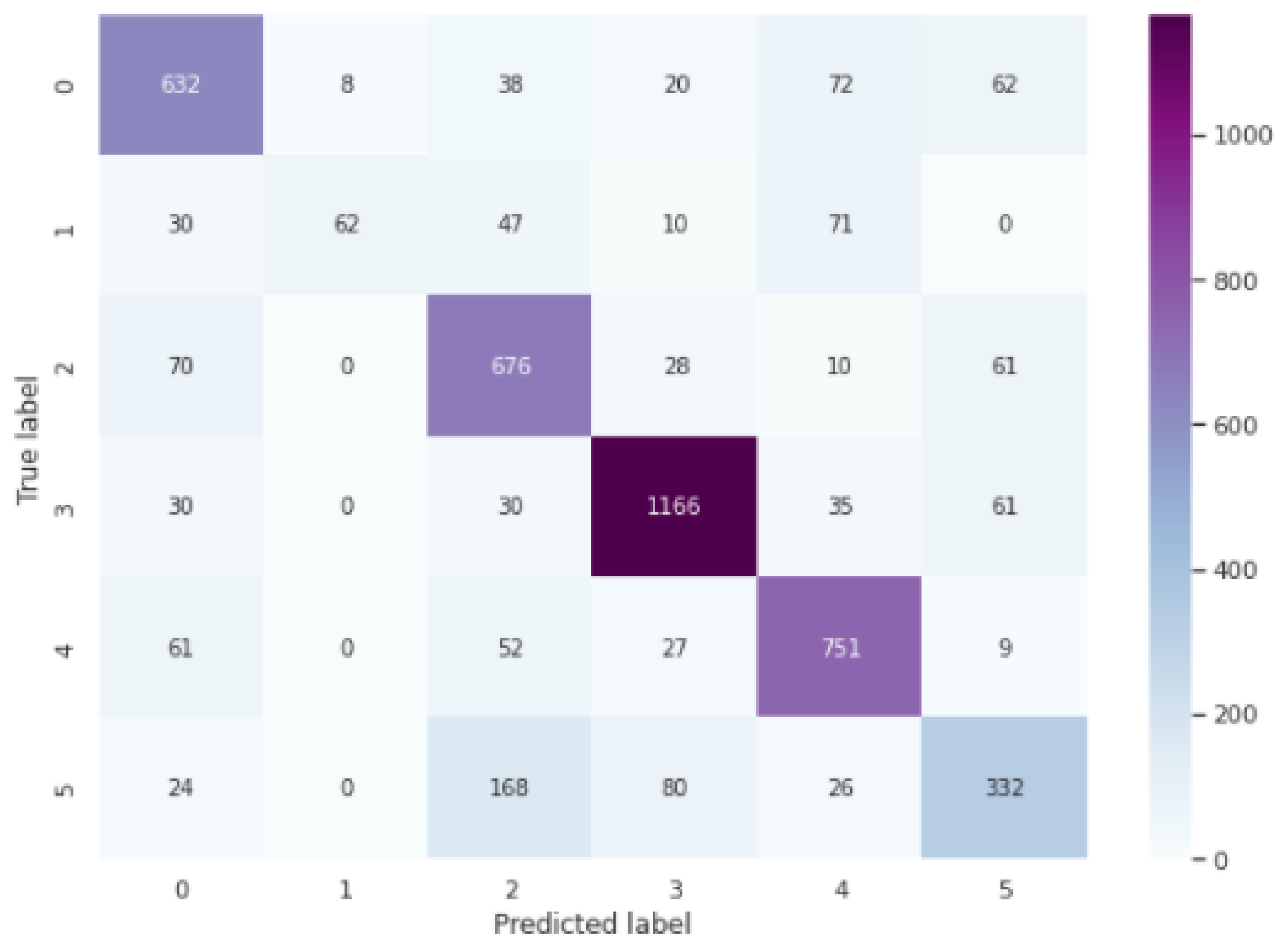

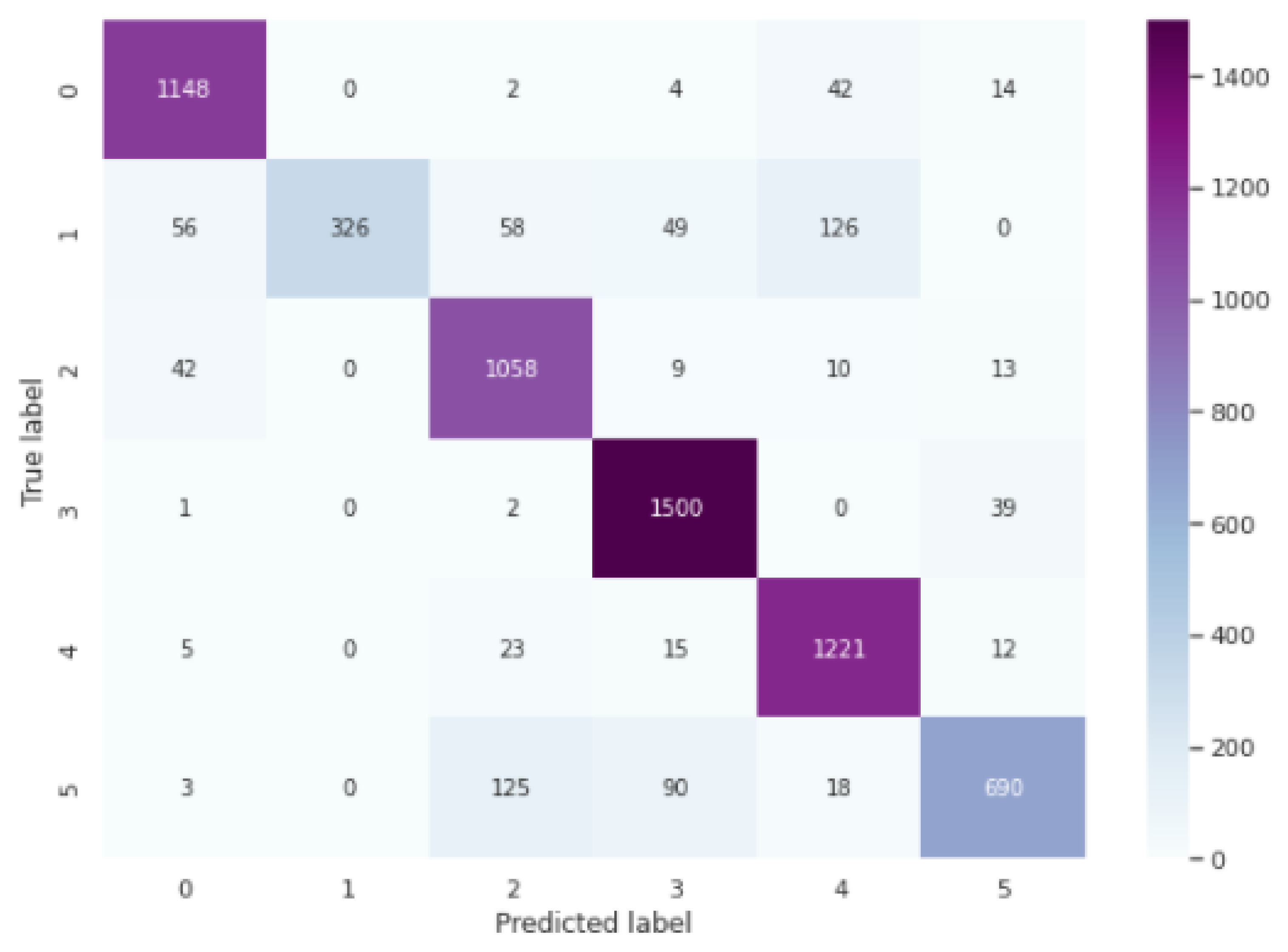

4.1. Classification Accuracy and Confusion Matrix on the FER-2013 Dataset

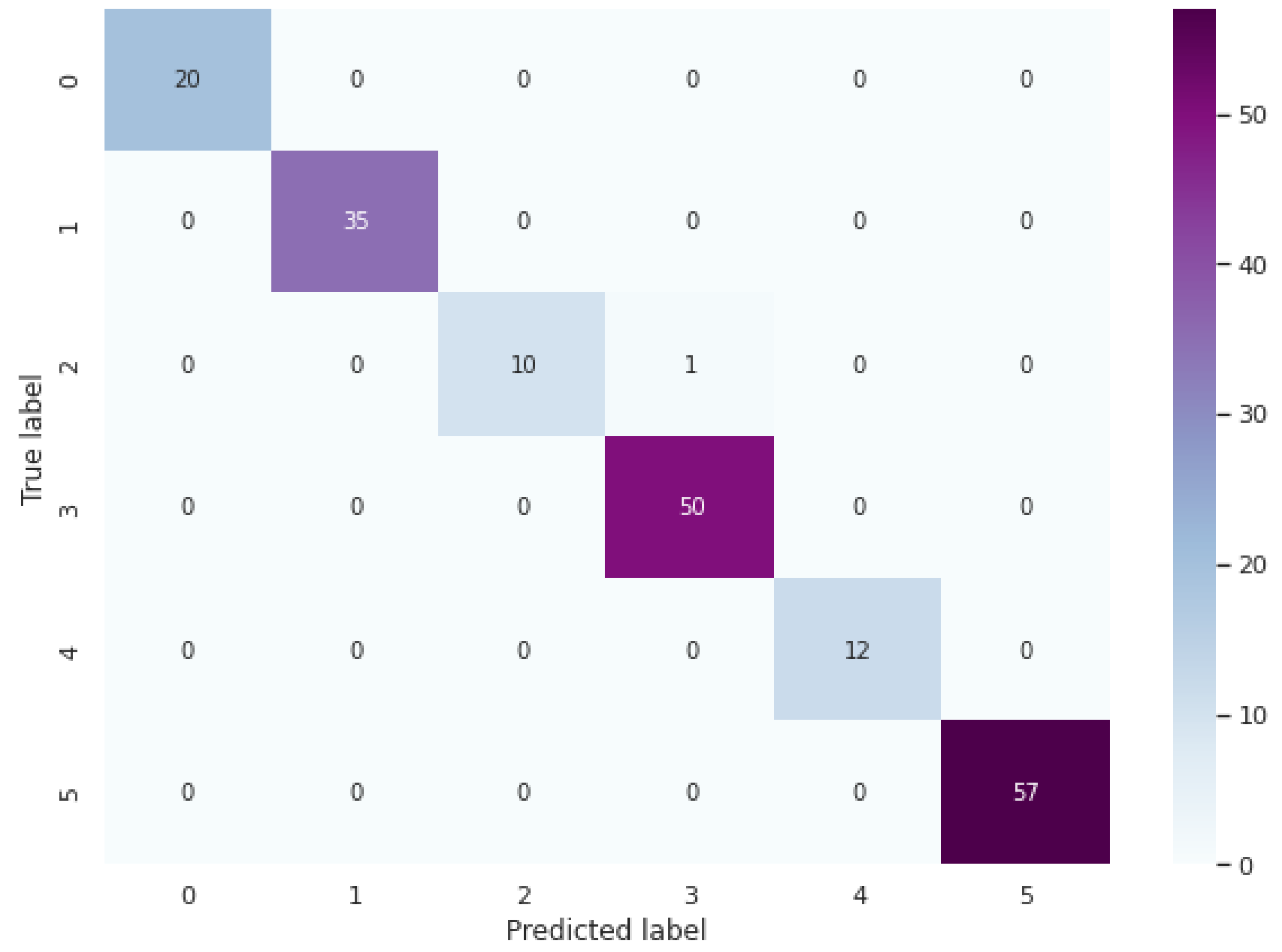

4.2. Classification Accuracy and Confusion Matrix on the CK+ Dataset

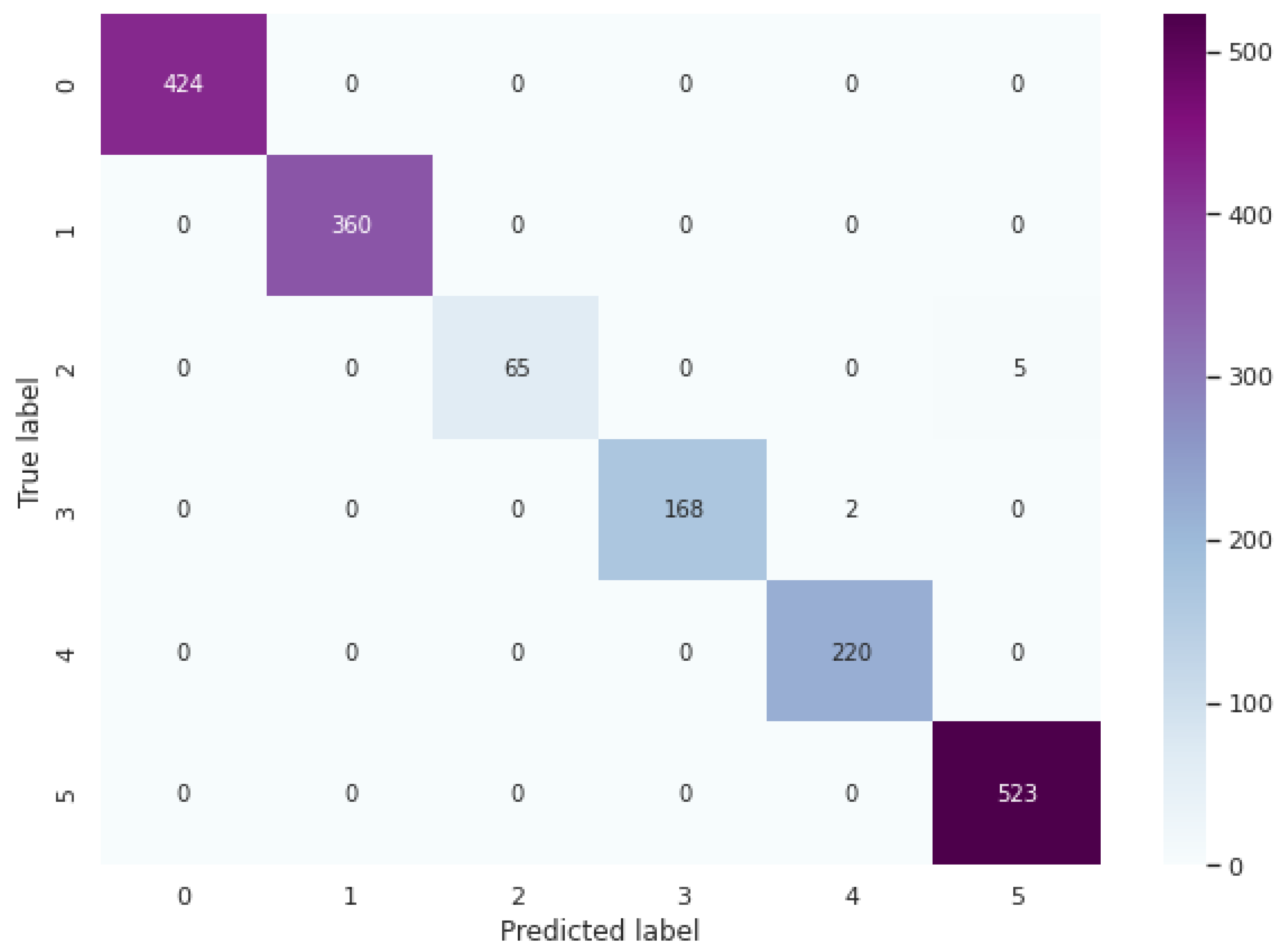

4.3. Classification Accuracy and Confusion Matrix on the FERG-DB Dataset

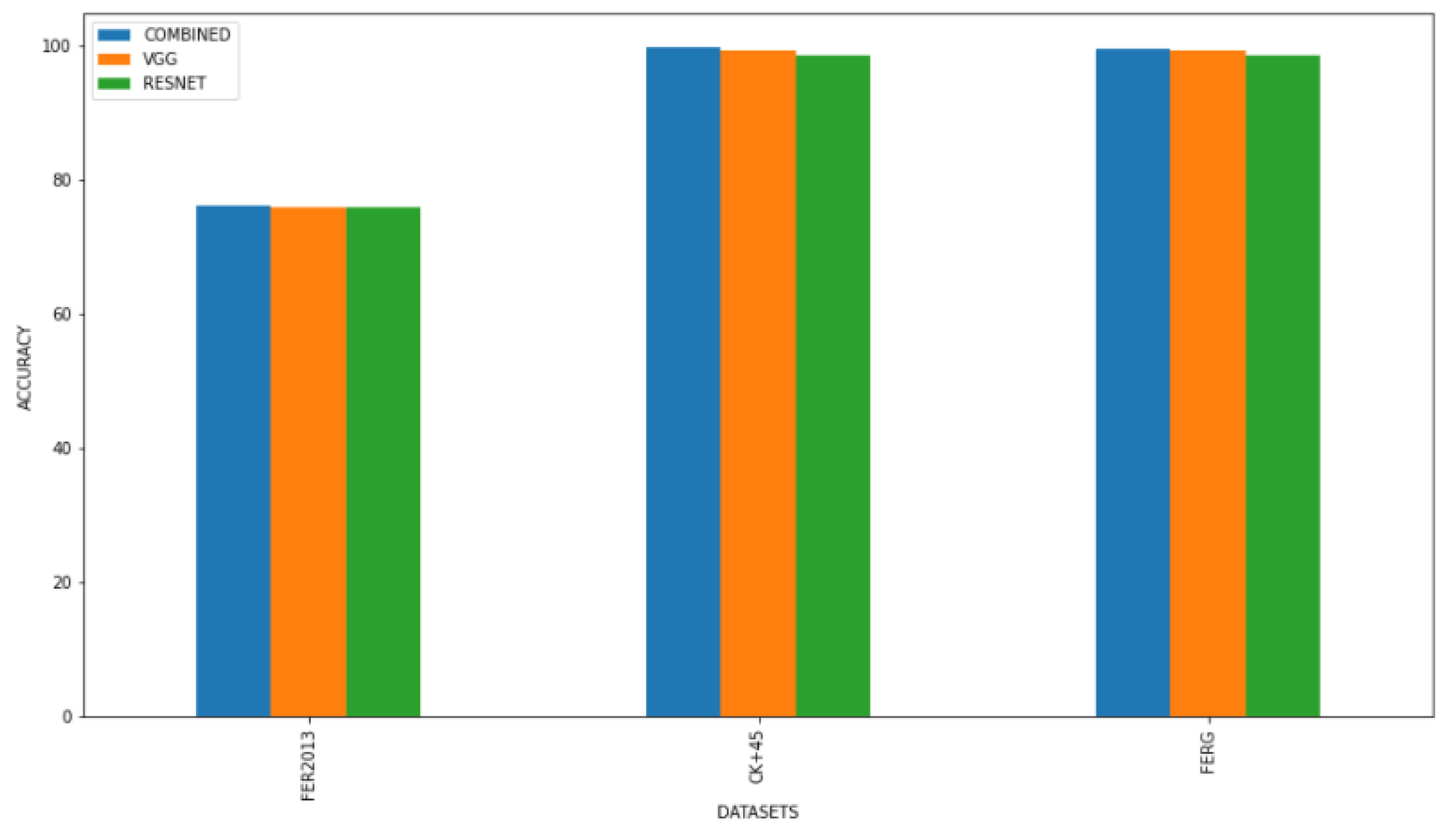

4.4. Accuracy Comparison Based on the Model Used for Transfer Learning on Each Dataset

4.5. Classification Accuracy of Individual Classifiers and the Proposed Approach on the Three Datasets and on the Combined Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- SimilarNet: The European Taskforce for Creating Human–Machine Interfaces Similar to Human–Human Communication. Available online: http://www.similar.cc/ (accessed on 31 December 2021).

- Kayalvizhi, S.; Kumar, S.S. A neural networks approach for emotion detection in humans. IOSR J. Electr. Comm. Engin. 2017, 38–45. [Google Scholar]

- Van den Broek, E.L. Ubiquitous emotion-aware computing. Pers. Ubiquit. Comput. 2013, 17, 53–67. [Google Scholar] [CrossRef] [Green Version]

- Ménard, M.; Richard, P.; Hamdi, H.; Daucé, B.; Yamaguchi, T. Emotion Recognition based on Heart Rate and Skin Conductance. In Proceedings of the 2nd International Conference on Physiological Computing Systems, Angers, France, 13 February 2015; pp. 26–32. [Google Scholar]

- Zheng, W.-L.; Lu, B.-L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Mental Dev. 2017, 7, 162–175. [Google Scholar] [CrossRef]

- Ganaie, M.A.; Hu, M.; Tanveer, M.; Suganthan, P.N. Ensemble deep learning: A review. arXiv 2021, arXiv:2104.02395. [Google Scholar]

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef] [Green Version]

- Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139. [Google Scholar] [CrossRef] [Green Version]

- Halevy, A.; Norvig, P.; Pereira, F. The unreasonable effectiveness of data. IEEE Intell. Syst. 2009, 24, 8–12. [Google Scholar] [CrossRef]

- Ekman, P. An argument for basic emotions. Cogn. Emot. 1992, 6, 169–200. [Google Scholar] [CrossRef]

- Matilda, S. Emotion recognition: A survey. Int. J. Adv. Comp. Res. 2015, 3, 14–19. [Google Scholar]

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161. [Google Scholar] [CrossRef]

- Wiem, M.; Lachiri, Z. Emotion classification in arousal valence model using MAHNOB-HCI database. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 318–323. [Google Scholar]

- Liu, Y.; Sourina, O. EEG Databases for Emotion Recognition. In Proceedings of the 2013 International Conference on Cyberworlds, Yokohama, Japan, 21–23 October 2013; pp. 302–309. [Google Scholar]

- Bahari, F.; Janghorbani, A. EEG-based Emotion Recognition Using Recurrence Plot Analysis and K Nearest Neighbor Classifier. In Proceedings of the 2013 20th Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 18–20 December 2013; pp. 228–233. [Google Scholar]

- Murugappan, M.; Mutawa, A. Facial geometric feature extraction based emotional expression classification using machine learning algorithms. PLoS ONE 2021, 16, e0247131. [Google Scholar]

- Cheng, B.; Liu, G. Emotion Recognition from Surface EMG Signal Using Wavelet Transform and Neural Network. In Proceedings of the 2nd International Conference on Bioinformatics and Biomedical Engineering (ICBBE), Shanghai, China, 16–18 May 2008; pp. 1363–1366. [Google Scholar]

- Kim, J.H.; Poulose, A.; Han, D.S. The extensive usage of the facial Image threshing machine for facial emotion recognition performance. Sensors 2021, 21, 2026. [Google Scholar] [CrossRef] [PubMed]

- Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503. [Google Scholar] [CrossRef] [Green Version]

- Rivera, A.R.; Castillo, J.R.; Chae, O.O. Local directional number pattern for face analysis: Face and expression recognition. IEEE Trans. Image Process. 2013, 22, 1740–1752. [Google Scholar] [CrossRef] [PubMed]

- Moore, S.; Bowden, R. Local binary patterns for multi-view facial expression recognition. Comput. Vis. Image Underst. 2011, 115, 541–558. [Google Scholar] [CrossRef]

- Happy, S.L.; George, A.; Routray, A. A Real Time Facial Expression Classification System Using Local Binary Patterns. In Proceedings of the 2012 4th International Conference on Intelligent Human Computer Interaction (IHCI), Kharagpur, India, 27–29 December 2012; pp. 1–5. [Google Scholar]

- Ghimire, D.; Jeong, S.; Lee, J.; Park, S.H. Facial expression recognition based on local region specific features and support vector machines. Multimed. Tools Appl. 2017, 76, 7803–7821. [Google Scholar] [CrossRef] [Green Version]

- Hammal, Z.; Couvreur, L.; Caplier, A.; Rombaut, M. Facial expression classification: An approach based on the fusion of facial deformations using the transferable belief model. Int. J. Approx. Reason. 2007, 46, 542–567. [Google Scholar] [CrossRef]

- Devi, M.K.; Prabhu, K. Face Emotion Classification Using AMSER with Artificial Neural Networks. In Proceedings of the 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 6–7 March 2020; pp. 148–154. [Google Scholar]

- Corchs, S.; Fersini, E.; Gasparini, F. Ensemble learning on visual and textual data for social image emotion classification. Int. J. Mach. Learn. Cyber. 2019, 10, 2057–2070. [Google Scholar] [CrossRef]

- Nguyen, H.D.; Yeom, S.; Lee, G.S.; Yang, H.J.; Na, I.S.; Kim, S.H. Facial emotion recognition using an ensemble of multi-level convolutional neural networks. Int. J. Pattern Recognit. Artif. Intell. 2019, 33, 1940015. [Google Scholar] [CrossRef]

- Fan, Y.; Lam, J.C.K.; Li, V.O.K. Multi-region Ensemble Convolutional Neural Network for Facial Expression Recognition. In Proceedings of the 27th International Conference on Artificial Neural Networks, Rhodes, Greece, 4–7 October 2018; pp. 84–94. [Google Scholar]

- Poulose, A.; Reddy, C.S.; Kim, J.H.; Han, D.S. Foreground Extraction Based Facial Emotion Recognition Using Deep Learning Xception Model. In Proceedings of the 12th International Conference on Ubiquitous and Future Networks (ICUFN), Jeju Island, Korea, 17–20 August 2021; pp. 356–360. [Google Scholar]

- Poulose, A.; Kim, J.H.; Han, D.S. Feature Vector Extraction Technique for Facial Emotion Recognition Using Facial Landmarks. In Proceedings of the 2021 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea, 20–22 October 2021; pp. 1072–1076. [Google Scholar]

- Meng, X.; Bradley, J.; Yavuz, B.; Sparks, E.; Venkataraman, S.; Liu, D.; Freeman, J.; Tsai, D.B.; Amde, M.; Owen, S.; et al. MLlib: Machine learning in Apache Spark. J. Mach. Learn. Res. 2016, 17, 1235–1241. [Google Scholar]

- Challenges in Representation Learning: Facial Expression Recognition Challenge. Available online: https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge/data (accessed on 31 December 2021).

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The Extended Cohn-Kanade Dataset (CK+): A Complete Dataset for Action Unit and Emotion-specified Expression. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101. [Google Scholar]

- Aneja, D.; Colburn, A.; Faigin, G.; Shapiro, L.; Mones, B. Modeling Stylized Character Expressions via Deep Learning. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 136–153. [Google Scholar]

- Metrics and Scoring: Quantifying the Quality of Predictions. Available online: https://scikit-learn.org/stable/modules/model_evaluation.html#precision-recall-f-measure-metrics (accessed on 31 December 2021).

- Khanzada, A.; Bai, B.; Celepcikay, F.T. Facial expression recognition with deep learning. arXiv 2020, arXiv:2004.11823. [Google Scholar]

- Pramerdorfer, C.; Kampel, M. Facial expression recognition using convolutional neural networks: State of the art. arXiv 2016, arXiv:1612.02903. [Google Scholar]

- Pecoraro, R.; Basile, V.; Bono, V.; Gallo, S. Local multi-head channel self-attention for facial expression recognition. arXiv 2021, arXiv:2111.07224. [Google Scholar]

- Shi, J.; Zhu, S.; Liang, Z. Learning to amend facial expression representation via de-albino and affinity. arXiv 2021, arXiv:2103.10189. [Google Scholar]

- Tang, Y. Deep learning using linear support vector machines. arXiv 2013, arXiv:1306.0239. [Google Scholar]

- Minaee, S.; Minaei, M.; Abdolrashidi, A. Deep-emotion: Facial expression recognition using attentional convolutional network. Sensors 2021, 21, 3046. [Google Scholar] [CrossRef]

- Giannopoulos, P.; Perikos, I.; Hatzilygeroudis, I. Deep Learning Approaches for Facial Emotion Recognition: A Case Study on FER-2013. In Advances in Hybridization of Intelligent Methods; Springer: Cham, Switzerland, 2018; pp. 1–16. [Google Scholar]

- Pourmirzaei, M.; Montazer, G.A.; Esmaili, F. Using self-supervised auxiliary tasks to improve fine-grained facial representation. arXiv 2021, arXiv:2105.06421. [Google Scholar]

- Ding, H.; Zhou, S.K.; Chellappa, R. FaceNet2ExpNet: Regularizing a Deep Face Recognition Net for Expression Recognition. In Proceedings of the 12th IEEE International Conference on Automatic Face & Gesture Recognition, Washington, DC, USA, 30 May–3 June 2017; pp. 118–126. [Google Scholar]

- Han, Z.; Meng, Z.; Khan, A.-S.; Tong, Y. Incremental boosting convolutional neural network for facial action unit recognition. Adv. Neural Inf. Process. Syst. 2016, 29, 109–117. [Google Scholar]

- Meng, Z.; Liu, P.; Cai, J.; Han, S.; Tong, Y. Identity-aware Convolutional Neural Network for Facial Expression Recognition. In Proceedings of the IEEE International Conference on Automatic Face & Gesture Recognition, Washington, DC, USA, 30 May–3 June 2017; pp. 558–565. [Google Scholar]

- Jung, H.; Lee, S.; Yim, J.; Park, S.; Kim, J. Joint Fine-tuning in Deep Neural Networks for Facial Expression Recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2983–2991. [Google Scholar]

- Clément, F.; Piantanida, P.; Bengio, Y.; Duhamel, P. Learning anonymized representations with adversarial neural networks. arXiv 2018, arXiv:1802.09386. [Google Scholar]

- Hang, Z.; Liu, Q.; Yang, Y. Transfer Learning with Ensemble of Multiple Feature Representations. In Proceedings of the IEEE 2018 IEEE 16th International Conference on Software Engineering Research, Management and Applications (SERA), Kunming, China, 13–15 June 2018; pp. 54–61. [Google Scholar]

| SNO | Classification Model | Accuracy % |

|---|---|---|

| 1 | Proposed method (weighted ensemble model) | 76.2 |

| 2 | Ensemble CNN [36] | 75.8 |

| 3 | Ensemble CNN [37] | 75.2 |

| 4 | LHC-Net [38] | 74.42 |

| 5 | VGG [37] | 72.70 |

| 6 | Resnet [37] | 72.40 |

| 7 | Inception [37] | 71.60 |

| 8 | ARM [39] | 71.38 |

| 9 | CNN + SVM [40] | 71.20 |

| 10 | Attentional ConvNet [41] | 70.02 |

| 11 | GoogLeNet [42] | 65.20 |

| SNO | Classification Model | Accuracy % |

|---|---|---|

| 1 | Proposed method (weighted ensemble model) | 99.4 |

| 2 | Using Self-Supervised Auxiliary Tasks to Improve Fine-Grained Facial Representation [43] | 98.23 |

| 3 | FaceNet2ExpNet: Regularizing a Deep Face Recognition Net for Expression Recognition [44] | 98.6 |

| 4 | DTAGN [45] | 97.2 |

| 5 | IACNN [46] | 95.37 |

| 6 | IB-CNN [47] | 95.1 |

| SNO | Classification Model | Accuracy % |

|---|---|---|

| 1 | Proposed method (weighted ensemble model) | 99.6 |

| 2 | Adversarial NN [48] | 98.2 |

| 3 | Ensemble Multi-feature [49] | 97 |

| 4 | DeepExpr [34] | 89.02 |

| SNO | Dataset | Logistic Regression Model | Naïve Bayesian | Decision Tree | Proposed Ensemble Model |

|---|---|---|---|---|---|

| 1 | FER 2013 | 74.2 | 75.46 | 76.1 | 76.2 |

| 2 | CK+ | 98.9 | 99.12 | 99.38 | 99.4 |

| 3 | FERG-DB | 99.02 | 99.4 | 99.52 | 99.6 |

| 4 | Combined | 87.5 | 87.98 | 88.2 | 88.68 |

| SNO | Dataset | Precision % | Recall % | F1-Score % |

|---|---|---|---|---|

| 1 | FER-2013 | 77.01 | 76.95 | 75.9 |

| 2 | CK+ | 98.96 | 98.47 | 98.68 |

| 3 | FERG-DB | 99.5 | 99.57 | 99.5 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Soman, G.; Vivek, M.V.; Judy, M.V.; Papageorgiou, E.; Gerogiannis, V.C. Precision-Based Weighted Blending Distributed Ensemble Model for Emotion Classification. Algorithms 2022, 15, 55. https://doi.org/10.3390/a15020055

Soman G, Vivek MV, Judy MV, Papageorgiou E, Gerogiannis VC. Precision-Based Weighted Blending Distributed Ensemble Model for Emotion Classification. Algorithms. 2022; 15(2):55. https://doi.org/10.3390/a15020055

Chicago/Turabian StyleSoman, Gayathri, M. V. Vivek, M. V. Judy, Elpiniki Papageorgiou, and Vassilis C. Gerogiannis. 2022. "Precision-Based Weighted Blending Distributed Ensemble Model for Emotion Classification" Algorithms 15, no. 2: 55. https://doi.org/10.3390/a15020055

APA StyleSoman, G., Vivek, M. V., Judy, M. V., Papageorgiou, E., & Gerogiannis, V. C. (2022). Precision-Based Weighted Blending Distributed Ensemble Model for Emotion Classification. Algorithms, 15(2), 55. https://doi.org/10.3390/a15020055