Machine Learning Approaches for Skin Cancer Classification from Dermoscopic Images: A Systematic Review

Abstract

1. Introduction

- Basal cell carcinoma or basalioma (BCC) (Figure 1a). It accounts for about 80% of skin cancer cases and originates in the basal cells, the deepest cells of the epidermis. Basal cell growth is slow, so in most cases BCC is curable and causes minimal damage if diagnosed and treated in time.

- Squamous cell carcinoma or cutaneous spinocellular carcinoma (SCC) (Figure 1b). This accounts for approximately 16% of skin cancers and originates in the squamous cells in the most superficial layer of the epidermis. If detected early it is easily curable, but if neglected it can infiltrate the deeper layers of the skin and spread to other parts of the body.

- Malignant melanoma (MM) (Figure 1c). Originating in the melanocytes of the epidermis, it is the most aggressive malignant skin tumour. It spreads rapidly, metastasises in the early stages, and is difficult to treat, which explains its high mortality rate. It accounts for only 4% of skin cancers but is responsible for about 80% of skin cancer deaths. Only 14% of patients with metastatic melanoma survive for five years [9]. If diagnosed in the early stages it has a 95% cure rate, so early diagnosis greatly improves the chances of survival.

- Shape: computation of area, perimeter, compactness index, rectangularity, bulkiness, major and minor axis length, convex hull, comparison with a circle, eccentricity, Hu’s moment invariants, wavelet invariant moments, Zunic compactness, symmetry maps, symmetry distance, and adaptive fuzzy symmetry distance.

- Color: computation of average, standard deviation, variance, skewness, maximum, minimum, entropy, 1D or 3D color histograms, and the autocorrelogram. In addition, several techniques have been used to group the pixels, namely k-means, Gaussian mixture model (GMM), and multi-thresholding.

- Texture: computation of the gray-level co-occurrence matrix (GLCM), gray-level run-length matrix (GLRLM), local binary patterns (LBP), wavelet and Fourier transforms, fractal dimension, multidimensional receptive field histograms, Markov random fields, and Gabor filters.
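As a rough illustration of the three feature families listed above, the following sketch computes one descriptor from each: a compactness index (shape), first-order statistics of a single colour channel (color), and the contrast of a gray-level co-occurrence matrix (texture). It is a minimal pure-Python sketch and not the implementation used in any of the reviewed works; the function names, the 4πA/P² form of the compactness index (other normalisations exist), the horizontal pixel offset, and the toy 4×4 image are assumptions made for the example.

```python
import math


def compactness(area, perimeter):
    """Compactness index 4*pi*A / P^2 (assumed form): 1.0 for a circle, lower for irregular shapes."""
    return 4.0 * math.pi * area / (perimeter ** 2)


def channel_stats(values):
    """Mean, standard deviation and skewness of one colour channel (population formulas)."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0:
        return mean, 0.0, 0.0
    skew = sum((v - mean) ** 3 for v in values) / (n * std ** 3)
    return mean, std, skew


def glcm(image, levels, dx=1, dy=0):
    """Normalised (non-symmetric) co-occurrence matrix for the pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
                total += 1
    return [[v / total for v in row] for row in counts]


def glcm_contrast(p):
    """Contrast: sum over (i, j) of p[i][j] * (i - j)^2."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


# Hypothetical 4x4 image quantised to 4 gray levels.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
p = glcm(image, levels=4)
print("GLCM contrast:", glcm_contrast(p))
print("Compactness of a unit square:", compactness(area=1.0, perimeter=4.0))
print("Channel stats:", channel_stats([10, 20, 30, 40, 200]))
```

In practice these descriptors are computed on the segmented lesion region and concatenated into a feature vector that is passed to a classifier.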

- Section 2. We present the methodology used to perform the systematic search and describe the main public databases of dermoscopic images relevant to the papers analysed here.

- Section 3. In this section, we discuss and explain several ML and DL methods commonly used for dermoscopic image classification tasks.

- Section 4. We summarise in this section all the research applied to skin lesions on dermoscopic images selected for this paper; those works are categorised according to the approach taken, i.e., ML, DL, and ML/DL hybrid.

- Section 5. In this section, results are discussed.

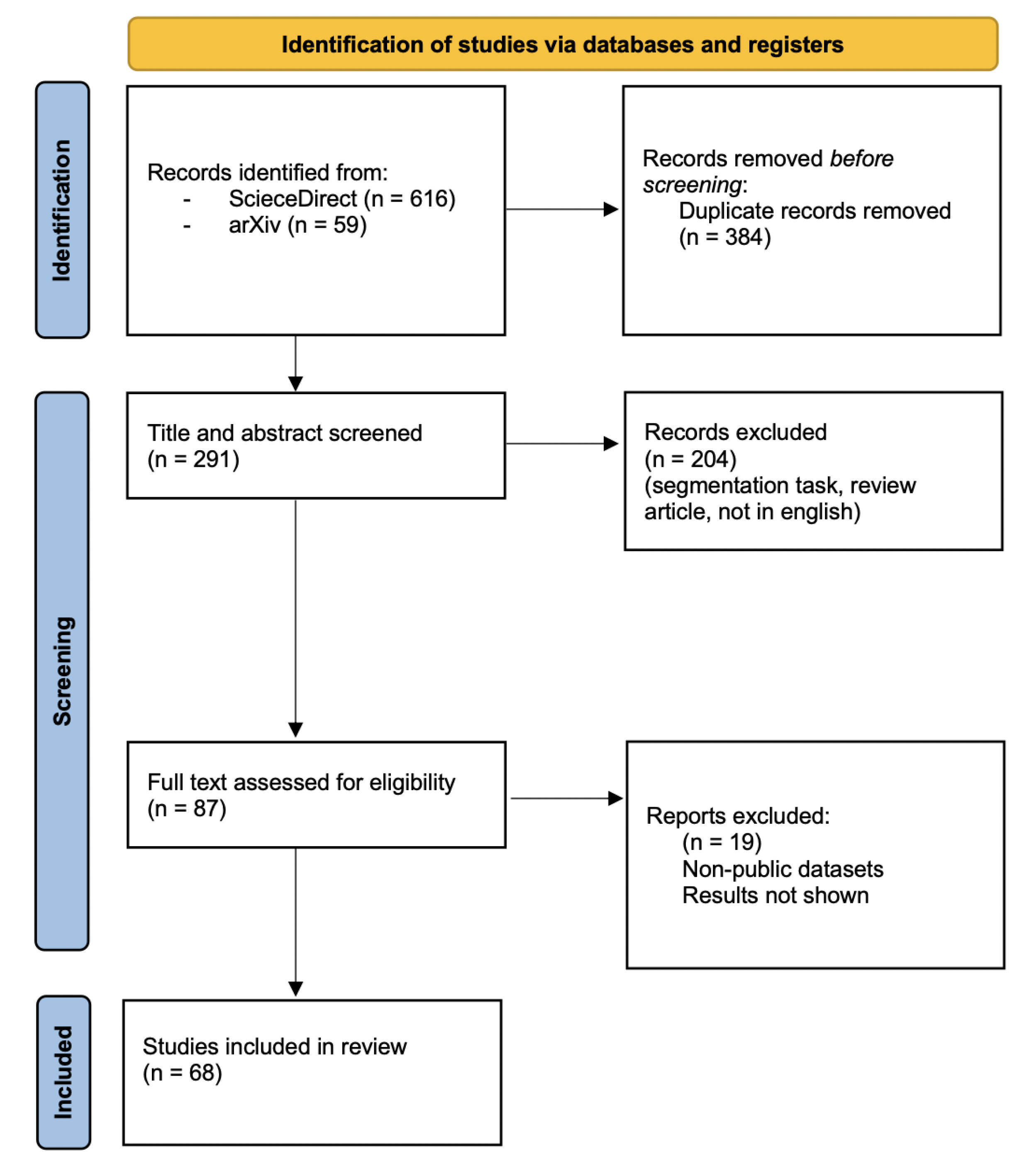

2. Material and Methods

2.1. Search Strategy

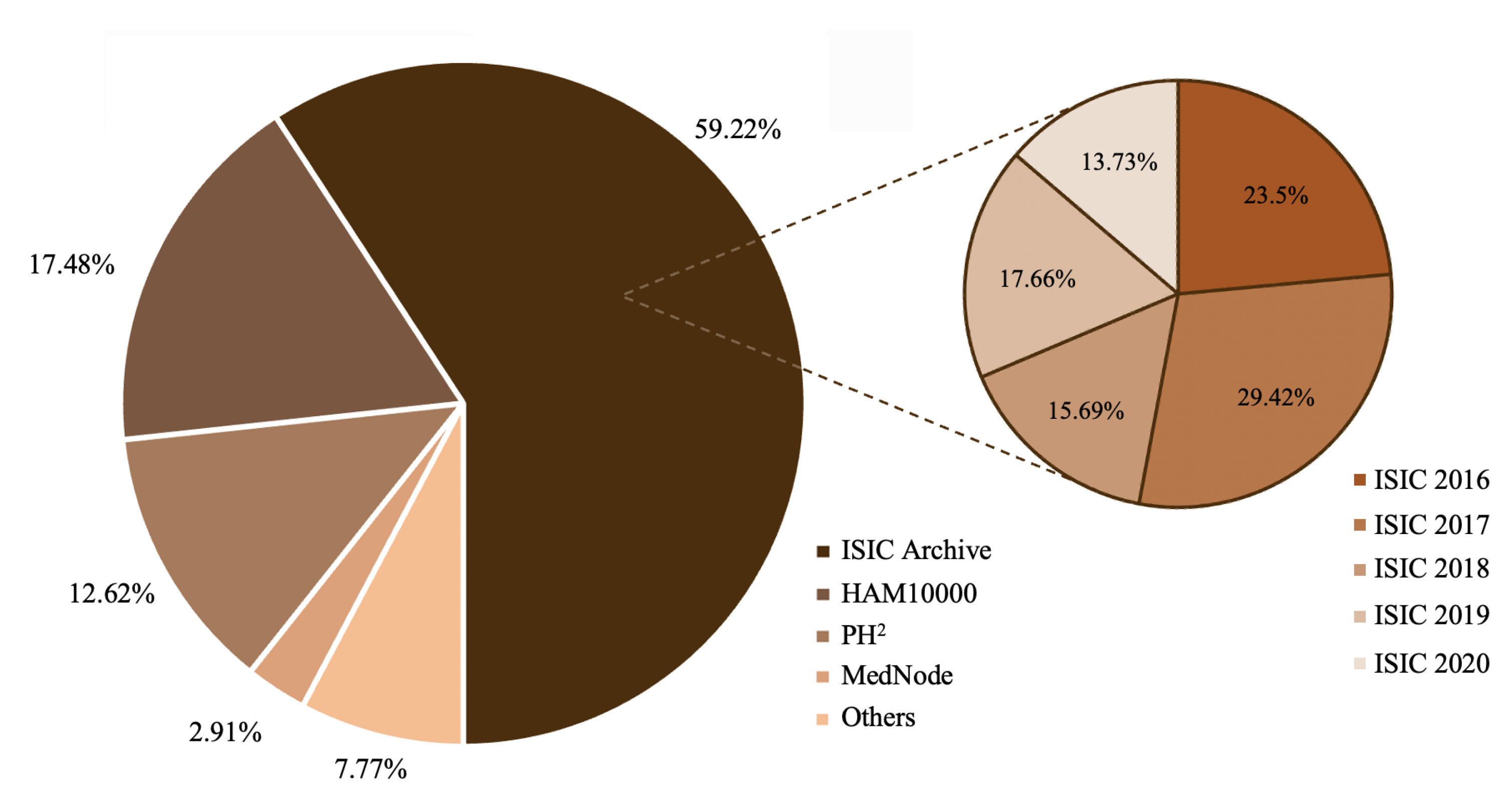

2.2. Common Skin Lesion Databases

- ISIC archive. The ISIC archive [10], which combines several datasets of skin lesions, was originally released by the International Skin Imaging Collaboration in 2016 for the challenge hosted at the International Symposium on Biomedical Imaging (ISBI). Various modifications have been made over the years.

- HAM10000. The human-against-machine dataset (HAM) [57] (available at [58]), which arises from the addition of some images to the ISIC2018 dataset, contains more than 10,000 images with seven different diagnoses collected from two sources: Cliff Rosendahl’s skin cancer practice in Queensland, Australia, and the Dermatology Department of the Medical University of Vienna, Austria.

- PH². The PH² database [59] (available at [60]) acquired at the Dermatology Service of Hospital Pedro Hispano, Matosinhos, Portugal, contains 200 images divided into common nevi, atypical nevi, and melanoma skin cancer images. Together with the images, annotations such as medical segmentation of the pigmented skin lesion, histological and clinical diagnoses, and scores assigned by other dermatological criteria are provided.

| Database | N/AN | CN | MM | SK | BCC | DF | AK | VL | SCC | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| ISIC 2016 [63] | 726 | - | 173 | - | - | - | - | - | - | 899 |
| ISIC 2017 [64] | 1372 | - | 374 | 254 | - | - | - | - | - | 2000 |
| ISIC 2019 [65] | 12,875 | - | 4522 | 2624 | 3323 | 239 | 867 | 253 | 628 | 25,331 |
| ISIC 2020 [66] | 27,124 | 5193 | 584 | 135 | - | - | - | - | - | 33,126 |
| HAM10000 | 6705 | - | 1113 | 1099 | 514 | 115 | 327 | 142 | - | 10,015 |
| PH² | 80 | 80 | 40 | - | - | - | - | - | - | 200 |
| MedNode | 100 | - | 70 | - | - | - | - | - | - | 170 |
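The class counts in the table can be turned into class proportions to see how unbalanced each database is with respect to melanoma. The short sketch below does this arithmetic using the MM and total columns copied from the table; the variable and function names are hypothetical, and the imbalance ratio is only one of several ways to quantify imbalance.

```python
# (melanoma count, total images) per database, copied from the MM and total columns.
counts = {
    "ISIC 2016": (173, 899),
    "ISIC 2017": (374, 2000),
    "ISIC 2019": (4522, 25331),
    "ISIC 2020": (584, 33126),
    "HAM10000": (1113, 10015),
    "PH2": (40, 200),
    "MedNode": (70, 170),
}


def melanoma_share(mm, total):
    """Fraction of images labelled as melanoma."""
    return mm / total


def imbalance_ratio(mm, total):
    """Number of non-melanoma images per melanoma image."""
    return (total - mm) / mm


shares = {name: melanoma_share(mm, total) for name, (mm, total) in counts.items()}

# Print the databases from the most to the least unbalanced.
for name in sorted(shares, key=shares.get):
    mm, total = counts[name]
    print(f"{name:10s} MM share = {100 * shares[name]:5.2f}%  "
          f"non-MM per MM = {imbalance_ratio(mm, total):6.1f}")
```

Proportions of this kind are what motivate the data augmentation and class-weighting strategies often applied before training on these databases.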

3. Artificial Intelligence

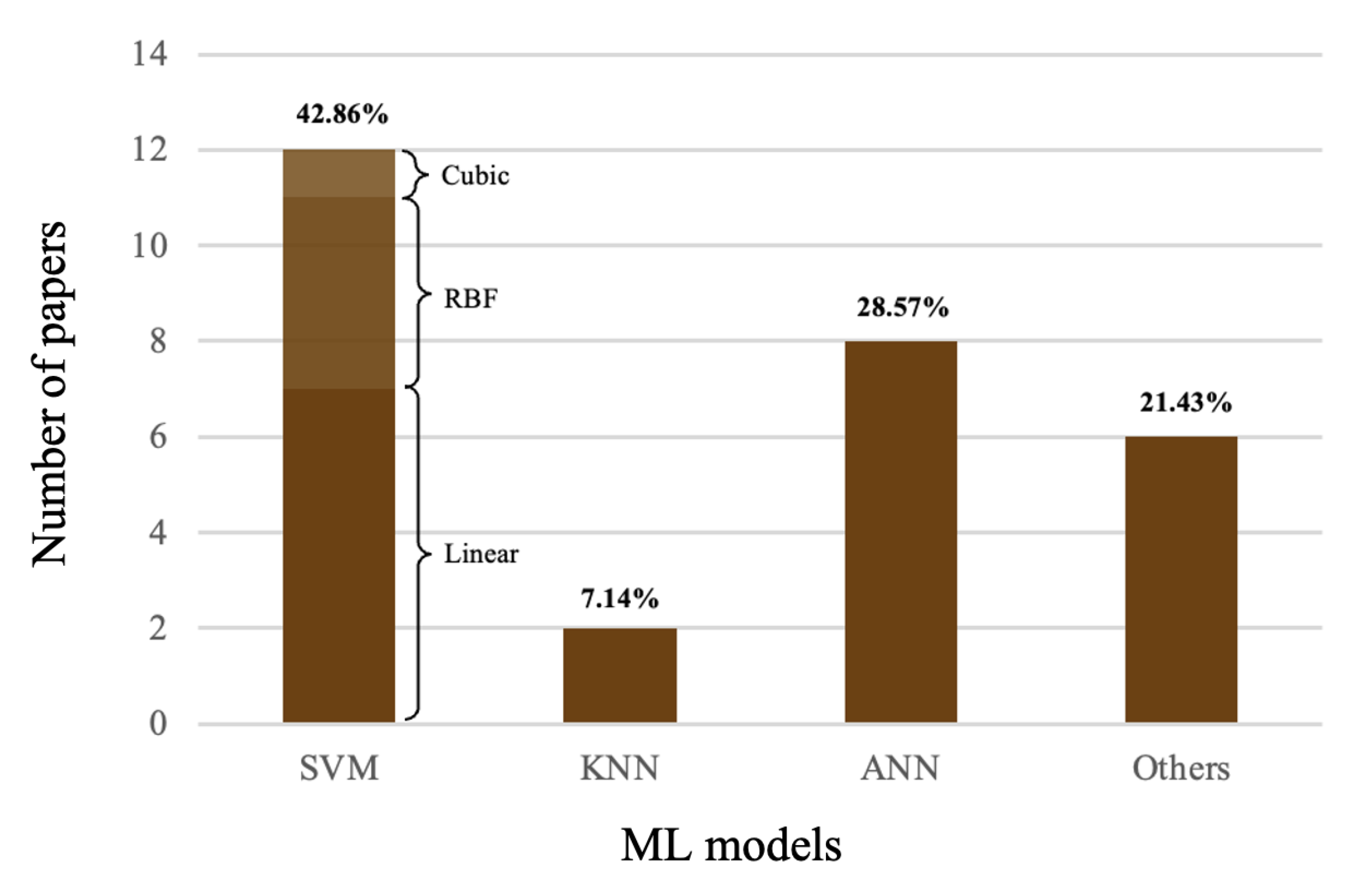

3.1. Machine Learning

3.1.1. Decision Trees

3.1.2. Support Vector Machines

3.1.3. K-Nearest Neighbors

3.1.4. Artificial Neural Networks

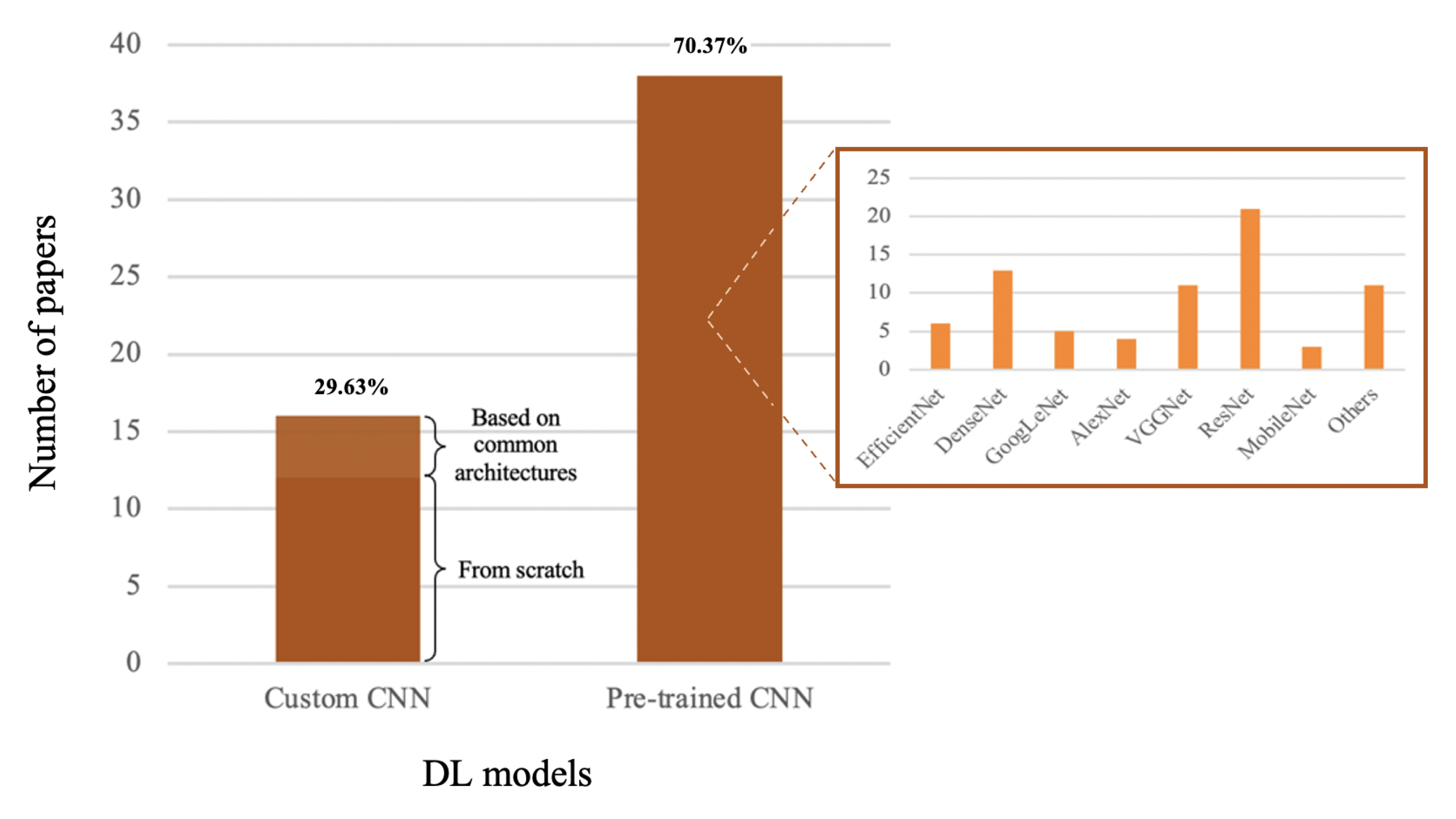

3.2. Deep Learning

- Convolutional layers. Convolutional layers are able to learn local patterns, and this entails two important properties: the learned patterns are translation invariant and the learning extends to spatial hierarchies of patterns. This allows the CNN to efficiently learn increasingly complex visual concepts as the depth of the network increases. Convolutional layers contain a series of filters that run over the input image performing the convolution operation and generate feature maps to be sent to subsequent layers.

- Normalization layers. These are layers for normalising input data by means of a specific function that does not provide any trainable parameters and only acts in forward propagation. The use of those layers has diminished in recent times.

- Regularization layers. These are layers designed to reduce overfitting by randomly ignoring a proportion of neurons during each training iteration. The best-known regularization technique is dropout.

- Pooling layers. Pooling layers subsample the feature maps while retaining the main information they contain, in order to reduce the number of model parameters and the computational cost of subsequent operations. Pooling filters, of which the most used are average pooling and max pooling, slide over the input feature maps in the same way as convolutional filters, but they compute a fixed aggregate (the average or the maximum) over each window rather than a convolution, so they have no trainable parameters.

- Fully connected layers. In these layers, every neuron is connected to all activations of the previous layer. The first fully connected (FC) layer takes as input the feature maps produced by the last convolutional or pooling layer, and the last FC layer acts as the CNN classifier.
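The layer types listed above can be sketched as a toy forward pass. The example below is a minimal pure-Python illustration under several assumptions: a single-channel 6×6 input, one hand-set 3×3 filter, 2×2 max pooling, inverted dropout, and arbitrary FC weights, all hypothetical. It is not a trainable network, and deep-learning frameworks implement the same operations far more efficiently. Note that the "convolution" in CNNs is usually computed as a cross-correlation (the filter is not flipped), which is what `conv2d` does here.

```python
import random


def conv2d(image, kernel):
    """Valid cross-correlation with stride 1: slide the filter and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keeps the largest value per window, no parameters."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]


def dropout(vector, rate, rng, training=True):
    """Inverted dropout: during training, zero each unit with probability `rate`."""
    if not training:
        return list(vector)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in vector]


def dense(vector, weights, bias):
    """Fully connected layer: each output is a weighted sum of all input activations."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


# Toy input with a vertical edge, and a vertical-edge filter (both hypothetical).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1] for _ in range(3)]

fmap = conv2d(image, kernel)                     # 4x4 feature map
pooled = max_pool(fmap, size=2)                  # 2x2 after subsampling
flat = [v for row in pooled for v in row]        # flatten for the FC layer
flat = dropout(flat, rate=0.5, rng=random.Random(0), training=False)
logits = dense(flat, weights=[[0.25] * 4, [-0.25] * 4], bias=[0.0, 1.0])
print(fmap[0], pooled, logits)
```

At inference time dropout is disabled (`training=False`), so the activations pass through unchanged; during training the surviving activations are rescaled so that their expected value stays the same.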

3.3. Pre-Trained Models and Transfer Learning

4. Results

4.1. Machine-Learning Methods

4.2. Deep-Learning Methods

4.3. ML/DL Hybrid Techniques

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SC | Skin Cancer |
| MM | Melanoma |
| BCC | Basal Cell Carcinoma |
| SCC | Squamous Cell Carcinoma |
| SK | Seborrheic Keratosis |
| CAD | Computer-Aided Diagnosis |
| ML | Machine Learning |
| DL | Deep Learning |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| DA | Data Augmentation |
| TL | Transfer Learning |
| ACC | Accuracy |
| SE | Sensitivity |
| SP | Specificity |
| PR | Precision |
| REC | Recall |
| AUC | Area Under the ROC (Receiver Operating Characteristic) Curve |

References

- Apalla, Z.; Nashan, D.; Weller, R.B.; Castellsagué, X. Skin Cancer: Epidemiology, Disease Burden, Pathophysiology, Diagnosis, and Therapeutic Approaches. Dermatol. Ther. 2017, 7 (Suppl. 1), 5–19. [Google Scholar] [CrossRef] [PubMed]

- Hu, W.; Fang, L.; Ni, R.; Zhang, H.; Pan, G. Changing trends in the disease burden of non-melanoma skin cancer globally from 1990 to 2019 and its predicted level in 25 years. BMC Cancer 2022, 22, 836. [Google Scholar] [CrossRef] [PubMed]

- Pacheco, A.G.; Krohling, R.A. Recent advances in deep learning applied to skin cancer detection. arXiv 2019, arXiv:1912.03280. [Google Scholar]

- Goyal, M.; Knackstedt, T.; Yan, S.; Hassanpour, S. Artificial intelligence-based image classification methods for diagnosis of skin cancer: Challenges and opportunities. Comput. Biol. Med. 2020, 127, 104065. [Google Scholar] [CrossRef] [PubMed]

- Narayanan, D.L.; Saladi, R.N.; Fox, J.L. Ultraviolet radiation and skin cancer. Int. J. Dermatol. 2010, 49, 978–986. [Google Scholar] [CrossRef]

- Hasan, M.R.; Fatemi, M.I.; Khan, M.M.; Kaur, M.; Zaguia, A. Comparative Analysis of Skin Cancer (Benign vs. Malignant) Detection Using Convolutional Neural Networks. J. Healthc. Eng. 2021, 2021, 5895156. [Google Scholar] [CrossRef]

- Al-Masni, M.A.; Al-Antari, M.A.; Choi, M.-T.; Han, S.-M.; Kim, T.-S. Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks. Comput. Methods Programs Biomed. 2018, 162, 221–231. [Google Scholar] [CrossRef]

- Dildar, M.; Akram, S.; Irfan, M.; Khan, H.U.; Ramzan, M.; Mahmood, A.R.; Alsaiari, S.A.; Saeed, A.H.M.; Alraddadi, M.O.; Mahnashi, M.H. Skin Cancer Detection: A Review Using Deep Learning Techniques. Int. J. Environ. Res. Public Health 2021, 18, 5479. [Google Scholar] [CrossRef]

- Miller, A.J.; Mihm, M.C., Jr. Melanoma. N. Engl. J. Med. 2006, 355, 51–65. [Google Scholar] [CrossRef]

- ISIC Archive. Available online: https://www.isic-archive.com/ (accessed on 10 October 2022).

- Lopes, J.; Rodrigues, C.M.P.; Gaspar, M.M.; Reis, C.P. How to Treat Melanoma? The Current Status of Innovative Nanotechnological Strategies and the Role of Minimally Invasive Approaches like PTT and PDT. Pharmaceutics 2022, 14, 1817. [Google Scholar] [CrossRef]

- Nachbar, F.; Stolz, W.; Merkle, T.; Cognetta, A.B.; Vogt, T.; Landthaler, M.; Bilek, P.; Braun-Falco, O.; Plewig, G. The ABCD rule of dermatoscopy. High prospective value in the diagnosis of doubtful melanocytic skin lesions. J. Am. Acad. Dermatol. 1994, 30, 551–559. [Google Scholar] [CrossRef]

- Duarte, A.F.; Sousa-Pinto, B.; Azevedo, L.F.; Barros, A.M.; Puig, S.; Malvehy, J.; Haneke, E.; Correia, O. Clinical ABCDE rule for early melanoma detection. Eur. J. Dermatol. 2021, 31, 771–778. [Google Scholar] [CrossRef] [PubMed]

- Marghoob, N.G.; Liopyris, K.; Jaimes, N. Dermoscopy: A Review of the Structures That Facilitate Melanoma Detection. J. Osteopath. Med. 2019, 119, 380–390. [Google Scholar] [CrossRef] [PubMed]

- Hussaindeen, A.; Iqbal, S.; Ambegoda, T.D. Multi-label prototype based interpretable machine learning for melanoma detection. Int. J. Adv. Signal Image Sci. 2022, 8, 40–53. [Google Scholar] [CrossRef]

- Menzies, S.; Braun, R. Menzies Method. Dermoscopedia 2018, 19, 37. Available online: https://dermoscopedia.org/w/index.php?title=Menzies_Method&oldid=9988 (accessed on 10 October 2022).

- Venturi, F.; Pellacani, G.; Farnetani, F.; Maibach, H.; Tassone, D.; Dika, E. Noninvasive diagnostic techniques in the preoperative setting of Mohs micrographic surgery: A review of the literature. Dermatol. Ther. 2022, 35, e15832. [Google Scholar] [CrossRef]

- Venkatesh, B.; Suthanthirakumari, B.; Srividhya, R. Diagnosis of Skin Cancer with its Stages and its Precautions by using Multiclass CNN Technique. Int. Res. J. Mod. Eng. Technol. Sci. 2022, 4, 587–592. [Google Scholar]

- Thomas, L.; Puig, S. Dermoscopy, Digital Dermoscopy and Other Diagnostic Tools in the Early Detection of Melanoma and Follow-up of High-risk Skin Cancer Patients. Acta Derm. Venereol. 2017, 218, 14–21. [Google Scholar] [CrossRef]

- Batista, L.G.; Bugatti, P.H.; Saito, P.T.M. Classification of Skin Lesion through Active Learning Strategies. Comput. Methods Programs Biomed. 2022, 226, 107122. [Google Scholar] [CrossRef]

- Youssef, A.; Bloisi, D.D.; Muscio, M.; Pennisi, A.; Nardi, D.; Facchiano, A. Deep Convolutional Pixel-wise Labeling for Skin Lesion Image Segmentation. In Proceedings of the 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2018; pp. 1–6. [Google Scholar]

- Wighton, P.; Lee, T.K.; Lui, H.; McLean, D.I.; Atkins, M.S. Generalizing common tasks in automated skin lesion diagnosis. IEEE Trans. Inf. Technol. Biomed. 2011, 15, 622–629. [Google Scholar] [CrossRef]

- Abbas, Q.; Celebi, M.E.; García, I.F. Hair removal methods: A comparative study for dermoscopy images. Biomed. Signal Process. Control 2011, 6, 395–404. [Google Scholar] [CrossRef]

- Lee, T.; Gallagher, V.N.R.; Coldman, A.; McLean, D. DullRazor: A software approach to hair removal from images. Comput. Biol. Med. 1997, 27, 533–543. [Google Scholar] [CrossRef]

- Vocaturo, E.; Zumpano, E.; Veltri, P. Image pre-processing in computer vision systems for melanoma detection. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2117–2124. [Google Scholar]

- Glaister, J.; Amelard, R.; Wong, A.; Clausi, D.A. MSIM: Multistage illumination modeling of dermatological photographs for illumination-corrected skin lesion analysis. IEEE Trans. Biomed. Eng. 2013, 60, 1873–1883. [Google Scholar] [CrossRef]

- Korotkov, K.; Garcia, R. Computerized analysis of pigmented skin lesions: A review. Artif. Intell. Med. 2012, 56, 69–90. [Google Scholar] [CrossRef] [PubMed]

- Emre Celebi, M.; Wen, Q.; Hwang, S.; Iyatomi, H.; Schaefer, G. Lesion border detection in dermoscopy images using ensembles of thresholding methods. Skin Res. Technol. 2013, 19, 252–258. [Google Scholar] [CrossRef]

- Sarker, M.M.K.; Rashwan, H.A.; Akram, F.; Singh, V.K.; Banu, S.F.; Chowdhury, F.U.H.; Choudhury, K.A.; Chambon, S.; Radeva, P.; Puig, D.; et al. SLSNet: Skin lesion segmentation using a lightweight generative adversarial network. arXiv 2021, arXiv:1907.00856. [Google Scholar] [CrossRef]

- Pour, M.P.; Seker, H. Transform domain representation-driven convolutional neural networks for skin lesion segmentation. Expert Syst. Appl. 2020, 144, 113129. [Google Scholar] [CrossRef]

- Tang, P.; Yan, X.; Liang, Q.; Zhang, D. AFLN-DGCL: Adaptive Feature Learning Network with Difficulty-Guided Curriculum Learning for skin lesion segmentation. Appl. Soft Comput. 2021, 110, 107656. [Google Scholar] [CrossRef]

- Mahboda, A.; Tschandlb, P.; Langsc, G.; Eckerd, R.; Ellinger, I. The effects of skin lesion segmentation on the performance of dermatoscopic image classification. Comput. Methods Programs Biomed. 2020, 197, 105725. [Google Scholar] [CrossRef]

- Nidaa, N.; Irtazab, A.; Javedc, A.; Yousafa, M.H.; Mahmood, M.T. Melanoma lesion detection and segmentation using deep region based convolutional neural network and fuzzy C-means clustering. Int. J. Med Inform. 2019, 124, 37–48. [Google Scholar] [CrossRef]

- Garcia-Arroyo, J.L.; Garcia-Zapirain, B. Segmentation of skin lesions in dermoscopy images using fuzzy classification of pixels and histogram thresholding. Comput. Methods Programs Biomed. 2019, 168, 11–19. [Google Scholar] [CrossRef] [PubMed]

- Daia, D.; Donga, C.; Xua, S.; Yanb, Q.; Lia, Z.; Zhanga, C.; Luo, N. Ms RED: A novel multi-scale residual encoding and decoding network for skin lesion segmentation. Med. Image Anal. 2022, 75, 102293. [Google Scholar] [CrossRef]

- Pereira, P.M.M.; Fonseca-Pinto, R.; Paiva, R.P.; Assuncao, P.A.A.; Tavora, L.M.N.; Thomaz, L.A.; Faria, S.M.M. Dermoscopic skin lesion image segmentation based on Local Binary Pattern Clustering: Comparative study. Biomed. Signal Process. Control 2020, 59, 101924. [Google Scholar] [CrossRef]

- Wibowo, A.; Purnama, S.R.; Wirawan, P.W.; Rasyidi, H. Lightweight encoder-decoder model for automatic skin lesion segmentation. Inform. Med. Unlocked 2021, 25, 100640. [Google Scholar] [CrossRef]

- Rout, R.; Parida, P. Transition region based approach for skin lesion segmentation. Procedia Comput. Sci. 2020, 171, 379–388. [Google Scholar] [CrossRef]

- Barata, C.; Celebi, M.E.; Marques, J.S. A survey of feature extraction in dermoscopy image analysis of skin cancer. IEEE J. Biomed. Health Inform. 2018, 23, 1096–1109. [Google Scholar] [CrossRef]

- Danku, A.E.; Dulf, E.H.; Banut, R.P.; Silaghi, H.; Silaghi, C.A. Cancer Diagnosis With the Aid of Artificial Intelligence Modeling Tools. IEEE Access 2022, 10, 20816–20831. [Google Scholar] [CrossRef]

- Shandilya, S.; Chandankhede, C. Survey on recent cancer classification systems for cancer diagnosis. In Proceedings of the 2017 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 22–24 March 2017; pp. 2590–2594. [Google Scholar]

- Çayır, S.; Solmaz, G.; Kusetogullari, H.; Tokat, F.; Bozaba, E.; Karakaya, S.; Iheme, L.O.; Tekin, E.; Yazıcı, C.; Özsoy, G.; et al. MITNET: A novel dataset and a two-stage deep learning approach for mitosis recognition in whole slide images of breast cancer tissue. Neural Comput. Appl. 2022, 34, 17837–17851. [Google Scholar] [CrossRef]

- Khuriwal, N.; Mishra, N. Breast cancer diagnosis using deep learning algorithm. In Proceedings of the 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida, India, 12–13 October 2018; pp. 98–103. [Google Scholar]

- Simin, A.T.; Baygi, S.M.G.; Noori, A. Cancer Diagnosis Based on Combination of Artificial Neural Networks and Reinforcement Learning. In Proceedings of the 2020 6th Iranian Conference on Signal Processing and Intelligent Systems (ICSPIS), Mashhad, Iran, 23–24 December 2020; pp. 1–4. [Google Scholar]

- Tschandl, P.; Codella, N.; Akay, B.N.; Argenziano, G.; Braun, R.P.; Cabo, H.; Gutman, D.; Halpern, A.; Helba, B.; Hofmann-Wellenhof, R.; et al. Comparison of the accuracy of human readers versus machine-learning algorithms for pigmented skin lesion classification: An open, web-based, international, diagnostic study. Lancet Oncol. 2019, 20, 938–947. [Google Scholar] [CrossRef]

- Marchetti, M.A.; Codella, N.C.F.; Dusza, S.W.; Gutman, D.A.; Helba, B.; Kalloo, A.; Mishra, N.; Carrera, C.; Celebi, M.E.; DeFazio, J.L.; et al. International Symposium on Biomedical Imaging challenge: Comparison of the accuracy of computer algorithms to dermatologists for the diagnosis of melanoma from dermoscopic images. J. Am. Acad. Dermatol. 2018, 78, 270–277. [Google Scholar] [CrossRef]

- Maron, R.C.; Weichenthal, M.; Utikal, J.S.; Hekler, A.; Berking, C.; Hauschild, A.; Enk, A.K.; Haferkamp, S.; Klode, J.; Schadendorf, D.; et al. Systematic outperformance of 112 dermatologists in multiclass skin cancer image classification by convolutional neural networks. Eur. J. Cancer 2019, 119, 57–65. [Google Scholar] [CrossRef] [PubMed]

- Haenssle, H.A.; Fink, C.; Toberer, F.; Winkler, J.; Stolz, W.; Deinlein, T.; Hofmann-Wellenhof, R.; Lallas, A.; Emmert, S.; Buhl, T.; et al. Man against machine reloaded: Performance of a market-approved convolutional neural network in classifying a broad spectrum of skin lesions in comparison with 96 dermatologists working under less artificial conditions. Ann. Oncol. 2020, 31, 137–143. [Google Scholar] [CrossRef] [PubMed]

- Han, S.S.; Park, I.; Chang, S.E.; Lim, W.; Kim, M.S.; Park, G.H.; Chae, J.B.; Huh, C.H.; Na, J.-I. Augmented Intelligence Dermatology: Deep Neural Networks Empower Medical Professionals in Diagnosing Skin Cancer and Predicting Treatment Options for 134 Skin Disorders. J. Investig. Dermatol. 2020, 104, 1753–1761. [Google Scholar] [CrossRef] [PubMed]

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Klode, J.; Hauschild, A.; Berking, C.; Schilling, B.; Haferkamp, S.; Schadendorf, D.; Holland-Letz, T.; et al. Deep learning outperformed 136 of 157 dermatologists in a head-to-head dermoscopic melanoma image classification task. Eur. J. Cancer. 2019, 113, 47–54. [Google Scholar] [CrossRef]

- Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Ben Hadj Hassen, A.; Thomas, L.; Enk, A.; et al. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842. [Google Scholar] [CrossRef]

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Klode, J.; Hauschild, A.; Berking, C.; Schilling, B.; Haferkamp, S.; Schadendorf, D.; Fröhling, S.; et al. A convolutional neural network trained with dermoscopic images performed on par with 145 dermatologists in a clinical melanoma image classification task. Eur. J. Cancer 2019, 111, 148–154. [Google Scholar] [CrossRef]

- Adegun, A.; Viriri, S. Deep learning techniques for skin lesion analysis and melanoma cancer detection: A survey of state-of-the-art. Artif. Intell. Rev. 2011, 54, 811–841. [Google Scholar] [CrossRef]

- Rezk, E.; Eltorki, M.; El-Dakhakhni, W. Improving Skin Color Diversity in Cancer Detection: Deep Learning Approach. JMIR Dermatol. 2022, 5, e39143. [Google Scholar] [CrossRef]

- Mporas, I.; Perikos, I.; Paraskevas, M. Color Models for Skin Lesion Classification from Dermatoscopic Images. Advances in Integrations of Intelligent Methods. In Advances in Integrations of Intelligent Methods; Springer: Singapore, 2020; Volume 170, pp. 85–98. [Google Scholar]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 Dataset, a Large Collection of Multi-Source Dermatoscopic Images of Common Pigmented Skin Lesions. Sci. Data 2018, 5, 180161. [Google Scholar] [CrossRef]

- HAM10000. Available online: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T (accessed on 10 October 2022).

- Mendonca, T.; Ferreira, P.M.; Marques, J.S.; Marcal, A.R.S.; Rozeira, J. PH2-A Dermoscopic Image Database for Research and Benchmarking. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 5437–5440. [Google Scholar]

- PH2. Available online: http://www.fc.up.pt/addi (accessed on 10 October 2022).

- Giotis, I.; Molders, N.; Land, S.; Biehl, M.; Jonkman, M.F.; Petkov, N. MED-NODE: A computer-assisted melanoma diagnosis system using non-dermoscopic images. Expert Syst. Appl. 2015, 42, 6578–6585. [Google Scholar] [CrossRef]

- MedNode. Available online: https://www.cs.rug.nl/~imaging/ (accessed on 10 October 2022).

- ISIC2016. Available online: https://challenge.isic-archive.com/data/#2016 (accessed on 10 October 2022).

- ISIC2017. Available online: https://challenge.isic-archive.com/data/#2017 (accessed on 10 October 2022).

- ISIC2019. Available online: https://challenge.isic-archive.com/data/#2019 (accessed on 10 October 2022).

- ISIC2020. Available online: https://challenge.isic-archive.com/data/#2020 (accessed on 10 October 2022).

- Kingsford, C.; Salzberg, S. What are decision trees? Nat. Biotechnol. 2008, 26, 1011–1013. [Google Scholar] [CrossRef] [PubMed]

- Boser, B.; Guyon, I.; Vapnik, V. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, Pittsburgh, PA, USA, 27–29 July 1992. [Google Scholar]

- Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambrigde, UK, 2000; Available online: www.support-vector.net (accessed on 10 October 2022).

- Fix, E.; Hodges,, J.L. Discriminatory analysis. Nonparametric discrimination: Consistency properties. Int. Stat. Rev./Rev. Int. Stat. 1989, 57, 238–247. [Google Scholar] [CrossRef]

- Altman, N. An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 1992, 46, 175–185. [Google Scholar]

- Fukushima, K. Cognitron: A self-organizing multilayered neural network. Biol. Cybern. 1975, 20, 121–136. [Google Scholar] [CrossRef] [PubMed]

- Convolutional Neural Network. Learn Convolutional Neural Network from Basic and Its Implementation in Keras. Available online: https://towardsdatascience.com/covolutional-neural-network-cb0883dd6529 (accessed on 10 October 2022).

- Kumar, S.; Kumar, A. Extended Feature Space-Based Automatic Melanoma Detection System. arXiv 2022, arXiv:2209.04588. [Google Scholar]

- Kanca, E.; Ayas, S. Learning Hand-Crafted Features for K-NN based Skin Disease Classification. In Proceedings of the International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 9–11 June 2022; pp. 1–4. [Google Scholar]

- Bansal, P.; Vanjani, A.; Mehta, A.; Kavitha, J.C.; Kumar, S. Improving the classification accuracy of melanoma detection by performing feature selection using binary Harris hawks optimization algorithm. Soft Comput. 2022, 26, 8163–8181. [Google Scholar] [CrossRef]

- Oliveira, R.B.; Pereira, A.S.; Tavares, J.M.R.S. Skin lesion computational diagnosis of dermoscopic images: Ensemble models based on input feature manipulation. Comput. Methods Programs Biomed. 2017, 149, 43–53. [Google Scholar] [CrossRef]

- Tajeddin, N.Z.; Asl, B.M. Melanoma recognition in dermoscopy images using lesion’s peripheral region information. Comput. Methods Programs Biomed. 2018, 163, 143–153. [Google Scholar] [CrossRef]

- Cheong, K.H.; Tang, K.J.W.; Zhao, X.; WeiKoh, J.E.; Faust, O.; Gururajan, R.; Ciaccio, E.J.; Rajinikanth, V.; Acharya, U.R. An automated skin melanoma detection system with melanoma-index based on entropy features. Biocybern. Biomed. Eng. 2021, 41, 997–1012. [Google Scholar] [CrossRef]

- Chatterjee, S.; Dey, D.; Munshi, S. Integration of morphological preprocessing and fractal based feature extraction with recursive feature elimination for skin lesion types classification. Comput. Methods Programs Biomed. 2019, 178, 201–218. [Google Scholar] [CrossRef]

- Camacho-Gutiérrez, J.A.; Solorza-Calderón, S.; Álvarez-Borrego, J. Multi-class skin lesion classification using prism- and segmentation-based fractal signatures. Expert Syst. Appl. 2022, 197, 116671. [Google Scholar] [CrossRef]

- Moradi, N.; Mahdavi-Amiri, N. Kernel sparse representation based model for skin lesions segmentation and classification. Comput. Methods Programs Biomed. 2019, 182, 105038. [Google Scholar] [CrossRef] [PubMed]

- Fu, Z.; An, J.; Qiuyu, Y.; Yuan, H.; Sun, Y.; Ebrahimian, H. Skin cancer detection using Kernel Fuzzy C-means and Developed Red Fox Optimization algorithm. Biomed. Signal Process. Control 2022, 71, 103160. [Google Scholar] [CrossRef]

- Balaji, V.R.; Suganthi, S.T.; Rajadevi, R.; Kumar, V.K.; Balaji, B.S.; Pandiyan, S. Skin disease detection and segmentation using dynamic graph cut algorithm and classification through Naive Bayes classifier. Measurement 2020, 163, 107922. [Google Scholar] [CrossRef]

- Raza, A.; Siddiqui, O.A.; Shaikh, M.K.; Tahir, M.; Ali, A.; Zaki, H. Fined Tuned Multi-Level Skin Cancer Classification Model by Using Convolutional Neural Network in Machine Learning. J. Xi’an Shiyou Univ. Nat. Sci. Ed. 2022, 18, e11936. [Google Scholar]

- Guergueb, T.; Akhloufi, M. Multi-Scale Deep Ensemble Learning for Melanoma Skin Cancer Detection. In Proceedings of the 2022 IEEE 23rd International Conference on Information Reuse and Integration for Data Science (IRI), San Diego, CA, USA, 9–11 August 2022; pp. 256–261. [Google Scholar]

- Shahsavari, A.; Khatibi, T.; Ranjbari, S. Skin lesion detection using an ensemble of deep models: SLDED. Multimed. Tools Appl. 2022, 1–20. [Google Scholar] [CrossRef]

- Wu, Y.; Lariba, A.C.; Chen, H.; Zhao, H. Skin Lesion Classification based on Deep Convolutional Neural Network. In Proceedings of the 2022 IEEE 4th International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 29–31 July 2022; pp. 376–380. [Google Scholar]

- Thapar, P.; Rakhra, M.; Cazzato, G.; Hossain, M.S. A Novel Hybrid Deep Learning Approach for Skin Lesion Segmentation and Classification. J. Healthc. Eng. 2022, 2022, 1709842. [Google Scholar] [CrossRef]

- Kumar, K.A.; Vanmathi, C. Optimization driven model and segmentation network for skin cancer detection. Comput. Electr. Eng. 2022, 103, 108359. [Google Scholar] [CrossRef]

- Vanka, L.P.; Chakravarty, S. Melanoma Detection from Skin Lesions using Convolution Neural Network. In Proceedings of the 2022 IEEE India Council International Subsections Conference (INDISCON), Bhubaneswar, India, 15–17 July 2022; pp. 1–5. [Google Scholar]

- Girdhar, N.; Sinha, A.; Gupta, S. DenseNet-II: An improved deep convolutional neural network for melanoma cancer detection. Soft Comput. 2022. [Google Scholar] [CrossRef]

- Montaha, S.; Azam, S.; Rafid, A.; Islam, S.; Ghosh, P.; Jonkman, M. A shallow deep learning approach to classify skin cancer using down-scaling method to minimize time and space complexity. PLoS ONE 2022, 17, e0269826. [Google Scholar] [CrossRef] [PubMed]

- Patil, S.M.; Rajguru, B.S.; Mahadik, R.S.; Pawar, O.P. Melanoma Skin Cancer Disease Detection Using Convolutional Neural Network. In Proceedings of the 2022 3rd International Conference for Emerging Technology (INCET), Belgaum, India, 27–29 May 2022; pp. 1–5. [Google Scholar]

- Tabrizchi, H.; Parvizpour, S.; Razmara, J. An Improved VGG Model for Skin Cancer Detection. Neural Process. Lett. 2022. [Google Scholar] [CrossRef]

- Diwan, T.; Shukla, R.; Ghuse, E.; Tembhurne, J.V. Model hybridization & learning rate annealing for skin cancer detection. Multimed. Tools Appl. 2022. [Google Scholar]

- Sharma, P.; Gautam, A.; Nayak, R.; Balabantaray, B.K. Melanoma Detection using Advanced Deep Neural Network. In Proceedings of the 2022 4th International Conference on Energy, Power and Environment (ICEPE), Shillong, India, 29 April–1 May 2022; pp. 1–5. [Google Scholar]

- Jojoa Acosta, M.F.; Caballero Tovar, L.Y.; Garcia-Zapirain, M.B.; Percybrooks, W.S. Melanoma diagnosis using deep learning techniques on dermatoscopic images. BMC Med. Imaging 2021, 21, 6.

- Romero Lopez, A.; Giro-i-Nieto, X.; Burdick, J.; Marques, O. Skin lesion classification from dermoscopic images using deep learning techniques. In Proceedings of the 2017 13th IASTED International Conference on Biomedical Engineering (BioMed), Innsbruck, Austria, 20–21 February 2017; pp. 49–54.

- Wei, L.; Ding, K.; Hu, H. Automatic Skin Cancer Detection in Dermoscopy Images Based on Ensemble Lightweight Deep Learning Network. IEEE Access 2020, 8, 99633–99647.

- Safdar, K.; Akbar, S.; Gull, S. An Automated Deep Learning based Ensemble Approach for Malignant Melanoma Detection using Dermoscopy Images. In Proceedings of the 2021 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 13–14 December 2021; pp. 206–211.

- Ozturk, S.; Cukur, T. Deep Clustering via Center-Oriented Margin Free-Triplet Loss for Skin Lesion Detection in Highly Imbalanced Datasets. arXiv 2022, arXiv:2204.02275.

- Garcia, S.I. Meta-learning for skin cancer detection using Deep Learning Techniques. arXiv 2021, arXiv:2104.10775.

- Nadipineni, H. Method to Classify Skin Lesions using Dermoscopic images. arXiv 2020, arXiv:2008.09418.

- Chaturvedi, S.S.; Gupta, K.; Prasad, P.S. Skin Lesion Analyser: An Efficient Seven-Way Multi-Class Skin Cancer Classification Using MobileNet. arXiv 2020, arXiv:1907.03220.

- Milton, M.M.A. Automated Skin Lesion Classification Using Ensemble of Deep Neural Networks in ISIC 2018: Skin Lesion Analysis Towards Melanoma Detection Challenge. arXiv 2019, arXiv:1901.10802.

- Majtner, T.; Bajić, B.; Yildirim, S.; Hardeberg, J.Y.; Lindblad, J.; Sladoje, N. Ensemble of Convolutional Neural Networks for Dermoscopic Images Classification. arXiv 2018, arXiv:1808.05071.

- Yang, X.; Zeng, Z.; Yeo, S.J.; Tan, C.; Tey, H.L.; Su, Y. A Novel Multi-task Deep Learning Model for Skin Lesion Segmentation and Classification. arXiv 2017, arXiv:1703.01025.

- Alom, M.Z.; Aspiras, T.; Taha, T.M.; Asari, V.K. Skin Cancer Segmentation and Classification with NABLA-N and Inception Recurrent Residual Convolutional Networks. arXiv 2019, arXiv:1904.11126.

- Agarwal, K.; Singh, T. Classification of Skin Cancer Images using Convolutional Neural Networks. arXiv 2022, arXiv:2202.00678.

- Wang, Y.; Cai, J.; Louie, D.C.; Wang, Z.J.; Lee, T.K. Incorporating clinical knowledge with constrained classifier chain into a multimodal deep network for melanoma detection. Comput. Biol. Med. 2021, 137, 104812.

- Choudhary, P.; Singhai, J.; Yadav, J.S. Skin lesion detection based on deep neural networks. Chemom. Intell. Lab. Syst. 2022, 230, 104659.

- Cao, X.; Pan, J.S.; Wang, Z.; Sun, Z.; Haq, A.; Deng, W.; Yang, S. Application of generated mask method based on Mask R-CNN in classification and detection of melanoma. Comput. Methods Programs Biomed. 2021, 207, 106174.

- Malibari, A.A.; Alzahrani, J.S.; Eltahir, M.M.; Malik, V.; Obayya, M.; Duhayyim, M.A.; Lira Neto, A.V.; de Albuquerque, V.H.C. Optimal deep neural network-driven computer aided diagnosis model for skin cancer. Comput. Electr. Eng. 2022, 103, 108318.

- Sayed, G.I.; Soliman, M.M.; Hassanien, A.E. A novel melanoma prediction model for imbalanced data using optimized SqueezeNet by bald eagle search optimization. Comput. Biol. Med. 2021, 136, 104712.

- Mahbod, A.; Schaefer, G.; Wang, C.; Dorffner, G.; Ecker, R.; Ellinger, I. Transfer learning using a multi-scale and multi-network ensemble for skin lesion classification. Comput. Methods Programs Biomed. 2020, 193, 105475.

- Hameed, N.; Shabut, M.A.; Ghosh, M.K.; Hossain, M.A. Multi-class multi-level classification algorithm for skin lesions classification using machine learning techniques. Expert Syst. Appl. 2020, 141, 112961.

- Elashiri, M.A.; Rajesh, A.; Pandey, S.N.; Shukla, S.K.; Urooj, S.; Lay-Ekuakille, A. Ensemble of weighted deep concatenated features for the skin disease classification model using modified long short term memory. Biomed. Signal Process. Control 2022, 76, 103729.

- Wang, D.; Pang, N.; Wang, Y.; Zhao, H. Unlabeled skin lesion classification by self-supervised topology clustering network. Biomed. Signal Process. Control 2021, 66, 102428.

- Ali, M.S.; Miah, M.S.; Haque, J.; Rahman, M.M.; Islam, M.K. An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models. Mach. Learn. Appl. 2021, 5, 100036.

- Hasan, M.K.; Elahi, M.T.E.; Alam, M.A.; Jawad, M.T.; Martí, R. DermoExpert: Skin lesion classification using a hybrid convolutional neural network through segmentation, transfer learning, and augmentation. Inform. Med. Unlocked 2022, 28, 100819.

- Khan, M.A.; Zhang, Y.-D.; Sharif, M.; Akram, T. Pixels to Classes: Intelligent Learning Framework for Multiclass Skin Lesion Localization and Classification. Comput. Electr. Eng. 2021, 90, 106956.

- Rahman, Z.; Hossain, M.S.; Islam, M.R.; Hasan, M.M.; Hridhee, R.A. An approach for multiclass skin lesion classification based on ensemble learning. Inform. Med. Unlocked 2021, 25, 100659.

- Serte, S.; Demirel, H. Gabor wavelet-based deep learning for skin lesion classification. Comput. Biol. Med. 2019, 113, 103423.

- Iqbal, I.; Younus, M.; Walayat, K.; Kakar, M.U.; Ma, J. Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images. Comput. Med. Imaging Graph. 2021, 88, 101843.

- Harangi, B. Skin lesion classification with ensembles of deep convolutional neural networks. J. Biomed. Inform. 2018, 86, 25–32.

- Indraswari, R.; Rokhana, R.; Herulambang, W. Melanoma image classification based on MobileNetV2 network. Procedia Comput. Sci. 2022, 197, 198–207.

- Kotra, S.R.S.; Tummala, R.B.; Goriparthi, P.; Kotra, V.; Ming, V.C. Dermoscopic image classification using CNN with Handcrafted features. J. King Saud Univ.-Sci. 2021, 33, 101550.

- Harangi, B.; Baran, A.; Hajdu, A. Assisted deep learning framework for multi-class skin lesion classification considering a binary classification support. Biomed. Signal Process. Control 2020, 62, 102041.

- Carvajal, D.C.; Delgado, M.; Guevara Ibarra, D.; Ariza, L.C. Skin Cancer Classification in Dermatological Images based on a Dense Hybrid Algorithm. In Proceedings of the 2022 IEEE XXIX International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 11–13 August 2022.

- Sharafudeen, M. Detecting skin lesions fusing handcrafted features in image network ensembles. Multimed. Tools Appl. 2022.

- Redha, A.; Ragb, H.K. Skin lesion segmentation and classification using deep learning and handcrafted features. arXiv 2021, arXiv:2112.10307.

- Benyahia, S.; Meftah, B.; Lézoray, O. Multi-features extraction based on deep learning for skin lesion classification. Tissue Cell 2022, 74, 101701.

- Codella, N.C.F.; Nguyen, Q.-B.; Pankanti, S.; Gutman, D.A.; Helba, B.; Halpern, A.C.; Smith, J.R. Deep learning ensembles for melanoma recognition in dermoscopy images. IBM J. Res. Dev. 2017, 61, 5:1–5:15.

- Bansal, P.; Garg, R.; Soni, P. Detection of melanoma in dermoscopic images by integrating features extracted using handcrafted and deep learning models. Comput. Ind. Eng. 2022, 168, 108060.

- Mirunalini, P.; Chandrabose, A.; Gokul, V.; Jaisakthi, S.M. Deep learning for skin lesion classification. arXiv 2017, arXiv:1703.04364.

- Qureshi, A.S.; Roos, T. Transfer Learning with Ensembles of Deep Neural Networks for Skin Cancer Classification in Imbalanced Data Sets. Neural Process. Lett. 2022.

- Gajera, H.K.; Nayak, D.R.; Zaveri, M.A. A comprehensive analysis of dermoscopy images for melanoma detection via deep CNN features. Biomed. Signal Process. Control 2023, 79, 104186.

- Mahbod, A.; Schaefer, G.; Ellinger, I.; Ecker, R.; Pitiot, A.; Wang, C. Fusing fine-tuned deep features for skin lesion classification. Comput. Med. Imaging Graph. 2019, 71, 19–29.

- Liu, L.; Mou, L.; Zhu, X.X.; Mandal, M. Automatic skin lesion classification based on mid-level feature learning. Comput. Med. Imaging Graph. 2020, 84, 101765.

- Yu, Z.; Jiang, F.; Zhou, F.; He, X.; Ni, D.; Chen, S.; Wang, T.; Lei, B. Convolutional descriptors aggregation via cross-net for skin lesion recognition. Appl. Soft Comput. J. 2020, 92, 106281.

- Hauser, K.; Kurz, A.; Haggenmüller, S.; Maron, R.C.; von Kalle, C.; Utikal, J.S.; Meier, F.; Hobelsberger, S.; Gellrich, F.F.; Sergon, M.; et al. Explainable artificial intelligence in skin cancer recognition: A systematic review. Eur. J. Cancer 2022, 167, 54–69.

- Lima, S.; Terán, L.; Portmann, E. A proposal for an explainable fuzzy-based deep learning system for skin cancer prediction. In Proceedings of the 2020 Seventh International Conference on eDemocracy & eGovernment (ICEDEG), Buenos Aires, Argentina, 22–24 April 2020; pp. 29–35.

- Pintelas, E.; Liaskos, M.; Livieris, I.E.; Kotsiantis, S.; Pintelas, P. A novel explainable image classification framework: Case study on skin cancer and plant disease prediction. Neural Comput. Appl. 2021, 33, 15171–15189.

- Shorfuzzaman, M. An explainable stacked ensemble of deep learning models for improved melanoma skin cancer detection. Multimed. Syst. 2022, 28, 1309–1323.

- Zia Ur Rehman, M.; Ahmed, F.; Alsuhibany, S.A.; Jamal, S.S.; Zulfiqar Ali, M.; Ahmad, J. Classification of Skin Cancer Lesions Using Explainable Deep Learning. Sensors 2022, 22, 6915.

| Diagnosis Rules | Description |

|---|---|

| The ABCDE rule [12,13] | It is based on morphological characteristics such as asymmetry (A), irregularity of the edges (B), nonhomogeneous color (C), a diameter size (D) greater than or equal to 6 mm, and evolution (E) understood as temporal changes in size, shape, color, elevation, and the appearance of new symptoms (bleeding, itching, scab formation) [14]. |

| Seven Point Checklist [15] | It is based on the seven main dermoscopic features of melanoma (major criteria: atypical pigment network, blue-whitish veil and atypical vascular pattern; minor criteria: irregular pigmentation, irregular streaks, irregular dots and globules, regression structures) by assigning a score to each of these. |

| The Menzies method [16] | It is based on 11 features, two negative and nine positive, which are assessed as present/absent. |
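To illustrate how the Seven Point Checklist turns observed features into a score, the following is a minimal sketch. It assumes the commonly cited weighting of 2 points per major criterion and 1 point per minor criterion, with a total of 3 or more treated as suspicious; these weights and the threshold are not stated in the table above, and the function and constant names are hypothetical.

```python
# Criteria names are taken from the table; the weights (2 for major, 1 for minor)
# and the threshold of 3 are assumptions, not values given in this document.
MAJOR_CRITERIA = {
    "atypical pigment network",
    "blue-whitish veil",
    "atypical vascular pattern",
}
MINOR_CRITERIA = {
    "irregular pigmentation",
    "irregular streaks",
    "irregular dots and globules",
    "regression structures",
}
SUSPICION_THRESHOLD = 3  # assumed cut-off


def seven_point_score(observed):
    """Return the checklist score for an iterable of observed criteria names."""
    observed = set(observed)
    unknown = observed - MAJOR_CRITERIA - MINOR_CRITERIA
    if unknown:
        raise ValueError(f"Unknown criteria: {sorted(unknown)}")
    return 2 * len(observed & MAJOR_CRITERIA) + len(observed & MINOR_CRITERIA)


def is_suspicious(observed):
    """Flag a lesion whose score reaches the assumed threshold."""
    return seven_point_score(observed) >= SUSPICION_THRESHOLD
```

For example, a lesion showing a blue-whitish veil (major) and irregular streaks (minor) would score 2 + 1 = 3 under these assumptions and would be flagged.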

| Architecture | Year | Developed by | Parameters | Layers | Input Size |

|---|---|---|---|---|---|

| GoogLeNet | 2014 | Szegedy et al. | 4 M | 144 | 224 × 224 |

| InceptionV3 | 2015 | Szegedy et al. | 23.8 M | 316 | 299 × 299 |

| ResNet18 | 2015 | He et al. | 11.17 M | 72 | 224 × 224 |

| ResNet50 | 2015 | He et al. | 25.6 M | 177 | 224 × 224 |

| ResNet101 | 2015 | He et al. | 44.7 M | 347 | 224 × 224 |

| SqueezeNet | 2016 | Iandola et al. | 1.2 M | 68 | 227 × 227 |

| DenseNet201 | 2017 | Huang et al. | 20.2 M | 709 | 224 × 224 |

| Xception | 2017 | Chollet | 22.9 M | 171 | 299 × 299 |

| Inception-ResNet | 2017 | Szegedy et al. | 55.8 M | 824 | 299 × 299 |

| EfficientNetB0 | 2019 | Tan and Le | 5.3 M | 290 | 224 × 224 |

| Author & Year | Classification Task | Dataset | Data Augmentation | Methods Used | Cross Validation | Results |

|---|---|---|---|---|---|---|

| Kumar et al. [74] 2022 | Binary: MM vs. benign | MedNode | - | F + Ensemble Bagged Tree classifier | - | ACC = 0.95, SE = 0.94, SP = 0.97, AUC = 0.99 |

| Kanca et al. [75] 2022 | Binary: MM vs. N and SK | ISIC2017 | - | F + KNN classifier | - | ACC = 0.68, SE = 0.80, SP = 0.80 |

| Bansal et al. [76] 2022 | Binary: MM vs. non-MM | HAM10000 | Blurring, increased brightness, addition of contrast and noise, flipping, zoom, and others | F and F (with BHHO-S algorithm) + linear SVM | - | ACC = 0.88, SE = 0.89, SP = 0.89, PR = 0.86 |

| Oliveira et al. [77] 2017 | Binary: benign vs. malignant | ISIC2016 | - | F and F (using SE-OPS approach) + OPF classifier | 10-fold | ACC = 0.94, SE = 0.92, SP = 0.97 |

| Tajeddin et al. [78] 2018 | Binary: MM vs. N and MM vs. N/AN | PH | - | F and F (with SFS approach) + linear SVM and RUSBoost classifiers | 10-fold | 1° SE = 0.97, SP = 1; 2° SE = 0.95, SP = 0.95 |

| Cheong et al. [79] 2021 | Binary: benign vs. malignant | DermIS, DermQuest, ISIC2016 | Image rotation: ±30, ±60 and ±90 degrees | F and F (Student's t-test) + RBF-SVM | - | ACC = 0.98, SE = 0.97, SP = 0.98, PR = 0.98, F1 = 0.98 |

| Chatterjee et al. [80] 2019 | Multi-class: MM, N and BCC | ISIC archive, PH, IDS | - | F and F (RFE method) + RBF-SVM | 10-fold | ISIC: ACC = 0.99, SE = 0.98, SP = 0.98; PH: ACC = 0.98, SE = 0.91, SP = 0.99; IDS: ACC = 1, SE = 1, SP = 1 |

| Camacho-Gutiérrez et al. [81] 2022 | Multi-class: N, MM, SK, BCC, DF, AK, VL | ISIC 2019 | - | statistical fractal signatures + LDA classifier | - | Four-classes: ACC = 0.87, SE = 0.63, SP = 0.89, PR = 0.65; seven-classes: ACC = 0.88, SE = 0.41, SP = 0.92, PR = 0.46 |

| Moradi et al. [82] 2019 | Binary: MM vs. normal; Multi-class: MM, BCC and N | ISIC2016, PH | - | F and calculation of sparse code using KOMP algorithm + linear classifier | 10-fold | Binary ISIC: ACC = 0.96, SE = 0.97, SP = 0.93; binary PH2: ACC = 0.96, SE = 1, SP = 0.92; three-classes: overall ACC = 0.86 |

| Fu et al. [83] 2020 | Multi-class: BCC, SK, MM, N | ISIC2020 | - | F and F + MLP-averaged optimized by DRFO algorithm | - | ACC = 0.91, SE = 0.90, SP = 0.92 |

| Balaji et al. [84] 2020 | Multi-class: benign vs. malignant | ISIC2017 | - | F + Naïve Bayes classifier | - | ACC = 0.94 for benign cases, 0.91 for MM and 0.93 for SK. |
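The Results columns in these tables report accuracy (ACC), sensitivity (SE, also listed as recall, REC, or TPR), specificity (SP or TNR), precision (PR), and F1-score. As a reminder of how these relate to one another, the sketch below computes them from the four cells of a binary confusion matrix. The function name and the example counts are hypothetical and are not taken from any of the reviewed studies.

```python
def binary_metrics(tp, fp, tn, fn):
    """Compute ACC, SE, SP, PR and F1 from binary confusion-matrix counts.

    tp/fn count malignant cases predicted correctly/incorrectly;
    tn/fp count benign cases predicted correctly/incorrectly.
    """

    def ratio(numerator, denominator):
        # Return NaN instead of raising when a metric is undefined.
        return numerator / denominator if denominator else float("nan")

    return {
        "ACC": ratio(tp + tn, tp + fp + tn + fn),
        "SE": ratio(tp, tp + fn),   # sensitivity = recall = TPR
        "SP": ratio(tn, tn + fp),   # specificity = TNR
        "PR": ratio(tp, tp + fp),   # precision
        "F1": ratio(2 * tp, 2 * tp + fp + fn),
    }


# Hypothetical counts for illustration only.
metrics = binary_metrics(tp=80, fp=10, tn=90, fn=20)
```

On an imbalanced test set, ACC can stay high while SE is low, because the majority (benign) class dominates the numerator; this is why several rows above combine high accuracy with modest sensitivity.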

| Author & Year | Classification Task | Dataset | Data Augmentation | Methods Used | Cross Validation | Results |

|---|---|---|---|---|---|---|

| Raza et al. [85] 2022 | Binary: benign vs. malignant | ISIC archive | - | Parameter transfer of a pre-trained network to a CNN | - | ACC = 0.96 |

| Guergueb et al. [86] 2022 | Binary: benign vs. malignant | ISIC archive, ISIC2020 | Mixup and CutMix techniques | Ensemble of three pre-trained CNNs: EfficientNetB8, SEResNeXt10 and DenseNet264 | 3-fold | ACC = 0.989, SE = 0.962, SP = 0.988, AUC = 0.99 |

| Shahsavari et al. [87] 2022 | Multi-class: BCC, MM, N, SK | ISIC archive, PH | Image rotation: 45, 90, 135, 180, 210; horizontal and vertical flipping | Ensemble of four pre-trained CNNs: GoogLeNet, VGGNet, ResNet and ResNeXt | - | ACC = 0.879 on ISIC, ACC = 0.94 on PH |

| Wu et al. [88] 2022 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | Random clipping, flipping and translation | Use of TL on InceptionV3, ResNet50 and DenseNet201 | - | ACC train = 0.99, ACC val = 0.869 |

| Thapar et al. [89] 2022 | Binary: MM vs. non-MM | ISIC2017, ISIC2018, PH | - | F and F (based on GOA) + custom CNN | - | ISIC2017: ACC = 0.98, SE = 0.96, SP = 0.99, PR = 0.97, F1 = 0.97; ISIC2018: ACC = 0.98, SE = 0.97, SP = 0.99, PR = 0.98, F1 = 0.97; PH: ACC = 0.98, SE = 0.96, SP = 0.99, PR = 0.97, F1 = 0.96 |

| Kumar et al. [90] 2022 | Binary: benign vs. malignant | ISIC archive | Resizing, vertical and horizontal flipping and rotation (45 degrees) | Pre-trained SqueezeNet re-trained by AWO algorithm | 5 and 9-fold | ACC = 0.925, SE = 0.921, SP = 0.917 |

| Vanka et al. [91] 2022 | Binary: benign vs. malignant | ISIC archive | - | Custom CNN | - | TPR = 0.94, TNR = 0.98, F1 = 0.96 |

| Girdhar et al. [92] 2022 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | Details are missing | Custom CNN | - | ACC = 0.963, REC = 0.96, F1 = 0.957 |

| Montaha et al. [93] 2022 | Binary: benign vs. malignant | ISIC archive | Brightness and contrast alteration of images | Custom shallow CNN | 5 and 10-fold | ACC = 0.987, PR = 0.989 |

| Patil et al. [94] 2022 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | - | Pre-trained DL method | - | ACC = 0.997 |

| Tabrizchi et al. [95] 2022 | Binary: MM vs. benign | ISIC2020 | Image rotation: 90, 180, 270 degrees; center cropping, brightness change, and mirroring | New DL model based on VGG16 | Leave-one-out | ACC = 0.87, SE = 0.852, F1 = 0.922, AUC = 0.923 |

| Diwan et al. [96] 2022 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | - | Custom CNN based on AlexNet | - | ACC = 0.878, SP = 0.962, PR = 0.787, REC = 0.774, F1 = 0.778 |

| Sharma et al. [97] 2022 | Binary: benign vs. malignant | HAM10000 | - | Use of some pre-trained networks: VGG16, VGG19, DenseNet101 and ResNet101 | - | ACC = 0.848 |

| Jojoa Acosta et al. [98] 2021 | Binary: benign vs. malignant | ISIC2017 | Image rotation: 180 degrees; vertical flipping | Use of pre-trained ResNet52 in 5 different situations | - | ACC = 0.904, SE = 0.82, SP = 0.925 |

| Romero Lopez et al. [99] 2017 | Binary: benign vs. malignant | ISIC2016 | - | Use of VGG16 in 3 different situations | - | ACC = 0.813, SE = 0.787, PR = 0.797 |

| Wei et al. [100] 2020 | Binary: benign vs. malignant | ISIC2016 | Image rotation: 90, 180, 270 degrees; mirroring, center cropping, brightness change and random occlusion operations | Custom architecture based on MobileNet and DenseNet | - | MobileNet: ACC = 0.865, AUC = 0.832; DenseNet: ACC = 0.855, AUC = 0.845 |

| Safdar et al. [101] 2021 | Binary: MM vs. benign | PH, MedNode, ISIC2020 | Affine Image Transformation and color Transformation approaches | Use of pre-trained ResNet50 and InceptionV3 | - | ACC = 0.934, SP = 0.965, PR = 0.895, AUC = 0.988 |

| Ozturk et al. [102] 2022 | Binary: benign vs. malignant | HAM10000, ISIC2019, ISIC2020 | - | Deep clustering approach. Custom CNNs based on VGG16, ResNet50, DenseNet169 and EfficientNetB3 | - | ACC = 0.98, SP = 0.999, PR = 0.961, REC = 0.98, F1 = 0.97, AUC = 0.709 |

| Garcia [103] 2021 | Multi-class: MM, benign, malignant | ISIC2019, PH, 7-point checklist dataset | - | Use of a meta-learning method and pre-trained ResNet50 | 3-fold | F1 = 0.53, Jaccard similarity index = 0.472 |

| Nadipineni [104] 2020 | Multi-class: MM, N, BCC, AK, SK, DF, VL, SCC | ISIC2019, 7-point checklist dataset | Random brightness, contrast changes, random flipping, rotation, scaling, and shear, and CutOut | Use of pre-trained MobileNet | 10-fold | ACC = 0.886 |

| Chaturvedi et al. [105] 2020 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | Image rotation, zoom, horizontal/vertical flipping | Use of three pre-trained networks (EfficientNet, SENet and ResNet) in three different situations | - | ACC = 0.831, PR = 0.89, REC = 0.83, F1 = 0.83 |

| Milton [106] 2019 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | Image rotation, flipping, random cropping, brightness and contrast adjustment, pixel jitter, aspect ratio, random shear, zoom, and vertical/horizontal shift and flip | Use of pre-trained networks: PNASNet-5-Large, InceptionResNetV2, SENet154 and InceptionV4 | - | ACC = 0.76 |

| Majtner et al. [107] 2018 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | ISIC2018 | Image rotation, horizontal flipping | Combination of VGG16 and GoogLeNet pre-trained networks | - | ACC VGG16 = 0.801, ACC GoogLeNet = 0.799, ACC ensemble = 0.815 |

| Yang et al. [108] 2017 | Multi-class: MM vs. N and SK; MM and N vs. SK | ISIC2017 | - | Custom CNN based on GoogLeNet | - | AUC = 0.926, Jaccard index = 0.724 |

| Alom et al. [109] 2019 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | Horizontal/vertical flipping | Custom CNN | - | ACC = 0.871 |

| Agarwal et al. [110] 2022 | Binary: benign vs. malignant | ISIC archive | Re-scaling, shearing, vertical/horizontal flipping, zoom | Use of TL on XceptionNet, DenseNet201, ResNet50 and MobileNetV2 | - | ACC = 0.866, PR = 0.865, REC = 0.86, F1 = 0.862 |

| Wang et al. [111] 2021 | Binary: benign vs. malignant | 7-point checklist dataset | - | Custom CNN based on ResNet50 | - | ACC = 0.813, SE = 0.529, SP = 0.891 |

| Choudhary et al. [112] 2022 | Binary: benign vs. malignant | ISIC2017 | Based on Mask R-CNN | F + FFNN | - | ACC = 0.826, SE = 0.857, SP = 0.764, REC = 0.893, F1 = 0.824 |

| Cao et al. [113] 2021 | Binary: MM vs. benign | ISIC2017, ISIC2018 | - | Use of pre-trained models: InceptionV4, ResNet and DenseNet121 | 5-fold | ACC = 0.906, SE = 0.78, SP = 0.934, AUC = 0.95 |

| Malibari et al. [114] 2022 | Multi-class: N, MM, SK, BCC, DF, AK, VL, SCC | ISIC2019 | - | Custom DNN | - | ACC = 0.956, SP = 0.963, PR = 0.847, REC = 0.925, F1 = 0.884 |

| Sayed et al. [115] 2021 | Binary: MM vs. benign | ISIC2020 | Random translation, scale, rotation, reflection, and shear | Use of pre-trained SqueezeNet | - | ACC = 0.98, SE = 1, SP = 0.97, F1 = 0.98, AUC = 0.99 |

| Mahbod et al. [116] 2020 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | ISIC2016, ISIC2017, ISIC2018 | - | Ensemble of pre-trained EfficientNetB0, EfficientNetB1 and SeResNeXt-50 | - | ACC = 0.96, PR = 0.913, AUC = 0.981 |

| Hameed et al. [117] 2020 | Multi-class Single-level; multi-class Multi-level | ISIC2016, PH, DermIS, DermQuest, DermNZ | - | F + ANN and pre-trained AlexNet | - | ML: ACC = 0.64; DL: ACC = 0.96 |

| Elashiri et al. [118] 2022 | Multi-class classification | PH, HAM10000 | - | F using Resnet50, VGG16 and Deeplabv3 + modified LSTM | - | PH: ACC = 0.94, SE = 0.94, SP = 0.93, PR = 0.90, F1 = 0.92; HAM: ACC = 0.94, SE = 0.94, SP = 0.94, PR = 0.34, F1 = 0.5 |

| Wang et al. [119] 2021 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | ISIC2018 | Image rotation, flipping, scaling, tailoring, translation, adding noise, and changing contrast | F using pre-trained ResNet50 and decoder in Cycle GAN + STCN | - | ACC = 0.79, AUC = 0.81 |

| Ali et al. [120] 2021 | Binary: benign vs. malignant | HAM10000 | Image rotation, random cropping, mirroring, and color-shifting using principal component analysis | Custom DCNN | - | ACC = 0.91, PR = 0.97, REC = 0.94, F1 = 0.95 |

| Hasan et al. [121] 2022 | Binary: MM vs. N; Multi-class: MM, N, SK and N/AN, MM, SK, BCC, DF, AK, VL | ISIC2016, ISIC2017, ISIC2018 | Image rotation (180, 270 degrees); gamma, logarithmic, and sigmoid corrections, and stretching, and shrinking of the intensity levels | Custom CNN | 5-fold | ISIC2016: AUC = 0.96, REC = 0.92, PR = 0.92; ISIC2017: AUC = 0.95, REC = 0.86, PR = 0.86; ISIC2018: AUC = 0.97, REC = 0.86, PR = 0.85 |

| Khan et al. [122] 2021 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | - | Custom CNN | - | ACC = 0.87, SE = 0.86, PR = 0.87, F1 = 0.86 |

| Rahman et al. [123] 2021 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | ISIC2019, HAM10000 | Image rotation (0–30 degrees), flipping, shearing (0.1), and zooming (90% to 110%) | Ensemble of 5 pre-trained models (ResNeXt, SeResNeXt, ResNet, Xception and DenseNet) | - | ACC = 0.87, PR = 0.87, REC = 0.93, F1 = 0.89, MCC = 0.87 |

| Serte et al. [124] 2019 | Binary: MM vs. SK | ISIC2017 | Image rotation: 18, 45 degrees | Use of pre-trained ResNet18 and AlexNet | - | MM: ACC = 0.83, SE = 0.13, SP = 1, AUC = 0.96; SK: ACC = 0.82, SE = 0.17, SP = 0.98, AUC = 0.66 |

| Iqbal et al. [125] 2021 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | ISIC2017, ISIC2018, ISIC2019 | Image rotation (-30 to 30 degrees), translation (12.5% shift to the left, the right, up, and down), and horizontal/vertical flipping | Custom CNN | - | ISIC2017: ACC = 0.93, SE = 0.93, SP = 0.91, PR = 0.94, F1 = 0.93, AUC = 0.96; ISIC2018: ACC = 0.89, SE = 0.89, SP = 0.96, PR = 0.90, F1 = 0.89, AUC = 0.99; ISIC2019: ACC = 0.90, SE = 0.90, SP = 0.98, PR = 0.91, F1 = 0.90, AUC = 0.99 |

| Harangi [126] 2018 | Multi-class: MM, N, SK | ISIC2017 | Cropping of random samples from the images; horizontal flipping and rotation (90, 180, 270 degrees) | Use of two pre-trained networks (ResNet and GoogLeNet), and two networks with randomly initialized weights (VGGNet and AlexNet) | - | ACC = 0.87, SE = 0.56, SP = 0.79, AUC = 0.89 |

| Indraswari et al. [127] 2022 | Binary: benign vs. malignant | ISIC archive, ISIC2016, MedNode, PH | - | Use of a modified pre-trained MobileNetV2 | - | ISIC archive: ACC = 0.85, SE = 0.85, SP = 0.85, PR = 0.83; ISIC2016: ACC = 0.83, SE = 0.36, SP = 0.95, PR = 0.64; MedNode: ACC = 0.75, SE = 0.76, SP = 0.73, PR = 0.67; PH: ACC = 0.72, SE = 0.33, SP = 0.92, PR = 0.67 |

| Kotra et al. [128] 2021 | Binary: MM vs. N; SK vs. SCC; MM vs. SK; MM vs. BCC; N vs. BCC | ISIC2016 | - | Injection of hand-extracted features into the FC layer of a CNN | - | MM vs. N: ACC = 0.93; SK vs. SCC: ACC = 0.95; MM vs. SK: ACC = 0.98; MM vs. BCC: ACC = 0.99; N vs. BCC: ACC = 0.99 |

| Harangi et al. [129] 2020 | Binary: healthy vs. diseased; multi-class: N/AN, MM, SK, BCC, DF, AK, VL | HAM10000 | Cropping of random samples from the images; horizontal/vertical flipping, rotation (90, 180, 270 degrees) and application of random brightness and contrast factors | Use of a modified pre-trained GoogLeNet-InceptionV3 network | - | MM: ACC = 0.91, SE = 0.45, SP = 0.97, PR = 0.68, AUC = 0.81 |
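Many entries in the Data Augmentation column rely on rotations by multiples of 90 degrees combined with horizontal and vertical flipping. The sketch below shows these geometric operations on a small image stored as a nested list of pixel values. It is a plain-Python illustration with hypothetical function names, not the pipeline used by any of the cited works, which typically rely on an image-processing library and also apply cropping, zoom, and brightness or contrast changes.

```python
def rotate90(image):
    """Rotate a 2D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [list(row[::-1]) for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [list(row) for row in image[::-1]]


def augment(image):
    """Return the original image plus five geometric variants."""
    variants = {"original": [list(row) for row in image]}
    rotated = image
    for angle in (90, 180, 270):
        rotated = rotate90(rotated)  # each step adds another 90 degrees
        variants[f"rot{angle}"] = rotated
    variants["hflip"] = flip_horizontal(image)
    variants["vflip"] = flip_vertical(image)
    return variants


# A hypothetical 2 x 2 "image" yields six training samples.
samples = augment([[1, 2], [3, 4]])
```

These transformations preserve the lesion's diagnostic label, which is why they are a common way to enlarge small or imbalanced dermoscopic datasets.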

| Author & Year | Classification Task | Dataset | Data Augmentation | Methods Used | Cross Validation | Results |

|---|---|---|---|---|---|---|

| Carvajal et al. [130] 2022 | Binary: MM vs. carcinoma | HAM10000 | - | F using pre-trained DenseNet121 + XGBoost classifier | - | ACC = 0.91, SE = 0.93, PR = 0.91, F1 = 0.91 |

| Sharafudeen et al. [131] 2022 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL, SCC | ISIC2018, ISIC2019 | - | F with EfficientNet networks B4 to B7 and hand-extracted features + ANN | - | ISIC2018: ACC = 0.91, SE = 0.98; ISIC2019: ACC = 0.86, SE = 0.98 |

| Redha et al. [132] 2021 | Multi-class: N/AN, MM, SK, BCC, DF, AK, VL | ISIC2018 | Random crops and rotation (0–180 degrees), vertical/horizontal flips, and shear (0–30 degrees) | F using pre-trained DL architectures (ResNet50 and DenseNet201) + SVM | - | ACC = 0.92, SE = 0.88, SP = 0.97, AUC = 0.98 |

| Benyahia et al. [133] 2022 | Multi-class: healthy, benign, malignant, eczema; multi-level | ISIC2019, PH | - | F using pre-trained DenseNet201 + FineKNN or CubicSVM classifiers | - | ISIC: ACC = 0.92; PH: ACC = 0.99 |

| Codella et al. [134] 2017 | Binary: benign vs. malignant | ISIC2016 | - | F by hand, by sparse coding methods and by Deep Residual Network (DRN) + SVM | 3-fold | SP = 0.95, PR = 0.65, AUC = 0.84 |

| Bansal et al. [135] 2022 | Binary: MM vs. non-MM | HAM10000, PH | Image rotation, vertical/horizontal flipping, zoom, increased brightness and contrast, and noise addition | F: hand-crafted, from ResNet and EfficientNet + ANN | - | HAM10000: ACC = 0.95, SE = 0.95, SP = 0.95, PR = 0.95, F1 = 0.95; PH: ACC = 0.98, SE = 0.98, SP = 0.98, PR = 0.96, F1 = 0.97 |

| Mirunalini et al. [136] 2017 | Binary: benign vs. malignant; MM vs. non-MM | ISIC2017 | - | F with InceptionV3 + FFNNs | - | 1°: ACC = 0.72; 2°: ACC = 0.71; average-AUC = 0.66 |

| Qureshi et al. [137] 2021 | Binary: benign vs. malignant | ISIC archive, ISIC2020 | - | Ensemble of six CNNs + SVM | | F1 = 0.23 ± 0.04, AUC-PR = 0.16 ± 0.04, AUC = 0.87 ± 0.02 |

| Gajera et al. [138] 2022 | Binary: MM vs. non-MM | ISIC2016, ISIC2017, HAM10000, PH | | F using pre-trained DenseNet121 network + MLP | 5-fold | ISIC2016: ACC = 0.81; ISIC2017: ACC = 0.81; HAM10000: ACC = 0.81; PH: ACC = 0.98 |

| Mahbod et al. [139] 2019 | Binary: MM vs. SK | ISIC2016 | - | F using pre-trained AlexNet, VGG16, ResNet18 and ResNet101 models + RBF-SVM | - | MM: SE = 0.812, SP = 0.785, AUC = 0.873; SK: SE = 0.933, SP = 0.859, AUC = 0.955 |

| Liu et al. [140] 2020 | Binary: MM vs. non-MM; SK vs. non-SK | ISIC2017 | - | F using pre-trained ResNet50 and DenseNet201 models + RBF-SVM | - | ResNet: ACC = 0.87, AUC = 0.89; DenseNet: ACC = 0.87, AUC = 0.89 |

| Yu et al. [141] 2020 | Binary: MM vs. others; SK vs. others | ISIC2016, ISIC2017 | Image rotation, flipping, translation, and cropping; color-based data augmentation | F from CNNs + linear SVM | - | MM ISIC2016: ACC = 0.87, SE = 0.6, SP = 0.85, PR = 0.69, AUC = 0.86; MM ISIC2017: ACC = 0.84, SE = 0.61, SP = 0.90, PR = 0.63, AUC = 0.84; SK ISIC2017: ACC = 0.92, SE = 0.80, SP = 0.94, PR = 0.82, AUC = 0.95. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Grignaffini, F.; Barbuto, F.; Piazzo, L.; Troiano, M.; Simeoni, P.; Mangini, F.; Pellacani, G.; Cantisani, C.; Frezza, F. Machine Learning Approaches for Skin Cancer Classification from Dermoscopic Images: A Systematic Review. Algorithms 2022, 15, 438. https://doi.org/10.3390/a15110438