Abstract

The Cutting Stock Problem (CSP) is an optimisation problem that roughly consists of cutting large objects in order to produce small items. The computational effort for solving this problem is largely affected by the number of cutting patterns. In this article, in order to cope with large instances of the One-Dimensional Cutting Stock Problem (1D-CSP), we resort to a pattern generating procedure and propose a strategy to restrict the number of patterns generated. Integer Linear Programming (ILP) models, an implementation of the Column Generation (CG) technique, and an application of the Generate-and-Solve (G&S) framework were used to obtain solutions for benchmark instances from the literature. The exact method was capable of solving small and medium sized instances of the problem. For large sized instances, the exact method was not applicable, while the effectiveness of the other methods depended on the characteristics of the instances. In general, the G&S method presented successful results, obtaining quasi-optimal solutions for the majority of the instances, by employing the strategy of artificially reducing the number of cutting patterns and by exploiting them in a heuristic framework.

1. Introduction

The CSP is an optimisation problem that consists of cutting larger parts (objects) available in stock to produce smaller parts (items) that meet a given demand, while optimising a certain objective function, for example, the loss of material or the cost of the objects to be cut. This problem belongs to the NP-Hard class [1], that is, no deterministic algorithm can solve it to proven optimality in polynomial time, unless P = NP. Thus, to tackle large sized instances of the problem efficiently, it is necessary to resort to approximate methods, such as heuristics and metaheuristics, which give up the guarantee of optimality in order to obtain good solutions in a reasonable time.

Applications of this problem are commonly found in a wide variety of industries in which the waste of materials is a major concern, such as in manufacturing processes of steel, textile, paper, and glass. It is important to note that, in real world applications, different constraints may be considered due to the particularities of the manufacturing processes involved in each scenario. Given its wide applicability, in addition to its theoretical relevance, the search for increasingly promising solutions to this problem is still very relevant [2].

In this work, we propose a procedure for generating cutting patterns for the 1D-CSP and a strategy for reducing the number of patterns generated, in order to deal with large scale instances of the problem, even if the guarantee of obtaining an optimal solution is lost. Using benchmark instances from the literature [3], computational experiments were performed for two ILP models, an implementation of the classical CG technique [4,5,6], and an application of the G&S framework [7,8,9,10].

We employed CPLEX [11] for solving the ILP models. We resorted to Coluna [12] for running the CG technique and to Java Concert for implementing the G&S method. The exact method was able to solve only small and medium sized instances. Neither CG nor G&S outperformed the other on all instances. In fact, the effectiveness varied according to the characteristics of the instances, such as the number of item types, the size of the items in relation to the size of the object, and the demand for each item type. Overall, G&S performed well for all classes, obtaining quasi-optimal solutions for the majority of the instances.

The remainder of this article is structured as follows. In Section 2, we formally state the 1D-CSP, discuss the approaches commonly used to solve it, and introduce the G&S framework. In Section 3, we present a procedure for generating cutting patterns for the 1D-CSP and propose a strategy for artificially reducing the number of patterns to be considered in the formulation. In addition, we explain in detail the application of this methodology to the 1D-CSP. Section 4 is dedicated to presenting and analysing the computational results obtained from the different approaches. In Section 5, final remarks and perspectives on future work conclude the article.

2. Preliminaries

In this section, we formally introduce the 1D-CSP, review the literature on the CG technique, and present the G&S methodology.

2.1. The Cutting Stock Problem

The CSP was first described by Kantorovich in 1939 and later published in [13]. This problem presents many variations, but it can usually be classified according to its dimensionality, namely one-dimensional (1D), two-dimensional (2D), and three-dimensional (3D), and according to the length of the stock objects, single or multiple. In this article, following the classification proposed by Wäscher et al. in [14], we consider the variant known in the literature as the One-Dimensional Single Stock Size Cutting Stock Problem, characterised by one-dimensional cutting patterns obtained from large identical objects of fixed dimension. It can be seen as a generalisation of the Bin Packing Problem (BPP) [15].

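The 1D-CSP can be formally stated as follows. Consider large objects of length $L$ in stock and a set of $m$ different types of items, each characterised by a length $l_i$ and a demand $d_i$, $i = 1, \dots, m$. A cutting pattern describes how many items of each type are cut from a stock object, and it can be represented by a vector $a_j = (a_{1j}, \dots, a_{mj})$, where $a_{ij}$ indicates the quantity of items of type $i$ obtained by cutting an object according to the cutting pattern $j$. For the cutting patterns to be valid, we must have:

$$\sum_{i=1}^{m} l_i a_{ij} \le L, \qquad a_{ij} \in \mathbb{Z}_{+}, \quad i = 1, \dots, m. \tag{1}$$

The decision variable $x_j$ is defined as the number of objects cut according to the cutting pattern $j$, $j = 1, \dots, n$. The classical Gilmore–Gomory ILP formulation proposed in [5,6] for the problem can be stated as:

$$\min \; \sum_{j=1}^{n} x_j \tag{2}$$

$$\text{s.t.} \quad \sum_{j=1}^{n} a_{ij} x_j \ge d_i, \quad i = 1, \dots, m, \tag{3}$$

$$x_j \in \mathbb{Z}_{+}, \quad j = 1, \dots, n. \tag{4}$$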

The objective function (2) minimises the total number of objects cut to satisfy the demands of each type of item. The constraints (3) ensure that the demand for each type of item is satisfied and the constraints (4) impose the domain of the decision variables. This model has a very strong relaxation. Indeed, the modified integer round-up property (MIRUP) [16] is conjectured to hold for the 1D-CSP, i.e., the optimal value of any instance of the problem is not greater than the optimal value of the linear programming relaxation of model (2)–(4) rounded up plus 1 (one). As a matter of fact, for most instances the gap between the optimal integer value and the optimal value of the relaxation is smaller than 1 (one). These are called integer round-up property (IRUP) instances. Conversely, instances with a gap greater than or equal to 1 (one) are called non-IRUP instances. In practice, when the quantities ordered for each item type are large, the solution to the linear programming relaxation is usually used to obtain a heuristic solution of good quality to the integer problem. However, if the number of items of each size is very small, the optimal fractional solution is of little use, and rounding heuristics may lead to very poor solutions [17].

It is important to mention that the number n of cutting patterns in model (2)–(4) is exponential in the number of items and, therefore, it is computationally infeasible to explicitly consider all cutting patterns in advance even for moderately sized instances. Nonetheless, the number of different patterns in a solution is relatively small and, usually, necessary patterns are generated during a CG process.

Alternative pseudo-polynomial ILP models, i.e., formulations in which the numbers of variables and constraints are polynomial in the number of items and in the object length L [15], were also investigated in the literature. In [17], an arc-flow formulation with side constraints was introduced. The model has a set of flow conservation constraints and a set of constraints to ensure that the demand is satisfied. Recently, an enhanced arc-flow formulation, called Reflect, was presented in [18]. In a branch-and-price framework, arc-flow formulations are commonly preferred because the implementation of the algorithm involves modifications to the subproblem that are conceptually simpler [19].

2.2. Column Generation

A classical approach commonly employed for solving many non-trivial optimisation problems, and particularly the CSP, is the CG technique [5,6]. The general idea is to initially consider only a subset of the cutting patterns of the original problem and iteratively add patterns that have the potential to improve the current value of the objective function. CG algorithms first define the continuous relaxation of model (2)–(4) by removing the integrality constraints on variables $x$ and heuristically initialise it with a restricted set of patterns that provides a feasible solution. For the sake of simplicity, in the following we use $P$ to denote both the set of patterns and the set of pattern indices. The resulting optimisation problem, called the restricted master problem (RMP), is as follows:

$$\min \; \sum_{j \in P} x_j \tag{5}$$

$$\text{s.t.} \quad \sum_{j \in P} a_{ij} x_j \ge d_i, \quad i = 1, \dots, m, \tag{6}$$

$$x_j \ge 0, \quad j \in P. \tag{7}$$

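Once model (5)–(7) has been solved, let $\pi_i$ be the dual variable associated with the $i$-th constraint (6). The existence of a column that could reduce the objective function value is determined by the reduced costs $1 - \sum_{i=1}^{m} \pi_i a_{ij}$, where $a_{ij}$ is the number of items of type $i$ in pattern $j$. The column with the most negative reduced cost may be determined by solving a bounded knapsack problem in which the profits are given by the dual variables $\pi_i$. Letting $y_i$ be the number of times item type $i$ (of length $l_i$ and demand $d_i$) is used, the pricing subproblem can be written as:

$$\max \; \sum_{i=1}^{m} \pi_i y_i \tag{8}$$

$$\text{s.t.} \quad \sum_{i=1}^{m} l_i y_i \le L, \tag{9}$$

$$y_i \in \{0, 1, \dots, d_i\}, \quad i = 1, \dots, m. \tag{10}$$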

If the solution to the pricing subproblem has a value greater than 1 (one), then the corresponding cutting pattern has negative reduced cost and it is added to the RMP. The process is iterated until no column with negative reduced cost is found, thus providing the optimal solution value to the continuous relaxation of model (2)–(4). Usually the solution found at the end of the CG method is fractional and, therefore, an additional effort is required to obtain a feasible integer solution. Rounding heuristics are commonly employed to perform this task, but their efficiency strongly depends on the instances at hand.
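As an illustration of the pricing step, the following minimal sketch solves a bounded knapsack by dynamic programming over the residual length. It assumes integer item lengths and an integer object length; the function name `price_pattern` and the numerical data in the usage note are hypothetical and are not taken from the experiments. It is not the pricing routine used by Coluna or CPLEX.

```python
from typing import List, Tuple


def price_pattern(lengths: List[int], demands: List[int],
                  duals: List[float], capacity: int) -> Tuple[float, List[int]]:
    """Bounded knapsack: maximise the total dual value of a cutting pattern.

    Assumes positive integer lengths and an integer capacity (assumption of
    this sketch). Returns the best value and the pattern that attains it.
    """
    m = len(lengths)
    # best[c] = (value, pattern) using at most c units of length and only
    # the item types processed so far.
    best = [(0.0, [0] * m) for _ in range(capacity + 1)]
    for i in range(m):
        new_best = list(best)  # zero copies of item type i
        for c in range(capacity + 1):
            max_copies = min(demands[i], c // lengths[i])
            for copies in range(1, max_copies + 1):
                value, pattern = best[c - copies * lengths[i]]
                candidate = value + copies * duals[i]
                if candidate > new_best[c][0] + 1e-12:
                    new_pattern = pattern.copy()
                    new_pattern[i] = copies
                    new_best[c] = (candidate, new_pattern)
        best = new_best
    return best[capacity]
```

For instance, with hypothetical lengths `[5, 3, 2]`, demands `[2, 3, 4]`, dual values `[0.5, 0.34, 0.2]` and an object of length 10, the sketch returns a pattern whose value exceeds 1, so the corresponding column would have negative reduced cost and would enter the RMP.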

Alternatively, one can embed the column generation lower bound into an enumeration tree, thus obtaining a branch-and-price algorithm [15]. However, degeneracy difficulties and long-tail effects are known to occur as problems become larger. In order to accelerate the convergence to the continuous optimal solution and stabilise the column generation approach, additional dual cuts, and methods to tighten lower and upper bounds on the dual variables were proposed in [20,21]. In [22], an exact solution approach based on cutting plane generation was introduced. The algorithm computes a lower bound by solving the continuous relaxation of the set covering formulation, and an upper bound by using heuristics. The method was embedded into a branch-and-price algorithm in [23].

2.3. Generate-and-Solve

The G&S framework was introduced in [7,8,9,10] to cope with hard combinatorial optimisation problems and it is based on problem instance reduction [24]. The general idea resides in the identification of a reduced sub-instance of a given problem instance, such that the sub-instance contains high-quality solutions to the original problem instance. This way, one could apply an ILP solver to the reduced sub-instance in order to obtain a high-quality solution to the original problem instance [25]. As a matter of fact, ILP solvers are highly effective for small to medium sized instances of hard problems and, in those cases in which a problem instance can be sufficiently reduced, they might be very efficient in solving the reduced problem instance.

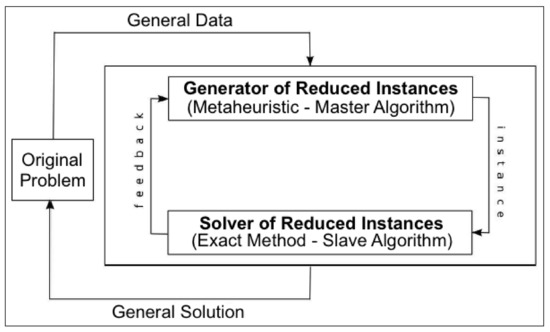

This is the underlying idea of the G&S, which prescribes the integration of two distinct conceptual components: the Generator of Reduced Instances (GRI) and the Solver of Reduced Instances (SRI), as illustrated in Figure 1. An exact method (e.g., ILP solver) encapsulated in the SRI component is responsible for solving reduced instances (i.e., subproblems) of the original problem that still preserve its conceptual structure. Thus, a feasible solution to a given subproblem will also be a feasible solution to the original problem. At a higher level, the GRI (e.g., a metaheuristic [26]) works on the complementary optimisation problem of generating reduced instances, which, when submitted to the SRI, produce feasible solutions whose objective function values can be used as a figure of merit (fitness) of the associated subproblems, thus guiding the search process. The interaction between GRI and SRI continues until a given stopping condition is satisfied. The best solution obtained by the solver to any of the subproblems generated by the GRI is considered to be the solution to the original problem instance.

Figure 1. Generate-and-solve framework.

In fact, advances in exact solution methods and hardware technology have encouraged a number of researchers to design heuristics that incorporate phases where ILP models are solved, the so-called matheuristics [27]. The relation between the original problem and the mathematical programming model incorporated in a matheuristic may vary significantly. For example, the aforementioned problem instance reduction idea is related to column generation-based matheuristics and set-covering approaches [28,29], in which the exact method is modified to speed up the convergence, thus losing the guarantee of optimality. A further advantage of column generation-based approaches is that they are flexible and easily adaptable to different problem characteristics.

As an example, a set-covering-based formulation was used to obtain a general heuristic approach for bin packing problems [30]. The approach operates in two phases that are heuristically performed. In the first phase (column generation), a very large number of feasible item-sets (columns) is generated, while in the second phase (column optimization) a feasible solution of the problem is obtained by solving the associated set-covering instance.

3. Materials and Methods

In this section, we first propose a procedure to generate a subset of cutting patterns and then propose an application of the G&S approach for tackling the problem.

3.1. Generation of Cutting Patterns

The task of generating all cutting patterns is computationally expensive, since the quantity n of patterns can be extremely large. The greater the variability of the length of the items and the smaller these items are in relation to the object size, the greater the complexity of this task. In what follows, we resort to a procedure for generating cutting patterns proposed by Suliman [31] and propose a strategy to artificially reduce the number of patterns considered in the ILP formulation.

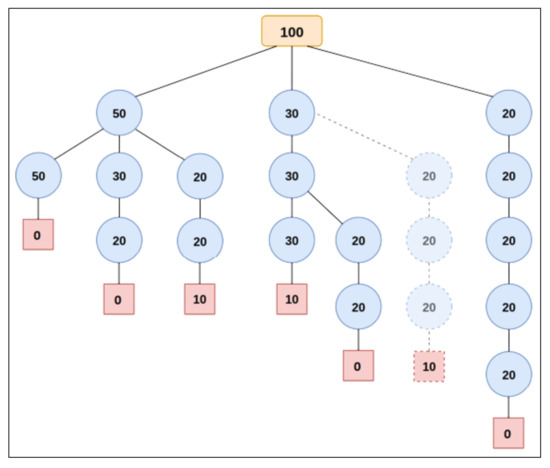

Consider an example in which the identical objects of length and a set of different types of items are given. The procedure starts by sorting the items in descending order of length , . In the running example, assume , , and . An m-ary search tree is used to illustrate the pattern generation procedure, as presented in Figure 2. The root node (in orange) represents the length of the large object, the internal nodes (in blue) represent the length of the items, and the leaf nodes (in red) indicate a cut loss. Any path from the root node down to a leaf represents a cutting pattern.

Figure 2. Example of a cutting pattern search tree.

Starting from the root node, the procedure recursively includes as a new branch every item that can be obtained from the residual length of the object. If it is no longer possible to include any of the items as a new branch of a given node, the leaf node is created, indicating the cut loss of the cutting pattern defined. The implementation, however, is optimised as in [31] so that the height of the search tree is limited to the number m of different types of items. In addition, in every cutting pattern, we limit the number of items of each type to their corresponding demand, because this reduces the search space of the continuous relaxation in instances where the demands are small.
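The recursion described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the optimised implementation of [31]: each level of the recursion fixes the number of copies of one item type (so the depth is at most m), copies are bounded by the demand, and a pattern is emitted only when no further item fits in the residual length. The function name `generate_patterns` and the data in the usage note are hypothetical and differ from the running example.

```python
from typing import Iterator, List


def generate_patterns(lengths: List[int], demands: List[int],
                      capacity: int) -> Iterator[List[int]]:
    """Enumerate maximal cutting patterns by depth-first search."""
    m = len(lengths)
    # Item types are visited in descending order of length.
    order = sorted(range(m), key=lambda i: -lengths[i])
    pattern = [0] * m

    def is_maximal(residual: int) -> bool:
        # No item can still be added: demand reached or item too long.
        return all(pattern[i] == demands[i] or lengths[i] > residual
                   for i in range(m))

    def extend(level: int, residual: int) -> Iterator[List[int]]:
        if level == m:
            if is_maximal(residual):
                yield pattern.copy()  # the residual is the cut loss
            return
        i = order[level]
        max_copies = min(demands[i], residual // lengths[i])
        # Larger counts first, so patterns appear from left to right.
        for copies in range(max_copies, -1, -1):
            pattern[i] = copies
            yield from extend(level + 1, residual - copies * lengths[i])
        pattern[i] = 0

    yield from extend(0, capacity)
```

With hypothetical lengths `[5, 3, 2]`, demands `[2, 2, 2]` and an object of length 10, the sketch yields four patterns, three of them with zero cut loss.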

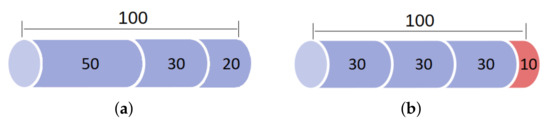

In the running example, there exist cutting patterns, depicted in the search tree, from left to right: , , , , , and . The patterns and are illustrated in Figure 3a,b, respectively.

Figure 3. Examples of cutting patterns. The items are drawn in blue and the cut loss is drawn in red. In (a), we illustrate the cutting pattern with cut loss equal to 0 and, in (b), the cutting pattern with cut loss equal to 10.

The number n of cutting patterns can grow exponentially according to the number m of item types, and, therefore, the large number of cutting patterns generally makes computation infeasible. To cope with this issue, we propose to limit the generation to a maximum number M of cutting patterns. More precisely, a maximum number of cutting patterns beginning with a given type of item i is computed as follows:

The recursive procedure to construct the search tree follows a depth-first strategy and includes the branches of a given node according to the ordering of the items. A new branch is added only if the limit has not been reached for patterns beginning with type of item i.
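The effect of the per-item limit can be illustrated with the sketch below. It assumes that the limits are already known and passed in as a dictionary (the formula that computes them is not reproduced here), and it filters a stream of patterns given in depth-first order instead of pruning inside the recursion; for patterns produced in that order the retained set is the same. The names `cap_patterns`, `order` and `limits` are hypothetical.

```python
from typing import Dict, Iterable, Iterator, List


def cap_patterns(patterns: Iterable[List[int]], order: List[int],
                 limits: Dict[int, int]) -> Iterator[List[int]]:
    """Keep at most limits[i] patterns whose first (longest) item is type i.

    `order` lists the item types in descending order of length and
    `limits` maps an item type to its (assumed) maximum number of patterns.
    """
    kept = {i: 0 for i in order}
    for pattern in patterns:
        # The pattern "begins with" the first item type in the ordering
        # that appears in it.
        first = next((i for i in order if pattern[i] > 0), None)
        if first is None:
            continue  # empty pattern, nothing is cut
        if kept[first] < limits.get(first, 0):
            kept[first] += 1
            yield pattern
```

For example, with the patterns `[[2, 0, 0], [1, 1, 1], [1, 0, 2], [0, 2, 2]]` and hypothetical limits `{0: 2, 1: 1, 2: 1}`, the third pattern is discarded because two patterns beginning with item type 0 have already been kept.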

In the running example, consider the value of . As we have , . In Figure 2, only the cutting patterns represented in solid lines are actually generated in the search tree. The branch represented in dashed lines is, in turn, pruned down. In this example, the cutting patterns is discarded.

3.2. Application of the Generate-and-Solve

Reduced instances of the 1D-CSP can be obtained by considering only a subset of the decision variables of the original problem instance, that is, only a subset of the feasible cutting patterns. The reduced instance is, therefore, an ILP model containing all the constraints present in the problem formulation, but only a subset of the cutting patterns, as in the CG technique. The choice of the subset of cutting patterns, however, is performed through a metaheuristic engine.

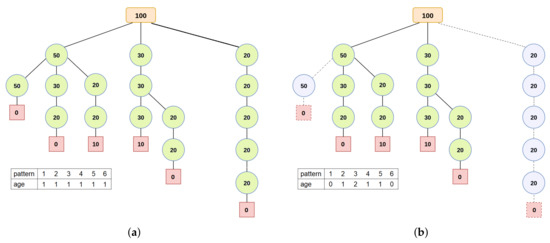

To explain the representation of a reduced instance, we refer to the running example discussed previously. Recall that, in order to deal with large sized instances of the problem, we employ a strategy for reducing the number of patterns. Thus, in Figure 4, we represent only the cutting patterns that were not discarded when applying this strategy.

Figure 4. In the representation of reduced instances of the problem, each position in the array is associated with a cutting pattern in the search tree. The cutting patterns considered in the reduced instance present a value greater than or equal to 1 (one) in the array and are highlighted in green in the search tree. In (a), we illustrate a reduced instance with all cutting patterns from the search tree and, in (b), a reduced instance with only 4 cutting patterns.

The representation of a reduced instance of the problem is performed using an array of integers. Each position in the array is associated with a cutting pattern in the search tree. In this representation, a value greater than or equal to 1 (one) indicates that the associated cutting pattern (decision variable) is considered in the reduced instance (ILP model). Conversely, a value of 0 (zero) indicates that the associated cutting pattern is not taken into account in the reduced instance.

In addition, the value is used as an aging mechanism [25] to control the growth of reduced instances. Whenever a new cutting pattern is incorporated into the reduced instance, its age is set to 1 (one) and, at each iteration of the G&S method, the age of every cutting pattern that is part of the reduced instance is increased by 1 (one). If the age of a cutting pattern reaches a maximum age value , the value is reset to 0 (zero), that is, the pattern is removed from the reduced instance to prevent unpromising cutting patterns from unnecessarily impacting the efficiency of the ILP solver. However, whenever a cutting pattern is effectively used in the solution returned by the ILP solver, its age is reset to 1 (one) to ensure that this pattern is considered in the next iterations of the G&S method.
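A minimal sketch of one aging step is given below. The order in which the rules are applied (reset of used patterns first, then increment, then removal at the maximum age) is an assumption of this sketch, and the names `age_instance`, `used` and `max_age` are hypothetical.

```python
from typing import List, Set


def age_instance(ages: List[int], used: Set[int], max_age: int) -> List[int]:
    """Apply one iteration of the aging mechanism to a reduced instance.

    ages[j] == 0 means pattern j is not in the reduced instance;
    `used` holds the indices of patterns in the solver's solution.
    """
    updated = []
    for j, age in enumerate(ages):
        if age == 0:
            updated.append(0)        # not part of the reduced instance
        elif j in used:
            updated.append(1)        # used in the solution: age reset
        elif age + 1 >= max_age:
            updated.append(0)        # maximum age reached: pattern removed
        else:
            updated.append(age + 1)  # one iteration older
    return updated
```

For instance, with ages `[0, 1, 3, 2]`, a solution that uses only the last pattern, and a maximum age of 4, the result is `[0, 2, 0, 1]`.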

In order to obtain an initial reduced instance that produces a feasible solution when submitted to the ILP solver, the following greedy strategy was adopted to select the cutting patterns. Considering the cutting pattern search tree from left to right, the current cutting pattern is added to the instance under construction if it contains an item that has not yet been covered by any other pattern. The process continues until all items have been covered by the patterns added to the instance. Then, the reduced instance is submitted to the SRI to obtain an initial solution. From then on, the aging mechanism guarantees the existence of a feasible solution in all reduced instances submitted to the SRI. In addition, at each iteration of the G&S method, the current instance contains a solution that is at least as good as the previous ones.
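The greedy construction can be sketched as follows, assuming the patterns are supplied in the left-to-right order of the search tree and that every item type appears in at least one pattern. The function name `initial_instance` is hypothetical.

```python
from typing import List


def initial_instance(patterns: List[List[int]]) -> List[int]:
    """Greedy cover: select patterns until every item type is covered.

    Returns the array of ages, with 1 for selected patterns and 0 otherwise.
    """
    if not patterns:
        return []
    m = len(patterns[0])
    covered = set()
    ages = [0] * len(patterns)
    for j, pattern in enumerate(patterns):
        items = {i for i, count in enumerate(pattern) if count > 0}
        if items - covered:      # the pattern covers a new item type
            ages[j] = 1
            covered |= items
        if len(covered) == m:    # all item types are covered
            break
    return ages
```

With the hypothetical patterns `[[2, 0, 0], [1, 1, 1], [1, 0, 2], [0, 2, 2]]`, the first two patterns already cover all three item types, so the initial array is `[1, 1, 0, 0]`.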

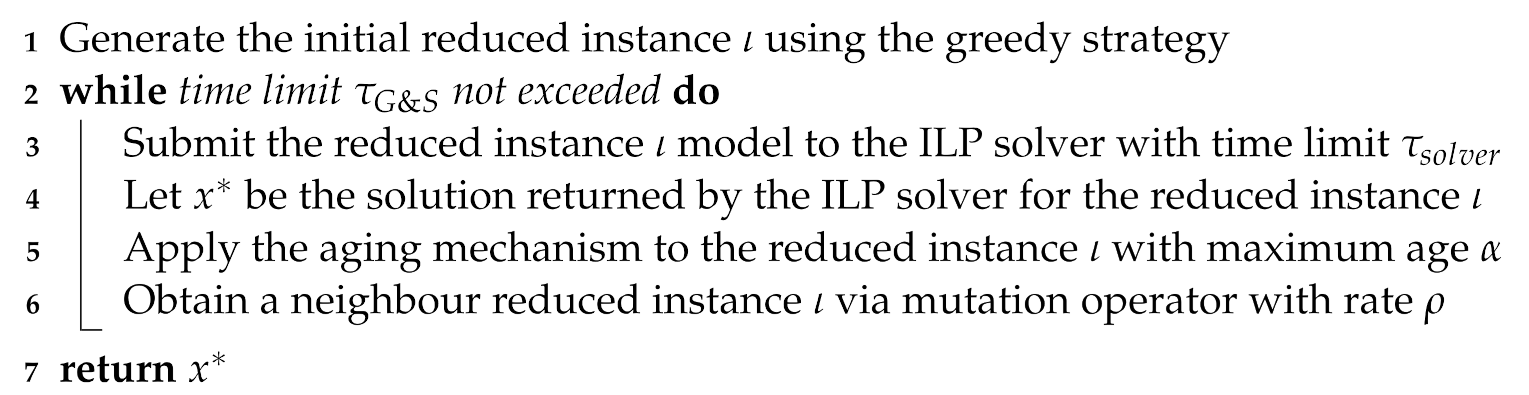

For the GRI, a simple hill climbing algorithm was implemented, as illustrated in Algorithm 1. A neighbour instance is obtained from the application of a mutation operator. Considering a mutation rate , the mutation operator, when effectively applied to a position of the array, modifies the value to 1 (one) whenever the original value is 0 (zero), and, conversely, modifies the value to 0 (zero) whenever the original value is greater than 1 (one). Note that, when the original value is 1 (one), the value remains unchanged in order to ensure that the neighbour instance preserves the current feasible solution.
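The mutation operator can be sketched as below, assuming that each position of the array is selected independently with probability equal to the mutation rate. The names `mutate`, `rate` and `rng` are hypothetical.

```python
import random
from typing import List


def mutate(ages: List[int], rate: float, rng: random.Random) -> List[int]:
    """Return a neighbour instance obtained by mutating the array of ages."""
    neighbour = []
    for age in ages:
        if rng.random() < rate:
            if age == 0:
                neighbour.append(1)    # pattern enters the reduced instance
            elif age > 1:
                neighbour.append(0)    # pattern leaves the reduced instance
            else:
                neighbour.append(age)  # age 1 is kept to preserve the solution
        else:
            neighbour.append(age)      # position not selected for mutation
    return neighbour
```

With a rate of 1.0 every position is selected, so `[0, 1, 2, 5]` becomes `[1, 1, 0, 0]`; with a rate of 0.0 the array is returned unchanged.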

Algorithm 1. Pseudocode of the generate-and-solve method implemented.

At each iteration of the G&S method, a new reduced instance is generated and submitted to the SRI to obtain a new best solution. For each reduced instance, we adopt a time limit of computation of the ILP solver. Furthermore, we consider an execution time limit as a stopping criterion for the G&S method.
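The overall loop can be sketched as follows. This is only an illustration under several assumptions and should not be read as Algorithm 1 itself: the ILP solver is abstracted as a hypothetical callable `solve`, the execution time limit is replaced by a fixed number of iterations so that the sketch is deterministic, a neighbour is accepted when its solution is not worse, and aging is applied only to accepted neighbours.

```python
import random
from typing import Callable, List, Optional, Set, Tuple

# Hypothetical solver interface: receives the indices of the active patterns
# and returns (number of objects, indices of patterns used), or None when no
# feasible solution is found within the solver time limit.
Solver = Callable[[List[int]], Optional[Tuple[int, Set[int]]]]


def generate_and_solve(initial_ages: List[int], solve: Solver, rate: float,
                       max_age: int, iterations: int,
                       seed: int = 0) -> Tuple[int, Set[int]]:
    rng = random.Random(seed)
    ages = list(initial_ages)
    result = solve([j for j, age in enumerate(ages) if age > 0])
    if result is None:
        raise ValueError("the initial reduced instance must be feasible")
    best_value, best_used = result

    for _ in range(iterations):
        # GRI step: mutate the current instance; positions with age 1 are
        # never changed, so the current solution remains available.
        neighbour = []
        for age in ages:
            if rng.random() < rate and age != 1:
                neighbour.append(1 if age == 0 else 0)
            else:
                neighbour.append(age)

        # SRI step: solve the neighbour reduced instance.
        result = solve([j for j, age in enumerate(neighbour) if age > 0])
        if result is None:
            continue
        value, used = result

        # Hill climbing (minimisation): keep the neighbour if not worse.
        if value <= best_value:
            best_value, best_used = value, used
            # Aging: reset used patterns, age the others, drop old ones.
            ages = []
            for j, age in enumerate(neighbour):
                if j in used:
                    ages.append(1)
                elif age == 0 or age + 1 >= max_age:
                    ages.append(0)
                else:
                    ages.append(age + 1)
    return best_value, best_used
```

In an actual implementation, `solve` would build the ILP model restricted to the active patterns and call the solver with its own time limit, and the loop would stop on the overall execution time limit.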

4. Results

We employed IBM ILOG CPLEX 22.1 [11] to solve the ILP models and used Coluna 0.4.2 (JuMP 1.1.0 and Julia 1.8) [12] to run the CG technique. Coluna implements a default CG procedure and provides dual and primal bounds for each iteration of the method. We set CPLEX as underlying ILP solver to handle master and subproblem. For the search tree, we considered a maximum number of cutting patterns. The hill climbing algorithm was implemented in Java language and, after preliminary tests, the values of the parameters of the G&S method were chosen: s, s, , and . The computational experiments were performed on Intel Core i7 7500U CPU 2.70 GHz 8 GB RAM machines. Benchmark instances from the literature [3], divided into 5 classes, were used to carry out a comparative analysis of the performance of the different methods. We set an execution timeout of 600 s for all methods.

The comparative results are presented in Table 1, Table 2, Table 3, Table 4 and Table 5. Along with the characterisation of the instances, we present the computational results for the different methods. Since G&S is stochastic, we provide the best and average values over 10 (ten) executions of this method, besides the average time to best (), i.e., the elapsed time until finding the best solution of an execution. For the CG algorithm, we provide the dual lower bound and the primal upper bound . Note that, when the CG algorithm stops before convergence due to the time limit of 600 s, the dual bound may not represent the objective value of the linear relaxation of the original ILP formulation. For each instance, we mark the proven optimal solutions with the symbol and highlight in boldface the best solutions found among those obtained by the competing approaches. In particular, in Table 1, we also present computational results for the ILP formulations proposed by Gilmore and Gomory [5,6] (GG-ILP) and Delorme and Iori [18] (REFLECT-ILP), in an attempt to investigate the scalability of the exact approach. In this table, and define, respectively, a primal lower bound and a primal upper bound for the ILP models.

Table 1. Results for the set of instances of class U.

Table 2. Results for the set of instances of class hard28.

Table 3. Results for the set of instances of class 7hard.

Table 4. Results for the set of instances of class hard10.

Table 5. Results for the set of instances of class wae_gau1.

The computational results for the set of instances of class U are presented in Table 1. The characteristics of these instances are as follows: the number of types of items ranges from 15 to 1005; the length of each item is between 31.66% and 35.01% of the length of the object; and the demand multiplicity (i.e., the average demand per item type), calculated as the average value among all instances of the class, is unitary. CPLEX was able to obtain feasible solutions to the GG-ILP formulation only for the instances with up to 495 item types. For the small sized instances, GG-ILP obtained proven optimal solutions very fast. The REFLECT-ILP, in turn, obtained feasible solutions for the instances with up to 675 item types. Despite the fact that REFLECT-ILP proved to be more time consuming for small sized instances compared to GG-ILP, CPLEX scaled better with this formulation. CG and G&S methods were able to find feasible solutions for all instances of this class. CG proved to be the most effective and time efficient for small sized instances (with up to 405 item types). It is important to remark that, for medium sized instances, CG outperforms the G&S approach. G&S obtained optimal solutions for instances with up to 285 item types. In addition, for large sized instances, G&S provided the best feasible solutions, and, therefore, the strategy of artificially limiting the number of cutting patterns proved to be very effective.

The set of instances of class hard28, presented in Table 2, has between 136 and 189 types of items. In these instances, the length of each item is between 0.1% and 80.0% of the length of the object and the demand multiplicity is 1.1221. The demands vary from 1 (one) to 3 (three). The wide variety of the length of the items in relation to the length of the object stands out. The CG method obtained the proven optimal solution for 15 out of 28 instances. This method, however, was not able to provide a feasible solution for the instance BPP195, which does not have any particular characteristic that justifies this behaviour. Conversely, G&S obtained a better solution for 13 out of 28 instances of this class. For the other instances (except instance BPP900), the difference in the quality of the solution obtained by the G&S approach and the proven optimal solution was limited to 1 (one) additional object. It is also important to remark that the elapsed time until finding the best solution is relatively small, suggesting that convergence was reached very fast.

In Table 3, the computational results for the set of instances of class 7hard are presented. These instances are characterised as follows: the number of types of items ranges from 85 to 143; the length of each item is between 1.7% and 80.0% of the length of the object; and the demand multiplicity is 1.1027 units per item type (varying from 1 to 4). As in the set of instances of class hard28, there is a wide variety of the length of the items, although the ratio for the smallest length is a little larger. Note, also, that the number of types of items is smaller here. For these instances, the CG method provided the proven optimal solution for 5 instances. G&S obtained a better solution for 2 out of 7 instances of this class. Again, for the other 5 instances, the solution obtained by the G&S approach differs from the proven optimal solution by 1 (one) additional object. The convergence of both algorithms is very fast.

The set of instances of class hard10, presented in Table 4, has between 197 and 200 types of items. In these instances, the length of each item is between 20% and 35% of the length of the object and the demand multiplicity is 1.0050 units per item type (varying from 1 to 3). The number of types of items is greater than in the two previous classes, but there is a significantly smaller variation in the length of the items. For all instances of this class, G&S outperformed the CG method, and its solutions required 1 (one) additional object compared with the dual lower bound provided by the CG algorithm (except for instance HARD7). It was also noticed that CG was not able to obtain a feasible solution for the instance HARD4.

Finally, the computational results for the set of instances of class wae_gau1 are presented in Table 5. These instances are characterised as follows: the number of types of items ranges from 33 to 64; the length of each item is between 0.02% and 73.32% of the length of the object; and the demand multiplicity is 2.5974 (varying from 1 to 38). The number of item types is lower than in the other classes of instances, but the demand multiplicity is large. In addition, the variation in the length of the items is huge. For these instances, G&S significantly outperformed CG. The solutions obtained by the G&S method differed by at most 1 (one) from the lower bound provided by the CG algorithm (except for instance WAE_GAU1_TEST0055_2). In fact, the CG method was only able to handle 4 out of 17 instances of this class. Again, the strategy of artificially limiting the number of cutting patterns proved to be effective.

5. Conclusions

The computational effort for solving the 1D-CSP is largely affected by the number of cutting patterns. In order to cope with large instances of the problem, we proposed a strategy to restrict the number of patterns to be generated while applying a pattern generating procedure. Using benchmark instances, computational experiments were performed for ILP models, an implementation of the CG technique, and an application of the G&S framework. Our experimental analysis shows that the exact approach was only able to cope well with small and medium sized instances. In addition, neither CG nor G&S outperformed the other on all instances. In fact, the effectiveness of these methods varied according to the characteristics of the instances, such as the number of item types, the size of the items in relation to the size of the object, and the demand multiplicity. Nonetheless, G&S performed well for all classes, obtaining quasi-optimal solutions for the majority of the instances. In addition, the strategy of artificially reducing the number of cutting patterns proved to be very effective.

It is important to remark, however, that G&S does not present any sort of guarantee of convergence and the optimality gap cannot be estimated by the G&S method alone. On the other hand, CG was able to produce strong lower bounds fast. Therefore, G&S could be integrated with CG to speed up the convergence of branch-and-price algorithms, especially when CG fails to produce good-quality integer feasible solutions. Future research is also envisaged to adapt the proposed framework using arc-flow formulations, which have been shown to be appealing in branch-and-price algorithms.

Author Contributions

Conceptualization, N.N.; methodology, N.N.; software, J.V.S.S.; validation, J.V.S.S.; formal analysis, N.N. and J.V.S.S.; investigation, N.N. and J.V.S.S.; resources, N.N.; data curation, J.V.S.S.; writing—original draft preparation, N.N. and J.V.S.S.; writing—review and editing, N.N.; visualization, N.N. and J.V.S.S.; supervision, N.N.; project administration, N.N.; funding acquisition, N.N. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by Fundação Edson Queiroz and Universidade de Fortaleza.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets considered in this study are publicly available at https://www.euro-online.org/websites/esicup (accessed on 11 September 2022).

Acknowledgments

The authors acknowledge Fundação Edson Queiroz and Universidade de Fortaleza for their administrative support, and thank three anonymous referees for their helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| 1D-CSP | One-Dimensional Cutting Stock Problem |
| CG | Column Generation |
| CSP | Cutting Stock Problem |
| G&S | Generate-and-Solve |
| GRI | Generator of Reduced Instances |
| ILP | Integer Linear Programming |
| SRI | Solver of Reduced Instances |

References

- Sweeney, P.E.; Paternoster, E.R. Cutting and Packing Problems: A Categorized, Application-Orientated Research Bibliography. J. Oper. Res. Soc. 1992, 43, 691–706.

- Arai, H.; Haraguchi, H. A Study of Ising Formulations for Minimizing Setup Cost in the Two-Dimensional Cutting Stock Problem. Algorithms 2021, 14, 182.

- ESICUP: Working Group on Cutting and Packing. Data Sets. Available online: https://www.euro-online.org/websites/esicup (accessed on 11 September 2022).

- Dantzig, G.B.; Wolfe, P. Decomposition Principle for Linear Programs. Oper. Res. 1960, 8, 101–111.

- Gilmore, P.C.; Gomory, R.E. A Linear Programming Approach to the Cutting-Stock Problem. Oper. Res. 1961, 9, 849–859.

- Gilmore, P.C.; Gomory, R.E. A Linear Programming Approach to the Cutting Stock Problem—Part II. Oper. Res. 1963, 11, 863–888.

- Nepomuceno, N.; Pinheiro, P.R.; Coelho, A.L. Tackling the container loading problem: A hybrid approach based on integer linear programming and genetic algorithms. In Proceedings of the European Conference on Evolutionary Computation in Combinatorial Optimization, Valencia, Spain, 11–13 April 2007; Springer: Berlin, Germany, 2007; pp. 154–165.

- Nepomuceno, N.; Pinheiro, P.R.; Coelho, A.L. A Hybrid Optimization Framework for Cutting and Packing Problems. In Recent Advances in Evolutionary Computation for Combinatorial Optimization; Springer: Berlin, Germany, 2008; pp. 87–99.

- Saraiva, R.D.; Nepomuceno, N.; Pinheiro, P.R. The generate-and-solve framework revisited: Generating by simulated annealing. In Proceedings of the European Conference on Evolutionary Computation in Combinatorial Optimization, Vienna, Austria, 3–5 April 2013; Springer: Berlin, Germany, 2013; pp. 262–273.

- Dias Saraiva, R.; Nepomuceno, N.; Rogério Pinheiro, P. A Two-Phase Approach for Single Container Loading with Weakly Heterogeneous Boxes. Algorithms 2019, 12, 67.

- IBM ILOG CPLEX. 12.8 User’s Manual; IBM: Armonk, NY, USA, 2017.

- Marques, G.; Vanderbeck, F. Coluna: An Open-Source Branch-Cut-and-Price Framework. Available online: https://github.com/atoptima/Coluna.jl (accessed on 11 September 2022).

- Kantorovich, L.V. Mathematical Methods of Organizing and Planning Production. Manag. Sci. 1960, 6, 366–422.

- Wäscher, G.; Haußner, H.; Schumann, H. An improved typology of cutting and packing problems. Eur. J. Oper. Res. 2007, 183, 1109–1130.

- Delorme, M.; Iori, M.; Martello, S. Bin packing and cutting stock problems: Mathematical models and exact algorithms. Eur. J. Oper. Res. 2016, 255, 1–20.

- Scheithauer, G.; Terno, J. The modified integer round-up property of the one-dimensional cutting stock problem. Eur. J. Oper. Res. 1995, 84, 562–571.

- Valério de Carvalho, J. Exact solution of bin-packing problems using column generation and branch-and-bound. Ann. Oper. Res. 1999, 86, 629–659.

- Delorme, M.; Iori, M. Enhanced Pseudo-polynomial Formulations for Bin Packing and Cutting Stock Problems. INFORMS J. Comput. 2020, 32, 101–119.

- Valério de Carvalho, J. LP models for bin packing and cutting stock problems. Eur. J. Oper. Res. 2002, 141, 253–273.

- Ben Amor, H.; Desrosiers, J.; Valério de Carvalho, J.M. Dual-Optimal Inequalities for Stabilized Column Generation. Oper. Res. 2006, 54, 454–463.

- Clautiaux, F.; Alves, C.; Valério de Carvalho, J.; Rietz, J. New Stabilization Procedures for the Cutting Stock Problem. INFORMS J. Comput. 2011, 23, 530–545.

- Scheithauer, G.; Terno, J.; Müller, A.; Belov, G. Solving one-dimensional cutting stock problems exactly with a cutting plane algorithm. J. Oper. Res. Soc. 2001, 52, 1390–1401.

- Belov, G.; Scheithauer, G. A branch-and-cut-and-price algorithm for one-dimensional stock cutting and two-dimensional two-stage cutting. Eur. J. Oper. Res. 2006, 171, 85–106.

- Blum, C.; Raidl, G.R. Hybridization Based on Problem Instance Reduction. In Hybrid Metaheuristics: Powerful Tools for Optimization; Springer: Cham, Switzerland, 2016; pp. 45–62.

- Blum, C.; Pinacho, P.; López-Ibáñez, M.; Lozano, J.A. Construct, Merge, Solve & Adapt: A new general algorithm for combinatorial optimization. Comput. Oper. Res. 2016, 68, 75–88.

- Hussain, K.; Mohd Salleh, M.N.; Cheng, S.; Shi, Y. Metaheuristic research: A comprehensive survey. Artif. Intell. Rev. 2019, 52, 2191–2233.

- Archetti, C.; Speranza, M. A survey on matheuristics for routing problems. EURO J. Comput. Optim. 2014, 2, 223–246.

- Ball, M.O. Heuristics based on mathematical programming. Surv. Oper. Res. Manag. Sci. 2011, 16, 21–38.

- Doerner, K.F.; Schmid, V. Survey: Matheuristics for Rich Vehicle Routing Problems. In Proceedings of the Hybrid Metaheuristics, Vienna, Austria, 1–2 October 2010; Blesa, M.J., Blum, C., Raidl, G., Roli, A., Sampels, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 206–221.

- Monaci, M.; Toth, P. A Set-Covering-Based Heuristic Approach for Bin-Packing Problems. INFORMS J. Comput. 2006, 18, 71–85.

- Suliman, S.M. Pattern generating procedure for the cutting stock problem. Int. J. Prod. Econ. 2001, 74, 293–301.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).