Ensembling EfficientNets for the Classification and Interpretation of Histopathology Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Deep Learning Methods

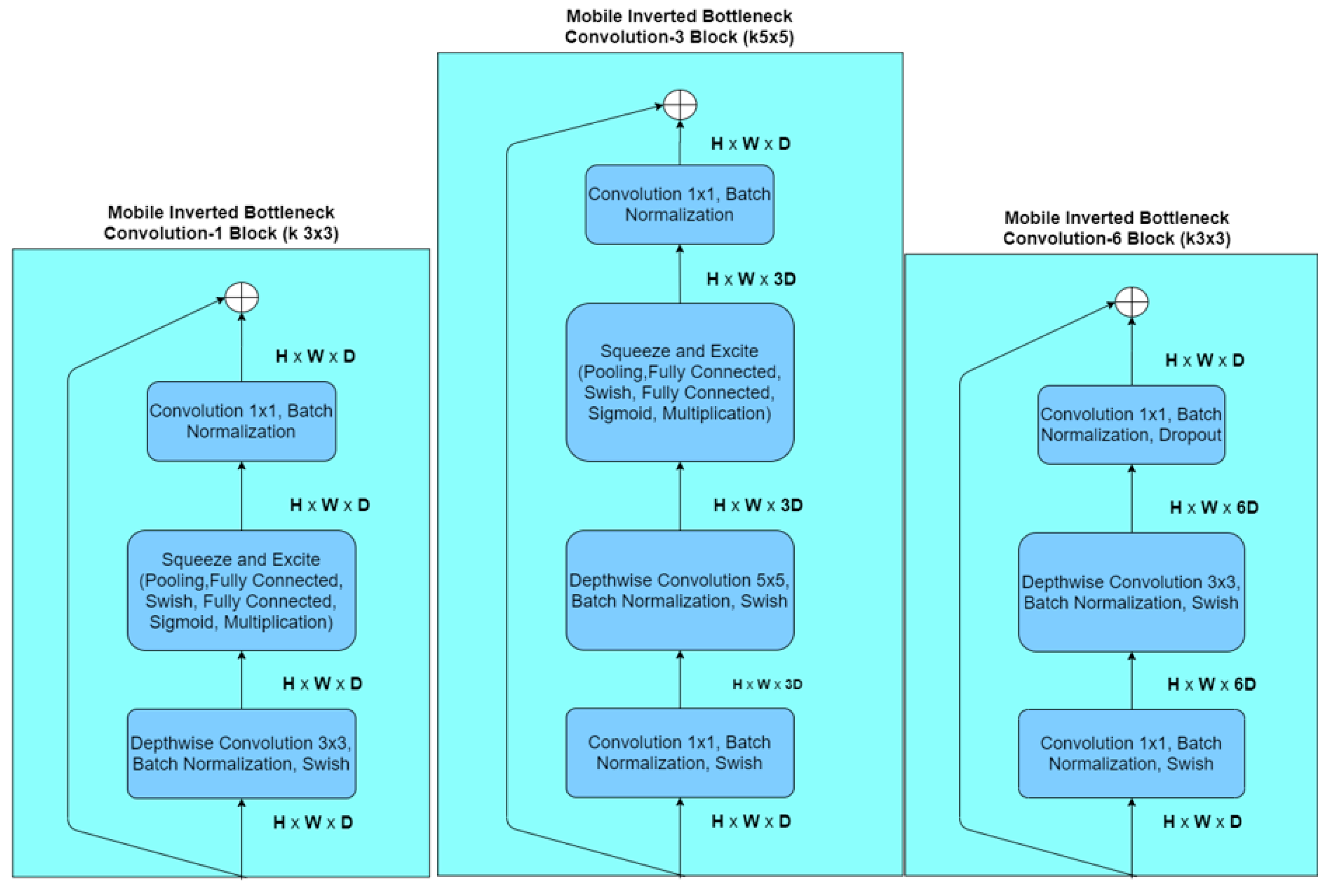

2.1.1. EfficientNets

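$$d = \alpha^{\phi}$$

$$w = \beta^{\phi}$$

$$r = \gamma^{\phi}$$

$$\text{s.t.} \quad \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2$$

$$\alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1$$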
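The compound-scaling rule can be made concrete with a few lines of code. The sketch below evaluates the depth, width and resolution multipliers for a compound coefficient φ. It assumes the grid-searched base values α = 1.2, β = 1.1 and γ = 1.15 reported by Tan and Le for EfficientNet-B0, and the function names are illustrative. Because FLOPs grow roughly with d·w²·r², the constraint α·β²·γ² ≈ 2 means that each unit increase of φ roughly doubles the computational cost.

```python
# Base coefficients reported by Tan and Le (2019) for EfficientNet-B0.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15
BASE_INPUT = 224  # EfficientNet-B0 input resolution in pixels


def compound_scale(phi: float) -> dict:
    """Multipliers for a compound coefficient phi, plus the approximate FLOPs factor."""
    depth = ALPHA ** phi       # d = alpha^phi (number of layers)
    width = BETA ** phi        # w = beta^phi (number of channels)
    resolution = GAMMA ** phi  # r = gamma^phi (input height and width)
    # FLOPs grow roughly with d * w^2 * r^2 = (alpha * beta^2 * gamma^2)^phi, i.e. about 2^phi.
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return {
        "depth": depth,
        "width": width,
        "resolution": resolution,
        "input_size": round(BASE_INPUT * resolution),
        "flops": flops,
    }


def satisfies_constraint(alpha: float, beta: float, gamma: float,
                         target: float = 2.0, tol: float = 0.1) -> bool:
    """Check alpha, beta, gamma >= 1 and alpha * beta^2 * gamma^2 close to target."""
    if min(alpha, beta, gamma) < 1:
        return False
    return abs(alpha * beta ** 2 * gamma ** 2 - target) <= tol


for phi in range(4):
    s = compound_scale(phi)
    print(f"phi={phi}: depth x{s['depth']:.2f}, width x{s['width']:.2f}, "
          f"input {s['input_size']} px, FLOPs x{s['flops']:.2f}")
```

With these base values α·β²·γ² ≈ 1.92, inside the assumed tolerance. The released B1–B7 variants use rounded, hand-adjusted coefficients, so these multipliers only approximate the published models.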

2.1.2. InceptionNet, XceptionNet, ResNet

2.1.3. Ensemble Classifiers

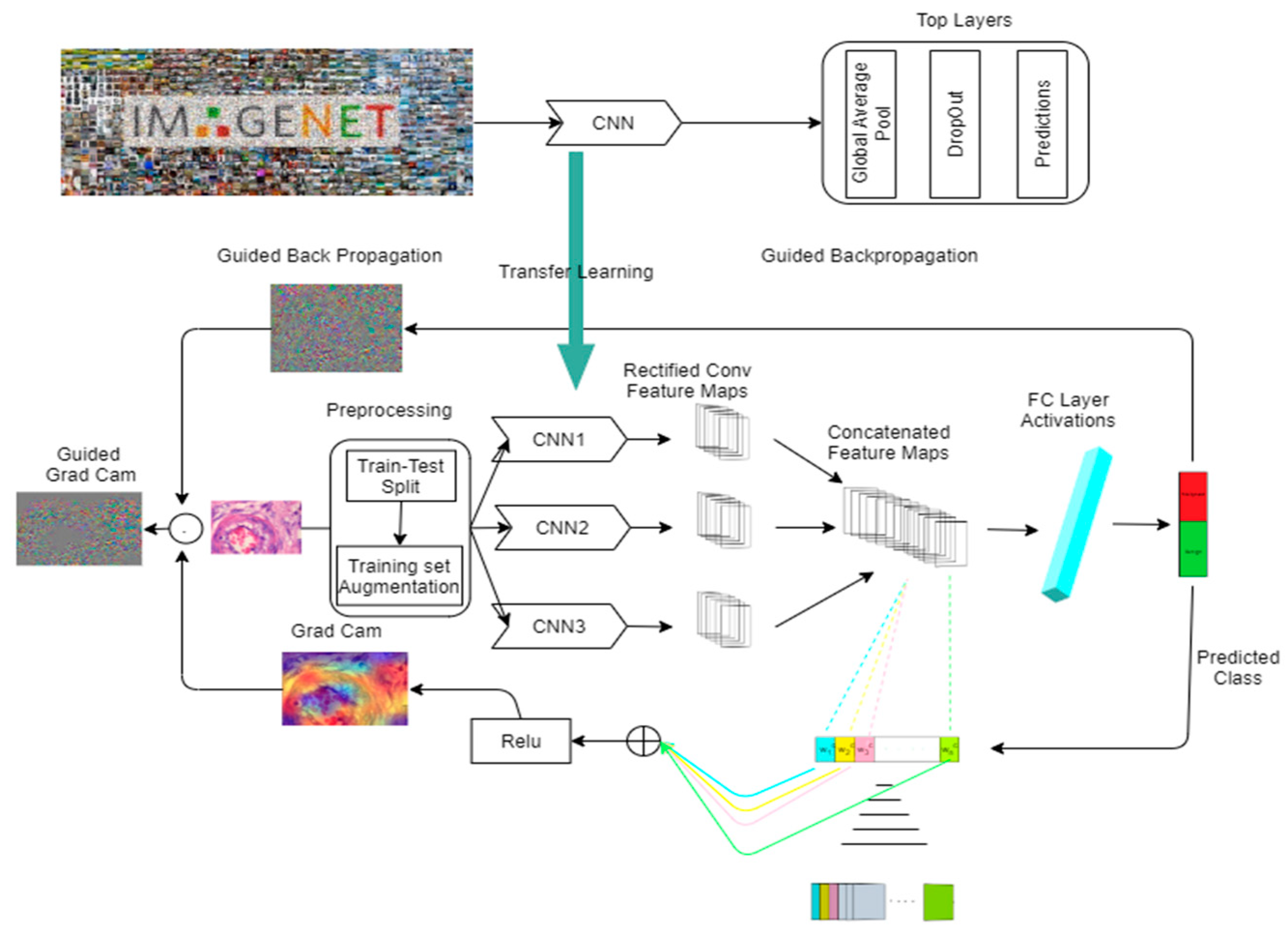

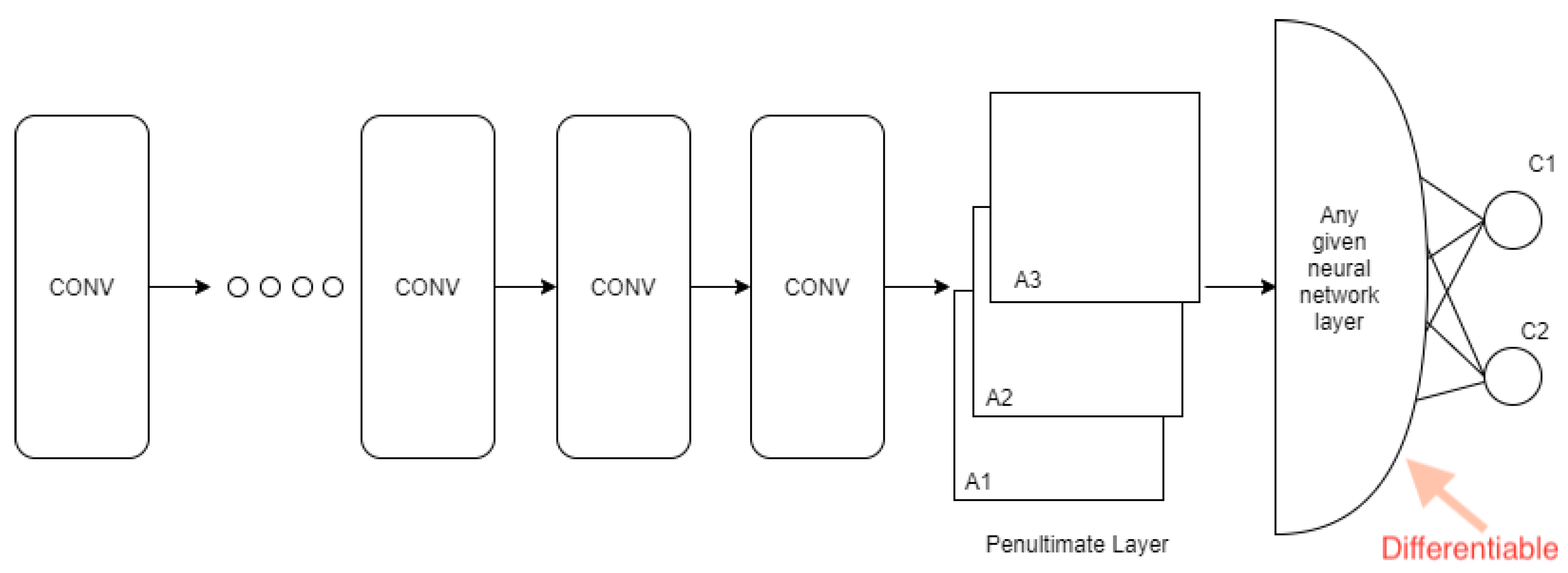

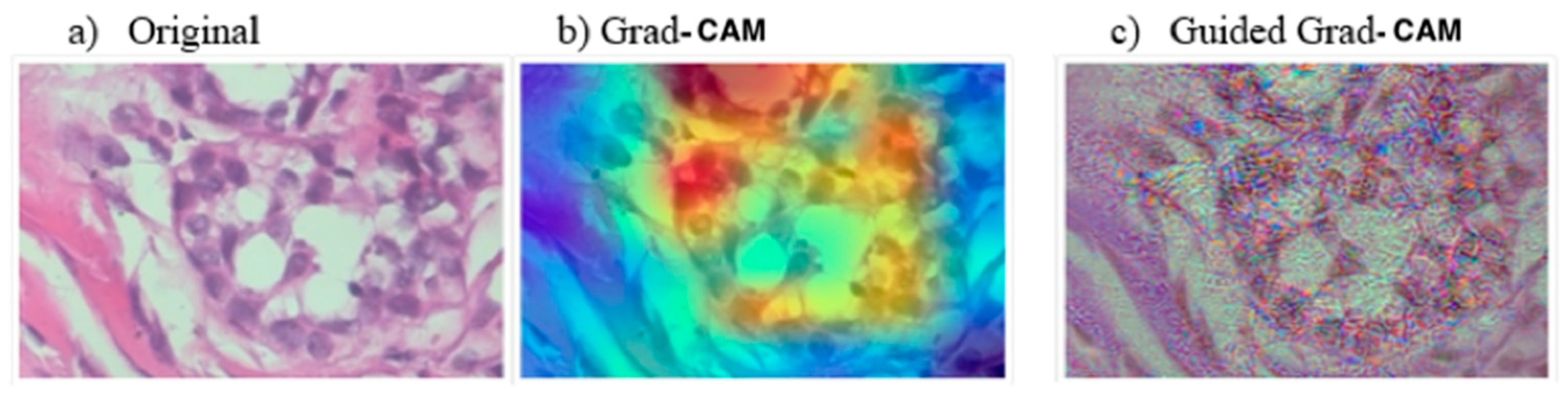

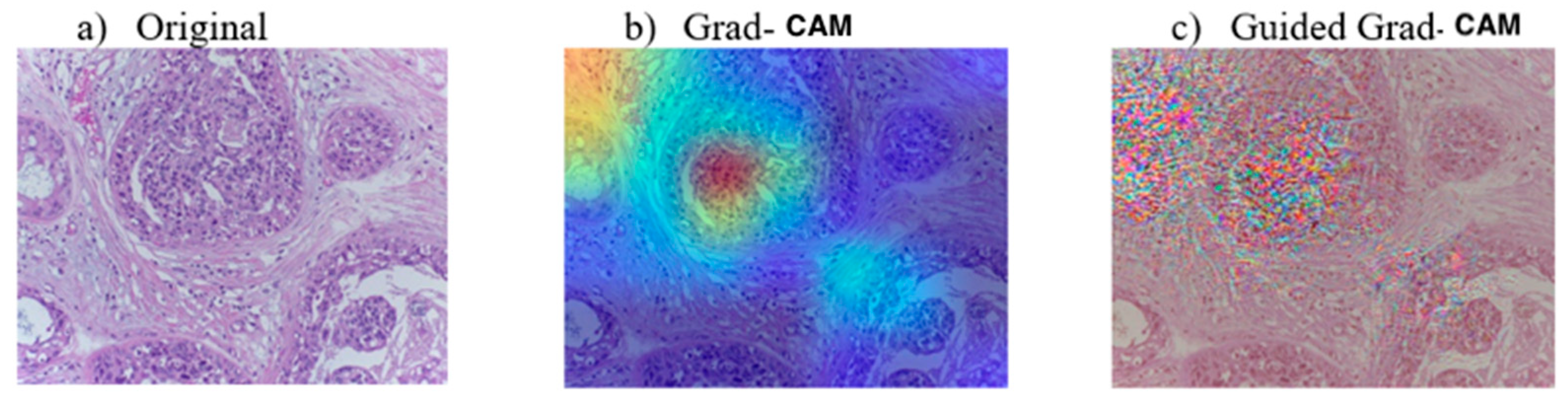

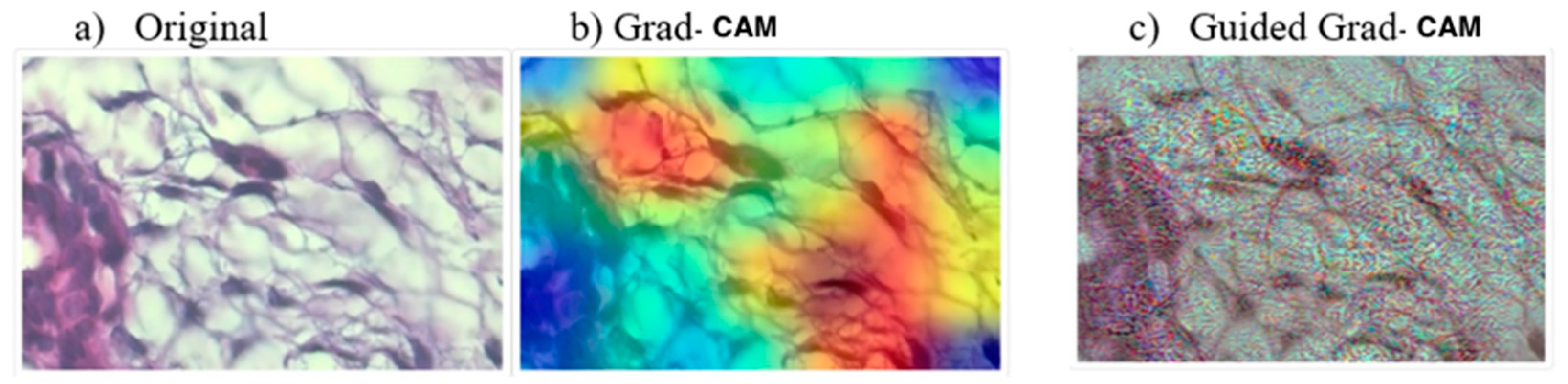

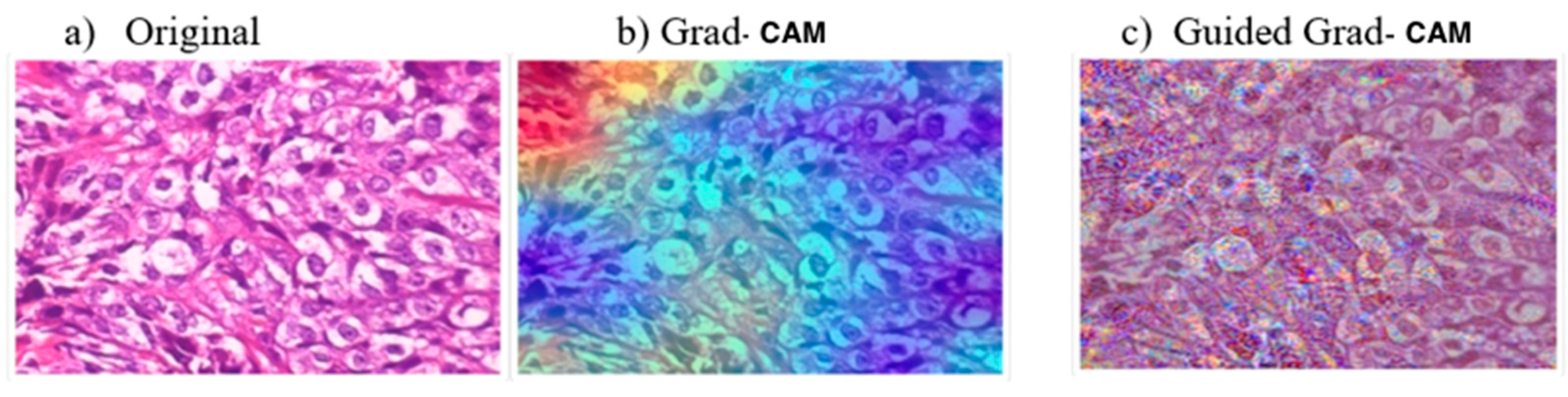

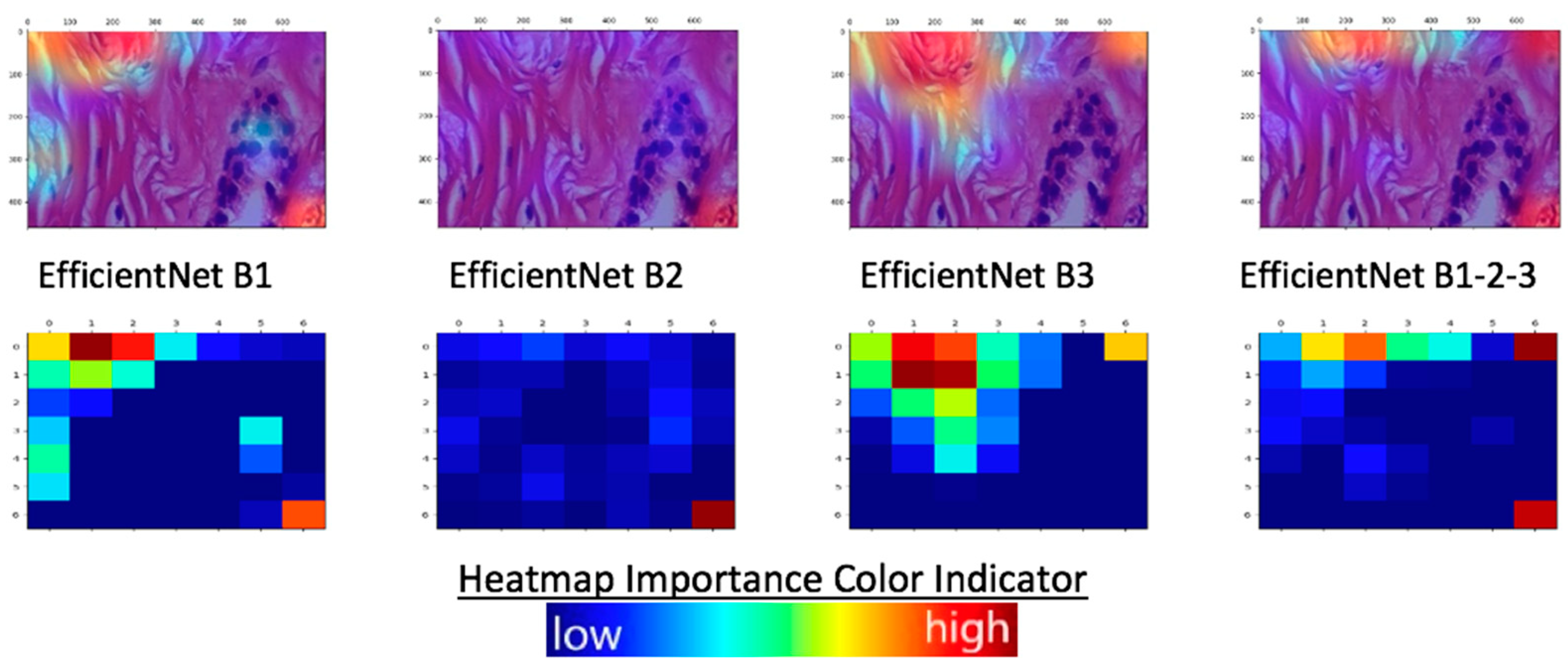

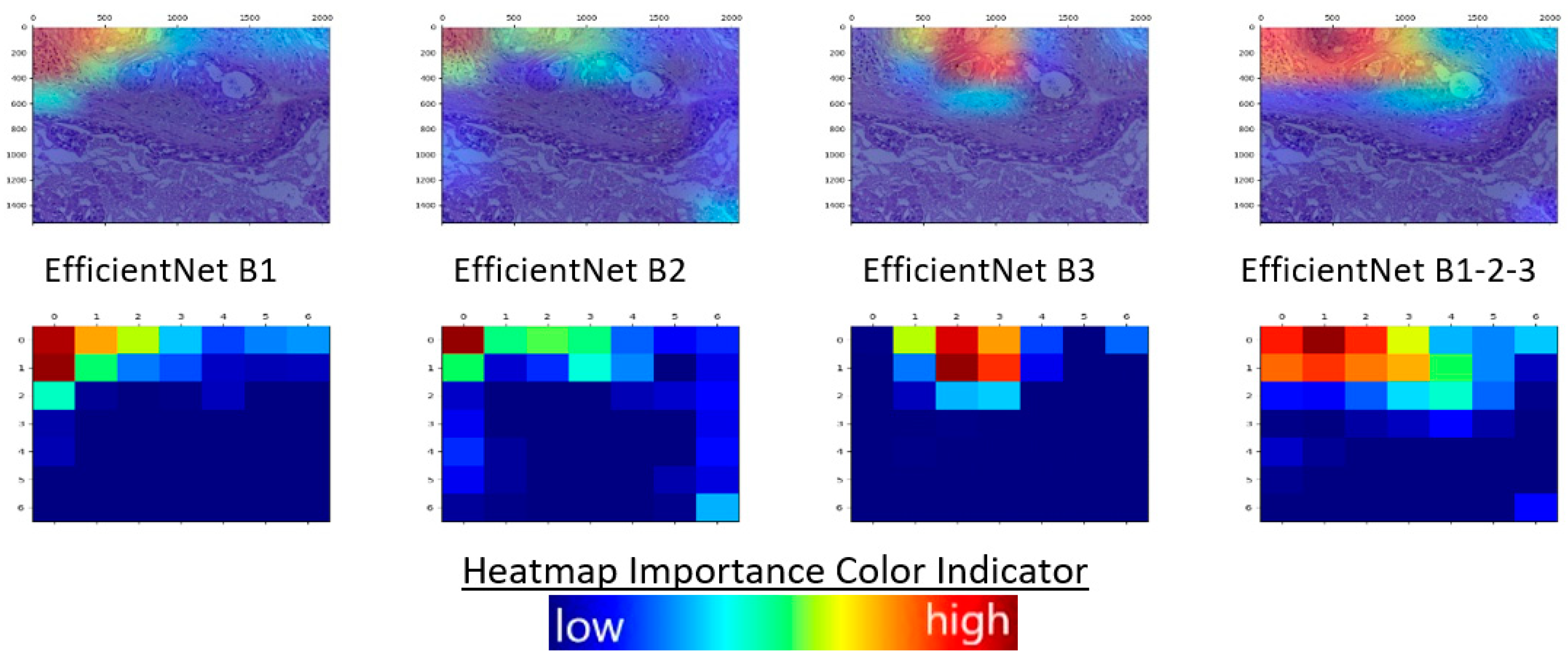

2.2. Explainability

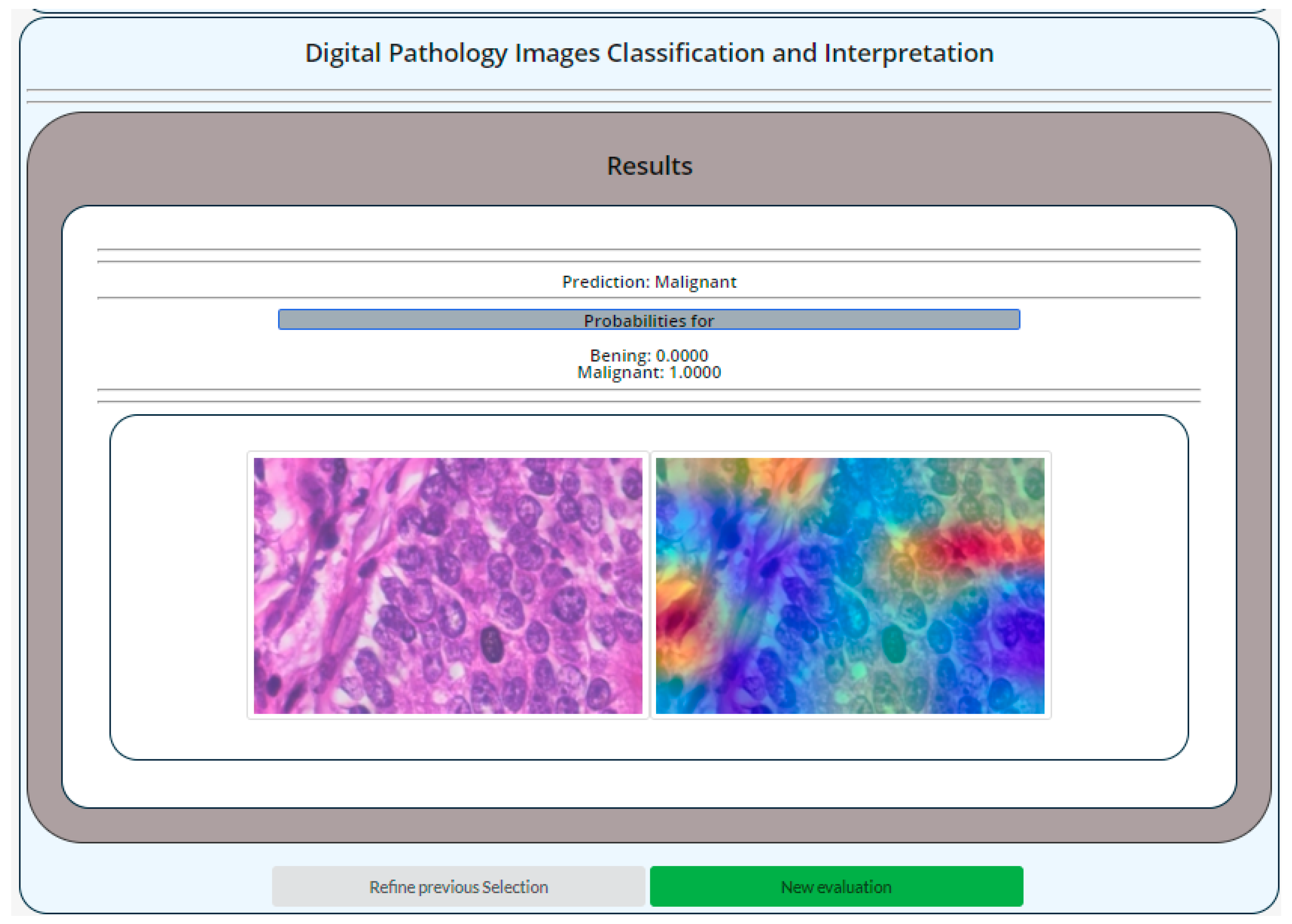

2.3. System Architecture and Methodology

- Image classification;

- Result explainability.

3. Experimental Results

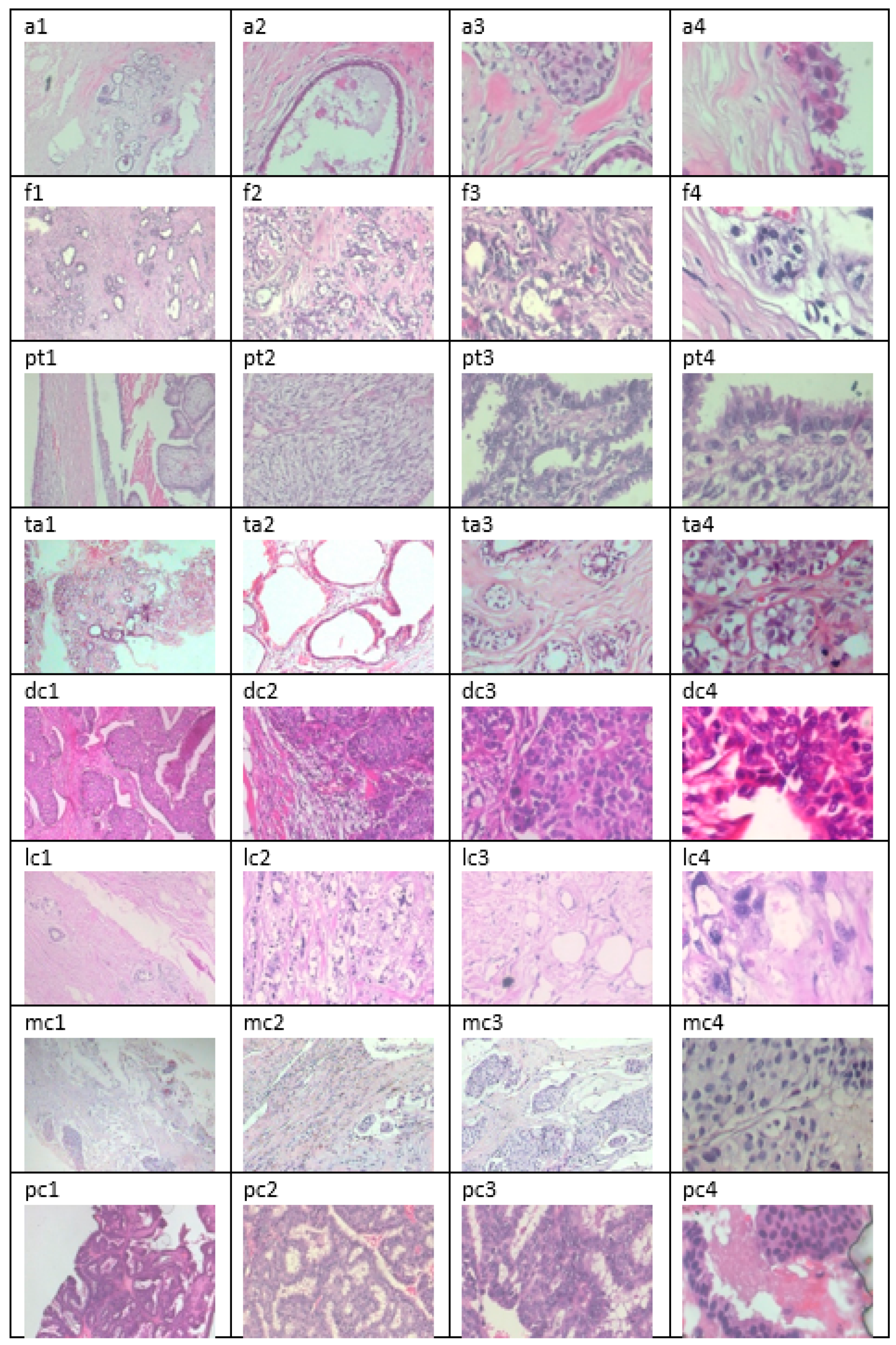

3.1. Datasets and Hardware

- Divided into 2480 benign and 5429 malignant samples;

- 3-channel RGB (8 bits in each channel);

- In PNG format;

- In four different magnification factors (40×, 100×, 200×, 400×);

- Their dimensions are 700 × 460 pixels.

3.2. Evaluation Metrics

- Accuracy is the number of correctly classified instances divided by the total number of instances, as shown in Equation (5):

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (5)$$

- Precision is the number of true positives divided by the sum of true positives and false positives, as shown in Equation (6):

$$\mathrm{Precision} = \frac{TP}{TP + FP} \qquad (6)$$

- Recall is the number of true positives divided by the sum of true positives and false negatives, as shown in Equation (7):

$$\mathrm{Recall} = \frac{TP}{TP + FN} \qquad (7)$$

- Area under the curve (AUC) is the area under the receiver operating characteristic (ROC) curve. The ROC curve is drawn by plotting the true positive rate (TPR) against the false positive rate (FPR) at different classification thresholds. TPR is another name for recall, whereas FPR is the number of false positives divided by the sum of true negatives and false positives, as shown in Equation (8):

$$\mathrm{FPR} = \frac{FP}{FP + TN} \qquad (8)$$
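The four definitions above translate directly into code. The following minimal sketch assumes binary labels with the positive class encoded as 1; it computes accuracy, precision, recall and FPR from confusion-matrix counts and estimates AUC through its rank interpretation: the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The helper names are hypothetical, and the pairwise AUC loop is O(n²), so it is meant for illustration rather than for large test sets.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1):
    """Return (TP, TN, FP, FN) for binary labels."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, tn, fp, fn


def safe_div(num: float, den: float) -> float:
    return num / den if den else 0.0


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),  # Equation (5)
        "precision": safe_div(tp, tp + fp),                 # Equation (6)
        "recall": safe_div(tp, tp + fn),                    # Equation (7), also the TPR
        "fpr": safe_div(fp, fp + tn),                       # Equation (8)
    }


def roc_auc(y_true: Sequence[int], scores: Sequence[float], positive: int = 1) -> float:
    """AUC as P(score of a random positive > score of a random negative), ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold the scores at 0.5
print(classification_metrics(*confusion_counts(y_true, y_pred)))
print(roc_auc(y_true, scores))
```

Counting pairwise wins gives the same value as the trapezoidal area under the empirical ROC curve, which is why no explicit threshold sweep is needed.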

3.3. Results

- EfficientNets B0-B7;

- InceptionNet V3;

- XceptionNet;

- VGG19;

- ResNet152V2;

- Inception-ResNetV2.

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Santos, M.K.; Ferreira Júnior, J.R.; Wada, D.T.; Tenório, A.; Barbosa, M.; Marques, P. Artificial intelligence, machine learning, computer-aided diagnosis, and radiomics: Advances in imaging towards to precision medicine. Radiol. Bras. 2019, 52, 387–396.

- Cheng, J.Z.; Ni, D.; Chou, Y.H.; Qin, J.; Tiu, C.; Chang, Y.C.; Huang, C.S.; Shen, D.; Chen, C.M. Computer-Aided Diagnosis with Deep Learning Architecture: Applications to Breast Lesions in US Images and Pulmonary Nodules in CT Scans. Sci. Rep. 2016, 6, 24454.

- Chan, K.; Zary, N. Applications and Challenges of Implementing Artificial Intelligence in Medical Education: Integrative Review. JMIR Med. Educ. 2019, 5, e13930.

- Hekler, A.; Utikal, J.S.; Enk, A.H.; Solass, W.; Schmitt, M.; Klode, J.; Schadendorf, D.; Sondermann, W.; Franklin, C.; Bestvater, F.; et al. Deep learning outperformed 11 pathologists in the classification of histopathological melanoma images. Eur. J. Cancer 2019, 118, 91–96.

- Morrow, J.M.; Sormani, M.P. Machine learning outperforms human experts in MRI pattern analysis of muscular dystrophies. Neurology 2020, 94, 421–422.

- Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019, 25, 954–961.

- Brosch, T.; Tam, R.; Alzheimer's Disease Neuroimaging Initiative. Manifold learning of brain MRIs by deep learning. Med. Image Comput. Comput. Assist. Interv. 2013, 16, 633–640.

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-Level Pneumonia Detection on Chest X-rays with Deep Learning. arXiv 2017, arXiv:1711.05225.

- Cong, W.; Xi, Y.; Fitzgerald, P.; De Man, B.; Wang, G. Virtual Monoenergetic CT Imaging via Deep Learning. Patterns 2020, 1, 100128.

- Soffer, S.; Klang, E.; Shimon, O.; Nachmias, N.; Eliakim, R.; Ben-Horin, S.; Kopylov, U.; Barash, Y. Deep learning for wireless capsule endoscopy: A systematic review and meta-analysis. Gastrointest. Endosc. 2020, 92, 831–839.e8.

- Yang, J.; Wang, W.; Lin, G.; Li, Q.; Sun, Y. Infrared Thermal Imaging-Based Crack Detection Using Deep Learning. IEEE Access 2019, 7, 182060–182077.

- Maglogiannis, I.; Delibasis, K.K. Enhancing classification accuracy utilizing globules and dots features in digital dermoscopy. Comput. Methods Programs Biomed. 2015, 118, 124–133.

- Maglogiannis, I.; Sarimveis, H.; Kiranoudis, C.T.; Chatziioannou, A.A.; Oikonomou, N.; Aidinis, V. Radial basis function neural networks classification for the recognition of idiopathic pulmonary fibrosis in microscopic images. IEEE Trans. Inf. Technol. Biomed. A Publ. IEEE Eng. Med. Biol. Soc. 2008, 12, 42–54.

- Prasoon, A.; Petersen, K.; Igel, C.; Lauze, F.; Dam, E.; Nielsen, M. Deep feature learning for knee cartilage segmentation using a triplanar convolutional neural network. Med. Image Comput. Comput. Assist. Interv. 2013, 16, 246–253.

- Cai, L.; Gao, J.; Zhao, D. A review of the application of deep learning in medical image classification and segmentation. Ann. Transl. Med. 2020, 8, 713.

- Han, C.; Hayashi, H.; Rundo, L.; Araki, R.; Shimoda, W.; Muramatsu, S.; Furukawa, Y.; Mauri, G.; Nakayama, H. GAN-based synthetic brain MR image generation. In Proceedings of the IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 734–738.

- Haskins, G.; Kruger, U.; Yan, P. Deep learning in medical image registration: A survey. Mach. Vis. Appl. 2020, 31, 8.

- Lukashevich, P.V.; Zalesky, B.A.; Ablameyko, S.V. Medical image registration based on SURF detector. Pattern Recognit. Image Anal. 2011, 21, 519.

- Höfener, H.; Homeyer, A.; Weiss, N.; Molin, J.; Lundström, C.F.; Hahn, H.K. Deep learning nuclei detection: A simple approach can deliver state-of-the-art results. Comput. Med. Imaging Graph. 2018, 70, 43–52.

- Kucharski, D.; Kleczek, P.; Jaworek-Korjakowska, J.; Dyduch, G.; Gorgon, M. Semi-Supervised Nests of Melanocytes Segmentation Method Using Convolutional Autoencoders. Sensors 2020, 20, 1546.

- Tschuchnig, M.E.; Oostingh, G.J.; Gadermayr, M. Generative Adversarial Networks in Digital Pathology: A Survey on Trends and Future Potential. Patterns 2020, 1, 100089.

- Kallipolitis, A.; Stratigos, A.; Zarras, A.; Maglogiannis, I. Fully Connected Visual Words for the Classification of Skin Cancer Confocal Images. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Valletta, Malta, 27–29 February 2020; Volume 5, pp. 853–858.

- Kallipolitis, A.; Maglogiannis, I. Creating Visual Vocabularies for the Retrieval and Classification of Histopathology Images. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 7036–7039.

- López-Abente, G.; Mispireta, S.; Pollán, M. Breast and prostate cancer: An analysis of common epidemiological features in mortality trends in Spain. BMC Cancer 2014, 14, 874.

- Forman, D.; Ferlay, J. Chapter 1.1: The Global and Regional Burden of Cancer; World Cancer Report; Stewart, B.W., Wild, C.P., Eds.; The International Agency for Research on Cancer; World Health Organization: Geneva, Switzerland, 2017; pp. 16–53. ISBN 978-92-832-0443-5.

- Anagnostopoulos, I.; Maglogiannis, I. Neural network-based diagnostic and prognostic estimations in breast cancer microscopic instances. Med. Biol. Eng. Comput. 2006, 44, 773–784.

- Goudas, T.; Maglogiannis, I. An Advanced Image Analysis Tool for the Quantification and Characterization of Breast Cancer in Microscopy Images. J. Med. Syst. 2015, 39, 1–13.

- Alinsaif, S.; Lang, J. Histological Image Classification using Deep Features and Transfer Learning. In Proceedings of the 17th Conference on Computer and Robot Vision (CRV), Ottawa, ON, Canada, 13–15 May 2020; pp. 101–108.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Kassani, S.H.; Kassani, P.H.; Wesolowski, M.J.; Schneider, K.A.; Deters, R. Classification of histopathological biopsy images using ensemble of deep learning networks. In Proceedings of the 29th Annual International Conference on Computer Science and Software Engineering (CASCON 19), Markham, ON, Canada, 4–6 November 2019; IBM Corp: Armonk, NY, USA, 2019; pp. 92–99.

- Livieris, I.E.; Kanavos, A.; Tampakas, V.; Pintelas, P. A Weighted Voting Ensemble Self-Labeled Algorithm for the Detection of Lung Abnormalities from X-Rays. Algorithms 2019, 12, 64.

- Shi, X.; Xing, F.; Xu, K.; Chen, P.; Liang, Y.; Lu, Z.; Guo, Z. Loss-Based Attention for Interpreting Image-Level Prediction of Convolutional Neural Networks. IEEE Trans. Image Process. 2020, 30, 1662–1675.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Hu, J.; Shen, L.; Sun, G.; Albanie, S. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Mondéjar-Guerra, V.; Novo, J.; Rouco, J.; Penedo, M.; Ortega, M. Heartbeat classification fusing temporal and morphological information of ECGs via ensemble of classifiers. Biomed. Signal Process. Control 2019, 47, 41–48.

- Hong, S.; Wu, M.; Zhou, Y.; Wang, Q.; Shang, J.; Li, H.; Xie, J. ENCASE: An ENsemble ClASsifiEr for ECG classification using expert features and deep neural networks. In Proceedings of the Computing in Cardiology (CinC), Rennes, France, 24–27 September 2017; pp. 1–4.

- Gessert, N.; Nielsen, M.; Shaikh, M.; Werner, R.; Schlaefer, A. Skin lesion classification using ensembles of multi-resolution EfficientNets with meta data. MethodsX 2020, 7, 100864.

- Livieris, I.E.; Iliadis, L.; Pintelas, P. On ensemble techniques of weight-constrained neural networks. Evol. Syst. 2021, 12, 155–167.

- Liu, Q.; Yu, L.; Luo, L.; Dou, Q.; Heng, P. Semi-Supervised Medical Image Classification with Relation-Driven Self-Ensembling Model. IEEE Trans. Med. Imaging 2020, 39, 3429–3440.

- Lam, L. Classifier combinations: Implementations and theoretical issues. In Proceedings of the First International Workshop on Multiple Classifier Systems of Lecture Notes in Computer Science, MCS 2000, Cagliari, Italy, 21–23 June 2000; Springer: Berlin/Heidelberg, Germany, 2000; Volume 1857, pp. 77–86.

- Wu, Y.; Liu, L.; Xie, Z.; Bae, J.; Chow, K.; Wei, W. Promoting High Diversity Ensemble Learning with EnsembleBench. arXiv 2020, arXiv:2010.10623.

- Tran, L.; Veeling, B.S.; Roth, K.; Swiatkowski, J.; Dillon, J.V.; Snoek, J.; Mandt, S.; Salimans, T.; Nowozin, S.; Jenatton, R. Hydra: Preserving Ensemble Diversity for Model Distillation. arXiv 2020, arXiv:2001.04694.

- Pintelas, E.; Livieris, I.E.; Pintelas, P. A Grey-Box Ensemble Model Exploiting Black-Box Accuracy and White-Box Intrinsic Interpretability. Algorithms 2020, 13, 17.

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2921–2929.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Spanhol, F.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A Dataset for Breast Cancer Histopathological Image Classification. IEEE Trans. Biomed. Eng. 2016, 63, 1455–1462.

- Kather, J.N.; Halama, N.; Marx, A. 100,000 histological images of human colorectal cancer and healthy tissue (Version v0.1). Zenodo 2018, 5281.

- Aresta, G.; Araújo, T.; Kwok, S.; Chennamsetty, S.S.; Safwan, M.; Alex, V.; Marami, B.; Prastawa, M.; Chan, M.; Donovan, M.; et al. BACH: Grand challenge on breast cancer histology images. Med. Image Anal. 2019, 56, 122–139.

| Stage i | Operator Fᵢ | Resolution Hᵢ × Wᵢ | Channels Cᵢ | Layers Lᵢ |
|---|---|---|---|---|
| 1 | Conv 3 × 3 | 224 × 224 | 32 | 1 |
| 2 | MBConv1, k3 × 3 | 112 × 112 | 16 | 1 |
| 3 | MBConv6, k3 × 3 | 112 × 112 | 24 | 2 |
| 4 | MBConv6, k5 × 5 | 56 × 56 | 40 | 2 |
| 5 | MBConv6, k3 × 3 | 28 × 28 | 80 | 3 |
| 6 | MBConv6, k5 × 5 | 14 × 14 | 112 | 3 |
| 7 | MBConv6, k5 × 5 | 14 × 14 | 192 | 4 |
| 8 | MBConv6, k3 × 3 | 7 × 7 | 320 | 1 |
| 9 | Conv 1 × 1, Pooling, FC | 7 × 7 | 1280 | 1 |
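To illustrate how the B0 baseline in the table above grows into larger variants, the sketch below applies a depth multiplier to the Layers column and a width multiplier to the Channels column. The rounding rules (layer counts rounded up; channel counts rounded to the nearest multiple of 8 without falling below 90% of the scaled value) are assumptions modelled on what, to our understanding, is a common convention in EfficientNet implementations, and all helper names are hypothetical. Only the MBConv stages 2–8 are repeated; the stem and head remain single layers.

```python
import math

# EfficientNet-B0 stages from the table: (operator, channels C_i, layers L_i).
B0_STAGES = [
    ("Conv3x3", 32, 1),
    ("MBConv1, k3x3", 16, 1),
    ("MBConv6, k3x3", 24, 2),
    ("MBConv6, k5x5", 40, 2),
    ("MBConv6, k3x3", 80, 3),
    ("MBConv6, k5x5", 112, 3),
    ("MBConv6, k5x5", 192, 4),
    ("MBConv6, k3x3", 320, 1),
    ("Conv1x1, Pooling, FC", 1280, 1),
]


def round_layers(layers: int, depth_mult: float) -> int:
    """Scale a stage's layer count and round up (assumed rule)."""
    return int(math.ceil(depth_mult * layers))


def round_channels(channels: int, width_mult: float, divisor: int = 8) -> int:
    """Scale channels and round to a multiple of `divisor` (assumed rule)."""
    scaled = channels * width_mult
    rounded = max(divisor, int(scaled + divisor / 2) // divisor * divisor)
    if rounded < 0.9 * scaled:  # avoid shrinking by more than 10%
        rounded += divisor
    return rounded


def scale_stages(depth_mult: float, width_mult: float):
    """Return (stage, operator, channels, layers) for the scaled network."""
    scaled = []
    for i, (op, channels, layers) in enumerate(B0_STAGES, start=1):
        new_layers = round_layers(layers, depth_mult) if op.startswith("MBConv") else layers
        scaled.append((i, op, round_channels(channels, width_mult), new_layers))
    return scaled


for stage, op, channels, layers in scale_stages(depth_mult=1.1, width_mult=1.1):
    print(stage, op, channels, layers)
```

Rounding layer counts up means that even a small depth multiplier such as 1.1 adds one block to every MBConv stage.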

| Class | Subclass | 40× | 100× | 200× | 400× | Total |
|---|---|---|---|---|---|---|
| Benign | Adenosis | 114 | 113 | 111 | 106 | 444 |
| | Fibroadenoma | 253 | 260 | 264 | 237 | 1014 |
| | Tubular Adenoma | 109 | 121 | 108 | 115 | 453 |
| | Phyllodes Tumor | 149 | 150 | 140 | 130 | 569 |
| Malignant | Ductal Carcinoma | 864 | 903 | 896 | 788 | 3451 |
| | Lobular Carcinoma | 156 | 170 | 163 | 137 | 626 |
| | Mucinous Carcinoma | 205 | 222 | 196 | 169 | 792 |
| | Papillary Carcinoma | 145 | 142 | 135 | 138 | 560 |
| Total | | 1995 | 2081 | 2013 | 1820 | 7909 |
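The per-subclass counts in the table above can be cross-checked against the stated totals of 2480 benign and 5429 malignant images (7909 overall). The short script below re-adds the table by class and by magnification factor; the dictionary layout and function names are illustrative choices, and the numbers are copied from the table.

```python
# Image counts per subclass at 40x, 100x, 200x and 400x, copied from the table.
BREAKHIS = {
    "Benign": {
        "Adenosis": (114, 113, 111, 106),
        "Fibroadenoma": (253, 260, 264, 237),
        "Tubular Adenoma": (109, 121, 108, 115),
        "Phyllodes Tumor": (149, 150, 140, 130),
    },
    "Malignant": {
        "Ductal Carcinoma": (864, 903, 896, 788),
        "Lobular Carcinoma": (156, 170, 163, 137),
        "Mucinous Carcinoma": (205, 222, 196, 169),
        "Papillary Carcinoma": (145, 142, 135, 138),
    },
}
MAGNIFICATIONS = ("40x", "100x", "200x", "400x")


def class_totals(data: dict) -> dict:
    """Sum all subclasses and magnifications for each top-level class."""
    return {cls: sum(sum(counts) for counts in subs.values()) for cls, subs in data.items()}


def magnification_totals(data: dict) -> dict:
    """Sum every subclass column-wise, one total per magnification factor."""
    totals = [0] * len(MAGNIFICATIONS)
    for subs in data.values():
        for counts in subs.values():
            for i, n in enumerate(counts):
                totals[i] += n
    return dict(zip(MAGNIFICATIONS, totals))


totals = class_totals(BREAKHIS)
grand_total = sum(totals.values())
print(totals, grand_total)
print(magnification_totals(BREAKHIS))
print(f"Malignant share: {totals['Malignant'] / grand_total:.1%}")
```

The malignant class makes up roughly two thirds of the images, which is worth keeping in mind when reading accuracy figures on this dataset.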

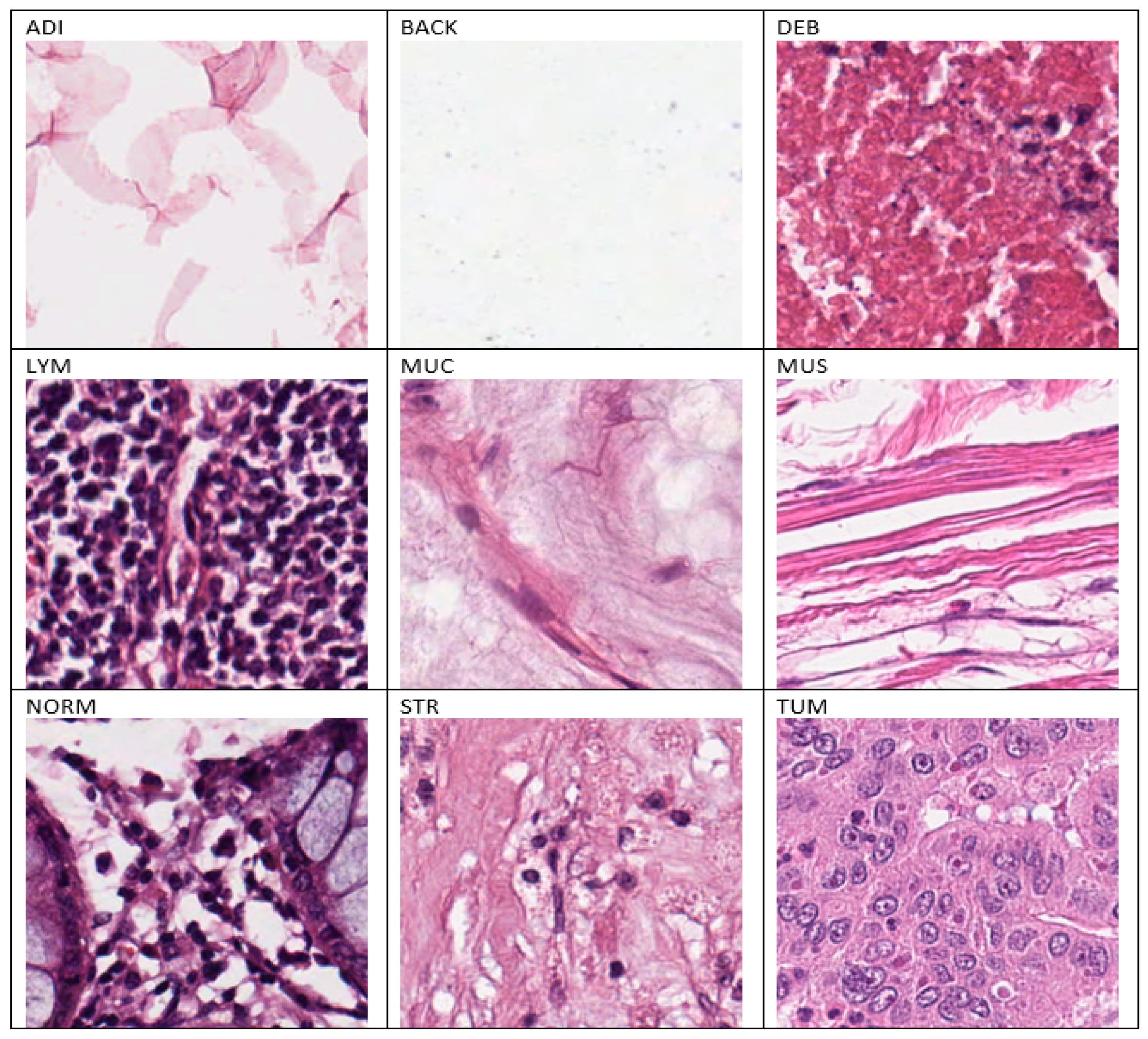

| Class | Number of Samples | Percentage (%) |
|---|---|---|
| ADI | 10,407 | 10.41 |
| BACK | 10,566 | 10.57 |
| DEB | 11,513 | 11.51 |
| LYM | 11,556 | 11.56 |
| MUC | 8896 | 8.90 |
| MUS | 13,537 | 13.54 |
| STR | 8763 | 8.76 |
| NORM | 10,446 | 10.45 |
| TUM | 14,316 | 14.32 |

| Hyperparameters | Values |
|---|---|
| Epochs | 10 |
| Optimizer | Adam |
| Learning Rate | Custom |
| Regularizer | L2 |
| Batch size | 8 |
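The table above lists Adam with an L2 regularizer but gives the learning rate only as "Custom" and does not state the L2 factor. To show what these two settings do during training, the following framework-free sketch performs the standard bias-corrected Adam update on a list of weights, treating the L2 penalty λ·Σw² as part of the loss so that it adds 2λw to each gradient. The learning rate and λ values are placeholders rather than the authors' settings, and the helper names are hypothetical.

```python
import math

# Settings from the hyperparameter table; LR and L2 strength are placeholders.
EPOCHS = 10
BATCH_SIZE = 8
LEARNING_RATE = 1e-3  # hypothetical: the table only says "Custom"
L2_STRENGTH = 1e-4    # hypothetical: the table does not give the L2 factor


def adam_step(w, grad, m, v, t, lr=LEARNING_RATE, l2=L2_STRENGTH,
              beta1=0.9, beta2=0.999, eps=1e-8):
    """One bias-corrected Adam update; t is the 1-based step counter.

    The L2 penalty l2 * sum(w_i^2) is treated as part of the loss,
    so it contributes 2 * l2 * w_i to each gradient component.
    """
    new_w, new_m, new_v = [], [], []
    for wi, gi, mi, vi in zip(w, grad, m, v):
        gi = gi + 2.0 * l2 * wi                  # add the L2 gradient
        mi = beta1 * mi + (1 - beta1) * gi       # first-moment estimate
        vi = beta2 * vi + (1 - beta2) * gi * gi  # second-moment estimate
        m_hat = mi / (1 - beta1 ** t)            # bias corrections
        v_hat = vi / (1 - beta2 ** t)
        new_w.append(wi - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(mi)
        new_v.append(vi)
    return new_w, new_m, new_v


def steps_per_epoch(n_train: int, batch_size: int = BATCH_SIZE) -> int:
    """Number of mini-batches per epoch (the last batch may be smaller)."""
    return math.ceil(n_train / batch_size)


w, m, v = [1.0, -0.5], [0.0, 0.0], [0.0, 0.0]
w, m, v = adam_step(w, [0.5, -0.2], m, v, t=1)
print(w, steps_per_epoch(100) * EPOCHS)
```

On the first step the bias-corrected update has magnitude close to the learning rate regardless of the gradient's scale, which is one reason Adam is forgiving with small batches such as 8.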

| Architecture | Breast Cancer Accuracy | Breast Cancer AUC | Colon Cancer Accuracy | Colon Cancer AUC |
|---|---|---|---|---|
| EfficientNetB0 | 0.9766 | 0.9945 | 0.9946 | 0.9993 |
| EfficientNetB1 | 0.9798 | 0.9964 | 0.9898 | 0.9984 |
| EfficientNetB2 | 0.9817 | 0.9982 | 0.9920 | 0.9988 |
| EfficientNetB3 | 0.9855 | 0.9988 | 0.9897 | 0.9984 |
| EfficientNetB4 | 0.9858 | 0.9980 | 0.9910 | 0.9982 |
| EfficientNetB5 | 0.9804 | 0.9975 | 0.9924 | 0.9982 |
| EfficientNetB6 | 0.9728 | 0.9953 | 0.9894 | 0.9986 |
| XceptionNet | 0.9785 | 0.9942 | 0.9909 | 0.9985 |
| InceptionNetV3 | 0.8868 | 0.9430 | 0.9844 | 0.9981 |
| VGG16 | 0.9320 | 0.9769 | 0.9795 | 0.9969 |
| ResNet152V2 | 0.8720 | 0.9431 | 0.9564 | 0.9913 |

| Architecture | Breast Cancer Accuracy | Breast Cancer AUC | Colon Cancer Accuracy | Colon Cancer AUC |
|---|---|---|---|---|
| EfficientNetB0-2 | 0.9925 | 0.9985 | 0.9946 | 0.9991 |
| EfficientNetB1-3 | 0.9855 | 0.9984 | 0.9856 | 0.9989 |
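This section does not spell out how the member networks of the EfficientNetB0-2 and EfficientNetB1-3 ensembles are combined. As an assumption, the sketch below shows soft voting, one common way of merging several CNN classifiers: the per-class softmax probabilities of the members are averaged (optionally with weights) and the class with the highest mean probability is predicted. It illustrates that technique only and is not necessarily the authors' combination rule; the function names and the example probabilities are hypothetical.

```python
from typing import List, Optional, Sequence

Probs = Sequence[Sequence[float]]  # one row of class probabilities per sample


def soft_vote(prob_sets: Sequence[Probs],
              weights: Optional[Sequence[float]] = None) -> List[List[float]]:
    """Weighted average of per-class probabilities from several models."""
    if weights is None:
        weights = [1.0] * len(prob_sets)
    total_w = sum(weights)
    if total_w <= 0:
        raise ValueError("weights must sum to a positive value")
    n_samples, n_classes = len(prob_sets[0]), len(prob_sets[0][0])
    averaged = []
    for i in range(n_samples):
        row = [0.0] * n_classes
        for w, probs in zip(weights, prob_sets):
            for k in range(n_classes):
                row[k] += w * probs[i][k]
        averaged.append([value / total_w for value in row])
    return averaged


def argmax_labels(probs: Probs) -> List[int]:
    """Index of the most probable class for each sample."""
    return [max(range(len(p)), key=p.__getitem__) for p in probs]


# Hypothetical softmax outputs of three models for two images (benign, malignant).
b0 = [[0.6, 0.4], [0.3, 0.7]]
b1 = [[0.4, 0.6], [0.2, 0.8]]
b2 = [[0.7, 0.3], [0.6, 0.4]]
ensemble = soft_vote([b0, b1, b2])
print(ensemble, argmax_labels(ensemble))
```

In this toy example the ensemble outvotes model b1 on the first image and model b2 on the second, which is the kind of error cancellation ensembling relies on.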

| Split | Architecture | Breast Cancer Accuracy | Breast Cancer AUC | Colon Cancer Accuracy | Colon Cancer AUC |
|---|---|---|---|---|---|
| 40–60% | EfficientNetB0 | 0.9789 | 0.9974 | 0.9645 | 0.9874 |
| | EfficientNetB1 | 0.9778 | 0.9974 | 0.9688 | 0.9899 |
| | EfficientNetB2 | 0.9824 | 0.9986 | 0.9764 | 0.9906 |
| | EfficientNetB0-2 | 0.9835 | 0.9989 | 0.9822 | 0.9934 |
| 30–70% | EfficientNetB0 | 0.9712 | 0.9962 | 0.9618 | 0.9822 |
| | EfficientNetB1 | 0.9737 | 0.9972 | 0.9666 | 0.9831 |
| | EfficientNetB2 | 0.9751 | 0.9968 | 0.9703 | 0.9852 |
| | EfficientNetB0-2 | 0.9785 | 0.9979 | 0.9782 | 0.9925 |

| Architecture (Breast Cancer) | 40× Acc | 40× Pr | 40× Rec | 100× Acc | 100× Pr | 100× Rec | 200× Acc | 200× Pr | 200× Rec | 400× Acc | 400× Pr | 400× Rec |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EfficientNetB0 | 0.9699 | 0.9699 | 0.9699 | 0.9792 | 0.9792 | 0.9792 | 0.9631 | 0.9631 | 0.9631 | 0.9560 | 0.9560 | 0.9560 |
| EfficientNetB1 | 0.9749 | 0.9749 | 0.9749 | 0.9679 | 0.9679 | 0.9679 | 0.9473 | 0.9473 | 0.9473 | 0.9158 | 0.9158 | 0.9158 |
| EfficientNetB2 | 0.9799 | 0.9799 | 0.9799 | 0.9712 | 0.9712 | 0.9712 | 0.9631 | 0.9631 | 0.9631 | 0.9396 | 0.9396 | 0.9396 |
| EfficientNetB3 | 0.9766 | 0.9766 | 0.9766 | 0.9744 | 0.9744 | 0.9744 | 0.9666 | 0.9666 | 0.9666 | 0.9451 | 0.9451 | 0.9451 |
| EfficientNetB0-2 | 0.9883 | 0.9883 | 0.9883 | 0.9712 | 0.9712 | 0.9712 | 0.9719 | 0.9719 | 0.9719 | 0.9469 | 0.9469 | 0.9469 |
| EfficientNetB1-3 | 0.9866 | 0.9866 | 0.9866 | 0.9824 | 0.9824 | 0.9824 | 0.9859 | 0.9859 | 0.9859 | 0.9697 | 0.9697 | 0.9697 |
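In the table above, accuracy, precision and recall coincide in every cell. The source does not state which averaging scheme was used; one setting in which this identity holds by construction is micro-averaging over single-label predictions, where every misclassification counts once as a false positive (for the predicted class) and once as a false negative (for the true class). The sketch below, using hypothetical labels, demonstrates that identity; it is an illustration, not a claim about how the table was produced.

```python
from collections import Counter


def micro_scores(y_true, y_pred):
    """Accuracy and micro-averaged precision/recall for single-label predictions."""
    errors = [(t, p) for t, p in zip(y_true, y_pred) if t != p]
    tp = len(y_true) - len(errors)  # correct predictions, summed over all classes
    fp = sum(Counter(p for _, p in errors).values())  # each error: a false positive for the predicted class
    fn = sum(Counter(t for t, _ in errors).values())  # ... and a false negative for the true class
    accuracy = tp / len(y_true)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return accuracy, precision, recall


# Hypothetical labels for three tissue classes.
y_true = ["A", "B", "B", "C", "A"]
y_pred = ["A", "B", "C", "C", "B"]
print(micro_scores(y_true, y_pred))
```

Because the summed false positives and false negatives both equal the number of errors, all three scores reduce to the fraction of correct predictions. Macro- or weighted-averaged precision and recall do not have this property.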

| Architecture (Breast Cancer) | 40× Acc | 40× Pr | 40× Rec | 100× Acc | 100× Pr | 100× Rec | 200× Acc | 200× Pr | 200× Rec | 400× Acc | 400× Pr | 400× Rec |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EfficientNetB0 | 0.9097 | 0.9215 | 0.9030 | 0.8702 | 0.8849 | 0.8622 | 0.8313 | 0.8569 | 0.8207 | 0.8333 | 0.8552 | 0.8114 |
| EfficientNetB1 | 0.8796 | 0.8948 | 0.8679 | 0.8638 | 0.8696 | 0.8446 | 0.8313 | 0.8569 | 0.8207 | 0.8205 | 0.8340 | 0.8004 |
| EfficientNetB2 | 0.8796 | 0.8948 | 0.8979 | 0.8798 | 0.8918 | 0.8718 | 0.8629 | 0.8723 | 0.8401 | 0.8040 | 0.8275 | 0.7729 |
| EfficientNetB3 | 0.8963 | 0.9103 | 0.8829 | 0.8846 | 0.8893 | 0.8750 | 0.8594 | 0.8703 | 0.8489 | 0.8443 | 0.8681 | 0.8351 |
| EfficientNetB0-2 | 0.9114 | 0.9248 | 0.9047 | 0.8686 | 0.8744 | 0.8590 | 0.8629 | 0.8915 | 0.8524 | 0.8443 | 0.8641 | 0.8150 |
| EfficientNetB1-3 | 0.9264 | 0.9368 | 0.9164 | 0.8963 | 0.9103 | 0.8829 | 0.8664 | 0.8775 | 0.8436 | 0.8571 | 0.8716 | 0.8333 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kallipolitis, A.; Revelos, K.; Maglogiannis, I. Ensembling EfficientNets for the Classification and Interpretation of Histopathology Images. Algorithms 2021, 14, 278. https://doi.org/10.3390/a14100278
