1. Introduction

With the rapid development of the Internet, more and more people are participating in it, and everyday needs such as food, clothing, housing, and transportation are now closely tied to it. Services that are used daily, such as online shopping, mobile phone positioning, and search engines, have brought great convenience to our lives. However, the sheer diversity of items can be overwhelming: when shopping online, for example, it is hard to quickly choose what we want to buy. Over time, different classification techniques have emerged. The traditional technique is standalone text classification, which uses the low-level characteristics of the text to describe items. Training a classifier on the data is a better method than this traditional approach.

Using local image features to generate a simple fuzzy classifier that distinguishes known categories from other categories [1] represents a new target classification method, in which Boosting meta-learning is used to find the most representative local features. This method improves classification accuracy and greatly shortens learning and testing time. Additionally, effectively combining a variety of deep neural networks for classification and learning deep internal features from image data sets can produce state-of-the-art classification performance [2]. Combining the Boosting algorithm with a neural network makes it possible to use weights to constrain the classification performance of the network, thereby improving the classifier [3]. Boosting is not only used for classification but can also be applied to medicine: the Multi-instance Learning Boost Algorithm (MIL-Boost) establishes a predictive model that can predict the deterioration of insulin resistance early based on the TyG index [4]. This algorithm is widely used in clinical decision support systems. According to the principle of Boosting, we can combine several weak learners or find a suitable classifier. The multi-class Boosting classifier (MBC) adds a new multi-class constraint to the objective function [5], which makes it possible to mine different types of features effectively in a unified classification framework. In addition, when Boosting is combined with weak learners, the number of boosting iterations can be modeled as a continuous hyperparameter and fitted with standard techniques to obtain an effective learning algorithm that can be widely used in regression and classification [6].

AdaBoost, as a representative Boosting algorithm, constructs a globally optimal combination of weak classifiers on the basis of sample weights, which greatly improves classification performance [7]. Given training data, a weak learning algorithm (such as a decision tree) can be trained to generate weak learners, which only need to be more accurate than random guessing. Training with different training data yields different weak learners, which then act as members of a committee and make joint decisions. To draw on the information from different weak learners, AdaBoost solves two problems: first, how to choose a group of weak learners with different strengths and weaknesses so that they can compensate for each other's deficiencies; second, how to combine the outputs of the weak learners to obtain better overall decision-making performance.

To solve the first problem, AdaBoost lets each newly added weak learner reflect some new pattern in the data. To achieve this, AdaBoost maintains a weight distribution over the training samples; that is, each sample has a weight that indicates its importance. When measuring the performance of a weak learner, AdaBoost takes the weight of each sample into account: a misclassified sample with a larger weight contributes more to the training error rate than a misclassified sample with a smaller weight. To obtain a small weighted error rate, a weak classifier must therefore focus on high-weight samples. By modifying the sample weights, the weak learner can be guided to learn different parts of the training set. AdaBoost proceeds in multiple rounds of training. In each round, we train a weak learner so that it produces the smallest weighted training error under the current weight distribution, and then update the sample weights. In the first round, all samples have the same weight. In each subsequent round, we increase the weights of misclassified samples and reduce the weights of correctly classified samples. In this way, each round's weak learner focuses more on the samples that were difficult to classify in the previous round.
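The reweighting step described above can be sketched as follows. This is a minimal illustration of the textbook binary AdaBoost update, assuming labels in {−1, +1}; the function name `adaboost_round` and the toy data are hypothetical and are not taken from the paper.

```python
import math

def adaboost_round(weights, predictions, labels):
    """One AdaBoost reweighting step for binary labels in {-1, +1}.

    weights: current sample weights (assumed to sum to 1)
    predictions: outputs of the weak learner trained in this round
    labels: true labels
    Returns (alpha, new_weights).
    """
    # Weighted error: a misclassified sample counts in proportion to its weight.
    error = sum(w for w, p, y in zip(weights, predictions, labels) if p != y)
    if not 0.0 < error < 0.5:
        raise ValueError("this sketch needs a weighted error strictly between 0 and 0.5")
    # Coefficient of the weak learner: larger when the weighted error is smaller.
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Raise the weights of misclassified samples, lower those of correct ones.
    raw = [w * math.exp(-alpha * y * p) for w, p, y in zip(weights, predictions, labels)]
    total = sum(raw)
    return alpha, [r / total for r in raw]

labels = [+1, +1, -1, -1, +1]
predictions = [+1, -1, -1, -1, +1]  # only the second sample is misclassified
weights = [0.2] * 5                 # first round: uniform weights
alpha, new_weights = adaboost_round(weights, predictions, labels)
```

After the update, the single misclassified sample carries half of the total weight, so the next weak learner is pushed to classify it correctly.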

Now that we have a set of trained weak learners with different strengths and weaknesses, how do we combine them so that they complement each other and produce a more accurate overall prediction? Each weak learner is trained with a different weight distribution, so different weak learners are effectively assigned different tasks, and each tries its best to complete its task. Intuitively, when we combine the judgments of the weak learners into the final prediction, we trust a weak learner more if it performed well on its assigned task and less if it performed poorly. In other words, we combine the weak learners in a weighted manner and assign each one a value indicating its credibility. This value depends on the learner's performance on its assigned task: the better the performance, the greater the value, and vice versa. AdaBoost training eventually ends in one of two situations: either the training error is reduced to zero, or, no matter how many more rounds are run, each subsequent weak classifier has an accuracy of only 0.5, and no overfitting occurs.

However, these methods are limited to a single mode, whereas real-life applications more often call for cross-modal retrieval. Each source or form of information can be called a mode. For example, people have the senses of touch, hearing, sight, and smell; information media include voice, video, and text; and there are a variety of sensors, such as radar, infrared, and accelerometers. Each of these can be called a mode.
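The credibility-weighted combination of weak learners described above can be sketched as a weighted vote. This is a minimal sketch for binary outputs in {−1, +1}; the function name `weighted_vote` and the credibility values are hypothetical.

```python
def weighted_vote(alphas, learner_outputs):
    """Combine weak-learner outputs in {-1, +1} using their credibility values."""
    # Each learner pushes the score toward its own answer, scaled by how much we trust it.
    score = sum(a * h for a, h in zip(alphas, learner_outputs))
    return 1 if score >= 0 else -1

alphas = [0.9, 0.3, 0.4]              # illustrative credibility values
outputs_for_one_sample = [+1, -1, -1]
decision = weighted_vote(alphas, outputs_for_one_sample)
```

Here the single highly trusted learner outvotes the two less trusted ones, which a simple majority vote would not allow.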

The main approach to cross-modal retrieval is to find the relationship between different modes and map them into the same subspace, where the similarity between modes can be measured. Specifically, we first have a set of training instances, each of which is an image–text pair with a label. These instances are divided into a training set and a test set; the two sets contain no image–text pairs in common but share the same categories. During training, we learn a common semantic space from the training set. We then apply this common semantic space to the test set to generate a common feature representation for its instances. Finally, we use the common feature representation to compare the similarity of samples from the two modes and thereby match them. Eventually, the strong learner of each mode can generate semantic vectors, and logistic regression can be applied to obtain the semantic vectors of images and texts. However, when the features are high-dimensional and sparse, logistic regression cannot work effectively. Although an image and its text essentially represent the same set of semantic concepts, their manifestations differ greatly because of the large differences between data modes. How to robustly represent and accurately measure the similarity between image and text is a difficult problem.
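The final matching step, comparing samples from two modes in a common space, can be sketched as follows. The sketch assumes that images and texts have already been mapped to semantic vectors of the same length and that cosine similarity is used for ranking; the similarity measure and the example vectors are assumptions for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def rank_texts(image_vec, text_vecs):
    """Return text indices sorted from most to least similar to the image."""
    return sorted(range(len(text_vecs)),
                  key=lambda i: cosine(image_vec, text_vecs[i]),
                  reverse=True)

# Hypothetical semantic vectors over three categories.
image_vec = [0.7, 0.2, 0.1]
text_vecs = [[0.1, 0.8, 0.1], [0.6, 0.3, 0.1], [0.2, 0.2, 0.6]]
ranking = rank_texts(image_vec, text_vecs)
```

The text whose semantic vector puts most of its mass on the same category as the image is ranked first.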

To deal with this problem, the existing methods can be divided into two main categories according to the different ways of modeling the corresponding relationship between image and text: one-to-one matching and many-to-many matching.

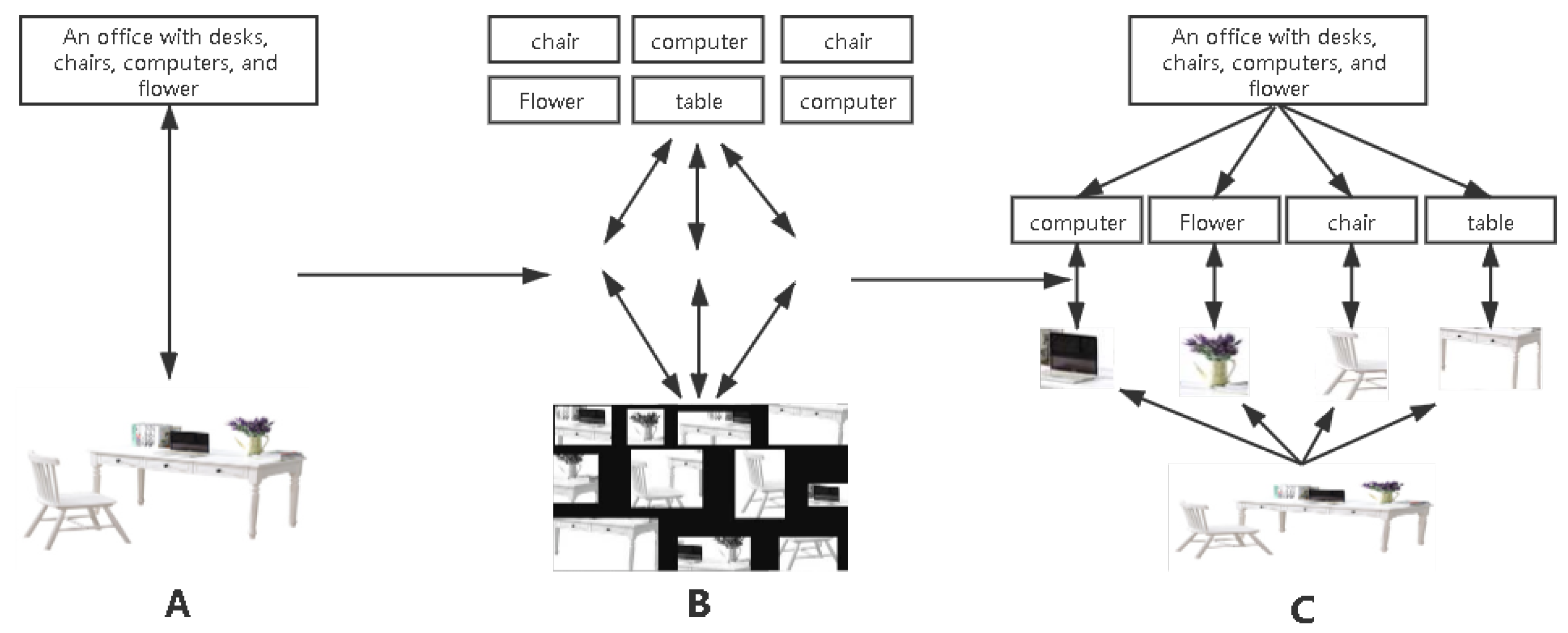

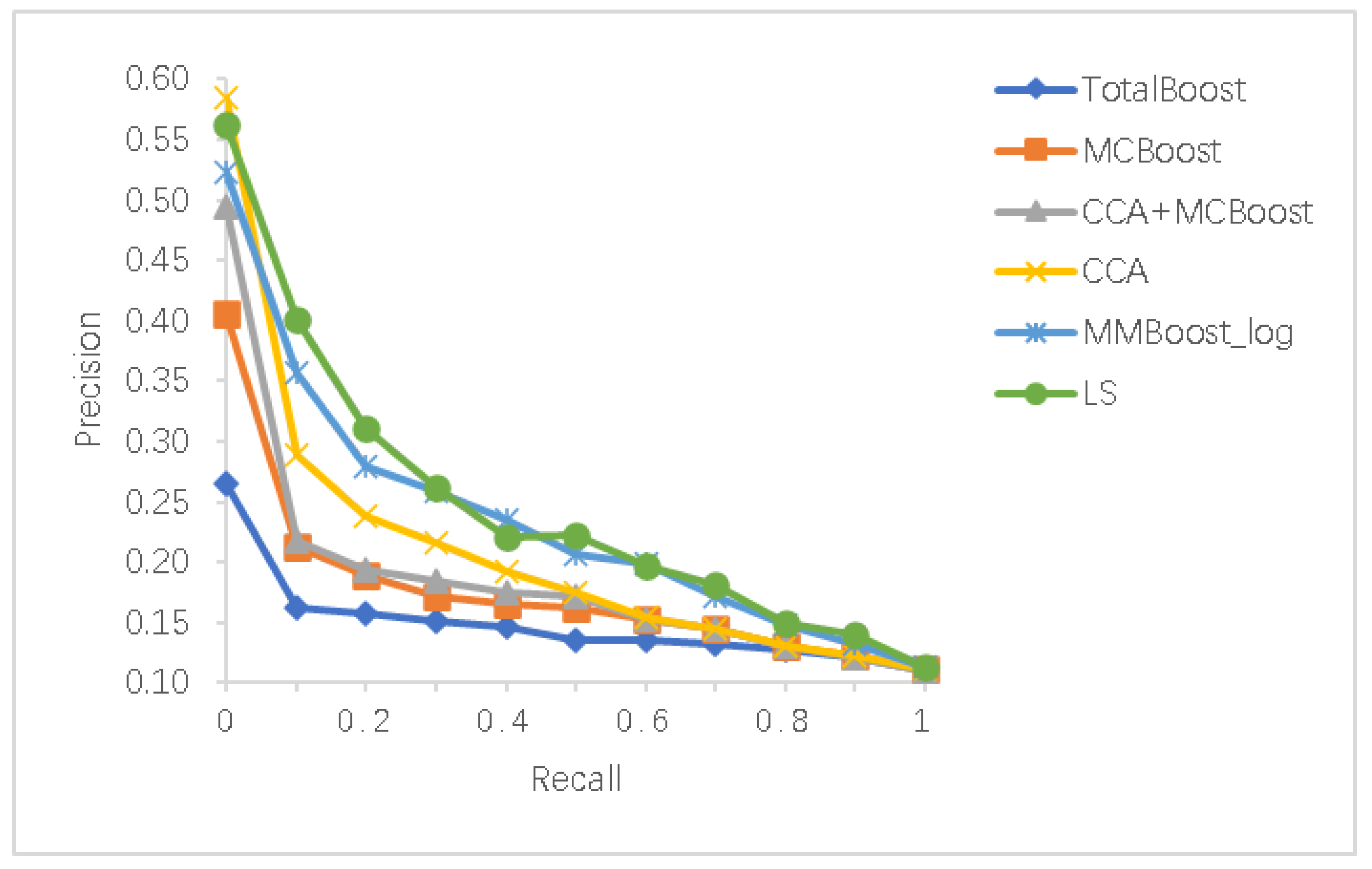

Figure 1A shows the one-to-one matching method. It usually extracts global feature representations of the image and the text (covering, for example, the desks, chairs, computers, and illustrations in the picture) and then uses the objective function of a structured transformation or canonical correlation analysis to project their features into a common space, so that similar image–text pairs are close together in that space, that is, their similarity is high. However, this matching method is only a rough measure of the global similarity of image and text and does not specifically consider which local content of the image and text is semantically similar. Therefore, in tasks that require accurate similarity measurement, such as fine-grained cross-modal retrieval, the experimental accuracy is often low.

Figure 1B shows the many-to-many matching method, which tries to extract multiple local instances from the image and the text, measures the local similarity of multiple paired instances, and then fuses the results into a global similarity. We can see from the figure that many local instances are extracted, but not all of them depict semantic concepts. In fact, most of the instances are semantically meaningless and have nothing to do with the matching task; only a few salient semantic instances determine the degree of matching. The redundant instances can be regarded as noise that interferes with the matching of the few semantic instances and increases the amount of computation in the model. In addition, existing methods usually need to explicitly use additional target detection algorithms or expensive manual annotations in the instance extraction process.
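To make the effect of redundant local instances concrete, here is a minimal sketch of fusing local similarities into a global one. The max-then-average fusion rule and the example numbers are assumptions chosen for illustration; they are not the fusion rule of any specific method discussed here.

```python
def global_similarity(local_sims):
    """Fuse a matrix of local similarities (image regions x text fragments).

    For each image region, keep its best-matching text fragment,
    then average these best matches over all regions.
    """
    best_per_region = [max(row) for row in local_sims]
    return sum(best_per_region) / len(best_per_region)

# Two meaningful regions and one background region that matches nothing.
local_sims = [[0.9, 0.1],
              [0.2, 0.8],
              [0.1, 0.1]]
score = global_similarity(local_sims)
score_without_noise = global_similarity(local_sims[:2])
```

The background region lowers the fused score even though the two meaningful regions match well, which is the interference effect described above.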

Considering this situation, we propose a multimodal multi-class enhancement framework that uses local similarity as a weak learner. As shown in Figure 1C, its classification accuracy is improved compared with the previous methods. To verify the effectiveness of the proposed framework, we tested its experimental performance and compared it with the current best methods on two publicly available multimodal databases (Wiki and NUS-WIDE), obtaining good experimental results.

Our contributions mainly include the following.

We extend a simple multi-class enhancement framework that uses local similarity as a weak learner into a multimodal multi-class enhancement framework. It can better analyze multimodal semantic information and thus obtain better cross-modal retrieval performance.

In the selection of weak learners, a suitable weak learner can be selected in each iteration. Specifically, the selection for each mode is formulated as a minimization problem, and we provide the corresponding formula derivation and theoretical analysis.

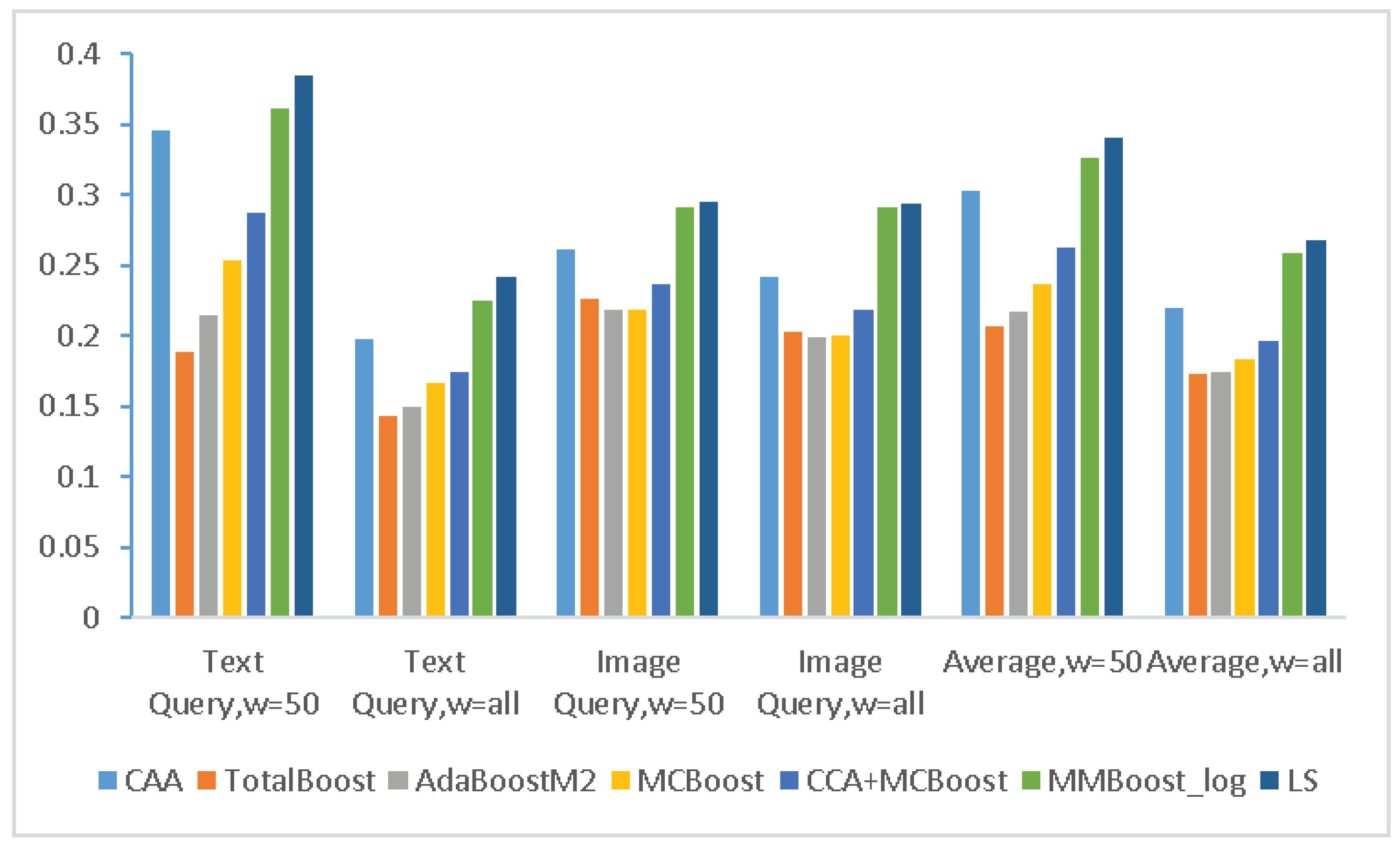

We conducted extensive experiments on the Wiki and NUS-WIDE datasets to verify the performance of the Boosting algorithm and compared it with several recent methods. The experimental results show that our method has significant advantages for cross-modal retrieval.

The rest of the paper is arranged as follows. Related work is introduced in Section 2. In Section 3, we introduce our framework and the algorithm flowchart. In Section 4, we present a convergence experiment, which shows that continuously reducing the loss only improves the decision boundary without overfitting the data. The comprehensive experiments are presented in Section 5, and the conclusions are summarized in Section 6.

2. Related Work

Among classifiers, Boosting is one of the better methods. It is a general and effective way to generate a strong learner by combining weak learners. AdaBoost [8] is such a method: it uses gradient descent to minimize the classification risk induced by the exponential loss, selecting a weak learner and its contribution coefficient in each iteration. Given training data, different weak learners can be trained, and combining them finally yields a classification model that classifies the training samples perfectly. Weak learners generally use supervised machine learning algorithms, such as decision trees and support vector machines. Combining supervised machine learning algorithms with the boosting process can improve prediction efficiency [9]. The AdaBoost-CNN method integrates AdaBoost and a convolutional neural network (CNN) and can process large imbalanced data sets with high precision [10]. Supervised learning applies to labeled data. For incompletely labeled data, a semi-supervised learning method [11] is required, which can extract useful information from such data.

In addition, AdaBoost-Cost [12] also combines multiple weak learners into a strong learner, but its requirements on the weak learners are low, so even weaker weak learners suffice. These methods are all limited to a single mode or to two types of modes, and they clearly have many shortcomings in practice: for example, the computational complexity has not been reduced much, and the classification accuracy hardly meets people's needs. Therefore, researchers began to study multimodal and multi-class methods, which are, however, more complicated and difficult to implement. TangentBoost [13] studied the design of a robust classifier that can effectively deal with the noisy data sets and outliers typical of computer vision; it uses a probabilistic view of classifier design to identify a set of necessary conditions for such losses. Using these conditions, a new boosting algorithm is obtained. Experiments using data from computer vision problems such as scene classification, target tracking, and multi-instance learning show that TangentBoost consistently outperforms previous boosting algorithms. REBEL [14] is a simple and accurate multi-class boosting method that is fast to train and requires little data. It proposes a new type of weak learner called local similarity, and the framework provably minimizes the training error on any data set at an exponential rate. Experiments on various synthetic and real data sets showed a consistent tendency to avoid overfitting; however, it has only been applied to a single mode, where it shows good results.

Mobile robots and self-driving cars rely on multimodal sensor devices to perceive and understand the surrounding environment. In addition to accurate spatial perception, a comprehensive semantic understanding of the environment is essential for efficient and safe operation. A new deep neural network architecture, LilaNet [15], is used for point-wise, multi-class semantic labeling of semi-dense lidar data. An automated process for large-scale cross-modal training data generation, called automatic labeling, is proposed to improve the performance of semantic labeling while keeping the manual labeling workload low.

A direct optimization method for training multi-class boosting [

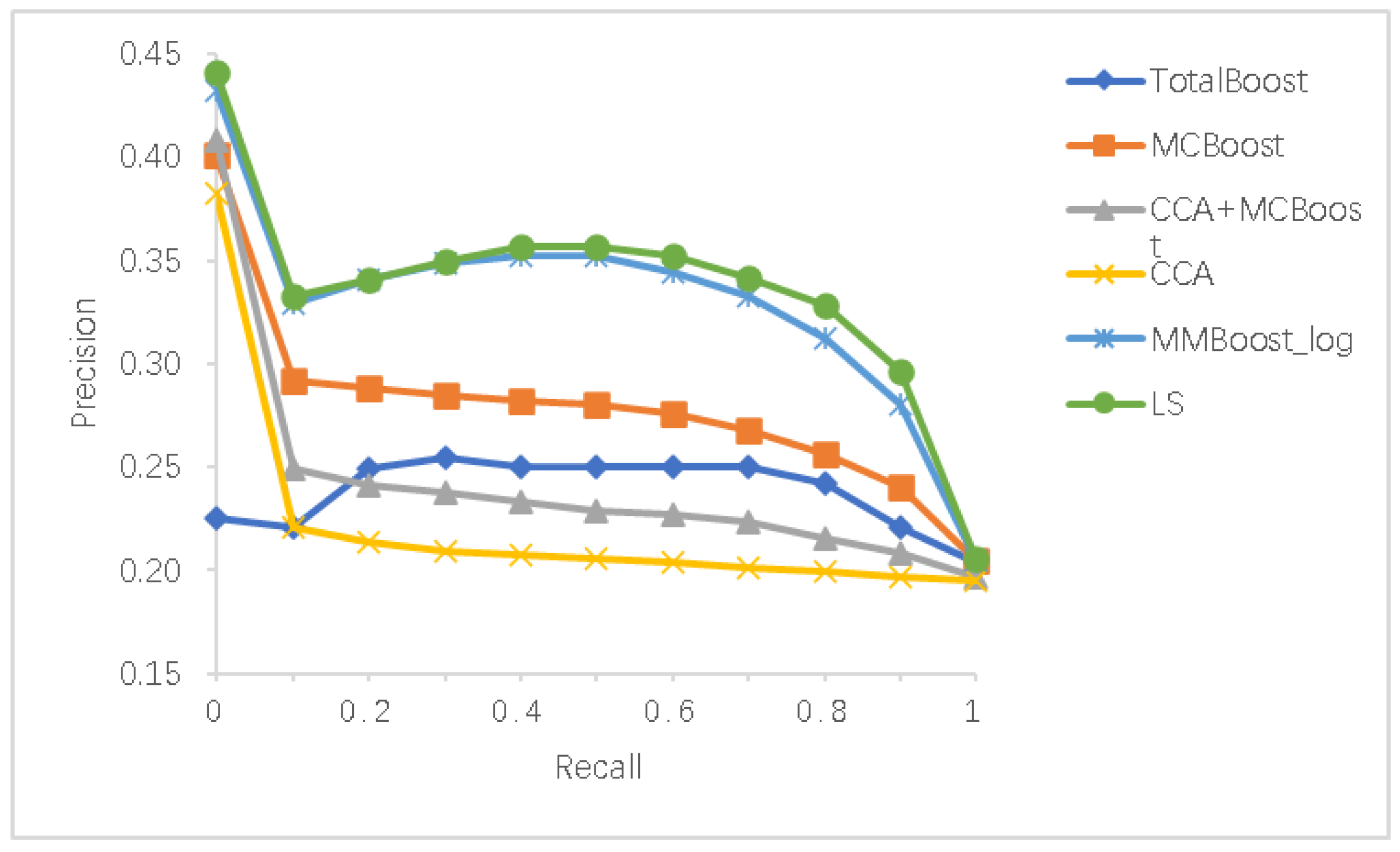

16] is a high-accuracy predictor formed by Boosting combined with a set of weak classifiers with general accuracy. Compared with binary advancing classification, multi-class advancing is more valued. It proposes a new multi-type boost formula. Different from the previous multi-boosting algorithm that decomposes the multi-boosting problem into multiple independent binary boosting problems, it is a direct optimization method for training multiple types of boosting. In addition, by explicitly deriving the dual relationship of the original optimization problem, a completely revised boosting is designed using column generation technology in convex optimization. In each iteration, the weights of all weak classifiers are updated. Moreover, compared with the latest multi-class improvement, the recognition accuracy has been improved to a certain extent. MCBoost [

17] proposed a new framework based on multi-dimensional codeword matrix and predictor, which minimizes risk through gradient descent in multi-dimensional function space, with one based on coordinate descent and one based on gradient descent. Both methods are very good multi-class enhancement methods. MMBoosting [

18] is a multimodal multi-class enhancement framework that can simultaneously capture the semantic information within the modal and the semantic correlation between the modals, and using multi-class exponential functions and logistic loss function, it obtained two new versions of MMBoost, namely, MMBoost_exp [

18] and MMBoost_log [

18], which have made great contributions to the cross-modal retrieval enhancement method.

Although considerable progress has been made in multimodal and multi-class enhancement methods, their classification accuracy is still not very high. Therefore, we need to find a suitable weak learner to replace the existing ones and improve the accuracy of multi-class classification. Although many weak learners are available, we choose local similarity, because it allows a suitable weak learner to be selected in each iteration. In the next section, we introduce the framework we constructed.

3. Our Framework

We first define our notation, then introduce our enhancement framework and describe our training process. A plain x denotes a scalar and a bold x denotes a vector; we also use a logical (indicator) expression, the inner product of two vectors, and their element-wise product. In a multi-class classification setting, a data point is represented as a feature vector x and is associated with a class label y. Each point is composed of d features. We use local similarity as the weak learner because it supports binary weak learners [19], its mathematical expression is simple (it has a closed-form solution that minimizes the loss), and it has strong empirical performance. To find the optimal predictors for the different modes [20], we construct a target risk function based on experiments:

The first and second terms are the training loss functions for images and text, respectively, and the third term represents the model complexity. The optimal local similarity is the one that minimizes the loss function and the model complexity at the same time, so the problem is to find the minimum of the objective function. Local similarity returns a vector-valued output:

The average misclassification error can be expressed as

Local similarity uses an exponential function to upper-bound the average training misclassification error:

Because the model is additive [21], the parameters from previous training iterations are fixed, so each iteration is equivalent to jointly optimizing a new weak learner f and the accumulation vector a. Therefore, the training loss at the current iteration can be expressed as

where f is a given weak learner, and the two weight sums are the total classification weights [22] of the correctly and incorrectly classified samples, respectively. In this form, it is easy to obtain the optimal accumulation vector:

The training loss [23] can then be optimized as
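As a hedged illustration of why a closed-form optimum exists for an exponential loss of this kind, consider a per-class scalar version. The symbols $s^{+}_k$ and $s^{-}_k$ (weight sums of correctly and incorrectly classified samples for class $k$) and $a_k$ (the $k$-th entry of the accumulation vector) are notation assumed here, and the sign convention may differ from the one used in the original formulas.

```latex
\begin{align}
  L(\mathbf{a}) &= \sum_{k} \left( s^{+}_k \, e^{-a_k} + s^{-}_k \, e^{a_k} \right), \\
  \frac{\partial L}{\partial a_k} = -s^{+}_k \, e^{-a_k} + s^{-}_k \, e^{a_k} = 0
  \quad &\Longrightarrow \quad
  a_k^{*} = \frac{1}{2} \ln \frac{s^{+}_k}{s^{-}_k}, \\
  L(\mathbf{a}^{*}) &= 2 \sum_{k} \sqrt{s^{+}_k \, s^{-}_k}.
\end{align}
```

Under these assumptions, each term of the loss equals $\sqrt{s^{+}_k s^{-}_k}$ at the optimum, so the optimized loss depends on the weak learner only through the two weight sums.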

3.1. Calculation of Local Similarity of Two Points

The traditional decision tree compares a single feature with a threshold and outputs +1 or −1. In contrast, our weak learner (local similarity) uses a similarity measure [24] to compare points in the input space. Because of its simplicity and effectiveness, we use the negative squared Euclidean distance as the similarity measure between two points. For the two-point local similarity, given two reference points, the output is positive in the region of the input space where a point is more similar to the first reference point than to the second, and it has the largest absolute effect near the two reference points. Therefore, the two local similarities are defined as:

There are two modes of local similarity: single-point and two-point. The single-point mode allows any single data point to be isolated, which guarantees a basic reduction in loss [25]. However, on its own it essentially leads to pure memorization of the training data, imitating a nearest-neighbor classifier. By providing a margin-style function, the two-point mode improves the ability to generalize. The combination of these two modes makes it possible to handle a wide range of classification problems flexibly [26]. In addition, in either mode the function of a local similarity is easy to interpret: it indicates which of the fixed training points a given query point is more similar to.
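The two modes can be illustrated with a small sketch built on the negative squared Euclidean distance. The exact functional forms are not reproduced here: the `tanh` squashing in the two-point mode and the fixed `threshold` in the single-point mode are assumptions chosen only to show the qualitative behavior, and all function names are hypothetical.

```python
import math

def similarity(x, anchor):
    """Negative squared Euclidean distance: 0 at the anchor, more negative farther away."""
    return -sum((a - b) ** 2 for a, b in zip(x, anchor))

def two_point_ls(x, x_i, x_j):
    """Illustrative two-point mode (assumed form, not the paper's formula).

    Positive where x is more similar to x_i than to x_j, squashed into (-1, 1).
    """
    return math.tanh(similarity(x, x_i) - similarity(x, x_j))

def one_point_ls(x, x_i, threshold):
    """Illustrative single-point mode: +1 inside a ball around x_i, otherwise -1."""
    return 1 if similarity(x, x_i) >= -threshold else -1

x_i, x_j = (0.0, 0.0), (2.0, 0.0)
near_i = two_point_ls((0.1, 0.0), x_i, x_j)   # query close to x_i
near_j = two_point_ls((1.9, 0.0), x_i, x_j)   # query close to x_j
midway = two_point_ls((1.0, 0.0), x_i, x_j)   # query equidistant from both
inside = one_point_ls((0.5, 0.0), x_i, threshold=1.0)
outside = one_point_ls((2.0, 0.0), x_i, threshold=1.0)
```

The two-point output changes sign on the boundary equidistant from the two reference points, which is what gives it a margin-like behavior, while the single-point output simply isolates a neighborhood of one training point.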

3.2. Find the Appropriate Local Similarity

For a data set with N samples, the number of possible local similarities is large. The following algorithm flowchart can efficiently select an appropriate local similarity.

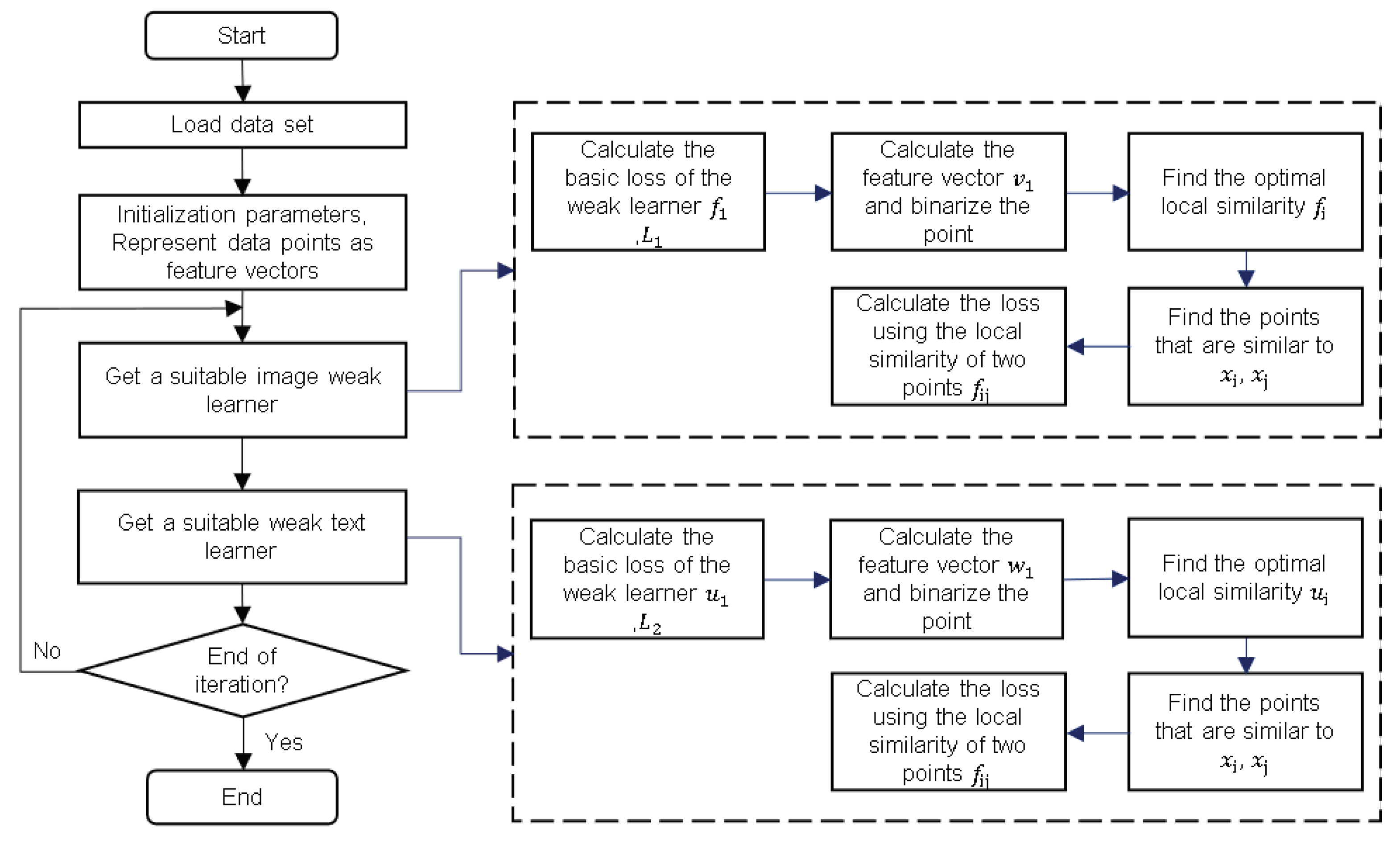

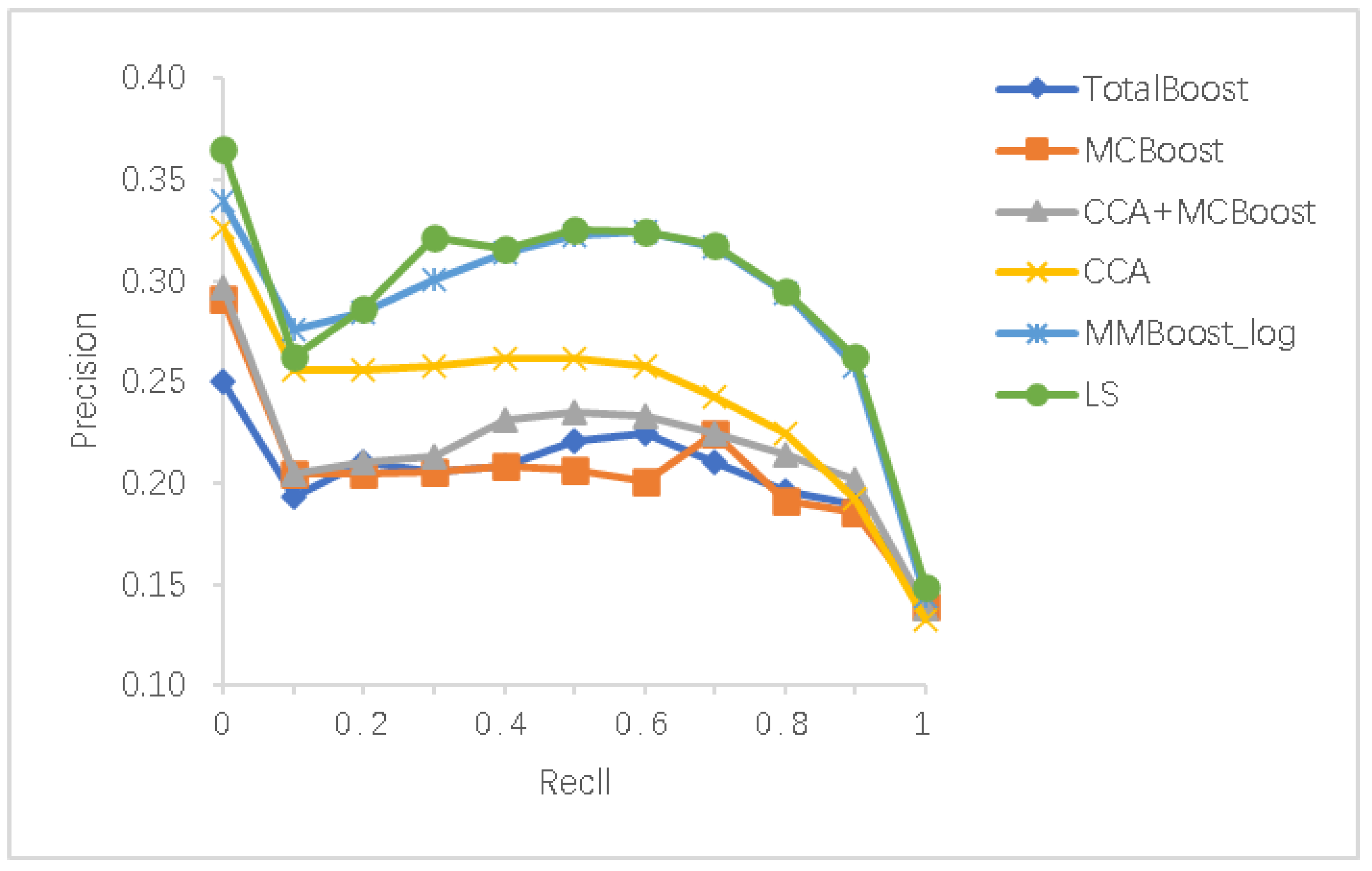

The purpose of the algorithm shown in Figure 2 is to select an appropriate local similarity, that is, to learn a set of predictors so that the algorithm can mine both the semantic information within each mode and the semantic correlation between modes. To keep the optimization problem simple, we learn each predictor in turn [27]. First, we load the bimodal data set and initialize the inter-modal parameters, the parameters of the multi-class weak learner, and the number of iterations. The data set parameters include the image set, the text set, and the semantic vocabulary; each semantic class is coded with a different unit vector, so that each data point is represented by a corresponding feature vector. At the beginning of the iteration, the predictors for images and text are initialized. We then learn each predictor in turn, update the image and text predictors to the latest ones, increase the iteration count by 1, and continue the loop until the loop condition is no longer satisfied. The learning of the weak learners is divided into the following steps:

- (1) For the selection of the image weak learner, Formula (5) is used to calculate the base loss of the weak learner.

- (2) Because each non-negative eigenvalue is associated with an independent eigenvector, take the eigenvector corresponding to the nth eigenvalue and define the row vector formed from its elements, so that the matrix in question can be decomposed accordingly. From this decomposition, the eigenvector can be calculated, and all points are labeled according to their binarized class labels.

- (3) Find the optimal local similarity, that is, the point closest to the threshold; all points that meet the condition are marked as +1, and the other points are marked as −1. Then calculate its loss by Formula (8). Of this learner and the one from step (1), the one with the smaller loss is regarded as the best so far.

- (4) Find the point that is most similar to the selected point, which can be obtained by the following formula:

- (5) Use the two-point local similarity to calculate the loss. If it outperforms the previous best, store the new learner and update the best loss so far.

- (6) Find all points that are sufficiently similar to the point found in step (4) and remove them from consideration for the rest of the current iteration. In our implementation, we removed all points satisfying:

If all points have been removed, return the best local similarity so far; otherwise, return to step (4) and find the most similar point among those that remain. Completing this procedure ensures that the best local similarity found so far [28] is sufficient. The weak learner for text is selected analogously to the image weak learner described above.
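The spirit of steps (1) to (3) above, trying candidate local similarities and keeping the one with the smallest loss, can be sketched in a heavily simplified form. The sketch assumes binarized labels in {−1, +1}, a single-point learner with a fixed radius, and the standard closed-form exponential loss `2 * sqrt(s_plus * s_minus)` at the optimal coefficient; the eigenvector labeling, the two-point refinement, and the pruning of steps (4) to (6) are omitted, and all names are hypothetical.

```python
import math

def neg_sq_dist(x, anchor):
    """Negative squared Euclidean distance used as the similarity measure."""
    return -sum((a - b) ** 2 for a, b in zip(x, anchor))

def stump_loss(outputs, labels, weights):
    """Exponential loss of a +/-1 weak learner at its optimal coefficient."""
    s_plus = sum(w for o, y, w in zip(outputs, labels, weights) if o == y)
    s_minus = sum(w for o, y, w in zip(outputs, labels, weights) if o != y)
    return 2.0 * math.sqrt(s_plus * s_minus)

def best_one_point_learner(points, labels, weights, radius_sq):
    """Try every training point as the anchor and keep the lowest-loss candidate."""
    best_anchor, best_loss = None, float("inf")
    for i, anchor in enumerate(points):
        # Points within the radius of the anchor are marked +1, all others -1.
        outputs = [1 if neg_sq_dist(x, anchor) >= -radius_sq else -1 for x in points]
        loss = stump_loss(outputs, labels, weights)
        if loss < best_loss:  # strict comparison keeps the first of equally good anchors
            best_anchor, best_loss = i, loss
    return best_anchor, best_loss

points = [(0.0, 0.0), (0.2, 0.1), (3.0, 3.0), (3.1, 2.9)]
labels = [1, 1, -1, -1]          # binarized class labels
weights = [0.25] * 4             # uniform sample weights
anchor, loss = best_one_point_learner(points, labels, weights, radius_sq=1.0)
```

In this toy example the first point already separates the two clusters, so it is returned with zero loss. A full implementation would also search over the threshold and then refine the candidate with the two-point mode, as the steps above describe.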

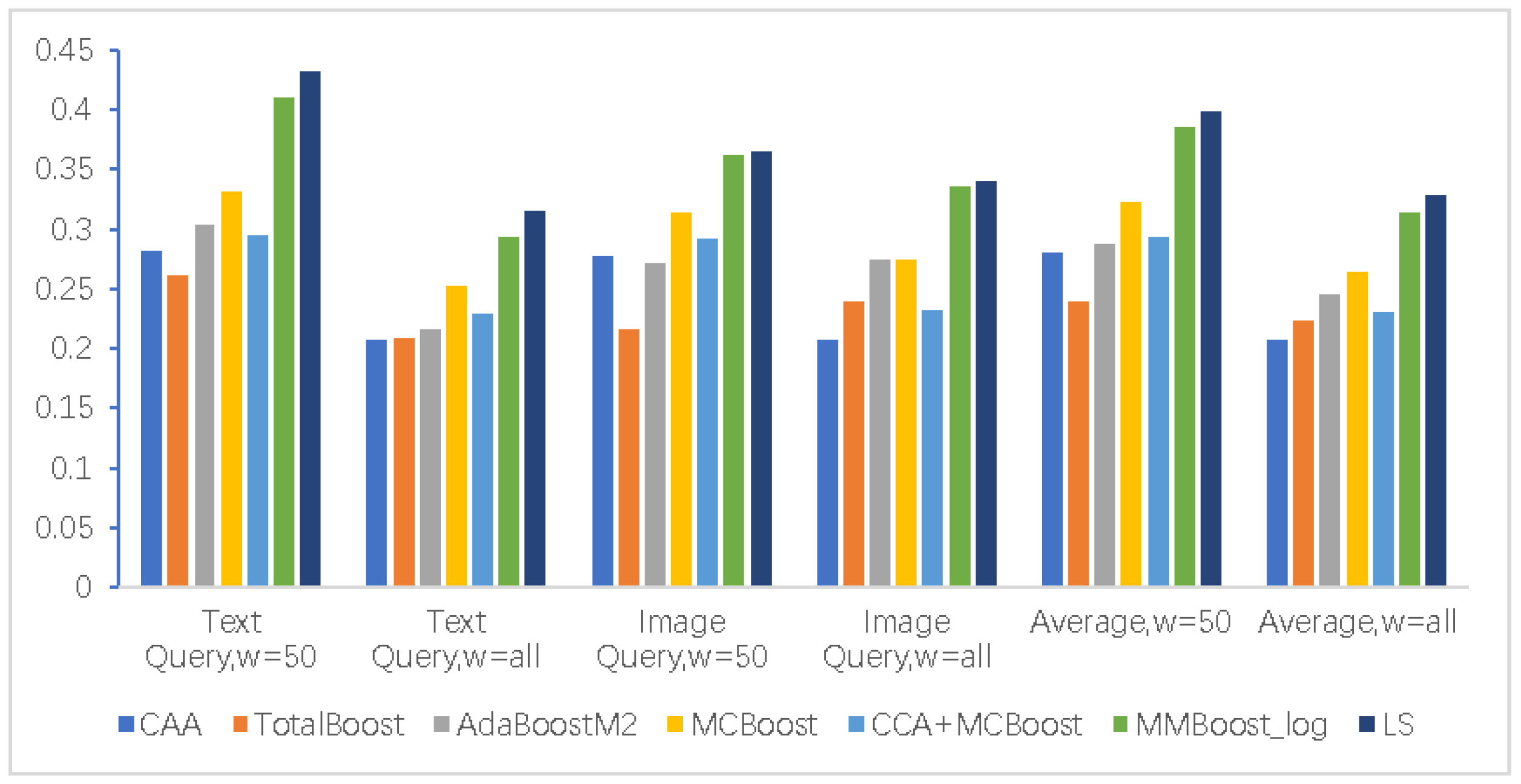

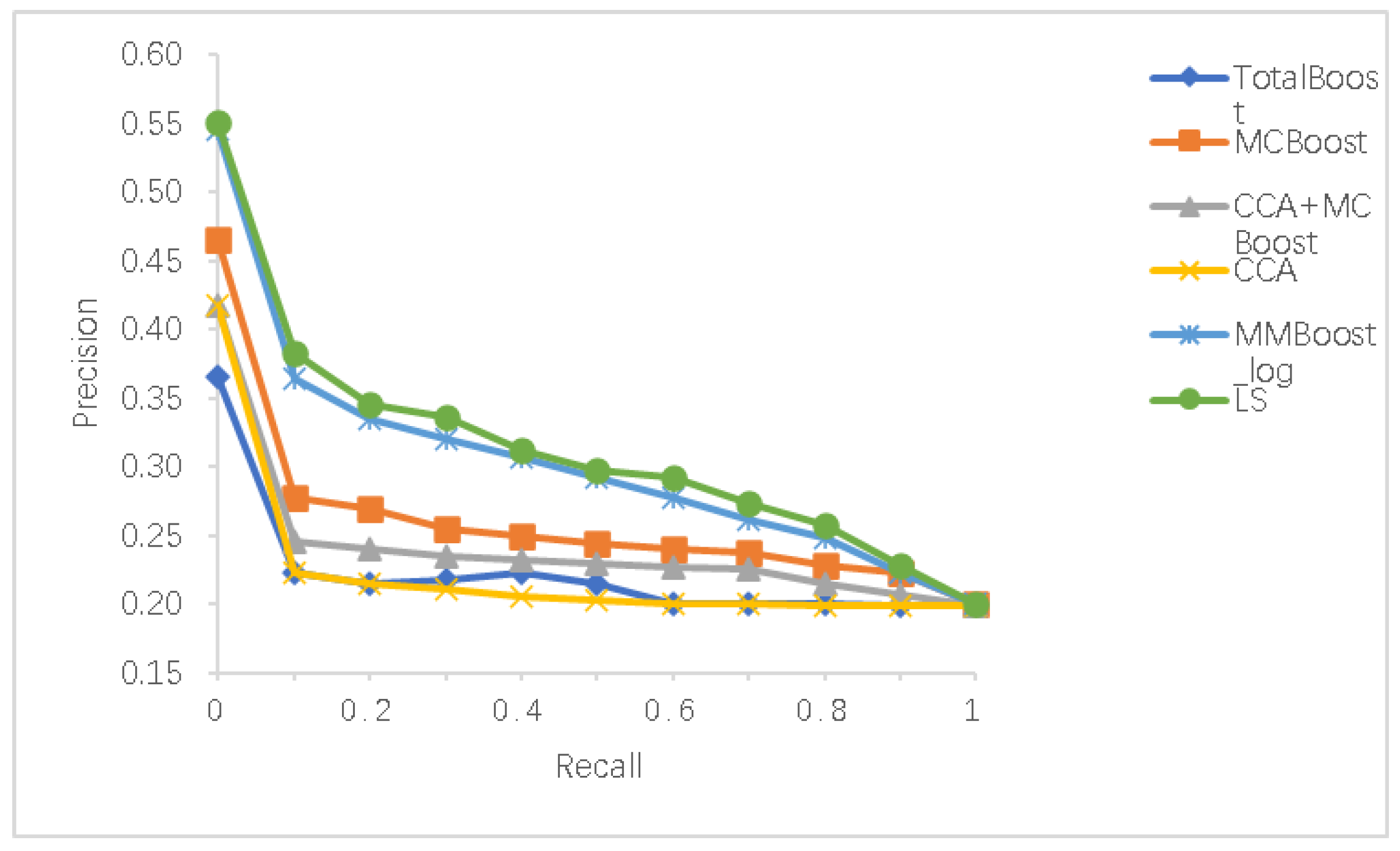

4. Convergence Experiment

In this section, we demonstrate that continuously reducing the loss only improves the decision boundary without overfitting the data. To better visualize and intuitively understand what the classifier is doing, we generated a two-dimensional synthetic data set, which is artificially synthesized through clustering. Both numpy and scikit-learn provide random data generation; here, we use make_gaussian_quantiles from scikit-learn to generate grouped multidimensional normally distributed data. The parameters are set as follows. The first group contains 200 samples and the second group contains 300 samples. The other parameters are the same for both groups: the feature mean is 3 and the covariance coefficient is 2, which yields a 2-dimensional normal distribution, and the generated data are divided into 3 classes according to quantiles. The randomly generated samples and their corresponding class labels are then concatenated into arrays. The resulting 500 data points are split, with 2/3 used as the training set and 1/3 as the test set. The statistical characteristics of the synthetic data set are shown in Table 1 below.

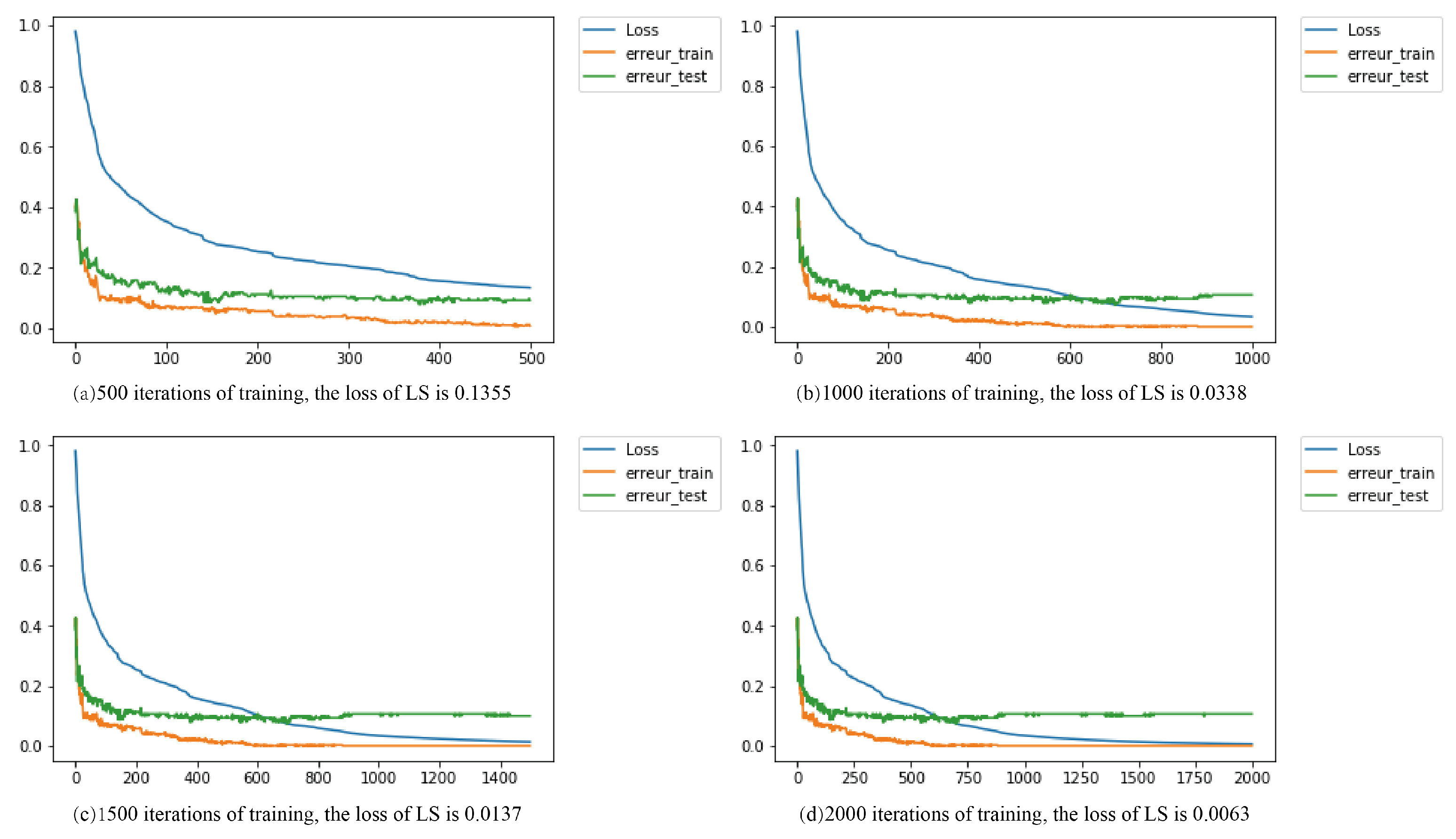
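The data generation described above can be approximated with a dependency-free sketch. In practice, scikit-learn's make_gaussian_quantiles would be the direct route; the version below only mimics its idea (sample an isotropic Gaussian, then label points by the rank of their distance from the mean) using the standard library. The helper name, the random seed, the interpretation of the covariance coefficient as a per-feature variance, and the shuffled split are assumptions.

```python
import math
import random

def gaussian_quantile_group(n_samples, mean, cov, n_classes, rng):
    """Sample 2-D isotropic Gaussian points and label them by distance rank from the mean."""
    std = math.sqrt(cov)
    pts = [(rng.gauss(mean, std), rng.gauss(mean, std)) for _ in range(n_samples)]
    # Sort by squared distance from the mean so that classes form concentric rings.
    pts.sort(key=lambda p: (p[0] - mean) ** 2 + (p[1] - mean) ** 2)
    step = n_samples // n_classes
    labels = [min(i // step, n_classes - 1) for i in range(n_samples)]
    return pts, labels

rng = random.Random(0)
x1, y1 = gaussian_quantile_group(200, mean=3.0, cov=2.0, n_classes=3, rng=rng)
x2, y2 = gaussian_quantile_group(300, mean=3.0, cov=2.0, n_classes=3, rng=rng)

data = list(zip(x1 + x2, y1 + y2))   # 500 labeled points in total
rng.shuffle(data)
n_train = len(data) * 2 // 3         # 2/3 for training, 1/3 for testing
train, test = data[:n_train], data[n_train:]
```

With 500 points, this split gives 333 training samples and 167 test samples.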

To keep the notation clear and simple, we abbreviate local similarity as LS. The convergence results are shown in Figure 3.

Figure 3a shows the training loss, training error, and test error of the classifier over 500 iterations of training; the final loss is 0.1355. Although the number of iterations is not yet sufficient, we can see intuitively that as the training error decreases, the test error and the loss also show a downward trend.

Figure 3b shows the training loss, training error, and test error of the classifier over 1000 iterations; the final loss is 0.0338.

Figure 3c shows the training loss, training error, and test error of the classifier over 1500 iterations; the final loss is 0.0137.

Figure 3d shows the training loss, training error, and test error of the classifier over 2000 iterations; the final loss is 0.0063. From these four experiments, we can see that even when the training error is reduced to zero, the test error does not increase. For every number of iterations, the test error decreases along with the training loss. As the number of iterations increases and the training error drops to zero, the test error gets closer and closer to zero without reaching it, which reflects the realism of our experiment, since some error is inevitable in an actual test. In all cases, the classification boundary fits the training data well rather than overfitting it, that is, rather than isolating each point. This can be observed more rigorously from the training curves: as the number of iterations increases, the test error remains quite stable once it reaches its minimum.