1. Introduction

Cloud computing is the practice of storing, managing, and processing data using a network of remote servers accessed via the Internet [1]. As such, cloud computing is a model for easy and ubiquitous access to a set of servers, storage devices, networks, applications, and services with minimal interaction with the service provider and minimal management cost [2]. Virtualization techniques are used in cloud systems to appropriately handle user requests. By applying virtualization, multiple Virtual Machines (VMs) are simultaneously run on a single Physical Machine (PM) controlled by software usually known as a Hypervisor or Virtual Machine Monitor (VMM) [3,4,5]. In Infrastructure-as-a-Service (IaaS) clouds, each user request is in the form of one or more VM requests.

The tendency of software to fail or cause a system failure after running continuously for a specific time period is referred to as software aging [6,7]. Software aging is a phenomenon in long-running software systems that causes an increased failure rate and/or degraded performance due to the accumulation of aging errors [8,9]. Since VM requests in IaaS clouds usually differ in the software and tools for which they are initiated, their corresponding VMs exhibit complex behaviors and sophisticated interactions throughout their lifetime, which requires VMMs to manage a wide variety of VM behaviors. Consequently, after running for a long time or managing heavy workloads, VMMs, like any other software, age and slow down due to a multitude of internal errors and the diverse behaviors of VMs [7,10,11,12,13]. If these errors are not prevented or addressed properly, the performance of the system degrades and the cloud provider may fail to meet Quality of Service (QoS) requirements [14].

Software rejuvenation is an effective method used to cope with software aging and performance degradation [11,15,16]. In this method, software is restored to its original state before causing aging-induced crashes and system failures. In cloud systems, providers can also benefit from software rejuvenation by rejuvenating their VMMs before they fail. However, the main problem in this regard is how to choose the right VMM to be rejuvenated and the correct time to do so. In addition to the selection of the appropriate VMM and the proper time for rejuvenation, the selection of the right method amongst existing options is of utmost importance. The majority of models proposed in the literature use a series of side programs to measure the efficiency and performance of VMMs. A study showed that the majority of previous studies in the field of software rejuvenation adopted time-based or prediction-based strategies [17], both of which show low performance.

The rapid worldwide growth of the cloud computing paradigm has led to the emergence of massive data centers with high power consumption across the world [18]. In 2016, the total energy consumption of the world’s data centers was 416 terawatt hours (TWh), which is 38% higher than the 300 TWh of energy that the entire U.K. consumed in the same year [19]. As a result, the power consumption of cloud computing servers and the field of green computing are worthy of more attention [20]. Active PMs, i.e., switched-on PMs, consume a major portion of a cloud system’s power. Therefore, a cloud provider with many active but lightly loaded PMs/VMMs wastes a large amount of power, resulting in low energy efficiency. The technique proposed in this paper to increase energy efficiency involves migrating VMs away from lightly loaded VMMs and then switching the freed PMs off, leading to a smaller number of active VMMs and PMs. In real-world cloud systems, requested VMs are heterogeneous and differ in terms of processing speed, memory, and storage capacities. Therefore, in order to match real-world systems as much as possible, we consider two categories of VMs in this paper: (1) small VMs, which need to be served for a short while and therefore utilize a small share of resources, and (2) big VMs, which need to be executed for a longer period of time and thus use a significant share of system resources. Over the course of their life, big VMs have a greater impact on the software aging of their host VMMs than small VMs.

In this paper, we present a Stochastic Activity Networks (SAN) model for cloud data centers and provide a strategy for applying rejuvenation to candidate VMMs at the appropriate time. Model-based studies are amongst the most prevalent analyses that researchers have conducted to study software aging and software rejuvenation [21]. Since one of the main causes of failures of VMMs is the continuous execution of VMs running on VMMs [17], the type and the total number of VMs executed by a VMM serve as the measure for determining the appropriate time of rejuvenation. Therefore, we define an upper bound for the maximum number of VMs that a VMM can run, starting from its last boot time, and force a rejuvenation whenever the age of a VMM reaches this limit. The age of a VMM can be expressed as the cumulative workload that the VMM has served since its last failure or rejuvenation. By analyzing the proposed model with the rejuvenation scheme, the benefits of applying the rejuvenation to VMMs of an IaaS cloud provider in reducing the number of VM failures and improving the performance and availability in the steady-state are observed. In addition, the proposed model enables power-awareness in the cloud management system by migrating active VMs from lightly loaded VMMs to more heavily loaded ones and switching off free PMs. Evaluation of the proposed scheme demonstrates that this power saving mechanism improves the system’s steady-state performance and availability. The formalism used in this paper to model the power-aware VMM rejuvenation scheme is SANs [22,23,24]. SANs are probabilistic extensions of activity networks that are equipped with a set of activity time distribution functions, reactivation predicates, and enabling rate functions. They were developed to facilitate performance and dependability evaluation and have features that permit the representation of parallelism, timeliness, fault tolerance, and degradable performance [25]. More detailed information about SANs can be found in the previous literature [26,27,28].

The main contributions of this paper are summarized as follows. (1) A SAN model is presented for performance evaluation of IaaS clouds that considers many details of real systems, including VM multiplexing, migration of VMs between VMMs, VM heterogeneity, failure of VMMs, failure of VM migration, and different probabilities for arrival of different VM request types. (2) Using the presented SAN, a two-threshold power-aware software rejuvenation scheme is proposed and compared with two baselines based on diverse performance, availability, and power consumption measures defined on the system.

The rest of this paper is organized as follows. Section 2 reviews the previous work in the field of software rejuvenation in cloud systems. Section 3 provides a brief introduction to the SAN formalism. In Section 4, the general structure of our system is explained. In Section 5, the proposed SAN model and the rejuvenation scheme presented for the SAN are introduced in detail. Section 6 presents the output measures of interest and the results of the performance evaluation, and finally, Section 7 concludes the paper and provides some directions for future work.

2. Related Work

Evaluation of performance, availability, and power consumption of rejuvenation techniques in cloud systems using analytical models is an emerging topic. Machida et al. studied different methods of rejuvenation and suggested three main methods for software rejuvenation in a server virtualized system: cold, warm, and migration rejuvenation [11]. In the cold rejuvenation method, active VMs on a VMM that is about to be rejuvenated are switched off. After completion of the VMM rejuvenation, the switched-off VMs are restarted on the rejuvenated VMM. In the warm method, active VMs are suspended, and after completion of the VMM rejuvenation, the suspended VMs resume running on the rejuvenated VMM. In the migration method, active VMs on the intended VMM are moved to another host VMM before rejuvenation of the intended VMM occurs. When rejuvenation is completed, they move back to their original PM. Therefore, the downtime caused by the migration method is shorter than that of other methods. Our model is also based on the migration rejuvenation method. In the model presented in Machida et al. [11], the rejuvenation is periodically performed without considering the system’s workload. In contrast, the rejuvenation in our model is performed according to the VMM’s age.

Bruneo et al. analyzed the best period for rejuvenation to achieve high availability considering the VMM’s workload [15]. They suggested a specific period of rejuvenation as the best one for each specified input workload. By changing the rate of input workload, the rejuvenation period also changes. More simply, the heavier the amount of workload, the longer the rejuvenation period; and the lighter the input workload, the shorter the rejuvenation period. Thus, rejuvenation time is adapted according to the VMM’s workload. In contrast to Bruneo et al. [15], in our proposed model, the rejuvenation time is determined based on the age of a VMM instead of using a constant period, which provides a flexible model and an accurate analysis.

Melo et al. presented a rejuvenation model for cloud data centers to evaluate the availability in the steady-state [10]. In their model, only one active VMM and one standby VMM were considered. Our proposed model is similar to the model presented in Melo et al. [10]. In both models, the age of a VMM increases when a new workload is admitted. However, in Melo et al. [10], rejuvenation is periodically performed, and the status of a VMM is checked at the end of each period to ensure that performance degradation is due to the age of the VMM and not the overload of the data center itself. If rejuvenation is required, all active VMs on the target VMM are moved to the standby VMM to continue their execution. Notably, the model with only one active VMM is not realistic for cloud data centers. In contrast to Melo et al. [10], we consider several active VMMs in our model.

Melo et al. studied cloud data centers in different scenarios and analyzed different periods for setting the rejuvenation timer in each scenario [29]. The authors specified the best rejuvenation time for achieving high availability in the steady-state. Liu et al. studied rejuvenation from different aspects [16]. Amongst the presented ideas in Liu et al. [16], saving a system’s checkpoints periodically can also be used in case of failure to recover the state of the system. The authors applied warm rejuvenation, in which paused VMs are maintained on external memory. Compared to the approach presented in Liu et al. [16], both migration rejuvenation and power-awareness are simultaneously applied in our presented model.

Roohitavaf et al. presented a SAN model using the Möbius tool to evaluate the availability and power consumption of cloud data centers [22]. Based on their evaluation, by switching off lightly loaded VMMs, providers not only decrease the power consumption of a cloud data center but also reach higher availability if the migration time is short enough. Roohitavaf et al. [22] only considered the energy saving aspect of cloud data centers; in contrast, we studied the simultaneous effect of power saving and rejuvenation on cloud data centers.

There are also other related studies in the field of VMM rejuvenation that presented models and schemes using techniques other than analytical modeling. Kourai and Ooba proposed VMBeam, which enables lightweight software rejuvenation of virtualized systems using zero-copy migration [30]. The proposed VMBeam has been implemented in Xen, an open source hypervisor. Sudhakar et al. proposed a neural network-based approach to approximate the non-linear relationship between resource usage statistics and the time to failure of VMMs caused by aging-related errors [31]. Araujo et al. investigated the software aging effects in the Eucalyptus framework, considering workloads composed of intensive requests for remote storage attachment and VM instantiations [32]. They also presented an approach that applies time series analysis to schedule rejuvenation to reduce the downtime by predicting the proper moment to perform the rejuvenation.

In summary, the approaches that analyze the performance, availability, and power consumption of VMM rejuvenation tasks in cloud systems using analytical models are rare. In contrast to existing work, our presented scheme allows power-aware rejuvenation of VMMs using a two-threshold mechanism. The proposed model supports PM heterogeneity, which has not been considered in previously presented models. Moreover, the model considers several other aspects of real cloud systems that have not usually been considered in previously published reports. In addition, the number of PMs/VMMs and the number of VMs that can simultaneously be serviced on a PM/VMM are larger than those modeled in other approaches.

4. System Description

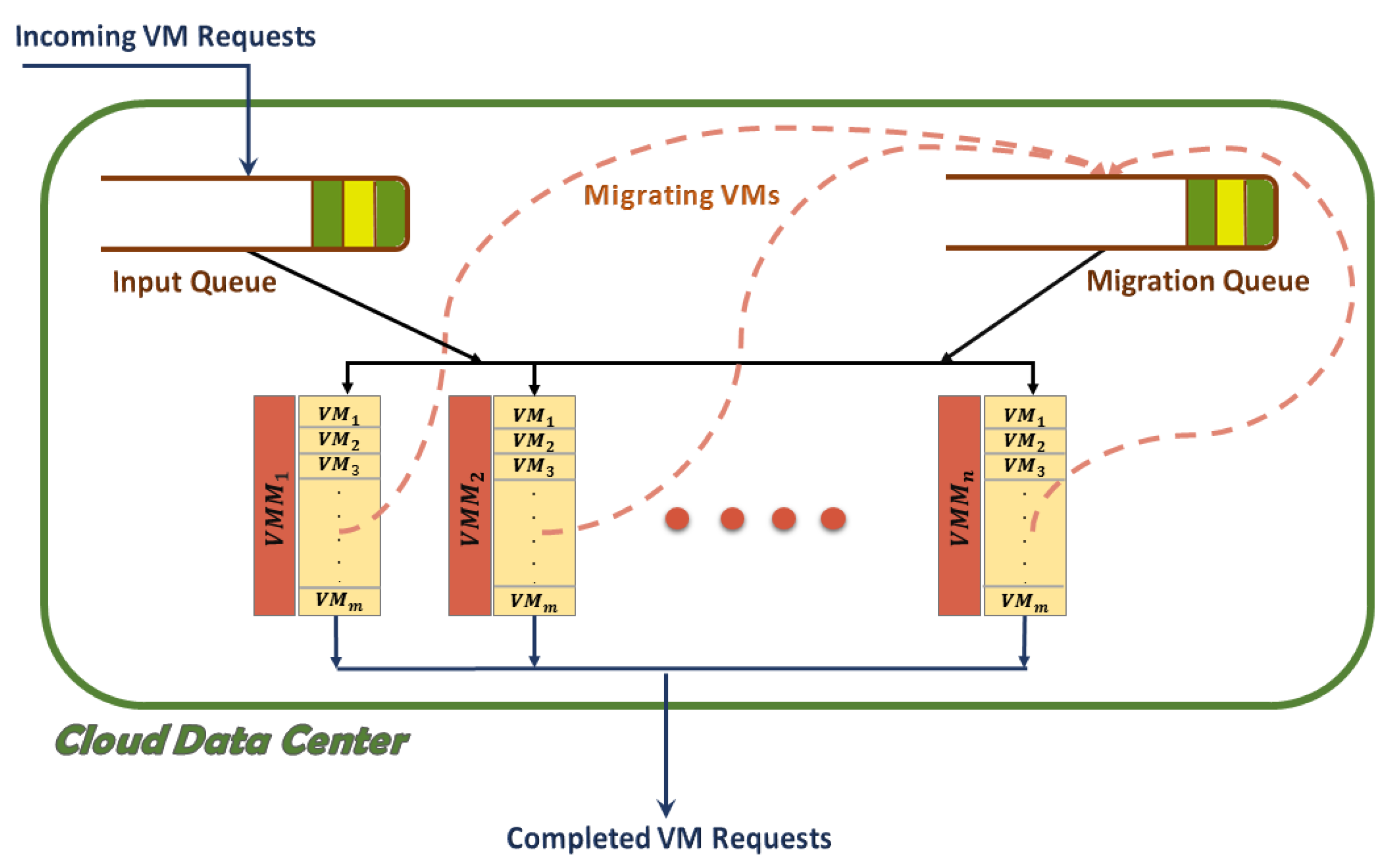

Figure 1 shows the structure of an IaaS cloud system where user requests are expressed as requests for VMs. This system contains N physical machines, each one with an active VMM. User requests are placed in the input queue upon arrival. The migration queue is used when VMs need to be migrated between VMMs.

Right after system startup, all PMs of the cloud data center are assumed to be switched-off and they are switched on only on demand after receiving requests for VMs. Since active PMs consume more power, as long as there is free capacity on active VMMs, no PM is switched on. In other words, a new PM is switched on only if none of the active VMMs have sufficient capacity to accept migrating or requested VMs. When there is a VM request in the input queue, one of the active VMMs with free capacity is randomly selected to service the request. Similarly, when there is a VM in the migration queue, one of the active VMMs with free capacity is randomly chosen to service the migrating VM. We supposed that a PM can host up to M VMs concurrently. After finishing the tasks defined for a VM, the resources assigned to that VM are returned to their corresponding VMM, thereby providing the VMM some capacity to accept another VM. In order to make the model more realistic, we assumed that the system is able to handle two different types of VMs: big and small VMs. Big VMs are VMs that have a greater impact on VMM aging due to having a longer runtime or equivalently, using a more significant share of system resources. Here, we assumed that a big VM has a runtime twice as large as the runtime of a small VM.
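The placement policy described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `place_vm`, the dictionary of per-VMM loads, and the three return labels are hypothetical, and the queues are left out. It only shows the decision order stated in the text: use a randomly chosen active VMM with free capacity if one exists, and switch on a PM only when none does.

```python
import random

def place_vm(active_load, switched_off, capacity, rng=random):
    """Choose what to do with one waiting VM (requested or migrating).

    active_load  -- dict: active VMM id -> number of VMs it currently hosts
    switched_off -- list of PM ids that are currently switched off
    capacity     -- maximum number of concurrent VMs per PM (M in the text)

    Returns ("place", vmm_id), ("wake", pm_id), or ("wait", None).
    All names here are illustrative assumptions, not part of the paper.
    """
    # Active VMMs that still have at least one free slot.
    candidates = [vmm for vmm, load in active_load.items() if load < capacity]
    if candidates:
        # Random selection among active VMMs with free capacity.
        return ("place", rng.choice(candidates))
    if switched_off:
        # No active VMM can take the VM, so a PM is switched on on demand.
        return ("wake", rng.choice(switched_off))
    # Every PM is on and full; the VM stays in its queue.
    return ("wait", None)
```

For example, with `capacity=3`, a call such as `place_vm({0: 3, 1: 2}, [2], 3)` places the VM on VMM 1 rather than waking PM 2, because VMM 1 still has a free slot.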

A VMM may fail for different reasons. One of the notable causes of VMM failure is aging-related errors. If an aged VMM is not rejuvenated in time, the VMM fails, and all VMs running on top of that VMM may fail with it. In serving a VM, the age of its hosting VMM increases, but completion of that VM does not decrease the VMM’s age. This is because running a VM has some adverse effects on the corresponding VMM that are not removed once the VM’s tasks are completed. Therefore, both the number and the type of VMs served by a VMM can be considered indicators of its age. By VMM age, we mean the cumulative workload that has been accepted and served by that VMM since its last reboot. In our considered system, once the age of a VMM reaches the maximum threshold F, regardless of there being free capacity on other VMMs, the active VMs of that VMM are put in the migration queue, and the VMM is then rejuvenated. In order to improve system reliability, when the age of a VMM reaches the threshold F′ (F′ < F), and if other VMMs have enough free capacity to accept the VMs running on that VMM, these VMs are put in the migration queue, and the aged VMM is rejuvenated before reaching the threshold F. With the aim of power saving, when a VMM is relatively idle and other VMMs can serve its active VMs, these VMs are put in the migration queue to be migrated to those VMMs, and the emptied PM is switched off.
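The two-threshold rule can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the model itself: the function and argument names are hypothetical, `f_soft` and `f_hard` stand for F′ and F, and "enough free capacity" is read here as the number of running VMs not exceeding the free slots on other VMMs.

```python
def rejuvenation_action(age, running_vms, free_slots_elsewhere, f_soft, f_hard):
    """Decide whether a VMM should be rejuvenated.

    age                  -- cumulative workload served since the last reboot
    running_vms          -- VMs currently hosted by this VMM
    free_slots_elsewhere -- total free VM slots on the other active VMMs
    f_soft, f_hard       -- thresholds F' and F from the text (F' < F)

    Returns "forced", "early", or "none". Names are illustrative assumptions.
    """
    if not f_soft < f_hard:
        raise ValueError("the early threshold F' must be below F")
    if age >= f_hard:
        # At F the VMM is rejuvenated regardless of capacity elsewhere;
        # its VMs go to the migration queue.
        return "forced"
    if age >= f_soft and running_vms <= free_slots_elsewhere:
        # At F' the VMM is rejuvenated only if its VMs fit on other VMMs.
        # (Assumption: "enough capacity" is taken as <=, not strictly <.)
        return "early"
    return "none"
```

With F′ = 8 and F = 10, a VMM of age 8 hosting two VMs is rejuvenated early when three slots are free elsewhere, but keeps running when only one slot is free; at age 10 it is rejuvenated in either case.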

5. The Proposed Model

The proposed model is composed of five sub-models shown in Figure 2. The insertion sub-model (Figure 2a) models the arrival of user requests to the system, the migration sub-model (Figure 2b) addresses the migration of VMs between VMMs, the service and green computing sub-model (Figure 2c) models the serving of VMs and the shutdown of lightly loaded VMMs for green computing, the rejuvenation sub-model (Figure 2d) addresses rejuvenation of the aged VMMs, and the failure sub-model (Figure 2e) models the failure of VMMs. We assumed that the times assigned to all timed activities follow an exponential distribution [36,37,38,39]. In Figure 2a, the arrival of new requests to the system and the selection of an appropriate VMM to handle them are modeled. Timed activity

models the arrival of VM requests. Upon completion of

, a token is inserted into place

. Place

represents the input queue, and a token in this place serves as a request waiting in the queue. Once the number of tokens in place

reaches the threshold

, representing the capacity of the input queue, the input gate

prevents timed activity

from completion. The completion rate of activity

is

. The timed activity

randomly selects one of the active VMMs to serve a requested VM. Whenever at least one token is in place

, representing a VM request, and there is enough capacity on one of the VMMs to accept a new VM, timed activity

is enabled and can complete the activity. Upon completion of this activity, one token is removed from place

by input gate

. If more than one VMM can accept an incoming request,

randomly chooses the destination VMM. When

VMMi is chosen by this activity, one of the output gates

or

is selected. If the requested VM is small, the output gate

is enabled; otherwise, the output gate

is enabled. Here, we assumed that the chance of arrival of a small VM is twice as large as that of a big VM. In the output gate

, the number of active VMs running on VMM

i and the age of that VMM are both increased by one. However, in the output gate

, the number of active VMs running on VMM

i increases by one and the related age increases by two. The completion rate of activity

depends on the number of VMs that can be assigned to all available VMMs and the number of VM requests waiting in place

, whichever is smaller, and the initiation rate of a VM is

. The rate of activity

is demonstrated in

Table 1. The predicates and functions corresponding to the input gates of the insertion sub-model are presented in rows 1 and 2 of

Table 2, and the functions corresponding to the output gates of the insertion sub-model are presented in rows 1 and 2 of

Table 3. In these tables, the

function returns the number of tokens inside place

. Notably, though places, activities, and gates of the proposed sub-models are not explicitly connected to each other, these sub-models are implicitly interconnected. The actual connections can be considered by reviewing the predicates and functions of the gates of these sub-models presented in

Table 2 and

Table 3. As an example, the predicate of

shown in

Table 2 indicates that the activation of this input gate of insertion sub-model is dependent on the markings of places

and

, which are elements of service and green computing sub-model and migration sub-model, respectively.
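The bookkeeping done by the insertion sub-model can be made concrete with a short Python sketch. The names (`AGE_COST`, `admit`, `dispatch_rate`) are hypothetical and the gate and place identifiers of the SAN are not reproduced. The rate function follows one plausible reading of the description, namely the smaller of the queue length and the free capacity multiplied by the per-VM initiation rate; the exact expression is the one given in Table 1.

```python
# Age added to the hosting VMM by each VM type (small: +1, big: +2).
AGE_COST = {"small": 1, "big": 2}

# A small VM is assumed twice as likely to arrive as a big one,
# which gives probabilities 2/3 and 1/3.
ARRIVAL_PROBABILITY = {"small": 2 / 3, "big": 1 / 3}

def admit(vmm, vm_type):
    """Update a VMM record (a dict with 'active' and 'age') for one admitted VM."""
    vmm["active"] += 1                 # one more VM running on this VMM
    vmm["age"] += AGE_COST[vm_type]    # age grows by 1 (small) or 2 (big)
    return vmm

def dispatch_rate(waiting_requests, free_slots, initiation_rate):
    """Assumed marking-dependent rate of the VMM-selection activity."""
    return min(waiting_requests, free_slots) * initiation_rate
```

Because the age is never decremented when a VM finishes, a VMM that has served one small and one big VM has age 3 even if it is currently idle.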

In Figure 2b, the migration of VMs between VMMs is modeled. When the rejuvenation mechanism or the power saving scheme requires a VM to migrate, a token is put into place

, representing the migration queue. Then, timed activity

randomly selects one of the active VMMs that have enough capacity to accept the migrating VM. When there is no VMM with free capacity to serve a migrating VM, input gate

prevents timed activity

from completion. Assuming that

VMMi is selected to serve the migrating VM, if the VM is small, output gate

is enabled, and the number of active VMs and the age of that VMM increases by one. If the migrating VM is large, output gate

is enabled, the number of active VMs running on

VMMi increases by one, and the age of the corresponding VMM increases by two. The completion rate of activity

depends on the number of VMs that can be assigned to all available VMMs and the number of VMs waiting for migration in place

, whichever is smaller, and the migration rate of a VM is called

[

40]. The rate of activity

is demonstrated in

Table 1. We also assumed a constant number for probability of failure of a VM migration task. Therefore, we assumed that output gate

is enabled with probability

. In this output gate, the removed token from place

turns back to place

. Using this mechanism, the migration of a VM fails and should be retried. The predicate and function corresponding to the input gate of the migration sub-model are presented in row 3 of

Table 2, and the output functions of the output gates of this sub-model are presented in rows 3–5 of

Table 3.

In Figure 2c, timed activity

models the serving process of a VM on the VMM

i. Upon completion of activity

, the number of tokens in place

decreases by one. The tokens in place

represent the number of active VMs on the VMM

i. When there is no token in place

, input gate

disables timed activity

. Serving individual VMs occurs at rate

, so the actual service rate of all VMs running on a VMM during the execution of timed activity

is equal to

, where

indicates the number of tokens inside place

. In other words, the actual service rate of VMs running on a VMM is the product of the

and the number of active VMs running on that VMM.

Timed activity , with completion rate , models the PM wakeup process. The tokens inside place represent the number of inactive or switched-off PMs in the system. Right after system start up, all PMs are assumed to be switched off, so place holds as many tokens as there are PMs in the cloud data center. Upon completion of activity , a token is removed from place by input gate . If there is no token in places or , input gate prevents the completion of activity . If more than one token exists in place , representing several switched-off PMs in the system, one token is randomly chosen by timed activity . In this case, PMi is activated by enabling output gate and becomes ready for accepting VMs.

Instantaneous activity

models the shutdown process of the active

VMMi that is lightly loaded or has no workload. When other active VMMs have enough free capacity to accept all VMs running on top of

VMMi, the VMs are placed into the migration queue, and then

VMMi and its hosting PM are switched off. Upon completion of instantaneous activity

, the number of tokens in place

increases by one, and the number of tokens in place

is set to zero. If the tokens in place

cannot be moved to places

(

i ≠ j), input gate

prevents instantaneous activity

from completion because other VMMs are unable to accept VMs running on

VMMi. The predicates and functions corresponding to the input gates of the service and green computing sub-model are presented in rows 4–6 of

Table 2, and the function corresponding to the output gate of this sub-model is presented in row 6 of

Table 3.

In Figure 2d, the number of tokens in place

represents the age of the VMM

i. Therefore, the number of tokens in place

is set to zero just after rejuvenation or reboot of VMM

i. When the number of tokens in place

reaches threshold

, the input gate

is enabled and moves tokens from place

to place

. By applying this mechanism, the migration of VMs from

VMMi to other VMMs of the data center is modeled when a VMM has to be rejuvenated. Timed activity

models the rejuvenation process of a VMM with rate

. Upon completion of this activity, the number of tokens in place

is set to zero by output gate

, which represents the age of the newly rejuvenated

VMMi. A rejuvenated VMM is active and can accept and serve VMs. However, if there is no VM to be hosted by the rejuvenated VMM, the VMM is switched off by input gate

modeled in the service and green computing sub-model.

The model also uses timed activity

for early rejuvenation of aged VMM

i. When the number of tokens in place

reaches the threshold

, where

<

, and there are fewer tokens in place

than free capacity on other active VMMs, the input gate

is enabled. In this situation, input gate

moves all tokens of place

to place

. The completion rate of timed activity

is

. The output gate

sets the number of tokens in place

to zero right after the rejuvenation process. The predicates and functions corresponding to the input gates of the rejuvenation sub-model are presented in rows 7 and 8 of

Table 2, and the functions corresponding to the output gates of this sub-model are presented in rows 7 and 8 of

Table 3.

In Figure 2e, the failure of active VMMs in the system is modeled. Whenever

VMMi fails, a token is put in place

. After failure detection and during the repair of VMM

i, a token is put into place

. Timed activity

models the failure event of VMM

i. If there is a token in place

or there are as many tokens in place

as in

, the input gate

disables activity

. Upon completion of activity

, the number of tokens in place

is set to zero by output gate

, and the number of tokens in place

increases by one. Once VMM

i is repaired, the numbers of tokens in places

and

become zero, and the VMM is reactivated. The completion rate of timed activity

is the product of the number of tokens in place

and the failure rate of an active VMM called

. The greater the age of a VMM, the greater the failure probability of that VMM.
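The two marking-dependent rates described for the service and failure activities can be written down directly. The following Python sketch uses hypothetical names and assumes, as the text suggests, that the failure rate scales with the VMM's age and the service rate with the number of active VMs. The last function uses the standard property of competing exponential timers (the text assumes exponentially distributed activity times) to show how age shifts the odds toward failure; it is an illustration, not one of the paper's output measures.

```python
def service_rate(active_vms, per_vm_rate):
    """Aggregate completion rate of VMs on one VMM: per-VM rate times VM count."""
    return active_vms * per_vm_rate

def failure_rate(age, base_failure_rate):
    """Failure rate of an active VMM, assumed proportional to its age."""
    return age * base_failure_rate

def prob_failure_before_next_completion(active_vms, age, per_vm_rate, base_failure_rate):
    """Probability that the failure timer fires before the next VM completes.

    For two independent exponential timers with rates a and b,
    the first one fires first with probability a / (a + b).
    """
    fail = failure_rate(age, base_failure_rate)
    serve = service_rate(active_vms, per_vm_rate)
    total = fail + serve
    return fail / total if total > 0 else 0.0
```

Doubling the age of a VMM doubles its failure rate in this sketch, which is the sense in which an older VMM is more likely to fail.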

Timed activity

represents the repair process of VMM

i. When there is a token in place

, timed activity

is enabled and can complete the activity. Then, the number of tokens in places

and

is set to zero by the output gate

. The completion rate of timed activity

is

. The predicate and function corresponding to the input gate of failure sub-model are presented in row 9 of

Table 2, and the functions corresponding to the output gates of this sub-model are presented in rows 9 and 10 of

Table 3.

6. Performance Evaluation

In this section, the desired output measures that can be obtained using the proposed SAN model are defined, and then the proposed model is evaluated. The output measures are computed by applying the Markov reward approach [41]. In this approach, appropriate reward rates are assigned to each feasible marking of a SAN model, and then the expected accumulated reward is computed in the steady state. To this end, the proposed analytical model is solved with the Möbius tool [33]. In the following formulas,

returns the number of tokens inside place

.

The ratio of serving VMs to accepted requests is defined as the ratio of the steady-state number of VMs that are being served to the steady-state number of accepted VM requests in the cloud system. This measure is computed using Equation (1):

The other measure that is obtained by applying the proposed SAN model is the probability of VMM failure, which can be computed by the following equation:

One of the main goals of introducing rejuvenation in cloud systems is to reduce the mean number of failed VMs, which can be computed by the product of the mean number of VMs that are being served on each VMM and the probability of VMM failure in the steady state. The mean number of failed VMs can be calculated as follows:

The total power consumption of cloud servers is another measure that can be computed by the proposed model; it is the mean value of power consumed by PMs, VMMs, and VMs of the data center. It can be computed by Equation (4).

where

is the average power consumed by a switched-on PM running a VMM,

is the average power consumed by a VM, and

N denotes the number of PMs in the system.
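To make the verbal definitions of two of these measures concrete, the following Python sketch computes them from steady-state quantities. It is a hedged reading of the prose, not a transcription of Equations (3) and (4): all function and parameter names are hypothetical, and the power formula assumes that only switched-on PMs and active VMs draw power.

```python
def mean_failed_vms(mean_vms_per_vmm, p_vmm_failure):
    """Mean number of failed VMs: mean VMs served on a VMM times P(VMM failure)."""
    return mean_vms_per_vmm * p_vmm_failure

def total_power(n_pms, mean_switched_off_pms, mean_active_vms, pm_power, vm_power):
    """Mean power draw of the data center under the stated assumption.

    n_pms                 -- number of PMs in the system (N)
    mean_switched_off_pms -- steady-state mean number of switched-off PMs
    mean_active_vms       -- steady-state mean number of active VMs
    pm_power              -- average power of a switched-on PM running a VMM
    vm_power              -- average power of one VM
    """
    mean_switched_on = n_pms - mean_switched_off_pms
    return mean_switched_on * pm_power + mean_active_vms * vm_power
```

In this reading, a scheme that keeps more PMs switched off lowers the first term of the power measure, while a scheme that lowers the VMM failure probability lowers the mean number of failed VMs.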

In order to evaluate the proposed model, we assumed a system with 3 PMs and a maximum of 3 VMs per VMM. In this setting, the input parameter F′ is set to 8, which means a VMM of age 8 can be rejuvenated if other VMMs have enough capacity to accept its VMs. The parameter F is set to 10, which means a VMM of age 10 has to be rejuvenated immediately. In other words, regardless of the capacity of other VMMs, the active VMs of a VMM aged 10 are placed in the migration queue, and the VMM is rejuvenated.

The other values set for the input parameters of the proposed model are presented in Table 4. Most of the values used herein as input parameters of the proposed SAN model are in the range of the values considered in other related work [10,11,15,22,29,36,37,39,42]. In order to compare different situations, the proposed model was evaluated in three modes: (1) pure power saving, (2) pure rejuvenation, and (3) simultaneous application of rejuvenation and power saving, called power-aware rejuvenation. Modes 1 and 2 are the baselines for our main proposed scheme, which is mode 3. In mode 3, all five sub-models of Figure 2 are used. In mode 1, the rejuvenation sub-model shown in Figure 2d is omitted. In mode 2, the power saving sub-model represented in Figure 2c is removed, and the model is adjusted so that no token would be placed in place

. The results obtained from solving the proposed model in the three modes are presented in

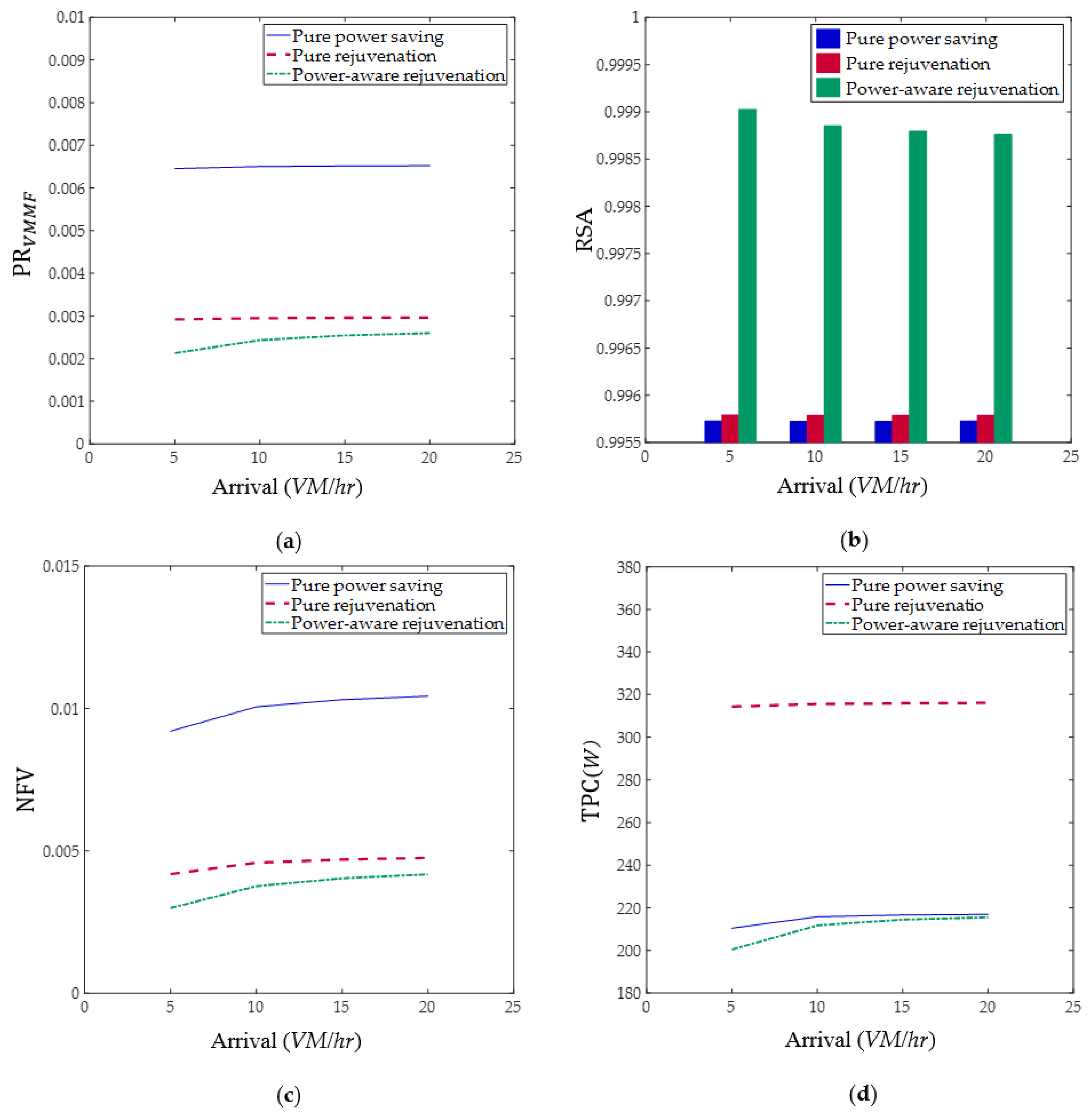

Figure 3.

Figure 3a shows the average probability of VMM failure in the proposed model in the three evaluation modes. One of the main causes of VMM failure is the increasing number of served VMs and the errors introduced due to VMM aging. With the pure rejuvenation scheme, aged VMMs are identified and rejuvenated before age-induced failures occur. Thus, the forced rejuvenation of mode 2 decreases the probability of VMM failure compared to mode 1. The power saving scheme switches off idle VMMs, which in turn resets the age of those VMMs and results in an even lower probability of VMM failure when combined with rejuvenation in mode 3.

As shown in Figure 3b, simultaneous modeling of power saving and rejuvenation schemes in mode 3 improves the availability-related measure RSA of the cloud system. Such implementation of power-aware rejuvenation involves the migration of VMs between VMMs, so the number of tokens in place

increases and the denominator of the fraction in Equation (1) increases. However, as shown in Figure 3a, pure rejuvenation decreases the average probability of VMM failure, and thereby increases the mean number of active VMMs, increasing the average number of VMs that can be served at a given time. Since

increases more than

, power-aware rejuvenation causes a significant improvement in the ratio of serving VMs to accepted requests.

Figure 3c shows the effect of applying rejuvenation and power-awareness schemes to cloud systems on the mean number of failed VMs. The greater the average probability of VMM failure, the greater the number of failed VMs. Consequently, in line with the VMM failure probabilities shown in Figure 3a, mode 3 performed the best and mode 1 the worst.

In Figure 3d, the impact of software rejuvenation and power-awareness on the power consumption of the cloud system is considered. As indicated in Equation (4), the power consumption is directly related to the number of switched-on PMs and active VMs in a data center. In the pure rejuvenation mode, mode 2, all PMs are always on and therefore the power consumption in this mode is the greatest compared to the power consumed in modes 1 and 3, which are power-aware. In mode 3, newly rejuvenated VMMs are free of running VMs. Thus, they are more likely to be switched off by the power saving scheme. Therefore, the total power consumption of mode 3 is less than that of mode 1. Figure 3d confirms that by applying rejuvenation and power saving simultaneously, the number of active PMs decreases compared to the situation where rejuvenation is not applied.