The Design and Engineering of a Fall and Near-Fall Detection Electronic Textile

Abstract

1. Introduction

2. Materials and Methods

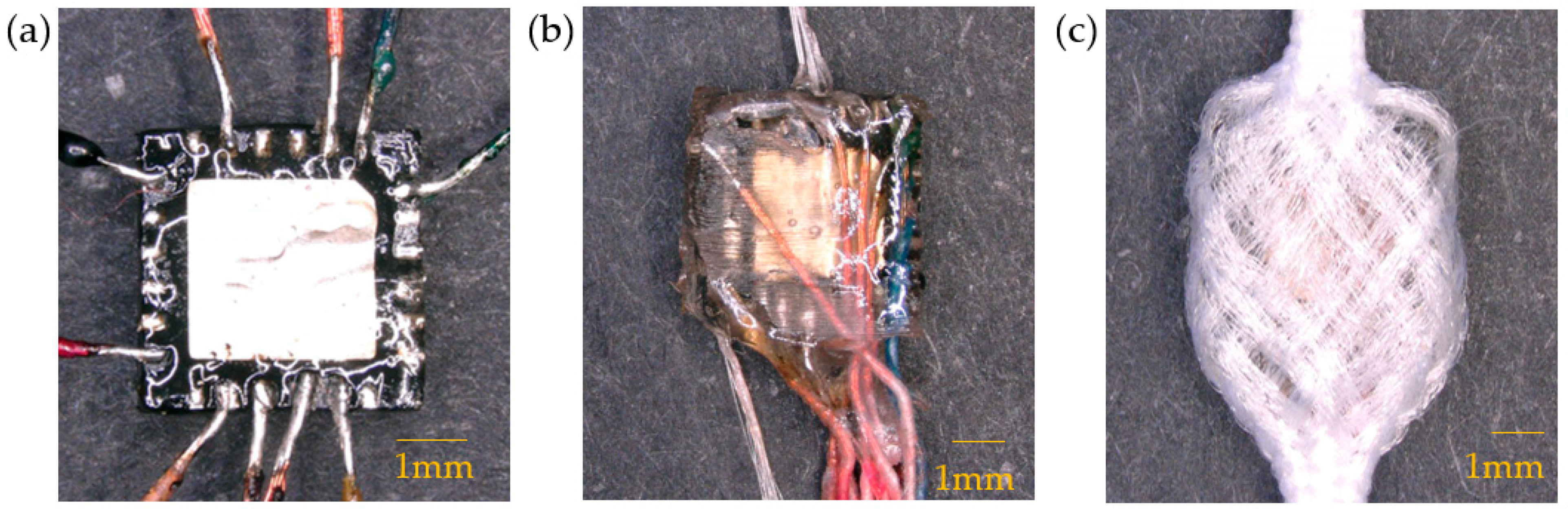

2.1. Hardware and Prototype

2.2. Testing Protocol

2.2.1. Participants

2.2.2. Activities

2.3. Data Analysis and Machine Learning Algorithm

3. Results

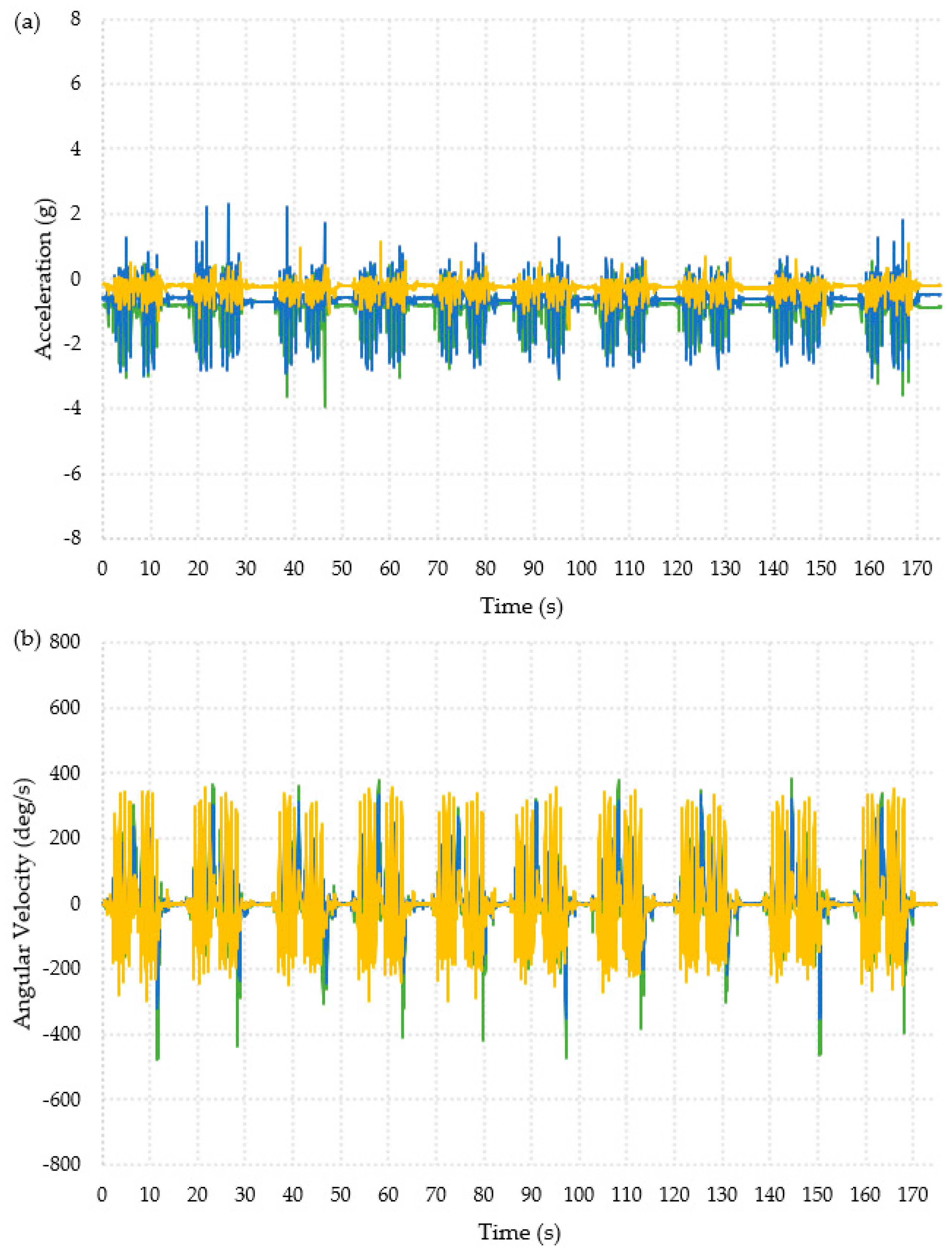

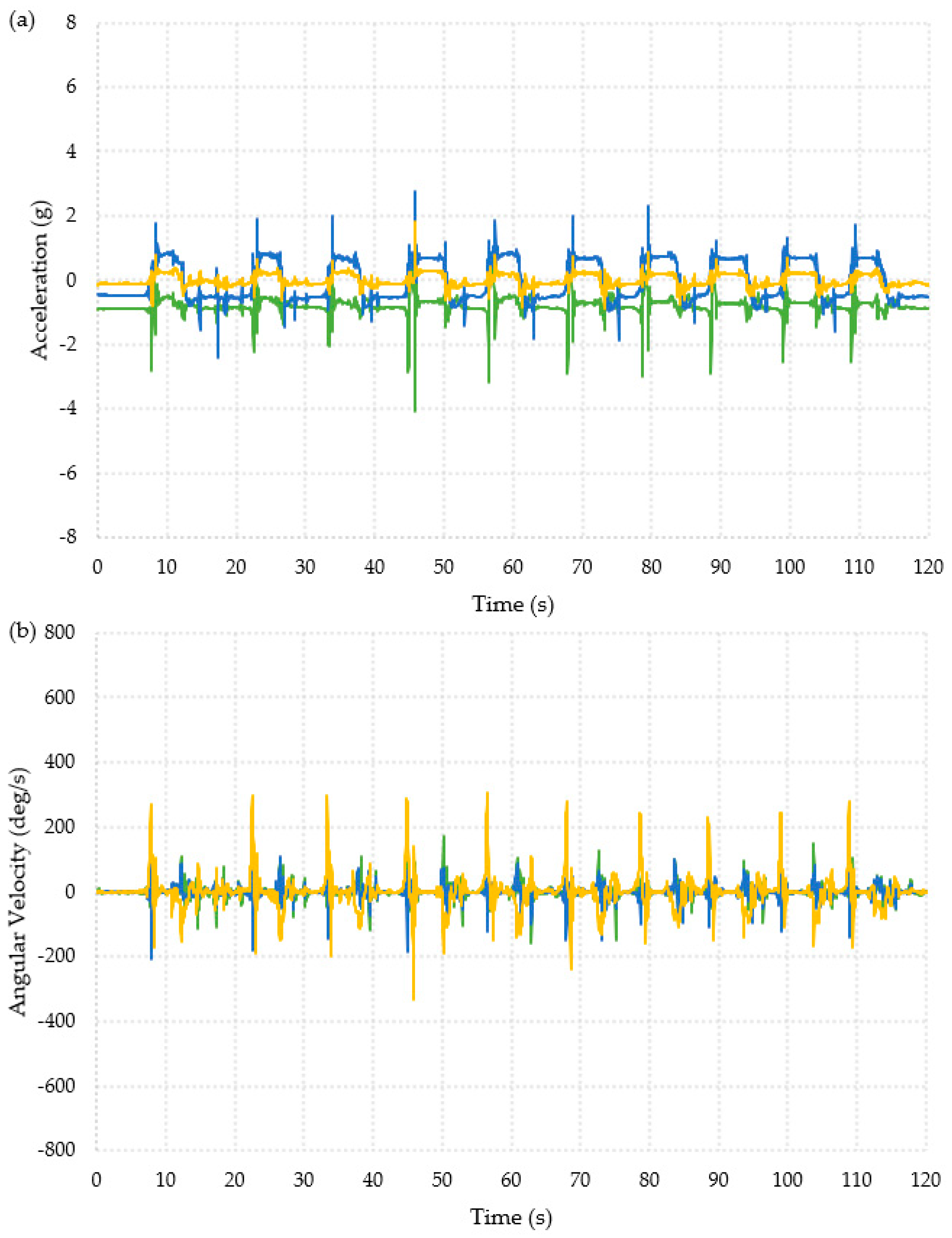

3.1. Visual Representation of the Data

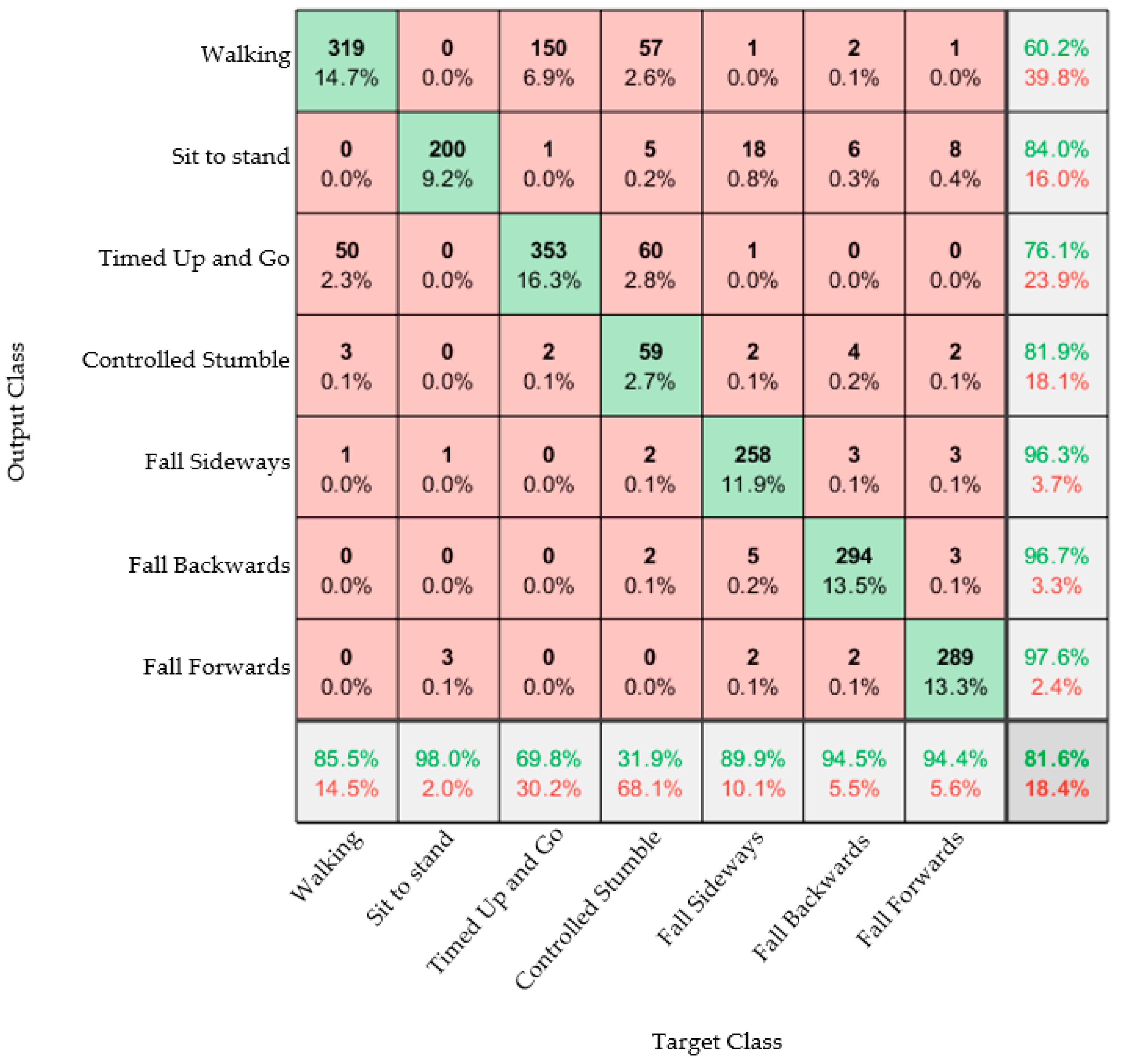

3.2. Using a Machine Learning Algorithm to Identify Falls

3.3. Controlled Stumble Data

3.4. Feedback on Sock Design

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


, y-axis =

, y-axis =  , z-axis =

, z-axis =  . (a) Acceleration. (b) Angular velocity.

. (a) Acceleration. (b) Angular velocity.

, y-axis =

, y-axis =  , z-axis =

, z-axis =  . (a) Acceleration. (b) Angular velocity.

. (a) Acceleration. (b) Angular velocity.

, y-axis =

, y-axis =  , z-axis =

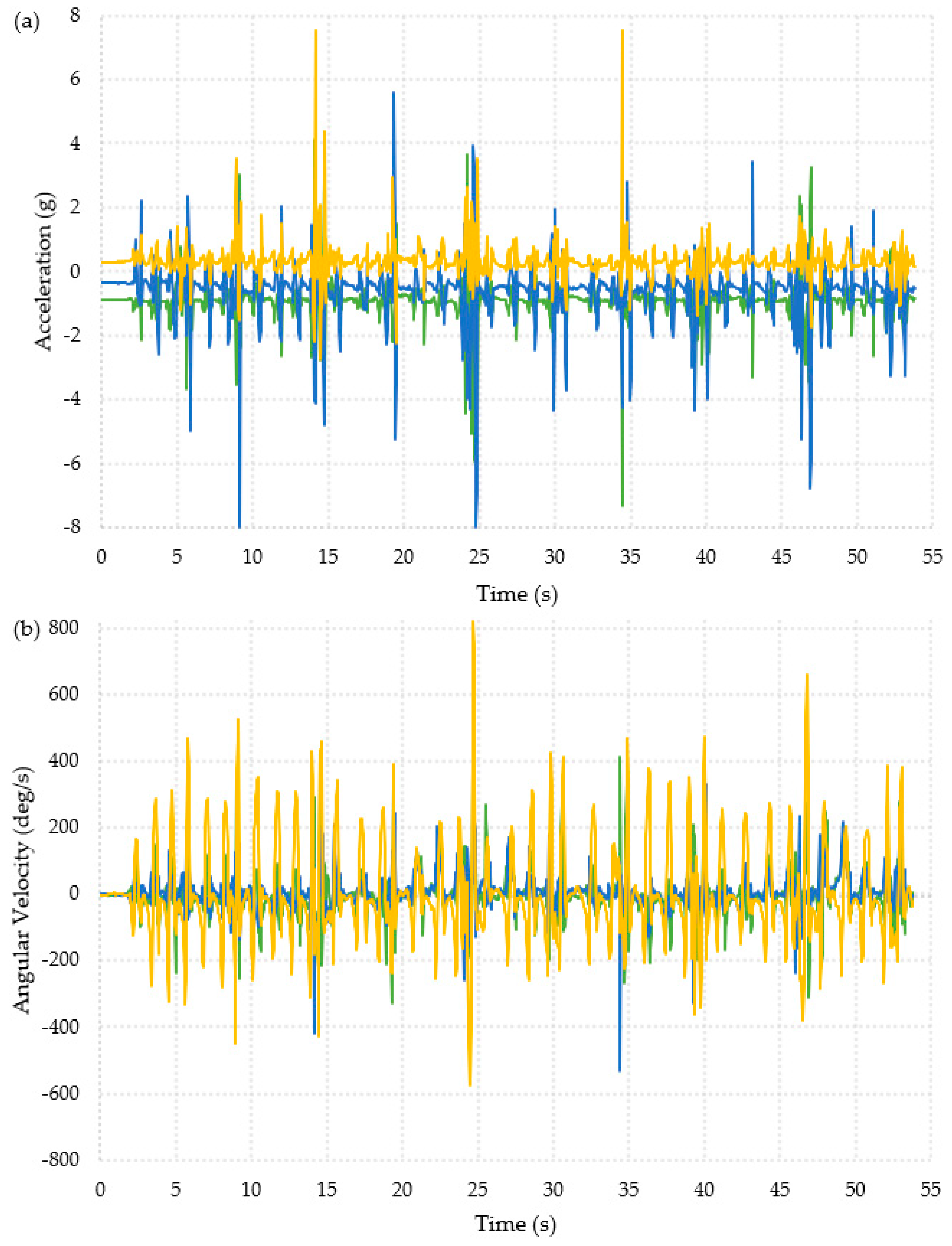

, z-axis =  . Ten repeated falls are shown. (a) Acceleration. (b) Angular velocity.

. Ten repeated falls are shown. (a) Acceleration. (b) Angular velocity.

, y-axis =

, y-axis =  , z-axis =

, z-axis =  . Ten repeated falls are shown. (a) Acceleration. (b) Angular velocity.

. Ten repeated falls are shown. (a) Acceleration. (b) Angular velocity.

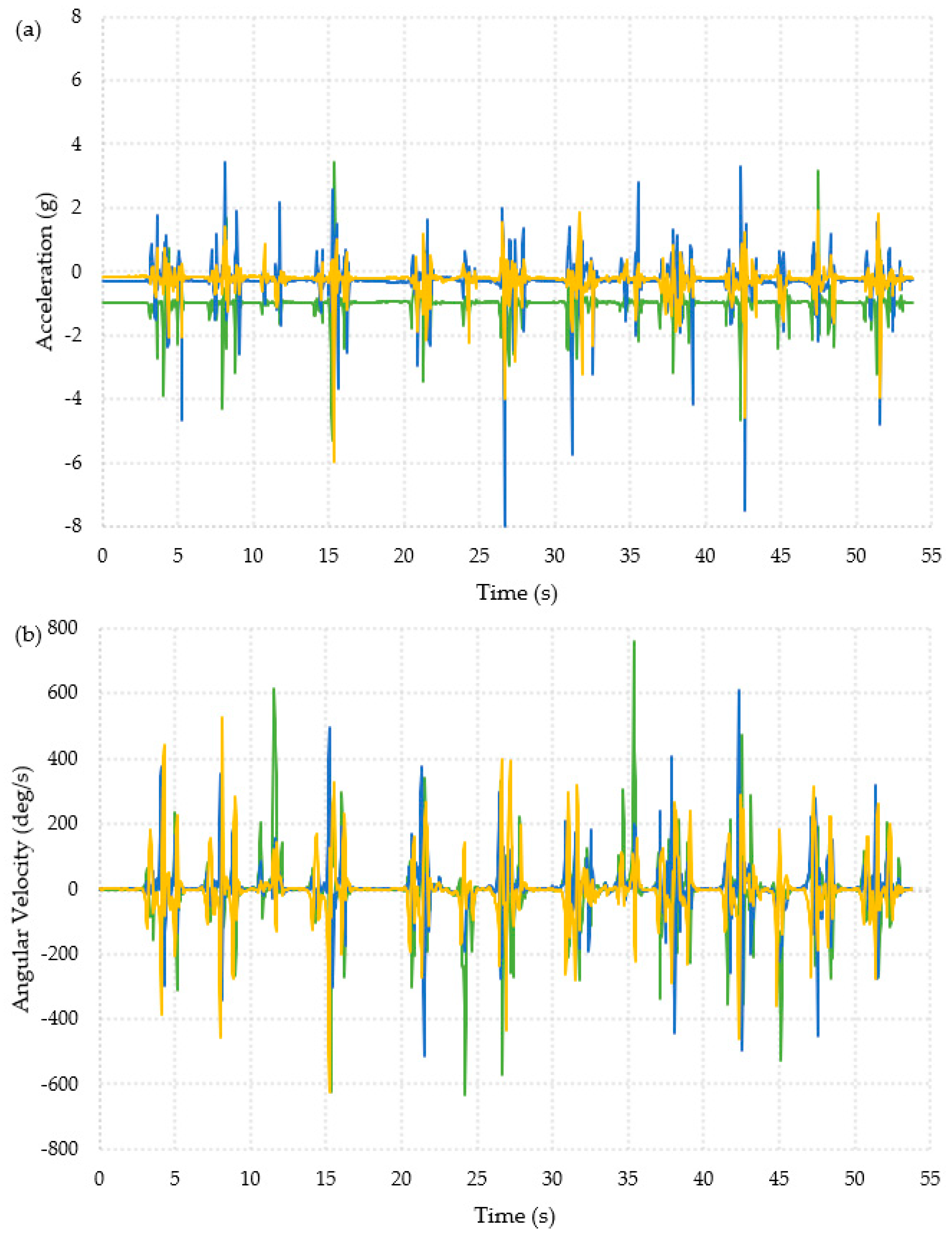

, y-axis =

, y-axis =  , z-axis =

, z-axis =  . Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

. Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

, y-axis =

, y-axis =  , z-axis =

, z-axis =  . Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

. Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

, y-axis =

, y-axis =  , z-axis =

, z-axis =  . Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

. Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

, y-axis =

, y-axis =  , z-axis =

, z-axis =  . Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

. Ten repeated stumbles are shown. (a) Acceleration. (b) Angular velocity.

| Layer |
|---|
| Sequence input layer |
| Dropout layer |
| BiLSTM layer (200 nodes) |
| Dropout layer |
| ReLU layer |
| Fully connected layer |
| Softmax layer |
| Output layer |
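The layer list above can be read as a shape walk-through. The sketch below is only illustrative and is not the authors' implementation: it traces the feature width and learnable parameter count through the same eight layers. It assumes that "200 nodes" means 200 hidden units per direction, that the input has six channels (three acceleration axes plus three angular velocity axes from one foot), and that there are two output classes. None of these values is stated in the table, and `trace_network` and `LayerInfo` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LayerInfo:
    name: str
    out_features: int  # feature width leaving the layer
    params: int        # learnable parameters in the layer


def trace_network(num_inputs: int, num_hidden: int, num_classes: int) -> list[LayerInfo]:
    """Walk the layer stack from the table, reporting width and parameters."""
    layers = [
        LayerInfo("Sequence input layer", num_inputs, 0),
        LayerInfo("Dropout layer", num_inputs, 0),  # dropout rate not given in the table
    ]

    # One LSTM direction has 4 gates, each with input weights (H x I),
    # recurrent weights (H x H) and one bias vector (H). Some frameworks
    # store two bias vectors per gate, which raises this count slightly.
    per_direction = 4 * num_hidden * (num_inputs + num_hidden + 1)
    # A bidirectional layer runs a forward and a backward LSTM and
    # concatenates their outputs, so the width doubles.
    bilstm_width = 2 * num_hidden
    layers.append(LayerInfo("BiLSTM layer", bilstm_width, 2 * per_direction))

    layers.append(LayerInfo("Dropout layer", bilstm_width, 0))
    layers.append(LayerInfo("ReLU layer", bilstm_width, 0))

    # Fully connected: weight matrix (C x 2H) plus bias (C).
    fc_params = bilstm_width * num_classes + num_classes
    layers.append(LayerInfo("Fully connected layer", num_classes, fc_params))
    layers.append(LayerInfo("Softmax layer", num_classes, 0))
    layers.append(LayerInfo("Output layer", num_classes, 0))
    return layers


if __name__ == "__main__":
    # Assumed values: 6 input channels, 200 hidden units, 2 classes.
    for layer in trace_network(num_inputs=6, num_hidden=200, num_classes=2):
        print(f"{layer.name:<24} width={layer.out_features:<4} params={layer.params}")
```

Under these assumptions, almost all learnable parameters sit in the BiLSTM layer, and the fully connected layer only maps the concatenated forward and backward states to class scores.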

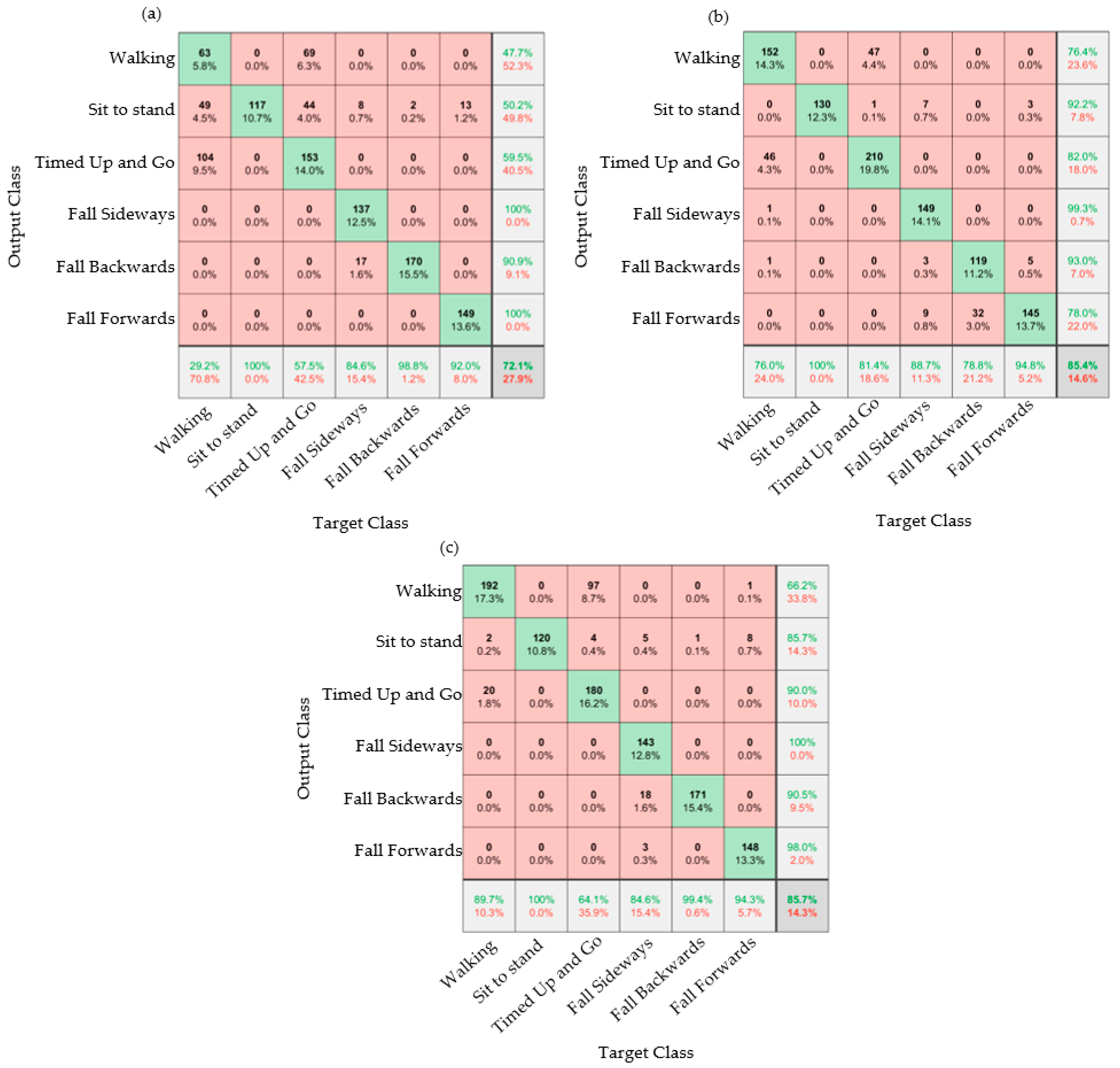

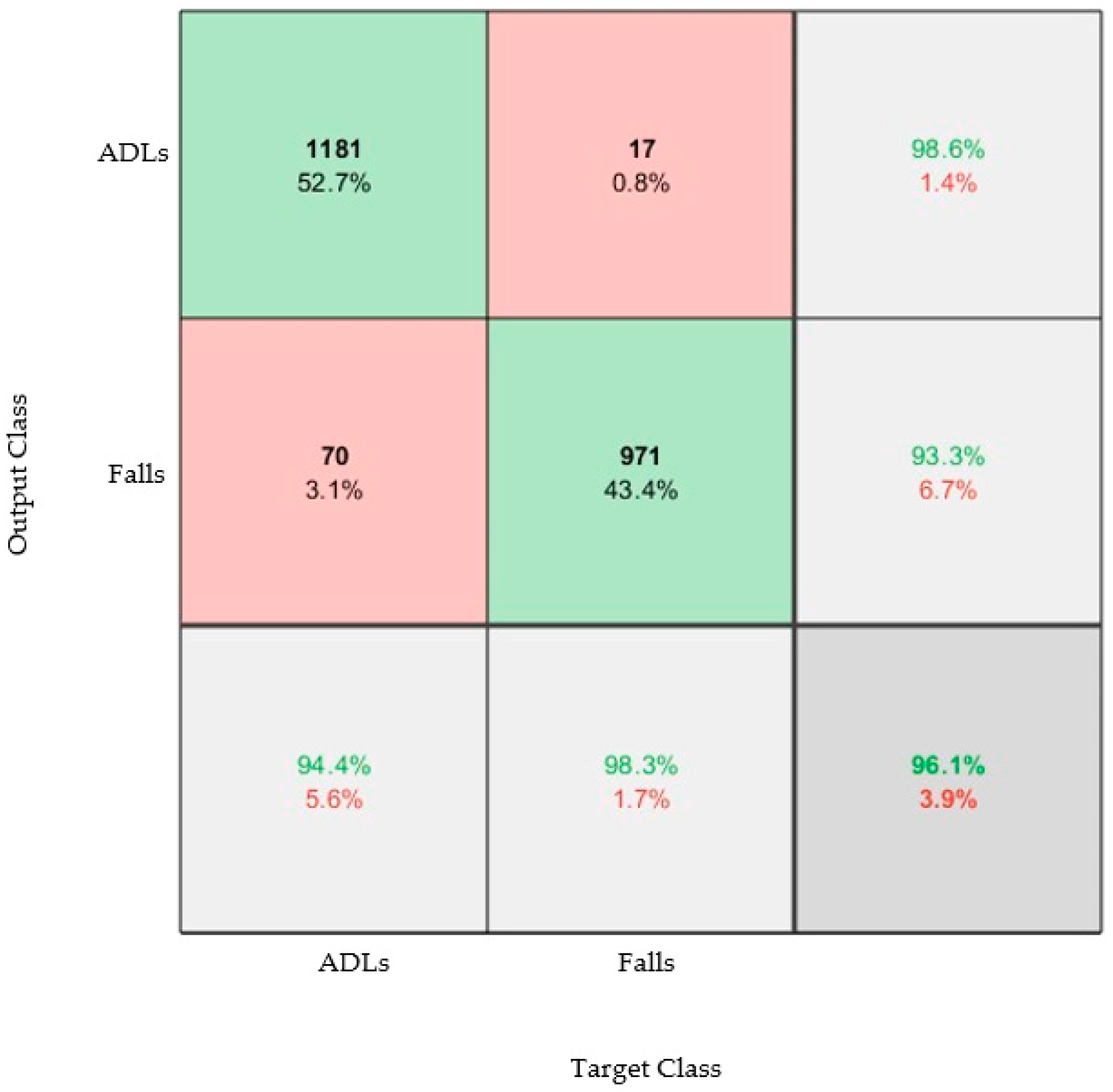

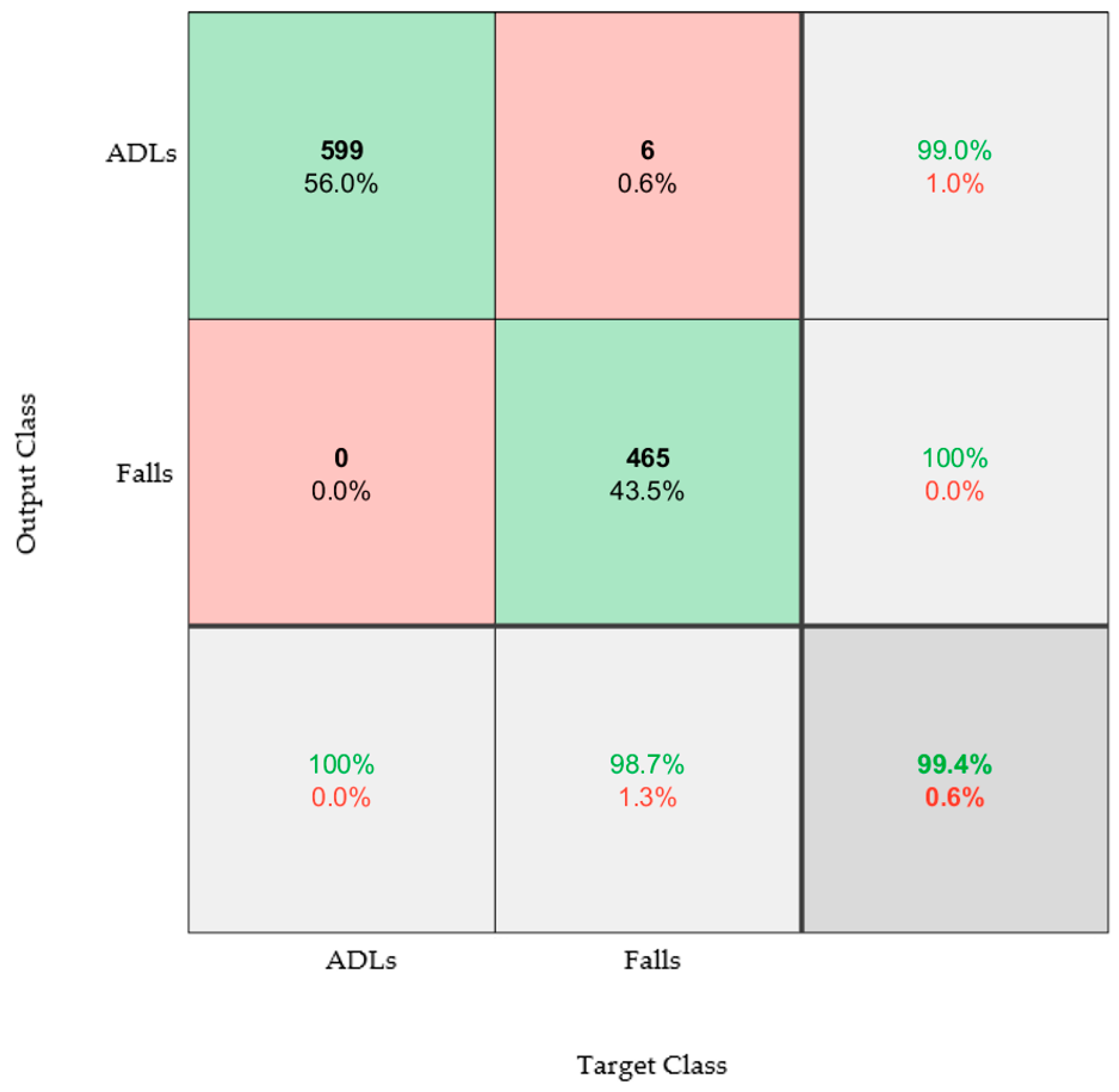

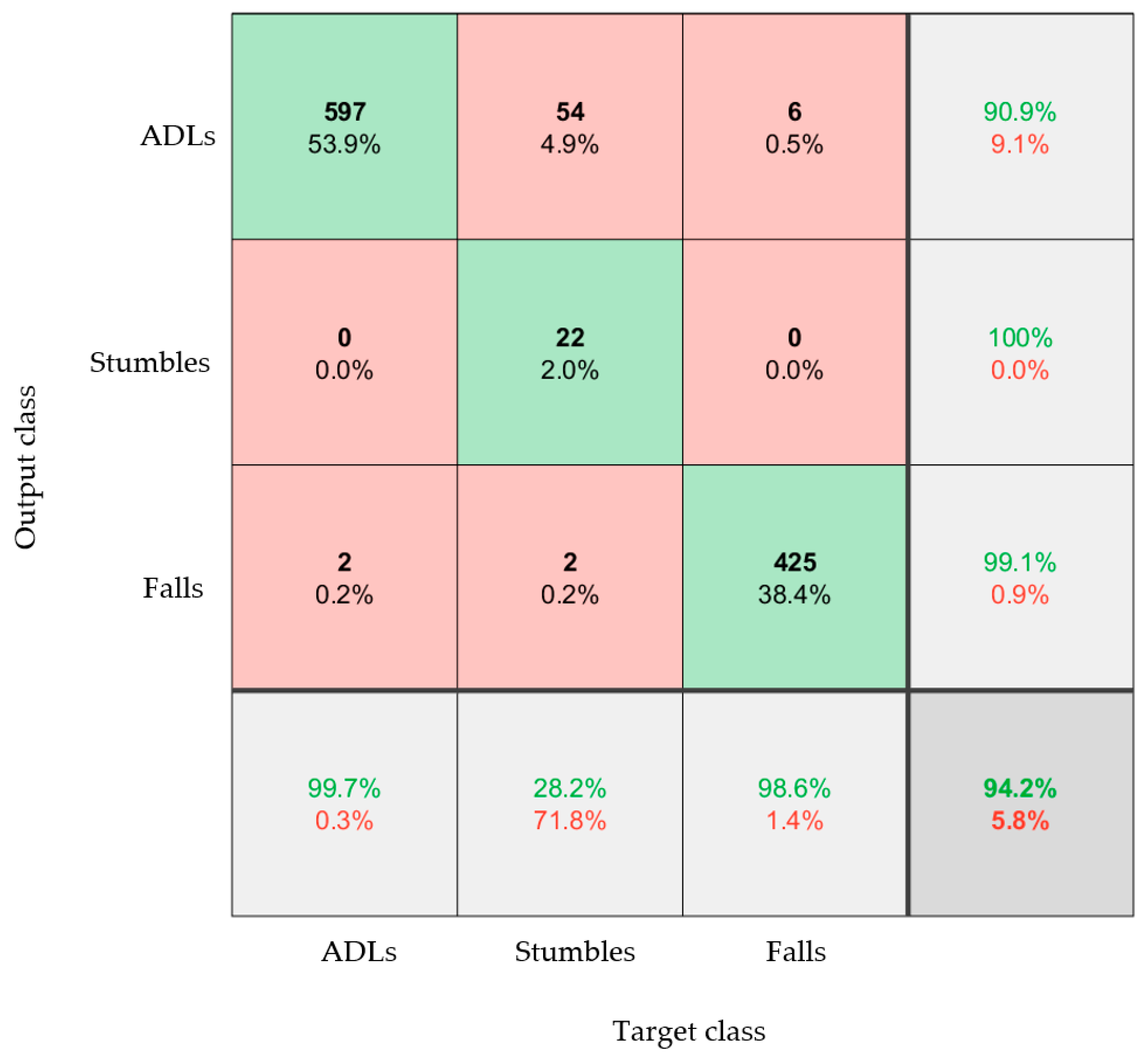

| Input data | Right Foot | Left Foot | Right and Left Foot |
|---|---|---|---|
| Acceleration data | 72.1% | 73.5% | 73.2% |
| Angular velocity data | 85.4% | 87.9% | 83.9% |
| Combined acceleration and angular velocity | 85.7% | 71.6% | 81.2% |
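To compare the sensor inputs in the table above, the short sketch below picks the highest percentage in each column. It assumes the percentages are comparable scores such as classification accuracy, which the table itself does not label. `RESULTS` and `best_input_per_placement` are hypothetical names introduced for illustration.

```python
# Percentages copied from the table: rows are sensor inputs,
# columns are the foot (or feet) the data came from.
RESULTS = {
    "Acceleration data": {
        "Right Foot": 72.1, "Left Foot": 73.5, "Right and Left Foot": 73.2,
    },
    "Angular velocity data": {
        "Right Foot": 85.4, "Left Foot": 87.9, "Right and Left Foot": 83.9,
    },
    "Combined acceleration and angular velocity": {
        "Right Foot": 85.7, "Left Foot": 71.6, "Right and Left Foot": 81.2,
    },
}


def best_input_per_placement(results):
    """Return {placement: (input_name, percentage)} for the top value per column."""
    placements = next(iter(results.values())).keys()
    best = {}
    for placement in placements:
        # Pick the row whose value in this column is largest.
        name = max(results, key=lambda row: results[row][placement])
        best[placement] = (name, results[name][placement])
    return best


if __name__ == "__main__":
    for placement, (name, value) in best_input_per_placement(RESULTS).items():
        print(f"{placement}: {name} ({value}%)")
```

With the table's values, angular velocity alone gives the highest figure for the left foot and for both feet together, while the combined input is marginally higher for the right foot (85.7% against 85.4%).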

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rahemtulla, Z.; Turner, A.; Oliveira, C.; Kaner, J.; Dias, T.; Hughes-Riley, T. The Design and Engineering of a Fall and Near-Fall Detection Electronic Textile. Materials 2023, 16, 1920. https://doi.org/10.3390/ma16051920
