Artificial Intelligence Algorithms for Hybrid Electric Powertrain System Control: A Review

Abstract

1. Introduction

2. Architectures of Hybrid Electric Powertrain Systems

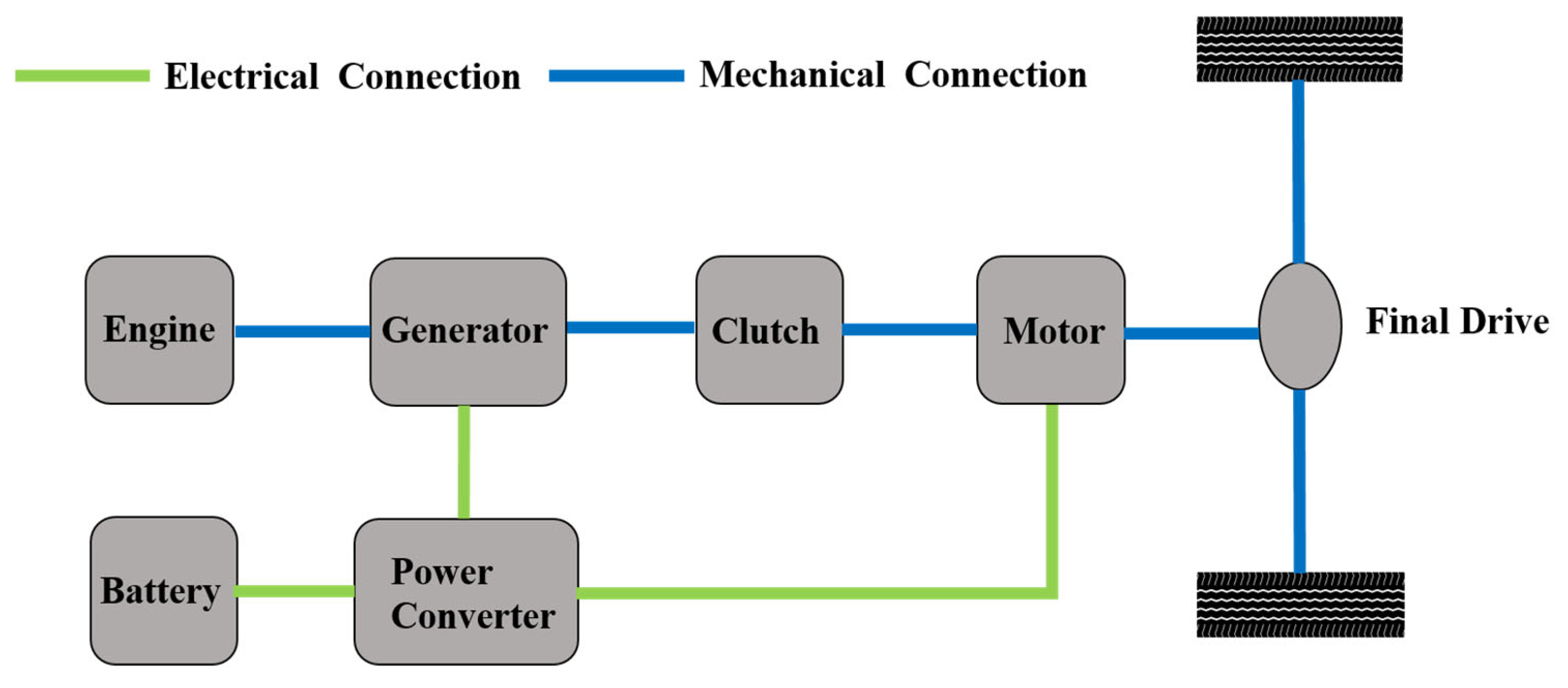

2.1. Series Hybrid Electric Powertrain Systems

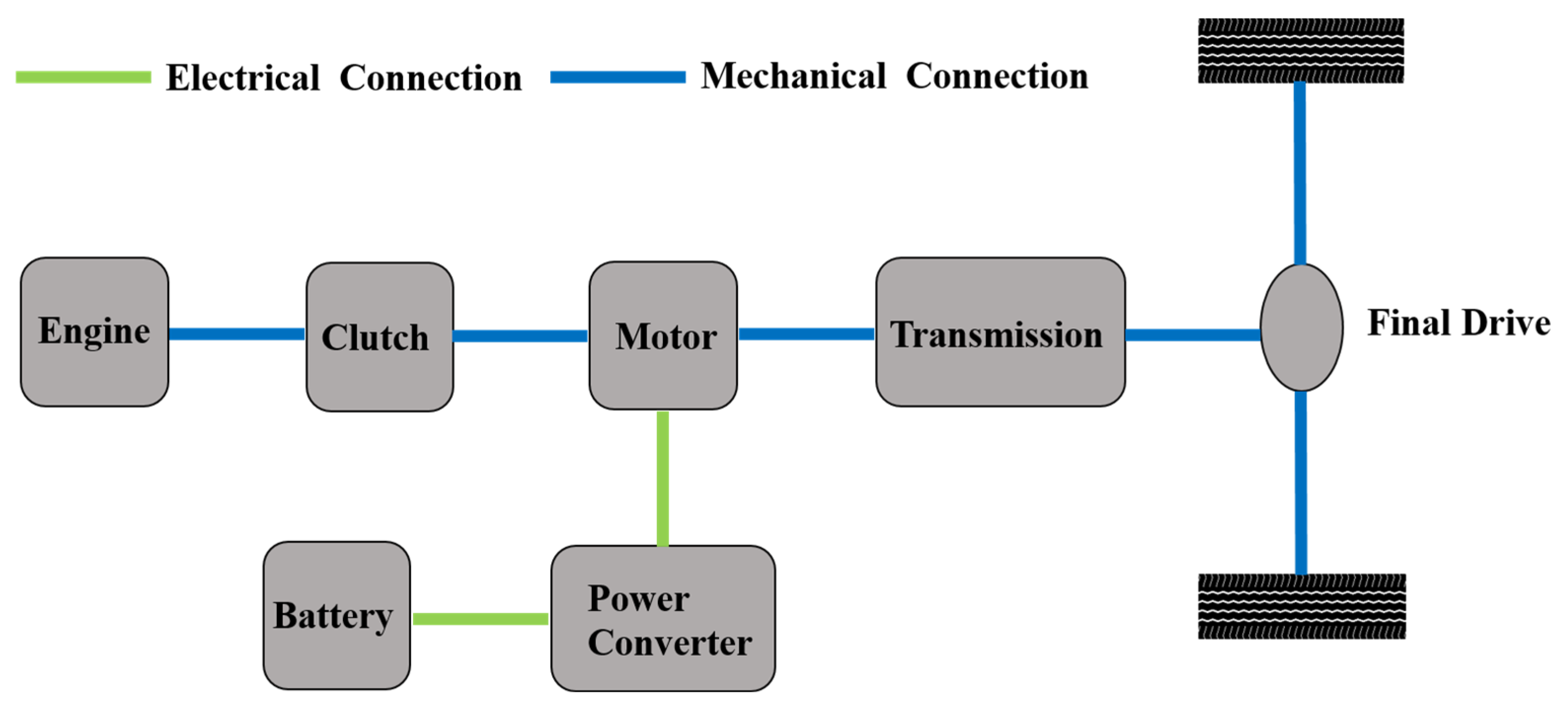

2.2. Parallel Hybrid Electric Powertrain Systems

2.3. Series–Parallel Hybrid Electric Powertrain Systems

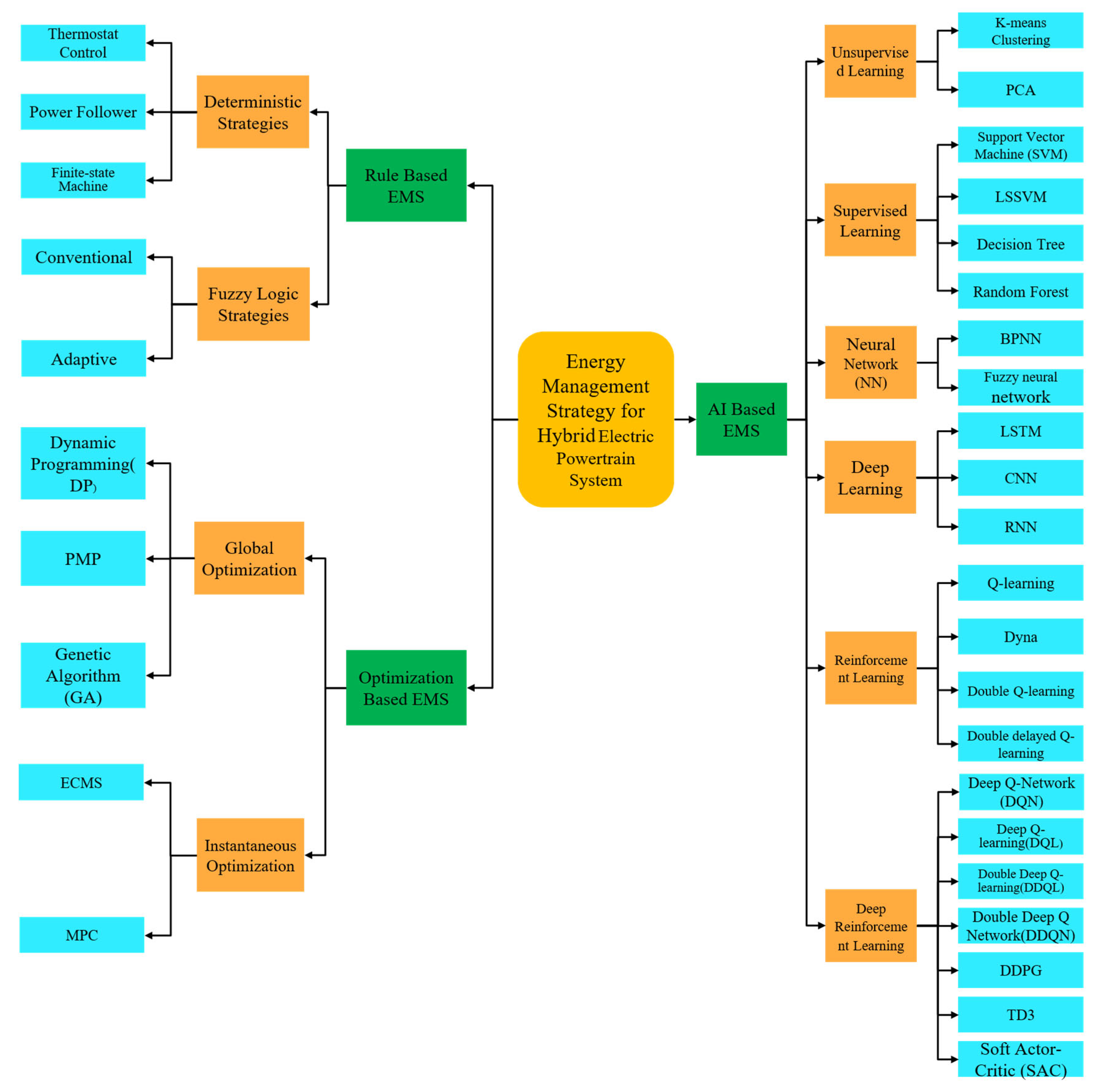

3. Classification of Energy Management Strategies

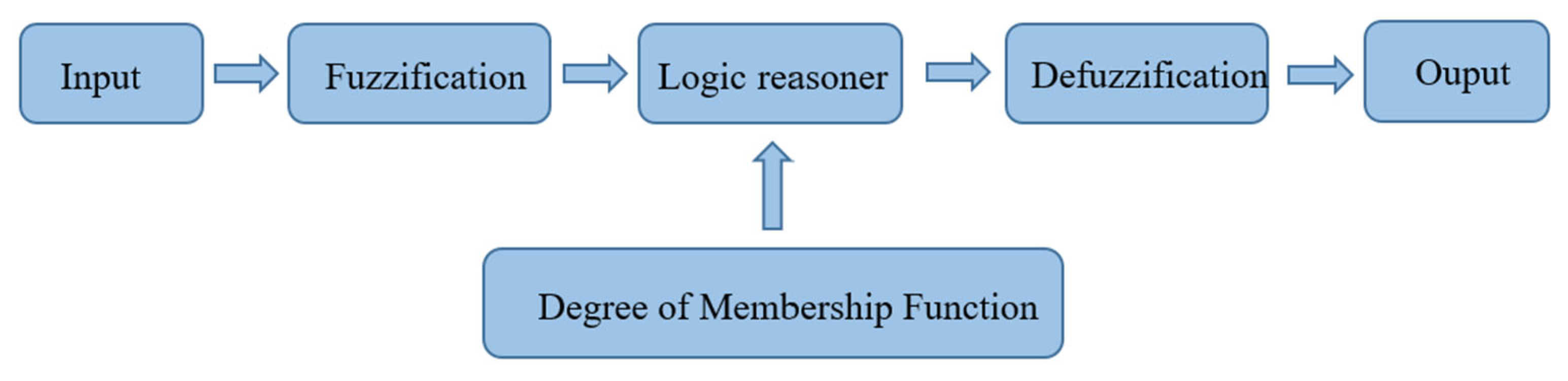

4. Rule-Based Energy Management Strategy

5. Energy Management Strategy Based on Optimization

6. Artificial Intelligence Algorithms

6.1. Unsupervised Learning

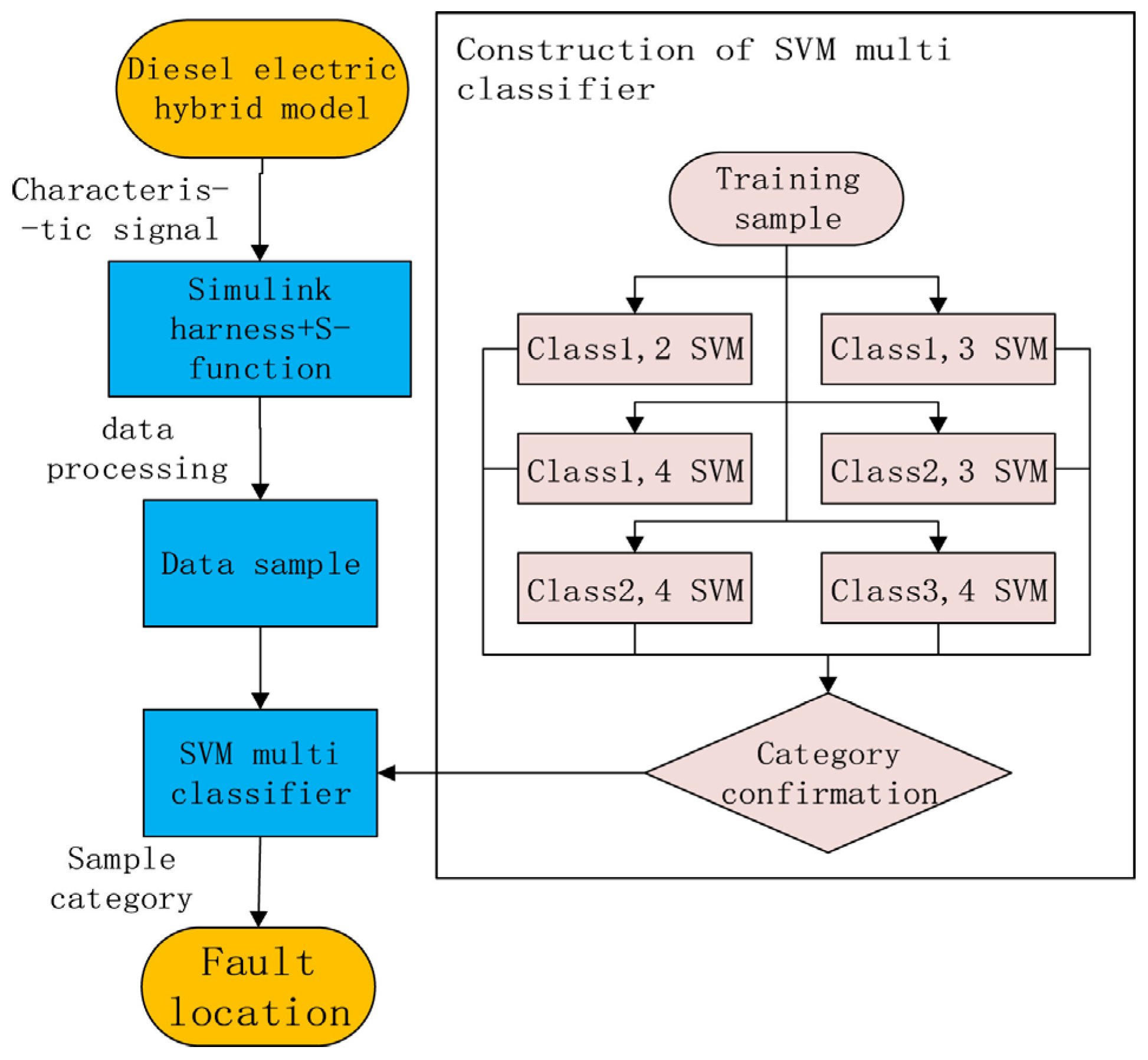

6.2. Supervised Learning

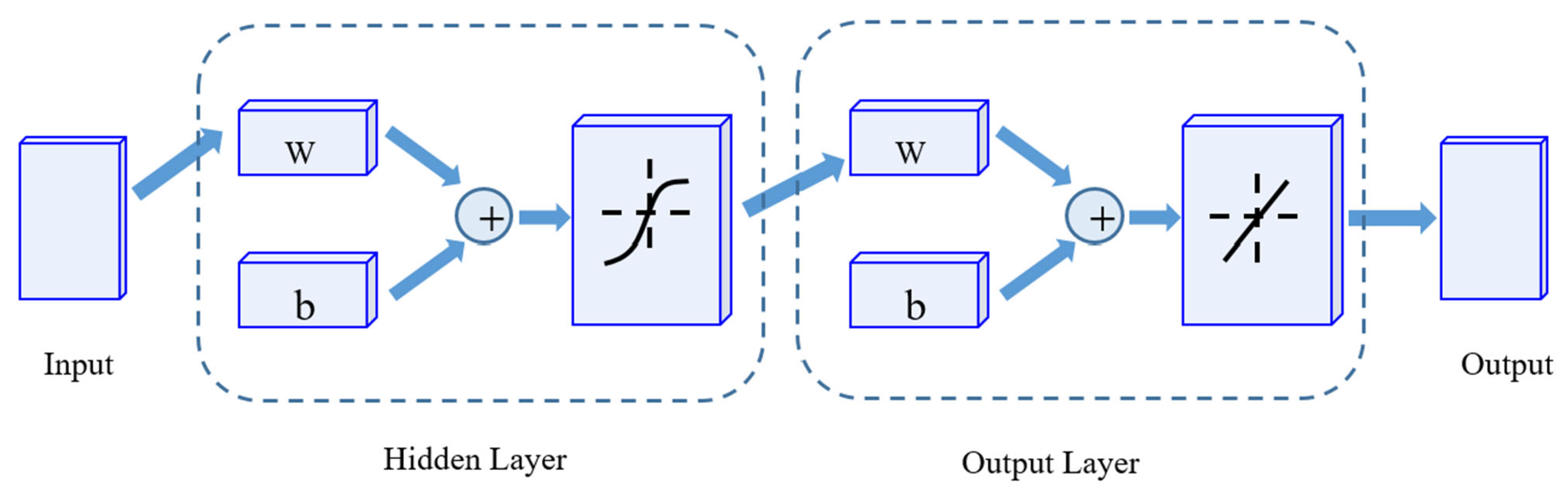

6.3. Neural Networks

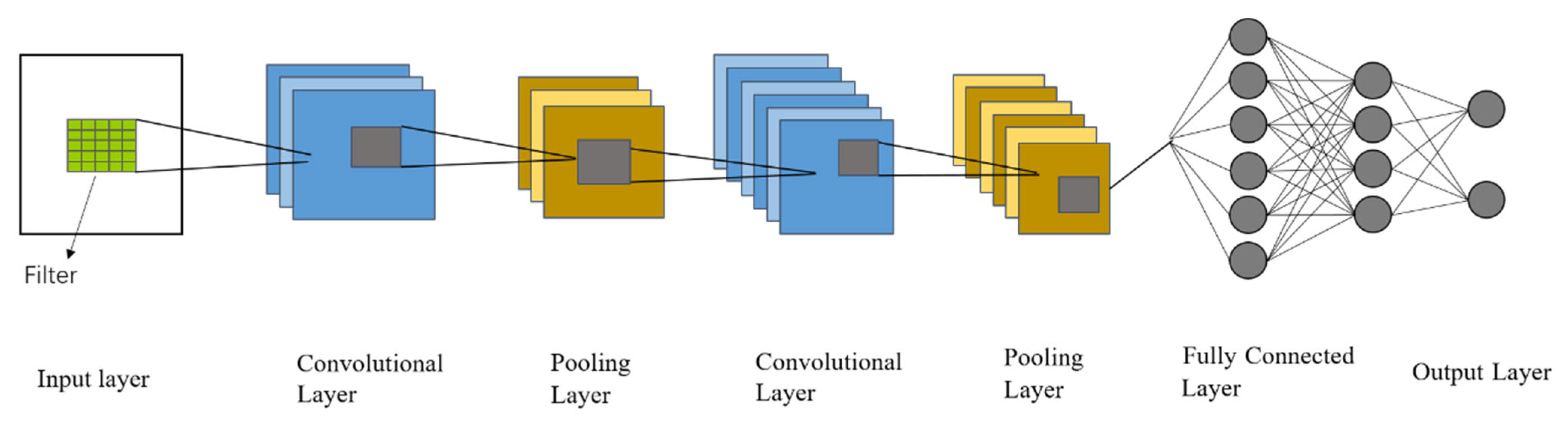

6.4. Deep Learning

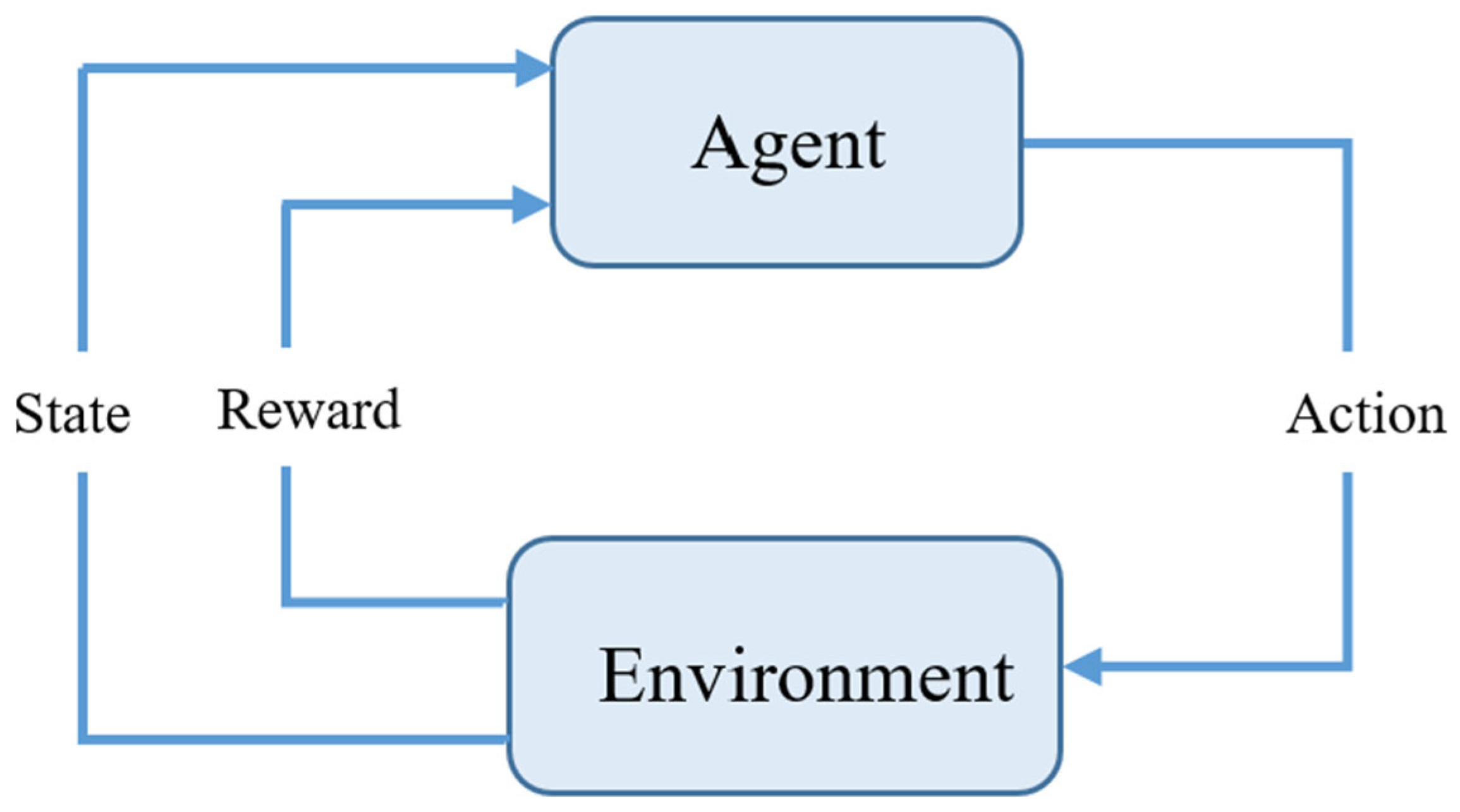

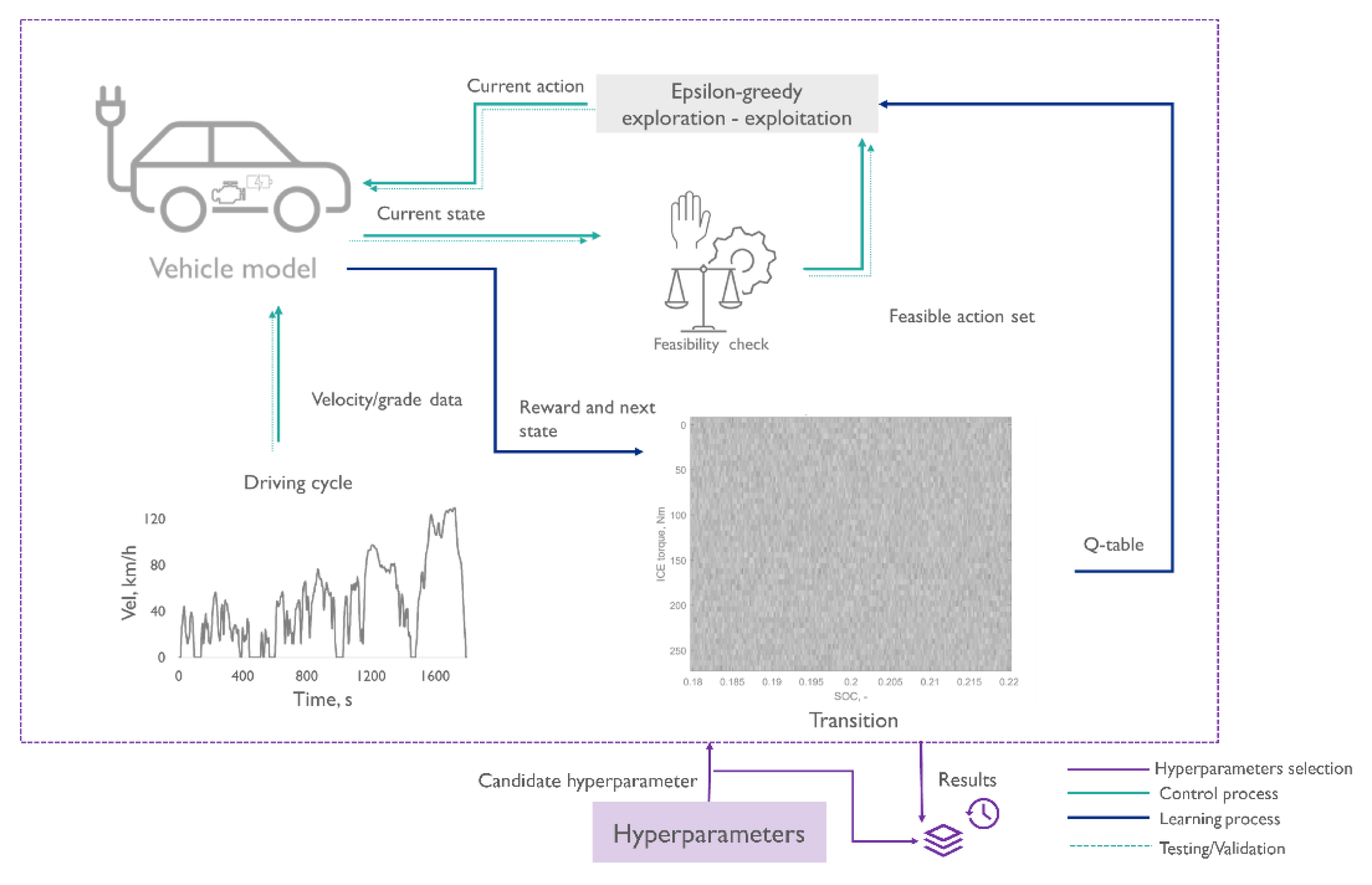

6.5. Reinforcement Learning

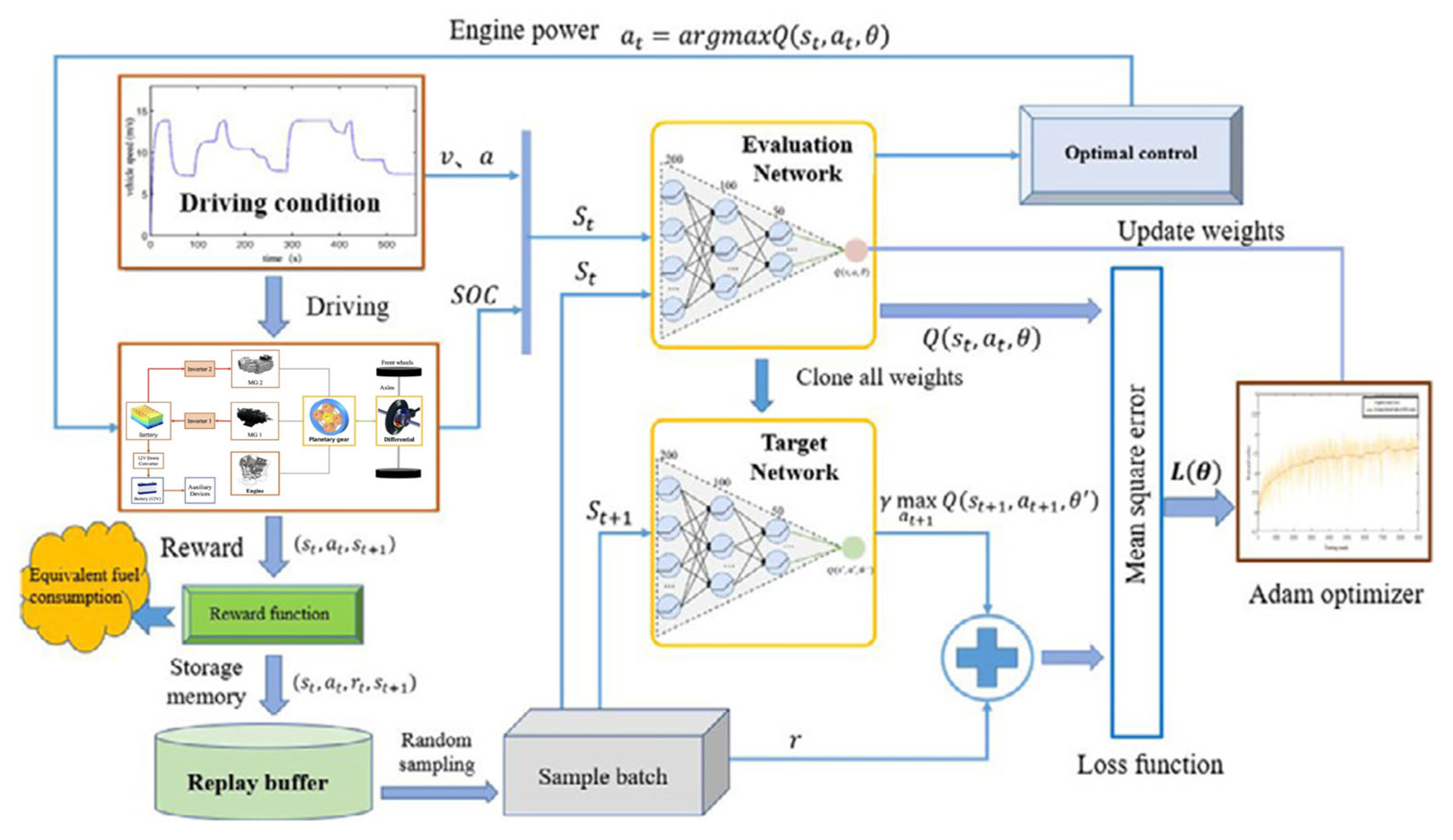

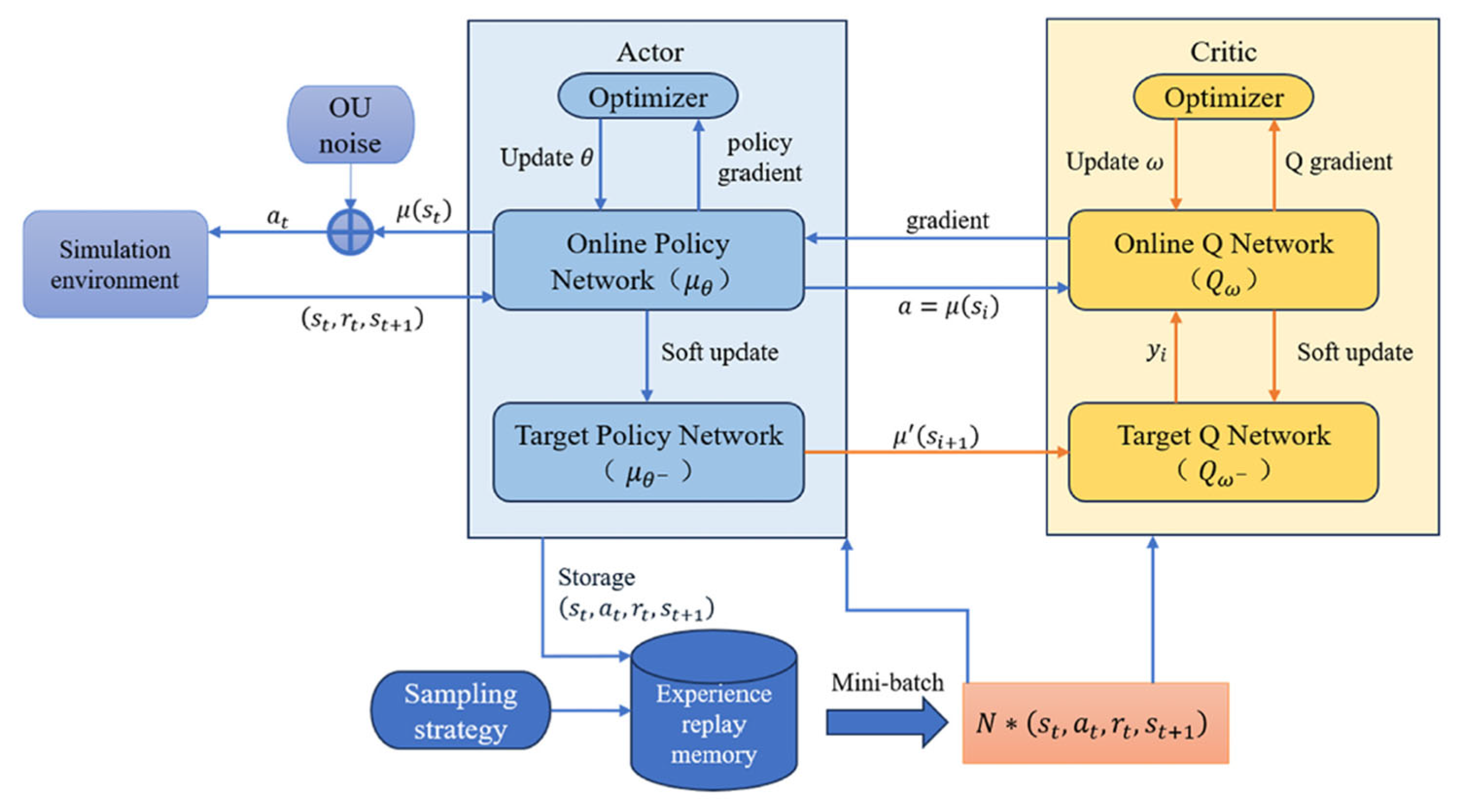

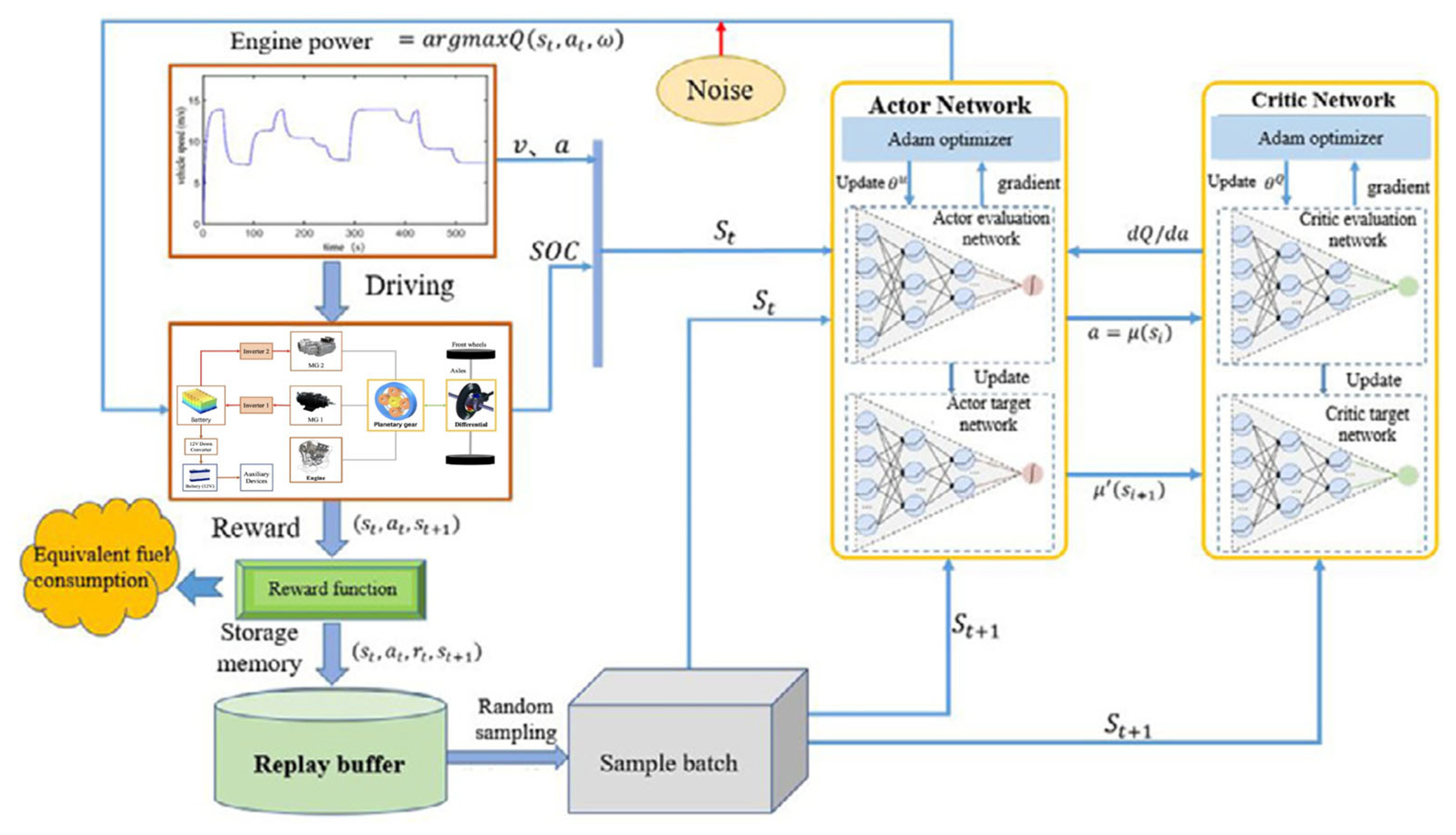

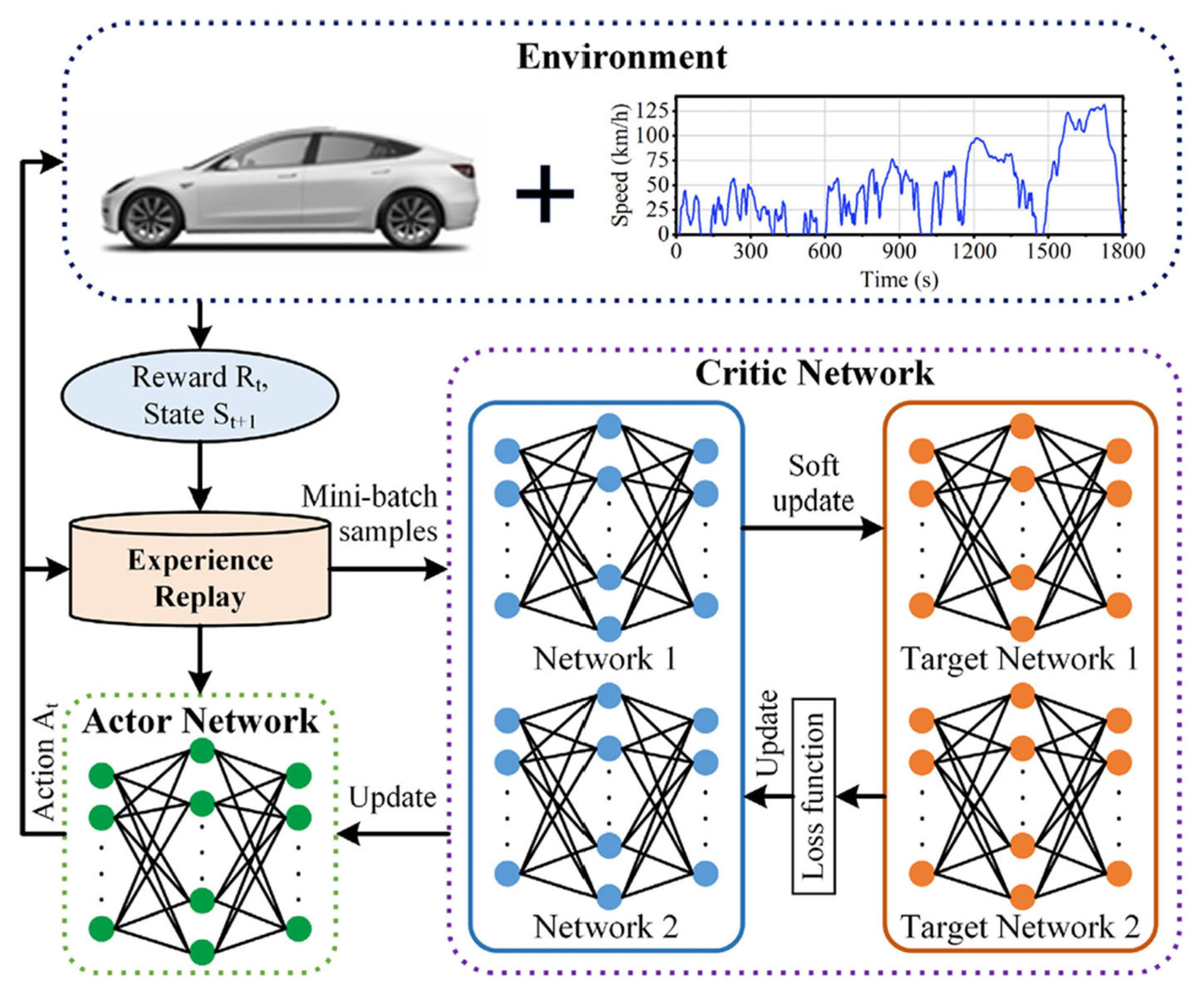

6.6. Deep Reinforcement Learning

7. Summary and Outlook

Author Contributions

Funding

Conflicts of Interest

References

- Jain, H. From pollution to progress: Groundbreaking advances in clean technology unveiled. Innov. Green Dev. 2024, 3, 100143. [Google Scholar] [CrossRef]

- Abbasi, K.R.; Zhang, Q.; Ozturk, I.; Alvarado, R.; Musa, M. Energy transition, fossil fuels, and green innovations: Paving the way to achieving sustainable development goals in the United States. Gondwana Res. 2024, 130, 326–341. [Google Scholar] [CrossRef]

- Yuan, X.; Liu, X.; Zuo, J. The development of new energy vehicles for a sustainable future: A review. Renew. Sustain. Energy Rev. 2015, 42, 298–305. [Google Scholar] [CrossRef]

- Khalatbarisoltani, A.; Zhou, H.; Tang, X.; Kandidayeni, M.; Boulon, L.; Hu, X. Energy management strategies for fuel cell vehicles: A comprehensive review of the latest progress in modeling, strategies, and future prospects. IEEE Trans. Intell. Transp. Syst. 2023, 25, 14–32. [Google Scholar] [CrossRef]

- Yi, C.; Epureanu, B.I.; Hong, S.K.; Ge, T.; Yang, X.G. Modeling, control, and performance of a novel architecture of hybrid electric powertrain system. Appl. Energy 2016, 178, 454–467. [Google Scholar] [CrossRef]

- Hayes, J.G.; Goodarzi, G.A. Electric powertrain: Energy systems, power electronics and drives for hybrid, electric and fuel cell vehicles. IEEE Aerosp. Electron. Syst. Mag. 2018, 34, 46–47. [Google Scholar] [CrossRef]

- Hannan, M.A.; Azidin, F.A.; Mohamed, A. Hybrid electric vehicles and their challenges: A review. Renew. Sustain. Energy Rev. 2014, 29, 135–150. [Google Scholar] [CrossRef]

- Martinez, C.M.; Hu, X.; Cao, D.; Velenis, E.; Gao, B.; Wellers, M. Energy management in plug-in hybrid electric vehicles: Recent progress and a connected vehicles perspective. IEEE Trans. Veh. Technol. 2016, 66, 4534–4549. [Google Scholar] [CrossRef]

- Stempien, J.P.; Chan, S.H. Comparative study of fuel cell, battery and hybrid buses for renewable energy constrained areas. J. Power Sources 2017, 340, 347–355. [Google Scholar] [CrossRef]

- Sabri, M.F.M.; Danapalasingam, K.A.; Rahmat, M.F. A review on hybrid electric vehicles architecture and energy management strategies. Renew. Sustain. Energy Rev. 2016, 53, 1433–1442. [Google Scholar] [CrossRef]

- Singh, K.V.; Bansal, H.O.; Singh, D. A comprehensive review on hybrid electric vehicles: Architectures and components. J. Mod. Transp. 2019, 27, 77–107. [Google Scholar] [CrossRef]

- Ahmadi, S.; Bathaee, S.M.T.; Hosseinpour, A.H. Improving fuel economy and performance of a fuel-cell hybrid electric vehicle (fuel-cell, battery, and ultra-capacitor) using optimized energy management strategy. Energy Convers. Manag. 2018, 160, 74–84. [Google Scholar] [CrossRef]

- Zhang, F.; Hu, X.; Langari, R.; Cao, D. Energy management strategies of connected HEVs and PHEVs: Recent progress and outlook. Prog. Energy Combust. Sci. 2019, 73, 235–256. [Google Scholar] [CrossRef]

- Lü, X.; Li, S.; He, X.; Xie, C.; He, S.; Xu, Y.; Fang, J.; Zhang, M.; Yang, X. Hybrid electric vehicles: A review of energy management strategies based on model predictive control. J. Energy Storage 2022, 56, 106112. [Google Scholar] [CrossRef]

- Tran, D.D.; Vafaeipour, M.; El Baghdadi, M.; Barrero, R.; Van Mierlo, J.; Hegazy, O. Thorough state-of-the-art analysis of electric and hybrid vehicle powertrains: Topologies and integrated energy management strategies. Renew. Sustain. Energy Rev. 2020, 119, 109596. [Google Scholar] [CrossRef]

- Jui, J.J.; Ahmad, M.A.; Molla, M.I.; Rashid, M.I.M. Optimal energy management strategies for hybrid electric vehicles: A recent survey of machine learning approaches. J. Eng. Res. 2024, 12, 454–467. [Google Scholar] [CrossRef]

- Denis, N.; Dubois, M.R.; Desrochers, A. Fuzzy-based blended control for the energy management of a parallel plug-in hybrid electric vehicle. IET Intell. Transp. Syst. 2015, 9, 30–37. [Google Scholar] [CrossRef]

- Banvait, H.; Anwar, S.; Chen, Y. A rule-based energy management strategy for plug-in hybrid electric vehicle (PHEV). In Proceedings of the 2009 American Control Conference, St. Louis, MO, USA, 10–12 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 3938–3943. [Google Scholar]

- Xie, S.; He, H.; Peng, J. An energy management strategy based on stochastic model predictive control for plug-in hybrid electric buses. Appl. Energy 2017, 196, 279–288. [Google Scholar] [CrossRef]

- Li, Y.; He, H.; Peng, J.; Wang, H. Deep reinforcement learning-based energy management for a series hybrid electric vehicle enabled by history cumulative trip information. IEEE Trans. Veh. Technol. 2019, 68, 7416–7430. [Google Scholar] [CrossRef]

- Liu, T.; Zou, Y.; Liu, D.; Sun, F. Reinforcement learning of adaptive energy management with transition probability for a hybrid electric tracked vehicle. IEEE Trans. Ind. Electron. 2015, 62, 7837–7846. [Google Scholar] [CrossRef]

- Enang, W.; Bannister, C. Modelling and control of hybrid electric vehicles (A comprehensive review). Renew. Sustain. Energy Rev. 2017, 74, 1210–1239. [Google Scholar] [CrossRef]

- Cao, Y.; Yao, M.; Sun, X. An overview of modelling and energy management strategies for hybrid electric vehicles. Appl. Sci. 2023, 13, 5947. [Google Scholar] [CrossRef]

- Song, C.; Kim, K.; Sung, D.; Kim, K.; Yang, H.; Lee, H.; Cho, G.Y.; Cha, S.W. A review of optimal energy management strategies using machine learning techniques for hybrid electric vehicles. Int. J. Automot. Technol. 2021, 22, 1437–1452. [Google Scholar] [CrossRef]

- Selvakumar, S.G. Electric and hybrid vehicles–A comprehensive overview. In Proceedings of the 2021 IEEE 2nd International Conference On Electrical Power and Energy Systems (ICEPES), Bhopal, India, 10–11 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6. [Google Scholar]

- Cheng, H.; Wang, L.; Xu, L.; Ge, X.; Yang, S. An integrated electrified powertrain topology with SRG and SRM for plug-in hybrid electrical vehicle. IEEE Trans. Ind. Electron. 2019, 67, 8231–8241. [Google Scholar] [CrossRef]

- Fathabadi, H. Fuel cell hybrid electric vehicle (FCHEV): Novel fuel cell/SC hybrid power generation system. Energy Convers. Manag. 2018, 156, 192–201. [Google Scholar] [CrossRef]

- Zhuang, W.; Li, S.; Zhang, X.; Kum, D.; Song, Z.; Yin, G.; Ju, F. A survey of powertrain configuration studies on hybrid electric vehicles. Appl. Energy 2020, 262, 114553. [Google Scholar] [CrossRef]

- Chen, B.; Evangelou, S.A.; Lot, R. Series hybrid electric vehicle simultaneous energy management and driving speed optimization. IEEE/ASME Trans. Mechatron. 2019, 24, 2756–2767. [Google Scholar] [CrossRef]

- Veerendra, A.S.; Mohamed, M.R.; Leung, P.K.; Shah, A.A. Hybrid power management for fuel cell/supercapacitor series hybrid electric vehicle. Int. J. Green Energy 2021, 18, 128–143. [Google Scholar] [CrossRef]

- Yang, Y.; Hu, X.; Pei, H.; Peng, Z. Comparison of power-split and parallel hybrid powertrain architectures with a single electric machine: Dynamic programming approach. Appl. Energy 2016, 168, 683–690. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, X.; Sun, Y.; You, S. Model predictive control strategy for energy optimization of series-parallel hybrid electric vehicle. J. Clean. Prod. 2018, 199, 348–358. [Google Scholar] [CrossRef]

- Xie, S.; Hu, X.; Qi, S.; Tang, X.; Lang, K.; Xin, Z.; Brighton, J. Model predictive energy management for plug-in hybrid electric vehicles considering optimal battery depth of discharge. Energy 2019, 173, 667–678. [Google Scholar] [CrossRef]

- Sarvaiya, S.; Ganesh, S.; Xu, B. Comparative analysis of hybrid vehicle energy management strategies with optimization of fuel economy and battery life. Energy 2021, 228, 120604. [Google Scholar] [CrossRef]

- Chrenko, D.; Gan, S.; Gutenkunst, C.; Kriesten, R.; Le Moyne, L. Novel classification of control strategies for hybrid electric vehicles. In Proceedings of the 2015 IEEE Vehicle Power and Propulsion Conference (VPPC), Montreal, QC, Canada, 19–22 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6. [Google Scholar]

- Wirasingha, S.G.; Emadi, A. Classification and review of control strategies for plug-in hybrid electric vehicles. IEEE Trans. Veh. Technol. 2010, 60, 111–122. [Google Scholar] [CrossRef]

- Yin, H.; Zhou, W.; Li, M.; Ma, C.; Zhao, C. An adaptive fuzzy logic-based energy management strategy on battery/ultracapacitor hybrid electric vehicles. IEEE Trans. Transp. Electrif. 2016, 2, 300–311. [Google Scholar] [CrossRef]

- Panday, A.; Bansal, H.O. A review of optimal energy management strategies for hybrid electric vehicle. Int. J. Veh. Technol. 2014, 2014, 160510. [Google Scholar] [CrossRef]

- Padmarajan, B.V.; McGordon, A.; Jennings, P.A. Blended rule-based energy management for PHEV: System structure and strategy. IEEE Trans. Veh. Technol. 2015, 65, 8757–8762. [Google Scholar] [CrossRef]

- Kim, M.; Jung, D.; Min, K. Hybrid thermostat strategy for enhancing fuel economy of series hybrid intracity bus. IEEE Trans. Veh. Technol. 2014, 63, 3569–3579. [Google Scholar] [CrossRef]

- Peng, C.; Feng, F.; Xiao, Y.; Liang, W. Multi-working points power follower based energy management strategy for series hybrid electric vehicle. J. Phys. Conf. Ser. 2020, 1601, 022039. [Google Scholar] [CrossRef]

- Shi, D.; Wang, S.; Pisu, P.; Chen, L.; Wang, R.; Wang, R. Modeling and optimal energy management of a power split hybrid electric vehicle. Sci. China Technol. Sci. 2017, 60, 713–725. [Google Scholar] [CrossRef]

- Mohd Sabri, M.F.; Danapalasingam, K.A.; Rahmat, M.F. Improved fuel economy of through-the-road hybrid electric vehicle with fuzzy logic-based energy management strategy. Int. J. Fuzzy Syst. 2018, 20, 2677–2692. [Google Scholar] [CrossRef]

- Montazeri-Gh, M.; Mahmoodi-K, M. Optimized predictive energy management of plug-in hybrid electric vehicle based on traffic condition. J. Clean. Prod. 2016, 139, 935–948. [Google Scholar] [CrossRef]

- Wu, J.; Zhang, C.H.; Cui, N.X. Fuzzy energy management strategy for a hybrid electric vehicle based on driving cycle recognition. Int. J. Automot. Technol. 2012, 13, 1159–1167. [Google Scholar] [CrossRef]

- Dawei, M.; Yu, Z.; Meilan, Z.; Risha, N. Intelligent fuzzy energy management research for a uniaxial parallel hybrid electric vehicle. Comput. Electr. Eng. 2017, 58, 447–464. [Google Scholar] [CrossRef]

- Zou, K.; Luo, W.; Lu, Z. Real-Time Energy Management Strategy of Hydrogen Fuel Cell Hybrid Electric Vehicles Based on Power Following Strategy–Fuzzy Logic Control Strategy Hybrid Control. World Electr. Veh. J. 2023, 14, 315. [Google Scholar] [CrossRef]

- Shabbir, W.; Evangelou, S.A. Threshold-changing control strategy for series hybrid electric vehicles. Appl. Energy 2019, 235, 761–775. [Google Scholar] [CrossRef]

- Lü, X.; Wu, Y.; Lian, J.; Zhang, Y.; Chen, C.; Wang, P.; Meng, L. Energy management of hybrid electric vehicles: A review of energy optimization of fuel cell hybrid power system based on genetic algorithm. Energy Convers. Manag. 2020, 205, 112474. [Google Scholar] [CrossRef]

- Liu, Y.; Gao, J.; Qin, D.; Zhang, Y.; Lei, Z. Rule-corrected energy management strategy for hybrid electric vehicles based on operation-mode prediction. J. Clean. Prod. 2018, 188, 796–806. [Google Scholar] [CrossRef]

- Wu, J.; Ruan, J.; Zhang, N.; Walker, P.D. An optimized real-time energy management strategy for the power-split hybrid electric vehicles. IEEE Trans. Control Syst. Technol. 2018, 27, 1194–1202. [Google Scholar] [CrossRef]

- Wang, R.; Lukic, S.M. Dynamic programming technique in hybrid electric vehicle optimization. In Proceedings of the 2012 IEEE International Electric Vehicle Conference, Greenville, SC, USA, 4–8 March 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–8. [Google Scholar]

- Desai, C.; Williamson, S.S. Optimal design of a parallel hybrid electric vehicle using multi-objective genetic algorithms. In Proceedings of the 2009 IEEE Vehicle Power and Propulsion Conference, Dearborn, MI, USA, 7–11 September 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 871–876. [Google Scholar]

- Wang, Y.; Wu, Z.; Chen, Y.; Xia, A.; Guo, C.; Tang, Z. Research on energy optimization control strategy of the hybrid electric vehicle based on Pontryagin’s minimum principle. Comput. Electr. Eng. 2018, 72, 203–213. [Google Scholar] [CrossRef]

- Rezaei, A.; Burl, J.B.; Zhou, B. Estimation of the ECMS equivalent factor bounds for hybrid electric vehicles. IEEE Trans. Control Syst. Technol. 2017, 26, 2198–2205. [Google Scholar] [CrossRef]

- Kim, T.S.; Manzie, C.; Sharma, R. Model predictive control of velocity and torque split in a parallel hybrid vehicle. In Proceedings of the 2009 IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 11–14 October 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 2014–2019. [Google Scholar]

- Montazeri-Gh, M.; Mahmoodi-K, M. Development a new power management strategy for power split hybrid electric vehicles. Transp. Res. Part D Transp. Environ. 2015, 37, 79–96. [Google Scholar] [CrossRef]

- Hu, J.; Li, J.; Hu, Z.; Xu, L.; Ouyang, M. Power distribution strategy of a dual-engine system for heavy-duty hybrid electric vehicles using dynamic programming. Energy 2021, 215, 118851. [Google Scholar] [CrossRef]

- Du, J.; Zhang, X.; Wang, T.; Song, Z.; Yang, X.; Wang, H.; Ouyang, M.; Wu, X. Battery degradation minimization oriented energy management strategy for plug-in hybrid electric bus with multi-energy storage system. Energy 2018, 165, 153–163. [Google Scholar] [CrossRef]

- Yao, Z.; Shao, R.; Zhan, S.; Mo, R.; Wu, Z. Energy management strategy for fuel cell hybrid electric vehicles using Pontryagin’s minimum principle and dynamic SoC planning. Energy Sources Part A Recovery Util. Environ. Eff. 2024, 46, 5112–5513. [Google Scholar] [CrossRef]

- Sun, X.; Chen, Z.; Han, S.; Tian, X.; Jin, Z.; Cao, Y.; Xue, M. Adaptive real-time ECMS with equivalent factor optimization for plug-in hybrid electric buses. Energy 2024, 304, 132014. [Google Scholar] [CrossRef]

- Zeng, T.; Zhang, C.; Zhang, Y.; Deng, C.; Hao, D.; Zhu, Z.; Ran, H.; Cao, D. Optimization-oriented adaptive equivalent consumption minimization strategy based on short-term demand power prediction for fuel cell hybrid vehicle. Energy 2021, 227, 120305. [Google Scholar] [CrossRef]

- Bonab, S.A.; Emadi, A. MPC-based energy management strategy for an autonomous hybrid electric vehicle. IEEE Open J. Ind. Appl. 2020, 1, 171–180. [Google Scholar] [CrossRef]

- Quan, S.; Wang, Y.X.; Xiao, X.; He, H.; Sun, F. Real-time energy management for fuel cell electric vehicle using speed prediction-based model predictive control considering performance degradation. Appl. Energy 2021, 304, 117845. [Google Scholar] [CrossRef]

- Mazouzi, A.; Hadroug, N.; Alayed, W.; Hafaifa, A.; Iratni, A.; Kouzou, A. Comprehensive optimization of fuzzy logic-based energy management system for fuel-cell hybrid electric vehicle using genetic algorithm. Int. J. Hydrogen Energy 2024, 81, 889–905. [Google Scholar] [CrossRef]

- Fan, L.; Wang, Y.; Wei, H.; Zhang, Y.; Zheng, P.; Huang, T.; Li, W. A GA-based online real-time optimized energy management strategy for plug-in hybrid electric vehicles. Energy 2022, 241, 122811. [Google Scholar] [CrossRef]

- Kapil, S.; Chawla, M.; Ansari, M.D. On K-means data clustering algorithm with genetic algorithm. In Proceedings of the 2016 Fourth International Conference on Parallel, Distributed and Grid Computing (PDGC), Waknaghat, India, 22–24 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 202–206. [Google Scholar]

- Ding, S.; Su, C.; Yu, J. An optimizing BP neural network algorithm based on genetic algorithm. Artif. Intell. Rev. 2011, 36, 153–162. [Google Scholar] [CrossRef]

- Belhadj, S.; Belmokhtar, K.; Ghedamsi, K. Improvement of Energy Management Control Strategy of Fuel Cell Hybrid Electric Vehicles Based on Artificial Intelligence Techniques. J. Eur. Des Systèmes Autom. 2019, 52, 541–550. [Google Scholar] [CrossRef]

- Xiong, R.; Cao, J.; Yu, Q. Reinforcement learning-based real-time power management for hybrid energy storage system in the plug-in hybrid electric vehicle. Appl. Energy 2018, 211, 538–548. [Google Scholar] [CrossRef]

- Tang, X.; Zhang, J.; Pi, D.; Lin, X.; Grzesiak, L.M.; Hu, X. Battery health-aware and deep reinforcement learning-based energy management for naturalistic data-driven driving scenarios. IEEE Trans. Transp. Electrif. 2021, 8, 948–964. [Google Scholar] [CrossRef]

- Lemieux, J.; Ma, Y. Vehicle speed prediction using deep learning. In Proceedings of the 2015 IEEE Vehicle Power and Propulsion Conference (VPPC), Montreal, QC, Canada, 19–22 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–5. [Google Scholar]

- Chen, Z.; Wu, S.; Shen, S.; Liu, Y.; Guo, F.; Zhang, Y. Co-optimization of velocity planning and energy management for autonomous plug-in hybrid electric vehicles in urban driving scenarios. Energy 2023, 263, 126060. [Google Scholar] [CrossRef]

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R.; Taylor, J. Unsupervised learning. In An Introduction to Statistical Learning: With Applications in Python; Springer International Publishing: Cham, Switzerland, 2023; pp. 503–556. [Google Scholar]

- Zhang, J.; Chu, L.; Wang, X.; Guo, C.; Fu, Z.; Zhao, D. Optimal energy management strategy for plug-in hybrid electric vehicles based on a combined clustering analysis. Appl. Math. Model. 2021, 94, 49–67. [Google Scholar] [CrossRef]

- Sun, X.; Cao, Y.; Jin, Z.; Tian, X.; Xue, M. An adaptive ECMS based on traffic information for plug-in hybrid electric buses. IEEE Trans. Ind. Electron. 2022, 70, 9248–9259. [Google Scholar] [CrossRef]

- Li, S.; Hu, M.; Gong, C.; Zhan, S.; Qin, D. Energy management strategy for hybrid electric vehicle based on driving condition identification using KGA-means. Energies 2018, 11, 1531. [Google Scholar] [CrossRef]

- Chang, C.; Zhao, W.; Wang, C.; Song, Y. A novel energy management strategy integrating deep reinforcement learning and rule based on condition identification. IEEE Trans. Veh. Technol. 2022, 72, 1674–1688. [Google Scholar] [CrossRef]

- Liu, T.; Yu, H.; Guo, H.; Qin, Y.; Zou, Y. Online energy management for multimode plug-in hybrid electric vehicles. IEEE Trans. Ind. Inform. 2018, 15, 4352–4361. [Google Scholar] [CrossRef]

- Ye, F.; Zhai, X. Research on energy management strategy of diesel hybrid electric vehicle based on decision tree CART algorithm. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 677, p. 032076. [Google Scholar]

- Liu, F.; Liu, B.; Zhang, J.; Wan, P.; Li, B. Fault mode detection of a hybrid electric vehicle by using support vector machine. Energy Rep. 2023, 9, 137–148. [Google Scholar] [CrossRef]

- Zhuang, W.; Zhang, X.; Li, D.; Wang, L.; Yin, G. Mode shift map design and integrated energy management control of a multi-mode hybrid electric vehicle. Appl. Energy 2017, 204, 476–488. [Google Scholar] [CrossRef]

- Ji, Y.; Lee, H. Event-based anomaly detection using a one-class SVM for a hybrid electric vehicle. IEEE Trans. Veh. Technol. 2022, 71, 6032–6043. [Google Scholar] [CrossRef]

- Shi, Q.; Qiu, D.; He, L.; Wu, B.; Li, Y. Support vector machine–based driving cycle recognition for dynamic equivalent fuel consumption minimization strategy with hybrid electric vehicle. Adv. Mech. Eng. 2018, 10, 1687814018811020. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, Y.; Yu, H.; Nie, Z.; Liu, Y.; Chen, Z. A novel data-driven controller for plug-in hybrid electric vehicles with improved adaptabilities to driving environment. J. Clean. Prod. 2022, 334, 130250. [Google Scholar] [CrossRef]

- Wang, H.; Arjmandzadeh, Z.; Ye, Y.; Zhang, J.; Xu, B. FlexNet: A warm start method for deep reinforcement learning in hybrid electric vehicle energy management applications. Energy 2024, 288, 129773. [Google Scholar] [CrossRef]

- Adedeji, B.P. Energy parameter modeling in plug-in hybrid electric vehicles using supervised machine learning approaches. E-Prime Adv. Electr. Eng. Electron. Energy 2024, 8, 100584. [Google Scholar] [CrossRef]

- Lu, Z.; Tian, H.; Li, R.; Tian, G. Neural network energy management strategy with optimal input features for plug-in hybrid electric vehicles. Energy 2023, 285, 129399. [Google Scholar] [CrossRef]

- Adedeji, B.P. A multivariable output neural network approach for simulation of plug-in hybrid electric vehicle fuel consumption. Green Energy Intell. Transp. 2023, 2, 100070. [Google Scholar] [CrossRef]

- Faria, J.; Pombo, J.; Calado, M.D.R.; Mariano, S. Power management control strategy based on artificial neural networks for standalone PV applications with a hybrid energy storage system. Energies 2019, 12, 902. [Google Scholar] [CrossRef]

- Lin, X.; Wang, Z.; Wu, J. Energy management strategy based on velocity prediction using back propagation neural network for a plug-in fuel cell electric vehicle. Int. J. Energy Res. 2021, 45, 2629–2643. [Google Scholar] [CrossRef]

- Huo, D.; Meckl, P. Power management of a plug-in hybrid electric vehicle using neural networks with comparison to other approaches. Energies 2022, 15, 5735. [Google Scholar] [CrossRef]

- Xie, S.; Hu, X.; Qi, S.; Lang, K. An artificial neural network-enhanced energy management strategy for plug-in hybrid electric vehicles. Energy 2018, 163, 837–848. [Google Scholar] [CrossRef]

- Shen, P.; Zhao, Z.; Zhan, X.; Li, J.; Guo, Q. Optimal energy management strategy for a plug-in hybrid electric commercial vehicle based on velocity prediction. Energy 2018, 155, 838–852. [Google Scholar] [CrossRef]

- Tang, D.; Zhang, Z.; Hua, L.; Pan, J.; Xiao, Y. Prediction of cold start emissions for hybrid electric vehicles based on genetic algorithms and neural networks. J. Clean. Prod. 2023, 420, 138403. [Google Scholar] [CrossRef]

- Chen, H.; Wu, G. Compensation fuzzy neural network power management strategy for hybrid electric vehicle. Tongji Daxue Xuebao J. Tongji Univ. 2009, 37, 525–530. [Google Scholar]

- Zhang, Q.; Fu, X. A neural network fuzzy energy management strategy for hybrid electric vehicles based on driving cycle recognition. Appl. Sci. 2020, 10, 696. [Google Scholar] [CrossRef]

- Wang, Q.; Luo, Y. Research on a new power distribution control strategy of hybrid energy storage system for hybrid electric vehicles based on the subtractive clustering and adaptive fuzzy neural network. Clust. Comput. 2022, 25, 4413–4422. [Google Scholar] [CrossRef]

- Estrada, P.M.; de Lima, D.; Bauer, P.H.; Mammetti, M.; Bruno, J.C. Deep learning in the development of energy management strategies of hybrid electric vehicles: A hybrid modeling approach. Appl. Energy 2023, 329, 120231. [Google Scholar] [CrossRef]

- Alaoui, C. Hybrid vehicle energy management using deep learning. In Proceedings of the 2019 International Conference on Intelligent Systems and Advanced Computing Sciences (ISACS), Taza, Morocco, 26–27 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5. [Google Scholar]

- Xia, J.; Wang, F.; Xu, X. A predictive energy management strategy for multi-mode plug-in hybrid electric vehicle based on long short-term memory neural network. IFAC-Pap. 2021, 54, 132–137. [Google Scholar] [CrossRef]

- Bao, S.; Tang, S.; Sun, P.; Wang, T. LSTM-based energy management algorithm for a vehicle power-split hybrid powertrain. Energy 2023, 284, 129267. [Google Scholar] [CrossRef]

- Han, S.; Zhang, F.; Xi, J.; Ren, Y.; Xu, S. Short-term vehicle speed prediction based on convolutional bidirectional lstm networks. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4055–4060. [Google Scholar]

- Chen, Z.; Yang, C.; Fang, S. A convolutional neural network-based driving cycle prediction method for plug-in hybrid electric vehicles with bus route. IEEE Access 2019, 8, 3255–3264. [Google Scholar] [CrossRef]

- Xie, L.; Tao, J.; Zhang, Q.; Zhou, H. CNN and KPCA-based automated feature extraction for real time driving pattern recognition. IEEE Access 2019, 7, 123765–123775. [Google Scholar] [CrossRef]

- Han, L.; Jiao, X.; Zhang, Z. Recurrent neural network-based adaptive energy management control strategy of plug-in hybrid electric vehicles considering battery aging. Energies 2020, 13, 202. [Google Scholar] [CrossRef]

- Millo, F.; Rolando, L.; Tresca, L.; Pulvirenti, L. Development of a neural network-based energy management system for a plug-in hybrid electric vehicle. Transp. Eng. 2023, 11, 100156. [Google Scholar] [CrossRef]

- Wu, Y.; Zhang, Y.; Li, G.; Shen, J.; Chen, Z.; Liu, Y. A predictive energy management strategy for multi-mode plug-in hybrid electric vehicles based on multi neural networks. Energy 2020, 208, 118366. [Google Scholar] [CrossRef]

- Lin, X.; Wang, Y.; Bogdan, P.; Chang, N.; Pedram, M. Reinforcement learning based power management for hybrid electric vehicles. In Proceedings of the 2014 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Jose, CA, USA, 2–6 November 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 33–38. [Google Scholar]

- Yang, N.; Ruan, S.; Han, L.; Liu, H.; Guo, L.; Xiang, C. Reinforcement learning-based real-time intelligent energy management for hybrid electric vehicles in a model predictive control framework. Energy 2023, 270, 126971. [Google Scholar] [CrossRef]

- Yang, N.; Han, L.; Xiang, C.; Liu, H.; Hou, X. Energy management for a hybrid electric vehicle based on blended reinforcement learning with backward focusing and prioritized sweeping. IEEE Trans. Veh. Technol. 2021, 70, 3136–3148. [Google Scholar] [CrossRef]

- Du, G.; Zou, Y.; Zhang, X.; Liu, T.; Wu, J.; He, D. Deep reinforcement learning based energy management for a hybrid electric vehicle. Energy 2020, 201, 117591. [Google Scholar] [CrossRef]

- Musa, A.; Anselma, P.G.; Belingardi, G.; Misul, D.A. Energy Management in Hybrid Electric Vehicles: A Q-Learning Solution for Enhanced Drivability and Energy Efficiency. Energies 2023, 17, 62. [Google Scholar] [CrossRef]

- Ahmadian, S.; Tahmasbi, M.; Abedi, R. Q-learning based control for energy management of series-parallel hybrid vehicles with balanced fuel consumption and battery life. Energy AI 2023, 11, 100217. [Google Scholar] [CrossRef]

- Chen, Z.; Mi, C.C.; Xia, B.; You, C. Stochastic model predictive control for energy management of power-split plug-in hybrid electric vehicles based on reinforcement learning. Energy 2020, 211, 118931. [Google Scholar] [CrossRef]

- Chen, Z.; Gu, H.; Shen, S.; Shen, J. Energy management strategy for power-split plug-in hybrid electric vehicle based on MPC and double Q-learning. Energy 2022, 245, 123182. [Google Scholar] [CrossRef]

- Shen, S.; Gao, S.; Liu, Y.; Zhang, Y.; Shen, J.; Chen, Z.; Lei, Z. Real-time energy management for plug-in hybrid electric vehicles via incorporating double-delay Q-learning and model prediction control. IEEE Access 2022, 10, 131076–131089. [Google Scholar] [CrossRef]

- Mei, P.; Karimi, H.R.; Xie, H.; Chen, F.; Huang, C.; Yang, S. A deep reinforcement learning approach to energy management control with connected information for hybrid electric vehicles. Eng. Appl. Artif. Intell. 2023, 123, 106239. [Google Scholar] [CrossRef]

- Tang, X.; Chen, J.; Liu, T.; Qin, Y.; Cao, D. Distributed deep reinforcement learning-based energy and emission management strategy for hybrid electric vehicles. IEEE Trans. Veh. Technol. 2021, 70, 9922–9934. [Google Scholar] [CrossRef]

- Wu, J.; He, H.; Peng, J.; Li, Y.; Li, Z. Continuous reinforcement learning of energy management with deep Q network for a power split hybrid electric bus. Appl. Energy 2018, 222, 799–811. [Google Scholar] [CrossRef]

- Wang, H.; He, H.; Bai, Y.; Yue, H. Parameterized deep Q-network based energy management with balanced energy economy and battery life for hybrid electric vehicles. Appl. Energy 2022, 320, 119270. [Google Scholar] [CrossRef]

- Qi, C.; Zhu, Y.; Song, C.; Yan, G.; Xiao, F.; Zhang, X.; Wang, D.; Cao, J.; Song, S. Hierarchical reinforcement learning based energy management strategy for hybrid electric vehicle. Energy 2022, 238, 121703. [Google Scholar] [CrossRef]

- Du, G.; Zou, Y.; Zhang, X.; Guo, L.; Guo, N. Energy management for a hybrid electric vehicle based on prioritized deep reinforcement learning framework. Energy 2022, 241, 122523. [Google Scholar] [CrossRef]

- Shi, D.; Xu, H.; Wang, S.; Hu, J.; Chen, L.; Yin, C. Deep reinforcement learning based adaptive energy management for plug-in hybrid electric vehicle with double deep Q-network. Energy 2024, 305, 132402. [Google Scholar] [CrossRef]

- Feng, Z.; Wang, C.; An, J.; Zhang, X.; Liu, X.; Ji, X.; Kang, L.; Quan, W. Emergency fire escape path planning model based on improved DDPG algorithm. J. Build. Eng. 2024, 95, 110090. [Google Scholar] [CrossRef]

- Zhang, D.; Li, J.; Guo, N.; Liu, Y.; Shen, S.; Wei, F.; Chen, Z.; Zheng, J. Adaptive deep reinforcement learning energy management for hybrid electric vehicles considering driving condition recognition. Energy 2024, 313, 134086. [Google Scholar] [CrossRef]

- Ruan, J.; Wu, C.; Liang, Z.; Liu, K.; Li, B.; Li, W.; Li, T. The application of machine learning-based energy management strategy in a multi-mode plug-in hybrid electric vehicle, part II: Deep deterministic policy gradient algorithm design for electric mode. Energy 2023, 269, 126792. [Google Scholar] [CrossRef]

- He, H.; Huang, R.; Meng, X.; Zhao, X.; Wang, Y.; Li, M. A novel hierarchical predictive energy management strategy for plug-in hybrid electric bus combined with deep deterministic policy gradient. J. Energy Storage 2022, 52, 104787. [Google Scholar] [CrossRef]

- Ma, Z.; Huo, Q.; Zhang, T.; Hao, J.; Wang, W. Deep deterministic policy gradient based energy management strategy for hybrid electric tracked vehicle with online updating mechanism. IEEE Access 2021, 9, 7280–7292. [Google Scholar] [CrossRef]

- Zhou, J.; Xue, S.; Xue, Y.; Liao, Y.; Liu, J.; Zhao, W. A novel energy management strategy of hybrid electric vehicle via an improved TD3 deep reinforcement learning. Energy 2021, 224, 120118. [Google Scholar] [CrossRef]

- Yazar, O.; Coskun, S.; Li, L.; Zhang, F.; Huang, C. Actor-critic TD3-based deep reinforcement learning for energy management strategy of HEV. In Proceedings of the 2023 5th International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Istanbul, Turkey, 8–10 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6. [Google Scholar]

- Huang, R.; He, H.; Zhao, X.; Wang, Y.; Li, M. Battery health-aware and naturalistic data-driven energy management for hybrid electric bus based on TD3 deep reinforcement learning algorithm. Appl. Energy 2022, 321, 119353. [Google Scholar] [CrossRef]

- Huang, R.; He, H.; Su, Q. Smart energy management for hybrid electric bus via improved soft actor-critic algorithm in a heuristic learning framework. Energy 2024, 309, 133091. [Google Scholar] [CrossRef]

- Huang, R.; He, H. Naturalistic data-driven and emission reduction-conscious energy management for hybrid electric vehicle based on improved soft actor-critic algorithm. J. Power Sources 2023, 559, 232648. [Google Scholar] [CrossRef]

- Li, T.; Cui, W.; Cui, N. Soft actor-critic algorithm-based energy management strategy for plug-in hybrid electric vehicle. World Electr. Veh. J. 2022, 13, 193. [Google Scholar] [CrossRef]

- Li, C.; Xu, X.; Zhu, H.; Gan, J.; Chen, Z.; Tang, X. Research on car-following control and energy management strategy of hybrid electric vehicles in connected scene. Energy 2024, 293, 130586. [Google Scholar] [CrossRef]

| Artificial Intelligence Algorithms | Algorithm Examples | Advantages | Disadvantages | References |
|---|---|---|---|---|
| Unsupervised Learning | K-means, PCA | Needs no labeled data; identifies hidden patterns | Hard to interpret; unclear evaluation metrics | [75,76,77,78,79] |
| Supervised Learning | SVM, LSSVM, decision tree, random forest | High accuracy; interpretable | Requires large labeled datasets | [81,82,83,84,85,86,87,88] |
| Neural Networks | BPNN, fuzzy neural network | Handles complex nonlinear relationships | Prone to overfitting on limited training data | [92,93,94,95,96,97,98] |
| Deep Learning | LSTM, CNN, RNN | Uses deep architectures to model complex nonlinear system relationships | Requires a large amount of data | [101,102,103,104,105,106,107,108] |
| Reinforcement Learning | Q-Learning, Dyna, Double Q-Learning, Double Delayed Q-Learning | Adapts to dynamic environments; optimizes long-term rewards | Long training time | [110,111,112,113,114,115,116,117] |
| Deep Reinforcement Learning | Deep Q-Network (DQN), Deep Q-Learning (DQL), Double Deep Q-Learning (DDQL), Double Deep Q-Network (DDQN), DDPG, TD3, SAC | Suitable for high-dimensional state spaces | Long training time; hyperparameter sensitivity affects reproducibility | [118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhong, D.; Liu, B.; Liu, L.; Fan, W.; Tang, J. Artificial Intelligence Algorithms for Hybrid Electric Powertrain System Control: A Review. Energies 2025, 18, 2018. https://doi.org/10.3390/en18082018
