Elastic Momentum-Enhanced Adaptive Hybrid Method for Short-Term Load Forecasting

Abstract

1. Introduction

- (1) We propose an adaptive inertia weight adjustment method for PSO. Since inertia weight is the predominant factor governing the search capability of particle swarm in PSO, we develop an adaptive inertia weight adjustment mechanism to enhance the algorithm’s self-adaptation in optimization processes. Comprehensive comparative experiments conducted on benchmark functions validate the effectiveness of the proposed improvement method.

- (2) We conducted a comparative analysis of commonly used single prediction models, examining the characteristics and applicable scenarios of different model types. Through case studies, we validated the prediction performance and identified an optimal short-term load forecasting model with superior accuracy and efficiency.

- (3) We propose a hybrid forecasting model with adaptive momentum based on an improved PSO. By employing an enhanced particle swarm optimization approach with adaptive inertia weights, the model dynamically determines the optimal combination weights for two forecasting sub-models. This architecture enables autonomous weight adjustment based on individual model predictions and target objectives, thereby significantly improving load forecasting accuracy.
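To make the role of the inertia weight concrete, the following is a minimal sketch of a PSO loop whose inertia weight changes between iterations. The adjustment rule used here (inertia weight proportional to the fraction of particles that improved their personal best) is an assumed stand-in chosen for illustration; it is not the elastic-momentum rule of the paper, whose formula does not appear in this text. The function names, the `sphere` objective, and all parameter defaults are likewise hypothetical.

```python
import random


def sphere(x):
    """Unimodal test objective, used here only for illustration."""
    return sum(v * v for v in x)


def pso_adaptive_inertia(objective, dim, bounds, n_particles=20, n_iter=100,
                         w_min=0.4, w_max=0.9, c1=1.5, c2=1.5, seed=0):
    """Minimise `objective` with PSO and a success-rate-driven inertia weight.

    ASSUMPTION: the success-rate rule is a stand-in, not the paper's rule.
    """
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    history = [gbest_val]  # best objective value after each iteration
    w = w_max
    for _ in range(n_iter):
        improved = 0
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia term + cognitive term + social term.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Keep the particle inside the search range.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                improved += 1
            if val < gbest_val:
                gbest, gbest_val = pos[i][:], val
        # Many improving particles -> larger inertia (explore);
        # few improving particles -> smaller inertia (exploit).
        w = w_min + (w_max - w_min) * improved / n_particles
        history.append(gbest_val)
    return gbest, gbest_val, history


best, best_val, history = pso_adaptive_inertia(sphere, dim=5, bounds=(-10.0, 10.0))
```

The returned `history` makes it easy to plot convergence and to compare different inertia rules on the same objective and seed.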

2. Model Framework and Theoretical Principles

2.1. Model Framework

2.2. Single Prediction Model

2.2.1. Rolling Grey Model

2.2.2. ARIMA

2.2.3. SVR

3. Formulation of the Hybrid Prediction Model

3.1. Hybrid Forecasting Model Based on Standard Deviation Method

3.1.1. Combination Weight Determination via Standard Deviation Method

3.1.2. RGM-SVR Hybrid Model

3.1.3. ARIMA-SVR Hybrid Model

3.2. Adaptive Weighted Hybrid Forecasting Model with Flexible Momentum

3.2.1. Improved Particle Swarm Optimization Algorithm

3.2.2. Comparison of APSO with Competing Algorithms

- Reduced parametric complexity.

- Inherent structural efficiency.

- Competitive solution accuracy relative to newer alternatives.

- For unimodal continuous functions, APSO attains the highest precision in optimizing F1.

- In optimizing F2 and F3, APSO slightly surpasses the other algorithms.

- For multimodal functions with multiple local optima (F4, F5, F6), APSO demonstrates significantly superior accuracy compared to the alternatives.

- The WOA algorithm performs better than the other methods (excluding APSO) on F2, F4, and F5.

- GWO shows moderate performance on some functions (e.g., F1 and F3), but its stability is inferior to APSO and WOA.

- PSO and MFO perform poorly on complex functions (F2 and F4) and frequently become trapped in local optima.

3.2.3. Construction of a Hybrid Adaptive Weighting Prediction Model with Elastic Momentum

4. Case Study

4.1. Data Processing

4.1.1. Temporal Influencing Factors

4.1.2. Temperature Influencing Factors

4.1.3. Weather Type Influencing Factors

4.1.4. Data Preprocessing

4.1.5. Evaluation Method

4.2. Adaptive Inertia-Weighted Hybrid Model for Short-Term Load Forecasting

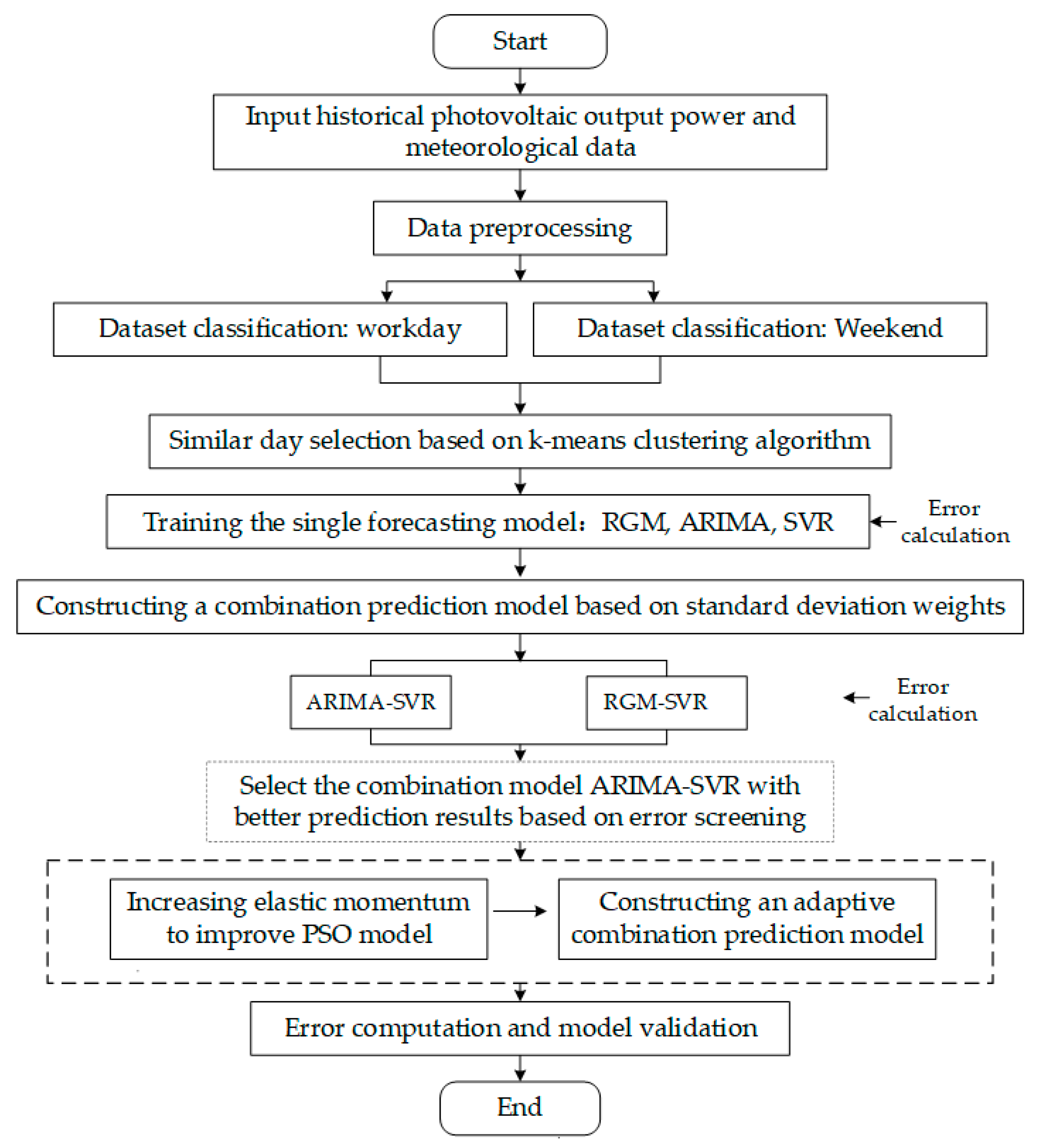

- (1) The characteristics of historical user load and meteorological data were analyzed, followed by data preprocessing.

- (2) The dataset was classified into workdays and non-workdays, and then K-means clustering was applied to identify similar days.

- (3) Based on the forecasted meteorological conditions of the prediction day, the corresponding historical load and weather data of similar days were selected as the training set, and the workday and non-workday to be predicted served as the test sets.

- (4) Individual prediction models (RGM, ARIMA, and SVR) were employed for short-term load forecasting. The prediction errors were calculated, and the models were then evaluated using standardized metrics.

- (5) Two hybrid models were developed by combining time series methods (RGM and ARIMA) with the machine learning approach (SVR) using standard deviation-based weighting. The RGM-SVR and ARIMA-SVR models were constructed for short-term load forecasting.

- (6) Prediction errors were computed, and based on the evaluation results, the more accurate and stable ARIMA-SVR hybrid model was selected for weight optimization.

- (7) The inertia weight of the PSO algorithm was improved by introducing elastic momentum, enhancing its search capability. The modified PSO (APSO) was then used to optimize the weights of the ARIMA-SVR model, ultimately establishing an adaptive hybrid forecasting model with elastic momentum, APSO-ARIMA-SVR.

- (8) The proposed adaptive weighted hybrid forecasting model APSO-ARIMA-SVR was trained and compared against individual prediction models (ARIMA, RGM, SVR), standard deviation-based combination models (ARIMA-SVR, RGM-SVR), and commonly used deep learning models (Transformer, LSTM) to verify its effectiveness. Prediction errors were evaluated using MAE and RMSE, and the differences between models were assessed with the Diebold-Mariano test. The comparative results demonstrated that the APSO-ARIMA-SVR model with adaptive weight optimization significantly outperformed all benchmark models in terms of both prediction accuracy and stability, as confirmed by statistical testing. This validation establishes the proposed model as a robust solution for short-term load forecasting applications, combining the strengths of time series analysis, machine learning, and optimized ensemble techniques.
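Steps (5) to (7) can be sketched as follows. The standard-deviation weighting is written in one common form, w_i = (S − s_i) / (S (m − 1)), where s_i is the standard deviation of model i's errors, S is their sum, and m is the number of models; this form is an assumption, since the body of Section 3.1.1 is not reproduced here. The weight search uses a plain grid search as a stand-in for APSO, and all function names and the toy data are hypothetical.

```python
import statistics


def std_dev_weights(errors_by_model):
    """w_i = (S - s_i) / (S * (m - 1)); a smaller error spread gives a larger weight."""
    sds = [statistics.pstdev(errs) for errs in errors_by_model]
    total, m = sum(sds), len(sds)
    return [(total - s) / (total * (m - 1)) for s in sds]


def combine(forecasts, weights):
    """Weighted sum of the sub-model forecasts at each time step."""
    return [sum(w * f[t] for w, f in zip(weights, forecasts))
            for t in range(len(forecasts[0]))]


def sse(actual, predicted):
    """Sum of squared errors, used as the objective of the weight search."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted))


def grid_search_weight(actual, f1, f2, steps=100):
    """Stand-in for APSO: try w in {0, 1/steps, ..., 1} and keep the best."""
    candidates = [k / steps for k in range(steps + 1)]
    return min(candidates,
               key=lambda w: sse(actual, combine([f1, f2], [w, 1 - w])))


# Toy data (hypothetical): actual load and two sub-model forecasts.
actual = [100.0, 110.0, 120.0, 130.0]
arima_like = [98.0, 113.0, 118.0, 133.0]
svr_like = [104.0, 106.0, 124.0, 126.0]

errors = [[f - a for f, a in zip(model, actual)]
          for model in (arima_like, svr_like)]
sd_weights = std_dev_weights(errors)          # fixed, error-spread-based weights
w_opt = grid_search_weight(actual, arima_like, svr_like)  # data-driven weight
hybrid = combine([arima_like, svr_like], [w_opt, 1 - w_opt])
```

In the toy data, the first sub-model has the smaller error spread and therefore receives the larger standard-deviation weight, while the searched weight is free to move away from that fixed split when doing so lowers the error.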

4.3. Results Analysis

5. Conclusions

- (1) Comparative simulations demonstrate that combined models (both standard deviation-based and adaptive weight-based) achieve better generalization and higher accuracy than individual models.

- (2) An improved algorithm, APSO, is obtained by adaptively adjusting the inertia weight of the particle swarm optimization algorithm. Comparative experiments with other optimization algorithms show that the standard deviations of the APSO optimization results for functions F1, F3, F4, F5, and F6 are 1.00 × 10^−10, 5.27 × 10^−5, 2.39 × 10, 0.04 × 10^0, and 8.25 × 10^−9, respectively. For most functions, the optimization accuracy of APSO surpasses that of PSO by several orders of magnitude, and it also outperforms the traditional GWO, MFO, and WOA algorithms in optimization precision. Notably, on continuous unimodal functions, the optimization accuracy of APSO is significantly superior to that of the other algorithms.

- (3) We propose an adaptive weight hybrid forecasting model (APSO-ARIMA-SVR) by employing the APSO algorithm to optimize the parameter configuration and weight allocation of the ARIMA-SVR framework. Simulation case studies demonstrate that, compared to the standard deviation-based combined forecasting models RGM-SVR and ARIMA-SVR as well as the single models ARIMA, RGM, and SVR, the adaptive weight combined forecasting model APSO-ARIMA-SVR achieves the best fitting performance and the lowest prediction error. The DM statistical test results indicate that, at a 95% confidence level, APSO-ARIMA-SVR significantly outperforms all other comparative models. Due to the small sample size of the dataset selected in this study, Transformer and LSTM may fail to fully learn the data patterns, resulting in relatively higher prediction errors. While the RGM model shows acceptable precision at the initial prediction points, its performance deteriorates significantly over longer forecasting horizons due to inherent limitations in handling complex dataset characteristics, making it unsuitable for this application. The developed APSO-ARIMA-SVR framework balances linear and nonlinear components through adaptive weight adjustment, demonstrating robust performance across varying load conditions while maintaining computational efficiency, and is therefore recommended for short-term load forecasting tasks. In subsequent research, the model will be further optimized and trained and validated on more diverse types of datasets.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Niu, D.; Wang, Y.; Wu, D. Power load forecasting using support vector machine and ant colony optimization. Expert Syst. Appl. 2010, 37, 2531–2539.

- Hong, T.; Fan, S. Probabilistic electric load forecasting: A tutorial review. Int. J. Forecast. 2016, 32, 914–938.

- Friedrich, L.; Afshari, A. Short-term Forecasting of the Abu Dhabi Electricity Load Using Multiple Weather Variables. Energy Procedia 2015, 75, 3014–3026.

- Hamzacebi, C.; Es, H.A. Forecasting the annual electricity consumption of Turkey using an optimized grey model. Energy 2014, 70, 165–171.

- Xiong, D.L.; Zhang, X.Y.; Yu, Z.W.; Zhang, X.F.; Long, H.M.; Chen, L.J. Development and application of an intelligent thermal state monitoring system for sintering machine tails based on cnn-lstm hybrid neural networks. J. Iron Steel Res. Int. 2025, 32, 52–63.

- Wang, H.; Huang, S.; Yin, Y.; Gu, T. Short-term load forecasting based on pelican optimization algorithm and dropout long short-term memories–fully convolutional neural network optimization. Energies 2024, 17, 6115.

- Singh, P.; Dwivedi, P. Integration of new evolutionary approach with artificial neural network for solving short term load forecast problem. Appl. Energy 2018, 217, 537–549.

- Koen, R.; Holloway, J. Application of multiple regression analysis to forecasting South Africa’s electricity demand. J. Energy South. Afr. 2014, 25, 48–58.

- Bianco, V.; Manca, O.; Nardini, S. Electricity consumption forecasting in Italy using linear regression models. Energy 2009, 34, 1413–1421.

- Zhu, J.; Dong, H.; Zheng, W.; Li, S.; Huang, Y.; Xi, L. Review and prospect of data-driven techniques for load forecasting in integrated energy systems. Appl. Energy 2022, 321, 119269.

- Xiong, P.P.; Dang, Y.G.; Yao, T.X. Optimal modeling and forecasting of the energy consumption and production in China. Energy 2014, 77, 623–634.

- Xiong, X.; Hu, X.; Guo, H. A hybrid optimized grey seasonal variation index model improved by whale optimization algorithm for forecasting the residential electricity consumption. Energy 2021, 234, 121127.

- Zeng, S.; Su, B.; Zhang, M.; Gao, Y.; Liu, J.; Luo, S.; Tao, Q. Analysis and forecast of China’s energy consumption structure. Energy Policy 2021, 159, 112630.

- Karlsson, S. Forecasting with Bayesian vector autoregression. Handb. Econ. Forecast. 2013, 2, 791–897.

- Khan, S.; Alghulaiakh, H. ARIMA model for accurate time series stocks forecasting. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 524–528.

- Contreras, J.; Espinola, R.; Nogales, F.J.; Conejo, A.J. ARIMA models to predict next-day electricity prices. IEEE Trans. Power Syst. 2003, 18, 1014–1020.

- Zhao, H.; Guo, S. An optimized grey model for annual power load forecasting. Energy 2016, 107, 272–286.

- Box, G.E.P.; Jenkins, G. Time Series Analysis, Forecasting and Control; Holden-Day, Incorporated: San Francisco, CA, USA, 1990; pp. 238–242.

- Sen, P.; Roy, M.; Pal, P. Application of ARIMA for forecasting energy consumption and GHG emission: A case study of an Indian pig iron manufacturing organization. Energy 2016, 116, 1031–1038.

- Abdel-Aal, R.E.; Al-Garni, A.Z. Forecasting monthly electric energy consumption in eastern Saudi Arabia using univariate time-series analysis. Energy 2014, 22, 1059–1069.

- Wang, Q.; Li, S.; Li, R. China’s dependency on foreign oil will exceed 80% by 2030: Developing a novel NMGM-ARIMA to forecast China’s foreign oil dependence from two dimensions. Energy 2018, 163, 151–167.

- Deng, J. Control problems of grey systems. J. Huazhong Inst. Technol. 1982, 10, 9–18.

- Wang, Q.; Song, X. Forecasting China’s oil consumption: A comparison of novel nonlinear-dynamic grey model (GM), linear GM, nonlinear GM and metabolism GM. Energy 2019, 183, 160–171.

- Wang, Z.X.; Hao, P. An improved grey multivariable model for predicting industrial energy consumption in China. Appl. Math. Model. 2016, 40, 5745–5758.

- Ding, S.; Hipel, K.W.; Dang, Y. Forecasting China’s electricity consumption using a new grey prediction model. Energy 2018, 149, 314–328.

- Abisoye, B.O.; Sun, Y.; Zenghui, W. A survey of artificial intelligence methods for renewable energy forecasting: Methodologies and insights. Renew. Energy Focus 2024, 48, 100529.

- Onwusinkwue, S.; Osasona, F.; Ahmad, I.A.I.; Anyanwu, A.C.; Dawodu, S.O.; Obi, O.C.; Hamdan, A. Artificial intelligence (AI) in renewable energy: A review of predictive maintenance and energy optimization. World J. Adv. Res. Rev. 2024, 21, 2487–2499.

- Huang, X.; Li, Q.; Tai, Y.; Chen, Z.; Liu, J.; Shi, J.; Liu, W. Time series forecasting for hourly photovoltaic power using conditional generative adversarial network and Bi-LSTM. Energy 2022, 246, 123403.

- Zhao, E.; Sun, S.; Wang, S. New developments in wind energy forecasting with artificial intelligence and big data: A scientometric insight. Data Sci. Manag. 2022, 5, 84–95.

- Raza, M.Q.; Khosravi, A. A review on artificial intelligence based load demand forecasting techniques for smart grid and buildings. Renew. Sustain. Energy Rev. 2015, 50, 1352–1372.

- Azadeh, A.; Babazadeh, R.; Asadzadeh, S.M. Optimum estimation and forecasting of renewable energy consumption by artificial neural networks. Renew. Sustain. Energy Rev. 2013, 27, 605–612.

- Liu, M.; Cao, Z.; Zhang, J.; Wang, L.; Huang, C.; Luo, X. Short-term wind speed forecasting based on the Jaya-SVM model. Int. J. Electr. Power Energy Syst. 2020, 121, 106056.

- Mengshu, S.; Yuansheng, H.; Xiaofeng, X.; Dunnan, L. China’s coal consumption forecasting using adaptive differential evolution algorithm and support vector machine. Resour. Policy 2021, 74, 102287.

- Manzoor, H.U.; Khan, A.R.; Flynn, D.; Alam, M.M.; Akram, M.; Imran, M.A.; Zoha, A. Fedbranched: Leveraging federated learning for anomaly-aware load forecasting in energy networks. Sensors 2023, 23, 3570.

- Fekri, M.N.; Grolinger, K.; Mir, S. Distributed load forecasting using smart meter data: Federated learning with Recurrent Neural Networks. Int. J. Electr. Power Energy Syst. 2022, 137, 107669.

- Badri, A.; Ameli, Z.; Birjandi, A.M. Application of Artificial Neural Networks and Fuzzy logic Methods for Short Term Load Forecasting. Energy Procedia 2012, 14, 1883–1888.

- Wang, H.; Lei, Z.; Zhang, X.; Zhou, B.; Peng, J. A review of deep learning for renewable energy forecasting. Energy Convers. Manag. 2019, 198, 111799.

- Aslam, S.; Herodotou, H.; Mohsin, S.M.; Javaid, N.; Ashraf, N.; Aslam, S. A survey on deep learning methods for power load and renewable energy forecasting in smart microgrids. Renew. Sustain. Energy Rev. 2021, 144, 110992.

- Mamun, A.A.; Sohel, M.; Mohammad, N.; Sunny, M.S.H.; Dipta, D.R.; Hossain, E. A Comprehensive Review of the Load Forecasting Techniques Using Single and Hybrid Predictive Models. IEEE Access 2020, 8, 134911–134939.

- Dedinec, A.; Filiposka, S.; Dedinec, A.; Kocarev, L. Deep belief network based electricity load forecasting: An analysis of Macedonian case. Energy 2016, 115, 1688–1700.

- Dong, X.; Deng, S.; Wang, D. A short-term power load forecasting method based on k-means and SVM. J. Ambient. Intell. Humaniz. Comput. 2022, 13, 5253–5267.

- Hu, S.; Xiang, Y.; Huo, D.; Jawad, S.; Liu, J. An improved deep belief network based hybrid forecasting method for wind power. Energy 2021, 224, 120185.

- Wei, Y.; Zhang, H.; Dai, J.; Zhu, R.; Qiu, L.; Dong, Y.; Fang, S. Deep belief network with swarm spider optimization method for renewable energy power forecasting. Processes 2023, 11, 1001.

- Chaturvedi, S.; Rajasekar, E.; Natarajan, S.; McCullen, N. A comparative assessment of SARIMA, LSTM RNN and Fb Prophet models to forecast total and peak monthly energy demand for India. Energy Policy 2022, 168, 113097.

- Fang, W.; Chen, Y.; Xue, Q. Survey on research of RNN-based spatio-temporal sequence prediction algorithms. J. Big Data 2021, 3, 97.

- Abumohsen, M.; Owda, A.Y.; Owda, M. Electrical load forecasting using LSTM, GRU, and RNN algorithms. Energies 2023, 16, 2283.

- Sun, L.; Qin, H.; Przystupa, K.; Majka, M.; Kochan, O. Individualized short-term electric load forecasting using data-driven meta-heuristic method based on LSTM network. Sensors 2022, 22, 7900.

- Kim, J.; Obregon, J.; Park, H.; Jung, J.Y. Multi-step photovoltaic power forecasting using transformer and recurrent neural networks. Renew. Sustain. Energy Rev. 2024, 200, 114479.

- Giacomazzi, E.; Haag, F.; Hopf, K. Short-term electricity load forecasting using the temporal fusion transformer: Effect of grid hierarchies and data sources. In Proceedings of the 14th ACM International Conference on Future Energy Systems, Orlando, FL, USA, 20–23 June 2023; pp. 353–360.

- Galindo Padilha, G.A.; Ko, J.; Jung, J.J.; de Mattos Neto, P.S.G. Transformer-based hybrid forecasting model for multivariate renewable energy. Appl. Sci. 2022, 12, 10985.

- Xu, H.; Hu, F.; Liang, X. A framework for electricity load forecasting based on attention mechanism time series depthwise separable convolutional neural network. Energy 2024, 299, 131258.

- Singh, S.N.; Mohapatra, A. Data driven day-ahead electrical load forecasting through repeated wavelet transform assisted SVM model. Appl. Soft Comput. 2021, 111, 107730.

- Zeng, A.; Chen, M.; Zhang, L.; Xu, Q. Are transformers effective for time series forecasting? In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 11121–11128.

- Wang, J.; Wang, Y.; Li, Z. A combined framework based on data preprocessing, neural networks and multi-tracker optimizer for wind speed prediction. Sustain. Energy Technol. Assess. 2020, 40, 100757.

- Zheng, J.; Du, J.; Wang, B.; Klemeš, J.J.; Liao, Q.; Liang, Y. A hybrid framework for forecasting power generation of multiple renewable energy sources. Renew. Sustain. Energy Rev. 2023, 172, 113046.

- Hajirahimi, Z.; Khashei, M. Hybridization of hybrid structures for time series forecasting: A review. Artif. Intell. Rev. 2023, 56, 1201–1261.

- Song, C.; Fu, X. Research on different weight combination in air quality forecasting models. J. Clean. Prod. 2020, 261, 121169.

- Wang, Y.M.; Luo, Y. Integration of correlations with standard deviations for determining attribute weights in multiple attribute decision making. Math. Comput. Model. 2010, 51, 1–12.

- Qu, W.; Li, J.; Song, W.; Li, X.; Zhao, Y.; Dong, H.; Qi, Y. Entropy-weight-method-based integrated models for short-term intersection traffic flow prediction. Entropy 2022, 24, 849.

- Raziani, S.; Ahmadian, S.; Jalali, S.M.J.; Chalechale, A. An efficient hybrid model based on modified whale optimization algorithm and multilayer perceptron neural network for medical classification problems. J. Bionic Eng. 2022, 19, 1504–1521.

- Yang, S.S.; Yang, X.H.; Jiang, R.; Zhang, Y.C. New optimal weight combination model for forecasting precipitation. Math. Probl. Eng. 2012, 1, 376010.

- Wang, C.; Lin, H.; Hu, H.; Yang, M.; Ma, L. A hybrid model with combined feature selection based on optimized VMD and improved multi-objective coati optimization algorithm for short-term wind power prediction. Energy 2024, 293, 130684.

- Wang, Y.; Wang, D.; Tang, Y. Clustered hybrid wind power prediction model based on ARMA, PSO-SVM, and clustering methods. IEEE Access 2020, 8, 17071–17079.

- Tang, T.; Jiang, W.; Zhang, H.; Nie, J.; Xiong, Z.; Wu, X.; Feng, W. GM (1, 1) based improved seasonal index model for monthly electricity consumption forecasting. Energy 2022, 252, 124041.

- Box, G.E.; Pierce, D.A. Distribution of residual autocorrelations in autoregressive-integrated moving average time series models. J. Am. Stat. Assoc. 1970, 65, 1509–1526.

- Cortes, C.; Vapnik, V. Support vector machine. Mach. Learn. 1995, 20, 273–297.

- Aburomman, A.A.; Reaz, M.B.I. A novel SVM-kNN-PSO ensemble method for intrusion detection system. Appl. Soft Comput. 2016, 38, 360–372.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

| Models | Feature | Advantages | Disadvantages | Applied to |
|---|---|---|---|---|
| Regression analysis | Estimates and predicts the dependent variable from the independent variables; the earliest forecasting method applied in the field of energy management | The calculation process is simple and easy to understand | Requires a large sample size; Poor adaptability and limited flexibility | Forecasting electricity demand in South Africa [12]; Forecasting China’s energy consumption structure [13] |
| ARIMA | Transforms a non-stationary time series into a stationary one, then regresses the dependent variable on its lagged values and on the current and lagged values of the random error term | The model is simple and only requires endogenous variables | Requires time series data that are stationary, or stationary after differencing; Essentially captures only linear relationships, not nonlinear ones | Forecasting monthly electricity consumption [20]; Forecasting energy consumption and greenhouse gas emissions from Indian pig iron manufacturing companies [19] |
| Grey | GM can be established with a small amount of incomplete information | Requires a small sample size; Higher short-term prediction accuracy | Cannot consider the relationship between factors; Large long-term prediction error | Forecasting China’s electricity consumption [23]; Predicting industrial energy consumption in China [24] |
| ANN | A highly complex nonlinear dynamic learning system; Suitable for handling inaccurate | Can fully approximate complex nonlinear relationships | Many model parameters; Large sample size required for model training | Forecasting renewable energy consumption [31]; Forecasting electricity load [40] |
| SVR | Maps data to a high-dimensional feature space through a nonlinear mapping and performs regression there | Works with small samples; Simplifies regression problems; High flexibility | Difficult to train on large samples | Forecasting short-term wind speed [32]; Forecasting China’s coal consumption [33] |
| LSTM | Persistent input storage enables; assessment of information | Capable of learning long-term dependencies; Suited to time series-dependent problems | Requires extended training durations | Forecasting electrical load [46,47] |
| Transformer | Processes multiple attention subspaces in parallel | Supports parallel computing; Adapts to multimodal tasks | Prone to overfitting in small-sample scenarios | Forecasting multi-step photovoltaic power [48]; Forecasting multivariate renewable energy [50] |

| Parameter | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Estimated value | −0.055 | 0.054 | 0.059 | 0.055 | 2.651 | 2.815 | 2.930 | 3.101 |

| Model Parameter | p | d | q |
|---|---|---|---|
| Value | 2 | 1 | 2 |

| Function | Formula | Dimension | Range | Theoretical Optimum |
|---|---|---|---|---|
| Schwefel 2.21 | | 30 | [−100, 100] | 0 |
| Rosenbrock | | 30 | [−30, 30] | 0 |
| Quartic | | 30 | [−1.28, 1.28] | 0 |
| Schwefel 2.26 | | 30 | [−500, 500] | 0 |
| Rastrigin | | 30 | [−5.12, 5.12] | 0 |
| Griewank | | 30 | [−600, 600] | 0 |
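For readers who want to reproduce the benchmark setting, the six functions can be written in their widely used textbook forms, as sketched below. Several points are assumptions rather than statements from the table: that F1 to F6 follow the row order above, that Quartic is used without its optional random-noise term, and that Schwefel 2.26 is the shifted variant (offset 418.9829 per dimension), which is the form whose minimum is approximately 0.

```python
import math


def schwefel_2_21(x):      # assumed F1: largest absolute coordinate
    return max(abs(v) for v in x)


def rosenbrock(x):         # assumed F2: narrow curved valley, minimum at (1, ..., 1)
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def quartic(x):            # assumed F3: noise-free variant
    return sum((i + 1) * v ** 4 for i, v in enumerate(x))


def schwefel_2_26(x):      # assumed F4: shifted so the minimum is close to 0
    return 418.9829 * len(x) - sum(v * math.sin(math.sqrt(abs(v))) for v in x)


def rastrigin(x):          # assumed F5: many regularly spaced local minima
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def griewank(x):           # assumed F6: product term couples the coordinates
    total = sum(v * v for v in x) / 4000.0
    product = math.prod(math.cos(v / math.sqrt(i + 1)) for i, v in enumerate(x))
    return total - product + 1.0


DIM = 30  # dimension listed in the table
origin = [0.0] * DIM
```

Each function takes a list of coordinates, so it can be passed directly as the objective of any optimizer that evaluates candidate positions one at a time.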

| Function | Metric | GWO | MFO | WOA | PSO | APSO |
|---|---|---|---|---|---|---|
| F1 | Std. Dev. | 8.24 × 10^−7 | 3.34 × 10 | 1.07 × 10^−6 | 2.32 × 10 | 1.00 × 10^−10 |
| F1 | Mean | 1.20 × 10^−10 | 3.52 × 10^0.1 | 5.01 × 10^−11 | 2.50 × 10 | 0.00 × 10^0 |
| F2 | Std. Dev. | 8.47 × 10^−1 | 4.22 × 10^−1 | 1.26 × 10^−1 | 1.95 × 10^5 | 2.63 × 10^−1 |
| F2 | Mean | 2.49 × 10^−2 | 5.00 × 10^−2 | 1.00 × 10^−2 | 1.50 × 10^5 | 5.00 × 10^−3 |
| F3 | Std. Dev. | 7.67 × 10^−4 | 2.80 × 10^−3 | 3.85 × 10^−4 | 7.97 × 10^−2 | 5.27 × 10^−5 |
| F3 | Mean | 5.00 × 10^−5 | 1.51 × 10^−3 | 2.99 × 10^−5 | 8.12 × 10^−2 | 1.01 × 10^−6 |
| F4 | Std. Dev. | 1.03 × 10^3 | 6.45 × 10^2 | 2.75 × 10^2 | 3.94 × 10^2 | 2.39 × 10 |
| F4 | Mean | 1.53 × 10^3 | 1.17 × 10^3 | 5.04 × 10^2 | 1.00 × 10^3 | 2.03 × 10^2 |
| F5 | Std. Dev. | 5.69 × 10^0 | 0.79 × 10^0 | 0.12 × 10^0 | 2.98 × 10 | 0.04 × 10^0 |
| F5 | Mean | 0.98 × 10 | 5.02 × 10^0 | 2.00 × 10^0 | 2.77 × 10 | 0.99 × 10^0 |
| F6 | Std. Dev. | 6.45 × 10^−3 | 6.50 × 10^−2 | 3.78 × 10^−3 | 3.98 × 10^−1 | 8.25 × 10^−9 |
| F6 | Mean | 1.00 × 10^−0.4 | 5.17 × 10^−2 | 4.92 × 10^−5 | 3.97 × 10^−1 | 0.00 × 10^0 |

| k Value | Comfort Level |
|---|---|
| ≤0 | extremely cold |
| [0, 25] | cold |
| [26, 38] | relatively cold |
| [39, 50] | slightly cold |
| [51, 58] | relatively comfortable |
| [59, 70] | comfortable |
| [71, 75] | warm |
| [76, 79] | slightly hot |
| [80, 84] | relatively hot |
| [85, 88] | hot |
| ≥89 | extremely hot |

| Weather Type | Sunny | Cloudy | Overcast | Drizzle | Moderate Rain | Heavy Rainfall |
|---|---|---|---|---|---|---|
| Quantized value | 1 | 0.8 | 0.6 | 0.5 | 0.3 | 0.1 |
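The quantization in the table above amounts to a lookup from weather label to a numeric feature. A minimal sketch, with the values taken from the table and the names `WEATHER_VALUE` and `encode_weather` chosen here purely for illustration:

```python
# Quantized values copied from the weather-type table.
WEATHER_VALUE = {
    "Sunny": 1.0,
    "Cloudy": 0.8,
    "Overcast": 0.6,
    "Drizzle": 0.5,
    "Moderate Rain": 0.3,
    "Heavy Rainfall": 0.1,
}


def encode_weather(labels):
    """Map weather-type labels to their quantized values.

    Raises KeyError for a label that is not in the table, so unknown
    categories are surfaced instead of being silently encoded.
    """
    return [WEATHER_VALUE[label] for label in labels]


week = ["Sunny", "Cloudy", "Drizzle", "Heavy Rainfall"]
encoded = encode_weather(week)
```

Failing loudly on unknown labels is a design choice of this sketch; a production pipeline might instead map them to a default value.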

| Model | Weekday MAE | Weekday RMSE | Weekend MAE | Weekend RMSE |
|---|---|---|---|---|
| APSO-ARIMA-SVR | 274.23 | 321.50 | 249.81 | 304.10 |
| ARIMA-SVR | 378.30 | 426.97 | 302.97 | 346.06 |
| RGM-SVR | 634.89 | 721.87 | 442.65 | 510.88 |
| SVR | 420.68 | 496.54 | 298.28 | 341.09 |
| ARIMA | 367.69 | 451.67 | 276.63 | 336.63 |
| RGM | 874.19 | 961.47 | 681.68 | 883.33 |
| Transformer | 566.83 | 633.56 | 410.66 | 467.28 |
| LSTM | 511.72 | 611.29 | 333.65 | 395.87 |
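The two error metrics in the table follow their standard definitions, which can be sketched as below. The helper `reduction_pct` and the dictionary `weekday_mae` are illustrative names introduced here; the numbers in the dictionary are copied from the weekday MAE column above.

```python
import math


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean square error; penalises large deviations more than MAE."""
    return math.sqrt(
        sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def reduction_pct(baseline, proposed):
    """Relative error reduction of `proposed` versus `baseline`, in percent."""
    return 100.0 * (baseline - proposed) / baseline


# Weekday MAE values taken from the table above.
weekday_mae = {
    "APSO-ARIMA-SVR": 274.23,
    "ARIMA-SVR": 378.30,
    "ARIMA": 367.69,
    "SVR": 420.68,
}
gain_vs_arima_svr = reduction_pct(weekday_mae["ARIMA-SVR"],
                                  weekday_mae["APSO-ARIMA-SVR"])
```

Expressing the gap as a relative reduction makes the comparison independent of the load unit, which the table does not state.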

| Model | DM | p |
|---|---|---|
| ARIMA-SVR | −2.1558 | 0.0417 |
| RGM-SVR | −4.6302 | 0.0001 |
| ARIMA | −2.6436 | 0.0145 |
| SVR | −2.6006 | 0.0159 |
| RGM | −5.3833 | 0.000018 |
| Transformer | −2.9749 | 0.0678 |
| LSTM | −2.2153 | 0.0369 |
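A Diebold-Mariano comparison of two forecast series can be sketched as follows. This is a simplified version under stated assumptions: one-step-ahead forecasts, squared-error loss, no autocovariance correction, and a two-sided p-value from the normal approximation. The paper's exact loss function, horizon, and reference distribution are not given in this text, so values produced by this sketch need not match the table. A negative statistic here means the first model has the lower loss, which is the assumed sign convention; the toy data are hypothetical.

```python
import math
from statistics import NormalDist, mean


def dm_test(actual, pred_a, pred_b):
    """Simplified DM test: returns (statistic, two-sided p-value).

    The loss differential is d_t = e_a(t)^2 - e_b(t)^2, so a negative
    statistic favours model A.
    """
    d = [(y - a) ** 2 - (y - b) ** 2
         for y, a, b in zip(actual, pred_a, pred_b)]
    n = len(d)
    d_bar = mean(d)
    var_d = sum((x - d_bar) ** 2 for x in d) / n   # variance of the differential
    stat = d_bar / math.sqrt(var_d / n)
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(stat)))
    return stat, p_value


# Toy data (hypothetical): model A tracks the actual series more closely.
actual = [10.0, 12.0, 11.0, 13.0, 12.0, 14.0]
model_a = [10.5, 11.5, 11.2, 13.4, 11.9, 13.8]
model_b = [11.0, 13.0, 12.0, 12.0, 13.0, 15.0]
stat, p_value = dm_test(actual, model_a, model_b)
```

For short test windows, a Student's t reference distribution is often preferred over the normal approximation used here.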


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, W.; Xu, H.; Chen, P.; Zhang, J.; Li, J.; Cai, T. Elastic Momentum-Enhanced Adaptive Hybrid Method for Short-Term Load Forecasting. Energies 2025, 18, 3263. https://doi.org/10.3390/en18133263
