Voltage Regulation Strategies in Photovoltaic-Energy Storage System Distribution Network: A Review

Abstract

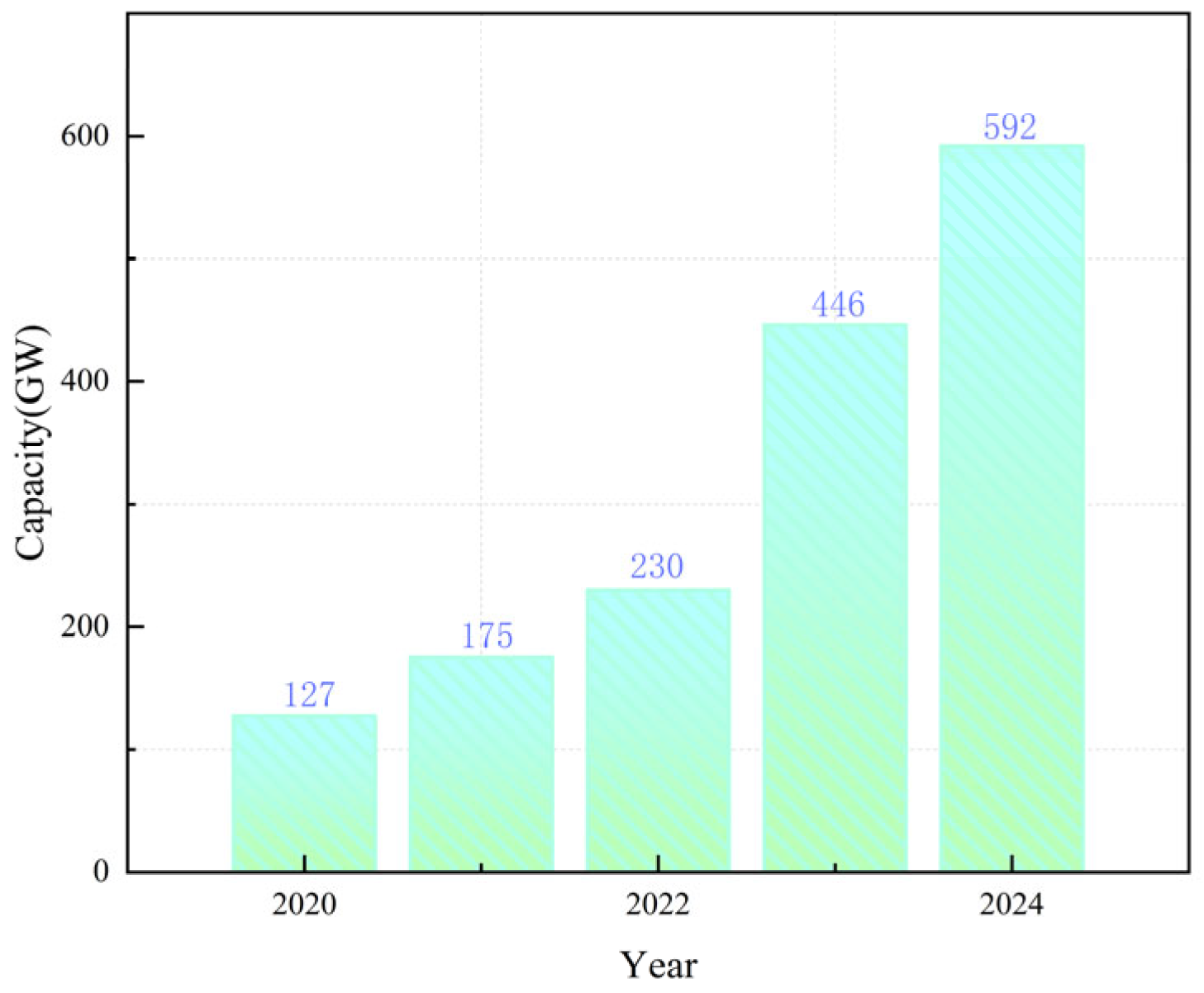

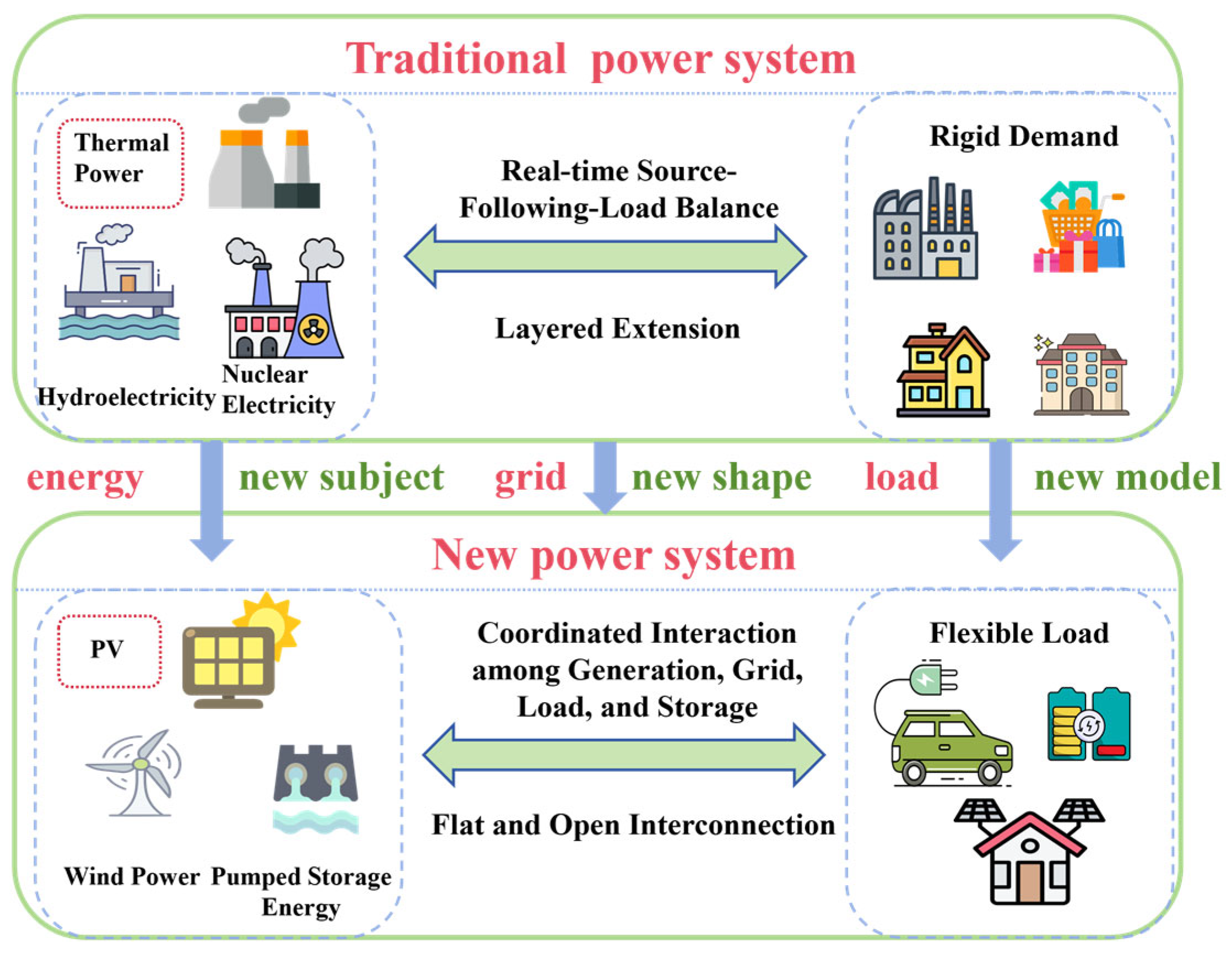

1. Introduction

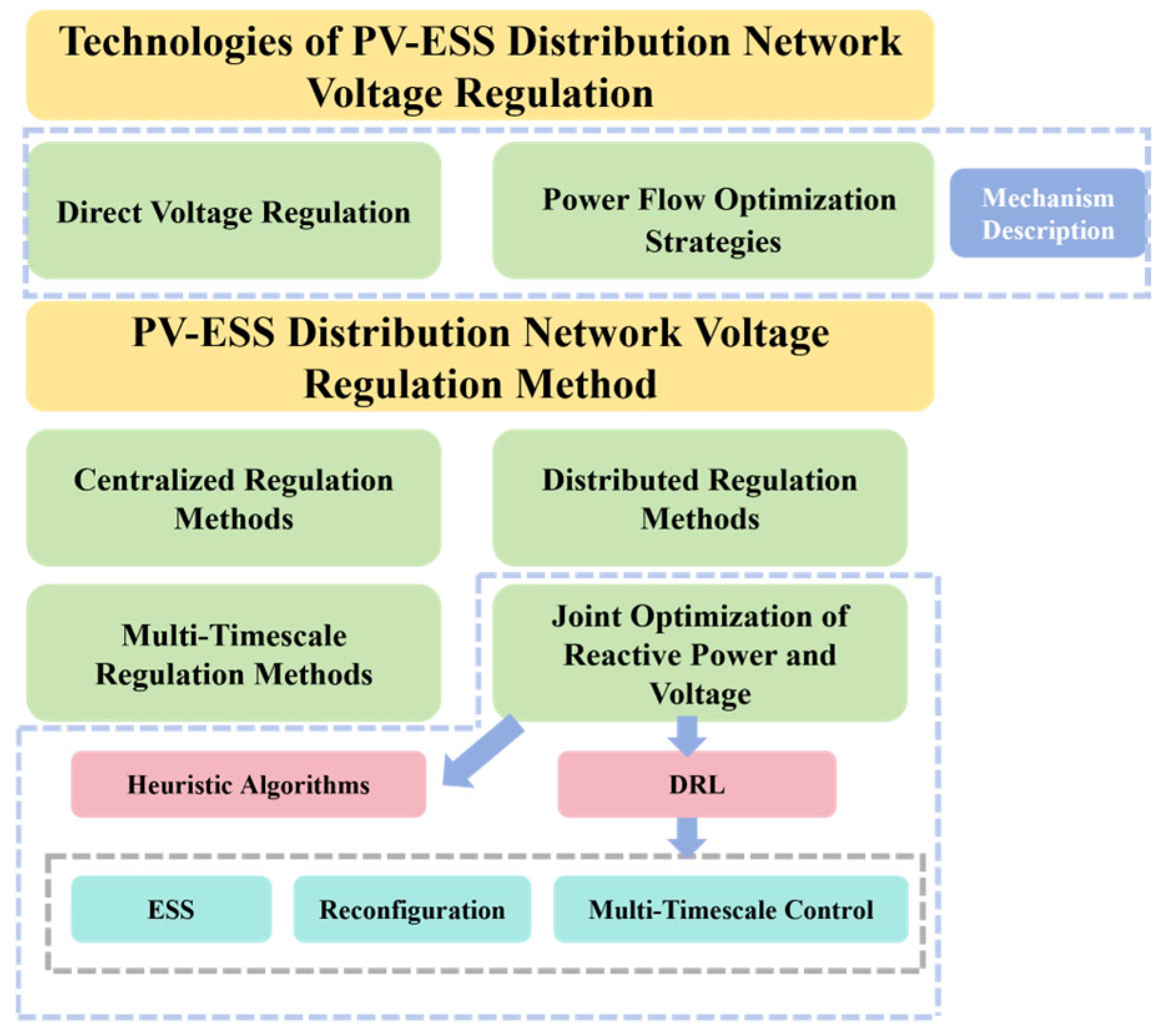

2. Analysis of Voltage Violation Mechanism in PV-ESS Distribution Network

2.1. Voltage Violations Caused by DPV Access

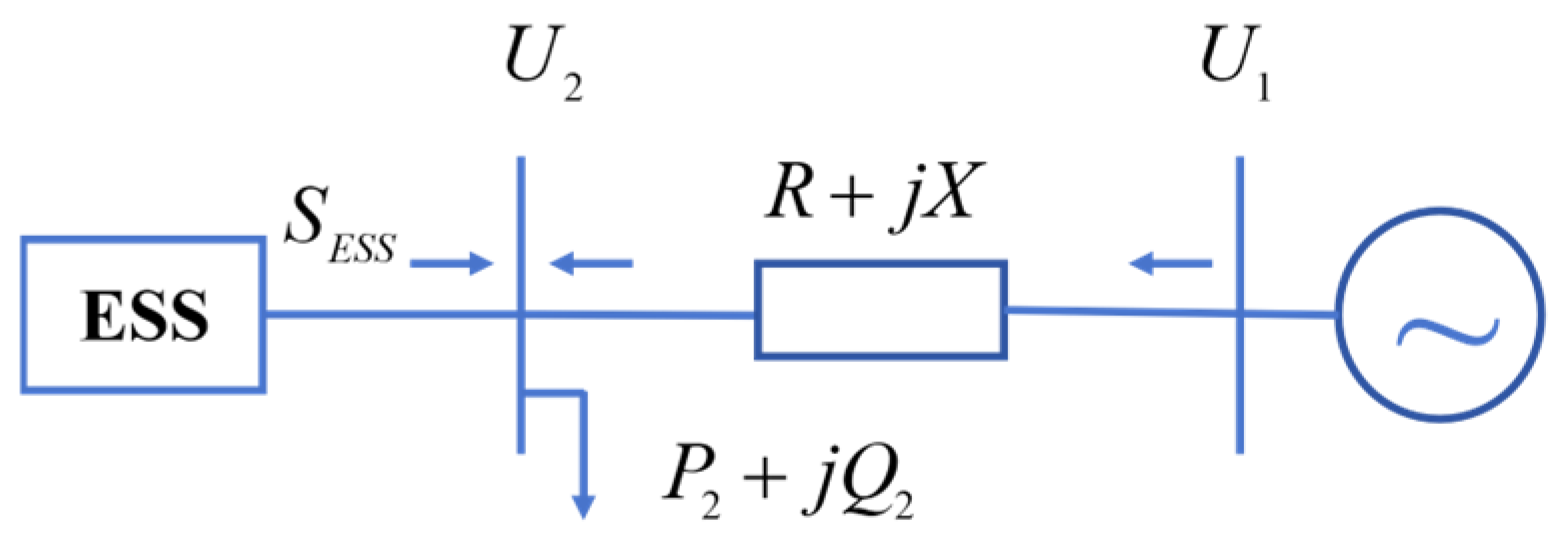

2.2. Voltage Violations Caused by Charging and Discharging Behavior of Energy Storage System

3. Technologies of PV-ESS Distribution Network Voltage Regulation

3.1. Direct Voltage Regulation

3.1.1. Principle of OLTC

3.1.2. Principle of Voltage Regulation Using SVC

3.2. Power Flow Optimization Strategies

3.2.1. PV Inverter Voltage Regulation Principle

3.2.2. Voltage Regulation Principles for ESS

3.2.3. Principles of Voltage Regulation Based on Cluster Partitioning in Distribution Network

4. PV-ESS Distribution Network Voltage Regulation Method

4.1. Centralized Regulation Methods

4.2. Distributed Regulation Methods

4.3. Multi-Timescale Regulation Methods

4.4. Joint Optimization of Reactive Power and Voltage

4.4.1. Application of Commonly Used Heuristic Algorithms for Voltage Regulation in PV-ESS Distribution Network

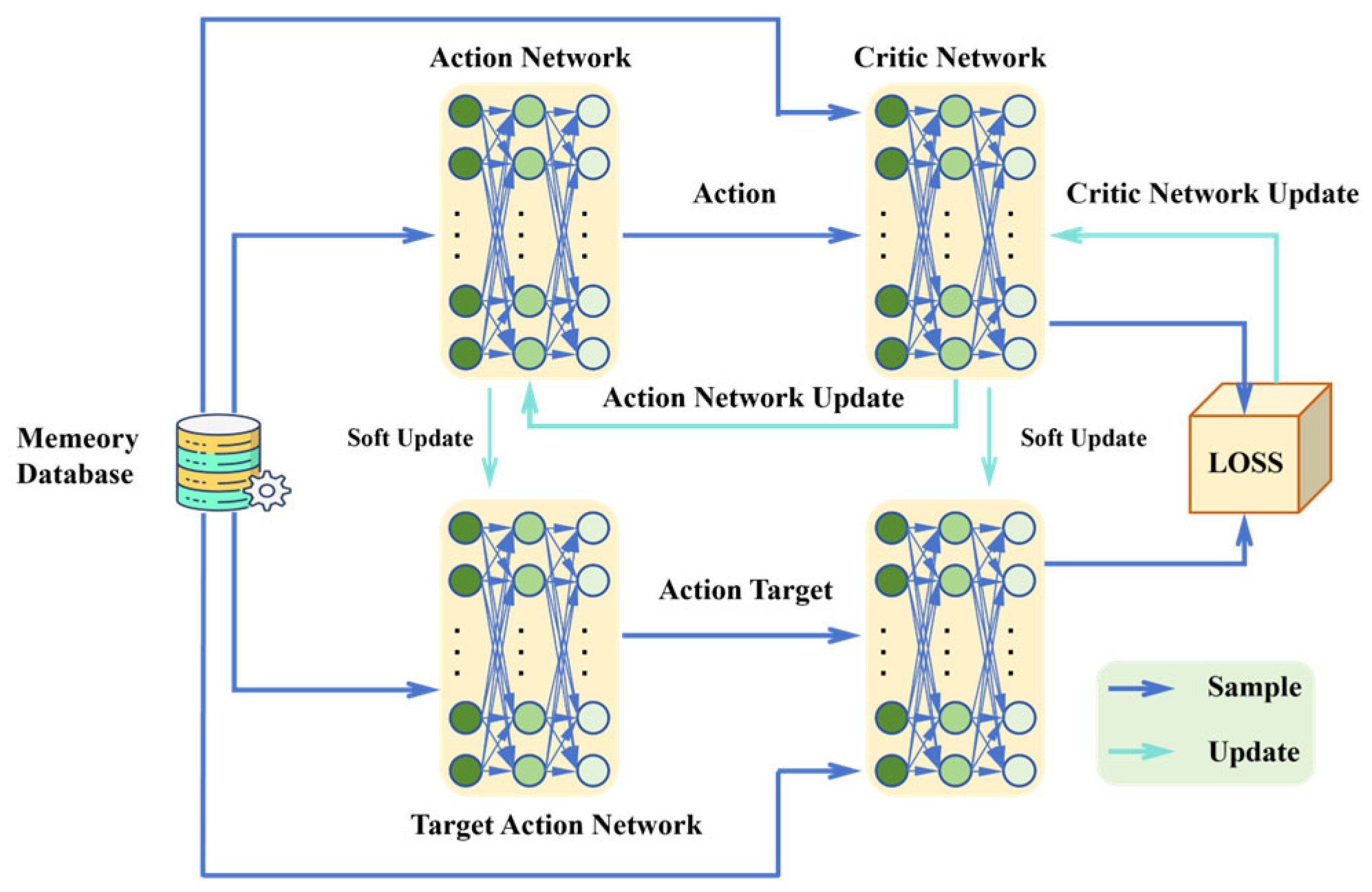

4.4.2. Deep Reinforcement Learning for Voltage Regulation in PV-ESS and Distribution Network

- Reinforcement Learning

- (1) State set: $S$ is the set of environment states, where the state of the agent at time $t$ is $s_t \in S$;

- (2) Action set: $A$ is the set of actions of the agent, where the action of the agent at time $t$ is $a_t \in A$;

- (3) State transition process: the state transition process denotes the probability that the agent, after performing action $a_t$ in state $s_t$, transfers to the next-time state $s_{t+1}$;

- (4) Reward function: the reward function is the immediate reward obtained by the agent after performing action $a_t$ in state $s_t$.

- Deep Reinforcement Learning Algorithm Summary

- Deep Reinforcement Learning for PV-ESS and Distribution Network

- 1. Energy Storage System

- 2. Distribution Network Reconfiguration

- 3. Multi-Timescale Control
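The four elements of the reinforcement learning formulation listed above (state set, action set, state transition process, reward function) can be illustrated with a minimal sketch. It is not a model from the reviewed literature: the single-bus setting, the linear voltage sensitivity, the noise range, the three discrete actions, and the names `ToyVoltageMDP`, `greedy_action`, and `rollout` are all assumptions chosen only to make the definitions concrete.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyVoltageMDP:
    """One-bus toy MDP; every number here is an illustrative assumption."""
    sensitivity: float = 0.05            # assumed dV/dQ (p.u. per unit of action)
    v_ref: float = 1.0                   # voltage target in p.u.
    noise: float = 0.01                  # half-width of the random PV disturbance
    actions: tuple = (-0.2, 0.0, 0.2)    # action set A: reactive power steps

    def step(self, s_t, a_t, rng):
        """Sample s_{t+1} from the transition process and return the immediate reward."""
        disturbance = rng.uniform(-self.noise, self.noise)
        s_next = s_t + self.sensitivity * a_t + disturbance
        reward = -abs(s_next - self.v_ref)   # penalize voltage deviation
        return s_next, reward

    def greedy_action(self, s_t):
        """Pick the action whose expected next voltage is closest to v_ref."""
        return min(self.actions,
                   key=lambda a: abs(s_t + self.sensitivity * a - self.v_ref))


def rollout(env, s_0, steps, seed=0):
    """Run a fixed greedy policy and accumulate the rewards."""
    rng = random.Random(seed)
    s_t, total = s_0, 0.0
    for _ in range(steps):
        a_t = env.greedy_action(s_t)
        s_t, r_t = env.step(s_t, a_t, rng)
        total += r_t
    return s_t, total
```

Here the state $s_t$ is a single bus voltage and the policy is hand-written; a deep reinforcement learning agent would instead learn the mapping from $s_t$ to $a_t$ from the sampled rewards.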

5. Challenges and Prospects of Voltage Regulation in PV-ESS Distribution Network

5.1. Challenges

- The trade-off between timeliness and accuracy in dynamic cluster partitioning: Traditional partitioning methods incur minute-level delays in thousand-node systems, which makes real-time requirements difficult to meet [95,96,97], while static models have a failure rate as high as 42% in photovoltaic fluctuation scenarios. In multidimensional optimization, network loss shows a significant negative correlation with the reliability index, and the heterogeneity of the resources further fragments them. Progress is expected from distributed computing, digital twin modeling, and multi-timescale collaborative optimization.

- Policy transfer and safety of reinforcement learning: Current training relies mainly on simulation environments, which struggle to reproduce the dynamic characteristics of a real distribution network, and random policy exploration may threaten system security. A high-fidelity digital twin is needed for safe policy transfer, and data-driven methods should be integrated with physical models to improve generalization and control credibility. A digital twin provides a real-time virtual replica of the power system, so reinforcement learning policies can be tested and adapted more safely. By narrowing the gap between simulation and reality, it reduces policy transfer risk and improves control reliability under dynamic grid conditions. Multi-objective learning, transfer learning, and security constraint mechanisms are key to making these algorithms practical.

- The multi-dimensional challenges of EV participation in voltage regulation: At the privacy level, privacy-preserving computing frameworks such as federated learning are required. At the control level, response delay and control granularity remain open issues, and interface differences reduce the accuracy of voltage regulation. At the market level, there is no universal mechanism that balances the interests of the grid and the user. In addition, frequent charging and discharging accelerates battery degradation, which calls for a fine-grained battery life assessment model. In essence, the problem is a trade-off among user flexibility, real-time grid requirements, and equipment life, which needs to be addressed through edge computing, standardized interfaces, and a digital evaluation system.

- Three-phase imbalance and harmonics: Their impact on grid voltage appears mainly as degraded voltage quality (neutral-point shift and waveform distortion), increased equipment loss and reduced service life, resonance risk and protection maloperation, and, with new energy grid connection, harmonic superposition and aggravated imbalance. Future work needs breakthroughs in intelligent monitoring and dynamic compensation, power electronic harmonic suppression using multi-level converters and AI predictive control, and coordinated control of new energy sources, in order to build a highly resilient power grid that spans intelligent perception, multi-dimensional analysis, and efficient management.
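One simple form of the security constraint mechanism mentioned in the reinforcement learning item above is an action "shield" that clamps whatever the agent proposes before it reaches the device. The sketch below is only an illustration under stated assumptions: a linear voltage model `v_next = v_now + sensitivity * q`, a positive sensitivity, per-unit limits of 0.95 and 1.05, and the hypothetical function name `shield_action`. None of these come from the reviewed works.

```python
def shield_action(v_now, q_proposed, sensitivity=0.05,
                  v_min=0.95, v_max=1.05, q_limit=1.0):
    """Clamp a proposed reactive-power action so the predicted voltage stays in bounds.

    Assumes the linear model v_next = v_now + sensitivity * q with sensitivity > 0.
    """
    if sensitivity <= 0:
        raise ValueError("sensitivity must be positive in this sketch")
    # Range of q for which the predicted voltage stays inside [v_min, v_max].
    q_low = (v_min - v_now) / sensitivity
    q_high = (v_max - v_now) / sensitivity
    q_safe = min(max(q_proposed, q_low), q_high)
    # The device rating takes precedence if the voltage range cannot be reached.
    return min(max(q_safe, -q_limit), q_limit)
```

With such a layer, random exploration during training can still propose any action, but only actions that the assumed model predicts to be safe are applied; the quality of the shield is therefore limited by the quality of that model.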

5.2. Prospects

- Uncertainty modeling and distributed cooperative control: The strong volatility and uncertainty of distributed photovoltaic generation and customer-side loads are difficult for traditional centralized regulation to handle fully. Centralized methods based on probabilistic prediction can quantify uncertainty on both the source and load sides and improve system robustness by combining stochastic and robust optimization. In distributed regulation frameworks, existing methods mostly rely on point predictions or measurement data and make little use of source-load probabilistic information. A distributed communication mechanism that supports the transmission of probabilistic information is therefore needed to maximize the benefit of multi-device regulation under multiple uncertainties.

- Stronger policy transfer and model adaptation: Existing deep reinforcement learning algorithms assume that the system model is static, which makes it difficult to cope with changes in the physical model caused by grid topology changes or the connection of new energy sources; as a result, the trained policy degrades or even fails [98]. To address these challenges, the integration of digital twins, edge computing, and federated learning is emerging as a promising solution. Digital twins provide a real-time virtual replica of the physical grid, enabling rapid simulation and testing of control strategies under various operating scenarios. Edge computing brings computation closer to data sources, allowing faster local decision making and reducing dependence on centralized infrastructure. Federated learning enables distributed devices to train models collaboratively without sharing raw data, which enhances adaptability while preserving data privacy. Future work should develop model-aware, adaptive learning algorithms that can quickly adjust their policies after system changes and thereby maintain continuous and stable control performance.

- Privacy protection mechanisms and system governance models: The participation of EVs in voltage regulation involves user privacy, reflecting the conflict between individual rights and system effectiveness in the digitization of energy. It is crucial to build a “privacy–efficiency” balance mechanism. In the future, technologies such as homomorphic encryption and federated learning can shift regulation from the data level to the knowledge level and thereby enable secure dispatching. In addition, the integration of cryptography and market mechanisms is expected to enable orderly grid regulation while guaranteeing privacy and to promote the transformation of the energy governance model [99].

- Unified framework under multi-device convergence: The key to unified cooperative control of distributed resources such as photovoltaics, energy storage, and electric vehicles lies in overcoming two core challenges: heterogeneous model integration and cross-timescale optimization. In terms of control, a hybrid mode that combines distributed and centralized control can be explored; distributed algorithms such as the alternating direction method of multipliers (ADMM) can reach the global optimization goal while preserving the autonomous regulation capability of each device. In terms of modeling, hybrid techniques that integrate physical mechanisms with data-driven models should be developed, using mechanism models such as state-space equations to capture the dynamic characteristics of the equipment and machine learning methods to predict photovoltaic output fluctuations and EV charging behavior.
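To make the ADMM remark in the last item concrete, the following sketch shows consensus ADMM on a deliberately simple problem: each device holds a private quadratic cost around its own preferred value of a shared setpoint, and the devices agree on the weighted optimum while each solves only its own subproblem. The quadratic costs, the weights, the penalty `rho`, and the function name `consensus_admm` are assumptions for illustration; a real PV-ESS formulation would add power flow and device constraints.

```python
def consensus_admm(weights, preferences, rho=1.0, iterations=200):
    """Consensus ADMM for min over x of sum_i 0.5 * w_i * (x - c_i)^2.

    Each device i keeps a local copy x_i and a scaled dual variable u_i;
    only x_i + u_i is shared with the coordinator (or with neighbors).
    """
    n = len(weights)
    x = [0.0] * n      # local copies held by each device
    u = [0.0] * n      # scaled dual variables
    z = 0.0            # shared (consensus) value
    for _ in range(iterations):
        # Local step: each device solves its own small problem in closed form.
        x = [(w * c + rho * (z - u_i)) / (w + rho)
             for w, c, u_i in zip(weights, preferences, u)]
        # Coordination step: average the shared quantities.
        z = sum(x_i + u_i for x_i, u_i in zip(x, u)) / n
        # Dual step: accumulate each device's disagreement with the consensus.
        u = [u_i + x_i - z for x_i, u_i in zip(x, u)]
    return z, x
```

For this toy problem the consensus value converges to the weighted mean of the preferences, which is what a centralized solver would return; the point of the sketch is that no device has to reveal its cost function to obtain it.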

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ding, M.; Xu, Z.; Wang, W.; Song, Y.; Chen, D. A review on China’s large-scale PV integration: Progress, challenges and recommendations. Renew. Sustain. Energy Rev. 2016, 53, 639–652.

- Zhao, B.; Zhang, X.; Li, P.; Wang, K.; Xue, M.; Wang, C. Optimal sizing, operating strategy and operational experience of a stand-alone micro-grid on Dongfushan Island. Appl. Energy 2014, 113, 1656–1666.

- Zhang, C.; Xu, Y.; Wang, Y.; Dong, Z.; Zhang, R. Three-stage hierarchically-coordinated voltage/var control based on PV inverters considering distribution network voltage stability. IEEE Trans. Sustain. Energy 2022, 13, 868–881.

- Etxegarai, A.; Eguia, P.; Torres, E.; Iturregi, A.; Valverde, V. Review of grid connection requirements for generation assets in weak power grids. Renew. Sustain. Energy Rev. 2015, 41, 1501–1514.

- Antoniadou-Plytaria, K.E.; Kouveliotis-Lysikatos, I.N.; Georgilakis, P.S.; Hatziargyriou, N.D. Distributed and Decentralized Voltage Control of Smart Distribution Networks: Models, Methods, and Future Research. IEEE Trans. Smart Grid 2017, 8, 2999–3008.

- Karimi, M.; Mokhlis, H.; Naidu, K.; Uddin, S.; Bakar, A. Photovoltaic penetration issues and impacts in distribution network—A review. Renew. Sustain. Energy Rev. 2016, 53, 594–605.

- Srirattanawichaikul, W. Modified coordination of voltage-dependent reactive power control with inverter-based DER for voltage regulation in distribution networks. In Proceedings of the 2022 4th International Conference on Smart Power & Internet Energy Systems (SPIES), Beijing, China, 27–30 October 2022.

- Zhao, B.; Xu, Z.; Xu, C.; Wang, C.; Lin, F. Network partition based zonal voltage control for distribution networks with distributed PV systems. IEEE Trans. Smart Grid 2018, 9, 4087–4098.

- Zhang, H.; Xia, C.; Peng, P.; Chen, N.; Gao, B. Research on the Voltage Regulation Strategy of Photovoltaic Power Plant. In Proceedings of the 2018 China International Conference on Electricity Distribution (CICED), Tianjin, China, 17–19 September 2018.

- Zuo, H.; Teng, Y.; Cheng, S.; Sun, P.; Chen, Z. Distributed multi-energy storage cooperative optimization control method for power grid voltage stability enhancement. Electr. Power Syst. Res. 2023, 216, 109012.

- Wang, L.; Liang, D.H.; Crossland, A.F.; Taylor, P.C.; Jones, D.; Wade, N.S. Coordination of multiple energy storage units in a low-voltage distribution network. IEEE Trans. Smart Grid 2015, 6, 2906–2918.

- Boglou, V.; Karlis, A. A many-objective investigation on electric vehicles’ integration into low-voltage energy distribution networks with rooftop PVs and distributed ESSs. IEEE Access 2024, 12, 132210–132235.

- Akbari, H.; Browne, M.C.; Ortega, A.; Huang, M.; Hewitt, N.J.; Norton, B.; McCormack, S.J. Efficient energy storage technologies for photovoltaic systems. Sol. Energy 2019, 192, 144–168.

- Yin, Z.; Ji, X.; Zhang, Y.; Liu, Q.; Bai, X. Data-driven approach for real-time distribution network reconfiguration. IET Gener. Transm. Distrib. 2020, 14, 2450–2463.

- Wang, L.; Bai, F.; Yan, R.; Saha, T.K. Real-time coordinated voltage control of PV inverters and energy storage for weak networks with high PV penetration. IEEE Trans. Power Syst. 2018, 33, 3383–3395.

- Dorostkar-Ghamsari, M.R.; Fotuhi-Firuzabad, M.; Lehtonen, M.; Safdarian, A. Value of distribution network reconfiguration in presence of renewable energy resources. IEEE Trans. Power Syst. 2015, 31, 1879–1888.

- Thomson, M. Automatic voltage control relays and embedded generation. I. Power Eng. J. 2000, 14, 71–76.

- Salman, S.K.; Wan, Z. Voltage control of distribution network with distributed/embedded generation using fuzzy logic-based AVC relay. In Proceedings of the 42nd International Universities Power Engineering Conference (UPEC), Brighton, UK, 4–6 September 2007.

- Raghavendra, P.; Gaonkar, D.N. Online voltage estimation and control for smart distribution network with DG. J. Mod. Power Syst. Clean Energy 2016, 4, 40–46.

- You, Y.; Liu, D.; Yu, N.; Pan, F.; Chen, F. Research on solutions for implement of active distribution network. Prz. Elektrotech. 2012, 88, 238–242.

- Li, S.; Ding, M.; Wang, J.; Zhang, W. Voltage control capability of SVC with var dispatch and slope setting. Electr. Power Syst. Res. 2009, 79, 818–825.

- Calasan, M.; Konjic, T.; Kecojevic, K.; Nikitovic, L. Optimal allocation of static var compensators in electric power systems. Energies 2020, 13, 3219.

- Abdel-Rahman, M.H.; Youssef, F.M.; Saber, A.A. New static var compensator control strategy and coordination with under-load tap changer. IEEE Trans. Power Deliv. 2006, 21, 1630–1635.

- Dash, P.; Sharaf, A.; Hill, E. An adaptive stabilizer for thyristor controlled static VAR compensators for power systems. IEEE Trans. Power Syst. 1989, 4, 403–410.

- Li, M.; Li, W.; Zhao, J.; Chen, W.; Yao, W. Three-layer coordinated control of the hybrid operation of static var compensator and static synchronous compensator. IET Gener. Transm. Distrib. 2016, 10, 2185–2193.

- Samadi, A.; Eriksson, R.; Söder, L.; Rawn, B.G.; Boemer, J.C. Coordinated active power-dependent voltage regulation in distribution grids with PV systems. IEEE Trans. Power Deliv. 2014, 29, 1454–1464.

- Alam, M.J.E.; Muttaqi, K.M.; Sutanto, D. Mitigation of rooftop solar PV impacts and evening peak support by managing available capacity of distributed energy storage systems. IEEE Trans. Sustain. Energy 2013, 28, 3874–3884.

- Cui, J.; Liu, Y.; Qin, H.; Hua, Y.; Zheng, L. A novel voltage regulation strategy for distribution networks by coordinating control of OLTC and air conditioners. Appl. Sci. 2022, 12, 8104.

- Wang, Y.; Tan, K.T.; Peng, X.; So, P. Coordinated control of distributed energy storage systems for voltage regulation in distribution networks. IEEE Trans. Power Deliv. 2015, 31, 1132–1141.

- Kabir, M.N.; Mishra, Y.; Ledwich, G.; Dong, Z.; Wong, K. Coordinated control of grid-connected photovoltaic reactive power and battery energy storage systems to improve the voltage profile of a residential distribution feeder. IEEE Trans. Ind. Inf. 2014, 10, 967–977.

- Alam, M.J.E.; Muttaqi, K.M.; Sutanto, D. A novel approach for ramp-rate control of solar PV using energy storage to mitigate output fluctuations caused by cloud passing. IEEE Trans. Sustain. Energy 2014, 29, 507–518.

- Shu, Z.; Jirutitijaroen, P. Optimal operation strategy of energy storage system for grid-connected wind power plants. IEEE Trans. Sustain. Energy 2013, 5, 190–199.

- Fang, X.; Hodge, B.; Bai, L.; Cui, H.; Li, F. Mean-variance optimization-based energy storage scheduling considering day-ahead and real-time LMP uncertainties. IEEE Trans. Power Syst. 2018, 33, 7292–7295.

- Chai, Y.; Guo, L.; Wang, C.; Zhao, Z.; Du, X.; Pan, J. Network partition and voltage coordination control for distribution networks with high penetration of distributed PV Units. IEEE Trans. Power Syst. 2018, 33, 3396–3407.

- Vinothkumar, K.; Selvan, M. Hierarchical agglomerative clustering algorithm method for distributed generation planning. Int. J. Electr. Power Energy Syst. 2014, 56, 259–269.

- Salman, S.K.; Wan, Z. Hierarchical clustering based zone formation in power networks. In Proceedings of the 2016 National Power Systems Conference (NPSC), Bhubaneswar, India, 19–21 December 2016.

- Li, H.; Kun, S.; Meng, F.; Wang, Z.; Wang, C. Voltage control strategy of a high-permeability photovoltaic distribution network based on cluster division. Front. Energy Res. 2024, 12, 1377841.

- Li, H.; Zhou, L.; Mao, M.; Zhang, Q. Three-layer voltage/var control strategy for PV cluster considering steady-state voltage stability. J. Cleaner Prod. 2024, 12, 1377841.

- Liu, Z.; Hu, W.; Guo, G.; Wang, J.; Xuan, L.; He, F.; Zhou, D. A Graph-Based Genetic Algorithm for Distributed Photovoltaic Cluster Partitioning. Energies 2024, 17, 2893.

- Qiu, S.; Deng, Y.; Ding, M.; Han, W. An Optimal Scheduling Method for Distribution Network Clusters Considering Source–Load–Storage Synergy. Sustainability 2024, 16, 6399.

- Deshmukh, S.; Natarajan, B.; Pahwa, A. Voltage/VAR control in distribution networks via reactive power injection through distributed generators. IEEE Trans. Smart Grid 2012, 3, 1226–1234.

- Augugliaro, A.; Dusonchet, L.; Favuzza, S.; Sanseverino, E.R. Voltage regulation and power losses minimization in automated distribution networks by an evolutionary multi-objective approach. IEEE Trans. Power Syst. 2004, 19, 1516–1527.

- Abido, M.A. Optimal power flow using particle swarm optimization. Int. J. Electr. Power Energy Syst. 2002, 24, 563–571.

- Torres, G.L.; Quintana, V.H. An interior-point method for nonlinear optimal power flow using voltage rectangular coordinates. IEEE Trans. Power Syst. 1998, 13, 1211–1218.

- Fortenbacher, P.; Mathieu, J.L.; Andersson, G. Modeling and optimal operation of distributed battery storage in low voltage grids. IEEE Trans. Power Syst. 2017, 32, 4340–4350.

- Liu, M.B.; Canizares, C.A.; Huang, W. Reactive power and voltage control in distribution systems with limited switching operations. IEEE Trans. Power Syst. 2009, 24, 889–899.

- Lavaei, J.; Low, S.H. Zero duality gap in optimal power flow problem. IEEE Trans. Power Syst. 2011, 27, 92–107.

- Gan, L.; Li, N.; Topcu, U.; Low, S.H. Exact convex relaxation of optimal power flow in radial networks. IEEE Trans. Autom. Control 2014, 60, 72–87.

- Nazir, F.U.; Pal, B.C.; Jabr, R.A. A two-stage chance constrained volt/var control scheme for active distribution networks with nodal power uncertainties. IEEE Trans. Power Syst. 2018, 34, 314–325.

- Usman, M.; Capitanescu, F. Three solution approaches to stochastic multi-period AC optimal power flow in active distribution systems. IEEE Trans. Sustain. Energy 2022, 14, 178–192.

- Ding, T.; Li, C.; Yang, Y.; Jiang, J.; Bie, Z.; Blaabjerg, F. A two-stage robust optimization for centralized-optimal dispatch of photovoltaic inverters in active distribution networks. IEEE Trans. Sustain. Energy 2016, 8, 744–754.

- Guo, Y.; Baker, K.; Dall’Anese, E.; Hu, Z.; Summers, T.H. Data-based distributionally robust stochastic optimal power flow—Part I: Methodologies. IEEE Trans. Power Syst. 2018, 34, 1483–1492.

- Cui, W.; Wan, C.; Song, Y. Ensemble deep learning-based non-crossing quantile regression for nonparametric probabilistic forecasting of wind power generation. IEEE Trans. Power Syst. 2022, 38, 3163–3178.

- Jiang, Y.; Wan, C.; Wang, J.; Song, Y.; Dong, Z. Stochastic receding horizon control of active distribution networks with distributed renewables. IEEE Trans. Power Syst. 2018, 34, 1325–1341.

- Guo, Y.; Wu, Q.; Gao, H.; Chen, X.; Østergaard, J.; Xin, H. MPC-based coordinated voltage regulation for distribution networks with distributed generation and energy storage system. IEEE Trans. Sustain. Energy 2018, 10, 1731–1739.

- Yazdanian, M.; Mehrizi-Sani, A. Distributed control techniques in microgrids. IEEE Trans. Smart Grid 2014, 5, 2901–2909.

- Molzahn, D.K.; Dörfler, F.; Sandberg, H.; Low, S.H.; Chakrabarti, S.; Baldick, R.; Lavaei, J. A survey of distributed optimization and control algorithms for electric power systems. IEEE Trans. Smart Grid 2017, 8, 2941–2962.

- Zhao, M.; Shi, Q.; Cai, Y.; Zhao, M.; Li, Y. Distributed penalty dual decomposition algorithm for optimal power flow in radial networks. IEEE Trans. Power Syst. 2019, 35, 2176–2189.

- Robbins, B.A.; Hadjicostis, C.N.; Domínguez-García, A.D. A two-stage distributed architecture for voltage control in power distribution systems. IEEE Trans. Power Syst. 2012, 28, 1470–1482.

- Peng, Q.; Low, S.H. Distributed optimal power flow algorithm for radial networks, I: Balanced single phase case. IEEE Trans. Smart Grid 2016, 9, 111–121.

- Bazrafshan, M.; Gatsis, N. Decentralized stochastic optimal power flow in radial networks with distributed generation. IEEE Trans. Smart Grid 2016, 8, 787–801.

- Tang, Z.; Hill, D.J.; Liu, T. Fast distributed reactive power control for voltage regulation in distribution networks. IEEE Trans. Power Syst. 2016, 34, 802–805.

- Macedo, L.H.; Franco, J.F.; Rider, M.J.; Romero, R. Optimal operation of distribution networks considering energy storage devices. IEEE Trans. Smart Grid 2015, 6, 2825–2836.

- Jiao, W.; Chen, J.; Wu, Q.; Li, C.; Zhou, B.; Huang, S. Distributed coordinated voltage control for distribution networks with DG and OLTC based on MPC and gradient projection. IEEE Trans. Power Syst. 2021, 37, 680–690.

- Zhang, Y.; Ai, X.; Wen, J.; Fang, J.; He, H. Data-adaptive robust optimization method for the economic dispatch of active distribution networks. IEEE Trans. Smart Grid 2018, 10, 3791–3800.

- Tewari, T.; Mohapatra, A.; Anand, S. Coordinated control of OLTC and energy storage for voltage regulation in distribution network with high PV penetration. IEEE Trans. Sustain. Energy 2020, 12, 262–272.

- Wang, L.; Yan, R.; Saha, T.K. Voltage management for large scale PV integration into weak distribution systems. IEEE Trans. Smart Grid 2017, 9, 4128–4139.

- Yan, Q.; Chen, X.; Xing, L.; Guo, X.; Zhu, C. Multi-Timescale Voltage Regulation for Distribution Network with High Photovoltaic Penetration via Coordinated Control of Multiple Devices. Energies 2024, 17, 3830.

- Zhang, Z.; Dong, Z.; Yue, D. Multiple time-scale voltage regulation for active distribution networks via three-level coordinated control. IEEE Trans. Ind. Inf. 2023, 20, 4429–4439.

- Malekpour, A.R.; Annaswamy, A.M.; Shah, J. Hierarchical hybrid architecture for volt/var control of power distribution grids. IEEE Trans. Power Syst. 2019, 35, 854–863.

- Stanelyte, D.; Radziukynas, V. Review of voltage and reactive power control algorithms in electrical distribution networks. Energies 2019, 13, 58.

- Hashemi, S.; Østergaard, J. Methods and strategies for overvoltage prevention in low voltage distribution systems with PV. IET Renew. Power Gener. 2017, 11, 205–214.

- Cao, D.; Hu, W.; Zhao, J.; Zhang, G.; Zhang, B.; Liu, Z.; Chen, Z.; Blaabjerg, F. Reinforcement learning and its applications in modern power and energy systems: A review. J. Mod. Power Syst. Clean Energy 2020, 8, 1029–1042.

- Mocanu, E.; Mocanu, D.C.; Nguyen, P.H.; Liotta, A.; Webber, M.E.; Gibescu, M.; Slootweg, J.G. Online building energy optimization using deep reinforcement learning. IEEE Trans. Smart Grid 2018, 10, 3698–3708.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 1st ed.; MIT Press: Cambridge, MA, USA, 1998.

- Suchithra, J.; Rajabi, A.; Robinson, D.A. Enhancing PV Hosting Capacity of Electricity Distribution Networks Using Deep Reinforcement Learning-Based Coordinated Voltage Control. Energies 2024, 17, 5037.

- Shuai, H.; Fang, J.; Ai, X.; Wen, J.; He, H. Optimal real-time operation strategy for microgrid: An ADP-based stochastic nonlinear optimization approach. IEEE Trans. Sustain. Energy 2018, 10, 931–942.

- Shang, Y.; Wu, W.; Guo, J.; Ma, Z.; Sheng, W.; Lv, Z.; Fu, C. Stochastic dispatch of energy storage in microgrids: An augmented reinforcement learning approach. Appl. Energy 2020, 261, 114423.

- Bui, V.; Hussain, A.; Kim, H. Double deep Q-learning-based distributed operation of battery energy storage system considering uncertainties. IEEE Trans. Smart Grid 2019, 11, 457–469.

- Ding, T.; Zeng, Z.; Bai, J.; Qin, B.; Yang, Y.; Shahidehpour, M. Optimal electric vehicle charging strategy with Markov decision process and reinforcement learning technique. IEEE Trans. Ind. Appl. 2020, 56, 5811–5823.

- Cao, D.; Hu, W.; Xu, X.; Wu, Q.; Huang, Q.; Chen, Z.; Blaabjerg, F. Deep reinforcement learning based approach for optimal power flow of distribution networks embedded with renewable energy and storage devices. J. Mod. Power Syst. Clean Energy 2021, 9, 1101–1110.

- Wang, S.; Du, L.; Fan, X.; Huang, Q. Deep reinforcement scheduling of energy storage systems for real-time voltage regulation in unbalanced LV networks with high PV penetration. IEEE Trans. Sustain. Energy 2021, 12, 2342–2352.

- Tang, H.; Lv, K.; Bak-Jensen, B.; Pillai, J.R.; Wang, Z. Deep neural network-based hierarchical learning method for dispatch control of multi-regional power grid. IEEE Trans. Smart Grid 2022, 34, 5063–5079.

- Gao, Y.; Wang, W.; Shi, J.; Yu, N. Batch-constrained reinforcement learning for dynamic distribution network reconfiguration. IEEE Trans. Smart Grid 2020, 11, 5357–5369.

- Li, Y.; Hao, G.; Liu, Y.; Yu, Y.; Ni, Z.; Zhao, Y. Many-objective distribution network reconfiguration via deep reinforcement learning assisted optimization algorithm. IEEE Trans. Power Deliv. 2021, 37, 2230–2244.

- Liang, Z.; Chung, C.Y.; Zhang, W.; Wang, Q.; Lin, W.; Wang, C. Enabling high-efficiency economic dispatch of hybrid AC/DC networked microgrids: Steady-state convex bi-directional converter models. IEEE Trans. Smart Grid 2024, 16, 45–61.

- Ingalalli, A.; Kamalasadan, S.; Dong, Z.; Bharati, G.R.; Chakraborty, S. Event-driven Q-Routing-based Dynamic Optimal Reconfiguration of the Connected Microgrids in the Power Distribution System. IEEE Trans. Ind. Appl. 2023, 60, 1849–1859.

- Yang, Q.; Wang, G.; Sadeghi, A.; Giannakis, G.B.; Sun, J. Two-timescale voltage control in distribution grids using deep reinforcement learning. IEEE Trans. Smart Grid 2019, 11, 2313–2323.

- Hu, D.; Peng, Y.; Wei, W.; Xiao, T.; Cai, T.; Xi, W. Multi-timescale deep reinforcement learning for reactive power optimization of distribution network. Proc. CSEE 2022, 42, 5034–5044.

- Sun, X.; Qiu, J. Two-stage volt/var control in active distribution networks with multi-agent deep reinforcement learning method. IEEE Trans. Smart Grid 2021, 12, 2903–2912.

- Cao, D.; Zhao, J.; Hu, W.; Yu, N.; Ding, F.; Huang, Q.; Chen, Z. Deep reinforcement learning enabled physical-model-free two-timescale voltage control method for active distribution systems. IEEE Trans. Smart Grid 2021, 13, 149–165.

- Liu, H.; Wu, W. Bi-level off-policy reinforcement learning for volt/var control involving continuous and discrete devices. IEEE Trans. Power Syst. 2023, 38, 385–395.

- Sun, X.; Qiu, J.; Yi, Y.; Tao, Y. Cost-effective coordinated voltage control in active distribution networks with photovoltaics and mobile energy storage systems. IEEE Trans. Sustain. Energy 2021, 13, 501–513.

- Tang, W.; Cai, Y.; Zhang, L.; Zhan, B.; Wang, Z.; Fu, Y.; Xiao, X. Hierarchical coordination strategy for three-phase MV and LV distribution networks with high-penetration residential PV units. IET Renew. Power Gener. 2021, 13, 501–513.

- Pereira, E.C.; Barbosa, C.; Vasconcelos, J.A. Distribution network reconfiguration using iterative branch exchange and clustering technique. Energies 2023, 16, 2395.

- Wang, X.; Liu, X.; Jian, S.; Peng, X.; Yuan, H. A distribution network reconfiguration method based on comprehensive analysis of operation scenarios in the long-term time period. Energy Rep. 2021, 7, 369–379.

- Ning, L.; Si, L.; Nian, L.; Fei, Z. Network reconfiguration based on an edge-cloud-coordinate framework and load forecasting. Front. Energy Res. 2021, 9, 679275.

- Zhang, X.; Wu, Z.; Sun, Q.; Gu, W.; Zheng, S.; Zhao, J. Application and progress of artificial intelligence technology in the field of distribution network voltage Control: A review. Renew. Sustain. Energy Rev. 2024, 192, 114282.

- Sun, H.; Guo, Q.; Qi, J.; Ajjarapu, V.; Bravo, R.; Chow, J.; Li, Z.; Moghe, R.; Nasr-Azadani, E.; Tamrakar, U. Review of challenges and research opportunities for voltage control in smart grids. IEEE Trans. Power Syst. 2019, 34, 2790–2801.

| Method | Suitable Scenarios | Advantages | Limitations |
|---|---|---|---|
| OLTC | Older or stable power grids with few solar panels | Reliable and well tested | Slow response and inadequate for handling fast system fluctuations |
| SVC | Areas with frequent voltage changes | Adjusts voltage quickly | Expensive and only works in a limited area |
| PV inverter | Places with lots of solar power | Quick and easy to use and already built into solar systems | May not be strong enough alone and needs coordination |
| BESS | Areas with big voltage swings or peak usage times | Can respond quickly and help balance supply and demand | Costly and performance drops over time |
| Cluster partitioning | Grids with lots of solar and uneven electricity use | Smart way to group and manage resources | Needs fast computing and is hard to set up |

| Algorithm | Execution Efficiency | Scalability | Computational Complexity |
|---|---|---|---|
| DQN | Moderate | Low | Low |
| DDQN | Slightly higher than DQN | Low | Low |
| DDPG | Moderate | High | High |
| TD3 | High | High | High |
| SAC | High | Very high | Very high |
| PPO | Moderate to high | High | Moderate |

| Type | Algorithm | Characteristics | Advantages | Disadvantages |
|---|---|---|---|---|
| Value Function Based | DQN | Uses deep neural networks to approximate Q-values with experience replay and target networks | Handles high-dimensional state spaces effectively | Prone to overestimation of Q-values |
| | DDQN | Introduces Double Q-learning to separate action selection and evaluation | Reduces Q-value overestimation, improving policy stability | Slightly higher computational complexity |
| | Dueling DQN | Decomposes Q-values into state value and advantage | Better evaluation of state values, especially in large action spaces | More complex network architecture, requiring additional tuning |
| Actor–Critic | AC | Uses separate actor and critic networks to improve policy updates | More stable than pure policy-based methods | High variance and sample inefficiency |
| | A3C | Parallel training with multiple agents to speed up learning | Faster convergence, with better exploration | High computational cost and complex implementation |
| | DDPG | Off-policy, model-free, and uses deterministic policy with target networks | Handles continuous action spaces and undergoes stable updates with experience replay | Sensitive to hyperparameters and prone to overestimation bias |
| | TD3 | Improves DDPG with twin Q-networks and delayed policy updates | Reduces overestimation bias and improves training stability | Higher computational complexity due to twin critics |
| | SAC | Uses entropy regularization for better exploration | More stable training, robust to hyperparameters, and effective in complex environments | Requires careful tuning of temperature parameter |
| Policy Gradient | PPO | Uses a clipped objective function to ensure stable policy updates | Simple to implement, computationally efficient, and widely used in deep RL applications | Still requires careful hyperparameter tuning and may struggle with highly stochastic environments |
| | TRPO | Constrains policy updates using a trust region to ensure monotonic improvement | Guarantees monotonic policy improvement and provides strong theoretical convergence properties | Computationally expensive due to second-order optimization and Hessian-vector product calculations |
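As a minimal sketch of two mechanisms summarized in the table above, the snippet below contrasts the DQN and Double DQN bootstrap targets and evaluates the PPO clipped surrogate term for a single sample. The function names, the list-based Q-values, and the default values of `gamma` and `eps` are illustrative assumptions and are not taken from the reviewed works.

```python
def dqn_target(reward, next_q_target, gamma=0.99, done=False):
    """DQN target: the target network both selects and evaluates the next action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def ddqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double DQN target: the online network selects the action,
    the target network evaluates it."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


def ppo_clipped_term(ratio, advantage, eps=0.2):
    """PPO clipped surrogate for one sample (to be maximized).
    ratio = pi_new(a|s) / pi_old(a|s); eps is an assumed clip range."""
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped_ratio * advantage)


if __name__ == "__main__":
    q_online = [1.0, 2.0, 0.5]   # hypothetical online-network Q-values for the next state
    q_target = [3.0, 1.5, 0.2]   # hypothetical target-network Q-values for the next state
    print(dqn_target(1.0, q_target, gamma=0.9))
    print(ddqn_target(1.0, q_online, q_target, gamma=0.9))
    print(ppo_clipped_term(1.5, 2.0))
```

In this toy case the DQN target bootstraps from the largest target-network value, whereas the Double DQN target uses the target-network value of the action preferred by the online network, which is the decoupling that reduces overestimation.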

| Field of Application | Reference | Algorithm | Purpose |
|---|---|---|---|
| ES device scheduling and control | [79] | DDQN | Minimize the cost of purchasing electricity from the main grid |
| | [80] | DDPG | Maximize profits for distribution system operators |
| | [81] | PPO | Minimize network loss |
| | [82] | SAC | Minimize system voltage offset |
| Dynamic reconfiguration | [84] | DQN | Minimize network losses and switching action costs |
| Multi-timescale voltage regulation | [88] | DQN | Minimize system voltage excursion and capacitor operation costs |
| | [89] | SCOP+MLTI-DDPG | Minimize system network losses on long timescales and minimize them on short timescales |
| | [91] | MLTI-SAC | Minimize system voltage excursions and mechanical device actions |
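Objectives such as those listed above are usually passed to a DRL agent as a scalar reward. The following is a hypothetical sketch of a reward that penalizes voltage excursion and capacitor switching, loosely mirroring the purpose listed for [88]. The function name, the per-unit reference of 1.0, and the switching weight are assumptions made for illustration and do not come from the cited papers.

```python
def voltage_reward(bus_voltages_pu, capacitor_switch_count,
                   switch_cost_weight=0.1, v_ref=1.0):
    """Negative cost: total absolute voltage deviation (p.u.) plus a
    weighted count of capacitor switching actions. All weights are assumed."""
    deviation = sum(abs(v - v_ref) for v in bus_voltages_pu)
    return -(deviation + switch_cost_weight * capacitor_switch_count)


if __name__ == "__main__":
    # Two buses at +/-5% deviation and two capacitor operations in this step.
    print(voltage_reward([1.05, 0.95], capacitor_switch_count=2))
```

A larger `switch_cost_weight` discourages frequent operation of mechanical devices at the expense of tighter voltage tracking, and in practice the trade-off would need tuning for each network.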


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dong, Q.; Song, X.; Gong, C.; Hu, C.; Rui, J.; Wang, T.; Xia, Z.; Wang, Z. Voltage Regulation Strategies in Photovoltaic-Energy Storage System Distribution Network: A Review. Energies 2025, 18, 2740. https://doi.org/10.3390/en18112740
