A Future Direction of Machine Learning for Building Energy Management: Interpretable Models

Abstract

1. Introduction

2. Fields of Application

2.1. Load and Power Prediction

2.2. Fault Detection and Diagnosis

2.3. Occupancy-Related Applications

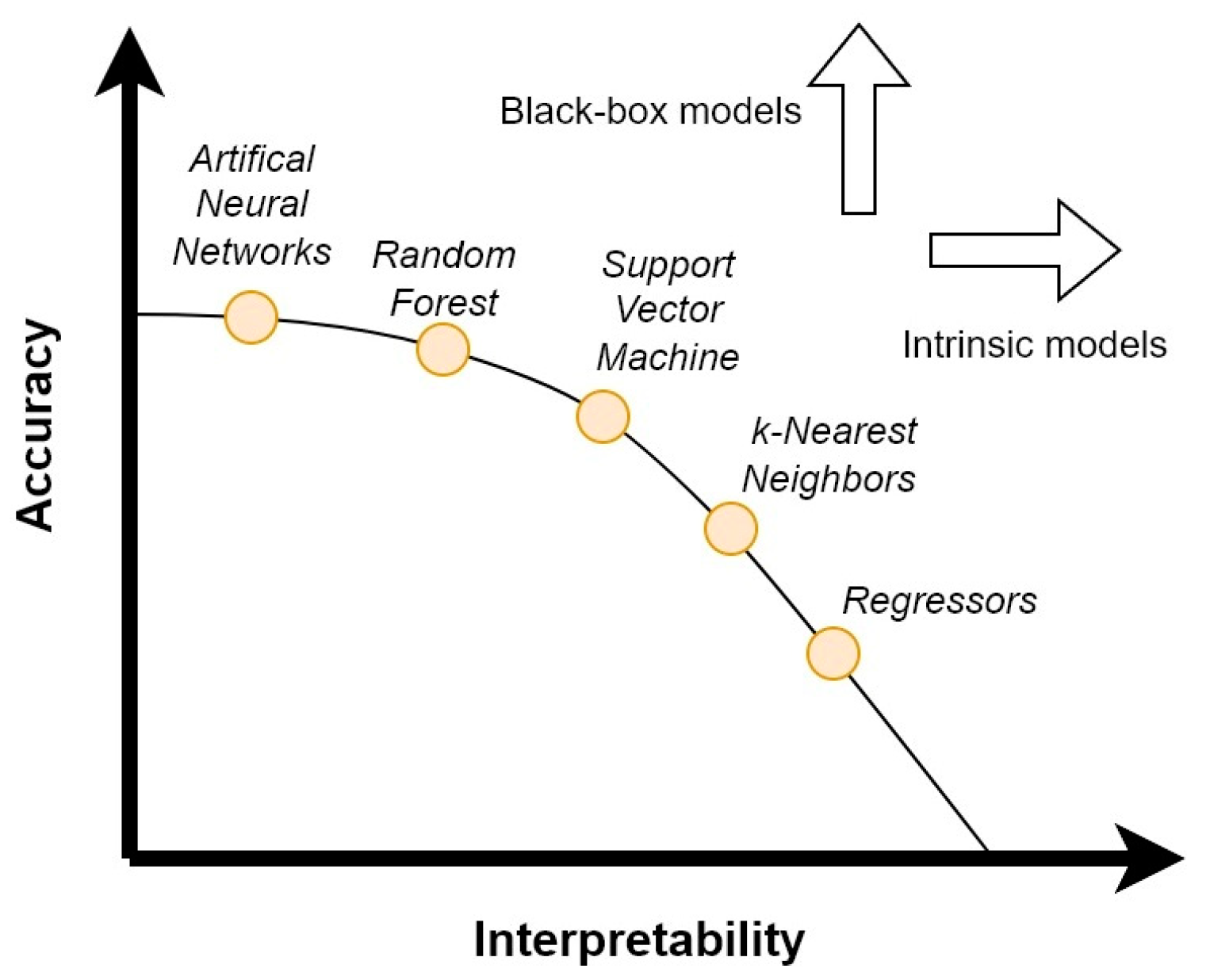

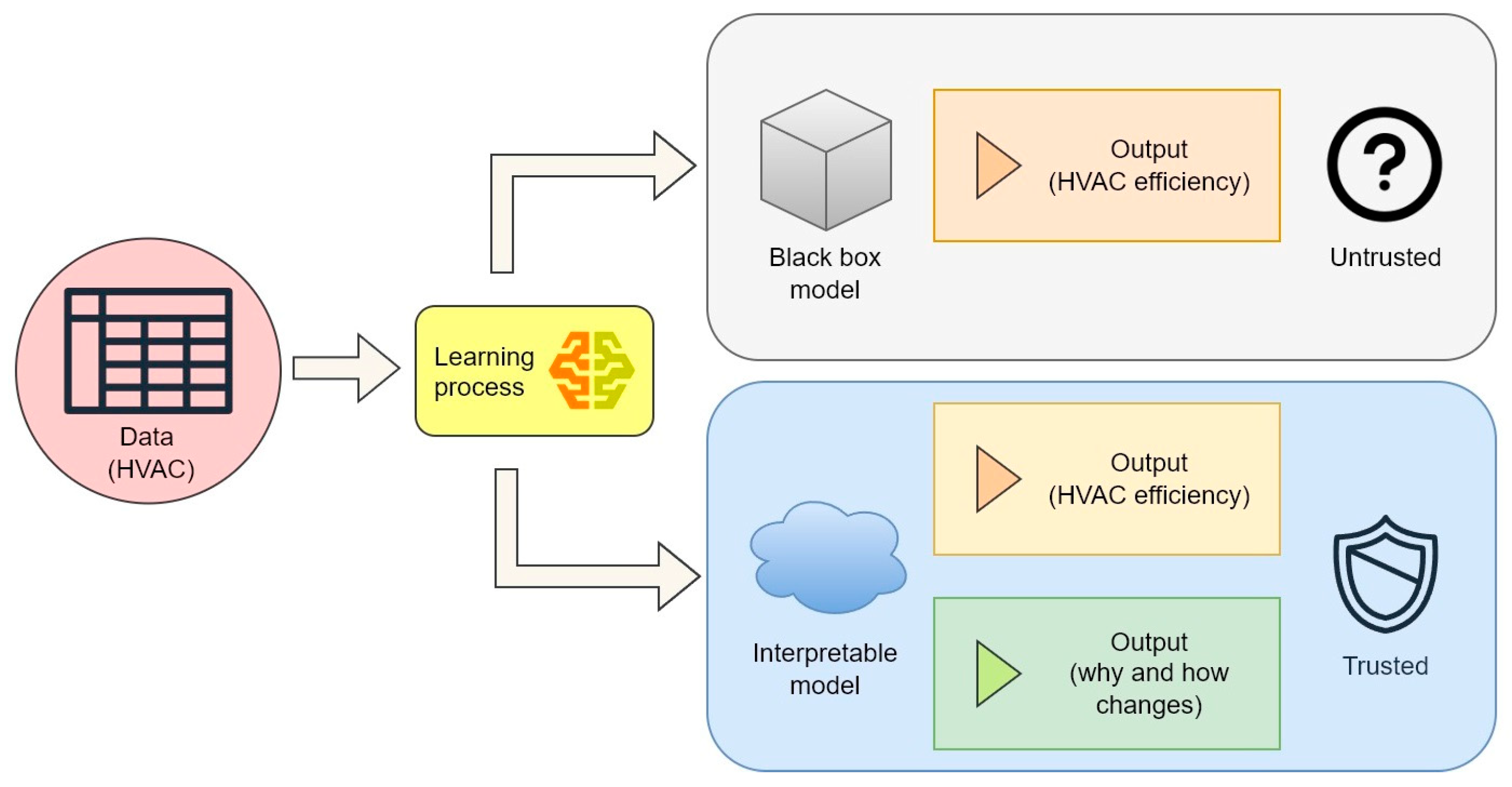

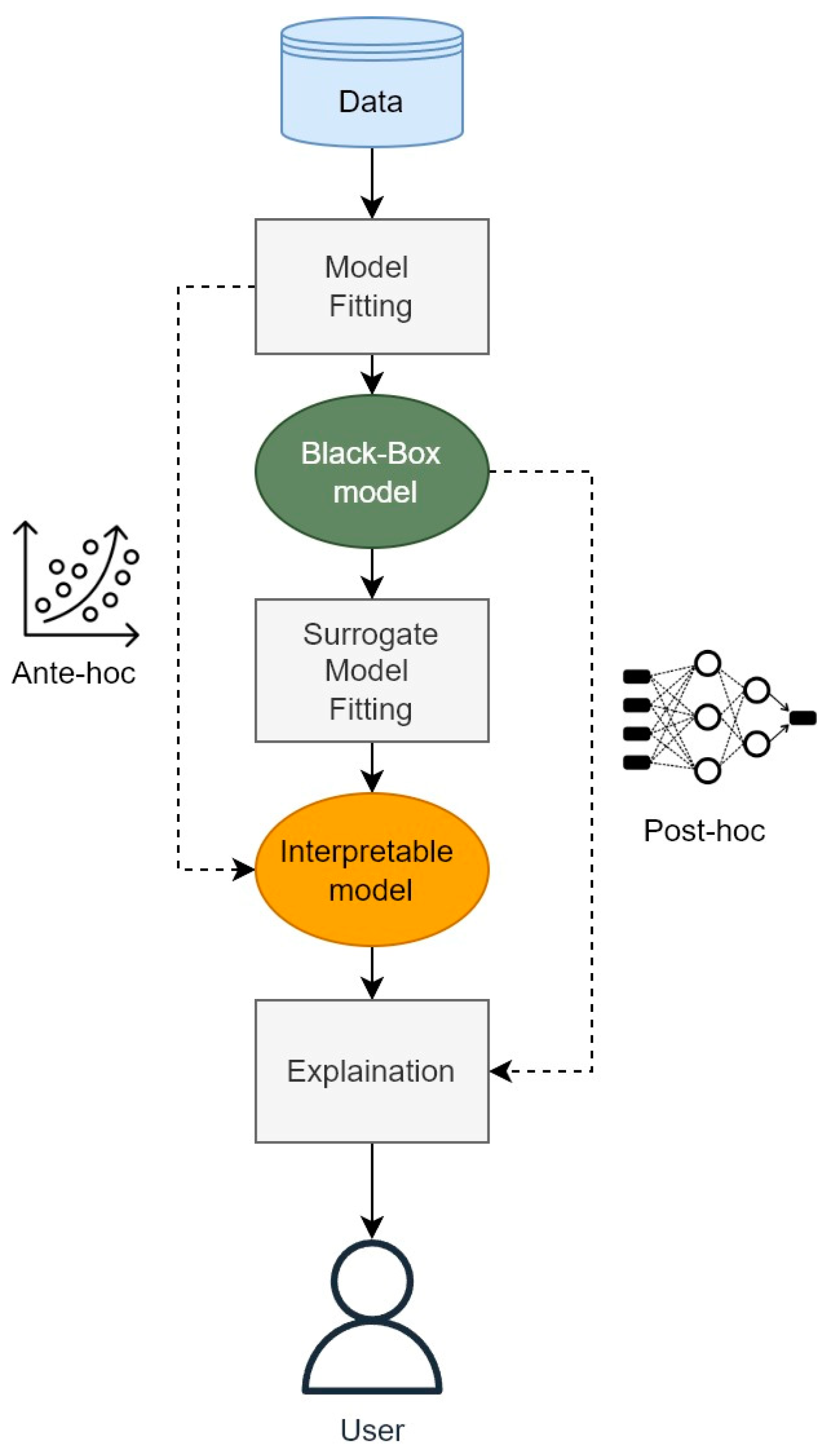

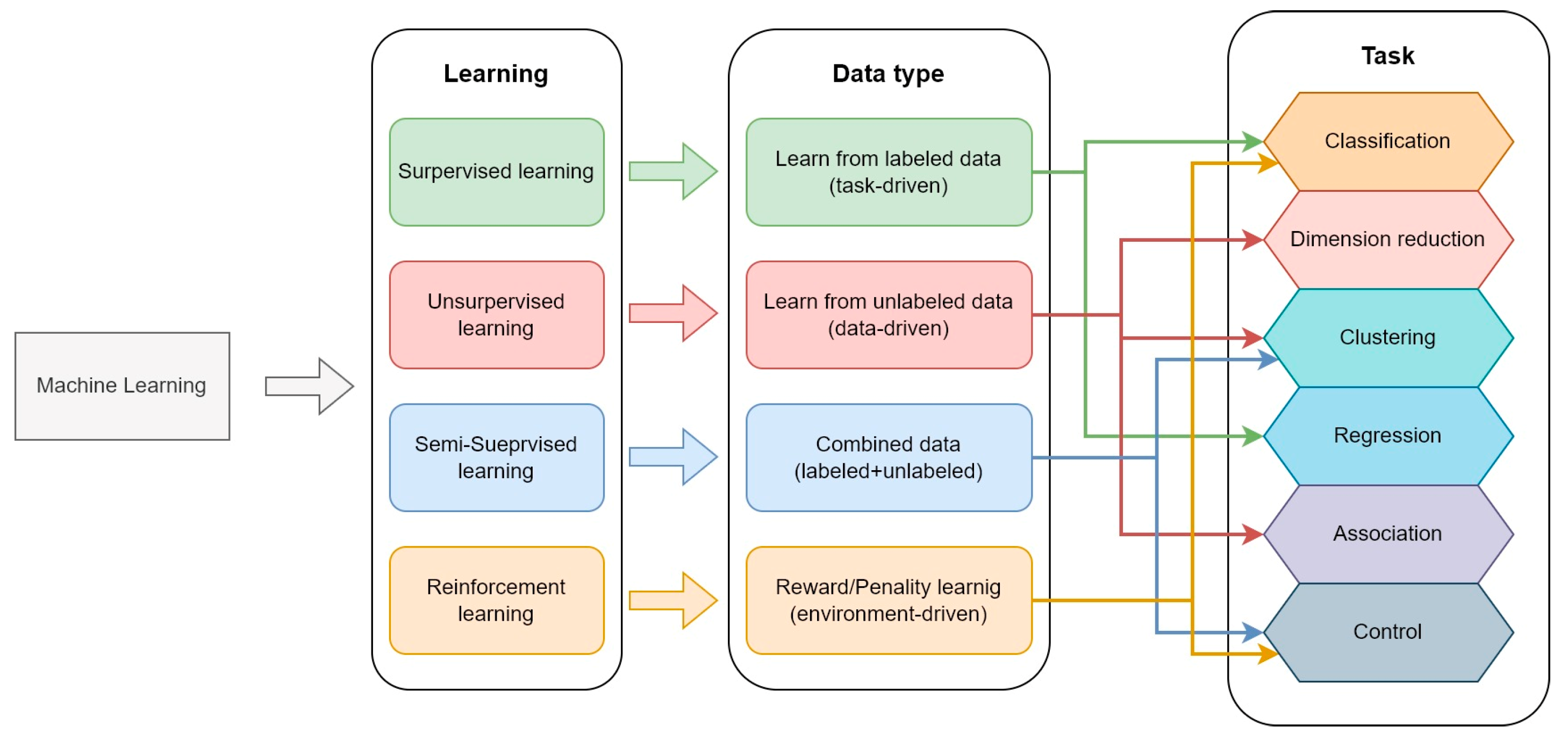

3. Machine Learning Interpretability

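- Ante-hoc models are interpretable by design: interpretability is built into the model structure before the training process;

- Post-hoc models are made interpretable after the training process, by applying explanation techniques to the already trained model.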
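The distinction can be illustrated with a short sketch. It is not taken from the reviewed works. The data are synthetic and noise-free, a 1-nearest-neighbour regressor stands in for an opaque model, and permutation feature importance is used as one example of a post-hoc technique. The linear model's coefficients can be read directly (ante-hoc), whereas the nearest-neighbour model has to be probed after training (post-hoc).

```python
import random
from statistics import mean

# Hypothetical data (assumption): cooling load [kW] from outdoor temperature [°C]
# and number of occupants, generated noise-free on a full grid.
X = [(t, o) for t in range(20, 36, 2) for o in range(0, 50, 10)]
y = [1.5 * t + 0.3 * o for t, o in X]
FEATURES = ["temperature", "occupancy"]


def fit_linear(X, y):
    """Ante-hoc: least squares for y = b0 + b1*x1 + b2*x2 (2x2 normal equations,
    solved with Cramer's rule). The fitted coefficients are the explanation."""
    x1, x2 = [r[0] for r in X], [r[1] for r in X]
    m1, m2, my = mean(x1), mean(x2), mean(y)
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (v - my) for a, v in zip(x1, y))
    s2y = sum((b - m2) * (v - my) for b, v in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    b1 = (s1y * s22 - s2y * s12) / det
    b2 = (s2y * s11 - s1y * s12) / det
    return my - b1 * m1 - b2 * m2, b1, b2


def make_knn(X, y):
    """Stand-in 'black box': a 1-nearest-neighbour regressor with no readable parameters."""
    def predict(row):
        best = min(range(len(X)),
                   key=lambda i: sum((a - b) ** 2 for a, b in zip(X[i], row)))
        return y[best]
    return predict


def mse(model, X, y):
    return mean((model(r) - v) ** 2 for r, v in zip(X, y))


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Post-hoc: average increase in MSE when one feature column is shuffled."""
    rng = random.Random(seed)
    base = mse(model, X, y)
    scores = {}
    for j, name in enumerate(FEATURES):
        deltas = []
        for _ in range(n_repeats):
            col = [r[j] for r in X]
            rng.shuffle(col)
            Xp = [tuple(col[i] if k == j else v for k, v in enumerate(r))
                  for i, r in enumerate(X)]
            deltas.append(mse(model, Xp, y) - base)
        scores[name] = mean(deltas)
    return scores


b0, b1, b2 = fit_linear(X, y)
print(f"ante-hoc: load = {b0:.2f} + {b1:.2f}*temperature + {b2:.2f}*occupancy")
print("post-hoc:", permutation_importance(make_knn(X, y), X, y))
```

Because the synthetic grid is noise-free and full-factorial, the linear fit recovers the generating coefficients, and shuffling temperature degrades the nearest-neighbour model more than shuffling occupancy. With real building data neither outcome is guaranteed.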

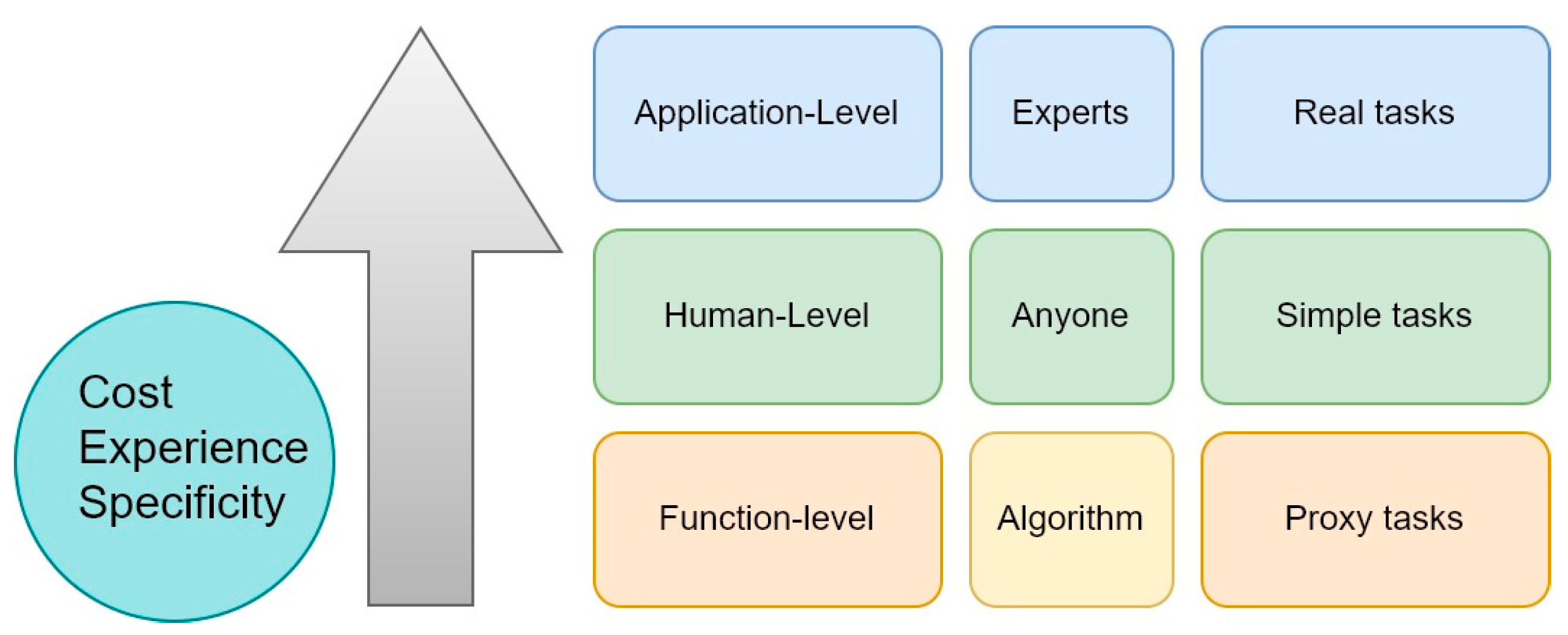

How to Assess ML Interpretability

- Application-level (real task): the explanation is given at the end of the process, and its quality is evaluated by the end user. For example, an ML crack-detection tool can locate cracks and mark them in images. At the application level, the end user can test the crack-detection software directly to evaluate the model. This testing requires a good experimental setup and a good understanding of how the quality of the output is assessed. Therefore, the quality of the evaluation relies on the user's knowledge and experience of both the specific task and the model.

- Human-level (simple task): as at the application level, the explanation is presented to a human. The difference is that the assessment is carried out not by a domain expert but by lay users. This makes evaluation cheaper (because no expert needs to be recruited) and makes testers easier to find. In this case, the ML model can produce several explanations, and the users choose the best one. However, this method is limited by the users and their capacity to make the correct choice; therefore, it is not suitable for complex tasks in which an expert is required to evaluate the output.

- Function-level (proxy task): at this level, users are not required to assess the explanation. This level is intended for a model that has already been evaluated and tested with human-level evaluation (level 2) or application-level evaluation (level 1). The task is performed autonomously by the ML algorithm, and the user acts only as a supervisor who trusts the model's output.
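At the function level no human scores the explanation, so an automatic proxy has to stand in for user judgement. The sketch below uses one possible proxy, surrogate fidelity: how closely an interpretable linear surrogate reproduces an opaque model's outputs, measured as R². The black-box function, the temperature grid and the 0.9 acceptance threshold are all hypothetical choices made for illustration, not values from the reviewed studies.

```python
from statistics import mean


def black_box(temp_c):
    """Hypothetical opaque model (assumption): cooling load [kW] vs outdoor temperature [°C]."""
    excess = temp_c - 18.0
    return 0.0 if excess <= 0 else 2.0 * excess + 0.05 * excess ** 2


def fit_surrogate(xs, ys):
    """Interpretable global surrogate: least-squares line y = a + b*x."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def fidelity_r2(f, g, xs):
    """Proxy metric: R^2 of surrogate g against black box f on xs (1.0 = perfect mimicry)."""
    fy = [f(x) for x in xs]
    gy = [g(x) for x in xs]
    m = mean(fy)
    ss_res = sum((p - q) ** 2 for p, q in zip(fy, gy))
    ss_tot = sum((p - m) ** 2 for p in fy)
    return 1.0 - ss_res / ss_tot


xs = list(range(10, 36))  # evaluation grid of outdoor temperatures (assumption)
a, b = fit_surrogate(xs, [black_box(x) for x in xs])
score = fidelity_r2(black_box, lambda x: a + b * x, xs)
# Hypothetical acceptance rule: hand the surrogate's explanation to the supervisor
# only when it mimics the black box closely enough.
print(f"surrogate: load = {a:.2f} + {b:.2f}*temp, R^2 = {score:.3f}, accepted = {score >= 0.9}")
```

Fidelity only says whether the explanation is faithful to the model, not whether the model is right; that is why, as described above, function-level evaluation presupposes a model already validated at level 1 or level 2.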

4. Models and Techniques for Energy Assessment and Optimization for Built Environment

- Structured: input data are well defined and structured, with information organized and described in detail. Device names, timestamps, power, temperatures, locations and occupancy are typical examples of structured data.

- Unstructured: data with no predefined format or organization are considered unstructured. In these cases, extracting relevant information is much more difficult. Free text, word-processing documents, audio files, videos and images are examples of unstructured data.

- Semi-structured: data are not stored in an organized structure (such as a relational database) but still have some organizational properties. XML and JSON documents and NoSQL databases are examples of semi-structured data.
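Semi-structured sources are usually converted into a structured, fixed-schema table before modelling. The following is a minimal sketch using only the Python standard library. It assumes a hypothetical JSON payload from a building management system, and its field names are illustrative rather than taken from any specific product. Nested fields are flattened into columns, and keys missing from a record become None.

```python
import json

# Hypothetical semi-structured BMS payload (assumption): field names are illustrative.
payload = """
{
  "building": "B1",
  "zone": {"id": "Z-101", "floor": 1},
  "readings": [
    {"time": "2023-01-01T08:00", "temp_c": 20.5, "co2_ppm": 450},
    {"time": "2023-01-01T09:00", "temp_c": 21.0, "co2_ppm": 610, "occupancy": 4}
  ]
}
"""

# Fixed schema of the structured output table.
COLUMNS = ["building", "zone_id", "floor", "time", "temp_c", "co2_ppm", "occupancy"]


def flatten(doc):
    """Turn one nested document into fixed-schema rows; absent keys become None."""
    rows = []
    zone = doc.get("zone", {})
    for r in doc.get("readings", []):
        row = {
            "building": doc.get("building"),
            "zone_id": zone.get("id"),
            "floor": zone.get("floor"),
            **{k: r.get(k) for k in ("time", "temp_c", "co2_ppm", "occupancy")},
        }
        rows.append([row[c] for c in COLUMNS])
    return rows


rows = flatten(json.loads(payload))
for row in rows:
    print(row)
```

The first reading has no occupancy field, so its occupancy column is None. Making such gaps explicit is part of what turns semi-structured input into structured data that a model can consume.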

4.1. Interpretable Artificial Neural Networks

4.2. Encoder–Decoder

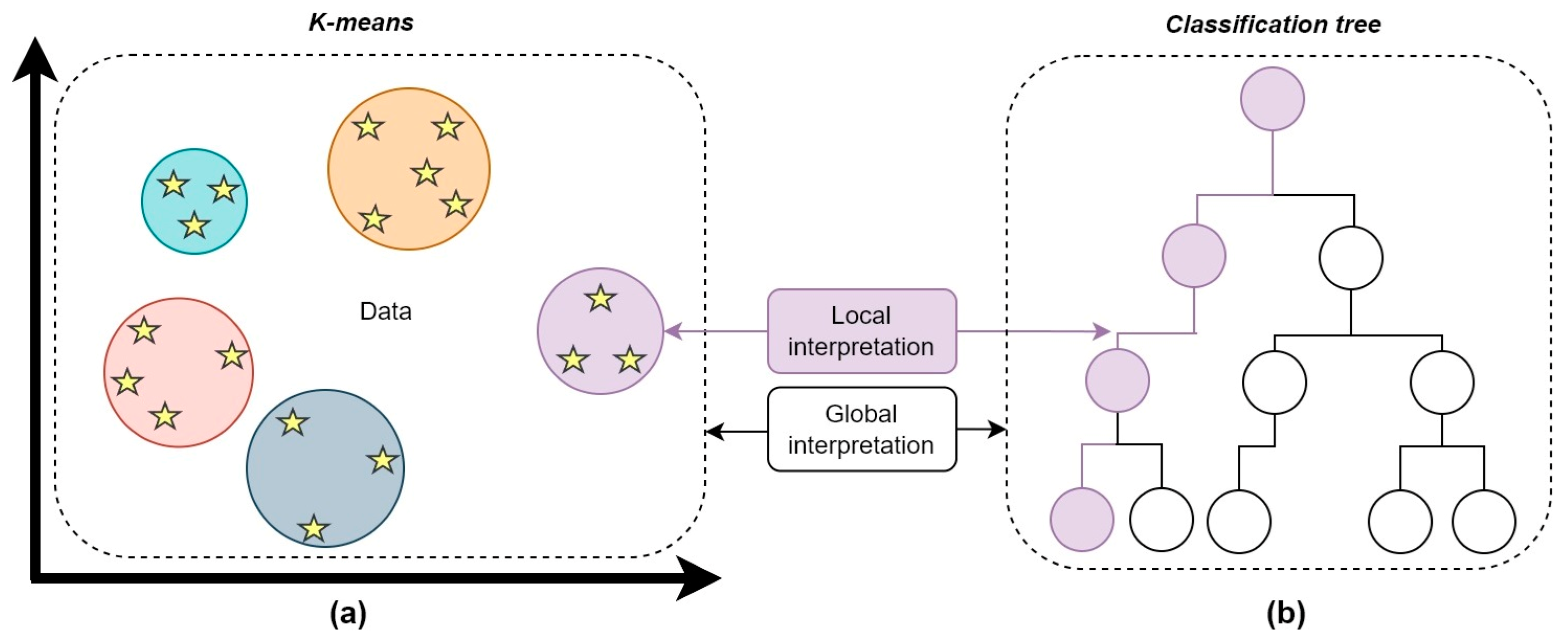

4.3. Clustering and Feature Extraction

4.4. Generalized Additive Models

4.5. Local Interpretable Model-Agnostic Explanations

4.6. SHapley Additive exPlanations

4.7. Other Techniques

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


- Liu, X.; Ding, Y.; Tang, H.; Xiao, F. A Data Mining-Based Framework for the Identification of Daily Electricity Usage Patterns and Anomaly Detection in Building Electricity Consumption Data. Energy Build. 2021, 231, 110601. [Google Scholar] [CrossRef]

- Prabhakaran, K.; Dridi, J.; Amayri, M.; Bouguila, N. Explainable K-Means Clustering for Occupancy Estimation. In Proceedings of the Procedia Computer Science; Elsevier: Amsterdam, The Netherlands, 2022; Volume 203, pp. 326–333. [Google Scholar]

- Galli, A.; Piscitelli, M.S.; Moscato, V.; Capozzoli, A. Bridging the Gap between Complexity and Interpretability of a Data Analytics-Based Process for Benchmarking Energy Performance of Buildings. Expert. Syst. Appl. 2022, 206, 117649. [Google Scholar] [CrossRef]

- Choi, S.Y.; Kim, S.H. Selection of a Transparent Meta-Model Algorithm for Feasibility Analysis Stage of Energy Efficient Building Design: Clustering vs. Tree. Energies 2022, 15, 6620. [Google Scholar] [CrossRef]

- Tang, W.; Wang, H.; Lee, X.L.; Yang, H.T. Machine Learning Approach to Uncovering Residential Energy Consumption Patterns Based on Socioeconomic and Smart Meter Data. Energy 2022, 240, 122500. [Google Scholar] [CrossRef]

- Grimaldo, A.; Novak, J. Explainable Needn’t Be (Much) Less Accurate: Evaluating an Explainable AI Dashboard for Energy Forecasting. In Proceedings of the IFIP Advances in Information and Communication Technology, Virtual, 12–13 May 2021; Volume 628. [Google Scholar]

- Grimaldo, A.I.; Novak, J. User-Centered Visual Analytics Approach for Interactive and Explainable Energy Demand Analysis in Prosumer Scenarios. In Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); IOP Publishing: Bristol, UK, 2019; Volume 11754, LNCS. [Google Scholar]

- Bhatia, A.; Garg, V.; Haves, P.; Pudi, V. Explainable Clustering Using Hyper-Rectangles for Building Energy Simulation Data. In Proceedings of the IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2019; Volume 238. [Google Scholar]

- Kasuya, T.; Takeshi, T.; Esaki, H. Building Activity Profiling: Explainable and Predictive Modeling for Building Automation. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication, ICAIIC 2020, Fukuoka, Japan, 19–21 February 2020. [Google Scholar]

- Xiao, T.; Xu, P.; Ding, R.; Chen, Z. An Interpretable Method for Identifying Mislabeled Commercial Building Based on Temporal Feature Extraction and Ensemble Classifier. Sustain. Cities Soc. 2022, 78, 103635. [Google Scholar] [CrossRef]

- Miller, C. What’s in the Box?! Towards Explainable Machine Learning Applied to Non-Residential Building Smart Meter Classification. Energy Build. 2019, 199, 523–536. [Google Scholar] [CrossRef]

- Khamma, T.R.; Zhang, Y.; Guerrier, S.; Boubekri, M. Generalized Additive Models: An Efficient Method for Short-Term Energy Prediction in Office Buildings. Energy 2020, 213, 118834. [Google Scholar] [CrossRef]

- Voss, M.; Heinekamp, J.F.; Krutzsch, S.; Sick, F.; Albayrak, S.; Strunz, K. Generalized Additive Modeling of Building Inertia Thermal Energy Storage for Integration into Smart Grid Control. IEEE Access 2021, 9, 71699–71711. [Google Scholar] [CrossRef]

- Li, Y.; Cao, L.; Zhang, J.; Jiang, Y.; Han, Y.; Wei, J. Energy Benchmarking in Healthcare Facilities: A Comparative Study. J. Constr. Eng. Manag. 2021, 147, 04021159. [Google Scholar] [CrossRef]

- Ghose, A.; Pizzol, M.; McLaren, S.J.; Vignes, M.; Dowdell, D. Refurbishment of Office Buildings in New Zealand: Identifying Priorities for Reducing Environmental Impacts. Int. J. Life Cycle Assess. 2019, 24, 1480–1495. [Google Scholar] [CrossRef]

- González-Mahecha, R.E.; Lucena, A.F.P.; Szklo, A.; Ferreira, P.; Vaz, A.I.F. Optimization Model for Evaluating On-Site Renewable Technologies with Storage in Zero/Nearly Zero Energy Buildings. Energy Build. 2018, 172, 505–516. [Google Scholar] [CrossRef]

- Charalampopoulos, I. A Comparative Sensitivity Analysis of Human Thermal Comfort Indices with Generalized Additive Models. Theor. Appl. Clim. 2019, 137, 1605–1622. [Google Scholar] [CrossRef]

- Pathak, N.; Ba, A.; Ploennigs, J.; Roy, N. Forecasting Gas Usage for Big Buildings Using Generalized Additive Models and Deep Learning. In Proceedings of the 2018 IEEE International Conference on Smart Computing, SMARTCOMP 2018, Taormina, Italy, 18–20 June 2018. [Google Scholar]

- Sundararajan, A.; Ollis, B. Regression and Generalized Additive Model to Enhance the Performance of Photovoltaic Power Ensemble Predictors. IEEE Access 2021, 9, 111899–111914. [Google Scholar] [CrossRef]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?” Explaining the Predictions of Any Classifier. In Proceedings of the NAACL-HLT 2016—2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016.

- Hülsmann, J.; Barbosa, J.; Steinke, F. Local Interpretable Explanations of Energy System Designs. Energies 2023, 16, 2161.

- Wastensteiner, J.; Michael Weiss, T.; Haag, F.; Hopf, K. Explainable AI for Tailored Electricity Consumption Feedback–An Experimental Evaluation of Visualizations. arXiv 2022, arXiv:2208.11408.

- Tsoka, T.; Ye, X.; Chen, Y.Q.; Gong, D.; Xia, X. Building Energy Performance Certificate Labelling Classification Based on Explainable Artificial Intelligence. In Proceedings of the Communications in Computer and Information Science, Guangzhou, China, 27–30 August 2021; Springer Science and Business Media: Berlin/Heidelberg, Germany, 2021; Volume 1449, pp. 181–196.

- Chung, W.J.; Liu, C. Analysis of Input Parameters for Deep Learning-Based Load Prediction for Office Buildings in Different Climate Zones Using EXplainable Artificial Intelligence. Energy Build. 2022, 276, 112521.

- Srinivasan, S.; Arjunan, P.; Jin, B.; Sangiovanni-Vincentelli, A.L.; Sultan, Z.; Poolla, K. Explainable AI for Chiller Fault-Detection Systems: Gaining Human Trust. Computer 2021, 54, 60–68.

- Carlsson, L.S.; Samuelsson, P.B.; Jönsson, P.G. Interpretable Machine Learning—Tools to Interpret the Predictions of a Machine Learning Model Predicting the Electrical Energy Consumption of an Electric Arc Furnace. Steel Res. Int. 2020, 91, 2000053.

- Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 2017.

- Dinmohammadi, F.; Han, Y.; Shafiee, M. Predicting Energy Consumption in Residential Buildings Using Advanced Machine Learning Algorithms. Energies 2023, 16, 3748.

- Sun, M.; Han, C.; Nie, Q.; Xu, J.; Zhang, F.; Zhao, Q. Understanding Building Energy Efficiency with Administrative and Emerging Urban Big Data by Deep Learning in Glasgow. Energy Build. 2022, 273, 112331.

- Park, S.; Moon, J.; Hwang, E. Explainable Anomaly Detection for District Heating Based on Shapley Additive Explanations. In Proceedings of the IEEE International Conference on Data Mining Workshops, ICDMW, Virtual, 7–10 December 2020; Volume 2020.

- Ryu, S.H.; Moon, H.J. Development of an Occupancy Prediction Model Using Indoor Environmental Data Based on Machine Learning Techniques. Build. Environ. 2016, 107, 1–9.

- Zhang, W.; Wen, Y.; Tseng, K.J.; Jin, G. Demystifying Thermal Comfort in Smart Buildings: An Interpretable Machine Learning Approach. IEEE Internet Things J. 2021, 8, 8021–8031.

- Yang, Y.; Yuan, Y.; Han, Z.; Liu, G. Interpretability Analysis for Thermal Sensation Machine Learning Models: An Exploration Based on the SHAP Approach. Indoor Air 2022, 32, e12984.

- Shen, Y.; Pan, Y. BIM-Supported Automatic Energy Performance Analysis for Green Building Design Using Explainable Machine Learning and Multi-Objective Optimization. Appl. Energy 2023, 333, 120575.

- Chang, X.; Li, W.; Ma, J.; Yang, T.; Zomaya, A.Y. Interpretable Machine Learning in Sustainable Edge Computing: A Case Study of Short-Term Photovoltaic Power Output Prediction. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing, Seoul, Republic of Korea, 14–19 April 2024; Volume 2020.

- Arjunan, P.; Poolla, K.; Miller, C. EnergyStar++: Towards More Accurate and Explanatory Building Energy Benchmarking. Appl. Energy 2020, 276, 115413.

- Gao, Y.; Han, H.; Lu, H.; Jiang, S.X.; Zhang, Y.; Luo, M.W. Knowledge Mining for Chiller Faults Based on Explanation of Data-Driven Diagnosis. Appl. Therm. Eng. 2022, 205, 118032.

- Ugwuanyi, C. Using Interpretable Machine Learning for Indoor CO2 Level Prediction and Occupancy Estimation. Ph.D. Thesis, University of Strathclyde, Glasgow, UK, 2021.

- Park, H.; Park, D.Y. Comparative Analysis on Predictability of Natural Ventilation Rate Based on Machine Learning Algorithms. Build. Environ. 2021, 195, 107744.

- Wenninger, S.; Karnebogen, P.; Lehmann, S.; Menzinger, T.; Reckstadt, M. Evidence for Residential Building Retrofitting Practices Using Explainable AI and Socio-Demographic Data. Energy Rep. 2022, 8, 13514–13528.

- Papadopoulos, S.; Kontokosta, C.E. Grading Buildings on Energy Performance Using City Benchmarking Data. Appl. Energy 2019, 233–234, 244–253.

- Golizadeh Akhlaghi, Y.; Aslansefat, K.; Zhao, X.; Sadati, S.; Badiei, A.; Xiao, X.; Shittu, S.; Fan, Y.; Ma, X. Hourly Performance Forecast of a Dew Point Cooler Using Explainable Artificial Intelligence and Evolutionary Optimisations by 2050. Appl. Energy 2021, 281, 116062.

- Carlsson, L.S.; Samuelsson, P.B.; Jönsson, P.G. Using Interpretable Machine Learning to Predict the Electrical Energy Consumption of an Electric Arc Furnace. Stahl. Eisen 2019, 139, 24–29.

- Chahbi, I.; Ben Rabah, N.; Ben Tekaya, I. Towards an Efficient and Interpretable Machine Learning Approach for Energy Prediction in Industrial Buildings: A Case Study in the Steel Industry. In Proceedings of the IEEE/ACS International Conference on Computer Systems and Applications, AICCSA, Abu Dhabi, United Arab Emirates, 5–8 December 2022; IEEE Computer Society: Washington, DC, USA, 2022; Volume 2022.

- Singh, M.M.; Singaravel, S.; Geyer, P. Improving Prediction Accuracy of Machine Learning Energy Prediction Models. In Proceedings of the 36th CIB W78 2019 Conference, Northumbria, UK, 18–20 September 2019.

- Zhang, C.; Li, J.; Zhao, Y.; Li, T.; Chen, Q.; Zhang, X. A Hybrid Deep Learning-Based Method for Short-Term Building Energy Load Prediction Combined with an Interpretation Process. Energy Build. 2020, 225, 110301.

- Alfalah, B.; Shahrestani, M.; Shao, L. Developing a Hidden Markov Model for Occupancy Prediction in High-Density Higher Education Buildings. J. Build. Eng. 2023, 73, 106795.

- Kim, J.Y.; Cho, S.B. Electric Energy Consumption Prediction by Deep Learning with State Explainable Autoencoder. Energies 2019, 12, 739.

- Moon, J.; Park, S.; Rho, S.; Hwang, E. Robust Building Energy Consumption Forecasting Using an Online Learning Approach with R Ranger. J. Build. Eng. 2022, 47, 103851.

- Moon, J.; Park, S.; Rho, S.; Hwang, E. Interpretable Short-Term Electrical Load Forecasting Scheme Using Cubist. Comput. Intell. Neurosci. 2022, 2022, 6892995.

- Wang, Z.; Wang, Y.; Zeng, R.; Srinivasan, R.S.; Ahrentzen, S. Random Forest Based Hourly Building Energy Prediction. Energy Build. 2018, 171, 11–25.

- Smarra, F.; Jain, A.; de Rubeis, T.; Ambrosini, D.; D’Innocenzo, A.; Mangharam, R. Data-Driven Model Predictive Control Using Random Forests for Building Energy Optimization and Climate Control. Appl. Energy 2018, 226, 1252–1272.

- Liu, Y.; Chen, H.; Zhang, L.; Feng, Z. Enhancing Building Energy Efficiency Using a Random Forest Model: A Hybrid Prediction Approach. Energy Rep. 2021, 7, 5003–5012.

- Zhang, W.; Liu, F.; Wen, Y.; Nee, B. Toward Explainable and Interpretable Building Energy Modelling: An Explainable Artificial Intelligence Approach. In Proceedings of the BuildSys 2021—2021 ACM International Conference on Systems for Energy-Efficient Built Environments, Coimbra, Portugal, 17–18 November 2021.

- Touzani, S.; Granderson, J.; Fernandes, S. Gradient Boosting Machine for Modeling the Energy Consumption of Commercial Buildings. Energy Build. 2018, 158, 1533–1543.

- Zhang, Y.; Teoh, B.K.; Wu, M.; Chen, J.; Zhang, L. Data-Driven Estimation of Building Energy Consumption and GHG Emissions Using Explainable Artificial Intelligence. Energy 2023, 262, 125468.

- Yigit, S. A Machine-Learning-Based Method for Thermal Design Optimization of Residential Buildings in Highly Urbanized Areas of Turkey. J. Build. Eng. 2021, 38, 102225.

- Sauer, J.; Mariani, V.C.; dos Santos Coelho, L.; Ribeiro, M.H.D.M.; Rampazzo, M. Extreme Gradient Boosting Model Based on Improved Jaya Optimizer Applied to Forecasting Energy Consumption in Residential Buildings. Evol. Syst. 2022, 13, 577–588.

- Mohammadiziazi, R.; Bilec, M.M. Application of Machine Learning for Predicting Building Energy Use at Different Temporal and Spatial Resolution under Climate Change in USA. Buildings 2020, 10, 139.

- Huang, J.; Algahtani, M.; Kaewunruen, S. Energy Forecasting in a Public Building: A Benchmarking Analysis on Long Short-Term Memory (LSTM), Support Vector Regression (SVR), and Extreme Gradient Boosting (XGBoost) Networks. Appl. Sci. 2022, 12, 9788.

- Sipple, J. Interpretable, Multidimensional, Multimodal Anomaly Detection with Negative Sampling for Detection of Device Failure. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, Virtual, 13–18 July 2020; Volume 119.

- Zhang, Z.; Chen, Y.; Wang, H.; Fu, Q.; Chen, J.; Lu, Y. Anomaly Detection Method for Building Energy Consumption in Multivariate Time Series Based on Graph Attention Mechanism. PLoS ONE 2023, 18, e0286770.

- Lei, L.; Wu, B.; Fang, X.; Chen, L.; Wu, H.; Liu, W. A Dynamic Anomaly Detection Method of Building Energy Consumption Based on Data Mining Technology. Energy 2023, 263, 125575.

- Sakkas, N.; Yfanti, S.; Daskalakis, C.; Barbu, E.; Domnich, M. Interpretable Forecasting of Energy Demand in the Residential Sector. Energies 2021, 14, 6568.

- Tran, B.; Sudusinghe, C.; Nguyen, S.; Alahakoon, D. Building Interpretable Predictive Models with Context-Aware Evolutionary Learning. Appl. Soft Comput. 2023, 132, 109854.

- Jain, R.K.; Damoulas, T.; Kontokosta, C.E. Towards Data-Driven Energy Consumption Forecasting of Multi-Family Residential Buildings: Feature Selection via the Lasso. In Proceedings of the 2014 International Conference on Computing in Civil and Building Engineering, Orlando, FL, USA, 23–25 June 2014.

- Krishnan, M. Against Interpretability: A Critical Examination of the Interpretability Problem in Machine Learning. Philos. Technol. 2020, 33, 487–502.

| Model | Advantages/Disadvantages | Applicability |
|---|---|---|
| Interpretable Artificial Neural Networks | Advantages: | ANN, CNN, DNN, RNN |
| Encoder–Decoder | Advantages: | RNN |
| Clustering and feature extraction | Advantages: | K-means, DBSCAN, GBT, CIT, XGBoost, kNN, DBC, GM, DT, RF |
| Regressors | Advantages: | Linear and logistic regression, SVR, MLR, Lasso |
| Generalized Additive Models (GAM) | Advantages: | |
| Local Interpretable Model-Agnostic Explanations (LIME) | Advantages: | All models. |
| SHapley Additive exPlanations (SHAP) | Advantages: | All models. |
| Other techniques | Advantages: | GA, PI, DiCE, and combinations of other ML models. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gugliermetti, L.; Cumo, F.; Agostinelli, S. A Future Direction of Machine Learning for Building Energy Management: Interpretable Models. Energies 2024, 17, 700. https://doi.org/10.3390/en17030700

Gugliermetti L, Cumo F, Agostinelli S. A Future Direction of Machine Learning for Building Energy Management: Interpretable Models. Energies. 2024; 17(3):700. https://doi.org/10.3390/en17030700

Chicago/Turabian Style: Gugliermetti, Luca, Fabrizio Cumo, and Sofia Agostinelli. 2024. "A Future Direction of Machine Learning for Building Energy Management: Interpretable Models" Energies 17, no. 3: 700. https://doi.org/10.3390/en17030700

APA Style: Gugliermetti, L., Cumo, F., & Agostinelli, S. (2024). A Future Direction of Machine Learning for Building Energy Management: Interpretable Models. Energies, 17(3), 700. https://doi.org/10.3390/en17030700