An Applied Framework for Smarter Buildings Exploiting a Self-Adapted Advantage Weighted Actor-Critic

Abstract

1. Introduction

1.1. Related Work

1.2. Main Contributions

- Address the main gap identified in the related work by exploiting offline reinforcement learning training.

- Apply offline training, with AWAC as the main algorithm, in simulated use-case scenarios that replicate a real building as closely as possible.

- Propose a self-adapted AWAC (SA-AWAC) that improves the accuracy and extends the capabilities of AWAC.

- Apply SA-AWAC in a real-life use-case scenario and compare its accuracy against AWAC and other methods, namely rule-based control (RBC) and deep deterministic policy gradient (DDPG).

2. Methodology

2.1. Design Approach for a Smart Building

2.1.1. IoT (Things) Layer

2.1.2. Protocol Layer

2.1.3. Middleware

2.1.4. Data Layer

2.2. Management Layer

2.2.1. Advantage Weighted Actor-Critic

Algorithm 1: AWAC(T)
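The weighting step at the heart of AWAC [9] can be illustrated with a short sketch. This is a minimal NumPy example, not a reconstruction of Algorithm 1: it assumes a one-dimensional Gaussian policy with a fixed standard deviation, precomputed critic estimates Q(s, a) and V(s), a temperature `lam`, and a weight cap `max_weight`. All function and parameter names are hypothetical.

```python
import numpy as np


def advantage_weights(q_values, v_values, lam=1.0, max_weight=100.0):
    """AWAC-style weights exp(A / lam) with A = Q(s, a) - V(s), capped at max_weight."""
    advantages = np.asarray(q_values, dtype=float) - np.asarray(v_values, dtype=float)
    # Clip the exponent before exponentiating so large advantages cannot overflow.
    scaled = np.minimum(advantages / lam, np.log(max_weight))
    return np.exp(scaled)


def gaussian_log_prob(actions, means, std):
    """Log-density of each action under N(mean, std^2) (1-D Gaussian policy, assumed)."""
    actions = np.asarray(actions, dtype=float)
    means = np.asarray(means, dtype=float)
    return -0.5 * (((actions - means) / std) ** 2 + np.log(2.0 * np.pi * std**2))


def awac_actor_loss(actions, means, std, q_values, v_values, lam=1.0):
    """Negative advantage-weighted log-likelihood of dataset actions (to be minimized)."""
    weights = advantage_weights(q_values, v_values, lam)
    return float(-np.mean(weights * gaussian_log_prob(actions, means, std)))


# Hypothetical batch: setpoint actions from a replay buffer and current policy means.
actions = np.array([21.5, 22.0, 23.0])
means = np.array([22.0, 22.0, 22.0])
q = np.array([-1.0, -0.5, -2.0])
v = np.array([-1.0, -1.0, -1.0])
print(awac_actor_loss(actions, means, std=0.5, q_values=q, v_values=v, lam=1.0))
```

Dataset actions with a higher advantage receive a larger weight, so the policy is pulled toward the better actions already present in the offline data. In practice this loss is minimized by gradient descent in an autodiff framework with the weights treated as constants, and V(s) is usually estimated by averaging Q(s, a') over actions a' sampled from the current policy; the weight cap is a common stabilization choice, not something stated in this paper.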

2.2.2. Self-Adapted Advantage Weighted Actor-Critic

Algorithm 2: SA-AWAC

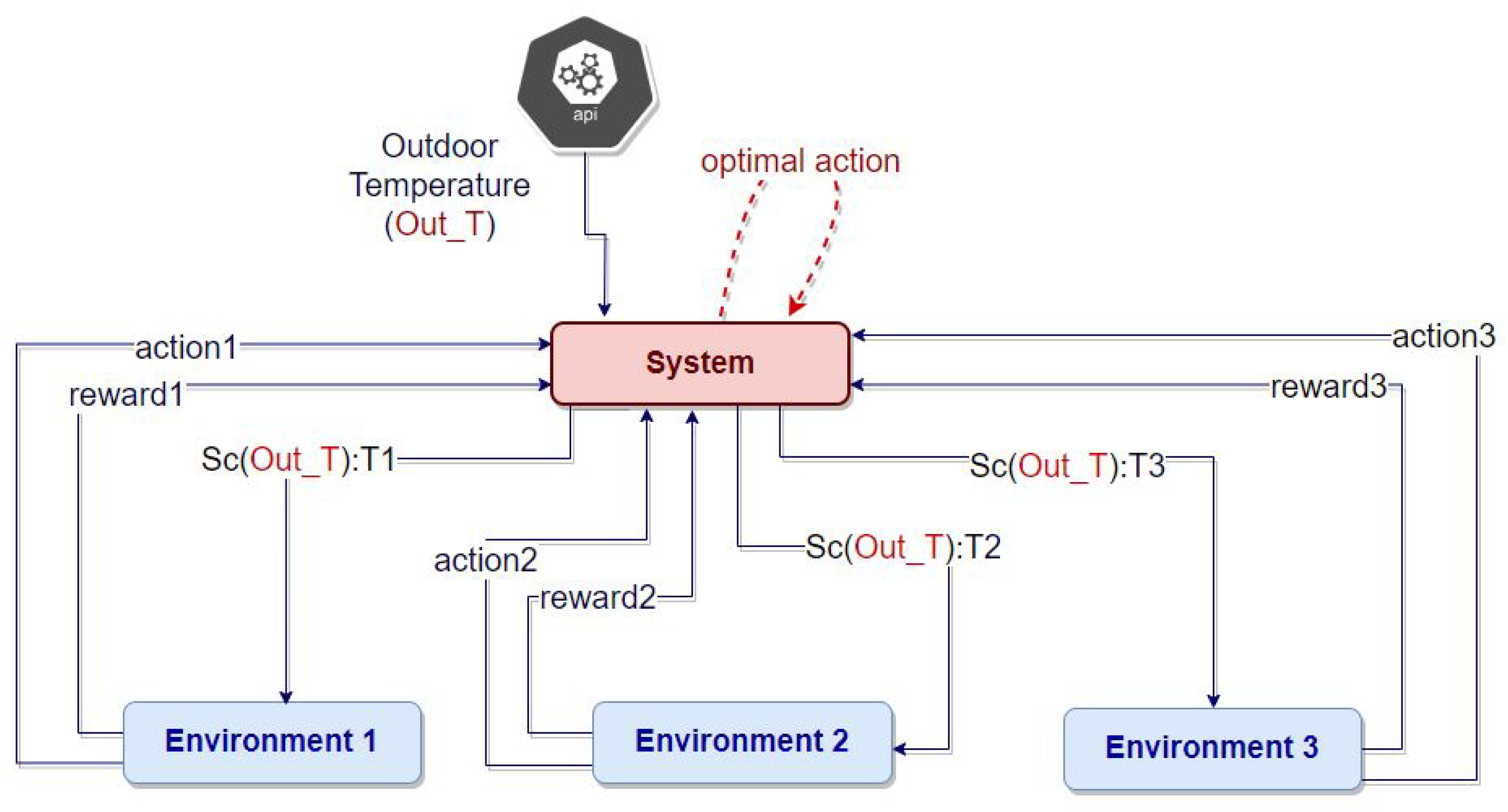

2.3. Overall Architecture of a Smart Building Intelligent Solution

3. Experimental Results

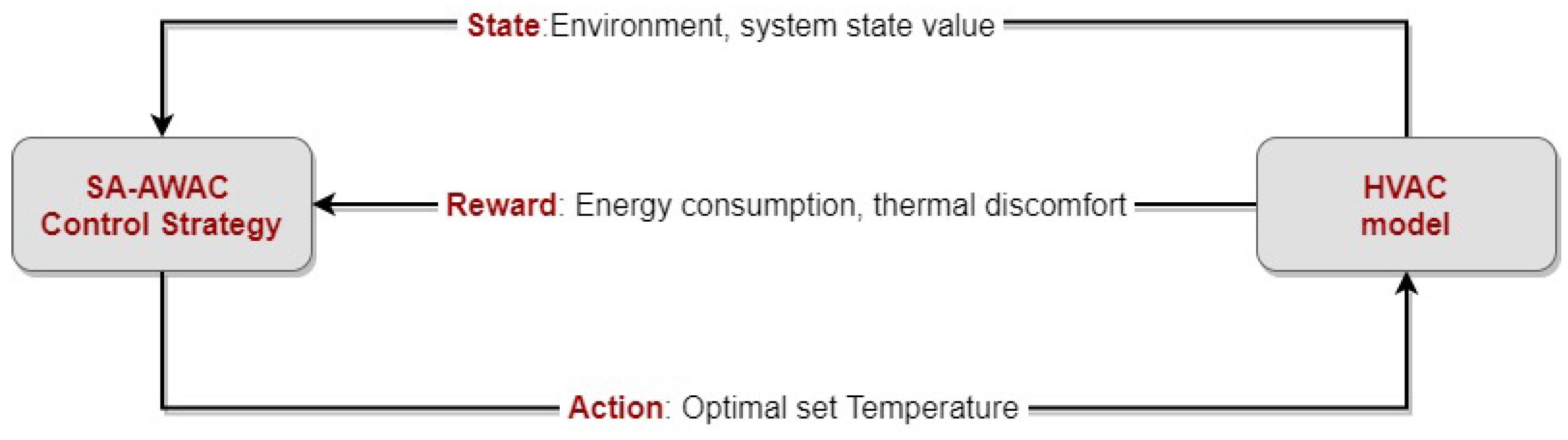

3.1. SA-AWAC Configuration for Modeling Heating, Ventilation, and Air Conditioning Systems

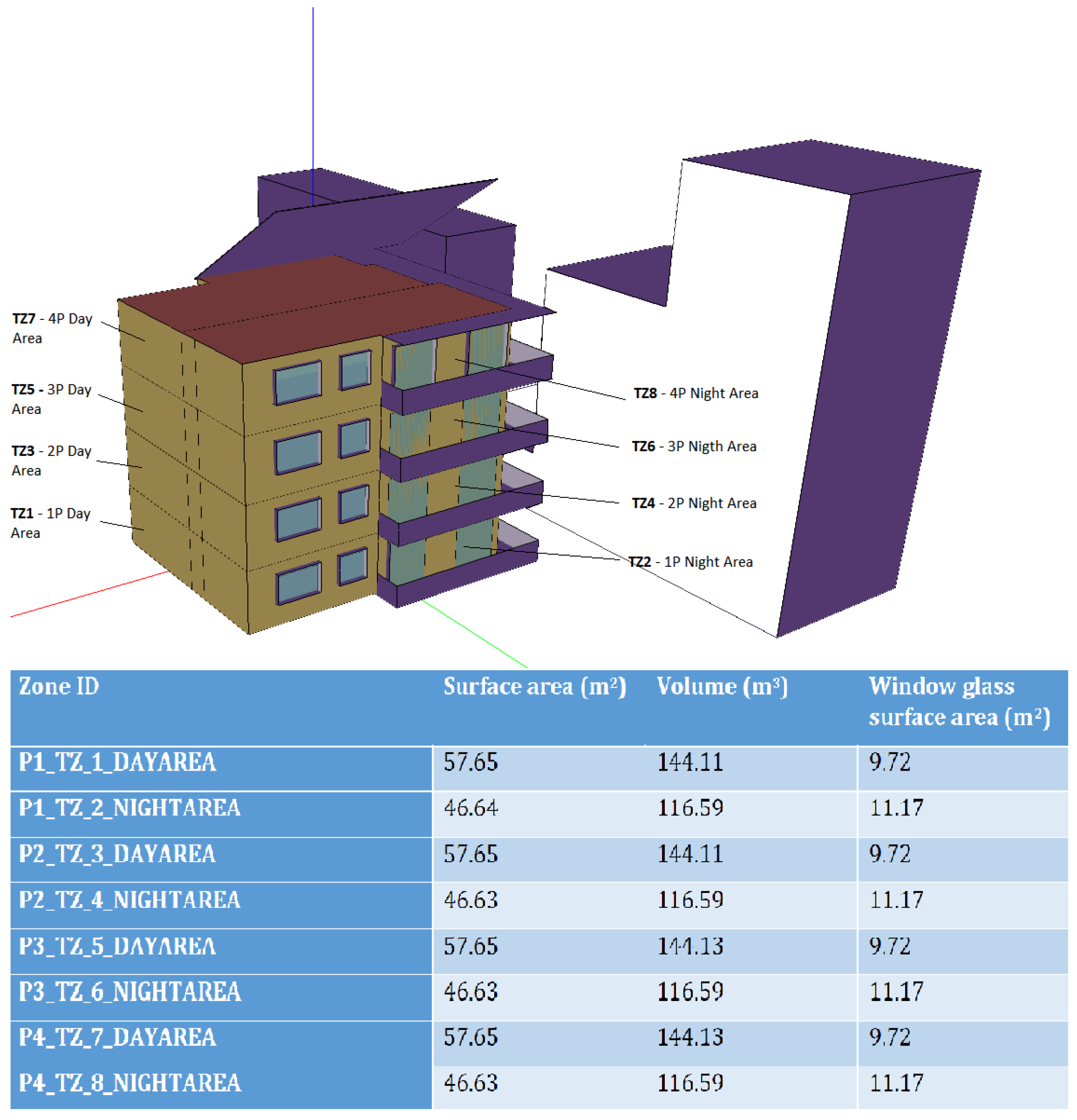

- State: The state encodes the current condition of the building energy system and serves as the input to the control strategy. Its variables capture various aspects of the building and its environment, providing a comprehensive representation of the system's dynamics, so the state space must be built from appropriate variables. As presented in Table 2, the variables examined in this study cover the indoor environment, namely the air temperature and humidity of each zone, and the external environment, namely the outdoor temperature and humidity. The state also includes battery-related variables: the battery's state of charge and the energy used to charge it.

- Action: The building's thermal system consists of four air-to-water heat pumps (HPs). Each unit has an integrated water tank that supplies hot water to the fan and heating coils and for domestic hot water (DHW) use. Because the DHW consumption profiles were studied at a fixed temperature (50 °C), and because of the system setup, neither the HP supply temperature nor the storage-tank temperature can be controlled; both vary over time. As seen in Table 3, a single set point is assumed for each thermal zone to regulate its temperature. To minimize the impact on the building, the proposed strategy acts only on these control set points to manage energy consumption.

- Reward: In this work, the AWAC control strategy aims to optimize the energy consumption of the HVAC system while accounting for both cost and thermal discomfort. The reward function therefore integrates an energy term and a comfort term to guide the optimization process. Its components are:

  - Two weighting factors, which balance the importance of energy and comfort in the optimization process.
  - Consumption, the energy component, which covers elements such as energy usage and operating costs and reflects the economic aspect of the control approach.
  - Comfort, the comfort component, which measures thermal discomfort through the predicted mean vote (PMV) and predicted percentage of dissatisfied (PPD) indices [36]. Minimizing thermal discomfort prioritizes occupant comfort by keeping temperatures within a comfortable range.
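The three MDP elements above can be tied together in a short sketch. It assumes min-max scaling of the state using the bounds from the state-space table (only a subset of the variables is shown), clipping of the floor setpoints to the 16–26 °C range of the action-space table, and a reward of the form r = −(w_e · Consumption + w_c · Comfort). The negative sign is inferred from the negative cumulative rewards in the results tables; `w_e`, `w_c`, and all function names are hypothetical, and the comfort term is passed in precomputed (for example from PMV/PPD) rather than derived here.

```python
import numpy as np

# Subset of the state variables with their lower/upper bounds from the state-space table.
STATE_BOUNDS = {
    "Ext_T": (-10.0, 40.0),
    "Ext_RH": (0.0, 100.0),
    "Z01_T": (10.0, 40.0),
    "Z01_RH": (0.0, 100.0),
    "Bd_FracCh_Bat": (0.0, 1.0),
}
# Thermostat setpoint range (°C) from the action-space table.
SETPOINT_RANGE = (16.0, 26.0)


def normalize_state(raw):
    """Min-max scale each variable to [0, 1] using its table bounds (assumed preprocessing)."""
    scaled = []
    for name, (low, high) in STATE_BOUNDS.items():
        value = min(max(float(raw[name]), low), high)  # clamp readings outside the bounds
        scaled.append((value - low) / (high - low))
    return np.array(scaled)


def clip_setpoints(setpoints):
    """Keep proposed setpoints inside the allowed thermostat range."""
    low, high = SETPOINT_RANGE
    return np.clip(np.asarray(setpoints, dtype=float), low, high)


def reward(consumption, comfort_penalty, w_e=0.5, w_c=0.5):
    """Hypothetical reward: negative weighted sum of energy use and thermal discomfort.

    w_e and w_c stand in for the paper's weighting factors; comfort_penalty is assumed
    to be precomputed (e.g. from PMV/PPD) rather than calculated in this sketch.
    """
    return -(w_e * consumption + w_c * comfort_penalty)


state = normalize_state(
    {"Ext_T": 5.0, "Ext_RH": 80.0, "Z01_T": 21.0, "Z01_RH": 45.0, "Bd_FracCh_Bat": 0.6}
)
action = clip_setpoints([15.0, 21.5, 22.0, 27.0])  # one setpoint per floor
r = reward(consumption=12.0, comfort_penalty=3.0, w_e=0.7, w_c=0.3)
print(state, action, r)
```

Scaling every state variable to a common range is a typical choice for neural-network actors and critics; whether the authors normalize in this way is not stated in the text.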

3.2. Simulated Building Use Case

3.2.1. Set-Up Configurations

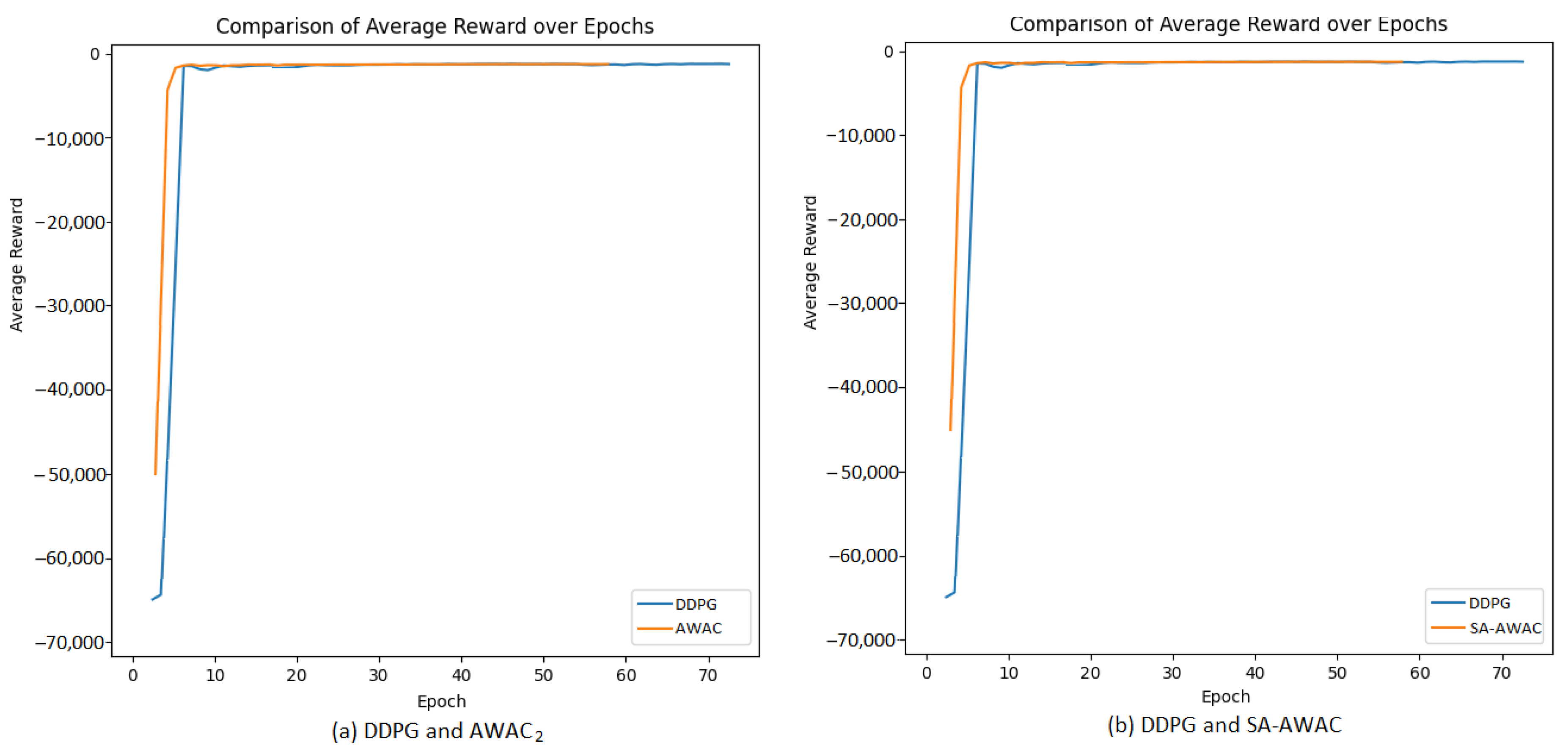

3.2.2. Results

1. AWAC1: all thermostat setpoints were randomly selected in [21 °C, 22 °C], and an AWAC replay buffer of 2000 experiences was created.
2. AWAC2: all thermostat setpoints were randomly selected in [22 °C, 23 °C], and an AWAC replay buffer of 2000 experiences was created.
3. AWAC3: all thermostat setpoints were randomly selected in [23 °C, 24 °C], and an AWAC replay buffer of 2000 experiences was created.
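A minimal sketch of how such offline datasets could be gathered: a random behaviour policy draws setpoints uniformly within each range, and the resulting transitions fill a buffer of 2000 experiences. `ToyThermalEnv` is a hypothetical one-zone stand-in for the simulated building (the actual set-up controls four floor setpoints), and resampling the setpoint at every step is an assumption.

```python
import random


class ToyThermalEnv:
    """Hypothetical single-zone stand-in for the building simulator (not the paper's model)."""

    def __init__(self, outdoor_temp=10.0):
        self.outdoor_temp = outdoor_temp
        self.zone_temp = 20.0

    def reset(self):
        self.zone_temp = 20.0
        return (self.outdoor_temp, self.zone_temp)

    def step(self, setpoint):
        # Toy energy model: heating effort grows with the gap to the setpoint and to outdoors.
        energy = max(setpoint - self.zone_temp, 0.0) + 0.1 * max(setpoint - self.outdoor_temp, 0.0)
        # First-order response: the zone moves halfway toward the setpoint each step.
        self.zone_temp += 0.5 * (setpoint - self.zone_temp)
        return (self.outdoor_temp, self.zone_temp), -energy


def collect_buffer(env, low, high, size=2000, seed=0):
    """Build an offline buffer of (s, a, r, s') tuples with setpoints drawn uniformly in [low, high]."""
    rng = random.Random(seed)
    buffer = []
    state = env.reset()
    while len(buffer) < size:
        setpoint = rng.uniform(low, high)
        next_state, reward = env.step(setpoint)
        buffer.append((state, setpoint, reward, next_state))
        state = next_state
    return buffer


# Setpoint ranges of the three offline datasets listed above.
DATASET_RANGES = {"AWAC1": (21.0, 22.0), "AWAC2": (22.0, 23.0), "AWAC3": (23.0, 24.0)}
buffers = {
    name: collect_buffer(ToyThermalEnv(), low, high)
    for name, (low, high) in DATASET_RANGES.items()
}
print({name: len(buf) for name, buf in buffers.items()})
```

Each buffer covers only a narrow band of setpoints, which is what makes the three AWAC variants comparable: they differ only in the behaviour data they learn from.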

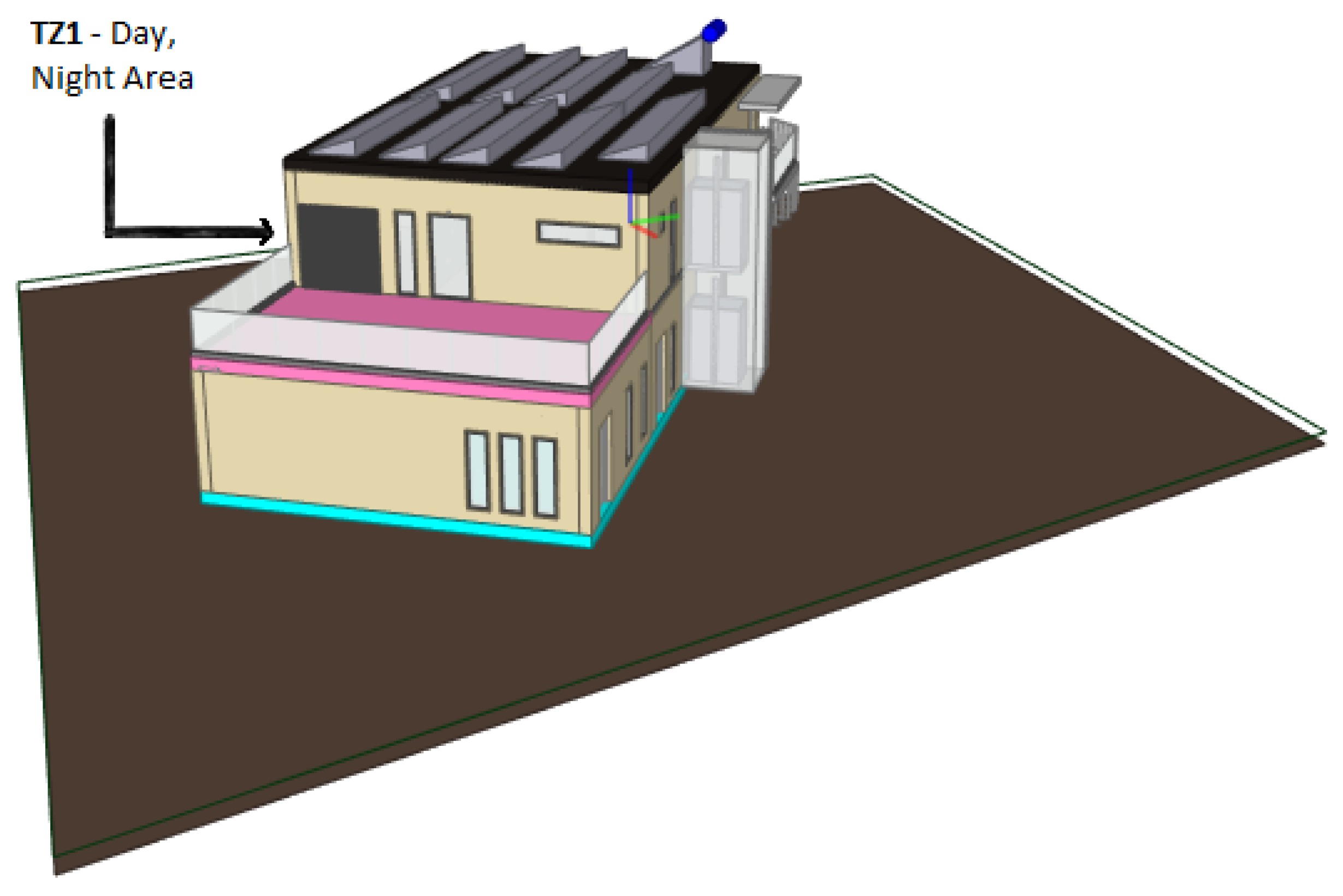

3.3. Real-Life Use Case

3.3.1. Set-Up Configuration

3.3.2. Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. US EIA. Global Energy & CO2 Status Report: The Latest Trends in Energy and Emissions in 2018; US EIA: Washington, DC, USA, 2018.
2. Korkas, C.; Dimara, A.; Michailidis, I.; Krinidis, S.; Marin-Perez, R.; Martínez García, A.I.; Skarmeta, A.; Kitsikoudis, K.; Kosmatopoulos, E.; Anagnostopoulos, C.N.; et al. Integration and Verification of PLUG-N-HARVEST ICT Platform for Intelligent Management of Buildings. Energies 2022, 15, 2610.
3. Papaioannou, A.; Dimara, A.; Michailidis, I.; Stefanopoulou, A.; Karatzinis, G.; Krinidis, S.; Anagnostopoulos, C.N.; Kosmatopoulos, E.; Ioannidis, D.; Tzovaras, D. Self-protection of IoT Gateways Against Breakdowns and Failures Enabling Automated Sensing and Control. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, León, Spain, 14–17 June 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 231–241.
4. Şerban, A.C.; Lytras, M.D. Artificial intelligence for smart renewable energy sector in europe—Smart energy infrastructures for next generation smart cities. IEEE Access 2020, 8, 77364–77377.
5. Alanne, K.; Sierla, S. An overview of machine learning applications for smart buildings. Sustain. Cities Soc. 2022, 76, 103445.
6. Lamnatou, C.; Chemisana, D.; Cristofari, C. Smart grids and smart technologies in relation to photovoltaics, storage systems, buildings and the environment. Renew. Energy 2022, 185, 1376–1391.
7. Labonnote, N.; Høyland, K. Smart home technologies that support independent living: Challenges and opportunities for the building industry—A systematic mapping study. Intell. Build. Int. 2017, 9, 40–63.
8. Huang, Q. Energy-Efficient Smart Building Driven by Emerging Sensing, Communication, and Machine Learning Technologies. Eng. Lett. 2018, 26, 3.
9. Nair, A.; Gupta, A.; Dalal, M.; Levine, S. Awac: Accelerating online reinforcement learning with offline datasets. arXiv 2020, arXiv:2006.09359.
10. Rager, M.; Gahm, C.; Denz, F. Energy-oriented scheduling based on evolutionary algorithms. Comput. Oper. Res. 2015, 54, 218–231.
11. Touqeer, H.; Zaman, S.; Amin, R.; Hussain, M.; Al-Turjman, F.; Bilal, M. Smart home security: Challenges, issues and solutions at different IoT layers. J. Supercomput. 2021, 77, 14053–14089.
12. Zaidan, A.; Zaidan, B. A review on intelligent process for smart home applications based on IoT: Coherent taxonomy, motivation, open challenges, and recommendations. Artif. Intell. Rev. 2020, 53, 141–165.
13. Nguyen, N.H.; Le, B.C.; Bui, T.T. Benefit Analysis of Grid-Connected Floating Photovoltaic System on the Hydropower Reservoir. Appl. Sci. 2023, 13, 2948.
14. Han, J.; Choi, C.S.; Park, W.K.; Lee, I.; Kim, S.H. Smart home energy management system including renewable energy based on ZigBee and PLC. IEEE Trans. Consum. Electron. 2014, 60, 198–202.
15. Sathisshkumar, A.; Jayamani, S. Renewable energy management system in home appliance. In Proceedings of the 2015 International Conference on Circuits, Power and Computing Technologies [ICCPCT-2015], Nagercoil, India, 19–20 March 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–4.
16. Kim, D.; Yoon, Y.; Lee, J.; Mago, P.J.; Lee, K.; Cho, H. Design and implementation of smart buildings: A review of current research trend. Energies 2022, 15, 4278.
17. Chernbumroong, S.; Atkins, A.; Yu, H. Perception of smart home technologies to assist elderly people. In Proceedings of the 4th International Conference on Software, Knowledge, Information Management and Applications, Suwon, Republic of Korea, 14–15 January 2010; pp. 90–97.
18. Tao, M.; Zuo, J.; Liu, Z.; Castiglione, A.; Palmieri, F. Multi-layer cloud architectural model and ontology-based security service framework for IoT-based smart homes. Future Gener. Comput. Syst. 2018, 78, 1040–1051.
19. Elkhorchani, H.; Grayaa, K. Novel home energy management system using wireless communication technologies for carbon emission reduction within a smart grid. J. Clean. Prod. 2016, 135, 950–962.
20. Fang, X.; Gong, G.; Li, G.; Chun, L.; Peng, P.; Li, W.; Shi, X.; Chen, X. Deep reinforcement learning optimal control strategy for temperature setpoint real-time reset in multi-zone building HVAC system. Appl. Therm. Eng. 2022, 212, 118552.
21. Badar, A.Q.; Anvari-Moghaddam, A. Smart home energy management system—A review. Adv. Build. Energy Res. 2022, 16, 118–143.
22. Yu, L.; Xie, W.; Xie, D.; Zou, Y.; Zhang, D.; Sun, Z.; Zhang, L.; Zhang, Y.; Jiang, T. Deep reinforcement learning for smart home energy management. IEEE Internet Things J. 2019, 7, 2751–2762.
23. Ye, Y.; Qiu, D.; Wang, H.; Tang, Y.; Strbac, G. Real-time autonomous residential demand response management based on twin delayed deep deterministic policy gradient learning. Energies 2021, 14, 531.
24. Zanette, A.; Wainwright, M.J.; Brunskill, E. Provable benefits of actor-critic methods for offline reinforcement learning. Adv. Neural Inf. Process. Syst. 2021, 34, 13626–13640.
25. Koohang, A.; Sargent, C.S.; Nord, J.H.; Paliszkiewicz, J. Internet of Things (IoT): From awareness to continued use. Int. J. Inf. Manag. 2022, 62, 102442.
26. Friess, P.; Ibanez, F. Putting the Internet of Things forward to the next level. In Internet of Things Applications—From Research and Innovation to Market Deployment; River Publishers: Aalborg, Denmark, 2022; pp. 3–6.
27. Mahapatra, B.; Nayyar, A. Home energy management system (HEMS): Concept, architecture, infrastructure, challenges and energy management schemes. Energy Syst. 2022, 13, 643–669.
28. Ramalingam, S.P.; Shanmugam, P.K. A Comprehensive Review on Wired and Wireless Communication Technologies and Challenges in Smart Residential Buildings. Recent Adv. Comput. Sci. Commun. (Former. Recent Patents Comput. Sci.) 2022, 15, 1140–1167.
29. Banerji, S.; Chowdhury, R.S. On IEEE 802.11: Wireless LAN technology. arXiv 2013, arXiv:1307.2661.
30. D’Ortona, C.; Tarchi, D.; Raffaelli, C. Open-Source MQTT-Based End-to-End IoT System for Smart City Scenarios. Future Internet 2022, 14, 57.
31. MQTT: The Standard for IoT Messaging. Available online: https://mqtt.org/ (accessed on 15 May 2023).
32. Dimara, A.; Vasilopoulos, V.G.; Papaioannou, A.; Angelis, S.; Kotis, K.; Anagnostopoulos, C.N.; Krinidis, S.; Ioannidis, D.; Tzovaras, D. Self-healing of semantically interoperable smart and prescriptive edge devices in IoT. Appl. Sci. 2022, 12, 11650.
33. Villaflor, A.; Dolan, J.; Schneider, J. Fine-Tuning Offline Reinforcement Learning with Model-Based Policy Optimization. 2020. Available online: https://openreview.net/forum?id=wiSgdeJ29ee (accessed on 19 December 2023).
34. Comanici, G.; Precup, D. Optimal policy switching algorithms for reinforcement learning. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems, Toronto, ON, Canada, 10–14 May 2010; Volume 1, pp. 709–714.
35. Grondman, I.; Busoniu, L.; Lopes, G.A.D.; Babuska, R. A Survey of Actor-Critic Reinforcement Learning: Standard and Natural Policy Gradients. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2012, 42, 1291–1307.
36. Kaushal, A.A.; Anand, P.; Aithal, B.H. Assessment of the Impact of Building Orientation on PMV and PPD in Naturally Ventilated Rooms during Summers in Warm and Humid Climate of Kharagpur, India. In Proceedings of the 10th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, Istanbul, Turkey, 15–16 November 2023; pp. 528–533.
37. Wu, J.; Hou, Z.; Shen, J.; Lian, Z. A method for the determination of optimal indoor environmental parameters range considering work performance. J. Build. Eng. 2021, 35, 101976.
38. Dimara, A.; Timplalexis, C.; Krinidis, S.; Schneider, C.; Bertocchi, M.; Tzovaras, D. Optimal comfort conditions in residential houses. In Proceedings of the 2020 5th International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 23–26 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.
39. Scharnhorst, P.; Schubnel, B.; Fernández Bandera, C.; Salom, J.; Taddeo, P.; Boegli, M.; Gorecki, T.; Stauffer, Y.; Peppas, A.; Politi, C. Energym: A building model library for controller benchmarking. Appl. Sci. 2021, 11, 3518.

| Outdoor Temperature | Season | Optimal Conditions | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|---|---|
| <15 °C | Winter | 21–23 °C | 21 °C | 22 °C | 23 °C |
| 15–28 °C | Spring, Autumn | 22–24 °C | 22 °C | 23 °C | 24 °C |
| >28 °C | Summer | 23–25 °C | 23 °C | 24 °C | 25 °C |
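The seasonal table can be read as a simple lookup from outdoor temperature to a scenario setpoint. The sketch below assumes that exactly 15 °C and 28 °C fall in the spring/autumn band, since the table does not state how the boundaries are handled; the function names are hypothetical.

```python
# Setpoints (°C) for scenarios 1, 2 and 3, transcribed from the seasonal table.
SCENARIO_SETPOINTS = {
    "winter": (21.0, 22.0, 23.0),         # outdoor temperature below 15 °C
    "spring_autumn": (22.0, 23.0, 24.0),  # 15–28 °C (boundaries assumed inclusive)
    "summer": (23.0, 24.0, 25.0),         # above 28 °C
}


def season_for(outdoor_temp):
    """Map an outdoor temperature (°C) to the season band used in the table."""
    if outdoor_temp < 15.0:
        return "winter"
    if outdoor_temp <= 28.0:
        return "spring_autumn"
    return "summer"


def scenario_setpoint(outdoor_temp, scenario):
    """Return the setpoint for scenario 1, 2 or 3 at the given outdoor temperature."""
    if scenario not in (1, 2, 3):
        raise ValueError("scenario must be 1, 2 or 3")
    return SCENARIO_SETPOINTS[season_for(outdoor_temp)][scenario - 1]


print(scenario_setpoint(5.0, 1), scenario_setpoint(20.0, 3), scenario_setpoint(30.0, 2))
```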

| Variable Name | Type | Lower Bound | Upper Bound | Description |
|---|---|---|---|---|
| Ext_T | scalar | −10 | 40 | Outdoor temperature (°C) |
| Ext_RH | scalar | 0 | 100 | Outdoor relative humidity (%RH) |
| Ext_Irr | scalar | 0 | 1000 | Direct normal radiation (W/m²) |
| Ext_P | scalar | 80,000.0 | 130,000.0 | Outdoor air pressure (Pa) |
| P1_T_Thermostat_sp_out | scalar | 16 | 26 | Floor 1 thermostat temperature (°C) |
| P2_T_Thermostat_sp_out | scalar | 16 | 26 | Floor 2 thermostat temperature (°C) |
| P3_T_Thermostat_sp_out | scalar | 16 | 26 | Floor 3 thermostat temperature (°C) |
| P4_T_Thermostat_sp_out | scalar | 16 | 26 | Floor 4 thermostat temperature (°C) |
| Z01_T | scalar | 10 | 40 | Zone 1 temperature (°C) |
| Z01_RH | scalar | 0 | 100 | Zone 1 relative humidity (%RH) |
| Z02_T | scalar | 10 | 40 | Zone 2 temperature (°C) |
| Z02_RH | scalar | 0 | 100 | Zone 2 relative humidity (%RH) |
| Z03_T | scalar | 10 | 40 | Zone 3 temperature (°C) |
| Z03_RH | scalar | 0 | 100 | Zone 3 relative humidity (%RH) |
| Z04_T | scalar | 10 | 40 | Zone 4 temperature (°C) |
| Z04_RH | scalar | 0 | 100 | Zone 4 relative humidity (%RH) |
| Z05_T | scalar | 10 | 40 | Zone 5 temperature (°C) |
| Z05_RH | scalar | 0 | 100 | Zone 5 relative humidity (%RH) |
| Z06_T | scalar | 10 | 40 | Zone 6 temperature (°C) |
| Z06_RH | scalar | 0 | 100 | Zone 6 relative humidity (%RH) |
| Z07_T | scalar | 10 | 40 | Zone 7 temperature (°C) |
| Z07_RH | scalar | 0 | 100 | Zone 7 relative humidity (%RH) |
| Z08_T | scalar | 10 | 40 | Zone 8 temperature (°C) |
| Z08_RH | scalar | 0 | 100 | Zone 8 relative humidity (%RH) |
| FA_ECh_Bat | scalar | 0 | 4000.0 | Battery charging energy (Wh) |
| Bd_FracCh_Bat | scalar | 0 | 1 | Battery state of charge |

| Variable Name | Type | Lower Bound | Upper Bound | Description |
|---|---|---|---|---|
| P1_T_Thermostat_sp | scalar | 16 | 26 | Floor 1 thermostat setpoint (°C) |
| P2_T_Thermostat_sp | scalar | 16 | 26 | Floor 2 thermostat setpoint (°C) |
| P3_T_Thermostat_sp | scalar | 16 | 26 | Floor 3 thermostat setpoint (°C) |
| P4_T_Thermostat_sp | scalar | 16 | 26 | Floor 4 thermostat setpoint (°C) |

| RL Algorithm | Cumulative Reward | Percentage Diff. vs. SA-AWAC (%) |
|---|---|---|
| DDPG | −48,883 | 44.16% |
| AWAC1 | −34,205 | 20.19% |
| AWAC2 | −31,523 | 13.40% |
| AWAC3 | −32,424 | 15.81% |
| SA-AWAC | −27,296 | - |

| RL Algorithm | Cumulative Reward | Percentage Diff. vs. SA-AWAC (%) |
|---|---|---|
| RBC19 | −114,138 | >100% |
| RBC20 | −112,201 | >100% |
| RBC21 | −42,165 | >100% |
| RBC22 | −1633 | 37.34% |
| RBC23 | −2417 | >100% |
| DDPG | −1257 | 5.71% |
| AWAC1 | −1390 | 16.9% |
| AWAC2 | −1239 | 4.2% |
| AWAC3 | −1241 | 4.37% |
| SA-AWAC | −1189 | - |

| Type | Description | Model | Protocol |
|---|---|---|---|
| Actuator | Wireless light dimmer switch | EnOcean Wireless Dimmer 1–10 V model FSG71/ | EnOcean |
| Sensor | Multisensor | Fibaro Fgms-001-zw5 Motion Detector | Z-Wave |
| Sensor | Temperature-humidity sensor | Plugwise | Zigbee |
| Sensor | Luminance sensor | FIH65B EnOcean Wireless Indoor Luminance | EnOcean |
| Device/Actuator | Heating, ventilation, and air conditioning (HVAC) system | LG ceiling-mounted cassette and HVAC controller | Wi-Fi |
| Energy analyzer | Energy meter | Carlo Gavazzi three-phase | Modbus |

| Variable Name | Type | Lower Bound | Upper Bound | Description |
|---|---|---|---|---|
| Ext_T | scalar | −10 | 40 | Outdoor temperature (°C) |
| Ext_RH | scalar | 0 | 100 | Outdoor relative humidity (%RH) |
| P1_T_Thermostat_sp_out | scalar | 16 | 26 | Room 1 thermostat temperature (°C) |
| Z01_T | scalar | 10 | 40 | Zone 1 temperature (°C) |
| Z01_RH | scalar | 0 | 100 | Zone 1 relative humidity (%RH) |

| Variable Name | Type | Lower Bound | Upper Bound | Description |
|---|---|---|---|---|
| P1_T_Thermostat_sp | scalar | 16 | 26 | Floor 1 thermostat setpoint (°C) |

| RL Algorithm | Cumulative Reward | Percentage Diff. vs. SA-AWAC (%) |
|---|---|---|
| DDPG | −65,498 | 34.91% |
| AWAC1 | −51,434 | 5.94% |
| AWAC2 | −49,766 | 2.50% |
| AWAC3 | −50,325 | 3.65% |
| SA-AWAC | −48,549 | - |

| RL Algorithm | Cumulative Reward | Percentage Diff. vs. SA-AWAC (%) |
|---|---|---|
| RBC19 | −136,328 | >100% |
| RBC20 | −135,267 | >100% |
| RBC21 | −67,249 | >100% |
| RBC22 | −1997 | 31.2% |
| RBC23 | −3060 | >100% |
| DDPG | −1658 | 8.9% |
| AWAC1 | −1717 | 12.81% |
| AWAC2 | −1577 | 3.61% |
| AWAC3 | −1673 | 9.92% |
| SA-AWAC | −1522 | - |
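The "Percentage Diff" columns can be checked with a short sketch. Under our reading, in the tables whose SA-AWAC entries are −1189, −48,549, and −1522, the gap to SA-AWAC is divided by the magnitude of SA-AWAC's cumulative reward; in the table whose SA-AWAC entry is −27,296, the reported values (e.g. 44.16% for DDPG) are reproduced only when the compared algorithm's reward is used as the denominator instead. The helper name `pct_diff` is hypothetical.

```python
def pct_diff(value, reference):
    """Relative gap |value - reference| / |reference|, expressed in percent."""
    return abs(value - reference) / abs(reference) * 100.0


# Cumulative rewards from the table whose SA-AWAC entry is -48,549 (SA-AWAC as denominator).
sa_awac = -48_549
others = {"DDPG": -65_498, "AWAC1": -51_434, "AWAC2": -49_766, "AWAC3": -50_325}
for name, value in others.items():
    print(f"{name}: {pct_diff(value, sa_awac):.2f}%")

# Table whose SA-AWAC entry is -27,296: the compared algorithm (DDPG) is the denominator.
print(f"DDPG: {pct_diff(-27_296, -48_883):.2f}%")
```

Computed this way, the figures agree with the tabulated ones to within about 0.01 percentage points; small residual differences (for example 2.51% computed versus 2.50% reported for AWAC2) suggest the tables truncate rather than round.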

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Papaioannou, I.; Dimara, A.; Korkas, C.; Michailidis, I.; Papaioannou, A.; Anagnostopoulos, C.-N.; Kosmatopoulos, E.; Krinidis, S.; Tzovaras, D. An Applied Framework for Smarter Buildings Exploiting a Self-Adapted Advantage Weighted Actor-Critic. Energies 2024, 17, 616. https://doi.org/10.3390/en17030616
