Coping with the energy and environmental crises makes the energy transition imperative. By bringing the links between various energy systems closer, integrated renewable energy systems (IRESs) have become an important method of energy utilization in this transition, and their development is expected to remain important in the future. IRESs emphasize the integration of the generation, conversion, storage, distribution, and consumption of multiple forms of energy [1], which can greatly improve the utilization rate of energy. Unlike traditional energy systems, which forecast a single load, IRESs require a multivariate load forecast that contains complex coupled information, which places higher requirements on the accuracy of the load forecast.

1.1. Related Work

The key to improving the accuracy of load forecasting lies in the selection of the forecasting model and the extraction of valid information from raw data. Load forecasting models can be broadly categorized into traditional machine learning methods and deep learning methods.

For traditional machine learning, Ref. [2] proposes a power load forecasting model based on phase space reconstruction and the support vector machine (SVM). Ref. [3] uses the least squares support vector machine (LSSVM) combined with the multitask learning weight sharing mechanism for IRES multivariate load forecasting, and Ref. [4] compares a variety of regression models and ultimately recommends the Gaussian process regression model for power load forecasting.

Deep learning methods stack multi-layer neural networks to construct load forecasting models. Ref. [5] developed a complex neural network (ComNN) for multivariate load forecasting. Ref. [6] utilized a long short-term memory (LSTM) neural network combined with genetic algorithms (GAs) for pre-training, and then fine-tuned it using transfer learning to build the multivariate load forecasting model GA-LSTM. Ref. [7] proposed a multivariate load prediction model combining the transformer and the Gaussian process.

In order to further improve the overall performance of the model and fully exploit the effective information of relevant features and historical load data, signal decomposition techniques and convolutional neural networks are applied in load forecasting.

For signal decomposition techniques, Ref. [8] utilized the backpropagation (BP) neural network for the initial load prediction and improved complete ensemble empirical mode decomposition with adaptive noise (ICEEMDAN) to decompose and reconstruct the results; the gated recurrent unit (GRU) algorithm was then used for the further prediction of the reconstructed components, and its output was combined with the prediction results of the BP neural network. Ref. [9] proposed a two-stage decomposition hybrid forecasting model for the multivariate load forecasting of IRESs by combining modal decomposition with the bidirectional long short-term memory neural network (Bi-LSTM). Ref. [10] utilized variational mode decomposition (VMD) with the k-means clustering algorithm (FK) combined with the SecureBoost model for the forecasting of electricity load. Ref. [11] used a combination of complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) and VMD to form a secondary mode decomposition, in which CEEMDAN decomposes the load sequence into multiple subsequences and VMD further decomposes the high-frequency components for multivariate load prediction. Ref. [12] combines ICEEMDAN and successive variational mode decomposition (SVMD) to form an aggregated hybrid mode decomposition (AHMD); the multivariate linear regression (MLR) model and the temporal convolutional network-bidirectional gated recurrent unit (TCN-BiGRU) fusion neural network are then used to predict the low-frequency components and the medium–high-frequency components, respectively, and the prediction results are superimposed for IES load prediction. In Ref. [13], modal decomposition is combined with the multitask learning model: the medium- and high-frequency sequences of the multivariate loads after modal decomposition are input into a multitask transformer model for prediction, the low-frequency sequences are input into a multitask bidirectional gated recurrent unit for prediction, and the two sets of results are superimposed to obtain the final prediction results.

In terms of the convolutional neural network, Ref. [14] performed feature extraction by fusing the convolutional neural network (CNN) and GRU, followed by a multitask learning approach to build a multivariate load prediction model. Ref. [15] utilized the multi-channel convolutional neural network (MCNN) to extract high-dimensional spatial features and combined it with the LSTM network for IRES load prediction. Ref. [16], on the other hand, used a three-layer CNN to extract features, followed by load prediction using the transformer model.

The analysis of the previous related research revealed the following aspects that can be improved in the research related to multivariate load forecasting:

- (1) Most feature extraction methods rely on traditional modal decomposition and convolutional neural networks; more advanced networks can be adopted and improved to enhance the model’s feature analysis capability and improve the model’s performance.

- (2) Most of the deep prediction models in the research use traditional recurrent neural networks; other types of network structures can be used to capture correlations between sequences and to improve the ability of deep prediction models to parallelize computation and to learn the long-term dependencies of sequences.

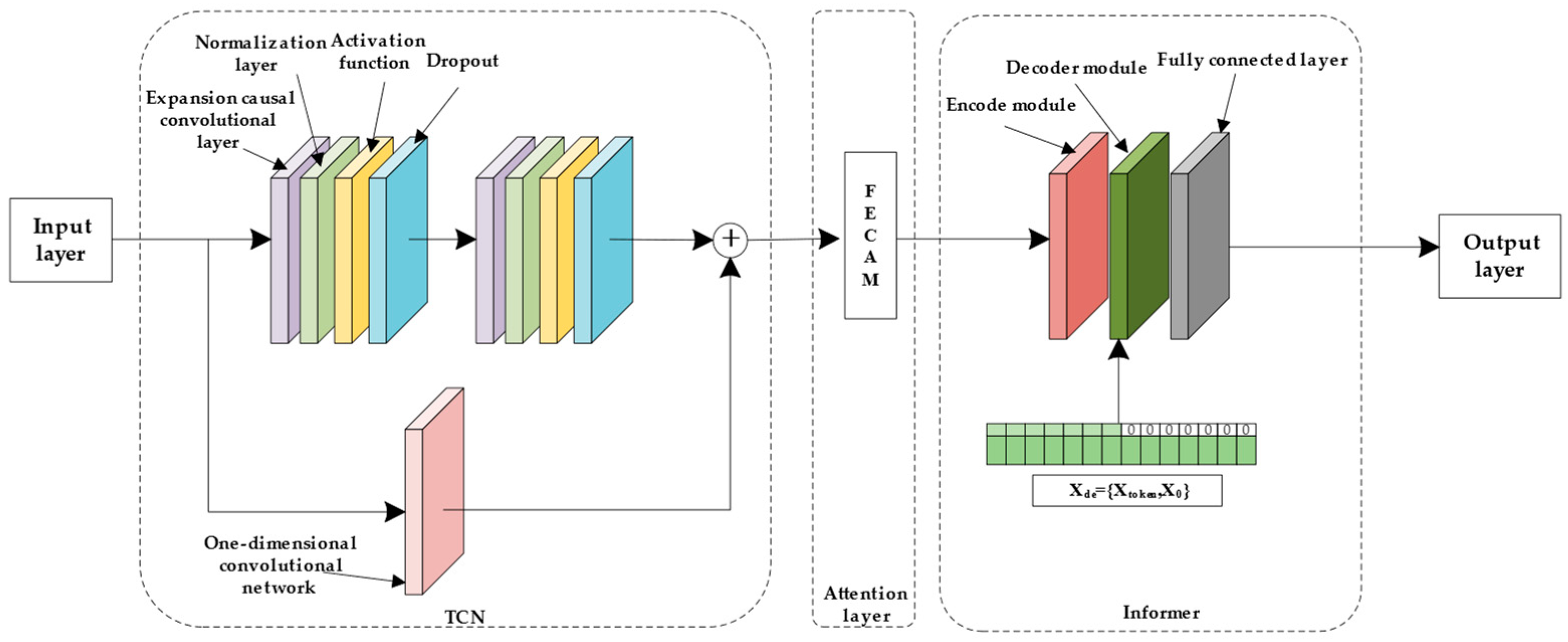

Therefore, a multivariate load forecasting model combining an improved temporal convolutional network (TCN) and the Informer network is proposed. First, the channel attention mechanism (CAM) and the discrete cosine transform (DCT) are combined with the TCN to improve the feature processing capability of the model. Second, the overall prediction model is constructed by combining the improved TCN with the Informer network. Finally, the model’s effectiveness in multivariate load forecasting is demonstrated through a case study.
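To make the idea of pairing the DCT with channel attention more concrete, the following is a minimal sketch, not the model proposed here. It assumes that each feature channel is summarized by its lowest-frequency DCT coefficients and that the summary is turned into a channel weight by a fixed sigmoid gate; an actual CAM would learn this mapping with trainable layers. The names `dct2`, `dct_channel_attention`, `num_freqs`, and `scale` are hypothetical and chosen only for illustration.

```python
import math


def dct2(x):
    """Unnormalized DCT-II of a 1-D sequence (direct O(n^2) form)."""
    n = len(x)
    return [
        sum(x[t] * math.cos(math.pi * (t + 0.5) * k / n) for t in range(n))
        for k in range(n)
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dct_channel_attention(features, num_freqs=2, scale=1.0):
    """Reweight channels using a DCT-based descriptor.

    features: list of channels, each a list of values over time.
    num_freqs, scale: illustrative (assumed) hyperparameters.
    Returns (weights, reweighted_features).
    """
    n_time = len(features[0])
    weights = []
    for channel in features:
        coeffs = dct2(channel)
        # Assumed descriptor: mean magnitude of the lowest-frequency
        # coefficients, divided by the sequence length. The k = 0
        # coefficient equals the sum over time, so with num_freqs = 1
        # this reduces to the absolute value of the channel mean.
        descriptor = sum(abs(c) for c in coeffs[:num_freqs]) / (num_freqs * n_time)
        # Fixed sigmoid gate standing in for the learned layers of a CAM.
        weights.append(sigmoid(scale * descriptor))
    reweighted = [[w * v for v in ch] for w, ch in zip(weights, features)]
    return weights, reweighted


if __name__ == "__main__":
    # Two toy channels: a constant signal and an all-zero signal.
    toy = [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]
    w, out = dct_channel_attention(toy, num_freqs=1)
    print("channel weights:", w)
    print("reweighted features:", out)
```

In this sketch, setting `num_freqs=1` reduces the descriptor to a scaled global average, so including more coefficients is what lets the attention weights respond to how a channel varies over time as well as to its mean level.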