1. Introduction

Fresh water, one of the necessities of existence, is insufficiently accessible to billions of people worldwide. Although governments and humanitarian organizations have recently helped many people living in water-stressed areas gain access, the problem is expected to worsen because of rapid population growth. About 97% of the water on Earth is salty, and almost 800 million people lack access to clean drinking water. Furthermore, it is anticipated that by 2050, 50% of the world’s water will be utilized [1]. Water scarcity is of two main types: physical scarcity, which occurs when regional ecological circumstances limit the available water, and economic scarcity, which occurs when water infrastructure is insufficient. Most experts agree that the Middle East and North Africa (MENA) region experiences the most physical water stress. The MENA region receives less rainfall than other places, yet several of its nations have rapidly expanding, densely populated urban areas that demand more water. Nevertheless, many of these nations, particularly the wealthier ones, continue to meet their water needs. Desalination is a practical solution to the problem of freshwater resources, a problem that grows in step with population. Numerous water desalination techniques have been employed to address this issue. Owing to their straightforward construction, long operational lives, and affordable clean water production, solar stills (SSs) are the most widely used desalination technique. When combined with low-grade and renewable energy sources such as wind and solar, the SS, which is seen as a promising candidate for desalination, offers a way to lower dependence on fossil fuels while also delivering environmental benefits. The output of an SS, however, is low. Numerous efforts have therefore been made to increase the output of SSs. One technique used to enhance SS performance is hybridization with other processes, such as humidification-dehumidification (HDH). A hybrid desalination system combines two or more processes in order to produce a more cost-effective product, provide a better match between power demand and water requirements, and achieve the best possible combination of the properties of the combined processes [2]. Three types of models have been used to predict the effectiveness of solar stills and other desalination techniques: numerical solutions [3], regression models [4], and Artificial Neural Network (ANN) and machine learning techniques, which are built on actual experimental measurements and are widely used in many aspects of energy systems, including desalination systems [5].

ANNs serve as a prediction model and classification strategy for simulating complex interactions between sets of cause-and-effect variables or discovering patterns in data. They are potentially applicable to transient detection, pattern recognition, approximation, and time series prediction [6,7]. An ANN is an information-processing system that mimics how the human brain works. Neurons are the central component of this network, and they solve problems by working together. A neural network is helpful in situations where the structure must be extracted from existing data to formulate an algorithmic solution [8,9]. Meta-heuristic algorithms are among the most powerful techniques available for resolving challenges encountered in a variety of application contexts [10]. Most of these algorithms derive their logic from physical processes found in the natural world. These optimization methods often yield acceptable solutions with little computational effort and in a reasonable amount of time. This has led to the suggestion of several forms of artificial intelligence (AI) for use in solar desalination systems [11,12]. In the ensemble method, the objective is to build a prediction model by integrating the features of several independent base models. This idea can be put into practice in numerous ways: some of the more effective approaches resample the training set, while others use different prediction algorithms or tune various parameters of the predictive strategy. Finally, the results of the individual predictions are combined [13,14]. The Al-Biruni Earth Radius (BER) optimization algorithm [15] performs worse as more variables are included in the optimization process, and it converges prematurely. Its significant advantage is a successful balance between exploration and exploitation, and the suggested method makes use of this advantage. The Particle Swarm Optimization (PSO) algorithm [16], despite its ease of use and its ability to balance exploration and exploitation, has drawbacks including a low exploration rate and declining performance when many local optima are present. This study employs the Al-Biruni Earth Radius optimizer to exploit the advantages and overcome the drawbacks of the PSO technique.
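For readers unfamiliar with PSO, the following minimal sketch shows a basic global-best variant of the velocity and position update. It is not the BER–PSO algorithm proposed in this paper. The objective function `sphere`, the parameter values (`w`, `c1`, `c2`), the search bounds, and all function names are assumptions chosen only for illustration.

```python
import random


def sphere(x):
    """Toy objective (assumed for illustration): sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def pso(objective, dim=2, n_particles=20, n_iters=100,
        w=0.7, c1=1.5, c2=1.5, lo=-5.0, hi=5.0, seed=0):
    """Minimize `objective` with a basic global-best PSO.

    Returns (best_position, best_value). All defaults are illustrative.
    """
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                    # personal best positions
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]   # global best so far

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia term + pull toward the particle's own best
                # + pull toward the swarm's best
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # move, then clamp the coordinate to the search bounds
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


best_x, best_f = pso(sphere)
print(best_x, best_f)
```

Because every particle is attracted to a single global best, a swarm of this kind can settle early around one local optimum, which is the weakness noted above for problems with many local optima.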

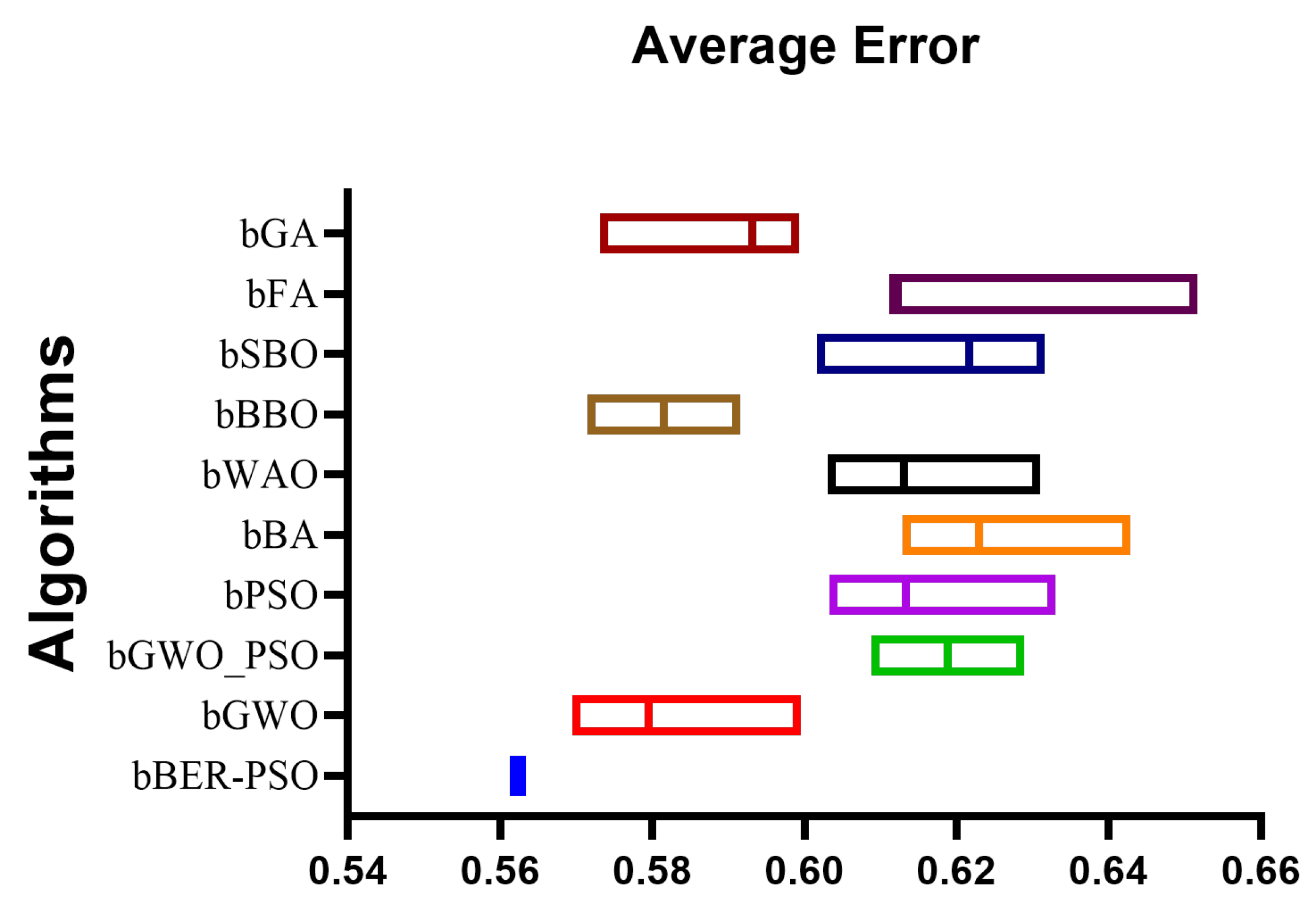

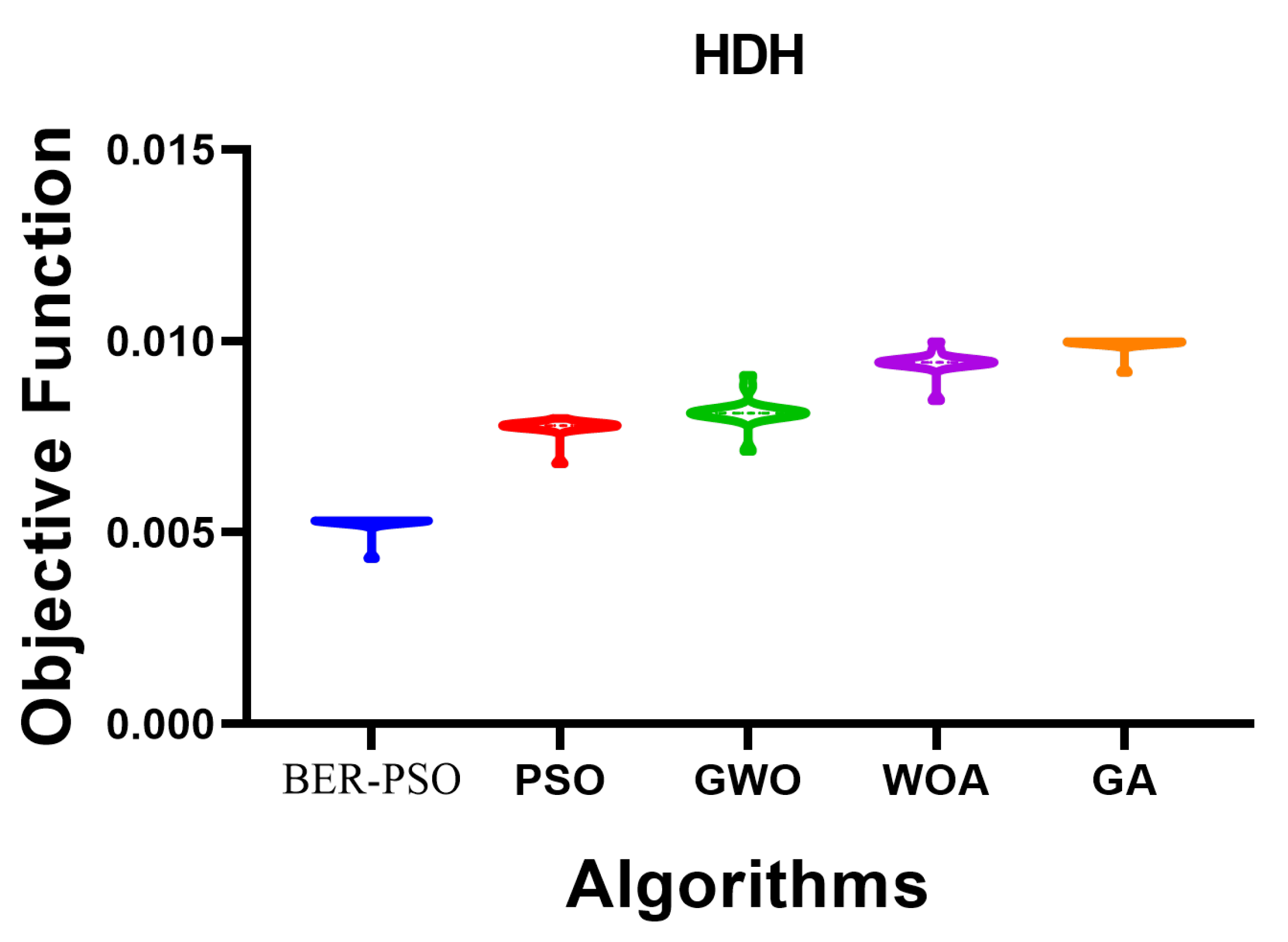

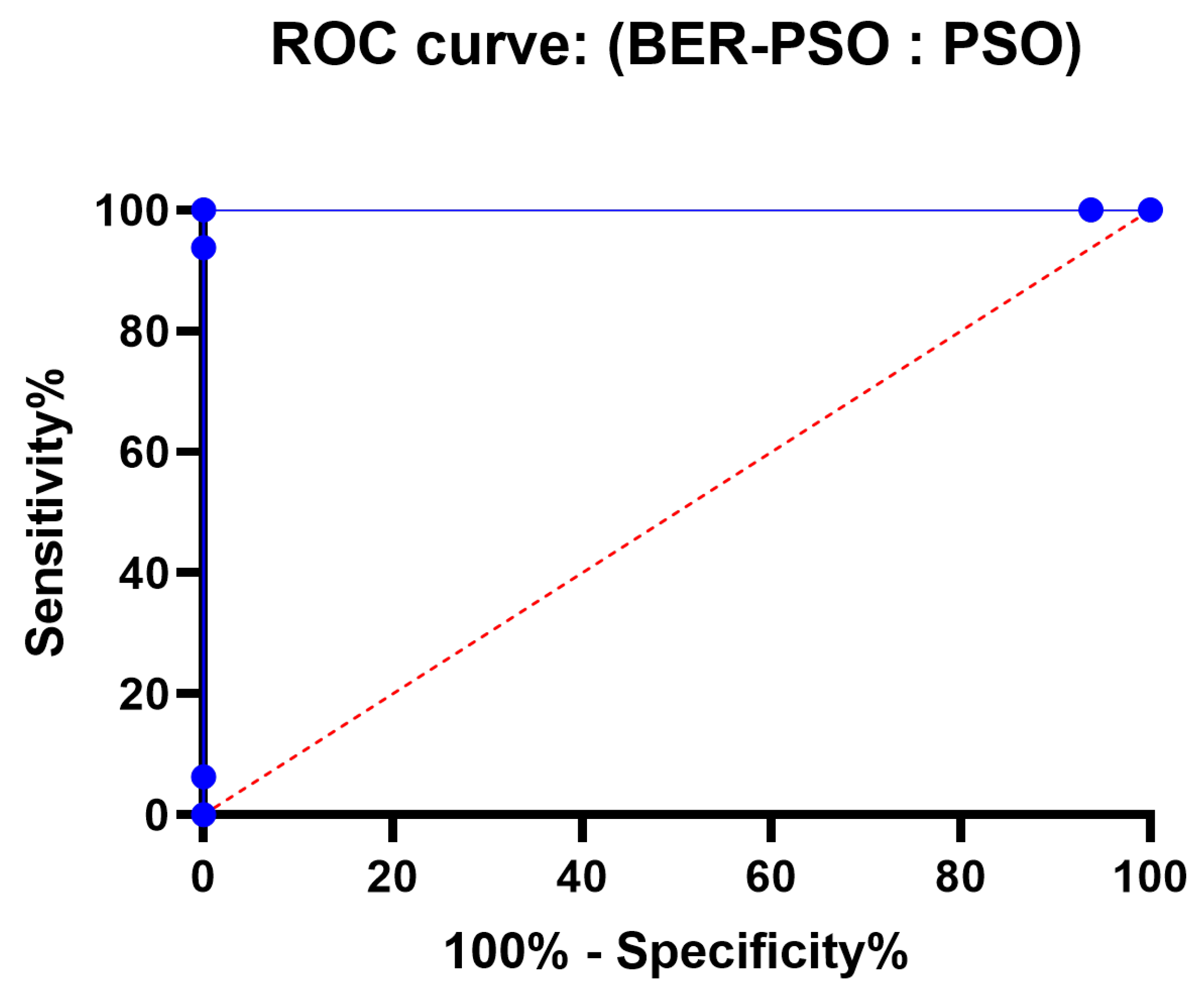

Kabeel and El-Said [17] offered a set of relations, based on measured values of water and air temperatures and yield, that help evaluate the hybrid desalination system (HDH-SSF). With the suggested BER–PSO classification model, excellent prediction accuracy is achieved for parameters including water yield, gained output ratio (GOR), cost, and thermal efficiency. The proposed BER–PSO technique is first applied, in a binary version, to feature selection from the tested dataset. The binary BER–PSO (bBER–PSO) algorithm is compared with PSO [16], Grey Wolf Optimizer (GWO) [18], a hybrid of PSO and GWO (GWO-PSO) [19], Whale Optimization Algorithm (WOA) [20], Biogeography-Based Optimizer (BBO) [21], Satin Bowerbird Optimizer (SBO) [22], Firefly Algorithm (FA) [23], Genetic Algorithm (GA) [24], and Bat Algorithm (BA) [25]. The tested dataset is then assessed using a classifier built on the BER–PSO algorithm. The BER–PSO algorithm is compared with the Decision Tree Regressor (DTR) [26], MLP Regressor (MLP) [27], K-Neighbors Regressor (KNR) [28], Support Vector Regression (SVR) [29], and Random Forest Regressor (RFR) [30] models. Additionally, two ensembles are used to create new estimators: an Average Ensemble (AVE) and an ensemble utilizing KNR (EKNR).
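The two ensembles named above can be illustrated with a short sketch. The text here does not specify how EKNR is constructed, so the form shown is an assumption: a k-nearest-neighbors regressor that takes the base models' predictions as its input features. The base-model predictions, the target values, and the function names are hypothetical and are not taken from the HDH-SSF dataset.

```python
import math


def average_ensemble(base_preds):
    """Average Ensemble (AVE): mean of the base models' predictions for one sample."""
    return sum(base_preds) / len(base_preds)


def knr_ensemble(train_base_preds, train_targets, query_base_preds, k=3):
    """KNR-based ensemble (assumed form, not confirmed by the source).

    Each sample is represented by the vector of its base-model predictions.
    The k training samples closest to the query (Euclidean distance) are
    found, and the mean of their true targets is returned.
    """
    dists = sorted(
        (math.dist(row, query_base_preds), y)
        for row, y in zip(train_base_preds, train_targets)
    )
    nearest = dists[:k]
    return sum(y for _, y in nearest) / len(nearest)


# Hypothetical predictions of three base regressors on four training samples
train_base_preds = [
    [1.0, 1.2, 0.9],
    [2.1, 1.9, 2.0],
    [3.0, 3.2, 2.9],
    [4.1, 3.8, 4.0],
]
train_targets = [1.0, 2.0, 3.0, 4.0]   # hypothetical measured values

query = [2.0, 2.0, 2.0]                # base predictions for a new sample
ave = average_ensemble(query)
eknr = knr_ensemble(train_base_preds, train_targets, query, k=1)
print(ave, eknr)
```

The average ensemble weights every base model equally, whereas a KNR-based combiner of this kind can learn from the training data which combinations of base predictions correspond to which measured values.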

The most important contributions of this work are summarized as follows:

- Novel machine-learning techniques are used to predict the impact of various design and operational parameters on the thermo-fluid performance of the HDH-SSF system.
- An improved Particle Swarm Optimization algorithm based on Al-Biruni Earth Radius (BER) optimization, named BER–PSO, is suggested.
- The binary variant of the suggested algorithm, the binary BER–PSO algorithm, is used to select features from the dataset under test.
- A BER–PSO-based classifier is introduced to raise the prediction accuracy on the tested dataset.
- The statistical significance of the BER–PSO algorithm’s results is assessed using the Wilcoxon rank-sum and ANOVA tests.
- Both the binary BER–PSO and the BER–PSO-based classification algorithms can be tested on a variety of datasets.
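As an illustration of the Wilcoxon rank-sum test named in the contributions, the sketch below computes the two-sided test with the normal approximation and without a tie correction of the variance. The two samples are hypothetical error values for two optimizers over eight runs and are not results from this study. In practice a library routine such as SciPy’s `scipy.stats.ranksums` would normally be used instead of hand-written code.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation, no tie correction).

    Returns (z, p_value), where z is computed from the rank sum of sample `a`.
    """
    # Pool both samples, remembering which group each value came from
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # extend j over a run of tied values
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1          # average of the 1-based ranks i+1..j+1
        for t in range(i, j + 1):
            ranks[t] = avg_rank
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)   # rank sum of `a`
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))    # two-sided p-value under N(0, 1)
    return z, p


# Hypothetical errors of two optimizers over eight independent runs
errors_a = [0.010, 0.012, 0.011, 0.013, 0.009, 0.012, 0.010, 0.011]
errors_b = [0.020, 0.022, 0.019, 0.025, 0.021, 0.023, 0.020, 0.024]

z, p = rank_sum_test(errors_a, errors_b)
print(z, p)
```

A small p-value indicates that the two samples are unlikely to come from the same distribution, so a difference between the two optimizers would be unlikely to arise by chance alone.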

The remainder of the paper is arranged as follows. Section 2 describes the system and the applications of AI. Section 3 introduces the machine learning (ML) methods used to estimate the HDH-SSF’s thermo-fluid performance parameters. Section 4 describes the proposed BER–PSO algorithm. Section 5 presents the performance metrics, statistical parameters, experimental results, findings, and discussion. Section 6 presents the study’s key conclusions.

2. Background

This study investigates the experimental setup of the hybrid desalination system (HDH-SSF) of Kabeel and El-Said [17]. The HDH-SSF system is based on two concepts: the air humidification and dehumidification process (HDH) and the single-stage flashing evaporation of saline water (SSF), as shown in Figure 1. The system consists mainly of a humidifier, a dehumidifier (water-cooled exchanger), an air heater (flat plate collector), a water heater (flat plate collector), and a flashing evaporation unit. The HDH system is made up of two loops, one for heating water and the other for heating air, with a packed tower as the humidifier. In the water loop, pump (P4) delivers water from the mixing tank and splits it into two major lines, a feeding line and a bypass line, both controlled by valves (V15). The heated saline water is sprayed at the top of the humidifier and drips to the bottom, from where it is sent to a mixing tank for reheating. A centrifugal blower, placed at the humidifier’s inlet, pulls air from the atmosphere into the air loop, where it passes through the solar air heater. The hot air passes through the humidifier and carries the evaporated water to the dehumidifier, which cools and dehumidifies the air to extract the fresh water. The SSF system consists mainly of a condenser and a flashing chamber. In the closed loop of saline water flow between the flashing chamber and the helical heat exchanger (HHEx), a portion of the saline water in the mixing tank (MT) flows to the HHEx as backup water, while the remainder is drained through valve (V5). Hot saline water from the heat exchanger is pumped to the flashing chamber, which is held at sub-atmospheric pressure, where it evaporates by flashing. The water vapor extracted from the flashing chamber passes to the condenser. The flashing unit condenser receives the cool saline water, which condenses the water vapor and is then discharged. The desalinated water collects in the bottom tray of the condenser, from which it is drawn and pumped to the product tank. The pressure drop affects the flashing evaporation, and a vacuum pump is used to evacuate the condenser and flashing chamber. The brine rejected from the humidifier is then combined with the saline water leaving the flashing unit condenser. Since the saline water is cold when it leaves the dehumidifier, it must be drained. The operational procedure of the experimental apparatus shown in Figure 1 can be described in the following steps [17]:

1. Fill the system’s saline water loops until the operational levels are reached.
2. Set and adjust the flow rates and temperature settings for the test case.
3. Run the electrical heater (EH) and pump (P1) in the solar water heater loop until the tank’s water temperature (TK1) reaches the desired level.
4. Set and adjust the opening and closing periods of the solenoid valve (SV1) in accordance with the flow rates of the backup water.
5. Operate the vacuum pump (P3) until the desired pressure is reached inside the flashing chamber.
6. Start the feeding pump (P2) to supply saline water to the flashing chamber.
7. Use the level indicator, water bleeding through valve (V4), and control valve (V8) to adjust the height of the brine pool.
8. Turn on the air blower.
9. Turn on the feeding pump (P4) to supply the saline water to the humidifier sprayers.
10. Run the cooling water pump (P6) to pump the saline water to the condenser (C1) and the dehumidifier.
11. Run the circulation pump (P5) to pump the saline water to the mixing tank (C2).

In this work, the performance of the HDH-SSF system is predicted using an enhanced prediction method based on a supervised machine-learning algorithm. It is essential to understand the intricate physical processes that occur inside the system, such as heat and mass transfer between the water and air streams and the formation of humid air. These processes make it difficult to evaluate the effects of the design and operational parameters on the HDH-SSF’s thermal and economic performance [31,32,33]. The presence of phase change processes is another difficulty that must be taken into account. Additionally, abrupt variations in the temperature and flow rate of the air and water streams cause complicated behavior [34]. The variations in the flow rates and temperatures of the air and water streams are therefore believed to be crucial parameters that affect the performance of the HDH-SSF. Consequently, more emphasis should be given to analyzing their implications for the overall performance of hybrid desalination systems such as the HDH-SSF, which involve thermo-fluid processes. Most evaluations of the effect of design and operational parameters on the HDH-SSF’s performance are conducted using numerical and experimental methodologies. In numerical approaches, mathematical problems involving complicated nonlinear systems must be solved with the help of simplifying assumptions and numerical methods [35]. In experimental approaches, by contrast, expensive and time-consuming trials are carried out before the system characteristics and the results of interest are correlated statistically. Unfortunately, the outcomes of both approaches may be affected by noisy conditions [36]. It would therefore be ideal to develop a reliable and accurate approach for simulating and forecasting the effects of design and operational parameters on the HDH-SSF’s performance. Artificial intelligence-based algorithms learn by doing, through a training process that is inspired by the brain [37]. Once trained, these algorithms can be a potent tool for predicting the link between the HDH-SSF performance parameters and the design and operational parameters. Because artificial intelligence approaches can generalize, they can forecast behavior in situations that they were not exposed to during the training phase. These techniques avoid issues with experimental methods, such as the use of low-accuracy statistical correlations between the HDH-SSF’s parameters derived from experimental data, as well as issues with numerical methods, such as those associated with solving nonlinear mathematical models [38]. The relationship between HDH-SSF design and operating variables is poorly understood and has not been the subject of extensive experimental research.