Abstract

The steep rise in the use of reinforcement learning (RL) in energy applications, together with the growing penetration of home automation in recent years, motivates this article. It surveys the use of RL in various home energy management system (HEMS) applications, with a focus on deep neural network (DNN) models in RL. The article first provides an overview of reinforcement learning. This is followed by discussions of state-of-the-art value-based, policy-based, and actor–critic methods in deep reinforcement learning (DRL). In order to make the published literature in reinforcement learning more accessible to the HEMS community, verbal descriptions are accompanied by explanatory figures as well as mathematical expressions using standard machine learning terminology. Next, a detailed survey of how reinforcement learning is used in different HEMS domains is presented. The survey also considers which kinds of reinforcement learning algorithms are used in each HEMS application, and suggests that research in this direction is still in its infancy. Lastly, the article proposes four performance metrics to evaluate RL methods.

1. Introduction

Residential units are the largest group of consumers of electricity in the US. In the year 2020, this sector alone accounted for approximately 40% of all electricity usage [1]. The average daily residential consumption of electricity is 12 kWh per person [2]. Therefore, effectively managing the usage of electricity in homes, while maintaining acceptable comfort levels, is vital to address the global challenges of dwindling natural resources and climate change. Rapid technological advances have now made home energy management systems (HEMS) an attainable goal that is worth pursuing. HEMS consist of automation technologies that can respond to continuously or periodically changing home environmental conditions, as well as relevant external conditions, without human intervention [3,4]. In this review, the term ‘home’ is taken in a broad context to also include all residential units, classrooms, apartments, office complexes, and other buildings in the smart grid [5,6,7,8].

Artificial Intelligence (AI), more specifically machine learning, is one of the key contributing factors that have helped realize HEMS today [9,10,11]. Reinforcement learning (RL) is a class of machine learning algorithms that is making deep inroads in various applications in HEMS. This learning paradigm incorporates the twin capabilities of learning from experience and learning at higher levels of abstraction. It allows algorithmic agents to replace human beings in the real world, including in homes and buildings, in applications that had hitherto been considered to be beyond today’s capabilities.

RL allows an algorithmic entity to make sequences of decisions and implement actions from experience in the same manner as a human being [12,13,14,15,16,17]. DNNs have proven to be a powerful tool in RL, as they endow the RL agent with the capability to adapt to a wide variety of complex real-world applications [18,19]. Moreover, it has been proposed in [20] that RL can attain the ultimate goal of artificial general intelligence [21].

Consequently, RL is making deep inroads into many application domains today. It has been applied extensively to robotics [22]. Specific applications in this area include robotic manipulation with many degrees of freedom [23,24] and the navigation and path planning of mobile robots and UAVs [25,26,27]. RL finds widespread applications in communications and networking [28,29,30]. It has been used in 5G-enabled UAVs with cognitive capabilities [31], cybersecurity [32,33,34], and edge computing [35]. In intelligent transportation systems, RL is used in a range of applications such as vehicle dispatching in online ride-hailing platforms [36].

Other domains where RL has been used include hospital decision making [37], precision agriculture [38], and fluid mechanics [39]. The financial industry is another important sector where RL has been adopted for several scenarios [40,41,42]. It is of little surprise that RL has been extensively used to solve various problems in energy systems [43,44,45,46,47]. Another review article on the use of RL [47] considers three application areas in frequency and voltage control as well as in energy management.

RL is increasingly being used in HEMS applications and several review papers have already been published. The review article in [48] focuses on RL for HVAC and water heaters. The paper in [49] is based on research published between 1997 and 2019. The survey observes that only 11% of published research reports the deployment of RL in actual HEMS. The article in [50] specifically focuses on occupant comfort in residences and offices. A more recent review on building energy management [51] focuses on deep neural network-based RL. A recent article [52] considers RL along with model predictive control in smart building applications. The article in [53] is a survey of RL in demand response.

In contrast to the previous reviews, the scope of our review is broad enough to cover all areas of HEMS, including HEMS interfacing with the energy grid. More importantly, it provides a comprehensive overview of all major RL methods, with a sufficient level of explanation for readers’ understanding. Therefore, this article should benefit researchers and practitioners in other areas of energy systems, and beyond, who wish to acquire a theoretical understanding of basic RL techniques.

The rest of this article is organized in the following manner. Section 2 addresses the various elements of HEMS in greater detail. Section 3 introduces basic ideas on reinforcement learning. Further details of value-based RL and associated deep architectures are discussed in Section 4, while policy-based and actor–critic architectures—the other class of RL algorithms—are described in Section 5. Section 6 and Section 7 discuss the results of the research survey: while Section 6 focuses on the application of RL, Section 7 is a study on the classes of algorithms that were used. The article concludes in Section 8, where the authors propose four metrics to evaluate the performances of RL algorithms in HEMS.

2. Home Energy Management Systems

HEMS refers to a slew of automation techniques that can respond to continuously or periodically changing internal conditions of the home/building, as well as relevant external conditions, without the need for human intervention. This section addresses the enabling technologies that make this an attainable goal.

2.1. Networking and Communication

All HEMS devices must be able to exchange data with each other using the same communication protocol. HEMS provides the occupants with the tools that allow them to monitor, manage, and control all the activities within the system. Technological advances, more specifically in IoT-enabled devices and wireless communication protocols such as ZigBee, Wi-Fi, and Z-Wave, have made HEMS feasible [54,55]. These smart devices are connected through a home area network (HAN) and/or to the internet, i.e., a wide area network (WAN).

The choice of communication protocol for home automation is an open question. To a large extent, it depends on the user’s personal requirements. If it is desired to automate a smaller set of home appliances with ease of installation, and operability in a plug-and-play manner, Wi-Fi is the appropriate one to use. However, with more extensive automation requirements, involving tens through to hundreds of smart devices, Wi-Fi is no longer the optimal choice. There are issues relating to scalability and signal interference in Wi-Fi. More importantly, due to its relatively high energy consumption, Wi-Fi is not appropriate for battery-powered devices.

Under these circumstances, ZigBee and Z-Wave are more appropriate [56]. These communication protocols dominate today’s home automation market and share many common features. Both protocols use radio-frequency (RF) communication and offer two-way communication. Both ZigBee and Z-Wave enjoy well-established commercial relationships with various companies, with thousands of smart devices using one of these protocols.

Z-Wave is superior to ZigBee in terms of transmission range (120 m with three devices as repeaters vs. 60 m with two devices working as repeaters). In terms of inter-brand interoperability, Z-Wave again holds the advantage. However, ZigBee is more competitive in terms of data transmission rate as well as the number of connected devices. Z-Wave was specially created for home automation applications, while ZigBee is used in a wider range of settings such as industry, research, health care, and home automation [57]. A study conducted by [58] foresees that ZigBee is most likely to become the standard communication protocol for HEMS. However, due to numerous factors, it is still difficult to tell with high certainty whether this forecast will come true. It is also possible that an alternative communication protocol will emerge in the future.

HEMS requires this level of connectivity to be able to access electricity price from the smart grid through the smart meter and control all the system’s elements accordingly (e.g., turn on/off the TV, control the thermostat settings, determine the charge/discharge battery timings, etc.). In some scenarios, HEMS uses the forecasted electricity prices to schedule shiftable loads (e.g., washing machine, dryer, electric vehicle charging) [54].

2.2. Sensors and Controller Platforms

HEMS consists of smart appliances with sensors; these IoT-enabled devices communicate with the controller by sending and receiving data. They collect information about the environment and/or their electricity usage using built-in sensors. The smart meter gathers information regarding the consumers’ total consumption from the appliances, as well as the peak load period and electricity price from the smart grid.

The controller can be in the form of a physical computer located within the premises that is able to run complex algorithms. An alternative approach is to leverage any of the cloud services that are available to consumers through cloud computing firms.

The controller gathers information from the following sources: (i) the energy grid through the smart meter, which includes the power supply status and electricity price, (ii) the status of renewable energy and the energy storage systems, (iii) the electricity usage of each smart device at home, and (iv) the outside environment. It then processes all the data through a computational algorithm to determine a specific action for each individual device in the system [5].

2.3. Control Algorithms

AI and machine learning methods are making deep inroads into HEMS [10,59]. HEMS algorithms incorporated into the controller might be in the form of simple knowledge-based systems. These approaches embody a set of if-then-else rules, which may be crisp or fuzzy. However, due to their reliance on a fixed set of rules, such methods may not be of much practical use with real-time controllers. Moreover, they cannot effectively leverage the large amount of data available today [5]. Although it is possible to impart a certain degree of trainability to fuzzy systems, the structural bottleneck of consolidating all inputs using only conjunctions (and) and disjunctions (or) still persists.

Numerical optimization constitutes another class of computational methods for the smart home controller. These methods entail an objective function that is to be either minimized (e.g., cost) or maximized (e.g., occupant comfort), as well as a set of constraints imposed by the underlying physical HEMS appliances and limitations. Due to its simplicity, linear programming is a popular choice for this class of algorithms. More recently, game-theoretic approaches have emerged as an alternative for various HEMS optimization problems [5].

In recent years, artificial intelligence and machine learning, more specifically deep learning techniques, have become popular for HEMS applications. Deep learning takes advantage of all the available data for training the neural network to predict outputs and control the connected devices. It is particularly useful for forecasting the weather, load, and electricity prices. Furthermore, it handles nonlinearities without resorting to explicit mathematical models. Since 2013, there have been significant efforts directed at using deep neural networks within an RL framework [60,61], which have met with much success.

3. Overview of Reinforcement Learning

3.1. Deep Neural Networks

A deep neural network (DNN) is a trainable, highly nonlinear function approximator of the form $f:\mathbb{R}^{n}\rightarrow\mathbb{R}^{m}$, where $n$ and $m$ are the dimensionalities of the input and output spaces. Structurally, the DNN consists of an input layer, an output layer, and at least one hidden layer. The input layer receives the DNN input vector $\mathbf{x}\in\mathbb{R}^{n}$. The neurons in any other layer receive, as their inputs, the weighted outputs of neurons in the preceding layer. The weights of the DNN make up its weight parameter, denoted $\theta$. For simplicity, we consider DNNs with scalar outputs so that $m=1$. The actual output of the DNN is represented as $f(\mathbf{x}|\theta)$, which is that of the sole neuron in the output layer.

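In a typical regression application, the DNN’s training set $\mathcal{D}$ consists of pairs $(\mathbf{x}_k,y_k)$, where $k$ is the sample index (for the sake of conciseness, this relationship is often denoted as $k\in\mathcal{D}$ in this article). The quantity $y_k$ is the target, or desired output. During training, $\theta$ is updated in steps so that for each input $\mathbf{x}_k$, the DNN’s output $f(\mathbf{x}_k|\theta)$ is as close as possible to $y_k$. Supervised learning algorithms aim to minimize the DNN’s loss function $\mathcal{L}_{\mathcal{D}}(\theta)$. The subscript $\mathcal{D}$ indicates that the loss is an empirical estimate over the samples in $\mathcal{D}$. A popular choice of the latter is the average of the squared norms of the difference between the target and output over all samples in $\mathcal{D}$,

$$\mathcal{L}_{\mathcal{D}}(\theta)=\frac{1}{|\mathcal{D}|}\sum_{k\in\mathcal{D}}\left\|y_k-f(\mathbf{x}_k|\theta)\right\|^{2}. \tag{1}$$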

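Training the DNN consists of multiple passes called epochs, with each epoch comprising one pass through all samples in $\mathcal{D}$. In stochastic gradient descent (SGD), with $\eta>0$ being the learning rate, the parameter $\theta$ is incremented once for every sample $k\in\mathcal{D}$ as,

$$\theta\leftarrow\theta-\eta\,\nabla_{\theta}\left\|y_k-f(\mathbf{x}_k|\theta)\right\|^{2}. \tag{2}$$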

This increment is equivalent to a single gradient step on the loss in Equation (1) with $\mathcal{D}=\{k\}$.

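While SGD is useful in many online applications, minibatch gradient descent is the most common training method. In each epoch, the samples in $\mathcal{D}$ are divided into $J$ non-overlapping minibatches $\mathcal{B}_1,\ldots,\mathcal{B}_J$ (i.e., $\bigcup_{j=1}^{J}\mathcal{B}_j=\mathcal{D}$, and $\mathcal{B}_i\cap\mathcal{B}_j=\emptyset$ for $i\neq j$). The parameter $\theta$ is updated once for every minibatch $\mathcal{B}_j$ as,

$$\theta\leftarrow\theta-\frac{\eta}{|\mathcal{B}_j|}\sum_{k\in\mathcal{B}_j}\nabla_{\theta}\left\|y_k-f(\mathbf{x}_k|\theta)\right\|^{2}. \tag{3}$$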

One of the advantages of training in minibatches is that the trajectory taken by the training algorithm is straightened out, thereby speeding up convergence. It can be seen that Equation (3) is a gradient step on the loss function $\mathcal{L}_{\mathcal{B}_j}(\theta)$, which is identical to that in Equation (1), with sample set $\mathcal{B}_j$ in place of $\mathcal{D}$.
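As an illustration of Equations (1)–(3), the following is a minimal pure-Python sketch, not taken from the surveyed works, of minibatch gradient descent for a scalar-output network with a single tanh hidden layer and a one-dimensional input. The network size, learning rate `eta`, batch size, number of epochs, and the toy targets $y_k=\sin(x_k)$ are arbitrary assumptions chosen only for demonstration.

```python
import math
import random


def forward(theta, x):
    """Scalar output f(x | theta) of a one-hidden-layer tanh network (1-D input)."""
    hidden = [math.tanh(w * x + b) for w, b in zip(theta["w1"], theta["b1"])]
    out = sum(v * h for v, h in zip(theta["w2"], hidden)) + theta["b2"]
    return hidden, out


def loss(theta, data):
    """Equation (1): mean squared difference between targets y_k and outputs."""
    return sum((y - forward(theta, x)[1]) ** 2 for x, y in data) / len(data)


def minibatch_step(theta, batch, eta):
    """One update of Equation (3): average the per-sample gradients over the minibatch."""
    n_h = len(theta["w1"])
    g = {"w1": [0.0] * n_h, "b1": [0.0] * n_h, "w2": [0.0] * n_h, "b2": 0.0}
    for x, y in batch:
        hidden, out = forward(theta, x)
        d_out = -2.0 * (y - out) / len(batch)   # derivative of the averaged squared error
        g["b2"] += d_out
        for h in range(n_h):
            g["w2"][h] += d_out * hidden[h]
            d_pre = d_out * theta["w2"][h] * (1.0 - hidden[h] ** 2)  # back through tanh
            g["w1"][h] += d_pre * x
            g["b1"][h] += d_pre
    for key in ("w1", "b1", "w2"):
        theta[key] = [p - eta * d for p, d in zip(theta[key], g[key])]
    theta["b2"] -= eta * g["b2"]


def train(theta, data, eta=0.05, batch_size=8, epochs=200, seed=0):
    """Each epoch reshuffles D and splits it into disjoint minibatches B_j."""
    rng = random.Random(seed)
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            minibatch_step(theta, data[start:start + batch_size], eta)
    return theta


# Usage: fit toy targets y_k = sin(x_k) on [-2, 2] (hypothetical data).
init = random.Random(1)
theta = {
    "w1": [init.gauss(0.0, 1.0) for _ in range(8)],
    "b1": [init.gauss(0.0, 1.0) for _ in range(8)],
    "w2": [init.gauss(0.0, 0.5) for _ in range(8)],
    "b2": 0.0,
}
data = [(i / 16.0, math.sin(i / 16.0)) for i in range(-32, 33)]
before = loss(theta, data)
train(theta, data)
after = loss(theta, data)
```

Calling `train` with `batch_size=1` turns every update into the per-sample SGD increment of Equation (2), while `batch_size=len(data)` performs one full-batch step per epoch.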

Typically, the loss function includes an additional regularization term designed to keep the weights in $\theta$ small in order to prevent overfitting; overfitting results in poorer performance after the DNN is deployed into the real world. Nowadays, faster training is accomplished by using extensions of gradient descent such as Adam. These, as well as many other important aspects of DNNs and training algorithms, are not addressed here; the above discussion suffices to understand how DNNs are used in reinforcement learning (RL). A brief exposition of DNNs is available in [62]. For a rigorous treatment of DNNs, the interested reader is referred to [63].

3.2. Reinforcement Learning

An agent in RL is a learning entity, such as a DNN, that exerts control over a stochastic, external environment by means of a sequence of actions over time. The agent learns to improve the performance of its environment using reward signals that it receives from the environment.

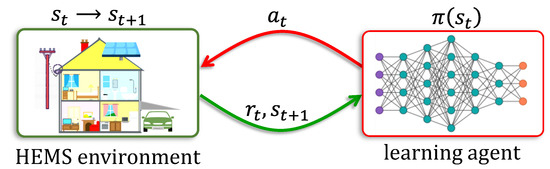

Rewards are quantitative metrics that indicate the immediate performance of the environment (e.g., average instantaneous user comfort). The sets $\mathcal{S}$ and $\mathcal{A}$ are the state and action spaces and can be discrete or continuous. Throughout this article, it is assumed that all temporal signals are sampled at discrete, regularly spaced intervals [62]. At each discrete time instance $t$, the current state $s_t\in\mathcal{S}$ of the environment is known to the agent, which then implements an action $a_t\in\mathcal{A}$. The environment transitions to the next state $s_{t+1}$ with a probability $p(s_{t+1}|s_t,a_t)$ while returning an immediate reward signal $r_t=\rho(s_t,a_t)$, where $\rho:\mathcal{S}\times\mathcal{A}\rightarrow\mathbb{R}$ denotes the environment’s reward function, which is unknown to the agent. The transition can be denoted concisely as $(s_t,a_t,r_t,s_{t+1})$. The overall schematic is shown in Figure 1.

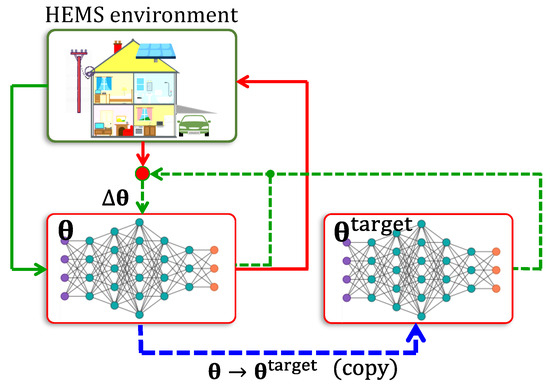

Figure 1.

The quantities shown are associated with the transition $(s_t,a_t,r_t,s_{t+1})$. Although the agent is depicted as a neural network (cf. [62]), it may also be in the form of a tabular structure.

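Instead of greedily aiming to improve the immediate reward $r_t$ at every time instance $t$, the agent may be iteratively trained to maximize the sum of the immediate and the weighted future rewards, which is called the return,

$$G_t=r_t+\gamma r_{t+1}+\gamma^{2}r_{t+2}+\cdots=\sum_{t'=t}^{T}\gamma^{\,t'-t}\,r_{t'}. \tag{4}$$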

The quantity $\gamma\in[0,1]$ is called the discount factor. This lookahead feature prevents the agent from learning greedy actions that fetch large instantaneous rewards at each instant $t$ but adversely affect the environment later on. The process begins at time $t=0$ and terminates at time $T$, the time horizon. The environment’s initial state at $t=0$ is denoted as $s_0$. The initial state may be probabilistic, following a distribution $p_0(s_0)$. It should be noted that if $T=\infty$, then the discount factor must be less than unity ($\gamma<1$) so that the return stays finitely bounded at all times $t$ (assuming bounded rewards).
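The return in Equation (4) can be computed for every $t$ of a finished episode in a single backward pass, using the recursion $G_t=r_t+\gamma G_{t+1}$ that follows directly from Equation (4). The Python sketch below assumes a finite horizon, so that the list `rewards` holds $r_0,\ldots,r_T$; the function name is hypothetical.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Return [G_0, ..., G_T] for rewards [r_0, ..., r_T] as in Equation (4)."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g      # G_t = r_t + gamma * G_{t+1}, with G_{T+1} = 0
        returns[t] = g
    return returns


# Usage: three unit rewards with gamma = 0.5.
print(discounted_returns([1.0, 1.0, 1.0], 0.5))   # [1.75, 1.5, 1.0]
```

The backward pass costs one multiplication and one addition per time step, instead of recomputing the full sum of Equation (4) for each $t$.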

The 5-tuple $(\mathcal{S},\mathcal{A},p,\rho,\gamma)$ defines a Markov decision process (MDP). The initial state distribution $p_0$ is assumed to be subsumed by the transition probabilities $p$. The MDP can be viewed as an extension of a discrete Markov model.

The entire sequence of states, actions, and rewards is an episode, denoted $\tau$, so that,

$$\tau=\left(s_0,a_0,r_0,s_1,a_1,r_1,\ldots,s_T,a_T,r_T\right). \tag{5}$$

The policy can be deterministic or stochastic. A deterministic policy can be treated as a function $\pi:\mathcal{S}\rightarrow\mathcal{A}$ (see Figure 1) so that $a_t=\pi(s_t)$, whereas a stochastic policy represents a probability distribution $\pi(\cdot|s_t)$ over $\mathcal{A}$ such that $a_t\sim\pi(\cdot|s_t)$. In several domains, the probability distribution is determined from the nature of the application itself.

During an episode, the action taken by the agent is in accordance with a policy $\pi\in\Pi$, where $\Pi$ is the policy space. From the Markovian (memoryless) property of the MDP, it follows that the optimal action of an agent at each state $s_t$, in terms of its stated goal of maximizing the total return $G_0$, is independent of all previous states of the environment. Therefore, the action taken by the agent at time $t$ under policy $\pi$ is based solely on the state $s_t$, and the prior history of states and actions need not be taken into account.

The overall aim of reinforcement learning is usually to maximize an objective function $J(\pi)$. Let $G(\tau)=G_0$ denote the total return of a given episode $\tau$. If the MDP is initialized to any state $s_0\in\mathcal{S}$ at $t=0$, the expected value of this return, which depends on the policy $\pi$, may be expressed as,

$$J(\pi|s_0)=\mathbb{E}_{\pi}\left[G(\tau)\,\middle|\,s_0\right]. \tag{6}$$

The operator $\mathbb{E}_{\pi}[\cdot]$ is the expectation when all episodes are generated by the MDP under policy $\pi$. When $s_0$ follows the MDP’s initial state distribution, i.e., $s_0\sim p_0$, the expectation may be denoted simply as $J(\pi)$ without any argument. This informal overloading of notation is adopted throughout this manuscript, as there are other ways to define the objective function. The policy that, at each state, implements the action that maximizes $J(\pi)$ is referred to as the optimal policy and represented as $\pi^{*}$.

3.3. Taxonomy of Algorithms

RL methods can be classified in several ways. In model-free training, RL takes place with the agent connected to the real-world environment, whereas in model-based RL, the agent is trained using a simulation platform to represent the environment.

In model-based RL, as the transition probabilities $p(s'|s,a)$ and the reward function $\rho(s,a)$ are available through the environmental model platform, the algorithm must be implemented in an offline manner. Of more practical interest is online RL, where the agent can be trained in real time by interacting with the real physical environment as shown in Figure 1. Although online RL is considered to be model-free, practically all research papers report the use of HEMS models for training [49].

In on-policy RL, a referential policy $\pi_0$, such as that of a human, is considered to be the optimal policy and is known a priori. The goal of RL is then to learn a policy $\pi$ that approximates $\pi_0$. In off-policy approaches, the goal is to obtain the optimal policy $\pi^{*}$, which maximizes $J(\pi)$ in Equation (6).

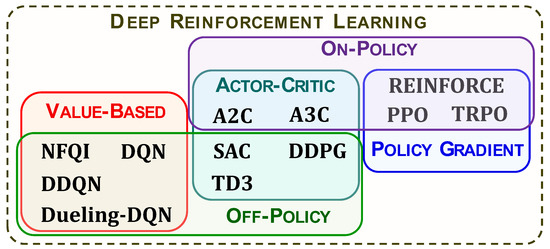

Another fundamental trichotomy of the plethora of RL approaches used today includes value-based RL, policy-based RL, and actor–critic RL, the latter having emerged more recently. Actor–critic methods are hybrid approaches that borrow features from value-based as well as policy-based RL [64]. The classification of various approaches used in HEMS applications is shown in Figure 2. These are also described at great length in this article, which may be used as a tutorial-style exposition of RL for the interested reader.

Figure 2.

Taxonomy of Deep Reinforcement Learning. The classification of all deep reinforcement learning methods described in this article is shown. Section 3.2 provides a description of each class. (See also [64].)

4. Value-Based Reinforcement Learning

Historically, value-based RL, first proposed in [65], heralded the advent of the broad area of reinforcement learning as a distinct branch of AI. These approaches are based on dynamic programming. The formal definition of an MDP was introduced shortly thereafter [66,67]. As noted earlier, an MDP is memoryless. An implication of this feature is that when the environment is in any given state $s_t$ at any instant $t$, the prior history is not of any consequence in deciding the future course of actions [19]. Accordingly, one can define the state–action value, or Q-value, of the state $s$ for each action $a\in\mathcal{A}$ as the expected return when taking $a$ from $s$ (cf. [19]),

$$Q(s,a)=\mathbb{E}_{\pi}\left[G_t\,\middle|\,s_t=s,\,a_t=a\right]. \tag{7}$$

Referring to a specific policy $\pi$ may be achieved by using a superscript in the above equation, so that the left-hand side of Equation (7) is written as $Q^{\pi}(s,a)$.

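It must be noted that even under a deterministic policy, $Q^{\pi}(s,a)$ can still be defined for any action $a\in\mathcal{A}$ merely by treating $a$ as an evaluative action and following the policy $\pi$ at all future times. Whence (cf. [19]),

$$Q^{\pi}(s,a)=\sum_{s'\in\mathcal{S}}p(s'|s,a)\left[\rho(s,a)+\gamma\sum_{a'\in\mathcal{A}}\pi(a'|s')\,Q^{\pi}(s',a')\right]. \tag{8}$$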

The Q-value function can be defined using Equation (7) irrespective of whether the policy is stochastic or deterministic. In the case of a deterministic policy, Equation (8) can be applied by letting $\pi(a'|s')=1$ when $a'=\pi(s')$, and $\pi(a'|s')=0$ otherwise.

A stochastic policy is intrinsic to many real-world applications. For instance, in order to decrease the ambient temperature by manually lowering the thermostatic setting, the final setting involves a degree of randomness arising from human imprecision. In multiagent environments, the best course may often be to adopt a stochastic policy. As an example, in a repeated game of rock–paper–scissors, randomly selecting each action (‘rock’, ‘paper’, or ‘scissors’) with equal probabilities of ⅓ is the only policy that would ensure that the probability of losing a round of the game does not exceed that of winning.

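From a machine learning standpoint, stochastic policies help explore and assess the effects of the entire repertoire of actions available in $\mathcal{A}$. Such exploration is critical during the initial stages of the learning algorithm. The two most commonly used stochastic policies are the $\epsilon$-greedy and the softmax policies. Under an $\epsilon$-greedy policy $\pi_{\epsilon}$, the probability of picking an action $a$ when the environmental state is $s$ is given by,

$$\pi_{\epsilon}(a|s)=\begin{cases}1-\epsilon+\dfrac{\epsilon}{|\mathcal{A}|}, & a=\arg\max_{a'\in\mathcal{A}}Q(s,a'),\\[2mm] \dfrac{\epsilon}{|\mathcal{A}|}, & \text{otherwise}.\end{cases} \tag{9}$$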

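It is common practice to lower the parameter $\epsilon\in[0,1]$ steadily so that, as learning progresses, the agent becomes greedier, i.e., likelier to select actions with the highest Q-values, $\arg\max_{a\in\mathcal{A}}Q(s,a)$. The softmax policy is the other popular method to incorporate exploration into a policy. The probability of applying action $a$ under such a policy is,

$$\pi(a|s)=\frac{\exp\left(Q(s,a)/\kappa\right)}{\sum_{a'\in\mathcal{A}}\exp\left(Q(s,a')/\kappa\right)}. \tag{10}$$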

Initialized to a high value, the Gibbs–Boltzmann (temperature) parameter $\kappa>0$ may be steadily lowered as the learning algorithm progresses, so that the policy becomes increasingly exploitative, that is, it takes the action with the highest Q-value more often. Unless specified otherwise, it shall be assumed hereafter that the policy space $\Pi$ is stochastic, so that actions follow a probability distribution ($a\sim\pi(\cdot|s)$).
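The two exploratory policies of Equations (9) and (10) can be sketched in Python as follows. The sketch assumes a discrete action space in which `q_values[a]` holds $Q(s,a)$ for the current state $s$; the function names are hypothetical, ties for the greedy action are broken by the lowest index (an assumption), and the largest Q-value is subtracted inside the softmax purely for numerical stability, which leaves the probabilities of Equation (10) unchanged.

```python
import math
import random
from typing import List, Sequence


def epsilon_greedy_probs(q_values: Sequence[float], epsilon: float) -> List[float]:
    """Action probabilities of Equation (9) for one state."""
    n = len(q_values)
    best = max(range(n), key=lambda a: q_values[a])   # lowest index wins ties
    probs = [epsilon / n] * n
    probs[best] += 1.0 - epsilon
    return probs


def softmax_probs(q_values: Sequence[float], kappa: float) -> List[float]:
    """Action probabilities of Equation (10) with temperature kappa > 0."""
    m = max(q_values)
    weights = [math.exp((q - m) / kappa) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]


def sample_action(probs: Sequence[float], rng: random.Random) -> int:
    """Draw an action index a ~ pi(.|s)."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Usage: three actions; lowering epsilon or kappa makes the policy greedier.
q = [1.0, 3.0, 2.0]
print(epsilon_greedy_probs(q, 0.3))   # greedy action 1 receives 0.7 + 0.1
print(softmax_probs(q, 10.0), softmax_probs(q, 0.1))
a = sample_action(softmax_probs(q, 1.0), random.Random(0))
```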

Exploration is applied to stochastically search and evaluate the available repertoire of actions at each state before converging towards the optimal one. It is an essential component of value-based RL. Since exploitation is the strategy of picking the best actions in $\mathcal{A}$, it should not be applied until the algorithm has explored all actions sufficiently. However, endowing the learning algorithm with too much exploration slows down learning. Identifying the right tradeoff between exploration and exploitation is a widely studied problem in machine learning [68]. It is for this reason that the parameters $\epsilon$ in Equation (9) and $\kappa$ in Equation (10) are steadily lowered as learning progresses.

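Instead of an evaluative action $a$, suppose the policy $\pi$ is applied from state $s$ (so that either $a=\pi(s)$ or $a\sim\pi(\cdot|s)$); then the expected return is called the state’s value,

$$V(s)=\mathbb{E}_{\pi}\left[G_t\,\middle|\,s_t=s\right]. \tag{11}$$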

As with the Q-value function, the policy becomes explicit if the value of $s$ is written as $V^{\pi}(s)$. The value of $s$ can be expressed in terms of Q-values as,

$$V^{\pi}(s)=\sum_{a\in\mathcal{A}}\pi(a|s)\,Q^{\pi}(s,a). \tag{12}$$

The difference between the Q-value of implementing an action $a$ from state $s$ and the value of $s$ under policy $\pi$ is the advantage function, so that,

$$A^{\pi}(s,a)=Q^{\pi}(s,a)-V^{\pi}(s). \tag{13}$$

Although the preferred notation in this manuscript is to use lowercase letters to denote variables, the advantage function is represented using the uppercase $A$, as the lowercase $a$ is reserved to denote an action.
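For a single state with a discrete action space, Equations (12) and (13) reduce to a few lines. In the following sketch (hypothetical function names), `q_row[a]` holds $Q^{\pi}(s,a)$ and `pi_row[a]` holds $\pi(a|s)$. A useful sanity check is that the policy-weighted average of the advantages is zero, since the weighted average of the Q-values is exactly $V^{\pi}(s)$.

```python
from typing import List, Sequence


def state_value(q_row: Sequence[float], pi_row: Sequence[float]) -> float:
    """Equation (12): V(s) = sum_a pi(a|s) Q(s, a)."""
    return sum(p * q for p, q in zip(pi_row, q_row))


def advantages(q_row: Sequence[float], pi_row: Sequence[float]) -> List[float]:
    """Equation (13): A(s, a) = Q(s, a) - V(s) for every action a."""
    v = state_value(q_row, pi_row)
    return [q - v for q in q_row]


# Usage: two actions chosen with equal probability.
print(state_value([1.0, 3.0], [0.5, 0.5]))   # 2.0
print(advantages([1.0, 3.0], [0.5, 0.5]))    # [-1.0, 1.0]
```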

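The value function allows the optimal policy to be defined in a formal manner. If the objective function $J(\pi|s_0)$, with the MDP initialized to some $s_0$, is defined as in Equation (6), then it is evident from Equation (11) that $J(\pi|s_0)=V^{\pi}(s_0)$. Furthermore, if the MDP visits state $s_t$ at the instant $t$, then from Equation (4),

$$\mathbb{E}_{\pi}\left[G_0\,\middle|\,s_0,a_0,\ldots,s_t\right]=\sum_{t'=0}^{t-1}\gamma^{\,t'}r_{t'}+\gamma^{\,t}\,V^{\pi}(s_t). \tag{14}$$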

At this stage, we invoke the memoryless property of the underlying MDP. At instant $t$, the partial sum of the terms on the right-hand side is part of the episode’s history, while $V^{\pi}(s_t)$ is the expected future return. The optimal policy at every such state is to implement the action that maximizes $V^{\pi}(s_t)$, so that,

$$\pi^{*}=\arg\max_{\pi\in\Pi}V^{\pi}(s_t),\qquad\forall\,s_t\in\mathcal{S}. \tag{15}$$

When the policy is deterministic, it can also be inferred that the optimal action from state $s$ is the action with the highest Q-value. From Equation (15) it follows that,

$$\pi^{*}(s)=\arg\max_{a\in\mathcal{A}}Q^{*}(s,a). \tag{16}$$

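The Q-value under the optimal policy, $Q^{\pi^{*}}(s,a)$, is equal to $Q^{*}(s,a)$. It can be mathematically established that the Q-values corresponding to the optimal policy are no lower than those associated with any other policy, i.e., $Q^{*}(s,a)\geq Q^{\pi}(s,a)$ for all $\pi\in\Pi$, $s\in\mathcal{S}$, and $a\in\mathcal{A}$ [69].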

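The Bellman optimality equation follows from the above considerations,

$$Q^{*}(s,a)=\sum_{s'\in\mathcal{S}}p(s'|s,a)\left[\rho(s,a)+\gamma\max_{a'\in\mathcal{A}}Q^{*}(s',a')\right]. \tag{17}$$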

The difference between Equation (8) and Equation (17) is in the second term in each summand. The policy-based Q-value in Equation (8) is replaced with the maximum Q-value in Equation (17). A mathematically rigorous coverage of various RL methods can be found in the seminal book [70] that is available online.
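When $p$ and $\rho$ are known, as in the model-based setting of Section 3.3, Equation (17) can be solved numerically by repeatedly applying its right-hand side as an update until the Q-values stop changing. This procedure, usually called Q-value iteration, is a dynamic-programming method that is not otherwise described in this article, and the sketch below, including its two-state MDP, is a hypothetical illustration. In the example, action 0 keeps the state and action 1 toggles it, and the reward is 1 in state 1 and 0 in state 0; for $\gamma=0.9$ the exact solution is $Q^{*}(1,0)=10$, $Q^{*}(0,1)=9$, $Q^{*}(1,1)=9.1$, and $Q^{*}(0,0)=8.1$.

```python
from typing import List, Sequence

Table = List[List[float]]


def q_value_iteration(p: Sequence[Sequence[Sequence[float]]],
                      rho: Sequence[Sequence[float]],
                      gamma: float, n_iters: int = 500) -> Table:
    """Approximate Q*(s, a) by iterating the right-hand side of Equation (17).

    p[s][a][s2] is p(s2 | s, a) and rho[s][a] is rho(s, a).
    """
    n_s, n_a = len(rho), len(rho[0])
    q = [[0.0] * n_a for _ in range(n_s)]
    for _ in range(n_iters):
        # The new table is built entirely from the previous one, then replaces it.
        q = [[sum(p[s][a][s2] * (rho[s][a] + gamma * max(q[s2])) for s2 in range(n_s))
              for a in range(n_a)]
             for s in range(n_s)]
    return q


# Hypothetical two-state MDP: action 0 stays, action 1 toggles the state.
p = [[[1.0, 0.0], [0.0, 1.0]],    # transitions from state 0
     [[0.0, 1.0], [1.0, 0.0]]]    # transitions from state 1
rho = [[0.0, 0.0], [1.0, 1.0]]    # reward 1 in state 1, 0 in state 0
q_star = q_value_iteration(p, rho, gamma=0.9)
greedy = [max(range(2), key=lambda a: q_star[s][a]) for s in range(2)]   # Equation (16)
print(greedy)   # [1, 0]: switch to state 1, then stay there
```

Because each sweep contracts the error by a factor of $\gamma$, 500 sweeps are far more than enough here; in practice one would stop once successive tables differ by less than a tolerance.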

4.1. Tabular Q-Learning

The simplest possible implementation of the Q-learning algorithm is tabular Q-learning, where an $|\mathcal{S}|\times|\mathcal{A}|$ array is maintained to store $Q(s,a)$ for every state–action pair [71]. Initialized to either zeros or small random values, the tabular entries are periodically updated. As it is an online approach, Q-learning cannot use the transition probabilities $p(s'|s,a)$. For each transition $(s_t,a_t,r_t,s_{t+1})$, the tabular entry for $(s_t,a_t)$ is incremented as,

$$Q(s_t,a_t)\leftarrow Q(s_t,a_t)+\alpha\left(y_t-Q(s_t,a_t)\right). \tag{18}$$

The quantity $\alpha$ is the learning rate; usually $0<\alpha<1$. The quantity $y_t$ is the target,

$$y_t=r_t+\gamma\max_{a\in\mathcal{A}}Q(s_{t+1},a). \tag{19}$$

In order to impart an exploratory component to Q-learning, the action $a_t$ must be selected probabilistically as in Equation (9) or Equation (10). In many cases, increments are applied in real time at the end of each time instance. It can be shown mathematically that the tabular entries converge towards the maximum values, $Q^{*}(s,a)$ [69], implying that Q-learning is an off-policy approach. The fully trained agent can select actions as per Equation (16) during actual use.
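A minimal Python sketch of tabular Q-learning, combining the tabular increment above, the target of Equation (19), and $\epsilon$-greedy action selection as in Equation (9), is shown below. The environment `step` is a hypothetical two-state toy (action 0 keeps the state, action 1 toggles it, and the reward is 1 in state 1 and 0 in state 0), and the values of `alpha`, `gamma`, `epsilon`, and the number of steps are arbitrary assumptions.

```python
import random
from typing import List, Tuple


def step(state: int, action: int) -> Tuple[float, int]:
    """Hypothetical toy environment: action 0 keeps the state, action 1 toggles it.
    The reward rho(s, a) is 1 in state 1 and 0 in state 0."""
    reward = 1.0 if state == 1 else 0.0
    next_state = state if action == 0 else 1 - state
    return reward, next_state


def epsilon_greedy(q_row: List[float], epsilon: float, rng: random.Random) -> int:
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))                      # explore uniformly
    return max(range(len(q_row)), key=lambda a: q_row[a])    # exploit


def q_learning(n_steps: int = 20000, alpha: float = 0.1, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]      # |S| x |A| table initialized to zeros
    s = 0
    for _ in range(n_steps):
        a = epsilon_greedy(q[s], epsilon, rng)
        r, s_next = step(s, a)
        y = r + gamma * max(q[s_next])      # target, Equation (19)
        q[s][a] += alpha * (y - q[s][a])    # increment applied at every time instance
        s = s_next
    return q


q = q_learning()
policy = [max(range(2), key=lambda a: q[s][a]) for s in range(2)]   # greedy use, Equation (16)
print(policy)   # expected: [1, 0]
```

Note that the agent never needs $p(s'|s,a)$: it only observes sampled transitions returned by `step`.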

SARSA (State–Action–Reward–State–Action) [70,72] is an on-policy RL algorithm that can be implemented in a tabular manner. Its update rule is identical to the earlier expression in Equation (18). However, since SARSA is an on-policy algorithm, the target is specific to the policy $\pi$ and is given by,

$$y_t=r_t+\gamma\,Q(s_{t+1},a_{t+1}),\qquad a_{t+1}\sim\pi(\cdot|s_{t+1}). \tag{20}$$

Both Q-learning and SARSA use the tabular entries of the environment’s new state $s_{t+1}$ following the transition $(s_t,a_t,r_t,s_{t+1})$. The difference is in how the entries are used. Whereas Q-learning uses the tabular entry corresponding to the action with the highest $Q(s_{t+1},a)$, SARSA applies the specified policy $\pi$, using $Q(s_{t+1},a_{t+1})$, the Q-value of the action $a_{t+1}\sim\pi(\cdot|s_{t+1})$. This difference is analogous to that between Equation (17) and Equation (8).
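The difference between the two targets can be made concrete for a single transition. In the following sketch (hypothetical function names and numbers), the agent moves from state 0 to state 1 with reward 1, and its exploratory policy then picks the non-greedy action 1 in state 1. Q-learning still bootstraps from the best entry of row 1, whereas SARSA bootstraps from the entry of the action that was actually chosen; both then apply the same tabular increment.

```python
from typing import List


def q_learning_target(q: List[List[float]], r: float, s_next: int, gamma: float) -> float:
    """Equation (19): bootstrap from the highest Q-value in s_{t+1} (off-policy)."""
    return r + gamma * max(q[s_next])


def sarsa_target(q: List[List[float]], r: float, s_next: int, a_next: int, gamma: float) -> float:
    """SARSA target: bootstrap from Q(s_{t+1}, a_{t+1}), with a_{t+1} drawn from pi (on-policy)."""
    return r + gamma * q[s_next][a_next]


def td_update(q: List[List[float]], s: int, a: int, target: float, alpha: float) -> None:
    """Tabular increment shared by both algorithms."""
    q[s][a] += alpha * (target - q[s][a])


q = [[0.0, 2.0],
     [5.0, 1.0]]
print(q_learning_target(q, r=1.0, s_next=1, gamma=0.9))          # 1 + 0.9 * 5
print(sarsa_target(q, r=1.0, s_next=1, a_next=1, gamma=0.9))     # 1 + 0.9 * 1
td_update(q, s=0, a=0, target=q_learning_target(q, 1.0, 1, 0.9), alpha=0.1)
```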

Tabular Q-learning and SARSA can handle continuous state as well as action spaces by discretizing them into a finite and tractable number of subdivisions. Unfortunately, such tabular learning methods cannot be applied in many large-scale domains. This is because too many discrete levels would make the algorithm computationally too intensive if not outright intractable.

When the state space is too large (e.g., in chess), tabular learning becomes prohibitively expensive, not only in terms of storage requirements but also in terms of the computational time needed by the RL algorithm. Deep Q-networks (DQNs) are well equipped to handle such large discrete as well as continuous state spaces [73]. However, $|\mathcal{A}|$, the cardinality of the action space, must still be tractably small. In a DQN, the mapping from every state–action pair to its Q-value is carried out by means of a DNN.

In reality, the DNN input is some feature vector $\phi(s)$ of the state $s$, where $\phi:\mathcal{S}\rightarrow\mathbb{R}^{n}$ and $n$ is the dimensionality of the feature space. In the same manner, the action $a$ can be represented in terms of its feature vector. However, as $|\mathcal{A}|$ is small, it is assumed that the action itself is the other input. In practice, a unary (one-hot) encoding scheme may be used to represent actions. For instance, if $|\mathcal{A}|=4$, the four discrete actions may be encoded as 0001, 0010, 0100, and 1000. Under these circumstances, the actual input to the DNN is $\mathbf{x}=(\phi(s),a)$, and its actual output is $f(\mathbf{x}|\theta)$. For simplicity, we will treat the DNN input as $(s,a)$ and the output as $Q(s,a|\theta)$, that is, $\mathbf{x}\equiv(s,a)$ and $f(\mathbf{x}|\theta)\equiv Q(s,a|\theta)$. The Q-value is conditioned on the weight parameter $\theta$ in this manner so as to explicitly reflect its dependence on the latter.

4.2. Deep Q-Networks

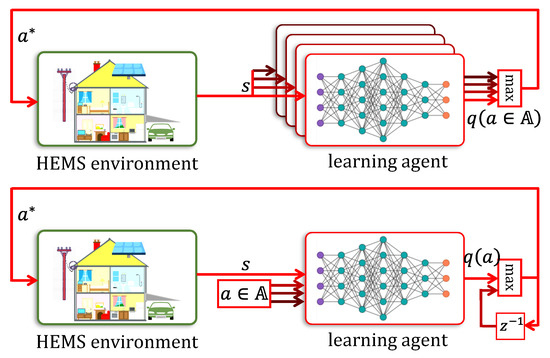

There are two possible ways in which the mapping of a state–action pair to its Q-value can be accomplished, which are as follows.

- (i) A different DNN is maintained for each action $a\in\mathcal{A}$, so that the total number of DNNs in this arrangement is $|\mathcal{A}|$. The state $s$ (encoded appropriately using the state’s features) serves as the common input to all the DNNs.

- (ii) A single DNN with separate inputs for state and action is maintained, and its output is $Q(s,a|\theta)$. While this manner of storing Q-values requires the use of only a single DNN, in order to obtain $\max_{a\in\mathcal{A}}Q(s,a|\theta)$, the $|\mathcal{A}|$ actions must be applied sequentially to it.

The two schemes are depicted in Figure 3.

Figure 3.

Deep Q-Network Layouts. One scheme uses a separate DNN for each action (top). The other scheme uses only one DNN that receives actions as another input (bottom).

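Stochastic gradient descent can be applied in a straightforward fashion to train the weight parameter $\theta$ as in Equation (2), for the squared error loss $\left(y_t-Q(s_t,a_t|\theta)\right)^{2}$ and with the DNN’s output now being $Q(s_t,a_t|\theta)$,

$$\theta\leftarrow\theta-\eta\,\nabla_{\theta}\left(y_t-Q(s_t,a_t|\theta)\right)^{2}. \tag{21}$$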

This simple approach is the neural fitted Q-iteration (NFQI) that was proposed in [74]. The target $y_t$ is determined in accordance with Equation (19), with the DNN used to obtain the target so that $y_t=r_t+\gamma\max_{a\in\mathcal{A}}Q(s_{t+1},a|\theta)$. When using tabular entries $Q(s,a)$ in place of $Q(s,a|\theta)$, it becomes the fitted Q-iteration (FQI). When the learning agent interacts with the environment, the actions are generally selected using the $\epsilon$-greedy method shown in Equation (9).

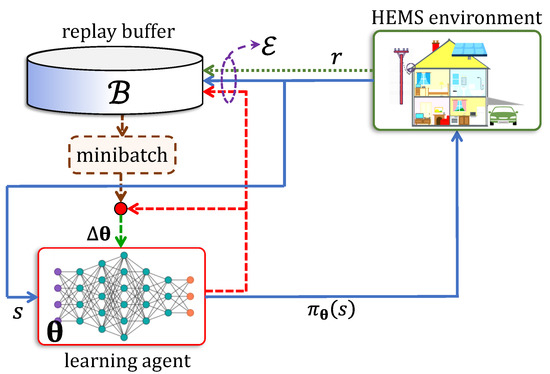

Temporal correlation in real-time training samples is an unfortunate drawback when directly implementing stochastic gradient descent. Unlike in tabular learning, in a DQN, updating $\theta$ changes not only the output $Q(s_t,a_t|\theta)$ for the relevant state–action pair $(s_t,a_t)$ but the Q-values $Q(s,a|\theta)$ of every other pair as well. The change may be barely noticeable when $(s,a)$ is at a large distance from $(s_t,a_t)$ within the feature space; unfortunately, this is not usually the case in most real-world domains.

Consider two successive transitions $(s_t,a_t,r_{t+1},s_{t+1})$ and $(s_{t+1},a_{t+1},r_{t+2},s_{t+2})$. Due to the temporal correlation between successive states, it is reasonable to expect that the distance $\|\phi(s_{t+1})-\phi(s_t)\|$ is very small. Therefore, applying Equation (21) to update $Q(s_t,a_t;\mathbf{w})$ will have an undesirable yet pronounced effect on $Q(s_{t+1},a_{t+1};\mathbf{w})$. A similar argument holds for time sequences of actions as well.

To address the ill effects of temporal correlatedness, DNN training is carried out only after the completion of an episode or multiple episodes, during which time the DQN agent is allowed to exert control over the environment while $\mathbf{w}$ remains unchanged. All training samples are stored in an experience replay buffer $\mathcal{D}$ [75], which plays the role of a mini-batch in DNN training. After enough training samples have been accumulated in $\mathcal{D}$, it is shuffled randomly before $\mathbf{w}$ is incremented. The increment may be implemented either as in Equation (21), or through minibatch gradient descent as indicated earlier in Equation (3), with $\mathcal{D}$ replacing the minibatch (see Figure 4). For convenience, the update is shown below,

$$\mathbf{w} \leftarrow \mathbf{w} + \frac{\eta}{|\mathcal{D}|}\sum_{(s,a,r,s')\in\mathcal{D}}\big(y - Q(s,a;\mathbf{w})\big)\,\nabla_{\mathbf{w}} Q(s,a;\mathbf{w}) \qquad (22)$$

Figure 4.

Replay Buffer. Shown are the replay buffer, environment, and agent. The pathways are involved during the agent’s interaction with the environment (solid blue) and training (dashed red).

The buffer is flushed before the next cycle begins with the updated parameter $\mathbf{w}$.
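The buffer cycle described above (collect transitions with frozen weights, shuffle, train in minibatches, then flush) can be sketched as follows. The class and method names are hypothetical; note also that many DQN implementations instead keep a fixed-capacity buffer that is not flushed between cycles.

```python
import random
from collections import namedtuple

Transition = namedtuple("Transition", "s a r s_next done")

class EpisodeReplayBuffer:
    """Stores transitions gathered while the DNN weights are held fixed."""

    def __init__(self, seed: int = 0):
        self.data = []
        self.rng = random.Random(seed)

    def add(self, *fields) -> None:
        self.data.append(Transition(*fields))

    def minibatches(self, batch_size: int):
        """Shuffle to break temporal correlation, then yield minibatches."""
        order = self.data[:]
        self.rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            yield order[start:start + batch_size]

    def flush(self) -> None:
        """Empty the buffer before the next collection cycle."""
        self.data.clear()

buf = EpisodeReplayBuffer()
for t in range(10):
    buf.add(t, t % 2, 0.0, t + 1, t == 9)    # hypothetical transitions
batches = list(buf.minibatches(batch_size=4))
buf.flush()
```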

An improvement over this scheme is prioritized replay [76], where the probability of a sample $(s,a,r,s')$ being chosen for a training step is proportional to its squared error $\big(y - Q(s,a;\mathbf{w})\big)^2$ plus a small positive constant. The constant is added to the squared loss term to ensure that all samples have non-zero probabilities.
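The selection probabilities described above can be sketched in a few lines, taking each sample's priority as its squared TD error plus a small constant. The constant's value and the function name are assumptions; the original prioritized-replay method additionally uses a priority exponent and importance-sampling corrections, which are omitted here.

```python
import numpy as np

def priority_probabilities(td_errors, const=1e-3):
    """P(i) proportional to delta_i**2 + const, so no sample has zero probability."""
    priorities = np.asarray(td_errors, dtype=float) ** 2 + const
    return priorities / priorities.sum()

rng = np.random.default_rng(1)
td = [0.0, 0.5, -2.0, 0.1]                  # hypothetical TD errors y - Q(s, a; w)
p = priority_probabilities(td)
batch_idx = rng.choice(len(td), size=2, replace=False, p=p)
```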

Target non-stationarity is another closely related problem that arises in DQNs, one that is not seen in tabular Q-learning. For any given sample transition $(s,a,r,s')$, as the DNN weight parameter $\mathbf{w}$ is incremented in accordance with Equation (21), an undesirable effect is that the target also changes. This is because the target is determined as $y = r + \gamma\max_{a'}Q(s',a';\mathbf{w})$, and the same DNN is used to obtain $Q(s,a;\mathbf{w})$. Target non-stationarity is handled by storing an older copy of the primary DNN in memory and using this stored copy to compute the target $y$. Effectively, the RL algorithm maintains a separate target DNN parametrized by $\mathbf{w}^{-}$. Thus, the target is,

$$y = r + \gamma \max_{a'} Q(s',a';\mathbf{w}^{-}) \qquad (23)$$

The target DNN’s weight parameter $\mathbf{w}^{-}$ is updated infrequently, and only after $\mathbf{w}$ has undergone a significant amount of training. In this manner, the targets remain stationary while the primary DNN’s parameter $\mathbf{w}$ is trained, so that gradient descent steps can be implemented in a straightforward manner using the terms $\big(y - Q(s,a;\mathbf{w})\big)^2$ in the loss function. This scheme is shown in Figure 5.

Figure 5.

Use of Target Network. The scheme used to correct target non-stationarity is shown. Pathways for control (solid red), learning (dashed green), and intermittent copying (dashed, thick blue) are shown. The replay buffer has been omitted for simplicity.
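The effect of the frozen copy can be seen in a small sketch. It assumes, for illustration only, a linear Q-function $Q(s,a;\mathbf{w})=\mathbf{w}_a^{\top}\phi(s)$ in place of a DNN, a single repeated self-transition, and a hypothetical refresh period `copy_every`. The targets stay constant between copies even though the primary weights keep changing.

```python
import numpy as np

def td_target(w_target, r, phi_next, done, gamma=0.9):
    """y = r + gamma * max_a' Q(s', a'; w^-), computed with the frozen weights."""
    return r if done else r + gamma * np.max(w_target @ phi_next)

w = np.zeros((2, 3))                    # primary weights, one row per action
w_target = w.copy()                     # stored older copy of the primary weights
copy_every, eta = 5, 0.1
phi = np.array([1.0, 0.0, 0.0])
a, r, phi_next = 0, 1.0, phi            # hypothetical self-transition s -> s

targets = []
for step in range(1, 11):
    y = td_target(w_target, r, phi_next, done=False)
    targets.append(y)
    w[a] += eta * (y - w[a] @ phi) * phi         # the primary network is trained
    if step % copy_every == 0:
        w_target = w.copy()                      # intermittent copy
```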

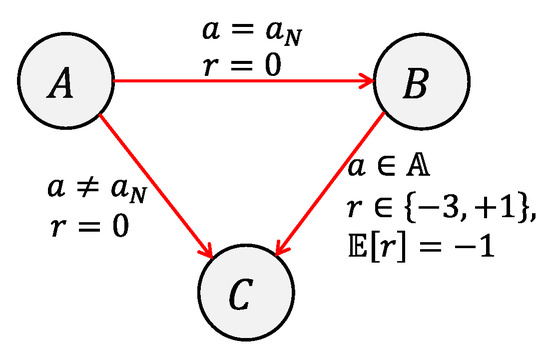

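Overestimation bias [77,78] is another problem frequently encountered in stochastic environments. It is an outcome of the maximization used to compute the target. As an example, consider an MDP with the state space $\mathcal{S}=\{s_1,s_2,s_T\}$, where $s_T$ is the terminal state. This is shown in Figure 6. The action space $\mathcal{A}=\{a_1,a_2,\dots,a_m\}$, where $m$ is relatively large, is available to the agent. From state $s_1$, only action $a_1$ leads to $s_2$, whereas the remaining ones, $a_2$ through $a_m$, lead to $s_T$. The reward received from state $s_1$ is always zero (i.e., $r=0$). From state $s_2$, all actions lead to $s_T$, with the reward being either $r_{-}$ or $1$ with equal probabilities of $1/2$, where $r_{-}<-1$. In other words, the possible transitions are $(s_1,a_1,0,s_2)$; $(s_1,a_i,0,s_T)$ for $i=2,\dots,m$; and $(s_2,a_i,r_{-},s_T)$ or $(s_2,a_i,1,s_T)$ for $i=1,\dots,m$. Since the rewards $r_{-}$ and $1$ have the same probability when the environment transitions from $s_2$ to $s_T$, the expected reward from $s_2$ to $s_T$ is $(r_{-}+1)/2$, i.e., $Q(s_2,a_i)=(r_{-}+1)/2<0$ for every action. For simplicity, let us assume that the learning rate and the discount factor are both equal to one. Once each action from $s_2$ has been tried, the Q-values for some actions would be updated to $r_{-}$, whereas those of others would be updated to $1$. Since $m$ is large enough, it is very likely that at least one of them, say $a_k$, has the higher of the two values, $Q(s_2,a_k)=1$. Now consider the Q-values of actions from state $s_1$. It is clear that $Q(s_1,a_i)=0$ for $a_2$ through $a_m$. However, when the agent selects action $a_1$ from $s_1$, thereby reaching $s_2$, the $\max$ operation is likely to return $Q(s_2,a_k)=1$, so that $Q(s_1,a_1)$ would be updated to $1$. This makes $a_1$ appear to be the optimal action from state $s_1$, when in fact it is the worst choice in $s_1$.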

Figure 6.

Overestimation Bias. This example is used to illustrate the effect of overestimation bias (see text for complete explanation).
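The example can be reproduced numerically. The sketch below assumes 20 actions, a negative reward of -1.2 (any value below -1 keeps the expected reward from the intermediate state negative), a learning rate and discount of one, and one sampled reward per action. It compares the single-estimator target, the maximum of one set of sampled Q-values, with a double estimator in the spirit of [77], which selects the action using one set of samples and evaluates it using an independent second set. All numbers are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
m, r_minus, trials = 20, -1.2, 10_000
true_value = (r_minus + 1.0) / 2            # expected reward from the intermediate state

single, double = [], []
for _ in range(trials):
    # With a learning rate of one, each Q-value equals the single reward observed.
    qa = rng.choice([r_minus, 1.0], size=m)
    qb = rng.choice([r_minus, 1.0], size=m)  # independent second estimate
    single.append(qa.max())                  # max-based target for the first action
    double.append(qb[np.argmax(qa)])         # select with qa, evaluate with qb

mean_single, mean_double = np.mean(single), np.mean(double)
```

The single estimator returns 1 in almost every trial, whereas the double estimator averages close to the true value of -0.1.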

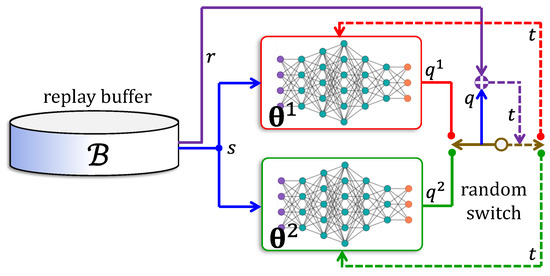

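Double Q-learning [79] is a popular approach to circumvent overestimation bias in off-policy RL (see Figure 7). Although first proposed in a tabular setting [77], more recent research implements double Q-learning in conjunction with DNNs, which is called the double deep Q-network (DDQN). It incorporates two DNNs with the parameters $\mathbf{w}_1$ and $\mathbf{w}_2$. Samples are collected by implementing actions using their mean Q-values, $\tfrac{1}{2}\big(Q(s,a;\mathbf{w}_1)+Q(s,a;\mathbf{w}_2)\big)$. For each sample transition $(s,a,r,s')$ in $\mathcal{D}$ during training, one of the two DNNs, say DNN $j$ ($j\in\{1,2\}$), is picked randomly and with equal probability to compute the target, and the other DNN, $i\neq j$, is trained with it. Whence,

$$y = r + \gamma\,Q\big(s',\,\arg\max_{a'}Q(s',a';\mathbf{w}_i);\,\mathbf{w}_j\big), \qquad \mathbf{w}_i \leftarrow \mathbf{w}_i + \eta\,\big(y - Q(s,a;\mathbf{w}_i)\big)\,\nabla_{\mathbf{w}_i}Q(s,a;\mathbf{w}_i) \qquad (24)$$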

Figure 7.

Double DQN. One DQN ($\mathbf{w}_1$ or $\mathbf{w}_2$) is picked at random, and its Q-value ($Q(\cdot;\mathbf{w}_1)$ or $Q(\cdot;\mathbf{w}_2)$) is used to obtain the target ($y$), which is used to train the other DQN. For simplicity, only the pathways involved in training are shown. The target pathways are depicted with dotted lines.

Each DNN has a 0.5 probability of getting trained with the transition sample. This is the manner of updating that was originally proposed in [77].

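An extension of DDQN is clipped DDQN [80,81,82]. Instead of selecting the target network randomly, the target is obtained from the minimum of the two Q-values, $Q(s',a^{*};\mathbf{w}_1)$ and $Q(s',a^{*};\mathbf{w}_2)$,

$$y = r + \gamma \min_{j\in\{1,2\}} Q(s',a^{*};\mathbf{w}_j), \qquad a^{*} = \arg\max_{a'} Q(s',a';\mathbf{w}_1) \qquad (25)$$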
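The double and clipped target rules can be compared side by side on hypothetical next-state Q-value vectors from the two networks. The sketch assumes the double target selects the greedy action with the network being trained and evaluates it with the other one, and that the clipped variant selects with the first network and then takes the minimum over both. Function names and numbers are illustrative.

```python
import numpy as np

def double_q_target(r, q_train_next, q_other_next, gamma=0.99, done=False):
    """Select a' with the network being trained, evaluate it with the other one."""
    if done:
        return r
    a_star = int(np.argmax(q_train_next))
    return r + gamma * q_other_next[a_star]

def clipped_double_q_target(r, q1_next, q2_next, gamma=0.99, done=False):
    """Evaluate the greedy action of network 1 with the minimum of both networks."""
    if done:
        return r
    a_star = int(np.argmax(q1_next))
    return r + gamma * min(q1_next[a_star], q2_next[a_star])

rng = np.random.default_rng(0)
q1_next = np.array([1.0, 3.0, 2.0])      # hypothetical Q(s', . ; w1)
q2_next = np.array([1.5, 2.0, 2.5])      # hypothetical Q(s', . ; w2)
# Choose which network is trained on this sample, each with probability 0.5.
train_first = rng.random() < 0.5
q_train, q_other = (q1_next, q2_next) if train_first else (q2_next, q1_next)
y_double = double_q_target(1.0, q_train, q_other, gamma=0.9)
y_clipped = clipped_double_q_target(1.0, q1_next, q2_next, gamma=0.9)
```

With these particular numbers, both rules give 2.8, whereas a plain max over the first network would give 3.7.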

Dueling DQN architectures (Dueling-DQN) [83] use a different scheme to avoid overestimation bias (see Figure 8). They divide the state–action value $Q(s,a)$ into two parts, the state value $V(s)$ and the state–action advantage $A(s,a)$. As shown in Equation (13), $A(s,a)$ is the difference between the two quantities, $Q(s,a)-V(s)$. The advantage of action $a$ in state $s$ is the expected gain in the return obtained by picking action $a$. The DNN layout consists of an input layer for the state $s$. After a few initial preprocessing layers, it splits into two separate pathways, each of which is a fully connected DNN. Letting the symbols $\mathbf{w}_V$ and $\mathbf{w}_A$ denote the weight parameters of the pathways, the scalar output of the value pathway is the state’s value, $V(s;\mathbf{w}_V)$, and the output of the advantage pathway is an $|\mathcal{A}|$-dimensional vector comprising the advantages $A(s,a;\mathbf{w}_A)$ of all available actions in $s$. The Q-value of the state–action pair can be obtained in a straightforward manner as provided in the following equation,

$$Q(s,a;\mathbf{w}) = V(s;\mathbf{w}_V) + A(s,a;\mathbf{w}_A) \qquad (26)$$

Figure 8.

Dueling DQN. Shown is the dueling DQN architecture. The two outputs of the DNN are parametrized by $\mathbf{w}_V$ and $\mathbf{w}_A$. The target pathway (dotted green) is for training.

The quantity $\mathbf{w}$ denotes the set of all weight parameters of the dueling-DQN, including $\mathbf{w}_V$ and $\mathbf{w}_A$ as well as those present in the earlier preprocessing layers.
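A minimal sketch of the dueling aggregation, assuming the outputs of the value and advantage pathways have already been computed (the numbers are hypothetical). The plain sum follows the description above. The optional mean-subtracted form, which removes the ambiguity of shifting a constant between the two pathways, is the variant used in the original dueling-network paper and is included only for comparison.

```python
import numpy as np

def dueling_q(value: float, advantages, subtract_mean: bool = False) -> np.ndarray:
    """Combine the scalar V(s) and the |A|-dimensional advantage vector into Q(s, .)."""
    advantages = np.asarray(advantages, dtype=float)
    if subtract_mean:
        # Centering the advantages makes V and A identifiable.
        advantages = advantages - advantages.mean()
    return value + advantages

v_s = 2.0                                 # hypothetical value-pathway output V(s)
a_s = np.array([0.5, -0.5, 1.0])          # hypothetical advantage-pathway output
q_plain = dueling_q(v_s, a_s)
q_centered = dueling_q(v_s, a_s, subtract_mean=True)
```

Both forms rank the actions identically; only the absolute Q-values differ.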

5. Policy-Based and Actor–Critic Reinforcement Learning

Like tabular Q-learning, tabular policy-based RL uses an array of Q-values. Initialized with an arbitrary policy $\pi_0$, the tabular policy RL algorithm is an iterative process comprising two steps [70,84]. Policy evaluation is carried out in the first step, where Q-values are learned as shown in Equations (18) and (20). In the second step, the policy is refined by defining the action for each state as shown in Equation (16). The two-step process is repeated until the policy can be refined no further.
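The two-step loop can be sketched on a small, made-up MDP with known transition probabilities. For brevity, this sketch evaluates the policy by solving the linear Bellman system for the state values and then forms the Q-values from them, rather than learning Q-values from samples as in Equations (18) and (20). The MDP, its rewards, and the discount are all hypothetical.

```python
import numpy as np

# Hypothetical MDP: P[a, s, s'] transition probabilities, R[s, a] expected rewards.
P = np.array([[[0.9, 0.1], [0.0, 1.0]],     # action 0
              [[0.1, 0.9], [0.5, 0.5]]])    # action 1
R = np.array([[0.0, 1.0],
              [2.0, 0.0]])
gamma, n_states = 0.9, 2

def q_from_policy(policy):
    """Policy evaluation: solve V = R_pi + gamma * P_pi V, then form Q(s, a)."""
    P_pi = np.array([P[policy[s], s] for s in range(n_states)])
    R_pi = np.array([R[s, policy[s]] for s in range(n_states)])
    V = np.linalg.solve(np.eye(n_states) - gamma * P_pi, R_pi)
    return R + gamma * np.einsum("ast,t->sa", P, V)

policy = np.zeros(n_states, dtype=int)      # arbitrary initial policy
while True:
    Q = q_from_policy(policy)               # step 1: policy evaluation
    improved = np.argmax(Q, axis=1)         # step 2: greedy policy improvement
    if np.array_equal(improved, policy):    # no further refinement possible
        break
    policy = improved
```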

Gradient descent policy learning methods do not directly draw upon tabular policy learning in the same way that value-based learning does. These methods are realized through DNNs as the agents. An attractive feature of deep policy RL is its intrinsic ability to handle continuous states as well as continuous actions.

5.1. Deep Policy Networks

Policy gradient uses an experience replay buffer $\mathcal{D}$ in the same manner as a DQN. The buffer stores full episodes, i.e., sequences $\tau=(s_0,a_0,r_1,s_1,\dots,s_T)$. Instead of using Equation (6), it is convenient to directly express the loss function in terms of episodes and the DNN’s weight parameter $\theta$, in the following manner,

$$J(\theta) = \mathbb{E}_{\tau\sim\pi_\theta}\big[R(\tau)\big] \qquad (27)$$

Policy gradient methods try to maximize this loss. The operator $\mathbb{E}_{\tau\sim\pi_\theta}[\cdot]$ is the expectation with the DNN agent operating under the probabilistic policy $\pi_\theta$. The initial state $s_0$ in the above expression is implicitly defined in $\tau$. Moreover, the distribution of the states $s_{t+1}$ within $\tau$ is in accordance with the underlying MDP. The quantity $R(\tau)$ is the total return of episode $\tau$ starting from $s_0$.

Note that for a transition $(s_t,a_t,r_{t+1},s_{t+1})$, the reward $r_{t+1}$ is a feedback signal that is determined by the environment (such as a home or residential complex), which is external to the agent. So is the discounted, aggregate return $R(\tau)=\sum_{t=0}^{T-1}\gamma^{t}r_{t+1}$, which is also equal to that in Equation (4). No function that maps a sequence of states and actions of time horizon $T$ to a return is available to the agent. Consequently, a straightforward gradient step in the direction of $\nabla_\theta R(\tau)$ cannot be applied. In an apparent paradox, it turns out that its expected value, $J(\theta)$, can be differentiated by the agent, which is also the rationale behind expressing the loss as in Equation (27). This is due to a mathematical result known as the policy gradient theorem [14,85,86]. The policy gradient theorem establishes the theoretical foundation for the majority of deep policy gradient methods. It can be stated mathematically as below,

$$\nabla_\theta J(\theta) = \mathbb{E}_{\tau\sim\pi_\theta}\big[R(\tau)\,\nabla_\theta \log p_\theta(\tau)\big] \qquad (28)$$

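The significance of the theorem is that the gradient of the expected return, $\nabla_\theta J(\theta)$, does not require the gradient of the return $R(\tau)$. Only the log probability of the episode, $\log p_\theta(\tau)$, must be differentiated. Fortunately, this gradient can readily be computed by the DNN agent. The probability of a transition $(s_t,a_t,s_{t+1})$ in $\tau$ (see Equation (5)) is the product $\pi_\theta(a_t\,|\,s_t)\,p(s_{t+1}\,|\,s_t,a_t)$; its logarithm is $\log\pi_\theta(a_t\,|\,s_t)+\log p(s_{t+1}\,|\,s_t,a_t)$. The second term is intrinsic to the environment and independent of the DNN, so that its derivative with respect to $\theta$ is zero. Since $p_\theta(\tau)$ is the product $p(s_0)\prod_{t=0}^{T-1}\pi_\theta(a_t\,|\,s_t)\,p(s_{t+1}\,|\,s_t,a_t)$, we arrive at the following interesting result,

$$\nabla_\theta \log p_\theta(\tau) = \sum_{t=0}^{T-1} \nabla_\theta \log \pi_\theta(a_t\,|\,s_t) \qquad (29)$$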

The left-hand side of Equation (28) can be estimated rather easily using the expression in Equation (29). This is because the policy $\pi_\theta$ is, in fact, based on the DPN output. Whereas [85] uses softmax policies as in Equation (10), it is quite usual in later research to adopt Gaussian policies (cf. [14]). Since $\pi_\theta$ is the same policy that is used to obtain transition samples, Equation (28) pertains to on-policy learning.

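The expected gradient can be estimated as the average over several Monte Carlo samples of episodes (also called rollouts) that are stored in $\mathcal{D}$. This provides an estimate of the gradient of the loss function defined in Equation (27). An early policy gradient method, REINFORCE [73], uses Equation (29) to increment $\theta$. With $N$ episodes $\tau_1,\dots,\tau_N$ in $\mathcal{D}$, the REINFORCE on-policy update rule is expressed as,

$$\theta \leftarrow \theta + \frac{\eta}{N}\sum_{n=1}^{N}\big(R(\tau_n) - b\big)\sum_{t=0}^{T-1}\nabla_\theta \log \pi_\theta(a_t^{n}\,|\,s_t^{n}) \qquad (30)$$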

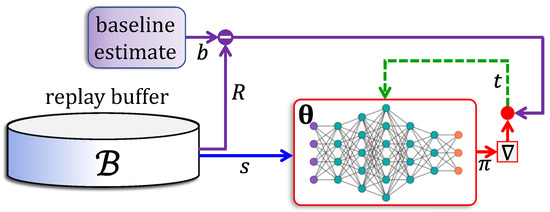

In the above expression, it is assumed for simplicity that the time horizon $T$ is fixed across all samples. The quantity $b$ is called the baseline [87]. It can be set to zero in the basic implementation of policy learning. Figure 9 shows a schematic of this approach.

Figure 9.

Policy Gradient with Baseline. Shown is the overall scheme used in REINFORCE with the baseline. There are different ways to implement the baseline.
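A minimal REINFORCE sketch of Equation (30) follows. It assumes a softmax policy whose logits are linear in hypothetical state features, $\phi(s)^{\top}\theta$ with $\theta$ a features-by-actions matrix, so that $\nabla_\theta\log\pi_\theta(a\,|\,s)=\phi(s)\,(\mathbf{e}_a-\pi_\theta(\cdot\,|\,s))^{\top}$ has a closed form. Episodes are given as lists of (features, action) pairs together with their returns; all data are made up.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                     # numerical stability
    e = np.exp(z)
    return e / e.sum()

def grad_log_pi(theta, phi, a):
    """Gradient of log softmax(phi^T theta)[a] with respect to theta."""
    pi = softmax(phi @ theta)
    onehot = np.zeros_like(pi)
    onehot[a] = 1.0
    return np.outer(phi, onehot - pi)

def reinforce_step(theta, episodes, eta=0.1, baseline=0.0):
    """theta <- theta + eta * mean_n[(R(tau_n) - b) * sum_t grad log pi(a_t | s_t)]."""
    grad = np.zeros_like(theta)
    for steps, ret in episodes:
        score = sum(grad_log_pi(theta, phi_t, a_t) for phi_t, a_t in steps)
        grad += (ret - baseline) * score
    return theta + eta * grad / len(episodes)

theta = np.zeros((2, 2))                          # 2 features, 2 actions
phi = np.array([1.0, 0.0])
episodes = [([(phi, 0)], 1.0),                    # action 0 earned return 1
            ([(phi, 1)], 0.0)]                    # action 1 earned return 0
theta = reinforce_step(theta, episodes, baseline=0.5)
```

After one step, the probability of the better action 0 rises above 0.5. With a baseline of 0.5, the low-return episode now pushes its action's probability down instead of up.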

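Unfortunately, when the baseline $b=0$, the variance in the set of samples of the form $R(\tau)\sum_{t=0}^{T-1}\nabla_\theta\log\pi_\theta(a_t\,|\,s_t)$ becomes too large. This in turn requires a very large number of Monte Carlo episode samples to be collected. Including a baseline $b$ in Equation (30) that is close to $\mathbb{E}[R(\tau)]$ helps reduce the variance to tractable limits. The theoretical optimal baseline is given by,

$$b^{*} = \frac{\mathbb{E}\big[\|\nabla_\theta \log p_\theta(\tau)\|^{2}\,R(\tau)\big]}{\mathbb{E}\big[\|\nabla_\theta \log p_\theta(\tau)\|^{2}\big]} \qquad (31)$$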

There are ways to obtain reasonable baseline estimates in practice that reduce the variance without introducing bias [87,88]. Actor–critic architectures, which will be described subsequently, are also designed to obtain reliable baseline estimates. Before proceeding further, we will make improvements to Equation (30) on the basis of the following two observations.

The first observation is that in Equation (30), the gradient linked with episode $\tau$ is weighted by $R(\tau)-b$ in the outer summation. In this manner, an episode would receive a higher weight if it fetched a higher return. However, the weighting scheme is rather arbitrary. For instance, with $b=0$, if all returns were non-negative, then all gradients would receive non-negative weights. On the other hand, if the baseline $b$ were set to the expected return $\mathbb{E}[R(\tau)]$, then the gradients of the episodes with lower-than-expected returns would receive negative weights, whereas those with better-than-expected returns would be assigned positive weights. Using Equation (11), it is observed that this baseline is also the value $V(s_0)$ of the starting state $s_0$. Our first improvement would be to replace the baseline with a value function.

The second observation is subtler, requiring scrutiny of the weighting scheme at each time instant $t$. To simplify the discussion, it will be assumed that the discount $\gamma=1$. Consider the episode $\tau$ consisting of transitions of the form $(s_t,a_t,r_{t+1},s_{t+1})$. Ignoring $b$, the corresponding term in the inner summation, which is $\nabla_\theta\log\pi_\theta(a_t\,|\,s_t)$, is weighted by the return $R(\tau)=\sum_{k=0}^{T-1}r_{k+1}$. At time instant $t$, the prior rewards $r_1,\dots,r_t$ represent the past history of the episode $\tau$; they play no role in how good the action $a_t$ was from state $s_t$. Removing these terms, the weight becomes $\sum_{k=t}^{T-1}r_{k+1}$, which is, in fact, a sample of $Q(s_t,a_t)$. Replacing $R(\tau)$ in Equation (30) with $Q(s_t,a_t)$ is the other improvement. Once the prior history of the episode is removed, the baseline must be set to $V(s_t)$ instead of $V(s_0)$.

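From the above discussion, it is seen that each factor $R(\tau)-b$ in Equation (30) should be replaced with $Q(s_t,a_t)-V(s_t)$. From Equation (13), this is the advantage function $A(s_t,a_t)$, so we replace the original increment rule with the following update rule,

$$\theta \leftarrow \theta + \frac{\eta}{N}\sum_{n=1}^{N}\sum_{t=0}^{T-1} A(s_t^{n},a_t^{n})\,\nabla_\theta \log \pi_\theta(a_t^{n}\,|\,s_t^{n}) \qquad (32)$$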
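The two observations replace the episode-level weight with a per-step weight. The sketch below computes the reward-to-go at each time step, which serves as a sample of $Q(s_t,a_t)$, and subtracts a baseline $V(s_t)$. The rewards and value estimates are hypothetical stand-ins for a real episode and a learned value function.

```python
import numpy as np

def rewards_to_go(rewards, gamma=1.0):
    """G_t = sum_{k >= t} gamma^(k - t) r_{k+1}; rewards before t are dropped."""
    g, out = 0.0, np.zeros(len(rewards))
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        out[t] = g
    return out

rewards = [1.0, 0.0, 2.0]                   # r_1, r_2, r_3 of one episode
values = np.array([2.5, 1.5, 1.0])          # hypothetical V(s_0), V(s_1), V(s_2)
G = rewards_to_go(rewards)                  # samples of Q(s_t, a_t) with gamma = 1
advantages = G - values                     # per-step weights A(s_t, a_t)
```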

5.2. Natural Gradient Methods

One of the problems associated with policy gradient learning approaches as in Equation (30) or Equation (32) is choosing an adequate learning rate $\eta$. Too small a value of $\eta$ would necessitate a long training period, whereas a value that is too large would produce a ‘jump’ in $\theta$ large enough to yield a new policy that is too different from the previous one. Although there are several reliable methods to address this effect in gradient descent for supervised learning as in Equation (3), the effect is much more pronounced in RL, diminishing the efficacy of such methods. In extreme cases, a seemingly small increment along the direction of the gradient may lead to irretrievable distortion of the policy itself. The underlying reason behind this limitation is that, unlike in Equation (1), the loss function in Equation (27) incorporates a probability distribution.

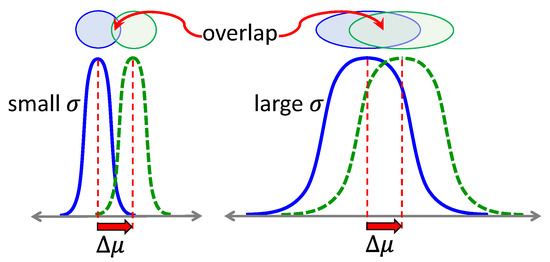

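The change in any policy $\pi_\theta$ whenever a perturbation $\Delta\theta$ is applied to the parameter $\theta$ should not be quantified in terms of the norm $\|\Delta\theta\|$, but using the Kullback–Leibler divergence between the distributions $\pi_\theta$ and $\pi_{\theta'}$ [89]. The K-L divergence is denoted as $D_{\mathrm{KL}}(\pi_\theta\,\|\,\pi_{\theta'})$, where the new and previous values of the parameter are $\theta'=\theta+\Delta\theta$ and $\theta$. Figure 10 illustrates the relevance of the K-L divergence. The Hessian (second derivative) of $D_{\mathrm{KL}}(\pi_\theta\,\|\,\pi_{\theta'})$ with respect to $\theta'$, evaluated at $\theta'=\theta$, is known as the Fisher information matrix $F(\theta)$. The increment applied to $\theta$ should be in proportion to $F(\theta)^{-1}\nabla_\theta J(\theta)$, which is referred to as the natural gradient [90] of the expectation $J(\theta)$. The Fisher information matrix can be estimated as in [14],

$$F(\theta) = \mathbb{E}_{s,\,a\sim\pi_\theta}\big[\nabla_\theta \log \pi_\theta(a\,|\,s)\,\nabla_\theta \log \pi_\theta(a\,|\,s)^{\top}\big] \qquad (33)$$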

Figure 10.

K-L Divergence. Two Gaussian distributions $\pi_\theta$ (solid, blue) with low variance (left) and high variance (right) are shown. Incrementing $\theta$ by $\Delta\theta$ (dashed green) results in an equal change in the norm $\|\Delta\theta\|$, whereas $D_{\mathrm{KL}}$ is higher for the distribution on the left. The smaller divergence on the right is due to the greater overlapping region shown on top.
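The situation in Figure 10 can be checked with the closed-form K-L divergence between two univariate Gaussians. With equal variances, it reduces to $(\Delta\mu)^2/(2\sigma^2)$. The standard deviations and the mean shift below are arbitrary illustrative values.

```python
import math

def kl_gaussian(mu1, sigma1, mu2, sigma2):
    """D_KL(N(mu1, sigma1^2) || N(mu2, sigma2^2)) for univariate Gaussians."""
    return (math.log(sigma2 / sigma1)
            + (sigma1 ** 2 + (mu1 - mu2) ** 2) / (2 * sigma2 ** 2) - 0.5)

delta = 0.5                                        # same parameter step in both cases
kl_narrow = kl_gaussian(0.0, 0.5, delta, 0.5)      # low variance (left panel)
kl_wide = kl_gaussian(0.0, 2.0, delta, 2.0)        # high variance (right panel)
```

The same step of 0.5 in the mean yields a divergence of 0.5 for the narrow distribution but only 0.03125 for the wide one.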

Recent policy gradient algorithms use concepts derived from natural gradients [14] to rectify the downside of ‘vanilla’ gradient descent, namely the need to select an effective learning rate $\eta$. The use of the natural gradient greatly reduces the algorithm’s dependence on how the policy is parametrized. Unfortunately, the gains of using the natural gradient come at the cost of increased computational overheads associated with matrix inversion. The overheads may outweigh the gains when the Fisher matrix is very large. When the policy is represented effectively through the parameter $\theta$, natural gradient training may not provide enough speed-up over vanilla gradient descent.

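Trust region policy optimization (TRPO) is a class of training algorithms that directly uses the Kullback–Leibler divergence [91]. In TRPO, a hard upper bound is imposed on the divergence produced when the increment $\Delta\theta$ is applied to the DNN weights $\theta$. Denoting this bound as $\delta$,

$$\mathbb{E}_{s}\big[D_{\mathrm{KL}}\big(\pi_\theta(\cdot\,|\,s)\,\|\,\pi_{\theta+\Delta\theta}(\cdot\,|\,s)\big)\big] \le \delta \qquad (34)$$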

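Under these circumstances, and using a quadratic approximation of the K-L divergence, it can be shown that the increment in TRPO is,

$$\Delta\theta = \sqrt{\frac{2\delta}{\nabla_\theta J(\theta)^{\top} F(\theta)^{-1}\nabla_\theta J(\theta)}}\;F(\theta)^{-1}\nabla_\theta J(\theta) \qquad (35)$$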

The gradient of the expectation, $\nabla_\theta J(\theta)$, can be estimated in the same manner as in Equation (30) or Equation (32).
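Given a gradient estimate $g$ and a Fisher matrix estimate $F$ (here, the sample average of outer products of hypothetical score vectors $\nabla_\theta\log\pi_\theta(a\,|\,s)$), the natural-gradient step that exactly meets a quadratic approximation of the K-L bound can be sketched as below. All numbers are hypothetical. Practical TRPO implementations avoid forming or inverting $F$ explicitly (using conjugate gradients) and add a backtracking line search; both refinements are omitted here.

```python
import numpy as np

def natural_gradient_step(g, F, max_kl):
    """Step along F^{-1} g, scaled so that 0.5 * step^T F step equals max_kl."""
    direction = np.linalg.solve(F, g)                  # natural gradient F^{-1} g
    scale = np.sqrt(2.0 * max_kl / (g @ direction))
    return scale * direction

rng = np.random.default_rng(0)
scores = rng.normal(size=(500, 2))                     # hypothetical score vectors
F = scores.T @ scores / len(scores)                    # Fisher estimate E[score score^T]
g = np.array([1.0, 0.5])                               # hypothetical gradient of J
step = natural_gradient_step(g, F, max_kl=0.01)
```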

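Proximal policy optimization (PPO) [92] is another RL method that builds on the same idea of limiting how far each update moves the policy. PPO replaces the bound in TRPO with a penalty term. An expression for PPO’s objective function for a single episode, with penalty coefficient $\beta$, is as shown below,

$$L^{\mathrm{PPO}}(\theta) = \sum_{t=0}^{T-1}\Big[\frac{\pi_\theta(a_t\,|\,s_t)}{\pi_{\theta_{\mathrm{old}}}(a_t\,|\,s_t)}\,A(s_t,a_t) - \beta\, D_{\mathrm{KL}}\big(\pi_{\theta_{\mathrm{old}}}(\cdot\,|\,s_t)\,\|\,\pi_\theta(\cdot\,|\,s_t)\big)\Big] \qquad (36)$$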
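A sketch of a penalized surrogate for one episode with discrete actions follows. It assumes the common K-L-penalty form: the sum over time of the probability ratio times the advantage, minus $\beta$ times the divergence from the old policy. The policies, actions, advantages, and $\beta$ are hypothetical. The clipped-ratio surrogate, also proposed in the PPO paper, is a widely used alternative that is not shown here.

```python
import numpy as np

def ppo_penalty_objective(pi_new, pi_old, actions, advantages, beta=1.0):
    """Sum over t of ratio_t * A_t - beta * KL(pi_old(.|s_t) || pi_new(.|s_t))."""
    pi_new, pi_old = np.asarray(pi_new), np.asarray(pi_old)
    t = np.arange(len(actions))
    ratio = pi_new[t, actions] / pi_old[t, actions]
    kl = np.sum(pi_old * np.log(pi_old / pi_new), axis=1)
    return float(np.sum(ratio * advantages - beta * kl))

pi_old = [[0.5, 0.5], [0.8, 0.2]]           # pi_old(. | s_t) for t = 0, 1
pi_new = [[0.6, 0.4], [0.7, 0.3]]           # candidate pi_theta(. | s_t)
actions = np.array([0, 1])
advantages = np.array([1.0, -0.5])
same = ppo_penalty_objective(pi_old, pi_old, actions, advantages)
changed = ppo_penalty_objective(pi_new, pi_old, actions, advantages, beta=1.0)
```

When the new policy equals the old one, the ratios are one and the penalty vanishes, so the objective reduces to the sum of the advantages.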

5.3. Off-Policy Methods

Only on-policy algorithms have been discussed so far in this section, including TRPO and PPO. Nevertheless, policy gradient can also be applied for off-policy learning. Since such an algorithm does not require its training samples to be generated by the current policy, the samples in the replay buffer (that are collected using earlier policies) can be recycled multiple times. This feature is a significant advantage of off-policy learning.

Policy gradient methods for off-policy learning can be implemented using importance sampling. Let $f(x)$ be any function of the random variable $x$, which follows some distribution $p(x)$. Importance sampling can be used to estimate the expectation $\mathbb{E}_{x\sim p}[f(x)]$ as follows. Samples are drawn from a more tractable distribution $q(x)$. Using $p(x)/q(x)$ as the weight for each sampled value of $f(x)$, the weighted average is computed from several such samples. This serves as the estimated value, i.e., $\mathbb{E}_{x\sim p}[f(x)]=\mathbb{E}_{x\sim q}\big[\tfrac{p(x)}{q(x)}f(x)\big]$.
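A minimal numerical check of importance sampling follows. It assumes $p=\mathcal{N}(1,1)$, $q=\mathcal{N}(0,2^2)$, and $f(x)=x^2$, so that the true value is $\mathbb{E}_p[x^2]=1+1=2$. All choices are arbitrary; the wider $q$ keeps the weights bounded.

```python
import numpy as np

def normal_pdf(x, mu, sigma):
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi))

rng = np.random.default_rng(0)
x = rng.normal(0.0, 2.0, size=200_000)                        # samples drawn from q
weights = normal_pdf(x, 1.0, 1.0) / normal_pdf(x, 0.0, 2.0)   # p(x) / q(x)
estimate = np.mean(weights * x ** 2)                          # estimates E_p[x^2] = 2
```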

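This approach is adopted in off-policy RL. Suppose the samples in the replay buffer are based on a behavior policy $\pi_b$. Using $\pi_\theta(a\,|\,s)/\pi_b(a\,|\,s)$ as the importance weight of each sampled action, the gradient $\nabla_\theta J(\theta)$ can be empirically estimated as,

$$\nabla_\theta J(\theta) \approx \frac{1}{|\mathcal{D}|}\sum_{(s,a)\in\mathcal{D}} \frac{\pi_\theta(a\,|\,s)}{\pi_b(a\,|\,s)}\, Q(s,a)\,\nabla_\theta \log \pi_\theta(a\,|\,s) \qquad (37)$$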

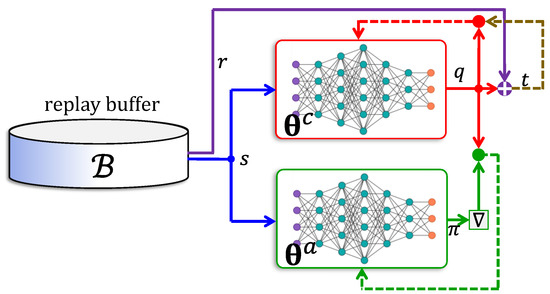

5.4. Actor–Critic Networks

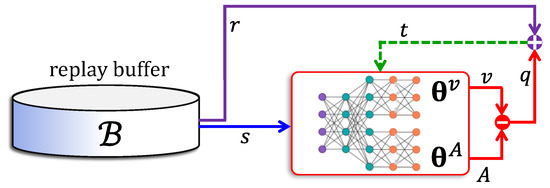

Actor–critic methods combine policy gradient and value-based RL methods [93]. The actor–critic architecture consists of two learning agents, the actor and the critic (see Figure 11). From any environmental state, the actor is trained using policy gradient to respond with an action. The critic is trained with a value-based RL method to evaluate the effectiveness of the actor’s output, which is then used to train the latter.

Figure 11.

Actor–Critic Network. Shown is the overall schematic used in actor–critic learning, comprising an actor DNN and a critic DNN.

Let us denote the actor’s and the critic’s parameters with the symbols $\theta$ and $\mathbf{w}$. The critic network can be modeled as a DQN, although it is trained with the advantage function as defined in Equation (13). When the environmental state is $s$, for every action $a$ the critic network provides as its output the value $Q(s,a;\mathbf{w})$ of the state–action pair $(s,a)$. The actor is incremented using gradient ascent,

$$\theta \leftarrow \theta + \eta \sum_{t=0}^{T-1} Q(s_t,a_t;\mathbf{w})\,\nabla_\theta \log \pi_\theta(a_t\,|\,s_t) \qquad (38)$$

Equation (38) shown above closely resembles the policy gradient increment in Equation (30) (with $b=0$). The only difference is that the critic is used to compute the gradient’s weight, $Q(s_t,a_t;\mathbf{w})$.

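The update rule for the critic network, which is similar to Equation (22), is shown below,

$$\mathbf{w} \leftarrow \mathbf{w} + \eta \sum_{t=0}^{T-1}\big(r_{t+1} + \gamma Q(s_{t+1},a_{t+1};\mathbf{w}) - Q(s_t,a_t;\mathbf{w})\big)\nabla_{\mathbf{w}} Q(s_t,a_t;\mathbf{w}) \qquad (39)$$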

The advantage actor–critic (A2C) algorithm [94] is very effective in reducing the variance in the policy gradient algorithm of the actor. The A2C architecture entails a two-fold improvement over the ‘vanilla’ actor–critic method, which are outlined below.

- (i)

- The actor network uses an advantage function $A_t=G_t-V(s_t;\mathbf{w})$, which is the difference between a return value $G_t$ and the value of state $s_t$. Accordingly, the critic is trained to approximate the value function $V(s;\mathbf{w})$.

- (ii)

- The return is computed using an $n$-step lookahead, where the log-gradient is weighted using the sum of the next $n$ rewards.

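To better understand how the $n$-step lookahead works, let us turn our attention to Equation (30). In this expression, the gradient at time instant $t$ is weighted by the factor $R(\tau)-b$, where $R(\tau)$ is the return of an entire episode from $t=0$ until $T$, so that $R(\tau)=\sum_{k=0}^{T-1}\gamma^{k}r_{k+1}$. The baseline $b$ is the value of $s_0$. It is reasoned that the sum of the past rewards does not have any bearing on the quality of the action taken at the instant $t$. Hence, all past rewards are dropped from $R(\tau)$. Furthermore, rewards received in the distant future, i.e., after $n$ instants, are also dropped. In other words, the return $G_t^{(n)}$ consists of the sum of the discounted rewards between the instant $t$ and the instant $t+n$. Whereupon, the actor’s update rule is expressed as,

$$\theta \leftarrow \theta + \eta \sum_{t=0}^{T-1}\big(G_t^{(n)} - V(s_t;\mathbf{w})\big)\nabla_\theta \log \pi_\theta(a_t\,|\,s_t), \qquad G_t^{(n)} = \sum_{k=0}^{n-1}\gamma^{k} r_{t+k+1} \qquad (40)$$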

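The critic is updated using the same return $G_t^{(n)}$, in accordance with the expression shown below,

$$\mathbf{w} \leftarrow \mathbf{w} + \eta \sum_{t=0}^{T-1}\big(G_t^{(n)} - V(s_t;\mathbf{w})\big)\nabla_{\mathbf{w}} V(s_t;\mathbf{w}) \qquad (41)$$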
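The truncated $n$-step return that weights both the actor and critic updates can be sketched as follows, matching the description above. The rewards, the critic outputs, $n$, and $\gamma$ are hypothetical. Common A2C/A3C implementations additionally bootstrap the truncated sum with $\gamma^{n}V(s_{t+n})$ when the episode has not yet ended; that term is deliberately omitted here.

```python
import numpy as np

def n_step_returns(rewards, n, gamma):
    """G_t^(n) = sum_{k=0}^{n-1} gamma^k r_{t+k+1}, truncated at the episode end."""
    T = len(rewards)
    returns = np.zeros(T)
    for t in range(T):
        for k in range(min(n, T - t)):
            returns[t] += gamma ** k * rewards[t + k]   # rewards[t] holds r_{t+1}
    return returns

rewards = [1.0, 1.0, 1.0, 1.0]              # r_1 ... r_4
values = np.array([1.5, 1.5, 1.5, 0.5])     # hypothetical critic outputs V(s_t; w)
G = n_step_returns(rewards, n=2, gamma=0.5)
advantages = G - values                     # weights for the actor and critic updates
```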

The asynchronous advantage actor–critic (A3C) method [94] is an extension of A2C that can be applied in parallel processing environments. A global network and a set of ‘workers’ are maintained in A3C. Each worker receives copies of the actor and critic parameters, which it applies to its own independent environment while collecting reward signals. The rewards are then used to determine the increments $\Delta\theta$ and $\Delta\mathbf{w}$, which are then used to asynchronously update the parameters in the global network. An advantage of A3C is that, due to the parallel action of multiple workers, an experience replay buffer does not have to be incorporated.

The deterministic policy gradient (DPG) algorithm was described in [85], and more recently in [SLH+14]. It was later extended to a deep framework in [95], known as the deep deterministic policy gradient (DDPG). DDPG is an off-policy actor–critic method that concurrently learns the optimal Q-function $Q^{*}(s,a)$ as well as the optimal deterministic policy $\mu^{*}(s)$.

In any off-policy actor–critic model, the critic must be trained to output the Q-values of the optimal policy $\pi^{*}$. Hence, the term $Q(s_{t+1},a_{t+1};\mathbf{w})$ in Equation (39) should be replaced with the maximum over all actions, $Q(s_{t+1},a^{*};\mathbf{w})$, where the optimal action is $a^{*}=\arg\max_{a}Q(s_{t+1},a;\mathbf{w})$, as in Equation (19). Unfortunately, when the action space $\mathcal{A}$ is continuous, an exhaustive search to find $a^{*}$ is impossible. Moreover, in a majority of applications, using numerical optimization to obtain $a^{*}$ is computationally too intensive to be used within the training algorithm.

In order to circumvent these difficulties in identifying the optimal action that maximizes the Q-value, there are three options available for use. These are outlined below.

- (i)

- $Q(s_{t+1},a;\mathbf{w})$ can be sampled for several different actions $a$, and $a^{*}$ can be assigned the action corresponding to the sample maximum [96].

- (ii)

- A convex approximation of $Q(s_{t+1},a;\mathbf{w})$ around a candidate action can be devised, and $a^{*}$ can be obtained from the approximate function [97].

- (iii)

- A separate off-policy policy network can be used to learn the optimal policy [98].

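Of the three options discussed above, the third has been adopted in DDPG. The critic parameter $\mathbf{w}$ is updated in accordance with the expression shown below,

$$\mathbf{w} \leftarrow \mathbf{w} + \eta \sum_{t}\big(r_{t+1} + \gamma Q(s_{t+1},\mu_\theta(s_{t+1});\mathbf{w}) - Q(s_t,a_t;\mathbf{w})\big)\nabla_{\mathbf{w}} Q(s_t,a_t;\mathbf{w}) \qquad (42)$$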

In Equation (42), $\mu_\theta(s_{t+1})$ is the output of DDPG’s actor. DDPG uses a replay buffer that includes samples from older policies. The actor’s parameter $\theta$ is trained using an off-policy policy gradient as in Equation (37).

One of the drawbacks of DDPG is the problem of overestimation [99]. Suppose that during the course of training, the learned Q-function $Q(s,a;\mathbf{w})$ acquires a sharp local peak. Under these circumstances, further training would converge towards this local optimum, leading to undesirable results. This issue has been tackled by the twin delayed deep deterministic policy gradient (TD3) in [80]. TD3 maintains a pair of critics whose parameters we shall denote as $\mathbf{w}_1$ and $\mathbf{w}_2$, or more concisely as $\mathbf{w}_j$, where $j\in\{1,2\}$.

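In Equation (42), it can be seen that DDPG has a target $r_{t+1}+\gamma Q(s_{t+1},\mu_\theta(s_{t+1});\mathbf{w})$, where $Q(\cdot;\mathbf{w})$ is obtained from the critic. TD3 has two targets, $y_j=r_{t+1}+\gamma Q(s_{t+1},\tilde{a};\mathbf{w}_j)$, $j\in\{1,2\}$. The target action $\tilde{a}$ in TD3 is clipped to lie within the interval of valid actions. Gaussian noise is also added to this action, which smooths the target over nearby actions. Finally, the target is obtained as $y=\min_j y_j$, which is used for training.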
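A batch version of the TD3 target can be sketched as below. It assumes a deterministic target actor and two target critics supplied as plain Python functions. Following the TD3 paper, the Gaussian noise is itself clipped before it is added, and the noisy action is then clipped to the valid interval. The toy networks, noise scale, clipping constants, and action bounds are all hypothetical.

```python
import numpy as np

def td3_target(r, s_next, done, actor_t, critic1_t, critic2_t, rng,
               gamma=0.99, noise_std=0.2, noise_clip=0.5, a_low=-1.0, a_high=1.0):
    """y = r + gamma * (1 - done) * min_j Q_j(s', a_tilde) with a smoothed target action."""
    a_next = actor_t(s_next)                                   # deterministic target actor
    noise = np.clip(rng.normal(0.0, noise_std, size=np.shape(a_next)),
                    -noise_clip, noise_clip)                   # clipped Gaussian noise
    a_tilde = np.clip(a_next + noise, a_low, a_high)           # valid action interval
    q_min = np.minimum(critic1_t(s_next, a_tilde), critic2_t(s_next, a_tilde))
    return r + gamma * (1.0 - done) * q_min

# Hypothetical target networks for a 1-D state and a 1-D action.
def actor_t(s):
    return np.tanh(s)

def critic1_t(s, a):
    return -(a - 0.5) ** 2 + s

def critic2_t(s, a):
    return -(a - 0.5) ** 2 + s - 0.1          # slightly more pessimistic critic

rng = np.random.default_rng(0)
y = td3_target(r=np.array([1.0, 1.0]), s_next=np.array([0.0, 1.0]),
               done=np.array([0.0, 1.0]), actor_t=actor_t,
               critic1_t=critic1_t, critic2_t=critic2_t, rng=rng)
```

The second sample is terminal, so its target is just the reward. The first target always uses the more pessimistic of the two critics.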

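The soft actor–critic (SAC) method proposed recently in [81,100] is an off-policy RL approach. The striking feature of SAC is the presence of an entropy term $\mathcal{H}$, weighted by a temperature $\alpha>0$, in the objective function,

$$J(\theta) = \sum_{t=0}^{T-1}\mathbb{E}_{(s_t,a_t)\sim\pi_\theta}\big[r_{t+1} + \alpha\,\mathcal{H}\big(\pi_\theta(\cdot\,|\,s_t)\big)\big] \qquad (43)$$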

Incorporating the entropy in Equation (43) increases the degree of randomness in the policy which helps in exploration. As with TD3, SAC uses two critic networks.

6. Use of Reinforcement Learning in Home Energy Management Systems

This section presents the findings of the survey on the use of RL approaches in various HEMS applications. All articles in this survey were published in established technical journals, in print or online, within the past five years.

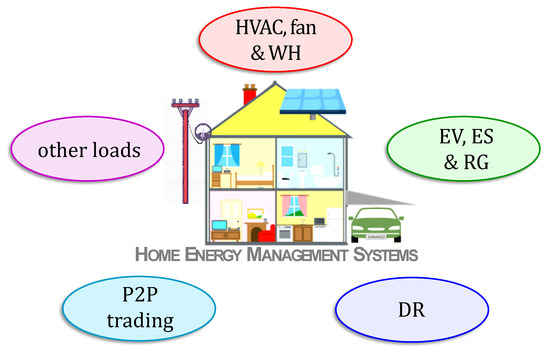

6.1. Application Classes

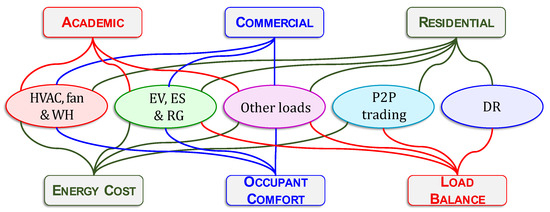

In this study, all applications were divided into five classes as in Figure 12 below.

Figure 12.

HEMS Applications. All applications of reinforcement learning in home energy management systems are classified into the five categories shown.

- (i)

- Heating, Ventilation and Air Conditioning, Fans and Water Heaters: Heating, ventilation, and air conditioning (HVAC) systems alone are responsible for about half of the total electricity consumption [48,101,102,103,104]. In this survey, HVAC, fans and water heaters (WH) have been placed under a single category. Effective control of these loads is a major research topic in HEMS.

- (ii)

- Electric Vehicles, Energy Storage, and Renewable Generation: The charging of electric vehicles (EVs) and energy storage (ES) devices (i.e., batteries) has been studied in the literature, e.g., [105,106]. Wherever applicable, EVs and ES must be charged in coordination with renewable generation (RG) such as solar panels and wind turbines. The aim is to make decisions that save energy costs while addressing comfort and other consumer requirements. Thus, EV, ES, and RG have been placed under a single class for the purpose of this survey.

- (iii)

- Other Loads: Suitable scheduling of home appliances such as dishwashers and washing machines can be achieved through HEMS to reduce energy usage or cost. Lighting schedules are important in buildings with large occupancy. These loads have been lumped into a single class.

- (iv)

- Demand Response: With the rapid proliferation of green energy sources in homes and buildings, and their integration into the grid, demand response (DR) has acquired much research significance in HEMS. DR programs help in load balancing by scheduling and/or controlling shiftable loads, and by incentivizing participants [107,108] to do so through HEMS. RL for DR is one of the classes in this survey.

- (v)

- Peer-to-Peer Trading: Home energy management has been used to maximize prosumers’ profits by trading electricity with each other, either directly in peer-to-peer (P2P) trading or indirectly through a third party, as in [109]. Currently, theoretical research on automated trading is receiving significant attention. P2P trading is the fifth and final application category considered in this survey.

Each application class is associated with an objective function and a building type that are discussed in subsequent paragraphs. The schematic in Figure 13 shows all links that have been covered by the articles in this survey.

Figure 13.

Building Types and Objectives. The building type and the RL’s objective of each application class. Note that the links are based on the existing literature covered in the survey. The absence of a link does not necessarily imply that the building type/objective cannot be used for the application class.

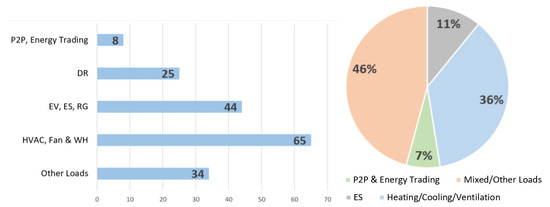

Figure 14 shows the number of research articles that applied RL to each class, as well as their proportions. More than a third of the papers we reviewed focused only on HVAC, fans, and water heaters. Just over 10% of the papers studied RL control for energy storage (ES) systems, and only 7% focused on energy trading. However, a large share of the papers (46%) targeted more than one class.

Figure 14.

Application Classes. The total number of articles in each application class (left), as well as their corresponding proportions (right).

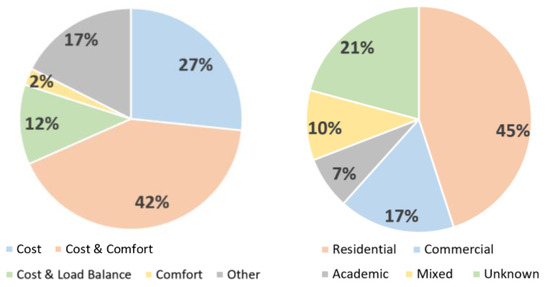

6.2. Objectives and Building Types

Within these HEMS applications, RL has been applied in several ways. It has been used to reduce energy consumption within residential units and buildings [110]. It has also been used to achieve a higher comfort level for the occupants [111]. In operations at the interface between the residential units and the energy grid, RL has been applied to maximize prosumers’ profits in energy trading as well as for load balancing.

For this purpose, we break down the objectives into three different types as listed below.

- (i)

- Energy Cost: The cost to the consumer of using any electrical device, which in most cases is proportional to its energy consumption. In this paper, we use the terms ‘cost’ and ‘consumption’ interchangeably.

- (ii)

- Occupant Comfort: the main factor that can affect the occupant’s comfort is the thermal comfort, which depends mainly on the room temperature and humidity.

- (iii)

- Load Balance: Power supply companies try to achieve load balance by reducing the power consumption of consumers at peak periods to match the station power supply. The consumers are motivated to participate in such programs by price incentives.

Figure 13 illustrates the RL objectives that were used in each application class.

Next, all buildings and complexes were categorized into the following three types.

- (i)

- Residential: for the purpose of this survey, individual homes, residential communities, as well as apartment complexes fall under this type of building.

- (ii)

- Commercial: these buildings include offices, office complexes, shops, malls, hotels, as well as industrial buildings.

- (iii)

- Academic: academic buildings range from schools, university classrooms, buildings, research laboratories, up to entire campuses.

The research literature in this survey revealed that for residential buildings, RL was applied in all five application classes. However, in the case of commercial and academic buildings, RL was typically applied to the first three categories, i.e., to HVAC, fans and WH, to EVs, ESs and RGs, as well as to other loads. This is shown in Figure 13.

Figure 15 illustrates the outcome of this survey. It may be noted that in the largest proportion of articles (42%) the RL algorithm took into account both cost and comfort. About 27% of all articles addressed cost as the only objective, thereby defining the second largest proportion.

Figure 15.

Objectives and Building Types. Proportions of articles in each objective (left) and building type (right).

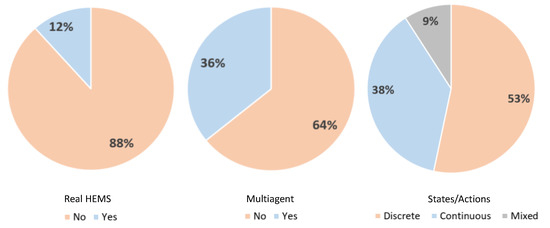

6.3. Deployment, Multi-Agents, and Discretization

The proportion of research articles where RL was actually deployed in the real world was studied. It was found that only 12% of research articles report results where RL was used with real HEMS. The results are consistent with an earlier survey [49] where this proportion was 11%. The results are shown in Figure 16.

Figure 16.

Real-World, Multi-Agents, and Discretization. Proportions of articles deployed in real world HEMS (left), using multi-agents (middle), and whether the states/actions are discrete or continuous (right).

7. Reinforcement Learning Algorithms in Home Energy Management Systems

This section focuses on how the RL and DRL algorithms described in earlier sections were used in HEMS applications. The references have been categorized in terms of the application class, objective function, and building type, that were described in the immediately preceding section. Table 1 provides a list of references that used tabular RL methods. About 28% of articles used tabular methods.

Table 1.

References using Tabular Reinforcement Learning.

In a similar manner, Table 2 considers references that used DQN. Most algorithms in the survey used DQN. However, DDQN was also popular in the HEMS research community. The survey found that dueling-DQN was applied in only one article. Table 3 categorizes references in the survey that used deep policy learning. PPO and TRPO are the only approaches that have been used so far in HEMS.

Table 2.

References using Deep Q Networks.

Table 3.

References using Deep Policy Networks.

The survey also indicates that actor–critic was the preferred approach in comparison with deep policy learning. Table 4 provides a list of references that applied actor–critic learning, which constituted 53% of all deep learning methods. The survey also shows that PPO is more popular than TRPO. We believe that this is because PPO is the more recent of the two algorithms. References that used either a combination of two or more approaches, or any other approach not commonly used in the RL literature, are shown in Table 5.

Table 4.

References using Actor–Critic Networks.

Table 5.

References using Combination of Methods and/or Miscellaneous Methods.

8. Conclusions

This article surveys how effectively RL has been leveraged for various HEMS applications. The survey reveals the following:

- (i) Although 66% of all articles used deep RL, a substantial number (about 28%) used tabular learning. This may indicate that only simplified applications were considered.

- (ii) Around 53% of all articles used discrete states and actions. This is another indication that the HEMS scenarios may have been simplified.

- (iii) Only around 12% of all approaches covered in this survey were deployed in the real world; the remainder were limited to simulation platforms.

These observations strongly suggest that the use of RL in HEMS applications is still at a research stage and has yet to mature. More in-depth investigation is necessary, particularly into RL algorithms that use DNN agents. Nonetheless, 36% of all articles made use of multiagent schemes, which is an encouraging sign.

The only truly viable alternative to RL is nonlinear control, more specifically model predictive control (MPC) [224]. MPC is widely used in various engineering applications (cf. [225]). The benefit of MPC lies in the explicit manner in which it handles physical constraints. At each iteration, MPC considers a receding time horizon into the future and applies a constrained optimization algorithm to determine the best control actions. However, in most cases, MPC uses linear or quadratic objective functions. This basic limitation must be taken into account before applying MPC to large-scale problems, and it is in sharp contrast to RL, which places no restriction on the reward signal. Moreover, MPC is a model-based approach, whereas the overwhelming majority of references in this survey used model-free RL methods ([149] being the sole exception).
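To make the receding-horizon idea concrete, the following is a minimal sketch of MPC for a hypothetical single-zone heating problem; it is an illustration only, not a method taken from the surveyed references. The linear thermal model (`A`, `T_OUT`, `B`), the discrete heater levels, the comfort bounds, and the quadratic cost weights are all assumed values. For clarity, the constrained optimization is done by brute-force enumeration of every action sequence over the horizon rather than with a dedicated numerical optimizer. As in standard MPC, only the first action of the best sequence is applied, after which the horizon is shifted forward and the problem is solved again.

```python
import itertools

# Hypothetical single-zone thermal model (assumed values, for illustration only):
#   T[k+1] = A * T[k] + (1 - A) * T_OUT + B * u[k]
A, T_OUT, B = 0.9, 10.0, 1.0
HEATER_LEVELS = (0.0, 1.0, 2.0)   # assumed discrete heater power settings (kW)
T_MIN, T_MAX = 18.0, 24.0         # assumed hard comfort constraints (deg C)
T_REF, W_U = 21.0, 0.1            # assumed set point and energy weight in the cost


def next_temp(temp, u):
    """One step of the assumed linear thermal model."""
    return A * temp + (1 - A) * T_OUT + B * u


def mpc_action(temp, horizon=3):
    """Return the first action of the cheapest feasible action sequence.

    Brute-force search over all len(HEATER_LEVELS) ** horizon sequences with
    the quadratic stage cost (T - T_REF)^2 + W_U * u^2.
    """
    best_cost, best_first = float("inf"), None
    for seq in itertools.product(HEATER_LEVELS, repeat=horizon):
        t, cost = temp, 0.0
        for u in seq:
            t = next_temp(t, u)
            if not T_MIN <= t <= T_MAX:   # constraint violated: discard sequence
                cost = float("inf")
                break
            cost += (t - T_REF) ** 2 + W_U * u ** 2
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first  # None if no feasible sequence exists


def simulate(temp0=20.0, steps=10, horizon=3):
    """Closed loop: re-optimize at every step and apply only the first action."""
    temps, actions = [temp0], []
    for _ in range(steps):
        u = mpc_action(temps[-1], horizon)
        actions.append(u)
        temps.append(next_temp(temps[-1], u))
    return temps, actions


temps, actions = simulate()
```

Because every applied action is the first step of a feasible sequence, the simulated temperature stays inside the assumed comfort band. Swapping in a nonlinear cost would be trivial in this brute-force version, but the search grows as 3^H with the horizon H, which is one reason practical MPC formulations lean on linear or quadratic structure.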

There is a diverse array of algorithms available in the RL literature. Since tabular methods require discrete state and action spaces, and furthermore require these spaces to have low cardinality, they may be of little use in most HEMS applications. Not surprisingly, this survey shows that tabular methods have been used less frequently than DNN methods. In the future, as the HEMS community investigates increasingly complex domains, tabular methods are likely to be used even less. Consequently, the choice of algorithm will usually be confined to DNN methods.
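A quick back-of-the-envelope calculation shows why low cardinality matters. The sketch below counts the entries of a Q-table for a hypothetical HEMS whose state is discretized into a few bins per variable and whose action is the joint OFF/ON setting of several appliances. All bin counts and the number of appliances are assumptions chosen for illustration, not values from the survey.

```python
from math import prod

# Hypothetical discretization of a HEMS state (number of bins per variable).
# These numbers are illustrative assumptions, not values from the survey.
STATE_BINS = {
    "indoor_temperature": 20,
    "outdoor_temperature": 20,
    "price_level": 10,
    "hour_of_day": 24,
    "battery_state_of_charge": 10,
}
N_SWITCHED_APPLIANCES = 5  # each appliance is independently OFF or ON


def q_table_size(state_bins, n_switches):
    """Return (number of states, number of joint actions, Q-table entries)."""
    n_states = prod(state_bins.values())
    n_actions = 2 ** n_switches  # every OFF/ON combination is one joint action
    return n_states, n_actions, n_states * n_actions


n_states, n_actions, entries = q_table_size(STATE_BINS, N_SWITCHED_APPLIANCES)
print(f"{n_states:,} states x {n_actions} actions = {entries:,} Q-values")
# prints: 960,000 states x 32 actions = 30,720,000 Q-values
```

Even this coarse discretization yields about 3 × 10^7 Q-values, each of which a tabular method must visit repeatedly to estimate, whereas a DNN approximator can generalize across similar states.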

Among the DNN methods, it must be noted that DQN and its derivatives can only be used when the action space is finite and small, such as in controlling OFF–ON switches. The survey reveals that actor–critic methods, which combine Q-learning and policy learning, are the most popular in HEMS applications. Another deciding factor is whether to use on-policy or off-policy RL. On-policy learning may be used in applications where deviating from the current policy in the initial stages could occasionally have a severe negative impact on the environment. Thus, it may be used when the environment does not require much exploration. On the other hand, off-policy RL can discover more novel policies.
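The on-policy/off-policy distinction is easiest to see in the one-step temporal-difference targets of two classic tabular algorithms: SARSA (on-policy) and Q-learning (off-policy). The sketch below is a generic illustration rather than code from any surveyed HEMS article; the two-state OFF/ON example and all numbers are hypothetical. DQN bootstraps with the same `max` as Q-learning, which also shows why it needs a small, finite action set: both the `max` and the greedy `argmax` enumerate every action.

```python
import numpy as np


def epsilon_greedy(q, state, epsilon, rng):
    """Behaviour policy: random action with probability epsilon, else greedy."""
    if rng.random() < epsilon:
        return int(rng.integers(q.shape[1]))
    return int(np.argmax(q[state]))


def sarsa_target(q, reward, next_state, next_action, gamma):
    """On-policy (SARSA) target: bootstraps from the action the behaviour
    policy actually takes next, so exploratory actions shape the estimate."""
    return reward + gamma * q[next_state, next_action]


def q_learning_target(q, reward, next_state, gamma):
    """Off-policy (Q-learning) target: bootstraps from the greedy action,
    regardless of which action the behaviour policy executes next."""
    return reward + gamma * np.max(q[next_state])


def td_update(q, state, action, target, alpha):
    """Move Q(s, a) a fraction alpha of the way towards the target (in place)."""
    q[state, action] += alpha * (target - q[state, action])


# Hypothetical example: actions 0 = OFF, 1 = ON; rows are states 0 and 1.
q = np.array([[0.0, 0.0],
              [1.0, 3.0]])
rng = np.random.default_rng(0)
greedy = epsilon_greedy(q, 1, epsilon=0.0, rng=rng)            # ON (1)
on_policy = sarsa_target(q, -0.5, 1, next_action=0, gamma=0.9)  # exploratory OFF: about 0.4
off_policy = q_learning_target(q, -0.5, 1, gamma=0.9)           # greedy ON: about 2.2
td_update(q, 0, 1, off_policy, alpha=0.5)                       # Q(0, ON) becomes about 1.1
```

When the agent explores (here by choosing OFF in state 1), the SARSA target is pulled down by that exploratory choice, whereas the Q-learning target still evaluates the greedy policy.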

Unlike unsupervised and supervised learning, where simple performance metrics are readily available, performance evaluation in RL is an open problem [226]. For any real application, a reward that increases steadily with iteration is the best indicator of performance. The authors suggest that the following four criteria be considered.

- (i) Saturation reward: the expected reward must be relatively high at saturation.

- (ii) Variance at saturation: the reward must not have excessive variance at saturation.

- (iii) Exploitation risk: the minimum possible reward must not be so low that the environment is adversely affected. This is the risk associated with exploration, and it tends to occur during the initial exploratory stages of RL training.

- (iv) Convergence rate: the number of iterations before the reward starts to saturate should not be large.

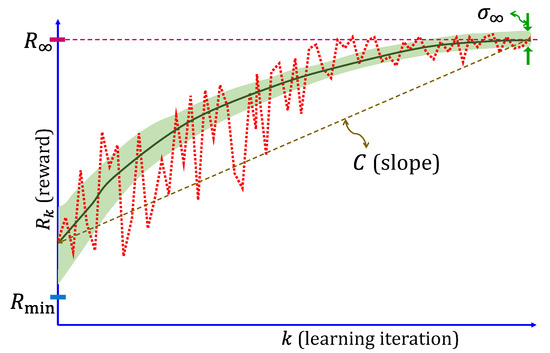

Figure 17 shows how to interpret the four metrics graphically.

Figure 17.

Proposed Performance Metrics. The four metrics, evaluated across multiple runs, that the authors suggest for evaluating the performance of RL algorithms in HEMS and other practical applications. A typical trajectory from a single run (dashed red), the average over multiple runs (solid green), and the variance (shaded light green) are shown. The exploitation risk is the minimum reward attained across all runs.

Since the articles in this survey all used some HEMS simulation platform, it is assumed that the RL algorithm can be run at least a few times. The four performance metrics proposed above can then be estimated empirically from Monte Carlo samples of such runs. Suppose each run produces a sequence of rewards, in which each element is a reward and its index represents an iteration of the RL algorithm. The precise meanings of the terms reward and iteration depend entirely on the specific HEMS application, how the reward function is implemented, whether a replay buffer is used, and the RL algorithm itself.

A reward may be an aggregate return value, the instantaneous reward at the time horizon, or the reward at the last parameter update, among others. Likewise, the iteration index may be an instantaneous time step; alternatively, it may refer to the number of times the training algorithm adjusts the model parameters or flushes the replay buffer. The exact meanings of these terms are left to the reader. However, it must be remembered that all relevant model parameters should be reinitialized at the beginning of each run; that at the end of each run, after a number of iterations that may vary from run to run, the RL training algorithm converges to a different final model parameter; and that the final reward of each run truly reflects the quality of the model. Moreover, it must be ensured that the algorithm terminates only after the reward attains saturation, i.e., when further iterations yield no perceptible gain.

If the runs are indexed by the elements of the set of all runs, the suggested performance metrics can be estimated as follows.

In Equation (46), the average value is assumed to be the estimate determined in accordance with Equation (45). In some situations, it may be computationally too expensive to obtain multiple runs. In such cases, as well as when RL is implemented in a real HEMS environment, the set of runs may be a singleton (a single run), and the across-run variance in Equation (46) is then meaningless. An alternative metric may instead be computed from the last few iterations before termination.
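As an illustration only, the sketch below estimates the four metrics from a list of per-run reward sequences. It does not reproduce Equations (45) and (46); the estimators are assumptions. The saturation reward is taken as the mean final reward over runs; the variance at saturation as the sample variance of the final rewards (or, for a single run, of its last `window` rewards); the exploitation risk as the minimum reward over all runs and iterations; and the convergence rate as the mean number of iterations before a run settles within a hypothetical tolerance `tol` of its final reward.

```python
import numpy as np


def settling_iteration(rewards, tol):
    """Hypothetical saturation criterion: number of iterations before every
    subsequent reward stays within `tol` of the run's final reward."""
    r = np.asarray(rewards, dtype=float)
    outside = np.abs(r - r[-1]) > tol
    if not outside.any():
        return 0
    return int(np.nonzero(outside)[0][-1]) + 1  # first index after the last excursion


def rl_metrics(runs, tol=0.05, window=10):
    """Estimate the four metrics from reward sequences, one per run.

    Runs may have different lengths. With a single run, the variance is
    computed over its last `window` (>= 2) rewards instead of across runs.
    """
    runs = [np.asarray(r, dtype=float) for r in runs]
    finals = np.array([r[-1] for r in runs])
    if len(runs) > 1:
        variance = finals.var(ddof=1)             # across-run sample variance
    else:
        variance = runs[0][-window:].var(ddof=1)  # single-run fallback
    return {
        "saturation_reward": float(finals.mean()),
        "variance_at_saturation": float(variance),
        "exploitation_risk": float(min(r.min() for r in runs)),
        "convergence_iterations": float(
            np.mean([settling_iteration(r, tol) for r in runs])
        ),
    }


# Two hypothetical runs of different lengths (rewards are negative costs).
runs = [[-5.0, -2.0, -1.0, -1.0],
        [-4.0, -3.0, -1.5, -1.2, -1.2]]
metrics = rl_metrics(runs)
# saturation_reward about -1.1, variance_at_saturation about 0.02,
# exploitation_risk -5.0, convergence_iterations 2.5
```

The tolerance-based settling criterion is one of many possible definitions of saturation; a relative tolerance or a moving-average test may suit a given HEMS reward scale better.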

Author Contributions

Conceptualization, S.D.; methodology, S.D. and O.A.-A.; software, O.A.-A. and S.D.; validation, S.D. and O.A.-A.; formal analysis, O.A.-A. and S.D.; investigation, S.D.; resources, S.D.; data curation, O.A.-A.; writing—original draft preparation, S.D.; writing—review and editing, S.D. and O.A.-A.; visualization, O.A.-A. and S.D.; supervision, S.D.; project administration, S.D.; funding acquisition, N/A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- U.S. Energy Information Administration. Electricity Explained: Use of Electricity. 14 May 2021. Available online: www.eia.gov/energyexplained/electricity/use-of-electricity.php (accessed on 10 April 2022).

- Center for Sustainable Systems. U.S. Energy System Factsheet. Pub. No. CSS03-11; Center for Sustainable Systems, University of Michigan: Ann Arbor, MI, USA, 2021; Available online: https://css.umich.edu/publications/factsheets/energy/us-energy-system-factsheet (accessed on 10 April 2022).

- Shakeri, M.; Shayestegan, M.; Abunima, H.; Reza, S.S.; Akhtaruzzaman, M.; Alamoud, A.; Sopian, K.; Amin, N. An intelligent system architecture in home energy management systems (HEMS) for efficient demand response in smart grid. Energy Build. 2017, 138, 154–164.

- Leitão, J.; Gil, P.; Ribeiro, B.; Cardoso, A. A survey on home energy management. IEEE Access 2020, 8, 5699–5722.

- Shareef, H.; Ahmed, M.S.; Mohamed, A.; Al Hassan, E. Review on Home Energy Management System Considering Demand Responses, Smart Technologies, and Intelligent Controllers. IEEE Access 2018, 6, 24498–24509.

- Mahapatra, B.; Nayyar, A. Home energy management system (HEMS): Concept, architecture, infrastructure, challenges and energy management schemes. Energy Syst. 2019, 13, 643–669.

- Dileep, G. A survey on smart grid technologies and applications. Renew. Energy 2020, 146, 2589–2625.

- Zafar, U.; Bayhan, S.; Sanfilippo, A. Home energy management system concepts, configurations, and technologies for the smart grid. IEEE Access 2020, 8, 119271–119286.

- Alanne, K.; Sierla, S. An overview of machine learning applications for smart buildings. Sustain. Cities Soc. 2022, 76, 103445.

- Aguilar, J.; Garces-Jimenez, A.; R-Moreno, M.D.; García, R. A systematic literature review on the use of artificial intelligence in energy self-management in smart buildings. Renew. Sustain. Energy Rev. 2021, 151, 111530.

- Himeur, Y.; Ghanem, K.; Alsalemi, A.; Bensaali, F.; Amira, A. Artificial intelligence based anomaly detection of energy consumption in buildings: A review, current trends and new perspectives. Appl. Energy 2021, 287, 116601.

- Barto, A.G.; Sutton, R.S.; Anderson, C.W. Neuronlike elements that can solve difficult learning control problems. IEEE Trans. Syst. Man Cybern. 1983, 13, 835–846.

- Tesauro, G. TD-Gammon, a self-teaching backgammon program, achieves master-level play. Neural Comput. 1994, 6, 215–219.

- Peters, J.; Schaal, S. Reinforcement learning of motor skills with policy gradients. Neural Netw. 2008, 21, 682–697.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of Go without human knowledge. Nature 2017, 550, 354–359.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A brief survey of deep reinforcement learning. IEEE Signal Process. Mag. 2017, 34, 26–38.

- François-Lavet, V.; Henderson, P.; Islam, R.; Bellemare, M.G.; Pineau, J. An introduction to deep reinforcement learning. Found. Trends Mach. Learn. 2018, 11, 219–354.

- Silver, D.; Singh, S.; Precup, D.; Sutton, R.S. Reward is enough. Artif. Intell. 2021, 299, 103535.

- Goertzel, B. Artificial General Intelligence; Pennachin, C., Ed.; Springer: New York, NY, USA, 2007; Volume 2.

- Zhang, T.; Mo, H. Reinforcement learning for robot research: A comprehensive review and open issues. Int. J. Adv. Robot. Syst. 2021, 18, 17298814211007305.

- Bhagat, S.; Banerjee, H.; Tse, Z.T.H.; Ren, H. Deep reinforcement learning for soft, flexible robots: Brief review with impending challenges. Robotics 2019, 8, 4.

- Lee, C.; An, D. AI-Based Posture Control Algorithm for a 7-DOF Robot Manipulator. Machines 2022, 10, 651.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634.

- Zeng, F.; Wang, C.; Ge, S.S. A survey on visual navigation for artificial agents with deep reinforcement learning. IEEE Access 2020, 8, 135426–135442.

- Sun, H.; Zhang, W.; Yu, R.; Zhang, Y. Motion planning for mobile robots-focusing on deep reinforcement learning: A systematic review. IEEE Access 2021, 9, 69061–69081.

- Luong, N.C.; Hoang, D.T.; Gong, S.; Niyato, D.; Wang, P.; Liang, Y.-C.; Kim, D.I. Applications of deep reinforcement learning in communications and networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 3133–3174.

- Zhang, G.; Li, Y.; Niu, Y.; Zhou, Q. Anti-jamming path selection method in a wireless communication network based on Dyna-Q. Electronics 2022, 11, 2397.

- Zhang, Y.; Zhu, J.; Wang, H.; Shen, X.; Wang, B.; Dong, Y. Deep reinforcement learning-based adaptive modulation for underwater acoustic communication with outdated channel state information. Remote Sens. 2022, 14, 3947.

- Ullah, Z.; Al-Turjman, F.; Mostarda, L. Cognition in UAV-aided 5G and beyond communications: A survey. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 872–891.

- Nguyen, T.T.; Reddi, V.J. Deep reinforcement learning for cyber security. arXiv 2019, arXiv:1906.05799.

- Alavizadeh, H.; Alavizadeh, H.; Jang-Jaccard, J. Deep Q-Learning Based Reinforcement Learning Approach for Network Intrusion Detection. Computers 2022, 11, 41.

- Jin, Z.; Zhang, S.; Hu, Y.; Zhang, Y.; Sun, C. Security state estimation for cyber-physical systems against DoS attacks via reinforcement learning and game theory. Actuators 2022, 11, 192.