Meta In-Context Learning: Harnessing Large Language Models for Electrical Data Classification

Abstract

:1. Introduction

- In electrical power edge–end interaction classification, we are the first to utilize the internal pre-trained knowledge in large language models for classifying time-series data. Combining large language models with electrical power edge–end interaction classification is an under-explored research question.

- We introduce a target method called M-ICL, which employs extensive tasks to fine-tune a pre-trained language model. This process enhances the model’s in-context learning ability, allowing it to adapt effectively to new tasks.

- Extensive experiments conducted on 13 electrical datasets collected in real-world scenarios demonstrate that our proposed M-ICL achieves superior classification performance in various settings. In addition, the ablation study further demonstrates the effectiveness of different components in M-ICL.

2. Related Work

2.1. Electrical Power Edge–End Interaction Modeling

2.2. Large Language Models

2.3. In-Context Learning

3. Method

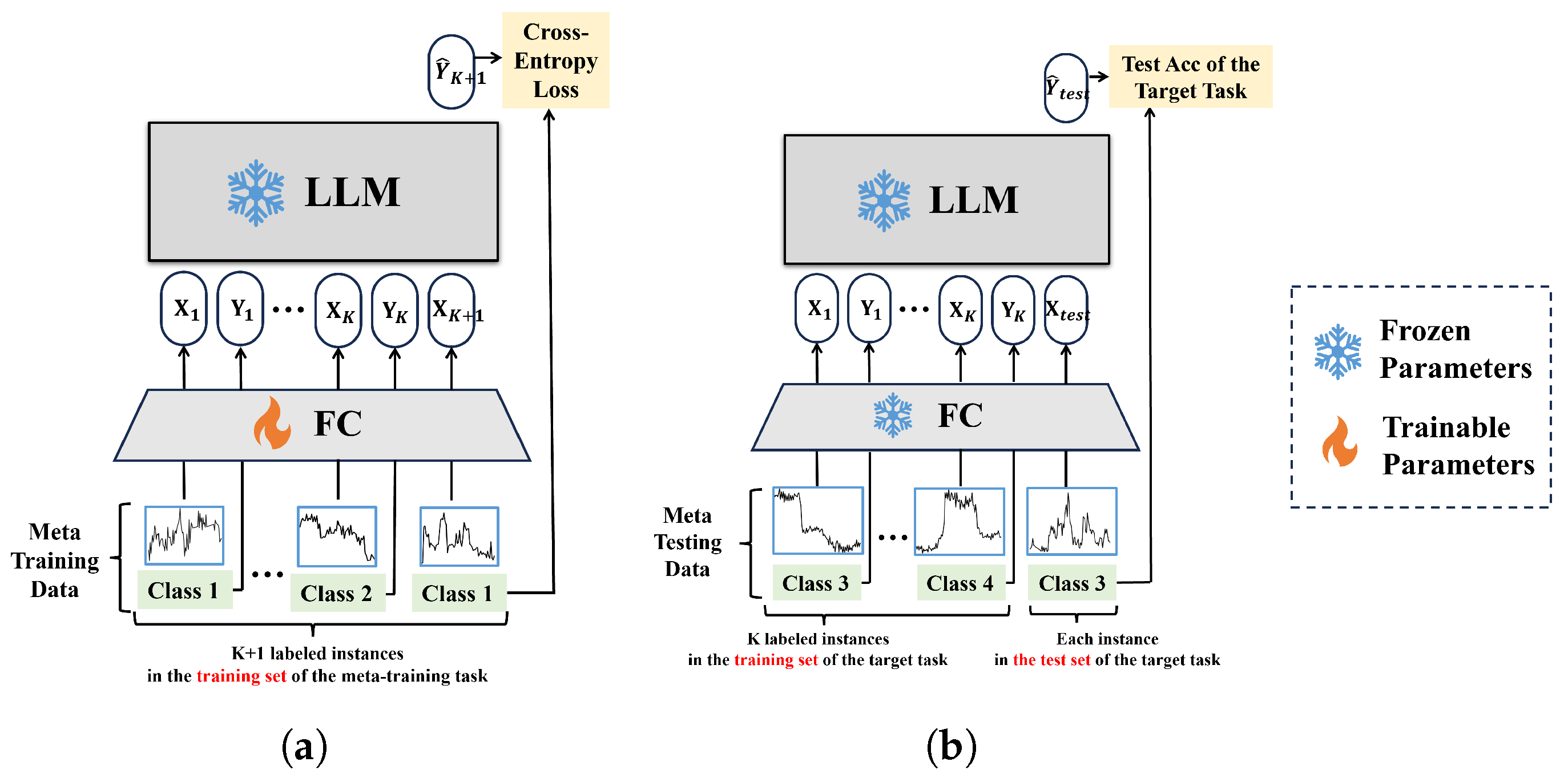

3.1. Overview of M-ICL

3.2. Meta-Training with Time-Series Data

| Algorithm 1: The template for meta-training or meta-testing in the proposed M-ICL. |

1 Please classify the time series data into the following categories: {the label set of the meta-training/testing data}. 2 Examples: 3 ### Input: {the embedding of } 4 ### Label: {} 5 ### Input: {the embedding of } 6 ### Label: {} 7 ⋮ 8 ### Input: {the embedding of } 9 ### Label: {} 10 ### Input: {the embedding of /} 11 ### Label: |

3.3. Meta-Testing on Target Tasks

| Algorithm 2: The meta-training phase in the proposed M-ICL framework. |

|

| Algorithm 3: The meta-testing phase in the proposed M-ICL framework. |

|

4. Experiments

4.1. Experiment Settings

4.1.1. Datasets Collection

4.1.2. Architecture

4.1.3. Baselines

- Vanilla Fine-Tuning: Demonstrated as a straightforward and effective technique, fine-tuning adapts large pre-trained language models to specific downstream tasks.

- BSS (Batch Spectral Shrinkage) [40] (https://github.com/thuml/Batch-Spectral-Shrinkage accessed on 1 September 2022): BSS mitigates negative transfer by penalizing small singular values in the feature matrix. The minimum singular value is penalized, with a recommended regularization weight of .

- ChildTune-F and ChildTune-D [41] (https://github.com/alibaba/AliceMind/tree/main/ChildTuning accessed on 1 September 2022): ChildTune-F & ChildTune-D train a subset of parameters (referred to as the child network) of large language models during the backward process. ChildTune-D leverages the pre-trained model’s Fisher Information Matrix to identify the child network, while ChildTune-F employs a Bernoulli distribution for this purpose.

- Mixout (https://github.com/bloodwass/mixout accessed on 1 September 2022) [42]: Mixout introduces randomness by blending parameters from the pre-trained and fine-tuned models, thereby regularizing the fine-tuning process. The mixing probability, denoted as p, is set to 0.9 in the experiments.

- NoisyTune [43]: NoisyTune introduces uniform noise to pre-trained model parameters based on their standard deviations. The scaling factor , which controls noise intensity, is set at 0.15.

- R3F (https://github.com/facebookresearch/fairseq/tree/main/\examples/rxf accessed on 1 September 2022) [44]: R3F addresses representational collapse by introducing parametric noise. Noise is generated from normal or uniform distributions.

- RecAdam (https://github.com/Sanyuan-Chen/RecAdam accessed on 1 September 2022) [45]: RecAdam optimizes a multi-task objective, gradually transitioning the objective from pre-training to downstream tasks using an annealing coefficient.

4.1.4. Implementation Details

4.2. Comparison with State-of-the-Art Methods

4.3. Ablation Analysis

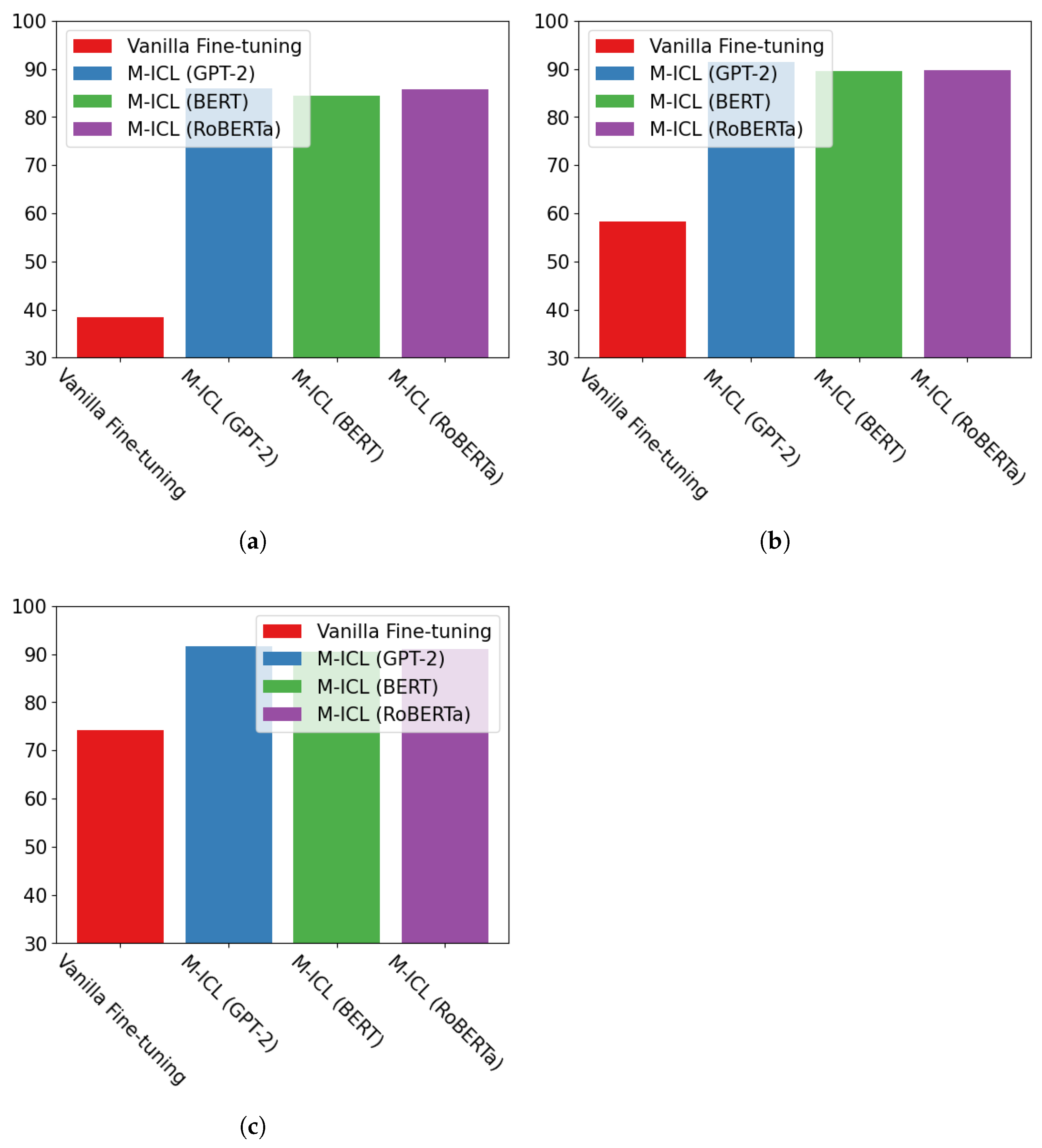

4.4. The Analysis of Using Different Large Language Models

4.5. The Analysis of Using Different K for In-Context Learning

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Fan, W.; Yang, L.; Bouguila, N. Unsupervised grouped axial data modeling via hierarchical Bayesian nonparametric models with Watson distributions. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 9654–9668. [Google Scholar] [CrossRef] [PubMed]

- Cheng, B.; Wang, M.; Zhao, S.; Zhai, Z.; Zhu, D.; Chen, J. Situation-aware dynamic service coordination in an IoT environment. IEEE/ACM Trans. Netw. 2017, 25, 2082–2095. [Google Scholar] [CrossRef]

- Lv, Z.; Song, H. Mobile internet of things under data physical fusion technology. IEEE Internet Things J. 2019, 7, 4616–4624. [Google Scholar] [CrossRef]

- Yang, H.F.; Chen, Y.P.P. Hybrid deep learning and empirical mode decomposition model for time series applications. Expert Syst. Appl. 2019, 120, 128–138. [Google Scholar] [CrossRef]

- Mollik, M.S.; Hannan, M.A.; Reza, M.S.; Abd Rahman, M.S.; Lipu, M.S.H.; Ker, P.J.; Mansor, M.; Muttaqi, K.M. The Advancement of Solid-State Transformer Technology and Its Operation and Control with Power Grids: A Review. Electronics 2022, 11, 2648. [Google Scholar] [CrossRef]

- Yan, Z.; Wen, H. Electricity theft detection base on extreme gradient boosting in AMI. IEEE Trans. Instrum. Meas. 2021, 70, 2504909. [Google Scholar] [CrossRef]

- Wang, H.; Wang, B.; Luo, P.; Ma, F.; Zhou, Y.; Mohamed, M.A. State evaluation based on feature identification of measurement data: For resilient power system. CSEE J. Power Energy Syst. 2021, 8, 983–992. [Google Scholar]

- Zhang, H.; Bosch, J.; Olsson, H.H. Real-time end-to-end federated learning: An automotive case study. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 12–16 July 2021; pp. 459–468. [Google Scholar]

- Wu, Q.; Chen, X.; Zhou, Z.; Zhang, J. Fedhome: Cloud-edge based personalized federated learning for in-home health monitoring. IEEE Trans. Mob. Comput. 2020, 21, 2818–2832. [Google Scholar] [CrossRef]

- Chen, R.; Cheng, Q.; Zhang, X. Power Distribution IoT Tasks Online Scheduling Algorithm Based on Cloud-Edge Dependent Microservice. Appl. Sci. 2023, 13, 4481. [Google Scholar] [CrossRef]

- Teimoori, Z.; Yassine, A.; Hossain, M.S. A secure cloudlet-based charging station recommendation for electric vehicles empowered by federated learning. IEEE Trans. Ind. Inform. 2022, 18, 6464–6473. [Google Scholar] [CrossRef]

- Fekri, M.N.; Grolinger, K.; Mir, S. Distributed load forecasting using smart meter data: Federated learning with Recurrent Neural Networks. Int. J. Electr. Power Energy Syst. 2022, 137, 107669. [Google Scholar] [CrossRef]

- Sallam, M. ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare 2023, 11, 887. [Google Scholar] [CrossRef]

- Bubeck, S.; Chandrasekaran, V.; Eldan, R.; Gehrke, J.; Horvitz, E.; Kamar, E.; Lee, P.; Lee, Y.T.; Li, Y.; Lundberg, S.; et al. Sparks of artificial general intelligence: Early experiments with gpt-4. arXiv 2023, arXiv:2303.12712. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- Akyürek, E.; Schuurmans, D.; Andreas, J.; Ma, T.; Zhou, D. What learning algorithm is in-context learning? investigations with linear models. arXiv 2022, arXiv:2211.15661. [Google Scholar]

- Zheng, J.; Chen, H.; Ma, Q. Cross-domain Named Entity Recognition via Graph Matching. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; pp. 2670–2680. [Google Scholar] [CrossRef]

- Zheng, J.; Chan, P.P.; Chi, H.; He, Z. A concealed poisoning attack to reduce deep neural networks’ robustness against adversarial samples. Inf. Sci. 2022, 615, 758–773. [Google Scholar] [CrossRef]

- Zheng, J.; Liang, Z.; Chen, H.; Ma, Q. Distilling Causal Effect from Miscellaneous Other-Class for Continual Named Entity Recognition. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 3602–3615. [Google Scholar]

- Zheng, J.; Ma, Q.; Qiu, S.; Wu, Y.; Ma, P.; Liu, J.; Feng, H.; Shang, X.; Chen, H. Preserving Commonsense Knowledge from Pre-trained Language Models via Causal Inference. arXiv 2023, arXiv:2306.10790. [Google Scholar]

- Wei, J.; Bosma, M.; Zhao, V.Y.; Guu, K.; Yu, A.W.; Lester, B.; Du, N.; Dai, A.M.; Le, Q.V. Finetuned language models are zero-shot learners. arXiv 2021, arXiv:2109.01652. [Google Scholar]

- Min, S.; Lyu, X.; Holtzman, A.; Artetxe, M.; Lewis, M.; Hajishirzi, H.; Zettlemoyer, L. Rethinking the role of demonstrations: What makes in-context learning work? arXiv 2022, arXiv:2202.12837. [Google Scholar]

- Xie, S.M.; Raghunathan, A.; Liang, P.; Ma, T. An Explanation of In-context Learning as Implicit Bayesian Inference. In Proceedings of the International Conference on Learning Representations, Virtual Event, 25–29 April 2022. [Google Scholar]

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-learning in neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169. [Google Scholar] [CrossRef]

- Mach, P.; Becvar, Z. Cloud-aware power control for real-time application offloading in mobile edge computing. Trans. Emerg. Telecommun. Technol. 2016, 27, 648–661. [Google Scholar] [CrossRef]

- Smadi, A.A.; Ajao, B.T.; Johnson, B.K.; Lei, H.; Chakhchoukh, Y.; Abu Al-Haija, Q. A Comprehensive survey on cyber-physical smart grid testbed architectures: Requirements and challenges. Electronics 2021, 10, 1043. [Google Scholar] [CrossRef]

- Wang, Y.; Bennani, I.L.; Liu, X.; Sun, M.; Zhou, Y. Electricity consumer characteristics identification: A federated learning approach. IEEE Trans. Smart Grid 2021, 12, 3637–3647. [Google Scholar] [CrossRef]

- Taïk, A.; Cherkaoui, S. Electrical load forecasting using edge computing and federated learning. In Proceedings of the ICC 2020–2020 IEEE international conference on communications (ICC), Dublin, Ireland, 7–11 June 2020; pp. 1–6. [Google Scholar]

- Lv, Z.; Kumar, N. Software defined solutions for sensors in 6G/IoE. Comput. Commun. 2020, 153, 42–47. [Google Scholar] [CrossRef]

- Xiong, L.; Tang, Y.; Liu, C.; Mao, S.; Meng, K.; Dong, Z.; Qian, F. Meta-Reinforcement Learning-Based Transferable Scheduling Strategy for Energy Management. IEEE Trans. Circuits Syst. I Regul. Pap. 2023, 70, 1685–1695. [Google Scholar] [CrossRef]

- Zhao, J.; Li, F.; Sun, H.; Zhang, Q.; Shuai, H. Self-attention generative adversarial network enhanced learning method for resilient defense of networked microgrids against sequential events. IEEE Trans. Power Syst. 2022, 38, 4369–4380. [Google Scholar] [CrossRef]

- Atkinson, G.; Metsis, V. A Survey of Methods for Detection and Correction of Noisy Labels in Time Series Data. In Proceedings of the Artificial Intelligence Applications and Innovations: 17th IFIP WG 12.5 International Conference, AIAI 2021, Hersonissos, Crete, Greece, 25–27 June 2021; pp. 479–493. [Google Scholar]

- Ravindra, P.; Khochare, A.; Reddy, S.P.; Sharma, S.; Varshney, P.; Simmhan, Y. An Adaptive Orchestration Platform for Hybrid Dataflows across Cloud and Edge. In Proceedings of the International Conference on Service-Oriented Computing, Malaga, Spain, 13–16 November 2017; pp. 395–410. [Google Scholar]

- Li, Z.; Shi, L.; Shi, Y.; Wei, Z.; Lu, Y. Task offloading strategy to maximize task completion rate in heterogeneous edge computing environment. Comput. Netw. 2022, 210, 108937. [Google Scholar] [CrossRef]

- Rubin, O.; Herzig, J.; Berant, J. Learning to retrieve prompts for in-context learning. arXiv 2021, arXiv:2112.08633. [Google Scholar]

- Min, S.; Lewis, M.; Hajishirzi, H.; Zettlemoyer, L. Noisy Channel Language Model Prompting for Few-Shot Text Classification. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 5316–5330. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI blog 2019, 1, 9. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692. [Google Scholar]

- Chen, X.; Wang, S.; Fu, B.; Long, M.; Wang, J. Catastrophic forgetting meets negative transfer: Batch spectral shrinkage for safe transfer learning. Adv. Neural Inf. Process. Syst. 2019, 32, 1–11. [Google Scholar]

- Xu, R.; Luo, F.; Zhang, Z.; Tan, C.; Chang, B.; Huang, S.; Huang, F. Raise a Child in Large Language Model: Towards Effective and Generalizable Fine-tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 9514–9528. [Google Scholar]

- Lee, C.; Cho, K.; Kang, W. Mixout: Effective Regularization to Finetune Large-scale Pretrained Language Models. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 30 April 2020. [Google Scholar]

- Wu, C.; Wu, F.; Qi, T.; Huang, Y. NoisyTune: A Little Noise Can Help You Finetune Pretrained Language Models Better. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Dublin, Ireland, 22–27 May 2022; pp. 680–685. [Google Scholar] [CrossRef]

- Aghajanyan, A.; Shrivastava, A.; Gupta, A.; Goyal, N.; Zettlemoyer, L.; Gupta, S. Better Fine-Tuning by Reducing Representational Collapse. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 30 April 2020. [Google Scholar]

- Chen, S.; Hou, Y.; Cui, Y.; Che, W.; Liu, T.; Yu, X. Recall and Learn: Fine-tuning Deep Pretrained Language Models with Less Forgetting. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 7870–7881. [Google Scholar] [CrossRef]

- Zhang, T.; Wu, F.; Katiyar, A.; Weinberger, K.Q.; Artzi, Y. Revisiting Few-sample BERT Fine-tuning. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 30 April 2020. [Google Scholar]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037. [Google Scholar]

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Huggingface’s transformers: State-of-the-art natural language processing. arXiv 2019, arXiv:1910.03771. [Google Scholar]

- Liu, L.; Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Han, J. On the Variance of the Adaptive Learning Rate and Beyond. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019. [Google Scholar]

| Company | Dataset | # Categories | # Training Samples | # Testing Samples | # Points in Each Sample |

|---|---|---|---|---|---|

| C1 | C1S1 | 2 | 50 | 13 | 96 |

| C1S2 | 2 | 77 | 20 | 96 | |

| C1S3 | 2 | 111 | 28 | 96 | |

| C2 | C2S1 | 2 | 176 | 44 | 96 |

| C2S2 | 2 | 78 | 20 | 96 | |

| C3 | C3S1 | 2 | 404 | 101 | 96 |

| C3S2 | 2 | 376 | 95 | 96 | |

| C3S3 | 2 | 422 | 106 | 96 | |

| C3S4 | 2 | 416 | 104 | 96 | |

| C3S5 | 2 | 410 | 103 | 96 | |

| C4 | C4S1 | 2 | 329 | 83 | 96 |

| C4S2 | 2 | 33 | 9 | 96 | |

| C4S3 | 2 | 336 | 84 | 96 |

| C1S1 | C1S2 | C1S3 | C2S1 | C2S2 | C3S1 | C3S2 | C3S3 | C3S4 | C3S5 | C4S1 | C4S2 | C4S3 | Average | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Vanilla Fine-tuning | 15.38 | 25.00 | 7.14 | 47.73 | 35.00 | 96.04 | 84.21 | 18.87 | 18.27 | 69.90 | 15.66 | 66.67 | 0.00 | 38.45 |

| BSS | 15.38 | 45.00 | 10.71 | 40.91 | 35.00 | 95.05 | 84.21 | 38.68 | 28.85 | 70.87 | 13.25 | 33.33 | 0.00 | 39.33 |

| ChildTune-F | 38.46 | 25.00 | 7.14 | 47.73 | 50.00 | 95.05 | 97.90 | 30.19 | 36.54 | 69.90 | 25.30 | 33.33 | 46.43 | 46.38 |

| ChildTune-D | 7.69 | 40.00 | 42.86 | 47.73 | 50.00 | 95.05 | 84.21 | 36.79 | 74.04 | 69.90 | 26.51 | 33.33 | 46.43 | 50.35 |

| Mixout | 15.38 | 45.00 | 46.43 | 47.73 | 50.00 | 96.04 | 97.90 | 38.68 | 51.92 | 70.87 | 43.37 | 55.56 | 45.24 | 54.16 |

| NoisyTune | 46.15 | 45.00 | 53.57 | 47.73 | 50.00 | 96.04 | 97.90 | 66.04 | 70.19 | 71.84 | 51.81 | 66.67 | 46.43 | 62.26 |

| R3F | 61.54 | 75.00 | 53.57 | 47.73 | 60.00 | 98.02 | 98.95 | 67.92 | 80.77 | 71.84 | 38.55 | 88.89 | 53.57 | 68.95 |

| RecAdam | 61.54 | 75.00 | 96.43 | 40.91 | 35.00 | 98.02 | 97.90 | 67.92 | 74.04 | 70.87 | 51.81 | 55.56 | 45.24 | 66.94 |

| ReInit | 53.85 | 60.00 | 50.00 | 40.91 | 50.00 | 98.02 | 84.21 | 68.87 | 51.92 | 70.87 | 59.04 | 77.78 | 98.81 | 66.48 |

| M-ICL (Ours) | 69.23 | 80.00 | 100.00 | 65.91 | 60.00 | 98.02 | 98.95 | 87.74 | 99.04 | 88.35 | 81.93 | 88.89 | 100.00 | 86.00 |

| C1S1 | C1S2 | C1S3 | C2S1 | C2S2 | C3S1 | C3S2 | C3S3 | C3S4 | C3S5 | C4S1 | C4S2 | C4S3 | Average | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Vanilla Fine-tuning | 46.15 | 15.00 | 53.57 | 31.82 | 35.00 | 97.03 | 94.74 | 39.62 | 62.50 | 75.73 | 27.71 | 77.78 | 100.00 | 58.20 |

| BSS | 23.08 | 20.00 | 53.57 | 45.45 | 40.00 | 97.03 | 94.74 | 20.76 | 18.27 | 72.82 | 20.48 | 77.78 | 88.10 | 51.70 |

| ChildTune-F | 53.85 | 15.00 | 46.43 | 45.45 | 45.00 | 98.02 | 94.74 | 37.74 | 39.42 | 72.82 | 55.42 | 77.78 | 100.00 | 60.13 |

| ChildTune-D | 38.46 | 70.00 | 21.43 | 45.45 | 40.00 | 98.02 | 94.74 | 33.96 | 62.50 | 70.87 | 55.42 | 88.89 | 100.00 | 63.06 |

| Mixout | 53.85 | 15.00 | 21.43 | 43.18 | 40.00 | 97.03 | 97.90 | 66.98 | 42.31 | 75.73 | 50.60 | 88.89 | 100.00 | 60.99 |

| NoisyTune | 46.15 | 70.00 | 46.43 | 43.18 | 40.00 | 97.03 | 94.74 | 66.98 | 42.31 | 72.82 | 57.83 | 100.00 | 88.10 | 66.58 |

| R3F | 46.15 | 90.00 | 46.43 | 43.18 | 35.00 | 98.02 | 97.90 | 53.77 | 76.92 | 70.87 | 71.08 | 100.00 | 88.10 | 70.57 |

| RecAdam | 84.62 | 70.00 | 100.00 | 50.00 | 45.00 | 97.03 | 94.74 | 68.87 | 76.92 | 75.73 | 69.88 | 100.00 | 100.00 | 79.45 |

| ReInit | 76.92 | 75.00 | 92.86 | 45.45 | 40.00 | 98.02 | 97.90 | 52.83 | 83.65 | 72.82 | 60.24 | 88.89 | 88.10 | 74.82 |

| M-ICL (Ours) | 84.62 | 90.00 | 100.00 | 77.27 | 60.00 | 99.01 | 98.95 | 88.68 | 100.00 | 92.23 | 98.80 | 100.00 | 100.00 | 91.50 |

| C1S1 | C1S2 | C1S3 | C2S1 | C2S2 | C3S1 | C3S2 | C3S3 | C3S4 | C3S5 | C4S1 | C4S2 | C4S3 | Average | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Vanilla Fine-tuning | 61.54 | 55.00 | 100.00 | 56.82 | 35.00 | 97.03 | 97.90 | 56.60 | 42.31 | 85.44 | 77.11 | 100.00 | 100.00 | 74.21 |

| BSS | 61.54 | 50.00 | 100.00 | 50.00 | 35.00 | 97.03 | 97.90 | 85.85 | 41.35 | 87.38 | 60.24 | 100.00 | 100.00 | 74.33 |

| ChildTune-F | 76.92 | 50.00 | 96.43 | 45.46 | 35.00 | 97.03 | 97.90 | 85.85 | 32.69 | 85.44 | 60.24 | 100.00 | 100.00 | 74.07 |

| ChildTune-D | 53.85 | 45.00 | 100.00 | 45.46 | 40.00 | 98.02 | 97.90 | 85.85 | 42.31 | 85.44 | 60.24 | 100.00 | 100.00 | 73.39 |

| Mixout | 76.92 | 45.00 | 100.00 | 45.46 | 40.00 | 97.03 | 97.90 | 87.74 | 58.65 | 85.44 | 71.08 | 100.00 | 100.00 | 77.32 |

| NoisyTune | 76.92 | 50.00 | 96.43 | 56.82 | 45.00 | 97.03 | 98.95 | 85.85 | 65.38 | 90.29 | 60.24 | 88.89 | 100.00 | 77.83 |

| R3F | 61.54 | 55.00 | 100.00 | 45.46 | 45.00 | 98.02 | 100.00 | 87.74 | 50.00 | 85.44 | 96.39 | 88.89 | 100.00 | 77.96 |

| RecAdam | 61.54 | 95.00 | 100.00 | 56.82 | 40.00 | 98.02 | 97.90 | 58.49 | 58.65 | 92.23 | 71.08 | 100.00 | 100.00 | 79.21 |

| ReInit | 92.31 | 80.00 | 100.00 | 56.82 | 35.00 | 97.03 | 98.95 | 88.68 | 75.00 | 85.44 | 96.39 | 100.00 | 100.00 | 85.05 |

| M-ICL (Ours) | 92.31 | 95.00 | 100.00 | 68.18 | 60.00 | 99.01 | 100.00 | 88.68 | 100.00 | 92.23 | 96.39 | 100.00 | 100.00 | 91.68 |

| K = 1 | K = 2 | K = 5 | ||||

|---|---|---|---|---|---|---|

| M-ICL (Ours) | 86.00 | / | 91.50 | / | 91.68 | / |

| M-ICL w/o Meta-Training | 41.75 | −44.25 | 42.61 | −48.89 | 45.94 | −45.74 |

| M-ICL w/o Predefined Template | 84.43 | −1.57 | 87.95 | −3.55 | 90.84 | −0.84 |

| M-ICL w/o Posterior Prediction | 85.14 | −0.86 | 90.43 | −1.07 | 90.75 | −0.93 |

| M-ICL w/o Fixing LLM | 69.65 | −16.35 | 76.11 | −15.39 | 87.48 | −4.20 |

| K = 1 | K = 2 | K = 5 | ||||

|---|---|---|---|---|---|---|

| Vanilla Fine-Tuning | 38.45 | / | 58.20 | / | 74.21 | / |

| M-ICL (GPT-2) | 86.00 | +47.55 | 91.50 | +33.30 | 91.68 | +17.47 |

| M-ICL (BERT) | 84.37 | +45.92 | 89.63 | +31.43 | 90.54 | +16.33 |

| M-ICL (RoBERTa) | 85.71 | +47.26 | 89.80 | +31.60 | 91.06 | +16.85 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, M.; Li, F.; Zhang, F.; Zheng, J.; Ma, Q. Meta In-Context Learning: Harnessing Large Language Models for Electrical Data Classification. Energies 2023, 16, 6679. https://doi.org/10.3390/en16186679

Zhou M, Li F, Zhang F, Zheng J, Ma Q. Meta In-Context Learning: Harnessing Large Language Models for Electrical Data Classification. Energies. 2023; 16(18):6679. https://doi.org/10.3390/en16186679

Chicago/Turabian StyleZhou, Mi, Fusheng Li, Fan Zhang, Junhao Zheng, and Qianli Ma. 2023. "Meta In-Context Learning: Harnessing Large Language Models for Electrical Data Classification" Energies 16, no. 18: 6679. https://doi.org/10.3390/en16186679

APA StyleZhou, M., Li, F., Zhang, F., Zheng, J., & Ma, Q. (2023). Meta In-Context Learning: Harnessing Large Language Models for Electrical Data Classification. Energies, 16(18), 6679. https://doi.org/10.3390/en16186679