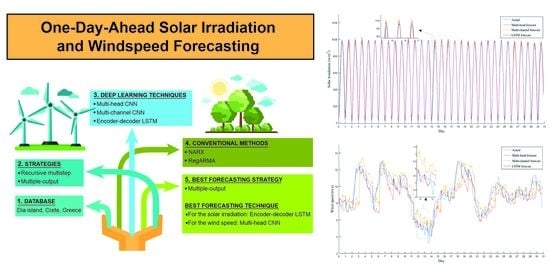

One-Day-Ahead Solar Irradiation and Windspeed Forecasting with Advanced Deep Learning Techniques

Abstract

1. Introduction

- A series of experiments applying advanced deep-learning-based forecasting techniques was conducted, yielding forecasts with high statistical accuracy.

- Advanced deep learning techniques are compared thoroughly with well-known conventional techniques for medium-term solar irradiance and windspeed forecasting to identify the most effective among them.

- A cloud index per hour (NDD(h,d)) was introduced and used for the first time in order to improve medium-term solar irradiance forecasting.

- Data were grouped by calendar month across successive years, first because solar irradiation patterns within a given month are similar from year to year, and second because windspeed patterns show a comparable seasonal similarity. The resulting monthly timeseries datasets are better suited to high-performance forecasting.

- A walk-forward validation strategy was implemented in combination with, first, a recursive multistep forecast strategy and, second, a multiple-output forecast strategy to significantly improve medium-term windspeed and solar irradiation forecasts.

- The recursive multistep forecast strategy was compared to the multiple-output forecast strategy.
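
The walk-forward validation mentioned in the contributions above can be illustrated with a minimal sketch. It assumes that each sample is one day of hourly values and that the true day becomes available before the next forecast is issued. The function names and the persistence model are hypothetical stand-ins for illustration, not the models used in this work.

```python
from typing import Callable, List, Sequence

Day = List[float]  # one day of hourly values (24 entries in the day-ahead setting)


def persistence_forecast(history: Sequence[Day]) -> Day:
    """Hypothetical placeholder model: predict that tomorrow repeats the last observed day."""
    return list(history[-1])


def walk_forward(
    train: Sequence[Day],
    test: Sequence[Day],
    forecast: Callable[[Sequence[Day]], Day],
) -> List[Day]:
    """Forecast each test day using only the days observed before it."""
    history = [list(day) for day in train]
    predictions: List[Day] = []
    for actual_day in test:
        predictions.append(forecast(history))
        # The true day becomes available before the next forecast is issued.
        history.append(list(actual_day))
    return predictions


# Toy data: constant days, chosen only to make the behaviour easy to follow.
train_days = [[1.0] * 24, [2.0] * 24]
test_days = [[3.0] * 24, [4.0] * 24]
predicted = walk_forward(train_days, test_days, persistence_forecast)
print(predicted[0][:3], predicted[1][:3])
```

Any trained model that maps the observed history to a 24-value forecast could replace `persistence_forecast` in this loop.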

2. The Proposed Deep Learning Model Framework

2.1. Dataset Presentation

2.2. Presentation of the Proposed Deep Learning Models

2.2.1. Multi-Channel and Multi-Head CNNs

2.2.2. Encoder–Decoder LSTM

2.3. Solar and Wind Data Preprocessing and Forecasting Model Configurations

1. Direct Multistep Forecast Strategy.

2. Recursive Multistep Forecast Strategy.

3. Direct–Recursive Hybrid Multistep Forecast Strategy.

4. Multiple Output Forecast Strategy.
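
To make the difference between the recursive multistep and the multiple-output strategies concrete, the following minimal sketch may help. It assumes a generic one-step model and a generic multi-step model passed in as plain functions. The toy models `window_mean` and `repeat_last_value` are hypothetical and serve only to show the data flow, not the networks evaluated here.

```python
from typing import Callable, List, Sequence


def recursive_forecast(
    one_step: Callable[[Sequence[float]], float],
    window: Sequence[float],
    horizon: int,
) -> List[float]:
    """Apply a one-step model repeatedly, feeding each prediction back as input."""
    buffer = list(window)
    forecasts: List[float] = []
    for _ in range(horizon):
        y_hat = one_step(buffer)
        forecasts.append(y_hat)
        # Slide the input window: drop the oldest value, append the new prediction.
        buffer = buffer[1:] + [y_hat]
    return forecasts


def multiple_output_forecast(
    multi_step: Callable[[Sequence[float]], List[float]],
    window: Sequence[float],
) -> List[float]:
    """Call a model that emits the whole forecast horizon in a single pass."""
    return multi_step(window)


def window_mean(window: Sequence[float]) -> float:
    """Hypothetical one-step model: the mean of the input window."""
    return sum(window) / len(window)


def repeat_last_value(window: Sequence[float], horizon: int = 24) -> List[float]:
    """Hypothetical multi-step model: repeat the last input value over the horizon."""
    return [window[-1]] * horizon


recursive = recursive_forecast(window_mean, [1.0, 2.0, 3.0], horizon=2)
direct = multiple_output_forecast(repeat_last_value, [1.0, 2.0, 3.0])
print(recursive, len(direct))
```

In the recursive case, errors in early steps propagate into later inputs, whereas the multiple-output case produces all steps from observed values only.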

3. Deep Learning and Conventional Forecasting Model Performance and Discussion

3.1. Deep Learning Forecasting Performance Evaluation Using Well-Established Error Metrics

3.2. Evaluation of Conventional Forecasting Performance Methods Using Error Metrics

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations and Nomenclature

| Variable | Definition |

|---|---|

| ANN | Artificial neural networks |

| ARIMA | Autoregressive integrated moving average model |

| ARMA | Autoregressive moving average model |

| BiLSTM | Bidirectional long short-term memory neural network |

| BPNN | Back propagation neural network |

| CEEMD | Complementary ensemble empirical mode decomposition |

| CI | Clearness index |

| CNN | Convolutional neural network |

| DBN | Deep belief network |

| EMD-ENN | Empirical mode decomposition and Elman neural network |

| EWT | Empirical wavelet transform |

| FFNN | Feed forward neural networks |

| Gon | Normalized extraterrestrial irradiance |

| Gsn | Normalized surface irradiance |

| HTD | Hybrid timeseries decomposition strategy |

| GSRT | General Secretariat for Research and Technology |

| HFRI | Hellenic Foundation for Research and Innovation |

| HMD | Hybrid model decomposition method |

| K | Number of hours of each day |

| LSSVM | Least-square support vector machine |

| LSTM | Long short-term memory |

| MAE | Mean absolute error |

| MAPE | Mean absolute percentage error |

| ML | Machine learning |

| MOBBSA | Multi-objective binary backtracking search algorithm |

| MSE | Mean squared error |

| NARX | Nonlinear autoregressive exogenous model |

| NDD(d) | Normalized discrete difference per day |

| NDD(h) | Normalized discrete difference per hour |

| nMAE | Normalized mean absolute error |

| nRMSE | Normalized root mean squared error |

| NWP | Numerical weather prediction forecasting model |

| obs | Observation |

| OSORELM | Online sequential outlier robust extreme learning machine method |

| RegARMA | Regression model with autoregressive moving average errors |

| RES | Renewable energy sources |

| RMSE | Root mean squared error |

| RNN | Recurrent neural networks |

| seq2seq | Sequence-to-sequence |

| SEMS | Smart energy management system |

| SVM | Support vector machine |

| TI | Turbulence intensity |

| VMD | Variational mode decomposition |

| WRF | Weather research and forecasting model |

| WT-ARIMA | Wavelet transform-autoregressive integrated moving average model |

| xi | Current value |

| xmax | Maximum original value |

| xmin | Minimum original value |

| y | Normalized value |
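
The last four entries (xi, xmax, xmin, y) suggest min-max scaling of the input data. The exact formula is not given in this section, so the sketch below assumes the common form y = (xi − xmin) / (xmax − xmin), which maps values to the range [0, 1]. The function names are hypothetical.

```python
from typing import List, Sequence


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Scale values to [0, 1] using y = (x_i - x_min) / (x_max - x_min) (assumed form)."""
    x_min, x_max = min(values), max(values)
    span = x_max - x_min
    if span == 0:
        # A constant series carries no range information; map it to zeros.
        return [0.0 for _ in values]
    return [(x - x_min) / span for x in values]


def min_max_denormalize(scaled: Sequence[float], x_min: float, x_max: float) -> List[float]:
    """Invert the scaling so that forecasts can be reported in original units."""
    return [y * (x_max - x_min) + x_min for y in scaled]


scaled = min_max_normalize([0.0, 5.0, 10.0])
restored = min_max_denormalize(scaled, 0.0, 10.0)
print(scaled, restored)
```

Forecasts produced on the normalized scale would be passed through `min_max_denormalize` before error metrics in physical units are computed.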


| Parameter | Unit |

|---|---|

| Air temperature | °C |

| Relative humidity | % |

| Windspeed | m/s |

| Wind direction | ° |

| Surface (air) pressure | Pa |

| Global irradiance on the horizontal plane | W/m² |

| Beam/direct irradiance on a plane always normal to the sun rays | W/m² |

| Diffuse irradiance on the horizontal plane | W/m² |

| Surface infrared (thermal) irradiance on a horizontal plane | W/m² |

| Extraterrestrial irradiation | W/m² |

| Parameter | Max | Min | Mean | Std | |

|---|---|---|---|---|---|

| Solar Irradiation (W/m²) | 1032 | 0 | 208 | 305 | |

| Windspeed (m/s) | 17.88 | 0 | 5.84 | 3.05 | |

| Air temperature (°C) | 29.73 | 5 | 19.11 | 4.86 | |

| Relative humidity (%) | 99.88 | 48.55 | 77.23 | 7.82 | |

| Wind direction (°) | 360 | 0 | 253.2 | 118.9 | |

| Surface (air) pressure (Pa) | 103,845 | 97,349 | 100,306 | 576 | |

| Beam/direct irradiance on a plane always normal to the sun’s rays (W/m²) | 986 | 0 | 143 | 246 | |

| Diffuse irradiance on the horizontal plane (W/m²) | 646 | 0 | 65 | 85 | |

| Extraterrestrial irradiation (W/m²) | 1294 | 0 | 344 | 429 | |

| Windspeed and Solar Irradiation Forecasting | |||||

|---|---|---|---|---|---|

| Multi-Head CNN | | Multi-Channel CNN | | Encoder–Decoder LSTM | |

| Layer | Configuration | Layer | Configuration | Layer | Configuration |

| Convolution 1 | Filters = 32 Kernel size = 3 | Convolution 1 | Filters = 32 Kernel size = 3 | LSTM 1 | Units = 200 |

| Convolution 2 | Filters = 32 Kernel size = 3 | Convolution 2 | Filters = 32 Kernel size = 3 | Repeat vector | - |

| Max-pooling 1 | Filters = 32 | Max-pooling 1 | Filters = 32 | LSTM 2 | Units = 200 |

| Flatten | - | Convolution 3 | Filters = 16 Kernel size = 3 | Dense 1 | Units = 100 |

| Concatenation | - | Max-pooling 2 | Filters = 16 | Dense 2 | Units = 1 |

| Dense 1 | Neurons = 200 | Flatten | - | - | - |

| Dense 2 | Neurons = 100 | Dense 1 | Neurons = 100 | - | - |

| Dense 3 | Neurons = 24 | Dense 2 | Neurons = 24 | - | - |

| Multi-Channel CNN / Multi-Head CNN / Encoder–Decoder LSTM |

|---|

| Optimizer: Adam |

| Activation function: Tanh |

| Mini-batch size: 16 |

| Learning rate: 10⁻⁴ |

| Epochs for windspeed forecasting: 15 |

| Epochs for solar irradiation forecasting: 50 |

| Prior inputs: 24 |
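
With 24 prior inputs, an hourly series has to be reshaped into input/output windows before training. The sketch below shows one way to do this under stated assumptions: overlapping windows with a stride of one hour, and 24 output steps (inferred from the 24-neuron output layers in the CNN configurations above). The function name `make_supervised` is hypothetical.

```python
from typing import List, Sequence, Tuple


def make_supervised(
    series: Sequence[float],
    n_in: int = 24,
    n_out: int = 24,
) -> List[Tuple[List[float], List[float]]]:
    """Split a series into (input window, target window) pairs with a stride of one step."""
    samples: List[Tuple[List[float], List[float]]] = []
    last_start = len(series) - n_in - n_out
    for start in range(last_start + 1):
        split = start + n_in
        inputs = list(series[start:split])
        targets = list(series[split:split + n_out])
        samples.append((inputs, targets))
    return samples


# Three days of toy hourly data yield 72 - 48 + 1 = 25 overlapping samples.
hourly = [float(h) for h in range(72)]
samples = make_supervised(hourly)
print(len(samples), samples[0][0][:3], samples[0][1][:3])
```

A series shorter than `n_in + n_out` yields no samples, so each monthly dataset needs at least two days of data under these assumptions.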

| Mean Squared Error (MSE) | |

| Root Mean Squared Error (RMSE) | |

| Mean Absolute Percentage Error (MAPE) | |

| Mean Absolute Error (MAE) | |

| Normalized Root Mean Squared Error (nRMSE) | |

| Coefficient of Determination (r²) | |
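
The formula column of the table above did not survive extraction, so the sketch below uses the standard textbook definitions of these metrics. Two points are assumptions rather than facts from this work: nRMSE is normalized here by the mean of the observations (other normalizers, such as the range or the maximum, are also in use), and MAPE skips zero-valued observations, which occur at night for solar irradiation.

```python
import math
from typing import Sequence


def mse(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs)


def rmse(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(mse(obs, pred))


def mae(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(o - p) for o, p in zip(obs, pred)) / len(obs)


def mape(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Mean absolute percentage error; zero observations are skipped (assumption)."""
    pairs = [(o, p) for o, p in zip(obs, pred) if o != 0]
    return 100.0 * sum(abs((o - p) / o) for o, p in pairs) / len(pairs)


def nrmse(obs: Sequence[float], pred: Sequence[float]) -> float:
    """RMSE divided by the mean observation (assumed normalizer)."""
    return rmse(obs, pred) / (sum(obs) / len(obs))


def r2(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Coefficient of determination; undefined when all observations are equal."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot


observed = [2.0, 4.0, 6.0]
forecast = [1.0, 4.0, 8.0]
print(rmse(observed, forecast), mae(observed, forecast), r2(observed, forecast))
```

Because MAPE divides by the observation, months with many near-zero values can show very large percentages even when the absolute errors are moderate.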

| (a) | |||||||||||||

| Month | MAPE (%) | | | RMSE (W/m²) | | | MAE (W/m²) | | | nRMSE | | | |

| | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | |

| January | 114.35 | 93.35 | 91.57 | 195.09 | 186.68 | 180.37 | 140.01 | 133.16 | 125.55 | 0.79 | 0.74 | 0.72 | |

| February | 81.93 | 64.95 | 58.33 | 208.35 | 187.78 | 185.58 | 157.42 | 136.27 | 128.82 | 0.61 | 0.54 | 0.51 | |

| March | 144.02 | 132.22 | 129.16 | 282.32 | 265.99 | 251.70 | 186.67 | 179.94 | 176.40 | 0.69 | 0.68 | 0.64 | |

| April | 48.67 | 42.16 | 41.49 | 153.18 | 145.49 | 141.83 | 117.96 | 102.00 | 98.78 | 0.31 | 0.28 | 0.29 | |

| May | 88.36 | 75.90 | 73.99 | 216.97 | 206.20 | 201.86 | 138.29 | 126.18 | 122.88 | 0.40 | 0.37 | 0.35 | |

| June | 24.70 | 19.06 | 17.09 | 88.48 | 84.92 | 79.83 | 35.41 | 31.94 | 27.71 | 0.14 | 0.14 | 0.12 | |

| July | 16.19 | 17.10 | 12.26 | 50.08 | 47.75 | 43.84 | 25.56 | 27.32 | 22.31 | 0.07 | 0.05 | 0.05 | |

| August | 8.61 | 8.21 | 5.86 | 25.25 | 25.12 | 22.30 | 18.23 | 19.55 | 15.82 | 0.05 | 0.05 | 0.04 | |

| September | 44.29 | 40.34 | 23.99 | 100.33 | 95.60 | 85.73 | 74.04 | 57.74 | 53.77 | 0.24 | 0.25 | 0.17 | |

| October | 69.65 | 59.54 | 49.40 | 146.39 | 141.26 | 116.15 | 113.15 | 105.63 | 93.00 | 0.49 | 0.46 | 0.43 | |

| November | 79.15 | 68.20 | 65.89 | 155.85 | 145.30 | 137.58 | 119.54 | 106.59 | 104.28 | 0.51 | 0.48 | 0.43 | |

| December | 77.23 | 72.54 | 63.77 | 156.00 | 149.17 | 133.44 | 124.83 | 109.38 | 103.32 | 0.65 | 0.60 | 0.58 | |

| Average | 66.43 | 57.80 | 52.73 | 148.19 | 140.11 | 131.69 | 104.26 | 94.64 | 89.39 | 0.41 | 0.39 | 0.36 | |

| (b) | |||||||||||||

| Month | MAPE (%) | | | RMSE (W/m²) | | | MAE (W/m²) | | | nRMSE | | | |

| | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | |

| January | 84.47 | 66.10 | 66.14 | 139.15 | 130.41 | 129.63 | 102.31 | 93.96 | 89.80 | 0.58 | 0.54 | 0.54 | |

| February | 50.36 | 44.98 | 42.23 | 148.53 | 133.71 | 132.86 | 99.92 | 97.15 | 93.55 | 0.40 | 0.39 | 0.37 | |

| March | 96.12 | 91.10 | 90.16 | 188.46 | 182.16 | 171.50 | 126.69 | 124.85 | 120.74 | 0.47 | 0.47 | 0.45 | |

| April | 36.18 | 32.10 | 32.32 | 122.56 | 109.86 | 112.59 | 88.81 | 80.30 | 78.78 | 0.23 | 0.22 | 0.23 | |

| May | 71.72 | 57.89 | 59.36 | 177.83 | 153.57 | 155.56 | 109.37 | 96.98 | 99.07 | 0.34 | 0.31 | 0.29 | |

| June | 20.21 | 15.51 | 13.99 | 72.53 | 71.31 | 65.64 | 28.64 | 26.65 | 23.40 | 0.11 | 0.12 | 0.11 | |

| July | 12.39 | 13.25 | 9.74 | 38.53 | 37.25 | 35.57 | 19.90 | 21.16 | 17.78 | 0.06 | 0.04 | 0.04 | |

| August | 6.42 | 6.59 | 4.72 | 19.05 | 19.97 | 17.74 | 14.30 | 15.50 | 12.64 | 0.04 | 0.04 | 0.03 | |

| September | 31.20 | 33.23 | 20.03 | 81.23 | 80.80 | 72.77 | 53.05 | 47.50 | 44.61 | 0.17 | 0.21 | 0.15 | |

| October | 48.21 | 41.27 | 37.98 | 98.56 | 98.01 | 90.85 | 76.27 | 72.40 | 71.21 | 0.35 | 0.33 | 0.31 | |

| November | 57.30 | 54.29 | 51.37 | 116.36 | 116.39 | 109.34 | 82.57 | 83.54 | 84.39 | 0.37 | 0.38 | 0.33 | |

| December | 58.40 | 51.00 | 45.59 | 107.10 | 107.95 | 96.64 | 84.88 | 77.35 | 76.35 | 0.46 | 0.44 | 0.42 | |

| Average | 47.75 | 42.28 | 39.47 | 109.16 | 103.45 | 99.23 | 73.89 | 69.78 | 67.69 | 0.30 | 0.29 | 0.27 | |

| (a) | |||||||||||||

| Month | MAPE (%) | | | RMSE (m/s) | | | MAE (m/s) | | | nRMSE | | | |

| | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | |

| January | 30.30 | 34.06 | 31.56 | 2.90 | 3.04 | 3.04 | 2.05 | 2.28 | 2.16 | 0.33 | 0.35 | 0.35 | |

| February | 31.68 | 38.11 | 32.79 | 2.74 | 2.92 | 2.83 | 1.99 | 2.22 | 2.02 | 0.35 | 0.37 | 0.36 | |

| March | 39.31 | 41.83 | 41.67 | 2.87 | 3.10 | 3.10 | 1.98 | 2.19 | 2.19 | 0.39 | 0.40 | 0.40 | |

| April | 44.63 | 63.19 | 48.27 | 1.21 | 1.63 | 1.33 | 1.00 | 1.38 | 1.00 | 0.22 | 0.31 | 0.24 | |

| May | 37.50 | 40.80 | 39.90 | 2.16 | 2.39 | 2.29 | 1.64 | 1.85 | 1.79 | 0.35 | 0.39 | 0.38 | |

| June | 35.59 | 36.23 | 38.72 | 1.83 | 2.05 | 2.06 | 1.45 | 1.53 | 1.55 | 0.26 | 0.28 | 0.30 | |

| July | 13.02 | 13.53 | 14.11 | 1.69 | 1.75 | 1.76 | 1.09 | 1.14 | 1.14 | 0.18 | 0.19 | 0.19 | |

| August | 17.36 | 18.87 | 18.74 | 1.75 | 2.13 | 2.00 | 1.14 | 1.32 | 1.28 | 0.25 | 0.29 | 0.26 | |

| September | 17.67 | 20.37 | 19.81 | 1.78 | 2.07 | 1.83 | 1.12 | 1.36 | 1.31 | 0.23 | 0.27 | 0.24 | |

| October | 31.35 | 41.98 | 41.27 | 2.26 | 2.68 | 2.55 | 1.41 | 1.76 | 1.73 | 0.31 | 0.37 | 0.36 | |

| November | 36.45 | 40.96 | 39.73 | 2.33 | 2.77 | 2.69 | 1.63 | 2.00 | 1.82 | 0.36 | 0.44 | 0.42 | |

| December | 25.86 | 27.80 | 29.16 | 2.59 | 2.65 | 2.63 | 1.92 | 2.09 | 2.04 | 0.29 | 0.30 | 0.30 | |

| Average | 30.06 | 34.81 | 32.98 | 2.18 | 2.43 | 2.34 | 1.54 | 1.76 | 1.67 | 0.29 | 0.33 | 0.32 | |

| (b) | |||||||||||||

| Month | MAPE (%) | | | RMSE (m/s) | | | MAE (m/s) | | | nRMSE | | | |

| | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | CNN1 | CNN2 | LSTM | |

| January | 24.98 | 26.23 | 25.94 | 2.48 | 2.40 | 2.49 | 1.78 | 1.78 | 1.77 | 0.29 | 0.28 | 0.29 | |

| February | 26.47 | 27.44 | 27.19 | 2.32 | 2.33 | 2.33 | 1.71 | 1.77 | 1.68 | 0.31 | 0.31 | 0.31 | |

| March | 34.78 | 34.91 | 37.85 | 2.55 | 2.58 | 2.61 | 1.79 | 1.88 | 1.86 | 0.33 | 0.33 | 0.35 | |

| April | 37.48 | 48.03 | 36.88 | 1.03 | 1.28 | 1.07 | 0.88 | 1.08 | 0.80 | 0.19 | 0.24 | 0.19 | |

| May | 33.70 | 34.92 | 34.30 | 1.93 | 2.06 | 1.97 | 1.44 | 1.56 | 1.59 | 0.33 | 0.33 | 0.33 | |

| June | 31.20 | 34.11 | 35.01 | 1.46 | 1.65 | 1.65 | 1.07 | 1.20 | 1.21 | 0.23 | 0.26 | 0.27 | |

| July | 11.02 | 10.47 | 11.52 | 1.44 | 1.41 | 1.44 | 0.95 | 0.90 | 0.93 | 0.16 | 0.16 | 0.16 | |

| August | 13.14 | 13.37 | 13.20 | 1.35 | 1.52 | 1.43 | 0.91 | 0.94 | 0.93 | 0.19 | 0.21 | 0.20 | |

| September | 13.41 | 15.01 | 14.62 | 1.40 | 1.56 | 1.37 | 0.88 | 0.98 | 0.97 | 0.18 | 0.21 | 0.18 | |

| October | 27.58 | 35.36 | 35.26 | 2.00 | 2.26 | 2.20 | 1.28 | 1.48 | 1.49 | 0.27 | 0.31 | 0.30 | |

| November | 30.67 | 33.39 | 31.43 | 1.93 | 2.24 | 2.15 | 1.38 | 1.58 | 1.53 | 0.30 | 0.35 | 0.33 | |

| December | 25.11 | 25.83 | 27.25 | 2.42 | 2.49 | 2.44 | 1.78 | 1.91 | 1.91 | 0.29 | 0.29 | 0.28 | |

| Average | 25.79 | 28.26 | 27.54 | 1.86 | 1.98 | 1.93 | 1.32 | 1.42 | 1.39 | 0.26 | 0.27 | 0.27 | |

| (a) | |||||||||||||

| Solar irradiation results | |||||||||||||

| Month | MAPE (%) | | | RMSE (W/m²) | | | MAE (W/m²) | | | nRMSE | | | |

| | RegARMA | NARX | LSTM | RegARMA | NARX | LSTM | RegARMA | NARX | LSTM | RegARMA | NARX | LSTM | |

| January | 146.08 | 127.72 | 91.57 | 221.53 | 206.23 | 180.37 | 154.25 | 149.91 | 125.55 | 0.91 | 0.82 | 0.72 | |

| February | 83.38 | 73.79 | 58.33 | 242.46 | 209.54 | 185.58 | 175.77 | 154.48 | 128.82 | 0.77 | 0.61 | 0.51 | |

| March | 176.89 | 160.73 | 129.16 | 291.50 | 280.21 | 251.70 | 200.03 | 195.26 | 176.40 | 0.76 | 0.73 | 0.64 | |

| April | 50.63 | 48.47 | 41.49 | 177.97 | 160.80 | 141.83 | 145.95 | 131.45 | 98.78 | 0.37 | 0.33 | 0.29 | |

| May | 88.58 | 84.86 | 73.99 | 231.43 | 224.74 | 201.86 | 140.49 | 136.35 | 122.88 | 0.46 | 0.41 | 0.35 | |

| June | 26.40 | 22.31 | 17.09 | 84.96 | 85.76 | 79.83 | 36.84 | 34.23 | 27.71 | 0.17 | 0.15 | 0.12 | |

| July | 18.57 | 15.84 | 12.26 | 49.12 | 48.01 | 43.84 | 30.54 | 27.95 | 22.31 | 0.11 | 0.08 | 0.05 | |

| August | 12.30 | 9.42 | 5.86 | 28.85 | 23.18 | 22.30 | 21.55 | 19.05 | 15.82 | 0.09 | 0.07 | 0.04 | |

| September | 51.03 | 42.04 | 23.99 | 111.34 | 98.45 | 85.73 | 77.06 | 65.22 | 53.77 | 0.32 | 0.28 | 0.17 | |

| October | 81.09 | 73.79 | 49.40 | 156.65 | 144.43 | 116.15 | 125.53 | 115.67 | 93.00 | 0.55 | 0.51 | 0.43 | |

| November | 87.39 | 74.39 | 65.89 | 177.22 | 158.83 | 137.58 | 123.56 | 107.99 | 104.28 | 0.61 | 0.55 | 0.43 | |

| December | 87.00 | 82.15 | 63.77 | 174.47 | 159.71 | 133.44 | 136.24 | 123.61 | 103.32 | 0.80 | 0.75 | 0.58 | |

| Average | 75.78 | 67.96 | 52.73 | 162.29 | 149.99 | 131.69 | 113.98 | 105.10 | 89.39 | 0.49 | 0.44 | 0.36 | |

| (b) | |||||||||||||

| Solar irradiation results | |||||||||||||

| Month | MAPE (%) | | | RMSE (W/m²) | | | MAE (W/m²) | | | nRMSE | | | |

| | RegARMA | NARX | LSTM | RegARMA | NARX | LSTM | RegARMA | NARX | LSTM | RegARMA | NARX | LSTM | |

| January | 105.26 | 95.25 | 66.14 | 158.32 | 151.70 | 129.63 | 113.13 | 110.70 | 89.80 | 0.69 | 0.63 | 0.54 | |

| February | 55.12 | 54.17 | 42.23 | 162.22 | 160.52 | 132.86 | 118.01 | 114.86 | 93.55 | 0.54 | 0.46 | 0.37 | |

| March | 124.37 | 115.31 | 90.16 | 204.58 | 202.59 | 171.50 | 144.22 | 140.60 | 120.74 | 0.55 | 0.55 | 0.45 | |

| April | 39.78 | 39.55 | 32.32 | 140.35 | 130.17 | 112.59 | 115.05 | 108.27 | 78.78 | 0.30 | 0.27 | 0.23 | |

| May | 74.63 | 68.87 | 59.36 | 194.54 | 179.89 | 155.56 | 120.10 | 111.14 | 99.07 | 0.40 | 0.36 | 0.29 | |

| June | 22.45 | 19.08 | 13.99 | 72.48 | 74.46 | 65.64 | 31.38 | 29.93 | 23.40 | 0.14 | 0.13 | 0.11 | |

| July | 14.75 | 13.01 | 9.74 | 39.57 | 39.80 | 35.57 | 24.54 | 23.02 | 17.78 | 0.09 | 0.07 | 0.04 | |

| August | 9.85 | 7.89 | 4.72 | 23.42 | 19.14 | 17.74 | 17.56 | 16.01 | 12.64 | 0.07 | 0.06 | 0.03 | |

| September | 38.19 | 35.88 | 20.03 | 84.49 | 85.88 | 72.77 | 58.86 | 56.80 | 44.61 | 0.25 | 0.23 | 0.15 | |

| October | 57.16 | 53.87 | 37.98 | 112.32 | 106.36 | 90.85 | 90.22 | 84.26 | 71.21 | 0.40 | 0.39 | 0.31 | |

| November | 63.49 | 62.14 | 51.37 | 132.11 | 133.82 | 109.34 | 90.94 | 90.33 | 84.39 | 0.46 | 0.45 | 0.33 | |

| December | 63.62 | 61.68 | 45.59 | 126.60 | 120.25 | 96.64 | 99.81 | 93.75 | 76.35 | 0.60 | 0.57 | 0.42 | |

| Average | 55.72 | 52.23 | 39.47 | 120.92 | 117.05 | 99.22 | 85.32 | 81.64 | 67.69 | 0.37 | 0.35 | 0.27 | |
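The four column groups in the solar irradiation tables above (MAPE, RMSE, MAE, nRMSE) are standard point-forecast error metrics. The following is a minimal sketch of how such metrics are commonly computed, not the authors' code. Two details are assumptions made for illustration: zero-valued observations (such as night-time irradiation) are skipped in MAPE to avoid division by zero, and nRMSE is normalized by the mean of the observations unless another `scale` is passed in.

```python
import math

def mae(actual, forecast):
    """Mean absolute error, in the units of the data."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)

def rmse(actual, forecast):
    """Root mean squared error, in the units of the data."""
    return math.sqrt(
        sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)
    )

def mape(actual, forecast):
    """Mean absolute percentage error, in percent.

    Assumption: pairs with a zero observation are skipped, since the
    percentage error is undefined there.
    """
    pairs = [(a, f) for a, f in zip(actual, forecast) if a != 0]
    return 100.0 * sum(abs((a - f) / a) for a, f in pairs) / len(pairs)

def nrmse(actual, forecast, scale=None):
    """RMSE divided by a normalizing scale (dimensionless).

    Assumption: the mean of the observations is used when no scale
    is given; other conventions (range, capacity) are also common.
    """
    if scale is None:
        scale = sum(actual) / len(actual)
    return rmse(actual, forecast) / scale

# Hypothetical hourly values, for illustration only
observed = [100.0, 200.0, 400.0]
predicted = [110.0, 180.0, 400.0]
print(mae(observed, predicted), rmse(observed, predicted))
print(mape(observed, predicted), nrmse(observed, predicted))
```

Because MAPE divides by the observation, months with many low observed values can show very large percentage errors even when RMSE and MAE are moderate, which is worth keeping in mind when comparing the MAPE columns across months.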

(a)

Windspeed results

| Month | MAPE (%): Reg ARMA | MAPE (%): NARX | MAPE (%): CNN1 | RMSE (m/s): Reg ARMA | RMSE (m/s): NARX | RMSE (m/s): CNN1 | MAE (m/s): Reg ARMA | MAE (m/s): NARX | MAE (m/s): CNN1 | nRMSE: Reg ARMA | nRMSE: NARX | nRMSE: CNN1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| January | 48.81 | 40.09 | 30.30 | 3.41 | 3.23 | 2.90 | 2.71 | 2.40 | 2.05 | 0.39 | 0.37 | 0.33 |
| February | 45.27 | 37.52 | 31.68 | 2.99 | 2.94 | 2.74 | 2.28 | 2.37 | 1.99 | 0.39 | 0.40 | 0.35 |
| March | 49.48 | 47.56 | 39.31 | 3.27 | 3.26 | 2.87 | 2.30 | 2.30 | 1.98 | 0.43 | 0.42 | 0.39 |
| April | 72.15 | 66.14 | 44.63 | 1.92 | 1.69 | 1.21 | 1.55 | 1.40 | 1.00 | 0.36 | 0.32 | 0.22 |
| May | 44.37 | 42.37 | 37.50 | 2.61 | 2.48 | 2.16 | 1.96 | 1.89 | 1.64 | 0.43 | 0.41 | 0.35 |
| June | 38.20 | 36.10 | 35.59 | 1.95 | 1.84 | 1.83 | 1.55 | 1.52 | 1.45 | 0.29 | 0.27 | 0.26 |
| July | 19.06 | 15.21 | 13.02 | 2.44 | 2.05 | 1.69 | 1.71 | 1.32 | 1.09 | 0.27 | 0.23 | 0.18 |
| August | 25.03 | 22.05 | 17.36 | 2.22 | 2.16 | 1.75 | 1.62 | 1.41 | 1.14 | 0.30 | 0.29 | 0.25 |
| September | 25.83 | 22.43 | 17.67 | 2.23 | 2.22 | 1.78 | 1.70 | 1.58 | 1.12 | 0.30 | 0.29 | 0.23 |
| October | 56.67 | 50.04 | 31.35 | 2.87 | 2.82 | 2.26 | 1.95 | 1.90 | 1.41 | 0.39 | 0.39 | 0.31 |
| November | 52.14 | 49.50 | 36.45 | 2.88 | 2.88 | 2.33 | 2.10 | 2.07 | 1.63 | 0.45 | 0.44 | 0.36 |
| December | 33.75 | 31.12 | 25.86 | 2.78 | 2.74 | 2.59 | 2.17 | 2.15 | 1.92 | 0.31 | 0.32 | 0.29 |
| Average | 42.56 | 38.34 | 30.06 | 2.63 | 2.53 | 2.18 | 1.97 | 1.86 | 1.54 | 0.36 | 0.35 | 0.29 |

(b)

Windspeed results

| Month | MAPE (%): Reg ARMA | MAPE (%): NARX | MAPE (%): CNN1 | RMSE (m/s): Reg ARMA | RMSE (m/s): NARX | RMSE (m/s): CNN1 | MAE (m/s): Reg ARMA | MAE (m/s): NARX | MAE (m/s): CNN1 | nRMSE: Reg ARMA | nRMSE: NARX | nRMSE: CNN1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| January | 38.58 | 33.92 | 24.98 | 2.78 | 2.76 | 2.48 | 2.15 | 2.06 | 1.78 | 0.32 | 0.33 | 0.29 |
| February | 34.02 | 31.14 | 26.47 | 2.51 | 2.58 | 2.32 | 1.87 | 2.04 | 1.71 | 0.34 | 0.35 | 0.31 |
| March | 42.18 | 40.96 | 34.78 | 2.82 | 2.82 | 2.55 | 2.02 | 2.13 | 1.79 | 0.37 | 0.37 | 0.33 |
| April | 58.19 | 52.33 | 37.48 | 1.59 | 1.39 | 1.03 | 1.24 | 1.16 | 0.88 | 0.29 | 0.27 | 0.19 |
| May | 40.05 | 38.17 | 33.70 | 2.33 | 2.22 | 1.93 | 1.73 | 1.70 | 1.44 | 0.38 | 0.37 | 0.33 |
| June | 43.96 | 38.94 | 31.20 | 1.90 | 1.82 | 1.46 | 1.44 | 1.34 | 1.07 | 0.30 | 0.29 | 0.23 |
| July | 15.47 | 12.73 | 11.02 | 1.99 | 1.73 | 1.44 | 1.41 | 1.11 | 0.95 | 0.22 | 0.20 | 0.16 |
| August | 18.23 | 15.99 | 13.14 | 1.60 | 1.62 | 1.35 | 1.19 | 1.05 | 0.91 | 0.22 | 0.23 | 0.19 |
| September | 19.42 | 16.87 | 13.41 | 1.70 | 1.74 | 1.40 | 1.31 | 1.21 | 0.88 | 0.23 | 0.24 | 0.18 |
| October | 48.86 | 45.15 | 27.58 | 2.51 | 2.50 | 2.00 | 1.71 | 1.69 | 1.28 | 0.34 | 0.34 | 0.27 |
| November | 43.60 | 40.93 | 30.67 | 2.36 | 2.40 | 1.93 | 1.73 | 1.87 | 1.38 | 0.37 | 0.37 | 0.30 |
| December | 32.36 | 30.44 | 25.11 | 2.69 | 2.68 | 2.42 | 2.07 | 2.04 | 1.78 | 0.31 | 0.30 | 0.29 |
| Average | 36.24 | 33.13 | 25.80 | 2.23 | 2.19 | 1.86 | 1.66 | 1.62 | 1.32 | 0.31 | 0.30 | 0.26 |
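The "Average" row in the windspeed tables above appears consistent with a plain arithmetic mean of the twelve monthly values, rounded to two decimals. The short check below illustrates this for the CNN1 MAPE column of windspeed table (a); the variable names are illustrative and the snippet is a reader-side sanity check, not part of the original study.

```python
# CNN1 MAPE (%) by month, January to December, copied from windspeed table (a)
cnn1_mape = [30.30, 31.68, 39.31, 44.63, 37.50, 35.59,
             13.02, 17.36, 17.67, 31.35, 36.45, 25.86]

# Unweighted mean over the twelve months, rounded as in the table
average_mape = round(sum(cnn1_mape) / len(cnn1_mape), 2)
print(average_mape)  # 30.06, matching the reported Average row
```

Note that an unweighted monthly mean gives every month the same weight regardless of how many forecast hours it contains.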

(a)

|  | January | February | March | April | May | June | July | August | September | October | November | December |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CNN1 MAPE | 30.30 | 31.68 | 39.31 | 44.63 | 37.50 | 35.59 | 13.02 | 17.36 | 17.67 | 31.35 | 36.45 | 25.86 |
| CNN1 MAPE improvement over NARX | 24.42% | 15.57% | 17.35% | 32.52% | 11.49% | 1.41% | 14.40% | 21.27% | 21.22% | 37.35% | 26.36% | 16.90% |
| Average TI | 0.402 | 0.459 | 0.429 | 0.592 | 0.388 | 0.434 | 0.226 | 0.303 | 0.333 | 0.519 | 0.461 | 0.408 |

(b)

|  | January | February | March | April | May | June | July | August | September | October | November | December |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CNN1 MAPE | 24.98 | 26.47 | 34.78 | 37.48 | 33.70 | 31.20 | 11.02 | 13.14 | 13.41 | 27.58 | 30.67 | 25.11 |
| CNN1 MAPE improvement over NARX | 26.36% | 15.00% | 15.09% | 28.38% | 11.71% | 19.88% | 13.43% | 17.82% | 20.51% | 38.91% | 25.07% | 17.51% |
| Average TI | 0.402 | 0.459 | 0.429 | 0.592 | 0.388 | 0.434 | 0.226 | 0.303 | 0.333 | 0.519 | 0.461 | 0.408 |
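The "CNN1 MAPE improvement over NARX" row can be reproduced from the windspeed MAPE columns as a relative reduction with NARX as the baseline, that is, 100 × (NARX − CNN1) / NARX. The sketch below recomputes three entries of table (a) from the published MAPE values; the function name `relative_improvement` is an illustrative choice, not taken from the paper.

```python
def relative_improvement(baseline, candidate):
    """Percentage reduction of an error metric relative to a baseline."""
    return 100.0 * (baseline - candidate) / baseline

# Windspeed MAPE (%) for three months, copied from windspeed table (a)
narx_mape = {"January": 40.09, "April": 66.14, "June": 36.10}
cnn1_mape_by_month = {"January": 30.30, "April": 44.63, "June": 35.59}

improvement = {
    month: round(relative_improvement(narx_mape[month], cnn1_mape_by_month[month]), 2)
    for month in narx_mape
}
print(improvement)  # {'January': 24.42, 'April': 32.52, 'June': 1.41}
```

Because the improvement is relative to the NARX error, a small absolute gap can still appear as a sizeable percentage in months where the baseline MAPE is already low.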

(a)

|  | January | February | March | April | May | June | July | August | September | October | November | December |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| LSTM MAPE | 91.57 | 58.33 | 129.16 | 41.49 | 73.99 | 17.09 | 12.26 | 5.86 | 23.99 | 49.40 | 65.89 | 63.77 |
| LSTM MAPE improvement over NARX | 28.30% | 20.95% | 19.64% | 14.40% | 12.81% | 23.40% | 22.60% | 37.79% | 42.94% | 33.05% | 11.43% | 22.37% |
| Average CI | 0.42 | 0.45 | 0.49 | 0.56 | 0.60 | 0.64 | 0.65 | 0.64 | 0.62 | 0.55 | 0.50 | 0.43 |

(b)

|  | January | February | March | April | May | June | July | August | September | October | November | December |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| LSTM MAPE | 66.14 | 42.23 | 90.16 | 32.32 | 59.36 | 13.99 | 9.74 | 4.72 | 20.03 | 37.98 | 51.37 | 45.59 |
| LSTM MAPE improvement over NARX | 30.56% | 22.04% | 21.81% | 18.28% | 13.81% | 26.68% | 25.13% | 40.18% | 44.18% | 29.50% | 17.33% | 26.09% |
| Average CI | 0.42 | 0.45 | 0.49 | 0.56 | 0.60 | 0.64 | 0.65 | 0.64 | 0.62 | 0.55 | 0.50 | 0.43 |

(a)

|  | Method | January | February | March | April | May | June | July | August | September | October | November | December |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Windspeed forecasting | CNN1 | 0.74 | 0.72 | 0.71 | 0.68 | 0.70 | 0.72 | 0.80 | 0.78 | 0.78 | 0.73 | 0.70 | 0.74 |
| Solar irradiation forecasting | LSTM | 0.64 | 0.71 | 0.59 | 0.75 | 0.68 | 0.86 | 0.87 | 0.92 | 0.85 | 0.76 | 0.72 | 0.72 |

(b)

|  | Method | January | February | March | April | May | June | July | August | September | October | November | December |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Windspeed forecasting | CNN1 | 0.80 | 0.78 | 0.77 | 0.75 | 0.78 | 0.79 | 0.87 | 0.85 | 0.85 | 0.81 | 0.78 | 0.81 |
| Solar irradiation forecasting | LSTM | 0.71 | 0.78 | 0.67 | 0.84 | 0.74 | 0.95 | 0.95 | 0.97 | 0.93 | 0.85 | 0.80 | 0.79 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Blazakis, K.; Katsigiannis, Y.; Stavrakakis, G. One-Day-Ahead Solar Irradiation and Windspeed Forecasting with Advanced Deep Learning Techniques. Energies 2022, 15, 4361. https://doi.org/10.3390/en15124361
