Comparative Analysis of Machine Learning Models for Day-Ahead Photovoltaic Power Production Forecasting

Abstract

1. Introduction

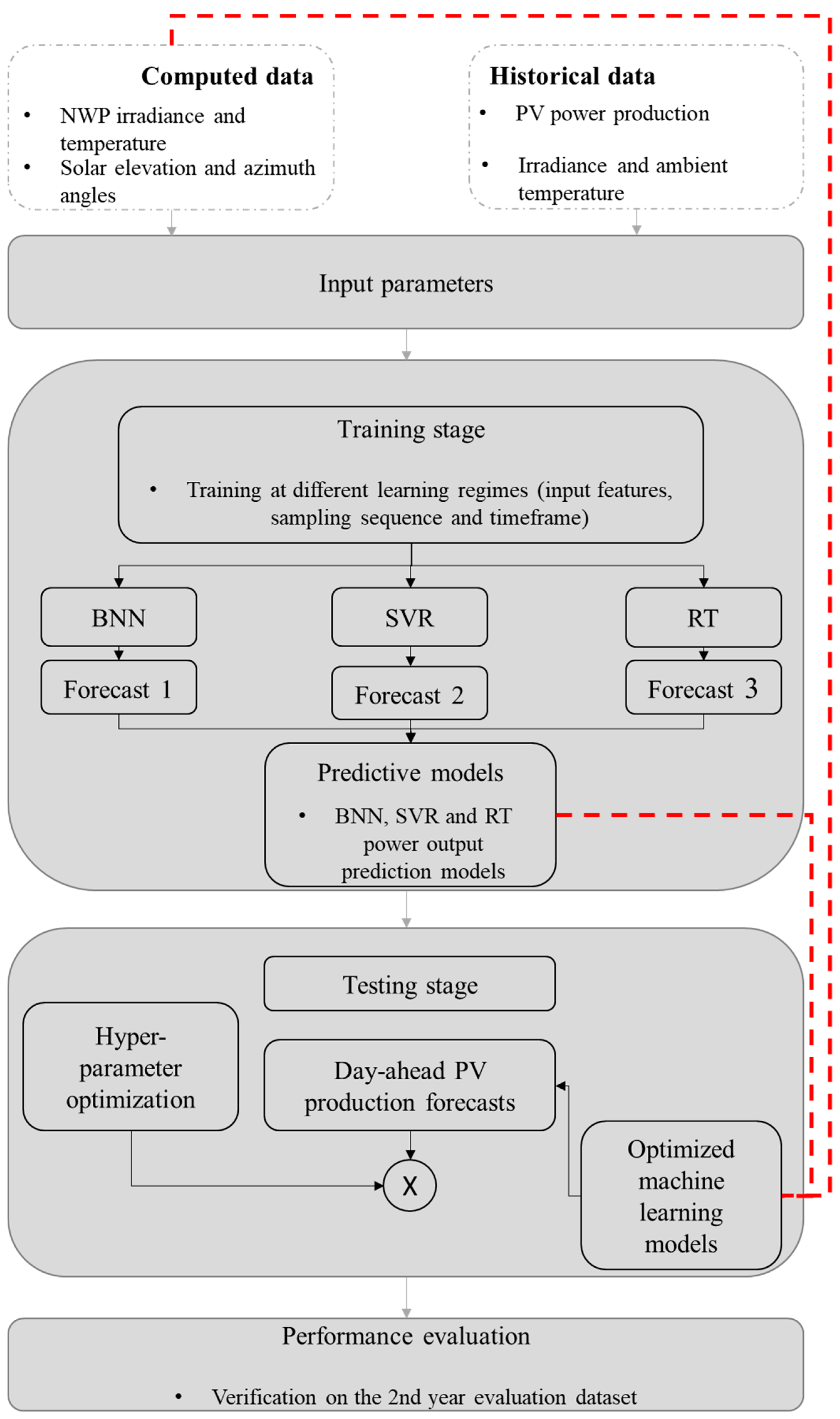

2. Materials and Methods

- The Bayesian neural network (BNN), support vector regression (SVR), and regression tree (RT) models were trained with identical inputs (same input range, sampling rate, and parameters);

- The hyperparameters of the trained models were then optimized through a series of empirical and statistical procedures;

- The optimized models were evaluated using a series of performance evaluation techniques.
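The three steps above can be sketched as a small train, tune, and evaluate loop. This is a minimal illustration under stated assumptions, not the authors' implementation: a toy k-nearest-neighbour regressor stands in for the BNN, SVR, and RT models, the number of neighbours `k` is the only hyperparameter searched, and the 70/30 chronological split and the synthetic irradiance/power data are hypothetical. Every candidate sees the same inputs and the same validation split, mirroring the requirement that all models share identical input features.

```python
import math


def knn_predict(train_x, train_y, x, k):
    """Toy stand-in model: average the targets of the k nearest training inputs."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def tune_k(x, y, candidates, train_fraction=0.7):
    """Pick the k with the lowest validation RMSE (hypothetical chronological split)."""
    cut = int(len(x) * train_fraction)
    train_x, train_y = x[:cut], y[:cut]
    val_x, val_y = x[cut:], y[cut:]
    # Every candidate is scored on exactly the same inputs and validation data.
    scores = {
        k: rmse(val_y, [knn_predict(train_x, train_y, v, k) for v in val_x])
        for k in candidates
    }
    best_k = min(scores, key=scores.get)
    return best_k, scores


# Hypothetical data: irradiance (kW/m^2) and PV power (kW) with small deterministic noise.
irradiance = [((i * 37) % 100) / 100 for i in range(200)]
power = [0.9 * g + 0.02 * ((i % 5) - 2) for i, g in enumerate(irradiance)]

best_k, scores = tune_k(irradiance, power, candidates=[1, 3, 5, 9])
print("best k:", best_k, "validation RMSE:", round(scores[best_k], 4))
```

In a real comparison, the tuned models would then be scored on a separate, held-out test period rather than on the validation data used for tuning.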

2.1. Experimental Setup

2.2. PV Power Output Predictive Models

2.2.1. Bayesian Neural Networks

2.2.2. Support Vector Regression

2.2.3. Regression Trees

2.3. Performance Evaluation

3. Results

3.1. Impact of Input Features

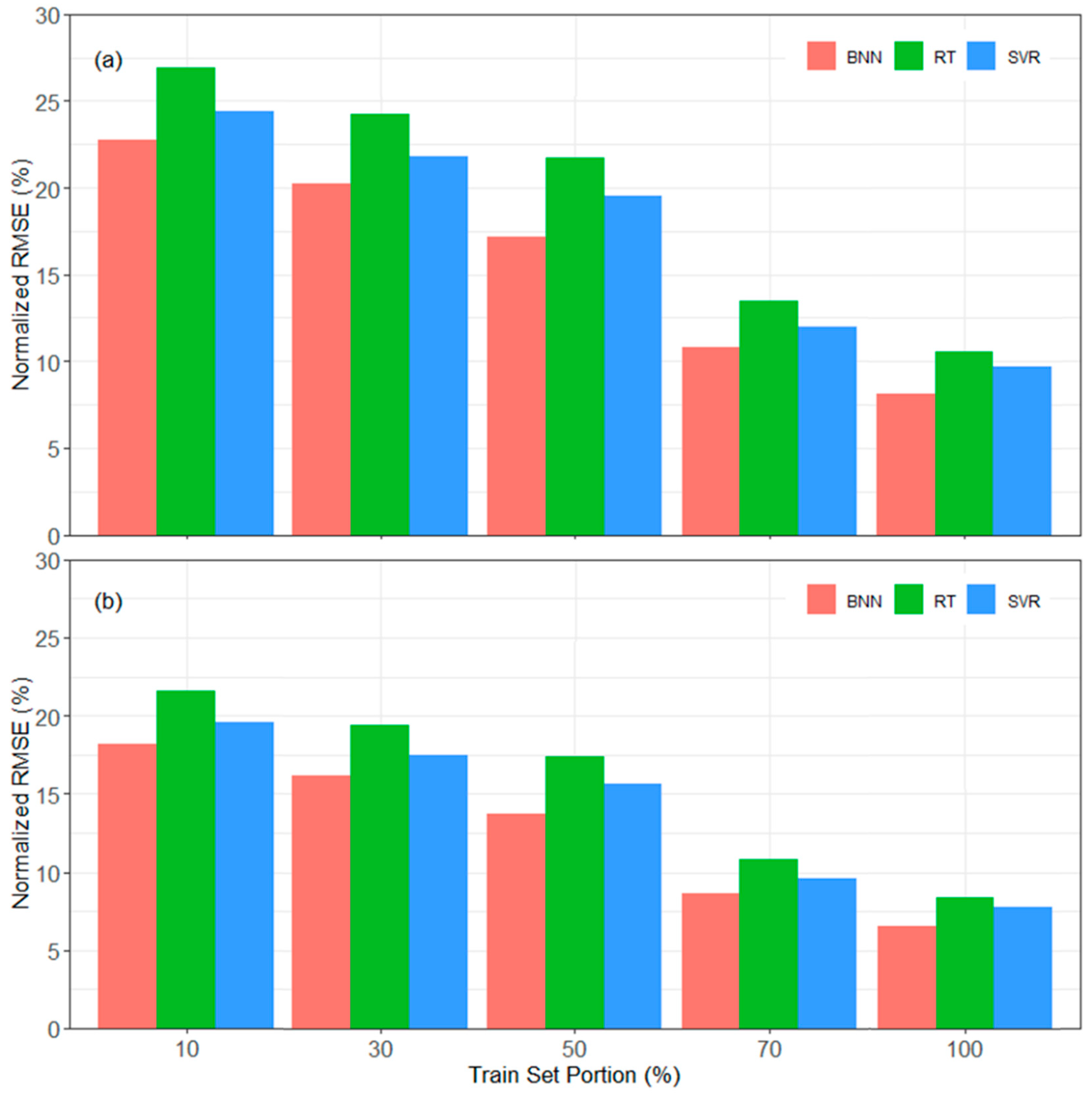

3.2. Impact of Training Set Timeframe

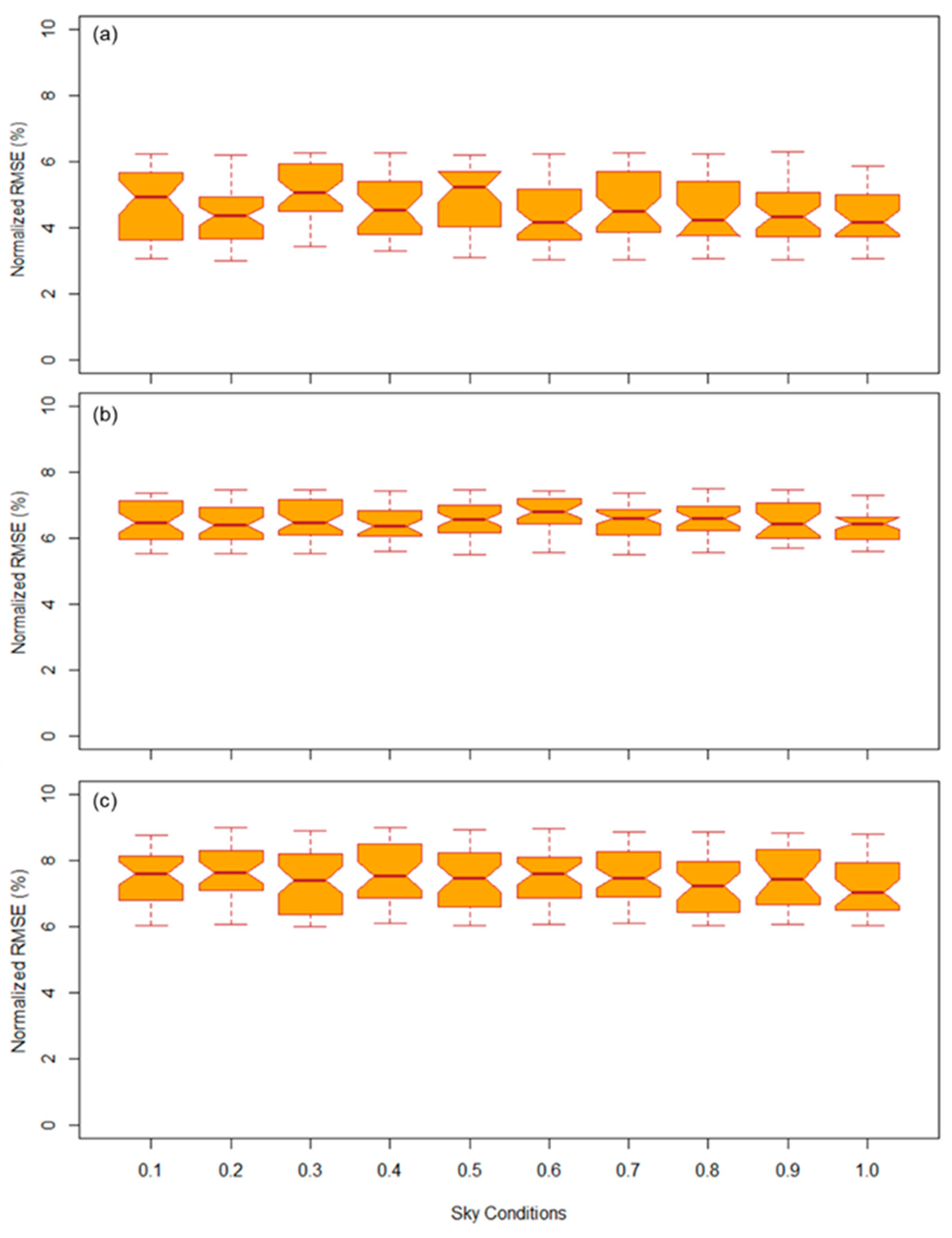

3.3. Impact of Irradiance Condition Filtering

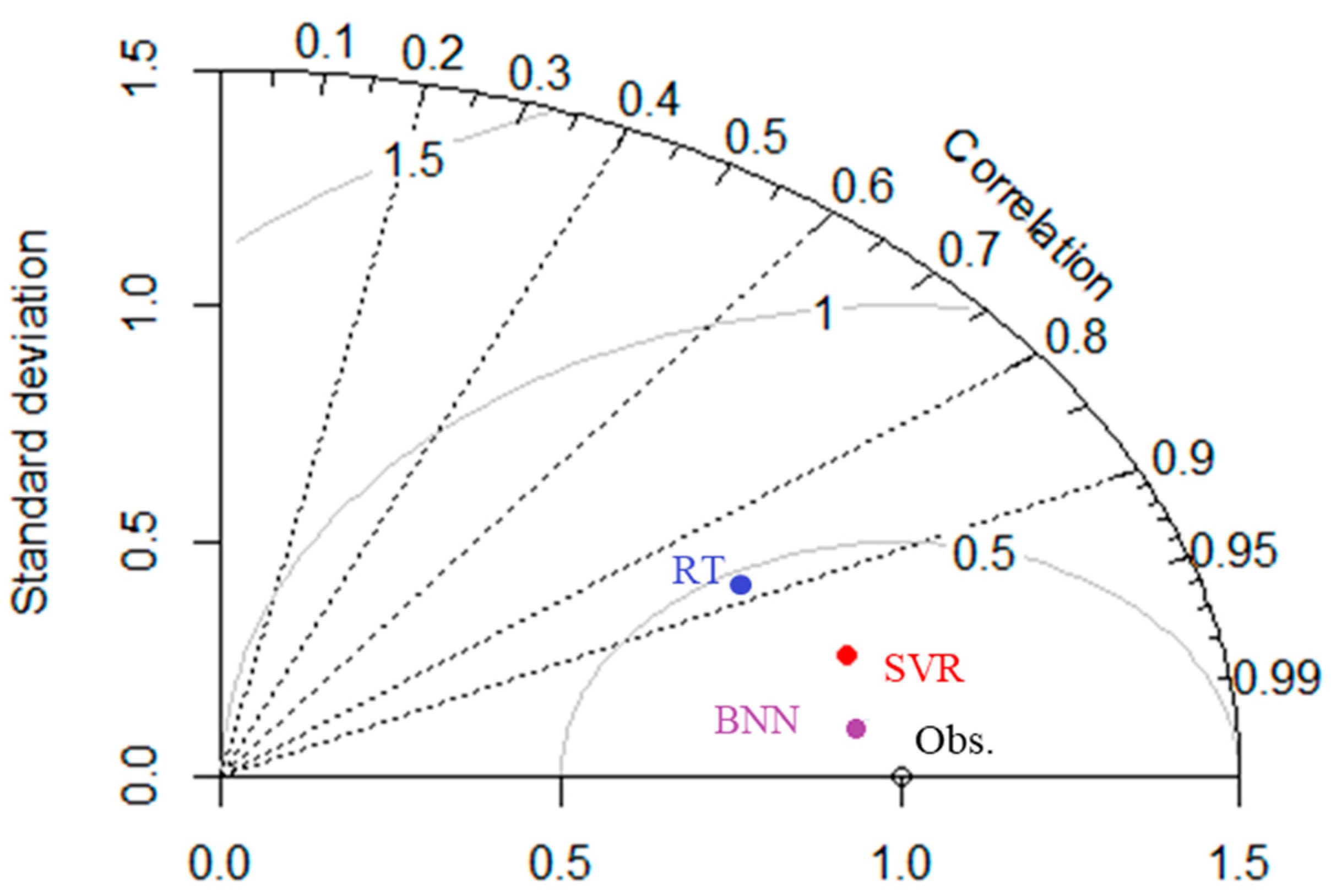

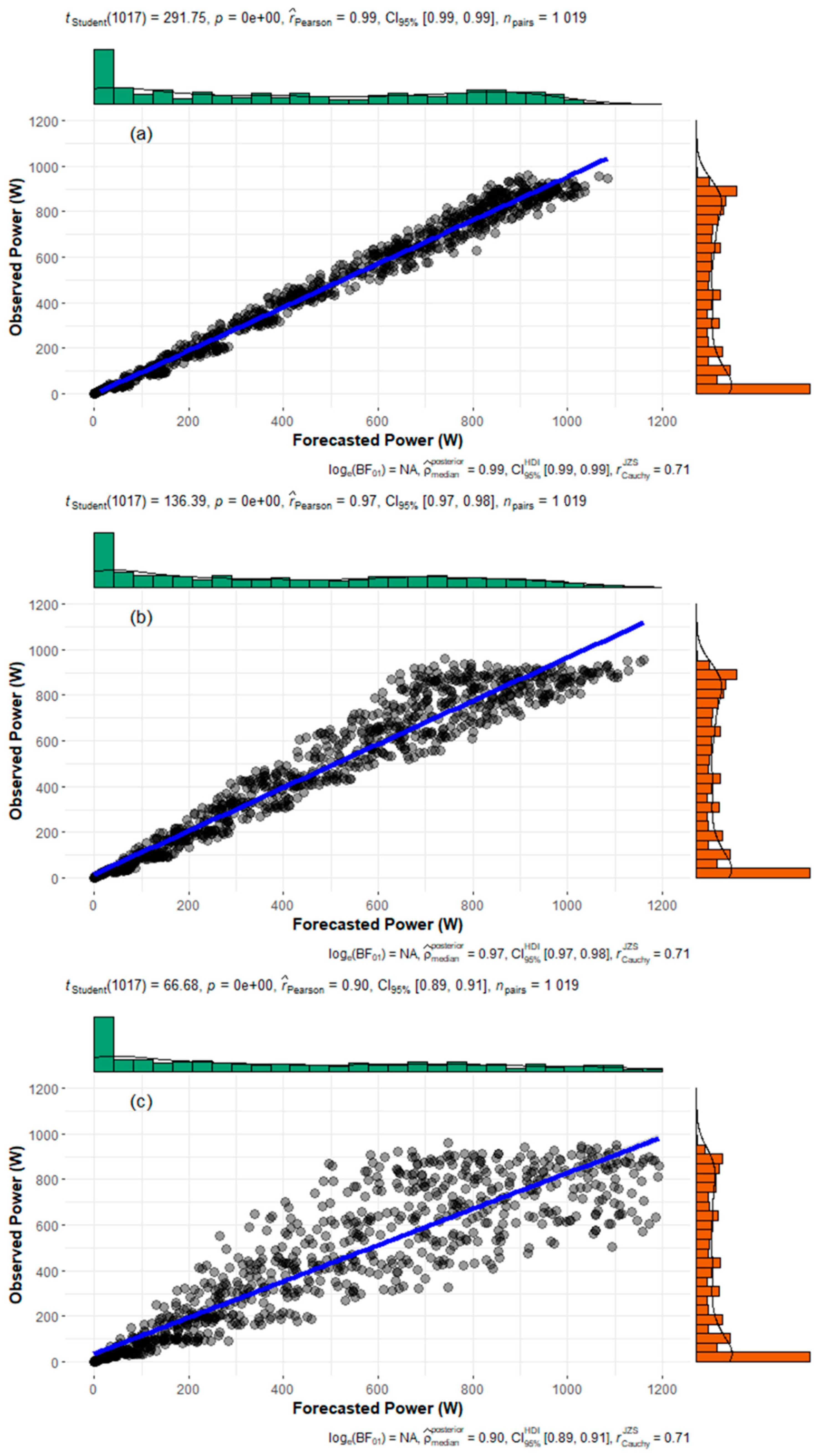

3.4. Optimized Day-Ahead PV Generation Forecasting Performance

4. Discussion

- Accurate day-ahead PV production forecasts were achieved without on-site weather measurements, by feeding the machine learning models with input data computed from numerical weather prediction (NWP) models and solar position algorithms (, , and ). Compared with training on the respective on-site measured data, using these calculated inputs yielded absolute improvements of up to 0.77% in nRMSE and 1.31% in MAPE. This improvement is attributed to the correction of underlying biases in the NWP forecast data when the models are trained on those data.

- Training the machine learning models over longer timeframes resulted in lower errors. This is attributed to the general ability of data-driven algorithms to capture hidden patterns from larger amounts of data.

- Applying an irradiance condition filter when training data-driven PV production forecasting models improved their performance. With the high irradiance condition filter (≥ 600 W/m²), the errors of all models decreased by 1.06–1.97% in nRMSE and 0.71–2.22% in MAPE (absolute differences) compared with the results obtained without an irradiance condition filter, making this filtering stage an important step in day-ahead data-driven methodologies. This can be attributed to the higher dispersion of power output under low and medium irradiance conditions (< 0.6 kW/m²), caused by low-light and thermal effects, which in turn reduces forecasting accuracy.

- Overall, the BNN model outperformed the other investigated models (SVR and RT). More specifically, the optimally trained BNN consistently achieved the lowest errors, with an nRMSE below 5% (4.53%), while the SVR and RT models were generally less accurate. Possible explanations include the ability of BNNs to represent multiple plausible models through a probability distribution over their parameters, and to become more certain as the amount of training data increases. The BNN model was substantially more accurate than the SVR and RT models for all sky conditions. This makes BNN approaches well suited to forecasting studies and preferable to other elaborate and computationally intensive techniques.
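The point that Bayesian models hold a probability distribution over their parameters and become more certain with more data can be illustrated, under heavy simplification, with conjugate Bayesian linear regression on a single weight. This is not the paper's BNN: it is a one-parameter Gaussian model chosen because its posterior has a closed form, and the prior precision `alpha`, noise precision `beta`, and the synthetic irradiance/power data are all hypothetical. A BNN applies the same idea to every network weight, usually with approximate rather than exact inference.

```python
import math


def posterior_weight(xs, ys, alpha=1.0, beta=400.0):
    """Conjugate Gaussian posterior for y = w * x + noise.

    alpha: prior precision of w (prior w ~ N(0, 1/alpha)), hypothetical value.
    beta:  noise precision (noise std = 1/sqrt(beta)), hypothetical value.
    Returns the posterior mean and standard deviation of w.
    """
    precision = alpha + beta * sum(x * x for x in xs)
    mean = beta * sum(x * y for x, y in zip(xs, ys)) / precision
    return mean, math.sqrt(1.0 / precision)


# Hypothetical data: normalized irradiance in [0.1, 1.0) and power with true w = 0.8.
xs = [0.1 + 0.9 * ((i * 7919) % 1000) / 1000 for i in range(500)]
ys = [0.8 * x + 0.05 * math.sin(i) for i, x in enumerate(xs)]

# The posterior standard deviation of w shrinks as more samples are observed.
for n in (5, 50, 500):
    mean, std = posterior_weight(xs[:n], ys[:n])
    print(f"n={n:3d}  w = {mean:.3f} +/- {std:.3f}")
```

Because the posterior precision grows with every additional sample, the spread of plausible weights narrows monotonically, which is the behaviour the discussion refers to as becoming more certain with increasing data.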

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Manufacturer and Model | Manufacturer’s Headquarters | Accuracy |
|---|---|---|---|
| Data acquisition | Campbell Scientific CR1000 | Logan, UT, USA | ±0.06% |
| Ambient temperature | Rotronic HC2A-S3 | Bassersdorf, Switzerland | ±0.1 °C at 23 °C |
| In-plane irradiance | Kipp & Zonen CMP11 | Delft, The Netherlands | ±2% expected daily accuracy; ±20 W/m² at 1000 W/m² |
| DC voltage | Muller Ziegler Ugt | Gunzenhausen, Germany | ±0.5% |
| DC current | Muller Ziegler Igt | Gunzenhausen, Germany | ±0.5% |
| AC energy | Muller Ziegler EZW | Gunzenhausen, Germany | ±1% |

| Inputs | Timeframe Partition | Sampling | Output |
|---|---|---|---|
| , , and | 10–70% (at 20% resolution) | Sequential/Random | |
| , , and | 100% (entire year) | - | |
| , , and | 10–70% (at 20% resolution) | Sequential/Random | |
| , , and | 100% (entire year) | - | |
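The timeframe partitions in the table above (10–70% of the year in 20% steps, sampled sequentially or randomly, plus the entire year) can be generated as index sets. This is a sketch under assumptions not stated in the table: one year of hourly samples (8760), a sequential block that starts at the first sample of the year, and a fixed random seed for reproducibility; the function name `training_indices` is hypothetical.

```python
import random

# 10-70% in 20% steps, plus the entire year.
FRACTIONS = [0.1, 0.3, 0.5, 0.7, 1.0]


def training_indices(n_samples, fraction, sampling="sequential", seed=0):
    """Return sorted indices of the samples selected for training."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    if fraction == 1.0:
        return list(range(n_samples))  # entire year: sampling mode is irrelevant
    size = int(round(n_samples * fraction))
    if sampling == "sequential":
        # Contiguous block from the start of the year (assumed start point).
        return list(range(size))
    if sampling == "random":
        rng = random.Random(seed)
        return sorted(rng.sample(range(n_samples), size))
    raise ValueError(f"unknown sampling mode: {sampling}")


n_hours = 8760  # hypothetical: one year of hourly samples
for f in FRACTIONS:
    seq = training_indices(n_hours, f, "sequential")
    rnd = training_indices(n_hours, f, "random", seed=42)
    print(f"{f:.0%}: {len(seq)} sequential, {len(rnd)} random samples")
```

Sequential sampling keeps seasonal structure contiguous (for example, only winter months at 10%), whereas random sampling spreads the same number of samples across all seasons, which is one reason the two schemes can yield different errors for the same partition size.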

| Model | nRMSE (%) | MAPE (%) |
|---|---|---|
| BNN | 6.95 | 6.07 |
| SVR | 8.51 | 7.92 |
| RT | 8.97 | 8.12 |

| Model | nRMSE (%) | MAPE (%) |
|---|---|---|
| BNN | 6.51 | 5.39 |
| SVR | 7.74 | 6.61 |
| RT | 8.43 | 6.98 |
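The discussion attributes the gain from calculated inputs to the correction of biases in the NWP forecast data. One plausible reading of that mechanism can be shown with a deliberately simplified linear model: a model trained on measured irradiance but fed NWP forecasts at prediction time inherits the forecast bias, whereas a model trained on the forecasts themselves learns a mapping that absorbs it. The linear irradiance-to-power relation and the forecast bias (`0.85 * g + 0.05`) are hypothetical, and the real models are nonlinear.

```python
import math


def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Hypothetical data: measured irradiance (kW/m^2), PV power (kW), biased NWP forecast.
measured_g = [0.1 * i for i in range(1, 11)]
power = [0.9 * g for g in measured_g]
nwp_g = [0.85 * g + 0.05 for g in measured_g]  # assumed systematic NWP bias

# (a) Train on on-site measurements; only NWP forecasts exist at prediction time.
a_slope, a_icpt = fit_line(measured_g, power)
pred_a = [a_slope * g + a_icpt for g in nwp_g]

# (b) Train directly on the NWP forecasts: the fitted line absorbs the bias.
b_slope, b_icpt = fit_line(nwp_g, power)
pred_b = [b_slope * g + b_icpt for g in nwp_g]

rmse_a, rmse_b = rmse(power, pred_a), rmse(power, pred_b)
print(f"trained on measured: {rmse_a:.4f} kW, trained on NWP: {rmse_b:.4f} kW")
```

Only a systematic (learnable) bias can be removed this way; random NWP errors remain and still limit day-ahead accuracy.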

| Irradiance | BNN nRMSE (%) | BNN MAPE (%) | SVR nRMSE (%) | SVR MAPE (%) | RT nRMSE (%) | RT MAPE (%) |
|---|---|---|---|---|---|---|
| < 600 W/m² | 5.32 | 3.78 | 6.82 | 6.14 | 8.09 | 7.85 |
| ≥ 600 W/m² | 4.53 | 3.17 | 6.37 | 5.83 | 7.37 | 6.27 |
| No filter | 6.51 | 5.39 | 7.74 | 6.61 | 8.43 | 6.98 |
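The irradiance condition filter and the improvement ranges quoted in the discussion can be checked against the table above. The sketch assumes samples stored as `(irradiance_w_m2, power_w)` tuples (a hypothetical layout) and computes the absolute error reductions of the ≥ 600 W/m² filter relative to no filter from the rounded table values. Computed this way, the upper nRMSE bound is 1.98 rather than the 1.97 quoted in the discussion, a last-digit difference that may stem from rounding of the tabulated values.

```python
THRESHOLD_W_M2 = 600.0


def irradiance_filter(samples, threshold=THRESHOLD_W_M2, keep_high=True):
    """Keep samples at or above (keep_high=True) or below the irradiance threshold."""
    return [s for s in samples if (s[0] >= threshold) == keep_high]


# (nRMSE %, MAPE %) per model, copied from the irradiance-filter table.
HIGH_FILTER = {"BNN": (4.53, 3.17), "SVR": (6.37, 5.83), "RT": (7.37, 6.27)}
NO_FILTER = {"BNN": (6.51, 5.39), "SVR": (7.74, 6.61), "RT": (8.43, 6.98)}


def improvement_ranges(filtered, unfiltered):
    """Min/max absolute error reduction across models, per metric."""
    d_nrmse = [round(unfiltered[m][0] - filtered[m][0], 2) for m in filtered]
    d_mape = [round(unfiltered[m][1] - filtered[m][1], 2) for m in filtered]
    return (min(d_nrmse), max(d_nrmse)), (min(d_mape), max(d_mape))


nrmse_range, mape_range = improvement_ranges(HIGH_FILTER, NO_FILTER)
print(nrmse_range, mape_range)  # (1.06, 1.98) (0.71, 2.22)
```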

| Model | MAPE (%) | RMSE (W) | nRMSE (%) | nMBE (%) | SS (%) |
|---|---|---|---|---|---|
| BNN | 3.17 | 53.22 | 4.53 | 2.89 | 78.33 |
| SVR | 5.83 | 81.89 | 6.37 | 4.26 | 63.14 |
| RT | 6.27 | 86.59 | 7.37 | 5.42 | 53.71 |
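The metrics in the table above can be computed as follows. Since their definitions are not reproduced in this section, the formulas are common PV-forecasting conventions taken as assumptions: MAPE divides by the measured power, nRMSE and nMBE are normalized by a user-supplied value `norm` (for example the mean measured power or the nominal capacity), the bias sign is forecast minus measured, and the skill score SS compares the model RMSE with that of a reference forecast such as persistence. The function name `forecast_metrics` and the toy values are hypothetical.

```python
import math


def forecast_metrics(measured, forecast, norm, reference_rmse=None):
    """Common PV forecast error metrics (assumed definitions).

    measured, forecast: power values in W (measured values must be non-zero for MAPE).
    norm: normalization value in W for nRMSE and nMBE (choice is an assumption).
    reference_rmse: RMSE of a reference forecast (e.g. persistence) for the skill score.
    """
    n = len(measured)
    errors = [f - m for f, m in zip(forecast, measured)]  # positive = over-forecast
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    metrics = {
        "MAPE": 100.0 * sum(abs(e) / m for e, m in zip(errors, measured)) / n,
        "RMSE": rmse,
        "nRMSE": 100.0 * rmse / norm,
        "nMBE": 100.0 * (sum(errors) / n) / norm,
    }
    if reference_rmse is not None:
        metrics["SS"] = 100.0 * (1.0 - rmse / reference_rmse)
    return metrics


measured = [100.0, 200.0, 300.0, 400.0]
forecast = [110.0, 190.0, 330.0, 400.0]
m = forecast_metrics(measured, forecast, norm=sum(measured) / len(measured), reference_rmse=50.0)
print({k: round(v, 2) for k, v in m.items()})
```

For these toy values, with `norm` set to the mean measured power (250 W), MAPE is 6.25%, RMSE is √275 ≈ 16.58 W, and nMBE is 3.0%.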

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Theocharides, S.; Theristis, M.; Makrides, G.; Kynigos, M.; Spanias, C.; Georghiou, G.E. Comparative Analysis of Machine Learning Models for Day-Ahead Photovoltaic Power Production Forecasting. Energies 2021, 14, 1081. https://doi.org/10.3390/en14041081


Chicago/Turabian style: Theocharides, Spyros, Marios Theristis, George Makrides, Marios Kynigos, Chrysovalantis Spanias, and George E. Georghiou. 2021. "Comparative Analysis of Machine Learning Models for Day-Ahead Photovoltaic Power Production Forecasting." Energies 14, no. 4: 1081. https://doi.org/10.3390/en14041081
