Learning Feedforward Control Using Multiagent Control Approach for Motion Control Systems

Abstract

1. Introduction

2. Background and Related Works

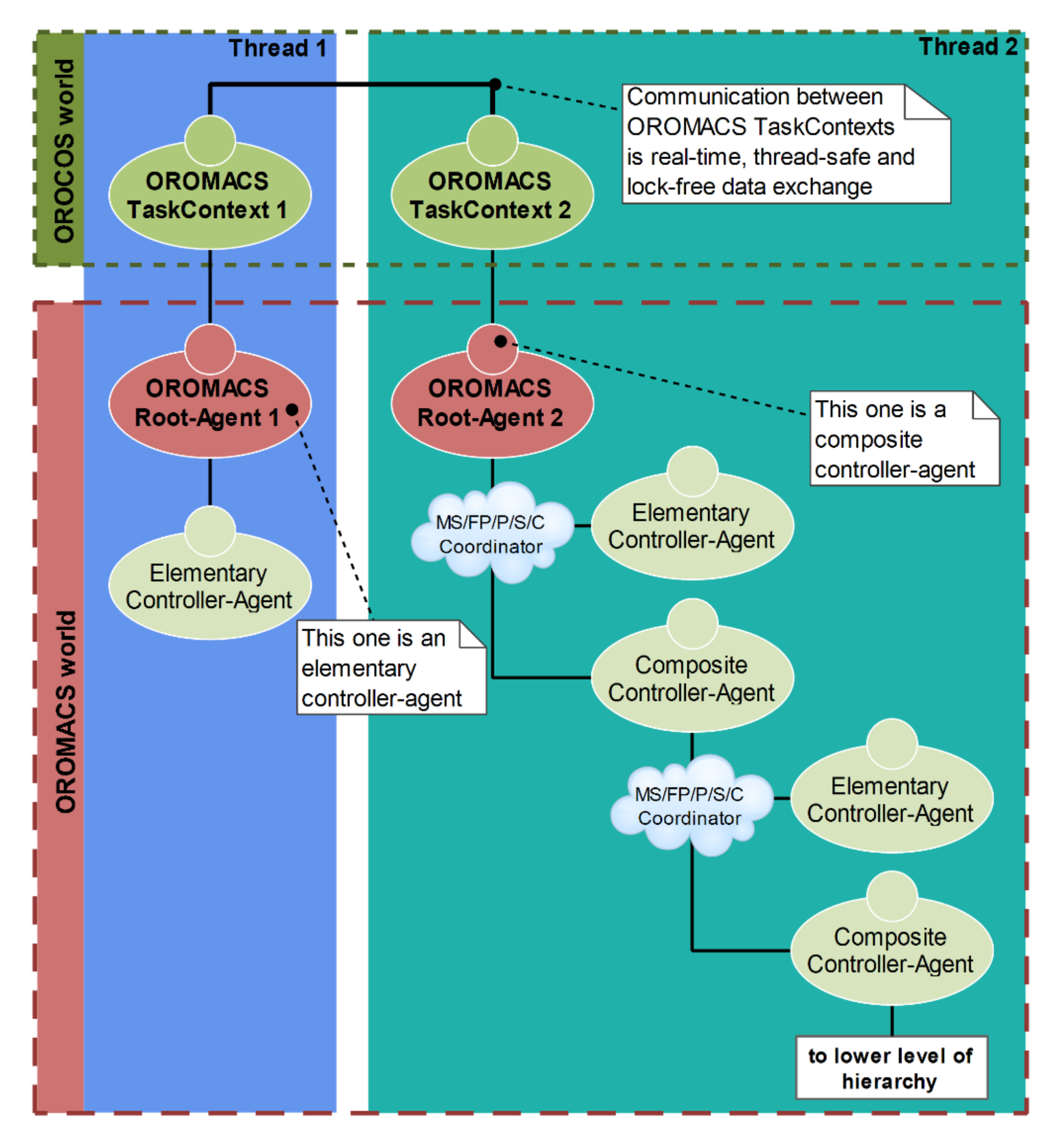

2.1. OROCOS Framework

2.2. Multiagent Control System (MACS)

- Fixed-Priority (FP) coordinator: each controller-agent in a MACS is assigned a fixed priority index, and only one controller-agent may be active at any time instant. Among the controller-agents that want to be active, the one with the highest priority becomes active. If the highest-priority controller-agent does not want to be active (or after it becomes inactive), the next controller-agent in priority order can become active, and so on.

- Master–Slave (MS) coordinator: the slave-controller-agent(s) in a MACS can become active only after the master-controller-agent is active.

- Parallel (P) coordinator: all controller-agents in a MACS may be active concurrently and work independently. A controller-agent that wants to be active becomes active, regardless of the other controller-agents.

- Sequential (S) coordinator: the controller-agents in a MACS become active one after another, in sequential order, for one round only. When a controller-agent becomes inactive, this triggers the activation of the next controller-agent.

- Cyclic (C) coordinator: the controller-agents in a MACS become active in succession, and the sequence repeats.
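The five coordination rules listed above can be illustrated with a minimal Python sketch. It is not the OROCOS-based implementation used in this work: the names `fixed_priority`, `master_slave`, `parallel`, and `Sequencer` are hypothetical, each controller-agent's wish to be active is modeled as a plain boolean, and the MS rule is read as "a slave may be active only while the master is active", which is an assumption.

```python
from typing import List, Optional, Sequence


def fixed_priority(wants: Sequence[bool]) -> List[bool]:
    """FP: 'wants' is ordered from highest to lowest priority.
    Only the first agent that wants to be active is granted activity."""
    granted = [False] * len(wants)
    for i, wish in enumerate(wants):
        if wish:
            granted[i] = True
            break
    return granted


def master_slave(wants: Sequence[bool]) -> List[bool]:
    """MS: index 0 is the master (assumed convention).
    A slave is granted activity only if the master is active."""
    if not wants:
        return []
    master_active = wants[0]
    return [master_active] + [master_active and w for w in wants[1:]]


def parallel(wants: Sequence[bool]) -> List[bool]:
    """P: every agent that wants to be active is active."""
    return list(wants)


class Sequencer:
    """S and C: agents take turns in index order.
    With cyclic=False the round runs once (S); with cyclic=True it repeats (C)."""

    def __init__(self, n_agents: int, cyclic: bool = False) -> None:
        self.n_agents = n_agents
        self.cyclic = cyclic
        self.current: Optional[int] = 0 if n_agents > 0 else None

    def step(self, finished: bool) -> Optional[int]:
        """Advance one sample. 'finished' is True when the current agent has
        just become inactive. Returns the index of the active agent, or None
        once a sequential round is over."""
        if self.current is None:
            return None
        if finished:
            nxt = self.current + 1
            if nxt < self.n_agents:
                self.current = nxt
            elif self.cyclic:
                self.current = 0      # cyclic: restart at the first agent
            else:
                self.current = None   # sequential: one round only
        return self.current
```

For example, with three agents of which only the second and third want to be active, `fixed_priority([False, True, True])` grants activity to the second agent alone, whereas `parallel` would grant both.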

3. Case Study

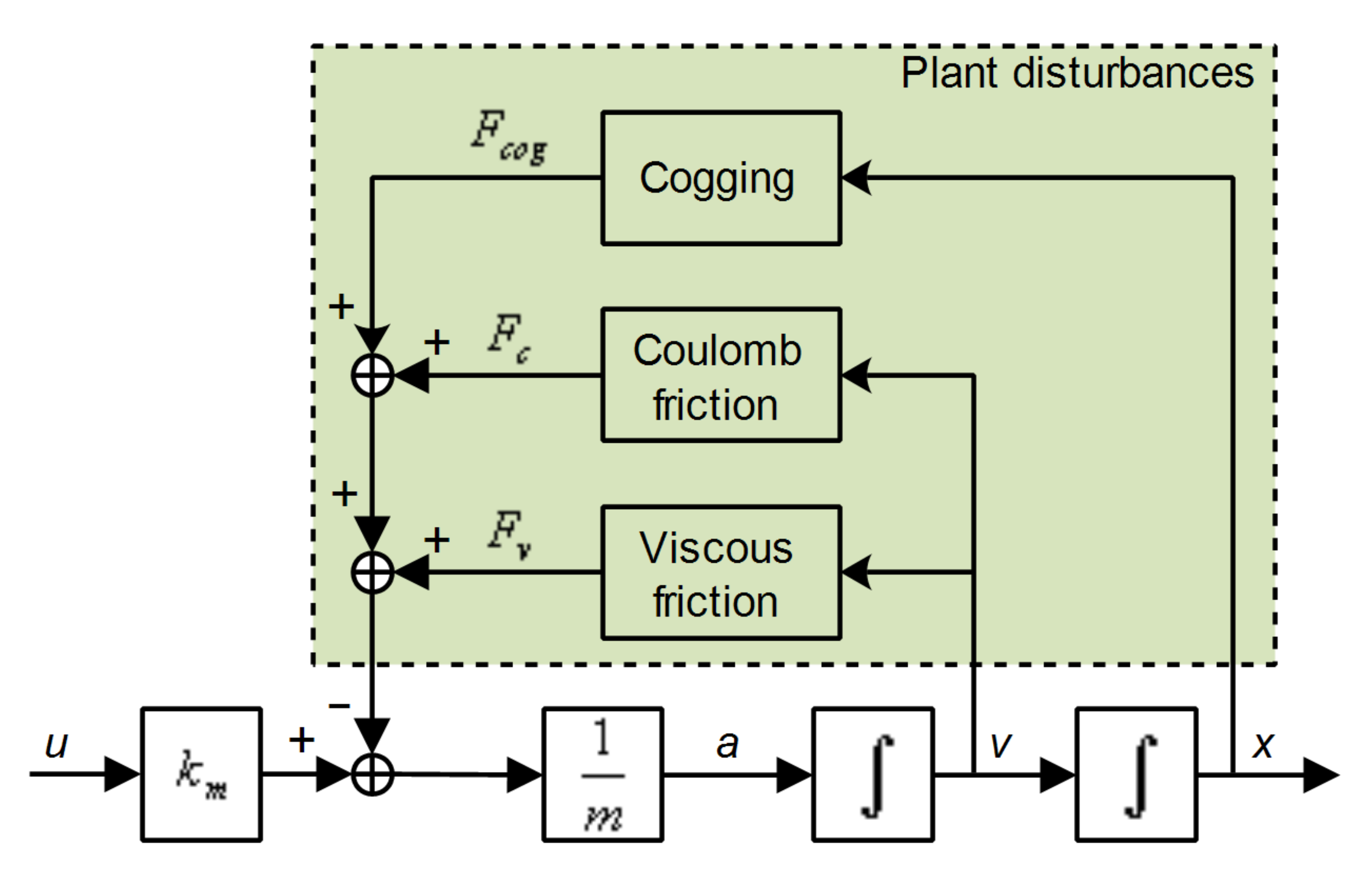

3.1. Simulated Linear Motor

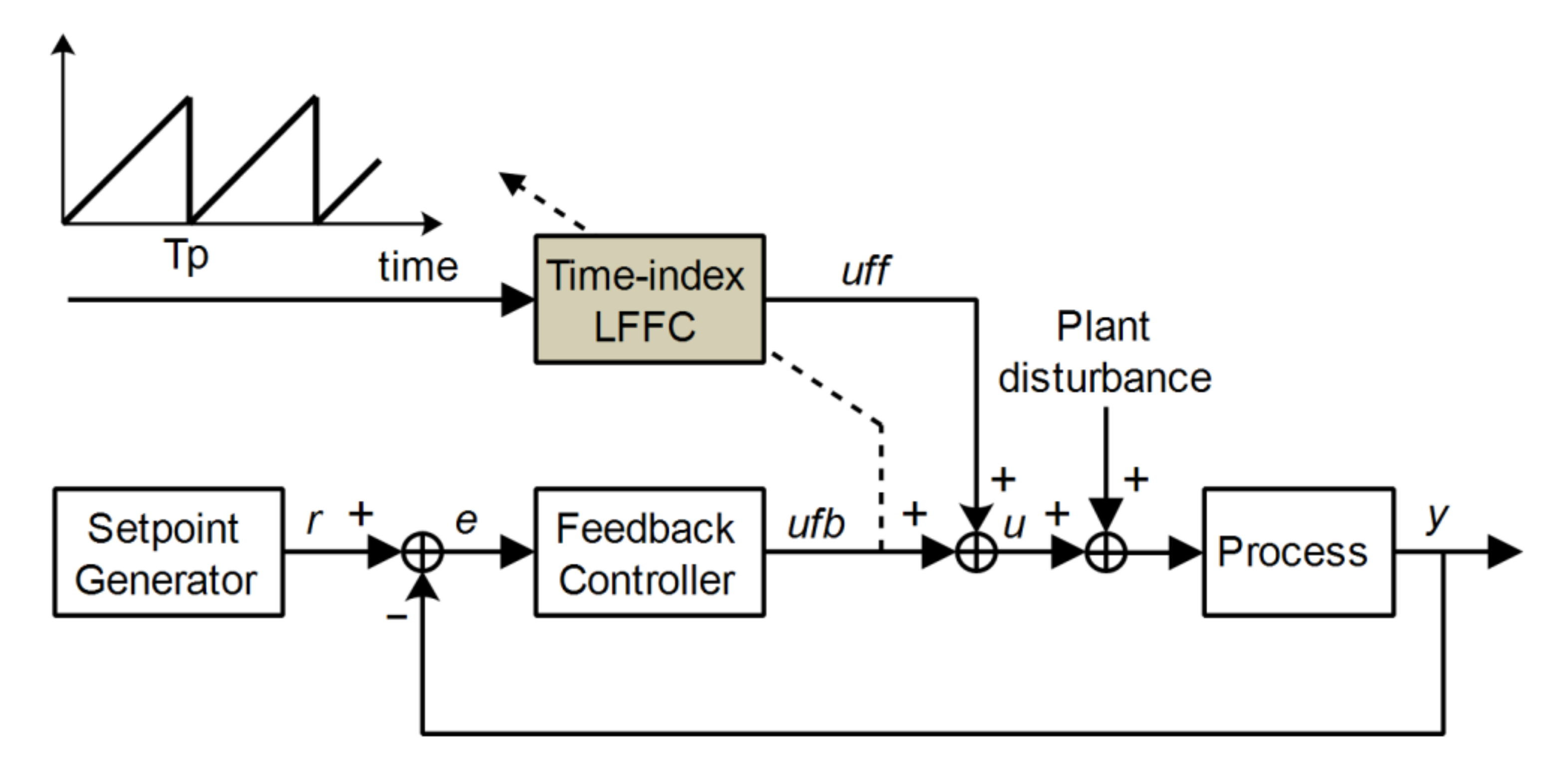

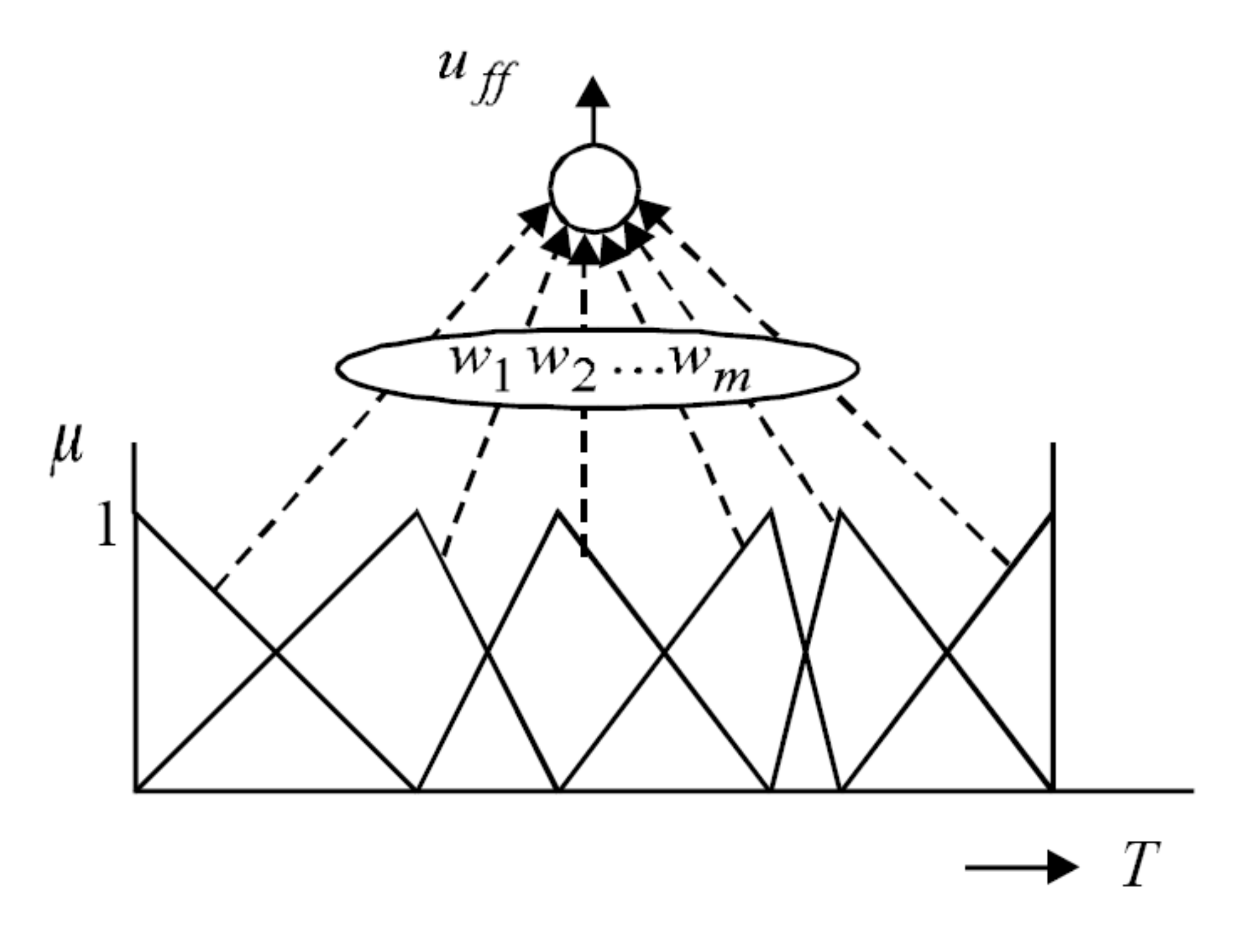

3.2. Design of a Time-Index LFFC

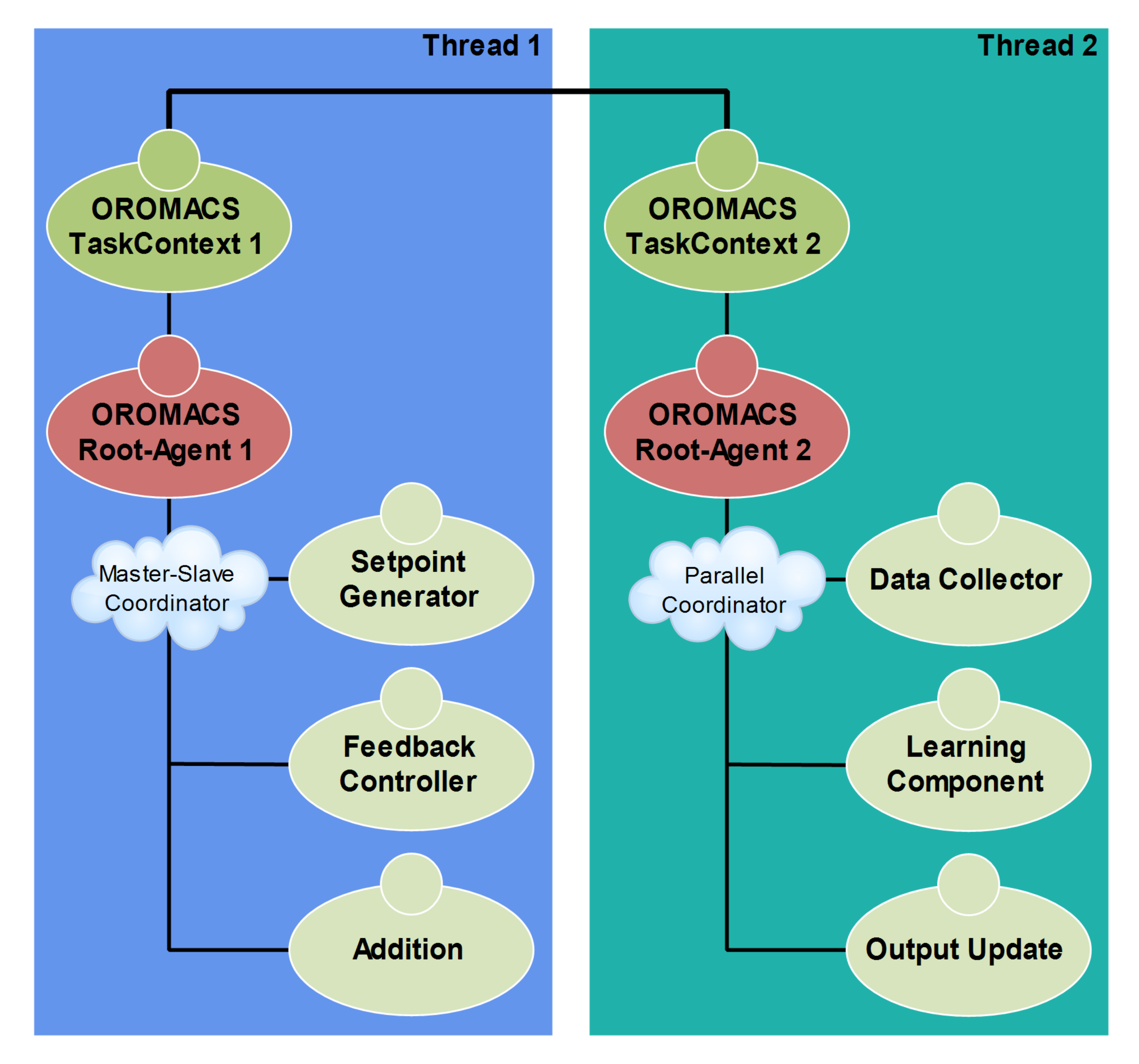

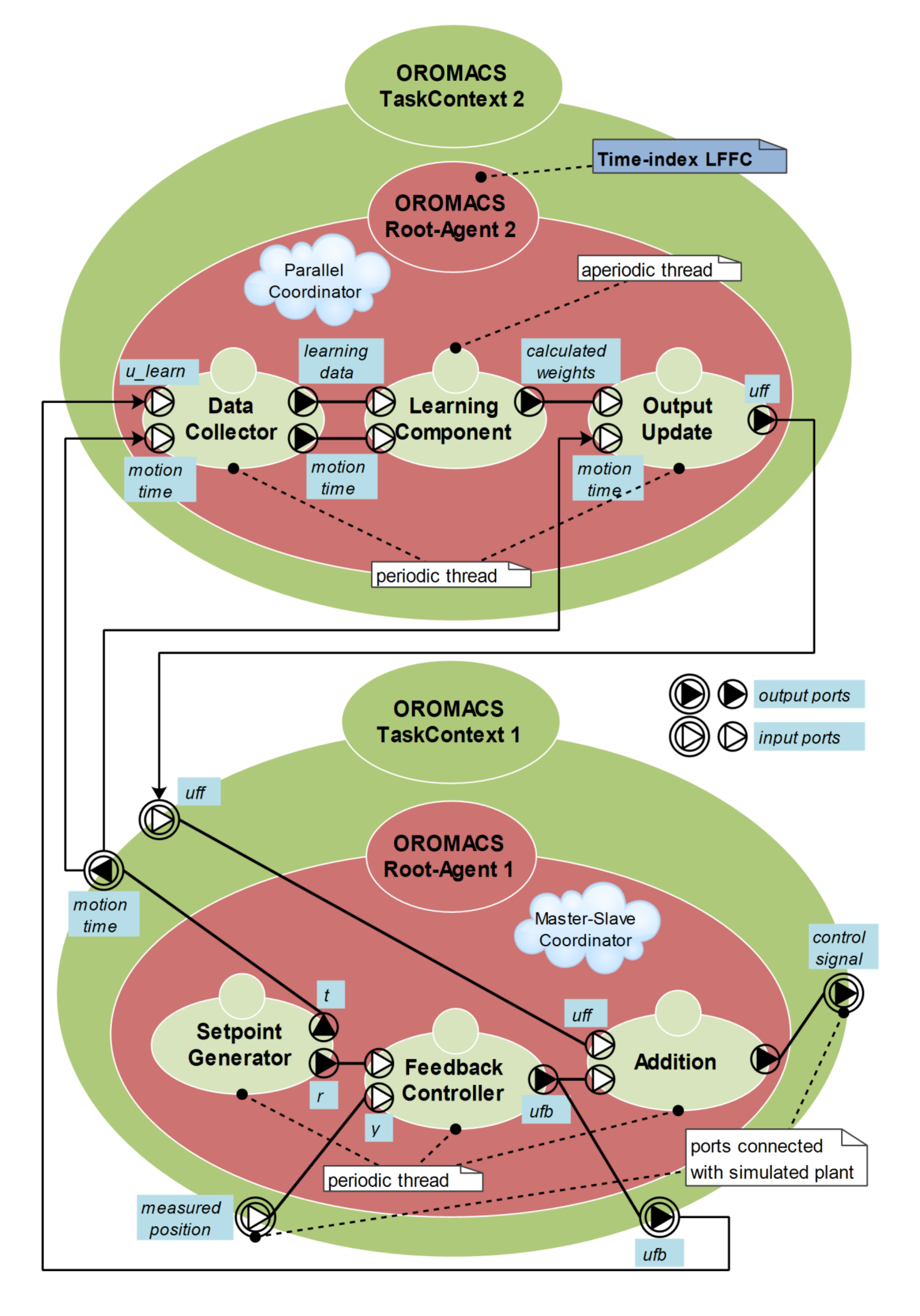

3.3. Design of a MACS-Based Time-Index LFFC System

3.4. Discussion

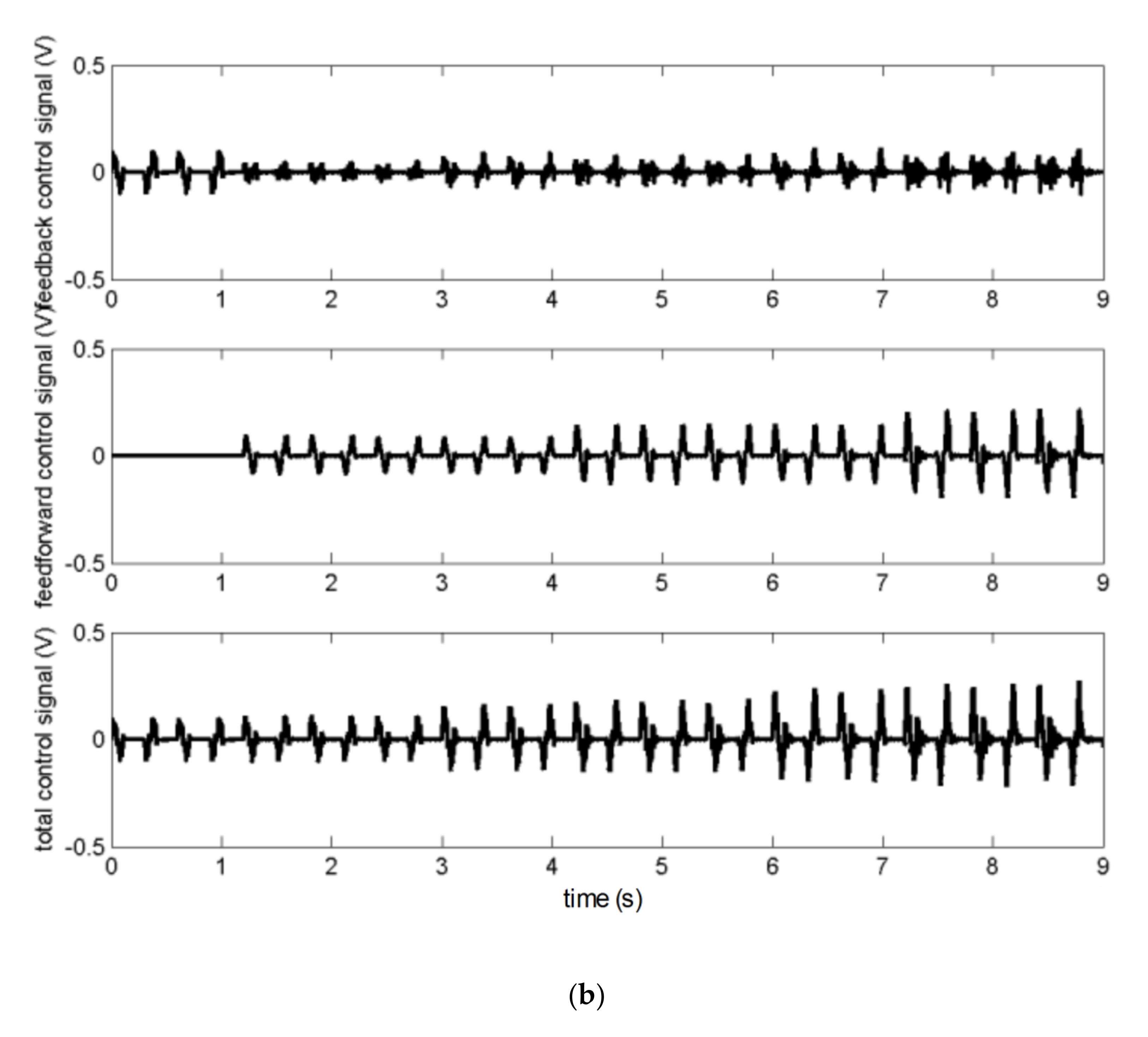

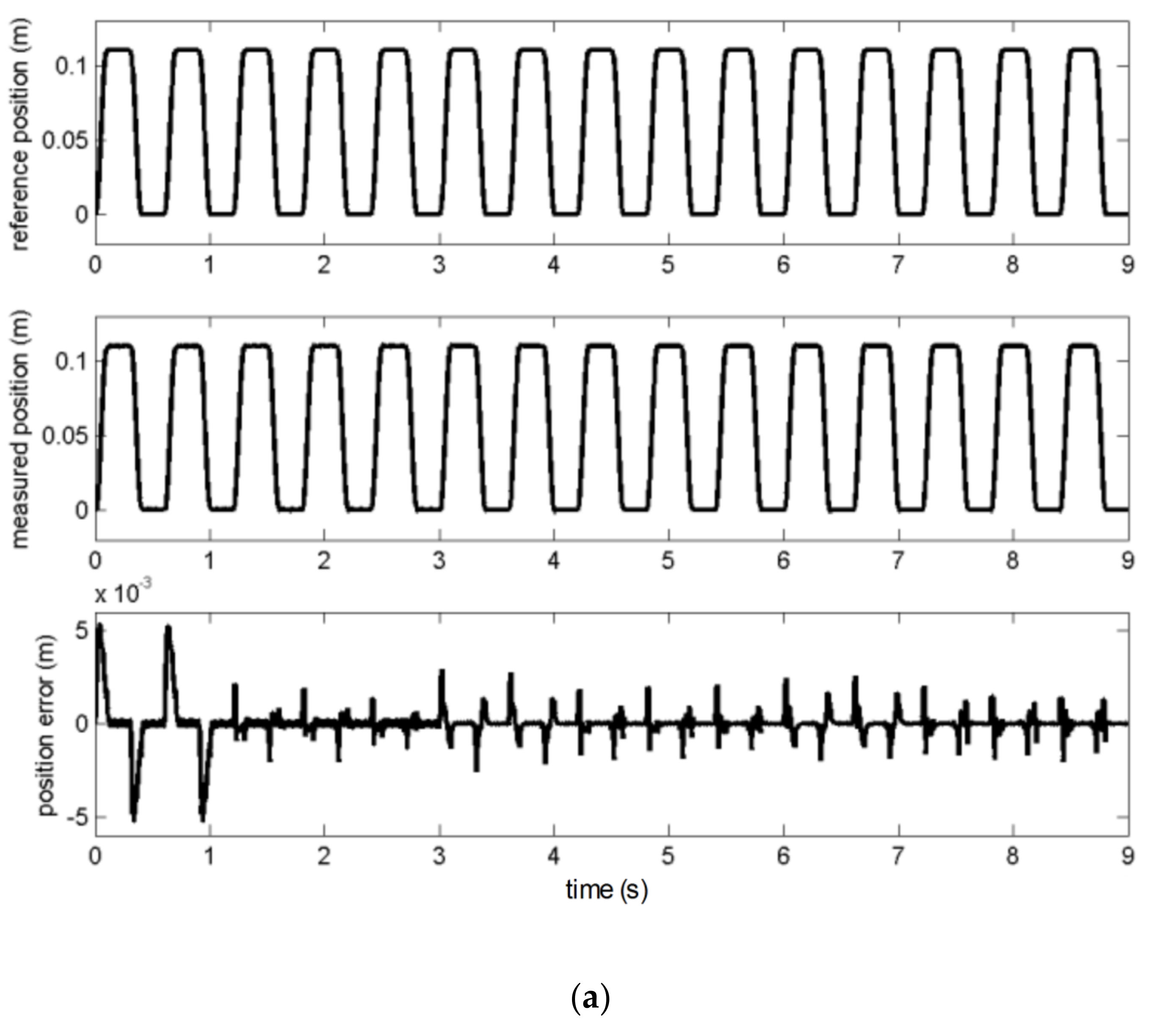

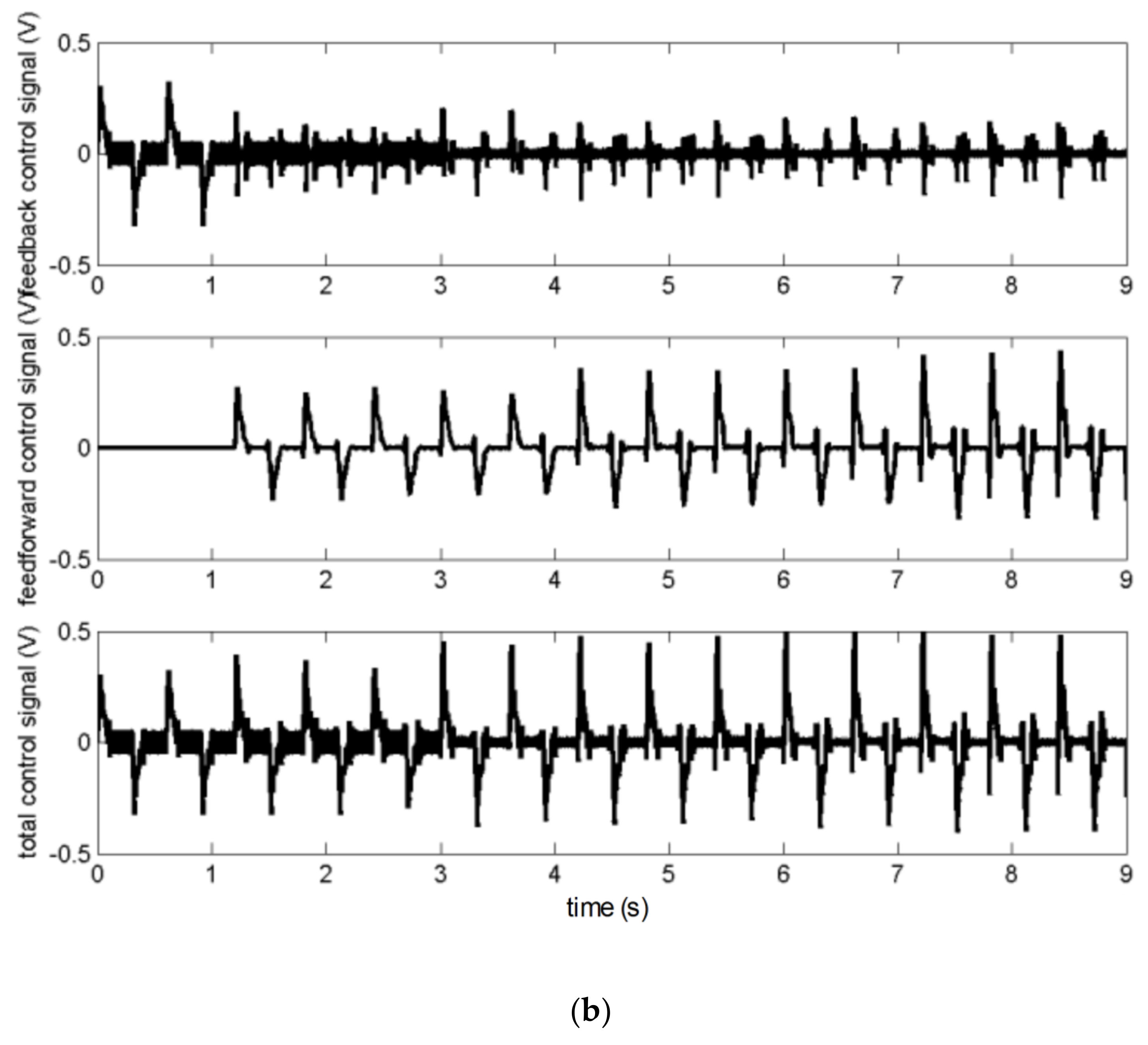

4. Simulation Results

- From t = 0 [s] to 30 [s], the plant mass is 10 kg (initial mass value, see Table 1).

- From t = 30 [s] to 60 [s], the plant mass is increased to 15 kg.

- From t = 60 [s] to 90 [s], the plant mass is increased to 20 kg.
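The mass schedule above can be written as a small piecewise function, for instance to drive a simulation loop. This is only a sketch: the function name `plant_mass` is hypothetical, the mass is assumed to change as a step (not a ramp), and each boundary instant (t = 30 s, t = 60 s) is assumed to belong to the later interval.

```python
def plant_mass(t: float) -> float:
    """Return the plant mass in kg at simulation time t in seconds.

    Assumptions: step changes at t = 30 s and t = 60 s, with the boundary
    instants assigned to the later interval; the run spans 0 s to 90 s.
    """
    if t < 0.0 or t > 90.0:
        raise ValueError("t must lie within the 0 s to 90 s simulation run")
    if t < 30.0:
        return 10.0  # initial mass value
    if t < 60.0:
        return 15.0
    return 20.0
```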

5. Conclusions and Further Discussion

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| motor constant | 69.3 |
| plant mass | 10 [kg] |
| cogging force | [N] |
| cogging force magnitude | 0.1 [N] |
| Coulomb friction | [N] |
| Coulomb friction coefficient | 0.1 [N] |
| viscous friction | [N] |
| viscous friction coefficient | 0.2 [Ns/m] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dao, P.B. Learning Feedforward Control Using Multiagent Control Approach for Motion Control Systems. Energies 2021, 14, 420. https://doi.org/10.3390/en14020420
