Short-Term Net Load Forecasting with Singular Spectrum Analysis and LSTM Neural Networks

Abstract

1. Introduction

- From a methodological standpoint, we propose a hybrid approach that combines time series decomposition with artificial neural networks (ANNs). It aims to improve on the performance of each individual method by leveraging seasonal components as external regressors.

- From an application point of view, we provide extensive empirical results against a number of well-known benchmarks, with and without exogenous features (e.g., weather forecasts), thus quantifying the relative importance of adding them. The results are useful for transmission system operators (TSOs) and other stakeholders (e.g., retailers and aggregators) seeking to improve their load forecasting capabilities, thus enhancing operational management and participation in electricity markets, respectively.

2. Methodology

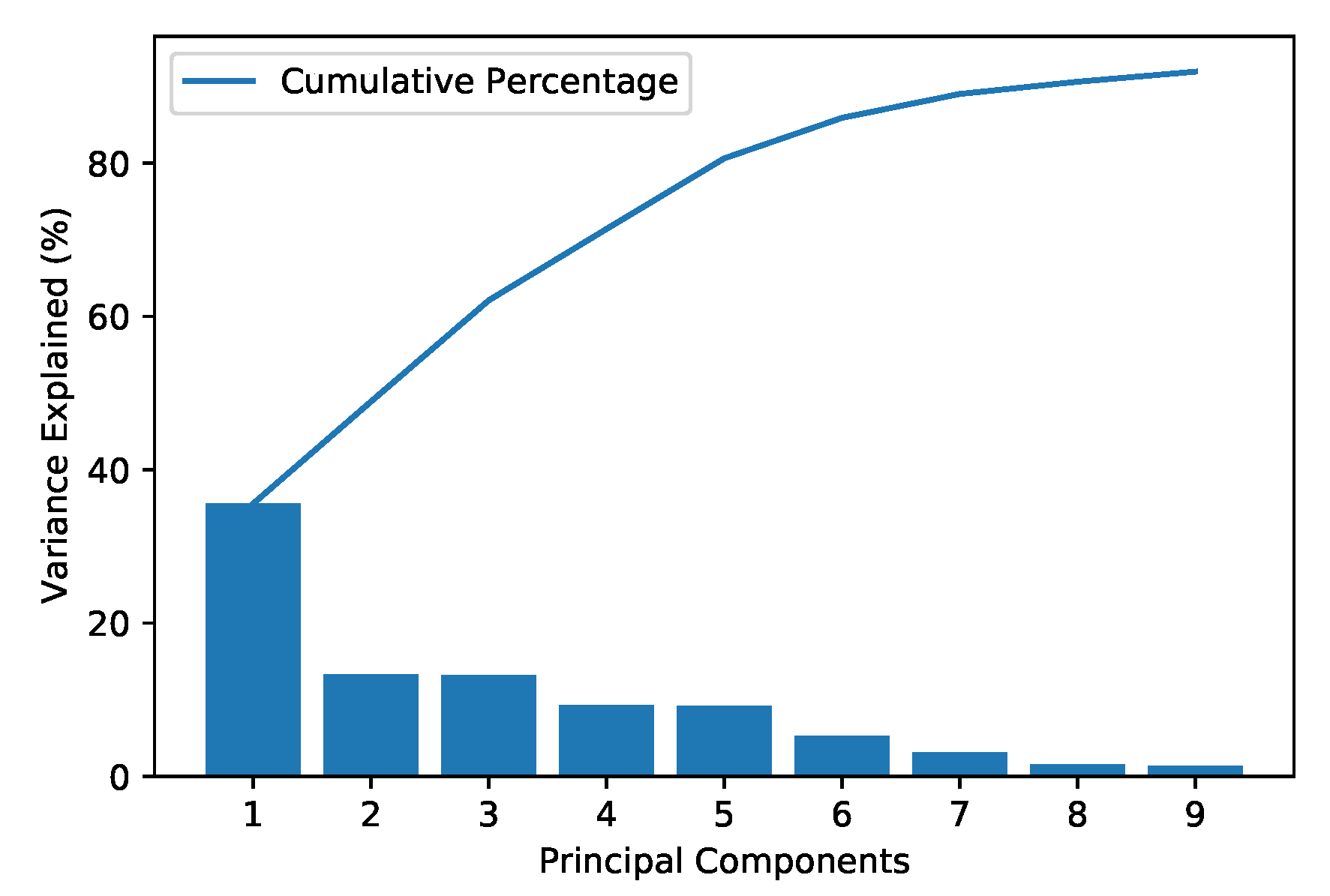

2.1. Singular Spectrum Analysis

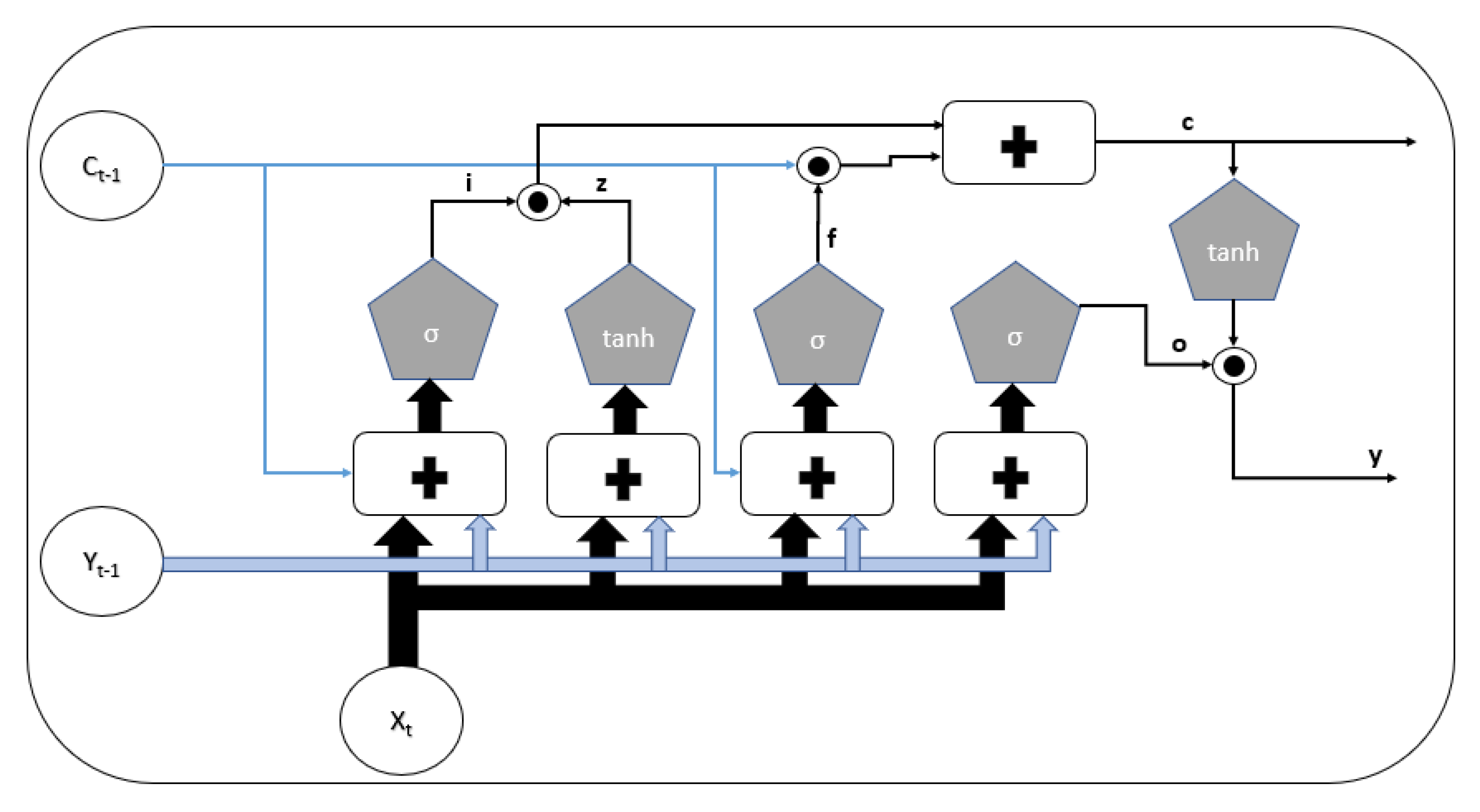

2.2. LSTM

2.3. Combining SSA and LSTM

- (1) Let the input vector have length d, where d is the selected embedding dimension. This input vector therefore corresponds to a new row in the trajectory matrix, as defined in Equation (1).

- (2) This vector is then mapped onto the principal axes by a linear transformation, obtained by applying the SVD of Equation (2) to the training dataset.

- (3) The transformed rows are finally fed into the prediction layer, which performs multi-step-ahead prediction and thus produces a vector of forecasts.
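The first two steps above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the synthetic series, the embedding dimension `d = 48` and the number of retained axes `r = 5` are all assumptions chosen for illustration, and no centering or scaling is applied. The prediction layer of the third step (the LSTM) is deliberately left out; the sketch only produces the projected features that such a layer would receive.

```python
import numpy as np


def trajectory_matrix(series: np.ndarray, d: int) -> np.ndarray:
    """Stack lagged windows of length d as rows (one window per row)."""
    n_rows = len(series) - d + 1
    return np.stack([series[i:i + d] for i in range(n_rows)])


def fit_principal_axes(train: np.ndarray, d: int, r: int) -> np.ndarray:
    """Return the r leading right singular vectors of the training trajectory matrix."""
    X = trajectory_matrix(train, d)
    # Economy-size SVD: X = U @ diag(s) @ Vt; the rows of Vt are the principal axes.
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    return Vt[:r].T  # shape (d, r)


def project(window: np.ndarray, axes: np.ndarray) -> np.ndarray:
    """Map one input window (a new trajectory-matrix row) onto the principal axes."""
    return window @ axes


# Synthetic series with a 24-step cycle (illustrative data only, not from the paper).
rng = np.random.default_rng(0)
t = np.arange(24 * 30)
series = 10 + np.sin(2 * np.pi * t / 24) + 0.1 * rng.standard_normal(t.size)

d, r = 48, 5  # hypothetical embedding dimension and number of retained axes
train, new_window = series[:-d], series[-d:]

axes = fit_principal_axes(train, d, r)  # fitted on training data only
features = project(new_window, axes)    # shape (r,), input to a prediction layer
```

Fitting the axes on the training data alone and reusing them for new windows keeps the transformation fixed at prediction time, which mirrors the description of the SVD being applied to the training dataset.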

3. Experimental Setting

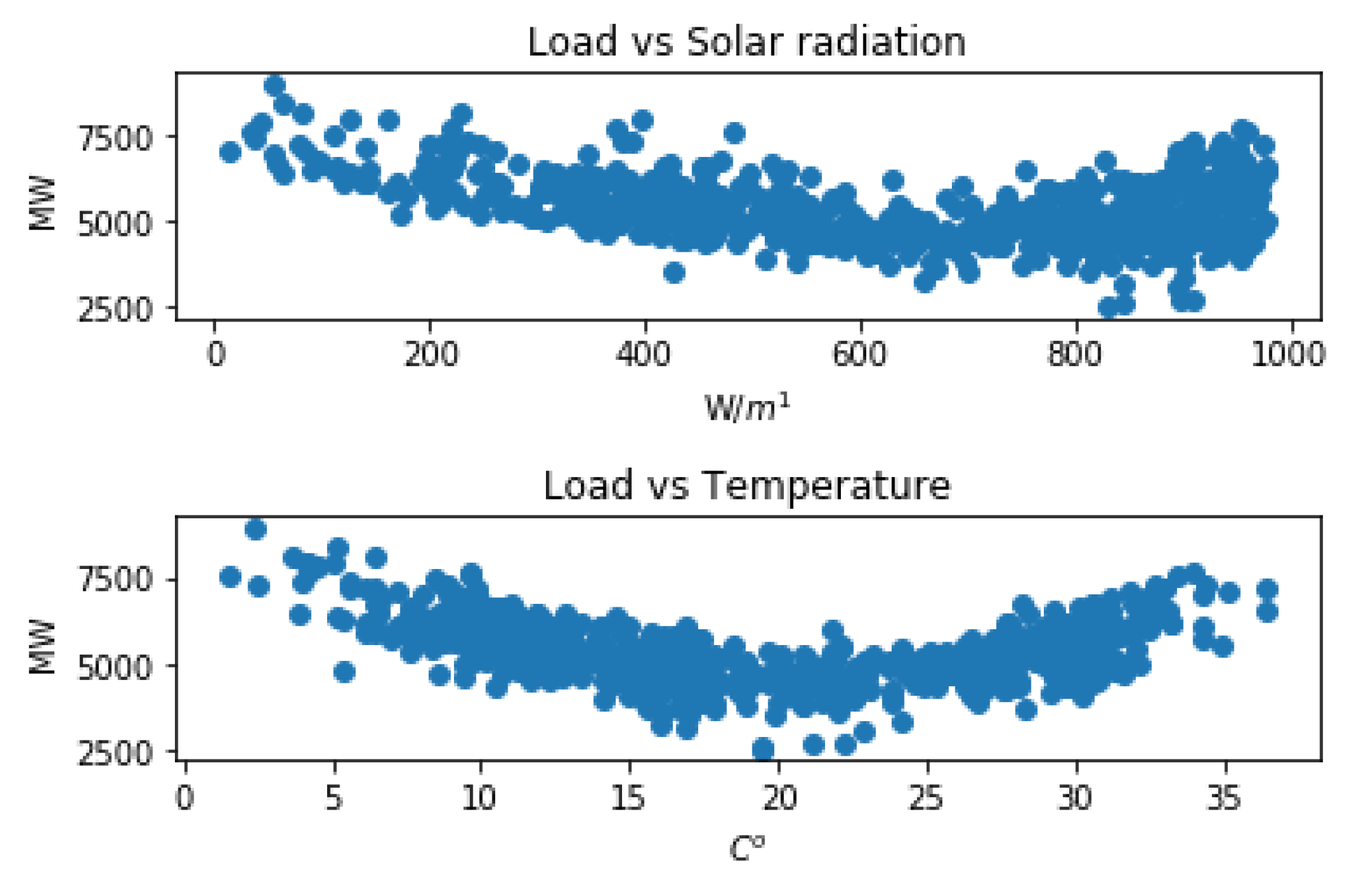

3.1. Datasets

3.2. Preprocessing

3.3. Hyperparameter Tuning

3.4. Benchmark Models

4. Results

4.1. Greek System Load

4.2. GEFCom Dataset

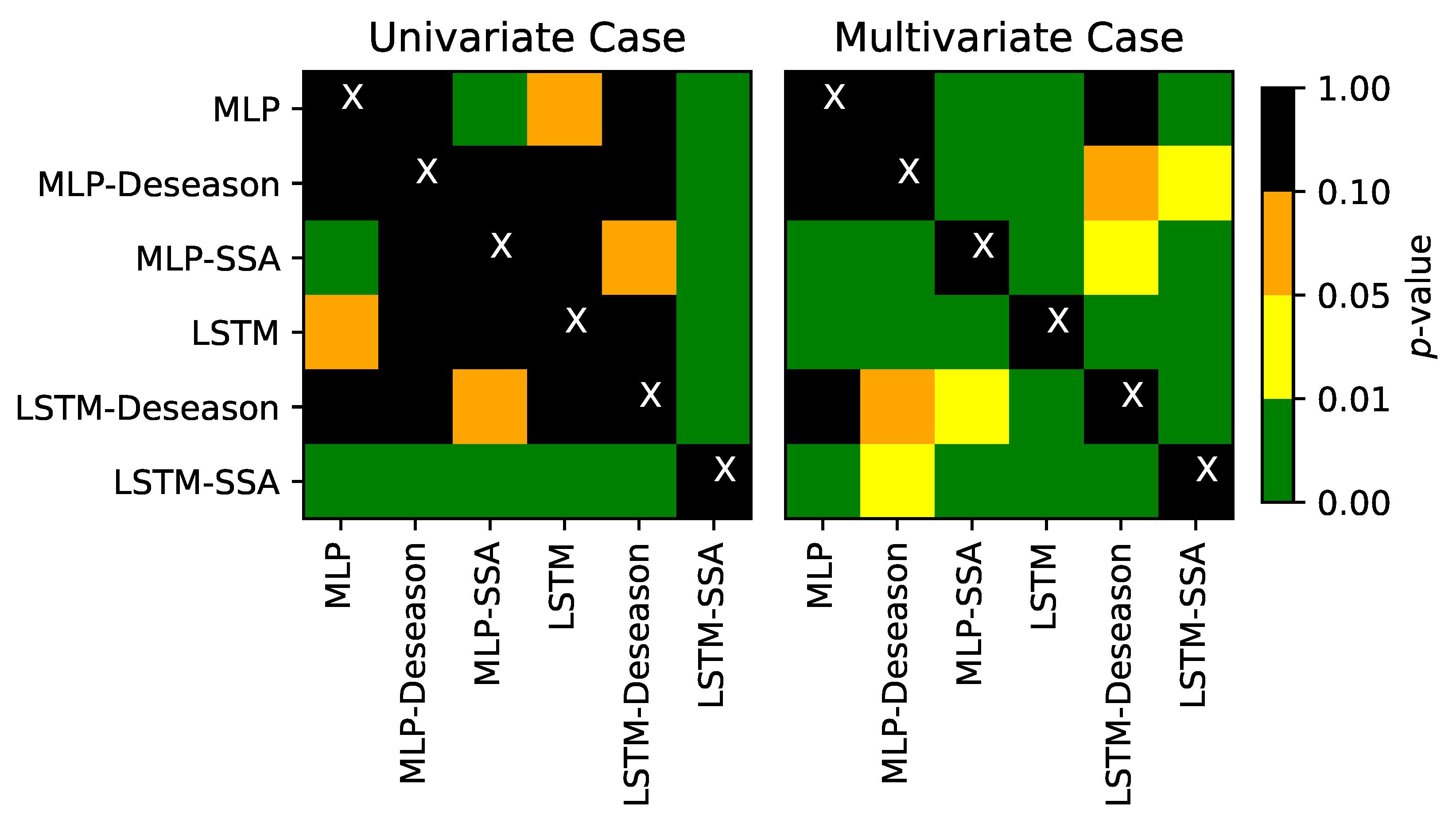

4.3. Assessing Statistical Significance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Models | Greek System Load, Univariate | Greek System Load, Multivariate | GEFCom, Univariate | GEFCom, Multivariate |
|---|---|---|---|---|
| ARIMA | 6.60 | - | 7.19 | - |
| STL | 5.92 | - | 6.19 | - |
| NAR | 6.79 | - | 7.08 | - |
| SVM | 7.31 | - | 6.98 | - |
| MLP | 5.91 | 3.99 | 5.21 | 3.47 |
| MLP-Deseason | 6.19 | 4.37 | 5.39 | 3.55 |
| MLP-SSA | 5.78 | 3.90 | 5.14 | 3.25 |
| LSTM | 5.94 | 3.67 | 5.35 | 3.33 |
| LSTM-Deseason | 6.02 | 4.24 | 5.58 | 3.79 |
| LSTM-SSA | 5.55 | 3.56 | 5.09 | 3.17 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stratigakos, A.; Bachoumis, A.; Vita, V.; Zafiropoulos, E. Short-Term Net Load Forecasting with Singular Spectrum Analysis and LSTM Neural Networks. Energies 2021, 14, 4107. https://doi.org/10.3390/en14144107
