Introduction

In many everyday tasks, gaze is an active component in the control of action sequences. Humans skillfully use eye movements to foveate targets and integrate visual information into action and perception. Foveation is known to be necessary for high-spatial-resolution perceptual analysis, but may also be important for “vision for action”: the visuomotor programs responsible for coordination of limb and body motion in time and space may involve visual orientation as part of a motor routine even, to some extent, independently of the need to acquire detailed perceptual visual information (Mars and Navarro, 2012).

In naturalistic dynamic tasks, different types of eye movements are chosen flexibly and integrated into ongoing behavior. The goal of this complex behavior is to maintain on the retinae images appropriate for the generation of accurately directed actions and stable perceptions in the face of the complex image transformations that are due to motion of objects, self-motion of the observer, and the movements of the eyes themselves. How this remarkable feat is accomplished in the great variety of everyday tasks humans are capable of, and which visual and other representations support this rich repertoire of behaviors, is only beginning to be understood (Land, 2006, 2007; Tatler and Land, 2011).

Studies of eye movements in naturalistic tasks such as hand washing, sandwich making and car driving have revealed a common pattern: gaze is targeted to task-relevant objects and locations, with very high consistency within and even between subjects, and with gaze leading action by about 1 s. This tight temporal coupling cannot depend on stimulus saliency; rather, information about the identity of the ongoing and next task phase must be integrated into the assessment of task relevance (cf. Tatler et al., 2011). In addition to these “just in time” (Ballard et al., 1995) or guiding fixations, occasional look-ahead fixations provide preview information about objects and locations relevant for future actions (Mennie et al., 2007).

This is also the case in driving. We “look where we are going”, with gaze leading steering action by about 1 s (Land, 1992; Land & Lee, 1994), and relevant objects or locations in the road scene fixated “just in time”, with occasional look-ahead fixations further up the road (Lehtonen et al., 2012a, b).

In curve driving, orientation towards the future path (Boer, 1996; Wann & Swapp, 2000) and/or the tangent point (TP, Land & Lee, 1994) have been identified as the predominant gaze targets of guiding fixations (the future path refers to points on the road surface the driver wishes to pass through; the tangent point is the point on the inside lane edge where the apparent curvature of the road edge reverses). That drivers actively foveate the tangent point area (within a few degrees) has been replicated many times. Yet there is still no consensus as to whether – or how often – drivers target the tangent point itself, as Land and Lee suggested in their seminal (1994) paper, or whether – or how often – some point on the future path in the vicinity of the tangent point may be the actual gaze target. Several models based on either the tangent point or the future path as gaze target have been put forward, showing how either could be used to “read” the road geometry and judge the appropriate amount of steering for the bend (Land & Lee, 1994; Boer, 1996; Wann & Swapp, 2000; Wann & Land, 2000; Wann & Wilkie, 2004; Wilkie et al., 2008; Authié and Mestre, 2012).

Active foveation would be required in both cases because the tangent point typically moves (in the direction of the bend) in both allocentric and egocentric reference frames based on road geometry and vehicle motion (Authié & Mestre, 2011; Lappi & Lehtonen, 2012), while the visual motion of the road surface has a horizontal component in the opposite direction as well as a vertical downward component (Authié & Mestre, 2011; Wann & Swapp, 2000). Thus, although it is customary to talk loosely about “fixations” on the road (e.g. tangent point fixation, “fixating” points one wishes to pass through), the fact that these putative targets move in relation to the vehicle implies that pursuit-like movement of the eyes is required to maintain foveation.

In fact, relatively little is known about the precise spatiotemporal aspects of guiding fixations on the road because field experiments usually report only the dwell time in some area of interest (AOI) – e.g. one centered on the TP – interpreting the results in terms of putative gaze targets within the AOI. The eye movements themselves – what the “fixations” are like – are not quantified. To our knowledge, the present paper presents the first on-road data where gaze stability is analyzed quantitatively at the level of individual fixations.

The point of departure is similar to that of a recent simulator study by Authié & Mestre (2011), where they showed that in simulated driving gaze is not static when exposed to a global optical flow due to self-motion. Specifically, what they found was that gaze globally follows the tangent point (which is not stationary in the visual scene), but that overlaid on this global pattern there is a fast optokinetic movement around the tangent point location, consisting of “smooth pursuit eye movements (slow phases of OKN) and fast resetting saccades in the opposite direction”. As they note, “it remains that real driving analysis of gaze behavior at high spatial and temporal resolution would be necessary to test whether OKN behavior would still be present”. This study presents such an analysis (although the resolution cannot be considered “high” by laboratory experiment standards, it is sufficient for the present purpose) and confirms the presence of within-fixation pursuit movements in driving.

Like those authors (ibid.), we too feel that such results highlight the limitations of AOI methods, merely identifying the location of gaze in the visual scene, or even the identification of putative gaze targets (such as the tangent point) in the analysis of gaze behavior during natural tasks involving self-motion.

We also relate the gaze behavior to a fixed reference point on the future trajectory of the vehicle (the exit point of the curve), demonstrating that the fixations lose their pursuit-like character and instead maintain a fixed angle in this frame of reference, which rotates in the driver’s egocentric coordinate system (vehicle/body axis) but is stable relative to real allocentric locations. The findings are discussed in terms of steering models and different neural levels of oculomotor control.

Methods

Subjects, test route and procedure

Nineteen subjects participated in the study (10M, 9F, age 18y – 33y, mean 25y). The data of one subject were not used due to poor performance, leaving a dataset of eighteen participants. They were recruited through personal contacts and university mailing lists. All participants held a valid driver’s licence, and the experience level of the participants varied from less than 1000 km to over 300000 km reported lifetime kilometrage. The subjects reported no medical conditions that might affect eye movements, and had normal or corrected to normal eyesight. (The participants with corrected eyesight wore contact lenses in the experiment). The study was approved by the local ethics committee.

The instrumented car was a model year 2007 Toyota Corolla 1.6 compact sedan with a manual transmission. The passenger side was equipped with brake pedals and extra mirrors, as well as a computer display that allowed the experimenter to monitor vehicle speed, the operation of the eye-tracker and the data-logging systems. The car was equipped with a two-camera eye tracker (Smart Eye Pro version 5.5, www.smarteye.se) mounted on the vehicle dashboard and measuring gaze direction in relation to the vehicle frame at 60 Hz, a forward-looking VGA scene camera and a GPS receiver. Vehicle telemetry (speed, steering, throttle, braking and horizontal rotational velocity, i.e. yaw rate) was recorded from the CAN bus. All signals were synchronized and time stamped on-line, and stored on a computer.

The route was an 8.1 km long two-lane rural road (Figure 1), with painted edgelines, a painted centerline, and a 70 km/h posted speed limit. It was driven four times in both directions for a total of eight legs and 64.8 km. All drives were carried out in daylight, sometimes in varying weather conditions (overcast or light rain). In addition to the participant, a member of the research team acted as experimenter. (S)he was seated on the front passenger seat, giving route directions, ensuring safety, monitoring the recording and performing the calibrations. The eye cameras were calibrated at both ends of the route, i.e. before each leg. If there was a leading vehicle present when setting off on the route, the subject was asked to wait at a bus stop until the road was clear.

The participants drove the test route four times at their own pace, and were instructed to observe traffic laws and safety, and drive as they normally would, except that they were explicitly instructed to not cut into the lane of oncoming traffic in left-hand turns even if this was what they would do in normal driving.

Data analysis

The data were segmented into discrete curve-driving events based on GPS coordinates and vehicle telemetry. We use an operational definition of cornering phases, decomposing the physical geometry of the turn (or the vehicle’s physical trajectory through it) into discrete segments. The curve entry phase begins when the driver begins to rotate the vehicle by turning the steering wheel at her chosen turn point. Both the steering wheel angle and vehicle yaw rate increase progressively throughout the entry phase (in normal everyday driving, assuming no rear wheel skid). In very long curves, the entry phase may then be followed by a steady cornering phase where the steering wheel angle and vehicle rate of rotation are held relatively constant, with minor corrections only. This phase is not present in short corners (which is what the turns analyzed here are). The exit phase of a turn begins when the driver begins to steer out of the bend (unwinding the steering lock, with the yaw rate beginning to decrease after reaching a local maximum). The driver can be considered to have completely exited the corner, and to have completed the entire cornering sequence, when she reaches an exit point where the vehicle is no longer in yaw. Curves where the exit point is visible during approach and turn-in (before the end of the entry phase) are considered sighted; curves where the exit point only becomes visible during the curve (after turning in) are considered blind. We chose sighted curves for analysis, because in these we could identify the visual direction of the exit point in the entry phase, for use as a behaviorally meaningful allocentric reference point.
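The operational definition above can be illustrated with a minimal sketch. It is not the segmentation code used in the study: it uses yaw rate alone as a proxy for the steering input, takes the largest yaw rate within the event as the end of the entry phase, and relies on a hypothetical threshold `yaw_eps` for deciding when the vehicle is "no longer in yaw". The function name and all parameter values are assumptions made for illustration.

```python
def segment_curve(yaw_rate, yaw_eps=0.5):
    """Split one short curve-driving event into entry and exit phases.

    yaw_rate: yaw-rate samples (deg/s) covering a single turn.
    yaw_eps:  hypothetical threshold (deg/s) below which the vehicle is
              treated as not rotating; not a value taken from the study.
    Returns (turn_in, peak, exit_point) as sample indices, or None if the
    vehicle never rotates faster than yaw_eps.
    """
    mag = [abs(y) for y in yaw_rate]

    # Turn-in: first sample where the vehicle starts to rotate.
    turn_in = next((i for i, m in enumerate(mag) if m > yaw_eps), None)
    if turn_in is None:
        return None

    # End of the entry phase: the yaw rate reaches its maximum.
    peak = max(range(turn_in, len(mag)), key=lambda i: mag[i])

    # Exit point: first sample after the peak where the yaw has died away
    # (falls back to the last sample if that never happens).
    exit_point = next(
        (i for i in range(peak, len(mag)) if mag[i] <= yaw_eps),
        len(mag) - 1,
    )
    return turn_in, peak, exit_point
```

In this sketch the entry phase spans `turn_in` to `peak` and the exit phase spans `peak` to `exit_point`; a steady cornering phase is not modeled, in line with the short corners analyzed here.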

To render trials comparable, the data were given a location-based representation. One trial, with no traffic or other “incidents” was chosen as a reference. The vehicle trajectory in an allocentric xy plane (GPS coordinate system) was computed by interpolating the GPS signal. This interpolated trajectory would then be used as the template of a route-location value (m from the beginning of the leg), with which all other signals could be associated. All participants’ trials were then mapped onto this frame of reference, by first best-matching the observed GPS values to the reference trajectory, and then projecting all observations onto the interpolated distance values.
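One way to picture the location-based representation is as a projection of each observed GPS point onto the reference trajectory, treated as a polyline with a cumulative distance at every vertex. The sketch below assumes planar coordinates in metres (for example, GPS positions already projected to a local grid) and uses a nearest-segment search as the "best match". Both choices, and the function names, are assumptions; the study's own matching procedure is not specified in more detail.

```python
import math


def cumulative_distance(track):
    """Route distance (m) at each vertex of a reference track [(x, y), ...]."""
    dist = [0.0]
    for (x0, y0), (x1, y1) in zip(track, track[1:]):
        dist.append(dist[-1] + math.hypot(x1 - x0, y1 - y0))
    return dist


def route_location(point, track, dist):
    """Project an observed point onto the reference polyline.

    Returns the route distance (m from the start of the leg) of the
    closest point on the reference trajectory.
    """
    px, py = point
    best_err, best_loc = float("inf"), 0.0
    for i in range(len(track) - 1):
        (x0, y0), (x1, y1) = track[i], track[i + 1]
        dx, dy = x1 - x0, y1 - y0
        seg2 = dx * dx + dy * dy
        if seg2 == 0.0:
            continue  # skip duplicate vertices
        # Fractional position of the projection along this segment.
        t = ((px - x0) * dx + (py - y0) * dy) / seg2
        t = min(1.0, max(0.0, t))
        err = math.hypot(px - (x0 + t * dx), py - (y0 + t * dy))
        if err < best_err:
            best_err, best_loc = err, dist[i] + t * math.sqrt(seg2)
    return best_loc
```

Once every sample of every trial carries such a route-location value, signals from different trials can be compared at the same place on the road rather than at the same time.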

Fixations were identified using a velocity threshold algorithm (I-VT, see Salvucci & Goldberg, 2000), where the horizontal and vertical velocity thresholds (in the vehicle frame of reference) were determined from the data. Before fixation detection the data were preprocessed by removing small gaps (1 or 2 missing samples) by interpolation, and smoothed (second-order Savitzky-Golay filtering, window size: 9).
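The preprocessing step can be sketched as follows. Missing samples are represented as `None`, gaps are filled by linear interpolation (the interpolation method is an assumption), and the smoothing uses the standard convolution coefficients of a second-order Savitzky-Golay filter with a nine-sample window. Leaving the first and last four samples, and any window still containing a gap, unsmoothed is a simplification of this sketch, not a documented property of the original scripts.

```python
# Standard quadratic Savitzky-Golay smoothing coefficients for a
# 9-sample window; they sum to 231.
SG9 = [-21, 14, 39, 54, 59, 54, 39, 14, -21]
SG9_NORM = 231.0


def fill_small_gaps(x, max_gap=2):
    """Linearly interpolate runs of at most max_gap missing (None) samples."""
    out = list(x)
    n, i = len(out), 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        j = i
        while j < n and out[j] is None:
            j += 1
        gap = j - i
        # Only fill short gaps that have valid samples on both sides.
        if i > 0 and j < n and gap <= max_gap:
            a, b = out[i - 1], out[j]
            for k in range(gap):
                out[i + k] = a + (b - a) * (k + 1) / (gap + 1)
        i = j
    return out


def savgol9(x):
    """Second-order Savitzky-Golay smoothing with a 9-sample window."""
    out = list(x)
    for i in range(4, len(x) - 4):
        window = x[i - 4:i + 5]
        if None in window:
            continue  # leave samples near remaining gaps unsmoothed
        out[i] = sum(c * v for c, v in zip(SG9, window)) / SG9_NORM
    return out
```

Applied to one gaze component of one run, the two steps would be chained as `savgol9(fill_small_gaps(samples))`.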

The velocity thresholds for horizontal and vertical gaze velocity were determined separately, for each run (i.e. between calibrations), as 0.8 times the standard deviation of horizontal gaze position and 2.0 times the standard deviation of vertical gaze position, respectively. (These values were determined by visual inspection of fixation detection; different values were used for horizontal and vertical thresholds because of the lower signal-to-noise ratio of the vertical component.) Fixations to the speedometer and mirrors were removed from analysis, based on tagging their visual angle for each subject during initial calibration.
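A minimal I-VT sketch with data-derived thresholds might look like the following. Several details are assumptions: the text does not state the units in which the position-based thresholds are applied (here the numbers are simply used as deg/s), the population standard deviation is used, velocity is a plain sample-to-sample difference, and `min_samples` is a hypothetical minimum fixation length that does not come from the study.

```python
import statistics


def velocity(pos, hz=60.0):
    """Sample-to-sample angular velocity (deg/s) from positions (deg)."""
    return [(b - a) * hz for a, b in zip(pos, pos[1:])]


def ivt_fixations(h, v, hz=60.0, k_h=0.8, k_v=2.0, min_samples=3):
    """Velocity-threshold (I-VT) fixation detection for one run.

    h, v: horizontal and vertical gaze angles (deg) in the vehicle frame.
    Thresholds are k_h and k_v times the standard deviation of the
    respective gaze position over the run.
    Returns a list of (first_sample, last_sample) index pairs, inclusive.
    """
    thr_h = k_h * statistics.pstdev(h)
    thr_v = k_v * statistics.pstdev(v)
    # slow[i] describes the movement between sample i and sample i + 1.
    slow = [
        abs(a) < thr_h and abs(b) < thr_v
        for a, b in zip(velocity(h, hz), velocity(v, hz))
    ]

    fixations, start = [], None
    for i, is_slow in enumerate(slow + [False]):  # sentinel closes last run
        if is_slow and start is None:
            start = i
        elif not is_slow and start is not None:
            if i - start >= min_samples:
                fixations.append((start, i))
            start = None
    return fixations
```

Because the two thresholds are applied jointly, a sample pair counts as part of a fixation only when both the horizontal and the vertical velocity are below their respective thresholds.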

To analyze gaze origin and gaze landing points on the road on a fixation-by-fixation basis, the trajectory of the vehicle was also computed by starting from each origin location (location point corresponding to the time of fixation middle point), and integrating the vehicle yaw rate and speed over time to estimate the point of gaze landing for each fixation as projected on the future trajectory. This is the point where the visual direction of the path-integrated trajectory corresponds to the visual angle of the fixation – roughly speaking, the point where the line of sight intersects the road. These gaze-landing points could now be assigned route distance values, as well as lead-time values (see Lehtonen et al., 2012b). Conversely, in this representation, route points or time-distances to a point on the future path could be assigned an instantaneous visual direction in the vehicle frame of reference. This was used as an operational criterion for guiding fixations: these were fixations with a gaze-landing point on the estimated trajectory with a lead time of less than 5 seconds. Fixations with a lead time higher than 5 s were considered look-ahead fixations and removed from analysis. Eye rotational velocity during fixation was calculated by dividing the angular difference between the fixation start and end points by the fixation duration.
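The path-integration idea can be sketched as below. The sketch works in the vehicle frame at the moment of the fixation midpoint (x forward, y to the left, angles positive to the left), uses simple Euler integration, and considers only the horizontal gaze angle. The landing point is taken as the first future trajectory point whose visual direction reaches the gaze angle. The sign convention, the integration scheme and the function names are assumptions of this illustration.

```python
import math


def gaze_landing(gaze_deg, speed, yaw_rate, dt=1.0 / 60.0):
    """Estimate the gaze landing point on the future trajectory.

    gaze_deg: horizontal gaze angle (deg) in the vehicle frame.
    speed:    future speed samples (m/s), starting at the fixation midpoint.
    yaw_rate: future yaw-rate samples (deg/s), same timing as speed.
    Returns (lead_time_s, (x, y)) or None if the trajectory never reaches
    the gaze direction within the available samples.
    """
    x = y = heading = 0.0
    for i, (v, w) in enumerate(zip(speed, yaw_rate)):
        # Euler step: rotate, then advance along the new heading.
        heading += math.radians(w) * dt
        x += v * math.cos(heading) * dt
        y += v * math.sin(heading) * dt
        # Visual direction of this future point as seen from the origin.
        bearing = math.degrees(math.atan2(y, x))
        same_side = bearing * gaze_deg >= 0.0
        if same_side and abs(bearing) >= abs(gaze_deg):
            return (i + 1) * dt, (x, y)
    return None


def is_guiding(lead_time_s, limit_s=5.0):
    """Guiding fixations have a lead time below the 5 s criterion."""
    return lead_time_s < limit_s
```

For a constant-radius path the visual direction of a future point equals half the heading change needed to reach it, so with a yaw rate of 10 deg/s a gaze angle of 10 deg corresponds to a lead time of roughly 2 s in this sketch.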

All data preparation, visualization and analysis was done using custom-made Python scripts, except for the final statistical analyses, which were done with R (version 2.15.1) (R Core Team, 2012), utilizing package ez (version 3.0-1) (Lawrence, 2012) for ANOVA.

Results

Visual inspection of gaze data superimposed on video reveals the typical pattern of tangent point orientation, but not a fixed “staring” behavior (an illustrative example is given in the Appendix). The visual angle between gaze and the tangent point can be readily seen in the visualization, and while it is generally small (a few degrees, meaning that gaze will fall within a tangent point AOI of 3-5 degrees radius), gaze nevertheless often appears to be directed not at the tangent point itself, but at the driver’s lane in the zone beyond the tangent point. Most often the pattern appears to be one of multiple guiding fixation targets on the road, within a few degrees of each other (and of the tangent point), between which the driver alternates. Going beyond this sort of phenomenological analysis of “gaze targets”, we can ask: what is the pattern of eye movement/stability within each individual “fixation”? Plotting horizontal gaze position against time (Figure 2, top) reveals fixations themselves not to be stable with respect to the vehicle (or the tangent point). The general pattern is strikingly similar to the simulator-study data of Authié and Mestre (2011), with a low frequency component following the tangent point (whose visual field location increases in eccentricity, due to vehicle translation and rotation, as the curve is entered) and a superposed high frequency oscillation (several cycles per second). This high frequency pattern is especially prominent in the later stages of curve entry (when the driver is presumably preparing to accelerate out of the curve, rather than turning in): most “fixations” actually display an optokinetic pursuit-like movement in a direction opposite to the rotation of the vehicle, interspersed by quick saccade-like movements (typically but not in any mandatory way in the direction of the curve). Also, it is notable that there are no apparent discontinuities in the pattern at the end of the curve, when the TP disappears.

What are the characteristics of this optokinetic/pursuit-like motion during “fixations” in the tangent point region? Can they be meaningfully related to the motion of the observer? When the data are plotted in a rotating coordinate system, whose origin is the point of observation but whose main axis runs from the point of origin to the curve exit, a step-like “staircase” pattern emerges, where the fixations are stable (Figure 2, bottom). During most “fixations”, gaze rotates in the vehicle coordinate system in the direction opposite to the curve (to the right in left hand curves and vice versa), but in a rotating frame of reference fixed to a reference direction towards a stationary point on the future path (exit point), fixations are more stable.
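The change of reference frame can be illustrated with an idealized example. The sketch below assumes a perfectly circular path and a driver who tracks a world-fixed point on that path without error; the radius, the angles and the helper `pose_on_circle` are hypothetical values and names chosen for illustration, not data from the study. Gaze in the exit-point frame is obtained by subtracting the visual direction of the exit point from the gaze angle in the vehicle frame, and within-fixation velocity is the angular difference between fixation end and start divided by the fixation duration.

```python
import math


def wrap_deg(a):
    """Wrap an angle to the interval [-180, 180) degrees."""
    return (a + 180.0) % 360.0 - 180.0


def bearing(pose, point):
    """Visual direction (deg) of a world point in the vehicle frame.

    pose: (x, y, heading_deg) of the vehicle in world coordinates.
    """
    x, y, heading_deg = pose
    world = math.degrees(math.atan2(point[1] - y, point[0] - x))
    return wrap_deg(world - heading_deg)


def fixation_velocity(angle_start, angle_end, duration_s):
    """Mean eye rotational velocity (deg/s) over one fixation."""
    return wrap_deg(angle_end - angle_start) / duration_s


def pose_on_circle(radius, phi_deg):
    """Hypothetical vehicle pose after turning left through phi_deg."""
    phi = math.radians(phi_deg)
    return (radius * math.sin(phi), radius * (1.0 - math.cos(phi)), phi_deg)


# Idealized left-hand curve: target and exit point both lie on the path.
target = pose_on_circle(50.0, 40.0)[:2]
exit_point = pose_on_circle(50.0, 60.0)[:2]
start, end = pose_on_circle(50.0, 0.0), pose_on_circle(50.0, 5.0)
duration = 0.3  # s, hypothetical fixation duration

gaze_start, gaze_end = bearing(start, target), bearing(end, target)
v_vehicle = fixation_velocity(gaze_start, gaze_end, duration)
v_exit = fixation_velocity(
    gaze_start - bearing(start, exit_point),
    gaze_end - bearing(end, exit_point),
    duration,
)
```

In this idealized geometry `v_vehicle` is negative (gaze drifts to the right in a left-hand curve) while `v_exit` is zero up to rounding, which is the qualitative signature of a "staircase": pursuit-like in the vehicle frame, stable in the rotating exit-point frame.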

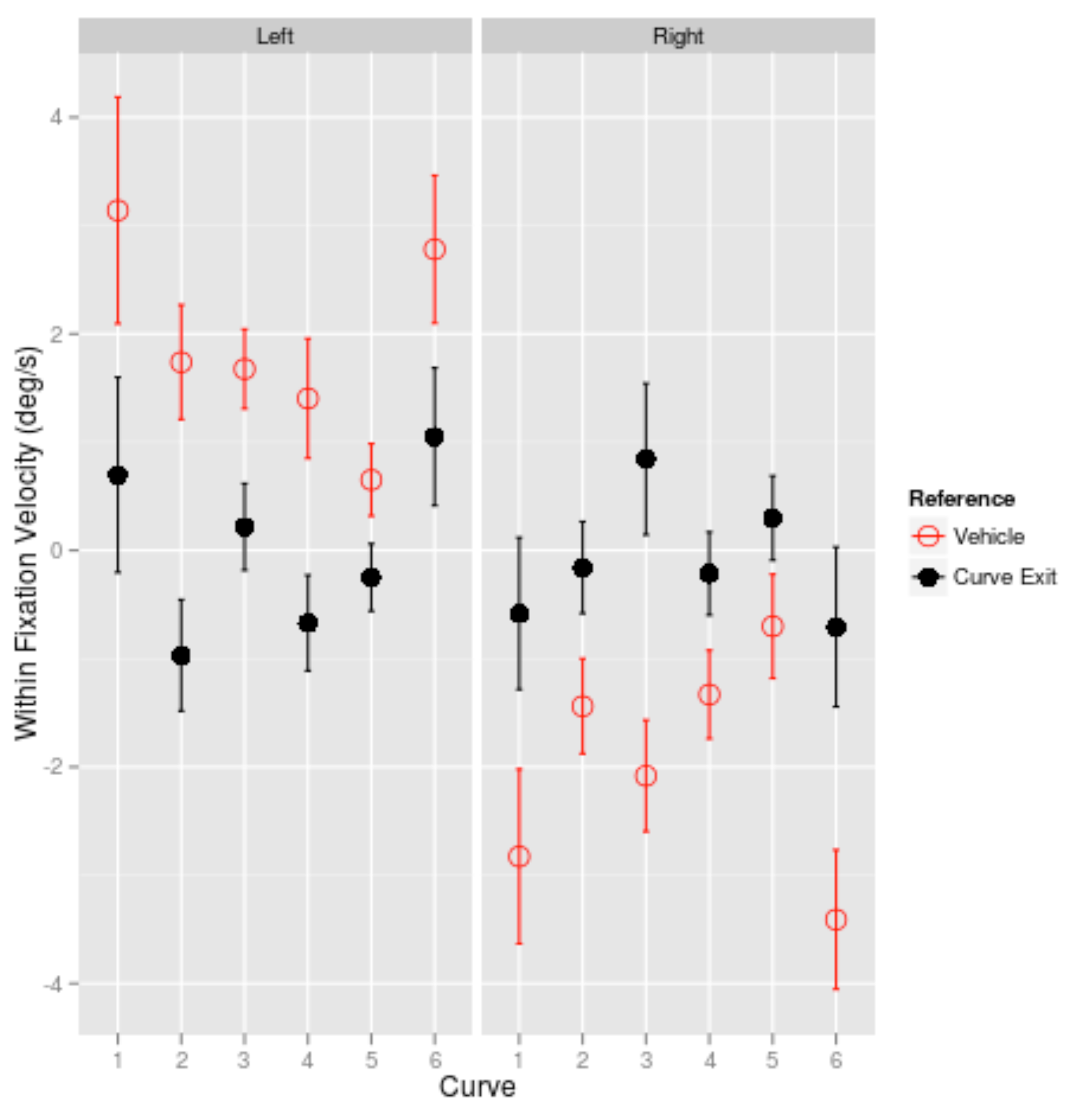

Figure 3 shows the data for all participants, for all curves analyzed. We can see the fixations are stable (“fixed”, mean gaze velocities 0-1 deg/s) in the rotating allocentric reference frame (black), but “pursuit like” (mean gaze velocities 1-4 deg/s) in the vehicle frame of reference (red).

This result was tested for statistical significance with repeated measures ANOVA (curve direction (2) x curve (6) x reference (2)). The relevant measure is the significant interaction of curve direction (left vs. right) and reference (vehicle vs. exit) (F(1,17)=616.71, p < 0.001), which shows that within-fixation movements are consistent with the drivers choosing a target point on the road, and fixating it with a pursuit movement.

Discussion

The property of movement/stability of the eyes during fixation is always relative to the choice of a frame of reference in which the eye-movement is characterized. In driving, the eyes rotate during curve entry, the angular position of each fixation becoming increasingly more eccentric due to orientation towards the tangent point region. But even within “fixation”, the eye rotates (relative to vehicle), making these tangent point region “fixations” resemble optokinetic nystagmus – a smooth pursuit movement opposite to the direction of self-rotation and fast saccadic re-foveation of the road. In other words, even during “fixation”, gaze is anything but stable with respect to vehicle/body axis. This pursuit-like appearance often disappears, however, when the data are transformed into a rotating reference frame, referenced to a point on the planned future trajectory of the vehicle: the exit point of the curve. (This point was chosen as reference, as it is the only point on the future path of the vehicle which is visible through the entire length of the curve). This complex pattern must be coordinated by the oculomotor center responsible for directing gaze to objects and locations in apparent motion relative to self. It also has implications with respect to steering models accounting for vehicular control.

Several oculomotor mechanisms at different levels of neural and cognitive organization could produce this pattern.

Authié and Mestre (2011) interpret the similar eye-movement pattern they found in their simulator study in terms of a retinally driven optokinetic reflex overlaid on endogenously targeting the tangent point. In other words, the driver “tries” to foveate the tangent point, but gaze is exogenously “captured” by (local) image flow on the retina. While their (and the present) data are qualitatively compatible with this hypothesis, we feel that the stability of gaze in the allocentric frame makes a case for arguing that the drivers are looking at some point in the road scene fixed in the allocentric frame of reference – likely a point on the future path over which they wish to pass. This alternative explanation – that the drivers actually target fixed points on the future path which happen to lie in the vicinity of the tangent point, and track them for a short while (the duration of a single fixation/pursuit), then select another one – is based on one of the alternative steering models invoked to explain “tangent point orientation”, the gaze polling model of Wann & Swapp (2000) (see also Kim and Turvey, 1999; Wann & Wilkie, 2004; Wilkie et al., 2008). In this model visual flow on the retina is used to steer the car: the driver fixates a target point on the road she wishes to pass over and steers so that the visual flow lines remain straight. This state corresponds to a (circular) trajectory that will take her to the target point. Under these conditions, moreover, all those flow lines that fall on the observer's future path will be vertical.

Such planned pursuit of a target location represented in an allocentric frame of reference could recruit an optokinetic reflex, but also probably involve predictive pursuit generators, i.e. utilizing signals from cortical motion representations in area MT implicated in pursuit (for review, see Krauzlis, 2004), and the representation of (planned) ego-path in the parietal cortex (cf. Billington et al., 2011). Eye movement would in this case be driven endogenously by a predictive model of target motion, where the “target” is an allocentric location moving in the egocentric frame of reference and falling on the future path. Indeed, both mechanisms could conceivably be used in control of the eyes, with brain systems at different levels of oculomotor organization working in synergy.

To our knowledge, only one previous study explicitly relates on-road data to the gaze polling hypothesis. Kandil et al. (2009) claimed gaze polling did not “appear” in their visualizations. However, no data were shown, nor any parameters computed to bolster this claim. The authors merely stated that casual observation of the gaze position (overlaid on the forward looking video image) did not reveal any immediately apparent pattern that could be interpreted as gaze polling. However, we would like to point out that the character of the “staircase” pattern is not apparent in raw visualization or even in the distance based representation (Results, Figure 2, top). Only when the appropriate coordinate transformations are done does the pattern become “apparent”. What is more, the time scale of the phenomenon is also at the level of individual fixations, making any phenomenological analysis in terms of video-overlay challenging (as illustrated in the Appendix).

Nevertheless, while we consider our results to be most in agreement with the “gaze polling” model – which in contrast to other steering models explicitly predicts fixations towards the future path, and their pursuit-like character in vehicle coordinates but stability in rotating allocentric coordinates – it would be premature to interpret them as definitive proof of gaze polling. (Also, our main concern here was to characterize the dynamical pattern of guiding fixations in different frames of reference, not to identify definitively whether their visual target in the external world is the tangent point, or some reference point on the road in its vicinity.)

Conclusions

The next step for field studies would be to model the environment in even more spatial and temporal detail to identify the pattern of motion of the individual fixations’ landing locations themselves (this is not trivial, as fixations are both extended in time and non-stationary, as shown by our data), and to relate this movement also to visual flow in the road scene image in real driving. Also, the flow speed (at different driving speeds and rates of rotation) remains to be quantified, and its relation to retinal blurring and to the biophysical constraints on the speed and accuracy of eye movements remains to be clarified.

It may be possible to determine whether the pursuit movements have a functional role (supporting a gaze polling strategy), or whether they are a reflexive movement preventing maintenance of tangent point fixation (a tangent point strategy). This could be studied, for example, in a simulator design where gaze is directed in the tangent point region without an active steering task, having the subject simply monitor the tangent point location (for the occasional appearance of a stimulus requiring foveal vision for discrimination). It would then be possible to see whether reflexive pursuit would still be elicited exogenously (in spite of the task), or whether successful tracking of the tangent point would occur. (Authié and Mestre, 2012, had this type of design and they observed no optokinesis – but they attribute this to bottom-up stabilization due to the presence of a fixation cross, an external stimulus to help fixation, rather than top-down task dependence.) If a top-down (task) dependent fixation of the tangent point area in the absence of a visual stimulus is possible, this would suggest the pursuit is not elicited mandatorily, and may be part of an active steering strategy recruiting the optokinetic reflex and integrating it into performance of the steering task.

Such studies, when carried out, will create a more accurate picture of human gaze behavior in this important and intriguing class of naturalistic behavior than can the more traditional methods of merely identifying hypothetical gaze targets and relating gaze to AOIs based on them.