Eye-Based Recognition of User Traits and States—A Systematic State-of-the-Art Review

Abstract

1. Introduction

2. Foundations

3. Materials and Methods

3.1. Systematic Literature Review

- (eye* OR gaze* OR pupil*)

- AND (cognit* OR mental OR affect* OR emotion* OR physiolog* OR psycholog* OR personality)

- AND (state OR trait OR characteristic*)

- AND (recogni* OR detect* OR classif* OR model* OR predict*).
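The following Python sketch applies the search string above to a single title or abstract, which makes its matching logic concrete. It assumes that a trailing `*` denotes right-hand truncation, that terms without `*` must match as whole words, and that matching is case-insensitive. The names `QUERY_GROUPS`, `term_to_regex`, `compile_query`, and `matches` are hypothetical illustrations. The sketch does not reproduce the exact syntax or behavior of any particular literature database.

```python
import re

# Search string from Section 3.1: four OR-groups joined by AND.
# Assumption: a trailing "*" is right-hand truncation, matching is
# case-insensitive, and unstarred terms must occur as whole words.
# Real databases differ in these details (e.g., automatic plurals).
QUERY_GROUPS = [
    ["eye*", "gaze*", "pupil*"],
    ["cognit*", "mental", "affect*", "emotion*",
     "physiolog*", "psycholog*", "personality"],
    ["state", "trait", "characteristic*"],
    ["recogni*", "detect*", "classif*", "model*", "predict*"],
]


def term_to_regex(term: str) -> str:
    """Translate one search term into a regular-expression fragment."""
    if term.endswith("*"):
        # Truncated term: the stem followed by zero or more word characters.
        return r"\b" + re.escape(term[:-1]) + r"\w*"
    # Exact term: must be bounded by non-word characters on both sides.
    return r"\b" + re.escape(term) + r"\b"


def compile_query(groups: list[list[str]]) -> list[re.Pattern]:
    """Compile each OR-group into one case-insensitive alternation."""
    return [
        re.compile("|".join(term_to_regex(t) for t in group), re.IGNORECASE)
        for group in groups
    ]


def matches(text: str, compiled: list[re.Pattern]) -> bool:
    """A record matches only if every AND-group has at least one hit."""
    return all(pattern.search(text) for pattern in compiled)


if __name__ == "__main__":
    query = compile_query(QUERY_GROUPS)
    titles = [
        "Predicting cognitive load state from pupil diameter",
        "Gaze-based interaction techniques for public displays",
    ]
    for title in titles:
        print(matches(title, query), "|", title)
```

Run as a script, the first title is reported as a match and the second is not, because it contains no term from the second group. Under these strict assumptions, "traits" does not match the unstarred term "trait". Some databases add plural forms automatically, so hit counts from this sketch would not necessarily equal those reported by a database.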

3.2. Framework Creation

4. Results

4.1. Descriptive Results

4.2. Framework

4.2.1. Dimensions: Task and Context

4.2.2. Dimension: Technology and Data Processing

4.2.3. Dimension: Recognition Targets

5. Discussion

5.1. Task

5.2. Context

5.3. Technology and Data Processing

5.4. Recognition Target

5.5. General Suggestions

5.6. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AOI | Area of interest |
| AUC | Area under the curve |
| ECG | Electrocardiogram |
| EDA | Electrodermal activity |
| EEG | Electroencephalogram |
| GSR | Galvanic skin response |
| HCI | Human–computer interaction |
| ML | Machine learning |
| ROC | Receiver operating characteristic |
| SLR | Systematic literature review |
| SVM | Support vector machine |
| UI | User interface |
| WMC | Working memory capacity |

Appendix A

Appendix A.1. List of All Identified Publications

- Abbad-Andaloussi et al. [49]
- Abdelrahman et al. [59]
- Abdurrahman et al. [56]
- Alcañiz et al. [91]
- Alhargan et al. [8]
- Alhargan et al. [69]
- Appel et al. [35]
- Appel et al. [92]
- Appel et al. [7]
- Aracena et al. [39]
- Babiker et al. [93]
- Bao et al. [57]
- Bao et al. [94]
- Barral et al. [65]
- Behroozi and Parnin [50]
- Berkovsky et al. [10]
- Bixler and D’Mello [95]
- Bozkir et al. [43]
- Bühler et al. [51]
- Castner et al. [47]
- Chakraborty et al. [36]
- Chen et al. [46]
- Chen et al. [96]
- Chen and Epps [97]
- Conati et al. [41]
- Conati et al. [98]
- Dumitriu et al. [99]
- Fenoglio et al. [100]
- Gong et al. [101]
- Gong et al. [34]
- Hoppe et al. [64]
- Hoppe et al. [85]
- Horng and Lin [83]
- Hosp et al. [48]
- Hutt et al. [102]
- Hutt et al. [103]
- Hutt et al. [9]
- Jiménez-Guarneros and Fuentes-Pineda [54]
- Jyotsna et al. [104]
- Katsini et al. [68]
- Kim et al. [81]
- Ktistakis et al. [72]
- Kwok et al. [105]
- Lallé et al. [73]
- Zhao et al. [106]
- Li et al. [84]
- Li et al. [37]
- Liang et al. [77]
- Liu et al. [78]
- Liu et al. [107]
- Liu et al. [108]
- Lobo et al. [109]
- Lu et al. [61]
- Lufimpu-Luviya et al. [86]
- Luong and Holz [110]
- Luong et al. [111]
- Ma et al. [112]
- Mills et al. [113]
- Mills et al. [63]
- Misra et al. [79]
- Miyaji et al. [80]
- Mou et al. [42]
- Oppelt et al. [60]
- Raptis et al. [67]
- Reich et al. [44]
- Ren et al. [114]
- Ren et al. [52]
- Salima et al. [45]
- Salminen et al. [74]
- Sims and Conati [75]
- Soleymani et al. [53]
- Steichen et al. [66]
- Stiber et al. [76]
- Tabbaa et al. [55]
- Taib et al. [40]
- Tao and Lu [115]
- Tarnowski et al. [70]
- Wang et al. [116]
- Wu et al. [117]
- Qi et al. [16]
- Xing et al. [58]
- Yang et al. [118]
- Zhai et al. [38]
- Zhai and Barreto [119]
- Zhang et al. [120]
- Li et al. [121]
- Zheng et al. [62]
- Zheng et al. [71]
- Zhong and Hou [122]
- Zhou et al. [82]

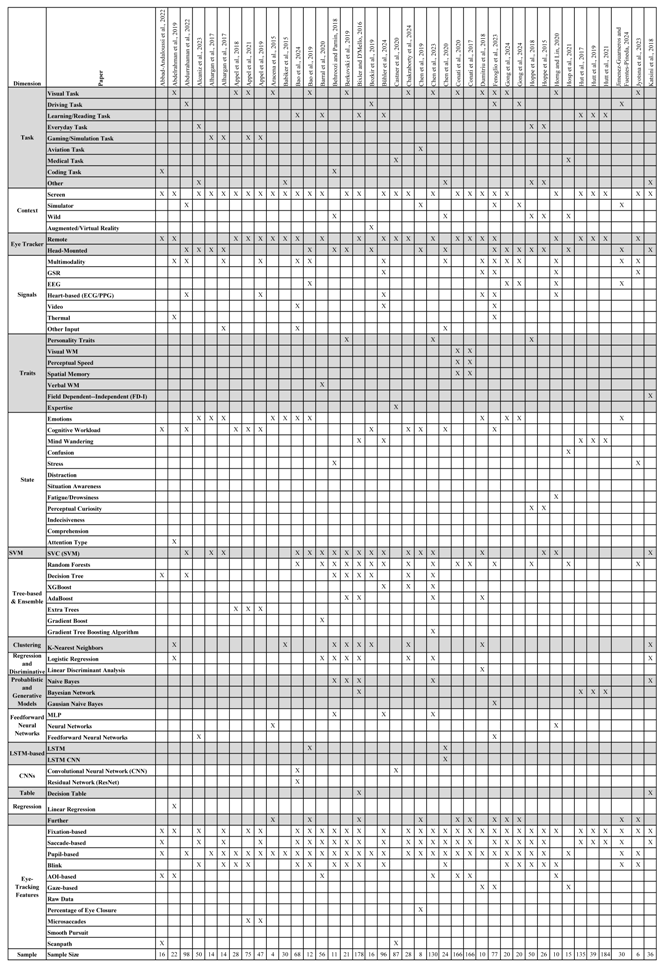

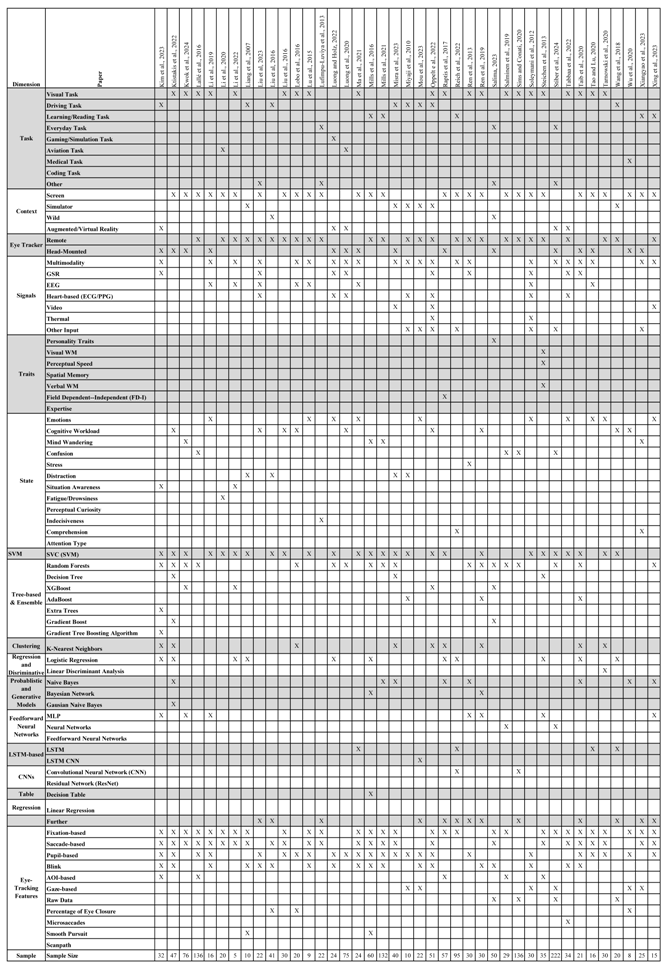

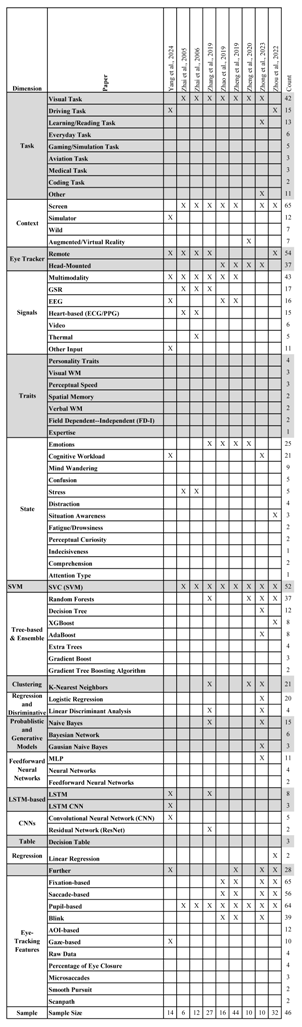

Appendix A.2. Concept Matrix

References

- Hettinger, L.J.; Branco, P.; Miguel Encarnacao, L.; Bonato, P. Neuroadaptive technologies: Applying neuroergonomics to the design of advanced interfaces. Theor. Issues Ergon. Sci. 2003, 4, 220–237.

- Picard, R.W. Affective computing: Challenges. Int. J. Hum. Comput. Stud. 2003, 59, 55–64.

- Schultz, T.; Maedche, A. Biosignals meet Adaptive Systems. SN Appl. Sci. 2023, 5, 234.

- Fairclough, S.H. Fundamentals of physiological computing. Interact. Comput. 2009, 21, 133–145.

- Steyer, R.; Schmitt, M.; Eid, M. Latent state-trait theory and research in personality and individual differences. Eur. J. Personal. 1999, 13, 389–408.

- Duchowski, A.T. Eye Tracking Methodology; Springer International Publishing: Cham, Switzerland, 2017.

- Appel, T.; Sevcenko, N.; Wortha, F.; Tsarava, K.; Moeller, K.; Ninaus, M.; Kasneci, E.; Gerjets, P. Predicting Cognitive Load in an Emergency Simulation Based on Behavioral and Physiological Measures. In Proceedings of the 2019 International Conference on Multimodal Interaction, Suzhou, China, 14–18 October 2019; pp. 154–163.

- Alhargan, A.; Cooke, N.; Binjammaz, T. Multimodal Affect Recognition in an Interactive Gaming Environment Using Eye Tracking and Speech Signals. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; ICMI ’17. pp. 479–486.

- Hutt, S.; Krasich, K.; Brockmole, J.R.; D’Mello, S.K. Breaking out of the Lab: Mitigating Mind Wandering with Gaze-Based Attention-Aware Technology in Classrooms. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021. CHI ’21.

- Berkovsky, S.; Taib, R.; Koprinska, I.; Wang, E.; Zeng, Y.; Li, J.; Kleitman, S. Detecting Personality Traits Using Eye-Tracking Data. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; CHI ’19. pp. 1–12.

- Skaramagkas, V.; Giannakakis, G.; Ktistakis, E.; Manousos, D.; Karatzanis, I.; Tachos, N.S.; Tripoliti, E.; Marias, K.; Fotiadis, D.I.; Tsiknakis, M. Review of Eye Tracking Metrics Involved in Emotional and Cognitive Processes. IEEE Rev. Biomed. Eng. 2023, 16, 260–277.

- Steichen, B. Computational Methods to Infer Human Factors for Adaptation and Personalization Using Eye Tracking. In A Human-Centered Perspective of Intelligent Personalized Environments and Systems; Springer: Berlin/Heidelberg, Germany, 2024; pp. 183–204.

- Pope, A.T.; Bogart, E.H.; Bartolome, D.S. Biocybernetic system evaluates indices of operator engagement in automated task. Biol. Psychol. 1995, 40, 187–195.

- Allanson, J.; Fairclough, S.H. A research agenda for physiological computing. Interact. Comput. 2004, 16, 857–878.

- Riedl, R.; Léger, P.M. Fundamentals of NeuroIS Information Systems and the Brain; Springer: Berlin/Heidelberg, Germany, 2016.

- Qi, X.; Lu, Q.; Pan, W.; Zhao, Y.; Zhu, R.; Dong, M.; Chang, Y.; Lv, Q.; Dick, R.P.; Yang, F.; et al. CASES: A Cognition-Aware Smart Eyewear System for Understanding How People Read. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2023, 7, 115.

- Majaranta, P.; Bulling, A. Eye Tracking and Eye-Based Human–Computer Interaction. In Advances in Physiological Computing; Fairclough, S., Gilleade, K., Eds.; Springer: London, UK, 2014; pp. 39–65.

- Morimoto, C.H.; Mimica, M.R. Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 2005, 98, 4–24.

- Holmqvist, K. Eye Tracking: A Comprehensive Guide to Methods and Measures; Oxford University Press: New York, NY, USA, 2011.

- Bulling, A.; Ward, J.A.; Gellersen, H.; Oster, G.T. Eye Movement Analysis for Activity Recognition Using Electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 741–753.

- Duchowski, A.T. A breadth-first survey of eye-tracking applications. Behav. Res. Methods Instruments Comput. 2002, 34, 455–470.

- Salvucci, D.D.; Goldberg, J.H. Identifying fixations and saccades in eye-tracking protocols. In Proceedings of the Symposium on Eye Tracking Research & Applications—ETRA ’00, Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 71–78.

- Srivastava, N.; Newn, J.; Velloso, E. Combining Low and Mid-Level Gaze Features for Desktop Activity Recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 189.

- Just, M.A.; Carpenter, P.A. A theory of reading: From eye fixations to comprehension. Psychol. Rev. 1980, 87, 329–354.

- Beatty, J. Task-evoked pupillary responses, processing load, and the structure of processing resources. Psychol. Bull. 1982, 91, 276–292.

- Partala, T.; Jokiniemi, M.; Surakka, V. Pupillary responses to emotionally provocative stimuli. In Proceedings of the Symposium on Eye Tracking Research & Applications—ETRA ’00, Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 123–129.

- Loewe, N.; Nadj, M. Physio-Adaptive Systems - A State-of-the-Art Review and Future Research Directions. In Proceedings of the ECIS 2020 Proceedings—Twenty-Eighth European Conference on Information Systems, Marrakesh, Morocco, 15–17 June 2020; p. 19.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Kitchenham, B.; Charters, S. Guidelines for performing Systematic Literature Reviews in Software Engineering. In Proceedings of the 28th international Conference on Software Engineering, Shanghai, China, 20–28 May 2007.

- Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160.

- Nickerson, R.C.; Varshney, U.; Muntermann, J. A method for taxonomy development and its application in information systems. Eur. J. Inf. Syst. 2013, 22, 336–359.

- Benyon, D. Designing Interactive Systems: A Comprehensive Guide to HCI, UX and Interaction Design; Pearson: London, UK, 2014; Number 3.

- Wolfswinkel, J.F.; Furtmueller, E.; Wilderom, C.P. Using grounded theory as a method for rigorously reviewing literature. Eur. J. Inf. Syst. 2013, 22, 45–55.

- Gong, X.; Chen, C.L.; Hu, B.; Zhang, T. CiABL: Completeness-induced Adaptative Broad Learning for Cross-Subject Emotion Recognition with EEG and Eye Movement Signals. IEEE Trans. Affect. Comput. 2024, 15, 1970–1984.

- Appel, T.; Scharinger, C.; Gerjets, P.; Kasneci, E. Cross-subject workload classification using pupil-related measures. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, Warsaw, Poland, 14–17 June 2018; pp. 1–8.

- Chakraborty, S.; Kiefer, P.; Raubal, M. Estimating Perceived Mental Workload From Eye-Tracking Data Based on Benign Anisocoria. IEEE Trans. Hum.-Mach. Syst. 2024, 54, 499–507.

- Li, R.; Cui, J.; Gao, R.; Suganthan, P.N.; Sourina, O.; Wang, L.; Chen, C.H. Situation Awareness Recognition Using EEG and Eye-Tracking data: A pilot study. In Proceedings of the 2022 International Conference on Cyberworlds (CW), Kanazawa, Japan, 27–29 September 2022; pp. 209–212.

- Zhai, J.; Barreto, A.B.; Chin, C.; Li, C. Realization of stress detection using psychophysiological signals for improvement of human-computer interactions. In Proceedings of the IEEE SoutheastCon 2005, Fort Lauderdale, FL, USA, 8–10 April 2005; pp. 415–420.

- Aracena, C.; Basterrech, S.; Snášel, V.; Velasquez, J. Neural Networks for Emotion Recognition Based on Eye Tracking Data. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 2632–2637.

- Taib, R.; Berkovsky, S.; Koprinska, I.; Wang, E.; Zeng, Y.; Li, J. Personality Sensing: Detection of Personality Traits Using Physiological Responses to Image and Video Stimuli. ACM Trans. Interact. Intell. Syst. 2020, 10, 18.

- Conati, C.; Lallé, S.; Rahman, M.A.; Toker, D. Comparing and Combining Interaction Data and Eye-tracking Data for the Real-time Prediction of User Cognitive Abilities in Visualization Tasks. ACM Trans. Interact. Intell. Syst. 2020, 10, 12.

- Mou, L.; Zhao, Y.; Zhou, C.; Nakisa, B.; Rastgoo, M.N.; Ma, L.; Huang, T.; Yin, B.; Jain, R.; Gao, W. Driver Emotion Recognition With a Hybrid Attentional Multimodal Fusion Framework. IEEE Trans. Affect. Comput. 2023, 14, 2970–2981.

- Bozkir, E.; Geisler, D.; Kasneci, E. Person Independent, Privacy Preserving, and Real Time Assessment of Cognitive Load using Eye Tracking in a Virtual Reality Setup. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 1834–1837.

- Reich, D.R.; Prasse, P.; Tschirner, C.; Haller, P.; Goldhammer, F.; Jäger, L.A. Inferring Native and Non-Native Human Reading Comprehension and Subjective Text Difficulty from Scanpaths in Reading. In Proceedings of the 2022 Symposium on Eye Tracking Research and Applications, Seattle, WA, USA, 8–11 June 2022; pp. 1–8.

- Salima, M.; M’hammed, S.; Messaadia, M.; Benslimane, S.M. Machine Learning for Predicting Personality Traits from Eye Tracking. In Proceedings of the 2023 International Conference on Decision Aid Sciences and Applications (DASA), Annaba, Algeria, 6–17 September 2023; pp. 126–130.

- Chen, J.; Zhang, Q.; Cheng, L.; Gao, X.; Ding, L. A Cognitive Load Assessment Method Considering Individual Differences in Eye Movement Data. In Proceedings of the 2019 IEEE 15th International Conference on Control and Automation (ICCA), Edinburgh, UK, 16–19 July 2019; pp. 295–300.

- Castner, N.; Kuebler, T.C.; Scheiter, K.; Richter, J.; Eder, T.; Huettig, F.; Keutel, C.; Kasneci, E. Deep semantic gaze embedding and scanpath comparison for expertise classification during OPT viewing. In Proceedings of the ACM Symposium on Eye Tracking Research and Applications, Stuttgart, Germany, 2–5 June 2020; pp. 1–10.

- Hosp, B.; Yin, M.S.; Haddawy, P.; Watcharopas, R.; Sa-Ngasoongsong, P.; Kasneci, E. States of Confusion: Eye and Head Tracking Reveal Surgeons’ Confusion during Arthroscopic Surgery. In Proceedings of the 2021 International Conference on Multimodal Interaction, Montréal, QC, Canada, 18–22 October 2021; ICMI ’21. pp. 753–757.

- Abbad-Andaloussi, A.; Sorg, T.; Weber, B. Estimating developers’ cognitive load at a fine-grained level using eye-tracking measures. In Proceedings of the 30th IEEE/ACM International Conference on Program Comprehension, Pittsburgh, PA, USA, 16–17 May 2022; pp. 111–121.

- Behroozi, M.; Parnin, C. Can We Predict Stressful Technical Interview Settings through Eye-Tracking? In Proceedings of the Workshop on Eye Movements in Programming, Warsaw, Poland, 15 June 2018. EMIP ’18.

- Bühler, B.; Bozkir, E.; Deininger, H.; Goldberg, P.; Gerjets, P.; Trautwein, U.; Kasneci, E. Detecting Aware and Unaware Mind Wandering During Lecture Viewing: A Multimodal Machine Learning Approach Using Eye Tracking, Facial Videos and Physiological Data. In Proceedings of the 26th International Conference on Multimodal Interaction, San Jose, Costa Rica, 4–8 November 2024; ICMI ’24. pp. 244–253.

- Ren, P.; Barreto, A.; Gao, Y.; Adjouadi, M. Affective Assessment by Digital Processing of the Pupil Diameter. IEEE Trans. Affect. Comput. 2013, 4, 2–14.

- Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A multimodal database for affect recognition and implicit tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

- Jiménez-Guarneros, M.; Fuentes-Pineda, G. CFDA-CSF: A Multi-Modal Domain Adaptation Method for Cross-Subject Emotion Recognition. IEEE Trans. Affect. Comput. 2024, 15, 1502–1513.

- Tabbaa, L.; Searle, R.; Bafti, S.M.; Hossain, M.M.; Intarasisrisawat, J.; Glancy, M.; Ang, C.S. VREED: Virtual Reality Emotion Recognition Dataset Using Eye Tracking & Physiological Measures. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 178.

- Abdurrahman, U.A.; Zheng, L.; Sharifai, A.G.; Muraina, I.D. Heart Rate and Pupil Dilation As Reliable Measures of Neuro-Cognitive Load Classification. In Proceedings of the 2022 International Conference on Advancements in Smart, Secure and Intelligent Computing (ASSIC), Bhubaneswar, India, 19–20 November 2022; pp. 1–7.

- Bao, J.; Tao, X.; Zhou, Y. An Emotion Recognition Method Based on Eye Movement and Audiovisual Features in MOOC Learning Environment. IEEE Trans. Comput. Soc. Syst. 2024, 11, 171–183.

- Xing, B.; Wang, K.; Song, X.; Pan, Y.; Shi, Y.; Pang, S. User Emotion Status Recognition in MOOCs Study Environment Based on Eye Tracking and Video Feature Fusion. In Proceedings of the 2023 15th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 26–27 August 2023; pp. 88–91.

- Abdelrahman, Y.; Khan, A.A.; Newn, J.; Velloso, E.; Safwat, S.A.; Bailey, J.; Bulling, A.; Vetere, F.; Schmidt, A. Classifying Attention Types with Thermal Imaging and Eye Tracking. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 69.

- Oppelt, M.P.; Foltyn, A.; Deuschel, J.; Lang, N.R.; Holzer, N.; Eskofier, B.M.; Yang, S.H. ADABase: A Multimodal Dataset for Cognitive Load Estimation. Sensors 2022, 23, 340.

- Lu, Y.; Zheng, W.L.; Li, B.; Lu, B.L. Combining eye movements and EEG to enhance emotion recognition. In Proceedings of the IJCAI International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015; pp. 1170–1176.

- Zheng, W.L.; Liu, W.; Lu, Y.; Lu, B.L.; Cichocki, A. EmotionMeter: A Multimodal Framework for Recognizing Human Emotions. IEEE Trans. Cybern. 2019, 49, 1110–1122.

- Mills, C.; Gregg, J.; Bixler, R.; D’Mello, S.K. Eye-Mind reader: An intelligent reading interface that promotes long-term comprehension by detecting and responding to mind wandering. Hum.-Comput. Interact. 2021, 36, 306–332.

- Hoppe, S.; Loetscher, T.; Morey, S.A.; Bulling, A. Eye movements during everyday behavior predict personality traits. Front. Hum. Neurosci. 2018, 12, 328195.

- Barral, O.; Lallé, S.; Guz, G.; Iranpour, A.; Conati, C. Eye-Tracking to Predict User Cognitive Abilities and Performance for User-Adaptive Narrative Visualizations. In Proceedings of the 2020 International Conference on Multimodal Interaction, Virtual, 25–29 October 2020; ICMI ’20. pp. 163–173.

- Steichen, B.; Carenini, G.; Conati, C. User-adaptive information visualization - Using Eye Gaze Data to Infer Visualization Tasks and User Cognitive Abilities. In Proceedings of the 2013 International Conference on Intelligent User Interfaces, Los Angeles, CA, USA, 19–22 March 2013; IUI ’13. pp. 317–328.

- Raptis, G.E.; Katsini, C.; Belk, M.; Fidas, C.; Samaras, G.; Avouris, N. Using Eye Gaze Data and Visual Activities to Infer Human Cognitive Styles: Method and Feasibility Studies. In Proceedings of the 25th Conference on User Modeling, Adaptation and Personalization, Bratislava, Slovakia, 9–12 July 2017; UMAP ’17. pp. 164–173.

- Katsini, C.; Fidas, C.; Raptis, G.E.; Belk, M.; Samaras, G.; Avouris, N. Eye Gaze-driven Prediction of Cognitive Differences during Graphical Password Composition. In Proceedings of the 23rd International Conference on Intelligent User Interfaces, Tokyo, Japan, 7–11 March 2018; IUI ’18. pp. 147–152.

- Alhargan, A.; Cooke, N.; Binjammaz, T. Affect recognition in an interactive gaming environment using eye tracking. In Proceedings of the 2017 7th International Conference on Affective Computing and Intelligent Interaction, ACII 2017, San Antonio, TX, USA, 23–26 October 2017; pp. 285–291.

- Tarnowski, P.; Kołodziej, M.; Majkowski, A.; Rak, R.J. Eye-Tracking Analysis for Emotion Recognition. Comput. Intell. Neurosci. 2020, 2020, 2909267.

- Zheng, L.J.; Mountstephens, J.; Teo, J. Four-class emotion classification in virtual reality using pupillometry. J. Big Data 2020, 7, 43.

- Ktistakis, E.; Skaramagkas, V.; Manousos, D.; Tachos, N.S.; Tripoliti, E.; Fotiadis, D.I.; Tsiknakis, M. COLET: A dataset for COgnitive workLoad estimation based on eye-tracking. Comput. Methods Programs Biomed. 2022, 224, 106989.

- Lallé, S.; Conati, C.; Carenini, G. Predicting confusion in information visualization from eye tracking and interaction data. In Proceedings of the IJCAI International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 2529–2535.

- Salminen, J.; Nagpal, M.; Kwak, H.; An, J.; Jung, S.g.; Jansen, B.J. Confusion Prediction from Eye-Tracking Data: Experiments with Machine Learning. In Proceedings of the 9th International Conference on Information Systems and Technologies, Cairo, Egypt, 24–26 March 2019. ICIST 2019.

- Sims, S.D.; Conati, C. A Neural Architecture for Detecting User Confusion in Eye-Tracking Data. In Proceedings of the 2020 International Conference on Multimodal Interaction, Virtual, 25–29 October 2020; ICMI ’20. pp. 15–23.

- Stiber, M.; Bohus, D.; Andrist, S. “Uh, This One?”: Leveraging Behavioral Signals for Detecting Confusion during Physical Tasks. In Proceedings of the 26th International Conference on Multimodal Interaction, San Jose, Costa Rica, 4–8 November 2024; ICMI ’24. pp. 194–203.

- Liang, Y.; Reyes, M.L.; Lee, J.D. Real-Time Detection of Driver Cognitive Distraction Using Support Vector Machines. IEEE Trans. Intell. Transp. Syst. 2007, 8, 340–350.

- Liu, T.; Yang, Y.; Huang, G.B.; Yeo, Y.K.; Lin, Z. Driver Distraction Detection Using Semi-Supervised Machine Learning. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1108–1120.

- Misra, A.; Samuel, S.; Cao, S.; Shariatmadari, K. Detection of Driver Cognitive Distraction Using Machine Learning Methods. IEEE Access 2023, 11, 18000–18012.

- Miyaji, M.; Kawanaka, H.; Oguri, K. Study on effect of adding pupil diameter as recognition features for driver’s cognitive distraction detection. In Proceedings of the 2010 7th International Symposium on Communication Systems, Networks & Digital Signal Processing (CSNDSP 2010), Newcastle, UK, 21–23 July 2010; pp. 406–411.

- Kim, G.; Lee, J.; Yeo, D.; An, E.; Kim, S. Physiological Indices to Predict Driver Situation Awareness in VR. In Proceedings of the Adjunct 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing & the 2023 ACM International Symposium on Wearable Computing, Cancun, Mexico, 8–12 October 2023; UbiComp/ISWC ’23 Adjunct. pp. 40–45.

- Zhou, F.; Yang, X.J.; De Winter, J.C. Using Eye-Tracking Data to Predict Situation Awareness in Real Time during Takeover Transitions in Conditionally Automated Driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 2284–2295.

- Horng, G.J.; Lin, J.Y. Using Multimodal Bio-Signals for Prediction of Physiological Cognitive State Under Free-Living Conditions. IEEE Sens. J. 2020, 20, 4469–4484.

- Li, F.; Chen, C.H.; Xu, G.; Khoo, L.P. Hierarchical Eye-Tracking Data Analytics for Human Fatigue Detection at a Traffic Control Center. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 465–474.

- Hoppe, S.; Loetscher, T.; Morey, S.; Bulling, A. Recognition of Curiosity Using Eye Movement Analysis. In Proceedings of the Adjunct 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; UbiComp/ISWC ’15 Adjunct. pp. 185–188.

- Lufimpu-Luviya, Y.; Merad, D.; Paris, S.; Drai-Zerbib, V.; Baccino, T.; Fertil, B. A Regression-Based Method for the Prediction of the Indecisiveness Degree through Eye Movement Patterns. In Proceedings of the 2013 Conference on Eye Tracking South Africa, Cape Town, South Africa, 29–31 August 2013; ETSA ’13. pp. 32–38.

- Zheng, W.L.; Dong, B.N.; Lu, B.L. Multimodal emotion recognition using EEG and eye tracking data. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC 2014, Chicago, IL, USA, 26–30 August 2014; pp. 5040–5043.

- Calvo, R.; Peters, D. Positive Computing: Technology for Wellbeing and Human Potential; The MIT Press: Cambridge, MA, USA, 2014.

- Hassenzahl, M. Experience Design: Technology for All the Right Reasons; Morgan & Claypool Publishers: San Rafael, CA, USA, 2010; Volume 3, pp. 1–95.

- Riva, G.; Baños, R.M.; Botella, C.; Wiederhold, B.K.; Gaggioli, A. Positive technology: Using interactive technologies to promote positive functioning. Cyberpsychol. Behav. Soc. Netw. 2012, 15, 69–77.

- Alcañiz, M.; Angrisani, L.; Arpaia, P.; De Benedetto, E.; Duraccio, L.; Gómez-Zaragozá, L.; Marín-Morales, J.; Minissi, M.E. Exploring the Potential of Eye-Tracking Technology for Emotion Recognition: A Preliminary Investigation. In Proceedings of the 2023 IEEE International Conference on Metrology for eXtended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE), Milano, Italy, 25–27 October 2023; pp. 763–768.

- Appel, T.; Gerjets, P.; Hoffman, S.; Moeller, K.; Ninaus, M.; Scharinger, C.; Sevcenko, N.; Wortha, F.; Kasneci, E. Cross-task and Cross-participant Classification of Cognitive Load in an Emergency Simulation Game. IEEE Trans. Affect. Comput. 2021, 14, 1558–1571.

- Babiker, A.; Faye, I.; Prehn, K.; Malik, A. Machine learning to differentiate between positive and negative emotions using pupil diameter. Front. Psychol. 2015, 6, 1921.

- Bao, L.Q.; Qiu, J.L.; Tang, H.; Zheng, W.L.; Lu, B.L. Investigating Sex Differences in Classification of Five Emotions from EEG and Eye Movement Signals. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6746–6749.

- Bixler, R.; D’Mello, S. Automatic gaze-based user-independent detection of mind wandering during computerized reading. User Model. User-Adapt. Interact. 2016, 26, 33–68.

- Chen, L.; Cai, W.; Yan, D.; Berkovsky, S. Eye-tracking-based personality prediction with recommendation interfaces. User Model. User-Adapt. Interact. 2023, 33, 121–157.

- Chen, S.; Epps, J. Multimodal Event-based Task Load Estimation from Wearables. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–9.

- Conati, C.; Lallé, S.; Rahman, M.A.; Toker, D. Further Results on Predicting Cognitive Abilities for Adaptive Visualizations. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 1568–1574.

- Dumitriu, T.; Cîmpanu, C.; Ungureanu, F.; Manta, V.I. Experimental Analysis of Emotion Classification Techniques. In Proceedings of the 2018 IEEE 14th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 6–8 September 2018; pp. 63–70.

- Fenoglio, D.; Josifovski, D.; Gobbetti, A.; Formo, M.; Gjoreski, H.; Gjoreski, M.; Langheinrich, M. Federated Learning for Privacy-aware Cognitive Workload Estimation. In Proceedings of the 22nd International Conference on Mobile and Ubiquitous Multimedia, Vienna, Austria, 3–6 December 2023; MUM ’23. pp. 25–36.

- Gong, X.; Chen, C.L.P.; Zhang, T. Cross-Cultural Emotion Recognition With EEG and Eye Movement Signals Based on Multiple Stacked Broad Learning System. IEEE Trans. Comput. Soc. Syst. 2024, 11, 2014–2025.

- Hutt, S.; Mills, C.; Bosch, N.; Krasich, K.; Brockmole, J.; D’Mello, S. “Out of the Fr-Eye-ing Pan”. In Proceedings of the 25th Conference on User Modeling, Adaptation and Personalization, Bratislava, Slovakia, 9–12 July 2017; pp. 94–103.

- Hutt, S.; Krasich, K.; Mills, C.; Bosch, N.; White, S.; Brockmole, J.R.; D’Mello, S.K. Automated gaze-based mind wandering detection during computerized learning in classrooms. User Model. User-Adapt. Interact. 2019, 29, 821–867.

- Jyotsna, C.; Amudha, J.; Ram, A.; Fruet, D.; Nollo, G. PredictEYE: Personalized Time Series Model for Mental State Prediction Using Eye Tracking. IEEE Access 2023, 11, 128383–128409.

- Kwok, T.C.K.; Kiefer, P.; Schinazi, V.R.; Hoelscher, C.; Raubal, M. Gaze-based detection of mind wandering during audio-guided panorama viewing. Sci. Rep. 2024, 14, 27955.

- Zhao, L.M.; Li, R.; Zheng, W.L.; Lu, B.L. Classification of Five Emotions from EEG and Eye Movement Signals: Complementary Representation Properties. In Proceedings of the 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), San Francisco, CA, USA, 20–23 March 2019; pp. 611–614.

- Liu, X.; Chen, T.; Xie, G.; Liu, G. Contact-free cognitive load recognition based on eye movement. J. Electr. Comput. Eng. 2016, 2016, 1601879.

- Liu, Y.; Yu, Y.; Tao, H.; Ye, Z.; Wang, S.; Li, H.; Hu, D.; Zhou, Z.; Zeng, L.L. Cognitive Load Prediction from Multimodal Physiological Signals using Multiview Learning. IEEE J. Biomed. Health Informat. 2023, 1–11.

- Lobo, J.L.; Ser, J.D.; De Simone, F.; Presta, R.; Collina, S.; Moravek, Z. Cognitive Workload Classification Using Eye-Tracking and EEG Data. In Proceedings of the International Conference on Human-Computer Interaction in Aerospace, Paris, France, 14–16 September 2016. HCI-Aero ’16.

- Luong, T.; Holz, C. Characterizing Physiological Responses to Fear, Frustration, and Insight in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2022, 28, 3917–3927.

- Luong, T.; Martin, N.; Raison, A.; Argelaguet, F.; Diverrez, J.M.; Lecuyer, A. Towards Real-Time Recognition of Users Mental Workload Using Integrated Physiological Sensors into a VR HMD. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2020, Virtual, 9–13 November 2020; pp. 425–437.

- Ma, R.X.; Yan, X.; Liu, Y.Z.; Li, H.L.; Lu, B.L. Sex Difference in Emotion Recognition under Sleep Deprivation: Evidence from EEG and Eye-tracking. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Virtual, 1–5 November 2021; pp. 6449–6452.

- Mills, C.; Bixler, R.; Wang, X.; D’Mello, S.K. Automatic gaze-based detection of mind wandering during narrative film comprehension. In Proceedings of the 9th International Conference on Educational Data Mining, EDM 2016, Raleigh, NC, USA, 29 June–2 July 2016; pp. 30–37.

- Ren, P.; Ma, X.; Lai, W.; Zhang, M.; Liu, S.; Wang, Y.; Li, M.; Ma, D.; Dong, Y.; He, Y.; et al. Comparison of the Use of Blink Rate and Blink Rate Variability for Mental State Recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 867–875.

- Tao, L.Y.; Lu, B.L. Emotion Recognition under Sleep Deprivation Using a Multimodal Residual LSTM Network. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Wang, R.; Amadori, P.V.; Demiris, Y. Real-Time Workload Classification during Driving using HyperNetworks. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 3060–3065.

- Wu, C.; Cha, J.; Sulek, J.; Zhou, T.; Sundaram, C.P.; Wachs, J.; Yu, D. Eye-Tracking Metrics Predict Perceived Workload in Robotic Surgical Skills Training. Hum. Factors J. Hum. Factors Ergon. Soc. 2020, 62, 1365–1386.

- Yang, H.; Wu, J.; Hu, Z.; Lv, C. Real-Time Driver Cognitive Workload Recognition: Attention-Enabled Learning With Multimodal Information Fusion. IEEE Trans. Ind. Electron. 2024, 71, 4999–5009.

- Zhai, J.; Barreto, A. Stress Detection in Computer Users Based on Digital Signal Processing of Noninvasive Physiological Variables. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 1355–1358.

- Zhang, T.; El Ali, A.; Wang, C.; Zhu, X.; Cesar, P. CorrFeat: Correlation-based Feature Extraction Algorithm using Skin Conductance and Pupil Diameter for Emotion Recognition. In Proceedings of the 2019 International Conference on Multimodal Interaction, Suzhou, China, 14–18 October 2019; ICMI ’19. pp. 404–408.

- Li, T.H.; Liu, W.; Zheng, W.L.; Lu, B.L. Classification of Five Emotions from EEG and Eye Movement Signals: Discrimination Ability and Stability over Time. In Proceedings of the International IEEE/EMBS Conference on Neural Engineering, NER, San Francisco, CA, USA, 20–23 March 2019; pp. 607–610. [Google Scholar] [CrossRef]

- Zhong, X.; Hou, W. A Method for Classifying Cognitive Load of Visual Tasks Based on Eye Tracking Features. In Proceedings of the 2023 9th International Conference on Virtual Reality (ICVR), Xianyang, China, 12–14 May 2023; pp. 131–138. [Google Scholar] [CrossRef]

| No | Inclusion Criterion Description |
|---|---|
| 1 | Applied a high-quality video-based eye-tracking device to collect eye data. |
| 2 | Investigated a user trait or state with eye data. |
| 3 | Leveraged eye-tracking data collected within an experimental study. |
| 4 | Applied an advanced algorithm that leveraged a machine learning or deep learning approach to recognize a user state or trait. |
| 5 | Publication should be available in English. |
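The five inclusion criteria act as a conjunctive filter: a publication enters the review only if it meets all of them. The following minimal Python sketch illustrates that screening logic, assuming each criterion has already been judged by a reviewer and recorded as a boolean (criterion 1 in particular involves a quality judgment that a flag can only record, not make). The `Publication` record, its field names, and the helpers `failed_criteria` and `is_included` are hypothetical and do not reproduce the authors' actual screening procedure or tooling.

```python
from dataclasses import dataclass


@dataclass
class Publication:
    """Hypothetical screening record; field names are illustrative only."""
    title: str
    video_based_eye_tracker: bool  # criterion 1: reviewer judgment, stored as a flag
    targets_trait_or_state: bool   # criterion 2
    experimental_study: bool       # criterion 3
    uses_ml_or_dl: bool            # criterion 4
    language: str                  # criterion 5


def failed_criteria(pub: Publication) -> list[int]:
    """Return the numbers of the inclusion criteria the record does not meet."""
    checks = {
        1: pub.video_based_eye_tracker,
        2: pub.targets_trait_or_state,
        3: pub.experimental_study,
        4: pub.uses_ml_or_dl,
        5: pub.language.strip().lower() == "english",
    }
    # Dicts keep insertion order, so failures are reported in criterion order.
    return [number for number, met in checks.items() if not met]


def is_included(pub: Publication) -> bool:
    """A publication is included only if it satisfies every criterion."""
    return not failed_criteria(pub)
```

For example, a hypothetical record judged to use no machine learning and written in German would be excluded, with `failed_criteria` reporting criteria 4 and 5. Returning the list of failed criteria rather than a single boolean keeps the reason for each exclusion traceable, which is useful when reporting screening outcomes.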

| Dimension | Suggestions |
|---|---|
| Task | |
| Context | |
| Technology and data processing | |
| Recognition target | |
| General suggestions | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Langner, M.; Toreini, P.; Maedche, A. Eye-Based Recognition of User Traits and States—A Systematic State-of-the-Art Review. J. Eye Mov. Res. 2025, 18, 8. https://doi.org/10.3390/jemr18020008

Langner M, Toreini P, Maedche A. Eye-Based Recognition of User Traits and States—A Systematic State-of-the-Art Review. Journal of Eye Movement Research. 2025; 18(2):8. https://doi.org/10.3390/jemr18020008

Chicago/Turabian Style: Langner, Moritz, Peyman Toreini, and Alexander Maedche. 2025. "Eye-Based Recognition of User Traits and States—A Systematic State-of-the-Art Review" Journal of Eye Movement Research 18, no. 2: 8. https://doi.org/10.3390/jemr18020008

APA Style: Langner, M., Toreini, P., & Maedche, A. (2025). Eye-Based Recognition of User Traits and States—A Systematic State-of-the-Art Review. Journal of Eye Movement Research, 18(2), 8. https://doi.org/10.3390/jemr18020008