Review on Eye-Hand Span in Sight-Reading of Music

Abstract

Introduction

Article selection

Origins of the EHS measure

Why is EHS a suitable measure for evaluating sight reading?

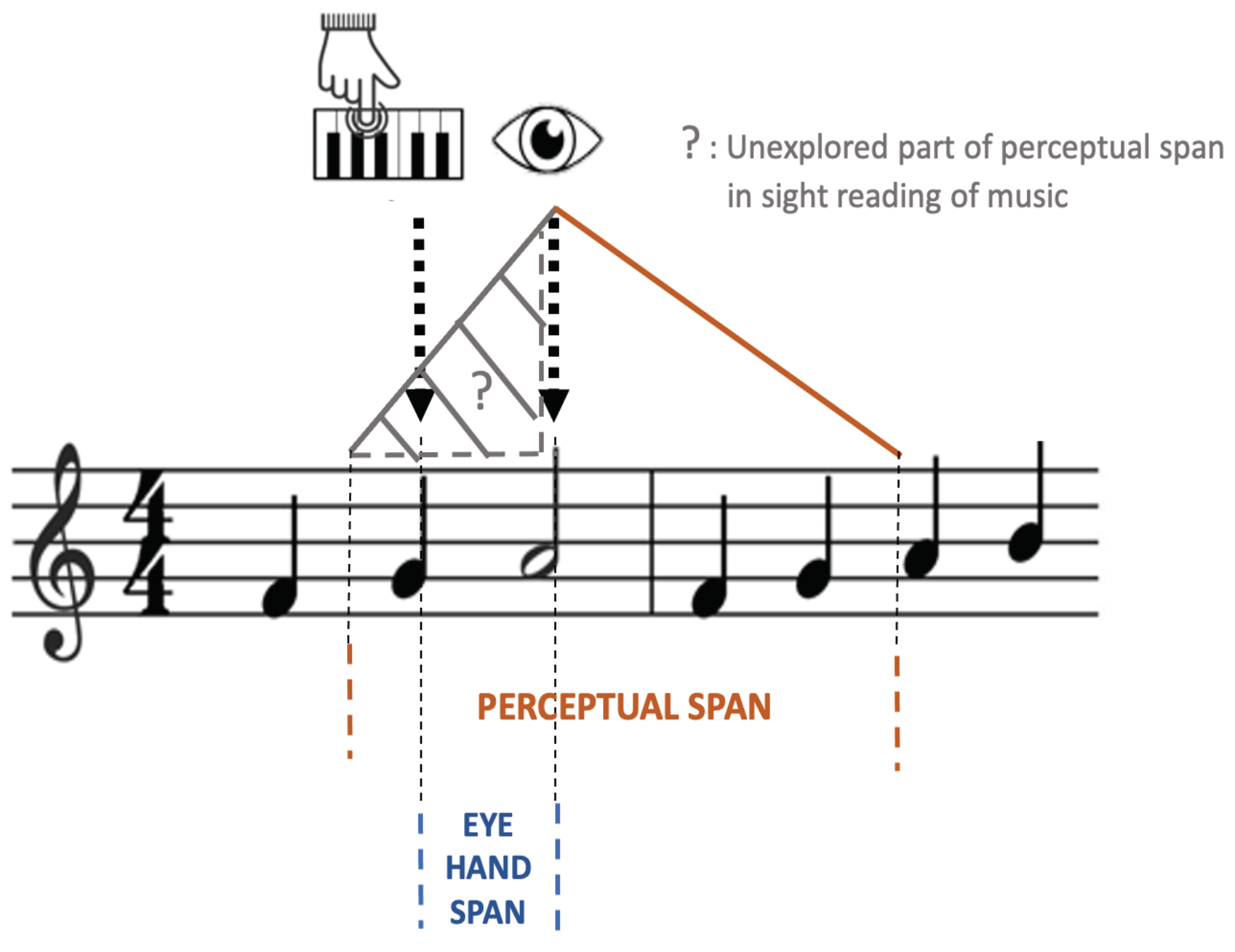

EHS: a complementary measure of perceptual span during sight reading

How to measure EHS?

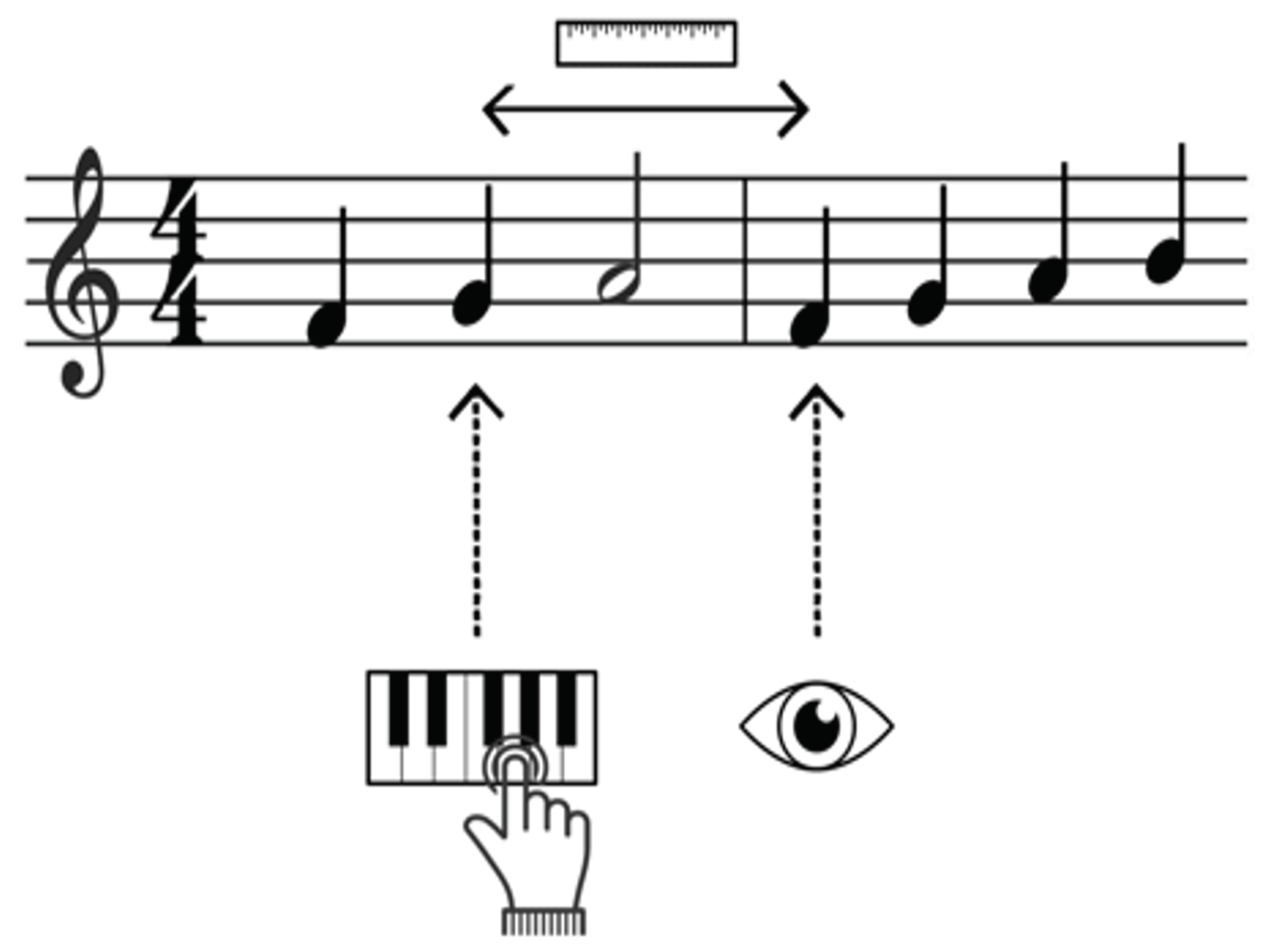

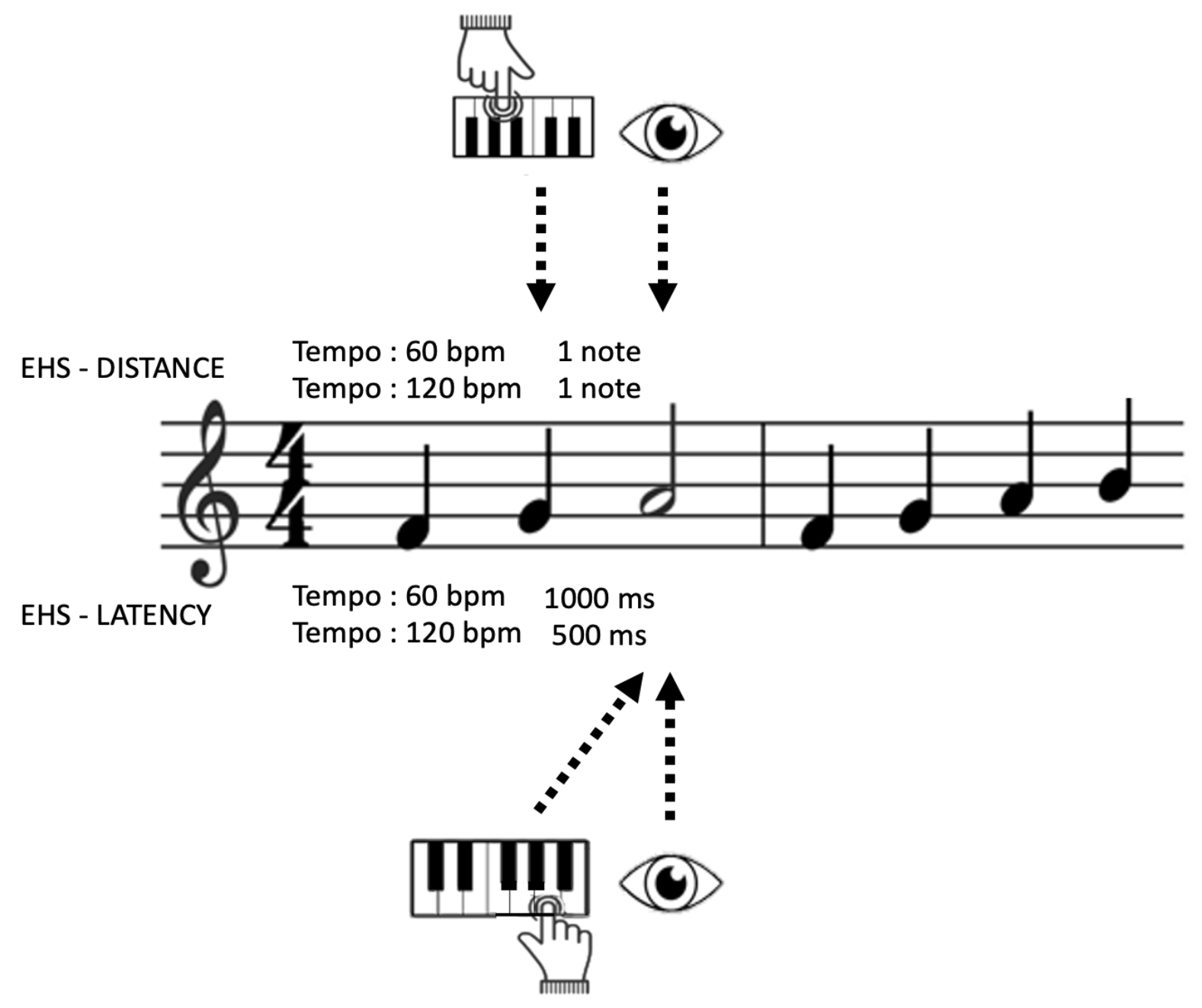

Distance in absolute space

Distance in musical units

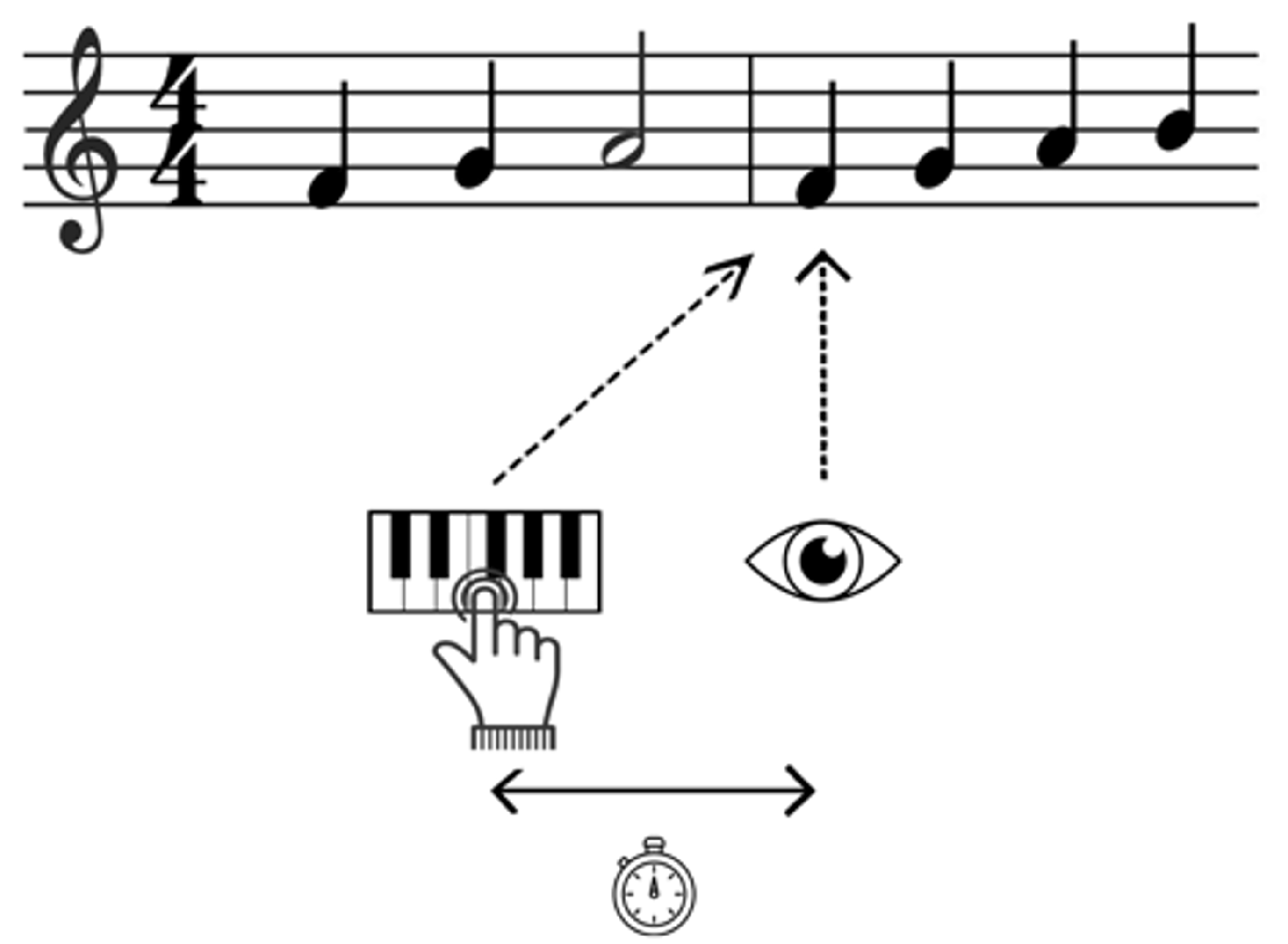

Latency in absolute time

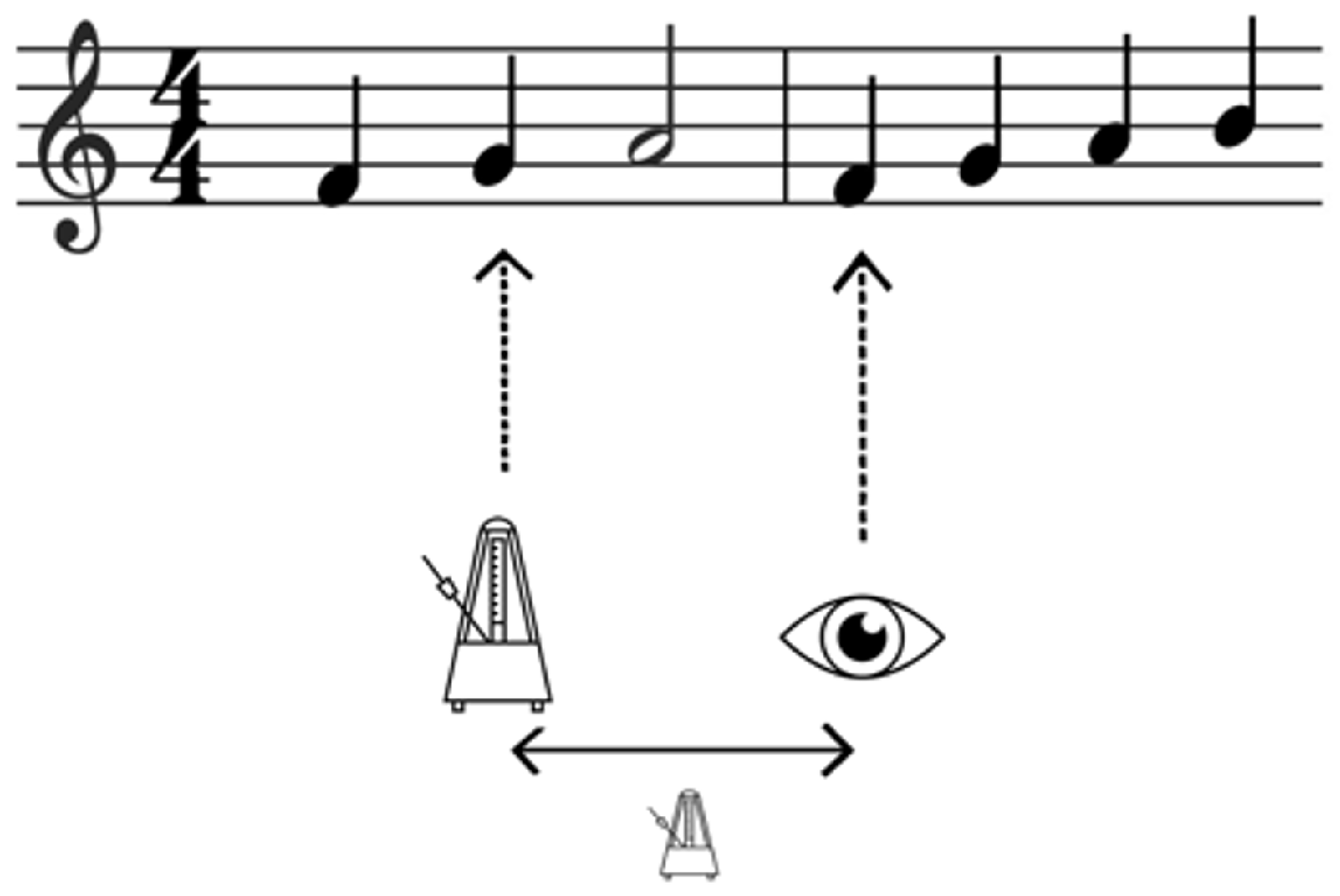

Latency in musical units (Eye-Time Span)

The main factors involved in eye-hand span variability

Factors which depend on the musician’s expertise level

Differences in the definition of expertise: Learning vs. playing level

The effect of expertise

Factors which depend on the score

Differences in the employed musical material

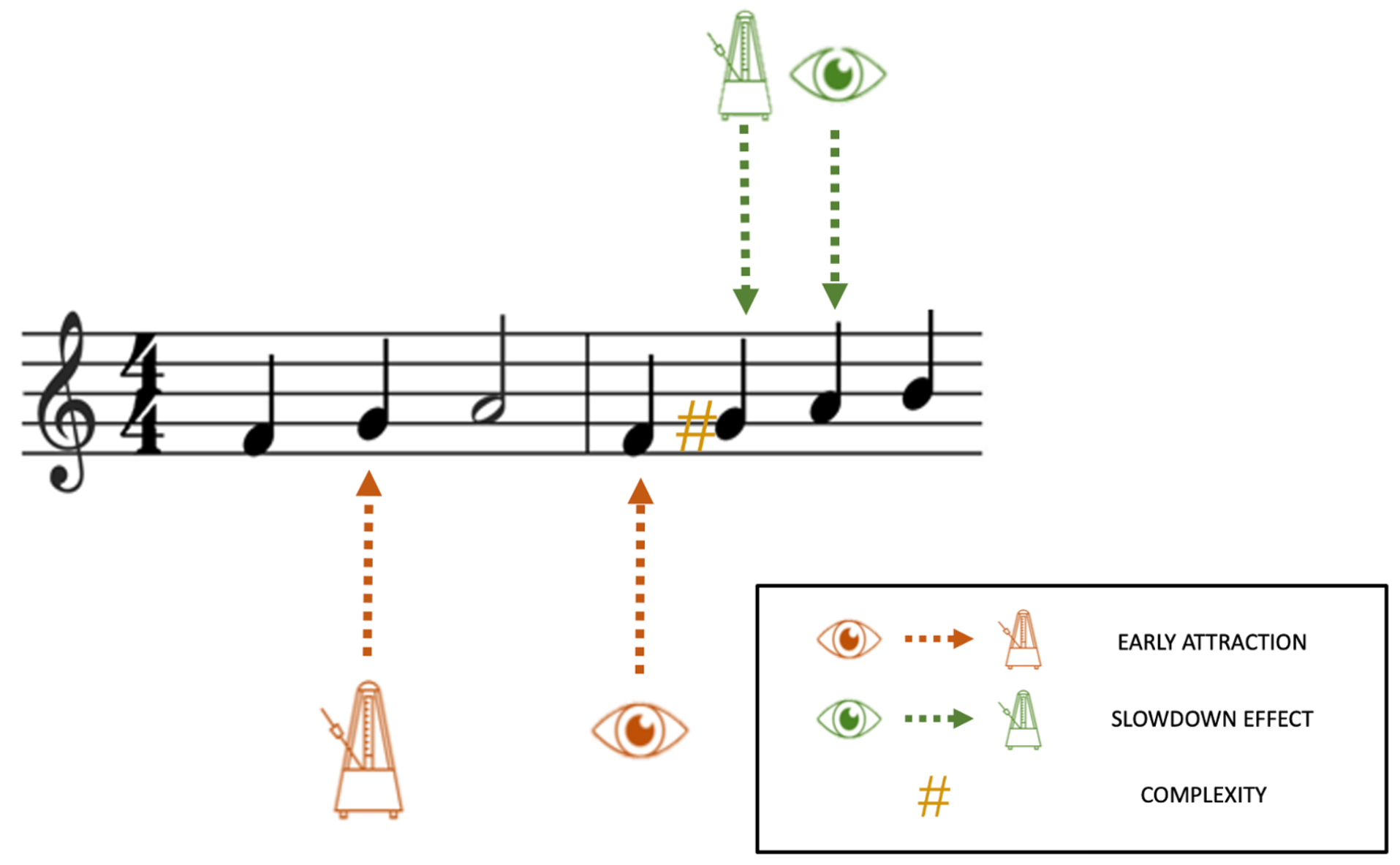

Complexity

How is musical complexity defined?

Effect of prolonged complexity on EHS

Effect of temporary complexity on EHS: the attraction hypothesis

Factors which depend on the context of music reading

Should tempo be controlled for and/or imposed in EHS studies?

Does training affect EHS?

Does the type of instrument influence EHS?

Conclusion

Ethics and Conflict of Interest

Acknowledgments

References

- Adachi, M., K. Takiuchi, and H. Shoda. 2012. Effects of melodic structure and meter on the sight-reading performances of beginners and advanced pianists, 5–8. http://icmpc-escom2012.web.auth.gr/files/papers/5_Proc.pdf.

- Aiello, R. 2001. Playing the piano by heart: From behavior to cognition. Annals of the New York Academy of Sciences 930, 1: 389–393.

- Bernstein, S. 1981. Self-discovery through music: With your own two hands. Music Sales Ltd.

- Burman, D. D., and J. R. Booth. 2009. Music rehearsal increases the perceptual span for notation. Music Perception: An Interdisciplinary Journal 26, 4: 303–320.

- Buswell, G. T. 1920. An experimental study of the eye-voice span in reading. Supplementary Educational Monograph 17. https://hdl.handle.net/2027/uc2.ark:/13960/t8v.

- Butsch, R. L. C. 1932. Eye movements and the eye-hand span in typewriting. Journal of Educational Psychology 23, 2: 104–121.

- Cara, M. A. 2018. Anticipation awareness and visual monitoring in reading contemporary music. Musicae Scientiae 22, 3: 322–343.

- Chaffin, R., and G. Imreh. 1997. "Pulling teeth and torture": Musical memory and problem solving. Thinking & Reasoning 3, 4: 315–336.

- Charness, N., E. M. Reingold, M. Pomplun, and D. M. Stampe. 2001. The perceptual aspect of skilled performance in chess: Evidence from eye movements. Memory & Cognition 29, 8: 1146–1152.

- Chase, W. G., and H. A. Simon. 1973a. Perception in chess. Cognitive Psychology 4, 1: 55–81.

- Chase, W. G., and H. A. Simon. 1973b. The mind’s eye in chess. In Visual Information Processing. Elsevier: pp. 215–281.

- Chitalkina, N., M. Puurtinen, H. Gruber, and R. Bednarik. 2021. Handling of incongruences in music notation during singing or playing. International Journal of Music Education 39, 1: 18–38.

- Choi, W., M. W. Lowder, F. Ferreira, and J. M. Henderson. 2015. Individual differences in the perceptual span during reading: Evidence from the moving window technique. Attention, Perception, & Psychophysics 77, 7: 2463–2475.

- Drai-Zerbib, V. 2016. What if musical skill, talent, and creativity were just a matter of memory organization and strategies? International Journal for Talent Development and Creativity 4, 1,2: 87–95.

- Drai-Zerbib, V., and T. Baccino. 2018. Cross-modal music integration in expert memory: Evidence from eye movements. Journal of Eye Movement Research 11, 2: 4.

- Drai-Zerbib, V., T. Baccino, and E. Bigand. 2011. Sight-reading expertise: Cross-modality integration investigated using eye tracking. Psychology of Music 40, 2: 216–235.

- Drake, C., and C. Palmer. 2000. Skill acquisition in music performance: Relations between planning and temporal control. Cognition 74, 1: 1–32.

- Ericsson, K. A., and W. Kintsch. 1995. Long-term working memory. Psychological Review 102, 2: 211–245.

- Ferreira, F., and J. M. Henderson. 1990. Use of verb information in syntactic parsing: Evidence from eye movements and word-by-word self-paced reading. Journal of Experimental Psychology: Learning, Memory, and Cognition 16, 4: 555–568.

- Fink, L., E. Lange, and R. Groner. 2019. The application of eye-tracking in music research. Journal of Eye Movement Research 11, 2: 1.

- Friedberg, R. 1993. The complete pianist: Body, mind, synthesis. Metuchen, NJ: The Scarecrow Press.

- Furneaux, S., and M. F. Land. 1999. The effects of skill on the eye–hand span during musical sight reading. Proceedings of the Royal Society of London. Series B: Biological Sciences 266, 1436: 2435–2440.

- Gilman, E., and G. Underwood. 2003. Restricting the field of view to investigate the perceptual spans of pianists. Visual Cognition 10, 2: 201–232.

- Gobet, F., and H. A. Simon. 1996. Templates in chess memory: A mechanism for recalling several boards. Cognitive Psychology 31, 1: 1–40.

- Goolsby, T. W. 1994a. Profiles of processing: Eye movements during sightreading. Music Perception 12, 1: 97–123.

- Goolsby, T. W. 1994b. Eye movement in music reading: Effects of reading ability, notational complexity, and encounters. Music Perception 12, 1: 77–96.

- Holmqvist, K., and R. Andersson. 2017. Eye tracking: A comprehensive guide to methods, paradigms, and measures, 2nd edition. The Oxford University Press Inc.

- Huovinen, E., A.-K. Ylitalo, and M. Puurtinen. 2018. Early attraction in temporally controlled sight reading of music. Journal of Eye Movement Research 11, 2: 3.

- Hyönä, J. 1995. Do irregular letter combinations attract readers’ attention? Evidence from fixation locations in words. Journal of Experimental Psychology: Human Perception and Performance 21, 1: 68–81.

- Inhoff, A. W., M. Starr, and K. L. Shindler. 2000. Is the processing of words during eye fixations in reading strictly serial? Perception & Psychophysics 62, 7: 1474–1484.

- Jacobsen, O. I. 1928. An experimental study of photographing eye-movements in reading music. Music Supervisors’ Journal 14, 3: 63–69.

- Just, M. A., and P. A. Carpenter. 1980. A theory of reading: From eye fixations to comprehension. Psychological Review 87, 4: 329–354.

- Kinsler, V., and R. H. S. Carpenter. 1995. Saccadic eye movements while reading music. Vision Research 35, 10: 1447–1458.

- Kopiez, R., and J. I. Lee. 2008. Towards a general model of skills involved in sight reading music. Music Education Research 10, 1: 41–62.

- Kopiez, R., C. Weihs, U. Ligges, and J. I. Lee. 2006. Classification of high and low achievers in a music sight reading task. Psychology of Music 34, 1: 5–26.

- Krupinski, E. A., A. A. Tillack, L. Richter, J. T. Henderson, A. K. Bhattacharyya, K. M. Scott, A. R. Graham, M. R. Descour, J. R. Davis, and R. S. Weinstein. 2006. Eye-movement study and human performance using telepathology virtual slides. Implications for medical education and differences with experience. Human Pathology 37, 12: 1543–1556.

- Kundel, H. L., C. F. Nodine, E. A. Krupinski, and C. Mello-Thoms. 2008. Using gaze-tracking data and mixture distribution analysis to support a holistic model for the detection of cancers on mammograms. Academic Radiology 15, 7: 881–886.

- Laubrock, J., and R. Kliegl. 2015. The eye-voice span during reading aloud. Frontiers in Psychology 6: 1432.

- Lee, J. I. 2003. The role of working memory and short-term memory in sight reading. pp. 121–126. https://www.epos.uni-osnabrueck.de/books/k/klww003/pdfs/175_Lee_Abs.pdf.

- Levin, H., and E. L. Kaplan. 1968. Eye-voice span (EVS) within active and passive sentences. Language and Speech 11, 4: 251–258.

- Lewandowska, O. P., and M. A. Schmuckler. 2020. Tonal and textural influences on musical sight reading. Psychological Research 84, 7: 1920–1945.

- Lim, Y., J. M. Park, S.-Y. Rhyu, C. K. Chung, Y. Kim, and S. W. Yi. 2019. Eye-hand span is not an indicator of but a strategy for proficient sight reading in piano performance. Scientific Reports 9, 1: 17906.

- Madell, J., and S. Hébert. 2008. Eye movements and music reading: Where do we look next? Music Perception 26, 2: 157–170.

- Marandola, F. 2019. Eye-hand synchronisation in xylophone performance: Two case-studies with African and Western percussionists. Journal of Eye Movement Research 11, 2: 7.

- McConkie, G. W., and K. Rayner. 1975. The span of the effective stimulus during a fixation in reading. Perception & Psychophysics 17, 6: 578–586.

- Meinz, E. J., and D. Z. Hambrick. 2010. Deliberate practice is necessary but not sufficient to explain individual differences in piano sight reading skill: The role of working memory capacity. Psychological Science 21, 7: 914–919.

- Moussay, S., F. Dosseville, A. Gauthier, J. Larue, B. Sesboüe, and D. Davenne. 2002. Circadian rhythms during cycling exercise and finger-tapping task. Chronobiology International 19: 1137–1149.

- Nivala, M., A. Cichy, and H. Gruber. 2018. How prior experience, cognitive skills and practice are related with eye-hand span and performance in video gaming. Journal of Eye Movement Research 11, 3: 1.

- Palmer, C., and R. K. Meyer. 2000. Conceptual and motor learning in music performance. Psychological Science 11, 1: 63–68.

- Parncutt, R., J. A. Sloboda, E. F. Clarke, M. Raekallio, and P. Desain. 1997. An ergonomic model of keyboard fingering for melodic fragments. Music Perception 14, 4: 341–382.

- Penttinen, M., E. Huovinen, and A.-K. Ylitalo. 2015. Reading ahead: Adult music students’ eye movements in temporally controlled performances of a children’s song. International Journal of Music Education 33, 1: 36–50.

- Puurtinen, M. 2018. Eye on music reading: A methodological review of studies from 1994 to 2017. Journal of Eye Movement Research 11, 2: 1–16.

- Quantz, J. O. 1897. Problems in the psychology of reading. The Psychological Review: Monograph Supplements 2, 1: 1–51.

- Rayner, K. 1998. Eye movements in reading and information processing: 20 years of research. Psychological Bulletin 124, 3: 372–422.

- Rayner, K. 2014. The gaze-contingent moving window in reading: Development and review. Visual Cognition 22, 3-4: 242–258.

- Rayner, K., K. Binder, J. Ashby, and A. Pollatsek. 2001. Eye movement control in reading: Word predictability has little influence on initial landing positions in words. Vision Research 41, 7: 943–954.

- Rayner, K., and G. W. McConkie. 1976. What guides a reader’s eye movements? Vision Research 16, 8: 829–837.

- Rayner, K., and A. Pollatsek. 1981. Eye movement control during reading: Evidence for direct control. The Quarterly Journal of Experimental Psychology Section A 33, 4: 351–373.

- Rayner, K., and A. Pollatsek. 1997. Eye movements, the eye-hand span, and the perceptual span during sight reading of music. Current Directions in Psychological Science 6, 2: 49–53.

- Reichle, E. D., and E. M. Reingold. 2013. Neurophysiological constraints on the eye-mind link. Frontiers in Human Neuroscience 7.

- Reingold, E. M., and H. Sheridan. 2011. Eye movements and visual expertise in chess and medicine. In The Oxford handbook of eye movements. Oxford University Press: pp. 523–550.

- Rosemann, S., E. Altenmüller, and M. Fahle. 2016. The art of sight-reading: Influence of practice, playing tempo, complexity and cognitive skills on the eye–hand span in pianists. Psychology of Music 44, 4: 658–673.

- Shannon, C. E. 1948. A mathematical theory of communication. Bell System Technical Journal 27, 3: 379–423.

- Sheridan, H., K. S. Maturi, and A. L. Kleinsmith. 2020. Eye movements during music reading: Toward a unified understanding of visual expertise. In Psychology of Learning and Motivation, Vol. 73: pp. 119–156.

- Silva, S., and S. L. Castro. 2019. The time will come: Evidence for an eye-audiation span in silent music reading. Psychology of Music 47, 4: 504–520.

- Sloboda, J. 1974. The eye-hand span: An approach to the study of sight reading. Psychology of Music 2, 2: 4–10.

- Sloboda, J. A. 1977. Phrase units as determinants of visual processing in music reading. British Journal of Psychology 68, 1: 117–124.

- Sloboda, J. A., E. F. Clarke, R. Parncutt, and M. Raekallio. 1998. Determinants of finger choice in piano sight reading. Journal of Experimental Psychology: Human Perception and Performance 24, 1: 185–203.

- Stewart, L., R. Henson, K. Kampe, V. Walsh, R. Turner, and U. Frith. 2003a. Brain changes after learning to read and play music. NeuroImage 20, 1: 71–83.

- Stewart, L., R. Henson, K. Kampe, V. Walsh, R. Turner, and U. Frith. 2003b. Becoming a Pianist. Annals of the New York Academy of Sciences 999, 1: 204–208.

- Sweller, J. 2005. Implications of cognitive load theory for multimedia learning. In The Cambridge Handbook of Multimedia Learning, 1st ed. Edited by R. Mayer. Cambridge University Press: pp. 19–30.

- Truitt, F. E., C. Clifton, A. Pollatsek, and K. Rayner. 1997. The perceptual span and the eye-hand span in sight reading music. Visual Cognition 4, 2: 143–161.

- Waters, A. J., E. Townsend, and G. Underwood. 1998. Expertise in musical sight reading: A study of pianists. British Journal of Psychology 89, 1: 123–149.

- Waters, A. J., and G. Underwood. 1998. Eye movements in a simple music reading task: A study of expert and novice musicians. Psychology of Music 26, 1: 46–60.

- Weaver, H. E., ed. 1943. Studies of ocular behavior in music reading. Psychological Monographs 55, 1: 1–50.

- White, S. 2008. Eye movement control during reading: Effects of word frequency and orthographic familiarity. Journal of Experimental Psychology: Human Perception and Performance 34, 1: 205–223.

- Williamon, A., and E. Valentine. 2002. The role of retrieval structures in memorizing music. Cognitive Psychology 44, 1: 1–32.

- Wolf, T. 1976. A cognitive model of musical sightreading. Journal of Psycholinguistic Research 5, 2: 143.

- Wristen, B. 2005. Cognition and motor execution in piano sight reading: A review of literature. Update: Applications of Research in Music Education 24, 1: 44–56.

- Wurtz, P., R. M. Mueri, and M. Wiesendanger. 2009. Sight reading of violinists: Eye movements anticipate the musical flow. Experimental Brain Research 194, 3: 445–450.

| Author(s) | Year | N | Journal | Title |
|---|---|---|---|---|
| Weaver | 1943 | 15 | Psychological Monographs | Studies of ocular behavior in music reading |
| Sloboda | 1974 | 10 | Psychology of Music | The eye-hand span: An approach to the study of sight reading |
| Sloboda | 1977 | 6 | British Journal of Psychology | Phrase units as determinants of visual processing in music reading |
| Truitt, Clifton, Pollatsek, & Rayner | 1997 | 8 | Visual Cognition | The perceptual span and the eye-hand span in sight reading music |
| Furneaux & Land | 1999 | 8 | Proceedings of the Royal Society of London | The effects of skill on the eye-hand span during musical sight-reading |
| Gilman & Underwood | 2003 | 40 | Visual Cognition | Restricting the field of view to investigate the perceptual spans of pianists |
| Wurtz, Mueri, & Wiesendanger | 2009 | 7 | Experimental Brain Research | Sight-reading of violinists: Eye movements anticipate the musical flow |
| Adachi, Takiuchi, & Shoda | 2012 | 18 | 12th International Conference on Music Perception and Cognition, Thessaloniki, Greece | Effects of melodic structure and meter on the sight-reading performances of beginners and advanced pianists |
| Penttinen, Huovinen, & Ylitalo | 2015 | 38 | International Journal of Music Education: Research | Reading ahead: Adult music students’ eye movements in temporally controlled performances of a children’s song |
| Rosemann, Altenmüller, & Fahle | 2016 | 9 | Psychology of Music | The art of sight-reading: Influence of practice, playing tempo, complexity, and cognitive skills on the eye-hand span in pianists |
| Cara | 2018 | 22 | Musicae Scientiae | Anticipation awareness and visual monitoring in reading contemporary music |
| Huovinen, Ylitalo, & Puurtinen | 2018 | 37 | Journal of Eye Movement Research | Early attraction in temporally controlled sight reading of music |
| Marandola | 2019 | 30 | Journal of Eye Movement Research | Eye-hand synchronisation in xylophone performance: Two case-studies with African and Western percussionists |
| Lim, Park, Rhyu, Chung, Kim, & Yi | 2019 | 31 | Scientific Reports | Eye-hand span is not an indicator of but a strategy for proficient sight-reading in piano performance |
| Chitalkina, Puurtinen, Gruber, & Bednarik | 2021 | 24 | International Journal of Music Education | Handling of incongruences in music notation during singing or playing |

| STUDIES | LATENCY | DISTANCE | |||||

| ABSOLUTE | MUSICAL UNITS | ABSOLUTE | MUSICAL UNITS | ||||

| MS | BEATS | PIXELS | MM | NOTES | BEATS | EVENTS | |

| Weaver, 1943 | X | X | |||||

| Sloboda, 1974 | X | ||||||

| Sloboda, 1977 | X | ||||||

| Truitt et al., 1997 | X | X | |||||

| Furneaux & Land, 1999 | X | X | |||||

| Gilman & Underwood, 2003 | X | X | |||||

| Wurtz et al., 2009 | X | X | |||||

| Adachi et al., 2012 | X | ||||||

| Penttinen et al., 2015 | X | X | |||||

| Rosemann et al., 2016 | X | X | |||||

| Cara, 2018 | X | X | |||||

| Huovinen et al., 2018 | X | X | |||||

| Marandola, 2019 | X | ||||||

| Lim et al., 2019 | X | X | X | ||||

| Chitalkina et al., 2021 | X | ||||||

| STUDIES | SUBJECTS | EXPERTISE CRITERION | LATENCY | DISTANCE | ||||||

| LS | I | S | ABSOLUTE | MUSICAL UNITS | ABSOLUTE | MUSICAL UNITS | ||||

| MS | BEATS | PIXELS | MM | NOTES | BEATS | |||||

| Sloboda, 1974 | 10 | Playing Level (LS-S) | 3.6 – 6.8 ** | |||||||

| Truitt et al., 1997 | 4 | 4 | Playing Level (LS-S) | 11 – 42 ** | 1 – 2 ** | |||||

| Furneaux & Land, 1999 | 3 | 3 | 2 | Learning Level (LS: g.3/4 - I: g.6/7 - S: acc.) | 1000 NS | 2 – 2.5 – 3.75 ** | ||||

| Gilman & Underwood, 2003 | 13 | 17 | Playing Level (LS-S) | 15 – 19 * | 0.75 – 1 * | |||||

| Adachi et al., 2012 | 9 | 9 | Learning Level (LS: 9.22 yop - S: 16.22 yop) | 0.52 – 1.73 *** | ||||||

| Penttinen et al., 2015 | 14 | 24 | Learning Level (LS: 11.5 yop - S: 14.8 yop) | (0-1-1+ Beats) LS 36 – 56.1 – 7.8 % S 30 – 55.7 – 14.3 % *** | ||||||

| Cara, 2018 | 11 | 11 | Playing Level (LS-S) | 3.69 – 4.70 * | 2.10 – 2.85 * | |||||

| Huovinen et al., 2018 | 14 | 23 | Learning Level (LS: 11.3 yop - S: 14.8 yop) | S = LS + 0.29 to 0.53 *** | ||||||

| Lim et al., 2019 | 10 | 11 | 10 | Playing Level | r = .26 ** | NS | r = .22 * | |||

| STUDIES | COMPLEXITY | METHOD | LATENCY | DISTANCE | ||||||

| ABSOLUTE | MUSICAL UNITS | ABSOLUTE | MUSICAL UNITS | |||||||

| MS | BEATS | PIXELS | MM | NOTES | BEATS | |||||

| Sloboda, 1977 | PERC. | ALTERATION | STAFF | MARKERS (C – LC) | 4.9 – 5.1 NS | |||||

| Sloboda, 1977 | STRU. | PITCH | STAFF | HARMONIC NON-SENSE (C – LC) | 4.5 – 5.5 *** | |||||

| Truitt et al., 1997 | PERC. | REDUCTION | STAFF | WINDOW (2 – 4 – 6 beats – NO MW) | 21 – 26 – 30 – 29 * | |||||

| Gilman & Underwood, 2003 - A | PERC. | REDUCTION | STAFF | WINDOW (1 – 2 – 4 beats – NO MW) | 14 – 16 – 17 – 17 ** | |||||

| Gilman & Underwood, 2003 - B | STRU. | PITCH | STAFF | TRANSPOSITION (C – LC) | 12 – 15 *** | |||||

| Wurtz et al., 2009 | STRU. | RHYTHM | STAFF | NOTE DURATION (C – LC) | 1000 NS | 3.5 – 6 * | ||||

| Adachi et al., 2012 | STRU. | RHYTHM | STAFF | TIME SIGNATURE (5/4 – 4/4) | 1.26 – 2.03 * | |||||

| Adachi et al., 2012 | STRU. | PITCH | NOTE | SKIP-WISE | N/A | |||||

| Penttinen et al., 2015 | STRU. | PITCH | BAR | STEP DOWN DIVISION | NS | |||||

| Penttinen et al., 2015 | STRU. | RHYTHM | BEAT | NOTE DURATION (C – LC) | C < LC ** | |||||

| Rosemann et al., 2016 | STRU. | RHYTHM | BAR | NOTE/DIVISION (C – LC) | 1258 – 1320 NS | 0.35 – 0.51 ** | ||||

| Cara, 2018 | STRU. | RHYTHM + PITCH | STAFF | NOTE/DIVISION + HAND-CROSSING (C – LC) | 3.78 – 4.29 *** | 2.07 – 2.74 *** | ||||

| Huovinen et al., 2018 - A | STRU. | PITCH | NOTE | SKIP-WISE – ON TARGET BAR | C > LC * | |||||

| Huovinen et al., 2018 - B | STRU. | PITCH | NOTE | ACCIDENTAL – PRE TARGET-BAR | C > LC *** | |||||

| Lim et al., 2019 | STRU. | RHYTHM + PITCH | STAFF | NOTES/DIVISION + ACCIDENTAL (C – LC) | 820 – 1100 * | NS | 1.27 – 1.68 * | |||

| Chitalkina et al., 2021 | STRU. | PITCH | NOTE | INCONGRUENCY - PRE TARGET-BAR | C > LC *** | |||||

| Chitalkina et al., 2021 | STRU. | PITCH | NOTE | INCONGRUENCY - ON TARGET-BAR | C < LC * | |||||

| STUDIES | TEMPO | LATENCY | DISTANCE | |||

| ABSOLUTE | MUSICAL UNITS | ABSOLUTE | MUSICAL UNITS | |||

| MS | BEATS | PIXELS | MM | NOTES | BEATS | ||

| Furneaux & Land, 1999 | DEPENDING ON THE STAFF FAST / SLOW | 700 / 1300 ** | ||||

| Rosemann et al., 2016 | ORIGINAL DEPENDING ON THE STAFF FAST = O +20% SLOW = O -20% | O = 1342 F = 1143 S= 1475 * | O = 0.42 F = 0.47 S = 0.29 * | |||

| Huovinen et al., 2018 | 60 VS. 100 | F = S + 0.21 to 0.41 *** | ||||

| Lim et al., 2019 | 80 VS 104 | NS | NS | |||

Copyright © 2021. This article is licensed under a Creative Commons Attribution 4.0 International License.

Perra, J.; Poulin-Charronnat, B.; Baccino, T.; Drai-Zerbib, V. Review on Eye-Hand Span in Sight-Reading of Music. J. Eye Mov. Res. 2021, 14, 1-25. https://doi.org/10.16910/jemr.14.4.4