Multimodality During Fixation—Part II: Evidence for Multimodality in Spatial Precision-Related Distributions and Impact on Precision Estimates

Abstract

Introduction

Some studies use even larger amplitude criteria (see Poletti & Rucci, 2016). Other authors choose 30 arcmin (0.5°) as the threshold (Poletti & Rucci, 2016). For the present analysis, any saccade with an amplitude below 0.5° was considered a microsaccade. For comparison, one study that used a 1° threshold reported:

“Microsaccades were distinguished from macrosaccades using an amplitude threshold of 1° (Martinez-Conde, Otero-Millan, & Macknik, 2013), and the median microsaccade amplitude was 0.65° (M1: 0.71°, M2: 0.65°, M3: 0.62°). This is larger than in most studies, although there is also considerable variability between the average microsaccade amplitudes described in past reports, which include 0.8° (Bair & O'Keefe, 1998), 0.73° (Guerrasio, Quinet, Buttner, & Goffart, 2010), 0.67° (Snodderly, Kagan, & Gur, 2001), 0.46° (Otero-Millan et al., 2011), 0.33° (Ko, Poletti, & Rucci, 2010), and 0.23° (Hafed, Goffart, & Krauzlis, 2009).” (Arnstein, Junker, Smilgin, Dicke, & Thier, 2015)
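The amplitude criterion above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' code: the function names and the sample amplitudes are hypothetical, and amplitudes are assumed to be already expressed in degrees of visual angle. Changing `threshold_deg` shows how the same saccades are partitioned under the 0.5° criterion used here versus the 1° criterion in the quoted study.

```python
from statistics import median

# Amplitude threshold (degrees) used in the present analysis: saccades
# strictly smaller than this are treated as microsaccades.
MICROSACCADE_THRESHOLD_DEG = 0.5


def arcmin_to_deg(arcmin):
    """Convert minutes of arc to degrees (60 arcmin = 1 degree)."""
    return arcmin / 60.0


def split_by_amplitude(amplitudes_deg, threshold_deg=MICROSACCADE_THRESHOLD_DEG):
    """Split saccade amplitudes (degrees) into micro- and macrosaccades.

    Hypothetical helper: a saccade counts as a microsaccade when its
    amplitude is strictly below ``threshold_deg``; all others are returned
    as macrosaccades.
    """
    micro = [a for a in amplitudes_deg if a < threshold_deg]
    macro = [a for a in amplitudes_deg if a >= threshold_deg]
    return micro, macro


if __name__ == "__main__":
    # Hypothetical amplitudes for illustration only (not data from this study).
    amps = [0.12, 0.31, 0.48, 0.65, 0.9, 1.4]
    micro, macro = split_by_amplitude(amps)
    print("microsaccades (0.5 deg):", micro)         # [0.12, 0.31, 0.48]
    print("median micro amplitude:", median(micro))  # 0.31
    # Same saccades under a 1 deg criterion:
    micro_1deg, _ = split_by_amplitude(amps, threshold_deg=1.0)
    print("microsaccades (1 deg):", micro_1deg)      # [0.12, 0.31, 0.48, 0.65, 0.9]
```

Because the median microsaccade amplitude depends directly on where the cutoff is placed, part of the between-study variability in reported amplitudes may simply reflect different thresholds.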

Methods

The Eye-Tracking Database

The Signal Processing Steps

Removing Average Saccade Latency

Which Part of Fixation to Analyze

Removing “Blink saccades”

Removing Saccades - Step 1

Removing Saccades - Step 2

Removal of Anticipatory Saccades

Evaluation of the Success of These Efforts to Remove Non-Fixation Samples

Inclusion Criteria for Fixations

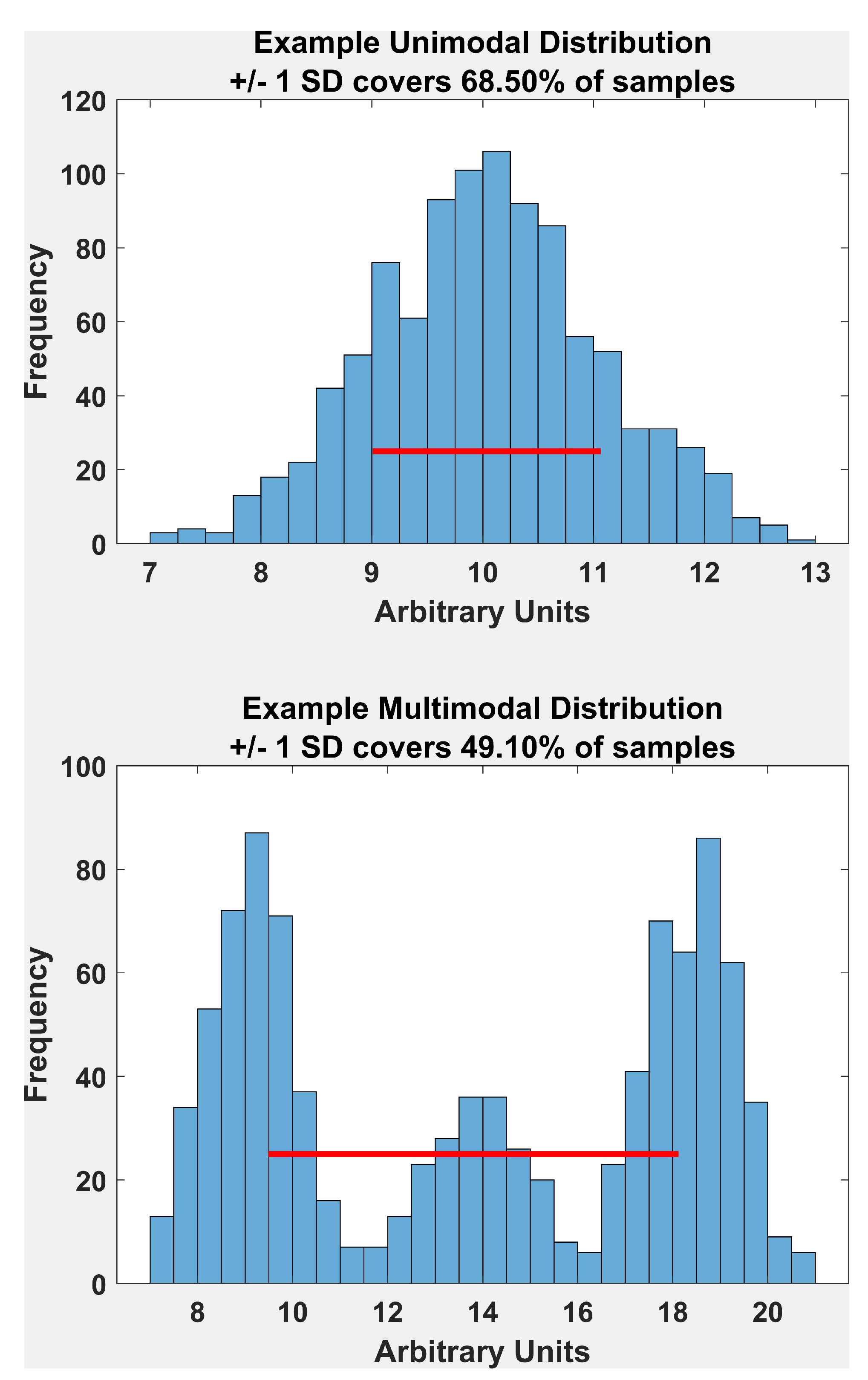

Assessing Unimodality

Precision Metric Names

Results

Characteristics of Accepted Fixations

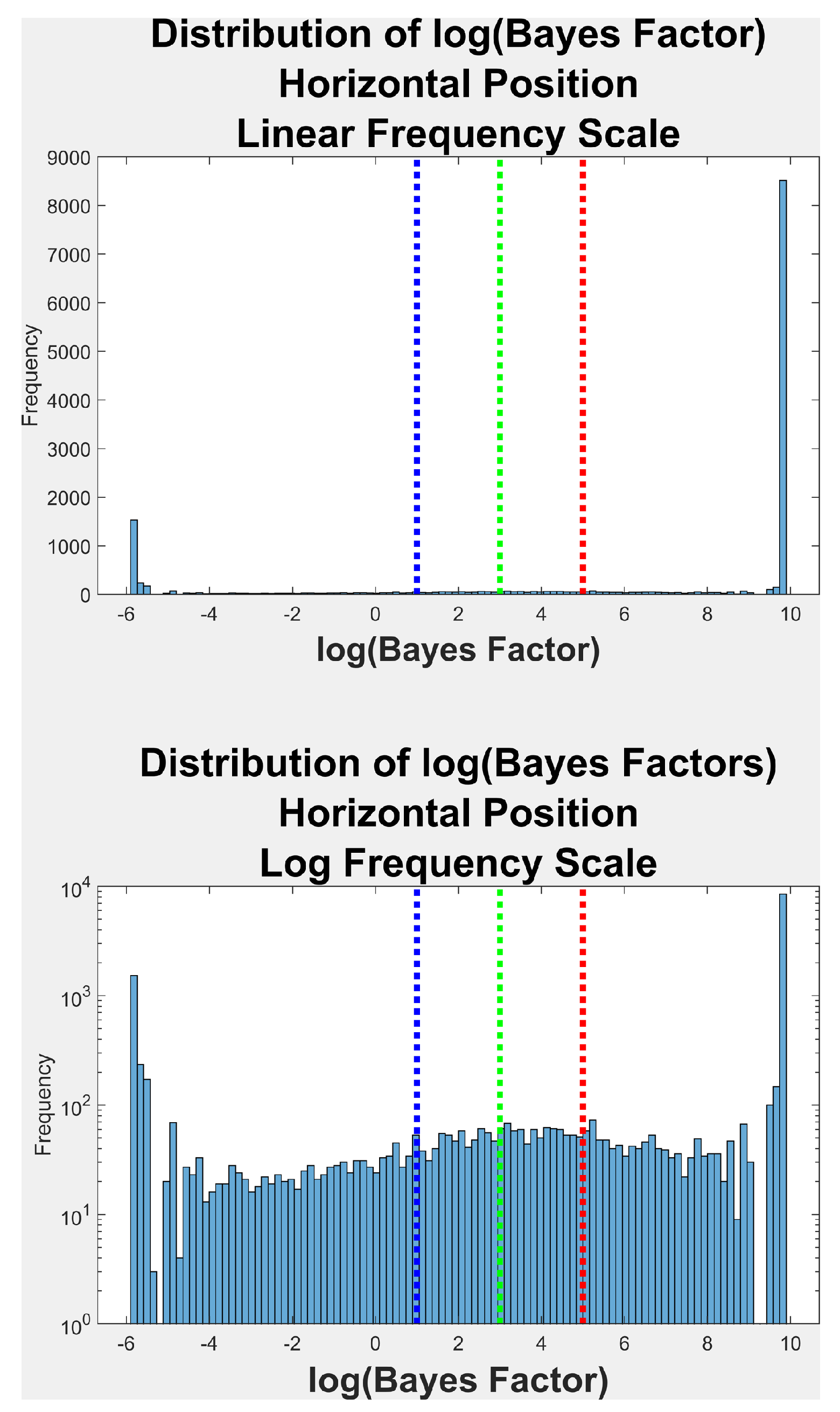

Bayes Factor Distribution

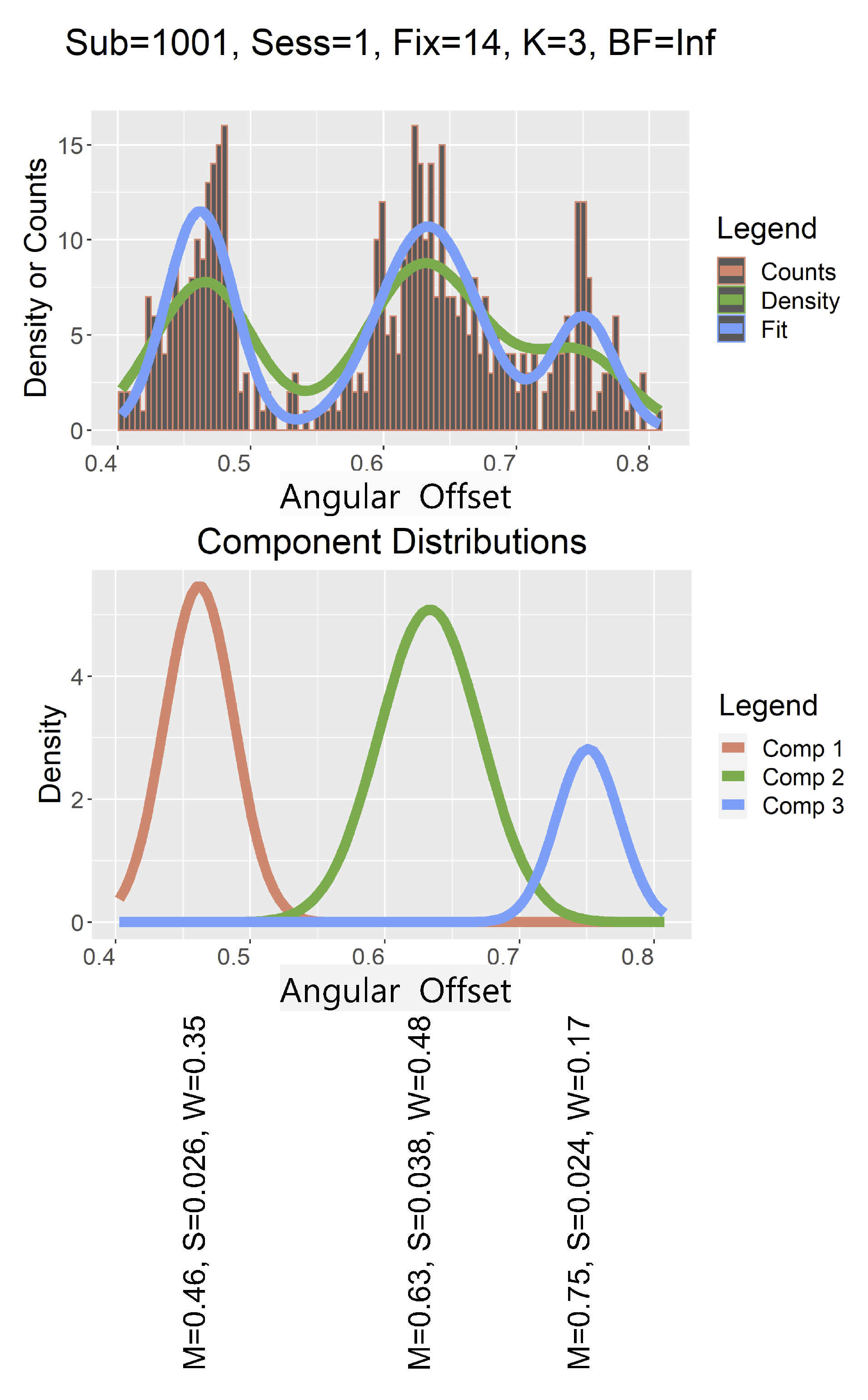

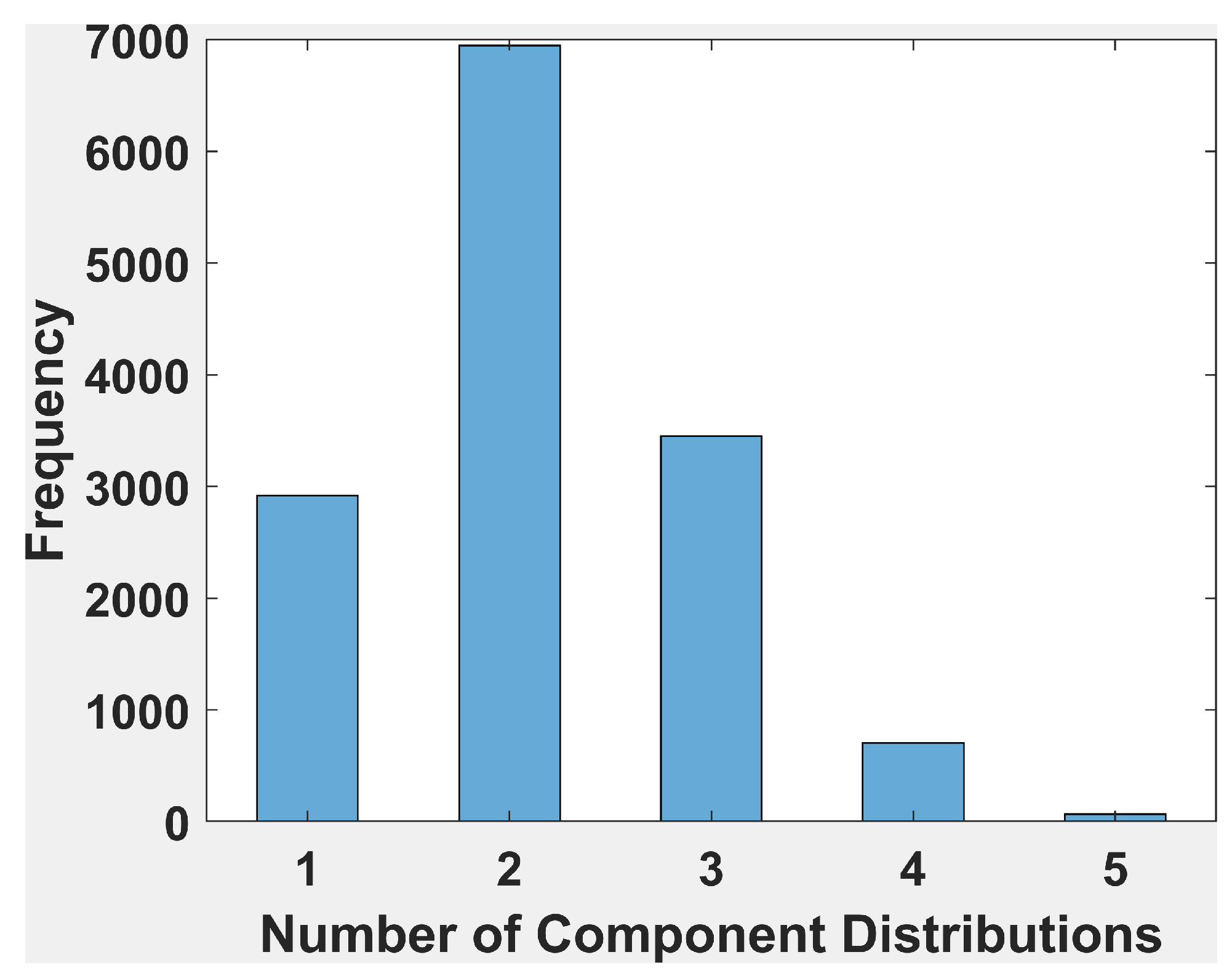

Histogram of Number of Components

Distributions of Measures of Precision

Oculomotor Basis for Multimodality

Discussion

“If histograms and probability plots indicate that your data are in fact reasonably approximated by a normal distribution, then it makes sense to use the standard deviation as the estimate of scale. However, if your data are not normal, and in particular if there are long tails, then using an alternative measure such as the median absolute deviation, average absolute deviation, or interquartile range makes sense.” (NIST/SEMATECH, 2012)
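To illustrate the NIST advice, the following Python sketch (standard library only) compares the standard deviation with the three alternative scale measures it names. The input is a hypothetical set of one-dimensional position offsets: a tight main cluster plus a small displaced cluster, loosely mimicking a bimodal fixation. The data and function names are illustrative assumptions, not the precision metrics defined in this paper.

```python
import statistics


def mad(values):
    """Median absolute deviation about the median (unscaled)."""
    med = statistics.median(values)
    return statistics.median([abs(v - med) for v in values])


def mean_abs_dev(values):
    """Average absolute deviation about the mean."""
    mu = statistics.fmean(values)
    return statistics.fmean([abs(v - mu) for v in values])


def iqr(values):
    """Interquartile range Q3 - Q1, using the default ("exclusive")
    method of statistics.quantiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return q3 - q1


def scale_summary(values):
    """Sample standard deviation alongside three more robust scale measures."""
    return {
        "sd": statistics.stdev(values),
        "mad": mad(values),
        "mean_abs_dev": mean_abs_dev(values),
        "iqr": iqr(values),
    }


if __name__ == "__main__":
    # Hypothetical offsets (deg): 20 samples in a tight main cluster
    # around 0 plus 3 samples in a displaced secondary cluster.
    offsets = [-0.02, -0.01, 0.0, 0.01, 0.02] * 4 + [0.30, 0.31, 0.32]
    for name, value in scale_summary(offsets).items():
        print(f"{name:>13}: {value:.4f}")
```

On data like these, the standard deviation (about 0.11) is inflated by the small secondary cluster, whereas the MAD (0.01) and IQR (0.03) mostly reflect the spread of the main cluster. The unscaled MAD is used here; multiplying it by about 1.4826 makes it a consistent estimator of the standard deviation for normally distributed data. IQR values also depend on the quantile convention, so they may differ slightly between software packages.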

Ethics and Conflict of Interest

Acknowledgments

References

- Abdulin, E., L. Friedman, and O. V. Komogortsev. 2017. Method to Detect Eye Position Noise from Video-Oculography when Detection of Pupil or Corneal Reflection Position Fails. arXiv:1709.02700. Retrieved from: https://ui.adsabs.harvard.edu/abs/2017arXiv170.

- Arnstein, D., M. Junker, A. Smilgin, P. W. Dicke, and P. Thier. 2015. Microsaccade control signals in the cerebellum. J Neurosci 35, 8: 3403–3411.

- Bair, W., and L. P. O'Keefe. 1998. The influence of fixational eye movements on the response of neurons in area MT of the macaque. Vis Neurosci 15, 4: 779–786.

- Blignaut, P. 2019. A cost function to determine the optimum filter and parameters for stabilising gaze data. J Eye Mov Res 12, 2.

- Blignaut, P., and T. Beelders. 2012. The Precision of Eye-Trackers: A Case for a New Measure. ETRA '12, 289–292.

- Castet, E., and M. Crossland. 2012. Quantifying eye stability during a fixation task: a review of definitions and methods. Seeing Perceiving 25, 5: 449–469.

- Friedman, L., D. Lohr, T. Hanson, and O. V. Komogortsev. 2021. Angular Offset Distributions During Fixation Are, More Often Than Not, Multimodal. J Eye Mov Res 14, 3.

- Griffith, H., D. Lohr, E. Abdulin, and O. Komogortsev. 2020. GazeBase: A Large-Scale, Multi-Stimulus, Longitudinal Eye Movement Dataset. arXiv. Retrieved from: https://ui.adsabs.harvard.edu/abs/2020arXiv200.

- Guerrasio, L., J. Quinet, U. Buttner, and L. Goffart. 2010. Fastigial oculomotor region and the control of foveation during fixation. J Neurophysiol 103, 4: 1988–2001.

- Hafed, Z. M., L. Goffart, and R. J. Krauzlis. 2009. A neural mechanism for microsaccade generation in the primate superior colliculus. Science 323, 5916: 940–943.

- Holmqvist, K., and R. Andersson. 2017. Eye tracking: A comprehensive guide to methods, paradigms, and measures. CreateSpace Publishing.

- Kass, R. E., and A. E. Raftery. 1995. Bayes Factors. Journal of the American Statistical Association 90, 430: 773–795.

- Ko, H. K., M. Poletti, and M. Rucci. 2010. Microsaccades precisely relocate gaze in a high visual acuity task. Nat Neurosci 13, 12: 1549–1553.

- Komárek, A. 2009. A new R package for Bayesian estimation of multivariate normal mixtures allowing for selection of the number of components and interval-censored data. Computational Statistics & Data Analysis 53, 12: 3932–3947.

- Komárek, A., and L. Komárková. 2014. Capabilities of R Package mixAK for Clustering Based on Multivariate Continuous and Discrete Longitudinal Data. Journal of Statistical Software 1, 12.

- Komogortsev, O. V., I. Rigas, and E. Abdulin. 2016. Eye Movement Biometrics on Wearable Devices: What Are the Limits? Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI), pp. 1–6.

- MacInnes, J. J., S. Iqbal, J. Pearson, and E. N. Johnson. 2018. Wearable Eye-tracking for Research: Automated dynamic gaze mapping and accuracy/precision comparisons across devices. bioRxiv, 299925.

- Martinez-Conde, S., J. Otero-Millan, and S. L. Macknik. 2013. The impact of microsaccades on vision: towards a unified theory of saccadic function. Nat Rev Neurosci 14, 2: 83–96.

- NIST/SEMATECH. 2012. e-Handbook of Statistical Methods. Online Textbook.

- Orquin, J. L., and K. Holmqvist. 2018. Threats to the validity of eye-movement research in psychology. Behav Res Methods 50, 4: 1645–1656.

- Otero-Millan, J., A. Serra, R. J. Leigh, X. G. Troncoso, S. L. Macknik, and S. Martinez-Conde. 2011. Distinctive features of saccadic intrusions and microsaccades in progressive supranuclear palsy. J Neurosci 31, 12: 4379–4387.

- Poletti, M., and M. Rucci. 2016. A compact field guide to the study of microsaccades: Challenges and functions. Vision Res 118: 83–97.

- R Development Core Team. 2010. R: Language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

- Saez de Urabain, I. R., M. H. Johnson, and T. J. Smith. 2015. GraFIX: a semiautomatic approach for parsing low-and high-quality eye-tracking data. Behav Res Methods 47, 1: 53–72.

- Snodderly, D. M., I. Kagan, and M. Gur. 2001. Selective activation of visual cortex neurons by fixational eye movements: implications for neural coding. Vis Neurosci 18, 2: 259–277.

- Whittaker, S. G., J. Budd, and R. W. Cummings. 1988. Eccentric fixation with macular scotoma. Invest Ophthalmol Vis Sci 29, 2: 268–278.

- Xu, L., E. Bedrick, T. Hanson, and C. Restrepo. 2014. A Comparison of Statistical Tools for Identifying Modality in Body Mass Distributions. Journal of Data Science 12: 175–196.

| Step | Operation |
|---|---|
| 1 | Remove saccade latency |
| 2 | Choose a portion of each fixation to analyze for precision |
| 3 | Remove blink saccades |
| 4 | Remove saccades – step 1 |
| 5 | Remove saccades, etc. – step 2 |
| 6 | Remove anticipatory saccades |

| Direction | N Events | % Unimodal* | % Positive† | % Strong‡ | % Very Strong§ |
|---|---|---|---|---|---|
| Horizontal | 14,087 | 20.8 | 4.4 | 5.1 | 69.7 |
| Vertical | 14,087 | 23.2 | 4.6 | 4.6 | 67.5 |

Notes: * no evidence of multimodality, log(BF) ≤ 1; † positive evidence of multimodality, 1 < log(BF) ≤ 3; ‡ strong evidence of multimodality, 3 < log(BF) ≤ 5; § very strong evidence of multimodality, log(BF) > 5.
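The footnote thresholds of the table above can be expressed as a small classification function that also tabulates category percentages in the way the table reports them. This is a sketch under stated assumptions: "log" is taken to be the natural logarithm (on that scale the cutoffs 1, 3 and 5 correspond to Kass and Raftery's (1995) cutoffs of 2, 6 and 10 on the 2·ln(BF) scale), the function names are hypothetical, and the log(BF) values in the example are invented, not values from this study.

```python
from collections import Counter

# Assumption: log(BF) is the natural log of the Bayes factor for
# multimodality; upper bounds follow the table footnotes.
CATEGORIES = ("Unimodal", "Positive", "Strong", "Very Strong")


def evidence_category(log_bf):
    """Map one log Bayes factor to the evidence category used in the table."""
    if log_bf <= 1:
        return "Unimodal"      # no evidence of multimodality
    if log_bf <= 3:
        return "Positive"
    if log_bf <= 5:
        return "Strong"
    return "Very Strong"


def category_percentages(log_bfs):
    """Percentage of events in each category, rounded to one decimal."""
    n = len(log_bfs)
    if n == 0:
        raise ValueError("need at least one log(BF) value")
    counts = Counter(evidence_category(x) for x in log_bfs)
    return {c: round(100.0 * counts[c] / n, 1) for c in CATEGORIES}


if __name__ == "__main__":
    # Hypothetical log(BF) values for ten fixations (not study data).
    sample = [0.2, 1.0, 1.5, 2.9, 3.0, 4.1, 5.0, 5.1, 7.3, 12.0]
    print(category_percentages(sample))
    # {'Unimodal': 20.0, 'Positive': 30.0, 'Strong': 20.0, 'Very Strong': 30.0}
```

Because each rounded percentage is computed independently, a row can sum to 99.9 or 100.1 rather than exactly 100, as in the vertical row of the table.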

Copyright © 2021. This article is licensed under a Creative Commons Attribution 4.0 International License.


Friedman, L.; Hanson, T.; Komogortsev, O.V. Multimodality During Fixation—Part II: Evidence for Multimodality in Spatial Precision-Related Distributions and Impact on Precision Estimates. J. Eye Mov. Res. 2021, 14, 1-9. https://doi.org/10.16910/jemr.14.3.4
