Introduction

Driving is a complex dynamic task in which the driver must continuously process information from the environment. This is necessary to control the speed and direction of the vehicle; to gather information about other vehicles, road signs or potential hazards; and to make decisions about the route to be taken. Theoretical models of driving activity have been developed to account for this complexity.

Michon (1985), for example, proposed dividing driving activity into three hierarchically organised levels: the strategic level, the tactical level and the operational level. The strategic level corresponds to the definition of general driving goals, such as itinerary selection. At the tactical level, objects are recognised, danger is assessed and acquired rules are used to make short-term decisions. These decisions are implemented at the operational level, which is underpinned by online perceptual-motor loops.

During manual driving, drivers perform all the subtasks associated with the three control levels. During automated driving, however, some tasks are transferred to the automation, and the driver's role then depends on the level of automation, as defined by the Society of Automotive Engineers (SAE International, 2016). When lateral and longitudinal control are delegated to automation (SAE Level 2), drivers become system supervisors rather than actors. This transformation of the driver's role changes their visual processing of the environment and their interaction with the vehicle (Mole et al., 2019).

At SAE Level 2, drivers are required to keep their attention on the road so that they can regain manual control of the vehicle at any time without delay. However, the visuomotor coordination necessary for the online control of steering and braking is no longer required, which constitutes a neutralization of the operational control loop.

Land and Lee (1994) showed that eye movements typically preceded steering-wheel movements by 800 ms in manual driving. Once control of the steering wheel is delegated to the automation, this perceptual-motor coupling is no longer necessary. This explains why changes in gaze behaviour have been observed under such conditions, even in the absence of a secondary task and with the instruction to monitor the driving scene. Drivers who no longer have active control of the steering wheel tend to neglect short-term anticipation of the road ahead and produce more distant fixations, known as "look-ahead fixations" (Mars & Navarro, 2012; Schnebelen et al., 2019).

At SAE Level 3, drivers may no longer actively monitor the driving scene. When the system detects that it can no longer provide autonomous driving, it issues a takeover request, giving the driver a time budget to restore situation awareness and regain control of the vehicle. Failing to monitor the driving scene for an extended period is tantamount to neutralizing both the tactical and the operational control loops. During this time, drivers can engage in secondary tasks. The distraction generated by the secondary task results in less attention being directed towards the road than in manual driving (Barnard & Lai, 2010; Carsten et al., 2012; Merat et al., 2012). One way to measure this shift of attention is to compute and compare the percentage of time spent looking at the road centre (PRC; Victor et al., 2005). Low PRC is associated with drivers being out of the operational control loop (Carsten et al., 2012; Jamson et al., 2013). However, even without a secondary task, sampling of the driving environment is affected, with higher horizontal dispersion of gaze during automated than during manual driving (Damböck et al., 2013; Louw & Merat, 2017; Mackenzie & Harris, 2015). All these studies point to the same conclusion: during automated driving, gaze is directed more towards peripheral areas and less towards the road.

However, observations based on the angular distribution of gaze only capture an overall effect and do not consider gaze dynamics. For this purpose, decomposing the driving scene into areas of interest (AOIs) may be more appropriate. AOI-based analyses of gaze while driving have been carried out in several studies (Carsten et al., 2012; Jamson et al., 2013; Navarro et al., 2019), but only a few studies have examined the transitions between AOIs. Such a method was implemented by Underwood et al. (2003) to determine AOI fixation sequences as a function of driver experience and road type in manual driving. Gonçalves et al. (2019) similarly proposed analysing fixation sequences during lane-change manoeuvres. The present study uses this approach to assess drivers' gaze behaviour over an entire drive, in either manual or automated mode.

Previous studies have examined the consequences of automated driving for drivers' visual strategies; for example, PRC has been shown to be low during automated rides. The present study takes the opposite methodological approach, aiming to determine the state of automation (i.e., manual or automated) from the driver's spontaneous visual strategies. Of course, in a real driving situation, the state of automation of the vehicle is known and does not need to be estimated. Estimating it is therefore not an objective in itself, or at least not one with a direct practical application. Predicting the state of automation should instead be seen as an original methodological approach whose main aim is to identify the oculometric indicators most critical for estimating the consequences of automation on visual strategies. If conclusive, this methodology could also be useful for monitoring other driver states and understanding the underlying visual behaviour.

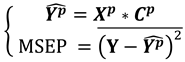

Driver visual strategies were considered in several ways. The analysis first considered only the PRC, and then a set of static indicators (percentage of time spent in the different AOIs) and/or dynamic indicators (transitions between AOIs). Partial Least Squares (PLS) regressions were used to estimate a score between −1 (automated driving) and 1 (manual driving). This estimate of the state of automation was then used to classify the trials, and the quality of the estimate was computed for each set of visual indicators.

The objective was to determine whether considering the PRC was sufficient to discriminate between automated and manual driving. The extent to which the inclusion of other static and dynamic indicators would improve the driver-state estimation was also assessed. We hypothesised that considering the gaze dynamics would improve the ability to distinguish between manual and automated driving.

Methods

Participants

The study sample included 12 participants (9 male, 3 female) with a mean age of 21.4 years (SD = 5.34). All had normal or corrected-to-normal vision (with contact lenses only). All participants held a French driver's licence and drove a mean of 9950 km/year (SD = 5500). A signed informed consent form, sent by e-mail one week before the experiment and printed for the day of the experiment, was required to participate in the study.

Materials

The study used a fixed-base simulator consisting of an adjustable seat, a digital dashboard, a steering wheel with force feedback, a gear lever, and clutch, accelerator and brake pedals (see Figure 1). The driving scene was generated with SCANeR Studio (v1.6) and displayed on three large screens in front of the driver (field of view ≈ 120°). An additional screen, simulating a central console, provided information about the vehicle automation mode.

Gaze data were recorded using a Smart Eye Pro (V5.9) eye-tracker with four cameras (two below the central screen and one below each peripheral screen). The calibration of the eye-tracker occurred at the start of the experiment and required two steps. In the first step, a 3D model of the driver’s head was computed. In the second step, the gaze was computed using a 12-point procedure. The overall accuracy of calibration was 1.2° for the central screen and 2.1° for peripheral areas. Gaze data and vehicle data were directly synchronised at 20 Hz by the driving simulator software.

Procedure

After adjusting the seat and completing the eye-tracker calibration, participants were familiarised with the simulator by driving manually along a training track. Instructions for automated driving were then given orally: when the autonomous mode was activated, vehicle speed and position on the road would be controlled automatically, taking into account traffic and speed limits, and overtaking other cars if necessary. To comply with SAE Level 3 requirements, drivers were told that the automated function would only be available for a portion of the road, with the distance and time remaining in autonomous mode displayed on the left side of the human-machine interface (HMI). When automation conditions were no longer met, the system would request that the driver regain control of the vehicle.

Two use cases were presented to the participants. In the first, the vehicle was approaching the end of the automated road section; drivers received mild auditory and visual warning signals and had 45 s to regain control. The second use case was an unexpected event, such as a sensor failure; a more intense auditory alarm and a different pictogram were then presented, and drivers had only 8 s to resume control. All the pictograms (Figure 2) and sounds used by the HMI were presented to participants at the end of these instructions, before they started a second training session.

During the second training session, participants first experienced manual driving (with cruise control, corresponding to SAE level 1) and then automated driving (SAE level 3: conditional automation). At level 3, drivers experienced four transitions from automated to manual driving, two in each use case presented in the instructions. All takeovers were properly carried out during the training session.

Then, after a short break, the experimental phase started. All participants experienced the manual and automated conditions and the order of presentation was counter-balanced. The scenario was similar in the two driving conditions and comprised an 18-min drive in a highway context. Most of the road was a 40-km two-lane dual carriageway, with a speed limit of 130 km/h, in accordance with French regulations. Occasional changes in road geometry (temporary three-lane traffic flow, highway exits and slope variation) and speed limits (130 km/h to 110 km/h) were included to make the driving less monotonous. In both directions on the highway, traffic was fluid, with eight overtaking situations.

A critical incident occurred at the 18th minute of automated driving, after which a questionnaire was administered. These results are not reported here, as they are beyond the scope of this paper, which aims only to characterise differences in gaze behaviour between manual and automated conditions. Therefore, only the data common to both conditions are considered; that is, 17 min of driving time during which no major events occurred.

Data Structure and Annotations

The driving environment was divided into 13 AOIs, as shown in Figure 3. These were as follows:

- The central screen contained six areas: the central mirror (area CM); the road centre (RC), defined as a circular area of 8° radius in front of the driver (Victor et al., 2005); and four additional areas defined relative to the road centre (Up, Left, Down and Right; Carsten et al., 2012).
- Each peripheral screen contained two areas: the lateral mirror (LM, RM) and the remaining peripheral scene (LS, RS).
- The dashboard (D).
- The HMI.
- All gaze data directed outside the areas above were grouped into an area called "Others".
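To illustrate how the road-centre AOI can be applied to raw gaze data, the sketch below flags each gaze sample as inside RC when its angular distance from the road-centre direction is at most 8°, and returns the PRC. It assumes, hypothetically, that each sample is given as (yaw, pitch) in degrees relative to a road-centre direction located straight ahead; the function names are illustrative and are not part of the Smart Eye or SCANeR software.

```python
import math

RC_RADIUS_DEG = 8.0  # radius of the road-centre circle used in the text


def angular_distance_deg(yaw_deg, pitch_deg):
    """Angle between a gaze direction and the road-centre direction.

    Assumption: yaw and pitch are measured relative to the road-centre
    direction, so the reference unit vector is (0, 0, 1) and the gaze unit
    vector is (cos(p) sin(y), sin(p), cos(p) cos(y)).
    """
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    cos_angle = math.cos(pitch) * math.cos(yaw)  # dot product with (0, 0, 1)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def percent_road_centre(samples, radius_deg=RC_RADIUS_DEG):
    """Percentage of gaze samples falling inside the road-centre circle."""
    inside = sum(1 for yaw, pitch in samples
                 if angular_distance_deg(yaw, pitch) <= radius_deg)
    return 100.0 * inside / len(samples)


# Hypothetical gaze samples (yaw, pitch) in degrees
gaze = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0), (0.0, -7.9)]
print(percent_road_centre(gaze))  # 75.0
```

The sample at (5°, 5°) lies about 7.1° from the road centre and therefore counts as RC, whereas (10°, 0°) does not.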

The percentage of time spent in each AOI was computed, as was a matrix of transitions between AOIs. The transition matrix gave the probability of shifting from one AOI to another or of remaining in the same AOI. These probabilities were estimated from the gaze data observed for each participant in each driving condition. As the 13 AOIs covered the entire visual environment, the transition matrix was a 13 × 13 matrix.
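A minimal sketch of both indicator types is given below. It assumes, as a hypothesis not stated in the text, that transitions are counted between consecutive 20 Hz samples (so staying in an AOI fills the diagonal) and that each row is normalised into conditional probabilities P(next AOI | current AOI); the AOI order in `AOIS` is arbitrary and chosen only for illustration.

```python
from collections import Counter

# The 13 AOIs of Figure 3 (order chosen here for illustration only)
AOIS = ["RC", "Up", "Left", "Down", "Right", "CM",
        "LM", "LS", "RM", "RS", "D", "HMI", "Others"]


def time_shares(samples):
    """Percentage of samples spent in each AOI (static indicators), in AOIS order."""
    counts = Counter(samples)
    n = len(samples)
    return [100.0 * counts[aoi] / n for aoi in AOIS]


def transition_matrix(samples):
    """13 x 13 matrix of P(next AOI = j | current AOI = i) (dynamic indicators).

    Rows of AOIs with no outgoing observation are left as zeros.
    """
    index = {aoi: k for k, aoi in enumerate(AOIS)}
    counts = [[0] * len(AOIS) for _ in AOIS]
    for current, nxt in zip(samples, samples[1:]):
        counts[index[current]][index[nxt]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


# Hypothetical AOI sequence, one label per gaze sample
seq = ["RC", "RC", "D", "RC", "RC", "Others"]
P = transition_matrix(seq)
rc = AOIS.index("RC")
print(P[rc][rc])  # 0.5 (RC -> RC twice out of four transitions leaving an RC sample)
print(time_shares(seq)[rc])
```

Counting at glance level (merging consecutive samples in the same AOI) would be an equally plausible alternative; it would mainly change the diagonal.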

Twenty-four statistical units were considered (12 participants × 2 driving conditions). For each of them, 182 visual indicators (13 × 13 = 169 transitions + 13 percentages of time spent on each AOI) were calculated, forming a complete dataset including both static and dynamic gaze indicators. The complete matrix was therefore 24 × 182 and was denoted XDS. When only the transitions were considered, the matrix was denoted XD and its size was 24 × 169. When only the percentages of time spent on each AOI were considered, the matrix was 24 × 13 and was denoted XS. Another vector, consisting only of the PRC, was also computed (size 24 × 1) and was labelled XPRC.
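The bookkeeping behind these matrix sizes can be sketched as follows. The Python names `X_DS`, `X_D`, `X_S` and `X_PRC` mirror the notation in the text, but the row-major flattening, the column order (transitions first) and `RC_INDEX` are hypothetical choices made for this illustration.

```python
N_AOI = 13
RC_INDEX = 0  # hypothetical: road centre stored first in the AOI order


def feature_row(transitions, shares):
    """One statistical unit: 169 transition probabilities + 13 time percentages."""
    if len(transitions) != N_AOI or any(len(r) != N_AOI for r in transitions):
        raise ValueError("expected a 13 x 13 transition matrix")
    if len(shares) != N_AOI:
        raise ValueError("expected 13 time percentages")
    dynamic = [p for row in transitions for p in row]  # row-major flattening
    return dynamic + list(shares)                        # 169 + 13 = 182 values


def split_blocks(X_DS):
    """Derive X_D (24 x 169), X_S (24 x 13) and X_PRC (24 x 1) from X_DS."""
    n_dyn = N_AOI * N_AOI
    X_D = [row[:n_dyn] for row in X_DS]
    X_S = [row[n_dyn:] for row in X_DS]
    X_PRC = [[row[n_dyn + RC_INDEX]] for row in X_DS]
    return X_D, X_S, X_PRC


# Hypothetical unit repeated 24 times (12 participants x 2 conditions)
uniform = [[1 / N_AOI] * N_AOI for _ in range(N_AOI)]
shares = [58.0] + [3.5] * 12  # percentages summing to 100
X_DS = [feature_row(uniform, shares) for _ in range(24)]
X_D, X_S, X_PRC = split_blocks(X_DS)
print(len(X_DS), len(X_DS[0]), len(X_D[0]), len(X_S[0]), X_PRC[0])
# 24 182 169 13 [58.0]
```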

All matrices were centred and reduced (i.e., standardised to zero mean and unit variance) before the PLS regressions described below.
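Centring and reducing amounts to a column-wise z-score. The sketch below assumes the sample standard deviation (n − 1) and, since the text does not say how zero-variance columns were handled (for example a transition never observed by any participant), sets such columns to 0 rather than dividing by zero; both choices are assumptions.

```python
import statistics


def centre_and_reduce(X):
    """Column-wise z-scores: subtract the column mean, divide by its standard deviation.

    Uses the sample standard deviation (n - 1); zero-variance columns become 0.
    """
    columns = list(zip(*X))
    means = [statistics.fmean(col) for col in columns]
    sds = [statistics.stdev(col) for col in columns]
    return [
        [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(row, means, sds)]
        for row in X
    ]


X = [[1.0, 10.0], [2.0, 10.0], [3.0, 10.0]]
print(centre_and_reduce(X))  # [[-1.0, 0.0], [0.0, 0.0], [1.0, 0.0]]
```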

The data were structured with the aim of predicting the automation state (Y) from XPRC, XS, XD or XDS. The automation state was coded 1 for manual driving and −1 for automated driving.

The prediction model was chosen with respect to two constraints. First, the number of visual indicators available to explain Y (up to 182 for XDS) was much higher than the number of observations (n = 24). Second, the visual indicators were correlated. Indeed, with the driving environment divided into 13 AOIs, the percentages of time spent on 12 AOIs determine the percentage of time spent on the 13th. In mathematical terms, the multi-indicator matrices (XS, XD and XDS) are not of full column rank. Given this collinearity, an ordinary least-squares linear model was not appropriate.
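The rank deficiency caused by percentages summing to 100 can be checked numerically. The toy example below is hypothetical (3 AOIs instead of 13, 4 statistical units) and uses a small Gaussian-elimination rank function written for this sketch: once the columns are centred, every row sums to 0, so one column is a linear combination of the others and the rank drops below the number of columns.

```python
def matrix_rank(M, tol=1e-9):
    """Rank of a small dense matrix by Gaussian elimination with partial pivoting."""
    A = [[float(v) for v in row] for row in M]
    n_rows, n_cols = len(A), len(A[0])
    rank = 0
    for col in range(n_cols):
        if rank == n_rows:
            break
        pivot = max(range(rank, n_rows), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < tol:
            continue  # no usable pivot in this column
        A[rank], A[pivot] = A[pivot], A[rank]
        for r in range(rank + 1, n_rows):
            factor = A[r][col] / A[rank][col]
            for c in range(col, n_cols):
                A[r][c] -= factor * A[rank][c]
        rank += 1
    return rank


# Hypothetical percentages of time on 3 AOIs for 4 statistical units (rows sum to 100)
shares = [[60, 30, 10], [50, 30, 20], [70, 20, 10], [40, 40, 20]]
means = [sum(col) / len(shares) for col in zip(*shares)]
centred = [[x - m for x, m in zip(row, means)] for row in shares]

print(matrix_rank(centred), "of", len(centred[0]), "columns")  # 2 of 3 columns
print([sum(row) for row in centred])  # [0.0, 0.0, 0.0, 0.0]
```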

Considering these constraints, PLS regression was selected. PLS regression yields a linear estimate of Y from X that remains well defined when the predictors are numerous and strongly collinear (Abdi, 2010). All PLS regressions in this study were performed with the PLS regression package in R (R Core Team, 2018).

PLS regression is based on a simultaneous decomposition of both X and Y on orthogonal components. Once the optimal decomposition is found, the number of visual indicators is systematically reduced so that only the indicators relevant for the prediction are retained. Ultimately, the most accurate model, involving the optimal decomposition and only the most relevant visual indicators, provides the estimate of Y as a linear combination of the relevant visual indicators. Validation was performed using a leave-one-out procedure. For more details, see Appendix A.
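For readers without access to the R package, the sketch below shows a single-response PLS regression (the standard NIPALS PLS1 algorithm) with leave-one-out predictions, in plain Python. It is a swapped-in illustration, not the authors' code: the number of components is fixed by hand, the variable-selection step described in Appendix A is not implemented, and the data are hypothetical.

```python
import math


def pls1_fit(X, y, n_components):
    """Fit a single-response PLS model (NIPALS); returns means and components."""
    n, p = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    E = [[row[j] - x_mean[j] for j in range(p)] for row in X]  # centred X residuals
    f = [yi - y_mean for yi in y]                               # centred y residuals
    components = []
    for _ in range(n_components):
        # weights: direction of maximal covariance between X residuals and y residuals
        w = [sum(E[i][j] * f[i] for i in range(n)) for j in range(p)]
        norm = math.sqrt(sum(v * v for v in w))
        if norm < 1e-12:
            break  # nothing left to explain
        w = [v / norm for v in w]
        t = [sum(E[i][j] * w[j] for j in range(p)) for i in range(n)]  # scores
        tt = sum(v * v for v in t)
        loading = [sum(E[i][j] * t[i] for i in range(n)) / tt for j in range(p)]
        q = sum(f[i] * t[i] for i in range(n)) / tt
        # deflate X and y by the part explained by this component
        E = [[E[i][j] - t[i] * loading[j] for j in range(p)] for i in range(n)]
        f = [f[i] - q * t[i] for i in range(n)]
        components.append((w, loading, q))
    return x_mean, y_mean, components


def pls1_predict(model, x):
    """Predict y for one new row by replaying the same deflation steps."""
    x_mean, y_mean, components = model
    e = [xj - mj for xj, mj in zip(x, x_mean)]
    y_hat = y_mean
    for w, loading, q in components:
        t = sum(ej * wj for ej, wj in zip(e, w))
        y_hat += q * t
        e = [ej - t * pj for ej, pj in zip(e, loading)]
    return y_hat


def loo_predictions(X, y, n_components):
    """Leave-one-out: refit without unit i, then predict unit i."""
    preds = []
    for i in range(len(X)):
        model = pls1_fit(X[:i] + X[i + 1:], y[:i] + y[i + 1:], n_components)
        preds.append(pls1_predict(model, X[i]))
    return preds


# Hypothetical standardised indicators; y = 1 (manual) or -1 (automated)
X = [[1.2, 0.3], [0.8, -0.1], [1.0, 0.5], [-0.9, 0.2], [-1.1, -0.4], [-1.0, -0.5]]
y = [1, 1, 1, -1, -1, -1]
print([round(v, 2) for v in loo_predictions(X, y, n_components=1)])
```

With as many components as independent predictors, PLS1 reproduces ordinary least squares; the interest of PLS here lies in using fewer components than indicators.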

Data Analysis

Three sequential stages composed the analysis:

- The first step considered only the percentage of time spent on each AOI individually. The difference between manual and automated driving was evaluated using paired t-tests, corrected with the Holm-Bonferroni procedure to control the family-wise error rate.
- In the second step, prediction models of the automation state were developed using PLS regression. Several models were developed, depending on the nature of the visual indicators used as input: PRC only (XPRC), static indicators only (XS), dynamic indicators only (XD) or a combination of static and dynamic indicators (XDS).
- In the final step, the predictions were used for binary classification. A given statistical individual was classified as "automated" if its prediction was negative and as "manual" otherwise. The number of classification errors was then counted.
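Steps 1 and 3 can be sketched as below, together with the mean square error of prediction (MSEP) reported in the Results. Converting a paired t statistic into a p-value requires the t distribution, which is not in Python's standard library, so the Holm-Bonferroni function starts from p-values; all data are hypothetical and the function names are illustrative.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic for two conditions measured on the same participants."""
    d = [x - y for x, y in zip(a, b)]
    return statistics.fmean(d) / (statistics.stdev(d) / math.sqrt(len(d)))


def holm_bonferroni(p_values):
    """Holm step-down adjusted p-values (controls the family-wise error rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # smallest p-value multiplied by m, next by m - 1, ... kept monotone
        running_max = max(running_max, min(1.0, (m - rank) * p_values[i]))
        adjusted[i] = running_max
    return adjusted


def classify(scores):
    """Negative estimate -> 'automated', otherwise 'manual'."""
    return ["automated" if s < 0 else "manual" for s in scores]


def classification_errors(scores, y):
    """Count units whose predicted class disagrees with y (1 manual, -1 automated)."""
    truth = ["manual" if v == 1 else "automated" for v in y]
    return sum(p != t for p, t in zip(classify(scores), truth))


def msep(scores, y):
    """Mean square error of prediction."""
    return statistics.fmean([(s - v) ** 2 for s, v in zip(scores, y)])


print(holm_bonferroni([0.01, 0.04, 0.03, 0.005]))
scores, y = [0.8, -0.2, -0.9, 0.1], [1, 1, -1, -1]
print(classification_errors(scores, y), msep(scores, y))  # 2 errors, MSEP ≈ 0.675
```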

Results

Distribution of visual attention as a function of the automation state

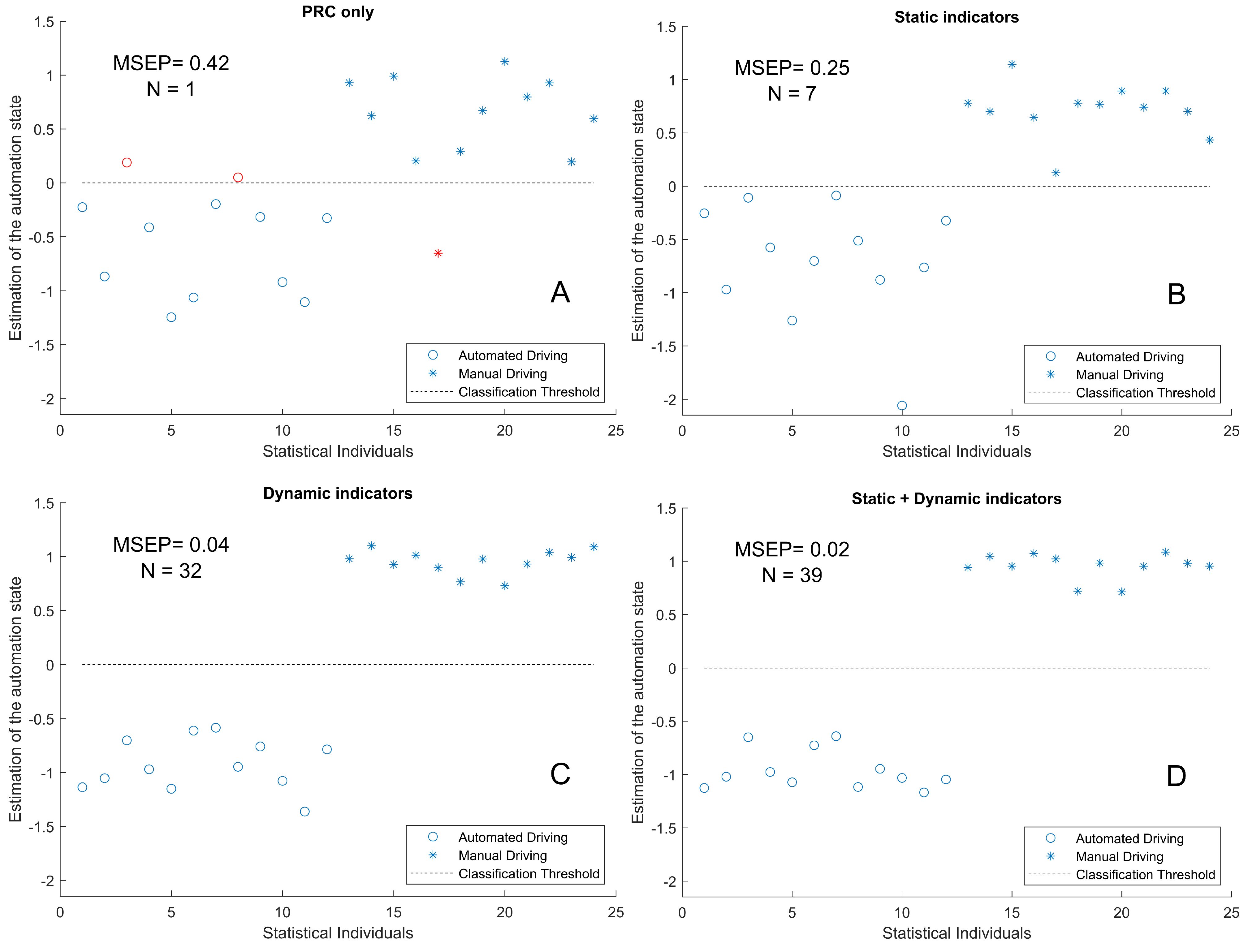

The percentage of time spent in each of the 13 AOIs during the entire drive is presented in Table 1.

During manual driving, participants spent about 27% more time in the road centre area than during automated driving (p < .001). Conversely, automated driving was associated with more gaze directed to all other areas, in particular the left and right peripheral screens, the down area and the central mirror, although these differences did not reach statistical significance (p < .10).

Predictions of the automated state with PLS regression

Several PLS predictions were performed, depending on the input matrix: XPRC (PRC only), XS (static indicators only), XD (dynamic indicators only) or XDS (combined dynamic and static indicators). The estimates obtained with PLS regression are presented in Figure 4.

Results showed that considering PRC alone provided a rough estimate of the automation state, with a mean square error of prediction (MSEP) of 0.42, and gave rise to three classification errors (Figure 4-A). When all static indicators were considered as input, seven were selected for PLS regression (Table 2, column 1). This analysis yielded a more accurate estimate of the automation state than prediction based on PRC alone: the MSEP was halved and there were no classification errors. However, four statistical individuals (1, 3, 8 and 17) remained close to the classification threshold (Figure 4-B).

When dynamic indicators alone were considered, 32 (out of 169) indicators were selected by the PLS regression. The prediction was greatly improved, with an MSEP of 0.04, and the manual and automated driving conditions were more clearly discriminated (Figure 4-C). Considering static and dynamic indicators together yielded the lowest prediction error (MSEP = 0.02), although the pattern of results was quite similar to that obtained with the dynamic PLS (Figure 4-D). It should be noted that the indicators selected by the combined static and dynamic PLS were the same as those selected by the static PLS and by the dynamic PLS.

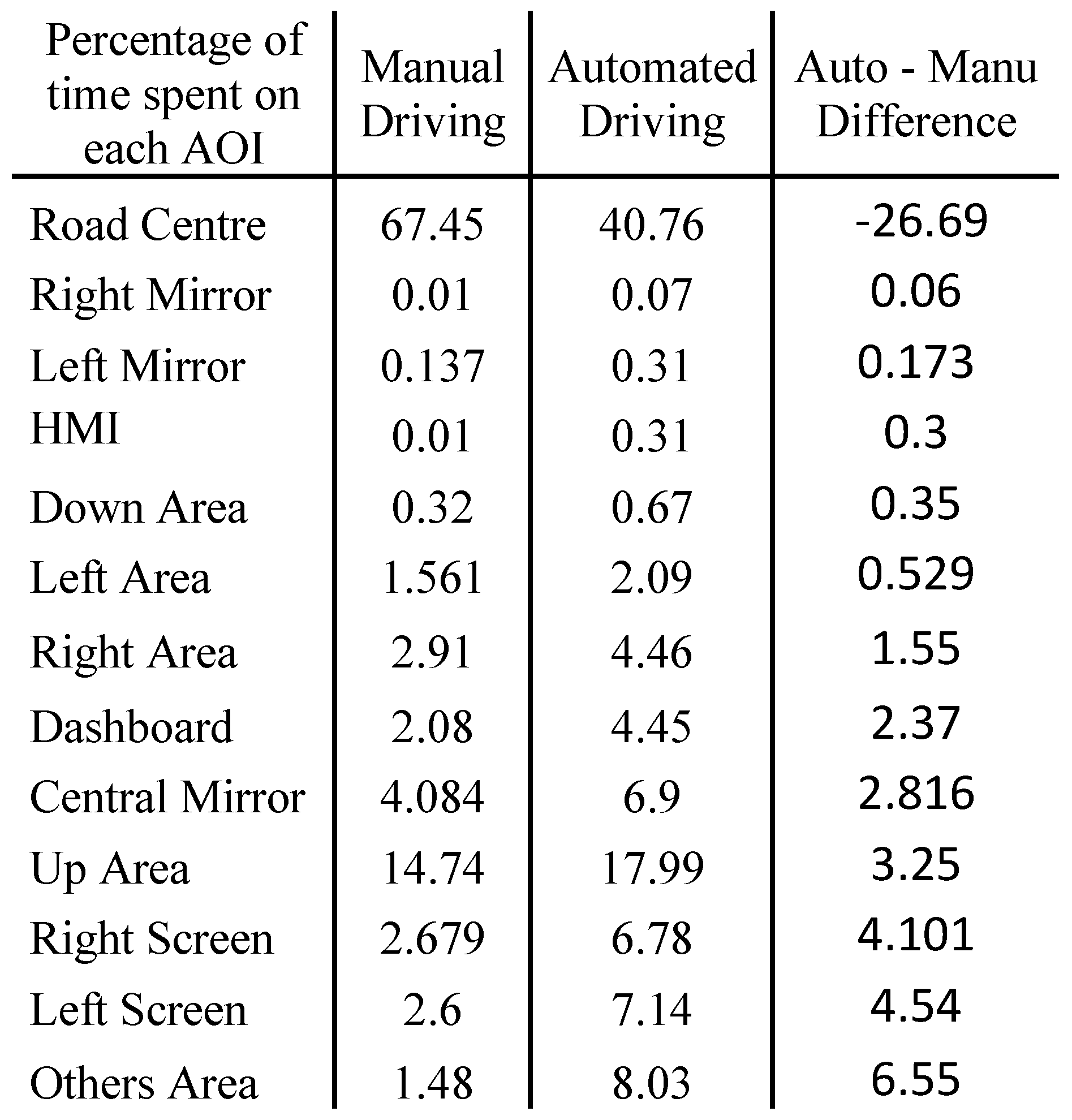

The PLS regression coefficients for each visual indicator retained in the different predictions are presented in Table 2. The sign of a coefficient indicates the direction of its contribution to the estimate. A positive coefficient tends to increase the estimated score and is therefore a feature of manual driving; a negative coefficient decreases the estimated score, which corresponds to automated driving. A coefficient's absolute value indicates its importance for the prediction, with large coefficients contributing strongly to the estimate.
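The reading of the coefficients can be written compactly. In the notation introduced here for illustration only, $\mathcal{S}$ is the set of retained indicators, $b_j$ the PLS coefficient of indicator $j$ and $z_j$ its standardised value for a given statistical individual. Assuming the final model is fitted to all 24 units, the intercept vanishes, because the indicators are centred and Y (12 values of 1 and 12 of −1) has zero mean:

```latex
\hat{y} = \sum_{j \in \mathcal{S}} b_j \, z_j ,
\qquad
\text{class} =
\begin{cases}
\text{manual}    & \text{if } \hat{y} \ge 0,\\
\text{automated} & \text{if } \hat{y} < 0.
\end{cases}
```

Each term $b_j z_j$ pushes the score towards manual driving when it is positive, for instance when an indicator with $b_j > 0$ takes an above-average value ($z_j > 0$). Because all indicators share the same unit variance, the magnitudes $|b_j|$ can be compared directly.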

In accordance with the analysis presented in Table 1, the only static indicator selected by the PLS analyses as a feature of manual driving was the percentage of time spent looking at the road centre. By contrast, automated driving was characterised by a combination of looking at the peripheral areas (left and right screens), the dashboard, the Others area, the central mirror and the down area.

Both the dynamic PLS and the combined static and dynamic PLS selected 32 AOI transitions, 14 related to manual driving and 18 related to automated driving. To facilitate the identification of dynamic visual patterns characteristic of manual or automated driving, we performed a final analysis of these AOI transitions, examining whether the gaze mostly entered or exited each AOI. For each AOI, the difference between the number of transitions entering the AOI and the number of transitions exiting it was calculated. If the resulting value was positive, the AOI was classed as an entering AOI; if it was negative, the AOI was classed as an exiting AOI. The results are presented in Figure 5 and Figure 6.
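The entering/exiting balance can be sketched as follows. The sketch assumes that each selected transition counts once (not weighted by its probability or PLS coefficient) and that self-transitions, which neither enter nor leave an AOI, are ignored; the "equilibrium" label for a zero balance follows the wording of the Results, and the list of transitions is hypothetical.

```python
from collections import defaultdict


def net_flow(transitions):
    """Entering minus exiting transitions for each AOI.

    `transitions` is a list of (from_aoi, to_aoi) pairs, e.g. the AOI
    transitions selected by the PLS for one driving mode.
    """
    balance = defaultdict(int)
    for src, dst in transitions:
        if src == dst:
            continue  # staying in the same AOI neither enters nor exits it
        balance[dst] += 1  # gaze enters dst
        balance[src] -= 1  # gaze exits src
    return dict(balance)


def label(balance):
    """Entering (> 0), exiting (< 0) or at equilibrium (0)."""
    return {aoi: "entering" if v > 0 else "exiting" if v < 0 else "equilibrium"
            for aoi, v in balance.items()}


selected = [("LS", "RC"), ("D", "RC"), ("RC", "CM"), ("CM", "RC"), ("RC", "RC")]
print(label(net_flow(selected)))
# {'RC': 'entering', 'LS': 'exiting', 'D': 'exiting', 'CM': 'equilibrium'}
```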

The analysis of gaze dynamics during manual driving showed that many more glances entered the road centre area than left it. To a lesser extent, the area just below it (down area) and the left and central mirrors also received more incoming than outgoing glances. All other AOIs had more exiting glances, apart from the left screen, which was at equilibrium.

Automated driving was characterised by many glances moving away from the road (road centre, left area and down area), and by a preference for areas that provided information about the vehicle's status (dashboard, HMI) and the adjacent lane (left mirror, left screen). More glances entered than exited the areas not related to the driving task (Others, right screen).

Discussion

The aim of this work was to predict, on the basis of drivers' visual strategies, whether they were driving manually or in autonomous mode. This estimation allowed the identification of the most important visual indicators in both driving modes. To do this, we analysed how drivers distributed their attention over a set of AOIs that composed the driving environment. We considered both static indicators (percentage of time spent on AOIs) and indicators of gaze dynamics (matrices of transitions between AOIs). The indicators that best predicted the driving mode were selected using PLS regression, and the quality of the prediction was then evaluated by examining the ability of the models to distinguish between the two driving modes without error.

The results confirm a recurrent observation in studies of visual strategies in autonomous driving: drivers spend less time looking at the road and more time looking at the periphery (Barnard & Lai, 2010; Damböck et al., 2013; Louw & Merat, 2017; Mackenzie & Harris, 2015). Under the experimental conditions of this study, gaze diverted from the road centre area went partly to the lateral visual scene, the central rear-view mirror, the dashboard and the down area. The results can be interpreted in relation to the concept of situation awareness (Endsley, 1995). Situation awareness is built at three levels: drivers must perceive (level 1, perception) and understand visual information (level 2, comprehension), and they must also anticipate future driving situations (level 3, projection). During automated driving, in the absence of strong sources of distraction, drivers were freed from vehicle control and could therefore gather information about the driving environment and the state of the vehicle at leisure. The dispersed gaze observed could thus have contributed to maintaining better situation awareness. However, an increase in the number of glances directed at places not relevant to the driving task (Others area) was also found. Hence, even in the absence of a major source of distraction or a secondary task, some disengagement was observed.

In comparison, manual driving appeared stereotypical, with more than two thirds of the driving time spent looking at the road ahead, and many transitions back to this area once the gaze had been turned away from it. During manual driving, visuomotor coordination is essential: gaze allows the anticipation of the future path and leads steering actions (Land & Lee, 1994; Mars, 2008; Wilkie et al., 2008). It has been shown that autonomous driving makes visuomotor coordination inoperative, which can have deleterious consequences in the event of an unplanned takeover request (Mars & Navarro, 2012; Mole et al., 2019; Navarro et al., 2016; Schnebelen et al., 2019).

Previous work has shown that driving automation is associated with reduced PRC (Carsten et al., 2012; Jamson et al., 2013). Here, we examined the converse by attempting to predict the state of driving automation from the observed PRC. The results showed that PRC was indeed a relevant indicator: categorising drivers on this criterion gave fairly accurate results, although with some errors. Considering all AOIs of potential interest for driving substantially increased the quality of prediction, and the improvement depended notably on the nature of the indicators. Although the driving mode could be categorised without error from the percentage of time spent on all AOIs, the quality of prediction was far higher when the dynamics of transitions between AOIs were also considered: the MSEP was reduced 6-fold with the dynamic approach compared with the static approach. Moreover, adding static indicators to dynamic indicators yielded the same pattern of results as dynamic indicators alone, with slightly lower prediction error.

These results confirm the hypothesis that gaze dynamics are strongly affected by vehicle automation. Specifically, gaze dynamics appear to capture most of the distinction between manual and autonomous driving.

As mentioned, considering the transitions between AOIs improved the prediction of the driving mode. It also helped refine the visual patterns characteristic of manual or automated driving. For example, during manual driving, static indicators highlighted the predominance of information obtained from the road centre area. Accounting for gaze dynamics confirmed this observation but also revealed a subtle imbalance between incoming and outgoing glances for the left and central rear-view mirrors. Even if glances at the mirrors do not account for much visual processing time, they might be essential for the driver to build a mental model of the driving environment.

This study developed a methodology, based on PLS regression, to analyse the influence of the level of automation using a large set of visual indicators. Other modelling approaches, such as Long Short-Term Memory (LSTM) networks or Hidden Markov Models (HMMs), could lead to comparable prediction quality. However, black-box approaches such as neural networks might prove less useful for the objective of our study, which was to determine the most relevant visual indicators to discriminate driving conditions. This problem of interpretation may not arise with HMMs, which are quite common in the analysis of visual exploration of natural scenes (Coutrot et al., 2018). Nevertheless, training an HMM requires setting prior probabilities, which is not the case with PLS regression.

The approach developed in this study could potentially be used to detect other driver states. For example, it could be a useful tool for detecting driver activity during conditionally automated driving (Braunagel et al., 2015). In the event of a takeover request, the method could also be used to assess driver readiness (Braunagel et al., 2017). During autonomous driving, the driver may also gradually drift out of the loop, which may degrade the quality of the takeover when it is requested (Merat et al., 2019). We have recently shown that PLS modelling can discriminate between drivers who supervise the driving scene correctly and drivers who are out of the loop with a high level of mind wandering (Schnebelen et al., 2020). The approach could also be transposed to other areas, such as the evaluation of the out-of-the-loop phenomenon in aeronautics (Gouraud et al., 2017).