Multi-Intensity Optimization-Based CT and Cone Beam CT Image Registration

Abstract

1. Introduction

2. Materials and Methods

2.1. Details of the Proposed Model

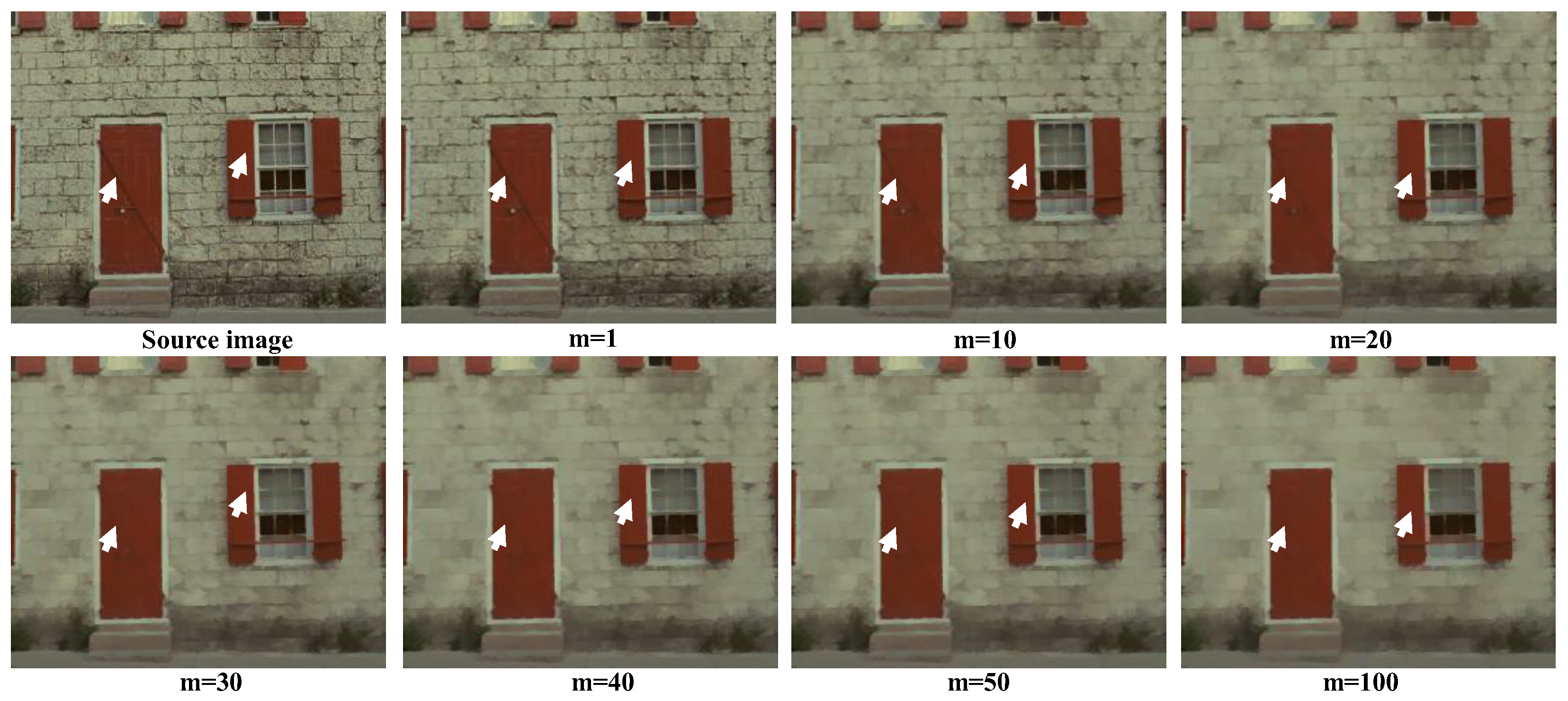

2.1.1. Multi-Weighted Mean Curvature Filter

2.1.2. Intensity-Based Registration

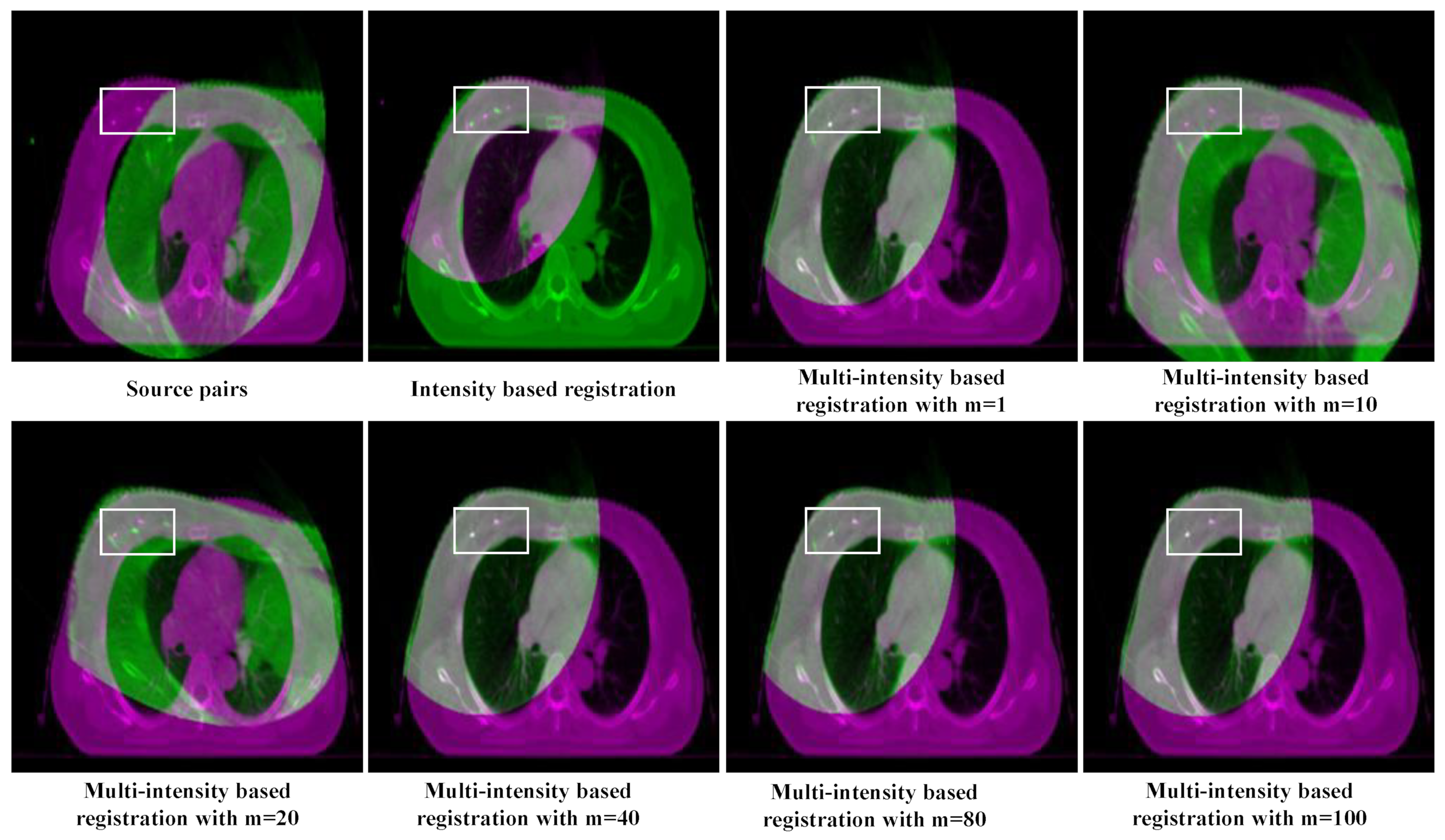

2.1.3. Multi-Intensity Based Registration

2.1.4. Intersection Mutual Information-Based Optimal Transformation Selection

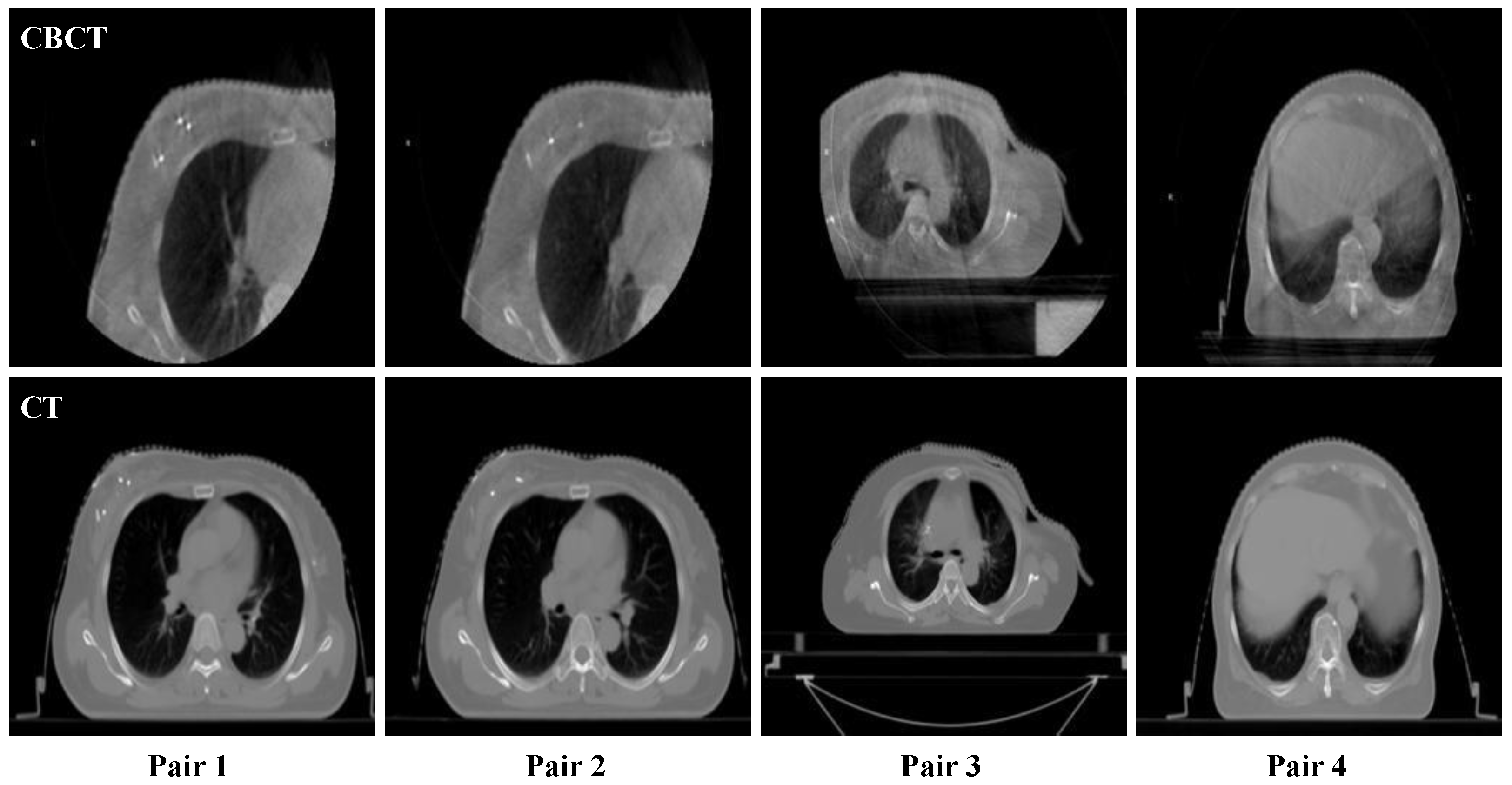

2.2. Sample and Data

2.3. Measures of Parameters

3. Experiments and Analysis

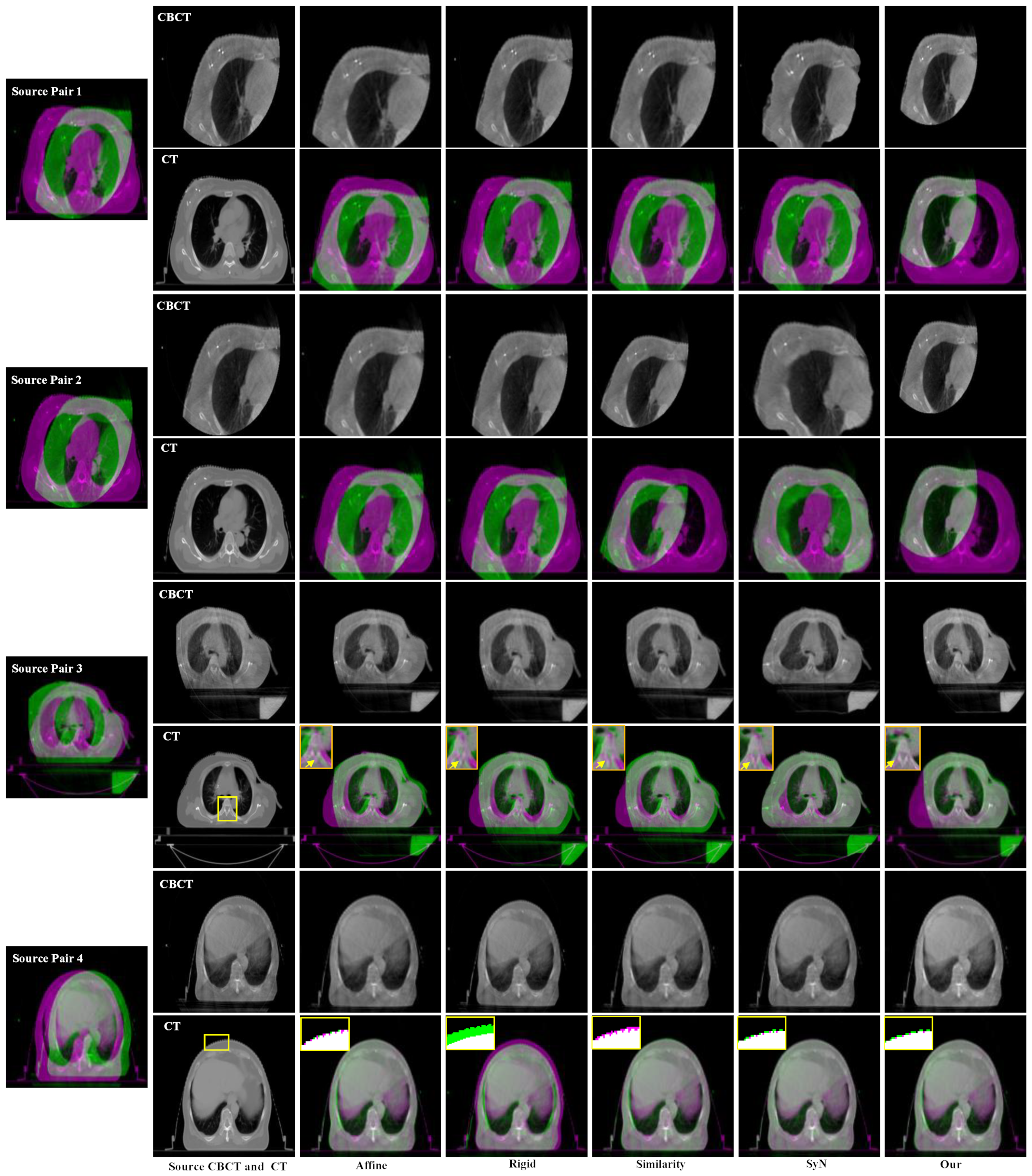

3.1. Experimental Settings

3.2. Experimental Results and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | Transformation | Similarity Measures |
|---|---|---|
| Affine | Affine registration | MI, Mean Squares, GC |
| Rigid | Rigid registration | MI, Mean Squares, GC |
| Similarity | Rotation + uniform scaling | MI, Mean Squares, GC |
| SyN | Symmetric diffeomorphic | CC, MI, Mean Squares, Demons |
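Mutual information (MI) appears as a similarity measure for every method listed above. As a rough illustration of what such a measure computes, the following is a minimal sketch of a joint-histogram MI estimate in NumPy. It is not the paper's intersection mutual information (IMI), nor the estimator used inside any particular registration toolkit; the function name `mutual_information` and the `bins` parameter are assumptions made for this example.

```python
import numpy as np


def mutual_information(a, b, bins=32):
    """Joint-histogram estimate of mutual information (in nats).

    Illustrative only: `a` and `b` are two images of the same shape,
    and `bins` is an assumed histogram resolution.
    """
    # Joint histogram of corresponding pixel intensities.
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    pxy = joint / joint.sum()              # joint probability
    px = pxy.sum(axis=1, keepdims=True)    # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)    # marginal of b, shape (1, bins)
    nz = pxy > 0                           # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))


# Synthetic check: an image shares more information with itself
# than with an unrelated random image.
rng = np.random.default_rng(0)
fixed = rng.random((64, 64))
unrelated = rng.random((64, 64))
print(mutual_information(fixed, fixed) > mutual_information(fixed, unrelated))
```

A higher value indicates that the intensities of one image are more predictive of the other, which is why MI is commonly used when the two modalities (here CT and CBCT) do not share an identical intensity scale.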

| Method | Pair 1 IMI | Pair 1 SSIM | Pair 2 IMI | Pair 2 SSIM | Pair 3 IMI | Pair 3 SSIM | Pair 4 IMI | Pair 4 SSIM |
|---|---|---|---|---|---|---|---|---|
| Affine | 0.4746 | 0.3183 | 0.5032 | 0.2705 | 0.6904 | 0.3030 | 0.8766 | 0.3134 |
| Rigid | 0.5711 | 0.2542 | 0.5035 | 0.2973 | 0.6923 | 0.2636 | 0.8351 | 0.3515 |
| Similarity | 0.4727 | 0.2569 | 0.5549 | 0.3885 | 0.6912 | 0.2667 | 0.8767 | 0.3138 |
| SyN | 0.4742 | 0.2712 | 0.4813 | 0.2283 | 0.7236 | 0.2906 | 0.8835 | 0.3188 |
| Our method | 0.5940 | 0.3898 | 0.5826 | 0.3847 | 0.7676 | 0.3066 | 0.8926 | 0.3159 |

| Metric | Affine | Rigid | Similarity | SyN | Our Method |
|---|---|---|---|---|---|
| IMI | 0.6362 | 0.6505 | 0.6488 | 0.6406 | 0.7092 |
| SSIM | 0.3013 | 0.2916 | 0.3064 | 0.2772 | 0.3492 |
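The summary values above appear to be the arithmetic mean of the four per-pair scores for each method. The text here does not state this explicitly, so treat it as an inference: averaging the per-pair values reproduces every summary entry to within 1e-4 (the small residuals are consistent with truncation to four decimals). A short sketch of that check, with the dictionary layout and the helper name `mean_scores` chosen only for this example:

```python
# Per-pair IMI and SSIM scores, copied from the per-pair results table.
per_pair = {
    "Affine":     {"IMI": [0.4746, 0.5032, 0.6904, 0.8766],
                   "SSIM": [0.3183, 0.2705, 0.3030, 0.3134]},
    "Rigid":      {"IMI": [0.5711, 0.5035, 0.6923, 0.8351],
                   "SSIM": [0.2542, 0.2973, 0.2636, 0.3515]},
    "Similarity": {"IMI": [0.4727, 0.5549, 0.6912, 0.8767],
                   "SSIM": [0.2569, 0.3885, 0.2667, 0.3138]},
    "SyN":        {"IMI": [0.4742, 0.4813, 0.7236, 0.8835],
                   "SSIM": [0.2712, 0.2283, 0.2906, 0.3188]},
    "Our method": {"IMI": [0.5940, 0.5826, 0.7676, 0.8926],
                   "SSIM": [0.3898, 0.3847, 0.3066, 0.3159]},
}


def mean_scores(table):
    """Average each metric over the image pairs, per method."""
    return {
        method: {metric: sum(vals) / len(vals) for metric, vals in metrics.items()}
        for method, metrics in table.items()
    }


means = mean_scores(per_pair)
for method, metrics in means.items():
    print(f"{method:<11} IMI={metrics['IMI']:.4f}  SSIM={metrics['SSIM']:.4f}")
```

Read this way, the proposed method has the highest mean IMI and mean SSIM of the five methods, even though it is not the top scorer on SSIM for every individual pair (for example, Pair 2 and Pair 4).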

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xie, L.; He, K.; Gong, J.; Xu, D. Multi-Intensity Optimization-Based CT and Cone Beam CT Image Registration. Electronics 2022, 11, 1862. https://doi.org/10.3390/electronics11121862
