Text-Guided Inpainting for Aesthetic Prediction in Orthofacial Surgery: A Patient-Centered AI Approach

Abstract

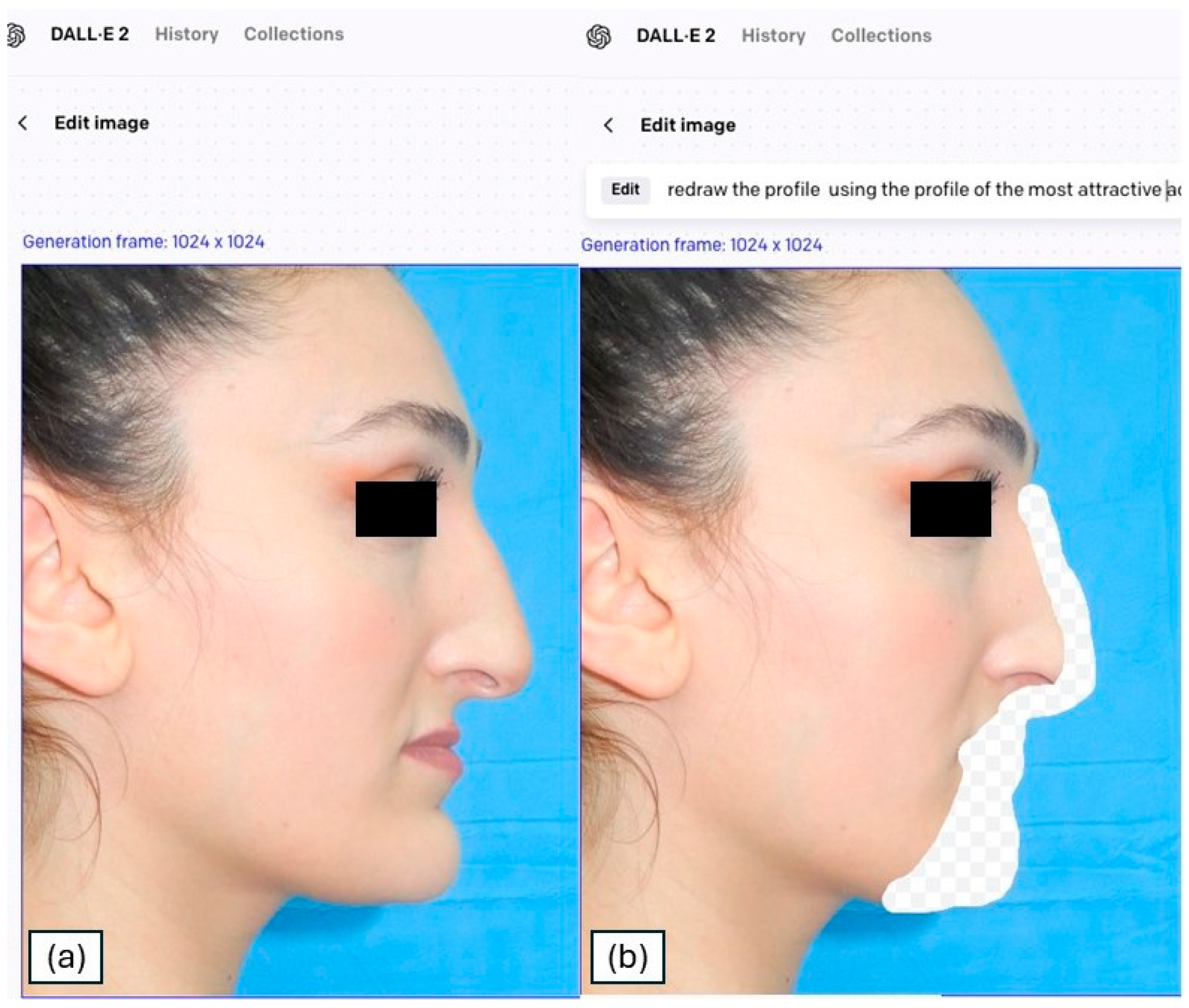

1. Introduction

2. Materials and Methods

2.1. Cohort Selection

Inclusion criteria:

- Age ≥ 18 years.

- Clinical and radiological diagnosis of DFDs.

- Availability of profilometric photographic documentation.

- Willingness to participate in the investigation and signed informed consent.

Exclusion criteria:

- Age < 18 years.

- Prior orthognathic surgery or facial implants altering profile aesthetics.

- Syndromic craniofacial disorders, major craniofacial trauma, or chronic bone disease.

- Inadequate or non-standardized photographs (see Photography Protocol) or missing key clinical data.

- Active dermatological or soft-tissue conditions that profoundly alter facial contours.

- Refusal of image use for research.
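The eligibility criteria above can be read as a single filter over candidate records. The sketch below is illustrative only: `Candidate` and `is_eligible` are hypothetical names and the fields are assumptions, since the study's data schema is not described. The age < 18 exclusion is already implied by the inclusion criterion and is kept only to mirror the list.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical screening record; field names are illustrative, not the study's schema."""
    age: int
    dfd_diagnosis: bool                   # clinical and radiological DFD diagnosis
    has_profile_photos: bool              # profilometric photographs available
    consented: bool                       # agreed to participate, signed informed consent
    prior_surgery_or_implants: bool       # prior orthognathic surgery or facial implants
    syndrome_trauma_or_bone_disease: bool
    standard_photos_and_complete_data: bool
    active_soft_tissue_condition: bool
    allows_image_use: bool                # consent to research use of images


def is_eligible(c: Candidate) -> bool:
    """Return True if every inclusion criterion holds and no exclusion criterion applies."""
    included = (
        c.age >= 18
        and c.dfd_diagnosis
        and c.has_profile_photos
        and c.consented
    )
    excluded = (
        c.age < 18  # redundant with the inclusion check, kept to mirror the list
        or c.prior_surgery_or_implants
        or c.syndrome_trauma_or_bone_disease
        or not c.standard_photos_and_complete_data
        or c.active_soft_tissue_condition
        or not c.allows_image_use
    )
    return included and not excluded
```

Keeping inclusion and exclusion as two separate expressions makes it easy to audit each list against the protocol.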

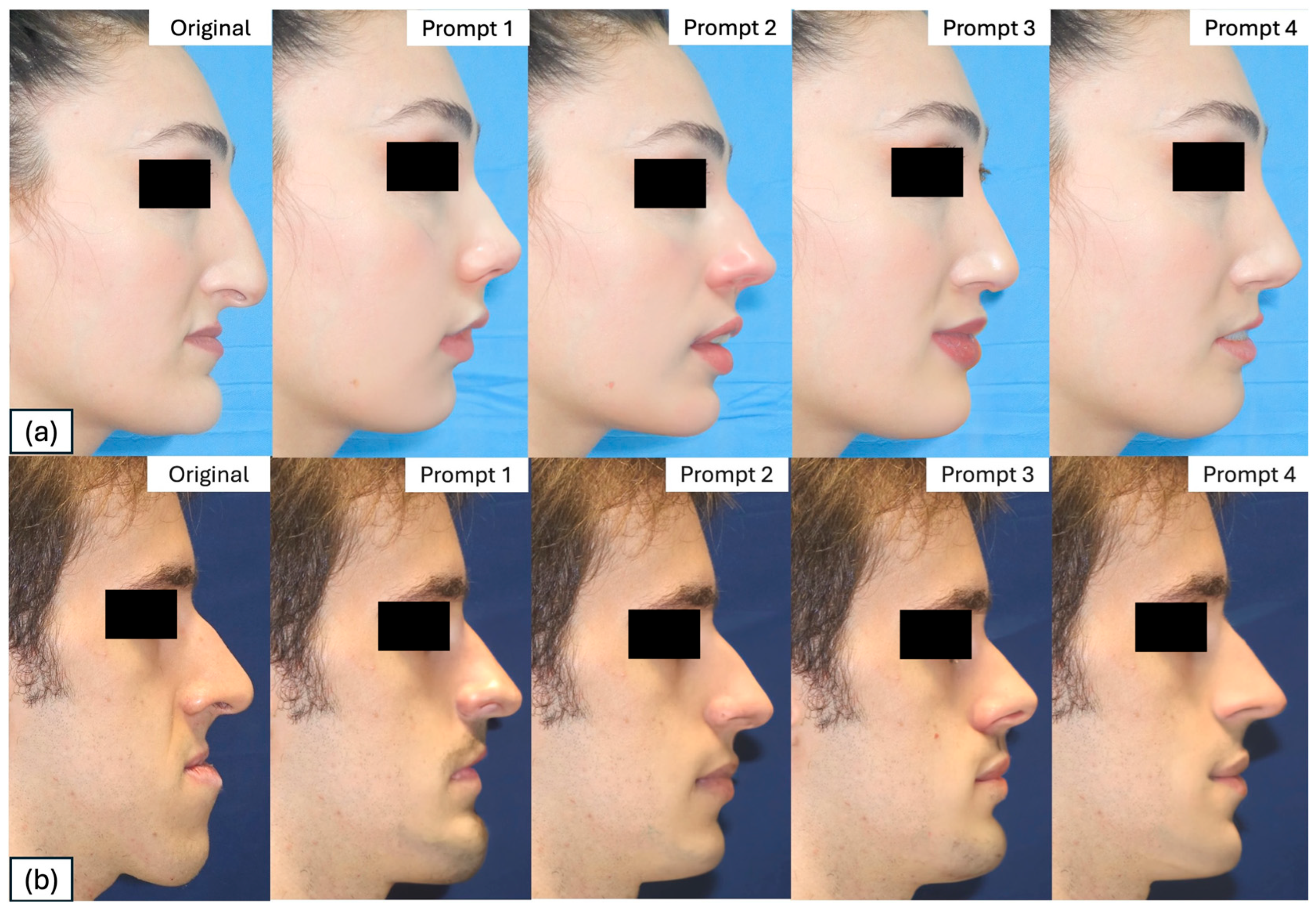

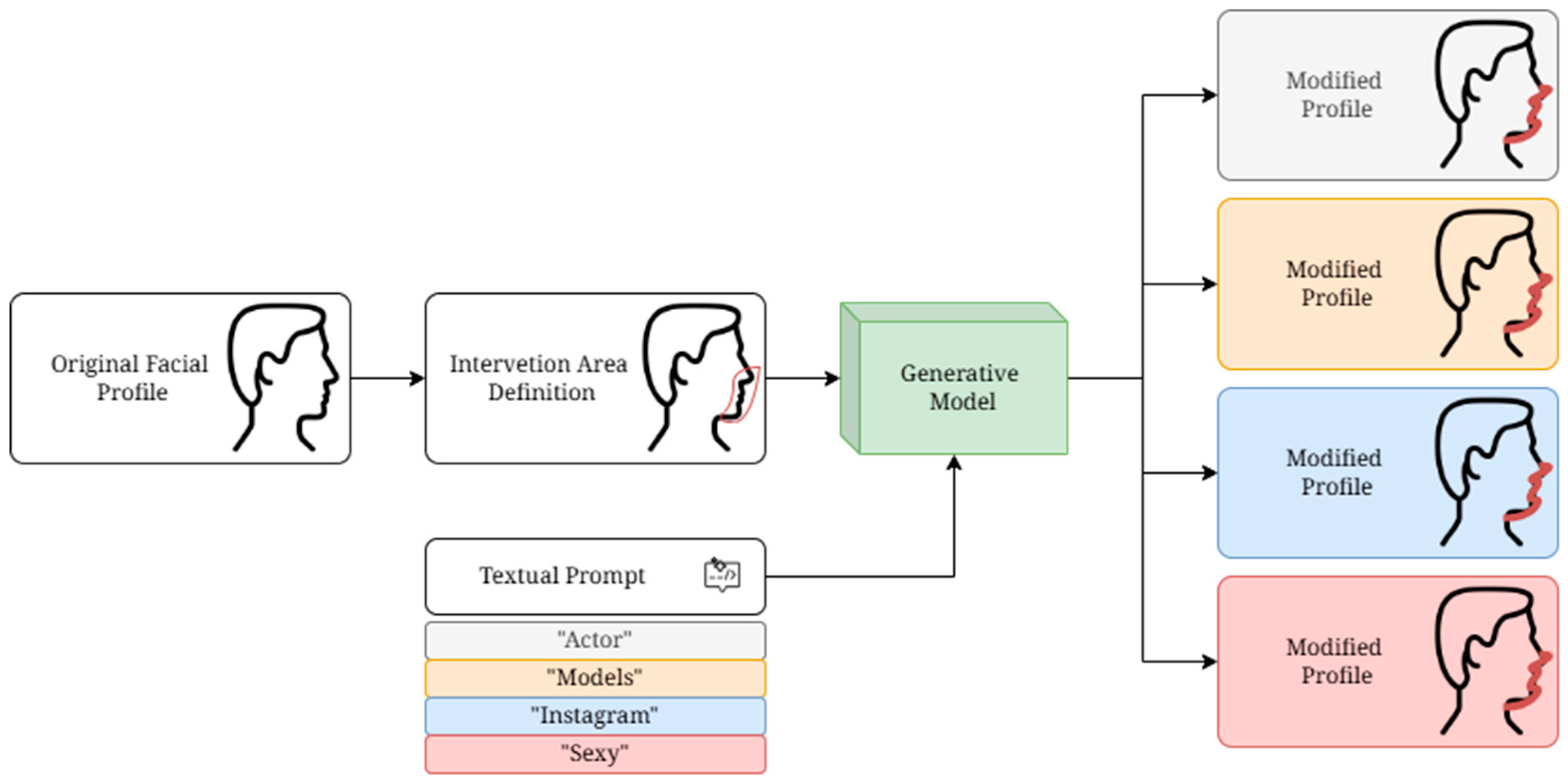

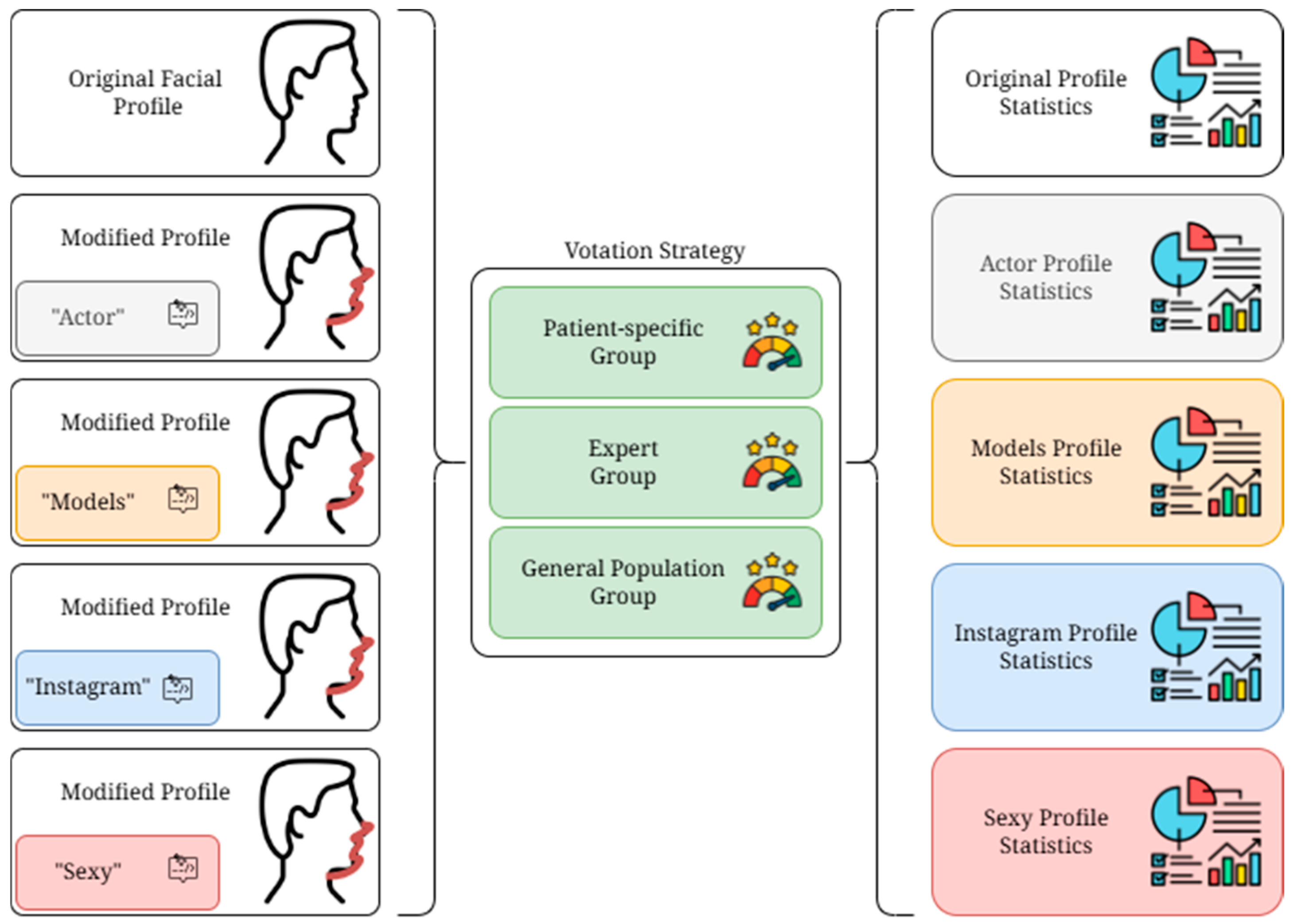

2.2. Study Design

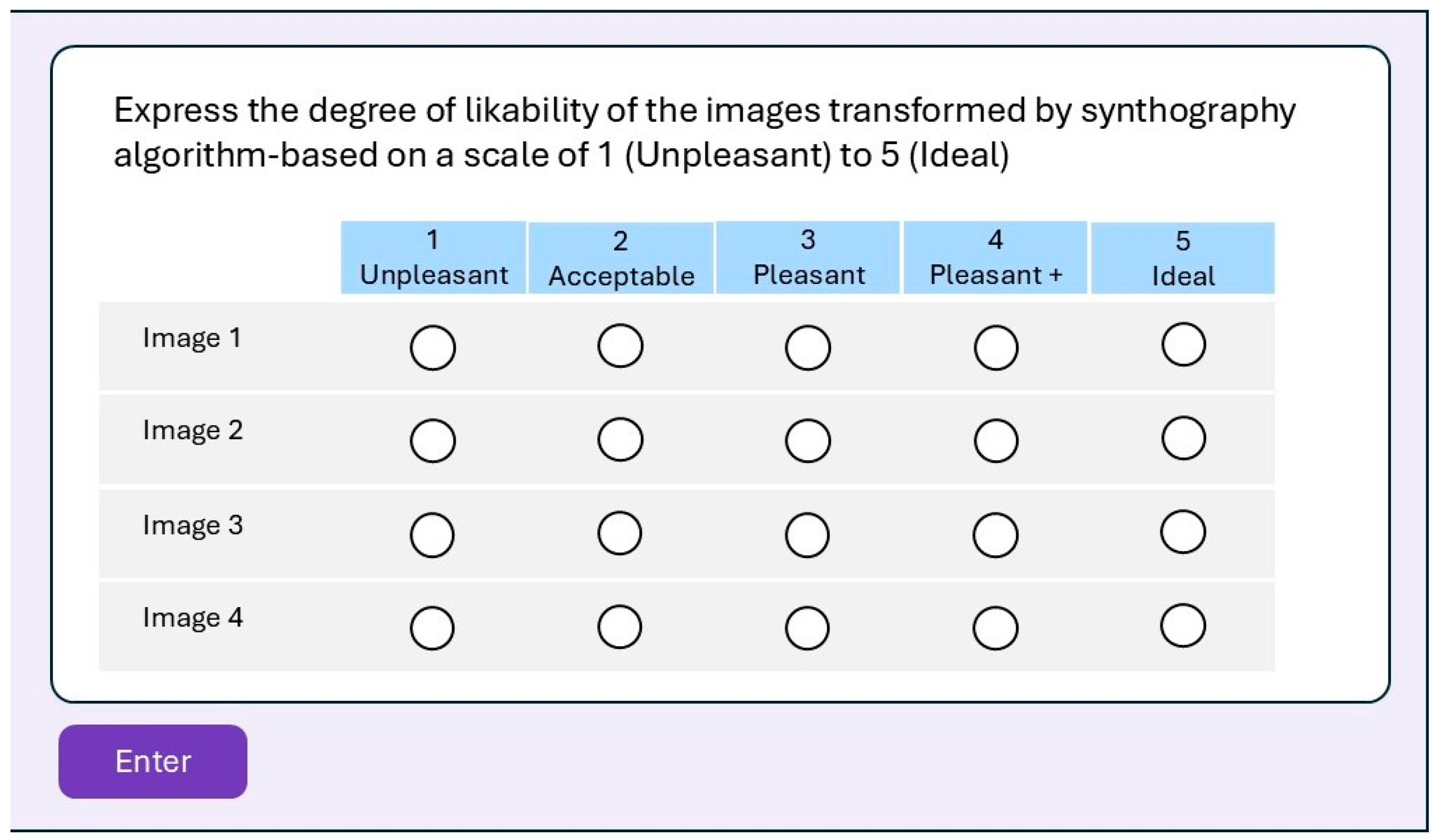

2.3. Google Form Survey

- Patient-specific Group (PsG): the 89 DFD patients selected for the study from the surgical waiting list. Each patient received a form containing the transformations of their own profile.

- Expert Group (EG): eight specialists in maxillofacial surgery from different institutions worldwide, all experienced in orthognathic surgery, who agreed to participate in the study. Each expert received a form containing the transformations of the profiles of all 89 selected DFD patients. Selection was random with respect to gender, ethnicity, and religious belief, and all members were required to have more than 10 years of surgical experience.

- General population Group (GpG): six individuals randomly selected from the clinical database of the maxillofacial surgery unit who agreed to participate in the study. Each received a form containing the transformations of the profiles of all 89 selected DFD patients. These individuals, patients treated or awaiting treatment for other conditions at our institute, were selected at random with respect to education, religious belief, gender, and ethnicity, in order to obtain as heterogeneous a group as possible, approximating the general population.
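The three groups differ in how many profiles each member rates: a PsG member judges only their own profile, whereas each EG and GpG member judges all 89. The sketch below models this form assignment with hypothetical identifiers (`build_forms`, `profiles_per_group`); the rater labels follow those used in the results tables, and it counts profiles only, ignoring the individual transformations shown for each profile.

```python
def build_forms(patient_ids, n_experts=8, n_public=6):
    """Map each (hypothetical) rater label to the patient profiles on their form."""
    patient_ids = list(patient_ids)
    forms = {}
    for pid in patient_ids:
        forms[f"PsG-{pid}"] = [pid]                 # each patient sees only their own profile
    for i in range(1, n_experts + 1):
        forms[f"Surgeon {i}"] = list(patient_ids)   # each expert sees every profile
    for i in range(1, n_public + 1):
        forms[f"Extern {i}"] = list(patient_ids)    # each lay rater sees every profile
    return forms


def profiles_per_group(forms):
    """Total number of profile judgments collected per group."""
    totals = {"PsG": 0, "EG": 0, "GpG": 0}
    for rater, profiles in forms.items():
        if rater.startswith("PsG"):
            group = "PsG"
        elif rater.startswith("Surgeon"):
            group = "EG"
        else:
            group = "GpG"
        totals[group] += len(profiles)
    return totals


print(profiles_per_group(build_forms(range(1, 90))))
```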

2.4. Statistical Analysis

2.5. Definitions

Photography Protocol

3. Results

3.1. Cohort Results

3.2. Survey Results

4. Discussion

- Brain MRI Enhancements: Synthography tools such as SynthSR enhance brain MRI by converting low-resolution clinical scans into high-resolution T1-weighted images. This enables more accurate morphometric analysis and supports the study of neurological conditions such as Alzheimer’s disease and brain tumors [31].

- MR-to-CT Image Translation: Synthography can generate CT images from MRI scans, extending diagnostic capabilities without additional radiation exposure. Techniques such as multi-resolution networks outperform traditional methods in synthesizing whole-body CT scans [32].

- Radiotherapy Planning: Synthetic CT (sCT) images are essential for radiotherapy planning when MRI is preferred for its soft-tissue contrast. Initiatives such as the SynthRAD2023 challenge are advancing adaptive radiotherapy techniques [33].

- Synthetic Pathology for AI Training: Generative models create synthetic chest X-rays to balance datasets and improve the diagnostic performance of AI models, mitigating both data imbalance and privacy concerns [34].

- Tumor Synthesis for AI Model Training: Synthetic tumors generated for a range of organs improve AI models for tumor detection and segmentation across imaging protocols [35].

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GANs | Generative Adversarial Networks |
| DFD | Dentofacial deformities |
| VSP | Virtual surgical planning |
| EG | Expert Group |
| GpG | General population Group |

References

1. Causio, F.A.; DE Angelis, L.; Diedenhofen, G.; Talio, A.; Baglivo, F.; Participants, W. Perspectives on AI use in medicine: Views of the Italian Society of Artificial Intelligence in Medicine. J. Prev. Med. Hyg. 2024, 65, E285–E289.

2. Reinhuber, E. Synthography–An invitation to reconsider the rapidly changing toolkit of digital image creation as a new genre beyond photography. In International Conference on ArtsIT, Interactivity and Game Creation; Springer: Berlin/Heidelberg, Germany, 2021; pp. 321–331.

3. Kazerouni, A.; Aghdam, E.K.; Heidari, M.; Azad, R.; Fayyaz, M.; Hacihaliloglu, I.; Merhof, D. Diffusion models in medical imaging: A comprehensive survey. Med. Image Anal. 2023, 88, 102846.

4. Varghese, C.; Harrison, E.M.; O’Grady, G.; Topol, E.J. Artificial intelligence in surgery. Nat. Med. 2024, 30, 1257–1268.

5. Sillmann, Y.M.; Monteiro, J.L.G.C.; Eber, P.; Baggio, A.M.P.; Peacock, Z.S.; Guastaldi, F.P.S. Empowering surgeons: Will artificial intelligence change oral and maxillofacial surgery? Int. J. Oral. Maxillofac. Surg. 2025, 54, 179–190.

6. Adegboye, F.O.; Peterson, A.A.; Sharma, R.K.; Stephan, S.J.; Patel, P.N.; Yang, S.F. Applications of Artificial Intelligence in Facial Plastic and Reconstructive Surgery: A Narrative Review. Facial Plast. Surg. Aesthet. Med. 2025, 27, 275–281.

7. Park, J.A.; Moon, J.H.; Lee, J.M.; Cho, S.J.; Seo, B.M.; Donatelli, R.E.; Lee, S.J. Does artificial intelligence predict orthognathic surgical outcomes better than conventional linear regression methods? Angle Orthod. 2024, 94, 549–556.

8. Swennen, G.R. Timing of three-dimensional virtual treatment planning of orthognathic surgery: A prospective single-surgeon evaluation on 350 consecutive cases. Oral. Maxillofac. Surg. Clin. N. Am. 2014, 26, 475–485.

9. Chen, Z.; Mo, S.; Fan, X.; You, Y.; Ye, G.; Zhou, N. A Meta-analysis and Systematic Review Comparing the Effectiveness of Traditional and Virtual Surgical Planning for Orthognathic Surgery: Based on Randomized Clinical Trials. J. Oral. Maxillofac. Surg. 2021, 79, e1–e471.

10. Almarhoumi, A.A. Accuracy of Artificial Intelligence in Predicting Facial Changes Post-Orthognathic Surgery: A Comprehensive Scoping Review. J. Clin. Exp. Dent. 2024, 16, e624–e633.

11. Zilio, C.; Tel, A.; Perrotti, G.; Testori, T.; Sembronio, S.; Robiony, M. Validation of ‘total face approach’ (TFA) three-dimensional cephalometry for the diagnosis of dentofacial dysmorphisms and correlation with clinical diagnosis. Int. J. Oral. Maxillofac. Surg. 2025, 54, 420–429.

12. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 10684–10695.

13. Solow, B.; Tallgren, A. Natural head position in standing subjects. Acta Odontol. Scand. 1971, 29, 591–607.

14. Lundström, A.; Lundström, F.; Lebret, L.M.; Moorrees, C.F. Natural head position and natural head orientation: Basic considerations in cephalometric analysis and research. Eur. J. Orthod. 1995, 17, 111–120.

15. Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

16. Arnett, G.W.; Bergman, R.T. Facial keys to orthodontic diagnosis and treatment planning. Part I. Am. J. Orthod. Dentofacial Orthop. 1993, 103, 299–312.

17. Mohamed, W.V.; Perenack, J.D. Aesthetic adjuncts with orthognathic surgery. Oral. Maxillofac. Surg. Clin. N. Am. 2014, 26, 573–585.

18. Varazzani, A.; Tognin, L.; Corre, P.; Bouletreau, P.; Perrin, J.P.; Menapace, G.; Bergonzani, M.; Pedrazzi, G.; Anghinoni, M.; Poli, T. Virtual surgical planning in orthognathic surgery: A prospective evaluation of postoperative accuracy. J. Stomatol. Oral. Maxillofac. Surg. 2025, 126, 102025.

19. Abbate, V.; Committeri, U.; Troise, S.; Bonavolontà, P.; Vaira, L.A.; Gabriele, G.; Biglioli, F.; Tarabbia, F.; Califano, L.; Dell’Aversana Orabona, G. Virtual Surgical Reduction in Atrophic Edentulous Mandible Fractures: A Novel Approach Based on “in House” Digital Work-Flow. Appl. Sci. 2023, 13, 1474.

20. Barretto, M.D.A.; Melhem-Elias, F.; Deboni, M.C.Z. The untold history of planning in orthognathic surgery: A narrative review from the beginning to virtual surgical simulation. J. Stomatol. Oral. Maxillofac. Surg. 2022, 123, e251–e259.

21. Bertossi, D.; Albanese, M.; Mortellaro, C.; Malchiodi, L.; Kumar, N.; Nocini, R.; Nocini, P.F. Osteotomy in Genioplasty by Piezosurgery. J. Craniofac Surg. 2018, 29, 2156–2159.

22. Friscia, M.; Seidita, F.; Committeri, U.; Troise, S.; Abbate, V.; Bonavolontà, P.; Orabona, G.D.; Califano, L. Efficacy of Hilotherapy face mask in improving the trend of edema after orthognathic surgery: A 3D analysis of the face using a facial scan app for iPhone. Oral. Maxillofac. Surg. 2022, 26, 485–490.

23. Huang, A.; Fabi, S. Algorithmic Beauty: The New Beauty Standard. J. Drugs Dermatol. 2024, 23, 742–746.

24. Ioi, H.; Nakata, S.; Nakasima, A.; Counts, A.L. Comparison of cephalometric norms between Japanese and Caucasian adults in antero-posterior and vertical dimension. Eur. J. Orthod. 2007, 29, 493–499.

25. Lee, J.J.; Ramirez, S.G.; Will, M.J. Gender and racial variations in cephalometric analysis. Otolaryngol. Head Neck Surg. 1997, 117, 326–329.

26. Jain, P.; Kalra, J.P. Soft tissue cephalometric norms for a North Indian population group using Legan and Burstone analysis. Int. J. Oral. Maxillofac. Surg. 2011, 40, 255–259.

27. Bertossi, D.; Malchiodi, L.; Albanese, M.; Nocini, R.; Nocini, P. Nonsurgical Rhinoplasty With the Novel Hyaluronic Acid Filler VYC-25L: Results Using a Nasal Grid Approach. Aesthet. Surg. J. 2021, 41, NP512–NP520.

28. Nocini, R.; Lippi, G.; Mattiuzzi, C. Periodontal disease: The portrait of an epidemic. J. Public Health Emerg. 2020, 4, 10.

29. Wong, K.F.; Lam, X.Y.; Jiang, Y.; Yeung, A.W.K.; Lin, Y. Artificial intelligence in orthodontics and orthognathic surgery: A bibliometric analysis of the 100 most-cited articles. Head. Face Med. 2023, 19, 38.

30. Committeri, U.; Monarchi, G.; Gilli, M.; Caso, A.R.; Sacchi, F.; Abbate, V.; Troise, S.; Consorti, G.; Giovacchini, F.; Mitro, V.; et al. Artificial Intelligence in the Surgery-First Approach: Harnessing Deep Learning for Enhanced Condylar Reshaping Analysis: A Retrospective Study. Life 2025, 15, 134.

31. Iglesias, J.E.; Billot, B.; Balbastre, Y.; Magdamo, C.; Arnold, S.E.; Das, S.; Edlow, B.; Alexander, D.C.; Golland, P.; Fischl, B. SynthSR: A public AI tool to turn heterogeneous clinical brain scans into high-resolution T1-weighted images for 3D morphometry. Sci. Adv. 2023, 9, eadd3607.

32. Kläser, K.; Varsavsky, T.; Markiewicz, P.; Vercauteren, T.; Hammers, A.; Atkinson, D.; Thielemans, K.; Hutton, B.; Cardoso, M.J.; Ourselin, S. Imitation learning for improved 3D PET/MR attenuation correction. Med. Image Anal. 2021, 71, 102079.

33. Huijben, E.M.; Terpstra, M.L.; Galapon, A., Jr.; Pai, S.; Thummerer, A.; Koopmans, P.; Afonso, M.; van Eijnatten, M.; Gurney-Champion, O.; Chen, Z.; et al. Generating synthetic computed tomography for radiotherapy: SynthRAD2023 challenge report. Med. Image Anal. 2024, 97, 103276.

34. Salehinejad, H.; Colak, E.; Dowdell, T.; Barfett, J.; Valaee, S. Synthesizing chest X-ray pathology for training deep convolutional neural networks. IEEE Trans. Med. Imaging 2018, 38, 1197–1206.

35. Chen, Q.; Chen, X.; Song, H.; Xiong, Z.; Yuille, A.; Wei, C.; Zhou, Z. Towards generalizable tumor synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 11147–11158.

36. Dons, F.; Mulier, D.; Maleux, O.; Shaheen, E.; Politis, C. Body dysmorphic disorder (BDD) in the orthodontic and orthognathic setting: A systematic review. J. Stomatol. Oral Maxillofac. Surg. 2022, 123, e145–e152.

| Prompt | Female Patients | Male Patients | Transformation Profile Keyword |
|---|---|---|---|
| 1 | “redraw the profile using the sideview of the most attractive actresses as a reference” | “redraw the profile using the sideview of the most attractive actors as a reference” | Actors/Actresses |
| 2 | “redraw the profile using the sideview of the women with the most followers on Instagram as a reference” | “redraw the profile using the sideview of the men with the most followers on Instagram as a reference” | |
| 3 | “redraw the profile using the sideview of the most of the sexiest women as a reference” | “redraw the profile using the sideview of the most of the sexiest men as a reference” | Sexiest |
| 4 | “redraw the profile using the sideview of the most beautiful runway models as a reference” | “redraw the profile using the sideview of the most beautiful runway models as a reference” | Models |
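All eight prompts in the table share one template and differ only in the reference group, so they can be generated rather than typed. The helper below is a hypothetical sketch that reproduces the tabulated wording verbatim; it does not call any inpainting model, because the tool used to apply the prompts and its masking step are not described in this section.

```python
# Reference groups quoted verbatim from the prompt table: (female, male).
REFERENCE_GROUPS = {
    1: ("the most attractive actresses", "the most attractive actors"),
    2: ("the women with the most followers on Instagram",
        "the men with the most followers on Instagram"),
    3: ("the most of the sexiest women", "the most of the sexiest men"),
    4: ("the most beautiful runway models", "the most beautiful runway models"),
}


def build_prompt(prompt_id: int, sex: str) -> str:
    """Hypothetical helper: fill the shared template for one prompt and patient sex."""
    female, male = REFERENCE_GROUPS[prompt_id]
    if sex == "F":
        group = female
    elif sex == "M":
        group = male
    else:
        raise ValueError(f"sex must be 'F' or 'M', got {sex!r}")
    return f"redraw the profile using the sideview of {group} as a reference"


# Usage: the four prompts for one female patient.
for pid in sorted(REFERENCE_GROUPS):
    print(pid, build_prompt(pid, "F"))
```

Generating prompts from a template keeps the wording identical across patients, which matters when the text itself is the experimental variable.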

Expert Group (EG)

| Answer | Surgeon 1 | Surgeon 2 | Surgeon 3 | Surgeon 4 | Surgeon 5 | Surgeon 6 | Surgeon 7 | Surgeon 8 | Total |
|---|---|---|---|---|---|---|---|---|---|
| NO | 5 (5.6%) | 16 (18%) | 4 (4.5%) | 1 (1.1%) | 1 (1.1%) | 9 (10%) | 6 (6.7%) | 1 (1.1%) | 5.2% |
| YES | 84 (94%) | 73 (82%) | 85 (96%) | 88 (99%) | 88 (99%) | 80 (90%) | 83 (93%) | 88 (99%) | 94% |

General population Group (GpG)

| Answer | Extern 1 | Extern 2 | Extern 3 | Extern 4 | Extern 5 | Extern 6 | Total |
|---|---|---|---|---|---|---|---|
| NO | 19 (21%) | 16 (18%) | 11 (12%) | 14 (16%) | 13 (15%) | 7 (7.9%) | 11.5% |
| YES | 70 (79%) | 73 (82%) | 78 (88%) | 75 (84%) | 76 (85%) | 82 (92%) | 85% |

Patient-specific Group (PsG)

| Answer | Average |
|---|---|
| NO | 19 (21%) |
| YES | 70 (79%) |
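As a hedged illustration of how a group-level figure can be derived from the per-rater counts in the tables above, the snippet below pools each group's YES counts into a single proportion (variable names are hypothetical). Because every rater scored the same 89 profiles, this pooled value equals the unweighted mean of the per-rater percentages.

```python
# Per-rater YES counts copied from the EG and GpG tables; each rater scored 89 profiles.
EG_YES = [84, 73, 85, 88, 88, 80, 83, 88]   # Surgeons 1-8
GPG_YES = [70, 73, 78, 75, 76, 82]          # Externs 1-6
PROFILES_PER_RATER = 89


def pooled_rate(counts, n_per_rater=PROFILES_PER_RATER):
    """Share of all ratings in a group that fall in the counted answer category."""
    return sum(counts) / (n_per_rater * len(counts))


for name, yes in (("EG", EG_YES), ("GpG", GPG_YES)):
    p_yes = pooled_rate(yes)
    print(f"{name}: YES {p_yes:.1%}, NO {1 - p_yes:.1%}")
```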

| Group | Rater | Rater Answer | PsG: NO | PsG: YES | Raw Agreement | Cohen's κ |
|---|---|---|---|---|---|---|
| Expert Group (EG) | Surgeon 1 | NO | 3 | 2 | 79.7% | 0.18 |
| | | YES | 16 | 68 | | |
| | Surgeon 2 | NO | 6 | 10 | 74.2% | 0.18 |
| | | YES | 13 | 60 | | |
| | Surgeon 3 | NO | 3 | 1 | 80.8% | 0.2 |
| | | YES | 16 | 69 | | |
| | Surgeon 4 | NO | 0 | 1 | 77.5% | <0.01 |
| | | YES | 19 | 69 | | |
| | Surgeon 5 | NO | 1 | 0 | 79.8% | 0.08 |
| | | YES | 18 | 70 | | |
| | Surgeon 6 | NO | 4 | 5 | 77.5% | 0.17 |
| | | YES | 15 | 65 | | |
| | Surgeon 7 | NO | 4 | 2 | 80.9% | 0.24 |
| | | YES | 15 | 68 | | |
| | Surgeon 8 | NO | 1 | 0 | 79.8% | 0.08 |
| | | YES | 18 | 70 | | |
| General population Group (GpG) | Extern 1 | NO | 6 | 10 | 74.2% | 0.18 |
| | | YES | 13 | 60 | | |
| | Extern 2 | NO | 6 | 5 | 79.7% | 0.29 |
| | | YES | 13 | 65 | | |
| | Extern 3 | NO | 6 | 8 | 76.3% | 0.22 |
| | | YES | 13 | 62 | | |
| | Extern 4 | NO | 6 | 7 | 77.4% | 0.24 |
| | | YES | 13 | 63 | | |
| | Extern 5 | NO | 4 | 3 | 79.7% | 0.22 |
| | | YES | 15 | 67 | | |
| | Extern 6 | NO | 0 | 0 | 78.7% | <0.01 |
| | | YES | 19 | 70 | | |
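Each rater's block in the agreement table is a 2 × 2 cross-tabulation of that rater's answers (rows) against the PsG answers (columns). The sketch below, with hypothetical function names, recomputes raw agreement p_o and Cohen's κ = (p_o − p_e)/(1 − p_e), where p_e is the chance agreement implied by the marginal totals. One common way to read κ is the Landis and Koch scale [15], encoded here as an illustrative helper.

```python
def cohen_kappa_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Raw agreement and Cohen's kappa for a 2x2 table.

    a = rater NO / PsG NO,   b = rater NO / PsG YES,
    c = rater YES / PsG NO,  d = rater YES / PsG YES.
    """
    n = a + b + c + d
    p_o = (a + d) / n                                # observed agreement
    rater_no, rater_yes = (a + b) / n, (c + d) / n   # row marginals
    psg_no, psg_yes = (a + c) / n, (b + d) / n       # column marginals
    p_e = rater_no * psg_no + rater_yes * psg_yes    # agreement expected by chance
    if p_e == 1.0:
        return p_o, float("nan")                     # kappa undefined when chance agreement is 1
    return p_o, (p_o - p_e) / (1 - p_e)


def landis_koch(kappa: float) -> str:
    """Descriptive bands from Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Usage with Surgeon 2's cells from the table.
p_o, k = cohen_kappa_2x2(6, 10, 13, 60)
print(f"raw agreement {p_o:.1%}, kappa {k:.2f} ({landis_koch(k)})")
# prints: raw agreement 74.2%, kappa 0.18 (slight)
```

The example shows why high raw agreement can coexist with low κ: when most answers in both groups are YES, much of that agreement is expected by chance alone.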

| Transformation Profile | PsG (N = 89), Mean (SD) | EG (N = 89), Mean (SD) | GpG (N = 89), Mean (SD) | Total |
|---|---|---|---|---|
| Actor/Actress | 2.79 (1.13) | 3.36 (0.72) | 3.04 (0.69) | 9.19 |
| | 2.57 (1.14) | 3.31 (0.69) | 2.92 (0.66) | 8.8 |
| Sexiest | 2.37 (1.00) | 3.25 (0.69) | 2.89 (0.63) | 5.76 |
| Model | 2.79 (1.27) | 3.26 (0.71) | 2.88 (0.65) | 9.27 |

| Transformation Profile | Paired PsG/EG Difference, Mean (SD) | p-Value | Paired PsG/GpG Difference, Mean (SD) | p-Value | EG/GpG Difference, Mean (SD) | p-Value | Total |
|---|---|---|---|---|---|---|---|
| Actor/Actress | +0.57 (1.24) | <0.001 | +0.26 (1.28) | 0.084 | +0.31 (0.56) | <0.001 | +1.14 (3.08) |
| | +0.73 (1.16) | <0.001 | +0.35 (1.08) | 0.005 | +0.39 (0.55) | <0.001 | +1.47 (2.79) |
| Sexiest | +0.88 (1.02) | <0.001 | +0.52 (1.09) | <0.001 | +0.37 (0.58) | <0.001 | +1.77 (2.69) |
| Model | +0.47 (1.33) | 0.003 | +0.09 (1.40) | 0.531 | +0.38 (0.56) | <0.001 | +0.94 (3.29) |
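Assuming all three groups scored the same 89 profiles in a balanced paired design, the mean of the paired differences equals the difference between group means, so the Mean columns can be cross-checked against the score table to within the rounding of its two-decimal values. The same algebra explains the Total column: (EG − PsG) + (GpG − PsG) + (EG − GpG) = 2(EG − PsG), i.e. twice the PsG/EG difference. The sketch below (hypothetical names; the row-2 keyword is missing from the source, so "Prompt 2" is a placeholder) performs both checks. It does not attempt the p-values, since the statistical test is not specified in this section.

```python
# Group means (PsG, EG, GpG) copied from the score table.
# "Prompt 2" is a placeholder: the keyword for that row is missing in the source.
MEANS = {
    "Actor/Actress": (2.79, 3.36, 3.04),
    "Prompt 2": (2.57, 3.31, 2.92),
    "Sexiest": (2.37, 3.25, 2.89),
    "Model": (2.79, 3.26, 2.88),
}


def mean_differences(psg, eg, gpg):
    """Return (EG - PsG, GpG - PsG, EG - GpG) and their sum."""
    diffs = (eg - psg, gpg - psg, eg - gpg)
    return diffs, sum(diffs)


for profile, means in MEANS.items():
    diffs, total = mean_differences(*means)
    # The sum always equals 2 * (EG - PsG), because the GpG terms cancel.
    print(profile, [round(d, 2) for d in diffs], round(total, 2))
```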

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Lithuanian University of Health Sciences. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abbate, V.; Carraturo, E.; Benfenati, D.; Tramontano, S.; Vaira, L.A.; Troise, S.; Canzi, G.; Novelli, G.; Pacella, D.; Russo, C.; et al. Text-Guided Inpainting for Aesthetic Prediction in Orthofacial Surgery: A Patient-Centered AI Approach. Medicina 2025, 61, 2075. https://doi.org/10.3390/medicina61122075

Abbate V, Carraturo E, Benfenati D, Tramontano S, Vaira LA, Troise S, Canzi G, Novelli G, Pacella D, Russo C, et al. Text-Guided Inpainting for Aesthetic Prediction in Orthofacial Surgery: A Patient-Centered AI Approach. Medicina. 2025; 61(12):2075. https://doi.org/10.3390/medicina61122075

Chicago/Turabian Style: Abbate, Vincenzo, Emanuele Carraturo, Domenico Benfenati, Sara Tramontano, Luigi Angelo Vaira, Stefania Troise, Gabriele Canzi, Giorgio Novelli, Daniela Pacella, Cristiano Russo, et al. 2025. "Text-Guided Inpainting for Aesthetic Prediction in Orthofacial Surgery: A Patient-Centered AI Approach" Medicina 61, no. 12: 2075. https://doi.org/10.3390/medicina61122075

APA Style: Abbate, V., Carraturo, E., Benfenati, D., Tramontano, S., Vaira, L. A., Troise, S., Canzi, G., Novelli, G., Pacella, D., Russo, C., Tommasino, C., Nocini, R., Rinaldi, A. M., & Dell’Aversana Orabona, G. (2025). Text-Guided Inpainting for Aesthetic Prediction in Orthofacial Surgery: A Patient-Centered AI Approach. Medicina, 61(12), 2075. https://doi.org/10.3390/medicina61122075