Machine Learning Classification of Cognitive Status in Community-Dwelling Sarcopenic Women: A SHAP-Based Analysis of Physical Activity and Anthropometric Factors

Abstract

1. Introduction

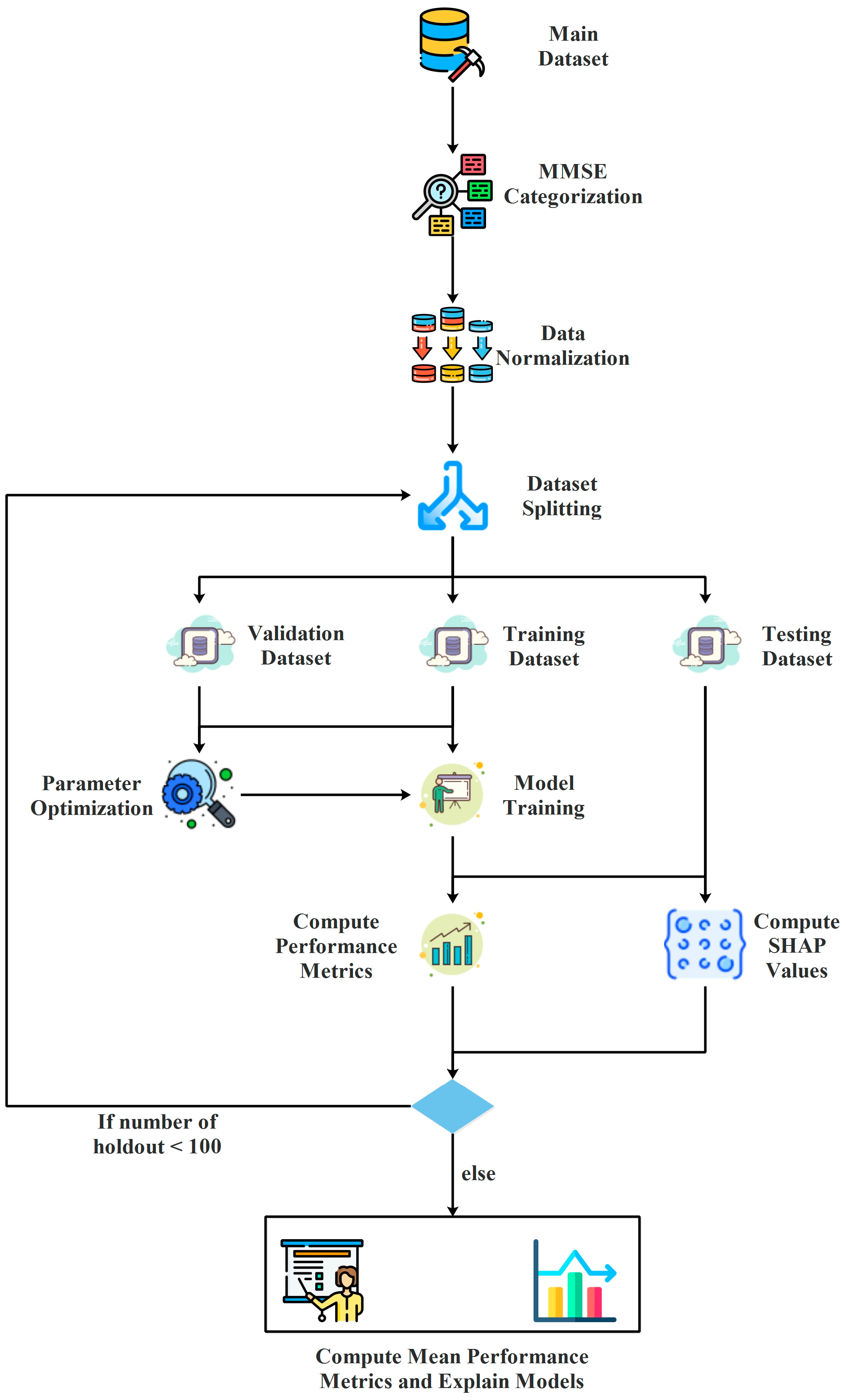

2. Materials and Methods

2.1. Population, Dataset, and Ethical Procedures

- Physical Activity Factors
  - Moderate physical activity duration (moderatePA minutes): Weekly duration of moderate-intensity physical activity (in minutes).
  - Walking days (walk days): Number of days per week spent walking.
  - Sitting time (sitting time minutes, 7 days): Total weekly sitting time (in minutes), as an indicator of sedentary behavior.
- Anthropometric Factors
  - Age: Age of participants (in years).
  - Body Mass Index (BMI): Calculated as weight divided by the square of height (kg/m²).
  - Weight: Body weight of participants (in kg).
  - Height: Height of participants (in cm).
- Types of Cognitive Impairment
  - Severe cognitive impairment: Individuals with MMSE scores ≤ 17.
  - Mild cognitive impairment: Individuals with MMSE scores > 17.
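The derived feature and the target defined in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' preprocessing code: the `Participant` class, its field names, and the example values are hypothetical. Because height is recorded in cm and BMI is expressed in kg/m², the sketch converts height to metres before squaring.

```python
from dataclasses import dataclass

# Cutoff taken from the variable list: MMSE <= 17 is labeled severe.
SEVERE_MMSE_CUTOFF = 17


@dataclass
class Participant:
    # Hypothetical field names; units follow the variable list.
    age_years: float
    weight_kg: float
    height_cm: float
    mmse: int


def bmi(weight_kg: float, height_cm: float) -> float:
    """Return BMI in kg/m^2, converting height from cm to m first."""
    height_m = height_cm / 100.0
    return weight_kg / height_m ** 2


def cognitive_label(mmse: int) -> str:
    """Return 'severe' for MMSE <= 17 and 'mild' for MMSE > 17."""
    return "severe" if mmse <= SEVERE_MMSE_CUTOFF else "mild"


# Made-up participant, used only to exercise the two functions.
p = Participant(age_years=72, weight_kg=60.0, height_cm=160.0, mmse=17)
print(round(bmi(p.weight_kg, p.height_cm), 2), cognitive_label(p.mmse))
```

Note that a score of exactly 17 falls in the severe group, since the cutoff is inclusive on that side.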

2.2. Classification Models

2.3. Performance Metrics

2.4. Bayesian Optimization

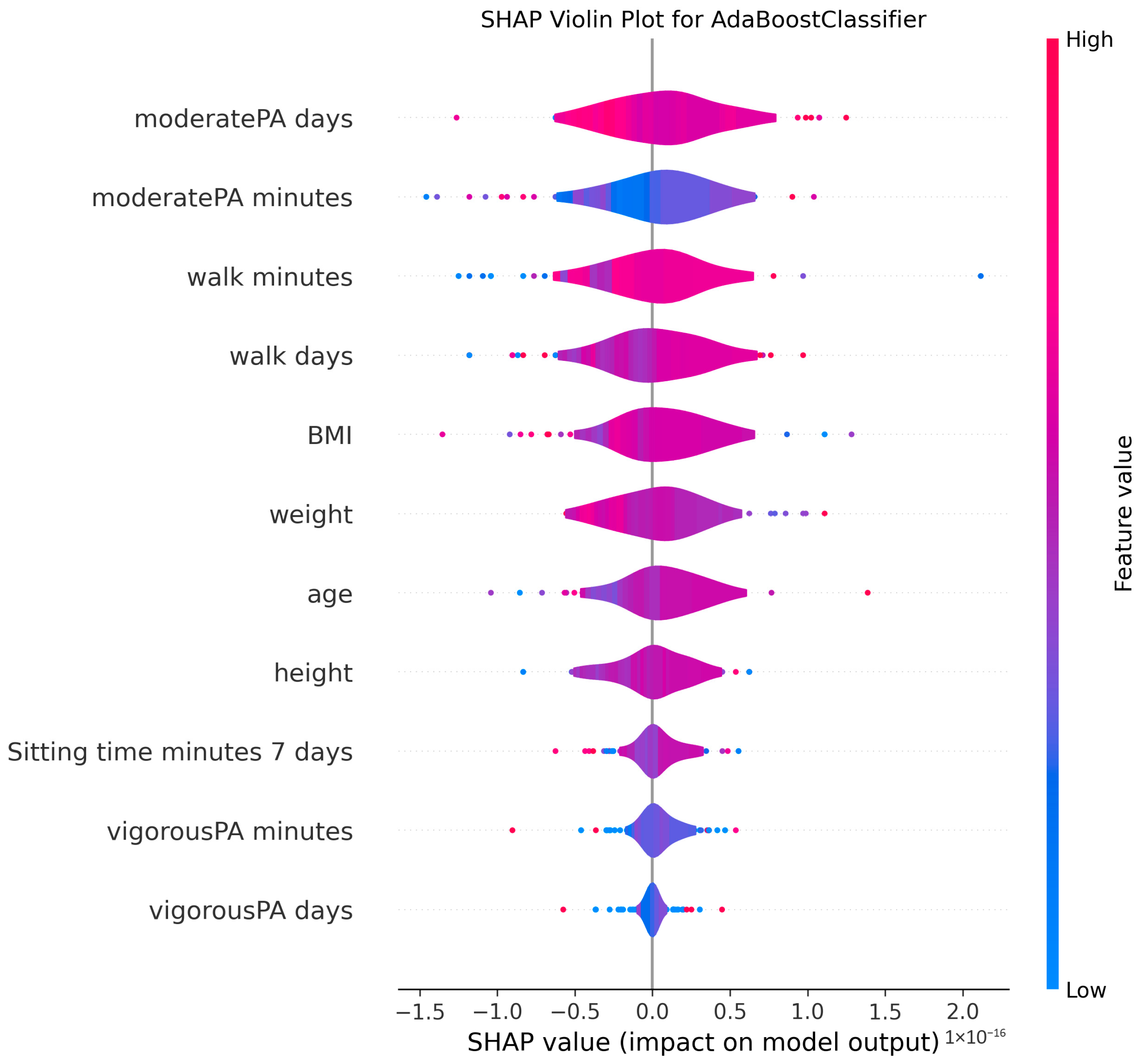

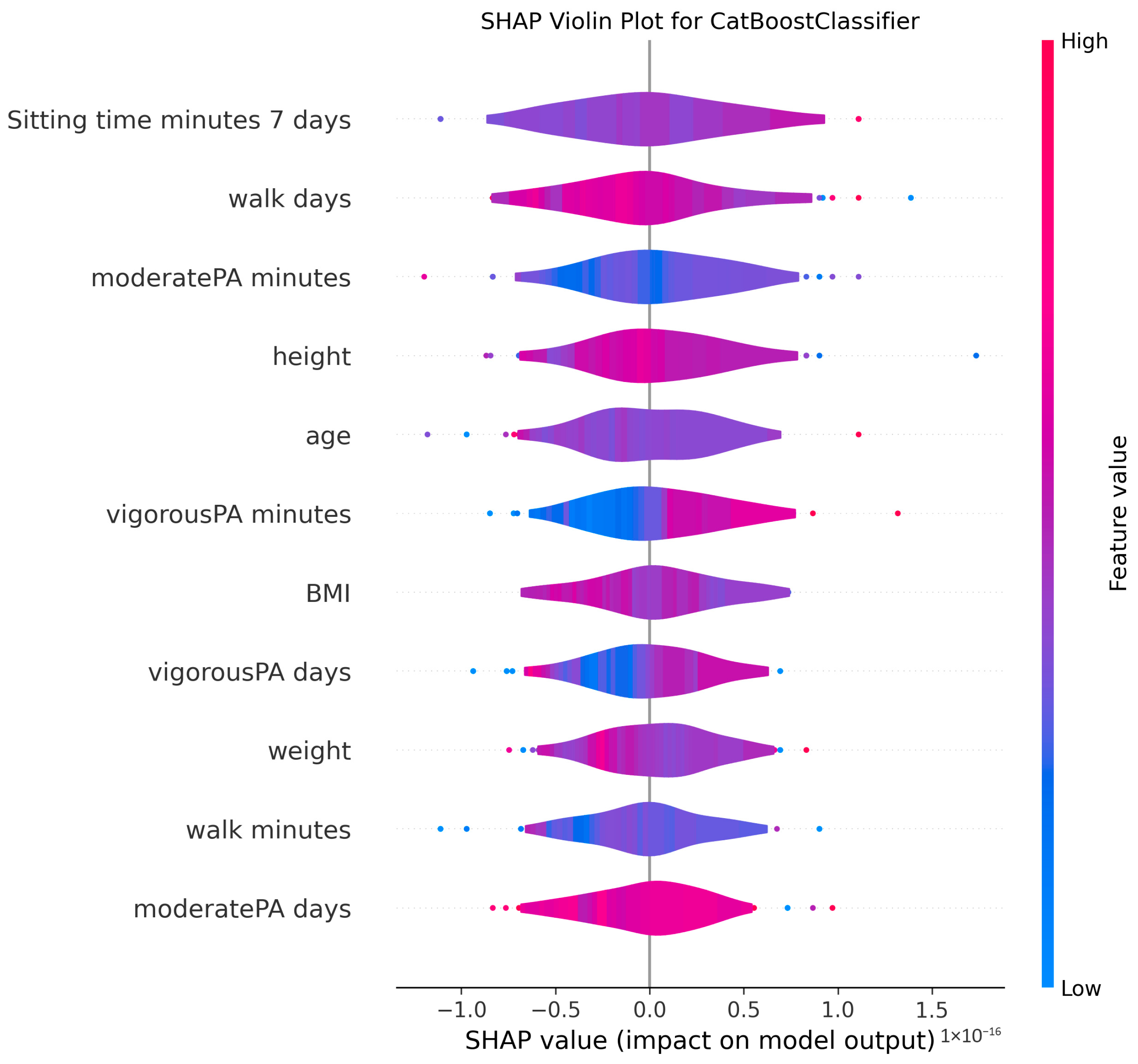

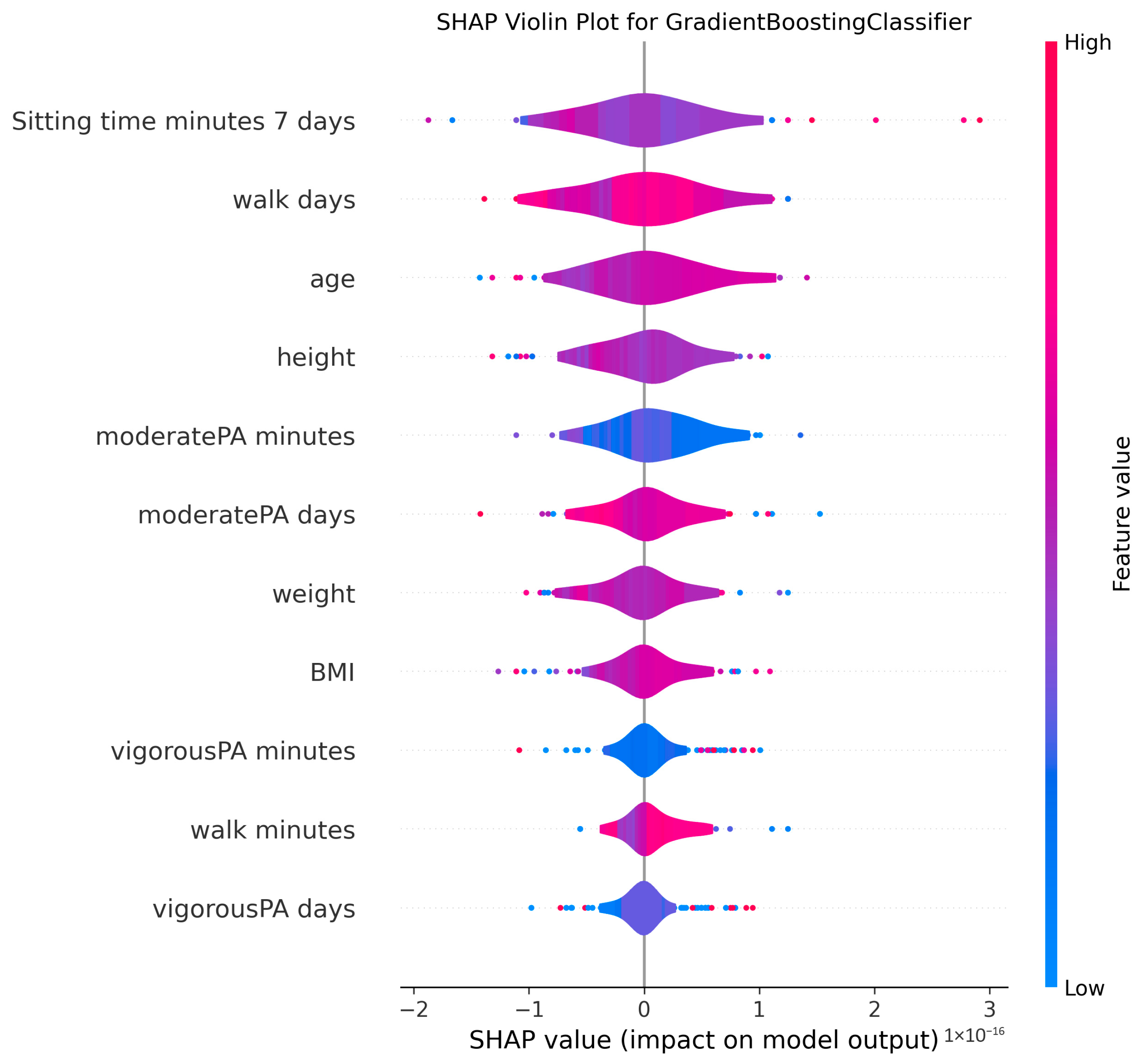

2.5. SHapley Additive exPlanations

3. Results

3.1. Data Preparation

3.2. Hyperparameter Optimization

3.3. Model Training and Evaluation

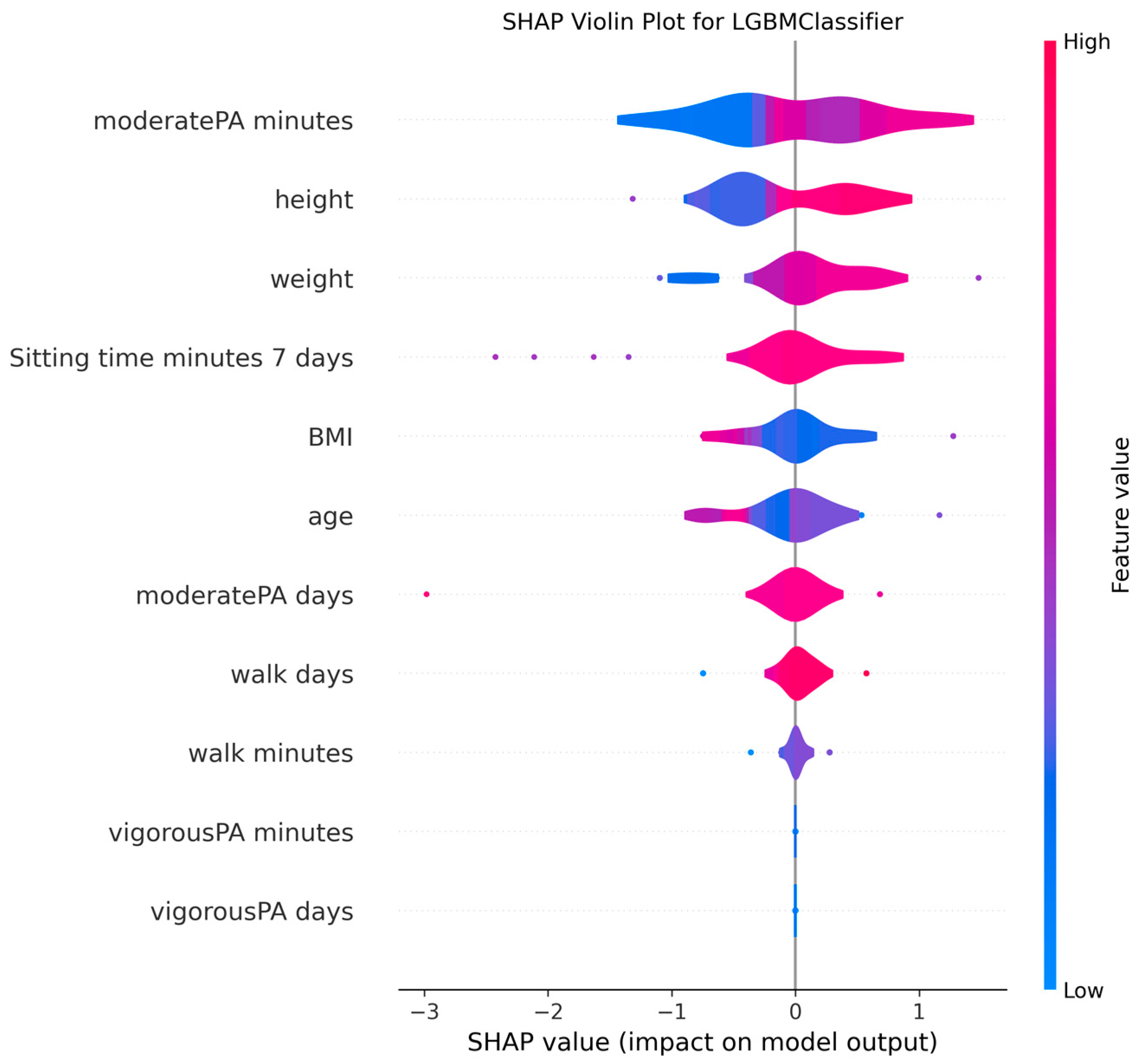

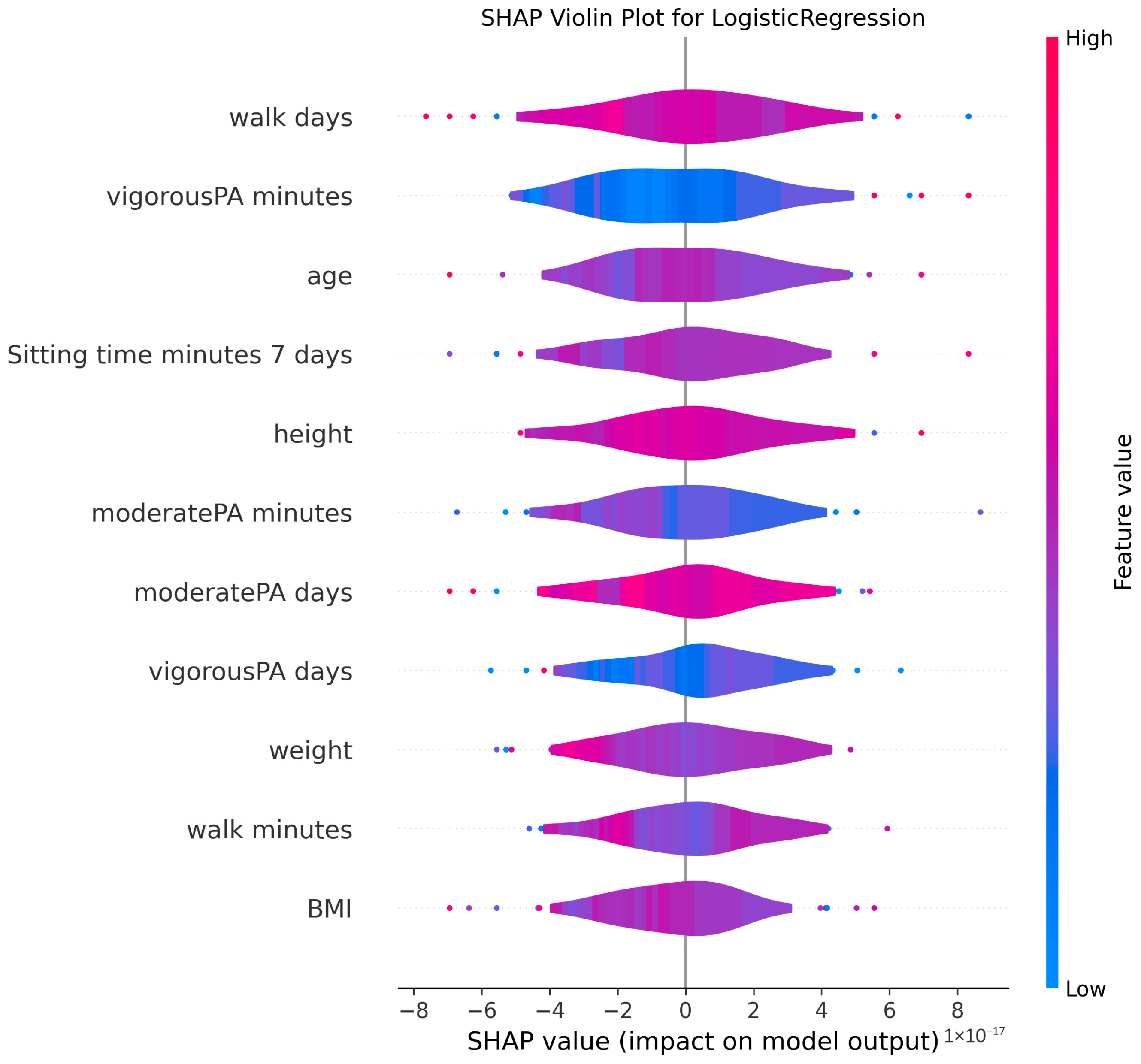

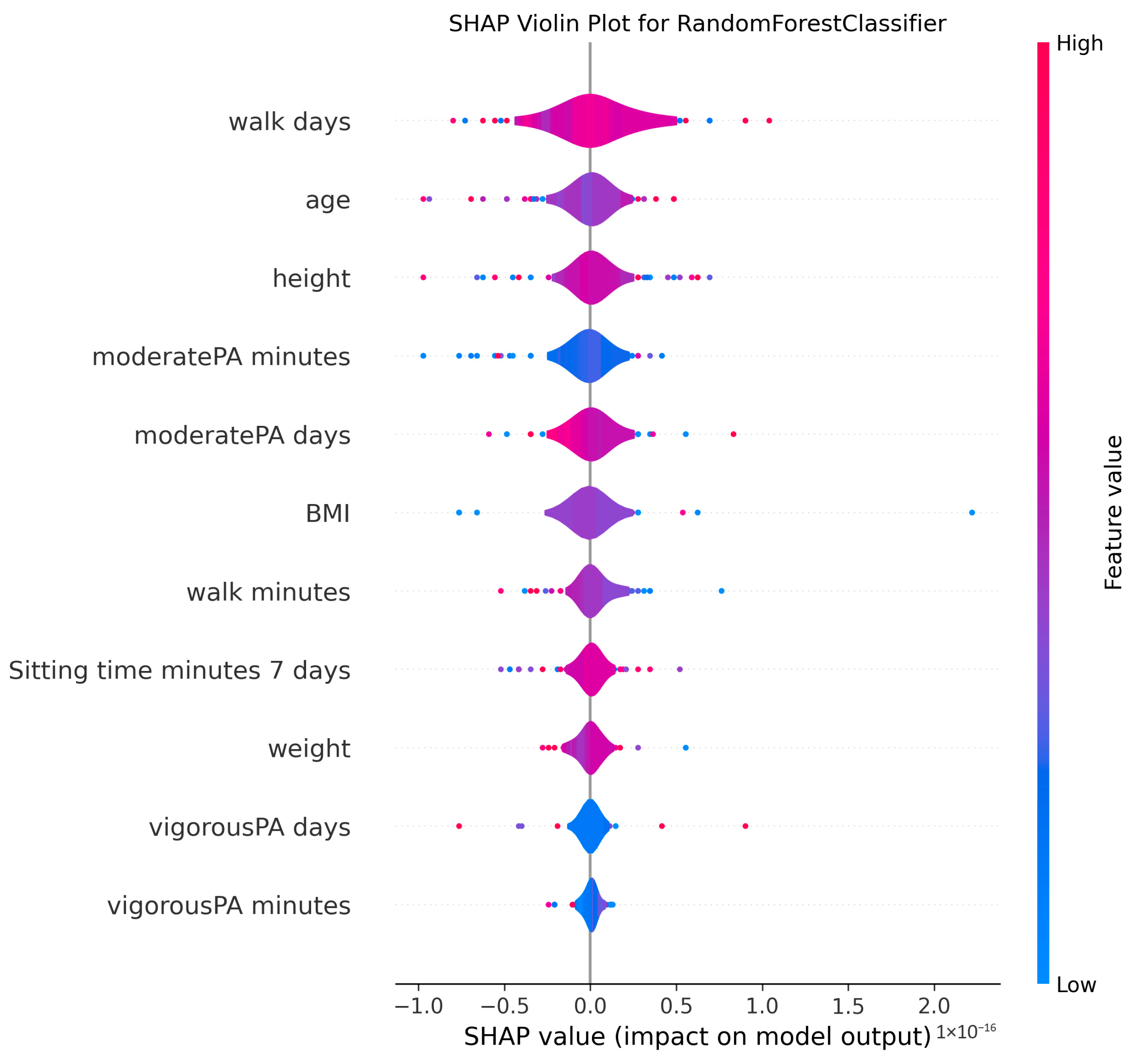

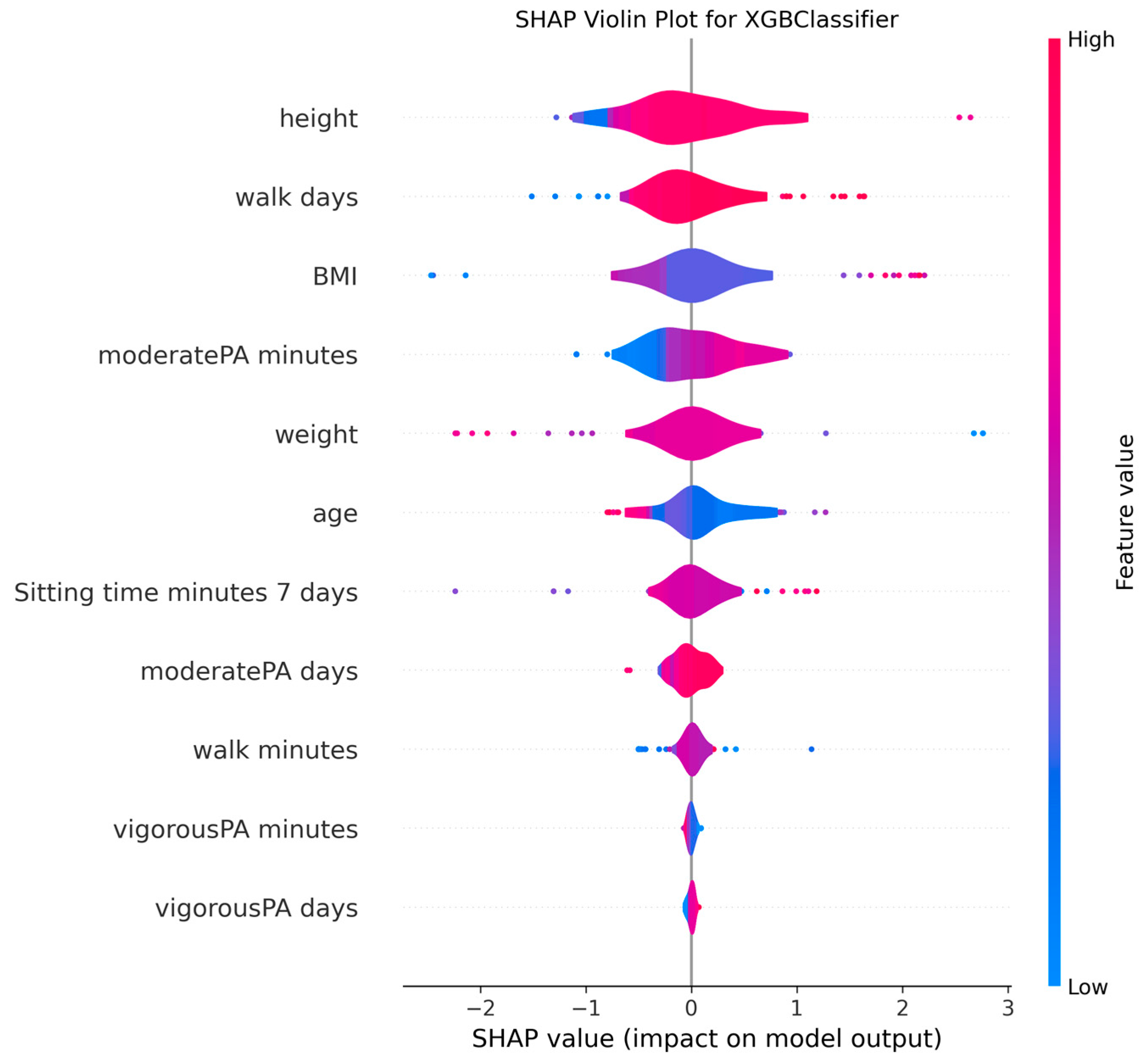

3.4. Model Explanations

4. Discussion

4.1. Interpretation of Machine Learning Performance in MMSE Classification

4.2. Clinical Significance of Physical Activity and Sedentary Behavior

4.3. Anthropometric Factors and Cognitive Health

4.4. Methodological Considerations and Model Interpretability

4.5. Limitations and Future Research Directions

4.6. Clinical and Public Health Implications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model Name | Hyperparameter Name | Hyperparameter Type | Hyperparameter Space |
|---|---|---|---|
| MLP | hidden_layer_sizes | Categorical | 50 to 2000 (step = 50) |
| | alpha | Real | High = 10⁻¹, Low = 10⁻⁴ |
| | learning_rate | Real | High = 10⁻¹, Low = 10⁻⁴ |
| CatBoost | learning_rate | Real | High = 0.3, Low = 10⁻³ |
| | iterations | Integer | High = 1500, Low = 100 |
| | depth | Integer | High = 10, Low = 4 |
| | l2_leaf_reg | Real | High = 10, Low = 10⁻³ |
| | loss_function | Categorical | ‘Logloss’, ‘CrossEntropy’ |
| LightGBM | max_depth | Integer | High = 10, Low = 3 |
| | learning_rate | Real | High = 0.3, Low = 10⁻² |
| | n_estimators | Integer | High = 500, Low = 50 |
| | subsample | Real | High = 1.0, Low = 0.5 |
| | colsample_bytree | Real | High = 1.0, Low = 0.5 |
| | min_child_samples | Integer | High = 100, Low = 20 |
| | reg_alpha | Real | High = 10, Low = 10⁻³ |
| | reg_lambda | Real | High = 10, Low = 10⁻³ |
| XGBoost | max_depth | Integer | High = 10, Low = 3 |
| | learning_rate | Real | High = 0.3, Low = 0.01 |
| | n_estimators | Integer | High = 1500, Low = 50 |
| | subsample | Real | High = 1, Low = 0.5 |
| | colsample_bytree | Real | High = 1, Low = 0.5 |
| | gamma | Real | High = 10, Low = 0 |
| | min_child_weight | Integer | High = 10, Low = 1 |
| RF | n_estimators | Integer | High = 1500, Low = 10 |
| | max_depth | Integer | High = 20, Low = 2 |
| | min_samples_split | Real | High = 0.99, Low = 0.01 |
| | max_features | Real | High = 0.5, Low = 0.001 |
| GB | n_estimators | Integer | High = 1500, Low = 50 |
| | learning_rate | Real | High = 0.3, Low = 0.001 |
| | max_depth | Integer | High = 30, Low = 1 |
| | min_samples_split | Integer | High = 20, Low = 2 |
| | min_samples_leaf | Integer | High = 20, Low = 1 |
| LR | C | Real | High = 100, Low = 10⁻⁶ |
| | solver | Categorical | ‘lbfgs’, ‘liblinear’ |
| AdaBoost | n_estimators | Integer | High = 200, Low = 10 |
| | learning_rate | Real | High = 2, Low = 0.01 |
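A search space like the one in the table above can be written down as plain data. The sketch below encodes only the CatBoost rows, using three small stand-in classes (`Real`, `Integer`, `Categorical`) that are hypothetical and merely mirror the table's type column; the table does not name the optimization library or the sampling prior. The uniform draw shown here is ordinary random sampling, used only to show how one candidate configuration is formed. It is not Bayesian optimization itself, which would choose each new candidate based on earlier evaluations.

```python
import random
from dataclasses import dataclass
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class Real:
    low: float
    high: float

    def sample(self, rng: random.Random) -> float:
        # Uniform prior is an assumption; the source does not state one.
        return rng.uniform(self.low, self.high)

    def contains(self, value: Any) -> bool:
        return self.low <= value <= self.high


@dataclass(frozen=True)
class Integer:
    low: int
    high: int

    def sample(self, rng: random.Random) -> int:
        # randint includes both endpoints.
        return rng.randint(self.low, self.high)

    def contains(self, value: Any) -> bool:
        return isinstance(value, int) and self.low <= value <= self.high


@dataclass(frozen=True)
class Categorical:
    choices: Tuple[Any, ...]

    def sample(self, rng: random.Random) -> Any:
        return rng.choice(self.choices)

    def contains(self, value: Any) -> bool:
        return value in self.choices


# CatBoost rows of the hyperparameter table (Low and High as listed).
CATBOOST_SPACE = {
    "learning_rate": Real(1e-3, 0.3),
    "iterations": Integer(100, 1500),
    "depth": Integer(4, 10),
    "l2_leaf_reg": Real(1e-3, 10.0),
    "loss_function": Categorical(("Logloss", "CrossEntropy")),
}


def sample_config(space: Dict[str, Any], seed: int) -> Dict[str, Any]:
    """Draw one candidate configuration; the seed makes the draw repeatable."""
    rng = random.Random(seed)
    return {name: dim.sample(rng) for name, dim in space.items()}


def in_bounds(space: Dict[str, Any], config: Dict[str, Any]) -> bool:
    """Check that every value lies inside its declared range or choice set."""
    return all(space[name].contains(value) for name, value in config.items())


candidate = sample_config(CATBOOST_SPACE, seed=0)
print(candidate, in_bounds(CATBOOST_SPACE, candidate))
```

Keeping the bounds in one dictionary per model makes it easy to check that a candidate proposed by any optimizer stays inside the declared ranges.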

| Model Name | Weighted F1 (%) | SD (%) | Accuracy (%) | SD (%) | Precision (%) | SD (%) | Recall (%) | SD (%) | PR-AUC (%) | SD (%) | ROC-AUC (%) | SD (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MLP | 80.04 | 3.49 | 80.71 | 3.27 | 83.23 | 3.91 | 80.71 | 3.27 | 76.22 | 9.89 | 76.46 | 6.52 |
| CatBoost | 87.05 | 2.85 | 87.14 | 2.86 | 88.30 | 3.05 | 87.14 | 2.86 | 89.60 | 8.48 | 90.00 | 5.65 |
| LightGBM | 79.74 | 2.68 | 80.00 | 2.86 | 80.96 | 3.83 | 80.00 | 2.86 | 81.37 | 9.77 | 81.04 | 7.52 |
| XGBoost | 79.69 | 2.70 | 80.00 | 2.86 | 80.87 | 3.86 | 80.00 | 2.86 | 85.93 | 10.79 | 81.88 | 9.40 |
| RF | 81.84 | 6.68 | 82.14 | 6.59 | 84.99 | 6.44 | 82.14 | 6.59 | 88.34 | 9.07 | 85.94 | 7.94 |
| GB | 86.35 | 2.14 | 86.42 | 2.14 | 88.58 | 2.30 | 86.42 | 2.14 | 91.88 | 5.18 | 88.96 | 6.18 |
| LR | 77.45 | 3.69 | 77.86 | 3.85 | 78.91 | 4.86 | 77.86 | 3.85 | 80.21 | 8.51 | 78.96 | 5.39 |
| AdaBoost | 86.43 | 2.15 | 86.42 | 2.14 | 88.32 | 2.51 | 86.42 | 2.14 | 92.49 | 5.83 | 89.58 | 6.86 |
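In the table above, the accuracy (acc) and recall (rec) columns coincide for every model. That is what support-weighted recall produces by construction, since weighting each class's recall by its share of the samples sums the true positives over all classes and divides by the sample count. The sketch below assumes, without confirmation from the source, that the reported precision, recall, and F1 are support-weighted averages. The function name and the toy labels are hypothetical and serve only to make the identity visible.

```python
from collections import Counter
from typing import Dict, Sequence


def weighted_scores(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Support-weighted precision, recall, and F1, plus plain accuracy."""
    n = len(y_true)
    support = Counter(y_true)  # number of true samples per class
    totals = {"precision": 0.0, "recall": 0.0, "f1": 0.0}

    for label, n_k in support.items():
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)
        precision = tp / predicted if predicted else 0.0
        recall = tp / n_k
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0

        weight = n_k / n  # class share of the sample
        totals["precision"] += weight * precision
        totals["recall"] += weight * recall
        totals["f1"] += weight * f1

    totals["accuracy"] = sum(t == p for t, p in zip(y_true, y_pred)) / n
    return totals


# Toy labels (not study data): 6 samples of class 1, 4 of class 0.
y_true = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 1, 1, 0, 0, 1, 1]

scores = weighted_scores(y_true, y_pred)
for name, value in scores.items():
    print(f"{name}: {value:.4f}")
```

On these toy labels the weighted recall and the accuracy agree, while the weighted precision is higher. This is the same pattern as in the reported columns.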

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Lithuanian University of Health Sciences. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gormez, Y.; Yagin, F.H.; Aygun, Y.; Alzakari, S.A.; Alhussan, A.A.; Aghaei, M. Machine Learning Classification of Cognitive Status in Community-Dwelling Sarcopenic Women: A SHAP-Based Analysis of Physical Activity and Anthropometric Factors. Medicina 2025, 61, 1834. https://doi.org/10.3390/medicina61101834
