1. Introduction

Detecting forced oscillations plays a significant role in ensuring the security and stability of power systems. Forced oscillations can cause undesirable power oscillations, damage equipment, and even interrupt the power supply. If the frequency of a forced oscillation is close to that of a poorly damped natural oscillation mode, resonance can greatly amplify the oscillation energy. The amplified forced oscillation may then propagate across the entire system, potentially leading to catastrophic cascading blackouts [1,2,3].
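The resonance effect can be illustrated numerically by treating a single natural mode as a second-order system driven by a sinusoidal forcing term. This is a common textbook simplification, not a model taken from this paper, and the 0.5 Hz mode frequency and 3% damping ratio below are hypothetical values. The steady-state amplitude gain peaks at 1/(2ζ) when the forcing frequency matches the mode frequency.

```python
import math

def amplitude_gain(f_forced, f_mode, zeta):
    """Steady-state amplitude gain of one second-order mode
    x'' + 2*zeta*w_n*x' + w_n**2 * x = w_n**2 * u, driven by u = sin(2*pi*f_forced*t)."""
    r = f_forced / f_mode  # frequency ratio w / w_n (same in Hz or rad/s)
    return 1.0 / math.sqrt((1.0 - r**2) ** 2 + (2.0 * zeta * r) ** 2)

# Hypothetical poorly damped mode: 0.5 Hz with a 3% damping ratio
F_MODE, ZETA = 0.5, 0.03

for f in (0.2, 0.45, 0.5, 1.0):
    print(f"forcing at {f:.2f} Hz -> amplitude gain {amplitude_gain(f, F_MODE, ZETA):.2f}")

# At exact resonance the gain equals 1 / (2 * zeta)
print(amplitude_gain(F_MODE, F_MODE, ZETA), 1 / (2 * ZETA))
```

With such light damping, forcing near the mode frequency is amplified by an order of magnitude more than forcing well away from it.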

Unlike natural oscillations, which arise from intrinsic interactions among dynamic components within the power system, forced oscillations are usually excited by periodic external disturbances, e.g., malfunctions in generator exciters or in inverter controllers of renewable energy sources, and large-scale cyclic loads [1,2]. Therefore, the strategies for mitigating the two types differ: natural oscillations can be remedied by improving damping, whereas forced oscillations are most effectively mitigated by removing their sources [3]. Accurately distinguishing forced from natural oscillations is thus a prerequisite for choosing the correct remedial strategy.
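The qualitative difference between the two oscillation types can be made concrete with a toy simulation. This is an illustration only. The 0.8 Hz mode, 10% damping ratio, 1.5 Hz forcing, and 30 frames/s reporting rate are assumed values, and the autocorrelation check is not one of the cited detection methods. Ambient natural oscillation is modeled as a lightly damped mode excited by random load noise, and a sustained sinusoid is added for the forced case. The natural response decorrelates within a few seconds, whereas the forced component keeps the signal correlated at lags spanning many periods.

```python
import math
import random

def simulate_mode(n, fs, f_mode, zeta, forced_amp=0.0, f_forced=1.0, seed=0):
    """Ambient response of one lightly damped mode plus an optional sustained sinusoid.

    The mode is a discrete AR(2) process driven by unit white noise; its poles are the
    continuous-time poles s = -zeta*w +/- j*w*sqrt(1 - zeta**2) mapped through z = exp(s/fs).
    """
    rng = random.Random(seed)
    w = 2 * math.pi * f_mode
    radius = math.exp(-zeta * w / fs)
    angle = w * math.sqrt(1 - zeta**2) / fs
    a1, a2 = 2 * radius * math.cos(angle), -radius**2
    y1 = y2 = 0.0
    out = []
    for k in range(n):
        y = a1 * y1 + a2 * y2 + rng.gauss(0.0, 1.0)
        y2, y1 = y1, y
        out.append(y + forced_amp * math.sin(2 * math.pi * f_forced * k / fs))
    return out

def autocorr(x, lag):
    """Sample autocorrelation coefficient at a given lag (biased estimator)."""
    n = len(x)
    m = sum(x) / n
    c0 = sum((v - m) ** 2 for v in x)
    return sum((x[k] - m) * (x[k + lag] - m) for k in range(n - lag)) / c0

FS = 30  # assumed PMU reporting rate, frames per second
natural = simulate_mode(6000, FS, f_mode=0.8, zeta=0.1)
forced = simulate_mode(6000, FS, f_mode=0.8, zeta=0.1, forced_amp=50.0, f_forced=1.5)

lag = 20 * FS  # 20 s, an integer number of 1.5 Hz periods
print(f"natural: r({lag}) = {autocorr(natural, lag):+.2f}")
print(f"forced:  r({lag}) = {autocorr(forced, lag):+.2f}")
```

Both signals share the same noise realization (same seed), so the difference in long-lag correlation comes only from the sustained forcing term.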

With the help of synchrophasor data provided by phasor measurement units (PMUs), many methods have been developed to detect forced oscillations or distinguish them from natural oscillations. These methods fall broadly into two categories: traditional approaches and deep learning approaches. Traditional methods commonly use spectral analysis and time–frequency representations to extract features of different oscillation signals [4,5,6,7,8,9,10,11,12]. For example, based on the mathematical representations of the two oscillation types, a spectral approach has been proposed that exploits their noise responses and spectral differences [8]. An oscillation diagnosis algorithm has been developed based on statistical signatures of the power spectral density [10]. To improve detection performance when the frequency of a forced oscillation is close to that of a natural oscillation, a residual spectral analysis method has been proposed [11]. To handle non-stationary oscillation signals, time–frequency representation methods, such as the short-time Fourier transform (STFT), the STFT-based synchrosqueezing transform, and the continuous wavelet transform, have been used to extract non-stationary components; the time–frequency ridges with maximum energy are then used to detect forced oscillations [6,9,13]. Further, machine learning methods, such as the support vector machine (SVM) and the K-nearest neighbors (KNN) algorithm, use the extracted features to distinguish the two oscillation types [12] or to classify time-series data by the location of the forced oscillation source [14]. Although traditional methods are computationally efficient and require less data, their heavy reliance on expert knowledge for feature extraction limits their ability to cope with the complex and changing operating conditions of power systems.
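A toy version of the spectral-peak features that traditional methods build on can be sketched as follows. It is not the algorithm of [8], [10], or [11]. The peak-to-median ratio is an assumed feature, the signals are synthetic, and a practical detector would use Welch averaging or time–frequency ridges instead of a single periodogram. A sustained forced oscillation appears as a narrow spectral line far above the background, and features of this kind are what an SVM or KNN classifier would consume.

```python
import cmath
import math
import random

def periodogram(x, fs):
    """One-sided periodogram via a direct DFT (O(N^2), adequate for short windows).
    Returns frequencies (Hz) and PSD values, excluding the DC and Nyquist bins."""
    n = len(x)
    mean = sum(x) / n
    xc = [v - mean for v in x]
    freqs, psd = [], []
    for k in range(1, n // 2):
        s = sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(xc))
        freqs.append(k * fs / n)
        psd.append(abs(s) ** 2 / (fs * n))
    return freqs, psd

def spectral_peak_features(x, fs):
    """Two illustrative features: dominant frequency and peak-to-median PSD ratio."""
    freqs, psd = periodogram(x, fs)
    k_peak = max(range(len(psd)), key=psd.__getitem__)
    median = sorted(psd)[len(psd) // 2]
    return freqs[k_peak], psd[k_peak] / median

FS, N = 30, 512  # assumed PMU rate and a ~17 s window
rng = random.Random(1)
noise = [rng.gauss(0.0, 1.0) for _ in range(N)]
with_forced = [v + math.sin(2 * math.pi * 1.5 * k / FS) for k, v in enumerate(noise)]

for name, sig in (("noise only", noise), ("noise + 1.5 Hz forcing", with_forced)):
    f_peak, ratio = spectral_peak_features(sig, FS)
    print(f"{name:24s} peak at {f_peak:.3f} Hz, peak/median = {ratio:.1f}")
```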

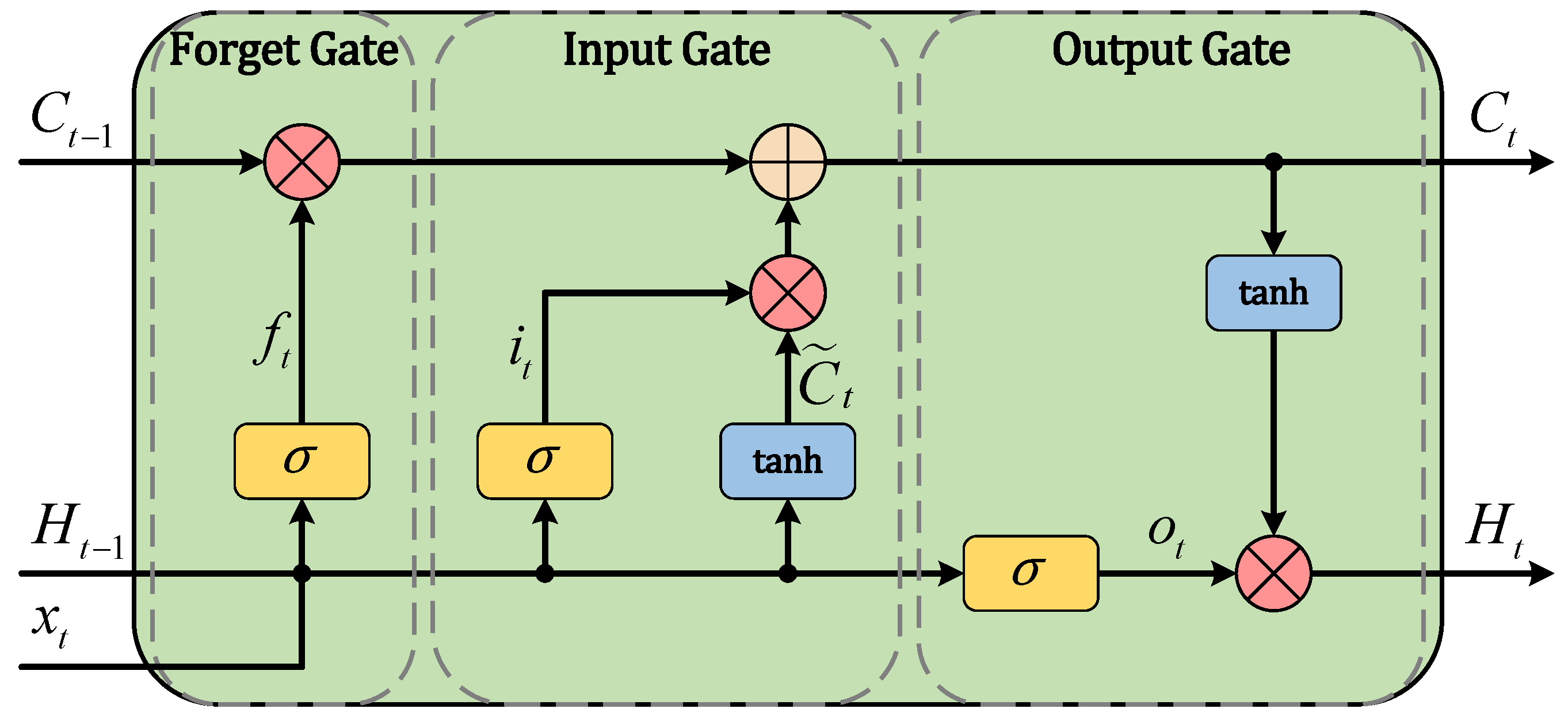

Recently, owing to their strong feature extraction capability, deep learning methods have been successfully applied to oscillation problems. Most of these methods are built on artificial neural networks (ANNs), i.e., the second generation of neural networks (NNs). For example, a shallow NN consisting of a two-layer feedforward network has been proposed to distinguish forced oscillations from natural oscillations [15]. A hierarchical deep learning framework comprising three NNs has been developed to perform forced oscillation (FO) detection, identification, and suppression [16]. Several NNs based on long short-term memory (LSTM) and convolutional NNs (CNNs) have been employed to predict low-frequency oscillation modes [17]. To improve the training efficiency of deep learning models, a two-stage method combining deep transfer learning and a CNN has been proposed, which converts the forced oscillation localization problem into an image recognition problem [18]. Additionally, a transfer branch-level transformer-based deep learning approach has been proposed to localize the forced oscillation source without requiring extensive training data [19]. These developments indicate that ANNs have become a fundamental technology for handling forced oscillation problems.
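To make the computational cost of a conventional ANN concrete, the sketch below runs the forward pass of a generic two-layer feedforward classifier and counts its multiply-accumulate (MAC) operations. The layer sizes, the tanh activation, and the random untrained weights are all hypothetical and do not reproduce the network of [15]. The point is that a dense ANN performs the same number of MACs for every input, regardless of signal content.

```python
import math
import random

def dense(x, weights, bias, activation):
    """Fully connected layer. Returns its outputs and the multiply-accumulate (MAC) count,
    which is the same for every input because every weight is always used."""
    out = [activation(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(weights, bias)]
    return out, len(weights) * len(x)

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]

def init_params(n_in, n_hidden, n_out, seed=0):
    """Random (untrained) weights, scaled by 1/sqrt(fan_in)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.gauss(0.0, 1.0 / math.sqrt(cols)) for _ in range(cols)] for _ in range(rows)]

    return {"W1": mat(n_hidden, n_in), "b1": [0.0] * n_hidden,
            "W2": mat(n_out, n_hidden), "b2": [0.0] * n_out}

def two_layer_forward(x, p):
    """Hidden tanh layer, then a linear output layer and softmax over the classes."""
    h, macs1 = dense(x, p["W1"], p["b1"], math.tanh)
    z, macs2 = dense(h, p["W2"], p["b2"], lambda v: v)
    return softmax(z), macs1 + macs2

# Hypothetical sizes: 90-sample window (3 s at 30 frames/s), 32 hidden units, 2 classes
params = init_params(90, 32, 2)
window = [math.sin(2 * math.pi * 1.5 * k / 30) for k in range(90)]
probs, macs = two_layer_forward(window, params)
print("class probabilities (untrained):", probs)
print("MACs per inference:", macs)  # 90*32 + 32*2 = 2944
```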

Despite the effectiveness of previous ANN-based work, the inherent computational complexity and high power consumption of ANNs pose significant challenges for forced oscillation detection, especially in edge computing. Forced oscillations typically occur unpredictably in power systems due to various factors, such as changing operating conditions and the integration of renewable energy. It is therefore essential to detect them promptly at edge devices deployed at critical nodes of the power system, before they spread throughout the grid and cause catastrophic cascading blackouts. However, edge devices usually have limited computational resources (e.g., memory and storage) and constrained power supplies, which makes it difficult to deploy computationally intensive, power-hungry ANNs on them.

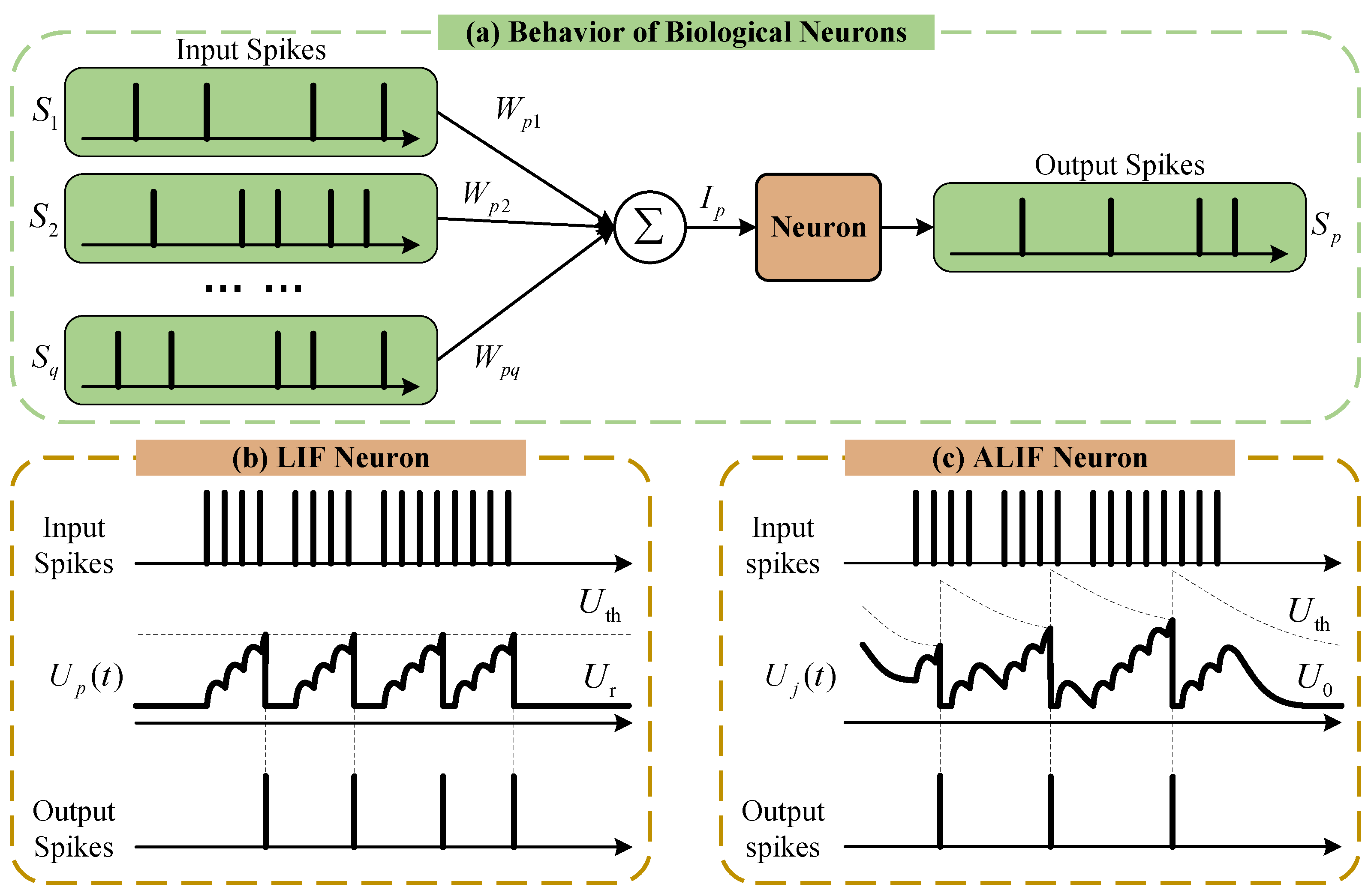

In contrast, spiking neural networks (SNNs) [20,21,22] have been theoretically shown to achieve greater computational and energy efficiency than ANNs [23], owing to their bio-inspired spiking computation mechanism. As the third generation of neural networks, SNNs are designed to emulate information processing in the brain. Although the structure of SNNs is similar to that of ANNs, SNNs represent and transmit information between neurons as discrete spikes (electrical pulses), whereas ANNs use continuous activation values. Because energy is consumed mainly when a spike event occurs, this spike-driven computation offers attractive energy savings, making it possible to deploy detection algorithms on edge devices [24,25]. SNNs have been applied in diverse domains, including power transformer fault diagnosis [26] and image recognition [27]. However, SNNs are typically constructed as shallow networks and are difficult to train because the spiking activation of brain-inspired neurons is non-differentiable. Consequently, the accuracy of SNNs is usually lower than that of popular ANNs [28,29]. The advantages and disadvantages of traditional methods, ANNs, and SNNs are briefly summarized in Table 1.
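The spike-driven computation described above can be sketched with a layer of leaky integrate-and-fire (LIF) neurons. A synaptic weight is added to a membrane potential only when its presynaptic neuron fires, so the number of accumulate operations (ACs) scales with the number of input spikes. The layer sizes, 10% firing rate, leak time constant, and hard reset are hypothetical choices. The per-operation energies are the often-quoted 45 nm estimates for 32-bit floating-point MAC (about 4.6 pJ) and add (about 0.9 pJ). The estimate ignores memory access and neuron-update costs, which frequently dominate on real hardware, so it indicates a trend rather than a measured saving.

```python
import math
import random

def lif_layer(spike_trains, weights, tau_m=20.0, v_th=1.0, dt=1.0):
    """Layer of leaky integrate-and-fire (LIF) neurons driven by binary input spikes.

    A weight is accumulated into a membrane potential only when its presynaptic neuron
    spikes, so the number of synaptic accumulate operations (ACs) scales with spike count.
    """
    n_out = len(weights)
    decay = math.exp(-dt / tau_m)  # membrane leak per time step
    v = [0.0] * n_out
    outputs, acs = [], 0
    for s_in in spike_trains:  # one binary input vector per time step
        active = [i for i, s in enumerate(s_in) if s]
        acs += len(active) * n_out
        s_out = []
        for j in range(n_out):
            v[j] = decay * v[j] + sum(weights[j][i] for i in active)
            if v[j] >= v_th:
                s_out.append(1)
                v[j] = 0.0  # hard reset after firing
            else:
                s_out.append(0)
        outputs.append(s_out)
    return outputs, acs

rng = random.Random(0)
N_IN, N_OUT, T, RATE = 90, 32, 20, 0.1  # hypothetical sizes, 20 time steps, 10% firing rate
weights = [[rng.gauss(0.0, 0.5) for _ in range(N_IN)] for _ in range(N_OUT)]
inputs = [[1 if rng.random() < RATE else 0 for _ in range(N_IN)] for _ in range(T)]
spikes_out, acs = lif_layer(inputs, weights)

dense_macs = N_IN * N_OUT * T  # an ANN layer of the same size processing the same 20 steps
# Often-quoted 45 nm estimates: 32-bit float MAC ~4.6 pJ, 32-bit float add (AC) ~0.9 pJ
print(f"SNN ACs: {acs}, ~{acs * 0.9e-12 * 1e9:.2f} nJ")
print(f"ANN MACs: {dense_macs}, ~{dense_macs * 4.6e-12 * 1e9:.2f} nJ")
```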

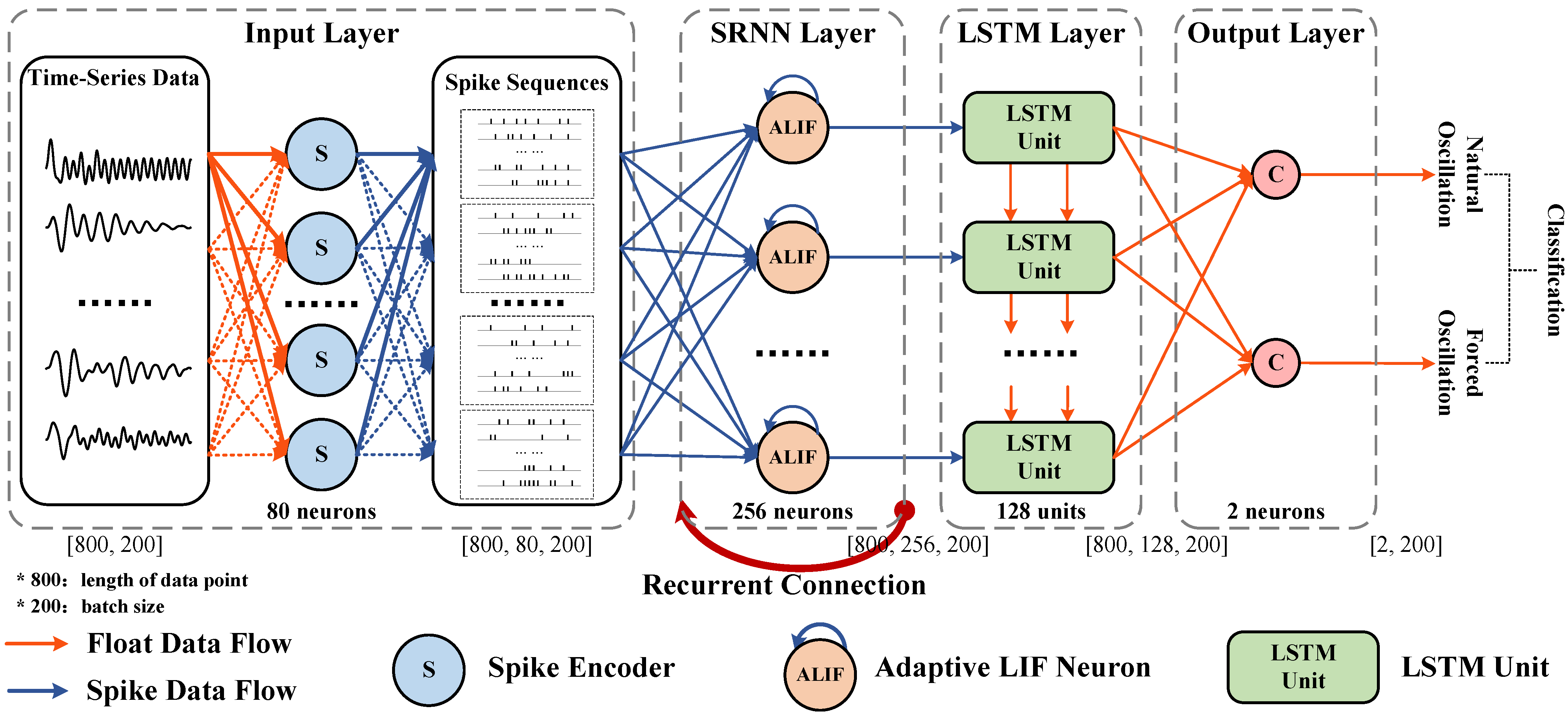

To exploit the complementary advantages of ANNs and SNNs, hybrid networks integrating the two, such as the SNN–CNN [28,30], have been proven effective in some applications. Unlike these existing hybrid networks, we propose a novel hybrid network that integrates a spiking recurrent neural network (SRNN) with an LSTM. In this architecture, the SRNN is constructed from adaptive leaky integrate-and-fire (ALIF) neurons with self-recurrent connections. The ALIF neurons enhance computational capability through an adaptive firing threshold, while the recurrent connections help capture temporal dependencies in time-series data. Meanwhile, the LSTM, a type of recurrent ANN, uses gating to process information selectively, which further captures long-term dependencies and mitigates the vanishing-gradient problem. The proposed hybrid network is trained directly with the backpropagation-through-time (BPTT) algorithm, using a Gaussian function as a surrogate for the gradient of the non-differentiable spiking activation function of the ALIF neurons [31]. Finally, the trained hybrid network is applied to PMU data to detect forced oscillations, mainly by distinguishing them from natural oscillations. Our main contributions are summarized as follows:
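A single self-recurrent ALIF neuron and its Gaussian surrogate gradient can be sketched as follows. This follows a common ALIF formulation and is an assumption: the exact equations, reset rule, and surrogate width used in this work are not given in this section, and every parameter value is hypothetical. The Heaviside spike function has zero derivative almost everywhere, so during BPTT its derivative with respect to the membrane potential is replaced by a Gaussian probability density centered on the threshold, a smoothed Dirac delta that integrates to 1.

```python
import math

def gaussian_surrogate(x, sigma=0.5):
    """Surrogate for dS/dx, where S = H(x) is the Heaviside spike function and x = u - theta.
    It is a Gaussian pdf, i.e. a smoothed Dirac delta that integrates to 1."""
    return math.exp(-x * x / (2.0 * sigma**2)) / (sigma * math.sqrt(2.0 * math.pi))

def alif_neuron(inputs, w_in=1.0, w_rec=-0.3, alpha=0.9, rho=0.95, b0=1.0, beta=1.8):
    """Forward pass of one self-recurrent ALIF neuron (all parameter values hypothetical).

    alpha: membrane leak factor, rho: decay of the adaptation trace,
    b0: baseline threshold, beta: strength of threshold adaptation.
    """
    u, a, s_prev = 0.0, 0.0, 0
    spikes, thresholds, surrogate_grads = [], [], []
    for x in inputs:
        a = rho * a + (1.0 - rho) * s_prev      # trace of recent spikes
        theta = b0 + beta * a                   # adaptive firing threshold
        u = alpha * u + (1.0 - alpha) * w_in * x + w_rec * s_prev  # leak + input + self-recurrence
        s = 1 if u >= theta else 0              # Heaviside step: zero gradient almost everywhere
        surrogate_grads.append(gaussian_surrogate(u - theta))  # stands in for dS/du in BPTT
        u -= theta * s                          # soft reset: subtract the threshold after a spike
        spikes.append(s)
        thresholds.append(theta)
        s_prev = s
    return spikes, thresholds, surrogate_grads

spikes, thresholds, grads = alif_neuron([3.0] * 100)
print("spike count:", sum(spikes))
print("threshold range:", min(thresholds), max(thresholds))
```

Under a constant input, the threshold rises after each spike and decays between spikes, which regulates the firing rate. This is the adaptive behavior that distinguishes ALIF from plain LIF neurons.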

The proposed hybrid network not only leverages the energy-saving advantage of SRNNs but also improves forced oscillation detection performance, because the LSTM better captures the temporal dependencies of oscillation data.

Unlike existing ANN-based detection methods, the proposed hybrid network is driven by spike events, which makes it better suited for deployment on edge devices.

Compared with other schemes, the proposed hybrid network achieves better forced oscillation detection performance on both simulated and real-world measured PMU data, even under resonance conditions and periodic non-sinusoidal injected disturbances.
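How spike outputs from an SRNN might feed an LSTM can be sketched with a standard LSTM cell. The connection pattern is an assumption: this section does not specify how the SRNN and LSTM are wired. Here the SRNN's binary spike vectors are taken as the LSTM's per-step inputs, and the final hidden state would go to a classifier. The class `LSTMCell`, the helper `lstm_over_spikes`, the layer sizes, and the random spike trains are hypothetical and untrained.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class LSTMCell:
    """Standard LSTM cell with random, untrained weights (illustration only)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(n_in + n_hidden)
        self.n_hidden = n_hidden
        # One weight matrix per gate, acting on the concatenation [x_t, h_{t-1}]
        self.W = {g: [[rng.gauss(0.0, scale) for _ in range(n_in + n_hidden)]
                      for _ in range(n_hidden)] for g in "ifoc"}
        # A forget-gate bias of 1 is a common initialisation choice
        self.b = {g: [1.0 if g == "f" else 0.0] * n_hidden for g in "ifoc"}

    def step(self, x, h, c):
        xh = list(x) + list(h)
        pre = {g: [sum(w * v for w, v in zip(row, xh)) + b
                   for row, b in zip(self.W[g], self.b[g])] for g in "ifoc"}
        i = [sigmoid(z) for z in pre["i"]]       # input gate: how much new content to write
        f = [sigmoid(z) for z in pre["f"]]       # forget gate: how much old memory to keep
        o = [sigmoid(z) for z in pre["o"]]       # output gate: how much memory to expose
        cand = [math.tanh(z) for z in pre["c"]]  # candidate memory content
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, cand)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h, c

def lstm_over_spikes(spike_trains, cell):
    """Feed one binary spike vector per time step into the LSTM; return the final hidden state."""
    h = [0.0] * cell.n_hidden
    c = [0.0] * cell.n_hidden
    for s in spike_trains:
        h, c = cell.step(s, h, c)
    return h

# Stand-in for SRNN output: random spikes from 16 neurons over 30 steps (hypothetical)
rng = random.Random(42)
srnn_spikes = [[1 if rng.random() < 0.2 else 0 for _ in range(16)] for _ in range(30)]
cell = LSTMCell(n_in=16, n_hidden=8)
h_final = lstm_over_spikes(srnn_spikes, cell)
print([round(v, 3) for v in h_final])
```

Because the inputs are binary, the input-to-gate products could be reduced to summing the weight columns of active neurons, as in the LIF sketch, although this simple version does not exploit that.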

The rest of this paper is organized as follows. The proposed methodology, including the problem formulation of forced oscillation detection, the proposed hybrid network, and the training strategy, is presented in

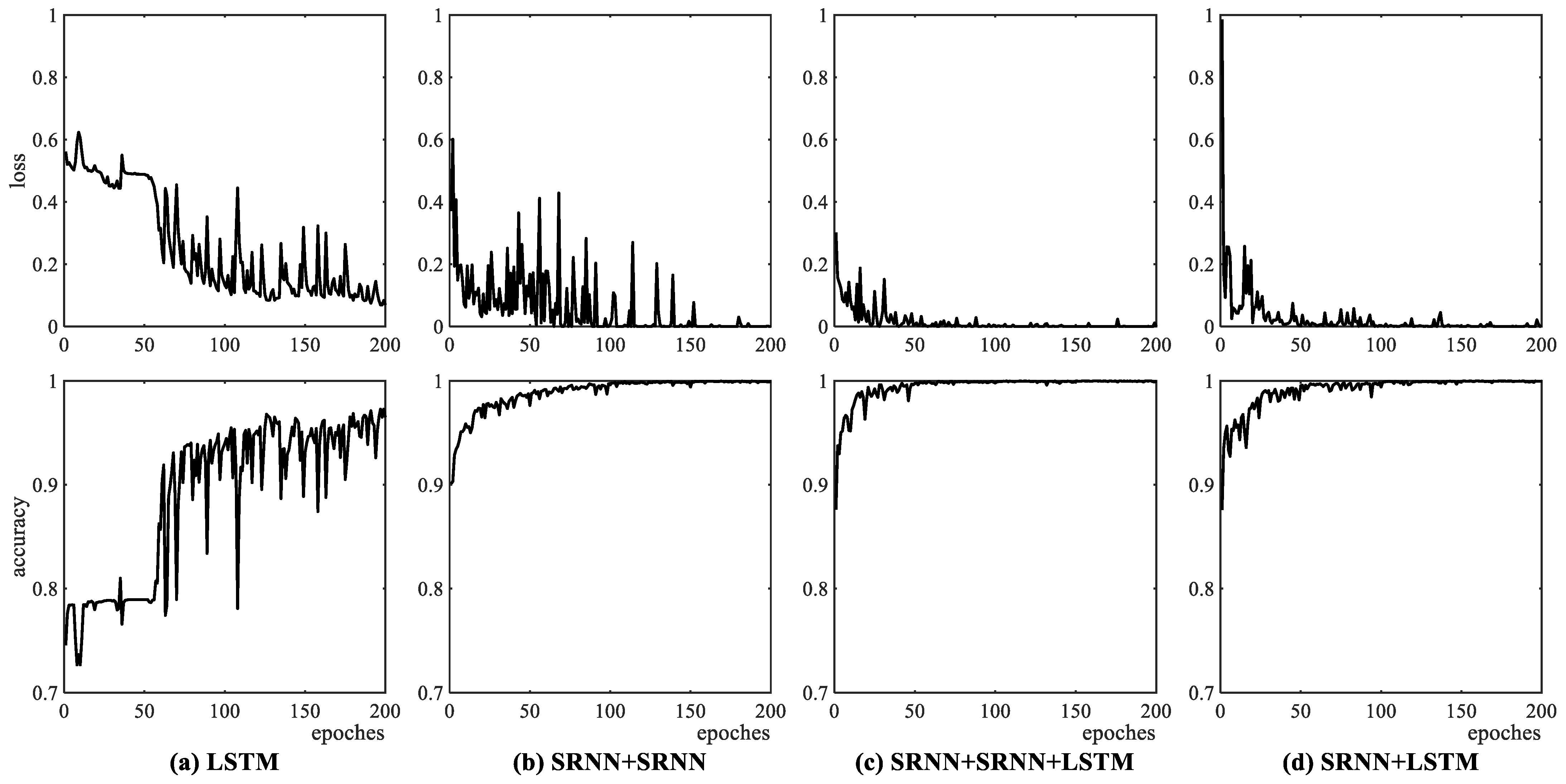

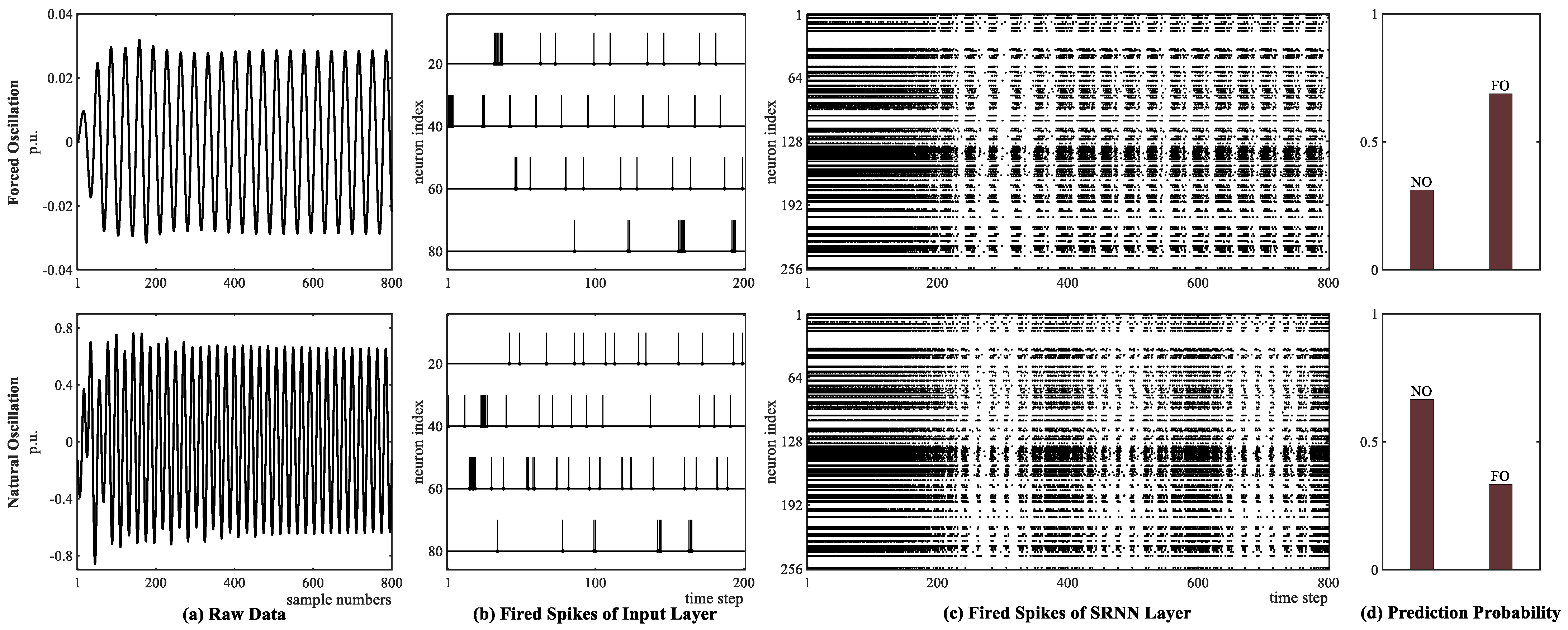

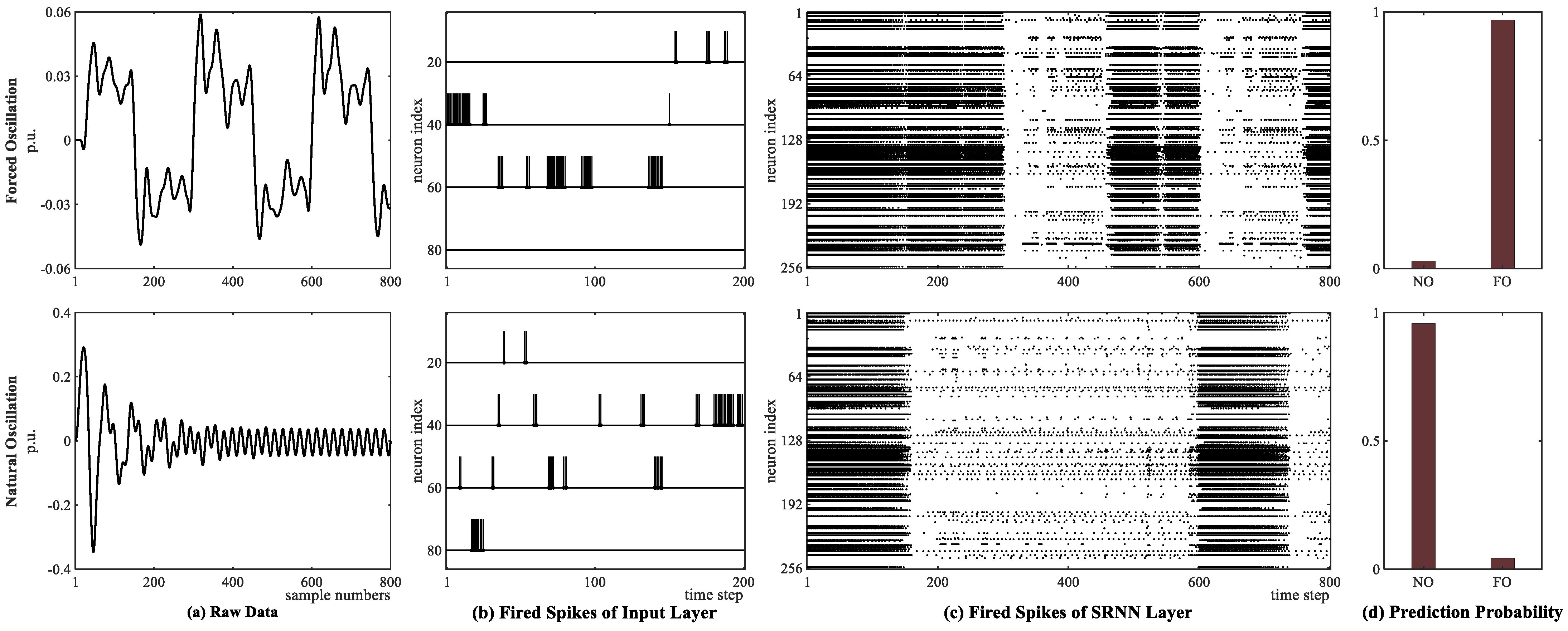

Section 2. The detection performance and visual results of the proposed hybrid network are demonstrated in

Section 3. Conclusions and potential future work are addressed in

Section 4.