Surface Defect Detection for Small Samples of Particleboard Based on Improved Proximal Policy Optimization

Abstract

1. Introduction

2. Related Work

2.1. Large Vision Model

2.2. Reinforcement Learning Model

3. Materials and Methods

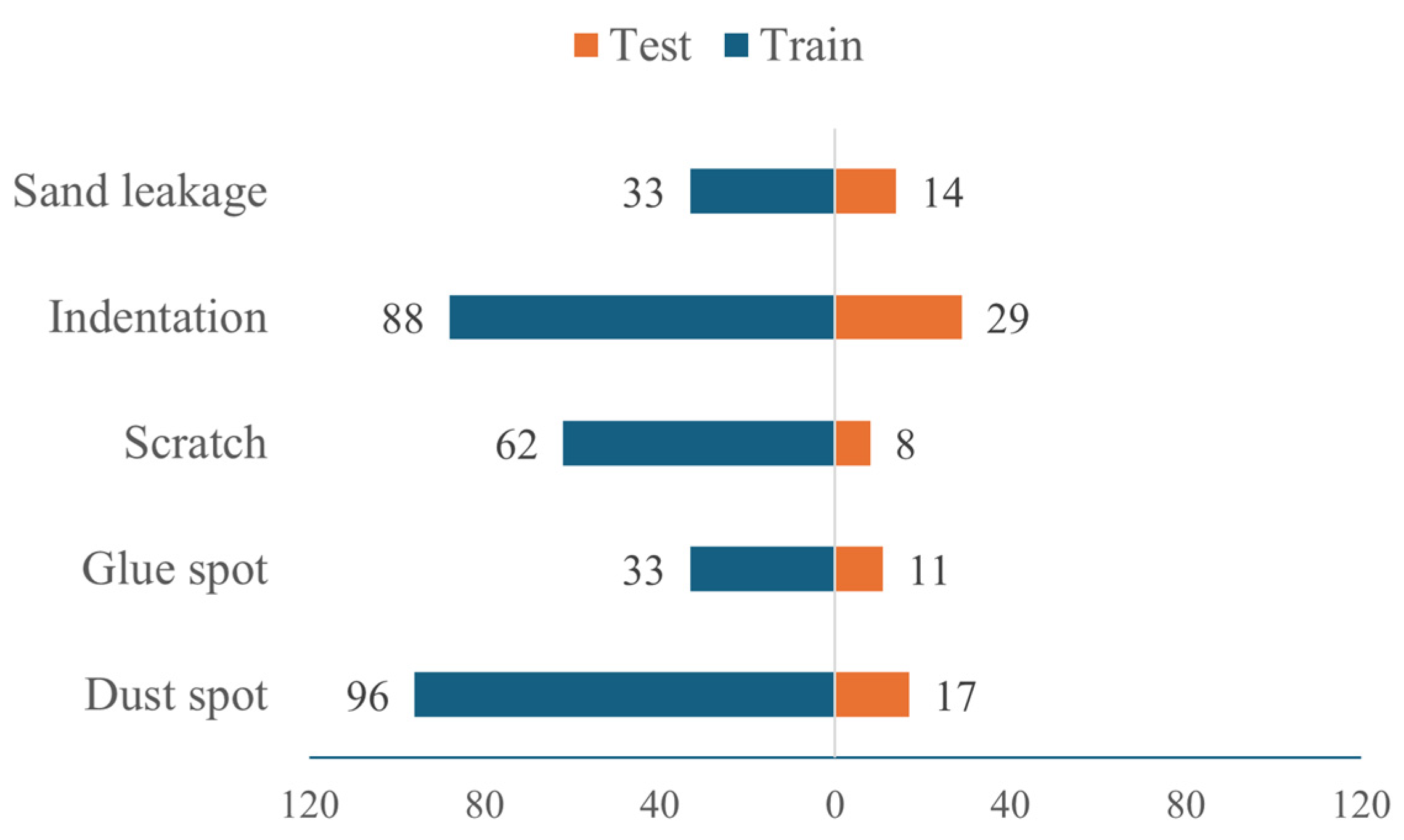

3.1. Particleboard Dataset

3.1.1. Image Acquisition System

3.1.2. Dataset Construction

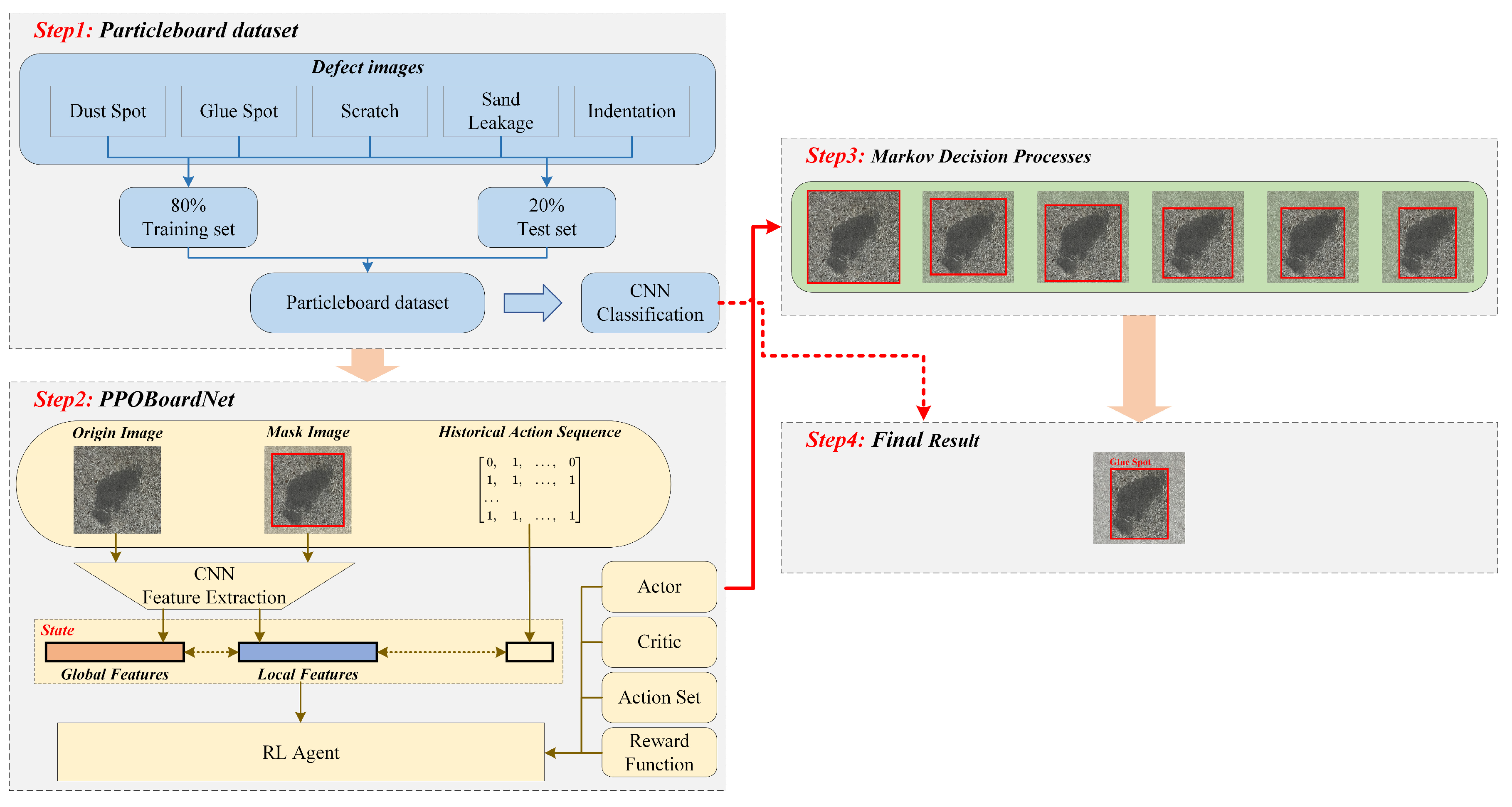

3.2. Deep Reinforcement Learning-Driven Algorithm for Particleboard Defect Detection

3.2.1. Enhanced Actor-Critic Network Architecture

3.2.2. Enhanced State Representation Based on CNN

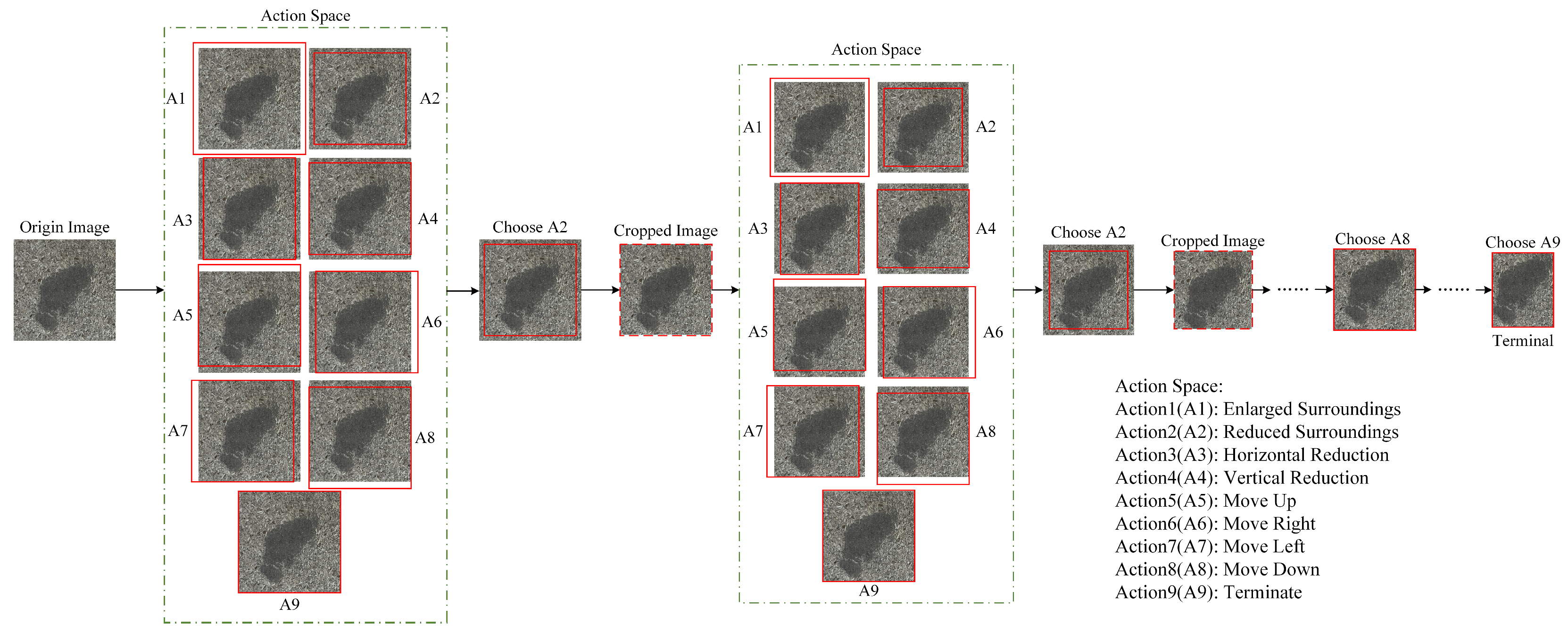

3.2.3. Variable Action Space

3.2.4. Composite Reward Function

3.3. Evaluation Metrics and System Specifications

4. Results and Discussion

4.1. Experiment Setting

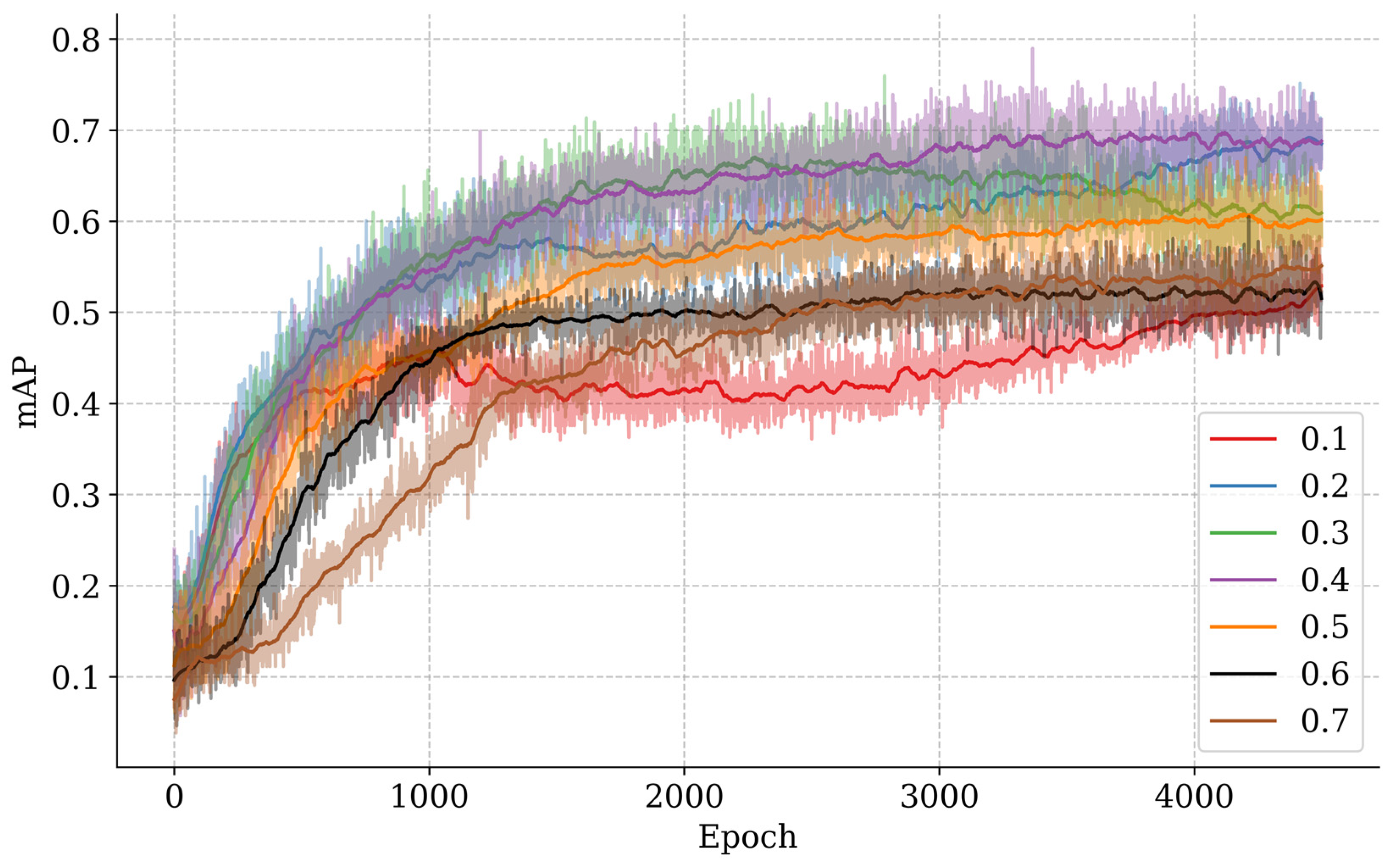

4.2. Action Magnitude Parameter Optimization

4.3. Performance Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Equipment | Manufacturer | Technical Parameter | Value |
|---|---|---|---|
| MV-CL086-91GC | Hangzhou Hikrobot Technology Co., Ltd., Hangzhou, China | Resolution | 8192 × 6 pixels |
| | | Line Frequency | 4.7 kHz |
| LD21S01 | Jiangxi Phoenix Optical Co., Ltd., Shangrao, China | Focal Length | 35 mm |
| | | Aperture | F2.8-16 |

| Category | Item | Specification |
|---|---|---|
| Software | Ubuntu | 22.04 |
| | PyCharm | 2024.2.4 |
| | Python | 3.10 |
| | CUDA | 12.6 |
| | PyTorch | 2.5.1 |
| Hardware | CPU | Intel Core i9-14900K (Silicon Valley, United States) |
| | GPU | NVIDIA RTX 4090 24 GB |

| Action Magnitude | Dust Spot | Glue Spot | Scratch | Sand Leakage | Indentation | mAP |
|---|---|---|---|---|---|---|
| 0.1 | 61.1 | 33.3 | 57.1 | 58.1 | 71.4 | 56.2 |
| 0.2 | 66.7 | 55.6 | 85.7 | 67.7 | 100.0 | 75.1 |
| 0.3 | 61.1 | 55.6 | 85.7 | 77.4 | 100.0 | 76.0 |
| 0.4 | 61.1 | 66.7 | 100.0 | 74.2 | 92.9 | 79.0 |
| 0.5 | 61.1 | 44.4 | 85.7 | 58.1 | 85.7 | 67.0 |
| 0.6 | 50.0 | 33.3 | 71.4 | 54.8 | 92.9 | 60.5 |
| 0.7 | 44.4 | 22.2 | 71.4 | 64.5 | 92.9 | 59.1 |
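The selection of the action magnitude from the table above can be sketched as a short calculation. The snippet below is an illustration only, not the authors' code: it assumes the first column is the action magnitude parameter of Section 4.2 and that the mAP column is the unweighted mean of the five per-class values, which the reported rows are consistent with to within rounding. The names `SCORES`, `REPORTED_MAP`, `mean_ap`, and `best_magnitude` are hypothetical.

```python
# Per-class scores (%) copied from the action-magnitude table.
# Column order: Dust Spot, Glue Spot, Scratch, Sand Leakage, Indentation.
SCORES = {
    0.1: [61.1, 33.3, 57.1, 58.1, 71.4],
    0.2: [66.7, 55.6, 85.7, 67.7, 100.0],
    0.3: [61.1, 55.6, 85.7, 77.4, 100.0],
    0.4: [61.1, 66.7, 100.0, 74.2, 92.9],
    0.5: [61.1, 44.4, 85.7, 58.1, 85.7],
    0.6: [50.0, 33.3, 71.4, 54.8, 92.9],
    0.7: [44.4, 22.2, 71.4, 64.5, 92.9],
}

# mAP values (%) as reported in the last column of the same table.
REPORTED_MAP = {0.1: 56.2, 0.2: 75.1, 0.3: 76.0, 0.4: 79.0,
                0.5: 67.0, 0.6: 60.5, 0.7: 59.1}


def mean_ap(per_class):
    """Unweighted mean of per-class scores (assumed definition of mAP)."""
    return sum(per_class) / len(per_class)


def best_magnitude(scores):
    """Return the action magnitude whose mean per-class score is highest."""
    return max(scores, key=lambda m: mean_ap(scores[m]))


if __name__ == "__main__":
    for magnitude, per_class in SCORES.items():
        print(f"magnitude {magnitude:.1f}: "
              f"computed {mean_ap(per_class):.2f}, "
              f"reported {REPORTED_MAP[magnitude]:.1f}")
    print("best magnitude:", best_magnitude(SCORES))
```

Under these assumptions the highest mean is obtained at a magnitude of 0.4, which matches the row carried into the method comparison table.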

| Method | Dust Spot | Glue Spot | Scratch | Sand Leakage | Indentation | Regions | Time (ms) | mAP |
|---|---|---|---|---|---|---|---|---|
| YOLOv11n | 54.7 | 99.5 | 34.2 | 75.7 | 58.8 | 8400 | 60.16 | 73.7 |
| YOLOv11s | 55.2 | 87.7 | 16.7 | 58.3 | 58.2 | 8400 | 64.46 | 64.5 |
| YOLOv11m | 66.2 | 93.8 | 57.7 | 92.1 | 76.6 | 8400 | 79.18 | 73.1 |
| YOLOv11l | 76.2 | 84.1 | 42.6 | 76.6 | 77.4 | 8400 | 91.76 | 73.7 |
| PPOBoardNet (ours) | 61.1 | 66.7 | 100.0 | 74.2 | 92.9 | 4 | 36.05 | 79.0 |
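The time and mAP columns of the comparison table can be turned into relative figures with a few lines of arithmetic. The sketch below is illustrative rather than part of the original evaluation: it takes the "Time" and "mAP" columns at face value (time in milliseconds, mAP in percent, both as reported), and the helper names `time_reduction_pct` and `map_gain` are hypothetical.

```python
# Time (ms) and mAP (%) copied from the method comparison table.
TIME_MS = {
    "YOLOv11n": 60.16,
    "YOLOv11s": 64.46,
    "YOLOv11m": 79.18,
    "YOLOv11l": 91.76,
    "PPOBoardNet": 36.05,
}
MAP_PCT = {
    "YOLOv11n": 73.7,
    "YOLOv11s": 64.5,
    "YOLOv11m": 73.1,
    "YOLOv11l": 73.7,
    "PPOBoardNet": 79.0,
}

OURS = "PPOBoardNet"


def time_reduction_pct(baseline):
    """Percentage by which the PPOBoardNet time is lower than the baseline's."""
    return 100.0 * (TIME_MS[baseline] - TIME_MS[OURS]) / TIME_MS[baseline]


def map_gain(baseline):
    """Difference in reported mAP (percentage points) over the baseline."""
    return MAP_PCT[OURS] - MAP_PCT[baseline]


if __name__ == "__main__":
    for name in TIME_MS:
        if name == OURS:
            continue
        print(f"{name}: time reduced by {time_reduction_pct(name):.1f}%, "
              f"mAP gain {map_gain(name):+.1f} points")
```

On the reported numbers, the time reduction relative to the YOLOv11 variants ranges from roughly 40% (YOLOv11n) to roughly 61% (YOLOv11l), with a reported mAP gain of between 5.3 and 14.5 percentage points.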

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xia, H.; Zhou, H.; Zhang, M.; Zhang, Q.; Fan, C.; Yang, Y.; Xi, S.; Liu, Y. Surface Defect Detection for Small Samples of Particleboard Based on Improved Proximal Policy Optimization. Sensors 2025, 25, 2541. https://doi.org/10.3390/s25082541