Practical Test-Time Domain Adaptation for Industrial Condition Monitoring by Leveraging Normal-Class Data

Abstract

1. Introduction

2. Related Work

2.1. Domain Generalization

2.2. Domain Adaptation

2.2.1. Feature Alignment

2.2.2. Adversarial Training

2.2.3. Hypothesis Transfer

2.3. Source-Free Domain Adaptation

- Tent [37], which adapts only the batch normalization layers by minimizing prediction entropy on the target data;

- SHOT [20], which freezes the feature extractor and fine-tunes the classifier via pseudo-labeling;

- AdaBN [38], an early method that recalibrates batch normalization statistics using target domain samples.
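The two batch-normalization-based ideas listed above can be illustrated with a minimal NumPy sketch. It is not the implementation from [37] or [38]: the synthetic data, the helper names (`batchnorm`, `adabn_statistics`, `prediction_entropy`), and the single normalization layer are assumptions made purely for illustration. The sketch shows (i) re-estimating normalization statistics from unlabeled target samples, as AdaBN does, and (ii) the prediction entropy that Tent uses as its adaptation objective.

```python
import numpy as np


def batchnorm(x, mean, var, gamma, beta, eps=1e-5):
    """Apply batch normalization with fixed statistics (inference mode)."""
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def adabn_statistics(target_batch):
    """Re-estimate per-feature mean and variance from unlabeled target samples."""
    return target_batch.mean(axis=0), target_batch.var(axis=0)


def prediction_entropy(logits):
    """Mean Shannon entropy of the softmax predictions (Tent's objective)."""
    z = logits - logits.max(axis=1, keepdims=True)  # stabilize the softmax
    p = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    return float(-(p * np.log(p + 1e-12)).sum(axis=1).mean())


# Synthetic example (assumption): the target features are shifted and rescaled.
rng = np.random.default_rng(0)
source = rng.normal(0.0, 1.0, size=(512, 4))
target = rng.normal(3.0, 2.0, size=(512, 4))
gamma, beta = np.ones(4), np.zeros(4)

# Statistics stored at training time versus statistics recalibrated on the target.
src_mean, src_var = source.mean(axis=0), source.var(axis=0)
tgt_mean, tgt_var = adabn_statistics(target)

out_source_stats = batchnorm(target, src_mean, src_var, gamma, beta)
out_adabn = batchnorm(target, tgt_mean, tgt_var, gamma, beta)

print("mean with source statistics:", out_source_stats.mean())
print("mean with target statistics:", out_adabn.mean())
```

With the recalibrated statistics, the normalized target features are again centered with unit variance, which is the effect AdaBN relies on. Tent would additionally update the affine parameters `gamma` and `beta` by gradient descent on `prediction_entropy`; that optimization step is omitted here.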

2.4. Test-Time Domain Adaptation

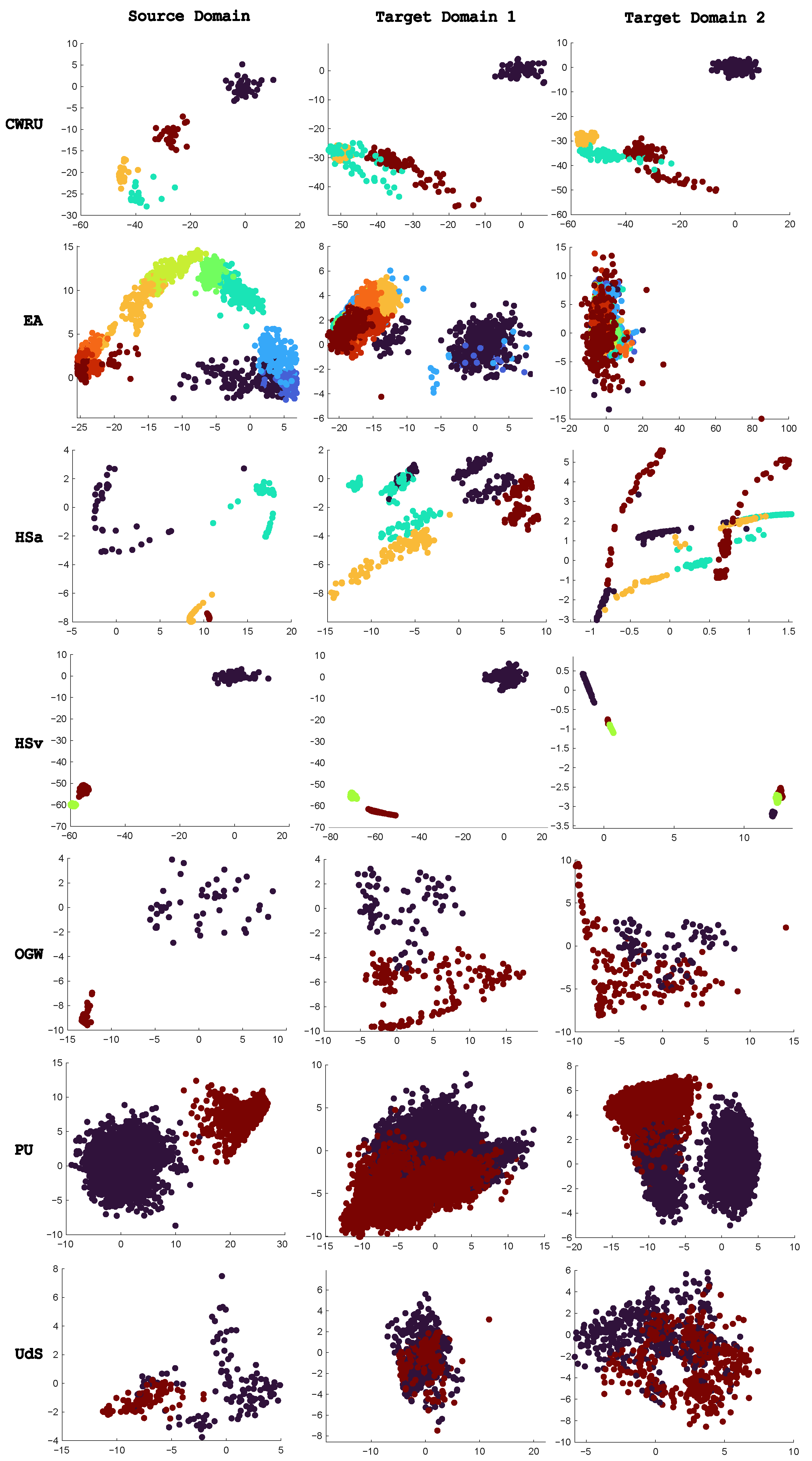

2.5. Challenges of Domain Adaptation in Industrial Prognostics

3. Materials and Methods

3.1. Datasets

- The ZeMA Electromechanical Axis (EA) dataset [42], ZeMA gGmbH, Saarbrücken, Germany;

- The ZeMA Hydraulic System (HS) dataset [29];

- The Open Guided Waves (OGW) dataset [43];

- The Paderborn University Bearing (PU) dataset [44], Paderborn University, Paderborn, Germany;

- The Case Western Reserve University Bearings (CWRU) dataset [45], Case Western Reserve University, Cleveland, OH, USA;

- The Saarland University Bearings (UdS) dataset [46], Saarland University, Saarbrücken, Germany.

3.2. Methods

3.2.1. HP-ConvNet

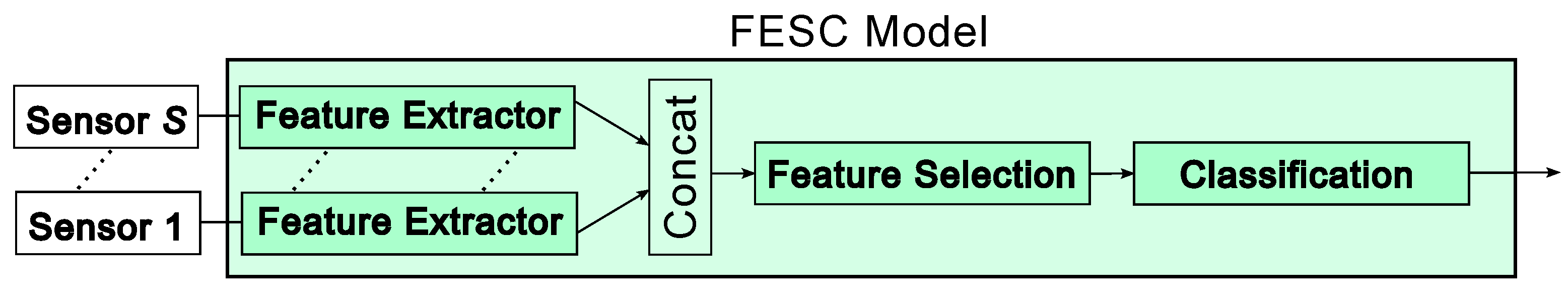

3.2.2. FESC

3.2.3. HP-Based Deep Ensemble

3.2.4. FESC Ensemble

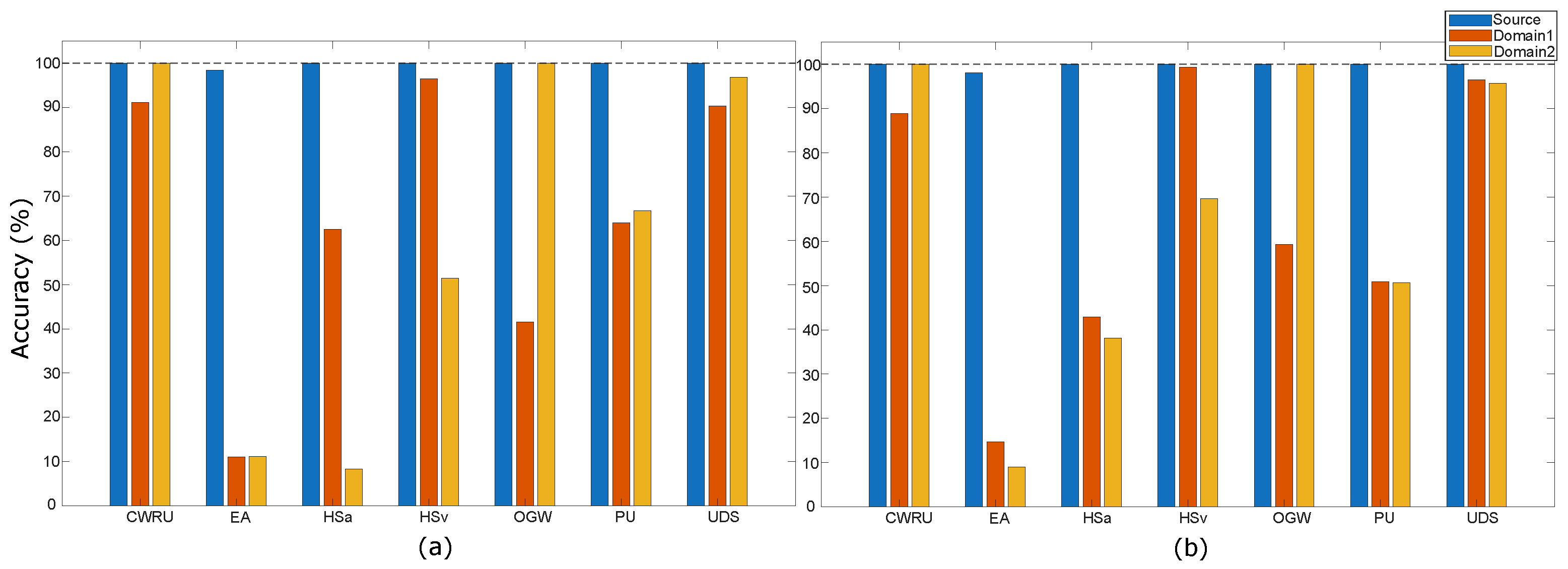

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| FPR | False Positive Rate |
| FPR95 | FPR at 95% TPR |
| kNN | k-Nearest Neighbors |
| ConvNet | Convolutional Neural Network |
| HP | Hyperparameter |
| EA | ZeMA Electromechanical Axis Dataset |
| HS | ZeMA Hydraulic System Dataset |
| OGW | Open Guided Waves Dataset |
| PU | Paderborn University Bearing Dataset |
| CWRU | Case Western Reserve University Bearings Dataset |
| UdS | Saarland University Bearings Dataset |
| ML | Machine Learning |
| AutoML | Automated Machine Learning |
| DNN | Deep Neural Network |
| NAS | Neural Architecture Search |
| LDA | Linear Discriminant Analysis |
| SVM | Support Vector Machine |
| ALA | Adaptive Linear Approximation |
| BFC | Best Fourier Coefficient |
| BDW | Best Daubechies Wavelets |
| TFEx | Statistical Features in Time and Frequency Domains |
| NoFE | No Feature Extraction |
| PCA | Principal Component Analysis |
| StatMom | Statistical Moments |
| Pearson | Pearson Correlation Coefficient |
| RFESVM | Recursive Feature Elimination Support Vector Machines |
| Spearman | Spearman Correlation Coefficient |
| NoFS | No Feature Selection |
| FESC | Feature Extraction, Feature Selection, and Classification |
| DA | Domain Adaptation |
| UDA | Unsupervised Domain Adaptation |
| TTDA | Test-Time Domain Adaptation |
| DANN | Domain-Adversarial Neural Networks |
| MMD | Maximum Mean Discrepancy |
| CORAL | Correlation Alignment |
| OpC | Operating Conditions |

Appendix A

References

- Quiñonero-Candela, J.; Sugiyama, M.; Schwaighofer, A.; Lawrence, N.D. Dataset Shift in Machine Learning; The MIT Press: Cambridge, MA, USA, 2009.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading Digits in Natural Images with Unsupervised Feature Learning. In Proceedings of the NIPS Workshop on Deep Learning and Unsupervised Feature Learning; Stanford University: Stanford, CA, USA, 2011.

- Goodarzi, P.; Schütze, A.; Schneider, T. Comparison of different ML methods concerning prediction quality, domain adaptation and robustness. Tech. Mess. 2022, 89, 224–239.

- Goodarzi, P.; Schütze, A.; Schneider, T. Domain shifts in industrial condition monitoring: A comparative analysis of automated machine learning models. J. Sensors Sens. Syst. 2025, 14, 119–132.

- Farahani, A.; Voghoei, S.; Rasheed, K.; Arabnia, H.R. A brief review of domain adaptation. In Advances in Data Science and Information Engineering: Proceedings from ICDATA 2020 and IKE 2020; Springer: Berlin/Heidelberg, Germany, 2021; pp. 877–894.

- Qian, Q.; Luo, J.; Qin, Y. Adaptive Intermediate Class-Wise Distribution Alignment: A Universal Domain Adaptation and Generalization Method for Machine Fault Diagnosis. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 4296–4310.

- Wang, Y.; Sun, X.; Li, J.; Yang, Y. Intelligent Fault Diagnosis with Deep Adversarial Domain Adaptation. IEEE Trans. Instrum. Meas. 2021, 70, 1–9.

- Gulrajani, I.; Lopez-Paz, D. In Search of Lost Domain Generalization. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 2 July 2020.

- Neupane, D.; Seok, J. Bearing Fault Detection and Diagnosis Using Case Western Reserve University Dataset With Deep Learning Approaches: A Review. IEEE Access 2020, 8, 93155–93178.

- Schneider, T.; Helwig, N.; Schütze, A. Industrial condition monitoring with smart sensors using automated feature extraction and selection. Meas. Sci. Technol. 2018, 29, 94002.

- Hendriks, J.; Dumond, P.; Knox, D. Towards better benchmarking using the CWRU bearing fault dataset. Mech. Syst. Signal Process. 2022, 169, 108732.

- Chen, P.; Zhang, R.; He, C.; Jin, Y.; Fan, S.; Qi, J.; Zhou, C.; Zhang, C. Progressive contrastive representation learning for defect diagnosis in aluminum disk substrates with a bio-inspired vision sensor. Expert Syst. Appl. 2025, 289, 128305.

- Qi, J.; Chen, Z.; Kong, Y.; Qin, W.; Qin, Y. Attention-guided graph isomorphism learning: A multi-task framework for fault diagnosis and remaining useful life prediction. Reliab. Eng. Syst. Saf. 2025, 263, 111209.

- Siya, Y.; Kang, Q.; Zhou, M.; Rawa, M.; Abusorrah, A. A survey of transfer learning for machinery diagnostics and prognostics. Artif. Intell. Rev. 2022, 56, 2871–2922.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Wang, M.; Deng, W. Deep visual domain adaptation: A survey. Neurocomputing 2018, 312, 135–153.

- Redko, I.; Morvant, E.; Habrard, A.; Sebban, M.; Bennani, Y. A survey on domain adaptation theory. arXiv 2020, arXiv:2004.11829.

- Yue, K.; Li, J.; Chen, Z.; Chen, J.; Li, W. Universal Source-Free Knowledge Transfer Network for Fault Diagnosis of Electromechanical System with Multimodal Signals. IEEE Trans. Instrum. Meas. 2025, 74, 1–12.

- Liang, J.; Hu, D.; Feng, J. Do We Really Need to Access the Source Data? Source Hypothesis Transfer for Unsupervised Domain Adaptation. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020; Daumé, H., III, Singh, A., Eds.; PMLR: London, UK, 2020; Volume 119, pp. 6028–6039.

- Li, J.; Yu, Z.; Du, Z.; Zhu, L.; Shen, H.T. A comprehensive survey on source-free domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5743–5762.

- Olivas, E.S.; Guerrero, J.D.M.; Sober, M.M.; Benedito, J.R.M.; Lopez, A.J.S. Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods and Techniques-2 Volumes; IGI Publishing: Hershey, PA, USA, 2009.

- Huang, J.; Gretton, A.; Borgwardt, K.; Schölkopf, B.; Smola, A. Correcting Sample Selection Bias by Unlabeled Data. In Proceedings of the Advances in Neural Information Processing Systems; Schölkopf, B., Platt, J., Hoffman, T., Eds.; MIT Press: Cambridge, MA, USA, 2006; Volume 19.

- Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Bach, F., Blei, D., Eds.; PMLR: Cambridge, MA, USA, 2015; Volume 37, pp. 1180–1189.

- Daumé, H., III. Frustratingly Easy Domain Adaptation. In Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, Prague, Czech Republic, 7 June 2007; Zaenen, A., van den Bosch, A., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2007; pp. 256–263.

- Pan, S.J.; Kwok, J.T.; Yang, Q. Transfer learning via dimensionality reduction. In Proceedings of the 23rd National Conference on Artificial Intelligence-Volume 2, Chicago, IL, USA, 13–17 July 2008; AAAI Press: Washington, DC, USA, 2008; pp. 677–682.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A Comprehensive Survey on Transfer Learning. Proc. IEEE 2021, 109, 43–76.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 1–40.

- Schneider, T.; Klein, S.; Bastuck, M. Condition monitoring of hydraulic systems Data Set at ZeMA. Zenodo 2018, 46, 66121.

- Mayilvahanan, P.; Zimmermann, R.S.; Wiedemer, T.; Rusak, E.; Juhos, A.; Bethge, M.; Brendel, W. In Search of Forgotten Domain Generalization. In Proceedings of the ICML 2024 Workshop on Foundation Models in the Wild, Vienna, Austria, 21–27 July 2024.

- Chen, C.; Xie, W.; Huang, W.; Rong, Y.; Ding, X.; Huang, Y.; Xu, T.; Huang, J. Progressive feature alignment for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 21 November 2019; pp. 627–636.

- Yan, H.; Ding, Y.; Li, P.; Wang, Q.; Xu, Y.; Zuo, W. Mind the class weight bias: Weighted maximum mean discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 18–20 June 2017; pp. 2272–2281.

- Sun, B.; Saenko, K. Deep CORAL: Correlation alignment for deep domain adaptation. In Proceedings of the Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 October and 15–16 October 2016; Proceedings, Part III; Springer: Berlin/Heidelberg, Germany, 2016; pp. 443–450.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 1–35.

- Saito, K.; Watanabe, K.; Ushiku, Y.; Harada, T. Maximum classifier discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 18–20 June 2018; pp. 3723–3732.

- Grandvalet, Y.; Bengio, Y. Semi-supervised learning by entropy minimization. Adv. Neural Inf. Process. Syst. 2004, 17, 8.

- Wang, D.; Shelhamer, E.; Liu, S.; Olshausen, B.; Darrell, T. Tent: Fully Test-Time Adaptation by Entropy Minimization. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4–8 May 2021.

- Li, Y.; Wang, N.; Shi, J.; Hou, X.; Liu, J. Adaptive Batch Normalization for practical domain adaptation. Pattern Recognit. 2018, 80, 109–117.

- Wang, Q.; Fink, O.; Van Gool, L.; Dai, D. Continual test-time domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21 June 2022; pp. 7201–7211.

- Liang, J.; He, R.; Tan, T. A comprehensive survey on test-time adaptation under distribution shifts. Int. J. Comput. Vis. 2025, 133, 31–64.

- Long, M.; Cao, Y.; Wang, J.; Jordan, M. Learning Transferable Features with Deep Adaptation Networks. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Volume 37, pp. 97–105.

- Klein, S. Sensor data set, electromechanical cylinder at ZeMA testbed (2kHz). Zenodo 2018, 3, 11.

- Moll, J.; Kexel, C.; Pötzsch, S.; Rennoch, M.; Herrmann, A.S. Temperature affected guided wave propagation in a composite plate complementing the Open Guided Waves Platform. Sci. Data 2019, 6, 191.

- Lessmeier, C.; Kimotho, J.K.; Zimmer, D.; Sextro, W. Condition Monitoring of Bearing Damage in Electromechanical Drive Systems by Using Motor Current Signals of Electric Motors: A Benchmark Data Set for Data-Driven Classification. Eur. Conf. Progn. Health Manag. Soc. 2016, 3, 1577.

- Case Western Reserve University Bearing Data Center. Case Western Reserve University Bearing Data Set. 2019. Available online: https://engineering.case.edu/bearingdatacenter (accessed on 8 December 2025).

- Schnur, C.; Goodarzi, P.; Robin, Y.; Schauer, J.; Schütze, A. A Machine Learning Dataset of Artificial Inner Ring Damage on Cylindrical Roller Bearings Measured Under Varying Cross-Influences. Data 2025, 10, 77.

- Goodarzi, P.; Schauer, J.; Schütze, A. Robust Distribution-Aware Ensemble Learning for Multi-Sensor Systems. Sensors 2025, 25, 831.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019.

- Holzinger, A.; Saranti, A.; Molnar, C.; Biecek, P.; Samek, W. Explainable AI Methods-A Brief Overview. In xxAI-Beyond Explainable AI: International Workshop, Held in Conjunction with ICML 2020, July 18, 2020, Vienna, Austria, Revised and Extended Papers; Holzinger, A., Goebel, R., Fong, R., Moon, T., Müller, K.R., Samek, W., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 13–38.

- Schorr, C.; Goodarzi, P.; Chen, F.; Dahmen, T. Neuroscope: An Explainable AI Toolbox for Semantic Segmentation and Image Classification of Convolutional Neural Nets. Appl. Sci. 2021, 11, 2199.

- White, C.; Safari, M.; Sukthanker, R.; Ru, B.; Elsken, T.; Zela, A.; Dey, D.; Hutter, F. Neural architecture search: Insights from 1000 papers. arXiv 2023, arXiv:2301.08727.

- Zoph, B.; Le, Q.V. Neural architecture search with reinforcement learning. arXiv 2016, arXiv:1611.01578.

- Kornblith, S.; Shlens, J.; Le, Q.V. Do Better ImageNet Models Transfer Better? In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2656–2666.

- Goodarzi, P.; Klein, S.; Schütze, A.; Schneider, T. Comparing Different Feature Extraction Methods in Condition Monitoring Applications. In Proceedings of the 2023 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Kuala Lumpur, Malaysia, 22–25 May 2023.

- Badihi, H.; Zhang, Y.; Jiang, B.; Pillay, P.; Rakheja, S. A Comprehensive Review on Signal-Based and Model-Based Condition Monitoring of Wind Turbines: Fault Diagnosis and Lifetime Prognosis. Proc. IEEE 2022, 110, 754–806.

- Rajapaksha, N.; Jayasinghe, S.; Enshaei, H.; Jayarathne, N. Acoustic Analysis Based Condition Monitoring of Induction Motors: A Review. In Proceedings of the 2021 IEEE Southern Power Electronics Conference (SPEC), Kigali, Rwanda, 6–9 December 2021; pp. 1–10.

- Ao, S.I.; Gelman, L.; Karimi, H.R.; Tiboni, M. Advances in Machine Learning for Sensing and Condition Monitoring. Appl. Sci. 2022, 12, 12392.

- Snoek, J.; Larochelle, H.; Adams, R.P. Practical Bayesian Optimization of Machine Learning Algorithms. In Proceedings of the Advances in Neural Information Processing Systems; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: San Jose, CA, USA, 2012; Volume 25.

- Hutter, F.; Kotthoff, L.; Vanschoren, J. Automated Machine Learning-Methods, Systems, Challenges; Springer: Berlin/Heidelberg, Germany, 2019.

- Olszewski, R.T.; Maxion, R.; Siewiorek, D. Generalized Feature Extraction for Structural Pattern Recognition in Time-Series Data. Ph.D. Thesis, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA, USA, 2001.

- Mörchen, F. Time series feature extraction for data mining using DWT and DFT. Tech. Rep. 2003, 33, 71.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Kirch, W. (Ed.) Pearson’s Correlation Coefficient. In Encyclopedia of Public Health; Springer: Dordrecht, The Netherlands, 2008; pp. 1090–1091.

- Kononenko, I.; Šimec, E.; Robnik-Šikonja, M. Overcoming the Myopia of Inductive Learning Algorithms with RELIEFF. Appl. Intell. 1997, 7, 39–55.

- Lin, X.; Yang, F.; Zhou, L.; Yin, P.; Kong, H.; Xing, W.; Lu, X.; Jia, L.; Wang, Q.; Xu, G. A support vector machine-recursive feature elimination feature selection method based on artificial contrast variables and mutual information. J. Chromatogr. B 2012, 910, 149–155.

- Spearman, C. The Proof and Measurement of Association between Two Things. Am. J. Psychol. 1904, 15, 72–101.

- Yu, H.; Yang, J. A direct LDA algorithm for high-dimensional data—With application to face recognition. Pattern Recognit. 2001, 34, 2067–2070.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Aghbalou, A.; Staerman, G. Hypothesis transfer learning with surrogate classification losses: Generalization bounds through algorithmic stability. In Proceedings of the International Conference on Machine Learning, Baltimore, Maryland, 23–29 July 2023; PMLR: London, UK, 2023; pp. 280–303.

- Olah, C.; Mordvintsev, A.; Schubert, L. Feature Visualization. Distill 2017, 2, e7.

- Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240.

- Yang, J.; Zhou, K.; Li, Y.; Liu, Z. Generalized out-of-distribution detection: A survey. Int. J. Comput. Vis. 2024, 132, 5635–5662.

- Hawks, B.; Duarte, J.; Fraser, N.J.; Pappalardo, A.; Tran, N.; Umuroglu, Y. Ps and Qs: Quantization-Aware Pruning for Efficient Low Latency Neural Network Inference. Front. Artif. Intell. 2021, 4, 676564.

| Method | Source Data | Source Labels | Target Data (Train Time) | Target Labels |
|---|---|---|---|---|
| TL | ✓ | ✓ | ✓ | ✓ (few) |
| DA | ✓ | ✓ | ✓ | – |
| DG | ✓ | ✓ | – | – |

| Dataset | Cause of Shift | Source | Domain 1 | Domain 2 |
|---|---|---|---|---|
| EA | Device | Axis 3 | Axis 5 | Axis 7 |
| HS ∗ | Cooler | 100% | 20% | 3% |
| OGW | Pair of sensors | Sensors 1 and 6 | Sensors 1 and 2 | Sensors 2 and 4 |
| PU | Device group | Group 1 | Group 2 | Group 3 |
| CWRU | Motor load | 1 hp | 0 hp | 2 hp |
| UdS | OpC | 2 | 1 | 3 |

| Feature Extraction Methods | |
|---|---|
| ALA | Adaptive linear approximation [61] |
| BFC | Best Fourier coefficient [62] |
| BDW | Best Daubechies wavelets [62] |
| TFEx | Statistical features in time and frequency domains [55] |
| NoFE | No feature extraction |
| PCA | Principal component analysis [63] |
| StatMom | Statistical moments [11] |
| Feature Selection Methods | |
| Pearson | Pearson correlation coefficient [64] |
| RELIEFF | RELIEFF [65] |
| RFESVM | Recursive feature elimination support vector machines [66] |
| Spearman | Spearman correlation coefficient [67] |
| NoFS | No feature selection |
| Classification Methods | |
| LDAMahal | Linear discriminant analysis [68] with Mahalanobis distance classification |
| SVM | Support vector machine [69] with a radial basis function kernel |
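To make the three FESC stages in the table above concrete, the following is a minimal NumPy sketch of one possible stack: statistical moments for extraction, Pearson ranking for selection, and a Mahalanobis nearest-class-mean classifier. It is not the authors' implementation. The function and class names, the segment count, the number of selected features, and the synthetic signals are all assumptions, and the LDA projection of the LDAMahal classifier is omitted for brevity, so classification is done directly in the selected feature space with a pooled covariance.

```python
import numpy as np


def statistical_moments(signals, n_segments=4):
    """Mean, std, skewness, and kurtosis of each of n_segments signal segments."""
    feats = []
    for seg in np.array_split(signals, n_segments, axis=1):
        mu = seg.mean(axis=1)
        sd = seg.std(axis=1) + 1e-12  # avoid division by zero
        z = (seg - mu[:, None]) / sd[:, None]
        feats += [mu, sd, (z ** 3).mean(axis=1), (z ** 4).mean(axis=1)]
    return np.stack(feats, axis=1)


def pearson_select(X, y, k):
    """Indices of the k features with the largest |Pearson correlation| to y."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    denom = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum()) + 1e-12
    r = (Xc * yc[:, None]).sum(axis=0) / denom
    return np.argsort(-np.abs(r))[:k]


class MahalanobisClassifier:
    """Assigns each sample to the nearest class mean under a pooled covariance."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.means_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        centered = X - self.means_[np.searchsorted(self.classes_, y)]
        cov = centered.T @ centered / (len(X) - len(self.classes_))
        # Small ridge term keeps the covariance invertible.
        self.precision_ = np.linalg.pinv(cov + 1e-6 * np.eye(X.shape[1]))
        return self

    def predict(self, X):
        d = X[:, None, :] - self.means_[None, :, :]
        dist = np.einsum("ncf,fg,ncg->nc", d, self.precision_, d)
        return self.classes_[dist.argmin(axis=1)]


# Synthetic two-class signals (assumption): class 1 has doubled amplitude.
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 100)
signals = rng.normal(0.0, 1.0, size=(200, 256)) * (1.0 + y[:, None])

X = statistical_moments(signals)
X_train, y_train = X[::2], y[::2]
X_test, y_test = X[1::2], y[1::2]

idx = pearson_select(X_train, y_train.astype(float), k=4)
clf = MahalanobisClassifier().fit(X_train[:, idx], y_train)
accuracy = float((clf.predict(X_test[:, idx]) == y_test).mean())
print("selected feature indices:", idx, "test accuracy:", accuracy)
```

Any other combination from the table follows the same three-stage pattern, with a different function substituted at the extraction, selection, or classification step.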

(a) HP-Based Deep Ensemble

| Dataset | Source AUROC ↑ | Source FPR95 ↓ | Domain 1 AUROC ↑ | Domain 1 FPR95 ↓ | Domain 2 AUROC ↑ | Domain 2 FPR95 ↓ |
|---|---|---|---|---|---|---|
| CWRU | 100.0 | 0.0 | 100.0 | 0.0 | 100.0 | 0.0 |
| EA | 98.3 | 2.2 | 99.8 | 0.3 | 98.3 | 5.9 |
| HSa | 99.2 | 4.8 | 99.8 | 2.4 | 98.0 | 9.5 |
| HSv | 98.0 | 5.7 | 99.4 | 0.0 | 99.0 | 2.4 |
| OGW | 100.0 | 0.0 | 99.3 | 5.0 | 100.0 | 0.0 |
| PU | 100.0 | 0.0 | 94.8 | 18.8 | 100.0 | 0.0 |
| UdS | 99.8 | 0.0 | 99.7 | 0.1 | 100.0 | 0.0 |

(b) FESC Ensemble

| Dataset | Source AUROC ↑ | Source FPR95 ↓ | Domain 1 AUROC ↑ | Domain 1 FPR95 ↓ | Domain 2 AUROC ↑ | Domain 2 FPR95 ↓ |
|---|---|---|---|---|---|---|
| CWRU | 100.0 | 0.0 | 100.0 | 0.0 | 100.0 | 0.0 |
| EA | 98.2 | 5.6 | 99.6 | 0.4 | 86.9 | 47.3 |
| HSa | 100.0 | 0.0 | 99.7 | 0.9 | 96.4 | 20.6 |
| HSv | 100.0 | 0.0 | 100.0 | 0.0 | 95.5 | 29.1 |
| OGW | 100.0 | 0.0 | 98.6 | 6.3 | 100.0 | 0.0 |
| PU | 100.0 | 0.0 | 91.9 | 23.9 | 99.7 | 1.4 |
| UdS | 100.0 | 0.0 | 90.4 | 52.2 | 88.9 | 34.2 |

| Method | Source ACC | Source AUROC ↑ | Source FPR95 ↓ | Target ACC | Target AUROC ↑ | Target FPR95 ↓ |
|---|---|---|---|---|---|---|
| HP-based deep ensemble | 99.8 | 99.3 | 1.8 | 54.0 | 99.1 | 3.2 |
| FESC ensemble | 99.7 | 99.7 | 0.8 | 57.0 | 96.2 | 15.4 |
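The AUROC and FPR95 columns reported above follow standard definitions, which can be sketched in NumPy as below. This is a generic illustration rather than the evaluation code behind the tables: the function names, the toy scores, and the convention that higher scores indicate the positive class are assumptions, and which class the paper treats as positive is not specified in this excerpt.

```python
import numpy as np


def auroc(scores, labels):
    """AUROC as the probability that a positive outranks a negative (ties count 0.5)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def fpr_at_tpr(scores, labels, tpr=0.95):
    """False positive rate at the highest threshold reaching at least `tpr` recall."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    # Number of positives that must score at or above the threshold.
    k = int(np.ceil(tpr * len(pos)))
    threshold = np.sort(pos)[::-1][k - 1]
    return float((neg >= threshold).mean())


# Toy example (assumption): eight samples, four positives and four negatives.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

print("AUROC:", 100 * auroc(scores, labels))
print("FPR95:", 100 * fpr_at_tpr(scores, labels))
```

Both values are multiplied by 100 in the printout to match the percentage scale used in the tables. A higher AUROC and a lower FPR95 are better, as the arrows in the column headers indicate.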

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Goodarzi, P.; Schütze, A. Practical Test-Time Domain Adaptation for Industrial Condition Monitoring by Leveraging Normal-Class Data. Sensors 2025, 25, 7614. https://doi.org/10.3390/s25247614