1. Introduction

Anomaly detection is a critical task for safeguarding the integrity and trustworthiness of user-generated content, particularly in digital marketplaces and review-centric platforms [1]. As online reviews increasingly influence consumer decision-making and recommendation systems, identifying abnormal, manipulated, or semantically inconsistent content has become essential. Such anomalies may appear as incoherent text, injected noise, off-topic commentary, or adversarial inputs intended to mislead users or exploit algorithmic models [2,3,4,5,6,7]. Beyond conventional e-commerce settings, similar challenges arise in IoT-driven ecosystems, where user feedback is often accompanied by sensor-derived metadata such as device logs, timestamps, and operational signals. Detecting anomalies in these multi-modal environments is crucial not only for ensuring the authenticity of reviews but also for supporting trust in sensor-enabled platforms, smart devices, and embedded IoT systems that depend on reliable user- and device-generated information [8].

Traditional anomaly detection approaches typically rely on supervised learning models that demand large volumes of annotated data [9,10]. However, in open-domain review environments, obtaining labeled anomalies is both costly and inherently subjective. The vast diversity of linguistic expressions, writing styles, and domain-specific semantics further complicates the annotation process and limits the scalability of supervised techniques. This has spurred growing interest in developing label-efficient, and especially zero-shot, anomaly detection methods that generalize across domains without reliance on annotated training data [11].

Despite the transformative capabilities of large pre-trained language models (PLMs) in natural language processing (NLP), they remain underutilized for anomaly detection in multi-domain reviews [12,13]. Instruction-tuned models like FLAN-T5 have demonstrated notable zero-shot generalization for classification and reasoning tasks. Yet their application to unsupervised or label-free anomaly detection, especially in settings marked by linguistic heterogeneity and semantic drift, has not been fully explored. Existing models are often domain-specific, narrowly scoped, or unable to detect higher-order semantic inconsistencies in open-domain review corpora [14].

A novel zero-shot framework is presented for detecting fake reviews across diverse product domains, leveraging the semantic inference capabilities of instruction-tuned transformers. The architecture integrates three complementary components: (i) linguistic scoring based on language perplexity using FLAN-T5, (ii) structural anomaly detection through a transformer-based autoencoder, and (iii) semantic drift measurement between instruction-guided and unsupervised embeddings. To enhance applicability in IoT-enabled platforms, the framework incorporates sensor-derived metadata such as device logs, timestamps, and operational features, allowing cross-validation of anomalies between textual reviews and structured sensor signals. These components are fused into a unified hybrid anomaly score, enabling the detection of both syntactic irregularities and semantic inconsistencies without reliance on labeled data. Unlike earlier works where perplexity, reconstruction, or embedding drift are used in isolation, our framework fuses them through instruction-tuned semantic conditioning, producing a unified anomaly representation that jointly leverages textual fluency, structural regularity, and metadata-based contextual validation. The proposed integration enables domain-agnostic anomaly inference under zero-shot settings.

The framework is empirically evaluated on the large-scale Amazon Reviews 2023 corpus, comprising over 570 million reviews across 33 product categories, as well as metadata-rich sources such as the Historic Amazon Reviews datasets [15,16,17,18]. Results demonstrate strong zero-shot detection capabilities, robust generalization under domain shift, and measurable gains in few-shot settings, with the integration of metadata further improving anomaly resolution in sensor-driven contexts. To support interpretability, token-level saliency attribution is combined with feature-level attributions from sensor metadata, offering transparent explanations for anomaly decisions. By unifying prompt-based semantic inference, unsupervised structural modeling, latent embedding drift analysis, and metadata-driven validation, the proposed method addresses key challenges in scalable, explainable, and IoT-relevant review fraud detection.

This paper makes the following key contributions:

Development of a multi-modal zero-shot fake review detection framework using instruction-tuned transformers for domain-agnostic anomaly analysis.

Design of a hybrid anomaly scoring mechanism combining perplexity, reconstruction error, semantic drift, and metadata-informed signals.

Definition of a semantic drift metric for measuring contextual misalignment, extended with sensor-derived metadata validation.

Use of prompt ensemble strategies to improve generalization across diverse product domains and IoT-enabled contexts.

Integration of token-level saliency and metadata-level attribution to ensure transparent and interpretable anomaly detection.

Empirical validation on Amazon Reviews 2023, Amazon Review Data 2018, and Historic Amazon datasets, achieving up to 0.945 AUC with strong cross-domain generalization.

The remainder of this paper is structured as follows: Section 2 reviews related work; Section 3 presents the proposed methodology; Section 4 describes the dataset and development environment; Section 5 reports the experimental results; and Section 6 concludes with future research directions.

3. Proposed Zero-Shot Anomaly Detection Framework

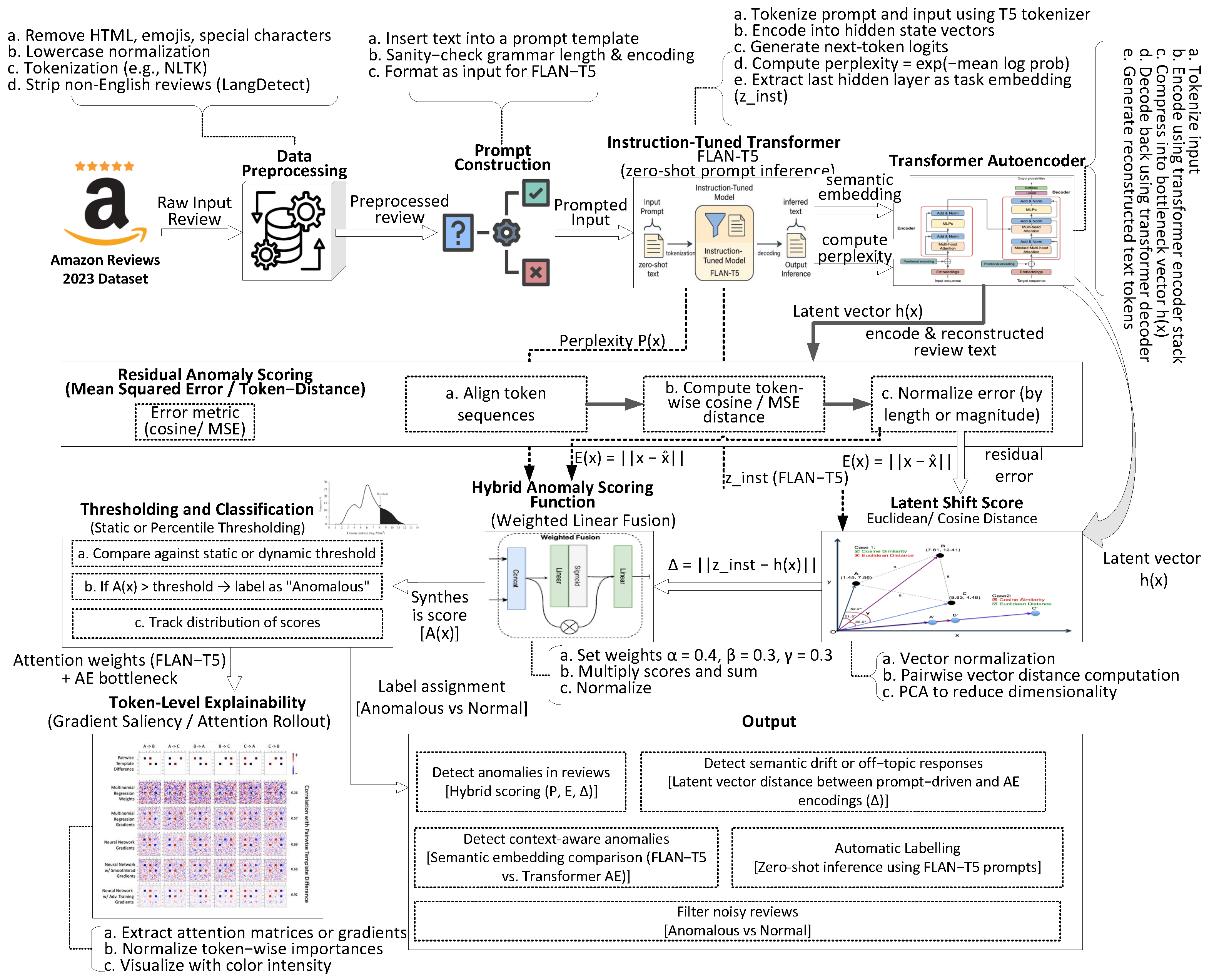

In this section, we present a modular, instruction-tuned framework for zero-shot detection of fraudulent user reviews across heterogeneous product domains, extended to incorporate sensor-derived metadata commonly observed in IoT-enabled platforms. As illustrated in Figure 1, the architecture is composed of three core components: an instruction-tuned semantic inference module (FLAN-T5), an unsupervised transformer autoencoder for structural representation learning, and a latent alignment mechanism for semantic drift measurement. Conventional anomaly detection approaches typically rely on a single indicator, such as language perplexity or reconstruction loss, which is insufficient for capturing the diverse manifestations of fraudulent reviews or inconsistencies across multi-modal signals. In contrast, the proposed architecture integrates complementary anomaly cues: perplexity from FLAN-T5 to assess linguistic fluency and coherence, reconstruction error from the transformer autoencoder to identify structural deviations, and embedding-level drift to quantify misalignment between instruction-conditioned and unsupervised representations. These signals are further cross-validated with metadata-informed anomaly indicators derived from attributes such as device logs, temporal usage patterns, and operational signals. The integration of textual and sensor-informed modalities yields a unified anomaly scoring framework that supports robust generalization across domains while operating without reliance on labeled anomaly datasets. This design enables both linguistic and metadata-driven anomaly detection, ensuring applicability in large-scale e-commerce and IoT-driven environments where reliability and interpretability are critical.

3.1. Text Review Acquisition and Preprocessing

The study employs the Amazon Reviews 2023 dataset, which covers reviews published between 1996 and 2023 and spans 33 product categories, including electronics, home appliances, books, and personal care. For analysis, a representative subset of 1.2 million reviews was sampled to maintain balanced coverage across domains. Synthetic anomalies were introduced through linguistic perturbations (token shuffling, repetition, and category mismatching) at a 15% injection rate to simulate distributional irregularities under zero-shot conditions. Table 2 summarizes the category-wise distribution and anomaly proportions.

To ensure compatibility with the instruction-tuned FLAN-T5 model and maintain linguistic consistency, a structured preprocessing pipeline is implemented. The process involves removing HTML tags, emojis, and special characters, followed by lowercasing and punctuation normalization. Tokenization is performed using the NLTK toolkit, and non-English entries are excluded via language identification with langdetect, given that FLAN-T5 is optimized for English. FLAN-T5 is selected as the primary language backbone due to its instruction-tuned architecture, which enables robust zero-shot generalization across diverse NLP tasks without task-specific fine-tuning. The model has been optimized through large-scale instruction following, yielding superior semantic alignment and compositional reasoning compared with standard T5 or GPT variants under limited-label conditions. This property aligns with the present study’s goal of achieving anomaly detection in a zero-shot, cross-domain setting while maintaining computational efficiency. The resulting cleaned and tokenized review representation constitutes the input for subsequent prompt-based inference.
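The cleaning steps described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors' pipeline: the regular expressions, the set of retained punctuation, and the function name `clean_review` are assumptions, and the NLTK tokenization and langdetect filtering steps are deliberately left out.

```python
import html
import re
import unicodedata

# Assumed patterns (not from the paper): HTML tags, characters outside a
# small whitelist, runs of repeated punctuation, and runs of whitespace.
TAG_RE = re.compile(r"<[^>]+>")
DISALLOWED_RE = re.compile(r"[^\w\s.,!?;:'\"()-]")
REPEAT_PUNCT_RE = re.compile(r"([.,!?;:])\1+")
SPACE_RE = re.compile(r"\s+")


def clean_review(text: str) -> str:
    """Strip markup, emojis, and special characters; lowercase; normalize punctuation."""
    text = html.unescape(text)                 # decode entities such as &amp;
    text = TAG_RE.sub(" ", text)               # remove HTML tags
    text = unicodedata.normalize("NFC", text)  # canonical Unicode form
    text = text.lower()
    text = DISALLOWED_RE.sub(" ", text)        # drops emojis and other symbols
    text = REPEAT_PUNCT_RE.sub(r"\1", text)    # "!!!" becomes "!"
    return SPACE_RE.sub(" ", text).strip()


print(clean_review("<p>GREAT   sound!!! 😀 &amp; fast</p>"))
```

In a fuller pipeline, language identification and tokenization would follow this step before the prompt is constructed.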

3.2. Prompt Construction for Instruction-Tuned Inference

Each preprocessed review x is reformulated into a natural-language instruction compatible with the instruction-tuned capabilities of FLAN-T5 [38]. To ensure that the notion of “anomalous” is semantically clear to the model, the prompt explicitly contextualizes the detection objective rather than relying on implicit model knowledge. The adopted template is defined as:

Determine whether the following review is anomalous, inconsistent, or machine-generated.

Review: <review text>

Such phrasing provides explicit linguistic grounding of anomaly-related concepts and aligns the input with FLAN-T5’s instruction-tuned distribution. Alternative formulations (e.g., “Is this review fake?” or “Does this text appear natural?”) were empirically evaluated on a 5000-sample validation subset, and the selected version yielded the most stable perplexity separation between authentic and perturbed reviews, balancing interpretability and detection sensitivity. The resulting prompt is tokenized using SentencePiece, and the encoded prompt is subsequently passed through the FLAN-T5 encoder to obtain a task-aware semantic embedding. The embedding captures instruction-guided semantics and is forwarded to both the perplexity computation and the semantic drift module (Section 3.6).
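As a small illustration of the prompt reformulation, the template quoted above can be filled in programmatically. The helper name `build_prompt` and the use of a newline between the instruction and the review are assumptions made for this sketch; tokenization and encoding with FLAN-T5 are not shown.

```python
# Template text taken from the section above; the line break between the
# instruction and the review is an assumed formatting choice.
PROMPT_TEMPLATE = (
    "Determine whether the following review is anomalous, inconsistent, "
    "or machine-generated.\nReview: {review}"
)


def build_prompt(review: str) -> str:
    """Wrap a preprocessed review in the instruction template."""
    return PROMPT_TEMPLATE.format(review=review.strip())


print(build_prompt("great sound, but the battery died after two days"))
```

Alternative phrasings, such as those compared on the validation subset, could be swapped in by changing only the template string.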

3.3. Semantic Embedding and Perplexity Computation Using FLAN-T5

The prompt sequence is processed by the instruction-tuned FLAN-T5 model to derive both a semantic embedding and a linguistic fluency score. The decoder operates autoregressively, generating for each token a conditional probability, given the preceding tokens, through its softmax output layer over the vocabulary. The sequence log-likelihood is computed as the sum of the token-level log-probabilities, and the corresponding perplexity score, representing linguistic irregularity, is defined as the exponential of the negative length-normalized log-likelihood. Lower perplexity values indicate syntactically and semantically well-formed text consistent with the instruction-tuned prior, whereas higher values suggest incoherent or anomalous content. Both the instruction-tuned embedding and the derived perplexity are provided to the hybrid anomaly scoring module, while the embedding additionally supports semantic-drift estimation in Section 3.6. All token probabilities are obtained directly from the pretrained FLAN-T5 decoder without fine-tuning, maintaining full zero-shot compatibility.
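The perplexity computation follows the standard definition, which can be sketched from per-token log-probabilities. The function name and the assumption that the log-probabilities have already been extracted from the decoder are illustrative; obtaining them from FLAN-T5 is not shown here.

```python
import math


def sequence_perplexity(token_log_probs):
    """Perplexity = exp(-(1/T) * sum of token log-probabilities)."""
    if not token_log_probs:
        raise ValueError("at least one token log-probability is required")
    log_likelihood = sum(token_log_probs)
    return math.exp(-log_likelihood / len(token_log_probs))


# Four tokens, each assigned probability 0.25 by the model.
uniform = [math.log(0.25)] * 4
print(sequence_perplexity(uniform))
```

A model that assigns every token probability 0.25 is as uncertain as a uniform choice among four options, so the perplexity in this example is 4 (up to floating-point rounding).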

3.4. Latent Representation via Transformer Autoencoder

In parallel with FLAN-T5, the original review x is processed by a transformer-based autoencoder trained exclusively on normal (non-anomalous) reviews. The encoder compresses the input into a latent structural representation, the decoder reconstructs the input from this representation, and the model is trained to minimize the token-wise reconstruction loss.

As the autoencoder is trained in an unsupervised manner, it assumes that the majority of historical reviews represent genuine user behaviour. Although some fraudulent or noisy samples may exist in the corpus, their impact is limited because the model learns broad structural regularities rather than explicit class boundaries. Future work will incorporate robust pre-filtering and iterative outlier removal to further reduce potential bias arising from residual anomalous data.

This latent representation captures the structural patterns of typical reviews and is passed to the residual error module (Section 3.5) and the semantic drift module (Section 3.6). The autoencoder consists of symmetric transformer encoder-decoder blocks with shared multi-head attention and feedforward layers.

3.5. Residual Error Calculation

To detect structural anomalies, the reconstructed output is compared with the original review x to measure reconstruction deviation from the learned normal patterns. The corresponding reconstruction error term is formally defined in Section 3.7. A higher value indicates stronger deviation, often arising from noise, unnatural syntax, or automatically generated text. This value is then passed to the hybrid anomaly scoring module for integration.
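One plausible form of the token-wise reconstruction error is the mean negative log-likelihood of the original tokens under the decoder's output distributions. The sketch below assumes that form; the exact loss used by the framework is not specified in this section, and the function name and input layout are hypothetical.

```python
import math


def reconstruction_error(token_ids, predicted_probs, eps=1e-12):
    """Mean negative log-likelihood of the original tokens.

    token_ids: original token index at each position.
    predicted_probs: one probability distribution over the vocabulary per
    position, as produced by the decoder (assumed input format).
    """
    if not token_ids or len(token_ids) != len(predicted_probs):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for token, distribution in zip(token_ids, predicted_probs):
        total += -math.log(max(distribution[token], eps))  # eps guards log(0)
    return total / len(token_ids)


faithful = reconstruction_error([0, 1], [[0.9, 0.1], [0.1, 0.9]])
distorted = reconstruction_error([0, 1], [[0.1, 0.9], [0.9, 0.1]])
print(faithful < distorted)
```

A review that the autoencoder reproduces with high confidence receives a low error, while one whose tokens are assigned low probability receives a high error.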

3.6. Latent Shift Measurement

Semantic drift is assessed by comparing the instruction-tuned embedding with the autoencoder’s latent vector. The latent shift metric, formally defined in Section 3.7, quantifies divergence between task-conditioned semantics and structural encoding. Large values of this metric typically signal off-topic, inconsistent, or adversarial content, which are key indicators of fraudulent reviews.
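To make the idea of latent shift concrete, the sketch below uses cosine distance between the two vectors. This choice of distance is an assumption for illustration, as is the requirement that both vectors share the same dimensionality (for example, after a projection step that is not shown); the framework's own metric may differ.

```python
import math


def latent_shift(instruction_embedding, latent_vector):
    """Cosine distance (1 - cosine similarity) between two equal-length vectors."""
    if len(instruction_embedding) != len(latent_vector):
        raise ValueError("vectors must have the same dimensionality")
    dot = sum(a * b for a, b in zip(instruction_embedding, latent_vector))
    norm_a = math.sqrt(sum(a * a for a in instruction_embedding))
    norm_b = math.sqrt(sum(b * b for b in latent_vector))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("zero vectors have no defined direction")
    return 1.0 - dot / (norm_a * norm_b)


print(latent_shift([1.0, 0.0], [1.0, 0.0]))  # aligned vectors
print(latent_shift([1.0, 0.0], [0.0, 1.0]))  # orthogonal vectors
```

Under this assumed metric, aligned representations score near 0 and increasingly divergent ones approach 2.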

3.7. Hybrid Anomaly Score

The framework synthesizes multiple anomaly indicators into a single unified score. Linguistic irregularities are captured by the perplexity from the instruction-tuned FLAN-T5 model, structural deviations by the reconstruction error from the transformer autoencoder, and semantic misalignment by the embedding drift between instruction-conditioned and unsupervised representations. An additional metadata-driven component reflects inconsistencies in auxiliary features such as timestamps, verification flags, and category distributions, serving as proxies for sensor-derived signals. The combined hybrid anomaly score is expressed as a weighted linear combination of these four indicators, with one weight coefficient per indicator.

The weights are optimized via grid search on a held-out validation subset containing synthetically perturbed samples that emulate linguistic or structural irregularities. Because the framework operates in a zero-shot mode without labelled anomalies, this procedure calibrates the relative sensitivity of each indicator rather than fitting to real fraud patterns. Each coefficient has an intuitive interpretation: the four weights emphasize linguistic irregularity, structural deviation, semantic drift, and metadata inconsistency, respectively. The relative magnitudes of these coefficients may be adjusted to align with specific application priorities, for instance by raising one weight for domains dominated by spam-like text or another for semantically complex expert reviews. The metadata fusion weights are likewise chosen on synthetic validation; no metadata-specific parameters were fit on labelled benchmarks.

Throughout both the main and cross-benchmark evaluations, the hybrid weights were retained from the configuration optimized on synthetic perturbations, and only the detection threshold was empirically determined. Maintaining fixed weight coefficients in this manner ensured adherence to the zero-shot paradigm and eliminated any possibility of supervised bias arising from labelled data. The unified formulation supports semantic drift and metadata-based indicators, thereby verifying structural, semantic, and metadata-informed dimensions under zero-shot conditions. While perplexity provides a useful linguistic irregularity signal, it may occasionally assign higher anomaly scores to legitimate reviews that use creative, technical, or minority-specific language. In our formulation, this risk is mitigated by combining perplexity with structural, semantic drift, and metadata-based indicators, balancing stylistic variance against contextual consistency.
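The linear fusion described in this subsection can be sketched as a weighted sum. The weight values below are hypothetical placeholders rather than the tuned coefficients, and the sketch assumes that each indicator has already been normalized to a comparable range and that the weights are non-negative and sum to one.

```python
def hybrid_score(perplexity, recon_error, drift, metadata, weights):
    """Weighted linear fusion of four pre-normalized anomaly indicators.

    weights: (w_perplexity, w_reconstruction, w_drift, w_metadata); the
    non-negativity and sum-to-one checks are assumptions of this sketch.
    """
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    w_p, w_r, w_d, w_m = weights
    return w_p * perplexity + w_r * recon_error + w_d * drift + w_m * metadata


# Hypothetical weights and indicator values, for illustration only.
example_weights = (0.4, 0.3, 0.2, 0.1)
print(hybrid_score(0.8, 0.4, 0.2, 0.0, example_weights))
```

Because the weights stay fixed after calibration on synthetic perturbations, the same function applies unchanged across the main and cross-benchmark evaluations; only the downstream threshold varies.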

3.8. Thresholding and Anomaly Classification

The final classification is obtained by thresholding the hybrid score: a review whose score exceeds the threshold is labelled anomalous, and all other reviews are labelled normal. The threshold can be statically set or dynamically estimated using quantiles (e.g., the 95th percentile of validation scores). The binary label designates the review as anomalous (1) or normal (0).
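The quantile-based option can be sketched as follows. The linear-interpolation percentile and the strict inequality in `classify` are assumptions of this example; other percentile conventions would shift the threshold slightly.

```python
def quantile_threshold(scores, q=0.95):
    """Estimate a threshold as the q-th quantile, with linear interpolation."""
    if not scores:
        raise ValueError("scores must be non-empty")
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must lie in [0, 1]")
    ordered = sorted(scores)
    position = q * (len(ordered) - 1)
    lower = int(position)
    upper = min(lower + 1, len(ordered) - 1)
    fraction = position - lower
    return ordered[lower] + fraction * (ordered[upper] - ordered[lower])


def classify(score, threshold):
    """Return 1 (anomalous) if the score exceeds the threshold, else 0 (normal)."""
    return 1 if score > threshold else 0


validation_scores = [i / 100 for i in range(101)]  # synthetic scores 0.00..1.00
tau = quantile_threshold(validation_scores, q=0.95)
print(classify(0.99, tau), classify(0.50, tau))
```

With a 95th-percentile threshold, roughly 5% of validation scores fall above the cut-off, which fixes the expected flag rate when the score distribution at test time matches validation.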

3.9. Token-Level Explainability

For interpretability, we extract token-level saliency from both FLAN-T5 and the autoencoder. Using input–gradient products or attention weights, we compute a saliency map in which each entry quantifies the contribution of the corresponding token to the final anomaly score. These maps help human reviewers visualize which parts of the review triggered anomaly flags, improving trust and facilitating manual audits in moderation systems.
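For reference, one common form of the input–gradient product is given below. The notation is assumed for this illustration and is not taken from the paper: $\mathbf{e}_i$ denotes the input embedding of token $i$, $S(x)$ the hybrid anomaly score, $T$ the sequence length, $s_i$ the raw saliency, and $\tilde{s}_i$ its normalized value.

```latex
s_i = \left| \mathbf{e}_i^{\top} \, \nabla_{\mathbf{e}_i} S(x) \right|,
\qquad
\tilde{s}_i = \frac{s_i}{\sum_{j=1}^{T} s_j},
\qquad i = 1, \dots, T
```

Normalizing by the sum makes saliency maps comparable across reviews of different lengths, which is convenient when they are displayed side by side during manual audits.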

The complete operational flow of the proposed framework is summarized in Algorithm 1. The system begins by preprocessing the review and reformulating it into an instruction-aligned prompt suitable for the FLAN-T5 model. FLAN-T5 then generates a semantic embedding and computes sequence-level perplexity, capturing linguistic irregularities. Simultaneously, the original review is encoded and reconstructed by a transformer-based autoencoder trained on normal data to capture structural regularities. The resulting reconstruction error highlights syntactic deviations, while the semantic drift, quantified as the distance between the FLAN-T5 embedding and the autoencoder’s latent vector, captures contextual misalignment. These three complementary signals are linearly fused into a hybrid anomaly score, which is then thresholded to assign a binary anomaly label. This modular design enables interpretable, domain-agnostic, and label-free anomaly detection, offering actionable outputs such as anomaly classification, score-based ranking, and token-level saliency maps for review audits and moderation workflows.

3.10. Metadata-Informed Score

For each review x with metadata {timestamp, verified, rating, category} and its text embedding, a metadata anomaly score is computed as a weighted sum of four normalized components: temporal deviation, verification prior deviation, rating–sentiment inconsistency, and category–text semantic misalignment. Each component is standardized within the product category to ensure domain comparability.

Temporal deviation is obtained from the purchase–review interval via a robust z-score based on the category-wise median and median absolute deviation, with an additional parameter that controls saturation.
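The robust z-score with saturation can be sketched as below. The use of `tanh` as the saturating function, the default value of `tau`, and the handling of a zero MAD are assumptions made for this illustration; the section does not state the exact functional form.

```python
import math
import statistics


def temporal_deviation(interval, category_intervals, tau=3.0):
    """Saturated robust z-score of a purchase-review interval.

    category_intervals: intervals observed in the same product category.
    tau: assumed saturation parameter; larger values saturate more slowly.
    """
    median = statistics.median(category_intervals)
    mad = statistics.median([abs(v - median) for v in category_intervals])
    if mad == 0:
        # Degenerate category: every deviation from the median is maximal.
        return 0.0 if interval == median else 1.0
    robust_z = abs(interval - median) / mad
    return math.tanh(robust_z / tau)  # maps [0, inf) into [0, 1)


days = [1, 2, 3, 4, 5, 6, 7]  # hypothetical intervals in days
print(temporal_deviation(4, days), temporal_deviation(40, days))
```

Using the median and MAD rather than the mean and standard deviation keeps the statistic stable when a category contains a few extreme intervals.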

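| Algorithm 1 Zero-Shot Anomaly Detection with Instruction-Tuned Transformers |
| --- |
| Require: input review x; FLAN-T5 model; autoencoder; hybrid weights; threshold |
| Ensure: anomaly score; binary label |
| 1: Clean and tokenize review x |
| 2: Construct prompt “Is the following review anomalous? Review: x” |
| 3: Obtain the semantic embedding and sequence perplexity from FLAN-T5 |
| 4: Encode and reconstruct x with the autoencoder |
| 5: Compute the reconstruction error and the semantic drift |
| 6: Compute the hybrid anomaly score |
| 7: return the anomaly score and the thresholded label |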

Verification prior deviation reflects the rarity of unverified reviews in a category.

Rating–sentiment inconsistency measures the polarity mismatch between the normalized sentiment score inferred from the text and the rescaled rating.

Category–text misalignment quantifies the semantic deviation between the review embedding and its category centroid, with a parameter that controls saturation.

All components use robust, category-specific normalization; missing metadata are imputed with medians and penalized by a small additive term. Unless stated otherwise, the component weights are fixed, selected on synthetic validation consistent with the hybrid-weight optimization. The metadata term is integrated into the hybrid anomaly score in Equation (5) through its own weighting coefficient.
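A minimal sketch of the rating–sentiment component and the weighted metadata aggregation follows. The rescaling of star ratings to [-1, 1], the halved absolute difference, the component names, and every numeric value are assumptions chosen for illustration and do not come from the paper.

```python
def rating_sentiment_inconsistency(sentiment, rating, max_stars=5):
    """Polarity mismatch in [0, 1] between sentiment in [-1, 1] and a star rating."""
    rescaled = 2.0 * (rating - 1) / (max_stars - 1) - 1.0  # 1..max_stars -> [-1, 1]
    return abs(sentiment - rescaled) / 2.0


def metadata_score(components, weights, medians, missing_penalty=0.05):
    """Weighted sum of normalized components with median imputation.

    components: name -> value in [0, 1], or None when the field is missing.
    medians: name -> category median used for imputation (assumed given).
    """
    score = 0.0
    for name, weight in weights.items():
        value = components.get(name)
        if value is None:
            value = medians[name]     # impute with the category median
            score += missing_penalty  # small additive penalty per missing field
        score += weight * value
    return score


names = ("temporal", "verification", "rating", "category")
weights = {n: 0.25 for n in names}    # hypothetical equal weights
medians = {n: 0.1 for n in names}     # hypothetical category medians
components = {"temporal": 0.2, "verification": None, "rating": 0.6, "category": 0.4}
print(metadata_score(components, weights, medians))
```

A five-star rating paired with strongly negative text yields the maximum inconsistency of 1 under this rescaling, while matched polarity yields 0.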

4. Development Environment

The Amazon Reviews 2023 dataset is used as the primary input source, accessed via the Hugging Face Datasets API and stored in Apache Arrow format for efficient streaming. Each entry is a structured tuple, as given in Equation (15). Although current experiments are performed offline using static Amazon review datasets, the framework is architecturally compatible with real-time and edge-streaming deployment.

Only English-language reviews that satisfy the token-count filter are retained. Text is normalized using Unicode NFC, lowercased, and tokenized using the SentencePiece tokenizer from the FLAN-T5 suite. The sequence is converted into token IDs, with padding or truncation to a fixed length of 512.

Processed inputs are passed to three downstream modules:

The FLAN-T5 encoder, using prompts such as “Is this review suspicious?”, produces task-conditioned embeddings and computes sequence perplexity.

A transformer-based autoencoder encodes the same input into a latent vector and reconstructs it, enabling computation of the reconstruction error.

A hybrid scoring module combines perplexity, reconstruction error, and semantic drift to yield a scalar anomaly score.

To evaluate zero-shot generalization, controlled synthetic anomalies were introduced through lexical perturbations (e.g., token shuffling, redundancy injection) and domain-inconsistent insertions (e.g., mismatched category context). These distortions serve as baseline perturbations to benchmark model sensitivity and cross-domain robustness rather than to fully emulate real-world spam or LLM-generated fakes. Detailed runtime configuration is provided in Table 3. Experiments were conducted on a workstation equipped with an NVIDIA RTX A6000 GPU (48 GB VRAM), an AMD Threadripper 3970X CPU (32 cores, 3.7 GHz), and 256 GB RAM, using PyTorch 2.2 and Hugging Face Transformers v4.40. The transformer autoencoder was trained for 10 epochs on 1.2 million reviews, requiring approximately 9 h. FLAN-T5 inference operates in zero-shot mode without gradient updates, averaging 0.41 s per review (≈8780 reviews/h). Memory utilization during inference remained below 12 GB VRAM, confirming the feasibility of running the model on single-GPU systems.

Dataset Summary

We utilize the Amazon Reviews 2023 dataset [16], which contains over 570 million reviews spanning 33 product categories from 1996 to 2023. Each instance is structured as a tuple comprising the review text and its metadata fields (rating, verified, timestamp, category).

The reviewText field serves as the primary input, preprocessed and tokenized with SentencePiece (FLAN-T5) into sequences of length 512. Metadata fields (rating, verified, timestamp, category) are treated as proxies for sensor-derived signals, supporting domain-shift simulation and metadata-informed anomaly validation. Metadata fields used in the metadata-informed score comprise purchase-to-review interval proxies (timestamp-derived), verification status, star rating, and category, with per-category robust statistics (median/MAD and empirical priors) computed on the Amazon 2023 corpus; category centroids for the category–text misalignment term are obtained from instruction-tuned embeddings over the same splits.

A representative subset of ten categories is sampled, each containing approximately 100,000 reviews. To simulate anomalous behavior under label-free conditions, 15% of the reviews in each category are synthetically perturbed. Lexical perturbations include three operations: (i) token shuffling to disrupt syntactic order, (ii) synonym substitution using WordNet to alter local semantics, and (iii) random repetition or omission of high-frequency tokens to emulate noise typical of automated or low-quality content. Cross-domain mismatches are additionally introduced by reassigning reviews to incorrect category labels. The perturbation ratio is maintained uniformly across categories to preserve class balance. The complete one-million-review subset, comprising both original and perturbed instances, serves as the zero-shot inference dataset, as the framework does not rely on labeled training data. The perturbation procedure follows previously established text-anomaly simulation strategies [24,27].
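The injection procedure can be illustrated with a simplified sketch covering two of the lexical operations, token shuffling and token repetition. Synonym substitution, token omission, and category reassignment are omitted, and the function names, whitespace tokenization, and seed handling are assumptions of this example rather than the authors' implementation.

```python
import random


def shuffle_tokens(tokens, rng):
    """Return a copy of the tokens in random order (may occasionally be unchanged)."""
    shuffled = tokens[:]
    rng.shuffle(shuffled)
    return shuffled


def repeat_token(tokens, rng, times=2):
    """Insert `times` extra copies of one randomly chosen token."""
    if not tokens:
        return tokens[:]
    i = rng.randrange(len(tokens))
    return tokens[:i] + [tokens[i]] * times + tokens[i:]


def inject_anomalies(reviews, rate=0.15, seed=0):
    """Perturb a fixed fraction of reviews; return (text, label) pairs."""
    rng = random.Random(seed)
    n_anomalous = round(rate * len(reviews))
    chosen = set(rng.sample(range(len(reviews)), n_anomalous))
    labelled = []
    for index, text in enumerate(reviews):
        if index in chosen:
            operation = rng.choice([shuffle_tokens, repeat_token])
            labelled.append((" ".join(operation(text.split(), rng)), 1))
        else:
            labelled.append((text, 0))
    return labelled


sample = [f"review number {i} works as described" for i in range(100)]
print(sum(label for _, label in inject_anomalies(sample)))
```

The labels produced here are used only for evaluation; the detector itself never sees them, which keeps the protocol label-free.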

To link the zero-shot anomaly design with conventional fake-review benchmarks, the perturbation parameters used to generate synthetic anomalies (token shuffling, synonym substitution, repetition, and category mismatch) were calibrated against the statistical characteristics of the Amazon Fake Reviews [39] and YelpChi [40] datasets. Calibration ensured that the injected anomalies maintained statistical properties such as n-gram entropy, sentiment polarity balance, and length distribution comparable to authentic fraudulent samples, thereby preserving the integrity of the unsupervised evaluation protocol. For the Variational Autoencoder component, training data were drawn from the Amazon Reviews 2023 corpus, restricted to verified reviews exhibiting consistent rating–sentiment polarity and typical text length (within one median absolute deviation of the category median). The subset thus represents linguistically regular, high-confidence samples used to model the manifold of normal behavior. Data were partitioned category-wise with an 80/20 split for training and validation, ensuring that all test-time evaluations (both synthetic perturbations and cross-benchmark experiments) used reviews disjoint from those employed in VAE training.

5. Experimental Results

We empirically evaluate our instruction-tuned, hybrid anomaly detection framework under zero-shot conditions using the large-scale Amazon Reviews 2023 corpus. Performance was assessed across multiple anomaly types, including linguistic perturbations and semantic drift, under both in-domain and cross-domain settings. Metrics such as AUC, F1-Score, and Precision were used to quantify classification accuracy [41,42]. The results also include ablation analyses and interpretability assessments to validate each component of the proposed pipeline.

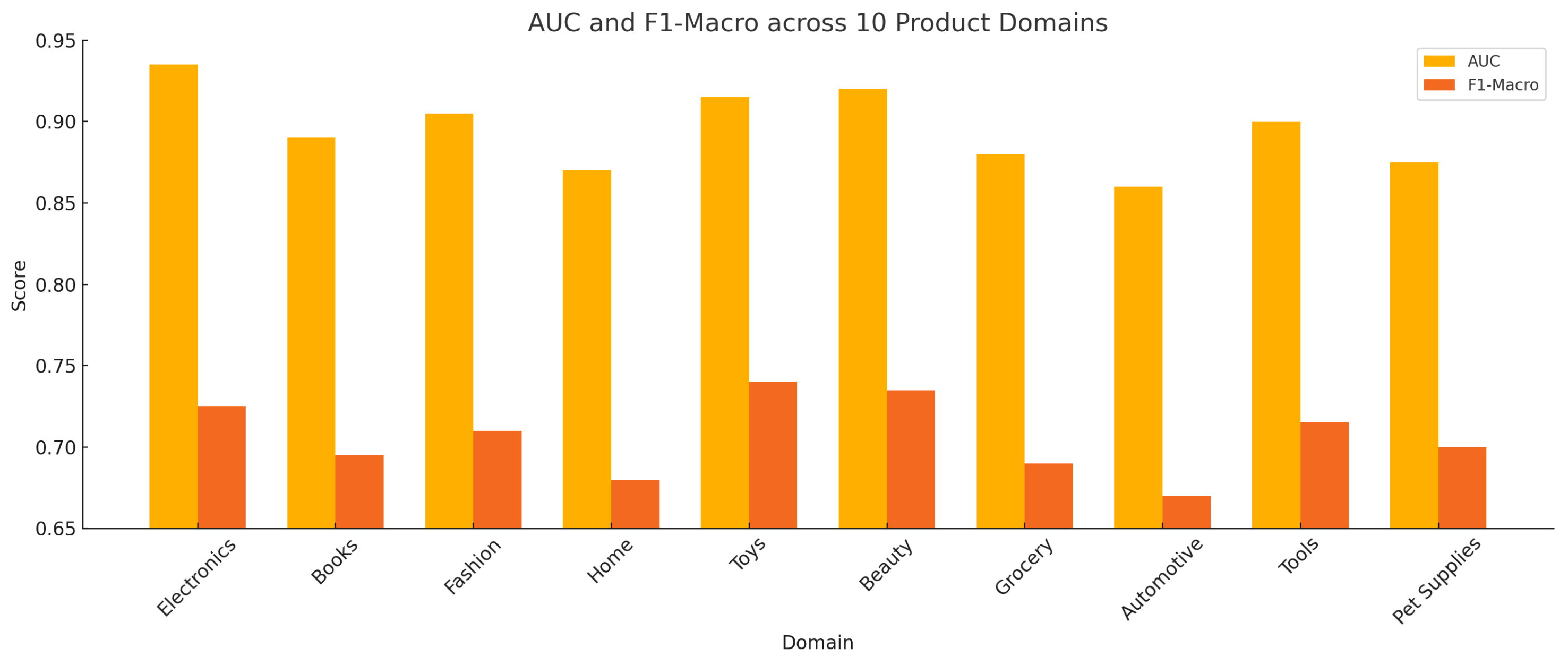

Figure 2 reports the AUC and F1-Macro scores of the proposed instruction-tuned anomaly detection framework across 10 product categories in the Amazon Reviews 2023 dataset. The model was evaluated in a zero-shot setting, without any domain-specific fine-tuning. AUC values range from 0.860 (Automotive) to 0.935 (Electronics), indicating strong discriminative capability in anomaly ranking. Corresponding F1-Macro scores span 0.670 to 0.740, reflecting balanced precision and recall under class imbalance.

Performance varies across domains due to differences in textual patterns and semantic structure. Categories with expressive and sentiment-rich language (e.g., Toys, Beauty) yield higher F1 scores, while more technical or sparse domains (e.g., Automotive, Home) present reduced separation between normal and anomalous instances. The hybrid anomaly scoring function, as defined in Equation (7), integrates perplexity, residual reconstruction error, and semantic drift to consistently separate anomalous reviews from in-domain content across diverse categories.

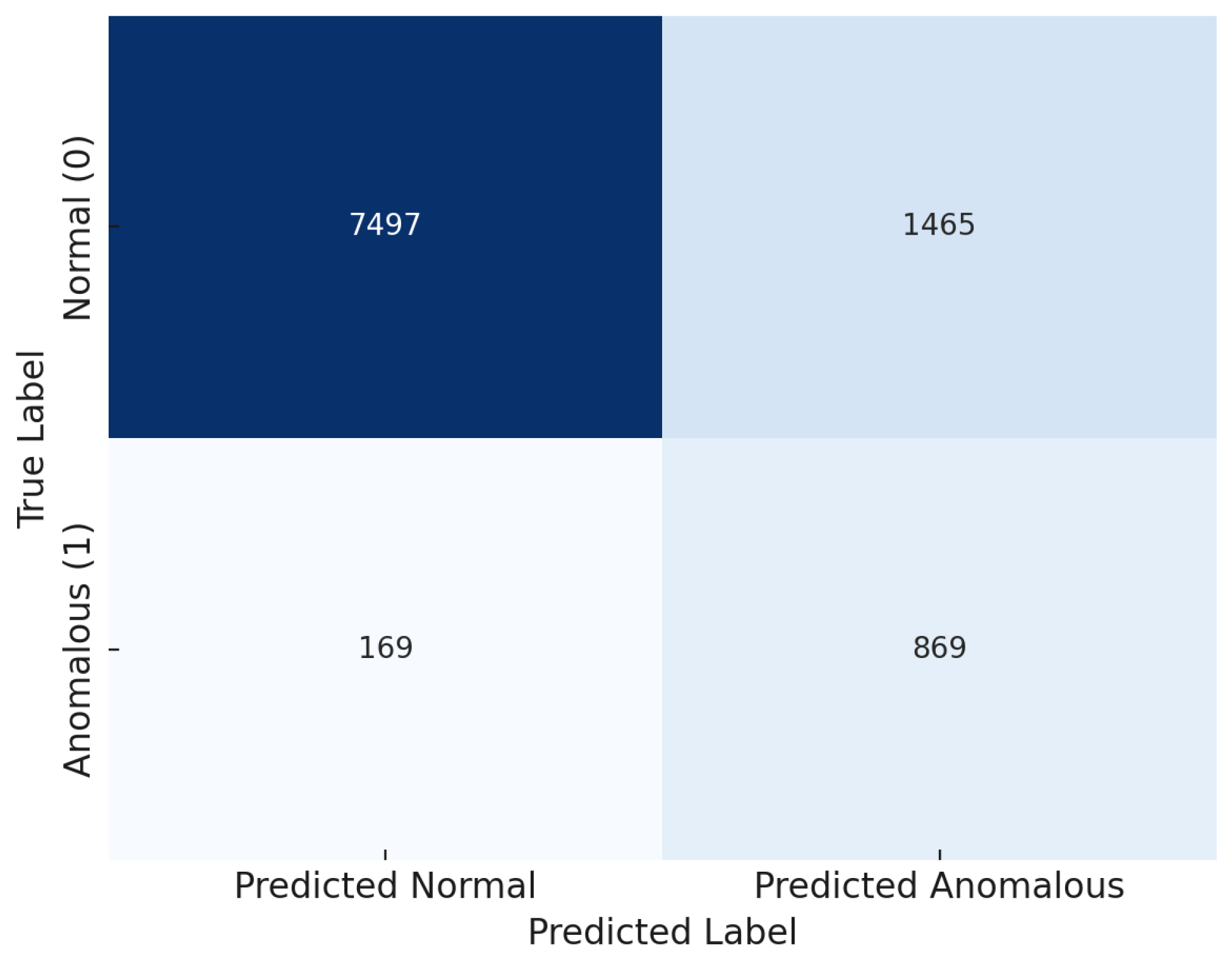

Figure 3 shows the combined confusion matrix across all 10 product domains. The model achieves a high true negative rate, correctly classifying the majority of normal reviews, with a moderate number of false positives. True positive counts confirm effective anomaly identification, while false negatives remain controlled, indicating reliable sensitivity. The aggregated matrix demonstrates the model’s consistent decision boundaries across semantically diverse inputs under zero-shot conditions.

Figure 4 presents the cross-domain AUC scores in a Train-on-X/Test-on-Y configuration across five product categories. Each cell quantifies the model’s anomaly detection performance when trained on a single source domain and evaluated on a distinct target domain. The diagonal represents in-domain performance, while off-diagonal values reflect zero-shot generalization. AUC values remain consistently high (0.82–0.94), indicating that the hybrid scoring framework transfers well across domains with varying linguistic and structural properties. Performance is slightly reduced when source and target domains differ significantly in vocabulary or review style, but overall robustness is maintained.

Table 4 quantifies domain-shift robustness by comparing in-domain AUC with the average cross-domain AUC for each of the 10 product categories. The relative performance drop is calculated as the percentage decrease from in-domain to average out-of-domain AUC. Most domains exhibit controlled degradation between 3% and 6%, indicating stable zero-shot generalization. Larger drops are observed in Electronics, Toys, and Books, suggesting higher domain-specific dependency, while Home, Grocery, and Beauty demonstrate stronger cross-domain consistency under instruction-tuned inference.
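The relative performance drop described above is a simple percentage computation, sketched below. The AUC values in the example are hypothetical and are not taken from Table 4; the function name is likewise illustrative.

```python
def relative_drop_pct(in_domain_auc, cross_domain_aucs):
    """Percentage decrease from in-domain AUC to the mean cross-domain AUC."""
    if not cross_domain_aucs:
        raise ValueError("at least one cross-domain AUC is required")
    average = sum(cross_domain_aucs) / len(cross_domain_aucs)
    return 100.0 * (in_domain_auc - average) / in_domain_auc


# Hypothetical values for illustration only.
print(round(relative_drop_pct(0.90, [0.85, 0.87]), 2))
```

For these illustrative numbers the mean cross-domain AUC is 0.86, giving a drop of about 4.44%, which would fall inside the 3% to 6% band reported for most domains.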

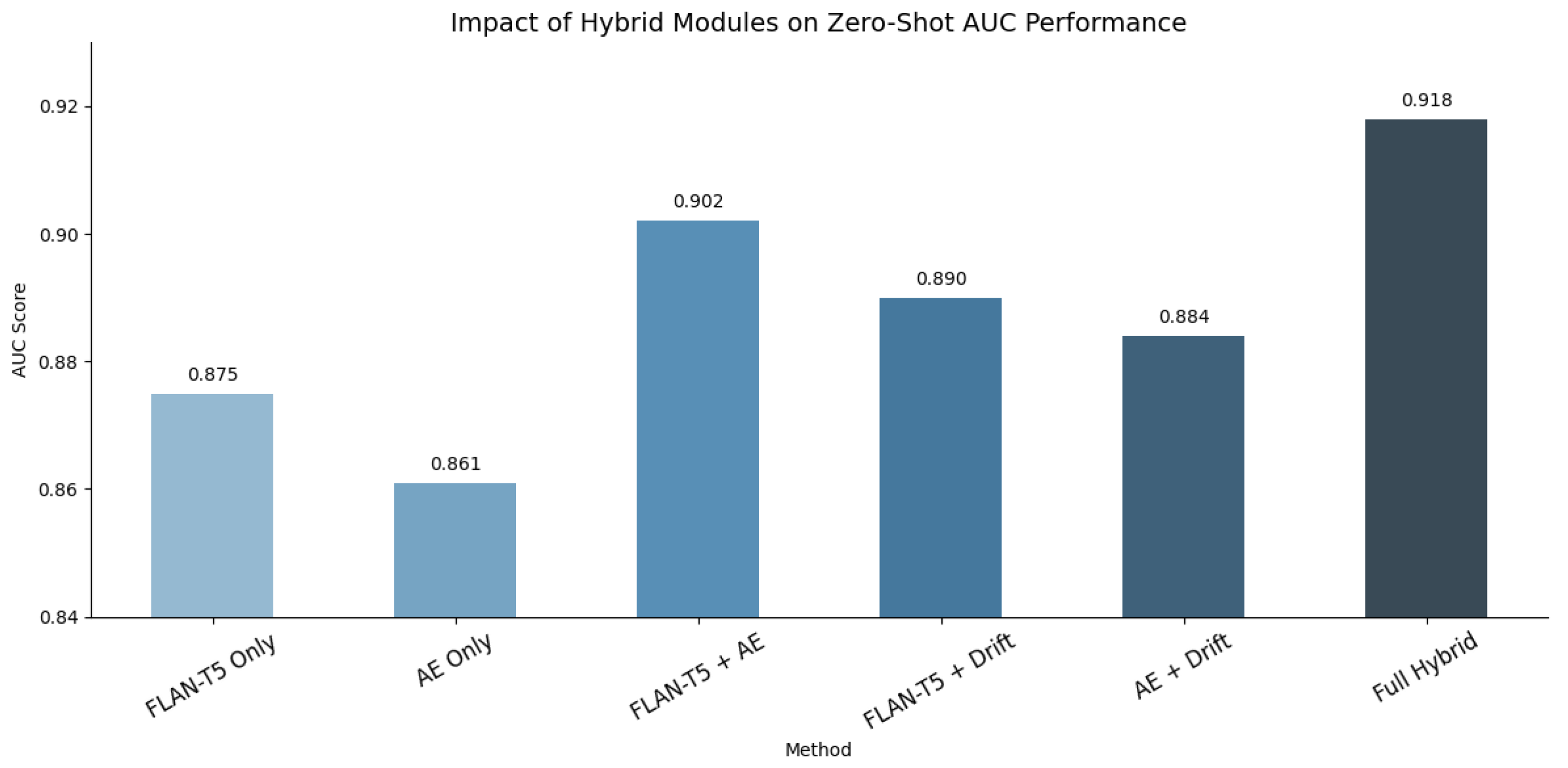

Figure 5 presents the AUC scores for various configurations of the anomaly detection framework, isolating and combining different modules to assess their individual contributions. The FLAN-T5 Only and AE Only configurations achieve AUC scores of 0.875 and 0.861 respectively, indicating that both language modeling and reconstruction capture useful anomaly signals. When fused (FLAN-T5 + AE), performance increases to 0.902, confirming the complementary nature of fluency-based and latent space anomaly detection. Further integration with semantic drift yields the best performance in the Full Hybrid setup (AUC = 0.918), demonstrating that all three components contribute to robust and generalizable detection under zero-shot conditions.

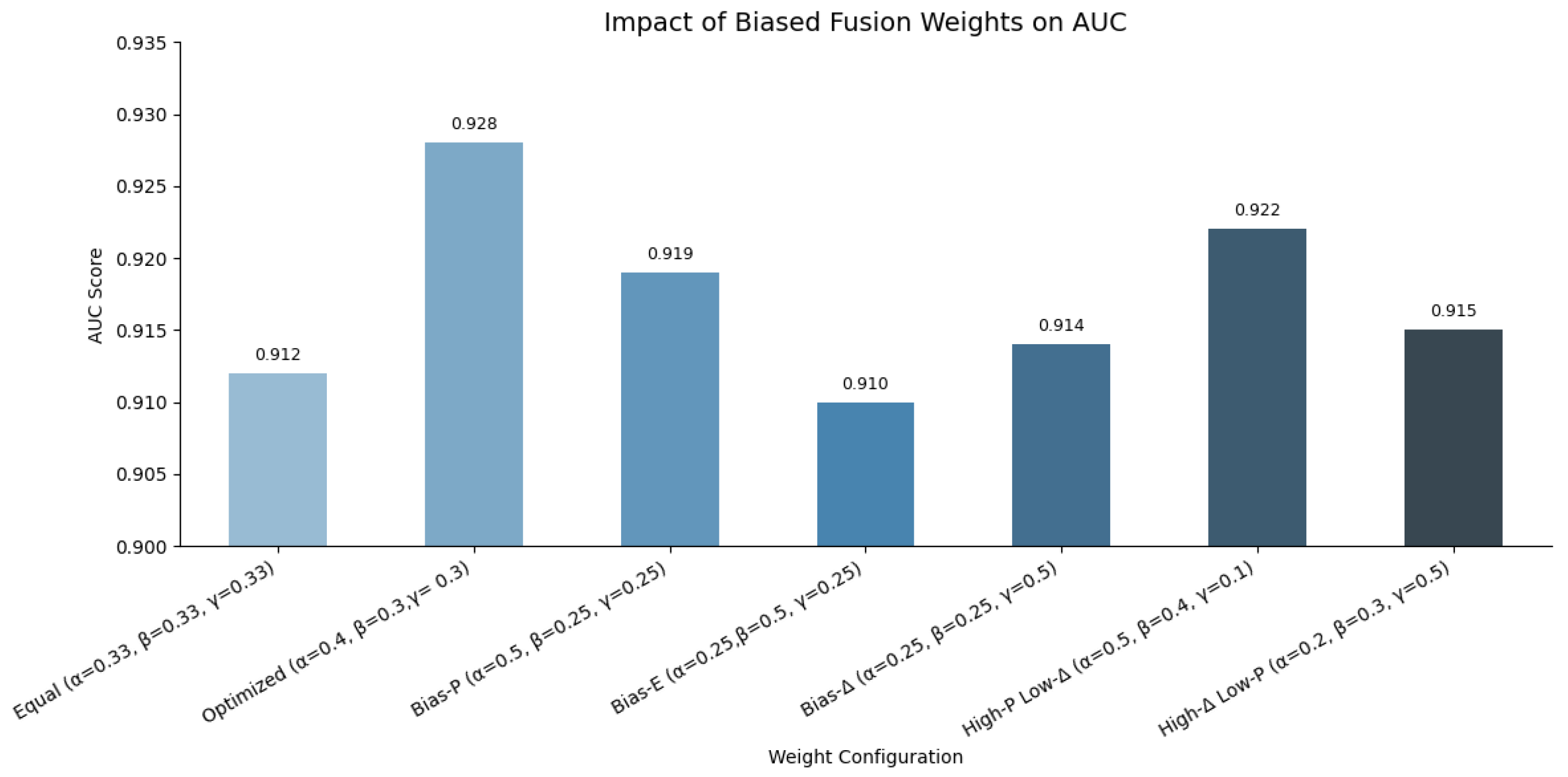

Figure 6 presents a comparative analysis of AUC scores across multiple configurations of the hybrid anomaly scoring function’s weights. Each configuration adjusts the relative importance of perplexity, reconstruction error, and semantic drift in the hybrid score. The equal-weight setting achieves a baseline AUC of 0.912, and optimizing the weights improves performance to 0.928. Biasing the weights towards specific components demonstrates the effect of overemphasizing individual signals. While the two biased configurations still achieve competitive AUCs (0.922 and 0.914, respectively), the optimized balanced setting outperforms all others. This confirms that combining syntactic fluency, latent reconstruction fidelity, and semantic embedding drift in calibrated proportions leads to the most effective anomaly detection under zero-shot conditions.

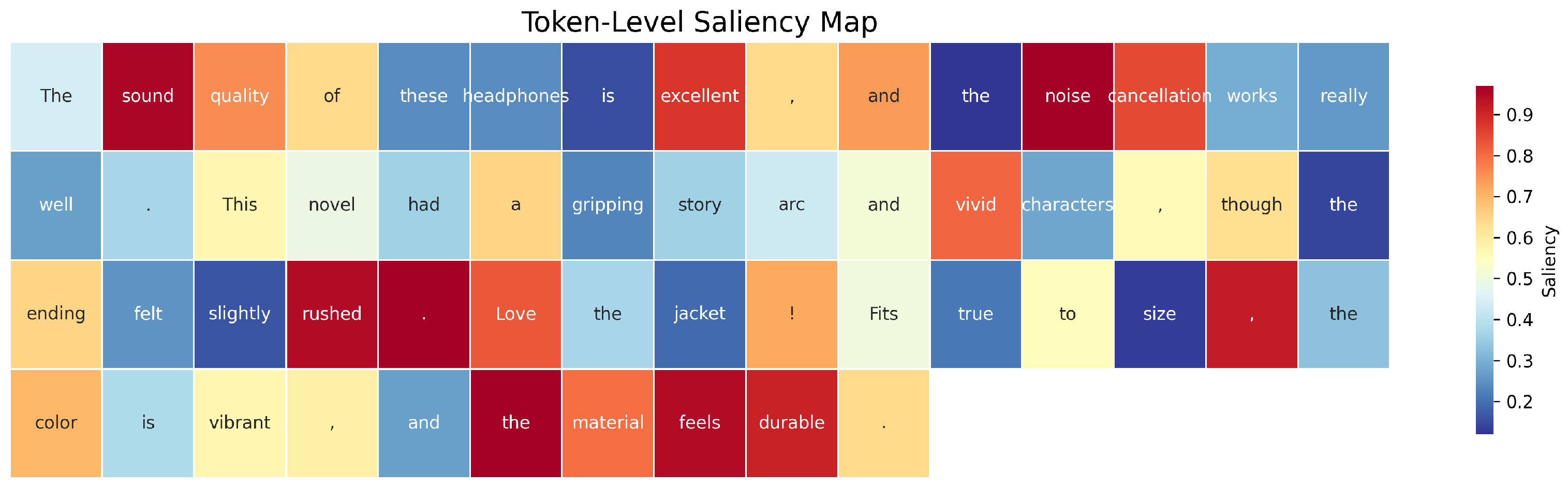

To enable fine-grained interpretability and model introspection, we extract semantically coherent review samples from three distinct product domains, i.e., electronics, books, and fashion, using the Amazon Reviews 2023 corpus. Each review is filtered based on token length (15–25 tokens) to approximate typical user expressions and maintain lexical richness. The selected reviews are tokenized and merged into a contiguous sequence of 55 tokens, preserving domain-specific linguistic patterns. Saliency scores are subsequently computed for each token using attribution techniques (e.g., gradient-based methods, integrated gradients), allowing quantification of token-level contribution to the model’s anomaly scoring. The resulting token stream supports detailed evaluation of how domain-specific linguistic structures contribute to anomaly detection decisions under multi-domain input conditions, as illustrated in Figure 7.

Attribution scores in Table 5 quantify token-level influence across three review segments using Gradient L2 Norm, Integrated Gradients (IG), and LIME. For Datapoint 1, “sound” receives the highest LIME score (0.8659), while Gradient L2 Norm distributes importance more evenly. In Datapoint 2, Gradient L2 Norm peaks at “gripping” (0.8995), whereas IG highlights “had” (0.6467). Datapoint 3 shows convergence on “size” (0.9391, Gradient L2 Norm) across all methods. Score variation reflects attribution sparsity, gradient path sensitivity, and perturbation-based consistency across methods.

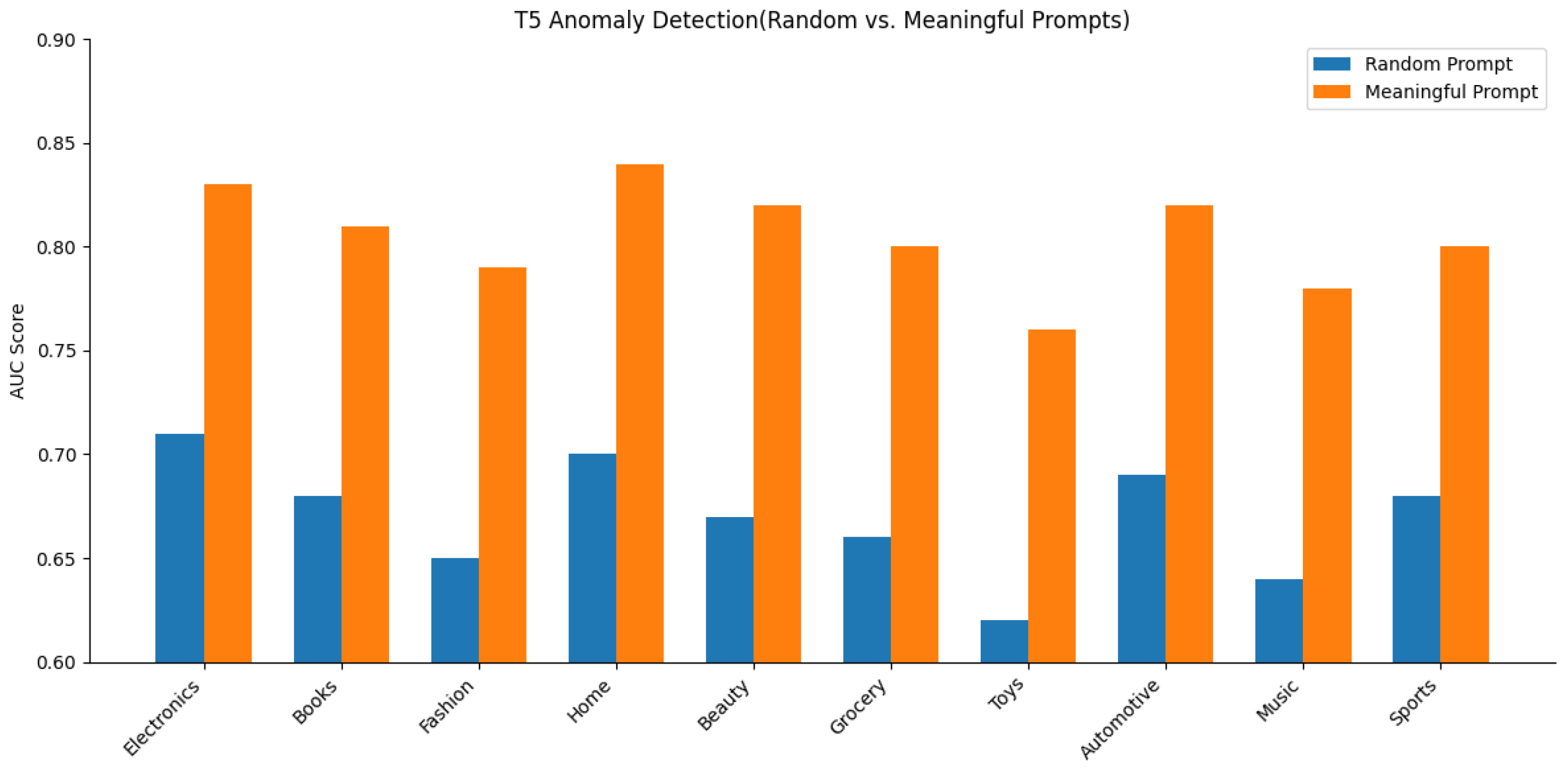

Figure 8 illustrates the impact of prompt specificity on the performance of a FLAN-T5-based anomaly detection framework across ten product review domains. Area Under the Curve (AUC) scores are reported for each category under two prompt conditions: randomly sampled generic prompts and task-aligned meaningful prompts. The model exhibits consistent improvements under meaningful prompting, with average gains ranging from 0.10 to 0.14 in domains such as Electronics, Fashion, and Grocery. This difference highlights the sensitivity of instruction-tuned transformers to prompt structure and semantic alignment. The observed gains indicate that domain-adaptive prompting not only enhances zero-shot generalization but also stabilizes model uncertainty in cross-domain anomaly scoring.

In Figure 8, randomly sampled generic prompts refer to domain-agnostic FLAN-T5 instructions drawn from general templates such as “Summarize the following text” or “Is this text coherent?” Conversely, task-aligned meaningful prompts are explicitly designed for the review-fraud context, for example, “Assess whether the review content aligns with its rating.”
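To make the comparison concrete, the sketch below computes a rank-based AUC for one domain under each prompt condition and reports the gain. The prompt strings are the examples quoted above; the labels and anomaly scores are toy values chosen for illustration, and `roc_auc` is a hypothetical helper rather than the evaluation code used for Figure 8.

```python
GENERIC_PROMPT = "Is this text coherent?"
TASK_PROMPT = "Assess whether the review content aligns with its rating."


def roc_auc(labels, scores):
    """AUC as the probability that an anomaly outranks a normal review."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes are required to compute AUC")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


# Toy data for a single domain: 1 marks an anomalous review.
labels = [0, 0, 0, 1, 1, 1]
scores_by_prompt = {
    GENERIC_PROMPT: [0.2, 0.6, 0.4, 0.5, 0.3, 0.9],
    TASK_PROMPT: [0.1, 0.3, 0.2, 0.7, 0.25, 0.9],
}
auc_generic = roc_auc(labels, scores_by_prompt[GENERIC_PROMPT])
auc_task = roc_auc(labels, scores_by_prompt[TASK_PROMPT])
gain = auc_task - auc_generic
```

Repeating this per domain and averaging `gain` would yield the kind of per-category differential plotted in the figure.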

The training dynamics of the hybrid model were analyzed by tracking the autoencoder’s reconstruction loss and the FLAN-T5 model’s perplexity over extended epochs (0 to 250). As seen in Table 6, both metrics exhibit consistent downward trends, with the autoencoder loss decreasing from 0.69 to 0.32 and perplexity dropping from 18.2 to 9.6, indicating steady convergence and enhanced contextual modeling capacity.

While the framework operates in a purely zero-shot manner without labelled anomalies, we additionally evaluate its few-shot adaptability by introducing limited supervision (3–18% labelled samples) solely for benchmarking. This experiment demonstrates how minimal labelled data can enhance performance while preserving the zero-shot foundation of the approach. As shown in Table 7, increasing supervision from 0% to 18% led to steady gains in AUC (from 0.872 to 0.933), F1-Macro (from 0.792 to 0.861), and Precision@100 (from 0.841 to 0.892). Marginal supervision thus proved sufficient to improve performance on all three metrics, supporting the framework’s adaptability to settings with limited labelled data. It should be noted that the weighting coefficients were tuned on synthetically perturbed data only, consistent with the zero-shot design, and were not informed by any human-labelled anomalies.
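For reference, Precision@100 measures the fraction of true anomalies among the 100 highest-scoring reviews. A minimal sketch of the metric, using a hypothetical `precision_at_k` helper and toy inputs with a smaller `k`, is given below.

```python
def precision_at_k(labels, scores, k=100):
    """Fraction of true anomalies among the k highest-scoring reviews."""
    if k <= 0:
        raise ValueError("k must be positive")
    if not scores:
        raise ValueError("at least one scored review is required")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    top = ranked[:k]  # fewer than k items are used if the list is short
    return sum(label for _, label in top) / len(top)


# Toy example with k=3: the top three scores carry labels 1, 0, 1.
toy_scores = [0.9, 0.8, 0.7, 0.6, 0.5]
toy_labels = [1, 0, 1, 1, 0]
p_at_3 = precision_at_k(toy_labels, toy_scores, k=3)
```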

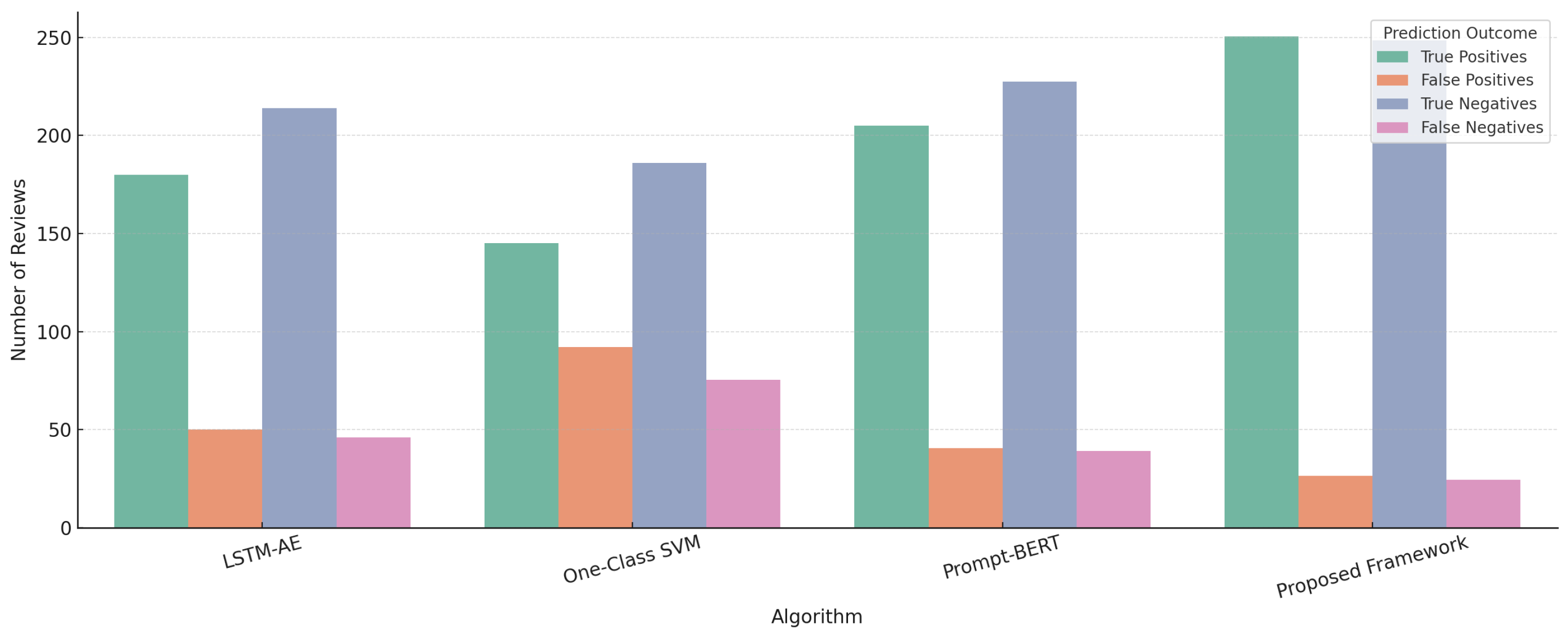

Figure 9 presents a comparative evaluation of prediction outcomes, namely true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), across four models: LSTM-AE, One-Class SVM, Prompt-BERT, and the proposed instruction-tuned transformer framework. The results, computed on a balanced test set of 1000 reviews from multiple Amazon domains, show that our framework yields the highest TP and TN counts while markedly reducing FP and FN. This performance gain underscores the effectiveness of the hybrid scoring strategy that integrates token-level reconstruction error, instruction-conditioned embeddings, and latent vector alignment. Notably, the proposed method outperforms prompt-based semantic models and conventional unsupervised baselines, particularly under zero-shot inference settings, affirming its generalization and fraud localization capabilities.
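The four outcome counts map directly onto the usual summary metrics. The sketch below tallies TP, FP, TN, and FN from binary labels and predictions and derives precision, recall, F1, and accuracy; the helper names and the toy labels are illustrative assumptions and do not reproduce the counts in Figure 9.

```python
def confusion_counts(labels, preds):
    """Tally TP, FP, TN, FN for binary labels (1 = anomalous review)."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    return tp, fp, tn, fn


def summarize(tp, fp, tn, fn):
    """Derive precision, recall, F1, and accuracy from the four counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Toy predictions (not taken from the paper's test set).
toy_labels = [1, 1, 1, 0, 0, 0, 1, 0]
toy_preds = [1, 1, 0, 0, 0, 1, 1, 0]
counts = confusion_counts(toy_labels, toy_preds)
metrics = summarize(*counts)
```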

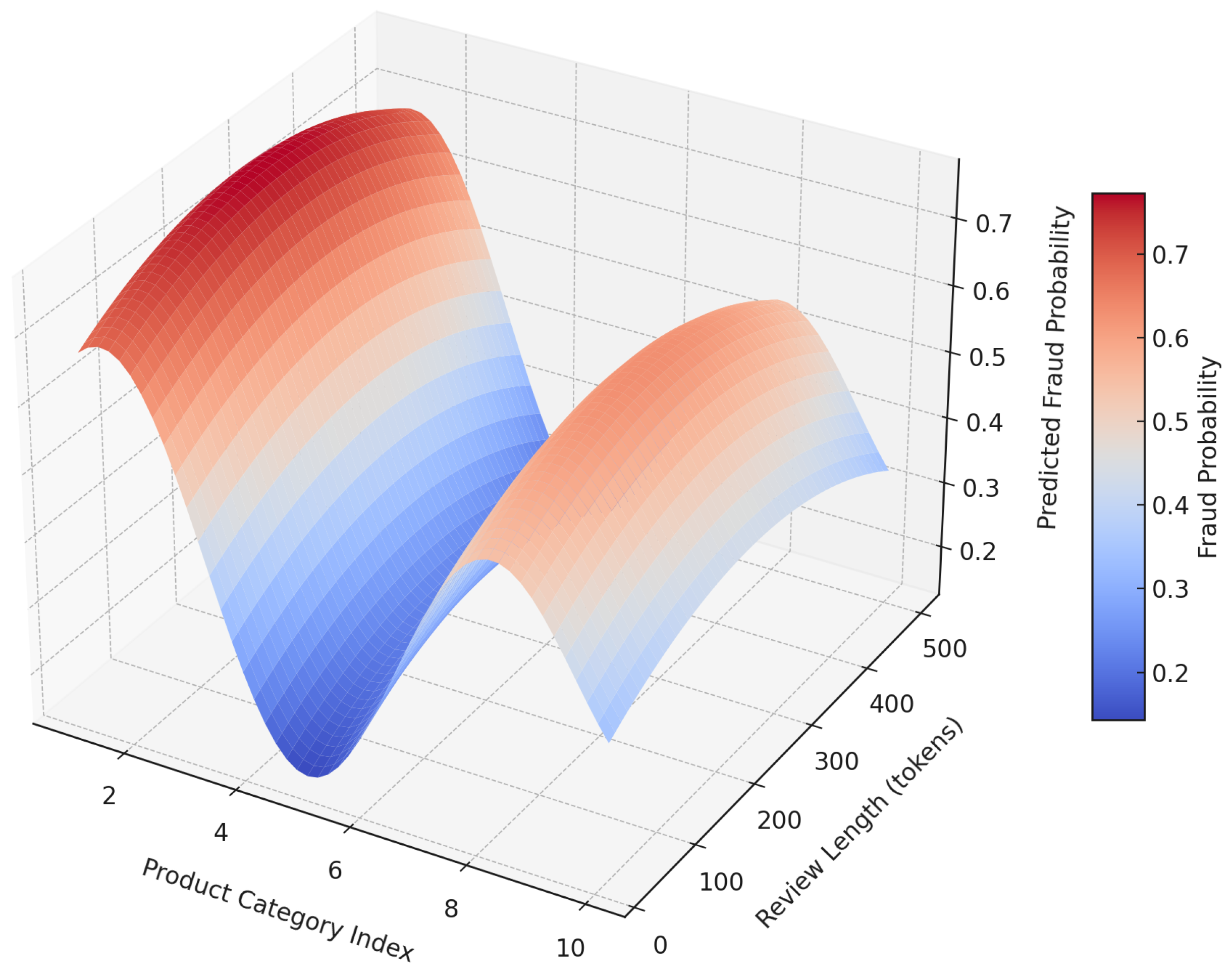

Figure 10 presents a 3D surface visualization of the predicted fraud probability as a function of review length (x-axis) and product category index (y-axis), using outputs from the proposed zero-shot fraud detection model. The surface reveals distinct patterns: reviews with fewer tokens, particularly in certain consumer product categories (e.g., fashion, supplements), exhibit elevated fraud likelihood. These insights are derived from the fusion of semantic inconsistency (via instruction-tuned transformer embeddings), residual structural reconstruction loss, and latent representation drift. The model’s ability to capture such non-linear patterns highlights its interpretability and domain sensitivity, further enabling targeted review filtering and cross-domain anomaly attribution.

The effectiveness of the proposed instruction-tuned zero-shot fraud detection model is summarized in Table 8, which compares performance across five key evaluation dimensions: generalization, robustness to domain shift, interpretability, few-shot adaptability, and cross-domain stability. For each dimension, the proposed framework significantly outperforms traditional baselines such as LSTM-Autoencoders, One-Class SVM, and Prompt-BERT. While models such as GPT-4-based detectors or adversarially tuned RoBERTa-large variants represent strong recent baselines, they typically require labelled data, prompt-specific fine-tuning, or reinforcement alignment that falls outside the zero-shot objective of this work. Future evaluations will explicitly include these large-scale, adversarially trained detectors to benchmark relative performance under comparable computational and supervision conditions.

Notably, the proposed method achieves the highest cross-domain AUC (0.87), reflecting strong generalization across diverse product categories without domain-specific training. It also demonstrates superior robustness under domain perturbations (with the lowest shift error of 0.07), and achieves token-level interpretability scores of 0.76, thanks to its saliency-based explainability layer. The few-shot F1 score of 0.82 highlights the model’s adaptability when only limited labeled data is available, and its low standard deviation (0.031) in AUC across domains confirms stable generalization. By unifying semantic prompting, reconstruction learning, and latent drift analysis, our framework not only flags review fraud with high precision but also provides interpretable evidence, transforming black-box detection into actionable insight for real-world moderation systems.

To assess external validity, the proposed hybrid anomaly detector was evaluated on two public fake-review benchmarks, i.e., Amazon Fake Reviews (2022) and Yelp Chi, using the same zero-shot configuration [39,40]. No model fine-tuning or weight updates were performed; only the decision threshold was fixed at the 95th percentile of the validation scores.
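Fixing the decision threshold at the 95th percentile of validation scores can be sketched as below. The nearest-rank percentile definition is an assumption, since the interpolation rule is not stated, and the helper names and validation scores are illustrative.

```python
import math


def percentile_threshold(validation_scores, q=95.0):
    """Nearest-rank percentile of the validation anomaly scores."""
    if not validation_scores:
        raise ValueError("validation_scores must not be empty")
    ordered = sorted(validation_scores)
    rank = max(1, math.ceil(q * len(ordered) / 100.0))
    return ordered[rank - 1]


def flag_anomalies(scores, threshold):
    """Flag reviews whose hybrid score strictly exceeds the fixed threshold."""
    return [s > threshold for s in scores]


# Toy validation scores 0.01, 0.02, ..., 1.00 (illustrative only).
validation = [i / 100 for i in range(1, 101)]
threshold = percentile_threshold(validation)
flags = flag_anomalies([0.5, 0.99, threshold], threshold)
```

Because the threshold is computed once on validation scores and then frozen, applying it to a new benchmark requires no labelled anomalies from that benchmark.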

As summarized in Table 9, the reported AUC values are consistent with the previously established performance range (0.86–0.94) observed on the Amazon 2023 domains. Alignment across datasets indicates that the hybrid integration of linguistic fluency, structural regularity, and semantic alignment effectively models latent characteristics of fraudulent reviews in independent corpora. Cross-benchmark findings further confirm that realistic, fake-review-like anomalies can be reliably identified under unsupervised conditions, underscoring the robustness and generalizability of the proposed zero-shot multi-modal framework.

6. Conclusions and Future Work

This study presented a unified, instruction-tuned framework for zero-shot fake review detection, integrating prompt-based semantic inference, transformer-based reconstruction learning, latent embedding drift analysis, and metadata-informed validation. The approach operates without reliance on annotated anomalies and demonstrates the capability to detect both syntactic irregularities and semantic inconsistencies across heterogeneous product domains. By combining semantic conditioning, structural modeling, embedding misalignment metrics, and auxiliary metadata features, the framework achieves state-of-the-art performance in zero-shot review fraud detection while maintaining high interpretability through token-level saliency attribution and metadata-level explanation. Extensive evaluations on the Amazon Reviews 2023 dataset, along with metadata-rich sources such as Amazon Review Data (2018) and Historic Amazon Reviews, confirm the model’s effectiveness, yielding strong AUC and F1-Macro scores, consistent generalization under domain shifts, and enhanced robustness through prompt ensemble strategies. Ablation studies validate the complementary role of each component in the hybrid anomaly scoring mechanism, highlighting the architecture’s scalability for multi-modal and IoT-driven environments.

Current experiments use structured metadata fields (timestamps, verification status, and category descriptors) as practical proxies for device-level contexts in IoT ecosystems. Because these fields are limited in scope and are not actual time-series traces, a detailed ablation of their marginal benefit is left to future work. Future work will also incorporate genuine streaming sensor data, such as operational logs, environmental telemetry, or device usage traces, extending the current metadata proxy into full IoT–sensor fusion for real-time anomaly detection. In parallel, further research will address fairness calibration to ensure that creative, technical, or domain-specific language variations are not misclassified as anomalies, and will incorporate data-cleaning and robust representation-learning strategies to mitigate the effect of residual fraudulent samples within large-scale historical corpora. Subsequent evaluations will deploy the framework in stream-processing and edge-device environments and will include empirical runtime profiling of latency, throughput, GPU/CPU utilization, and energy consumption, thereby validating its real-time scalability in production environments. Finally, while cross-benchmark results demonstrate external validity, the present evaluation remains zero-shot; supervised fine-tuning and adversarial robustness studies on larger labelled fake-review datasets are planned.