MCP-YOLO: A Pruned Edge-Aware Detection Framework for Real-Time Insulator Defect Inspection via UAV

Abstract

1. Introduction

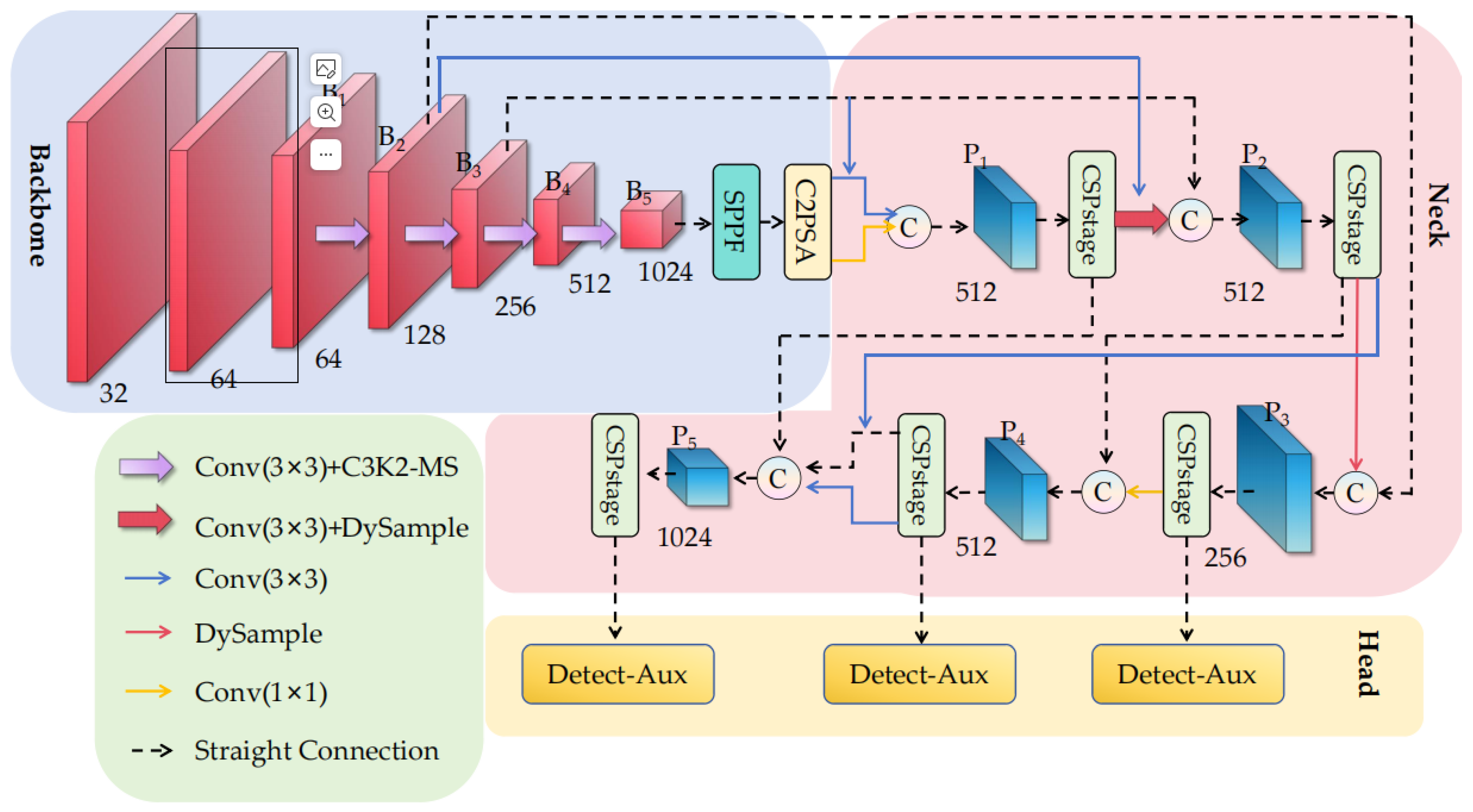

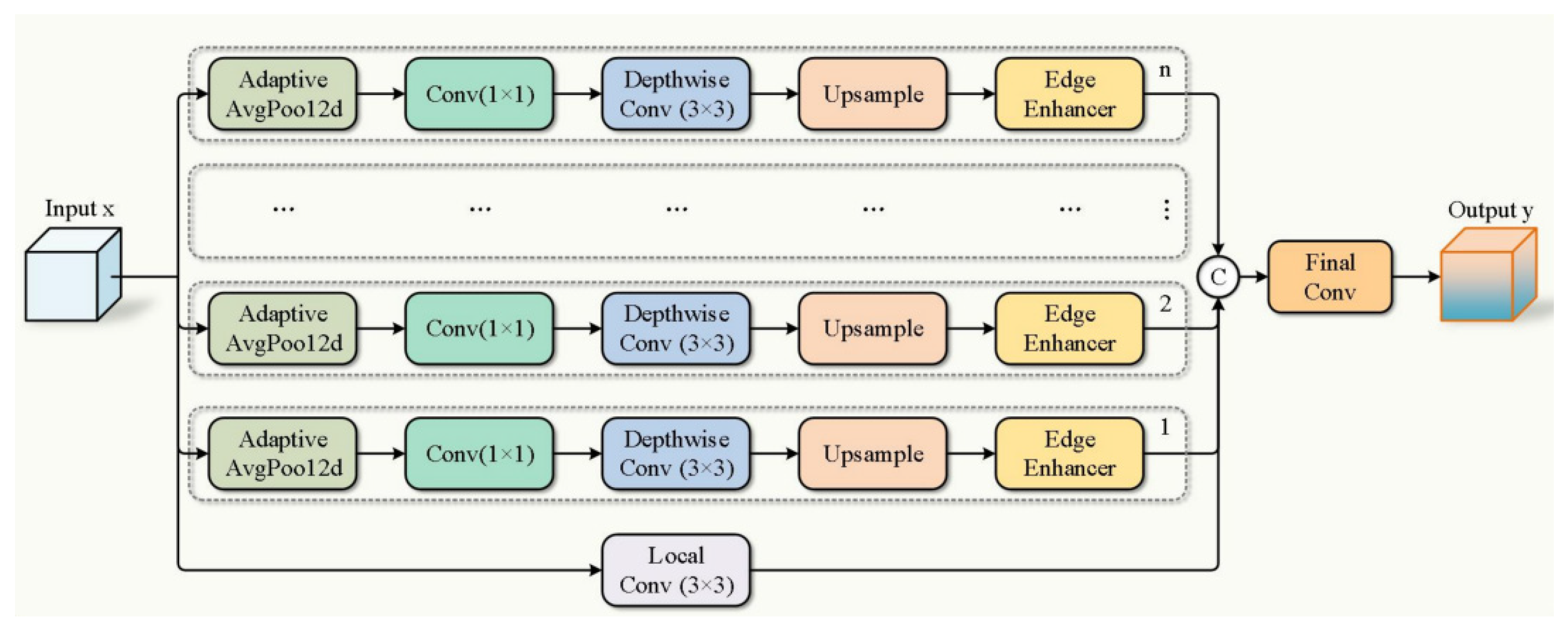

- In the backbone network, we introduce MS-EdgeNet to replace the residual blocks in C3k2. The Multi-Scale Edge Information Enhancement Module (MS-EdgeNet) strengthens the network’s perception of multi-granularity edge features by combining edge enhancement at multiple scales. This improves the model’s adaptability to complex scenes and its detection of small and occluded targets in challenging environments. Additionally, grouped convolution reduces the parameter count while preserving the module’s spatial design.

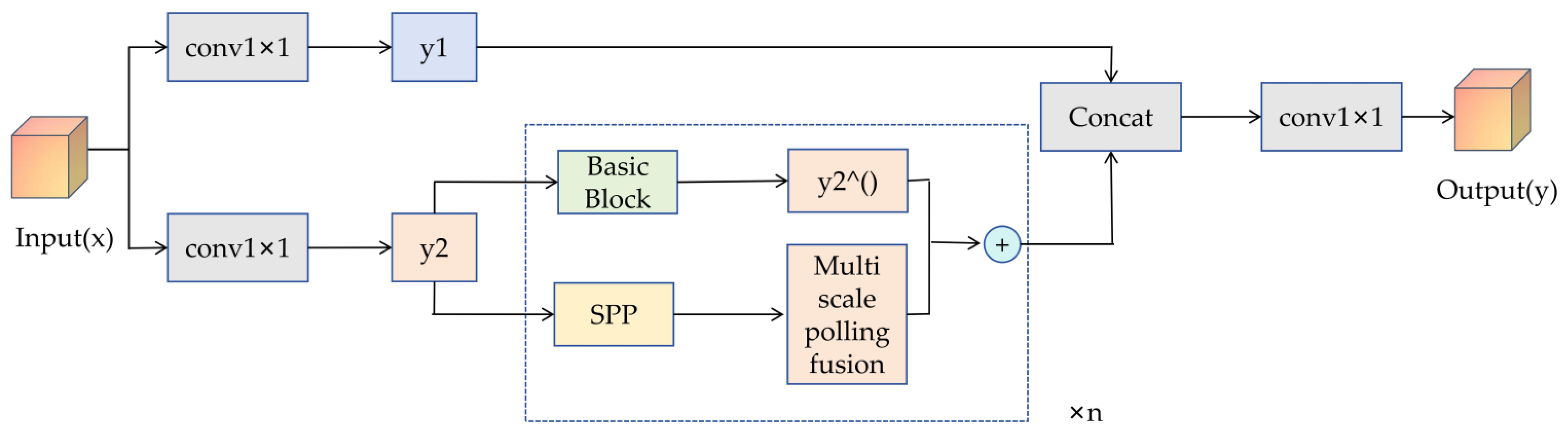

- The DyFPN module enhances the neck by combining the DySample module [11] with the RepGFPN module from DAMO-YOLO [12]. RepGFPN uses a re-parameterizable multi-branch structure during training to strengthen feature fusion. Integrating DySample lets information flow more flexibly across feature levels, which improves detection of objects of varying sizes, particularly small or occluded targets.

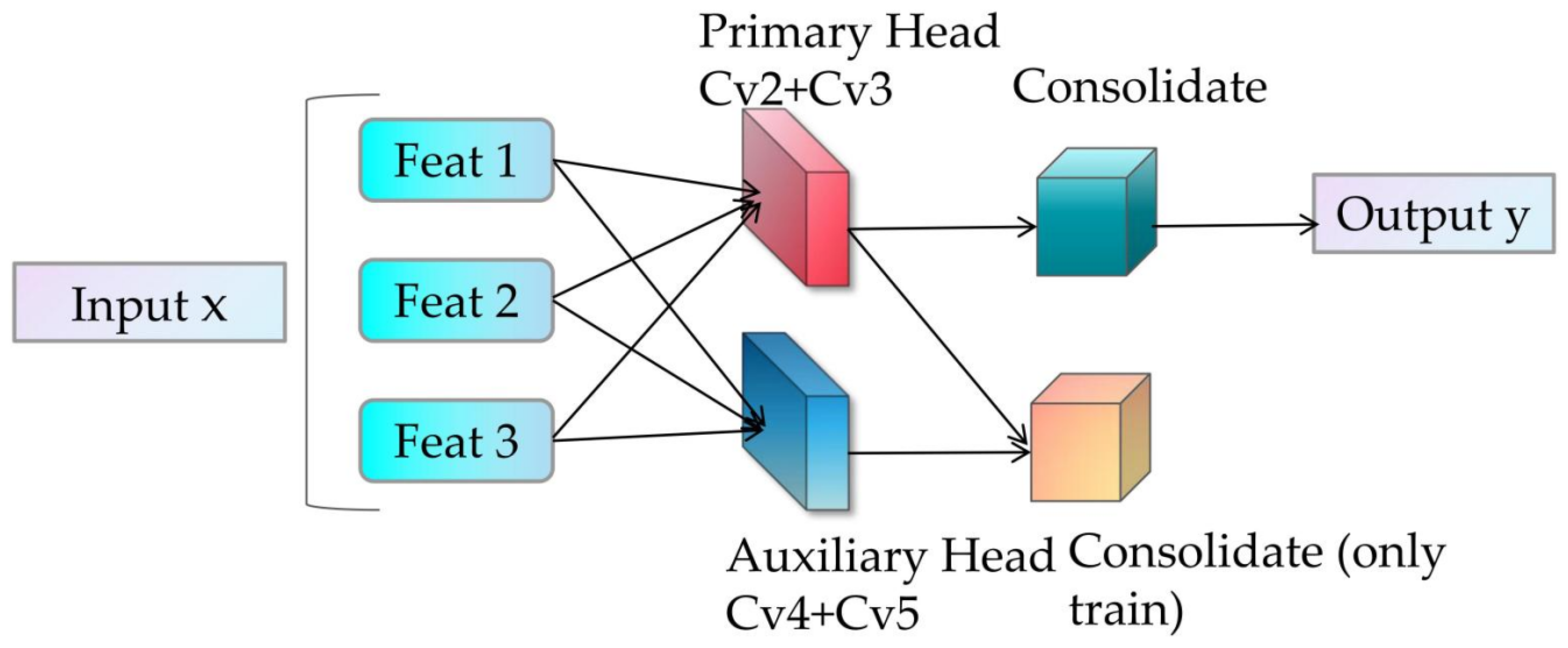

- An auxiliary detection head (Auxiliary Head) [13] is added alongside the detection head. The original YOLO11 has no auxiliary head and therefore no additional supervision during training. The auxiliary head improves gradient flow during training, particularly for multi-scale or occluded objects, which improves generalization and detection accuracy. At inference, the auxiliary head is removed to reduce computational load and memory usage [14].

- To make the model lightweight, the Group SLIM pruning method [15,16] is used for model compression. This approach reduces model size without modifying the original detection architecture. It mitigates overfitting, which improves generalization and robustness, and it addresses the limited hardware resources and computational budget of UAV platforms performing small-object detection in complex scenes, so that both detection performance and deployability improve.
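The parameter saving attributed to grouped convolution in the first contribution can be illustrated with a short calculation. This is a minimal sketch, not the MS-EdgeNet layer itself: the function name `conv_params` and the channel, kernel, and group values are assumptions chosen for illustration, since the text does not give the actual layer configuration.

```python
def conv_params(c_in: int, c_out: int, kernel: int, groups: int = 1, bias: bool = False) -> int:
    """Number of learnable parameters in a 2D convolution with a square kernel.

    With `groups` > 1, each output channel only sees c_in / groups input
    channels, so the weight count shrinks by a factor of `groups`.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must both be divisible by groups")
    weights = (c_in // groups) * c_out * kernel * kernel
    return weights + (c_out if bias else 0)


# Hypothetical layer sizes, chosen only to show the scaling.
standard = conv_params(64, 64, 3)            # ordinary 3x3 convolution
grouped = conv_params(64, 64, 3, groups=4)   # same shape, 4 groups

print(standard, grouped, standard / grouped)
```

For these assumed sizes the grouped layer has one quarter of the weights of the standard layer, while the input and output tensor shapes are unchanged.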

2. Related Work

3. Method

3.1. MCP-YOLO Structural Framework

- (1) Multi-scale edge enhancement capability to address the challenge of detecting small and occluded targets in complex backgrounds. This is achieved through our C3k2-MS module, which replaces the Bottleneck in C3k2 with MS-EdgeNet and strengthens the network’s perception of multi-granularity edge features by integrating edge details across different scales.

- (2) Dynamic multi-scale feature fusion capability to handle insulator defects of varying sizes and partial occlusions. This capability is realized by our DyFPN module, which combines DySample’s lightweight dynamic upsampling with RepGFPN’s multi-branch structure, enabling flexible information flow across different levels.

- (3) Enhanced training supervision capability to improve generalization under small-sample conditions and prevent overfitting. This is implemented through our Auxiliary Head, which provides additional supervision signals during training to enhance gradient flow and is removed during inference to maintain efficiency.

- (4) Lightweight deployment capability to ensure real-time performance on resource-constrained UAV platforms. This is enabled by the Group SLIM pruning method, which achieves multi-level compression while maintaining detection accuracy.
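The pruning step in item (4) can be sketched in the spirit of network slimming [16], where channels are ranked by the magnitude of their batch-normalization scale factors, combined with group-level selection in the sense of the group lasso [15]. The details of Group SLIM are not given in this section, so everything below is an assumption for illustration: the layer names, the scale-factor values, the rule that coupled channels share one group, and the helper names `group_scores` and `select_groups_to_prune`.

```python
from statistics import mean


def group_scores(gammas, groups):
    """Score each channel group by the mean |gamma| over all its member channels.

    gammas: {layer_name: [scale factor per channel]}
    groups: {group_id: [(layer_name, channel_index), ...]} for channels that
            must be kept or removed together (assumed coupling).
    """
    return {
        gid: mean(abs(gammas[layer][ch]) for layer, ch in members)
        for gid, members in groups.items()
    }


def select_groups_to_prune(scores, prune_ratio):
    """Return the ids of the lowest-scoring groups for the given prune ratio."""
    n_prune = int(len(scores) * prune_ratio)
    ranked = sorted(scores, key=scores.get)  # ascending importance
    return set(ranked[:n_prune])


# Hypothetical scale factors for two layers whose channels are coupled one-to-one.
gammas = {
    "conv_a": [0.90, 0.02, 0.50, 0.01],
    "conv_b": [0.80, 0.03, 0.40, 0.60],
}
groups = {i: [("conv_a", i), ("conv_b", i)] for i in range(4)}

scores = group_scores(gammas, groups)
pruned = select_groups_to_prune(scores, prune_ratio=0.5)
print(scores, pruned)
```

In this toy input, channel 3 of `conv_b` has a large scale factor on its own but is still removed, because its group score is pulled down by the coupled channel in `conv_a`. That is the practical difference between group-level and per-channel selection.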

3.2. Detailed Introduction to the C3k2-MS Module

3.3. Detailed Introduction to the DyFPN Module

3.4. Introduction to Detect-Aux

3.5. Lightweight Adjustment of MCP-YOLO

4. Experiment

4.1. Datasets

- (1) Background complexity: The images were captured across diverse terrains typical of northern China’s transmission line corridors, including dense forests with varying vegetation, agricultural farmlands with seasonal crop variations, mountainous regions with complex topography, urban-industrial areas with building interference, and open plains under different weather conditions. These varied backgrounds create significant detection challenges, particularly when insulators appear against cluttered or similarly colored backgrounds.

- (2) Insulator diversity: The dataset includes three main insulator types commonly used in China’s power grid: glass insulators with their characteristic transparent/green coloration, porcelain insulators featuring white/gray ceramic surfaces, and composite insulators with polymer housings. The color variations pose particular challenges: glass insulators often blend with vegetated backgrounds, white porcelain insulators can be indistinguishable against cloudy skies or snow, and weathered insulators exhibit discoloration that complicates defect identification.

- (3) Defect characteristics: The dataset captures two critical defect categories encountered in actual grid operations: flashover damage showing characteristic burn marks and surface degradation, and broken/cracked insulators with varying degrees of structural damage. These defects were identified and verified by experienced grid maintenance personnel during routine inspections.

4.2. Evaluation Metrics

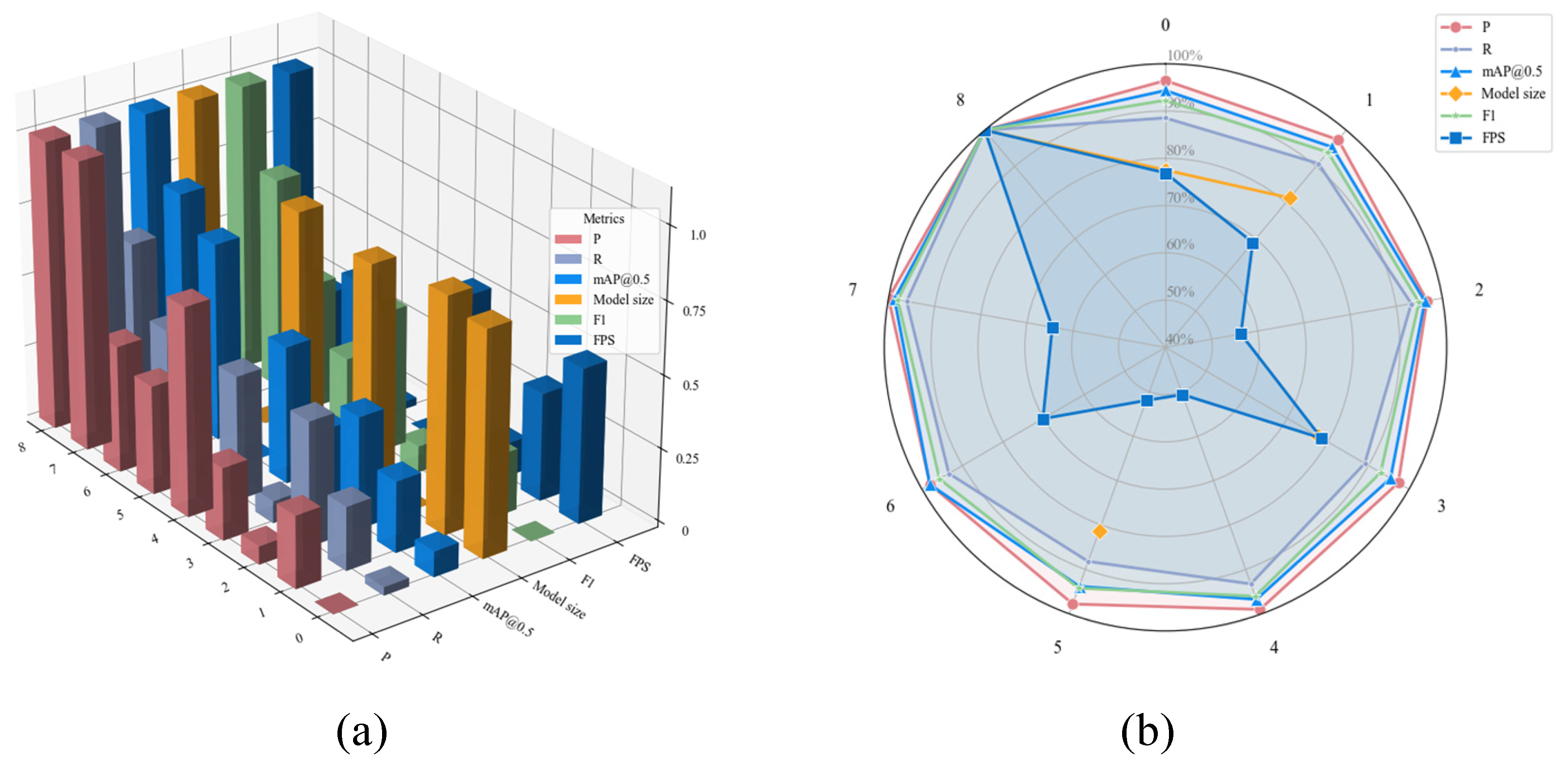

4.3. Model Comparison Experiment

4.4. Ablation Experiments

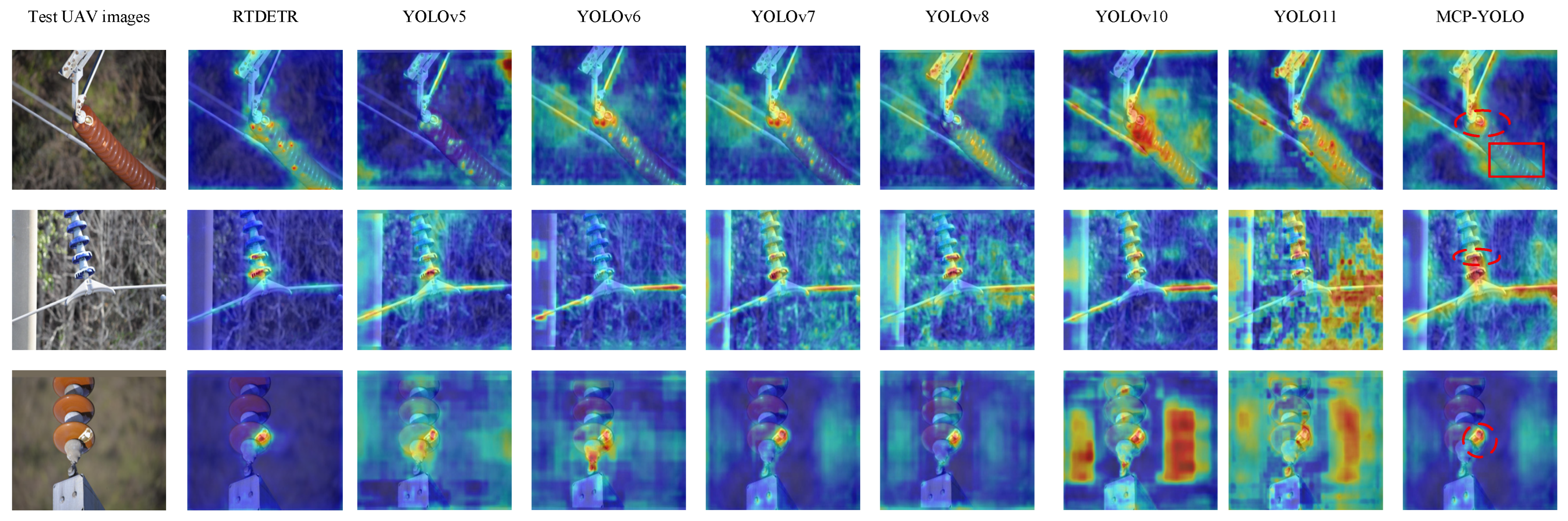

4.5. Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| MCP | Multi-scale Complex-background detection and Pruning |
| UAV | Unmanned Aerial Vehicle |
| P | Precision |
| R | Recall |
| mAP | Mean Average Precision |
| FPS | Frames Per Second |
| RTDETR | Real-Time Detection Transformer |
| CNN | Convolutional Neural Networks |
| SSD | Single Shot MultiBox Detector |

References

- [1] Khanam, R.; Hussain, M. YOLO11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- [2] Yang, W.; Sun, P.; Sima, W.; Yang, M.; Yuan, T.; Li, Z.; Li, G.; Zhao, X. Study on Self-Healing RTV Composite Coating Material and Its Properties for Antifouling Flashover of High-Voltage Insulators. In Proceedings of the 2024 7th International Conference on Energy, Electrical and Power Engineering (CEEPE), Yangzhou, China, 26–28 April 2024; pp. 283–287.

- [3] Alhassan, A.B.; Zhang, X.; Shen, H.; Xu, H. Power transmission line inspection robots: A review, trends and challenges for future research. Int. J. Electr. Power Energy Syst. 2020, 118, 105862.

- [4] Katrasnik, J.; Pernus, F.; Likar, B. A survey of mobile robots for distribution power line inspection. IEEE Trans. Power Deliv. 2009, 25, 485–493.

- [5] Cao, Y.; Xu, H.; Su, C.; Yang, Q. Accurate glass insulators defect detection in power transmission grids using aerial image augmentation. IEEE Trans. Power Deliv. 2023, 38, 956–965.

- [6] Lin, H.; Zhou, J.; Gan, Y.; Vong, C.-M.; Liu, Q. Novel up-scale feature aggregation for object detection in aerial images. Neurocomputing 2020, 411, 364–374.

- [7] Terven, J.; Cordova-Esparza, D. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- [8] Sapkota, R.; Qureshi, R.; Calero, M.F.; Badjugar, C.; Nepal, U.; Poulose, A.; Vaddevolu, U.B.P. YOLOv10 to its genesis: A decadal and comprehensive review of the You Only Look Once series. arXiv 2024, arXiv:2406.19407.

- [9] You, C.; Zhang, R. 3D Trajectory Optimization in Rician Fading for UAV-Enabled Data Harvesting. IEEE Trans. Wirel. Commun. 2019, 18, 3192–3207.

- [10] Peng, J.; Cai, Y.; Yuan, J.; Ying, K.; Yin, R. Joint Optimization of 3D Trajectory and Resource Allocation in Multi-UAV Systems via Graph Neural Networks. In Proceedings of the 2025 IEEE 101st Vehicular Technology Conference (VTC2025-Spring), Oslo, Norway, 17–20 June 2025; pp. 1–5.

- [11] Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to Upsample by Learning to Sample. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 6004–6014.

- [12] Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.; Zhang, Y.; Sun, X. DAMO-YOLO: A Report on Real-Time Object Detection Design. arXiv 2022, arXiv:2211.15444.

- [13] Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- [14] Jin, G.; Taniguchi, R.-I.; Qu, F. Auxiliary Detection Head for One-Stage Object Detection. IEEE Access 2020, 8, 85740–85749.

- [15] Friedman, J.; Hastie, T.; Tibshirani, R. A note on the group lasso and a sparse group lasso. arXiv 2010, arXiv:1001.0736.

- [16] Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning efficient convolutional networks through network slimming. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2736–2744.

- [17] Ross, T.Y.; Dollár, G. Focal loss for dense object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2980–2988.

- [18] Wei, L.; Dragomir, A.; Dumitru, E.; Christian, S.; Scott, R.; Cheng-Yang, F.; Berg, A.C. SSD: Single Shot MultiBox Detector; Springer: Cham, Switzerland, 2016.

- [19] Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- [20] Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

- [21] Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- [22] Farhadi, A.; Redmon, J. YOLOv3: An incremental improvement. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; Springer: Berlin/Heidelberg, Germany, 2018; Volume 1804, pp. 1–6.

- [23] Ge, Z. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- [24] Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2025; pp. 1–24.

- [25] Wang, A.; Chen, H.; Liu, L.; Chen, K.; Ding, G.; Han, J.; Lin, Z. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- [26] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- [27] Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- [28] Ren, S. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- [29] He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- [30] Wan, Z.; Lan, Y.; Xu, Z.; Shang, K.; Zhang, F. DAU-YOLO: A Lightweight and Effective Method for Small Object Detection in UAV Images. Remote Sens. 2025, 17, 1768.

- [31] Wang, Y.; Song, X.; Feng, L.; Zhai, Y.; Zhao, Z.; Zhang, S.; Wang, Q. MCI-GLA Plug-In Suitable for YOLO Series Models for Transmission Line Insulator Defect Detection. IEEE Trans. Instrum. Meas. 2024, 73, 9002912.

- [32] Qu, F.; Lin, Y.; Tian, L.; Du, Q.; Wu, H.; Liao, W. Lightweight Oriented Detector for Insulators in Drone Aerial Images. Drones 2024, 8, 294.

- [33] He, M.; Qin, L.; Deng, X.; Liu, K. MFI-YOLO: Multi-Fault Insulator Detection Based on an Improved YOLOv8. IEEE Trans. Power Deliv. 2024, 1, 39.

- [34] Lu, Y.; Li, D.; Li, D.; Li, X.; Gao, Q.; Yu, X. A Lightweight Insulator Defect Detection Model Based on Drone Images. Drones 2024, 8, 431.

- [35] Luo, B.; Xiao, J.; Zhu, G.; Fang, X.; Wang, J. Occluded Insulator Detection System Based on YOLOX of Multi-Scale Feature Fusion. IEEE Trans. Power Deliv. 2024, 39, 1063–1074.

- [36] Gao, P.; Wu, T.; Song, C. Cloud–Edge Collaborative Strategy for Insulator Recognition and Defect Detection Model Using Drone-Captured Images. Drones 2024, 8, 779.

- [37] Li, D.; Lu, Y.; Gao, Q.; Li, X.; Yu, X.; Song, Y. LiteYOLO-ID: A Lightweight Object Detection Network for Insulator Defect Detection. IEEE Trans. Instrum. Meas. 2024, 73, 5023812.

- [38] Sun, S.; Chen, C.; Yang, B.; Yan, Z.; Wang, Z.; He, Y.; Fu, J. ID-Det: Insulator Burst Defect Detection from UAV Inspection Imagery of Power Transmission Facilities. Drones 2024, 8, 299.

- [39] Huang, X.; Jia, M.; Tai, X.; Wang, W.; Hu, Q.; Liu, D.; Han, H. Federated knowledge distillation for enhanced insulator defect detection in resource-constrained environments. IET Comput. Vis. 2024, 18, 1072–1086.

- [40] Qiu, J.; Chen, C.; Liu, S.; Zhang, H.-Y.; Zeng, B. SlimConv: Reducing Channel Redundancy in Convolutional Neural Networks by Features Recombining. IEEE Trans. Image Process. 2021, 30, 6434–6445.

- [41] Kumar, N.P.; Archie, M.; Levin, D. Bilinear Upsampling Gradients Using Convolutions In Neural Networks—Generalization To Other Schemes. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; pp. 1–7.

- [42] Min, X.; Zhou, W.; Hu, R.; Wu, Y.; Pang, Y.; Yi, J. LWUAVDet: A Lightweight UAV Object Detection Network on Edge Devices. IEEE Internet Things J. 2024, 11, 24013–24023.

- [43] Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

| Dataset Split | Number of Images | Percentage |
|---|---|---|
| Training Set | 2196 | 70% |
| Validation Set | 595 | 20% |
| Test Set | 300 | 10% |
| Total | 3091 | 100% |
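The split sizes in the table can be checked with a few lines of arithmetic. The sketch below uses only the image counts reported above; the variable names are illustrative.

```python
# Image counts taken from the dataset split table.
splits = {"Training Set": 2196, "Validation Set": 595, "Test Set": 300}

total = sum(splits.values())
shares = {name: round(100 * count / total, 1) for name, count in splits.items()}

print(total)
print(shares)
```

The counts sum to the reported total of 3091 images, and the exact shares come out close to the nominal 70/20/10 split listed in the table.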

| Name | System Configuration |
|---|---|
| CPU | 12th Gen Intel(R) Core(TM) i7-12700KF 3.60 GHz |
| GPU | NVIDIA GeForce RTX 4060 |
| Memory | 16 GB |
| Operating system | Windows 11 |
| Deep learning framework | PyTorch |
| IDE | Anaconda3 |
| Data processing | Python 3.8 |

| Algorithm | Precision | Recall | mAP@0.5 | Model Size (M) | F1 Score (%) | FPS |
|---|---|---|---|---|---|---|
| YOLOv5 | 0.879 | 0.805 | 0.862 | 9.55 | 84.04 | 104.16 |
| YOLOv6 | 0.837 | 0.776 | 0.834 | 16.15 | 80.53 | 175 |
| YOLOv7 | 0.895 | 0.883 | 0.918 | 139.2 | 88.90 | 80.64 |
| YOLOv8 | 0.897 | 0.82 | 0.887 | 11.46 | 85.68 | 186.41 |
| YOLOv10 | 0.828 | 0.81 | 0.864 | 8.64 | 81.89 | 186.41 |
| YOLO11 | 0.873 | 0.788 | 0.869 | 9.85 | 82.83 | 192.30 |
| RTDETR | 0.917 | 0.931 | 0.935 | 75.81 | 92.39 | 83.22 |
| MCP-YOLO | 0.905 | 0.89 | 0.921 | 8.65 | 89.74 | 250 |
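The F1 column in the comparison table is consistent with the standard harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below recomputes it from the reported precision and recall values; the function name `f1_percent` and the dictionary layout are illustrative choices.

```python
def f1_percent(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, expressed as a percentage."""
    return round(100 * 2 * precision * recall / (precision + recall), 2)


# (precision, recall, reported F1 in %) copied from the comparison table.
reported = {
    "YOLOv5": (0.879, 0.805, 84.04),
    "YOLOv6": (0.837, 0.776, 80.53),
    "YOLOv7": (0.895, 0.883, 88.90),
    "YOLOv8": (0.897, 0.82, 85.68),
    "YOLOv10": (0.828, 0.81, 81.89),
    "YOLO11": (0.873, 0.788, 82.83),
    "RTDETR": (0.917, 0.931, 92.39),
    "MCP-YOLO": (0.905, 0.89, 89.74),
}

for name, (p, r, f1) in reported.items():
    print(f"{name}: computed {f1_percent(p, r):.2f}, reported {f1:.2f}")
```

For every row of the comparison table, the recomputed value matches the reported F1 score to two decimal places.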

| Experiment Number | Baseline | C3k2-MS | DyFPN | Detect-Aux | Group SLIM | Precision | Recall | mAP@0.5 | Model Size (M) | F1 Score (%) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | √ |  |  |  |  | 0.873 | 0.788 | 0.869 | 9.85 | 82.83 | 192.30 |
| 1 | √ | √ |  |  |  | 0.881 | 0.808 | 0.878 | 9.65 | 84.29 | 172.41 |
| 2 | √ |  | √ |  |  | 0.875 | 0.83 | 0.886 | 13.99 | 85.19 | 140.84 |
| 3 | √ |  |  | √ |  | 0.881 | 0.793 | 0.879 | 9.85 | 83.46 | 196.07 |
| 4 | √ | √ | √ |  |  | 0.896 | 0.83 | 0.891 | 13.79 | 86.17 | 126.58 |
| 5 | √ | √ |  | √ |  | 0.885 | 0.785 | 0.864 | 9.65 | 84.55 | 129.87 |
| 6 | √ |  | √ | √ |  | 0.887 | 0.831 | 0.903 | 13.99 | 85.86 | 175.43 |
| 7 | √ | √ | √ | √ |  | 0.905 | 0.855 | 0.909 | 13.79 | 87.93 | 161.29 |
| 8 | √ | √ | √ | √ | √ | 0.905 | 0.89 | 0.921 | 8.65 | 89.74 | 250 |
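The net effect of the full pipeline can be read off by comparing the baseline row (experiment 0) with the final pruned model (experiment 8). The sketch below does that arithmetic using only values from the ablation table. The dictionary keys are illustrative, and the model size is used in the table's own unit (M), which this section does not define further.

```python
# Values copied from the ablation table: experiment 0 (baseline) and 8 (full model).
baseline = {"map50": 0.869, "size_m": 9.85, "fps": 192.30}
final = {"map50": 0.921, "size_m": 8.65, "fps": 250.0}

# Absolute mAP@0.5 gain in percentage points.
map_gain_points = round(100 * (final["map50"] - baseline["map50"]), 1)

# Relative reduction in model size and relative gain in throughput, in percent.
size_reduction_pct = round(100 * (1 - final["size_m"] / baseline["size_m"]), 1)
fps_gain_pct = round(100 * (final["fps"] / baseline["fps"] - 1), 1)

# Per-frame latency implied by the reported FPS, in milliseconds.
latency_ms = {
    "baseline": round(1000 / baseline["fps"], 2),
    "final": round(1000 / final["fps"], 2),
}

print(map_gain_points, size_reduction_pct, fps_gain_pct, latency_ms)
```

With these inputs the final model gains about 5.2 points of mAP@0.5 over the baseline while being roughly 12% smaller and about 30% faster, which corresponds to a per-frame latency of about 4 ms instead of about 5.2 ms.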

| Experiment Number | Baseline | C3k2-MS | DyFPN | Detect-Aux | SE Block | Precision | Recall | mAP@0.5 |
|---|---|---|---|---|---|---|---|---|
| 8 | √ | √ | 0.871 | 0.775 | 0.85 | |||
| 9 | √ | √ | √ | √ | 0.868 | 0.818 | 0.891 | |
| 10 | √ | √ | √ | √ | √ | 0.887 | 0.864 | 0.903 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, H.; Guo, S.; Pan, X.; Shen, Q.; Xu, Y.; Ma, J.; Qu, Z. MCP-YOLO: A Pruned Edge-Aware Detection Framework for Real-Time Insulator Defect Inspection via UAV. Sensors 2025, 25, 7049. https://doi.org/10.3390/s25227049
