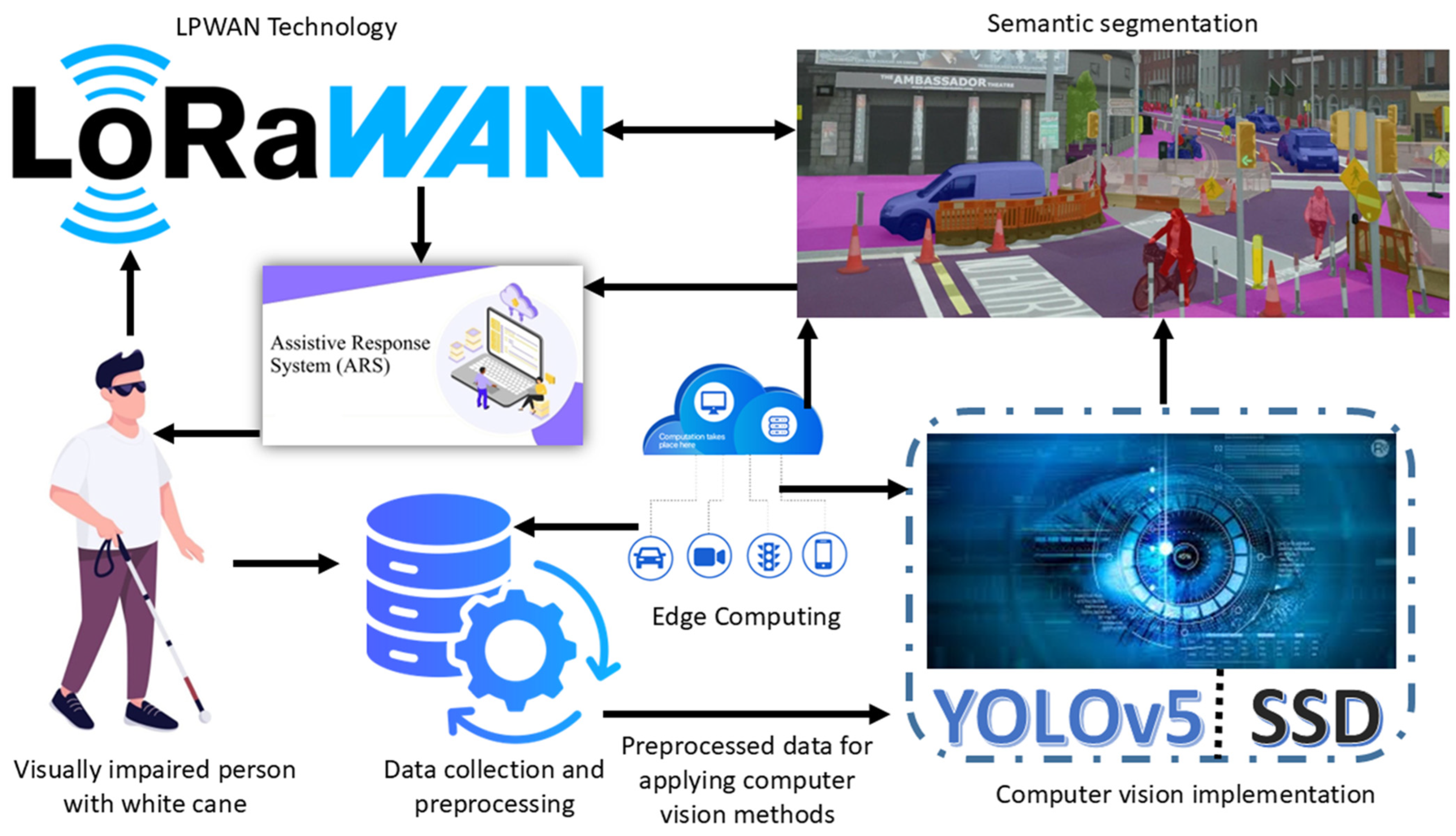

We evaluated the proposed framework through simulations for object detection and classification and through real-world experiments for the LPWAN implementation.

4.1. Computer-Vision-Based Object Detection and Classification

The “Visually Impaired (White Cane)” [32] dataset, available on the Kaggle platform, was used; it contains a total of 9309 image files categorized into two main classes (blind and white cane). This dataset is useful for training machine learning models that identify visually impaired people and white canes in different scenes. Each image is annotated, which makes the dataset directly usable for supervised learning. The dataset is especially suitable for initiatives aimed at improving navigation and security for those with vision impairments by using computer vision.

- b. Dataset Preprocessing

We preprocess the “Visually Impaired (White Cane)” dataset to bring it into the form required by machine learning algorithms. The images are in .jpg format with .txt label files, and preprocessing pairs each image with its label file. The dataset is split into three subsets: 70% for training, 20% for validation, and 10% for testing. Organizing the data in this way supports training, fine-tuning, and evaluation of the models on separate subsets.

For each subset, we create separate directories for images and labels to avoid confusion. Image files are first listed and then divided into the subsets using the train_test_split method from sklearn. For each image, the name of its label file is obtained by replacing the image extension with .txt, and the label file is moved to the matching directory. This systematic approach keeps images and their labels correctly paired in every subset. Handling these file operations in a script also makes the dataset directly usable in machine learning pipelines.
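The split and the image-to-label pairing can be sketched as follows. This is a minimal illustration, not the script used in the study: it uses a seeded shuffle from the standard library in place of sklearn's train_test_split so that it stays dependency-free, and the file names, the seed, and the function names `split_dataset` and `label_name` are assumptions.

```python
import random
from pathlib import Path


def split_dataset(image_names, train=0.7, val=0.2, seed=42):
    """Shuffle image names and split them into train/val/test lists."""
    names = sorted(image_names)            # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(names)
    n_train = round(len(names) * train)
    n_val = round(len(names) * val)
    return {
        "train": names[:n_train],
        "val": names[n_train:n_train + n_val],
        "test": names[n_train + n_val:],   # remainder, roughly 10%
    }


def label_name(image_name):
    """Return the label file name paired with an image (same stem, .txt)."""
    return Path(image_name).with_suffix(".txt").name


# Hypothetical file names, used only to exercise the functions.
images = [f"img_{i:04d}.jpg" for i in range(100)]
splits = split_dataset(images)
sizes = {name: len(files) for name, files in splits.items()}
```

Deriving the label name from the image stem, rather than keeping a separate list of labels, is what keeps each image and its annotation together when files are moved into the subset directories.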

- c. Implementation of YOLOv5 Model

The YOLOv5 model was used for object detection on the “Visually Impaired (White Cane)” dataset. The aim was to correctly identify and locate visually impaired people and their white canes in the images. YOLOv5 was chosen for its fast and accurate detection, which enables real-time object detection.

The dataset is made up of images and their associated label files, in which each label contains the bounding box coordinates and the class number of an object in the image. The bounding boxes are stored in normalized coordinates and are converted back to the original image scale when needed. For data preparation, we read the label files, calculated the corners of the bounding boxes, and used a script to overlay these boxes on the images for visualization. Each box was drawn as a red rectangle with its class ID written above it as a text annotation, which makes the results easier to interpret. This step was useful for checking the correspondence between the labels and the images and the quality of the YOLOv5 predictions. The applied YOLOv5 model consists of 7,012,822 parameters and 157 layers, with a computational complexity of 15.8 GFLOPs. It was trained for 24 epochs, taking 0.801 h (approximately 48 min) in total, or about 2 min per epoch. After training and optimization, the final model size was 14.4 MB, a reasonable balance between performance and resource use.
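The conversion from normalized coordinates to box corners can be sketched as below. The sketch assumes the usual YOLO label layout of one object per line as `class x_center y_center width height`, all normalized to [0, 1]; the function name `yolo_to_corners` and the example values are illustrative only.

```python
def yolo_to_corners(line, img_w, img_h):
    """Convert one YOLO label line to (class_id, x1, y1, x2, y2) in pixels."""
    class_id, xc, yc, w, h = line.split()
    # Scale the normalized centre and size back to the original image.
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    # Corners are the centre shifted by half the box size in each direction.
    x1, y1 = int(round(xc - w / 2)), int(round(yc - h / 2))
    x2, y2 = int(round(xc + w / 2)), int(round(yc + h / 2))
    return int(class_id), x1, y1, x2, y2


# Hypothetical label for a 640 x 480 image.
box = yolo_to_corners("1 0.5 0.5 0.25 0.5", img_w=640, img_h=480)
```

The resulting corner pairs are what a drawing routine needs to place a rectangle and a class-ID label on the image.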

As illustrated in Figure 2, the visualizations confirmed the ability of YOLOv5 to identify objects with high accuracy, given that the dataset was well structured with clear labeling of objects. The process showed not only the strengths of the model but also regions where false positives or false negatives may occur. These visual checks complemented the quantitative measures collected during model assessment and highlighted areas for improvement in training.

We also remove the optimizer state from the saved weight files (last.pt and best.pt) to make them more compact for inference. We then test the model with the best weights (best.pt) on a set of 1871 images, in which 1909 instances were detected. The layers of the model are fused to speed up inference; the resulting architecture has 157 layers and 7,012,822 parameters and requires 15.8 GFLOPs for a forward pass. Finally, we evaluate the YOLOv5 model and obtain the key metrics presented in Table 1. The metrics in Table 1 indicate strong performance in both detection and classification tasks, with the model achieving high precision and recall.

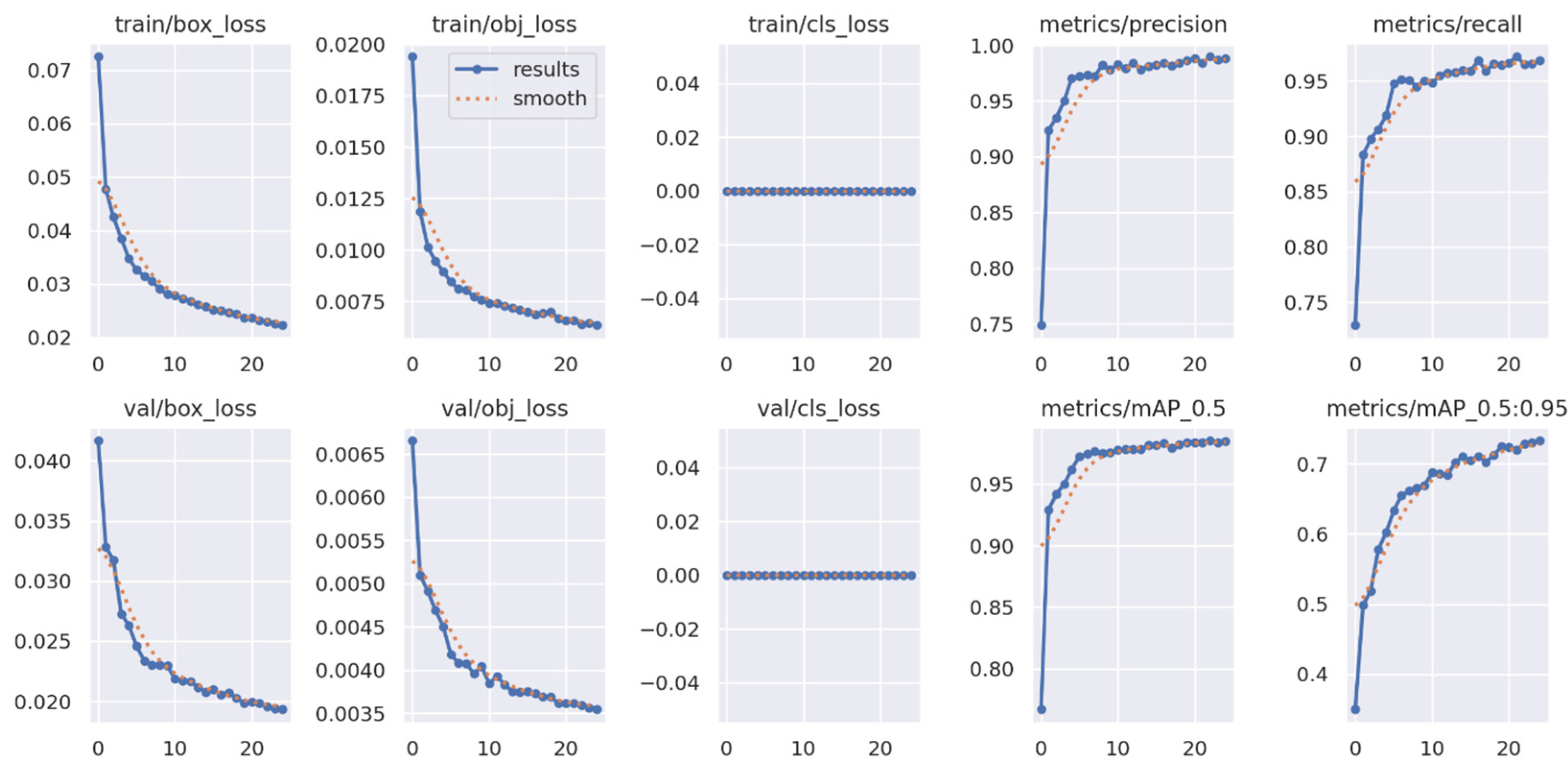

The training results are plotted in Figure 3, which shows the loss metrics (box_loss, object_loss, and class_loss) together with precision and recall. These curves indicate how learning progresses during training; that is, how the model’s ability to predict bounding box coordinates, to detect objects, and to classify them correctly changes over the epochs. Precision measures the fraction of detections that are correct, and recall measures the fraction of ground truth objects that are found. The high precision and relatively high recall mean that the model produces few false positives and few false negatives, so it should work well in practice. We therefore consider the YOLOv5 model efficient and ready for deployment or additional fine-tuning.

- d. Implementation of SSD Model

We carry out an exploratory analysis of the dataset and then perform object detection using a Single Shot MultiBox Detector (SSD) model. The objects to be detected are visually impaired people and white canes, and the performance of the model is assessed on the validation set. To measure performance, we compute precision, recall, and mean average precision (mAP), with IoU thresholds defining a successful prediction. During evaluation, predictions are computed for each image in the validation set. Each prediction consists of a bounding box and a confidence score and is compared with the ground truth boxes via IoU. A detection whose IoU exceeds a given threshold (for example, 0.5) is counted as correct, while the rest are labelled as false positives. Ground truth boxes that are not matched by any prediction are counted as false negatives. These outcomes are pooled over the dataset to calculate precision, recall, and mean average precision.
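The matching step described above can be illustrated with a short sketch. It assumes boxes given as `(x1, y1, x2, y2)`, greedy matching in order of decreasing confidence, and each ground truth box being matched at most once; these choices and the function names are assumptions, and the sketch stops at precision and recall rather than computing mAP.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, truths, thr=0.5):
    """Count (tp, fp, fn) for one image; preds are (score, box) pairs."""
    unmatched = list(truths)
    tp = fp = 0
    # Visit the most confident predictions first.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best = max(unmatched, key=lambda t: iou(box, t), default=None)
        if best is not None and iou(box, best) >= thr:
            unmatched.remove(best)   # a ground truth box is matched only once
            tp += 1
        else:
            fp += 1
    return tp, fp, len(unmatched)    # leftover ground truths are false negatives


def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical boxes: one good detection, two spurious ones, one missed object.
truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (1, 1, 11, 11))]
counts = match_detections(preds, truths)
precision, recall = precision_recall(*counts)
```

In this toy case the third prediction overlaps an object that is already matched, so it counts as a false positive; many such duplicate or spurious boxes are one way a detector can combine reasonable recall with very low precision.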

The measures obtained from the analysis are a precision of 0.0023, a recall of 0.6634, and an mAP of 0.3328 (Table 2). These scores mean that, although the model has a fairly high recall, so that most objects are identified, its precision is very low because it produces many false positives. These results suggest that improving the model structure, changing the anchors, or adding more data to the training set could increase accuracy.

The performance of the SSD model was therefore not satisfactory, mainly because of its high false positive rate, so we switched to the YOLOv5 model, which performed much better. The best YOLOv5 model attained a precision of 0.988, a recall of 0.969, and an mAP of 0.985, the highest among the methods compared. On this basis, YOLOv5 was chosen as the detection model for our framework.

- e. Implementation of Semantic Segmentation

We perform semantic segmentation for the assistive navigation system to obtain pixel-level information about the image for object detection and mapping of the environment. For this, we employ DeepLabV3+, a model that has shown strong performance in semantic segmentation. The task is to convert the existing object detection annotations into segmentation masks and then train the model to output a pixel-wise label for each object in the image.

We first convert the YOLO-style annotations to segmentation masks. This is done for the training, validation, and test sets and uses the label files that contain the original bounding box annotations. For every box, the script converts the normalized YOLO coordinates to pixel coordinates using the image size and fills the corresponding region of the mask with a value that depends on the class number (mask color). The resulting mask is stored as a .png file named after the image. This preprocessing step prepares the data for training the DeepLabV3+ model, which in turn segments the objects in the images at the pixel level.
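A minimal sketch of this box-to-mask conversion is given below. It assumes YOLO label lines of the form `class x_center y_center width height`, represents the mask as a nested list rather than an image array, and shifts the class ID by one so that class 0 differs from the background value 0; that shift, the function name, and the omission of the .png export are assumptions of the sketch.

```python
def boxes_to_mask(label_lines, img_w, img_h):
    """Rasterize YOLO boxes into an img_h x img_w mask of class values."""
    mask = [[0] * img_w for _ in range(img_h)]          # 0 = background
    for line in label_lines:
        class_id, xc, yc, w, h = line.split()
        xc, w = float(xc) * img_w, float(w) * img_w
        yc, h = float(yc) * img_h, float(h) * img_h
        # Clip the box to the image so boxes touching the border stay valid.
        x1, x2 = max(0, int(xc - w / 2)), min(img_w, int(xc + w / 2))
        y1, y2 = max(0, int(yc - h / 2)), min(img_h, int(yc + h / 2))
        for y in range(y1, y2):
            for x in range(x1, x2):
                mask[y][x] = int(class_id) + 1          # assumed offset, see lead-in
    return mask


# Hypothetical 8 x 8 image with one centred box of class 0.
mask = boxes_to_mask(["0 0.5 0.5 0.5 0.5"], img_w=8, img_h=8)
filled = sum(value != 0 for row in mask for value in row)
```

Because the masks are filled rectangles, they approximate object extent rather than object outline, which is worth keeping in mind when reading the IoU values reported later.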

A check of the prepared data shows that the training set has 6515 images and 6515 masks, the validation set has 1871 images and 1871 masks, and the test set has 922 images and 922 masks. No image or mask is missing in any split, so the dataset is complete and the semantic segmentation model can be trained, validated, and evaluated without data-related problems.

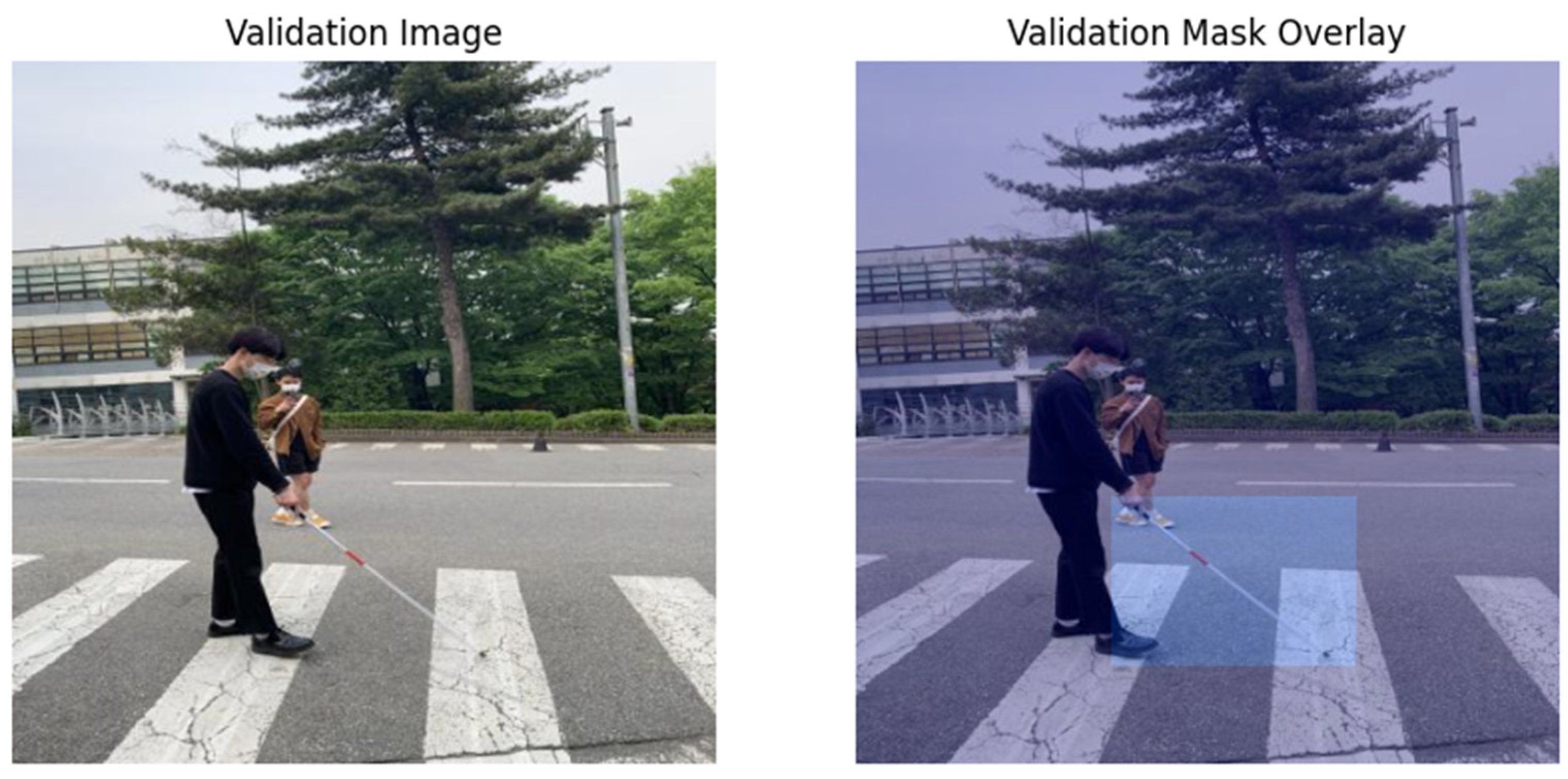

Specifically, we choose an image from the validation subset at random and show it together with its segmentation mask. First, the image is read from the validation set directory, and the corresponding mask is loaded and resized to the image size. The mask is then color-mapped to improve the visualization. Finally, the mask is overlaid on the image using a weighted sum, which accentuates the regions where the objects are segmented.

Figure 4 contains two subplots: the first shows the validation image, and the second shows the segmentation mask overlaid on the image for easy comparison. This visualization allows one to assess how well the objects in the image are distinguished and grouped.

In Figure 5, we present an example of an image from the training dataset and its mask. The script chooses a batch at random from the data loader and takes one image–mask pair from it. The image is rearranged into height, width, and channel order for visualization. The mask is two-dimensional and is displayed with a color map for better visibility. The original image is shown on the left and the mask on the right, where white indicates the areas containing the segmented objects. This visualization allows one to review the structure and labeling of objects in the image and to check that the data loader prepares the data correctly for training.

Next, we evaluate the model on the validation set to check its accuracy. The evaluation involves two key metrics: mean Intersection over Union (IoU) and mean pixel accuracy. The model is switched to evaluation mode, and every batch of images in the validation data loader is passed through the model to compute the predicted outputs. We compute IoU from the intersection and union of the predicted and true masks, and pixel accuracy by comparing the predicted labels with the true labels at the pixel level. The script accumulates the IoU and the number of correctly classified pixels over all batches and reports the averages. On the validation set, the mean IoU is 0.8320 and the mean pixel accuracy is 0.9917. The very high pixel accuracy means that the model is right for most pixels, and the IoU score indicates a high degree of overlap between the predicted object regions and the actual ones. Together, these results show that the model generates precise segmentation maps for the validation images and is precise enough for real-life applications where segmentation is needed.
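The two metrics can be sketched for binary masks as follows. This is an illustration under stated assumptions rather than the evaluation script itself: masks are nested lists of 0/1 values, IoU is computed for the foreground only, the averages are taken over mask pairs, and two empty masks are treated as a perfect match.

```python
def mask_metrics(pred, true):
    """Return (IoU, pixel accuracy) for two equally sized binary masks."""
    inter = union = correct = total = 0
    for p_row, t_row in zip(pred, true):
        for p, t in zip(p_row, t_row):
            inter += 1 if (p and t) else 0     # foreground in both masks
            union += 1 if (p or t) else 0      # foreground in either mask
            correct += 1 if p == t else 0      # pixel labelled correctly
            total += 1
    iou = inter / union if union else 1.0      # both masks empty: assumed perfect
    return iou, correct / total


def evaluate(pairs):
    """Average IoU and pixel accuracy over (predicted, true) mask pairs."""
    ious, accs = zip(*(mask_metrics(p, t) for p, t in pairs))
    return sum(ious) / len(ious), sum(accs) / len(accs)


# Two hypothetical 2 x 2 mask pairs: one with an extra pixel, one exact.
pairs = [
    ([[1, 1], [0, 0]], [[1, 0], [0, 0]]),
    ([[0, 1], [0, 1]], [[0, 1], [0, 1]]),
]
mean_iou, mean_acc = evaluate(pairs)
```

The first pair shows why the two numbers can diverge: a single wrong pixel costs a quarter of the accuracy on this tiny mask but half of the IoU, and on real images with large backgrounds pixel accuracy stays high even when IoU drops.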

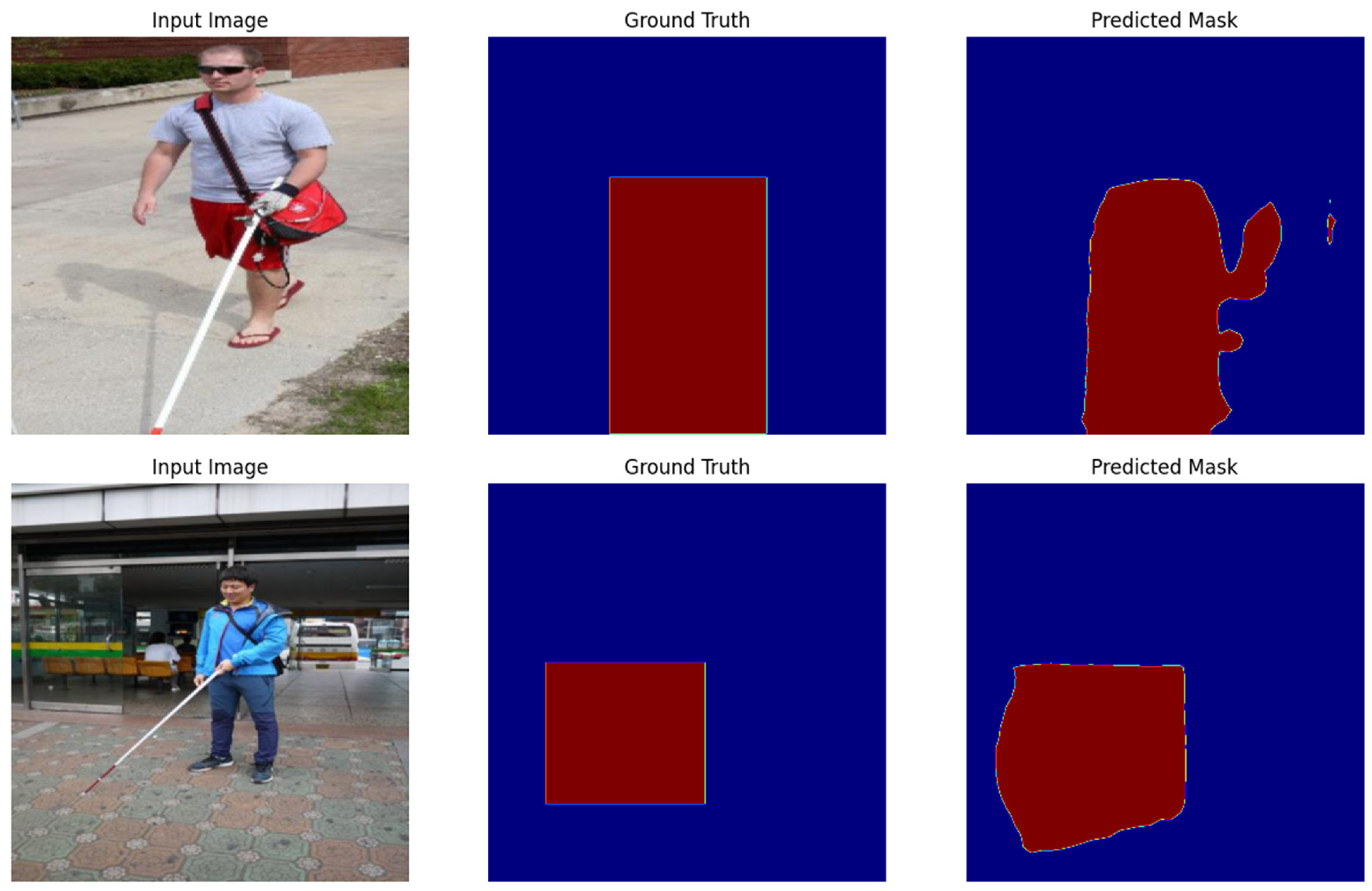

In Figure 6, we show the results of the model on the validation set in terms of the input image, ground truth mask, and predicted mask for some samples. The script draws a batch of images and their ground truth masks from the validation data loader and feeds the images to the model to obtain predictions. The model outputs a set of logits, and the class labels are obtained by applying the ‘argmax’ function. Finally, the original images, the ground truth masks, and the predicted masks are shown in the same panel for clearer comparison.
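The step from logits to class labels can be illustrated with a small sketch. It assumes logits laid out as `logits[class][row][column]` for a single image and uses nested lists instead of tensors; the function name and the example values are hypothetical.

```python
def argmax_labels(logits):
    """Map logits[c][y][x] to labels[y][x] holding the highest-scoring class."""
    n_classes = len(logits)
    height, width = len(logits[0]), len(logits[0][0])
    return [
        [max(range(n_classes), key=lambda c: logits[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


# Hypothetical logits for 2 classes on a 1 x 2 image.
logits = [
    [[2.0, 0.1]],   # class 0 scores
    [[0.5, 1.5]],   # class 1 scores
]
labels = argmax_labels(logits)
```

The predicted mask shown next to the ground truth is exactly this label map, so no threshold needs to be tuned for the comparison.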

The first subplot in Figure 6 shows the input image, which gives a view of the original data. The second subplot is the ground truth mask, which shows the actual segmentation of the objects in the image. The third subplot shows the predicted mask, which is the output of the semantic segmentation model. This allows us to judge the segmentation performance visually: the closer the predicted mask is to the ground truth mask, the better the model performs. The figure shows how well the model separates objects and gives some idea of the areas where it may require fine-tuning.

To test the model’s ability to generalize to unseen data, we evaluate it on the test dataset after training and validation. The evaluation quantifies mean IoU and mean pixel accuracy, which are fundamental measures in semantic segmentation. The model is set to evaluation mode, and a forward pass is made on the test images. The predicted masks are then compared with the ground truth masks, which were derived from the bounding boxes described earlier, to compute the IoU and pixel accuracy. The IoU is the overlap between the predicted and true masks as a fraction of their union; pixel accuracy is the percentage of correctly classified pixels.

The results show that the proposed model has a mean IoU of 0.8456 and a mean pixel accuracy of 0.9912 on the test dataset. These results suggest that the model segments objects very well. The high pixel accuracy indicates that most image pixels are correctly classified, and the high IoU indicates a high degree of overlap between the predicted and true masks, that is, the model locates the object regions accurately.

4.2. LPWAN Implementation

We used LPWAN technology to aid visually impaired people. More specifically, we proposed and implemented an LPWAN-based Assistive Response System (LARS). To provide reliable data delivery, we used Wi-Fi as the primary means of communication and LoRaWAN as a fallback where a Wi-Fi connection is not available. This two-layer design keeps signals flowing when Wi-Fi networks fail or during power outages in emergencies. LoRaWAN continues to operate during such interruptions, which supports the reliability of the system.
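The primary/fallback logic can be sketched in a few lines. This is only an illustration of the decision flow in Python, not the firmware running on the boards; the function name `send_alert`, the two sender callables, and the use of `ConnectionError` to signal a Wi-Fi failure are all assumptions.

```python
def send_alert(message, send_wifi, send_lora):
    """Try Wi-Fi first and fall back to LoRa if the Wi-Fi send fails."""
    try:
        send_wifi(message)
        return "wifi"
    except ConnectionError:
        send_lora(message)       # fallback path when Wi-Fi is unavailable
        return "lora"


# Hypothetical senders used to exercise both paths.
delivered = []


def wifi_down(message):
    raise ConnectionError("no Wi-Fi connection")


def wifi_up(message):
    delivered.append(("wifi", message))


def lora(message):
    delivered.append(("lora", message))


link_when_up = send_alert("PANIC", wifi_up, lora)
link_when_down = send_alert("PANIC", wifi_down, lora)
```

Keeping the two transports behind the same call means the panic button handler does not need to know which link is currently available.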

In our system, we use two Heltec Wi-Fi LoRa 32 V2 boards that integrate BLE, Wi-Fi, and LoRa functionalities. One device transmits the signal while the other receives it. Visually impaired users can press a panic button, which immediately sends an alert message to the recipient. This setup minimizes latency and makes the emergency response mechanism more reliable. The Heltec Wi-Fi LoRa 32 V2 is a low-power IoT development board featuring an ESP32 dual-core processor with Wi-Fi and BLE, an SX127x LoRa transceiver, a Li-Po battery charging circuit, and a built-in 0.96-inch OLED display. The ESP32 has built-in support for TCP/IP and an 802.11 b/g/n Wi-Fi MAC and offers Wi-Fi Direct. The SX1276 transceiver uses Semtech’s proprietary LoRa modulation scheme, enabling a receiver sensitivity as low as −148 dBm. This high sensitivity, along with immunity to in-band interference, makes the device highly useful for IoT applications.

The GPS module integrated within the system allows location identification and tracking with at least 2.5 m positional accuracy in two dimensions. This small and inexpensive module is used for satellite navigation, speed and location tracking, and navigation of ground, aerial, and marine vehicles. It has an IPX interface for active antenna connectivity, and its low power consumption, low cost, and small size make it well suited to portable devices. The IoT panic button is an essential part of our system and was developed to meet the needs of visually impaired people in emergency situations. It is easy to use and requires no technical skill. Pressing the button sends an alert with the user’s location to the desired recipient over the LoRa network. This enables quick action in emergencies such as difficulty in navigation or theft. The portability of the panic button adds to its usability, providing a practical and robust solution for real-time help and monitoring in emergencies.

A total of 30 trials were performed to evaluate the efficiency of the system, 15 in a typical open area and the other 15 in a typical closed area. The open field was chosen because its terrain is higher and steeper, which allows us to ascertain whether the system performs well in such conditions. The experiments used two antenna heights, 1 m and 2 m, and two packet sizes, 32 bytes and 64 bytes, with the transmission power (TX power) at 15 dBm for all tests. The end device was tested at distances of 0 m, 50 m, 100 m, 200 m, 300 m, 400 m, and 500 m. Each configuration was tested methodically: first the antenna was placed at 1 m to transmit 32-byte data packets and then at 2 m with the same packet size, and the process was then repeated with 64-byte packets. At each distance, fifty packets were sent, and the average latency was determined. Latency is the time that elapses after a given event; in a network, it is the time that data takes to travel from one node to another. Round-trip delay, which is commonly measured in microseconds, accounts for the signal being transmitted and returned. Latency is obtained by adding several types of delay, such as transmitting and receiving delays, and is measured in ms [33]. Equation (1) is used to compute the latency:

Table 3 shows the latency and RSSI (Received Signal Strength Indicator) for different packet sizes, antenna heights, and distances. Latency, which measures the time taken for data transmission and reception, rose with distance for all configurations. At an antenna height of 1 m and a packet size of 32 bytes, latency ranged from 225 ms at 0 m to 350 ms at 500 m; when the antenna height was increased to 2 m, it ranged from 210 ms to 330 ms. For 64-byte packets, the latency was much higher, ranging from 745 ms at 0 m to 806 ms at 500 m at 1 m height, and it was lower at 2 m height, ranging from 565 ms to 713 ms over the same distances. These results show that a larger packet size increases latency, while a greater antenna height decreases it by improving the quality of the signal.

RSSI values likewise depended on the packet size, antenna height, and distance. For 32-byte packets, the RSSI values were higher (nearer 0 dBm) at short distances from the transmitter node and fell gradually as the distance increased. At 1 m antenna height, the average RSSI dropped from −24.82 dBm at 0 m to −111.15 dBm at 500 m. Likewise, at 2 m, RSSI fell from −52.15 dBm to −116.12 dBm over the same distances. The pattern was similar for 64-byte packets, with lower RSSI overall: at an antenna height of 1 m, readings started from −56.3 dBm at 0 m and decreased to −126.75 dBm at 500 m. Increasing the antenna height to 2 m improved the RSSI at long range, with values from −57.31 dBm at 0 m to −112.38 dBm at 500 m.
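To make the endpoint comparison concrete, the short sketch below computes, for each configuration, how much latency grows and how much RSSI falls between 0 m and 500 m. It uses only the endpoint values quoted in the text; the dictionary layout and the function name `summarize` are illustrative, and the intermediate distances in Table 3 are not used.

```python
# (packet size in bytes, antenna height in m):
#   (latency at 0 m, latency at 500 m in ms, RSSI at 0 m, RSSI at 500 m in dBm)
endpoints = {
    (32, 1): (225, 350, -24.82, -111.15),
    (32, 2): (210, 330, -52.15, -116.12),
    (64, 1): (745, 806, -56.30, -126.75),
    (64, 2): (565, 713, -57.31, -112.38),
}


def summarize(endpoints):
    """Return {config: (latency increase in ms, RSSI drop in dB)}."""
    return {
        cfg: (lat_500 - lat_0, round(rssi_0 - rssi_500, 2))
        for cfg, (lat_0, lat_500, rssi_0, rssi_500) in endpoints.items()
    }


summary = summarize(endpoints)
for (size, height), (d_lat, d_rssi) in summary.items():
    print(f"{size} B, {height} m: +{d_lat} ms latency, -{d_rssi} dB RSSI over 500 m")
```

Comparing the absolute latencies in the dictionary shows the packet-size effect directly: every 64-byte figure is more than double the corresponding 32-byte figure at the same antenna height.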

The results show that packet size, antenna height, and distance determine system performance, so these parameters need to be chosen carefully. A smaller packet size and a greater antenna height are preferable wherever rapid, dependable communication with strong RSSI is needed. Larger packets, in contrast, have higher latency and weaker signal strength, and how much this matters depends on the application and environment. The results also confirm the expected decline in performance with distance and therefore support careful design of antenna position and signal strength to ensure dependable performance over large distances.

We chose 32-byte and 64-byte packet sizes to balance latency, signal strength, and transmission efficiency for emergency communication in our LPWAN-based Assistive Response System (LARS). We observed that latency was lower and RSSI was stronger with the smaller packet size (32 bytes), which makes it suitable for time-sensitive alerts, whereas the larger packet size (64 bytes) carries more data at the cost of additional delay. Previous studies [3,7] have also considered similar packet sizes in LoRaWAN and LPWAN communication systems because of the need to balance network performance, energy efficiency, and real-time responsiveness for assistive technologies. In the experimental analysis of the LPWAN implementation, we observed RSSI values closer to zero under cold or rainy weather conditions than under normal or hot weather conditions. In addition, low-light conditions, high traffic density, and noisy environments are factors to be considered. Object detection may need an optimized sensor configuration to remain accurate in low light, and dense traffic that causes congestion can severely impair real-time processing and decision-making.

Our system achieves significant improvements in performance. The YOLOv5 model provides high object detection accuracy, so that obstacles, pathways, and guiding tools can be identified. Our experimental results show that the framework has real-time applicability, reducing latency and enhancing signal strength under varying environmental conditions. The edge computing paradigm improves decision-making speed, which makes the system more responsive to the needs of visually impaired users. In terms of cost-effectiveness, LPWAN technology (LoRaWAN) offers long-range, low-power communication, which makes it a cost-effective option compared with traditional wireless networks. The use of edge computing reduces the need for high-bandwidth cloud processing, lowering operational costs and improving system efficiency. At the same time, the system makes use of existing IoT infrastructure such as wearable or portable devices and does not require high-powered specialized hardware.