IoTMindCare: An Integrative Reference Architecture for Safe and Personalized IoT-Based Depression Management

Abstract

1. Introduction

- An identification of core challenges in IoT-based depression management through a structured literature review (Section 2);

- A modular, extensible reference architecture (Section 3) that supports heterogeneous sensors, edge/cloud analytics, safety protocols, and personalization;

- A demonstration of the proposed architecture’s applicability through a concrete IoT depression care system that delivers proactive, ambient, and user-aware support, together with related evaluations (Section 4).

2. Related Works

- Large-scale smart-home and Activities of Daily Living (ADL)/Human Activity Recognition (HAR) platforms: Large-scale initiatives and datasets, such as the SMART BEAR consortium [9], which targets heterogeneous clinical and consumer sensors for aging-in-place pilots, and contemporary SPHERE [10] releases with multi-sensor annotated datasets, show how diverse sensor suites can be combined for robust ADL inference and longitudinal baselining. Recent work on virtual smart-home simulators and digital twins provides practical tools for testing ADL recognition and failure modes at scale. Meanwhile, contemporary reviews and studies on HAR system lifespan, semi-supervised learning, and ensemble methods highlight modern approaches for handling concept drift and label scarcity in long-term deployments [11]. These efforts collectively provide updated empirical and methodological evidence that multi-modal fusion, simulator-based validation, and hybrid learning pipelines improve robustness and reduce failure modes. These lessons directly motivated IoTMindCare’s modular Sensing Layer, fallback sensing strategies, and Personalization Engine design.

- Edge–cloud orchestration and privacy-preserving learning: Edge–fog–cloud orchestration frameworks and federated/on-device learning solutions, such as FedHome [5], VitalSense [12], and related edge-IoT work, inform placement decisions for training, inference, and data minimization. They motivated our hybrid deployment choices, including edge-first detection for latency and privacy and cloud-based personalization for long-term adaptation, and shaped the Personalization Engine and deployment recipes in IoTMindCare. Similarly, Benrimoh et al. [13] explore individualized therapy recommendations using deep neural networks. While their focus is on clinical personalization, IoTMindCare complements such approaches by providing an architectural foundation for integrating these models within IoT-based monitoring systems.

- Safety, escalation, and multi-stage verification: Ambient Assisted Living (AAL) pilots and alarm-management projects, such as [14,15], emphasize the operational need for multi-stage verification and caregiver-in-the-loop escalation to reduce false alarms while ensuring timely response. These operational patterns motivated IoTMindCare’s Safety Engine and tiered alert/escalation policy.

- Algorithmic and modality advances (explainability and contactless sensing): Advances in explainable wearable models and digital-twin/hierarchical detection pipelines provide mechanisms for clinician-facing explainability and hypothesis testing before escalation [6,16]. Complementary research on contactless modalities, such as radar and Wi-Fi CSI, provides privacy-respecting fallback sensing options when wearables are unavailable [17]. IoTMindCare integrates these algorithmic and modality-oriented lessons through explainable interfaces and contactless sensing options, with strict local privacy controls in place.

2.1. Operational, Privacy, and Standards

2.2. Synthesis: How the Literature Shaped IoTMindCare

- Sensing layer: multi-modal fusion, fallback sensing, and passive modalities from ADL/HAR platforms inform sensor selection and local rules.

- Safety Engine: multi-stage verification and caregiver-in-the-loop designs from AAL pilots shape alert policies and escalation workflows.

- Personalization Engine: federated and edge–cloud learning patterns guide where to place model updates and how to preserve privacy while enabling per-user adaptation.

- Deployment and standards: edge–fog–cloud orchestration patterns and interoperability standards guide practical integration and long-term maintainability.

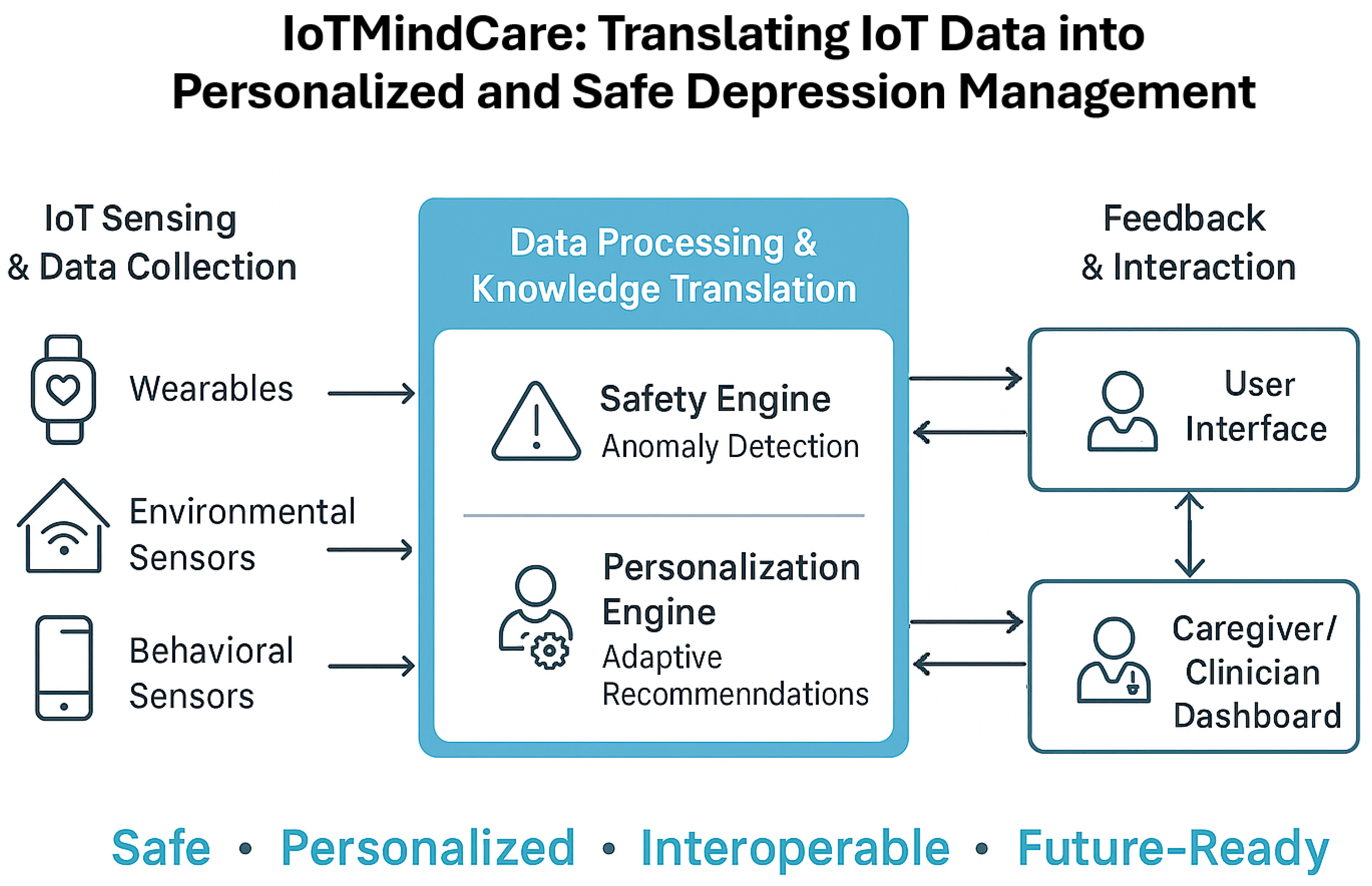

3. IoTMindCare: Proposed Reference Architecture

3.1. Architectural Drivers

3.1.1. Functional Requirements

- F1: Continuous Monitoring or sensing of physiological, behavioral, and environmental signals.

- F2: Context-Aware Decision-Making over multimodal data within spatio-temporal and behavioral contexts.

- F3: Alert Generation and Escalation to caregivers or clinicians in response to detected risk conditions.

- F4: User and Clinician Interfaces for stakeholders.

- F5: Personalized Feedback and intervention mechanisms tailored to individual users.

3.1.2. Quality Attribute Scenarios

- QAS1: Safety: The system must detect critical events, such as depressive crises or self-harm, and respond with timely alerts using a probabilistic, context-aware anomaly detection model (Table 2). Detection accuracy, timeliness, and escalation logic are critical. This includes handling uncertainty through a confidence scoring model and minimizing the False Positive Rate (FPR) and False Negative Rate (FNR) as primary operational objectives. Events are cross-referenced with historical and contextual data to improve detection reliability.

- QAS2: Personalization: The system must adapt dynamically to users’ physiological and behavioral baselines, with support for partial or heterogeneous device configurations (Table 3). When sensors are missing, alternative data (manual input, AI-inferred metrics) must substitute for automated sensing. Personalization includes dynamic switching between modes, user-specific thresholds, and real-time updates to decision logic.
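QAS1 and QAS2 can be read together as a fusion step: each signal is taken from the best available source, scored against the user's own baseline, and weighted by how much that source is trusted. The following is a minimal sketch under stated assumptions, not the paper's confidence scoring model. The `Reading` type, the `SOURCE_WEIGHT` values, and the 3-standard-deviation saturation are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical trust weights per data source (assumed values, not from the paper).
SOURCE_WEIGHT = {"sensor": 1.0, "manual": 0.6, "inferred": 0.4}


@dataclass
class Reading:
    value: float   # observed value of the signal
    source: str    # "sensor", "manual", or "inferred"


def resolve(sensor: Optional[float], manual: Optional[float],
            inferred: Optional[float]) -> Optional[Reading]:
    """Pick the most trusted available source for one signal."""
    for source, value in (("sensor", sensor), ("manual", manual), ("inferred", inferred)):
        if value is not None:
            return Reading(value, source)
    return None  # signal unavailable from every source


def confidence(readings: dict, baseline: dict) -> float:
    """Weighted mean of per-signal deviations from the user's baseline, in [0, 1].

    baseline maps signal name -> (mean, std) for this user.
    """
    num = den = 0.0
    for name, reading in readings.items():
        if reading is None or name not in baseline:
            continue  # tolerate partial device configurations
        mean, std = baseline[name]
        z = abs(reading.value - mean) / std if std > 0 else 0.0
        deviation = min(z / 3.0, 1.0)   # saturate at 3 standard deviations
        weight = SOURCE_WEIGHT[reading.source]
        num += weight * deviation
        den += weight
    return num / den if den else 0.0
```

Down-weighting manual and inferred values is one way to let substituted data contribute without letting it dominate the score; a deployed system would need to validate such weights against labeled events.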

3.1.3. Technical Constraints

- TC1: Limited Edge Resources necessitate lightweight and distributed processing.

- TC2: Privacy Constraints require local processing and adherence to privacy legislation.

- TC3: Device Heterogeneity requires the system to tolerate and adapt to variable device configurations.

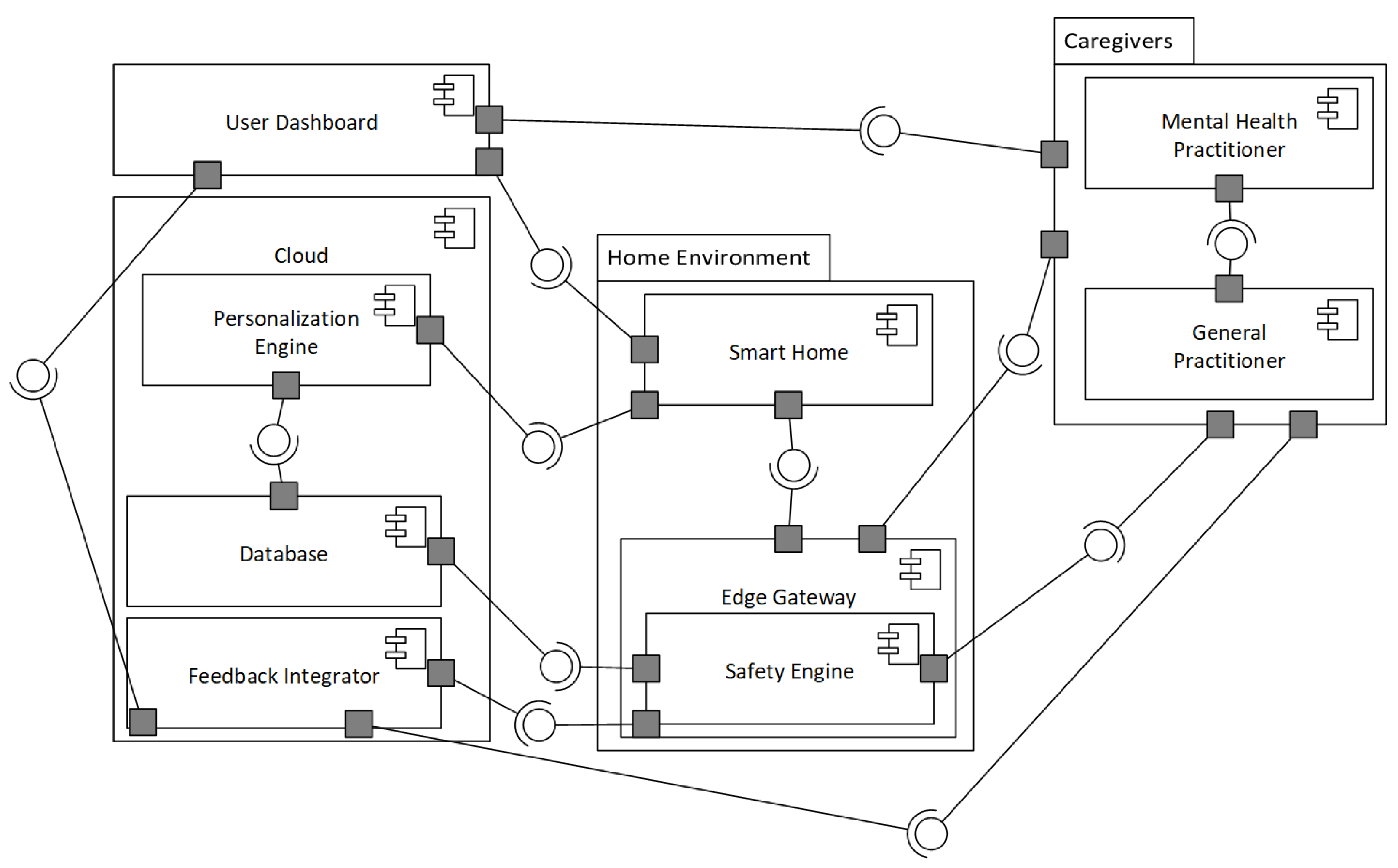

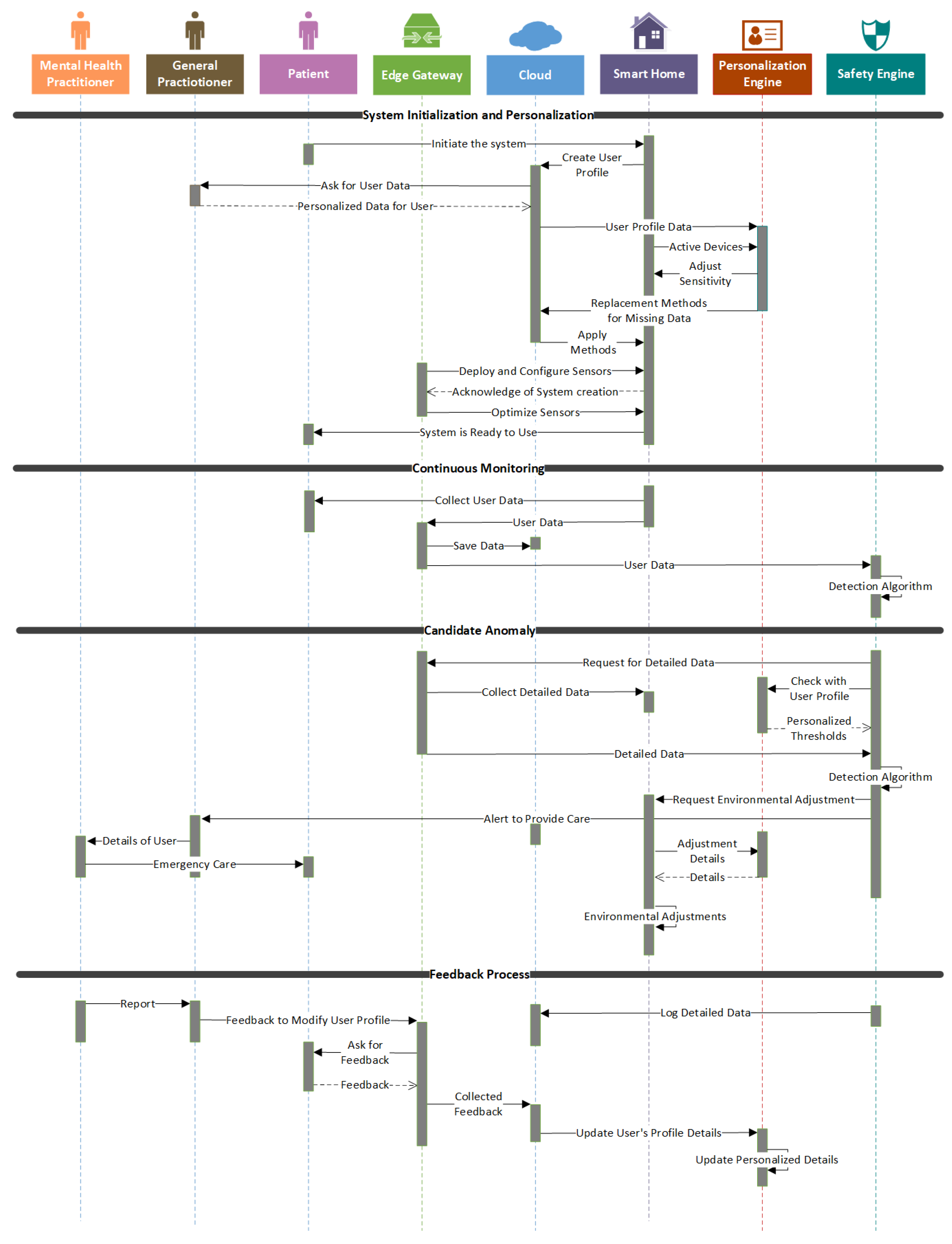

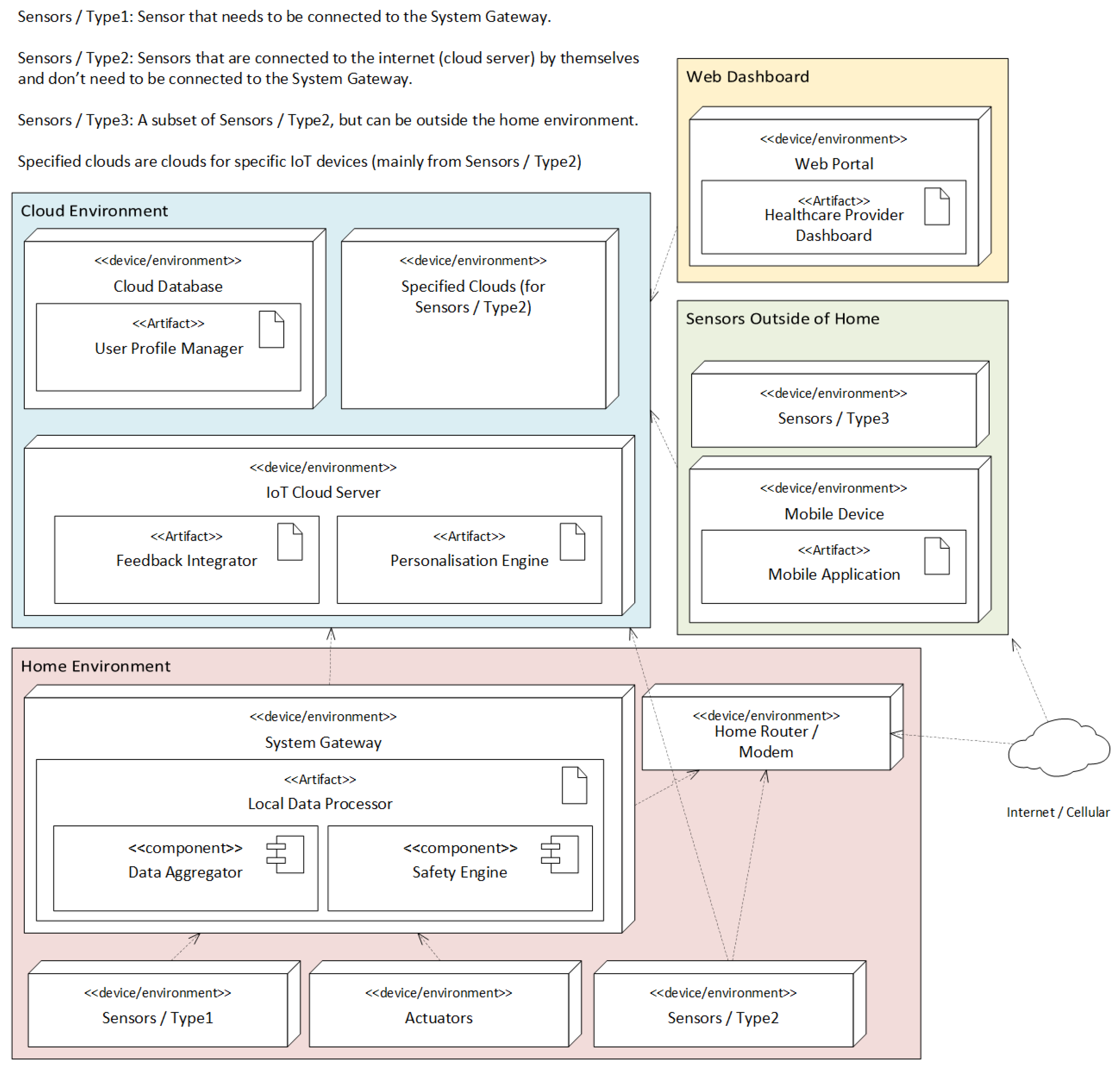

3.2. Architecture Views

3.2.1. Logical View

3.2.2. Development View

3.2.3. Process View

3.2.4. Deployment View

4. Architecture Evaluation

- Tradeoff Analysis

- Safety vs. Personalization: A central tradeoff exists between safety and personalization. Personalization improves detection sensitivity (reducing FNR) by adapting to individual baselines; however, overly narrow per-user thresholds may increase FPR if not rigorously validated. IoTMindCare mitigates this via a tiered verification pipeline (edge trigger → cloud contextual validation → caregiver confirmation), which delegates final anomaly verification to a centralized cloud component (Figure 2), and via feedback-driven recalibration, which integrates feedback from caregivers (Figure 3) to calibrate and improve models iteratively.

- Feedback Loops vs. Timeliness: Incorporating caregiver and user feedback into anomaly detection loops (Figure 3) helps reduce FPR and FNR over time. However, this introduces delays in model retraining and potential inconsistencies during transition phases. The architecture mitigates this by decoupling feedback ingestion from real-time alerting, maintaining responsiveness while still enabling long-term model refinement.

- Local Autonomy vs. Central Oversight: To ensure availability and responsiveness, the system gateway performs initial anomaly evaluation at the edge (Figure 4). This supports low-latency decision-making and offers resilience during cloud disconnection, contributing to safety (FNR reduction). However, relying solely on edge intelligence limits access to broader contextual information needed to reduce FPRs. As a result, the architecture introduces a tiered decision-making pipeline: edge components perform preliminary assessments, while cloud services provide contextual validation to reduce FPRs. This design reflects a deliberate trade-off between local autonomy and central oversight.
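The tiered pipeline described in these tradeoffs can be sketched as a small decision function. This is an illustrative sketch only: the threshold values, the `Action` names, and the choice to downgrade an unconfirmed candidate to an in-situ prompt are assumptions, not behavior specified by the architecture. The `cloud_validate` callback is a hypothetical stand-in for cloud contextual validation, and passing `None` models a cloud disconnection.

```python
from enum import Enum
from typing import Callable, Optional


class Action(Enum):
    NONE = "none"
    EDGE_PROMPT = "edge_prompt"          # in-situ check-in with the user
    CAREGIVER_ALERT = "caregiver_alert"  # escalate for human confirmation


# Assumed thresholds, for illustration only.
EDGE_TRIGGER = 0.5
CLOUD_CONFIRM = 0.7
EDGE_ONLY_ESCALATE = 0.9   # stricter bar when the cloud cannot validate


def decide(edge_score: float,
           cloud_validate: Optional[Callable[[float], float]]) -> Action:
    """Tiered decision: edge trigger -> cloud contextual validation -> caregiver."""
    if edge_score < EDGE_TRIGGER:
        return Action.NONE
    if cloud_validate is None:
        # Cloud disconnected: local safety logic keeps running on the gateway.
        if edge_score >= EDGE_ONLY_ESCALATE:
            return Action.CAREGIVER_ALERT
        return Action.EDGE_PROMPT
    validated = cloud_validate(edge_score)
    if validated >= CLOUD_CONFIRM:
        return Action.CAREGIVER_ALERT
    return Action.EDGE_PROMPT
```

Keeping an edge-only escalation path preserves the safety benefit of local autonomy (fewer missed events during outages), while the stricter `EDGE_ONLY_ESCALATE` bar limits the false alarms that would otherwise be filtered by cloud context.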

4.1. Comparison with Existing Architectures

- Incorporating home environmental sensors.

- Employing multi-stage anomaly verification to manage FPR/FNR tradeoffs.

- Supporting feedback-driven model adaptation with user/caregiver input.

- Enabling edge-first inference policies for low latency and privacy.

4.2. Scenario-Driven Instantiation and Configuration

Representative Scenarios

- Early deterioration detection (gradual mood decline): Long-term ADL/sleep/activity drift that signals worsening depression (motivated by SMART BEAR [9]).

- Acute stress episode (physiological spike): Short-term HRV and activity spikes detected by wearables requiring brief interventions or prompting (wearable explainability studies [16]).

- Contactless monitoring for privacy-sensitive users: Use radar/Wi-Fi channel state information and on-device inference where wearables are infeasible (contactless systems [17]).

- Teletherapy-triggered sensing: Trigger richer sensing or clinician contact when conversational agents or self-report apps indicate increased risk (integration with digital therapeutics).

- Select sensors and signals relevant to the scenario.

- Choose model placement: edge-first for low-latency safety scenarios; cloud-enabled personalization for long-term baselining and retraining.

- Define escalation policy: map detection confidence levels to tiered actions (edge prompt, cloud validation, caregiver/clinician alert) within the Safety Engine.

- Personalize and test: initialize thresholds from population priors, then adapt per-user via the Feedback Integrator. Use concrete quantitative validation metrics, such as reductions in FNR, reductions in FPR, improvements in sensitivity/specificity, and changes in detection and escalation latency, to evaluate the personalization gain.

- Privacy and failover: apply on-device preprocessing, encrypted model updates, or federated learning, and contactless fallbacks where wearables are refused.
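The "personalize and test" step above can be made concrete by measuring FPR and FNR before and after a feedback-driven threshold change. The sketch below assumes a single scalar alert threshold and a fixed adjustment step. Both are simplifications, and the `rates` and `adapt_threshold` helpers and the feedback labels are hypothetical names, not components of the Feedback Integrator.

```python
def rates(scores, labels, threshold):
    """Return (FPR, FNR) for alerts fired when score >= threshold."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    negatives = sum(1 for y in labels if not y)
    positives = sum(1 for y in labels if y)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


def adapt_threshold(threshold, feedback, step=0.05, lo=0.1, hi=0.9):
    """Nudge a threshold using caregiver/user feedback.

    feedback is an iterable of "false_alarm" or "missed_event" strings;
    the result is clamped to [lo, hi] so feedback cannot disable alerting.
    """
    for item in feedback:
        if item == "false_alarm":
            threshold += step      # raise the bar: fewer alerts
        elif item == "missed_event":
            threshold -= step      # lower the bar: more alerts
        threshold = min(max(threshold, lo), hi)
    return threshold


# Usage: start from a population prior, then adapt for one user.
scores = [0.2, 0.4, 0.55, 0.65, 0.8]
labels = [False, False, True, False, True]   # True = real event
prior = 0.6
personal = adapt_threshold(prior, ["missed_event"])
before = rates(scores, labels, prior)
after = rates(scores, labels, personal)
```

In this toy data, lowering the threshold after one missed-event report removes the missed event without adding a false alarm; real gains would have to be confirmed on held-out data per user.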

4.3. Limitations and Threats to Validity

- Construct Validity: Behavioral and physiological signals may not fully capture the complex psychological aspects of depression and have not been clinically validated against gold-standard diagnostic tools.

- External Validity: The system has not yet been deployed across diverse demographics, socioeconomic groups, or cultural contexts, limiting generalizability.

- Ethical and Legal Constraints: The system has not undergone formal ethical review or compliance assessment with regulations such as GDPR or HIPAA, especially regarding passive data collection.

- Technical and Ecological Validity: The architecture assumes consistent sensor reliability, user compliance, and stable network conditions; real-world variability and behavioral shifts due to monitoring awareness may affect performance.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| IoT | Internet of Things |

| ADD | Attribute-Driven Design |

| ATAM | Architecture Trade-off Analysis Method |

| MQTT | Message Queuing Telemetry Transport |

| CoAP | Constrained Application Protocol |

| UML | Unified Modeling Language |

| ADL | Activities of Daily Living |

| HAR | Human Activity Recognition |

| AAL | Ambient Assisted Living |

| QAS | Quality Attribute Scenario |

| FPR | False Positive Rate |

| FNR | False Negative Rate |

References

- World Health Organization. Depression. Available online: https://www.who.int/news-room/fact-sheets/detail/depression (accessed on 28 May 2025).

- Ochnik, D.; Buława, B.; Nagel, P.; Gachowski, M.; Budziński, M. Urbanization, loneliness and mental health model—A cross-sectional network analysis with a representative sample. Sci. Rep. 2024, 14, 24974.

- Zhang, Y.; Jia, X.; Yang, Y.; Sun, N.; Shi, S.; Wang, W. Change in the global burden of depression from 1990–2019 and its prediction for 2030. J. Psychiatr. Res. 2024, 178, 16–22.

- Simon, G.E.; Moise, N.; Mohr, D.C. Management of depression in adults: A review. JAMA 2024, 332, 141–152.

- Wu, Q.; Chen, X.; Zhou, Z.; Zhang, J. FedHome: Cloud-edge based personalized federated learning for in-home health monitoring. IEEE Trans. Mob. Comput. 2020, 21, 2818–2832.

- Gupta, D.; Kayode, O.; Bhatt, S.; Gupta, M.; Tosun, A.S. Hierarchical federated learning based anomaly detection using digital twins for smart healthcare. In Proceedings of the 2021 IEEE 7th International Conference on Collaboration and Internet Computing (CIC), Virtual Event, 13–15 December 2021; pp. 16–25.

- Cervantes, H.; Kazman, R. Designing Software Architectures: A Practical Approach; Addison-Wesley Professional: Boston, MA, USA, 2024.

- Angelov, S.A.; Trienekens, J.J.M.; Grefen, P.W.P.J. Extending and Adapting the Architecture Tradeoff Analysis Method for the Evaluation of Software Reference Architectures; Technische Universiteit Eindhoven: Eindhoven, The Netherlands, 2014.

- SMART BEAR Consortium. Smart Big Data Platform to Offer Evidence-Based Personalised Support for Healthy and Independent Living at Home. Horizon 2020 Project. 2020. Available online: https://smart-bear.eu/ (accessed on 20 August 2025).

- Tonkin, E.L.; Holmes, M.; Song, H.; Twomey, N.; Diethe, T.; Kull, M.; Craddock, I. A multi-sensor dataset with annotated activities of daily living recorded in a residential setting. Sci. Data 2023, 10, 162.

- Patricia, A.C.P.; Rosberg, P.C.; Butt-Aziz, S.; Alberto, P.M.M.; Roberto-Cesar, M.O.; Miguel, U.T.; Naz, S. Semi-supervised ensemble learning for human activity recognition in CASAS Kyoto dataset. Heliyon 2024, 10, e29398.

- Rodrigues, V.F.; da Rosa Righi, R.; da Costa, C.A.; Zeiser, F.A.; Eskofier, B.; Maier, A.; Kim, D. Digital health in smart cities: Rethinking the remote health monitoring architecture on combining edge, fog, and cloud. Health Technol. 2023, 13, 449–472.

- Benrimoh, D.; Fratila, R.; Israel, S.; Perlman, K. Deep Learning: A New Horizon for Personalized Treatment of Depression? McGill J. Med. 2018, 16, 1–6.

- Aalam, J.; Shah, S.N.A.; Parveen, R. Personalized Healthcare Services for Assisted Living in Healthcare 5.0. Ambient Assist. Living 2025, 203–222.

- Jovanovic, M.; Mitrov, G.; Zdravevski, E.; Lameski, P.; Colantonio, S.; Kampel, M.; Florez-Revuelta, F. Ambient assisted living: Scoping review of artificial intelligence models, domains, technology, and concerns. J. Med. Internet Res. 2022, 24, e36553.

- Zhang, Y.; Folarin, A.A.; Stewart, C.; Sankesara, H.; Ranjan, Y.; Conde, P.; Choudhury, A.R.; Sun, S.; Rashid, Z.; Dobson, R.J.B. An Explainable Anomaly Detection Framework for Monitoring Depression and Anxiety Using Consumer Wearable Devices. arXiv 2025, arXiv:2505.03039.

- Li, A.; Bodanese, E.; Poslad, S.; Chen, P.; Wang, J.; Fan, Y.; Hou, T. A contactless health monitoring system for vital signs monitoring, human activity recognition, and tracking. IEEE Internet Things J. 2023, 11, 29275–29286.

- Health Level Seven International (HL7). HL7 FHIR (Fast Healthcare Interoperability Resources); Technical Report; Release 5.0.0 (R5–STU); HL7 International: Ann Arbor, MI, USA, 2023; Available online: https://hl7.org/fhir/ (accessed on 29 June 2025).

- openEHR International. openEHR—The Future of Digital Health Is Open. Available online: https://openehr.org/ (accessed on 29 June 2025).

- FIWARE Foundation. FIWARE: Open APIs for Open Minds—Open-Source Smart Applications Platform. Available online: https://www.fiware.org/ (accessed on 29 June 2025).

- IEEE Std 11073-10701–2022/ISO/IEEE 11073-10701:2024; Health Informatics—Device Interoperability—Part 10701: Point-of-Care Medical Device Communication—Metric Provisioning by Participants in a Service-Oriented Device Connectivity (SDC) System. IEEE: New York, NY, USA, 2022.

- Prochaska, J.J.; Vogel, E.A.; Chieng, A.; Kendra, M.; Baiocchi, M.; Pajarito, S.; Robinson, A. A therapeutic relational agent for reducing problematic substance use (Woebot): Development and usability study. J. Med. Internet Res. 2021, 23, e24850.

- Wysa: Your AI-Powered Mental Wellness Companion. Touchkin eServices Pvt Ltd. Available online: https://www.wysa.com/ (accessed on 28 May 2025).

- BetterHelp Editorial Team. How Effective Is Online Counseling for Depression? Available online: https://www.betterhelp.com/ (accessed on 29 April 2025).

- Roble Ridge Software LLC. Moodfit: Tools & Insights for Your Mental Health. Available online: https://www.getmoodfit.com/ (accessed on 29 April 2025).

- Healthify He Puna Waiora, NZ. T2 Mood Tracker App. Available online: https://healthify.nz/apps/t/t2-mood-tracker-app (accessed on 29 April 2025).

- Software Engineering Design Research Group. Quality Attribute Scenario Template—Design Practice Repository. Available online: https://socadk.github.io/design-practice-repository/artifact-templates (accessed on 18 May 2025).

- Akhtar, K.; Yaseen, M.U.; Imran, M.; Khattak, S.B.A.; Nasralla, M.M. Predicting inmate suicidal behavior with an interpretable ensemble machine learning approach in smart prisons. PeerJ Comput. Sci. 2024, 10, e2051.

- Karnouskos, S.; Sinha, R.; Leitão, P.; Ribeiro, L.; Strasser, T.I. The applicability of ISO/IEC 25023 measures to the integration of agents and automation systems. In Proceedings of the IECON 2018—44th Annual Conference of the IEEE Industrial Electronics Society, Washington, DC, USA, 21–23 October 2018; pp. 2927–2934.

- Cheikhrouhou, O.; Mershad, K.; Jamil, F.; Mahmud, R.; Koubaa, A.; Moosavi, S.R. A lightweight blockchain and fog-enabled secure remote patient monitoring system. Internet Things 2023, 22, 100691.

- Kruchten, P.B. The 4+1 view model of architecture. IEEE Softw. 1995, 12, 42–50.

- Nasiri, S.; Sadoughi, F.; Dehnad, A.; Tadayon, M.H.; Ahmadi, H. Layered Architecture for Internet of Things-based Healthcare System. Informatica 2021, 45, 543–562.

- Lamonaca, F.; Scuro, C.; Grimaldi, D.; Olivito, R.S.; Sciammarella, P.F.; Carnì, D.L. A layered IoT-based architecture for a distributed structural health monitoring system. Acta Imeko 2019, 8, 45–52.

- Choi, J.; Lee, S.; Kim, S.; Kim, D.; Kim, H. Depressed mood prediction of elderly people with a wearable band. Sensors 2022, 22, 4174.

- Richardson, L.; Ruby, S. RESTful Web Services; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008.

- Aazam, M.; Zeadally, S.; Harras, K.A. Deploying fog computing in industrial internet of things and industry 4.0. IEEE Trans. Ind. Inform. 2018, 14, 4674–4682.

- Razzaque, M.A.; Milojevic-Jevric, M.; Palade, A.; Clarke, S. Middleware for internet of things: A survey. IEEE Internet Things J. 2015, 3, 70–95.

- CarePredict. CarePredict: AI-Powered Senior Care Platform. Available online: https://www.carepredict.com/ (accessed on 6 June 2025).

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Archit. 2019, 98, 289–330.

- Perera, C.; Qin, Y.; Estrella, J.C.; Reiff-Marganiec, S.; Vasilakos, A.V. Fog computing for sustainable smart cities: A survey. ACM Comput. Surv. (CSUR) 2017, 50, 1–43.

- Dastjerdi, A.V.; Gupta, H.; Calheiros, R.N.; Ghosh, S.K.; Buyya, R. Fog computing: Principles, architectures, and applications. In Internet of Things; Elsevier: Amsterdam, The Netherlands, 2016; pp. 61–75.

- Idrees, Z.; Zou, Z.; Zheng, L. Edge computing based IoT architecture for low cost air pollution monitoring systems: A comprehensive system analysis, design considerations & development. Sensors 2018, 18, 3021.

- Kazman, R.; Klein, M.; Clements, P. ATAM: Method for Architecture Evaluation; Carnegie Mellon University, Software Engineering Institute: Pittsburgh, PA, USA, 2000.

- Cook, D.; Schmitter-Edgecombe, M.; Crandall, A.; Sanders, C.; Thomas, B. Collecting and disseminating smart home sensor data in the CASAS project. In Proceedings of the CHI Workshop on Developing Shared Home Behavior Datasets to Advance HCI and Ubiquitous Computing Research, Boston, MA, USA, 4–9 April 2009; pp. 1–7.

| System/Reference | Focus Area | Key Technologies | Unique Contributions/Features | Limitations Addressed by IoTMindCare |

|---|---|---|---|---|

| SMART BEAR [9] | Healthy aging, large-scale IoT monitoring | Heterogeneous sensors, AI-based analytics, cloud integration | Personalized interventions for older adults using real-world evidence; scalable data fusion platform | Focuses on physical health; lacks adaptive stress and mood analytics |

| SPHERE [10] | Multi-sensor home monitoring, ADL/HAR | Video, wearable, and environmental sensing; ML-based ADL inference | Provides open datasets and multimodal monitoring for chronic conditions | Does not address personalization or dynamic emotional context |

| CASAS [11] | HAR and anomaly detection | Semi-supervised ensemble learning, temporal features | Enhanced HAR accuracy in complex environments | No user-centered personalization or mental health context |

| VitalSense [12] | Smart city and remote health monitoring | Edge–fog–cloud hierarchy, digital twins, adaptive routing | Low-latency IoT architecture for continuous health monitoring | Lacks domain-specific decision logic for emotional wellbeing |

| AAL Healthcare 5.0 [14] | Assisted living, healthcare personalization | IoT–AI integration, context awareness, user modeling | Personalized ambient intelligence and health prediction | No explainable AI or caregiver feedback integration |

| AAL AI Review [15] | AI in AAL | ML and DL algorithms, cognitive computing, privacy concerns | Comprehensive review highlighting challenges in transparency and interoperability | IoTMindCare addresses these gaps via explainable reasoning and standardized APIs |

| Explainable Wearable Monitoring [16] | Depression/anxiety detection from wearables | Explainable anomaly detection, multimodal fusion | Transparent AI model linking sensor data to mental state changes | Lacks a modular IoT reference architecture or cross-platform interoperability |

| Contactless IoT Health [17] | Vital signs and ADL tracking | Radar, thermal, and computer vision-based sensing | Comprehensive non-invasive monitoring system with motion tracking | No personalized feedback or multi-stakeholder (user–clinician) data flow |

| Digital Therapeutic Apps [22,23,24,25,26] | AI chatbots, cognitive behavioral therapy, tele-therapy | Natural Language Processing-based conversational agents, self-tracking apps | Real-time emotional support and mood tracking through mobile interfaces | Standalone, not integrated with physiological or contextual IoT data |

| Stimulus | |

| Stimulus Source | |

| Environment | |

| Artifact | |

| Response | |

| Response Measure | |

| Stimulus | |

| Stimulus Source | |

| Environment | |

| Artifact | |

| Response | |

| Response Measure | |

| Interface Layer (user interface on mobile devices and actuators, caregiver’s access via dashboard, and feedback mechanism to upgrade the system) | Personalization (supporting device variability, customized treatments, user-specific thresholds, and behavioral diversity) | Safety (anomalous behavior detection by continuous monitoring, real-time alerts to caregivers, and environmental adjustments) |

| Service Layer (real-time monitoring, automated alerts by decision-making, environmental adjustments) | ||

| Data Processing and Storage (local processing for real-time and rule-based triggers, cloud processing for long-term behavioral analytics, and data storage) | ||

| Network and Communication (local communication, gateway, remote communication, ensuring reliable data exchange between system components) | ||

| Sensing (collect multimodal data from physiological, environmental, and behavioral sensors, depending on sensors’ availability) | ||

| QAS | Relevant Components/Diagrams | Validation Evidence | Component-Level Mapping (Figure 2) | Behavioral-Level Mapping (Figure 3) | Deployment-Level Mapping (Figure 4) |

|---|---|---|---|---|---|

| Safety (FPR) (Table 2) | Safety Engine (Figure 2 and Figure 4); Alert Generation (Candidate Anomaly Section in Figure 3) | Alert verification by checking with the edge gateway to reduce incorrect alerts | Multi-layer verification across the System Gateway and Cloud | Reduced by context-aware confirmation in the cloud before triggering alerts | Ensuring local anomaly detection continues during network downtime (edge computing on the System Gateway) |

| Safety (FNR) (Table 2) | Safety Engine (Figure 2 and Figure 4); caregiver and user feedback via the Feedback Integrator (Feedback Process Section in Figure 3) | Feedback integration to detect missed anomalies | Caregiver and user interfaces that facilitate feedback loops and missed-event reporting | Addressed via caregiver and user feedback paths feeding into the anomaly model | Ensuring local anomaly detection continues during network downtime (edge computing on the System Gateway) |

| Personalization (Table 3) | Personalization Engine (Figure 2 and Figure 4); User Dashboard (Figure 2 and Figure 4); User Profile on Cloud (Figure 4); integrating different sensors (Figure 4); requesting user data from the General Practitioner (Figure 3); adjusting devices and sensitivity (Figure 3) | Adaptable thresholds; behavioral baselining in algorithms; user-specific anomaly models checked against the user profile; setup based on the user’s devices | User module to build user-specific behavioral models and adapt thresholds over time; system configuration based on the user’s specified devices | Individualized thresholds used during verification, and using the user’s available set of devices | Hosting behavioral models and user data in the cloud enables modifications as user thresholds change |

| Feature | Zhang et al. [16] | Wu et al. [5] | Gupta et al. [6] | IoTMindCare |

|---|---|---|---|---|

| Data Sources (See Logical View in Section 3.2.1) | Wearables | Wearables/Home Sensors | Wearables/Digital Twins | Wearables/IoT/Environment |

| Personalization (See Process View in Section 3.2.3) | Global Model | User-Specific Models | Group-Based Models | User Profile/Feedback-Driven |

| Explainability (See Development View in Section 3.2.2) | SHAP | – | – | Human-in-Loop/Logic Layers and Modules |

| FPR/FNR Strategy (See Process View in Section 3.2.3) | Global Threshold | Personalized FL | Multilevel Validation | Multi-Stage/Feedback |

| Deployment Topology (See Deployment View in Section 3.2.4) | – | Edge-Cloud Based FL | Hierarchical Edge–Cloud | Hybrid Edge–Cloud with Fallback and Local Safety Logic |

| Scenario | Key Signals/Sensors | Source (Examples) | Relevant Views | Primary QAS & Config Notes |

|---|---|---|---|---|

| Early deterioration detection | Activity patterns, sleep trends, phone/PC usage, longitudinal HR/actigraphy | SMART BEAR [9] | Logical, Deployment, Process | QAS2. Longitudinal baselining in Personalization Engine; cloud-hosted model training with scheduled recalibration; edge summarization to preserve privacy. Use drift-detection and periodic retraining hooks; measure FPR, FNR, sensitivity, specificity, detection latency, and convergence rate. |

| Acute crisis detection | HRV, speech content/prosody, sudden inactivity, environmental context | AAL reviews/safety pilots [14,15] | Service, Process, Deployment | QAS1. Edge-first low-latency detection (Safety Engine) with multi-stage confirmation: edge trigger → cloud contextual validation → caregiver escalation. Configure conservative thresholds + high-confidence escalation; log detection latency and sensitivity/specificity. |

| Sleep disturbance monitoring | Bed-pressure, respiration (radar/CSI), actigraphy | SPHERE [10], Li et al. [17] | Logical, Deployment | QAS2 and QAS1. Use contactless modalities where wearables unavailable; process on edge for privacy; personalize sleep thresholds per-user; validate with sleep-specific sensitivity score and detection-latency metrics. |

| Social isolation/withdrawal | Reduced movement, decreased device interactions, fewer outgoing calls | CASAS/HAR literature [10,11] | Logical, Process, Development | QAS2. Personalization Engine tracks social-activity baselines; thresholds adapted via Feedback Integrator; A/B-style trials to compare static vs. adaptive thresholds; measure reduction in FNR for isolation detection. |

| Medication/routine deviation | Smart-plug, cabinet/door contacts, schedule adherence logs | SMART BEAR [9], AAL reviews [15] | Development, Deployment | QAS1 & QAS2. Edge acknowledgement prompts (in-situ) before escalation; local rule filters + cloud validation; integrate clinician-configured escalation policies. Monitor the FPR of missed-medication alerts. |

| Acute stress episode | Wearable HR/HRV, accelerometer, short-term speech features | Explainable wearable studies [16] | Logical, Service | QAS1 & QAS2. Edge/local detection for low latency, short-term buffering to cloud for contextual validation; use explainability output for clinician dashboards; evaluate with precision/recall and detection latency. |

| Contactless privacy-sensitive monitoring | Radar, Wi-Fi CSI, on-device feature extraction | Li et al. [17], contactless sensing literature | Logical, Deployment | QAS2 and privacy constraints. Prefer on-device feature extraction and encrypted summaries; disable raw audio/video transmission; define privacy-preserving model update policies (differentially private updates or federated patterns). |

| Teletherapy-triggered sensing | Self-report, chatbot flags, scheduled clinician prompts | Digital therapeutics/chatbots [22,23] | Interface, Process, Development | QAS2. Use chatbot signals to change sensing mode (e.g., increase sampling), start short-term local analytics, and route data to clinician dashboards; measure user acceptability and engagement. |
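The "drift-detection and periodic retraining hooks" noted for early deterioration detection can be illustrated with a simple window comparison. This is a minimal sketch, assuming one daily value per signal (for example, hours of sleep) and a mean-shift test. The function names, the 7-day window, and the limit of 2.0 are hypothetical, and a real deployment would likely use a more robust drift detector.

```python
import math
from statistics import mean, stdev


def drift_score(history, recent_days=7):
    """Shift of the recent window from the earlier baseline, in baseline std units."""
    if len(history) < recent_days + 2:
        raise ValueError("not enough history to form a baseline")
    baseline, recent = history[:-recent_days], history[-recent_days:]
    diff = mean(recent) - mean(baseline)
    spread = stdev(baseline)
    if spread == 0:
        # Perfectly flat baseline: any change counts as unbounded drift.
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / spread


def needs_recalibration(history, recent_days=7, limit=2.0):
    """Hook for scheduled jobs: True when the recent window has drifted."""
    return abs(drift_score(history, recent_days)) >= limit
```

A sustained negative score on sleep or activity could feed the Personalization Engine as a candidate long-term decline, whereas a flagged drift after a known lifestyle change might instead trigger re-baselining; deciding between the two is a clinical and design question outside this sketch.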

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zamani, S.; Sinha, R.; Madanian, S.; Nguyen, M. IoTMindCare: An Integrative Reference Architecture for Safe and Personalized IoT-Based Depression Management. Sensors 2025, 25, 6994. https://doi.org/10.3390/s25226994