1. Introduction

Multi-modal perception has become a cornerstone of modern autonomous systems, where complementary information from optical sensors such as LiDAR and cameras is integrated to achieve reliable scene understanding. A critical step in this integration is extrinsic calibration, which aligns geometric structures captured by LiDAR with semantic-rich visual cues from cameras. Accurate calibration provides the geometric foundation for transforming and fusing heterogeneous sensor data, enabling downstream tasks such as 3D object detection, semantic mapping, and autonomous navigation.

Conventional calibration approaches predominantly rely on supervised methods involving calibration targets (e.g., checkerboards or AprilTags) [1,2], manual annotation, or precise sensor synchronization. To improve calibration accuracy, Geiger [3] introduced an automated calibration system utilizing multiple checkerboards as targets. This strategy introduces redundancy into the calibration process, thereby enhancing both stability and precision. To further reduce edge detection errors in sparse point clouds, Tóth [4] proposed the use of spherical calibration targets. Their continuous and symmetric surfaces enable reliable reconstruction in both LiDAR and camera modalities. These target-based methods are effective under limited conditions; however, they still require cumbersome manual operations, are susceptible to environmental factors, and are unsuitable for real-time or large-scale deployment in dynamic environments.

To reduce manual effort and improve scalability, recent research has explored unsupervised and weakly supervised calibration frameworks. These methods often leverage photometric consistency, mutual information, or self-supervised feature correspondences to align point clouds and images without ground-truth annotations. For instance, Yuan et al. [5] proposed an efficient edge extraction technique based on voxel segmentation and plane fitting, while Liu et al. [6] employed geometric features such as line segments and rectangles extracted from urban scenes to maximize 2D–3D feature correspondences. Although these approaches reduce reliance on manual labeling, they still struggle with generalization, robustness, and accuracy in complex or unstructured environments.

To overcome these challenges, deep learning (DL)-based calibration methods have recently gained traction. Early approaches such as RegNet [7] applied convolutional neural networks (CNNs) to regress extrinsic parameters using Euclidean loss, marking a shift from heuristic to data-driven calibration. Zhu et al. [8] further redefined calibration as an optimization task and proposed semantic-driven metrics to evaluate alignment quality. Unlike traditional pipelines, DL-based methods achieve higher accuracy with minimal manual intervention. However, most existing models rely on RGB–depth fusion or shallow geometric cues, which capture incomplete and poorly aligned information, and they rarely exploit global feature extraction across modalities. This limits both calibration accuracy and generalization capability.

In this work, we propose a fully unsupervised and end-to-end framework for LiDAR–camera extrinsic calibration that eliminates the need for manual intervention, handcrafted features, or prior synchronization. Our method builds on a Transformer-based framework [9] to extract and align global features from 2D images and 3D point clouds: a Vision Transformer encodes rich semantic features from RGB images, while a Point Transformer captures spatial structure from LiDAR data. The extracted features are fused and processed through a regression network to estimate the 4 × 4 extrinsic transformation matrix. Moreover, we design a multi-constraint loss function that enforces structural consistency while improving the stability and physical validity of extrinsic calibration. Extensive experiments on both the KITTI dataset [10] and our self-collected dataset validate the effectiveness of the proposed method. In summary, the main contributions of this work are as follows:

- (1) A dual-Transformer framework for unsupervised cross-modal feature extraction and alignment;

- (2) A multi-constraint loss function that enhances geometric consistency and calibration stability;

- (3) Comprehensive validation on both benchmark and real-world data, demonstrating the scalability and generalizability of the proposed approach.

2. Methods

In this section, we present a fully unsupervised LiDAR–camera extrinsic calibration framework built on a Transformer architecture. The framework is designed to automatically infer the spatial transformation between LiDAR and camera sensors without requiring manual annotations. The overall pipeline consists of three main components: (1) an image encoder based on the Vision Transformer [11], which extracts global semantic features from 2D images; (2) a Point Transformer module, which captures geometric representations from 3D point clouds and models the spatial structure of the environment; and (3) a transformation regression module, which fuses the extracted features and estimates the 4 × 4 extrinsic transformation matrix between the two sensors.

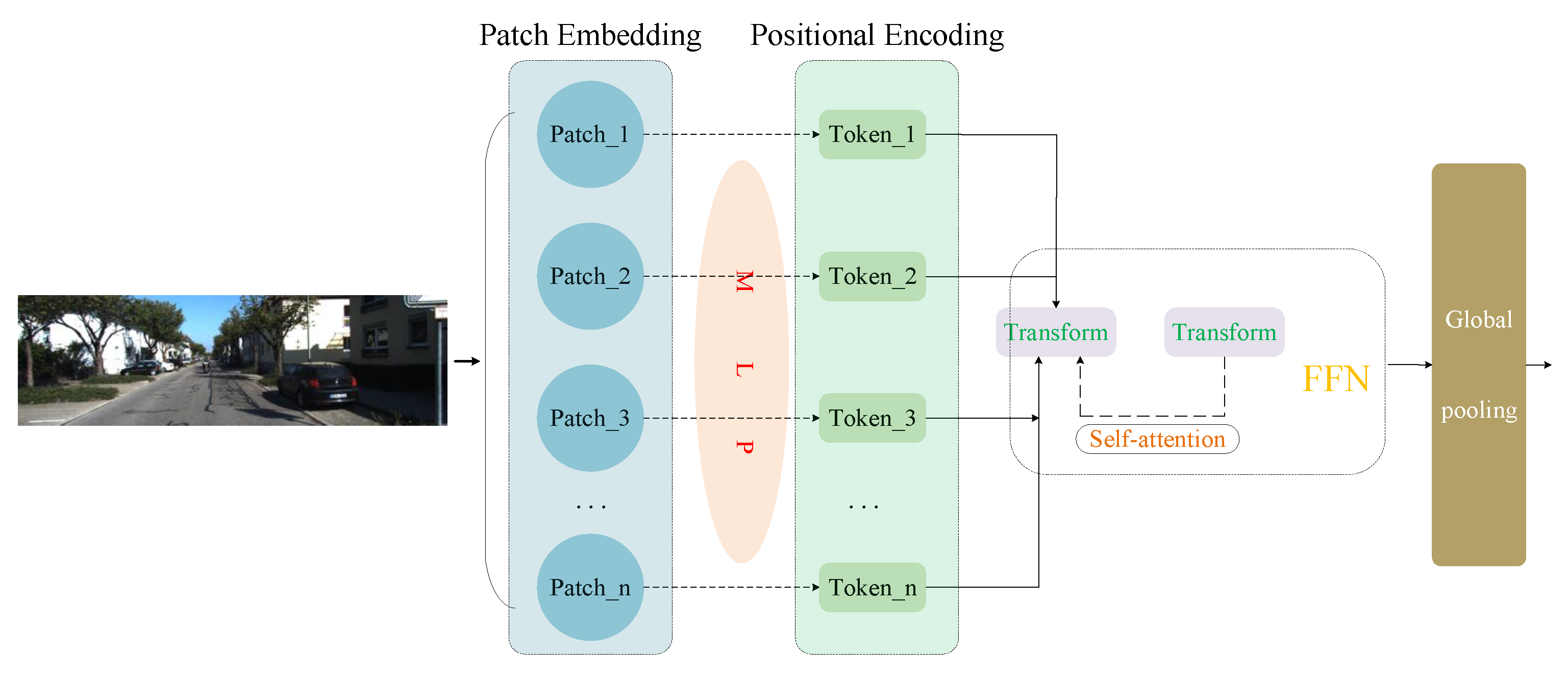

For image feature extraction, we employ a Vision Transformer encoder [11], as illustrated in Figure 1. The input RGB image is resized to 512 × 512 to ensure a compact yet sufficiently detailed representation, and then divided into non-overlapping 16 × 16 patches, yielding 1024 tokens. Each patch is flattened and projected by a multilayer perceptron (MLP) into a 1024-dimensional embedding. To preserve spatial information, learnable positional encodings are added, and the resulting token sequence is processed by 8 Transformer layers, each consisting of multi-head self-attention (with 8 heads) and feed-forward networks (hidden dimension 2048). Finally, a global pooling operation aggregates the outputs into a compact feature descriptor that provides the 2D semantic representation for cross-modal alignment with 3D point cloud features.
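As a quick check of the tokenization arithmetic stated above, the following minimal sketch computes the number of tokens and the size of each flattened patch. The function name `patch_tokens` is illustrative, and the three-channel default simply reflects RGB input.

```python
def patch_tokens(image_size: int, patch_size: int, channels: int = 3):
    """Return (number of tokens, raw values per flattened patch)."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size              # patches along one axis
    num_tokens = per_side * per_side                 # non-overlapping grid
    patch_dim = patch_size * patch_size * channels   # flattened RGB patch
    return num_tokens, patch_dim


num_tokens, patch_dim = patch_tokens(512, 16)
print(num_tokens, patch_dim)  # 1024 768
```

Each of the 1024 tokens therefore starts as a 768-value vector before it is projected to the 1024-dimensional embedding.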

The choice of these parameters follows a balance between representation capacity and computational efficiency. The 512 × 512 input resolution provides sufficient detail while keeping computation tractable; a patch size of 16 maintains a reasonable granularity of spatial information. The embedding dimension of 1024 and 8 attention heads allow the model to capture rich dependencies across image regions without excessive overhead, while the feed-forward dimension of 2048 follows standard Transformer design for stable training and expressive features. The use of 8 Transformer layers further provides adequate depth for semantic modeling while avoiding overfitting under unsupervised training.

The Transformer-based encoder provides several advantages for calibration tasks. First, its ability to capture global dependencies enhances robustness in complex scenes and improves the accuracy of cross-modal alignment. Second, its flexible design naturally facilitates integration with 3D point cloud features, enabling more reliable calibration across modalities.

In the calibration process, the extraction of 3D features plays a crucial role in determining the accuracy, stability, and adaptability of the results. Compared with traditional methods that rely on sparse point or line features, global 3D features provide richer structural information, enabling more robust feature matching in structured environments and multi-sensor fusion scenarios. Robust 3D feature extraction also mitigates the influence of environmental changes, occlusions, and noise, thereby improving the stability of cross-sensor alignment.

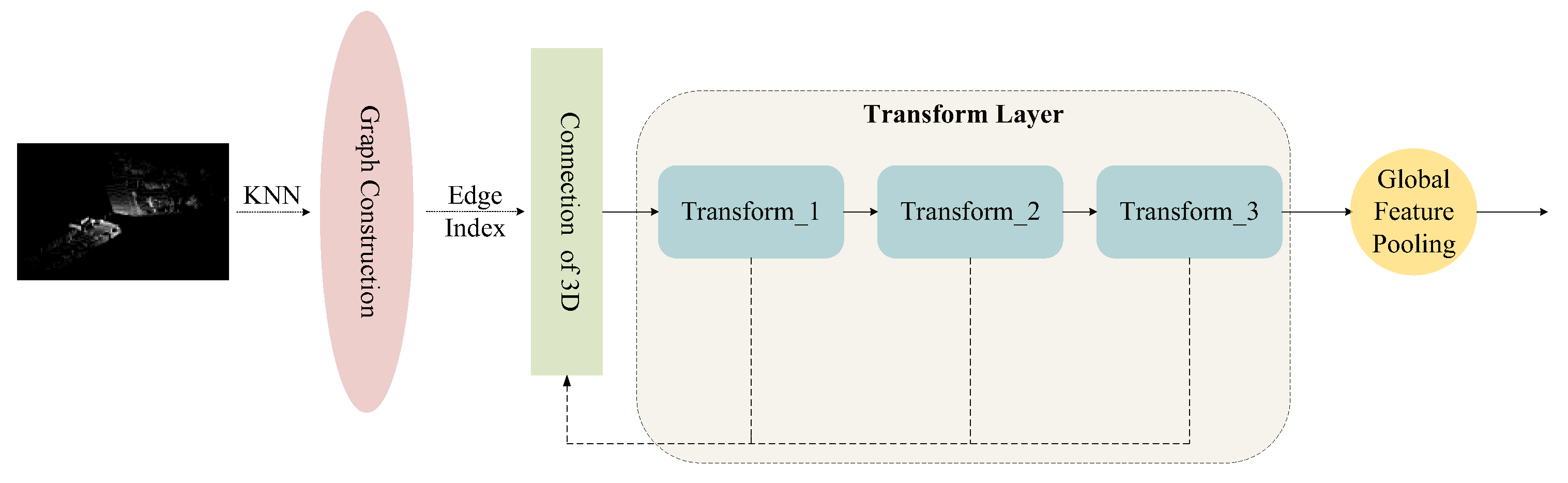

Based on these considerations, we adopt a Transformer-based approach for 3D feature extraction. The self-attention mechanism enables modeling of both local neighborhoods and long-range dependencies, allowing the network to capture complete geometric structures and establish robust feature correspondences across modalities. The 3D feature extraction process in our framework, illustrated in Figure 2, consists of the following four steps:

A. KNN Graph Construction: Since point clouds are inherently unordered sets of discrete points, they lack explicit structural information for direct feature extraction. To address this, we employ the k-nearest neighbors (kNN) [12] algorithm to construct a local topological structure. Specifically, for each point, its $k$ nearest neighbors are identified by computing Euclidean distances in the 3D coordinate space, where efficient search methods such as k-d tree indexing are used to accelerate neighbor retrieval. A sparse graph is then formed, in which points serve as nodes and neighbor relations define edges, enabling the model to capture local geometric structures. The neighborhood size $k$ is chosen to balance preserving sufficient local geometric information against including noisy or redundant neighbors. Smaller values of $k$ may lead to incomplete neighborhood representation, while larger values increase computational cost and risk incorporating irrelevant points.
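The graph construction in step A can be illustrated with a minimal sketch. For brevity it uses a brute-force distance search rather than the k-d tree indexing mentioned in the text, so it is only suitable for small point sets. The function name `knn_graph` and the edge-list output format are assumptions made for illustration.

```python
import math


def knn_graph(points, k):
    """Return directed edges (i, j) where j is one of the k nearest neighbors of i."""
    n = len(points)
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < number of points")
    edges = []
    for i, p in enumerate(points):
        # Euclidean distance from point i to every other point (brute force).
        dists = [(math.dist(p, q), j) for j, q in enumerate(points) if j != i]
        dists.sort()
        edges.extend((i, j) for _, j in dists[:k])
    return edges


cloud = [(0, 0, 0), (1, 0, 0), (0, 2, 0), (10, 10, 10)]
print(knn_graph(cloud, k=2))
```

The resulting edge list plays the role of the sparse graph: each point is a node, and each pair `(i, j)` is an edge from a point to one of its neighbors.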

B. Adjacency Matrix Generation and Computational Optimization: Following the KNN computation, an adjacency matrix (edge index) is generated, representing the connectivity between points. This adjacency matrix not only structures the point cloud into a graph but also constrains the computation of the Transformer to local neighborhoods, significantly reducing computational complexity.

The attention weight between point $i$ and one of its neighbors $j \in \mathcal{N}(i)$ is dynamically computed as [13,14]:

$$\alpha_{ij} = \frac{\exp\left(q_i^{\top} k_j\right)}{\sum_{m \in \mathcal{N}(i)} \exp\left(q_i^{\top} k_m\right)}$$

where $q_i$ and $k_j$ are the query and key feature embeddings of points $i$ and $j$, respectively. The attention mechanism is built upon this adjacency information, ensuring that each point can effectively aggregate features from its neighboring points, thereby transforming the point cloud from an unstructured set into a structured graph for more efficient feature extraction.
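A minimal sketch of neighborhood-restricted attention weights follows, assuming a dot-product score between query and key embeddings normalized by a Softmax over each point's neighbors. The function name `neighbor_attention` and the optional `scale` factor are illustrative assumptions; a scaled dot-product variant would pass `scale = 1 / sqrt(d)`.

```python
import math


def neighbor_attention(queries, keys, neighbors, scale=1.0):
    """alpha[i][j]: softmax over j in neighbors[i] of scale * <q_i, k_j>."""
    alpha = []
    for i, nbrs in enumerate(neighbors):
        scores = [
            scale * sum(a * b for a, b in zip(queries[i], keys[j])) for j in nbrs
        ]
        m = max(scores)                        # subtract max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alpha.append({j: e / total for j, e in zip(nbrs, exps)})
    return alpha


q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
print(neighbor_attention(q, k, neighbors=[[0, 1], [0, 1]]))
```

Because scores are only computed for listed neighbors, the cost per point grows with the neighborhood size rather than with the total number of points.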

C. Transformer-based Feature Extraction: Once the local neighborhood graph is established, the point cloud is fed into a Transformer module for feature extraction. During this stage, each point’s 3D coordinates are first projected into a 256-dimensional embedding, providing a compact yet expressive representation. The token sequence is then processed by 4 Transformer layers, each consisting of multi-head self-attention with 4 heads and a feed-forward network of hidden dimension 512. These parameters were chosen to strike a balance between representation capacity and computational efficiency: the 256-dimensional embedding offers sufficient expressiveness for local and global geometric modeling, while the use of 4 layers and 4 heads provides adequate modeling depth and multi-subspace feature learning without incurring excessive computational cost.

Leveraging the self-attention mechanism, the Transformer models the relationships between points within local neighborhoods while also capturing long-range dependencies across distant points. Unlike conventional methods or CNN-based approaches that rely on fixed local receptive fields, the Transformer enables adaptive learning of multi-level feature representations across different subspaces. This allows even spatially distant points to establish effective global connections, thereby enhancing the completeness and robustness of point cloud representations. Additionally, the Transformer incorporates an adaptive weighting mechanism that dynamically adjusts the contribution of neighboring points during feature aggregation [15]. Specifically, the aggregated feature of point $i$ is computed as:

$$\tilde{f}_i = \sum_{j \in \mathcal{N}(i)} \alpha_{ij} v_j$$

where $v_j$ denotes the value embedding of neighbor $j$, and $\alpha_{ij}$ is the learned attention weight derived from the Softmax operation. This formulation allows informative and geometrically relevant neighbors to contribute more strongly, while suppressing noisy or less relevant points, enabling the model to better handle irregular spatial distributions in real-world point clouds.
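The weighted aggregation can be sketched as follows, assuming the attention weights for each point are already available as a mapping from neighbor index to weight. The function name `aggregate` and this data layout are illustrative choices, not taken from the paper.

```python
def aggregate(alpha, values):
    """alpha: list of {neighbor_index: weight}; values: list of value vectors."""
    dim = len(values[0])
    out = []
    for weights in alpha:
        feat = [0.0] * dim
        for j, w in weights.items():
            for c in range(dim):
                feat[c] += w * values[j][c]   # weighted sum of neighbor values
        out.append(feat)
    return out


values = [[0.0, 0.0], [4.0, 0.0], [0.0, 8.0]]
print(aggregate([{1: 0.75, 2: 0.25}], values))  # [[3.0, 2.0]]
```

A neighbor with a larger weight pulls the aggregated feature toward its own value embedding, which is how less relevant points are suppressed.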

D. Global Feature Aggregation: To obtain a comprehensive global representation of the entire point cloud, we apply Global Average Pooling (GAP) [16] over all points’ output features from the Transformer. This process aggregates individual point features into a single global feature vector that encapsulates the overall spatial structure and semantic information of the point cloud. The resulting global feature serves as a compact and expressive representation, supporting subsequent multi-modal fusion and transformation regression tasks.

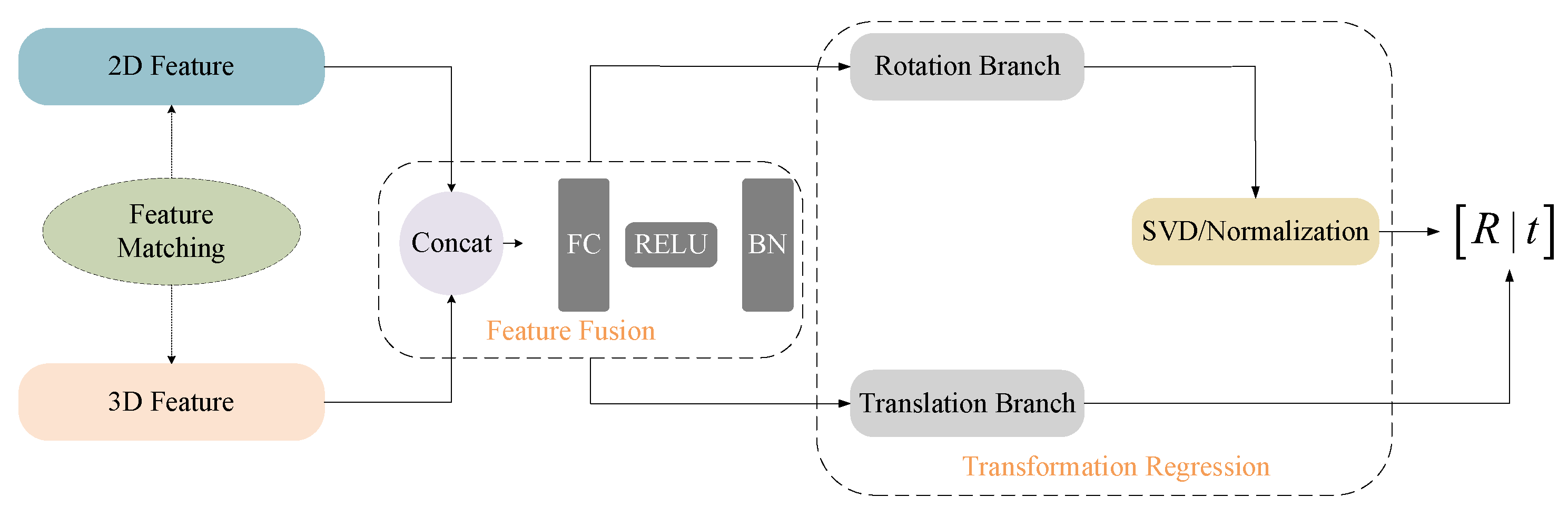

As illustrated in Figure 3, our method first extracts global features from both the image and the point cloud. These features encode semantic information from the image and geometric structure from the point cloud. To enable cross-modal interaction, both features are projected into a shared 512-dimensional embedding space using two learnable linear layers. After projection, the 2D and 3D embeddings are concatenated and passed through an MLP [17], producing a unified cross-modal feature representation. Compared to raw-level alignment, this high-level semantic fusion is more robust to noise, occlusion, and viewpoint changes.

After feature alignment, the features from both modalities are concatenated into a 1024-dimensional vector and passed into the feature fusion module, which is implemented as a lightweight multi-layer perceptron composed of fully connected layers, ReLU activation, and batch normalization. This design allows the network to perform deep-level fusion of the concatenated features, ensuring that the complementary semantics from the image and the geometric cues from the point cloud are effectively integrated into a unified cross-modal representation.

The fused features are fed into a transformation regression module consisting of two parallel branches [18]: a Rotation Branch and a Translation Branch.

In the Rotation Branch, the network first outputs an intermediate matrix $M \in \mathbb{R}^{3 \times 3}$. To guarantee geometric validity, $M$ is converted into a proper orthogonal rotation matrix through Singular Value Decomposition (SVD). Specifically,

$$M = U \Sigma V^{\top}, \qquad R = U V^{\top}$$

where $U$ and $V$ are orthogonal matrices, and $\Sigma$ is a diagonal singular-value matrix. Discarding $\Sigma$ enforces $R^{\top} R = I$. This SVD-based normalization prevents drift and ensures a stable rotation representation during training.
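The SVD-based normalization can be sketched with NumPy as below. The function name `project_to_rotation` is illustrative. The determinant correction, which flips one axis when the orthogonal factor is a reflection, is a common safeguard that the text does not spell out and is included here as an assumption.

```python
import numpy as np


def project_to_rotation(m: np.ndarray) -> np.ndarray:
    """Return the rotation matrix closest (in Frobenius norm) to a 3x3 matrix m."""
    u, _, vt = np.linalg.svd(m)
    # Assumption beyond the text: if U V^T is a reflection (det = -1),
    # flip the last axis so the result is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(u @ vt))
    return u @ np.diag([1.0, 1.0, d]) @ vt


rng = np.random.default_rng(0)
r = project_to_rotation(rng.normal(size=(3, 3)))
print(np.allclose(r.T @ r, np.eye(3)), np.isclose(np.linalg.det(r), 1.0))
```

Since the singular values are discarded, the output is orthogonal regardless of how far the raw network output is from a valid rotation.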

In the Translation Branch, the network directly regresses a 3D translation vector $t \in \mathbb{R}^{3}$, representing the displacement between the two sensor coordinate systems.

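Finally, the rotation $R$ and translation $t$ are combined to form the 6-DoF rigid transformation that maps points from the LiDAR frame to the camera frame:

$$T = \begin{bmatrix} R & t \\ \mathbf{0}^{\top} & 1 \end{bmatrix} \in \mathbb{R}^{4 \times 4}$$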
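As a minimal sketch, a rotation and a translation can be assembled into a 4 × 4 homogeneous matrix and applied to a point as follows. The helper names `make_transform` and `apply_transform` are hypothetical, and plain nested lists stand in for tensors.

```python
def make_transform(rotation, translation):
    """Build the 4x4 homogeneous matrix [[R, t], [0, 1]] from 3x3 R and 3-vector t."""
    rows = [list(rotation[r]) + [translation[r]] for r in range(3)]
    rows.append([0.0, 0.0, 0.0, 1.0])
    return rows


def apply_transform(transform, point):
    """Apply a 4x4 rigid transform to a 3D point via homogeneous coordinates."""
    hom = list(point) + [1.0]
    return [sum(transform[r][c] * hom[c] for c in range(4)) for r in range(3)]


# 90-degree rotation about the z-axis followed by a shift of (1, 2, 3).
rot_z = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
t = make_transform(rot_z, [1.0, 2.0, 3.0])
print(apply_transform(t, [1.0, 0.0, 0.0]))  # [1.0, 3.0, 3.0]
```

Applying the matrix to a homogeneous point is equivalent to computing the rotated point plus the translation.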

After obtaining the extrinsic parameters, the transformation matrix is applied to project the 3D point cloud onto the image plane, thereby establishing spatial correspondence between the 3D geometric features and the 2D appearance features. This step not only provides a clear physical interpretation of cross-modal alignment but also offers natural guidance for subsequent optimization, enabling the framework to progressively improve accuracy and stability under unsupervised conditions without the need for manual annotations or additional calibration targets.

This mechanism seamlessly integrates feature extraction, semantic-level alignment, feature fusion, and transformation regression. Feature extraction ensures discriminative representations, feature fusion achieves effective modality integration, the regression network captures the cross-modal spatial mapping, and the projection operation provides inherent supervisory signals. This end-to-end design enhances the automation of the calibration process and demonstrates strong robustness and adaptability in complex environments, offering an efficient and annotation-free solution for camera–LiDAR calibration.

3. Results

In this section, we conduct experiments to validate the proposed unsupervised LiDAR–camera calibration framework. The evaluation covers multiple datasets and experimental settings, including loss design, quantitative and qualitative results, and ablation studies to analyze the contribution of each component.

3.1. Dataset

We evaluate the proposed method on both the KITTI dataset and a self-collected dataset.

KITTI dataset: The KITTI benchmark provides RGB–LiDAR pairs acquired in outdoor driving scenarios. Each sample consists of a high-resolution left camera image and a corresponding Velodyne point cloud. The ground-truth extrinsic parameters are only used for evaluation and visualization, ensuring that our framework remains fully unsupervised during training. Images are resized and normalized before being fed into the Vision Transformer, while point clouds are filtered to remove distant or low-intensity points. From the remaining points, 4096 are randomly sampled to maintain computational efficiency and uniformity.
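The point cloud preprocessing can be sketched as follows. The thresholds `max_range` and `min_intensity` are hypothetical parameters, since the text does not state the cut-off values, and the fallback to sampling with replacement when fewer than 4096 points survive is likewise an assumption.

```python
import random


def preprocess_points(points, max_range, min_intensity, num_samples=4096, seed=None):
    """Filter (x, y, z, intensity) points, then sample a fixed-size subset."""
    kept = [
        p for p in points
        if (p[0] ** 2 + p[1] ** 2 + p[2] ** 2) ** 0.5 <= max_range
        and p[3] >= min_intensity
    ]
    if not kept:
        raise ValueError("no points left after filtering")
    rng = random.Random(seed)
    if len(kept) >= num_samples:
        return rng.sample(kept, num_samples)          # without replacement
    # Assumption: too few points, so sample with replacement to keep a fixed size.
    return [rng.choice(kept) for _ in range(num_samples)]
```

A fixed sample size keeps every batch element the same shape, which simplifies batching in the Point Transformer.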

Self-collected dataset: To further validate generalization in real-world applications, we constructed a dataset using a Livox Horizon LiDAR (Livox Technology Company Limited, Hong Kong, China) and an MV-CS050-10GC camera (HIKROBOT, Hangzhou, China) in outdoor environments. This dataset covers more diverse sensor configurations and environmental conditions compared with KITTI. Similar preprocessing is applied, including image normalization and random sampling of 4096 LiDAR points.

3.2. Loss Function

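In our framework, we design a multi-constraint loss function to enforce structural consistency and ensure the physical validity of the predicted extrinsic parameters. The overall loss is defined as:

$$\mathcal{L}_{total} = \lambda_1 \mathcal{L}_{struct} + \lambda_2 \mathcal{L}_{rot} + \lambda_3 \mathcal{L}_{trans}$$

where $\lambda_1$, $\lambda_2$, and $\lambda_3$ are weighting factors that balance the contributions of the different loss terms; $\mathcal{L}_{struct}$ denotes the structural consistency loss; $\mathcal{L}_{rot}$ is the rotation orthogonality loss; and $\mathcal{L}_{trans}$ is the translation regularization loss.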

To enforce geometric alignment between the image and the point cloud in an unsupervised manner, we define a structural consistency loss [19]. Specifically, a 3D LiDAR point $P_i$ is first transformed into the camera coordinate system using the estimated extrinsics:

$$P_i^{c} = R P_i + t$$

where $R$ and $t$ denote the predicted rotation matrix and translation vector, and $P_i^{c}$ denotes the 3D LiDAR point represented in the camera coordinate system. To project this 3D point to the image plane, perspective projection is applied:

$$p_i = (u_i, v_i) = \pi\left(P_i^{c}\right)$$

where $\pi(\cdot)$ is the perspective projection and $p_i$ denotes the 2D projection of the $i$-th 3D point onto the image plane under the predicted transformation. Thus, $(u_i, v_i)$ are the 2D pixel coordinates corresponding to point $P_i$.
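The transform-then-project step can be sketched with a standard pinhole camera model. The intrinsic parameters `fx`, `fy`, `cx`, and `cy` are assumptions introduced for illustration, since the text does not list the intrinsics, and the function names are hypothetical.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (X, Y, Z) to pixel (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        return None                    # behind the camera: no valid projection
    return (fx * x / z + cx, fy * y / z + cy)


def lidar_to_pixel(point, rotation, translation, fx, fy, cx, cy):
    """Apply R * P + t, then project the camera-frame point to the image plane."""
    cam = [
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    return project_point(cam, fx, fy, cx, cy)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(lidar_to_pixel([1.0, 2.0, 4.0], identity, [0.0, 0.0, 0.0],
                     fx=100.0, fy=100.0, cx=320.0, cy=240.0))  # (345.0, 290.0)
```

Points with non-positive depth are skipped because they lie behind the image plane and have no meaningful pixel location.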

To enforce cross-modal geometric alignment, we compare the semantic feature of $P_i$ in the point cloud with the feature sampled at $p_i$ on the image feature map:

$F_{pc}(P_i)$: 3D point feature extracted by the point cloud Transformer.

$F_{img}(p_i)$: 2D semantic feature obtained by bilinear interpolation on the image feature map.

Finally, the structural consistency loss is defined as:

$$\mathcal{L}_{struct} = \frac{1}{N} \sum_{i=1}^{N} \left\| F_{img}(p_i) - F_{pc}(P_i) \right\|_2^2$$

where $F_{img}(p_i)$ represents the image feature sampled at the projected location on the image feature map, $F_{pc}(P_i)$ refers to the point cloud feature corresponding to the original 3D point, and $N$ is the number of projected points.

This loss forces projected LiDAR points to be consistent with their visual counterparts, enabling geometry-aware supervision without ground-truth extrinsics.
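A minimal sketch of a feature-consistency loss of this kind is given below. It assumes a mean squared L2 distance between the two features, pixel coordinates already expressed in feature-map units, and image and point features of equal dimension. These choices, along with the function names, are assumptions for illustration rather than the paper's exact formulation.

```python
def bilinear_sample(feature_map, u, v):
    """Sample feature_map[row][col] (a list of channels) at pixel (u, v)."""
    height, width = len(feature_map), len(feature_map[0])
    # Clamp to the valid range so border locations still interpolate safely.
    u = min(max(u, 0.0), width - 1.0)
    v = min(max(v, 0.0), height - 1.0)
    x0, y0 = int(u), int(v)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    ax, ay = u - x0, v - y0
    channels = len(feature_map[0][0])
    return [
        (1 - ax) * (1 - ay) * feature_map[y0][x0][c]
        + ax * (1 - ay) * feature_map[y0][x1][c]
        + (1 - ax) * ay * feature_map[y1][x0][c]
        + ax * ay * feature_map[y1][x1][c]
        for c in range(channels)
    ]


def structural_consistency_loss(feature_map, pixels, point_features):
    """Mean squared L2 distance between sampled image and point features."""
    total = 0.0
    for (u, v), f_pc in zip(pixels, point_features):
        f_img = bilinear_sample(feature_map, u, v)
        total += sum((a - b) ** 2 for a, b in zip(f_img, f_pc))
    return total / len(pixels)


fmap = [[[0.0], [1.0]], [[2.0], [3.0]]]          # 2 x 2 map with one channel
print(bilinear_sample(fmap, 0.5, 0.5))           # [1.5]
```

Bilinear interpolation matters here because it makes the sampled feature vary smoothly with the projected location, so gradients can flow back to the predicted extrinsics.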

To ensure that the predicted rotation matrix $R$ represents a physically valid 3D rotation, we enforce its orthogonality [20]:

$$\mathcal{L}_{rot} = \left\| R^{\top} R - I \right\|_F^2$$

This ensures physically meaningful and interpretable rotations.
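An orthogonality penalty can be sketched as the squared Frobenius norm of the deviation of the Gram matrix from the identity. Using the squared norm is an assumption, and the function name `orthogonality_loss` is illustrative.

```python
def orthogonality_loss(r):
    """Squared Frobenius norm of R^T R - I for a 3x3 matrix r (list of rows)."""
    loss = 0.0
    for i in range(3):
        for j in range(3):
            rtr = sum(r[k][i] * r[k][j] for k in range(3))   # (R^T R)[i][j]
            target = 1.0 if i == j else 0.0
            loss += (rtr - target) ** 2
    return loss


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
scaled = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]
print(orthogonality_loss(identity), orthogonality_loss(scaled))  # 0.0 27.0
```

The penalty is zero for any orthogonal matrix and grows as the columns lose unit length or mutual perpendicularity.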

To discourage unrealistic translations, we penalize the $\ell_2$ norm of the predicted translation vector $t$:

$$\mathcal{L}_{trans} = \left\| t \right\|_2$$

This improves stability and constrains predictions to realistic sensor configurations. The translation regularization loss is assigned only a weak weight and serves to discourage excessively large values. A trivial solution is avoided because the structural consistency loss dominates the optimization, ensuring physically meaningful, non-zero translations.
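The sketch below shows how a weakly weighted translation penalty can enter a weighted sum of loss terms. The weight values are purely illustrative, since the text does not report them, and the use of an unsquared L2 norm is an assumption.

```python
import math


def translation_loss(t):
    """L2 norm of the translation vector (assumed form of the regularizer)."""
    return math.sqrt(sum(c * c for c in t))


def total_loss(l_struct, l_rot, l_trans, w_struct=1.0, w_rot=0.1, w_trans=0.01):
    """Weighted sum of the three terms; default weights are hypothetical."""
    return w_struct * l_struct + w_rot * l_rot + w_trans * l_trans


l_trans = translation_loss([3.0, 4.0, 0.0])
print(l_trans)                                   # 5.0
print(total_loss(2.0, 0.0, l_trans))
```

With a small translation weight, the consistency term dominates the gradient, so shrinking the translation toward zero is not rewarded unless it also improves alignment.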

To ensure a closed optimization loop, the entire network is trained in an end-to-end manner. The total loss is computed as the weighted sum of all loss terms and is backpropagated through all learnable parameters, ensuring that both the extrinsic transformation and feature extraction modules are jointly optimized.

For implementation details, we use the Adam optimizer with an initial learning rate of . The model is trained with a batch size of 8 for 200 epochs on the KITTI dataset. All modules are trained jointly from random initialization, ensuring stable convergence and optimal performance.

3.3. Experiment

In this subsection, we present the experimental results of the proposed calibration framework. The evaluation is carried out on the KITTI benchmark and our self-collected dataset, followed by ablation studies to investigate the contribution of individual modules.

3.3.1. Calibration on KITTI Dataset

In this section, we evaluate the proposed unsupervised calibration method on the KITTI dataset. KITTI is one of the most representative benchmarks in autonomous driving, providing synchronized LiDAR point clouds, RGB images, and high-precision extrinsic parameters. Specifically, we adopt Sequence 00 of the KITTI odometry dataset, where paired images and point clouds are used to estimate the LiDAR–camera extrinsic transformation, enabling quantitative evaluation of our method without any supervision. The KITTI benchmark provides ground-truth extrinsic parameters obtained via a target-based calibration procedure conducted during dataset collection. Therefore, the reported rotation and translation errors in our experiments are measured with respect to this benchmark reference. To comprehensively validate the effectiveness of the proposed approach, we compare it against several representative methods, including unsupervised approaches CalibDepth [21] and RegNet, as well as supervised approaches LCCNet [22], CalibNet [23], and CALNet [24].

In our framework, the Vision Transformer encoder extracts semantic features from RGB images, while the Point Transformer models geometric structures from LiDAR point clouds. The extracted 2D and 3D features are fused and passed through a regression network to predict the 6-DoF extrinsic transformation matrix. The predicted transformation is then optimized in an end-to-end manner using the proposed multi-constraint loss, which directly supervises the regression output and enforces cross-modal consistency. Through backpropagation, the Vision Transformer encoder parameters are updated jointly with the Point Transformer and regression module, ensuring that the Vision Transformer contributes directly to the calibration process. The quantitative results are reported in Table 1.

Our method achieves the lowest rotation error among unsupervised approaches, with an average of 0.21° (0.18° roll, 0.36° pitch, 0.13° yaw). For translation, it obtains an average error of 3.31 cm (3.8, 2.2, and 3.9 cm along X, Y, and Z, respectively). Compared with CALNet, a strong supervised baseline (0.18°/2.9 cm), our method delivers competitive performance without using any ground-truth extrinsic supervision.

Relative to other unsupervised methods such as RegNet (0.28°/6.00 cm) and CalibDepth (0.37°/1.56 cm), our model achieves a better trade-off between rotation and translation accuracy. While LCCNet, a supervised approach, yields the best overall accuracy, our results demonstrate that fully unsupervised calibration can still attain competitive performance without prior extrinsic knowledge.

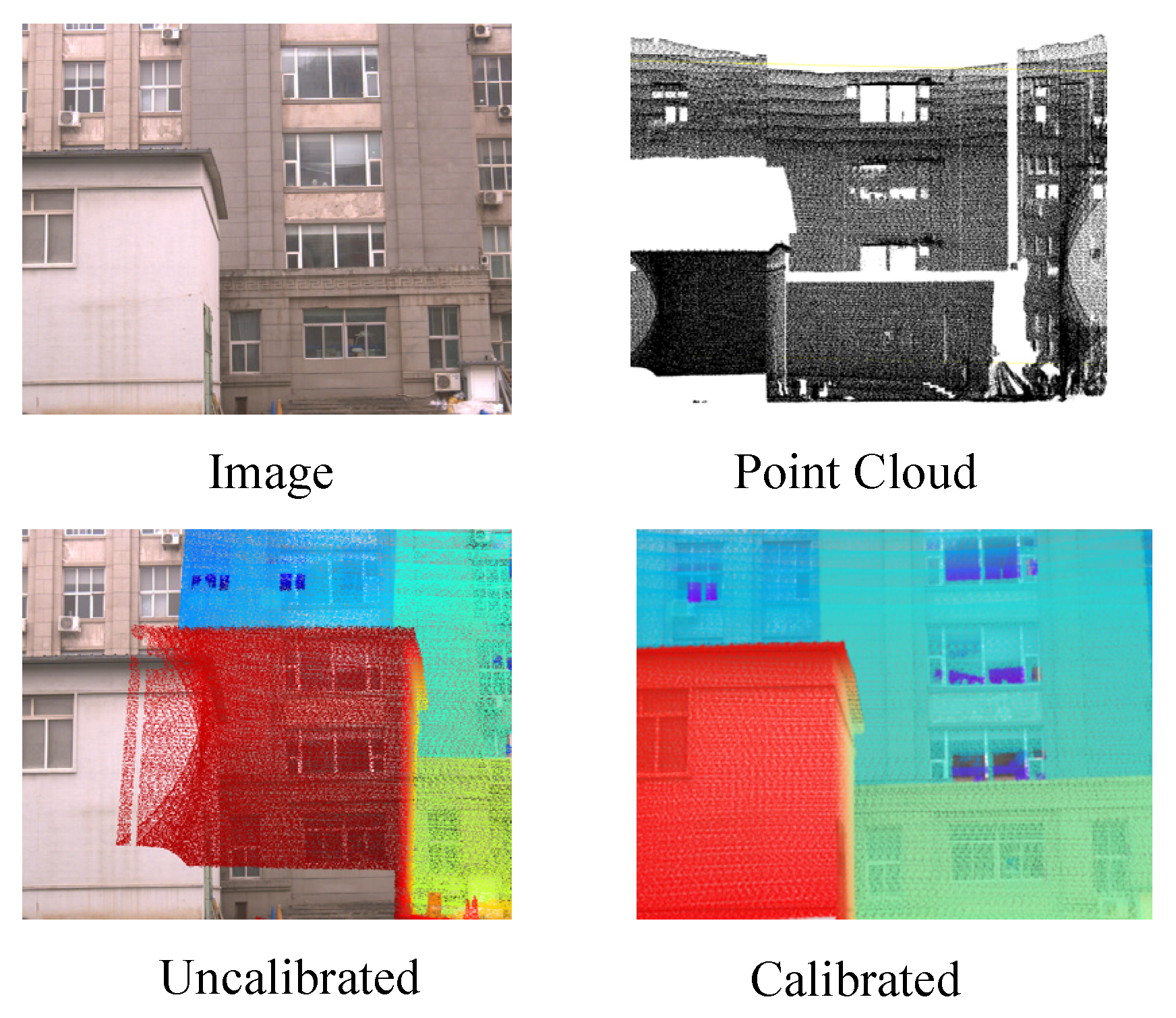

To further validate the effectiveness of our proposed approach, we provide qualitative visualization results of LiDAR-to-image projections under different calibration settings, as shown in Figure 4.

The first column shows the original RGB images from the KITTI dataset, serving as the reference for alignment. The second column presents the uncalibrated case, where the extrinsic parameters are perturbed. In this setting, the projected LiDAR points fail to align with the true scene geometry: points that should fall on solid surfaces such as road boundaries, vehicles, or building facades appear scattered in free space, producing floating points and distorted structures. These severe inconsistencies highlight the necessity of accurate calibration in multi-sensor fusion tasks. In contrast, the third column shows the results obtained using the extrinsic parameters estimated by our framework, with the LiDAR points color-coded according to depth ranges: 0–10 m (red), 10–20 m (orange), 20–30 m (yellow), 30–40 m (green), 40–60 m (cyan), 60–80 m (blue), and 80–100 m (purple). Here, the projected LiDAR points exhibit a high degree of spatial consistency with the visual scene. Specifically, road surfaces are clearly delineated, vehicles are tightly outlined, and building contours are precisely matched, indicating that the predicted transformation successfully bridges the geometric gap between the two modalities. Such improvements not only reflect the accuracy of our calibration method but also demonstrate its robustness under real-world scenarios.
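The depth-based color coding used in the visualization can be reproduced with a simple lookup. In this minimal sketch, the function name `depth_color` is illustrative, and assigning a boundary value such as exactly 10 m to the farther bin is an assumption, since the text does not say which side the boundaries belong to.

```python
from bisect import bisect_right

DEPTH_EDGES = [10, 20, 30, 40, 60, 80, 100]      # upper bin bounds in meters
DEPTH_COLORS = ["red", "orange", "yellow", "green", "cyan", "blue", "purple"]


def depth_color(depth_m):
    """Return the color name for a depth in [0, 100) m, else None."""
    if depth_m < 0 or depth_m >= DEPTH_EDGES[-1]:
        return None
    # Count how many bin edges are <= depth to find the bin index.
    return DEPTH_COLORS[bisect_right(DEPTH_EDGES, depth_m)]


print(depth_color(5), depth_color(45), depth_color(99.9))  # red cyan purple
```

Points outside the listed ranges return `None` so that a plotting routine can skip them.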

These qualitative results confirm that our unsupervised calibration framework can effectively recover precise camera–LiDAR alignment without requiring manual annotation or dedicated calibration targets. By leveraging feature-level correspondence and projection-based consistency, the method ensures reliable multimodal alignment, which is critical for downstream perception tasks such as 3D object detection, semantic segmentation, and scene understanding.

3.3.2. Calibration on Self-Collected Dataset

Beyond experiments on public datasets such as KITTI, we further conducted evaluations on a self-collected dataset to assess the generalization capability of our proposed calibration framework.

Compared with standardized benchmarks, the self-collected data introduces additional challenges such as non-uniform LiDAR scanning patterns, varying illumination conditions, and moving objects. These factors provide a more realistic and rigorous test of the robustness of our calibration method. By analyzing this dataset, we aim to demonstrate that our framework not only achieves accurate calibration on well-structured benchmarks but also maintains stable and reliable performance under unconstrained, real-world conditions.

Since our self-collected dataset does not provide ground-truth extrinsic parameters, absolute rotation and translation errors cannot be computed. Instead, we employ reprojection error as the evaluation metric, measured by projecting LiDAR points onto the image plane and comparing them with detected checkerboard corners from calibration boards. This provides a reliable surrogate for quantitative evaluation in the absence of ground-truth extrinsics.
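A mean reprojection error of this kind can be computed as the average pixel distance between projected points and their detected image counterparts. The sketch below assumes the correspondences between the two lists are already established, which the text does not detail, and the function name is illustrative.

```python
import math


def mean_reprojection_error(projected, detected):
    """Mean Euclidean pixel distance between matched 2D point pairs."""
    if len(projected) != len(detected) or not projected:
        raise ValueError("need equally sized, non-empty point lists")
    return sum(math.dist(p, q) for p, q in zip(projected, detected)) / len(projected)


projected = [(0.0, 0.0), (3.0, 4.0)]
detected = [(3.0, 4.0), (3.0, 4.0)]
print(mean_reprojection_error(projected, detected))  # 2.5
```

The result is expressed in pixels, which makes it directly comparable across methods evaluated on the same images.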

To further validate the effectiveness of our framework, we conducted a comparative evaluation on the self-collected dataset using several representative calibration methods, including a Canny-based geometric approach, the unsupervised deep learning method CalibDepth, and the supervised deep learning method CALNet.

Table 2 summarizes the mean reprojection error of these approaches together with our method. Because reprojection error directly measures the pixel-level alignment between projected LiDAR points and image observations, it also reflects the method’s applicability to real-world scenarios where manual calibration labels are unavailable.

As shown in the results, the Canny-based method exhibits the largest error of 6.42 px, indicating its limited robustness in real-world scenarios due to sensitivity to noise and unreliable edge detection. CalibDepth, while unsupervised, improves the performance with an error of 4.89 px, demonstrating better adaptability but still lacking stability under challenging conditions. CALNet, which relies on supervised training with annotated calibration targets, achieves a mean error of 2.84 px, highlighting the advantage of explicit supervision. In comparison, our proposed unsupervised framework achieves the lowest mean reprojection error of 1.52 px, significantly surpassing both unsupervised and supervised baselines. This confirms that our feature alignment and projection-consistency mechanism effectively enhance calibration accuracy, while maintaining the advantages of an unsupervised approach.

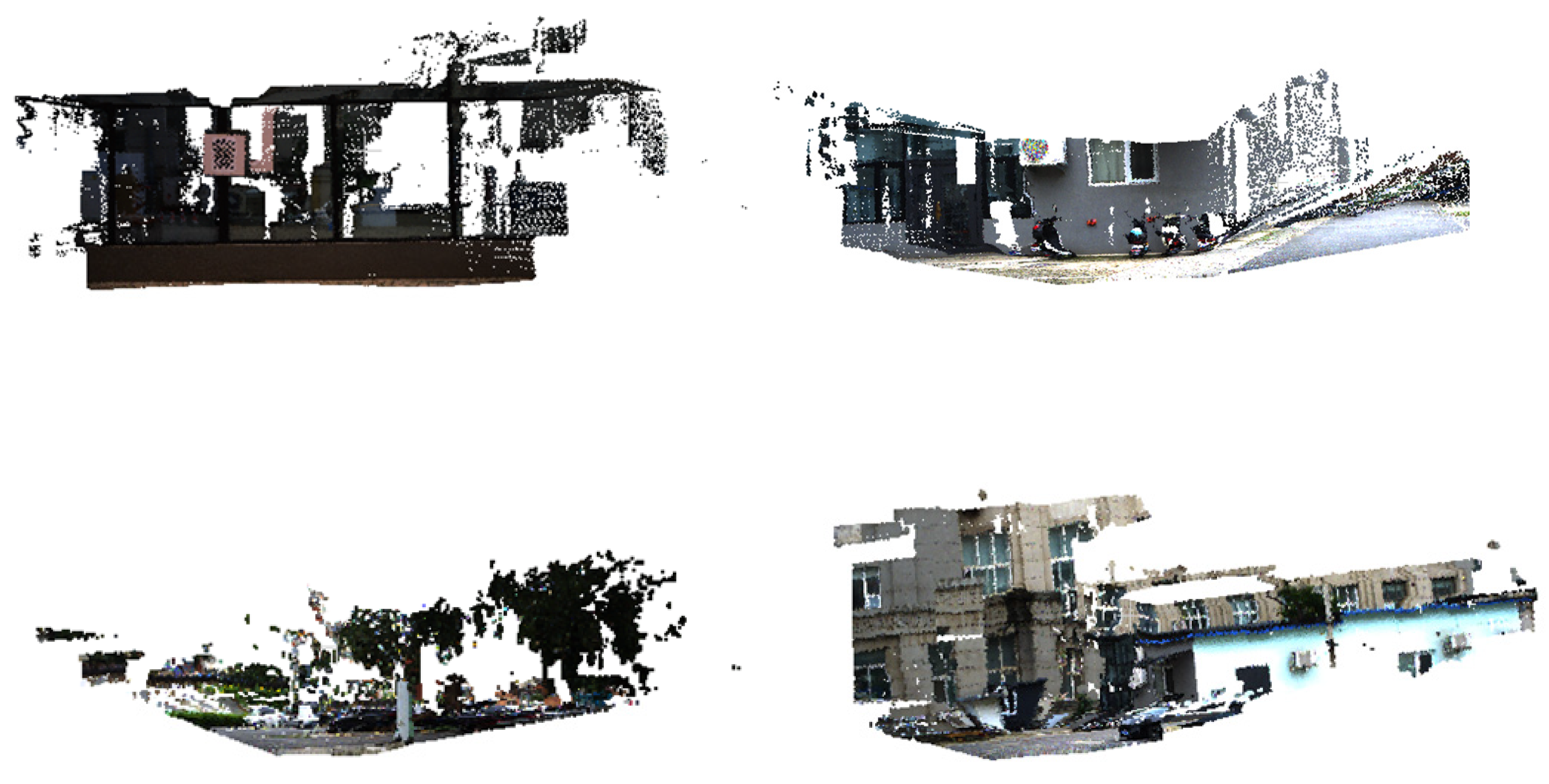

In addition to the quantitative results, we further conduct qualitative comparisons to more intuitively validate the effectiveness of the proposed method. Specifically, we present the calibration results from two complementary perspectives: first, by projecting the 3D LiDAR point cloud onto the 2D image plane to examine the consistency between geometric contours and image boundaries; and second, by projecting the 2D image onto the 3D point cloud space to verify the correspondence between color information and structural details. These two forms of visualization provide complementary evidence to directly reflect the accuracy and robustness of the calibration results.

Figure 5 presents the qualitative results of the 3D-to-2D projection, where LiDAR point clouds are projected onto the corresponding RGB image. The figure shows the raw RGB image, the original point cloud, the projection with perturbed extrinsics (Uncalibrated), and the projection using our estimated extrinsics (Calibrated). In the uncalibrated case, significant misalignments can be observed: points from the building façade deviate from the actual window grid, the wall edges appear distorted, and the ground–wall boundary fails to align, resulting in floating and misplaced points. After applying our calibration, the projected points adhere closely to structural boundaries such as window frames, façade contours, and ground surfaces, demonstrating a high degree of spatial consistency between the two modalities. This result highlights the effectiveness of our method in recovering accurate extrinsics and achieving precise geometric alignment between LiDAR and camera data.

Figure 6 presents several examples of 2D-to-3D projection results, where image features are reprojected onto the LiDAR point clouds to generate colored 3D reconstructions. Across different scenarios, including building facades, road scenes, and open environments with trees and vegetation, our method consistently demonstrates precise alignment between modalities. For instance, in urban street scenes, structural details such as windows, doors, and walls are accurately mapped onto the corresponding 3D geometry, resulting in point clouds with clear semantic boundaries. In outdoor environments, vegetation and road textures are well preserved, with the projected color distribution tightly adhering to the underlying 3D surfaces. These results highlight the natural fusion of 2D and 3D modalities achieved by our calibration method and further confirm its robustness in delivering strong cross-modal consistency, which is crucial for downstream applications such as semantic mapping, scene reconstruction, and multimodal perception in complex environments.

3.3.3. Ablation Experiment

To further investigate the contribution of each module in our framework, we conduct an ablation study on the KITTI dataset, with results summarized in Table 3. The complete model achieves the best performance with the lowest rotation and translation errors, while removing or altering any component leads to noticeable degradation, confirming the necessity of each design.

When the Point Transformer is removed, the translation error increases from 3.31 cm to 4.82 cm, indicating that fine-grained 3D geometric modeling is crucial for accurate cross-modal alignment. Substituting the Vision Transformer with a standard CNN encoder results in higher rotation error (0.30° vs. 0.21°), which highlights the importance of capturing long-range semantic dependencies from the image domain.

The feature fusion module also proves essential: without it, the integration of image and point cloud features becomes less effective, leading to errors of 5.13 cm in translation and 0.41° in rotation. This shows that deep-level fusion is necessary to exploit complementary cross-modal cues. Finally, removing the multi-constraint loss causes the most significant performance drop (translation error 6.35 cm, rotation error 0.53°), demonstrating that appropriate constraints during optimization are indispensable for stable and accurate calibration.

These results confirm that each component—feature extraction, fusion, and loss design—contributes meaningfully to the overall performance, and their combination ensures the robustness and accuracy of our framework.