Crowd Gathering Detection Method Based on Multi-Scale Feature Fusion and Convolutional Attention

Abstract

1. Introduction

2. Related Work

2.1. Crowd Gathering Detection Method Based on Density Maps

2.2. Crowd Gathering Detection Method Based on Point Supervision

3. Our Work

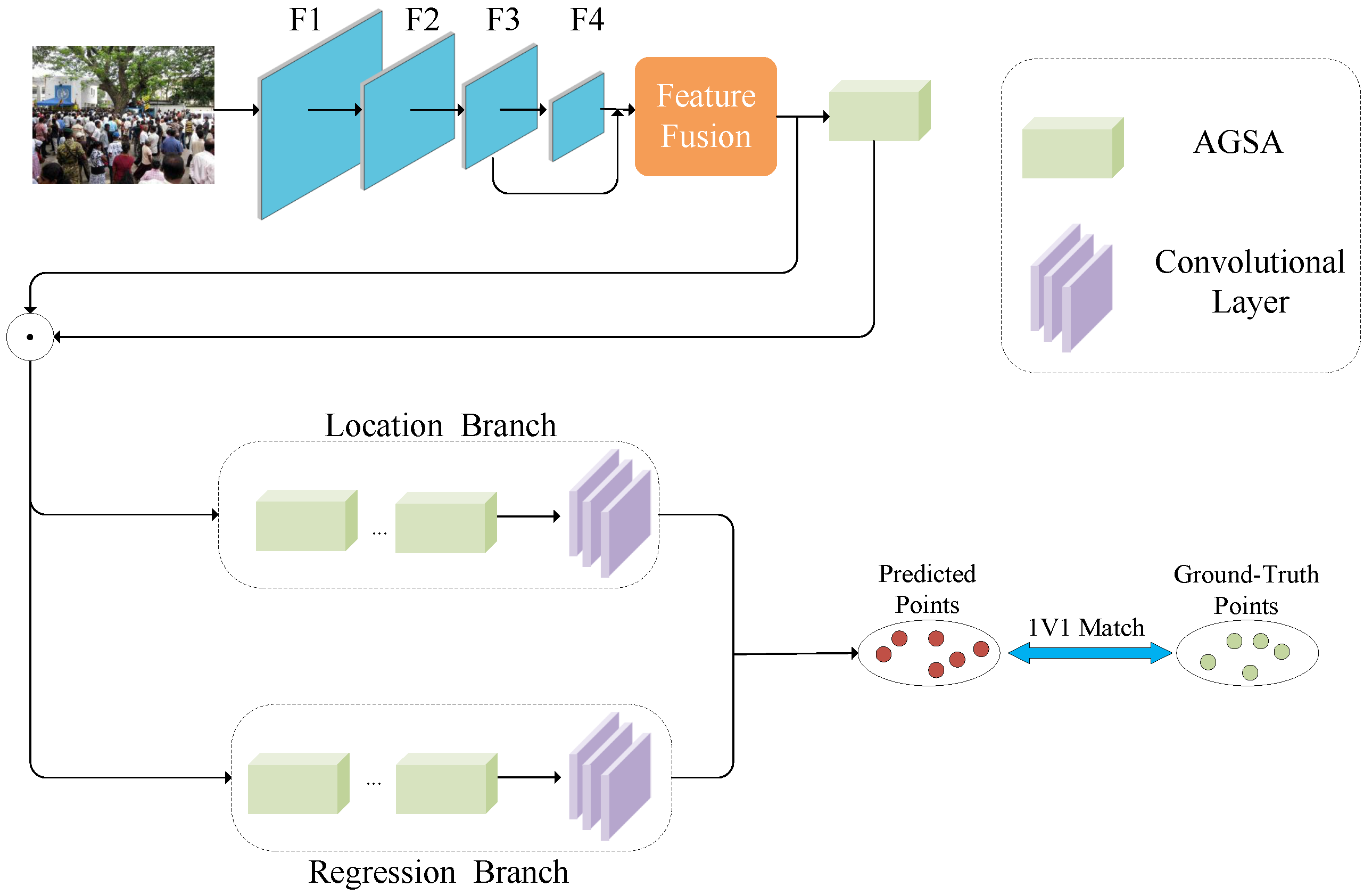

3.1. Introduction to Basic Model

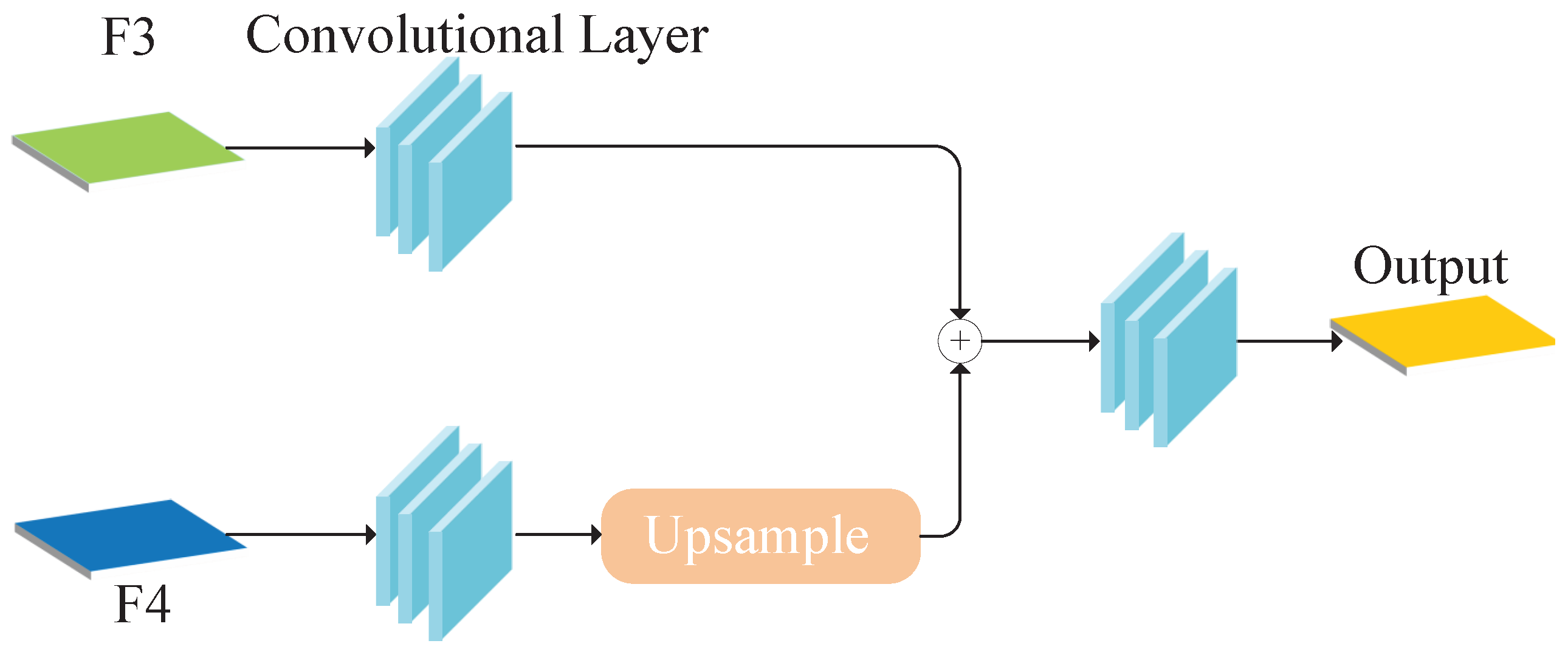

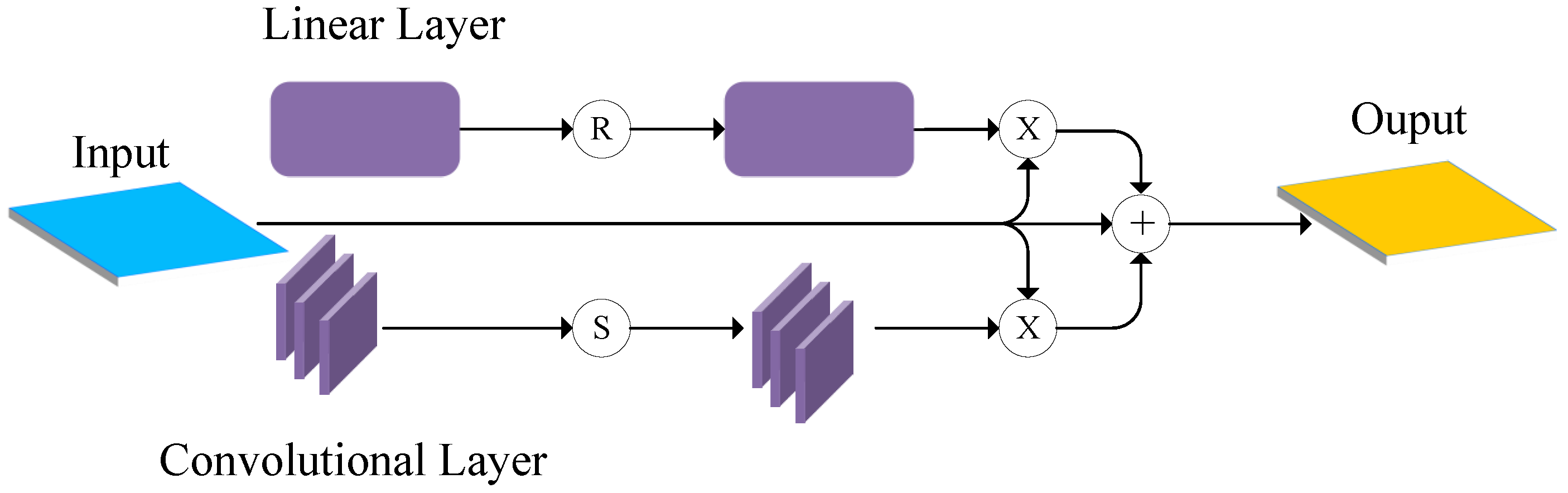

3.2. Our Model

3.3. Loss Function

4. Experiments

4.1. Experiment Planning

4.2. Datasets Introduction

4.3. Implementation Details

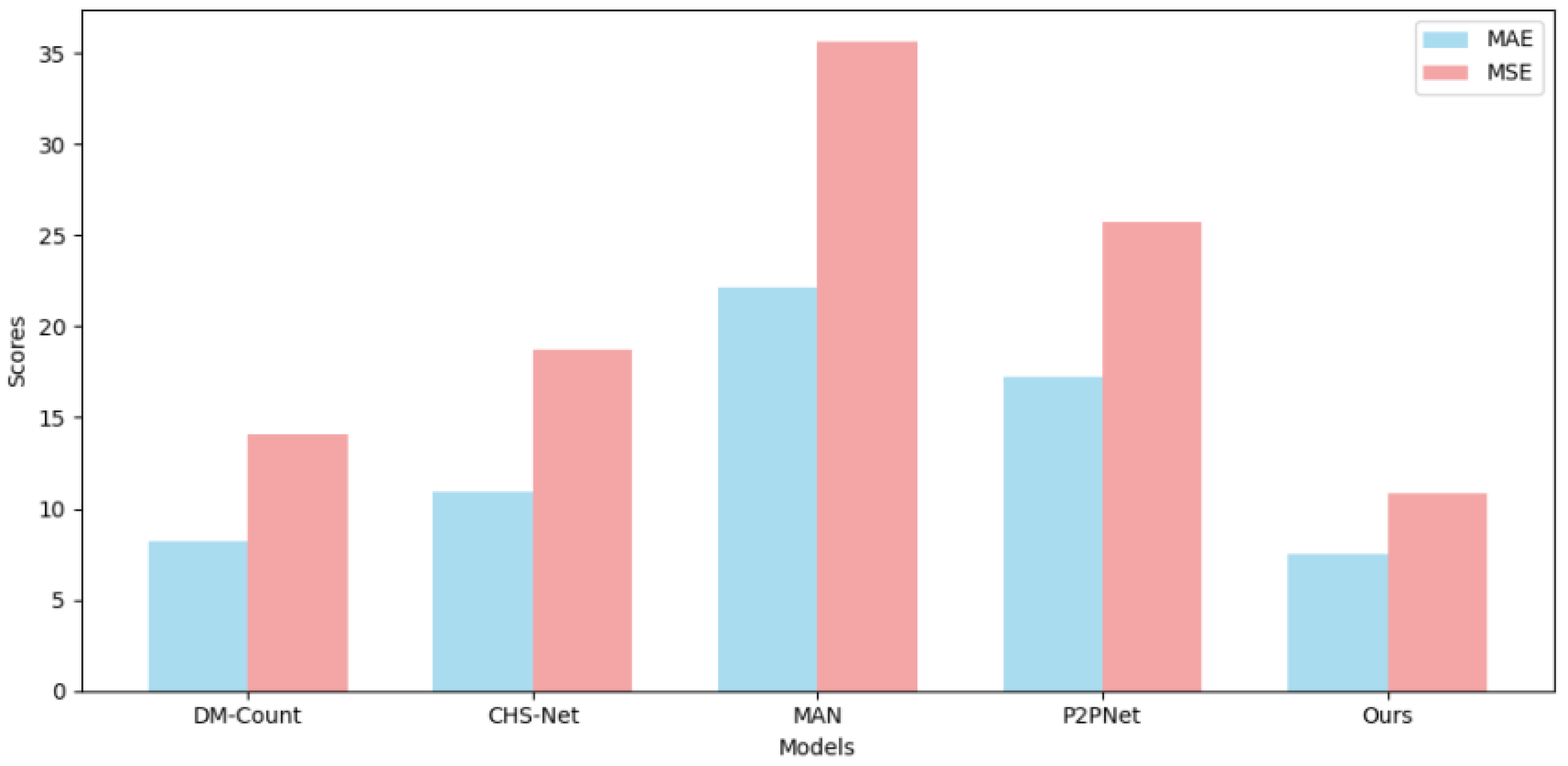

4.4. Result Comparison and Analysis

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Lempitsky, V.; Zisserman, A. Learning to Count Objects in Images. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2010; Volume 23.

- [2] Chen, Y.; Liang, D.; Bai, X.; Xu, Y.; Yang, X. Cell localization and counting using direction field map. IEEE J. Biomed. Health Inform. 2021, 26, 359–368.

- [3] Xu, C.; Liang, D.; Xu, Y.; Bai, S.; Zhan, W.; Bai, X.; Tomizuka, M. Autoscale: Learning to scale for crowd counting. Int. J. Comput. Vis. 2022, 130, 405–434.

- [4] Wen, L.; Du, D.; Zhu, P.; Hu, Q.; Wang, Q.; Bo, L.; Lyu, S. Detection, tracking, and counting meets drones in crowds: A benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 7812–7821.

- [5] Liu, Y.; Shi, M.; Zhao, Q.; Wang, X. Point in, box out: Beyond counting persons in crowds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6469–6478.

- [6] Sam, D.B.; Peri, S.V.; Sundararaman, M.N.; Kamath, A.; Babu, R.V. Locate, size, and count: Accurately resolving people in dense crowds via detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2739–2751.

- [7] Wang, Y.; Hou, J.; Hou, X.; Chau, L.P. A self-training approach for point-supervised object detection and counting in crowds. IEEE Trans. Image Process. 2021, 30, 2876–2887.

- [8] Cheng, J.; Xiong, H.; Cao, Z.; Lu, H. Decoupled two-stage crowd counting and beyond. IEEE Trans. Image Process. 2021, 30, 2862–2875.

- [9] Liu, C.; Weng, X.; Mu, Y. Recurrent attentive zooming for joint crowd counting and precise localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1217–1226.

- [10] Song, Q.; Wang, C.; Jiang, Z.; Wang, Y.; Tai, Y.; Wang, C.; Wu, Y. Rethinking counting and localization in crowds: A purely point-based framework. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3365–3374.

- [11] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

- [12] Zhang, C.; Li, H.; Wang, X.; Yang, X. Cross-scene crowd counting via deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 833–841.

- [13] Hu, Y.; Chang, H.; Nian, F.; Wang, Y.; Li, T. Dense crowd counting from still images with convolutional neural networks. J. Vis. Commun. Image Represent. 2016, 38, 530–539.

- [14] Ma, Z.; Wei, X.; Hong, X.; Gong, Y. Bayesian loss for crowd count estimation with point supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6142–6151.

- [15] Wan, J.; Chan, A. Modeling Noisy Annotations for Crowd Counting. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 3386–3396.

- [16] Li, Y.; Zhang, X.; Chen, D. CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1091–1100.

- [17] Miao, Y.; Lin, Z.; Ding, G.; Han, J. Shallow feature based dense attention network for crowd counting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11765–11772.

- [18] Jiang, X.; Zhang, L.; Xu, M.; Zhang, T.; Lv, P.; Zhou, B.; Pang, Y. Attention scaling for crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4706–4715.

- [19] Xiong, H.; Lu, H.; Liu, C.; Liu, L.; Cao, Z.; Shen, C. From open set to closed set: Counting objects by spatial divide-and-conquer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8362–8371.

- [20] Liu, L.; Lu, H.; Xiong, H.; Xian, K.; Cao, Z.; Shen, C. Counting objects by blockwise classification. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 3513–3527.

- [21] Liu, L.; Lu, H.; Zou, H.; Xiong, H.; Cao, Z.; Shen, C. Weighing Counts: Sequential Crowd Counting by Reinforcement Learning. In Computer Vision–ECCV 2020; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12356, pp. 164–181.

- [22] Liu, W.; Salzmann, M.; Fua, P. Context-aware crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5099–5108.

- [23] Bai, S.; He, Z.; Qiao, Y.; Hu, H.; Wu, W.; Yan, J. Adaptive dilated network with self-correction supervision for counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4594–4603.

- [24] Lian, D.; Li, J.; Zheng, J.; Luo, W.; Gao, S. Density map regression guided detection network for RGB-D crowd counting and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1821–1830.

- [25] Laradji, I.H.; Rostamzadeh, N.; Pinheiro, P.O.; Vazquez, D.; Schmidt, M. Where are the blobs: Counting by localization with point supervision. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 547–562.

- [26] Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- [27] Li, J.; Xue, Y.; Wang, W.; Ouyang, G. Cross-level parallel network for crowd counting. IEEE Trans. Ind. Inform. 2019, 16, 566–576.

- [28] Hu, Y.; Jiang, X.; Liu, X.; Zhang, B.; Han, J.; Cao, X.; Doermann, D. NAS-Count: Counting-by-Density with Neural Architecture Search. In Computer Vision–ECCV 2020; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12362, pp. 747–766.

- [29] Abousamra, S.; Hoai, M.; Samaras, D.; Chen, C. Localization in the crowd with topological constraints. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 872–881.

- [30] Wang, B.; Liu, H.; Samaras, D.; Nguyen, M.H. Distribution Matching for Crowd Counting. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 1595–1607.

- [31] Dai, M.; Huang, Z.; Gao, J.; Shan, H.; Zhang, J. Cross-head supervision for crowd counting with noisy annotations. In Proceedings of the 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 4–9 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

- [32] Lin, H.; Ma, Z.; Ji, R.; Wang, Y.; Hong, X. Boosting crowd counting via multifaceted attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19628–19637.

| Method | STA MAE | STA MSE | STB MAE | STB MSE |
|---|---|---|---|---|
| CSRNet [16] | 68.2 | 115.0 | 10.6 | 16.0 |
| CLPNet [27] | 71.5 | 108.7 | 12.2 | 20.0 |
| AMSNet [28] | 56.7 | 93.4 | 6.7 | 10.2 |
| CAN [22] | 62.3 | 100.0 | 7.8 | 12.2 |
| S-DCNet [19] | 58.3 | 95.0 | 6.7 | 10.7 |
| SGANet [4] | 57.6 | 101.1 | 6.6 | 10.2 |
| GauNet [2] | 54.8 | 89.1 | 6.2 | 9.9 |
| P2PNet [10] | 52.7 | 85.1 | 6.3 | 9.9 |
| DM-Count [30] | 59.7 | 95.7 | 7.4 | 11.8 |
| CHS-Net [31] | 59.2 | 97.8 | 7.1 | 12.1 |
| TopoCount [29] | 61.2 | 104.6 | 7.8 | 13.7 |
| Ours | 51.6 | 82.7 | 6.2 | 10.2 |
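The comparison tables report MAE and MSE, but their definitions are not reproduced in this excerpt. In crowd-counting work such as CSRNet [16], MAE is the mean absolute difference between predicted and ground-truth counts over the N test images, and "MSE" conventionally denotes the square root of the mean squared difference (i.e. RMSE). Assuming the same convention applies here, the minimal sketch below computes both from per-image counts; the function name `counting_errors` and the toy counts are hypothetical and are not taken from the paper.

```python
import math
from typing import Sequence, Tuple


def counting_errors(pred: Sequence[float], gt: Sequence[float]) -> Tuple[float, float]:
    """Return (MAE, MSE) over per-image crowd counts.

    Assumes the crowd-counting convention in which "MSE" is the root of the
    mean squared error (RMSE). Hypothetical helper, not the authors' code.
    """
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")
    n = len(pred)
    # Mean absolute count error over all test images.
    mae = sum(abs(p - g) for p, g in zip(pred, gt)) / n
    # Square root of the mean squared count error (reported as "MSE").
    mse = math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / n)
    return mae, mse


# Toy predicted vs. ground-truth counts for three images (made-up numbers).
mae, mse = counting_errors([100.0, 52.0, 8.0], [110.0, 50.0, 8.0])
print(f"MAE={mae:.2f}, MSE={mse:.2f}")  # prints MAE=4.00, MSE=5.89
```

Because the root of a mean of squares is never smaller than the mean of absolute values, each MSE entry should be at least as large as its MAE counterpart. That holds for every row reported in these tables and serves as a quick sanity check on the extracted numbers.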

| Method | MAE | MSE |
|---|---|---|
| P2PNet [10] | 26.1 | 58.3 |
| DM-Count [30] | 29.7 | 62.0 |
| CHS-Net [31] | 55.5 | 128.8 |
| MAN [32] | 49.4 | 104.7 |
| Ours | 24.4 | 53.7 |

| Method | MAE | MSE |
|---|---|---|
| P2PNet [10] | 22.1 | 34.0 |
| DM-Count [30] | 23.1 | 34.9 |
| CHS-Net [31] | 24.6 | 39.3 |
| MAN [32] | 22.1 | 32.8 |
| Ours | 18.2 | 28.7 |

| Method | MAE | MSE |
|---|---|---|
| P2PNet [10] | 8.2 | 14.1 |
| DM-Count [30] | 10.9 | 18.7 |
| CHS-Net [31] | 22.1 | 35.6 |
| MAN [32] | 17.2 | 25.7 |
| Ours | 7.5 | 10.8 |

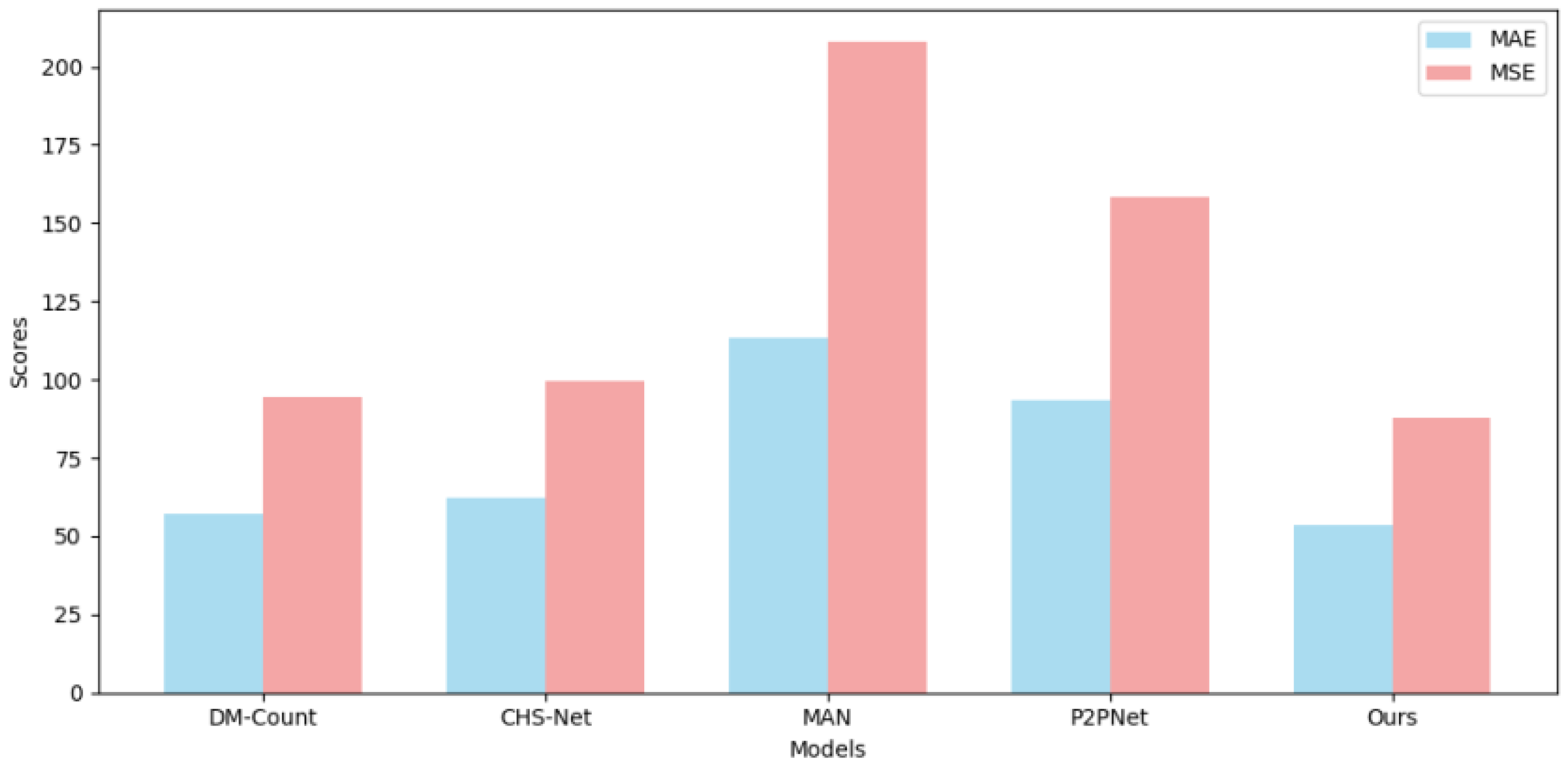

| Method | MAE | MSE |
|---|---|---|
| P2PNet [10] | 57.1 | 94.6 |
| DM-Count [30] | 62.3 | 99.5 |
| CHS-Net [31] | 113.5 | 207.8 |
| MAN [32] | 93.3 | 158.3 |
| Ours | 53.7 | 87.7 |

| Number | MAE | MSE |
|---|---|---|
| 0 | 53.0 | 84.9 |
| 1 | 53.5 | 86.6 |
| 2 | 52.0 | 83.4 |
| 3 | 51.6 | 82.7 |

| | MAE | MSE |
|---|---|---|
| 0.0002 | 51.6 | 82.7 |
| 0.0004 | 52.7 | 85.5 |
| 0.0006 | 52.1 | 87.6 |
| 0.0008 | 50.6 | 83.5 |

| Method | MAE | MSE |
|---|---|---|
| Dilated convolution | 51.6 | 82.7 |
| Depthwise separable convolution | 52.7 | 84.4 |
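The ablation above swaps dilated convolution for depthwise separable convolution, but this excerpt does not state where in the network the layer sits or what kernel size, dilation rate, or channel width it uses. The sketch below only illustrates the standard arithmetic behind the trade-off: dilation widens the window a k x k kernel covers without adding weights, while a depthwise separable layer (a per-channel k x k filter followed by a 1 x 1 pointwise convolution) cuts the weight count. The 3 x 3 kernel, dilation 2, and 512-channel width are hypothetical values chosen for illustration, and the helper names are likewise hypothetical.

```python
def effective_kernel(k: int, d: int) -> int:
    """Side length of the window covered by a k x k kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k convolution, bias omitted; dilation does not change this."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Depthwise k x k (one filter per input channel) plus pointwise 1 x 1, bias omitted."""
    return c_in * k * k + c_in * c_out


# Hypothetical setting: 3 x 3 kernels, 512 -> 512 channels, dilation rate 2.
k, c = 3, 512
print(effective_kernel(k, 2))                # prints 5
print(standard_conv_params(c, c, k))         # prints 2359296
print(depthwise_separable_params(c, c, k))   # prints 266752
```

Under these assumed settings, the dilated layer carries roughly 8.8 times as many weights as the depthwise separable one while covering a 5 x 5 window. The ablation reports lower error for the dilated variant (51.6/82.7 versus 52.7/84.4 MAE/MSE); the arithmetic alone does not explain why.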

| Module | MAE | MSE |
|---|---|---|
| Without channel | 56.7 | 91.3 |
| Without spatial | 53.4 | 91.4 |
| With channel and spatial | 51.6 | 82.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yasen, K.; Zhou, J.; Zhou, N.; Qin, K.; Wang, Z.; Li, Y. Crowd Gathering Detection Method Based on Multi-Scale Feature Fusion and Convolutional Attention. Sensors 2025, 25, 6550. https://doi.org/10.3390/s25216550
