Accuracy of Heart Rate Measurement Under Transient States: A Validation Study of Wearables for Real-Life Monitoring

Abstract

1. Introduction

2. Materials and Methods

2.1. Recruitment

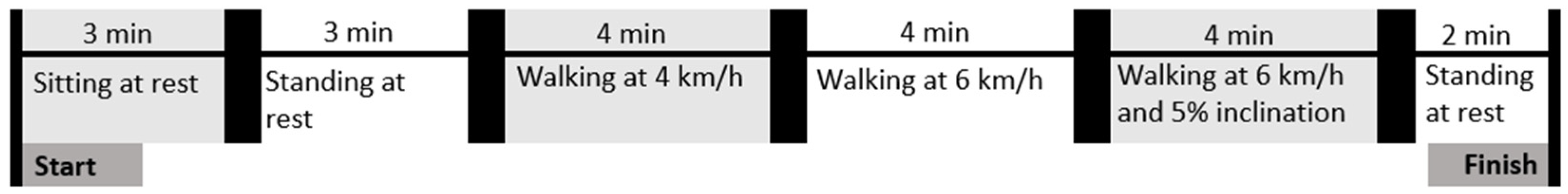

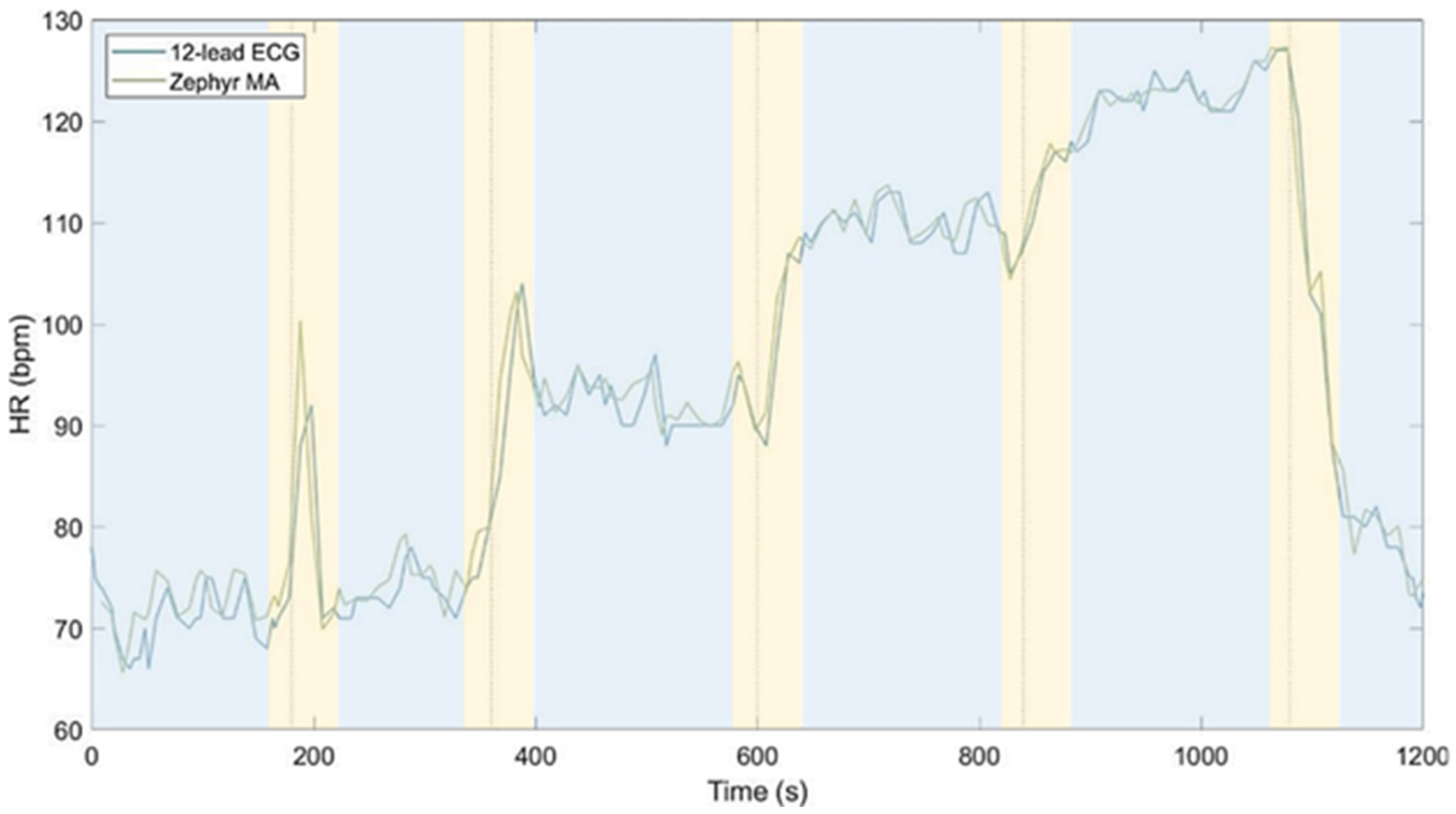

2.2. Study Protocol

2.3. Data Preprocessing

2.4. Statistical Analyses

3. Results

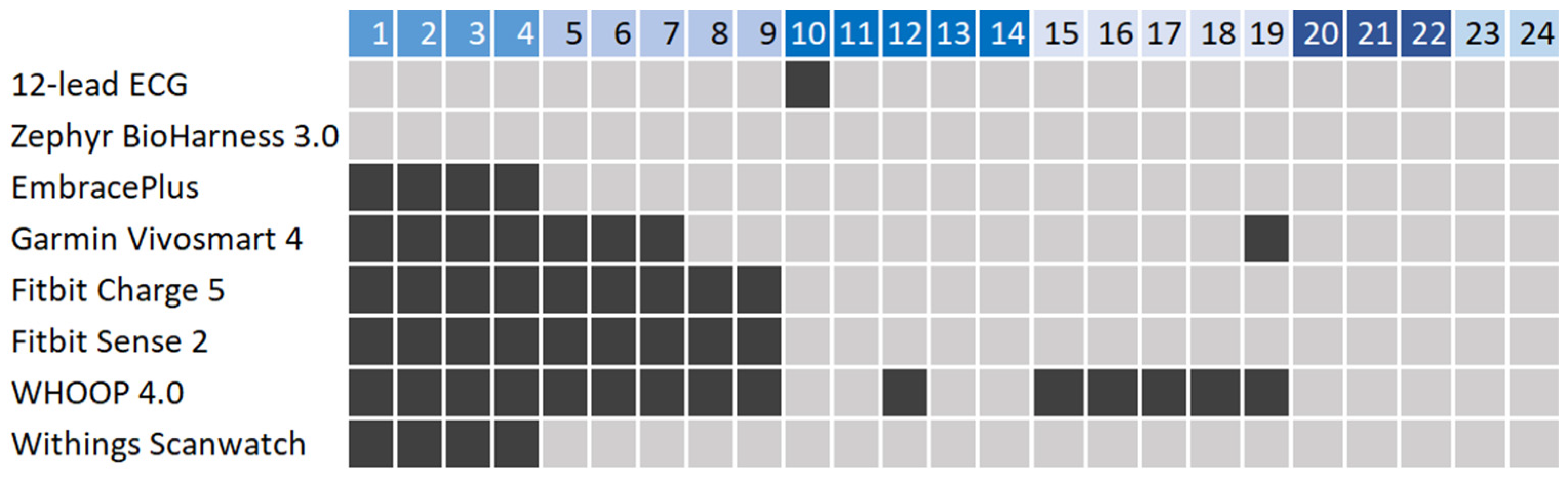

3.1. User Statistics and Data Collection

3.2. Overall Performance in Measuring Heart Rate

3.3. Performance Measuring Heart Rate Dynamics

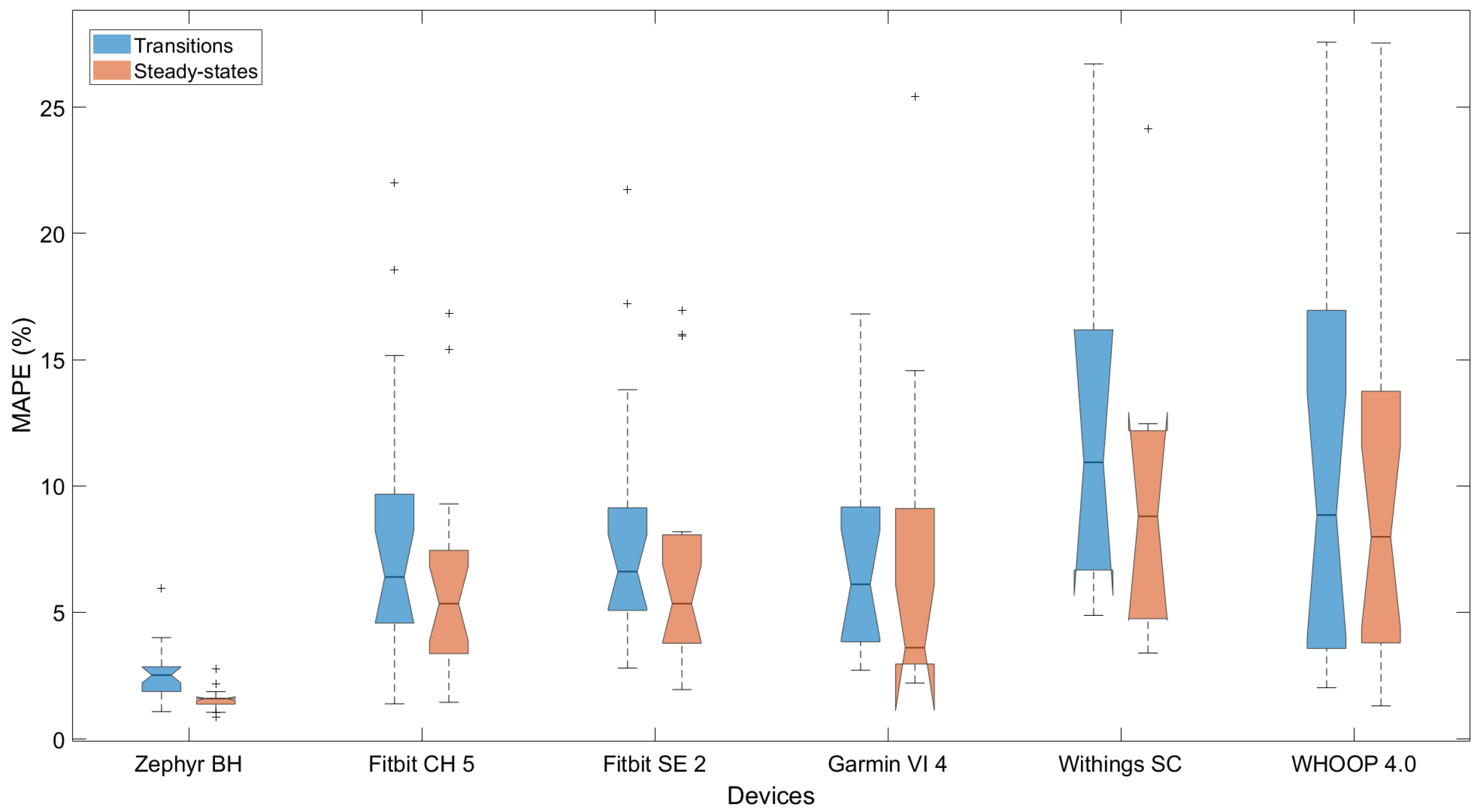

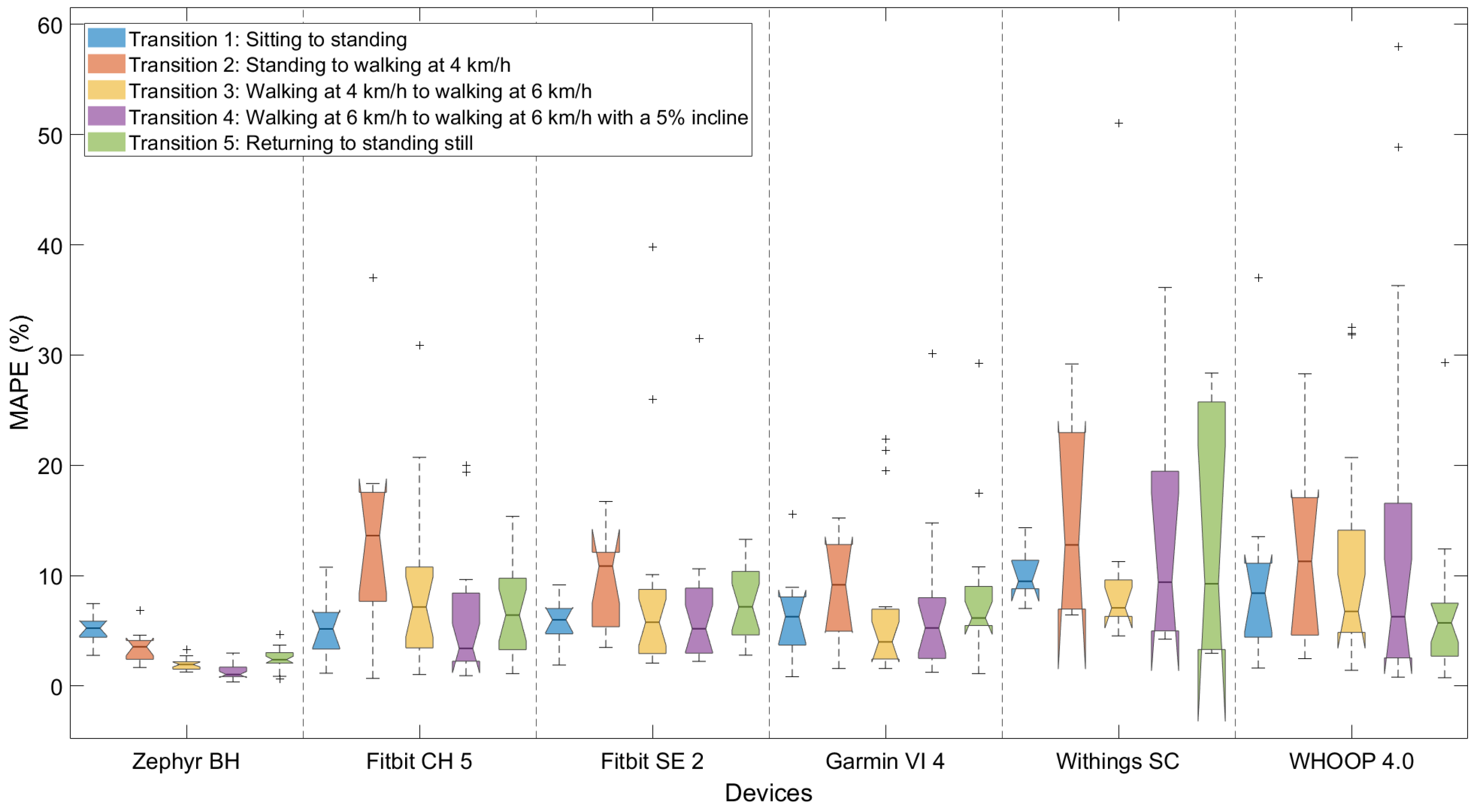

3.4. Performance Depending on Type of Dynamics

3.5. High-Resolution Validation

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ECG | electrocardiogram |
| HR | heart rate |
| HRV | heart rate variability |
| EDA | electrodermal activity |
| PPG | photoplethysmography |
| PRV | pulse rate variability |
| MAPE | mean absolute percentage error |
| MAE | mean absolute error |
| IQR | interquartile range |
| SCC | Spearman correlation coefficient |
| CCC | concordance correlation coefficient |
| rmCCC | repeated-measures concordance correlation coefficient |
| CI | confidence interval |
| LoA | limits of agreement |


| Device | Data Availability |
|---|---|
| 12-lead ECG | Every 5 or 10 s |
| Zephyr BioHarness 3.0 | Every second |
| EmbracePlus | Every minute |
| Garmin Vivosmart 4 | Infrequent (range: 1–110 s, average: 10 s) |
| Fitbit Charge 5 | Every 1, 2, or 3 s |
| Fitbit Sense 2 | Every 1, 2, or 3 s |
| Withings ScanWatch | Infrequent (range: 1–90 s, average: 10 s) |
| WHOOP 4.0 | Every second |
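Because the devices above report heart rate at very different and sometimes irregular intervals, their streams must be brought onto a common time base before they can be compared with the reference. The Data Preprocessing text is not reproduced here, so the following is only a minimal sketch of one plausible approach, assuming timestamps in seconds: average each stream within fixed, non-overlapping windows (e.g., 10 s or 60 s) and keep only the windows covered by both the reference and the device. The function names, the plain averaging, and the missingness definition (reference windows with no device sample) are hypothetical; the missingness percentages reported in the results may be defined differently.

```python
from collections import defaultdict
from statistics import mean


def window_means(samples, window_s):
    """Average (timestamp_s, hr_bpm) samples within fixed, non-overlapping windows.

    Returns a dict keyed by window start time (s). Windows without any sample
    are simply absent, which is how sparse devices end up with missing windows.
    """
    bins = defaultdict(list)
    for t, hr in samples:
        start = int(t // window_s) * window_s  # floor to the window boundary
        bins[start].append(hr)
    return {start: mean(values) for start, values in bins.items()}


def pair_windows(reference, device, window_s):
    """Pair reference and device window means that share a window start time.

    Hypothetical missingness: share of reference windows without any device sample.
    """
    ref = window_means(reference, window_s)
    dev = window_means(device, window_s)
    pairs = [(ref[w], dev[w]) for w in sorted(ref) if w in dev]
    missing = 1 - len(pairs) / len(ref) if ref else 0.0
    return pairs, missing


# Usage with made-up readings: the device has no sample in the 20-30 s window.
reference = [(0, 60), (5, 62), (10, 70), (15, 72), (20, 80)]
device = [(1, 61), (12, 69)]
pairs, missing = pair_windows(reference, device, window_s=10)
print(pairs, round(missing, 3))
```

Averaging within windows smooths over the different native sampling rates, at the cost of hiding sub-window dynamics; that trade-off is why the coarser 60-s dataset can show higher agreement than the 10-s or per-second datasets.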

| Participants | Men (n = 11), Mean (SD) | Men (n = 11), Range | Women (n = 14), Mean (SD) | Women (n = 14), Range |
|---|---|---|---|---|
| Age (years) | 23.3 (1.7) | 22–27 | 22.6 (1.9) | 18–26 |
| Weight (kg) | 78.9 (11.1) | 66–100 | 67.9 (7.1) | 56–79 |
| Height (cm) | 186.0 (7.2) | 174–193 | 174.1 (4.8) | 167–180 |
| BMI (kg/m²) | 22.9 (3.7) | 18.1–31.2 | 22.4 (2.1) | 18.9–25.6 |

| Dataset | Device | #Pairs | Missingness (%) | rmCCC (95% CI) | Bland–Altman: Mean Diff (Lower, Upper LoA), bpm | Median MAPE (IQR), % |
|---|---|---|---|---|---|---|
| 10-s | Zephyr ¹ | 3559 | 0.00% | 0.98 (0.98, 0.99) | −0.30 * (−9.99, 9.32) | 2.28 (0.99) |
| | Fitbit CH 5 ² | 2854 | 9.79% | 0.87 (0.85, 0.88) | 0.46 (−16.52, 17.43) | 5.65 (4.13) |
| | Fitbit SE 2 ³ | 2854 | 7.59% | 0.86 (0.84, 0.87) | 0.52 (−16.34, 17.38) | 5.61 (3.53) |
| | Garmin VI 4 ⁴ | 1595 | 49.59% | 0.77 (0.75, 0.79) | 2.12 (−17.23, 21.46) | 4.40 (6.18) |
| | Withings SC ⁵ | 1236 | 60.93% | 0.68 (0.65, 0.71) | 3.51 (−23.17, 30.19) | 9.34 (6.59) |
| | WHOOP 4.0 | 2752 | 13.02% | 0.79 (0.77, 0.80) | −0.39 (−24.17, 23.39) | 8.52 (10.30) |
| 60-s | Zephyr | 450 | 0.00% | 0.99 (0.98, 0.99) | −0.50 * (−5.25, 4.24) | 1.45 (1.00) |
| | EmbracePlus | 359 | 20.02% | 0.85 (0.82, 0.88) | −0.57 (−20.42, 19.28) | 6.22 (5.54) |
| | Fitbit CH 5 | 370 | 17.78% | 0.91 (0.89, 0.92) | 0.24 (−14.59, 15.06) | 5.09 (4.58) |
| | Fitbit SE 2 | 378 | 16.00% | 0.90 (0.88, 0.92) | 0.33 (−14.84, 15.50) | 4.55 (4.15) |
| | Garmin VI 4 | 293 | 34.89% | 0.85 (0.81, 0.88) | 1.88 (−14.48, 18.26) | 3.23 (4.78) |
| | Withings SC | 159 | 64.67% | 0.73 (0.64, 0.79) | 3.78 (−21.25, 28.82) | 8.59 (7.92) |
| | WHOOP 4.0 | 390 | 13.33% | 0.79 (0.74, 0.82) | 0.96 (−24.53, 26.45) | 9.99 (10.38) |
| Per-second | Zephyr | 3567 | 0.00% | 0.95 (0.95, 0.96) | −0.34 * (−9.99, 9.32) | 3.86 (0.99) |
| | Fitbit CH 5 | 1429 | 59.94% | 0.87 (0.85, 0.88) | 0.22 (−16.59, 17.02) | 6.04 (4.58) |
| | Fitbit SE 2 | 1582 | 55.65% | 0.86 (0.84, 0.87) | −0.21 (−16.88, 16.46) | 4.58 (3.53) |
| | Garmin VI 4 | 249 | 93.02% | 0.77 (0.72, 0.82) | 1.66 (−17.18, 20.49) | 4.12 (6.18) |
| | Withings SC | 1252 | 65.90% | 0.69 (0.66, 0.72) | 3.78 * (−22.88, 29.88) | 8.94 (6.50) |
| | WHOOP 4.0 | 1529 | 57.13% | 0.55 (0.51, 0.58) | 0.21 (−34.03, 34.44) | 11.55 (12.66) |
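The Bland–Altman and MAPE columns above can be illustrated with a minimal sketch, assuming the textbook definitions: the bias is the mean of the paired differences, the 95% limits of agreement are bias ± 1.96 SD of those differences, and MAPE is the mean of |device − reference| / reference × 100. The sign convention (device minus reference), the per-participant aggregation behind "Median MAPE (IQR)", and the helper names are hypothetical. This simple version treats every pair as independent; a repeated-measures variant of Bland–Altman analysis would instead estimate the SD from within- and between-participant components.

```python
from statistics import mean, median, quantiles, stdev


def bland_altman(reference, device):
    """Bias and 95% limits of agreement for paired HR readings (device minus reference).

    Treats every pair as independent; no correction for repeated measurements
    within the same participant.
    """
    diffs = [d - r for r, d in zip(reference, device)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def mape(reference, device):
    """Mean absolute percentage error (%) relative to the reference HR."""
    return 100 * mean(abs(d - r) / r for r, d in zip(reference, device))


def median_iqr(values):
    """Median and interquartile range (Q3 - Q1), e.g., of per-participant MAPE values."""
    q1, _, q3 = quantiles(values, n=4)  # default 'exclusive' quantile method
    return median(values), q3 - q1


# Usage with made-up readings from one participant (bpm).
ecg = [60, 80, 100, 120]
watch = [62, 78, 105, 120]
print(bland_altman(ecg, watch))
print(round(mape(ecg, watch), 2))
print(median_iqr([2.1, 4.8, 5.6, 9.3]))
```

Quantile conventions differ between software packages, so an IQR computed with `statistics.quantiles` may differ slightly from one computed elsewhere on the same values.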

| Device | Fitbit CH 5 | Fitbit SE 2 | Garmin VI 4 | WHOOP 4.0 | Withings SC |
|---|---|---|---|---|---|
| Zephyr | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 |
| Fitbit CH 5 | – | 0.715 | 1.000 | 0.046 | 0.199 |
| Fitbit SE 2 | – | – | 0.555 | 0.094 | 0.414 |
| Garmin VI 4 | – | – | – | 0.087 | 0.298 |
| WHOOP 4.0 | – | – | – | – | 0.614 |

| Device | #Pairs | Missingness (%) | rmCCC (95% CI) | Bland–Altman: Mean Diff (Lower, Upper LoA), bpm | Median MAPE (IQR), % |
|---|---|---|---|---|---|
| EmbracePlus | 388 | 98.24% | 0.81 (0.78, 0.84) | 1.42 (−21.22, 24.06) | 7.43 (5.25) |
| Fitbit CH 5 | 11,453 | 65.42% | 0.85 (0.85, 0.86) | −0.65 (−19.34, 18.03) | 7.17 (2.83) |
| Fitbit SE 2 | 12,428 | 61.03% | 0.84 (0.83, 0.84) | −0.03 (−19.02, 18.95) | 7.22 (4.59) |
| Garmin VI 4 | 1748 | 94.30% | 0.71 (0.69, 0.74) | −1.75 (−23.46, 19.97) | 8.04 (5.05) |
| Withings SC | 9762 | 81.05% | 0.64 (0.63, 0.65) | −3.54 (−30.78, 23.70) | 11.20 (6.83) |
| WHOOP 4.0 | 6061 | 66.56% | 0.78 (0.77, 0.79) | −0.42 (−25.86, 25.01) | 11.37 (8.86) |
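The rmCCC values extend Lin's concordance correlation coefficient to several readings per participant. The repeated-measures estimator itself is not sketched here; the block below shows only Lin's original CCC for a single set of paired readings, which makes its key property visible: unlike a Pearson correlation, the CCC is penalized by a systematic offset between device and reference. The function name and input format are assumptions, and this is not the estimator used to produce the tables.

```python
from statistics import fmean


def lin_ccc(x, y):
    """Lin's concordance correlation coefficient for one set of paired readings.

    Uses population (1/n) moments as in Lin's original definition. This is NOT
    the repeated-measures CCC (rmCCC) reported in the tables.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    denom = sxx + syy + (mx - my) ** 2
    if denom == 0:
        raise ValueError("CCC is undefined when both series are constant and equal")
    return 2 * sxy / denom


# A constant +1 bpm offset keeps Pearson's r at 1 but lowers the CCC.
print(lin_ccc([60, 70, 80], [60, 70, 80]))
print(lin_ccc([60, 70, 80], [61, 71, 81]))
```

Because the squared mean difference sits in the denominator, a device with a constant bias scores below 1 even when it tracks every change in heart rate perfectly, which is why concordance rather than correlation is used to summarize agreement.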

| Device | Fitbit CH 5 | Fitbit SE 2 | Garmin VI 4 | WHOOP 4.0 | Withings SC |
|---|---|---|---|---|---|
| EmbracePlus | 0.474 | 0.861 | 0.917 | 0.075 | 0.209 |
| Fitbit CH 5 | – | 0.603 | 0.377 | 0.024 | 0.066 |
| Fitbit SE 2 | – | – | 0.729 | 0.053 | 0.090 |
| Garmin VI 4 | – | – | – | 0.232 | 0.331 |
| WHOOP 4.0 | – | – | – | – | 0.690 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Van Oost, C.N.; Masci, F.; Malisse, A.; Schyvens, A.-M.; Peters, B.; Dirix, H.; Ross, V.; Wets, G.; Neven, A.; Verbraecken, J.; et al. Accuracy of Heart Rate Measurement Under Transient States: A Validation Study of Wearables for Real-Life Monitoring. Sensors 2025, 25, 6319. https://doi.org/10.3390/s25206319

Van Oost CN, Masci F, Malisse A, Schyvens A-M, Peters B, Dirix H, Ross V, Wets G, Neven A, Verbraecken J, et al. Accuracy of Heart Rate Measurement Under Transient States: A Validation Study of Wearables for Real-Life Monitoring. Sensors. 2025; 25(20):6319. https://doi.org/10.3390/s25206319

Chicago/Turabian Style: Van Oost, Catharina Nina, Federica Masci, Adelien Malisse, An-Marie Schyvens, Brent Peters, Hélène Dirix, Veerle Ross, Geert Wets, An Neven, Johan Verbraecken, et al. 2025. "Accuracy of Heart Rate Measurement Under Transient States: A Validation Study of Wearables for Real-Life Monitoring" Sensors 25, no. 20: 6319. https://doi.org/10.3390/s25206319

APA Style: Van Oost, C. N., Masci, F., Malisse, A., Schyvens, A.-M., Peters, B., Dirix, H., Ross, V., Wets, G., Neven, A., Verbraecken, J., & Aerts, J.-M. (2025). Accuracy of Heart Rate Measurement Under Transient States: A Validation Study of Wearables for Real-Life Monitoring. Sensors, 25(20), 6319. https://doi.org/10.3390/s25206319