1. Introduction

In urban rail transit systems, platform doors play a crucial role. Not only do they ensure passenger safety and prevent passengers from falling onto the tracks, but they also improve train operational efficiency. However, foreign objects in the gap between the platform doors and the train doors, such as items left behind by passengers, trash, or other obstacles, can obstruct the normal closing of the platform doors, cause malfunctions, and even lead to serious safety accidents [1,2,3]. Therefore, detecting foreign objects in platform door gaps is extremely important.

In recent years, deep learning has achieved remarkable results in object detection, particularly through convolutional neural networks [4]. Representative algorithms include the Region-based Convolutional Neural Network (R-CNN) [5], Fast R-CNN [6], Faster R-CNN [7], and Mask R-CNN [8]. These algorithms and their derivatives have steadily improved detection accuracy on public object detection datasets. As network architectures become deeper and more complex, the representations they extract become more abstract, which has enabled significant performance gains in both image classification in computer vision and semantic recognition in natural language processing.


Current methods for detecting foreign objects in subway gaps fall broadly into two categories: traditional detection methods and methods based on computer image processing. Traditional detection relies primarily on manual inspection and sensors. Manual inspection consumes significant human resources, imposes a heavy workload on personnel, and cannot provide real-time monitoring. Among sensor-based approaches, Fei et al. [9] proposed a multi-sensor method that integrates visual cameras and lidar to detect foreign objects, and Zhang et al. [10] proposed a complementary infrared and ultrasonic method for detecting foreign object intrusion that improves detection performance at different distances.

Among computer-based methods, image processing is the primary focus, and deep learning algorithms have become the main subject of research. Wu et al. [11] proposed an adaptive moving object detection algorithm based on an improved ViBe algorithm. Zheng et al. [12] used machine vision to model the background of subway gaps and detected foreign objects by assessing the degree of feature change. Gao et al. [13] developed a railway foreign object detection algorithm based on the Faster R-CNN model, employing transfer learning techniques to augment the railway foreign object intrusion data. However, this algorithm may struggle to identify foreign object types absent from the training dataset, and its detection speed is relatively slow. Meanwhile, SSD [14] and the YOLO [15] series have substantially improved detection speed on public datasets, and such object detection algorithms are now widely applied across many fields.

Zhang et al. [16] proposed an improved YOLOv7 algorithm for detecting foreign object intrusion on high-speed railways. By incorporating the CARAFE operator, GhostConv convolutions, and a global attention mechanism, the enhanced model balances accuracy and efficiency, although its computation time increases. Ding et al. [17] improved the model's ability to recognize minute objects in complex environments by integrating attention mechanisms into YOLOv8. However, this approach increased the computational load and inference time, limiting the model's applicability on resource-constrained platforms.

Cao et al. [18] redesigned the C2f module in YOLOv8 to reduce parameter redundancy and further improve detection speed. By optimizing the network structure, this method effectively reduces the number of parameters and achieves better speed and lower resource consumption. However, this parameter compression reduces the model's detection accuracy in scenarios where the target distribution is extremely complex or diverse.

As the YOLO series continues to evolve, YOLOv11, its latest iteration, introduces advanced architectural designs and optimization strategies, such as the C3k2 module, the Spatial Pyramid Pooling-Fast (SPPF) block, and the spatial attention (C2PSA) block. These improvements further enhance the model's feature extraction capability and computational efficiency. YOLOv11 maintains high detection speed while offering higher detection accuracy, making it one of the leading algorithms in object detection [19].
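The efficiency of SPPF relative to the earlier SPP block can be checked with receptive-field arithmetic. Assuming the standard SPPF configuration (a stride-1, 5×5 max pool applied three times in series, with the input and the three pooled outputs concatenated), the window covered after the n-th pool grows as

$$k_{\mathrm{eff}}(n) = 1 + n\,(k - 1), \qquad k = 5:\quad k_{\mathrm{eff}}(1) = 5,\; k_{\mathrm{eff}}(2) = 9,\; k_{\mathrm{eff}}(3) = 13,$$

which reproduces the parallel 5×5, 9×9, and 13×13 pools of SPP while each stage reuses the previous stage's output instead of pooling the input again.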

He et al. [20] proposed a multi-scale feature fusion method that significantly enhanced YOLOv11's ability to detect small objects. However, this approach increased computational cost and reduced inference speed, limiting its use in real-time scenarios. Zhang et al. [21] proposed lightweight convolutional modules that substantially improved model speed and resource efficiency. However, the lightweight design weakened the model's ability to represent fine-grained features, resulting in a slight decrease in detection accuracy.

Despite continuous technological advances, detecting foreign objects in the narrow gap between subway train doors and platform screen doors presents unique challenges. The objects are typically extremely small and susceptible to complex lighting variations such as reflections and shadows. The strict timing of door operations demands both high precision and real-time inference, and the extremely short opening and closing intervals require very fast processing while keeping detection reliability near perfect and false alarm rates minimal.

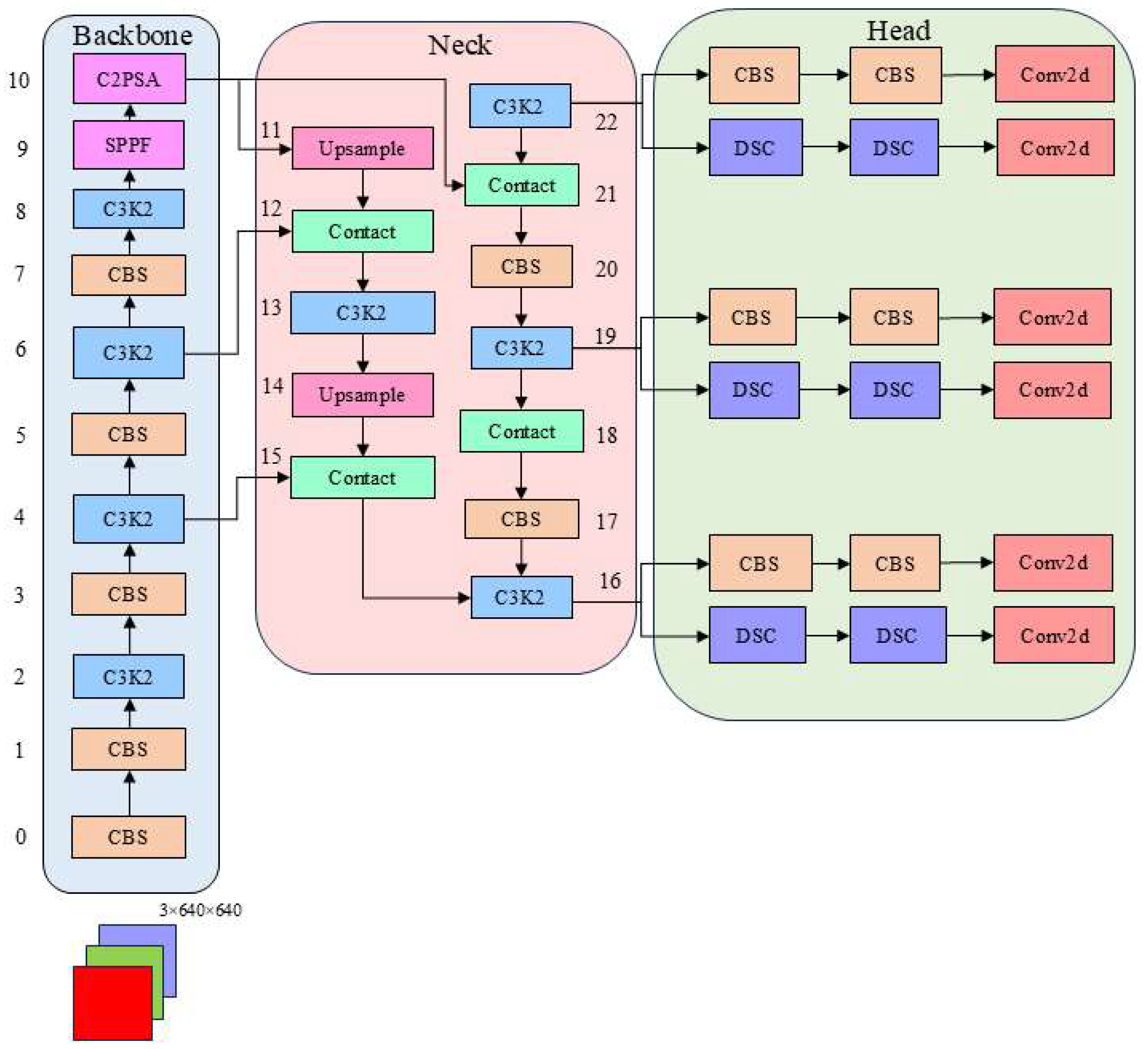

Based on the aforementioned issues, this article proposes GA-YOLOv11, an improved object detection framework tailored to subway safety scenarios. Built on the YOLOv11 architecture, the framework is optimized for real-time detection of foreign objects in the gaps between subway train doors and platform screen doors. First, a dataset containing various categories of foreign objects was constructed and annotated. Next, the YOLOv11 model was adapted by integrating the GhostConv module into the backbone network. Finally, the GAM channel attention module was introduced before the final convolutional layer of the head network.
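The cost argument behind GhostConv can be made concrete by counting weights. The sketch below is a minimal illustration, not the authors' implementation: it assumes a Ghost-style layer in which a primary k×k convolution produces `c_out // ratio` intrinsic feature maps and a depthwise d×d convolution derives the remaining maps from them. The defaults `ratio=2` and `d=5` are assumptions chosen to resemble the Ultralytics `GhostConv` layer; the helper names `conv_params` and `ghost_conv_params` are hypothetical, and bias and BatchNorm terms are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weight count of a k x k 2D convolution (bias and BatchNorm ignored)."""
    return (c_in // groups) * c_out * k * k


def ghost_conv_params(c_in: int, c_out: int, k: int = 3,
                      ratio: int = 2, d: int = 5) -> int:
    """Weight count of a Ghost-style convolution (hypothetical helper).

    A primary k x k convolution yields c_out // ratio intrinsic maps; a cheap
    depthwise d x d convolution derives (ratio - 1) ghost maps from each
    intrinsic map, and both sets are concatenated along the channel axis.
    """
    intrinsic = c_out // ratio
    primary = conv_params(c_in, intrinsic, k)
    # Depthwise: one group per intrinsic map, (ratio - 1) filters per group.
    cheap = conv_params(intrinsic, intrinsic * (ratio - 1), d, groups=intrinsic)
    return primary + cheap


if __name__ == "__main__":
    standard = conv_params(64, 128, 3)
    ghost = ghost_conv_params(64, 128, 3)
    print(standard, ghost, round(standard / ghost, 2))  # 73728 38464 1.92
```

With 64 input channels, 128 output channels, and a 3×3 kernel, the Ghost-style layer needs roughly half the weights of a standard convolution. At a fixed output resolution the multiply-accumulate count shrinks by the same factor, because both terms scale with the output height and width.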

The main contributions of this paper are summarized as follows:

This study focuses on subway safety scenarios and constructs a dataset covering multiple types of foreign objects, with detailed annotations. The dataset targets foreign object detection in the gap between subway train doors and platform screen doors, providing a solid data foundation for subsequent algorithm optimization and model training and improving the adaptability and accuracy of object detection in practical applications.

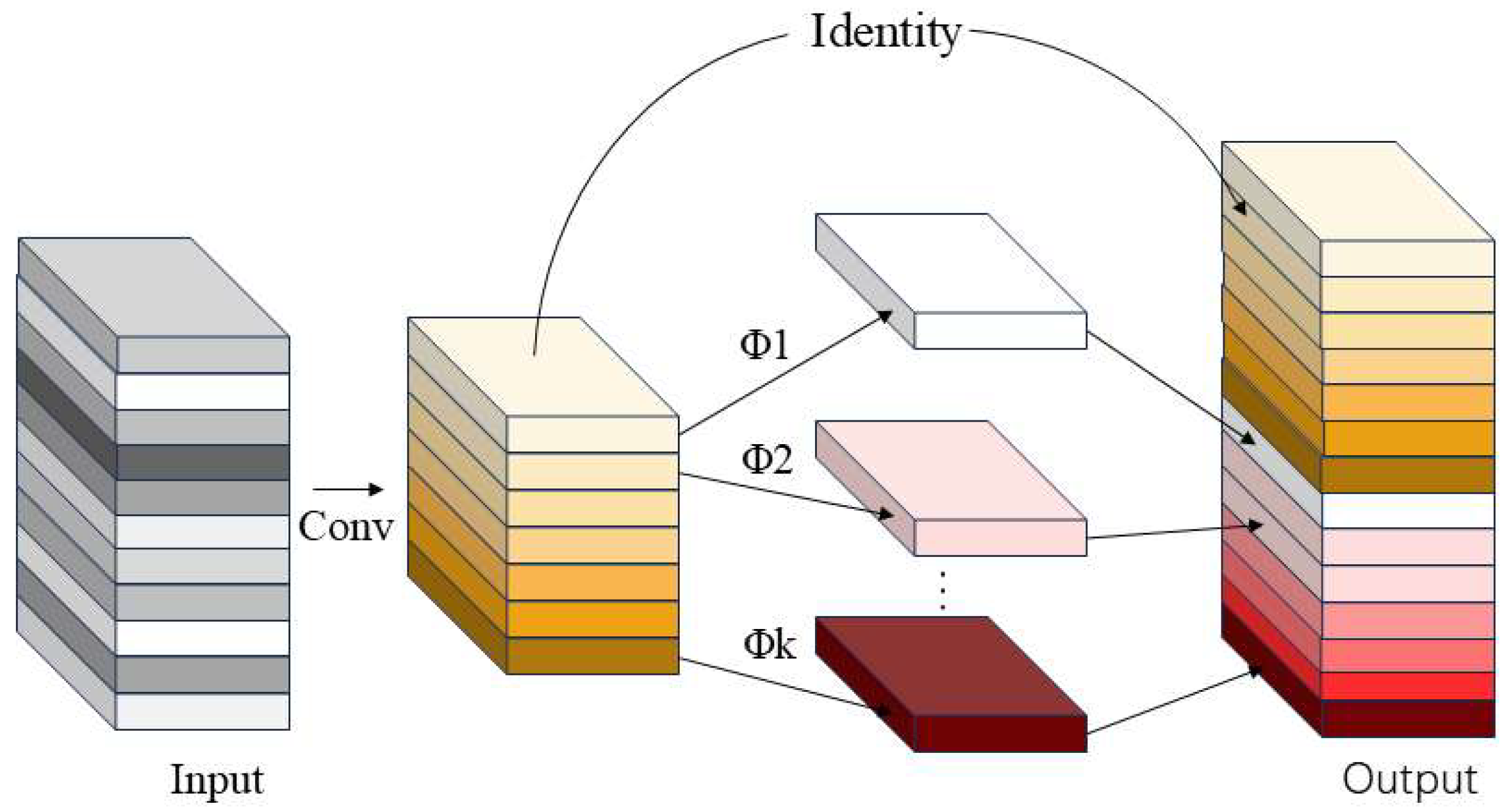

Building on the YOLOv11n architecture, this paper adds the GhostConv module to the backbone network. The Ghost module generates additional feature maps through inexpensive operations, increasing network capacity while keeping computational costs low. This design improves the model's feature extraction in complex environments, thereby enhancing the efficiency and performance of foreign object detection.

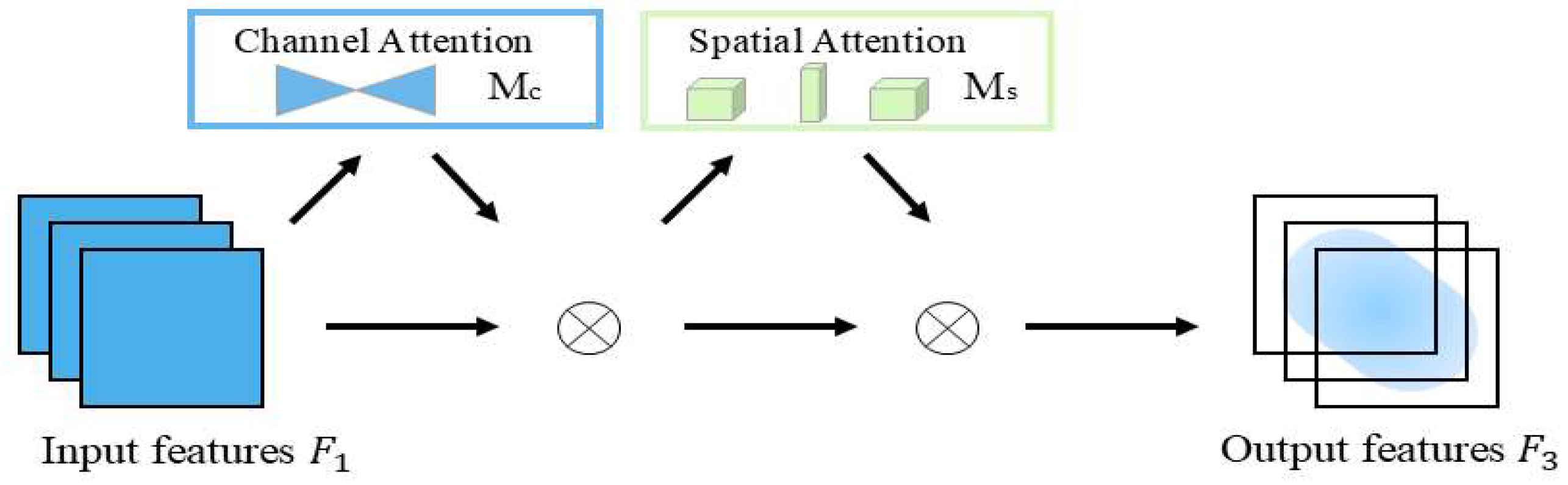

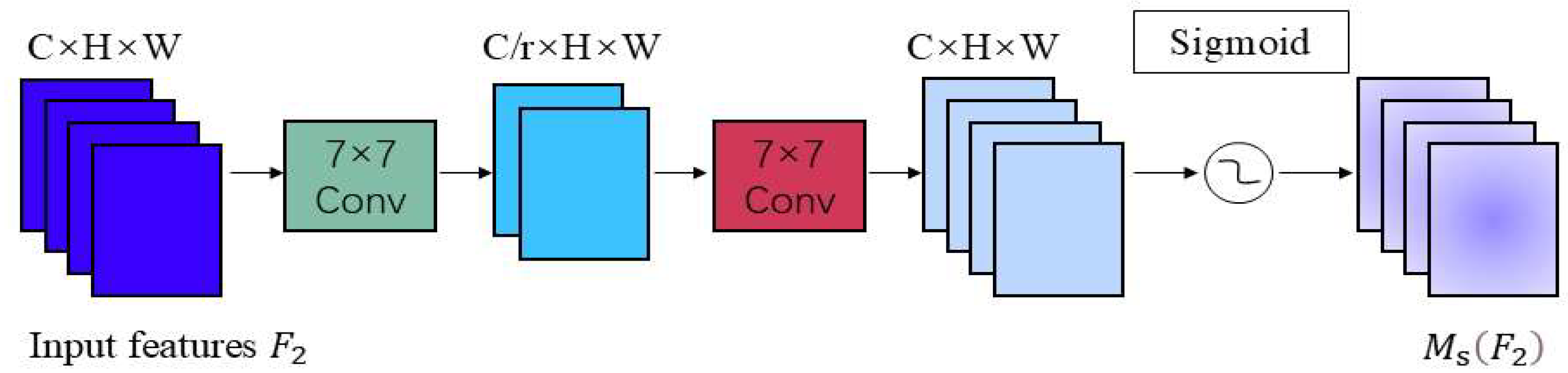

To further enhance the model's ability to learn correlations between feature channels, this study adds a GAM channel attention module before the final convolutional layer of the head network. This module dynamically adjusts the distribution of channel weights, strengthening the model's ability to capture key features and improving its multi-scale feature representation, which in turn improves the accuracy and robustness of real-time foreign object detection in subways.
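The channel-weighting idea can be illustrated with a small, dependency-free sketch of a GAM-style channel submodule. Assuming the channel submodule of the original GAM design, each spatial position's channel vector passes through a two-layer MLP (C → C/r → C), and the sigmoid of the result rescales the input. The ReLU hidden activation, the toy weights, and the function name `gam_channel_attention` are illustrative assumptions; the spatial submodule of GAM is omitted, and nothing here reproduces the paper's trained module or its exact placement in the head.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]            # weights indexed [out][in]
FeatureMap = List[List[List[float]]]  # activations indexed [channel][row][col]


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def _linear(x: Vector, w: Matrix, b: Vector) -> Vector:
    # Fully connected layer: y = W x + b.
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def gam_channel_attention(x: FeatureMap, w1: Matrix, b1: Vector,
                          w2: Matrix, b2: Vector) -> FeatureMap:
    """Rescale each channel of x by a sigmoid weight computed per spatial position.

    w1 has shape (C/r, C) and w2 has shape (C, C/r), i.e. the C -> C/r -> C MLP.
    """
    channels, height, width = len(x), len(x[0]), len(x[0][0])
    out = [[[0.0] * width for _ in range(height)] for _ in range(channels)]
    for i in range(height):
        for j in range(width):
            # Channel vector at (i, j): the "permuted" view of the feature map.
            v = [x[c][i][j] for c in range(channels)]
            hidden = [max(0.0, z) for z in _linear(v, w1, b1)]  # C -> C/r, ReLU
            logits = _linear(hidden, w2, b2)                     # C/r -> C
            for c in range(channels):
                out[c][i][j] = x[c][i][j] * _sigmoid(logits[c])
    return out


if __name__ == "__main__":
    # Toy input: C = 4 channels of size 2 x 2, reduction ratio r = 2.
    x = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(4)]
    w1 = [[0.0] * 4 for _ in range(2)]
    w2 = [[0.0] * 2 for _ in range(4)]
    y = gam_channel_attention(x, w1, [0.0] * 2, w2, [0.0] * 4)
    print(y[0])  # [[0.5, 1.0], [1.5, 2.0]]
```

Setting all weights and biases to zero gives a uniform weight of σ(0) = 0.5, which halves every activation; trained weights instead produce position- and channel-specific weights in (0, 1), which is what lets the module emphasize informative channels.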

Section 2 provides a detailed description of data collection, the simulation platform, the selection of typical items, and data augmentation.

Section 3 introduces the proposed model architecture, including the technical principles behind each module.

Section 4 outlines the experimental setup and comparison tests, followed by an analysis and comparison of the results.

Section 5 concludes the paper with a summary of this study and directions for future work.

5. Conclusions

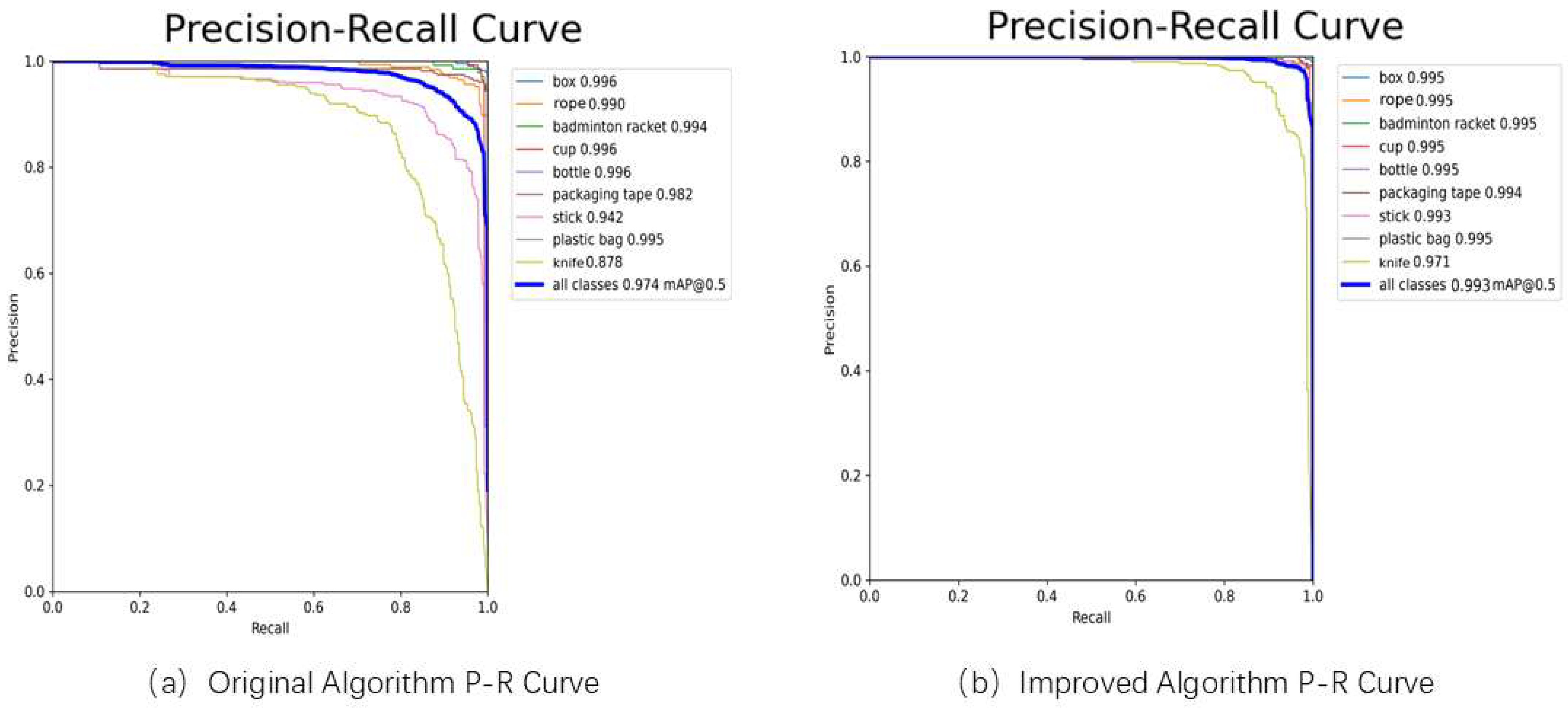

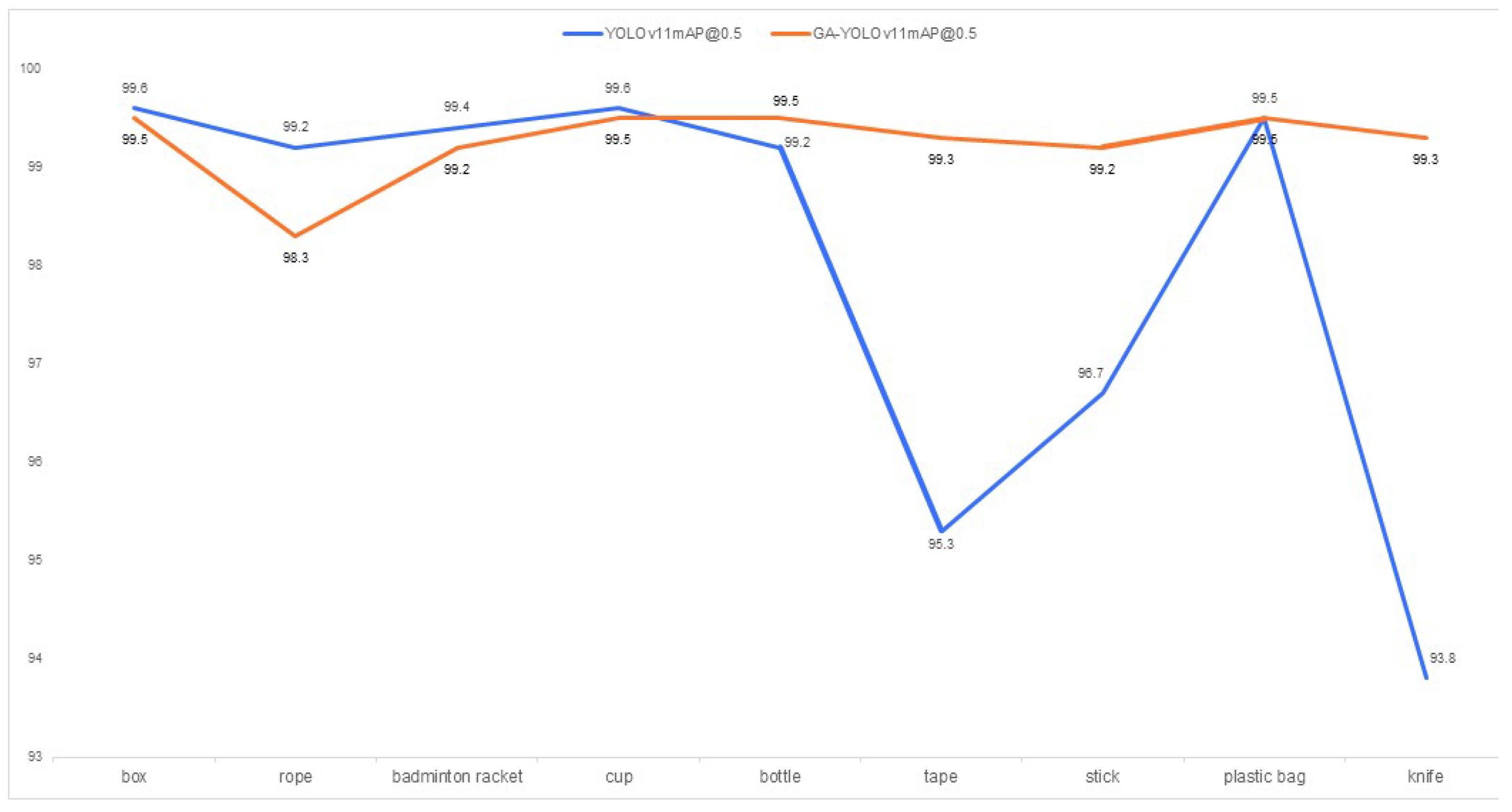

This article proposes GA-YOLOv11, an efficient, lightweight network model based on an enhanced YOLOv11 framework, for detecting and characterizing foreign objects in the gaps between subway train doors and platform screen doors. To address false positives and false negatives, as well as the large number of parameters in the original model, the model introduces the GhostConv module, which reduces the number of parameters and the computational load, lowers inference latency, and improves system response speed. Additionally, to enhance feature fusion and improve focus on small targets, the improved model incorporates the GAM module. The model improves precision, recall, mAP@0.5, and mAP@0.5:0.95 by 3.3%, 3.2%, 1.2%, and 3.5%, respectively, and reduces the number of parameters and GFLOPs by 18% and 7.9%, respectively, compared to the original YOLOv11n model, while maintaining the same model size. By optimizing the network architecture and redesigning its modules, this work significantly reduces the number of parameters and the computational complexity while maintaining high accuracy, achieving a lightweight network model. The enhanced model better captures foreign object features in subway environments, particularly when foreign objects resemble the background or appear under complex lighting, thereby improving detection accuracy in subway platform gap foreign object detection scenarios.
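For reference, the metrics reported above are conventionally defined as follows, assuming the evaluation follows the standard COCO-style definitions. Here TP, FP, and FN are true positives, false positives, and false negatives at a given IoU threshold, P(R) is the precision-recall curve, and N is the number of foreign object categories; in practice the AP integral is approximated by an interpolated sum whose exact form depends on the evaluation toolkit.

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad AP = \int_{0}^{1} P(R)\,dR$$

$$\mathrm{mAP@}0.5 = \frac{1}{N}\sum_{c=1}^{N} AP_{c}^{\,\mathrm{IoU}=0.5}, \qquad \mathrm{mAP@}0.5{:}0.95 = \frac{1}{10}\sum_{t \in \{0.50,\,0.55,\,\dots,\,0.95\}} \frac{1}{N}\sum_{c=1}^{N} AP_{c}^{\,\mathrm{IoU}=t}$$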

However, the model still exhibits certain limitations. Although the integration of GhostConv with the GAM module significantly reduces computational costs and enhances detection performance, the model’s accuracy may slightly decrease when dealing with foreign objects that are highly irregular in shape or have extremely low background contrast. Furthermore, the current model optimization primarily targets common foreign object categories, and its performance on rare or unseen target types requires further validation.

With the continuous development of urban rail transit, automatic detection of foreign objects between subway platform doors and train doors has become increasingly important, and the model proposed in this paper has broad application prospects. Future research will focus on collecting foreign object intrusion data in real subway platform environments to build a large-scale dataset, thereby improving the algorithm's accuracy and robustness. Research will also aim to conduct large-scale field tests in actual subway environments to validate and optimize the model's performance under real-world operating conditions.