1. Introduction

Object detection is a cornerstone of computer vision, and its historical trajectory closely follows the evolution of deep learning paradigms. The field has progressed from early detectors that relied on handcrafted features through convolutional neural network (CNN)-based architectures to the recent emergence of Transformer-based models, steadily improving in accuracy, speed, and generalisation. Within this context, remote sensing object detection (RSOD) supports vital tasks such as urban planning, resource exploration, environmental monitoring, and national security, and it therefore continues to attract significant research and practical attention [

1].

When state-of-the-art detectors designed for natural images are applied directly to remote sensing imagery (RSI), their performance is often constrained by domain-specific factors, including sensor noise, cluttered backgrounds, high semantic similarity between targets and surroundings, extreme scale variation, and the high density of small objects.

Mainstream CNN and Transformer detectors learn and represent features solely in the spatial domain, overlooking the information embedded in the frequency domain. According to Fourier theory, low-frequency components encode global contours and smooth regions, whereas high-frequency components capture edges and textures [

2]. Small objects in RSI correspond mainly to high-frequency signals, and cluttered backgrounds exhibit distinctive frequency-domain patterns. Moreover, deep networks show a spectral bias [

3]; they fit low-frequency information first, which hinders the modelling of high-frequency details. A detector that seamlessly fuses spatial and frequency cues is therefore likely to overcome these intrinsic limitations and advance high-precision RSOD.

Motivated by this insight, and taking the real-time end-to-end detector RT-DETR [

4] as our baseline, we develop MSRS-DETR, a multi-scale detector tailored for RSI.

The main contributions of this work are as follows:

An efficient end-to-end framework for RSOD that integrates frequency-domain analysis into the backbone, encoder, and feature-fusion stages, thereby improving multi-scale detection in complex scenes.

C2fFATNET, a lightweight residual backbone equipped with frequency attention, which reduces parameters while enhancing the discriminative power of extracted features.

An Entanglement Transformer Block (ETB) that performs joint frequency–spatial attention to distil robust high-level semantics and suppress background interference.

S2-CCFF, a shallow-feature-augmented bidirectional fusion addition that preserves high-resolution detail and boosts the recall of small targets while introducing modest latency overhead along the fusion path.

The remainder of this paper is organised as follows.

Section 2 reviews related work.

Section 3 details the proposed MSRS-DETR, including a lightweight backbone refinement that augments the C2f/RepC3 blocks to better preserve fine-grained details, the Entanglement Transformer Block (ETB) for jointly modelling frequency and spatial cues, and the S2-CCFF bidirectional fusion across scales.

Section 4 briefly summarises the experimental setup and datasets and reports the main quantitative results with concise qualitative examples.

Section 5 concludes this paper and discusses limitations and future directions.

2. Related Work

2.1. CNN-Based Remote Sensing Object Detection

Since the rise of deep learning, CNN-driven algorithms have rapidly dominated object detection and have evolved along two main trajectories. The first comprises two-stage detectors typified by the R-CNN family [

5,

6,

7], which follow a proposal-then-refinement paradigm. By generating high-quality candidate boxes through a region proposal network, these methods achieve strong accuracy, though their multi-stage pipelines restrict real-time deployment. The second trajectory favours speed and is represented by one-stage detectors such as the You Only Look Once (YOLO) series [

8]. By eliminating the proposal stage and casting detection as direct regression and classification, YOLO has, through successive iterations, struck an exceptional balance between speed and precision. The latest version, YOLOv8 [

9], introduces a more efficient C2f backbone, an anchor-free detection head, and advanced loss functions, making it a widely adopted baseline in both academia and industry. To tackle the intrinsic multi-scale challenge of CNNs, Feature Pyramid Networks (FPNs) [

10] add a top-down pathway with lateral connections, merging deep semantic information with shallow spatial detail and becoming a standard component of modern detectors.

When these advanced CNN detectors are applied directly to remote sensing imagery, their inherent limitations become evident. The local receptive field of convolutions hampers the capture of the global context and long-range dependencies required for large-scene interpretation. Repeated down-sampling, essential for building deep semantic features, inevitably discards information; the faint signatures of small objects, common in RSI, are easily overwhelmed during propagation. Consequently, the upper bound of CNN performance in complex remote sensing scenarios is constrained by the architecture itself, motivating research into paradigms with stronger global modelling capacity.

In recent years, many remote sensing detectors have extended fast YOLO-style baselines to address domain challenges, such as small, sparse targets and cluttered backgrounds, while keeping real-time efficiency. Representative examples include MSA-YOLO, which introduces multi-scale strip attention, GS-PANet fusion, and a Wise-Focal CIoU loss to suppress background noise and balance sample contributions [

11]; YOLO-RS, which couples ASFF/OSPP-like aggregation with a lightweight head to improve accuracy and throughput on aerial benchmarks [

12]; and YOLO-RSA, a multi-scale design specialised for ship scenarios in optical imagery [

13]. Under adverse weather, CM-YOLO further applies component-decoupling background suppression and local–global semantic mining to maintain robustness in cloud/mist scenes [

14]. Complementary surveys synthesise that attention modules, lightweight backbones, and enhanced multi-scale fusion (e.g., ASFF/BiFPN variants) are effective methods for improving detection in RSI without sacrificing speed [

15,

16].

2.2. Transformer-Based Remote Sensing Object Detection

To overcome the locality of CNNs, the Transformer architecture, originally devised for natural language processing, has been introduced into computer vision and is now reshaping object detection. Starting with the Vision Transformer (ViT) [

17], its self-attention mechanism enables direct interactions between arbitrary pixels, yielding a true global receptive field.

DETR (Detection Transformer) [

18] is the seminal work in this line. By adopting an encoder–decoder Transformer and learnable object queries, DETR reformulates detection as an end-to-end set prediction task, discarding anchors and non-maximum suppression in favour of bipartite matching and set loss supervision. Despite its elegance, the first version converges slowly and struggles with small objects. Deformable DETR [

19] addresses these issues by introducing deformable attention, which focuses computation on a sparse set of sampling points, greatly reducing complexity, accelerating convergence, and improving small-object recall. To extend DETR’s end-to-end advantages to real-time scenarios, RT-DETR [

4] employs a lightweight hybrid encoder comprising a single Transformer layer on top of deep CNN features and adopts uncertainty-minimal query selection. It thus achieves very high throughput while maintaining accuracy, performing competitively with, and in many cases surpassing, heavily optimised YOLO variants on the reported benchmarks, and establishing a strong standard for real-time end-to-end detection.

Although Transformer-based detectors excel at global modelling, several limitations remain in remote sensing. First, they typically require large-scale pre-training, whereas well-annotated RSI datasets are scarce. Second, in hybrid designs such as RT-DETR, image features still undergo multiple CNN down-sampling operations before entering the Transformer, so the loss of small-object information persists [

20], leaving the subsequent attention layers with insufficient detail.

Building on the real-time encoder–decoder paradigm, RS-DETR adapts RT-DETR to aerial scenarios via cross-scale gating, EBiFPN-style fusion, and refined IoU objectives, reporting improved accuracy while preserving throughput on representative benchmarks [

21]. Recent surveys further emphasise that to stabilise optimisation and accommodate diverse object scales in remote sensing, Transformer components are increasingly combined with CNN pyramids and domain-tailored fusion necks [

16]. Overall, these advances suggest that carefully designed fusion and loss formulations are key to transferring Transformer-style global reasoning to remote sensing imagery.

3. Methodology

To address the widespread challenges in remote sensing imagery of detecting multi-scale objects, especially small targets, coping with heavy background clutter, and overcoming the limited representational capacity of existing end-to-end detectors, we build on the high-performance real-time detector RT-DETR [

4] and propose MSRS-DETR, a new multi-scale remote sensing detection model that revises the backbone, encoder, and decoder designs.

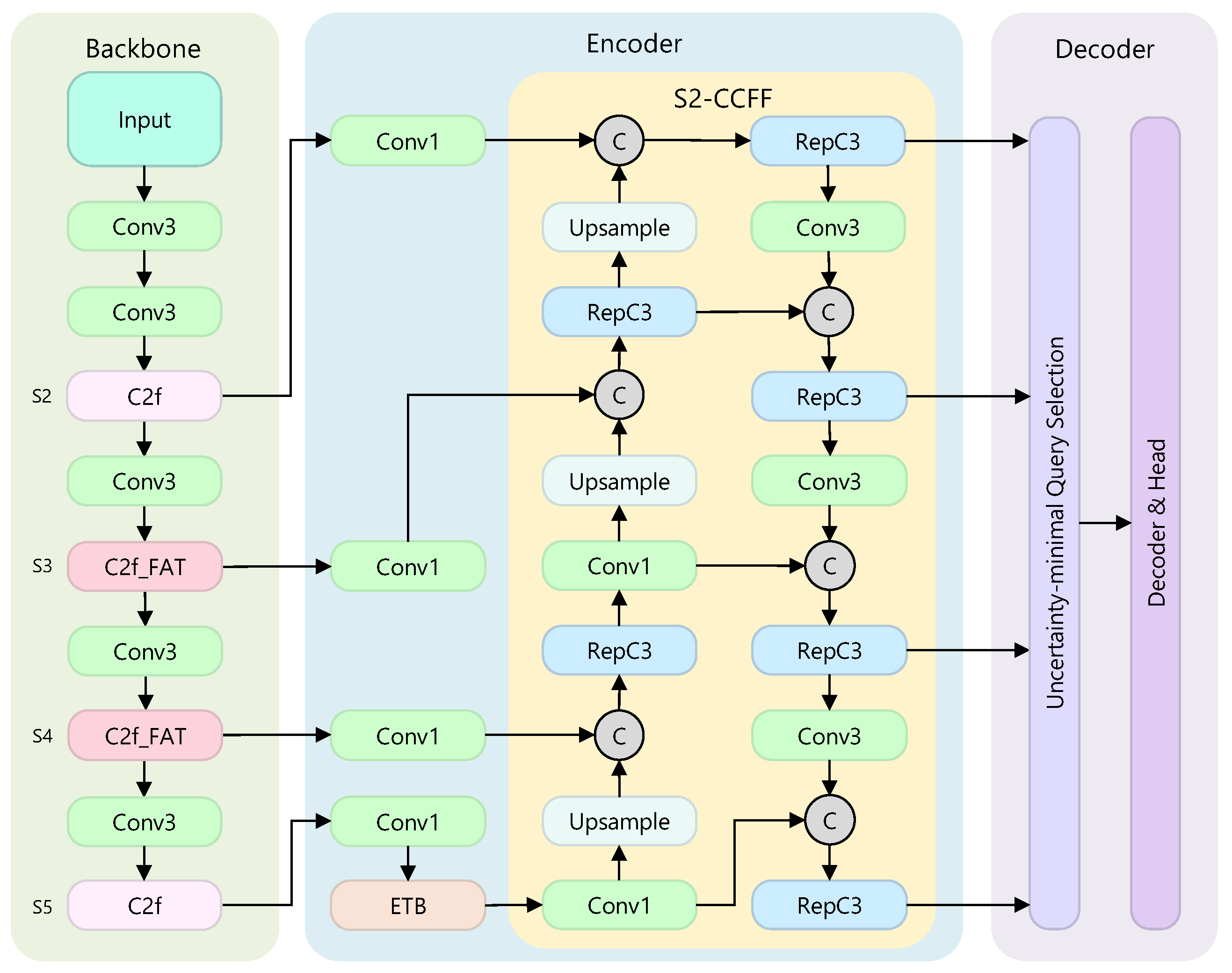

Figure 1 gives an overview of the architecture, which comprises a lightweight frequency-aware residual backbone C2f

FATNET, an Entanglement Transformer Block (ETB) for frequency–spatial feature extraction, and an S2-CCFF fusion module that leverages shallow features.

3.1. Backbone

The backbone underpins the detector, and the quality of its features directly sets the performance ceiling. An effective backbone should extract discriminative multi-scale features while keeping computational cost low. In MSRS-DETR we introduce C2f

FATNET, a frequency-aware backbone illustrated in

Figure 1. Classic C2f blocks handle the shallow S2 and deepest S5 stages for generic features, whereas the middle stages S3 and S4 adopt the new C2f

FAT blocks for stronger discrimination.

The C2f block, derived from CSPNet [

22], splits the input along channels into a shortcut branch and a bottleneck branch, then concatenates and fuses them with a

convolution, thus enriching gradients and reducing redundancy. However, its bottleneck relies solely on standard convolutions, a spatially local operation that lacks long-range context.

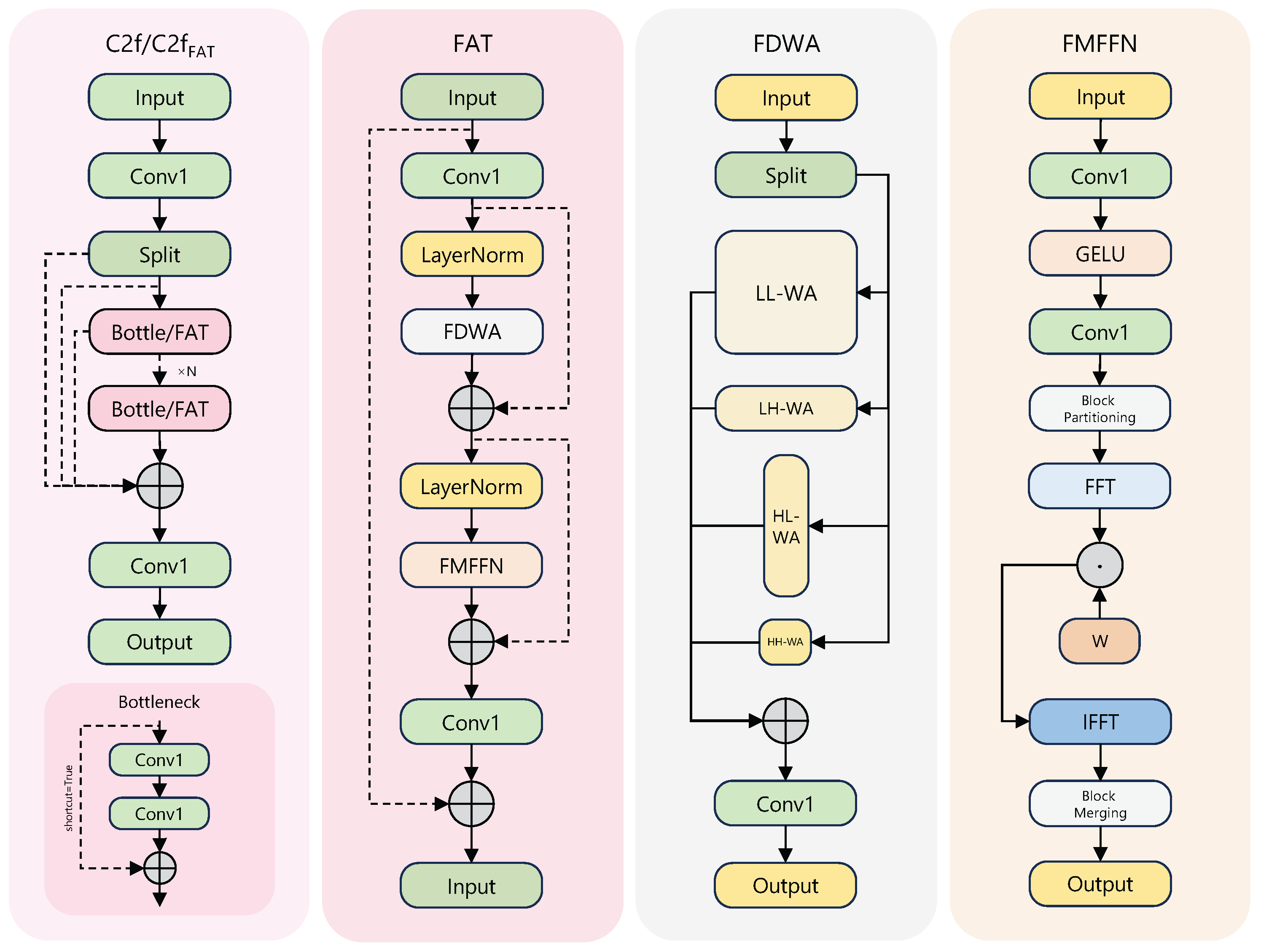

We therefore replace the bottleneck with a Frequency-Aware Transformer (FAT) module [

23], which extends analysis from the spatial domain to the joint frequency–spatial domain. As shown in

Figure 2, FAT contains Frequency-Decomposition Window Attention (FDWA) and a Frequency-Modulation Feed-Forward Network (FMFFN).

FDWA runs four attention windows of different shapes in parallel: a large square window for low-frequency context (LL-WA), a small square window for high-frequency detail (HH-WA), and two rectangular windows for vertical (HL-WA) and horizontal (LH-WA) anisotropic features. Thus, a single block can decompose multi-scale and multi-directional frequency components that describe oriented ships or textured vegetation.

FMFFN adaptively refines the features as

where

is the input of the block,

and

denote block partition and merge, and

,

are the fast Fourier transform and its inverse. The learnable filter

W modulates the spectrum, amplifying informative frequencies and suppressing noise.

By integrating FAT, the C2fFAT block evolves from a purely spatial extractor into a dual-domain module. Despite higher internal complexity than a single bottleneck, its stronger representation allows fewer parameters than stacking multiple convolutions and furnishes richer cues for downstream detection, particularly where pure spatial features fall short.

3.2. Encoder

3.2.1. Entanglement Transformer Block

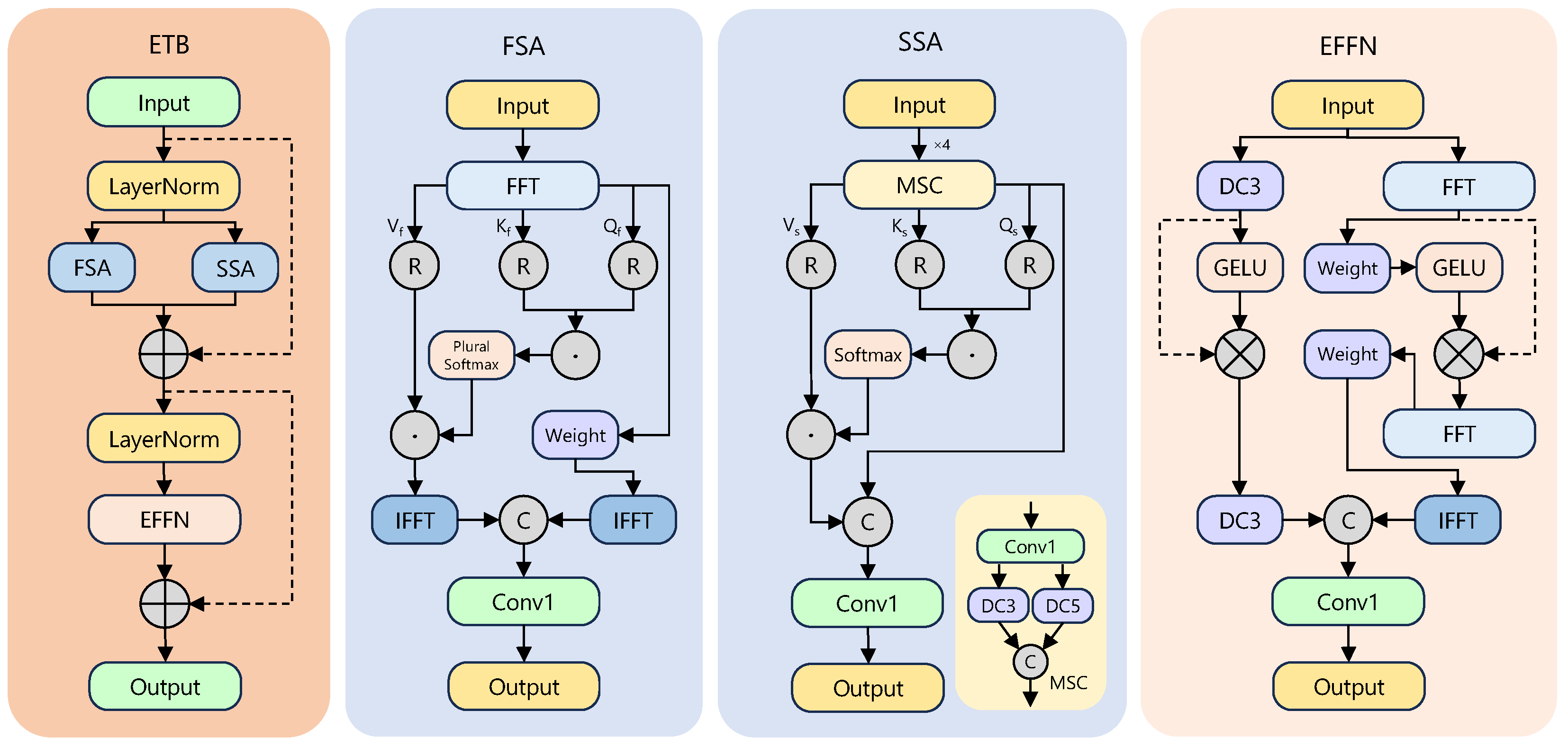

To extract robust features when targets and background share high visual similarity, we append an Entanglement Transformer Block (ETB) [

24] after the deepest backbone stage S5. ETB jointly models features in frequency and spatial domains through parallel processing and deep interaction.

Figure 3 outlines its three parts: frequency self-attention (FSA), spatial self-attention (SSA), and an Entangled Feed-Forward Network (EFFN). After layer normalisation, the input is sent to FSA and SSA in parallel; their outputs are fused, renormalised, and fed to EFFN.

FSA captures global frequency dependencies. The input is linearly projected, transformed with FFT, and produces complex-valued queries , keys , and values . The resulting attention map is split into real and imaginary parts, each soft-maxed and recombined, enabling the model to correlate periodic or textured patterns across the spectrum before transformation back to the spatial domain via IFFT.

SSA models local spatial context efficiently. We employ multi-scale depthwise separable convolution (MSC) to generate , , and , focusing attention on geometry and local relations.

EFFN performs two-stage cross-domain exchange. First, the fused features are projected separately to spatial and frequency domains. Second, the two outputs are concatenated and re-entered into parallel branches for deeper interaction, forcing repeated alignment between complementary cues. When spatial details are obscured, frequency patterns such as texture still guide discrimination, yielding robust representations with negligible parameter overhead. Parallel FSA and SSA also exploit GPU concurrency for faster inference.

3.2.2. S2-Enhanced CNN-Based Cross-Scale Feature Fusion

Severe scale variation, especially tiny targets buried by repeated down-sampling, motivates the S2-Enhanced CNN-based Cross-Scale Feature Fusion (S2-CCFF). Using a bidirectional topology, it exchanges information among backbone outputs . A top-down semantic path up-samples S5 to S4, concatenates with , and aggregates them with a RepC3 block, iterating to S2. The fused high-resolution layer is then the source of a bottom-up localisation path. After a stride-3 convolution, is down-sampled, fused with the semantic output at S3, and processed again by RepC3, propagating precise edges upward while semantic cues flow downward. Thus, each scale receives both rich semantics and fine localisation, boosting small-object detection.

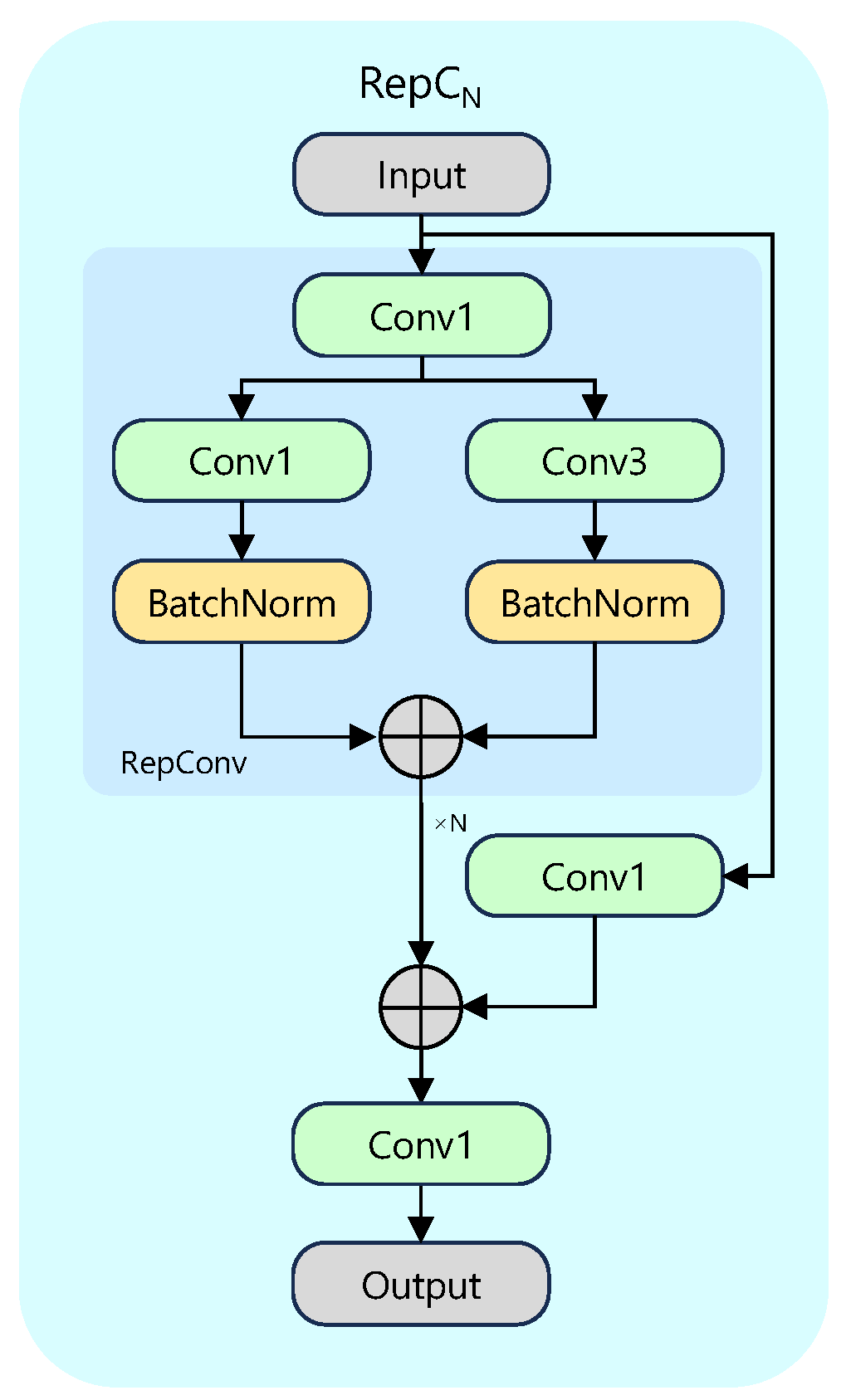

RepC3 is chosen as the fusion unit, see

Figure 4. A fixed receptive field limits ordinary convolutions, whereas RepC3 leverages structural re-parameterisation [

25]. During training, its RepConv branch mates a

, a

, and an identity pathway, akin to an ensemble of receptive fields. At deployment, these branches are algebraically folded into a single

convolution by padding the

kernel and merging batch-normalisation statistics, giving standard

cost yet richer representation.

3.3. Decoder

To remain efficient and free of non-maximum suppression, the decoder adopts the query selection strategy from the baseline RT-DETR [

4]. The mechanism, referred to as uncertainty-minimal query selection (UmQS), is designed to provide high-quality initial queries to the decoder. It operates by first using an auxiliary prediction head on the encoder’s output features to generate initial classification scores. Then, the features corresponding to the top-K highest scores are selected to serve as the initial object queries for the decoder layers. This approach ensures that the decoder focuses its attention on feature map regions with a high probability of containing objects, thereby improving both convergence speed and overall efficiency.

These selected queries pass through several identical decoder layers, each applying self-attention to suppress redundancy and cross-attention to gather evidence from encoder features. Training is supervised by a set-to-set loss with Hungarian matching between

N predictions and

M ground truths:

where

is the focal loss [

26] to tackle class imbalance, and

and

[

27] supervise bounding box regression. Losses are applied to every decoder layer and summed, realising an end-to-end pipeline without postprocessing.

4. Experiments and Results

4.1. Experimental Setup

4.1.1. Datasets

To conduct a comprehensive and multi-faceted evaluation of the proposed MSRS-DETR model, we selected two representative public benchmarks for remote sensing ship detection, namely, HRSC2016 and ShipRSImageNet. These datasets span scenarios from simple to highly complex, enabling an effective assessment of the model’s accuracy, robustness, and generalisation, especially for multi-scale and small-object detection.

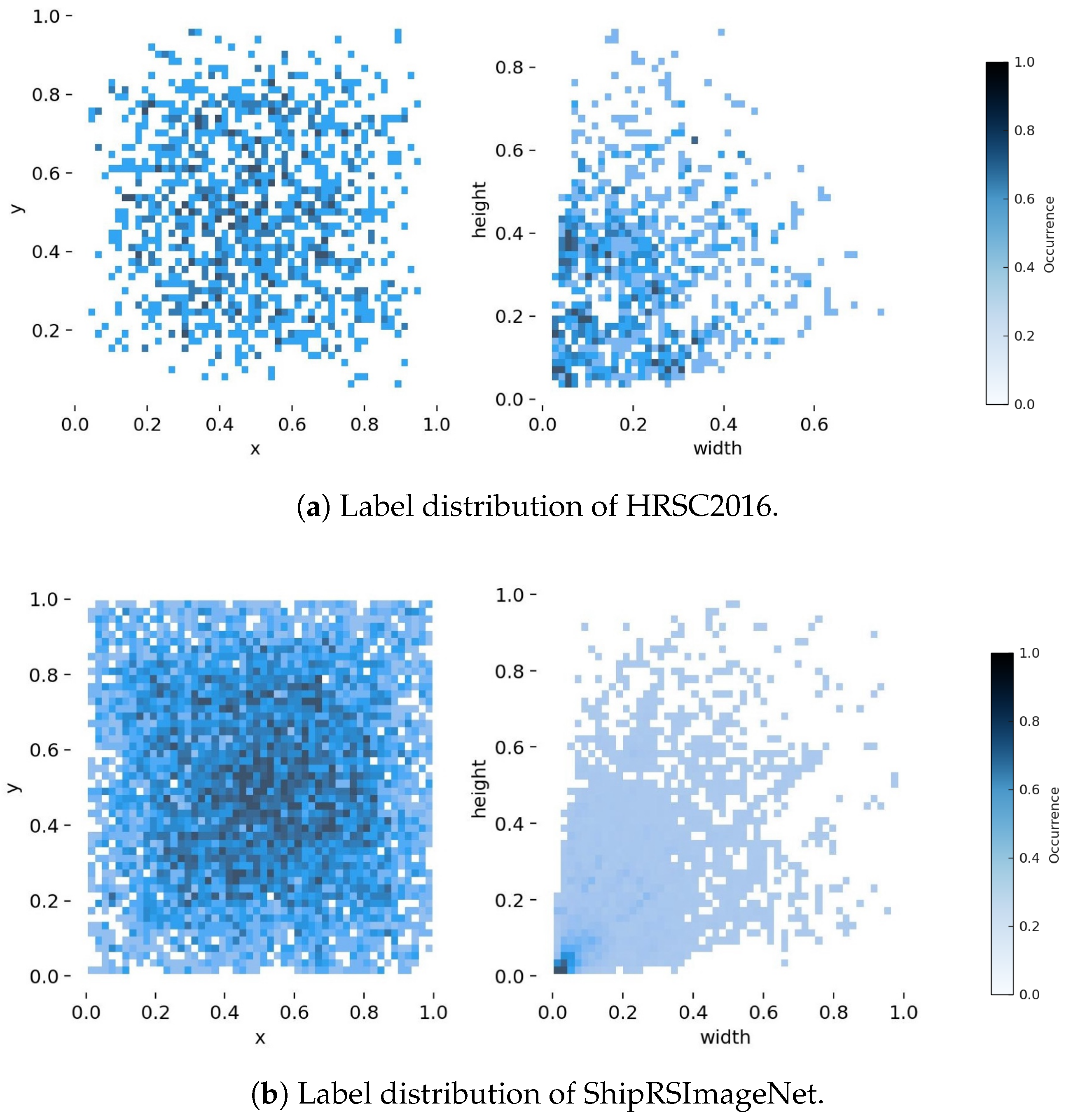

Figure 5 visualises the differences in target scale and spatial distribution between the two datasets.

HRSC2016 [

28] is a classic benchmark for ship recognition in high-resolution optical remote sensing imagery. It contains 1061 images collected from six major ports, officially split into 436 training, 181 validation, and 444 test images, with 2976 annotated ship instances in total. Resolutions range from 0.4 m to 2.0 m and image sizes vary from

to

pixels. Images cover open-sea scenes with simple backgrounds and inshore-port scenes with highly cluttered backgrounds, thereby testing detection performance under diverse conditions. As shown in

Figure 5a, the target-size distribution is relatively uniform, making HRSC2016 suitable for evaluating multi-scale detection. Imagery is derived from Google Earth across six harbours; the dataset records harbor, data source, image date, geographic coordinates, resolution layer, and scale.

ShipRSImageNet [

29] is larger, more fine-grained, and more diverse. It comprises 3435 images with 17,573 ship instances sourced from various remote sensing platforms worldwide. Compared with HRSC2016, its target sizes vary more widely and include many small objects (

Figure 5b). Complex backgrounds and heterogeneous scenes pose additional challenges for feature representation. Consequently, ShipRSImageNet is used in ablation studies to verify the effectiveness and generalisation of each proposed module for small-object detection in complex scenes. Images are collected from multiple sources including xView (WorldView-3, 0.3 m GSD, chips of

), HRSC2016 and FGSD, the Airbus Ship Detection Challenge, and Chinese satellites such as GaoFen-2 and JiLin-1; the official split is 2198/550/687 (train/validation/test).

4.1.2. Evaluation Metrics

Model performance is assessed with a set of accuracy and efficiency metrics. Accuracy follows the COCO protocol and reports precision (P), recall (R), and mean Average Precision (mAP). Efficiency focuses on model complexity and inference speed, quantified by the number of parameters (Params) and theoretical computation (GFLOPs). Definitions are summarised in

Table 1. For both HRSC2016 and ShipRSImageNet, detections are evaluated using horizontal bounding boxes (HBB) parameterised as

, with standard axis-aligned IoU. Unless otherwise noted, we report AP

50, AP

75, and mAP

50:95.

4.1.3. Implementation Details

All experiments were run on a single GeForce RTX 4090 (NVIDIA, Santa Clara, USA) to ensure fair comparison. The software stack consisted of Ubuntu 22.04, PyTorch 2.4.0, CUDA 12.4, and Python 3.12.10. Training employed the AdamW optimiser. Input images were resized to , the batch size was 8, and training lasted 500 epochs with a cosine-annealing learning-rate schedule. Inference speed (FPS) was measured on single images (batch size = 1) and averaged over the entire test set; preprocessing was included. Key settings were as follows. We trained for 500 epochs with a batch size of 8 at an input size of . We used AdamW with an initial learning rate of , momentum of , and weight decay of . Data augmentation applied horizontal flipping with probability 0.5 together with random translation of 0.1 and random scaling of 0.5. We did not use rotation, mosaic, mixup, or copy–paste, and automatic mixed precision was off. We used an IoU threshold of 0.7 and limited the number of detections to 300. We fixed the random seed to 0, enabled deterministic training, and used four data-loading workers.

4.2. Comparative Experiments

To validate the overall capability of MSRS-DETR, we conducted a horizontal comparison on the HRSC2016 dataset against several mainstream detectors, including our baseline model, RT-DETR-R18. The experimental results are presented in

Table 2.

The experimental results demonstrate that our proposed MSRS-DETR strikes an exceptional balance between accuracy, efficiency, and model complexity, yielding significant performance gains. Compared to the baseline RT-DETR-R18, MSRS-DETR achieves a higher core mAP50-95 score along with improvements in mAP50 and mAP75, all with a remarkable 29.1% reduction in parameters. This result showcases a significant performance boost alongside model lightweighting. It is noteworthy that while the theoretical computation (GFLOPs) of MSRS-DETR is higher than the baseline, our subsequent ablation study shows that its practical inference speed is superior. When compared to YOLOv8m, which has similar computational load, MSRS-DETR achieves a 0.18% (HRSC2016) and 0.28% (ShipRSImageNet) higher mAP50-95 with fewer GFLOPs. Compared to YOLOv8s, which has a similar parameter count, MSRS-DETR surpasses it by 2.59% (HRSC2016) and 4.92% (ShipRSImageNet) in the core mAP50-95 metric. Furthermore, when measured against the top-performing RT-DETR-R101, MSRS-DETR uses only 18.9% of its parameters and 29.8% of its GFLOPs while lagging by a mere 0.55% in mAP50-95 on HRSC2016 and exceeding it by 1.38% on ShipRSImageNet. Evidently, MSRS-DETR maintains high accuracy while significantly reducing model complexity and computational cost, demonstrating its outstanding performance on multi-scale ship remote sensing object detection tasks.

4.3. Ablation Study

To clearly validate the individual effectiveness and synergistic effects of the proposed C2f

FATNET backbone, ETB module, and S2-CCFF module, we conducted a series of ablation experiments. Starting with RT-DETR-R18 as the baseline, we progressively integrated our proposed components and evaluated the performance on both the HRSC2016 and ShipRSImageNet datasets. The results are detailed in

Table 3.

The results of the ablation study show that isolating the introduction of the C2fFATNET backbone reduces the model’s parameter count from 19.9 M to 15.2 M (a 23.6% decrease) and lowers GFLOPs from 56.9 G to 51.3 G. While making the model more lightweight, the core mAP50-95 metric improved by 0.98% on HRSC2016 and 0.34% on ShipRSImageNet. This confirms that the FATBlock-enhanced C2fFATNET, with its powerful frequency-domain feature extraction, can achieve more efficient multi-scale feature extraction in RSI with fewer parameters and lower computational cost, thus achieving performance gains alongside backbone lightweighting.

When we introduced the ETB module for feature extraction at the top of the encoder, the core mAP50-95 metric improved by 0.67% and 0.34% on the two datasets, respectively, with almost no change in parameters or GFLOPs. Notably, the practical inference speed (FPS) increased by a remarkable 52.1% on HRSC2016 and 40.2% on ShipRSImageNet. This provides strong evidence that ETB’s unique frequency–spatial entanglement mechanism can capture more discriminative deep features, and its highly parallel structure effectively utilises the computational power of modern GPUs, leading to a substantial boost in practical inference efficiency without increasing the theoretical computational burden.

The isolated introduction of the S2-CCFF module yields the most significant accuracy gains on the more challenging ShipRSImageNet dataset, which is rich in small targets, with an improvement of 2.13% in mAP50-95. Although this module increases GFLOPs due to processing a higher-resolution feature map, the resulting accuracy benefit is substantial. This demonstrates that injecting the high-resolution details from the S2 layer into the bidirectional fusion path is an effective strategy for preserving and utilising key small-object cues and reducing the missed detection rate.

The full MSRS-DETR model, combining all three modules, achieved the best mAP

50-95 performance across all configurations on both datasets, improving upon the baseline by 1.69% and 2.16%, respectively. The final performance gain surpasses that of any partial combination, indicating a strong synergistic effect among our designed components. C2f

FATNET provides richer features at the source, the ETB module refines deep semantics, and the S2-CCFF module ensures effective detail fusion, together forming an efficient and powerful feature processing pipeline. With a 29.1% reduction in parameters compared to the baseline, the MSRS-DETR model achieves higher detection accuracy and faster inference speed (FPS increased by 12.4% and 8.4% on the two datasets), striking an optimal balance between model complexity, inference efficiency, and detection performance. While the ETB’s parallel feature extraction demonstrably improves runtime and accuracy in isolation (

Table 3), the end-to-end speedup of the full model is constrained by the S2-CCFF detail-extraction and fusion strategy on the high-resolution path; consequently, overall throughput improves only modestly over the baseline but yields the best accuracy–efficiency trade-off.

4.4. Visual Results

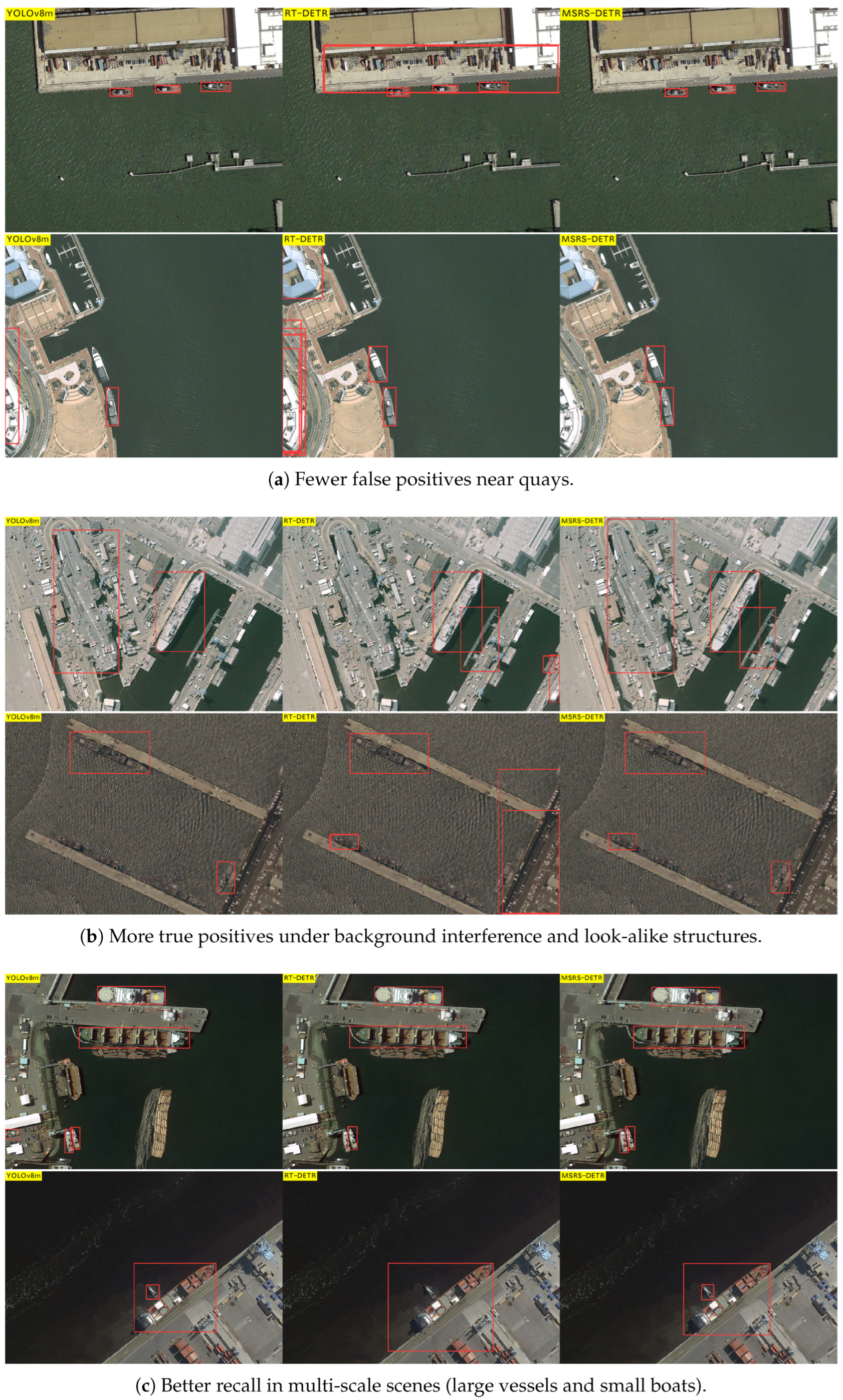

We further performed qualitative analyses to visualise improvements in challenging scenarios.

Figure 6 compares MSRS-DETR with the baseline on HRSC2016, illustrating reductions in false positives, increased true positives in cluttered backgrounds, and superior multi-scale detection. Concretely,

Figure 6a shows that MSRS-DETR yields fewer onshore false positives (e.g., quayside structures),

Figure 6b demonstrates more reliable recognition under background interference and look-alike structures where the baselines exhibit both false alarms and misses, and

Figure 6c highlights better recall of small objects in multi-scale scenes.

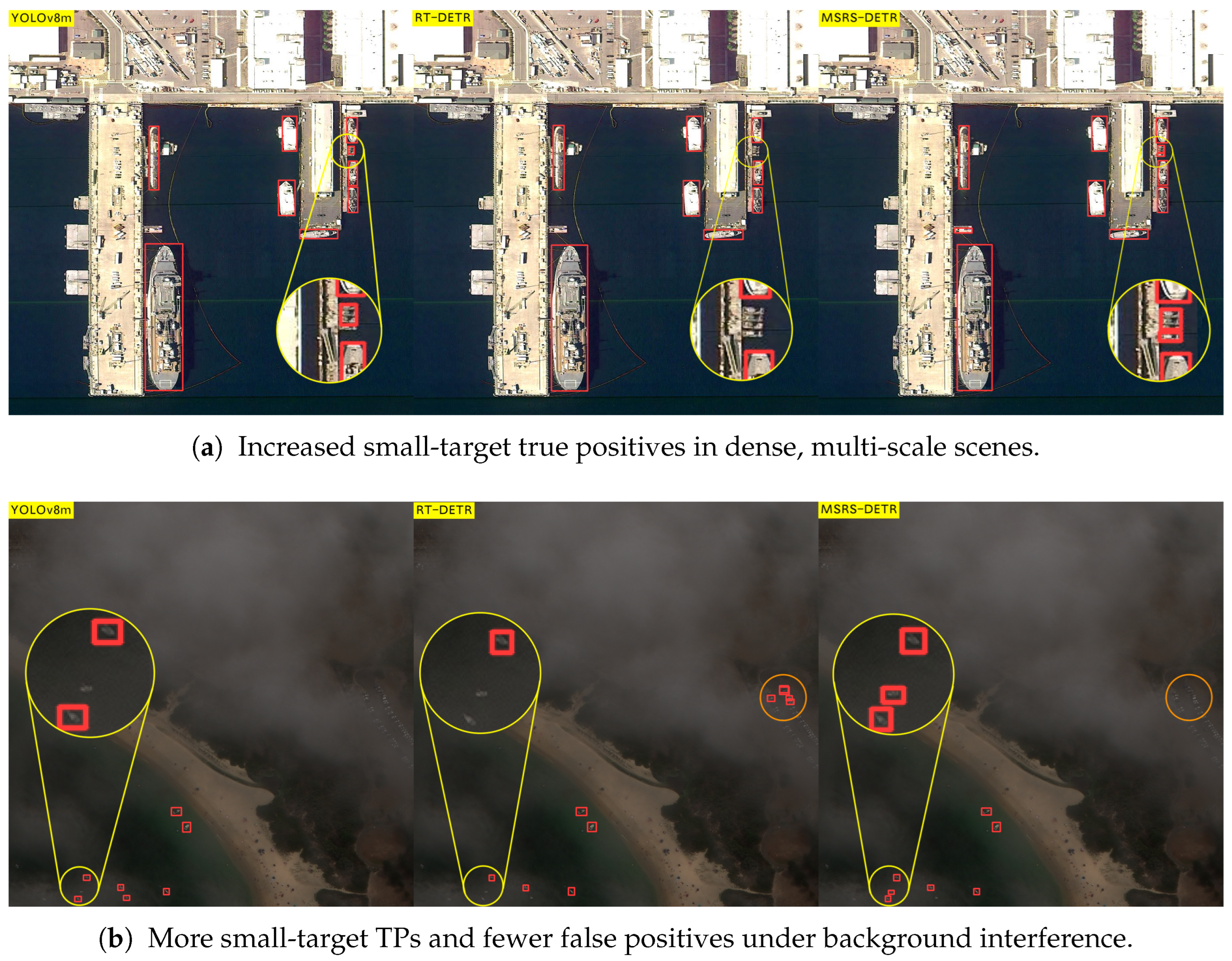

Figure 7 shows similar advantages on ShipRSImageNet, particularly for dense small targets, evidencing the efficacy of S2-CCFF. Specifically, in

Figure 7a YOLOv8m exhibits imprecise delineation of small targets and RT-DETR shows higher miss rates, whereas MSRS-DETR localises dense, multi-scale vessels more accurately; in

Figure 7b, under background interference, MSRS-DETR achieves more true positives with fewer false positives than the baselines. These visual results corroborate the quantitative findings and highlight the balanced accuracy, speed, and efficiency achieved by MSRS-DETR.

4.5. Discussion

MSRS-DETR improves detection in cluttered ports and dense small-object scenes by aligning frequency-aware feature extraction with an NMS-free DETR pipeline. The gains follow the roles of the three components and the patterns in

Section 4.4. C2f

FATNET strengthens fine-grained cues at the source and stabilises optimisation under heterogeneous backgrounds, which supports higher recall and fewer background-induced false alarms. ETB couples frequency and spatial information and reduces spectral bias, which preserves high-frequency detail that characterises small vessels and textured surroundings and also explains the strong runtime gains when ETB is used alone. S2-CCFF injects shallow high-resolution signals and propagates edges upward while semantics flow downward, which increases small-object recall in crowded regions. This benefit introduces extra work along the fusion path and bounds the end-to-end speedup once all modules are assembled. The trade-off is consistent with the ablation in

Table 3 and with the qualitative panels that show fewer quayside false positives and better localisation of small boats under interference.

The component-to-failure-mode mapping is clear in practice. Extremely low-contrast targets can still be missed when wakes or shadows dominate the local spectrum, and S2-CCFF reduces this miss rate by recovering edges while ETB sharpens texture cues. Look-alike human-made structures can trigger false alarms, and C2fFATNET improves class separation through more selective early features while ETB suppresses background leakage. Very large targets can be partially detected when effective context is limited, and the multi-scale route mitigates this behaviour by passing both localisation detail and deep semantics across scales. Together, these mechanisms produce the accuracy and efficiency balance reported for the full model.

The improvements we report come from the frequency–spatial fusion architecture that operates upstream of the box head and is therefore representation agnostic. To isolate architectural effects and preserve internal validity with the RT DETR baseline, we adopt horizontal bounding boxes, which keep Hungarian matching, losses, and uncertainty minimal query selection unchanged. Introducing oriented boxes would add angle parameterisation, periodicity handling, and rotated IoU and assignment, which could confound attribution of gains to our modules. HRSC2016 and ShipRSImageNet both natively support horizontal annotations and evaluation, which allows stable and directly comparable reporting without redesigning protocols. In future work we will add oriented detection and oriented metrics to complete the picture and to compare against methods that use rotated heads.

MSRS-DETR is positioned in the real-time end-to-end line that begins with DETR set prediction without NMS and extends to RT DETR for throughput. Our pathway differs from common FPN or EBiFPN necks that fuse only in the spatial domain. Frequency analysis appears in the backbone through FAT and in the encoder through ETB, so the fusion module receives inputs whose fine detail is already enhanced rather than recovered post hoc. The shallow bidirectional fusion is instantiated by RepCN with in our design and targets small-object detail while leaving the decoder unchanged in the RT DETR sense. This division of labour helps explain why accuracy improves most on ShipRSImageNet, where tiny targets dominate, while the end-to-end property is preserved.

The framework is practical with public cloud-hosted imagery and can run economically at scale. A typical workflow accesses Sentinel 2 L2A and Landsat ARD in place, applies quality masks such as the SCL layer for Sentinel 2 and QA bits for Landsat, normalises radiometry when mixed sensors are used, and windows space and time to produce chips at the detector input size. This pre-detector adaptation layer documents provenance and explains tolerance to varied spatial resolutions, and the detector then serves as the core of a multi-scale pipeline on cloud-native platforms with object storage and on-the-fly tiling and masking. We expect these steps to generalise beyond ships and to broaden usage in large-area monitoring where repeated coverage and low cost are important.

5. Conclusions

To overcome the performance bottleneck caused by the exclusive use of spatial information in existing remote sensing detectors, we have presented MSRS-DETR, a paradigm that deeply fuses spatial and frequency cues. The proposed model integrates a frequency-aware backbone network C2fFATNET, an Entanglement Transformer Block (ETB), and a shallow-feature-enhanced bidirectional fusion framework S2-CCFF into the backbone, encoder, and fusion stages, respectively. This design systematically alleviates small-object information loss and background interference from the initial feature extraction stage through multi-scale feature fusion.

Experiments on the HRSC2016 and ShipRSImageNet benchmarks show that MSRS-DETR markedly reduces parameter count while simultaneously improving detection accuracy and inference speed, confirming the effectiveness of joint spatial–frequency fusion in complex remote sensing scenarios for multi-scale ship remote sensing object detection. While the proposed method shows strong performance on these ship benchmarks, future work should focus on validating this approach on a wider variety of object classes (e.g., vehicles, aircraft) to rigorously assess its generalisability. This balanced outcome is achieved through the synergy of its components: the C2fFATNET backbone reduces parameters while enriching features, the ETB block significantly boosts practical inference speed, and the S2-CCFF module trades some of that speed gain for critical accuracy improvements on small targets. Future work will explore lighter and more adaptive spatial–frequency co-learning strategies, aiming to apply the dual-domain feature extraction and fusion scheme more efficiently to even more complex, multi-scenario, and multi-scale remote sensing detection tasks.

Author Contributions

Conceptualisation, H.H.; methodology, J.Y.; software, J.Y.; validation, S.F.; formal analysis, H.H.; investigation, J.Y.; resources, H.H.; data curation, S.F.; writing—original draft preparation, J.Y.; writing—review and editing, H.H.; visualisation, S.F.; supervision, H.H.; project administration, H.H.; funding acquisition, H.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

We would like to thank our students for their work during the algorithm testing.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28. [Google Scholar] [CrossRef]

- Liu, J.; Wang, Y.; Cao, Y.; Guo, C.; Shi, P.; Li, P. Unified Spatial-Frequency Modeling and Alignment for Multi-Scale Small Object Detection. Symmetry 2025, 17, 242. [Google Scholar] [CrossRef]

- Xu, Z.-Q.J.; Zhang, Y.; Luo, T.; Xiao, Y.; Ma, Z. Frequency Principle: Fourier Analysis Sheds Light on Deep Neural Networks. Commun. Comput. Phys. 2020, 28, 1746–1767. [Google Scholar] [CrossRef]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2023, arXiv:2304.08069. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar] [CrossRef]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Sydney, Australia, 2015; Volume 28. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar] [CrossRef]

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 8 May 2024).

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Su, Z.; Yu, J.; Tan, H.; Wan, X.; Qi, K. MSA-YOLO: A Remote Sensing Object Detection Model Based on Multi-Scale Strip Attention. Sensors 2023, 23, 6811. [Google Scholar] [CrossRef] [PubMed]

- Xie, T.; Han, W.; Xu, S. YOLO-RS: A More Accurate and Faster Object Detection Method for Remote Sensing Images. Remote Sens. 2023, 15, 3863. [Google Scholar] [CrossRef]

- Fang, Z.; Wang, X.; Zhang, L.; Jiang, B. YOLO-RSA: A Multiscale Ship Detection Algorithm Based on Optical Remote Sensing Image. J. Mar. Sci. Eng. 2024, 12, 603. [Google Scholar] [CrossRef]

- Hu, J.; Wei, Y.; Chen, W.; Zhi, X.; Zhang, W. CM-YOLO: Typical Object Detection Method in Remote Sensing Cloud and Mist Scene Images. Remote Sens. 2025, 17, 125. [Google Scholar] [CrossRef]

- Wang, X.; Wang, A.; Yi, J.; Song, Y.; Chehri, A. Small Object Detection Based on Deep Learning for Remote Sensing: A Comprehensive Review. Remote Sens. 2023, 15, 3265. [Google Scholar] [CrossRef]

- Gui, S.; Song, S.; Qin, R.; Tang, Y. Remote Sensing Object Detection in the Deep Learning Era—A Review. Remote Sens. 2024, 16, 327. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Ren, S.; Han, C.; Yang, X.; Han, G.; He, S. Detection. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 212–228. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable {DETR}: Deformable Transformers for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021. [Google Scholar]

- Kong, Y.; Shang, X.; Jia, S. Drone-DETR: Efficient Small Object Detection for Remote Sensing Image Using Enhanced RT-DETR Model. Sensors 2024, 24, 5496. [Google Scholar] [CrossRef] [PubMed]

- Zhang, H.; Ma, Z.; Li, X. RS-DETR: An Improved Remote Sensing Object Detection Model Based on RT-DETR. Appl. Sci. 2024, 14, 10331. [Google Scholar] [CrossRef]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391. [Google Scholar]

- Li, H.; Li, S.; Dai, W.; Li, C.; Zou, J.; Xiong, H. Frequency-Aware Transformer for Learned Image Compression. In Proceedings of the The Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Sun, Y.; Xu, C.; Yang, J.; Xuan, H.; Luo, L. Frequency-Spatial Entanglement Learning for Camouflaged Object Detection. arXiv 2024, arXiv:2409.01686. [Google Scholar] [CrossRef]

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets Great Again. arXiv 2021, arXiv:2101.03697. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007. [Google Scholar] [CrossRef]

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 658–666. [Google Scholar] [CrossRef]

- Liu, Z.; Yuan, L.; Weng, L.; Yang, Y. A High Resolution Optical Satellite Image Dataset for Ship Recognition and Some New Baselines. In Proceedings of the 6th International Conference on Pattern Recognition Applications and Methods (ICPRAM), Porto, Portugal, 24–26 February 2017; pp. 324–331. [Google Scholar] [CrossRef]

- Zhang, Z.; Zhang, L.; Wang, Y.; Feng, P.; He, R. ShipRSImageNet: A Large-Scale Fine-Grained Dataset for Ship Detection in High-Resolution Optical Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8458–8472. [Google Scholar] [CrossRef]

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).