1. Introduction

Multi-object tracking (MOT) is a core task in computer vision: it detects and continuously tracks multiple targets in video sequences while maintaining consistent identities. With the development of applications such as autonomous driving, intelligent surveillance, and drone inspection, the demand for MOT in complex scenarios continues to grow.

Based on the integration mode of tasks, MOT is mainly divided into two categories: Two-Stage Multi-Object Tracking (TSMOT) and joint detection and tracking (JDT) algorithms, which differ significantly in technical implementation and application scenarios. Two-stage multi-target tracking includes two independent stages: detection and association. In the detection stage, a target detector locates targets frame by frame and extracts appearance features. In the association stage, data association algorithms are used to match detection boxes from adjacent frames. The joint detection and tracking algorithm realizes in-depth collaboration between detection and tracking and adopts an end-to-end architecture.

However, Multi Small-Object Tracking (MSOT) faces unique challenges, which manifest in three aspects: First, challenges from the targets themselves. For instance, long-distance pedestrians, infrared targets, and small targets in drone aerial imagery have low resolution and sparse texture features, making detection and matching much more difficult. Second, the impact of background interference. Complex backgrounds can further obscure the feature information of small targets, reducing tracking stability. Third, dynamic interactions in dense scenes. Targets are often occluded and cross paths, which directly breaks the trajectory continuity of traditional tracking methods and leads to significant performance degradation.

Traditional MOT methods have developed along two major paradigms: statistical filtering-based and computer vision-based methods. Statistical methods, such as Multiple Hypothesis Tracking (MHT) [1] and Joint Probabilistic Data Association (JPDA) [2], excel at handling data association ambiguity but struggle with small targets, because they rely on high-quality point measurements (e.g., precise position coordinates), which are scarce for low-resolution small targets. Random finite set (RFS) [3] methods model the target set as a random variable to handle unknown target counts. Their formerly high computational complexity has been alleviated by advances such as the Labeled Multi-Bernoulli (LMB) filter [4], which decomposes the multi-object density into independent labeled Bernoulli components for linear-complexity data association and efficient sequential Monte Carlo (SMC) implementation. However, in dense small-target scenarios (e.g., crowded aerial tracking), the interaction-aware LMB filter still faces challenges: the fine-grained state corrections needed for target interactions and the difficulty of distinguishing overlapping sparse-feature targets offset the complexity gains, so the real-time constraint remains a concern.

With the rise of deep learning, computer vision-based MOT (e.g., two-stage TSMOT and end-to-end JDT) has become mainstream for small-target tracking. Even so, computer vision-based methods tailored for small-target MOT still have three key limitations. Two-stage TSMOT methods (e.g., SORT [5], DeepSORT [6]) rely on Kalman filtering for motion prediction, but the linear motion assumption fails in fast-moving or occluded small-target scenarios. End-to-end JDT methods (e.g., TraDeS [7], OffsetNet [8]) reduce detection error accumulation but often sacrifice real-time performance due to complex feature fusion. Small-target-specific trackers (e.g., DroneMOT [9]) improve detection accuracy but lack adaptive recovery mechanisms for temporarily lost targets. To tackle these issues, this article proposes solutions from two aspects: motion modeling and trajectory recovery.

Dual motion modeling framework: It targets two key challenges: the large trajectory prediction bias of traditional Kalman filtering in occluded scenes, and the high computational complexity of optical flow methods. By integrating two types of motion features, a new model is constructed. This framework uses dynamic weighting to combine the global linear prediction of Kalman filters with the local pixel-level motion capture of optical flow features, overcoming the limitations of a single motion model in complex motion scenes. Compared with methods relying solely on Kalman filters (such as SORT [5]), it significantly improves the tracking stability of motion-blurred targets.

Local detection enhancement mechanism: It aims to solve trajectory interruption caused by missed detection of small targets and severe occlusion. Its core lies in targeted processing of target disappearance positions: it leverages Kalman filtering to guide the generation of dynamic Regions of Interest (ROIs) and combines multi-feature fusion for re-detection within these regions, thereby achieving target repositioning and trajectory continuation when the detection algorithm fails.

Core contributions of this article include the following:

A dual motion modeling framework is proposed, which integrates Kalman filtering and optical flow features. It addresses the performance bottleneck of traditional single-motion models in occlusion and motion blur scenarios. A dynamic weighting mechanism is used to integrate the global linear motion prediction of Kalman filtering with the local pixel-level motion information of optical flow features. This balances the long-term stability of uniform velocity models and their ability to respond to sudden motion changes. It optimizes the accuracy of target state estimation, effectively reduces prediction lag caused by intense motion or occlusion, and improves tracking robustness in occlusion and motion blur scenarios.

A local detection enhancement mechanism is designed. It is based on a Kalman filter-guided dynamic ROI detection strategy. This addresses trajectory interruption caused by missed detection of small targets and severe occlusion. When a target is temporarily lost, a local ROI region is dynamically generated based on the predicted state of Kalman filtering. The search scale coefficient is adaptively adjusted according to historical trajectory speed and confidence. Re-detection is then performed within this region. This balances search efficiency and the risk of missed detection. It significantly improves the detection success rate in scenarios involving missed detection of small targets and severe occlusion. It also realizes the reconstruction and tracking recovery of lost targets and enhances trajectory continuity.

A multi-feature fusion target association mechanism is developed. It integrates appearance, geometric, and motion information. This enhances the robustness of target re-identification in complex scenarios. HSV color histograms (with Bhattacharyya distance as the metric), geometric features (aspect ratio and area constraints), and motion information (Kalman predictions) are combined. These perform multidimensional validation of candidate regions. This enables accurate target re-identification and consistent ID maintenance when targets are occluded or temporarily lost. It further ensures trajectory continuity and identity consistency.

Compared with traditional methods, this approach significantly improves tracking robustness and trajectory continuity in scenarios involving small targets and dense occlusion.

3. Methods

3.1. Overall Architecture

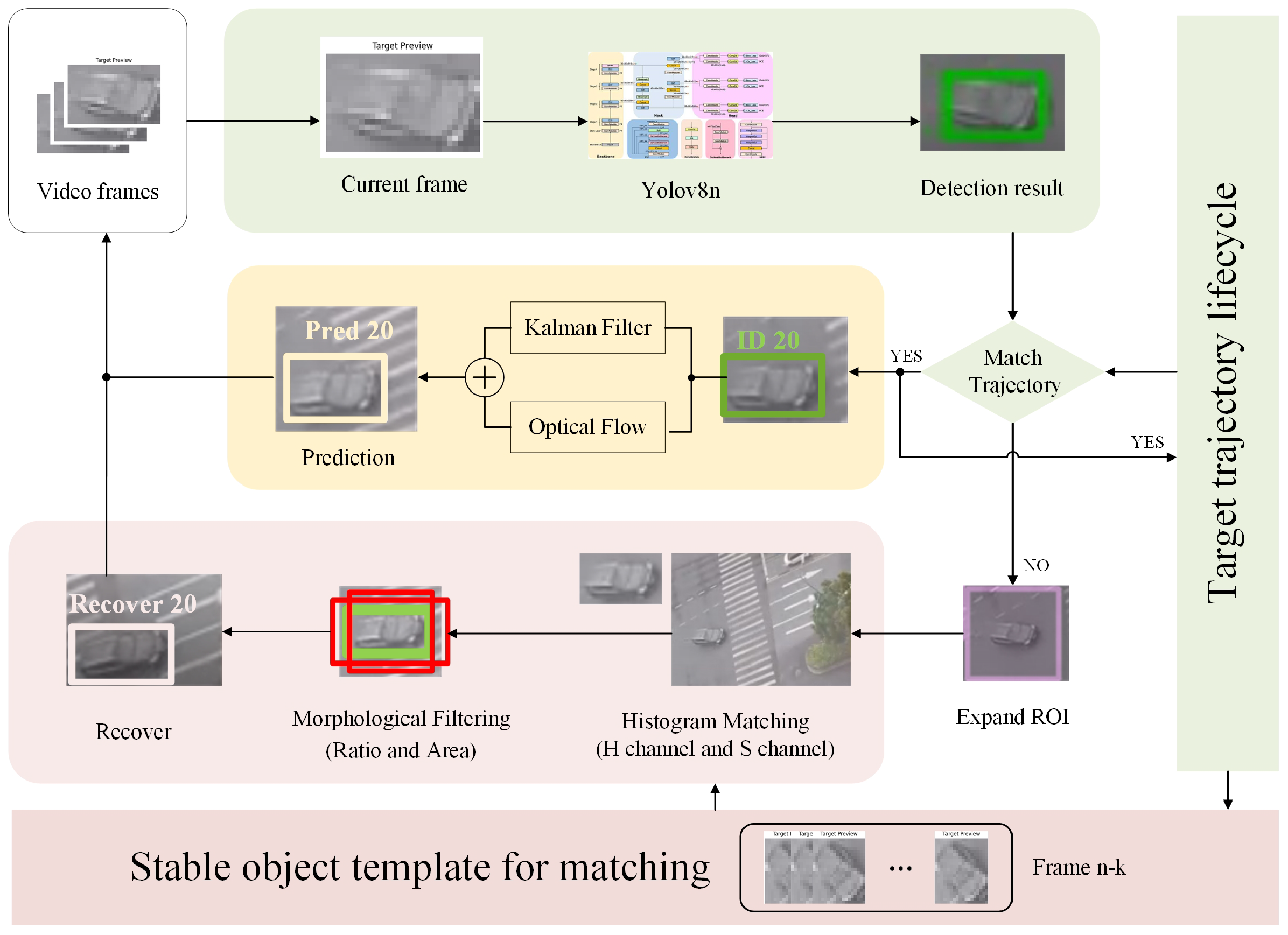

As illustrated in Figure 1, the proposed method builds on the ByteTrack algorithm. It retains ByteTrack’s two-stage matching logic (first associating high-confidence detection boxes and then low-confidence ones) while modifying three core components to address the unique challenges of small-target multi-object tracking (MOT): it replaces ByteTrack’s single Kalman motion model with an optical flow-corrected version, adds an adaptive local Region of Interest (ROI) search mechanism for lost targets (not included in ByteTrack), and upgrades the association metric from “motion + simple appearance” to multi-feature fusion. The method takes video frame sequences as input and adopts YOLOv8n as the detection model. Its core framework follows a four-step pipeline (each step corresponds to a functional module in Figure 1), and the modules collaborate to handle fast motion, short-term occlusion, and temporary target loss in small-target MOT scenarios:

1. Initial Target Detection (Green Module in Figure 1): Input video frames are fed into YOLOv8n, which locates small targets (e.g., long-distance pedestrians, small vehicles in aerial imagery) and outputs bounding boxes along with confidence scores. This step lays the foundation for subsequent motion modeling and target association, and its detection results directly determine the input quality of the follow-up modules.

2. Optical Flow-Corrected Kalman Filter-Based State Estimation (Yellow Module in Figure 1, Detailed in Section 3.3): To improve the accuracy of small-target motion state prediction, feature points are first extracted within the detection bounding box, and their optical flow displacement between adjacent frames is computed using the Lucas–Kanade algorithm. These displacement vectors are weighted and fused with the original detection box’s center coordinates to form an enhanced observation value, which is then fed into the Kalman filter. By balancing the long-term stability of the constant-velocity model against responsiveness to instantaneous motion changes, this module integrates Kalman’s global linear prediction with optical flow’s local pixel-level motion capture, which reduces prediction lag for fast-moving small targets and improves the update accuracy of motion states.

3. Adaptive Local Search for Lost Target Recovery (Pink Module in Figure 1, Detailed in Section 3.2): When a small target is temporarily lost (i.e., no valid detection match in Step 1, often caused by severe occlusion or motion blur), this mechanism is triggered. Based on the predicted state of the Kalman filter (from Step 2), a local ROI is dynamically generated; meanwhile, the search scale coefficient k is adaptively adjusted according to the target’s historical trajectory velocity and the confidence of its last visible detection. Re-detection is performed only within this ROI, which avoids the computational overhead of a full-image search and reduces the risk of missed detections caused by background interference, enabling rapid recovery of lost small targets.

4. Multi-Feature Fusion Target Association (Collaborates with Yellow and Pink Modules in Figure 1, Detailed in Section 3.4): To achieve accurate target matching and consistent ID maintenance for small targets, this module integrates three types of information for multidimensional validation of candidate regions: HSV color histograms (with Bhattacharyya distance as the similarity metric); geometric features (aspect ratio and area constraints); and motion information (Kalman filter predictions from Step 2). Even in complex scenarios involving occlusion or temporary target loss, this multidimensional validation enables reliable target re-identification, further ensuring the continuity of small-target trajectories and the consistency of their identities.

3.2. Adaptive Local Search

To enhance re-identification when a tracked target is lost for a short time, and to reduce the computational overhead incurred by full-image searches, this paper proposes an adaptive local search mechanism based on motion prediction. When tracking of a target fails, the method dynamically generates a Region of Interest according to the predicted state of the Kalman filter and conducts target re-detection within this region, thereby improving efficiency while maintaining a high recall rate.

Specifically, in the frame where the target disappears, the system first uses the target state from the previous frame to predict, via the Kalman filter, the center position, width, and height of the target in the current frame. Subsequently, a rectangular candidate search area is constructed. The boundary coordinates of the Region of Interest are calculated as follows:

$$S = k \cdot \max(a, b)$$

$$x_1 = \max\left(0,\; c_x - \tfrac{S}{2}\right), \quad y_1 = \max\left(0,\; c_y - \tfrac{S}{2}\right)$$

$$x_2 = \min\left(W,\; c_x + \tfrac{S}{2}\right), \quad y_2 = \min\left(H,\; c_y + \tfrac{S}{2}\right)$$

Here, $a$ denotes the predicted width of the target in the current frame (output by the Kalman filter), and $b$ represents the predicted height of the target in the same frame, also derived from the Kalman filter. The parameter $k$ is the search scale coefficient, which is adaptively adjusted: it is set to 1.0 for targets with stable motion and high detection confidence and to 1.2–1.5 for fast-moving or low-confidence targets. $S$ stands for the side length of the square ROI, calculated by taking the maximum of the predicted width and height and multiplying it by $k$ so that the ROI fully covers the target’s possible position.

In these expressions, $(c_x, c_y)$ is the predicted center position of the target in the current frame; $W$ and $H$ are the width and height of the entire image frame; $(x_1, y_1)$ are the coordinates of the top-left corner of the region of interest, and $(x_2, y_2)$ are the coordinates of the bottom-right corner. The coefficient $k$ governs how far the search area is expanded. A larger value broadens the search scope, which favors capturing targets that have moved a long distance but may introduce more interfering candidates. Conversely, a smaller coefficient speeds up the search but carries the risk of missed detections. Therefore, this paper adaptively adjusts the coefficient based on historical trajectories, incorporating factors such as motion speed and detection confidence. This approach minimizes unnecessary computational overhead while preserving high tracking accuracy.
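
The ROI construction described in this section can be illustrated with a minimal sketch. The function names, the speed and confidence cut-offs, and the choice of 1.2 versus 1.5 are illustrative assumptions, not values reported in this paper; only the rule "side length equals k times the larger of the predicted width and height, clipped to the frame" follows the text.

```python
def search_scale(speed, confidence, speed_thresh=5.0, conf_thresh=0.6):
    """Pick the search scale coefficient k.

    ASSUMPTION: the thresholds and the 1.2 / 1.5 split are placeholders;
    the text only gives 1.0 for stable, confident tracks and 1.2-1.5 otherwise.
    """
    fast = speed > speed_thresh
    unsure = confidence < conf_thresh
    if fast and unsure:
        return 1.5
    if fast or unsure:
        return 1.2
    return 1.0


def roi_bounds(cx, cy, a, b, k, W, H):
    """Square ROI of side S = k * max(a, b) around (cx, cy), clipped to the frame."""
    S = k * max(a, b)
    half = S / 2.0
    x1 = max(0.0, cx - half)
    y1 = max(0.0, cy - half)
    x2 = min(float(W), cx + half)
    y2 = min(float(H), cy + half)
    return x1, y1, x2, y2


if __name__ == "__main__":
    k = search_scale(speed=10.0, confidence=0.3)
    print(roi_bounds(100, 50, 20, 40, k, 1920, 1080))
```

Clipping matters mainly for targets near the image border, where the unclipped square would extend outside the frame.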

3.3. Optical Flow-Corrected Kalman Filter

In traditional MOT frameworks relying on Kalman filtering, target state estimation heavily depends on uniform motion models, which are susceptible to fast motion, occlusion, or nonlinear trajectories—leading to prediction error accumulation. To address this, this paper introduces an optical flow-corrected Kalman filtering approach that enhances responsiveness to instantaneous motion changes while preserving the filter’s long-term stability.

Within each frame’s object detection box, several key feature points are extracted, and a sparse optical flow algorithm (e.g., Lucas–Kanade) is used to compute their displacements between the previous and current frames. The median of these displacements is then taken as the overall optical flow estimation vector. As shown in Figure 2, this vector is fused with the original detection box’s center coordinates at a specified weight, yielding an enhanced measurement that is closer to the true motion trend and serves as the observation input for the Kalman filter.

Let $\mathbf{c}_t$ denote the center coordinate of the detection box, $\mathbf{d}_t$ the optical flow displacement vector, and $\alpha$ the fusion weight parameter. The optimized measurement value $\tilde{\mathbf{z}}_t$ is the weighted fusion of $\mathbf{c}_t$ and $\mathbf{d}_t$ with weight $\alpha$.

The original Kalman filter observation equation is expressed as

$$\mathbf{z}_t = \mathbf{H}\mathbf{x}_t + \mathbf{v}_t,$$

where $\mathbf{v}_t$ denotes the observation noise term. Upon introducing optical flow correction, the fused measurement $\tilde{\mathbf{z}}_t$ takes the place of $\mathbf{z}_t$, and the observation equation is revised to

$$\tilde{\mathbf{z}}_t = \mathbf{H}\mathbf{x}_t + \mathbf{v}_t.$$

The state update formula is formalized as

$$\hat{\mathbf{x}}_t = \hat{\mathbf{x}}_{t|t-1} + \mathbf{K}_t\left(\tilde{\mathbf{z}}_t - \mathbf{H}\hat{\mathbf{x}}_{t|t-1}\right),$$

where $\hat{\mathbf{x}}_{t|t-1}$ denotes the predicted state, $\mathbf{K}_t$ signifies the Kalman gain, and $\tilde{\mathbf{z}}_t - \mathbf{H}\hat{\mathbf{x}}_{t|t-1}$ represents the residual obtained from optical flow correction.

This fusion mechanism allows Kalman filtering to retain the long-term stability of the uniform velocity model while simultaneously responding to instantaneous motion changes derived from optical flow. This ensures that state estimation aligns more closely with the target’s instantaneous motion, mitigating prediction lag induced by fast movement or temporary occlusion.
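
The following sketch illustrates one way the optical flow correction could be wired together. It is written under stated assumptions: the fusion is taken to be a convex blend between the detection center and the previous center shifted by the median flow (the exact fusion form is not recoverable from the text), and the Kalman update is reduced to a position-only step with an identity observation matrix and a scalar gain. The names `median_flow`, `fused_measurement`, and `kalman_position_update` are hypothetical.

```python
from statistics import median


def median_flow(displacements):
    """Component-wise median of per-point (dx, dy) optical flow displacements."""
    dxs = [d[0] for d in displacements]
    dys = [d[1] for d in displacements]
    return median(dxs), median(dys)


def fused_measurement(det_center, prev_center, flow, alpha):
    """Blend the detection center with the flow-propagated previous center.

    ASSUMPTION: convex combination with weight alpha on the flow term;
    this is one plausible reading of "weighted fusion", not the paper's formula.
    """
    fx = prev_center[0] + flow[0]
    fy = prev_center[1] + flow[1]
    return ((1 - alpha) * det_center[0] + alpha * fx,
            (1 - alpha) * det_center[1] + alpha * fy)


def kalman_position_update(pred, z, gain):
    """x = x_pred + K * (z - H x_pred), simplified to H = identity, scalar K."""
    return (pred[0] + gain * (z[0] - pred[0]),
            pred[1] + gain * (z[1] - pred[1]))


if __name__ == "__main__":
    # The third point is an outlier; the median suppresses it.
    flow = median_flow([(2.0, 1.0), (3.0, 1.0), (100.0, -50.0)])
    z = fused_measurement((14.0, 11.0), (10.0, 10.0), flow, alpha=0.5)
    print(flow, z, kalman_position_update((12.0, 10.0), z, gain=0.5))
```

Using the median rather than the mean keeps a few badly tracked feature points from dominating the flow estimate, which matters when the box contains only a handful of usable points.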

3.4. Multi-Feature Fusion Target Association

To enhance the robustness and target persistence of multi-target tracking systems in complex scenarios involving occlusion and missed detections, this paper introduces a multi-feature target association mechanism that integrates appearance, geometric, and motion information, as outlined in Algorithm 1. This approach combines color histograms (HSV space), shape features (aspect ratio and area), and motion information (Kalman filter predictions) to enable accurate re-identification and tracking of temporarily occluded targets. While the target maintains a stable ID, the system continuously updates and maintains its appearance template. Unlike the Gibbs sampling approach in LRFS-based methods, which mitigates association ambiguity via probabilistic modeling, this work adopts a “color–geometry–motion” multi-feature hard constraint. This design keeps the association step lightweight enough for real-time use and limits mismatches in complex scenarios, which makes it well suited to scenes where small targets exhibit sparse feature representations.

In the algorithm, for each tracked target $t$ in the current frame $F$, the Kalman filter $KF_t$ first predicts the bounding box $p$. Then, candidate regions $C$ near $p$ are extracted. For each candidate region $c$ in $C$, the following quantities are computed: the histogram similarity $h$ (calculated from the Bhattacharyya distance between the target’s histogram $H_t$ and the candidate’s histogram $H_c$), the aspect ratio $a$ (the width of $c$ divided by its height), the geometric similarity $g$ (based on the absolute difference of aspect ratios), and the motion similarity $m$ (based on the distance between centers). A combined score $s$ is obtained as a weighted sum of these similarities. Valid matches are stored in $M$ if $s$ exceeds the threshold $\tau$. Finally, for valid matches, the Kalman filter is updated, and the appearance template (histogram $H_t$ and aspect ratio $a_t$) is updated.

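| Algorithm 1 Multi-Feature Fusion Target Association |
| --- |
| Require: Current frame $F$, tracked targets $T$ |
| Ensure: Updated targets $T$ |
| 1: $M \leftarrow \emptyset$ /* Matches set */ |
| 2: for $t \in T$ do |
| 3: $p \leftarrow$ prediction of $KF_t$ /* Predicted bounding box */ |
| 4: $C \leftarrow$ regions of $F$ near $p$ /* Candidate regions */ |
| 5: for $c \in C$ do |
| 6: $h \leftarrow$ similarity of $H_t$ and $H_c$ /* Histogram similarity */ |
| 7: $a \leftarrow$ width of $c$ / height of $c$ /* Aspect ratio */ |
| 8: $g \leftarrow$ similarity from $\lvert a - a_t \rvert$ /* Geometric similarity */ |
| 9: $m \leftarrow$ similarity from the center distance of $c$ and $p$ /* Motion similarity */ |
| 10: $s \leftarrow$ weighted sum of $h$, $g$, $m$ /* Combined score */ |
| 11: if $s > \tau$ then |
| 12: $M \leftarrow M \cup \{(t, c)\}$ /* Store valid match */ |
| 13: end if |
| 14: end for |
| 15: end for |
| 16: for $(t, c) \in M$ do |
| 17: update $KF_t$ with $c$ /* Kalman filter update */ |
| 18: update $H_t$ from $H_c$ /* Histogram template update */ |
| 19: update $a_t$ from $a$ /* Aspect ratio update */ |
| 20: end for |
| 21: return $T$ |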
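
The scoring loop of Algorithm 1 can be sketched as follows. This is a minimal illustration under assumptions: the similarity mappings (one minus the Bhattacharyya distance, a reciprocal of the aspect ratio difference, an exponential decay of the center distance), the weights, the distance scale, and the threshold value are all placeholders chosen for the example, and the sketch keeps only the best-scoring candidate per target rather than every valid match.

```python
import math


def geometric_similarity(aspect_t, aspect_c):
    """Map |a_c - a_t| into (0, 1]; 1 means identical aspect ratios."""
    return 1.0 / (1.0 + abs(aspect_c - aspect_t))


def motion_similarity(center_p, center_c, scale):
    """Decay with the center distance between predicted box and candidate."""
    dist = math.hypot(center_c[0] - center_p[0], center_c[1] - center_p[1])
    return math.exp(-dist / scale)


def combined_score(hist_dist, aspect_t, aspect_c, center_p, center_c,
                   scale=50.0, weights=(0.5, 0.2, 0.3)):
    """Weighted sum s of histogram, geometric, and motion similarity.

    ASSUMPTION: weights and scale are illustrative, not the paper's values.
    """
    w_h, w_g, w_m = weights
    h = 1.0 - hist_dist  # Bhattacharyya distance in [0, 1] -> similarity
    g = geometric_similarity(aspect_t, aspect_c)
    m = motion_similarity(center_p, center_c, scale)
    return w_h * h + w_g * g + w_m * m


def best_match(pred_center, template_aspect, candidates, tau=0.6):
    """candidates: list of (hist_dist, aspect, center); returns (index, s) or None."""
    best = None
    for i, (hist_dist, aspect, center) in enumerate(candidates):
        s = combined_score(hist_dist, template_aspect, aspect, pred_center, center)
        if s > tau and (best is None or s > best[1]):
            best = (i, s)
    return best


if __name__ == "__main__":
    cands = [(0.6, 1.5, (30.0, 40.0)), (0.1, 0.5, (3.0, 4.0))]
    print(best_match((0.0, 0.0), 0.5, cands))
```

A candidate that is close in color but far from the predicted position, or the reverse, is penalized by the other terms, which is the point of validating along several dimensions at once.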

3.4.1. Template Matching

To enhance the system’s adaptability to lighting variations, candidate ROIs are converted from the BGR to the HSV color space, with color histograms constructed using only the H and S channels. Following normalization, the Bhattacharyya distance is employed to quantify the similarity between the template color histogram and the histogram of the candidate region.

A smaller distance indicates greater feature similarity and a higher matching degree.
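
As a concrete reference for the distance used here, the sketch below computes the Bhattacharyya distance between two normalized histograms in its square-root (Hellinger) form. For histograms normalized to sum to one, this should coincide with what OpenCV's `compareHist` returns for `HISTCMP_BHATTACHARYYA`; whether the paper uses exactly this variant is an assumption. The histograms are plain Python lists standing in for flattened H-S histograms.

```python
import math


def normalize(hist):
    """Scale a histogram so that its bins sum to one."""
    total = float(sum(hist))
    if total == 0:
        raise ValueError("histogram has no mass")
    return [v / total for v in hist]


def bhattacharyya_distance(p, q):
    """sqrt(1 - sum_i sqrt(p_i * q_i)) for two normalized histograms.

    Returns 0 for identical histograms and 1 for non-overlapping ones.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))
    # Clamp tiny negative values caused by floating-point rounding.
    return math.sqrt(max(0.0, 1.0 - bc))


if __name__ == "__main__":
    template = normalize([4, 10, 3, 1])
    candidate = normalize([5, 9, 3, 1])
    print(bhattacharyya_distance(template, candidate))
```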

3.4.2. Dynamic Shape Constraints and Geometric Filtering

To optimize appearance matching and reduce false matches caused by shape inconsistencies, the system tracks the aspect ratio and bounding-box area of targets in real time and sets geometric consistency thresholds for verification. For targets with stable trajectories, their aspect ratios and areas are recorded. Dynamic thresholds are configured to adapt to rotations and deformations induced by camera motion or changes in target orientation.

After obtaining the ROI, it is converted into a grayscale image, and Gaussian adaptive thresholding is applied to enhance edge features. Then, a 3 × 3 elliptical kernel closing operation is performed to fill holes, connect fragmented regions, remove noise, and preserve the overall shape of the target. Connected regions are extracted from the binary image to obtain candidate contours. The aspect ratio and area of each candidate region are calculated, and regions that meet the aspect ratio and area conditions are selected.

For the candidate regions filtered by geometric constraints, the Bhattacharyya distance between their HSV histograms and the template histogram is calculated in sequence. Candidate boxes with distances below the preset threshold are retained. If there are candidate boxes, the one with the minimum Bhattacharyya distance is selected as the temporary position of the target, which is used to correct the state of the Kalman filter for fine-grained repositioning.
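
The filter-then-select step described above can be sketched in a few lines. The relative tolerances on aspect ratio and area are illustrative assumptions (the paper describes dynamic thresholds without giving values here), and the default distance threshold of 0.3 is the value the hyperparameter experiments in Section 4.3 identify as best. The candidate dictionaries with keys `w`, `h`, and `dist` are a hypothetical data layout.

```python
def select_candidate(candidates, template_aspect, template_area,
                     aspect_tol=0.3, area_tol=0.5, dist_thresh=0.3):
    """Return the geometrically valid candidate with the smallest distance.

    candidates: list of dicts with width 'w', height 'h', and the
    Bhattacharyya distance 'dist' to the template histogram.
    ASSUMPTION: aspect_tol and area_tol are placeholder relative tolerances.
    """
    kept = []
    for c in candidates:
        aspect = c["w"] / c["h"]
        area = c["w"] * c["h"]
        if abs(aspect - template_aspect) > aspect_tol * template_aspect:
            continue  # shape inconsistent with the template
        if abs(area - template_area) > area_tol * template_area:
            continue  # size inconsistent with the template
        if c["dist"] >= dist_thresh:
            continue  # appearance too different
        kept.append(c)
    return min(kept, key=lambda c: c["dist"]) if kept else None


if __name__ == "__main__":
    cands = [
        {"w": 10, "h": 20, "dist": 0.25},
        {"w": 11, "h": 21, "dist": 0.10},
        {"w": 40, "h": 10, "dist": 0.05},  # rejected: wrong aspect ratio
        {"w": 10, "h": 20, "dist": 0.45},  # rejected: distance too large
    ]
    print(select_candidate(cands, template_aspect=0.5, template_area=200))
```

Running the geometric checks first means the histogram comparison only needs to be trusted for candidates that already look like the target in shape and size.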

3.5. Time Complexity Analysis

The proposed framework mainly consists of four modules: detection, motion estimation, adaptive ROI re-detection, and multi-feature association. The computational complexity of each module is as follows:

Detection (YOLOv8n): The complexity is approximately $O(N \cdot C)$, where $N$ is the number of pixels in the input frame and $C$ is the number of convolutional operations. Even though modern detectors are GPU-optimized, this stage dominates the total computational cost.

Kalman Filtering: Both prediction and update steps have complexity $O(d^3)$, where $d$ is the dimension of the state vector. Because $d$ is small and fixed, this can be considered constant time relative to detection.

Optical Flow Estimation: Sparse Lucas–Kanade optical flow on $k$ feature points (here $k$ denotes the number of feature points, not the search scale coefficient of Section 3.2) has complexity $O(k)$. In practice, $k \ll N$, so the additional overhead is small.

Data Association: Matching $M$ detection boxes with the Hungarian algorithm and multi-feature similarity requires $O(M^3)$. Since the number of targets per frame is usually moderate, this stage contributes little compared to detection.

Overall, the framework’s time complexity can be expressed as

$$O\left(N \cdot C + d^3 + k + M^3\right),$$

which is dominated by the detection stage. The tracking-related operations are lightweight and do not significantly impact runtime.

4. Experiments

4.1. Datasets

This experiment employed the VisDrone-MOT dataset [30] and the UAVDT dataset [31].

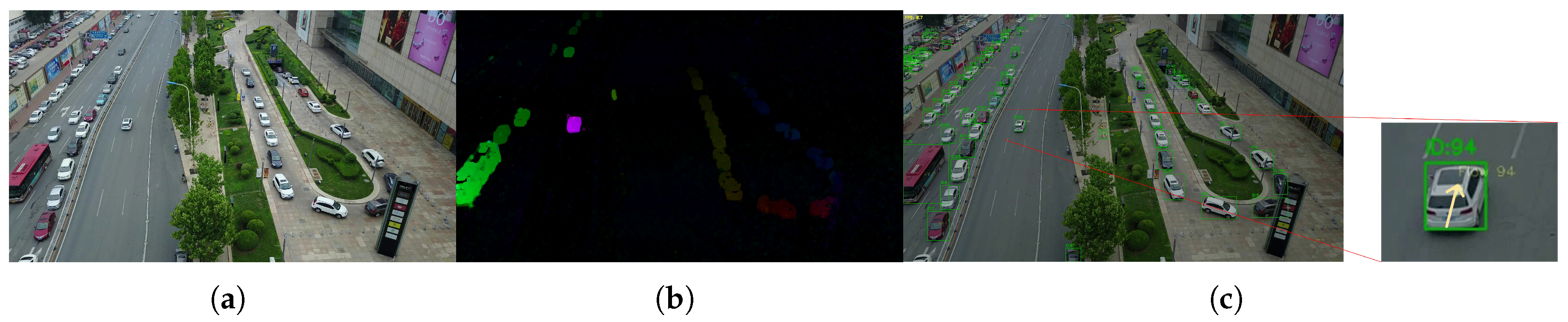

The VisDrone-MOT dataset, as shown in Figure 3, is a visual benchmark dataset for unmanned aerial vehicle perspectives, constructed by the AISKYEYE team at Tianjin University. It comprises 10,209 static images and 288 videos (over 260,000 frames in total), offering 2.6 million annotated bounding boxes for more than 10 types of targets, including pedestrians and vehicles, across complex scenes such as urban areas, rural regions, and traffic intersections. These targets are often small, densely distributed, occluded, and exposed to varying lighting conditions. The annotation attributes encompass occlusion rate, truncation degree, and motion trajectory, supporting five major tasks: object detection in images, object detection in videos, single-target tracking, multi-target tracking, and crowd counting.

As shown in Figure 4, the UAVDT dataset consists of 100 video sequences captured by drones flying over different urban locations, covering typical scenes such as squares, main roads, and toll stations. The videos have a frame rate of 30 fps and an image resolution of 1080 × 540 pixels, with each frame containing labeled bounding boxes for vehicles. It also provides key attribute information such as vehicle category and occlusion degree and is specifically designed to address the unique perspective and complex environmental challenges associated with drones.

4.2. Evaluation Metrics

4.2.1. MOTA

MOTA serves as a core metric for evaluating the overall performance of MOT algorithms. It assesses a tracker’s accuracy by jointly penalizing false negatives (FN), false positives (FP), and identity switches (IDSW). The calculation formula is

$$\mathrm{MOTA} = 1 - \frac{\sum_t \left(\mathrm{FN}_t + \mathrm{FP}_t + \mathrm{IDSW}_t\right)}{\sum_t \mathrm{GT}_t},$$

where $\mathrm{GT}_t$ is the number of ground-truth objects in frame $t$.

MOTA has no lower bound: it becomes negative when the total number of errors exceeds the number of ground-truth objects. The closer it gets to 1, the better the tracking performance. This metric emphasizes global sensitivity to detection errors, rooted in the core logic that detection-stage false negatives and false positives impact overall performance far more than tracking-association-stage identity switches (IDSW). Thanks to its intuitiveness and comprehensiveness, MOTA sees widespread use in MOT task evaluation.
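
As a quick worked example of the definition, the helper below computes MOTA from error counts already summed over all frames; the function name and argument order are illustrative.

```python
def mota(fn, fp, idsw, gt):
    """MOTA = 1 - (FN + FP + IDSW) / GT, with counts summed over all frames."""
    if gt <= 0:
        raise ValueError("gt must be positive")
    return 1.0 - (fn + fp + idsw) / gt


if __name__ == "__main__":
    print(mota(fn=10, fp=5, idsw=5, gt=100))   # errors are 20% of GT
    print(mota(fn=150, fp=0, idsw=0, gt=100))  # more errors than GT: negative
```

The second call shows why the score can be negative: nothing caps the number of errors at the number of ground-truth objects.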

4.2.2. False Positives

False positives (FP) are a key metric in object detection and multi-object tracking. They count predictions that do not correspond to any real target, for example background regions misclassified as objects or boxes that are badly localized. In object detection, an FP refers to a predicted bounding box with insufficient overlap (IoU below the threshold) with any ground-truth target. In multi-target tracking, FPs are categorized into detection-level errors (single-frame false detections) and tracking-level errors (false trajectories from fragmented paths or incorrect associations). FP directly impacts detection accuracy and tracking metrics like MOTA. A high FP count may indicate model overfitting or flaws in the association logic, potentially degrading downstream task reliability.

4.2.3. False Negatives

False negatives (FN) are a critical metric in object detection and multi-target tracking, measuring the model’s failure to detect actual targets. They are defined as the count or proportion of ground-truth targets missed by the model. Specifically, in object detection, an FN occurs when a real target is undetected (e.g., no predicted box or IoU below the threshold). In multi-target tracking, FNs are categorized into detection-level misses (single-frame non-detections) and tracking-level losses (target disappearances due to trajectory breaks or ID-switch errors).

FN directly impacts recall rates and tracking metrics like MOTA. A high FN rate indicates insufficient detection sensitivity or tracking association failures, potentially causing key target omissions in downstream tasks (e.g., security monitoring). Optimization strategies include lowering detection confidence thresholds, enhancing feature learning for complex scenes, and improving trajectory association robustness.

4.2.4. HOTA

HOTA is a comprehensive metric for multi-target tracking performance, designed to address the limitations of traditional metrics like MOTA and IDF1 in balancing detection, association, and localization. At each localization threshold, it combines detection accuracy (DetA) and association accuracy (AssA) through their geometric mean, and the final score averages this value over a range of localization thresholds; localization accuracy (LocA) is reported alongside. HOTA thus reflects tracking system performance holistically.

HOTA’s main advantage lies in its decomposability: it breaks down into sub-metrics such as detection recall and association accuracy, quantifying key error types (missed detections, false positives, identity switches, and trajectory breaks) to enable fine-grained model diagnosis. Unlike traditional metrics focusing on adjacent-frame matching, HOTA emphasizes long-term cross-frame association. By balancing detection and association weights, it reduces single-task bias, aligning more closely with human visual evaluation in complex scenarios (e.g., occlusion, appearance similarity).
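
For reference, the standard definition of HOTA can be written compactly as follows. The notation ($\alpha$ for the localization threshold; $\mathrm{TPA}(c)$, $\mathrm{FNA}(c)$, and $\mathrm{FPA}(c)$ for the association counts of a matched pair $c$) follows the usual presentation of the metric and is not specific to this paper.

$$\mathrm{HOTA}_\alpha = \sqrt{\mathrm{DetA}_\alpha \cdot \mathrm{AssA}_\alpha}, \qquad \mathrm{DetA}_\alpha = \frac{|\mathrm{TP}|}{|\mathrm{TP}| + |\mathrm{FN}| + |\mathrm{FP}|}$$

$$\mathrm{AssA}_\alpha = \frac{1}{|\mathrm{TP}|} \sum_{c \in \mathrm{TP}} \frac{|\mathrm{TPA}(c)|}{|\mathrm{TPA}(c)| + |\mathrm{FNA}(c)| + |\mathrm{FPA}(c)|}$$

$$\mathrm{HOTA} = \frac{1}{19} \sum_{\alpha \in \{0.05,\, 0.10,\, \ldots,\, 0.95\}} \mathrm{HOTA}_\alpha$$

Because detection and association enter through a geometric mean, a tracker cannot compensate for poor association with strong detection alone, or the reverse.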

4.2.5. IDF1

IDF1 is a key metric for multi-target tracking that measures identity consistency. It is the harmonic mean of identification precision (IDP, the fraction of predicted detections assigned the correct identity) and identification recall (IDR, the fraction of ground-truth detections assigned the correct identity), so it assesses a tracker’s ability both to detect targets and to keep their identities accurate. It is particularly important for long-term tracking (e.g., in crowds): a high IDF1 indicates few false or missed detections and few identity switches. Optimizing it requires improving detection to reduce errors and improving association to preserve identities.
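
A small worked example may help: in the standard formulation, IDF1 is computed from identity-level true positives (IDTP), false positives (IDFP), and false negatives (IDFN), and equals the harmonic mean of identification precision and recall. The function names below are illustrative.

```python
def id_precision(idtp, idfp):
    """IDP: fraction of predicted detections carrying the correct identity."""
    return idtp / (idtp + idfp)


def id_recall(idtp, idfn):
    """IDR: fraction of ground-truth detections matched with the correct identity."""
    return idtp / (idtp + idfn)


def idf1(idtp, idfp, idfn):
    """IDF1 = 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    denom = 2 * idtp + idfp + idfn
    if denom == 0:
        raise ValueError("no detections and no ground truth")
    return 2 * idtp / denom


if __name__ == "__main__":
    p, r = id_precision(80, 20), id_recall(80, 40)
    print(idf1(80, 20, 40), 2 * p * r / (p + r))  # the two values agree
```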

4.3. Hyperparameter Experiments

For the detector, YOLOv8n was used throughout: it was trained on part of the VisDrone-MOT data, and the remaining held-out data were used for testing. For matching, the single-stage matching algorithm uses a detection confidence threshold of 0.5. For the two-stage matching algorithm, the first-stage confidence threshold is set to 0.5 and the second-stage threshold to 0.1. The maximum number of frames a track may remain lost is set to 150. Local detection expands the search area to twice the target size and continues for 30 frames after the target is lost.
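
To make the two confidence thresholds concrete, the sketch below splits detections into the pool used for the first association pass and the pool used for the second, in the ByteTrack style. Treating the boundaries as inclusive of 0.5 and 0.1 is an assumption, as is the `(box, score)` tuple layout.

```python
def split_by_confidence(detections, high=0.5, low=0.1):
    """Split (box, score) pairs into first-pass and second-pass pools.

    First pass: score >= high. Second pass: low <= score < high.
    Detections below `low` are discarded.
    """
    first = [d for d in detections if d[1] >= high]
    second = [d for d in detections if low <= d[1] < high]
    return first, second


if __name__ == "__main__":
    dets = [((0, 0, 10, 10), 0.92), ((5, 5, 9, 9), 0.30), ((1, 1, 3, 3), 0.05)]
    first, second = split_by_confidence(dets)
    print(len(first), len(second))
```

Keeping the low-confidence pool is what lets partially occluded or blurred small targets still be matched to existing tracks instead of being dropped outright.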

To determine the optimal Bhattacharyya distance threshold for multi-feature association, this paper evaluates system performance at thresholds of 0.5, 0.4, 0.3, and 0.2. The results are presented in Table 1.

When the threshold is set high (0.5), FN remains low while FP surges, because the loose threshold tolerates mismatches and admits incorrect candidates, which degrades target recovery. Thresholds above 0.5 were not analyzed further, as practical tests revealed even more severe issues: such values drastically relax the similarity requirement for feature matching, causing FP to escalate rapidly. This leads to a proliferation of false trajectories (e.g., background noise or irrelevant objects identified as targets) and a sharp decline in core metrics like MOTA and HOTA, rendering the results of little practical use for robust tracking. At a threshold of 0.3, overall performance peaks: FN and FP strike a balance, and both HOTA and MOTA reach their highest values. When the threshold drops further to 0.2, FP decreases but FN rises sharply, because the overly strict matching rejects valid candidates and increases the risk of missed detections.

4.4. Comparison to State-of-the-Art Methods

To evaluate the proposed method, six MOT methods are chosen as baselines: ByteTrack [17], BotSORT [19], OC-SORT [32], DeepOCSORT [18], BoostTrack [33], and Imprassoc.

4.4.1. Qualitative Analysis

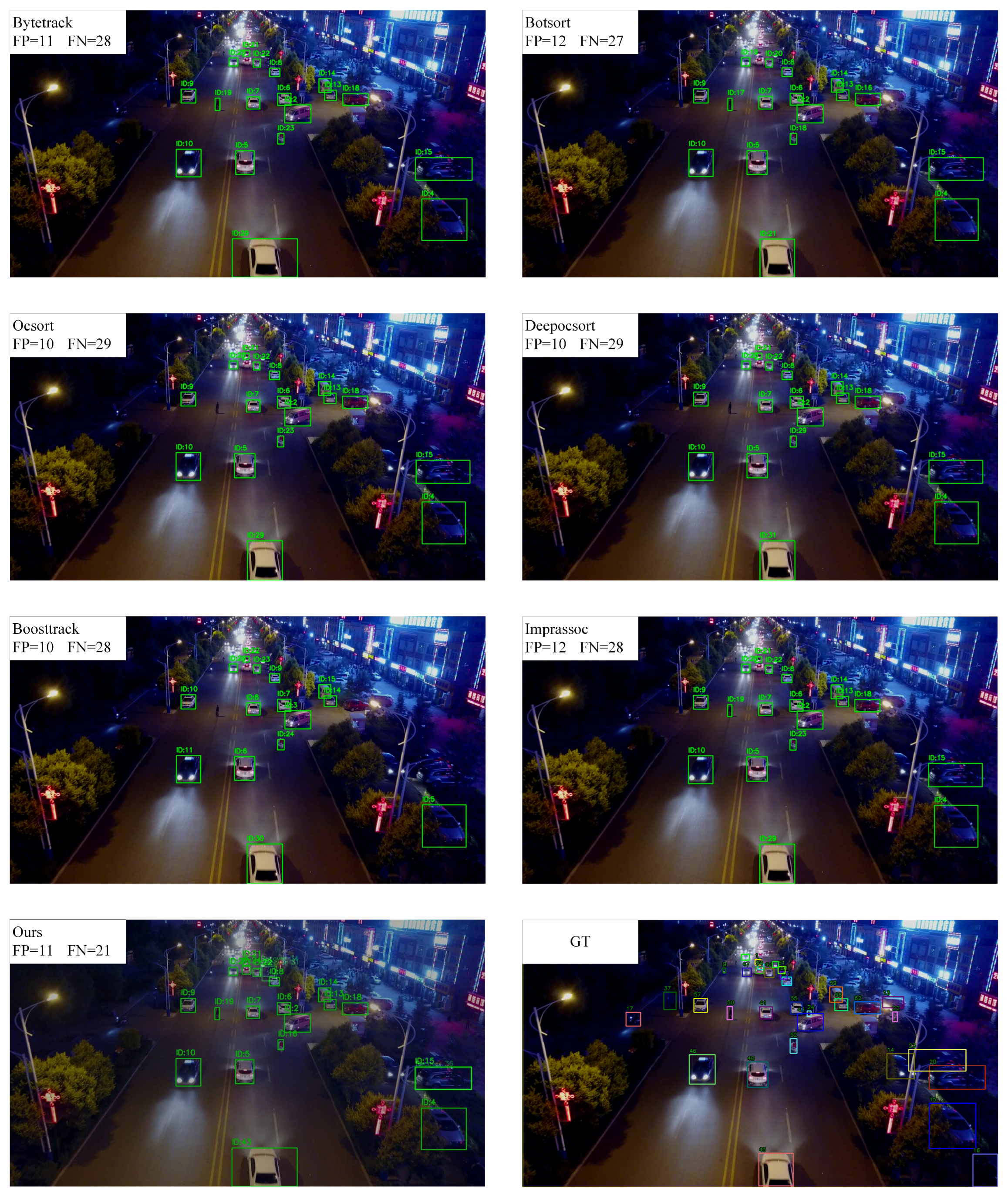

As shown in Figure 5, in terms of ID stability in MOT tasks, algorithms like ByteTrack fall short of traditional appearance matching-based methods and deep-learning methods typified by DeepOCSORT. In the frame shown in the figure, the camera is in rapid motion and roadside trees partially occlude the targets. These conditions make it difficult to continuously and stably link the trajectories of the same target. To tackle this problem, as shown in the pink box in Figure 6, when a target is temporarily lost, the proposed method retrieves it without depending on deep-learning models for secondary detection and feature matching. In terms of processing speed, because time-consuming procedures such as deep feature extraction and similarity calculation are bypassed, the method runs notably faster than deep learning-based solutions, which makes it a better fit for scenarios with high real-time demands.

4.4.2. Quantitative Analysis

The performance evaluation on the VisDrone-MOT dataset, presented in Table 2, shows that the proposed method surpasses all baseline algorithms on key metrics, achieving an MOTA of 63.24 and an IDF1 of 59.41. These values represent improvements of 22.68 and 5.01, respectively, over DeepOCSORT, which ranks second in both MOTA and IDF1. Notably, the FP + FN count falls to 89,102, compared with 191,693 for BoostTrack (the highest among baselines). This reduction reflects the method’s enhanced capability to maintain detection accuracy in complex scenes. With 1046 Mostly Tracked (MT) targets (the highest across all methods) and only 292 Mostly Lost (ML) targets (the lowest), the system excels at sustained trajectory maintenance and minimizing target loss. Although its HOTA of 34.84 is slightly lower than that of BotSORT and DeepOCSORT, the results for MOTA, FP + FN, MT, and IDF1 demonstrate that the method effectively balances tracking precision, trajectory continuity, and identity consistency. Thus, it shows strong applicability in multi-object tracking tasks.

Results on the UAVDT dataset, presented in Table 3, further validate our approach. With MOTA = 63.24 and IDF1 = 59.41, our method leads on both metrics. While ByteTrack and OC-SORT run faster (FPS of 20.19 and 20.44, respectively), our method, with an FPS of 9.88, remains competitive in its balance of accuracy and efficiency. BotSORT and DeepOCSORT show relatively high HOTA (39.10 and 38.80), yet they lag behind our method in MOTA and IDF1. BoostTrack, with the highest FP + FN count of 191,693, frequently suffers from trajectory fragmentation or missed targets in complex scenarios. In contrast, our method, with the lowest FP + FN count of 89,102, the highest MT (1046), and the lowest ML (292), remains stable and tracks reliably even under challenging conditions.

4.5. Ablation Experiments

To evaluate the performance contribution of each core module, this paper designs four ablation comparisons based on the ByteTrack framework using the VisDrone-MOT dataset. The results are presented in Table 4.

The results show that introducing the local detection mechanism (ROI) improves MOTA from 40.56 to 60.31, a rise of nearly 20 percentage points, and reduces the FP + FN count from 162,538 to 96,868. This indicates that the module significantly enhances the recovery of occluded or missed targets. However, it also changes other metrics (e.g., HOTA decreases slightly from 35.50 to 33.90), suggesting that regional detection occasionally introduces mismatched targets. Introducing the optical flow correction mechanism (optical flow) also significantly boosts overall performance: IDF1 rises from 56.14 to 59.45, evidence that accurate modeling of target motion trends improves ID consistency. As shown in Figure 6, when the target is lost due to image blurring caused by rapid camera movement, optical flow can still achieve a certain degree of tracking and prediction. Combining both mechanisms (ROI + optical flow) achieves the best results in key metrics such as MOTA (63.24) and MT (1046), and FP + FN decreases further to 89,102. This demonstrates that the two mechanisms work together to balance target recovery, motion prediction, and appearance modeling, although FPS is lower than in some single-mechanism configurations.
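The size of the error reductions quoted above can be checked with a few lines of arithmetic. The sketch below uses only the FP + FN and MOTA values stated in the text; the helper name `relative_reduction` is an illustrative assumption.

```python
def relative_reduction(before, after):
    """Percentage by which `after` is lower than `before`."""
    return 100.0 * (before - after) / before


baseline_errors = 162_538   # FP + FN, ByteTrack baseline
roi_errors = 96_868         # FP + FN with local detection (ROI)
combined_errors = 89_102    # FP + FN with ROI + optical flow

print(round(relative_reduction(baseline_errors, roi_errors), 1))       # 40.4
print(round(relative_reduction(baseline_errors, combined_errors), 1))  # 45.2

# MOTA gains in percentage points over the 40.56 baseline.
print(round(60.31 - 40.56, 2))  # 19.75
print(round(63.24 - 40.56, 2))  # 22.68
```

In other words, local re-detection alone removes roughly 40% of the combined false positives and false negatives, and adding optical flow correction brings the reduction to about 45%.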

4.6. Computational Efficiency Evaluation

To evaluate computational efficiency, we benchmarked our method on a machine with an NVIDIA GeForce GTX 1080 Ti GPU (manufactured by NVIDIA, Santa Clara, California, USA), an Intel i7-6850K CPU (manufactured by Intel, Santa Clara, California, USA), and 32 GB RAM. We measured the average single-frame processing time on the UAVDT dataset and compared it with two representative baselines, ByteTrack and DeepOCSORT. ByteTrack is the most efficient, with an average single-frame processing time of 42.2 ms, which corresponds to nearly 24 frames per second and meets the real-time requirements of most MOT scenarios. Our proposed method takes 48.8 ms per frame, approximately 15.6% longer than ByteTrack. Despite this gap, it still sustains about 20.5 frames per second and is markedly faster than DeepOCSORT. DeepOCSORT is the slowest of the three at 65.8 ms per frame, about 34.8% longer than our method and 55.9% longer than ByteTrack, which may limit its use in scenarios where low latency is a key constraint.
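The throughput figures follow directly from the per-frame latencies. As a minimal sketch, the conversion and the comparison against ByteTrack can be reproduced as follows; the latencies are those reported above, while the function names are illustrative.

```python
def fps_from_latency(ms_per_frame):
    """Frames per second implied by an average per-frame latency in ms."""
    return 1000.0 / ms_per_frame


def extra_time_percent(ms, reference_ms):
    """How much longer `ms` is than `reference_ms`, in percent."""
    return 100.0 * (ms - reference_ms) / reference_ms


latency_ms = {"ByteTrack": 42.2, "Ours": 48.8, "DeepOCSORT": 65.8}

for name, ms in latency_ms.items():
    fps = fps_from_latency(ms)
    extra = extra_time_percent(ms, latency_ms["ByteTrack"])
    print(f"{name}: {fps:.1f} FPS, {extra:.1f}% longer than ByteTrack")
# ByteTrack: 23.7 FPS, 0.0% longer than ByteTrack
# Ours: 20.5 FPS, 15.6% longer than ByteTrack
# DeepOCSORT: 15.2 FPS, 55.9% longer than ByteTrack
```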

5. Conclusions

This paper presents a dual motion modeling framework integrating Kalman filtering and optical flow features to address key challenges in MSOT, such as occlusion recovery, trajectory continuity, and detection accuracy. The framework incorporates an adaptive local re-detection mechanism and a multi-feature fusion target association strategy to systematically enhance MOT system robustness and accuracy in complex scenes.

Specifically, this work introduces three key modules based on the lightweight detector YOLOv8n and the ByteTrack framework:

1. An optical flow-corrected Kalman filter that enhances state prediction responsiveness and timeliness, effectively mitigating prediction lag caused by rapid motion or occlusion.

2. An adaptive local search mechanism that improves post-loss re-detection success rates and significantly reduces missed detections.

3. A multi-feature object association mechanism integrating color histograms, geometric features, and motion trajectories to strengthen identity consistency and matching robustness under occlusion.
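To make the first module more concrete, the sketch below shows one plausible way an optical flow measurement could correct a constant-velocity prediction: the predicted velocity is blended with the median flow displacement observed inside the target box. This is an illustrative assumption, not the paper's formulation; the blending weight `alpha`, the state layout, and the function name are hypothetical, and the sketch covers only the state mean, not the Kalman covariance update.

```python
from statistics import median


def flow_corrected_predict(state, flow_vectors, alpha=0.5, dt=1.0):
    """Predict the next box center under a constant-velocity model.

    state: (x, y, vx, vy), center position and velocity in pixels.
    flow_vectors: list of (dx, dy) sparse-flow displacements measured
        inside the target box over one frame interval (may be empty).
    alpha: hypothetical blending weight given to the flow estimate.
    """
    x, y, vx, vy = state
    if flow_vectors:
        # The median is robust to a few outlier flow vectors.
        flow_vx = median(dx for dx, _ in flow_vectors) / dt
        flow_vy = median(dy for _, dy in flow_vectors) / dt
        vx = (1.0 - alpha) * vx + alpha * flow_vx
        vy = (1.0 - alpha) * vy + alpha * flow_vy
    return (x + vx * dt, y + vy * dt, vx, vy)


# A target whose filter velocity lags a sudden camera motion.
state = (10.0, 20.0, 1.0, 0.0)
flows = [(3.0, 1.0), (5.0, 1.0), (4.0, 2.0)]
print(flow_corrected_predict(state, flows))  # (12.5, 20.5, 2.5, 0.5)
print(flow_corrected_predict(state, []))     # (11.0, 20.0, 1.0, 0.0)
```

When no flow vectors are available the function falls back to the plain constant-velocity prediction, which mirrors the intent of using optical flow as a correction rather than a replacement for the filter.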

Extensive comparative and ablation experiments on two small-target datasets (VisDrone-MOT, UAVDT) confirm our method’s significant advantages in MOTA and IDF1, with competitive HOTA. Notably, the hyperparameter experiments (Table 1) verify the critical role of the Bhattacharyya distance threshold in multi-feature association: setting it to 0.3 yields the best performance balance (MOTA: 63.24, HOTA: 34.84), with false positives (FP: 12,348) and false negatives (FN: 76,754) kept at reasonable levels. A threshold that is too high (e.g., 0.5) causes FP to surge because mismatched candidates are accepted too readily, while one that is too low (e.g., 0.2) makes matching overly strict and raises the risk of missed detections. The chosen value therefore supports stable system operation in complex scenarios.

Against state-of-the-art (SOTA) methods, our approach is strongly competitive. On VisDrone-MOT, it reaches a MOTA of 63.24 (22.68 higher than the second-ranked DeepOCSort) and an IDF1 of 59.41 (5.01 higher than DeepOCSort), with the lowest FP + FN (89,102, far below BoostTrack’s 191,693) and the most Mostly Tracked targets (MT: 1046). On UAVDT, it maintains a leading MOTA of 61.4 and IDF1 of 66.1, with the fewest Mostly Lost targets (ML: 56). It excels in scenarios with frequent occlusion, dense targets, and low detector confidence, delivering superior target retention and trajectory stability. Meanwhile, it preserves good real-time performance (20.5 FPS on UAVDT, 9.88 FPS on VisDrone-MOT) while improving accuracy, showing strong potential for engineering applications.
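As an illustration of how a Bhattacharyya distance threshold can gate appearance matching between color histograms, the sketch below uses the common form d = sqrt(1 - BC), where BC is the Bhattacharyya coefficient of the two normalized histograms. The exact distance variant and the accept-if-below-threshold rule are assumptions made for this example; only the threshold value 0.3 comes from the text, and the function names are hypothetical.

```python
import math


def bhattacharyya_distance(hist_a, hist_b):
    """Distance in [0, 1] between two histograms with the same bins."""
    if len(hist_a) != len(hist_b):
        raise ValueError("histograms must have the same number of bins")
    sum_a, sum_b = sum(hist_a), sum(hist_b)
    if sum_a <= 0 or sum_b <= 0:
        raise ValueError("histograms must have positive mass")
    # Bhattacharyya coefficient of the normalized histograms.
    bc = sum(math.sqrt((a / sum_a) * (b / sum_b))
             for a, b in zip(hist_a, hist_b))
    # Clamp to guard against tiny negative values from rounding.
    return math.sqrt(max(0.0, 1.0 - bc))


def appearance_match(hist_a, hist_b, threshold=0.3):
    """Assumed rule: accept the pair when the distance is within the threshold."""
    return bhattacharyya_distance(hist_a, hist_b) <= threshold


track_hist = [4, 4, 2]       # toy 3-bin color histogram of a track
similar_det = [4, 3, 3]      # detection with a similar color distribution
different_det = [0, 0, 10]   # detection with most mass in a different bin

print(appearance_match(track_hist, similar_det))    # True
print(appearance_match(track_hist, different_det))  # False
```

Under this rule, raising the threshold accepts more dissimilar pairs and lowering it rejects more genuine matches, which is consistent with the trade-off described for the values 0.5 and 0.2.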

Although this approach advances small-target tracking, areas for improvement include the following:

Current sparse optical flow estimation struggles with complex backgrounds or textureless targets; future work could integrate deep learning-based dense optical flow networks.

The multi-feature fusion strategy introduces computational overhead during re-detection; optimizing feature extraction/matching efficiency is critical for large-scale real-time deployment.

Cross-category tracking in open scenarios remains challenging; future research could incorporate open-vocabulary recognition and semantic modeling for more intelligent, generalized tracking.

In summary, the proposed multi-module collaborative framework offers strong practicality and scalability for MSOT tasks, providing novel insights and technical pathways for multi-target tracking in complex environments.