Research on Multi-Sensor Fusion Localization for Forklift AGV Based on Adaptive Weight Extended Kalman Filter

Abstract

1. Introduction

2. Positioning Principles of Sensors

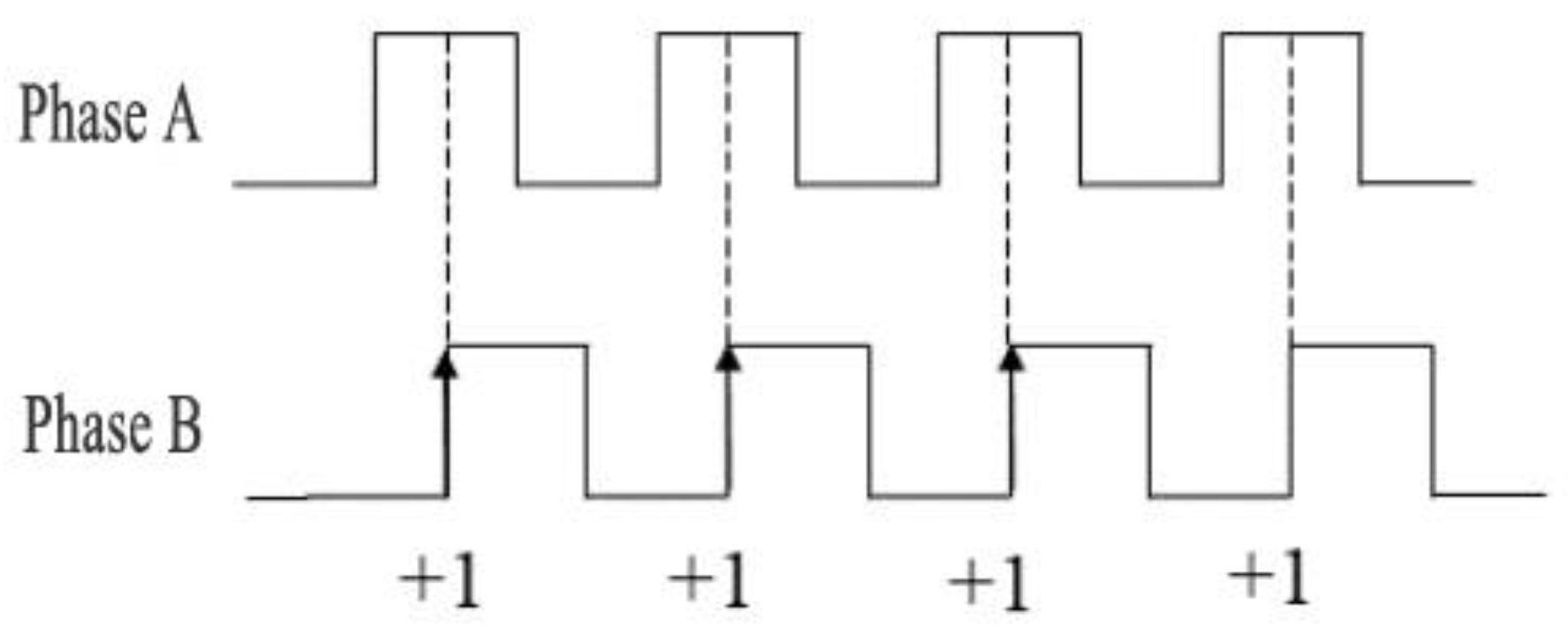

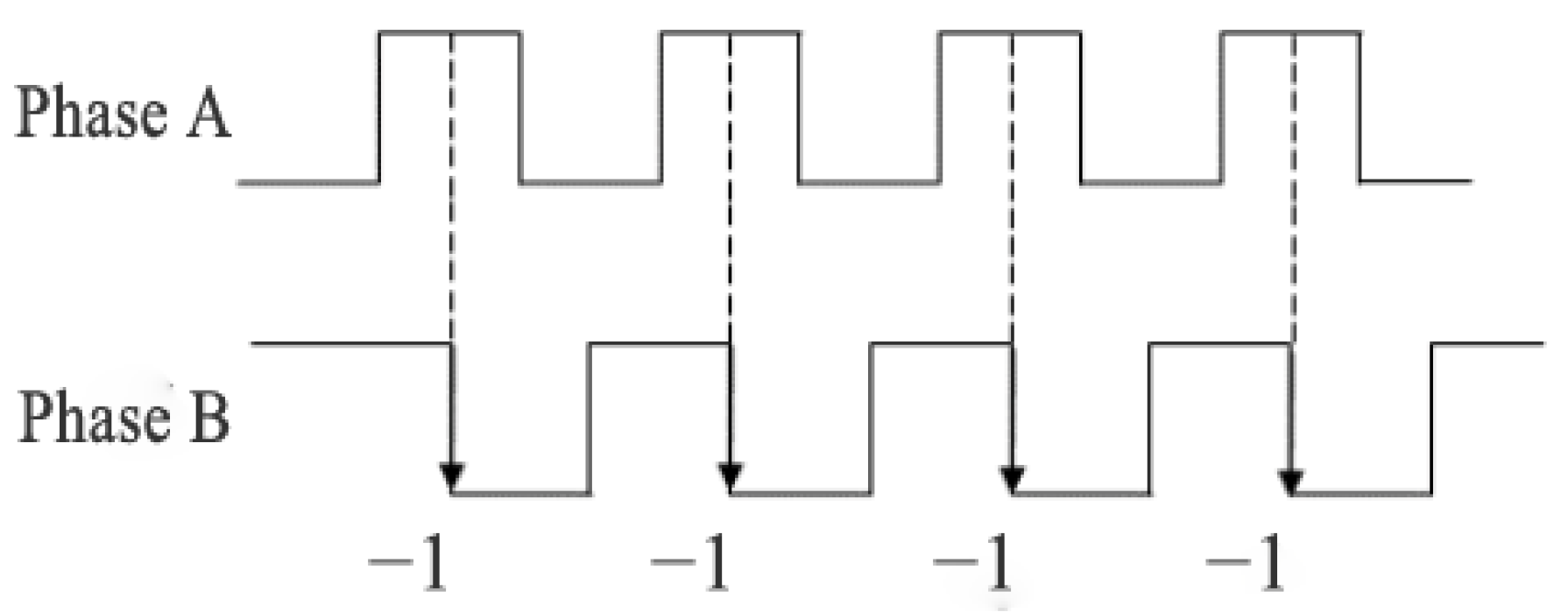

2.1. Odometer Positioning

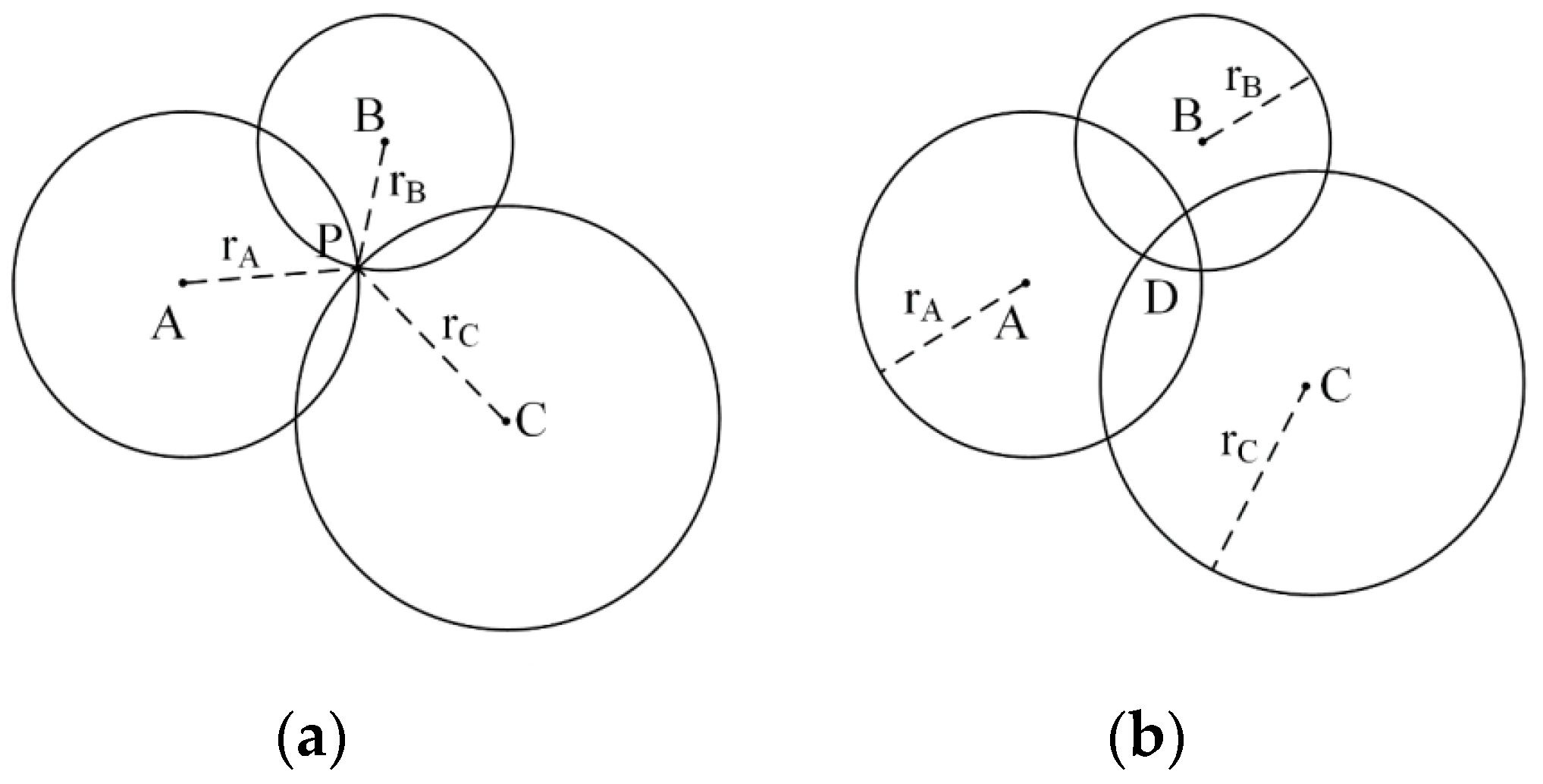

2.2. LiDAR Positioning

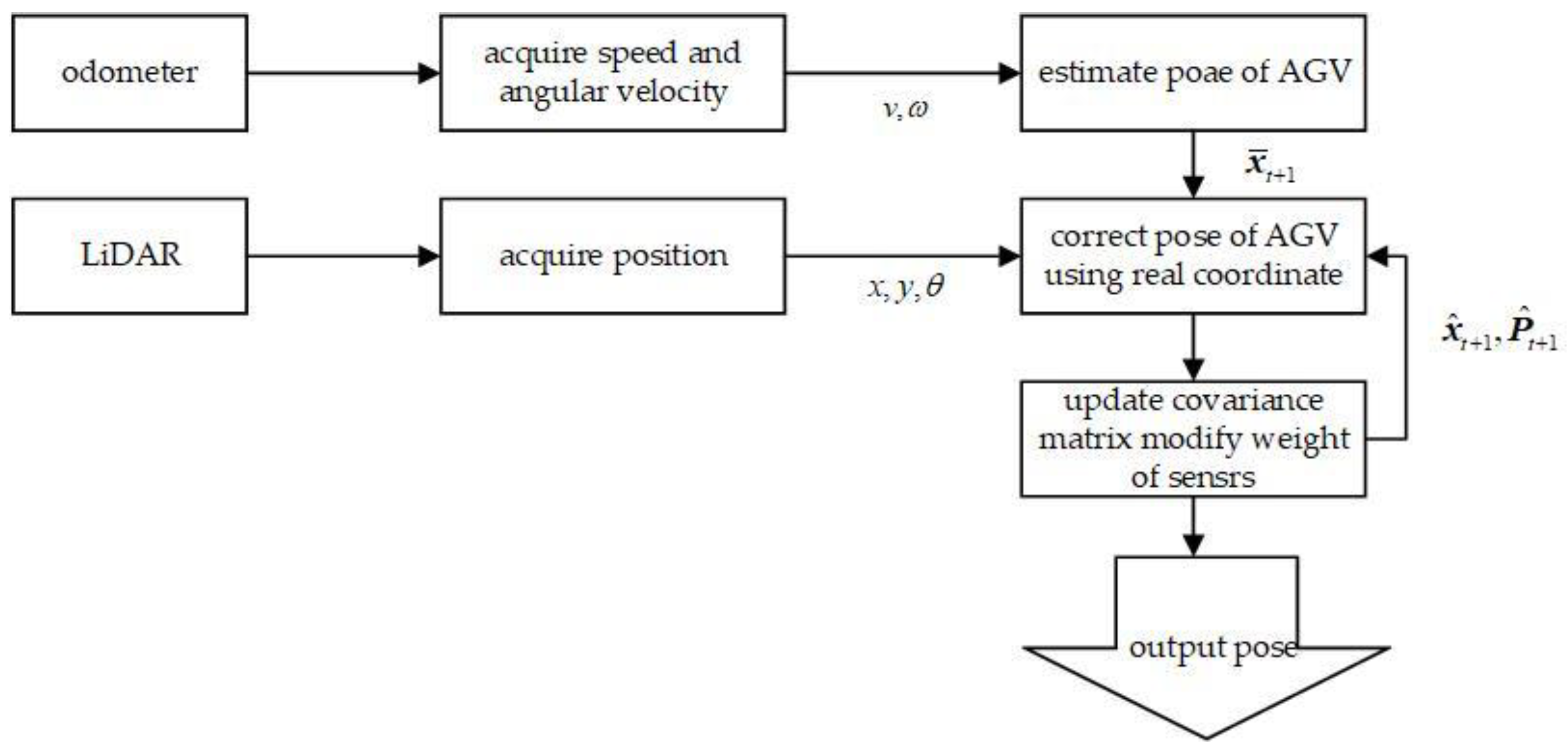

3. Improved EKF Fusion Localization

3.1. Odometer Prediction Model

3.2. LiDAR Measurement Model

3.3. Detect Sensor State and Modify Weight

4. Simulation and Experimental Studies

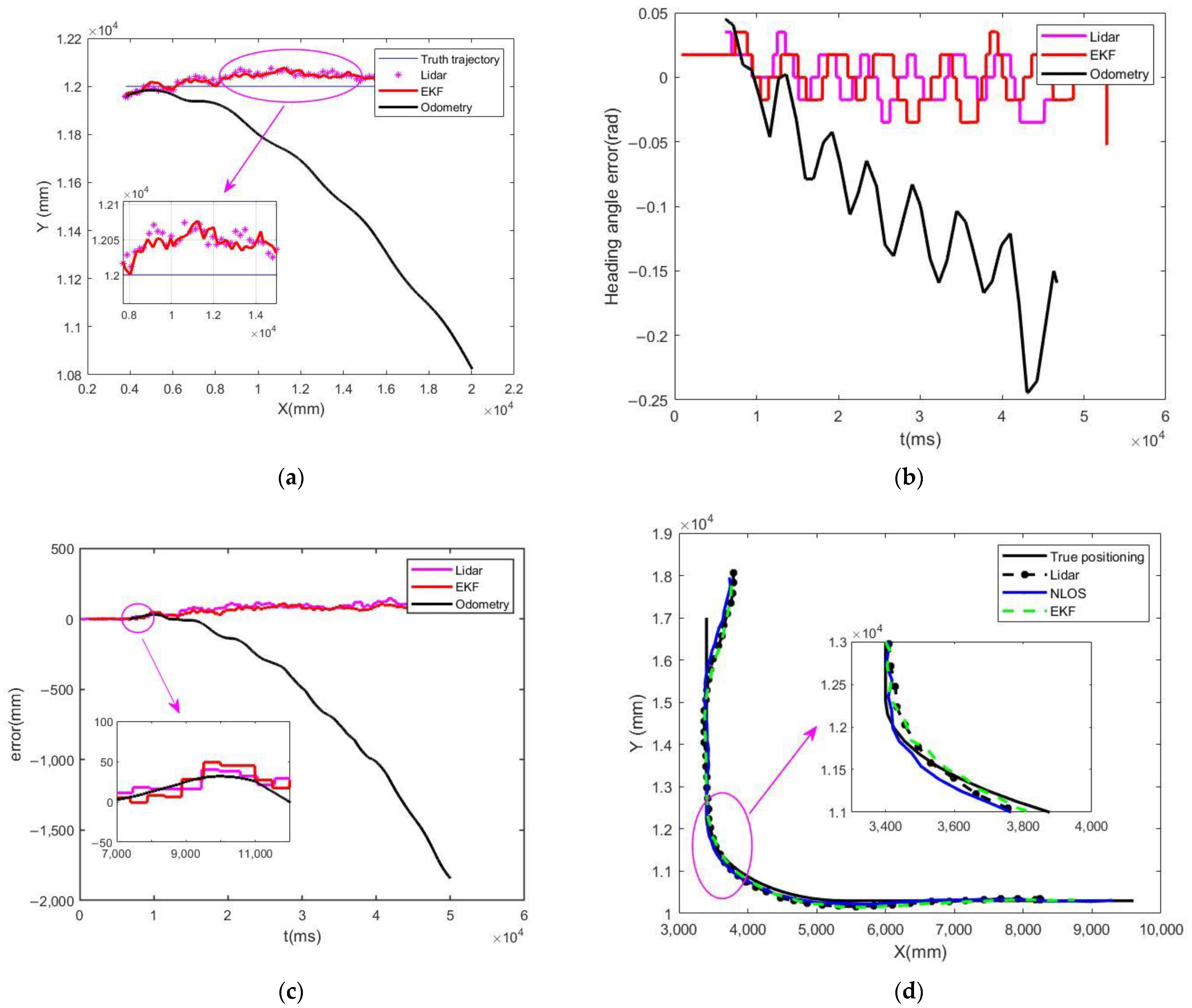

4.1. Simulation Studies

4.2. Experimental Studies

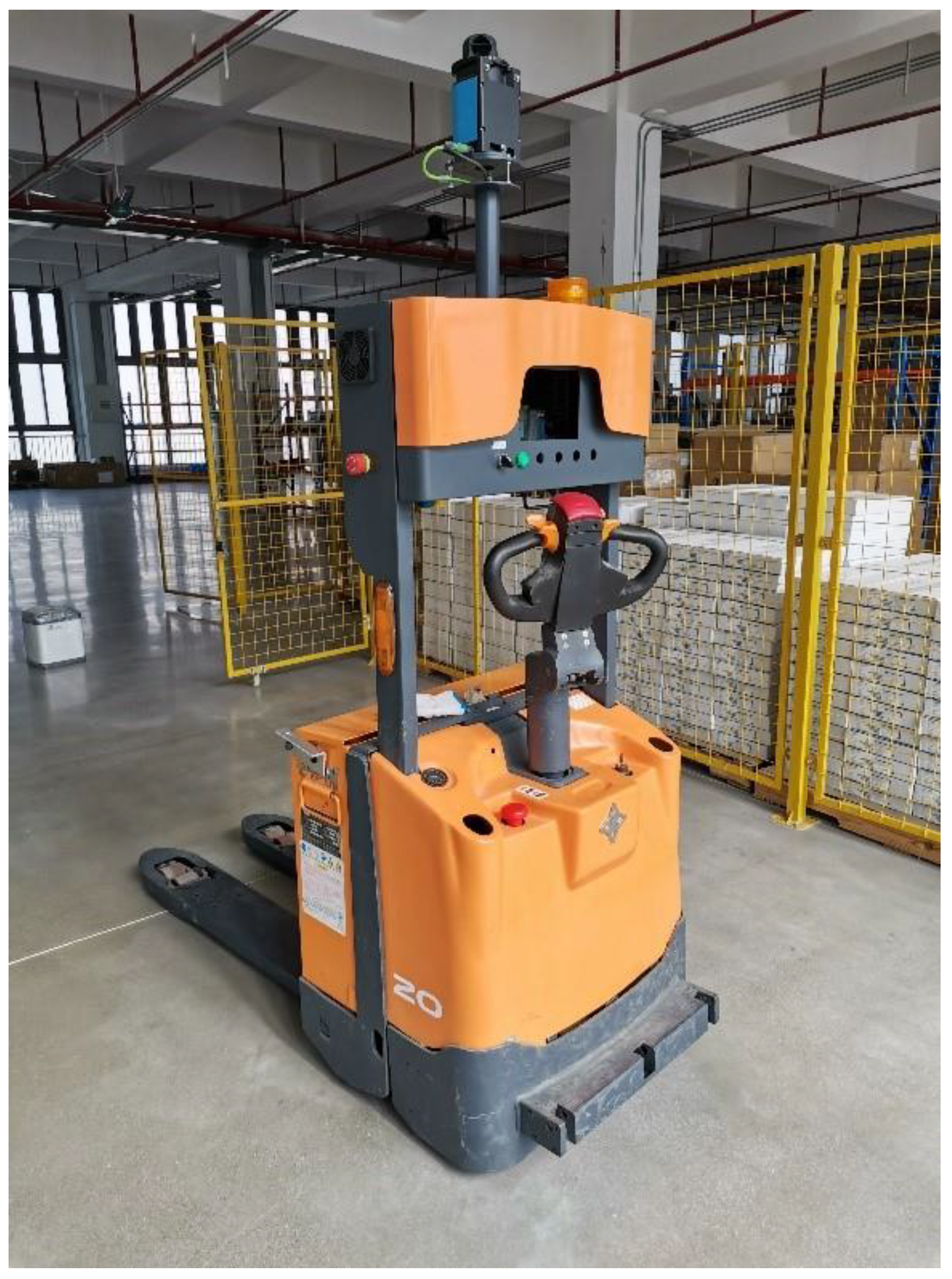

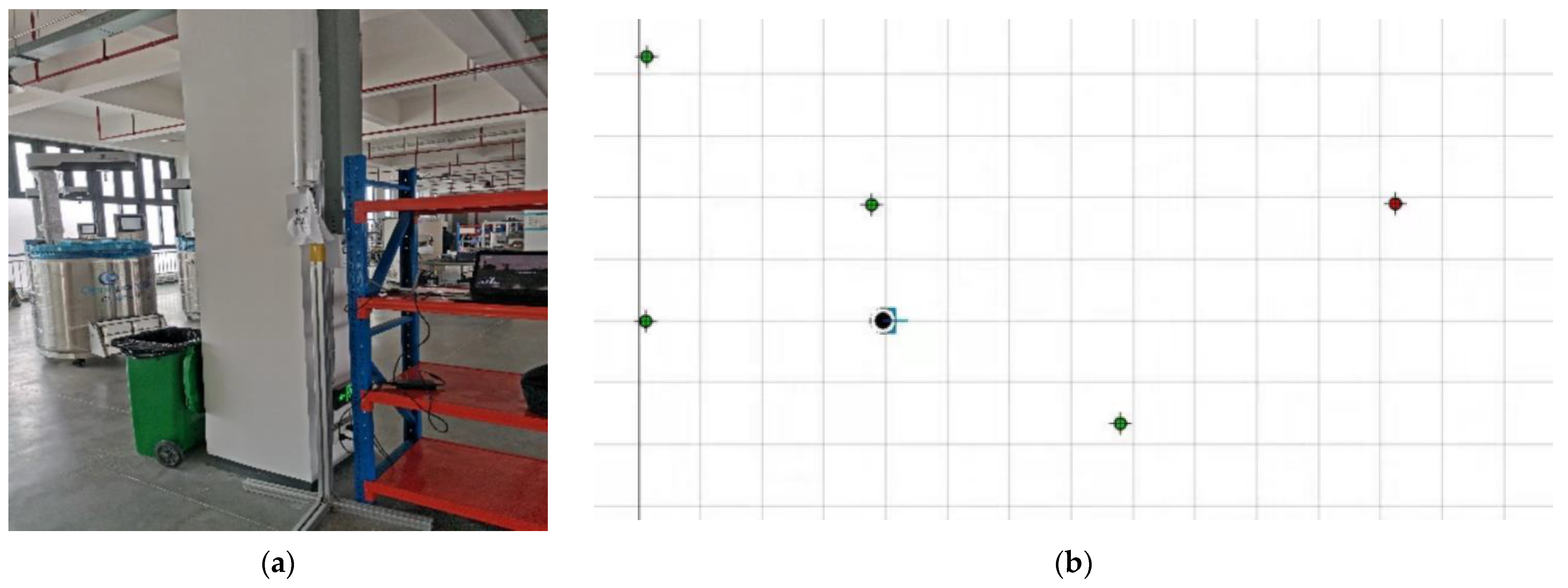

4.2.1. Environment and Equipment

4.2.2. Ground Truth Acquisition and Spatiotemporal Synchronization

- Ground Truth Acquisition and Calibration

- Spatiotemporal Synchronization

4.2.3. Fusion Localization Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Positioning Method | Mean Trajectory Error (mm) | Maximum Trajectory Error (mm) | Minimum Trajectory Error (mm) |
|---|---|---|---|
| LiDAR | 28 | 57 | 1.2 |
| Odometry | 100 | 2477 | 0.9 |
| EKF | 10 | 29 | 0.6 |

| Positioning Method | Mean Trajectory Error (mm) | Maximum Trajectory Error (mm) | Minimum Trajectory Error (mm) |
|---|---|---|---|
| LiDAR | 21 | 70 | 1 |
| Odometry | 921 | 1843 | 2 |
| EKF | 13 | 61 | 1 |
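
As a reading aid for the two tables above, the relative reduction in mean trajectory error achieved by the EKF fusion can be computed directly from the reported values. The following is a minimal sketch: the function name `relative_reduction` and the labels "first table" and "second table" are illustrative assumptions, not taken from the paper, and only the mean errors (in mm) are copied from the tables.

```python
def relative_reduction(baseline_mm: float, fused_mm: float) -> float:
    """Fractional reduction of the fused error relative to a baseline error."""
    return (baseline_mm - fused_mm) / baseline_mm


# Mean trajectory errors in mm, copied from the two tables above.
# The table labels are placeholders (assumption), not the paper's captions.
mean_errors = {
    "first table": {"LiDAR": 28.0, "Odometry": 100.0, "EKF": 10.0},
    "second table": {"LiDAR": 21.0, "Odometry": 921.0, "EKF": 13.0},
}

for table, errors in mean_errors.items():
    for baseline in ("LiDAR", "Odometry"):
        pct = 100.0 * relative_reduction(errors[baseline], errors["EKF"])
        print(f"{table}: EKF mean error is {pct:.1f}% lower than {baseline}")
```

Under these values, the EKF mean error is roughly 64% lower than LiDAR alone in the first table and roughly 38% lower in the second; relative to odometry alone, the reductions are 90% and about 99%.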

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Q.; Wu, J.; Liao, Y.; Huang, B.; Li, H.; Zhou, J. Research on Multi-Sensor Fusion Localization for Forklift AGV Based on Adaptive Weight Extended Kalman Filter. Sensors 2025, 25, 5670. https://doi.org/10.3390/s25185670
