SSTA-ResT: Soft Spatiotemporal Attention ResNet Transformer for Argentine Sign Language Recognition

Abstract

1. Introduction

1.1. Improvements in Recognition Algorithms

1.2. Format of Sign Language Recognition Datasets

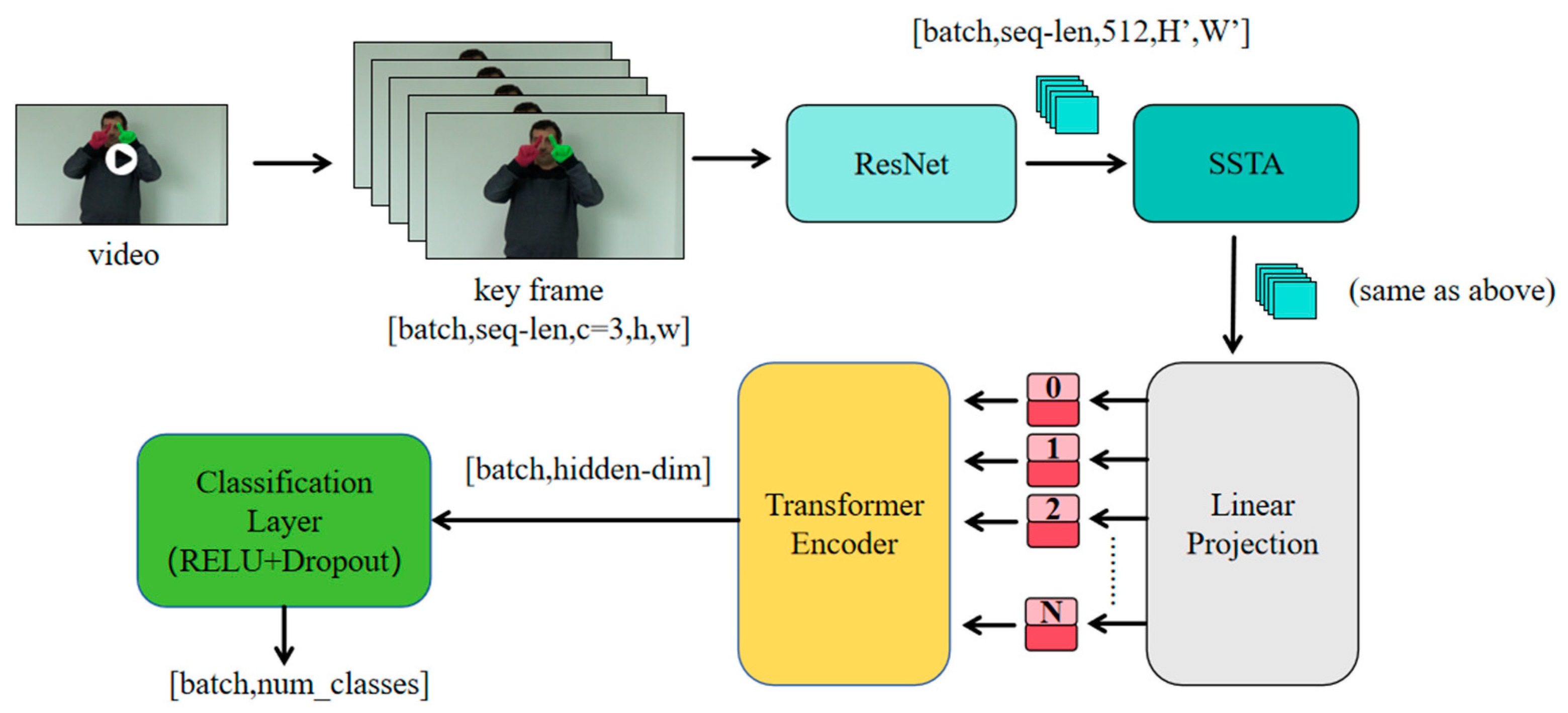

- We propose SSTA-ResT, a novel fusion network for action video classification and recognition. It uses a residual network to extract spatial features, a spatiotemporal attention module to capture the relationship between temporal and spatial features, and a Transformer encoder to model long-term temporal dependencies. This combination improves classification performance on the evaluated dataset.

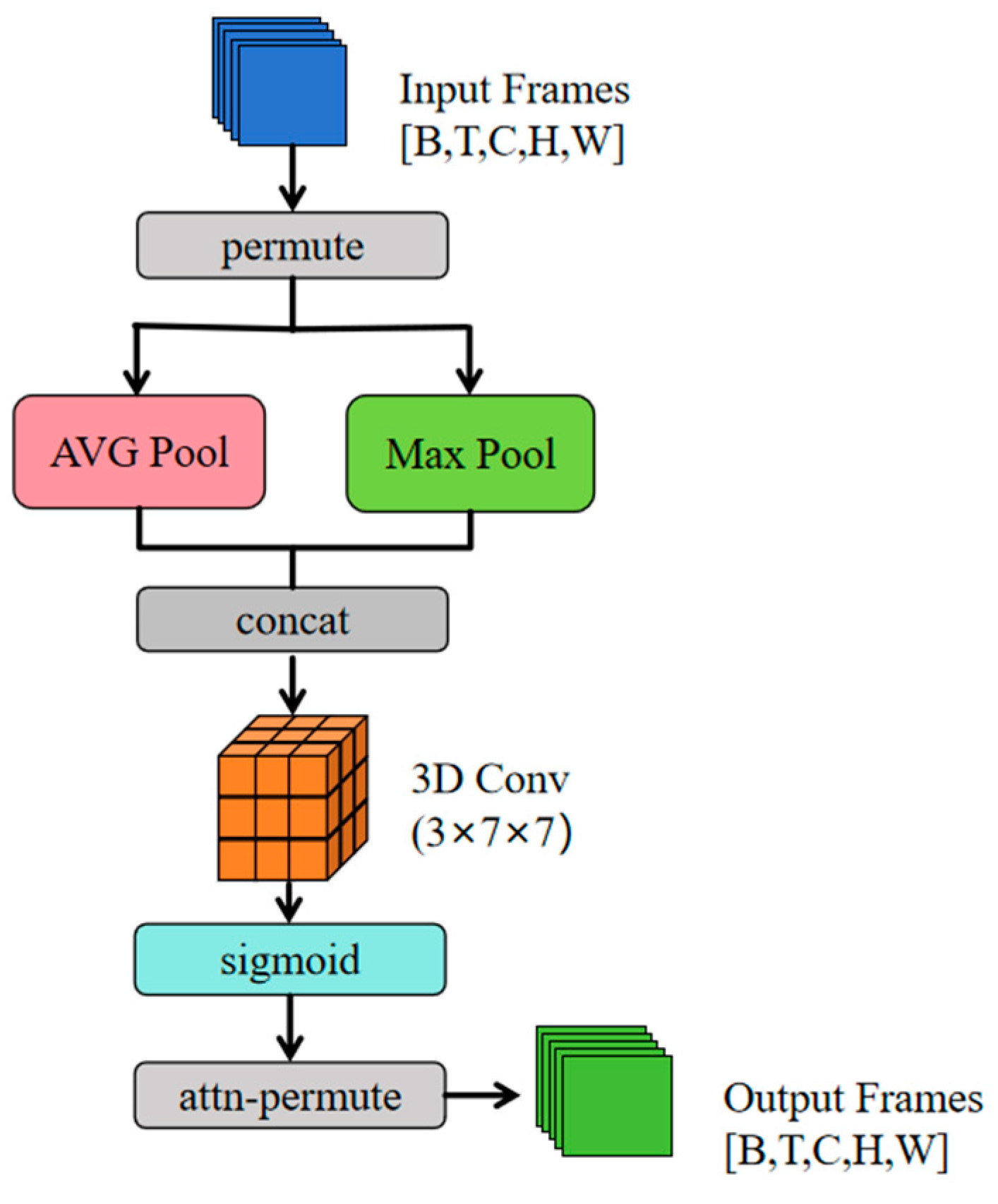

- We propose SSTA (Soft Spatiotemporal Attention), a lightweight convolutional soft attention mechanism designed for video sequence feature enhancement. It strengthens spatiotemporal correlations by generating dual complementary representations.

- Our word-level sign language recognition approach outperforms the alternative methods we compare against. In our experiments, the model shows substantial improvements in recognition performance over prior studies.
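To make the idea of soft attention over a video sequence concrete, here is a minimal sketch in plain Python. It is not the authors' SSTA module, whose convolutional design and dual complementary representations are not described in this extract. It only illustrates the generic principle: each frame receives a softmax weight, and its features are rescaled by that weight instead of being kept or dropped outright. The function names and the `query` scoring vector are hypothetical; in a trained model the scoring parameters would be learned.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_temporal_attention(frame_features, query):
    """Reweight per-frame feature vectors with soft attention.

    frame_features: list of T vectors, each a list of D floats
                    (e.g. one pooled backbone feature per frame).
    query: hypothetical scoring vector of length D (assumed, would be learned).
    Returns (weights, attended); weights sum to 1 across the T frames.
    """
    # One scalar relevance score per frame (dot product with the query).
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in frame_features]
    weights = softmax(scores)
    # "Soft" selection: every frame contributes, scaled by its weight.
    attended = [[w * f for f in feat] for w, feat in zip(weights, frame_features)]
    return weights, attended


# Toy input: 3 frames, 2 feature dimensions each.
features = [[0.1, 0.0], [0.9, 0.2], [0.3, 0.1]]
weights, attended = soft_temporal_attention(features, query=[2.0, 1.0])
```

In this toy input the second frame has the largest score, so it receives the largest weight, while the other frames still contribute with smaller weights.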

2. Literature Review

3. Methods and Materials

3.1. Overview

Algorithm 1: SSTA-ResT: Spatio-Temporal Attention ResNet Transformer

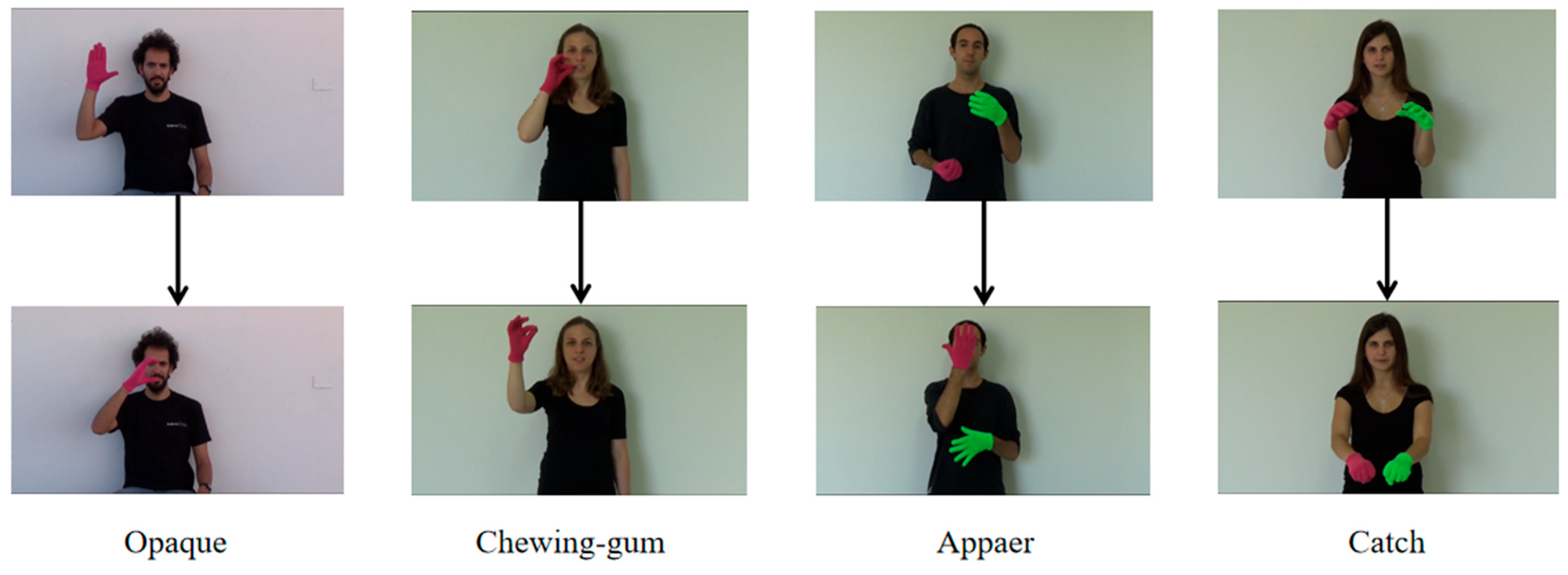

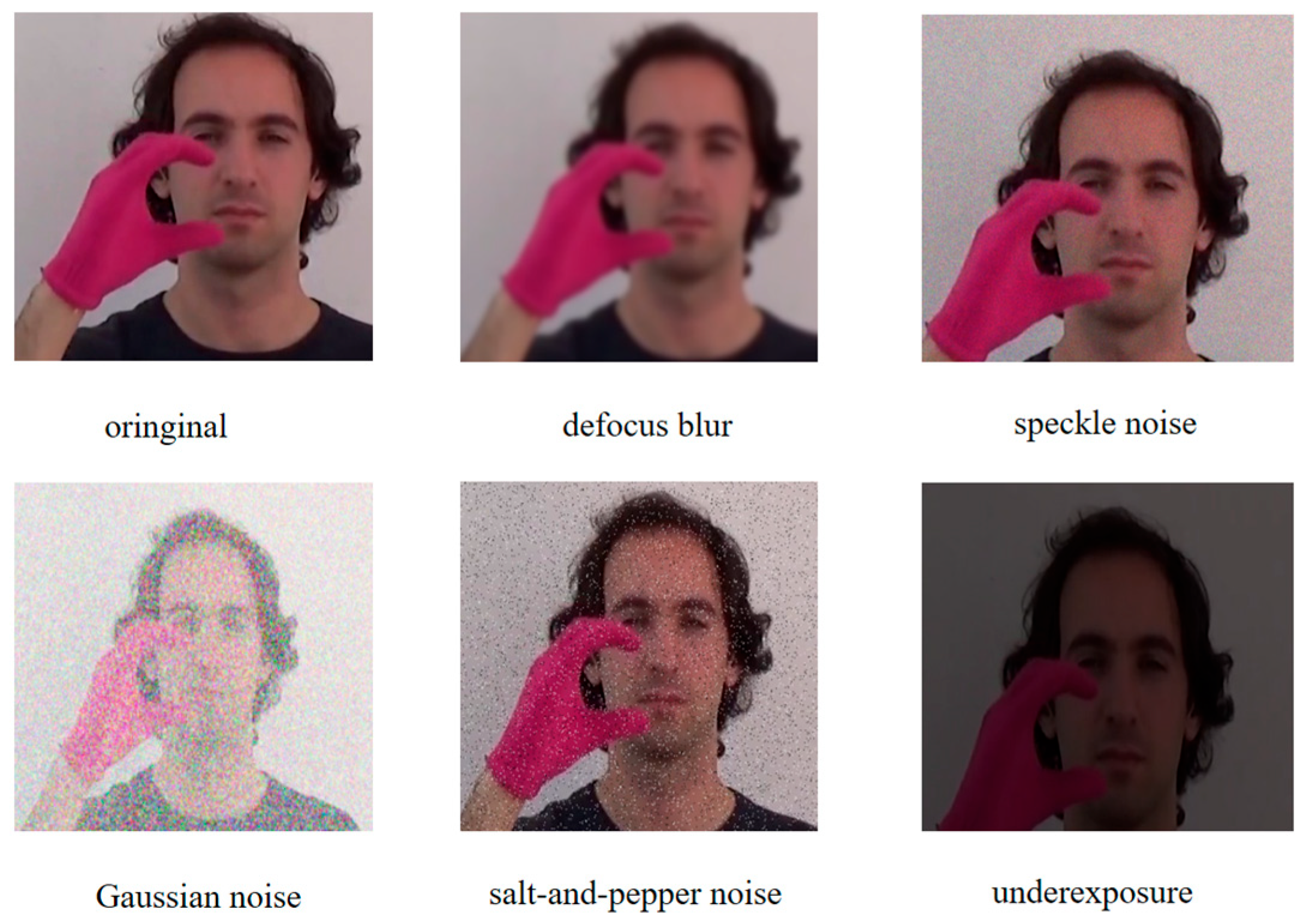

3.2. Dataset Preparation and Preprocessing

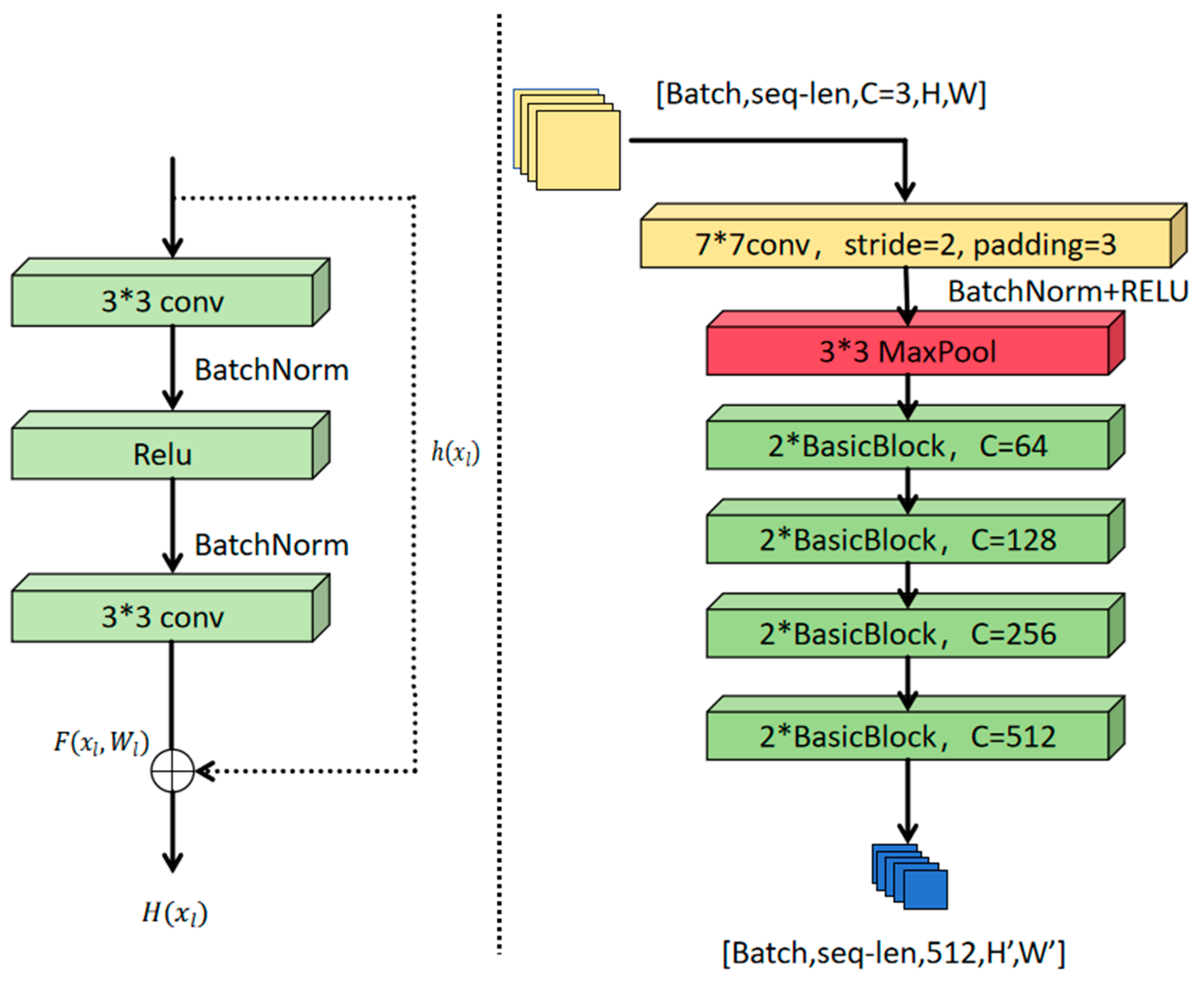

3.3. ResNet Feature Extraction Module

3.4. Soft Spatiotemporal Attention Mechanism (SSTA)

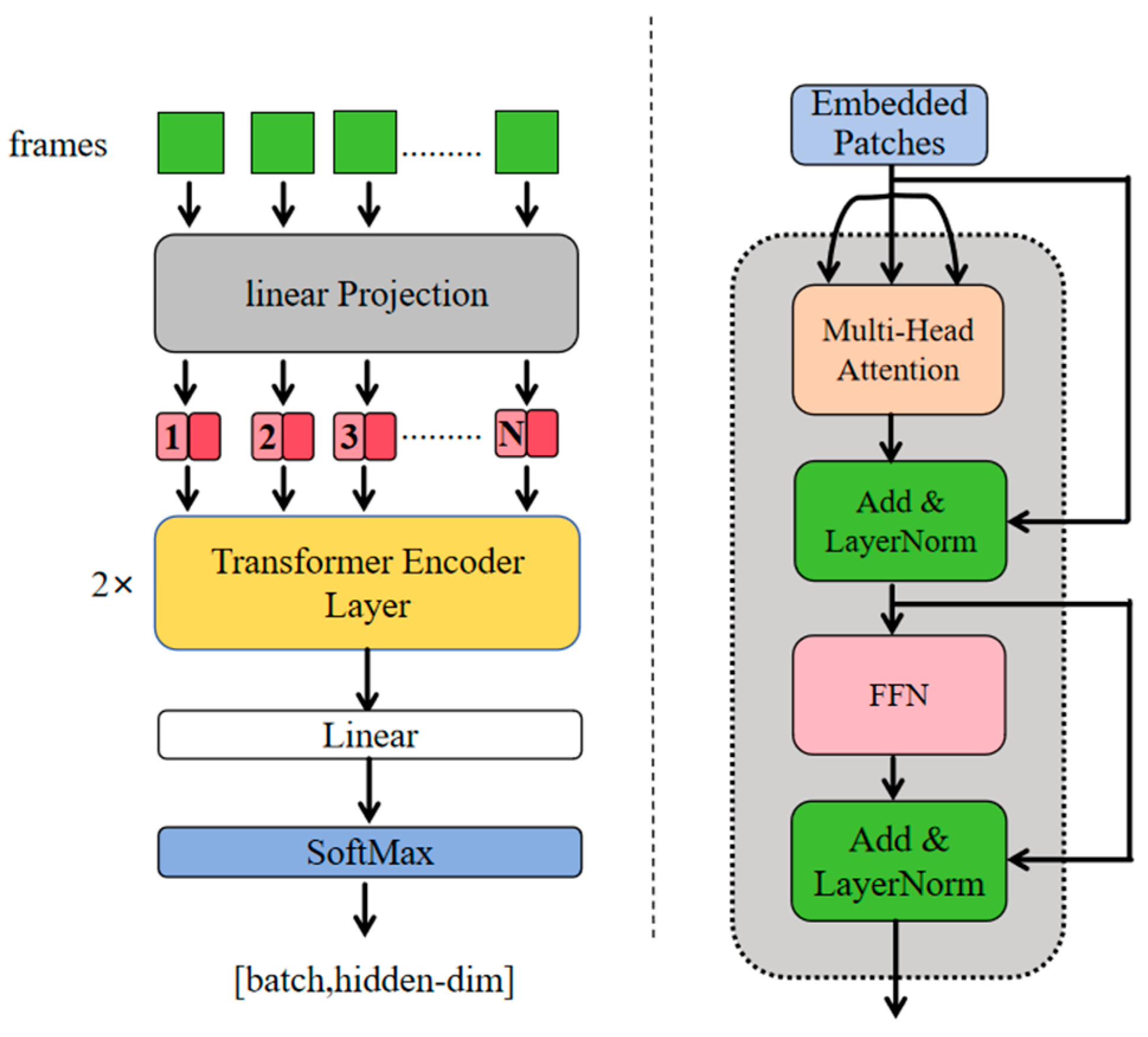

3.5. Transformer Encoder

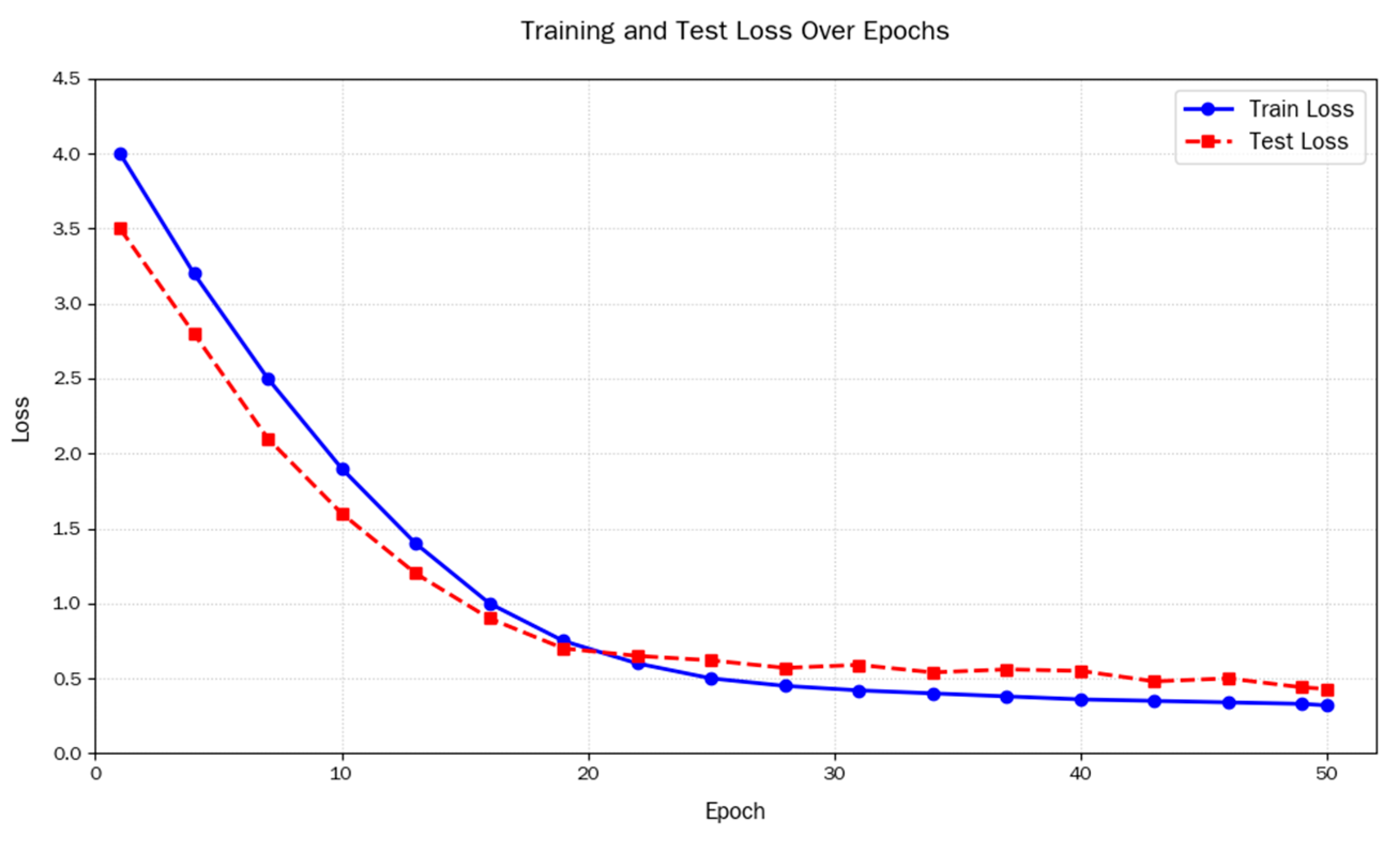

4. Experimental Results and Discussion

4.1. Indicators

4.2. Hyperparameter Study

4.3. Ablation Study

4.4. Comparison Study

4.5. Hardware Requirements

5. Conclusions

- Real-world deployment trials and continuous sign language recognition under unconstrained conditions.

- The current method focuses solely on word-level recognition and has not yet addressed the segmentation and recognition of continuous sign language sentences.

- The model’s real-time performance requires further optimization to meet the low-latency requirements of actual interactive scenarios.

- Exploring and enhancing the model’s generalization ability under different recording conditions (including camera distance and differences in the signer’s preferred hand).

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. World Report on Hearing; World Health Organization: Geneva, Switzerland, 2021; ISBN 978-92-4-002048-1. Available online: https://www.who.int/publications/i/item/9789240020481 (accessed on 30 July 2025).

- Guo, Z.; Hou, Y.; Li, W. Sign language recognition via dimensional global–local shift and cross-scale aggregation. Neural Comput. Appl. 2023, 35, 12481–12493.

- Rastgoo, R.; Kiani, K.; Escalera, S. Sign Language Recognition: A Deep Survey. Expert Syst. Appl. 2021, 164, 113794.

- Gaye, S. Sign Language Recognition and Interpretation System. Int. J. Sci. Res. Eng. Manag. 2022, 6.

- Alyami, S.; Luqman, H.; Hammoudeh, M. Reviewing 25 years of continuous sign language recognition research: Advances, challenges, and prospects. Inf. Process. Manag. 2024, 61, 103774.

- Alsharif, B.; Alanazi, M.; Ilyas, M. Machine Learning Technology to Recognize American Sign Language Alphabet. In Proceedings of the 2023 IEEE 20th International Conference on Smart Communities: Improving Quality of Life using AI, Robotics and IoT (HONET), Boca Raton, FL, USA, 4–6 December 2023; pp. 173–178.

- Wattamwar, A. Sign Language Recognition using CNN. Int. J. Res. Appl. Sci. Eng. Technol. 2021, 9, 826–830.

- Murugan, P. Learning The Sequential Temporal Information with Recurrent Neural Networks. arXiv 2018, arXiv:1807.02857.

- Pricope, T.V. An Analysis on Very Deep Convolutional Neural Networks: Problems and Solutions. Stud. Univ. Babeș-Bolyai Inform. 2021, 66, 5.

- As’ari, M.A.; Sufri, N.A.J.; Qi, G.S. Emergency sign language recognition from variant of convolutional neural network (CNN) and long short term memory (LSTM) models. Int. J. Adv. Intell. Inform. 2024, 10, 64.

- Saleh, Y.; Issa, G.F. Arabic Sign Language Recognition through Deep Neural Networks Fine-Tuning. Int. J. Online Biomed. Eng. (iJOE) 2020, 16, 71–83.

- Singh, P.K. Sign Language Detection Using Action Recognition LSTM Deep Learning Model. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–5.

- Karanjkar, V.; Bagul, R.; Singh, R.R.; Shirke, R. A Survey of Sign Language Recognition. Int. J. Sci. Res. Eng. Manag. 2023, 7, 1–11.

- Surya, S.; S, S.; R, S.; G, H. Sign Tone: Two Way Sign Language Recognition and Multilingual Interpreter System Using Deep Learning. Indian J. Comput. Sci. Technol. 2024, 3, 148–153.

- Amin, M.S.; Rizvi, S.T.H.; Hossain, M.M. A Comparative Review on Applications of Different Sensors for Sign Language Recognition. J. Imaging 2022, 8, 98.

- Liu, S.; Qing, W.; Zhang, D.; Gan, C.; Zhang, J.; Liao, S.; Wei, K.; Zou, H. Flexible staircase triboelectric nanogenerator for motion monitoring and gesture recognition. Nano Energy 2024, 128, 109849.

- Song, Y.; Liu, M.; Wang, F.; Zhu, J.; Hu, A.; Sun, N. Gesture Recognition Based on a Convolutional Neural Network–Bidirectional Long Short-Term Memory Network for a Wearable Wrist Sensor with Multi-Walled Carbon Nanotube/Cotton Fabric Material. Micromachines 2024, 15, 185.

- Jain, V.; Jain, A.; Chauhan, A.; Kotla, S.S.; Gautam, A. American Sign Language recognition using Support Vector Machine and Convolutional Neural Network. Int. J. Inf. Technol. 2021, 13, 1193–1200.

- Novianty, A.; Azmi, F. Sign Language Recognition using Principal Component Analysis and Support Vector Machine. IJAIT (Int. J. Appl. Inf. Technol.) 2021, 4, 49.

- Chang, C.-C.; Pengwu, C.-M. Gesture recognition approach for sign language using curvature scale space and hidden Markov model. In Proceedings of the 2004 IEEE International Conference on Multimedia and Expo (ICME) (IEEE Cat. No.04TH8763), Taipei, Taiwan, 27–30 June 2004; pp. 1187–1190.

- Dhulipala, S.; Adedoyin, F.F.; Bruno, A. Sign and Human Action Detection Using Deep Learning. J. Imaging 2022, 8, 192.

- Adão, T.; Oliveira, J.; Shahrabadi, S.; Jesus, H.; Fernandes, M.; Costa, Â.; Ferreira, V.; Gonçalves, M.F.; Lopéz, M.A.G.; Peres, E.; et al. Empowering Deaf-Hearing Communication: Exploring Synergies between Predictive and Generative AI-Based Strategies towards (Portuguese) Sign Language Interpretation. J. Imaging 2023, 9, 235.

- Ma, Y.; Xu, T.; Kim, K. Two-Stream Mixed Convolutional Neural Network for American Sign Language Recognition. Sensors 2022, 22, 5959.

- Teran-Quezada, A.A.; Lopez-Cabrera, V.; Rangel, J.C.; Sanchez-Galan, J.E. Sign-to-Text Translation from Panamanian Sign Language to Spanish in Continuous Capture Mode with Deep Neural Networks. Big Data Cogn. Comput. 2024, 8, 25.

- Ariesta, M.C.; Wiryana, F.; Suharjito; Zahra, A. Sentence Level Indonesian Sign Language Recognition Using 3D Convolutional Neural Network and Bidirectional Recurrent Neural Network. In Proceedings of the 2018 Indonesian Association for Pattern Recognition International Conference (INAPR), Jakarta, Indonesia, 7–8 September 2018; pp. 16–22.

- Muthu Mariappan, H.; Gomathi, V. Indian Sign Language Recognition through Hybrid ConvNet-LSTM Networks. Emit. Int. J. Eng. Technol. 2021, 9, 182–203.

- Ronchetti, F.; Quiroga, F.; Estrebou, C.; Lanzarini, L.; Rosete, A. Sign Languague Recognition Without Frame-Sequencing Constraints: A Proof of Concept on the Argentinian Sign Language. In Proceedings of the 15th Ibero-American Conference on AI (IBERAMIA 2016), San José, Costa Rica, 23–25 November 2016; pp. 338–349.

- Neto, G.M.R.; Junior, G.B.; de Almeida, J.D.S.; de Paiva, A.C. Sign Language Recognition Based on 3D Convolutional Neural Networks. In Proceedings of the 15th International Conference on Image Analysis and Recognition (ICIAR 2018), Póvoa de Varzim, Portugal, 27–29 June 2018; pp. 399–407.

- Huang, J.; Chouvatut, V. Video-Based Sign Language Recognition via ResNet and LSTM Network. J. Imaging 2024, 10, 149.

- Tan, C.K.; Lim, K.M.; Chang, R.K.; Lee, C.P.; Alqahtani, A. HGR-ViT: Hand Gesture Recognition with Vision Transformer. Sensors 2023, 23, 5555.

- Alnabih, A.F.; Maghari, A.Y. Arabic sign language letters recognition using Vision Transformer. Multimed. Tools Appl. 2024, 83, 81725–81739.

- Ronchetti, F.; Quiroga, F.M.; Estrebou, C.; Lanzarini, L.; Rosete, A. LSA64: An Argentinian Sign Language Dataset. arXiv 2016, arXiv:2310.17429.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. arXiv 2015, arXiv:1502.01852.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the ICLR 2021, Oral Session 7, Virtual, 3–7 May 2021.

- Marais, M.; Brown, D.; Connan, J.; Boby, A.; Kuhlane, L. Investigating Signer-Independent Sign Language Recognition on the LSA64 Dataset. In Proceedings of the Southern Africa Telecommunication Networks and Applications Conference (SATNAC), Western Cape, South Africa, 28–30 August 2022.

- Woods, L.T.; Rana, Z.A. Constraints on Optimising Encoder-Only Transformers for Modelling Sign Language with Human Pose Estimation Keypoint Data. J. Imaging 2023, 9, 238.

- Hu, J.; Shen, L.; Sun, G.; Albanie, S. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 2011–2023.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 13708–13717.

- Feichtenhofer, C. X3D: Expanding Architectures for Efficient Video Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 200–210.

- Li, Y.; Ji, B.; Shi, X.; Zhang, J.; Kang, B.; Wang, L. TEA: Temporal Excitation and Aggregation for Action Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Navin, N.; Farid, F.A.; Rakin, R.Z.; Tanzim, S.S.; Rahman, M.; Rahman, S.; Uddin, J.; Karim, H.A. Bilingual Sign Language Recognition: A YOLOv11-Based Model for Bangla and English Alphabets. J. Imaging 2025, 11, 134.

| Batch Size | Learning Rate | Optimizer | Accuracy | Precision | F1-Score |
|---|---|---|---|---|---|
| 8 | 1 × 10⁻⁴ | Adam | 96.25% | 97.18% | 0.9671 |
| 16 | 1 × 10⁻⁴ | Adam | 90.88% | 94.65% | 0.9269 |
| 4 | 1 × 10⁻⁴ | Adam | 88.19% | 90.87% | 0.8952 |
| 8 | 1 × 10⁻⁵ | Adam | 63.82% | 65.81% | 0.6479 |
| 8 | 1 × 10⁻³ | SGD | 1.56% | 9.42% | 0.0268 |
| 8 | 1 × 10⁻⁴ | AdamW | 93.98% | 95.76% | 0.9486 |
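For readers who want to reproduce indicators of the kind reported in this table, the following is a minimal sketch of how accuracy, precision, and F1-score can be computed from predicted and true class labels in a multi-class setting. The macro averaging used here (an unweighted mean over classes) is an assumption, since this extract does not state which averaging scheme the authors used. The function name `macro_metrics` and the toy labels are hypothetical.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1.

    Macro averaging is an assumption made for illustration only.
    """
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p, but it was wrong
            fn[t] += 1  # true class t was missed

    precisions, recalls, f1s = [], [], []
    for c in labels:
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy example with three classes and six samples.
metrics = macro_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0])
```

Precision and F1-score are meaningful only alongside the averaging scheme, so any comparison with the table should use the same scheme as the original experiments.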

| Method | Backbone | Neck | Classifier | Accuracy | Precision | F1-Score | Params |
|---|---|---|---|---|---|---|---|
| SSTA-ResT (ours) | ResNet | SSTA | Transformer | 96.25% | 97.18% | 0.9671 | 11.66 M |
| Ablation1 | ResNet | - | Transformer | 91.78% | 95.57% | 0.9353 | 11.66 M |
| Ablation2 | ResNet | SSTA | - | 67.72% | 67.28% | 0.6750 | 11.30 M |
| Ablation3 | ResNet | SE [38] | Transformer | 89.52% | 91.24% | 0.9037 | 11.70 M |
| Ablation4 | ResNet | CA [39] | Transformer | 89.76% | 92.09% | 0.9091 | 11.69 M |
| Ablation5 | MobileNet | SSTA | Transformer | 86.03% | 87.32% | 0.8669 | 4.05 M |
| Ablation6 | CNN | SSTA | Transformer | 86.11% | 88.76% | 0.8792 | 4.73 M |
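The ablation rows are easier to compare as differences from the full model. The short sketch below copies the accuracy and parameter counts from the table and computes, for each variant, the accuracy drop in percentage points and the parameter difference in millions. The descriptive labels in parentheses are our reading of the Backbone, Neck, and Classifier columns, not names used by the authors.

```python
# (accuracy in %, parameters in millions), copied from the ablation table.
ablation = {
    "SSTA-ResT (full)": (96.25, 11.66),
    "Ablation1 (no SSTA)": (91.78, 11.66),
    "Ablation2 (no Transformer)": (67.72, 11.30),
    "Ablation3 (SE neck)": (89.52, 11.70),
    "Ablation4 (CA neck)": (89.76, 11.69),
    "Ablation5 (MobileNet backbone)": (86.03, 4.05),
    "Ablation6 (CNN backbone)": (87.11, 4.73),
}

full_acc, full_params = ablation["SSTA-ResT (full)"]

# For each variant: (accuracy drop in points, parameter difference in M),
# both measured relative to the full model.
deltas = {
    name: (round(full_acc - acc, 2), round(full_params - params, 2))
    for name, (acc, params) in ablation.items()
    if name != "SSTA-ResT (full)"
}

for name, (acc_drop, param_diff) in deltas.items():
    print(f"{name}: -{acc_drop:.2f} points, {param_diff:+.2f} M params vs. full")
```

Read this way, removing SSTA costs 4.47 points with no parameter change visible at the table's two-decimal precision, while removing the Transformer classifier costs 28.53 points and saves 0.36 M parameters.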

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Zhou, Z.; Xia, E.; Yin, X. SSTA-ResT: Soft Spatiotemporal Attention ResNet Transformer for Argentine Sign Language Recognition. Sensors 2025, 25, 5543. https://doi.org/10.3390/s25175543
