1. Introduction

Currently, the convergence of artificial intelligence (AI), the Internet of Things (IoT), and automation technologies is facilitating intelligent transformation across various industries [

1,

2,

3]. Robotic technology represents a key driver of this evolution [

4]. Advancements in intelligent algorithms and hardware enable robots to undertake increasingly complex tasks. As a critical subset of robots, mobile robots are being deployed extensively in industry [

5,

6], logistics [

7], and agriculture [

8].

Despite their diverse designs and functionalities, mobile robots commonly face challenges in safe obstacle avoidance and efficient navigation during operation [

9]. In dynamic and complex environments, they must accurately identify obstacles, plan safe trajectories, and make real-time adjustments [

10]. Ensuring task completion necessitates advanced sensor systems for real-time environmental perception [

11]. Moreover, using intelligent algorithms to process information, execute dynamic path planning, and adapt to uncertainties is critical, and enhancing these capabilities is key to ensuring efficient and safe operation in complex environments [

12,

13,

14].

Beyond traditional obstacle avoidance, navigating shared environments requires socially aware navigation capabilities [

15]. Robots must not only avoid collisions but also adhere to social norms, exhibit predictable behavior, and respect personal space to achieve smooth and comfortable human–robot coexistence [

16,

17]. This involves understanding and predicting the motivations and intentions of human behavior and has become a research focus in the field of human–robot interaction (HRI) [

18,

19]. However, although the ultimate goal of social navigation is to achieve natural and comfortable human–machine coexistence, collision risk mitigation remains the most basic and critical safety prerequisite. Any social navigation strategy must be based on absolute collision avoidance in order to further optimize comfort and efficiency [

20,

21].

While techniques have previously been developed for obstacle avoidance and navigation by mobile robots, significant limitations persist [

22,

23]. Traditional control methods such as the artificial potential field (APF) method [

24] and dynamic window approach (DWA) [

25], often reliant on predefined rules and fixed path planning, perform adequately in simple tasks but lack adaptability and flexibility in complex, dynamic environments [

26,

27,

28]. Their limited capacity for autonomous adjustment frequently results in decision errors or inefficiency when handling intricate scenarios [

29]. Furthermore, the safety assurance mechanisms of conventional obstacle avoidance strategies remain inadequate, leading to elevated collision risks during operation. These constraints hinder their widespread deployment in complex and variable settings [

30,

31].

Advances in AI have facilitated the application of reinforcement learning (RL) in mobile robotics [

32]. As a machine learning approach, RL demonstrates significant potential for use in autonomous robot decision-making [

33]. It addresses key challenges including task execution policy optimization, environmental adaptability enhancement, and autonomous decision-making for complex tasks [

34,

35]. By learning optimal policies through environmental interaction, RL enables effective decision-making in dynamic and uncertain environments [

36].

To enhance the time efficiency for mobile robot navigation in crowded environments, Zhou et al. [

37] proposed a social graph-based double dueling deep Q-Network (DQN). This approach employs a social attention mechanism to extract effective graph representations, optimizes the state–action value approximator, and leverages simulated experiences generated by a learned environmental model for further refinement, demonstrating significantly improved success rates in crowded navigation tasks. Li et al. [

38] introduced a fused deep deterministic policy gradient (DDPG) method, integrating a multi-branch deep learning network with a time-critical reward function, which effectively enhanced the convergence velocity and navigation performance in complex environments.

On the other hand, the control barrier function (CBF) has garnered attention in regard to obstacle avoidance control for mobile robots [

39,

40,

41]. Originating from control theory, the CBF provides robust control guarantees for safety-critical systems operating under constraints [

42]. For mobile robot obstacle avoidance, the CBF enforces strict safety conditions during motion by constructing safety constraints [

43,

44].

In order to further enhance the obstacle avoidance performance of the CBF, researchers have proposed various improvements and hybrid approaches. Singletary et al. [

45] conducted a comparative analysis of the performance of the CBF and artificial potential fields (APFs) in robotic obstacle avoidance, demonstrating that the CBF generates smoother trajectories, effectively mitigates oscillations, and offers enhanced safety guarantees. Jian et al. [

46] introduced a dynamic control barrier function, integrating it with model predictive control (MPC) to ensure collision-free trajectories for robots operating in uncertain and dynamic environments.

This paper proposes a novel safe reinforcement learning framework integrating the control barrier function (CBF) and proximal policy optimization (PPO) to reduce collisions in navigation for a mecanum wheel robot. Implemented within the ROS Gazebo simulation environment, the framework leverages CBF-based safety constraints formulated as a quadratic programming problem. This dynamically adjusts potentially unsafe actions generated by the PPO policy, guaranteeing collision mitigation while preserving motion efficiency. Comparative experiments against baseline algorithms (PPO, DQN, and DDPG) are conducted to rigorously evaluate the method’s effectiveness across key safety and navigation performance metrics.

The remainder of this paper is organized as follows:

Section 2 introduces the robot model and basic methods;

Section 3 presents the main research and methods;

Section 4 describes the simulation results and and provides a discussion; and the final section,

Section 5, concludes this paper.

2. Preliminary Knowledge

This section establishes the theoretical groundwork for the mobile robot platform, detailing its kinematic model and core implementation approaches. To support the development of subsequent control strategies and navigation algorithms, the unique omnidirectional motion capabilities inherent to the mecanum wheel robot are mathematically characterized. This section then presents fundamental obstacle avoidance techniques utilizing relevant control methodologies.

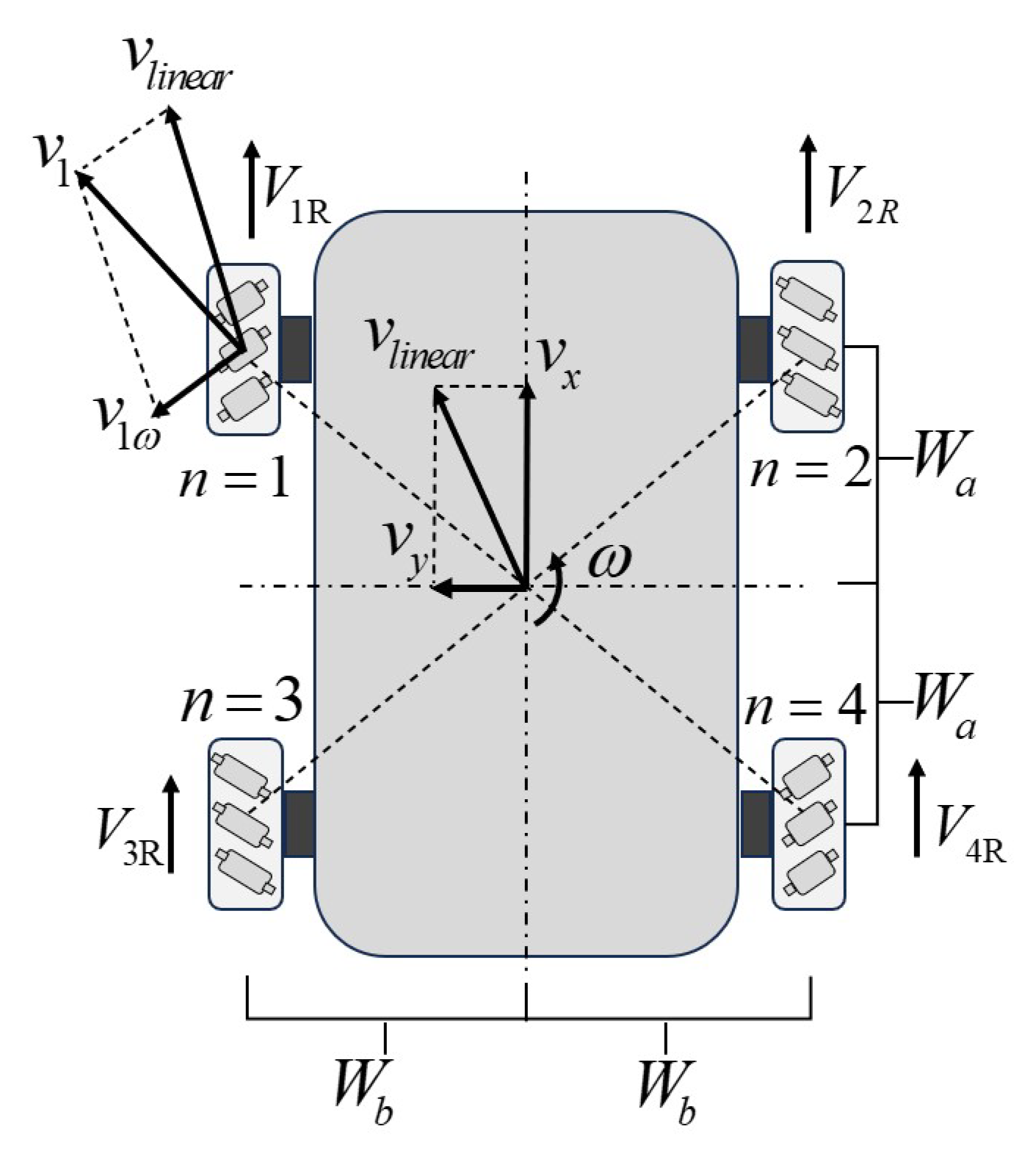

2.1. Kinematic Model of Robot

The mecanum wheel robot can achieve omnidirectional movement due to its unique wheel hub structure. The passive rollers installed at a 45° angle around each wheel allow the robot to translate or rotate in any direction without changing the orientation of the robot. Its kinematic model defines the relationship between the wheel velocities and the robot’s holistic motion. This representation is illustrated in

Figure 1.

2.1.1. Forward and Inverse Kinematics Models

The parameters related to the robot chassis are defined in

Figure 1. In the model

represents half of the body length (from the front wheel to the center of the rear wheel), and

represents half of the body width (the distance between the left and right wheel centers). The robot’s linear velocity is

, and

and

are the velocities in the

x and

y directions, respectively.

In addition

is the linear velocity of each wheel, and

is the angular velocity of the chassis corresponding to each wheel, and

represents the velocity at which the wheel moves forward. In summary, the forward kinematics model of the robot can be described as

where

represents the characteristic length of the robot chassis. The matrix

J establishes the mapping relationship between the wheel velocities and the chassis motion in the body-fixed frame. The corresponding inverse kinematics of the mecanum wheel robot chassis are shown as

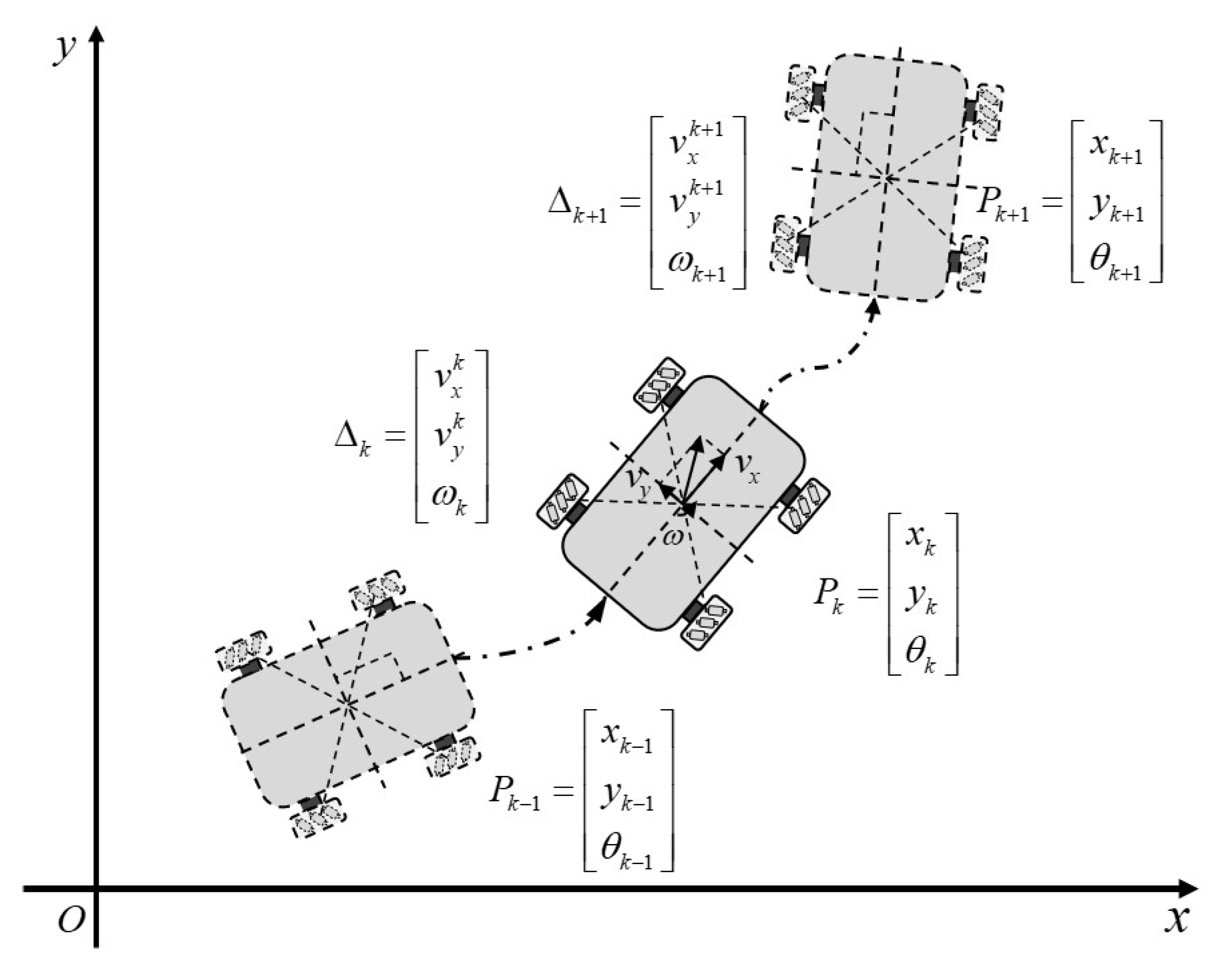

Therefore, the velocity of the robot chassis and the velocity of the four wheels can be converted into each other using the forward and inverse kinematics, which facilitates control of the robot’s trajectory. Based on this, a schematic diagram of the robot’s wheel odometry model is shown in

Figure 2.

2.1.2. Ideal Model of Robot’s Odometry

Under ideal conditions, the relationship between the robot’s current position,

, and the previous position,

, is expressed by the offset

. The next posture,

, is the current posture,

, plus the offset

, and the corresponding expression equation is

where

, and

. Assuming that the time,

, between two points is very small, the next position can be expressed as the linear and angular velocity offsets of the current position, and the expanded component form is as follows:

Therefore, the derived forward and inverse kinematic models, combined with the discrete-time odometry update equations, provide a complete mathematical framework to predict the robot’s chassis motion based on the wheel velocities, which forms the basis for controlling the robot’s trajectory.

2.2. Control Barrier Function

In the process of robot movement and navigation, obstacle avoidance and velocity limiting are indispensable to prevent uncontrollable behavior. This section mainly introduces the control barrier function (CBF), which is often used in the control field as a controller and can also be used to constrain a robot’s actions.

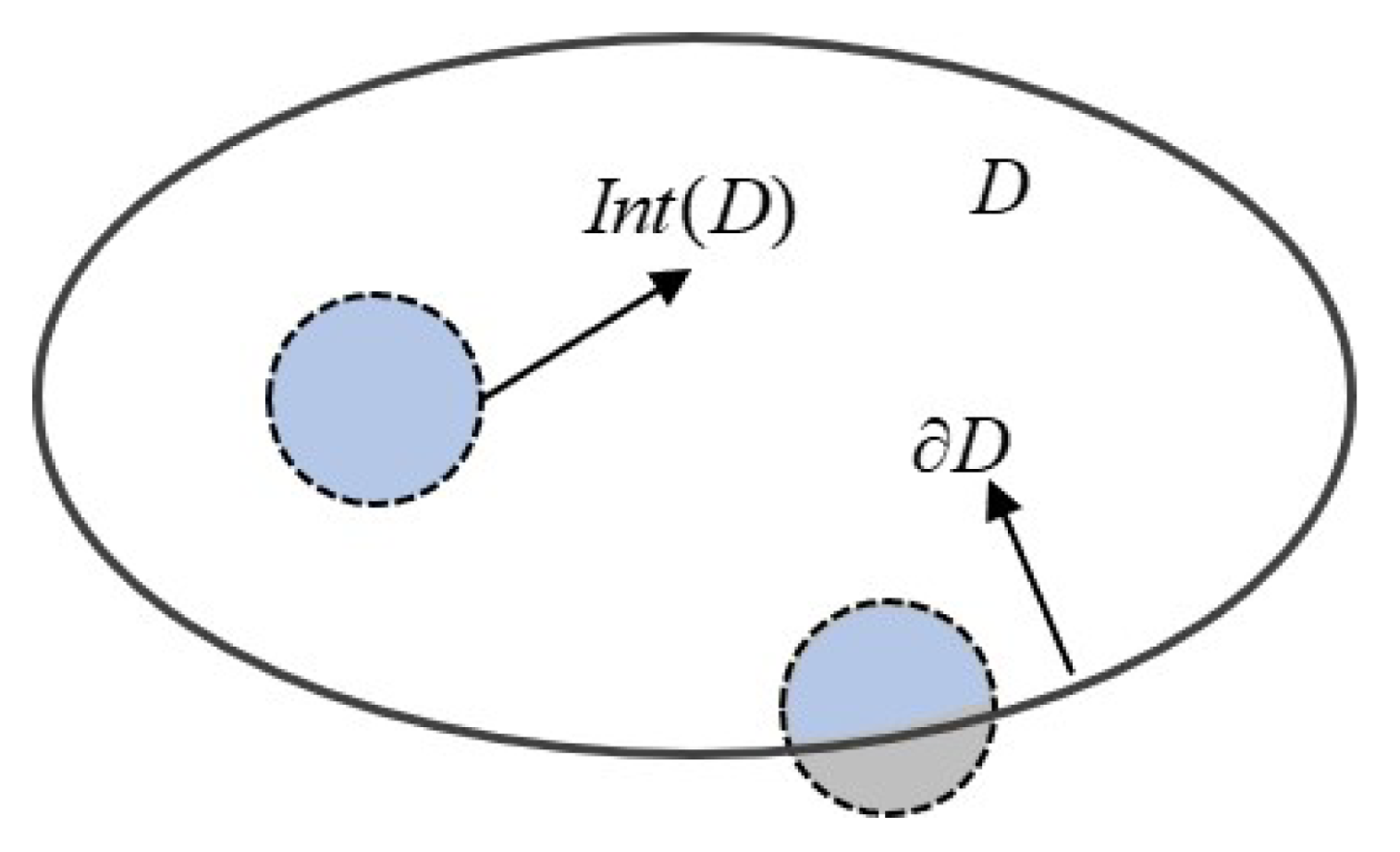

2.2.1. Definition of Safety Set

For a dynamic system, the safety set

is defined as the set of safe states in the system. A diagram of the safety set is shown in

Figure 3, and

is defined as

where the safe set

contains the status

. When the system state

x is safe, it means that it is inside the safety combination

or just on the boundary, which is

. On the contrary,

means that state

x is outside the safe set.

2.2.2. Definition of Control Barrier Funciton

Consider a control affine system and the corresponding dynamic system in the nonlinear case, which can be expressed as

where

, and

F is Lipschitz continuous. The behavior of the system is

f when there is no control input, and

g represents the control of the system and affects the changes in the system through a control input,

u.

For

,

, and

, there exists

, such that any state,

x, in the set

satisfies the condition

where

is considered to be a CBF, and

is an extended

K function.

2.3. Basic Theory of Reinforcement Learning

Reinforcement learning (RL) is a machine learning approach characterized by an agent learning to optimize its actions through trial-and-error interactions with a dynamic environment, aimed at discovering strategies that maximize the long-term returns. Its core feature is learning through a trial-and-error mechanism, relying on reward signals to guide behavior optimization.

RL usually models a problem as a Markov decision process (MDP). A MDP can be represented by a five-tuple, .

S: The state space represents the set of all the possible states of the agent in the environment. The state represents a specific situation in the environment at a certain moment and contains all the information needed by the agent.

A: The Action Space and the actions that the agent can take during an interaction with the environment are usually represented by , where represents the set of possible actions in state s.

P: The State Transition Function represents the probability of transitioning to state if an action, a, is executed in state s: that is, .

R: The reward function can be written as and refers to the immediate reward feedback obtained by the agent when it performs action a in state s.

: The discount factor is used to calculate future cumulative rewards, . A larger discount factor is suitable for tasks that focus on achieving long-term goals, while a smaller discount factor is more suitable for tasks that emphasize immediate feedback.

A robot can be regarded as an intelligent agent in reinforcement learning. The process of training it to interact with the environment involves RL. The corresponding environmental interaction is shown in

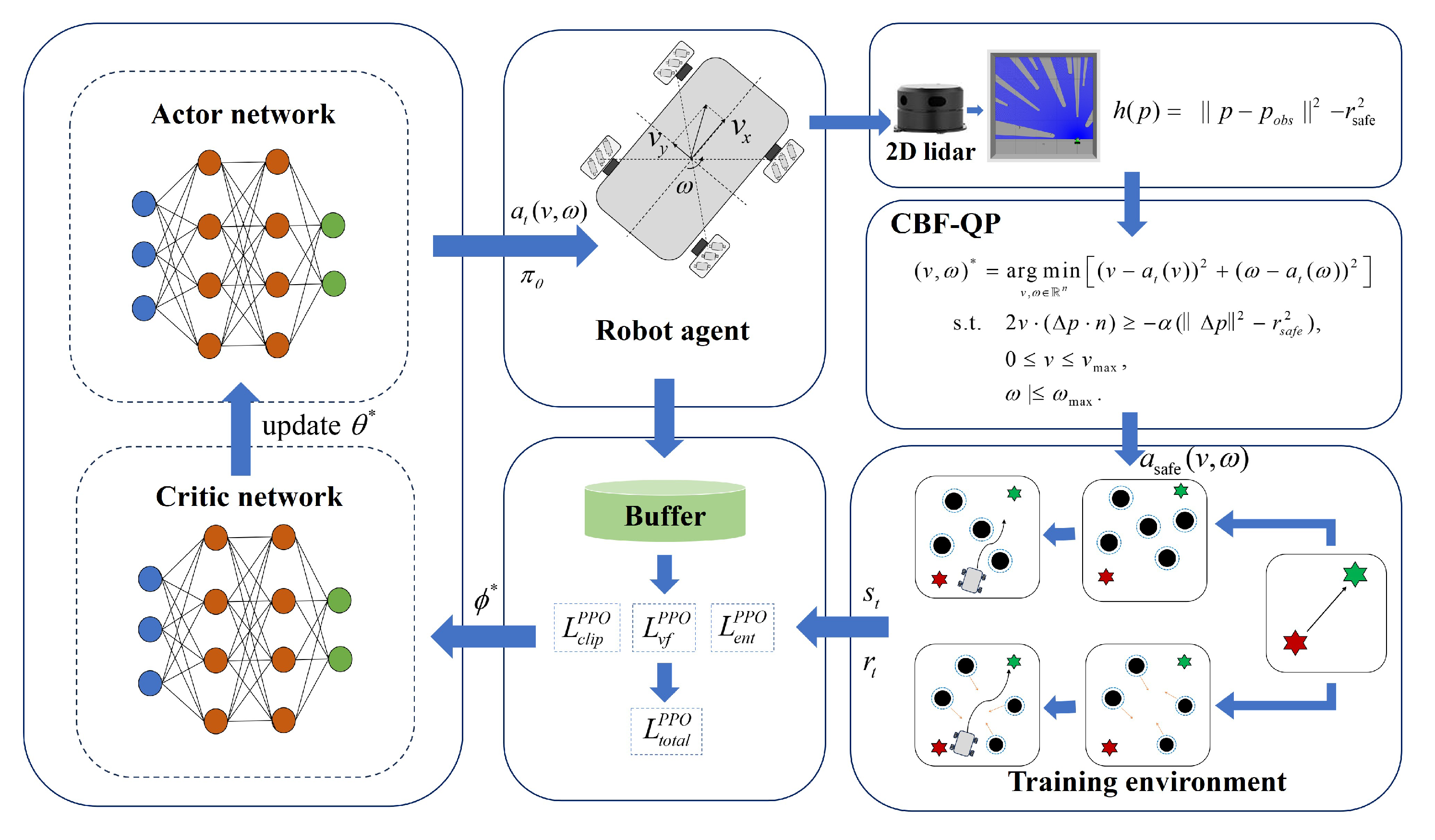

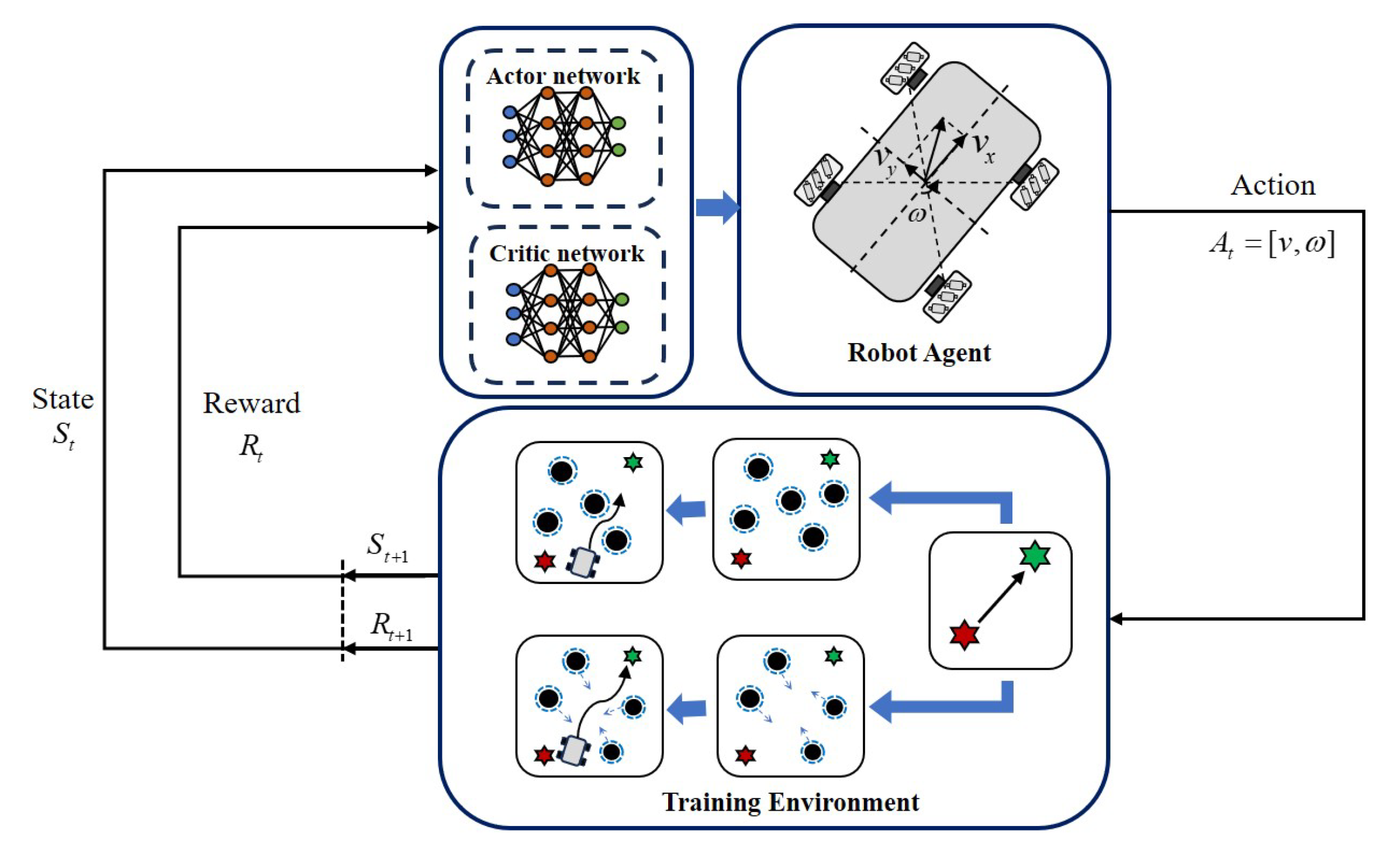

Figure 4.

Figure 4 depicts the iterative learning process through which an agent learns to interact with its environment within an Actor–Critic framework. The Actor generates an action mechanism and communicates it to the robot agent. The robot explores the environment and obtains the corresponding rewards and states, which are then passed to the Critic network for value calculation. The Critic updates the Actor with the calculated parameters and outputs a better action strategy.

3. Main Research Content and Methods

This section mainly describes the motion control method based on proximal policy optimization (PPO) and the design of the real-time constraint mechanism using the CBF. On this basis, a novel fusion framework is constructed to implement a strategy for safe reinforcement learning, so as to ensure that the robot maintains safety performance while exerting its exploration advantages and further improve the stability of reinforcement learning.

3.1. Proximal Policy Optimization

The PPO objective function and Actor–Critic architecture are defined as key mechanisms for generating efficient exploratory navigation strategies for omnidirectional mobile robotic platforms. Their core function is to learn adaptive obstacle avoidance strategies for use in complex or dynamic scenarios and output robot velocity commands that balance navigation task completion and safety.

3.1.1. PPO Principle Description

As an Actor–Critic derivative, PPO enforces policy update constraints via a trust-region-inspired mechanism to ensure monotonic improvement. By introducing an objective function clipping mechanism, it achieves efficient learning while ensuring training stability. Its core components and formulas are as follows:

where

and

are the parameters of the policy network and the value network, respectively, the current state is

, and

is the generated action.

First, we need to calculate the generalized advantage estimation (GAE), calculate the TD error based on

, and calculate the advantage

based on the TD error

. The specific equation is expressed as

with

being the discount factor and

the GAE parameter.

The importance sampling ratio in the policy network update

is

Next, we need to calculate the clipping objective function, which is also one of the core components of PPO. It can be expressed as

where

is the clipping threshold, and it limits the importance sampling ratio

to within the interval

.

For an action with a positive advantage , the objective function is clipped to prevent the policy from increasing the probability of the action too aggressively . Conversely, for an action with a negative advantage , the objective function is clipped to prevent the probability from decreasing too much .

In the Critic network update, the value loss function that needs to be calculated is as follows:

where

is the cumulative return. At the same time, PPO introduces an entropy regularization term to enhance the exploration of strategies:

where

is the entropy of the strategy.

Then the total loss function can be calculated as

where

and

are weight parameters.

3.1.2. PPO Algorithm Flow

The PPO algorithm is an efficient policy gradient method. By introducing an objective function clipping mechanism, it can achieve efficient learning while ensuring training stability. The core idea of PPO is to limit the step size of policy updates to avoid performance crashes during training. The algorithm flow is shown in Algorithm 1.

| Algorithm 1 Proximal policy optimization strategy. |

Input: Initial policy , value , , , , , , K, M Output: Optimized , - 1:

Initialize , - 2:

for

to

K

do - 3:

Collect via - 4:

for each t in do - 5:

- 6:

- 7:

end for - 8:

- 9:

for episode to M do - 10:

Sample minibatch from - 11:

- 12:

for each in do - 13:

- 14:

- 15:

- 16:

- 17:

- 18:

end for - 19:

- 20:

- 21:

end for - 22:

end for - 23:

return ,

|

3.2. Application and Implementation of CBF

The CBF is specifically used for its safety conditions, which are based on the kinematic model of the omnidirectional mobile robot used in this study. The focus is on demonstrating how this function acts as a real-time safety filter, applying online corrections to the original motion output by the PPO algorithm to achieve collision avoidance during navigation. Its quadratic programming formulation explicitly serves a single goal: to minimize the safety corrections to the PPO-determined motion while ensuring that the robot maintains its specific safety distance and stays within its physical motion limits.

3.2.1. Safe Sets and Safe Functions

The set of states,

, in which the robot can safely operate and the barrier function

are defined as

where

represents the coordinates of the robot in the global coordinate system,

represents the coordinates of the nearest obstacle, and

is the safety distance threshold.

3.2.2. Implementation of CBF Safety Constraints

The time derivative of the CBF can be calculated as

where

,

. Then substitute the robot motion model into the equation

Since the CBF needs to satisfy this constraint, substitute Equation (

22) into Equation (

9):

Set a relative position vector,

, and the unit vector of the robot’s forward direction,

i, as

The corresponding constraints can be transformed into

In summary, the overall constraint solving framework of the CBF can be written as

where

is the max value of

v, and

is the max angular velocity of

.

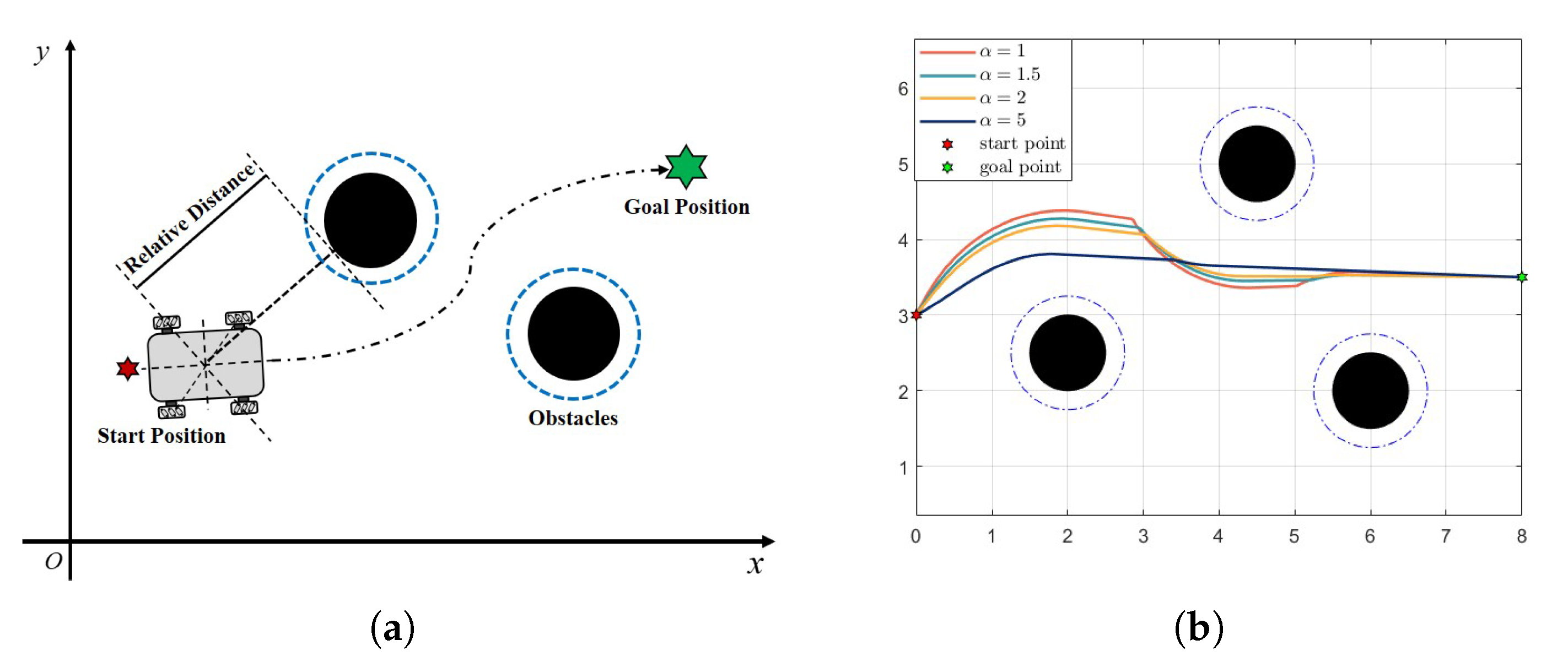

Figure 5 shows a simple diagram of the CBF obstacle avoidance process, as well as an example of the impact of different

values on the trajectory. At each step, the CBF combines the current velocity and other state variables to determine the next output that meets the constraint requirements and combines this with the result from the obstacle function calculation to continuously move until it reaches the target position.

A CBF framework for safe robot motion is proposed. First, a safe set is used by a barrier function, which represents the set of collision-free trajectories. Then, the time derivative of this barrier function is derived from the robot’s kinematic model and combined with the

K function to obtain linear inequalities for the control variables. Finally, a quadratic program is formulated to minimize the modification of the nominal control command while satisfying the CBF safety constraints and the actuator’s restrictions on the linear and angular velocities. The corresponding algorithm for the CBF is shown in Algorithm 2.

| Algorithm 2 CBF safety filter. |

Input: Robot kinematic model, safety distance threshold , maximum velocity , maximum angular velocity , CBF parameters Output: Optimized control inputs , - 1:

Initialize robot state , robot orientation , velocity v, and angular velocity - 2:

Calculate the relative position vector to the nearest obstacle - 3:

Calculate the unit vector of the robot forward direction - 4:

Calculate the barrier function - 5:

Calculate the time derivative of the barrier function using ( 22) - 6:

Set the initial control inputs , - 7:

for

to

K

do - 8:

Calculate the constraint - 9:

where - 10:

and - 11:

Solve the optimization problem - 12:

Update control inputs , - 13:

Update robot state using the kinematic model - 14:

end for - 15:

return ,

|

3.3. Risk-Aware, Dynamic, Adaptive Regulation Barrier Policy Optimization

This section designs a risk-aware, dynamic, adaptive regulation barrier policy optimization (RADAR-BPO) method which combines the efficiency of stable exploration with PPO. The Actor outputs probabilistic actions, and the Critic updates their value and optimizes the Actor based on the information obtained from feedback on the agent’s motion in the environment. In addition, extra security is provided by the CBF, which was added to further optimize the actions output by the PPO algorithm for the actor, filter actions with risky values, and output safe actions. This method deeply integrates the exploration capabilities of PPO and the security of the CBF, so that the robot agent can maximize the value of exploration in different environments. A corresponding block diagram of the complete system is shown in

Figure 6, and the algorithm is shown in Algorithm 3.

Figure 6.

Complete RADAR-BPO framework for training to interact with environment.

Figure 6.

Complete RADAR-BPO framework for training to interact with environment.

| Algorithm 3 RADAR-BPO. |

Input: Initial policy , value , safety params Output: Optimized , - 1:

Initialize , - 2:

for iteration to K do - 3:

Data Collection: - 4:

Collect trajectory via policy - 5:

Policy Optimization: - 6:

Compute advantages and returns for - 7:

- 8:

Update using PPO loss with - 9:

for each state in do - 10:

Get PPO action - 11:

Compute safety constraint based on robot state - 12:

Safety Filtering: - 13:

- 14:

Execute safe action - 15:

end for - 16:

end for - 17:

return ,

|

In addition, this method fully considers information on the robot’s spatial dimensions during the obstacle avoidance process, including its position, orientation, distance from obstacles, etc., and transforms this spatial information into safety constraints using the CBF and embeds it into a reinforcement learning-based decision-making process, thereby achieving safe and efficient navigation in complex dynamic environments.

To achieve an optimal balance between navigation efficiency and safety guarantees, a multi-objective reward function with adaptive components is designed. The mathematical formulation is defined as follows:

where

,

,

, and

are defined below.

The heading reward

encourages alignment with the target direction:

where

denotes the angular deviation between the robot’s current orientation and the target direction. The distance reward

motivates progression toward the target position:

where

is the current Euclidean distance to the target and

is the initial distance.

The obstacle penalty

ensures collision risk awareness:

where

is the distance to the nearest obstacle.

The velocity reward

optimizes the motion efficiency:

with an adaptive optimal velocity of

and

. This equation encourages a higher velocity when distant from the target while promoting precision during the final phases.

The reward function integrates the four components in an additive manner. Although this is an equally weighted sum, each term is designed to have comparable magnitudes to prevent any one objective from overly dominating the learning process. For example, , and is scaled by a factor and exponentially decays with distance. provides a significant but bounded penalty. This design prioritizes simplicity, interpretability, and minimal parameter tuning in the early stages of the algorithm’s implementation, resulting in a reliable benchmark. The component is not a constant reward but a term used to fine-tune the efficiency of the movement.

The RADAR-BPO framework establishes a safety-critical reinforcement learning paradigm through integrated policy optimization and real-time safety assurance. During each training iteration, the agent executes interactions with the environment using its current policy network , gathering trajectory data that includes environmental states, exploratory actions, and reward signals. This collected experience forms the foundation for subsequent policy refinement.

Following data collection, the algorithm performs proximal policy optimization using the GAE. This involves calculating the temporal difference errors to estimate the action advantages, then updating both the policy and value networks using a specialized objective function. The optimization balances exploration incentives with value approximation while maintaining training stability through gradient clipping.

During action execution, each policy-generated velocity command undergoes safety verification using a CBF filter. This module solves a quadratic optimization problem that minimizes deviations from the original actions while enforcing collision avoidance constraints. The safety verification incorporates the robot’s positional relationship with obstacles and its current heading orientation to dynamically adjust the motion commands.

The resulting safe actions preserve the learning direction of the policy network while ensuring formal safety guarantees through real-time constraint enforcement. This synergistic integration creates an evolution mechanism where policy improvement and safety assurance mutually reinforce each other throughout the learning process. The system maintains continuous compliance with safety boundaries while progressively refining its navigation strategy through environmental interactions.

4. Case Study

To rigorously evaluate the performance and validate the efficacy of the proposed risk-aware, dynamic, adaptive regulation barrier policy optimization (RADAR-BPO) framework for collision risk mitigation in mobile robot navigation, this section presents comprehensive simulation experiments conducted within the ROS Gazebo environment.

4.1. Test Environment Setup

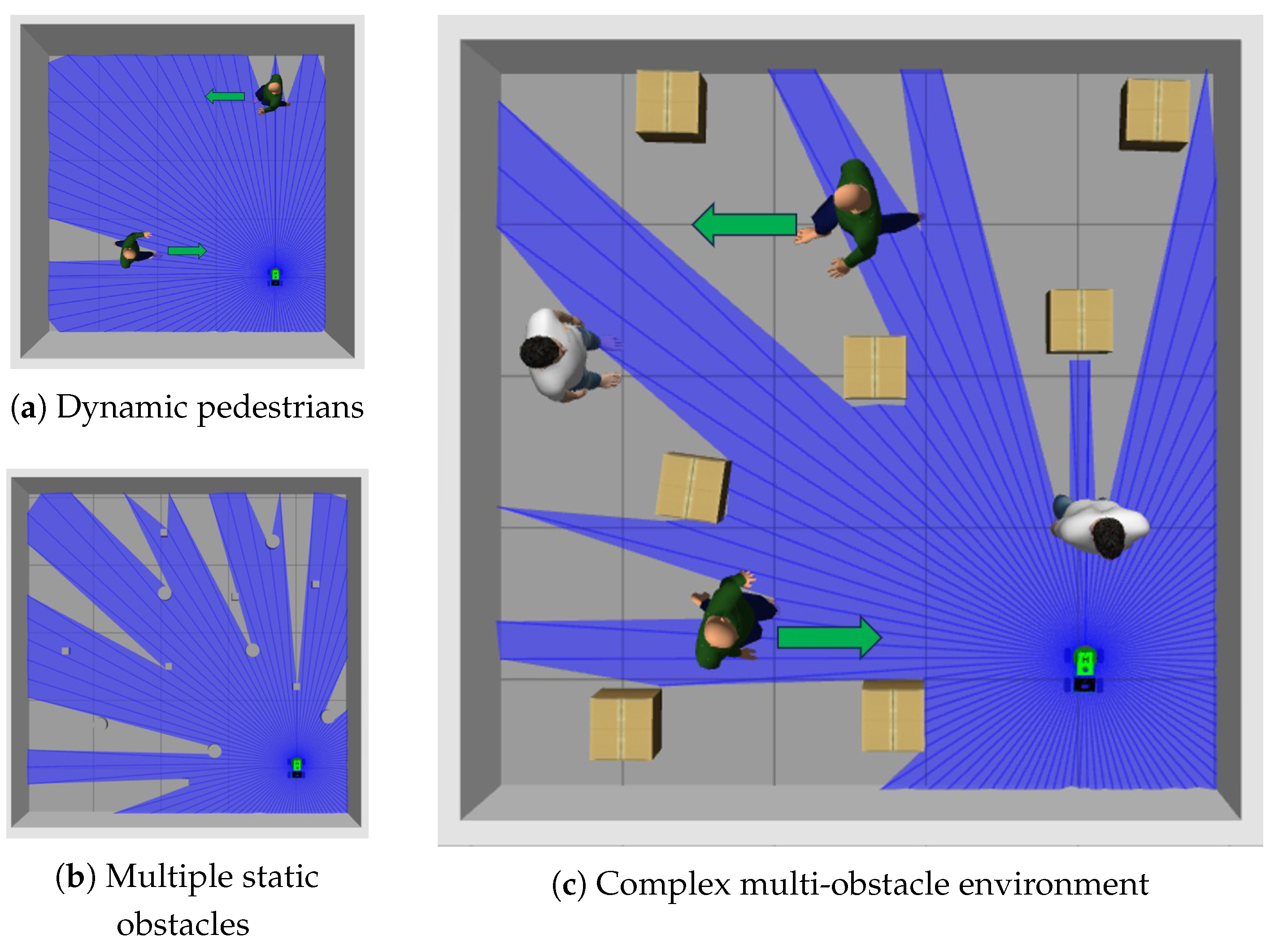

The simulation environments visualized in

Figure 7 were constructed within the ROS Gazebo platform to test a robot’s obstacle avoidance navigation. Specifically,

Figure 7a presents a dynamic pedestrian environment,

Figure 7b demonstrates a multi-obstacle environment, and

Figure 7c showcases a complex obstacle environment incorporating both static and dynamic elements.

In

Figure 7a, there are two pedestrians walking back and forth at a certain speed. The robot needs to avoid them and reach the target point while the pedestrians are moving. In

Figure 7b, there are multiple static cylindrical or cubic obstacles. The robot needs to avoid the static obstacles and successfully navigate to the target point.

Figure 7c contains multiple pedestrians and multiple different static obstacles. The robot also needs to complete a navigation task in this complex and changing environment.

4.2. Design of Experimental Test

The motion trajectories of the pedestrians in

Figure 7a,c are shown in

Table 1, and there are two stationary pedestrians in

Figure 7c.

In the Gazebo coordinate system (the positive direction on the y-axis is left and the negative direction is right), pedestrian 1 moves 1 m to the right from their initial position (0.5, 2.5) to (0.5, 1.5), turns on the spot, moves 1 m to the left, returns to their starting point (0.5, 2.5), and stays there. At the same time, pedestrian 2 moves 2 m to the left from their initial position (3.0, 0.0) to (3.0, 2.0), turns on the spot, moves 2 m to the right, returns to their starting point (3.0, 0.0), and stays there. Both pedestrians complete a round trip in the y-axis direction. There are two other stationary pedestrians: one is fixed at (1.0, 0.0), and the other is fixed at (2.0, 3.0). The robot starts from the starting point (0.0, 0.0) and needs to traverse the dynamic environment to reach the target point (3.0, 3.0). Its path will be blocked by moving pedestrians and it will need to avoid stationary pedestrian obstacles and other obstacles.

Table 2 lists the key parameter settings used to train the RADAR-BPO navigation algorithm. These parameters cover aspects such as policy optimization, the safety constraints, the robot’s kinematic model, and the training environment configuration. Some parameter values (such as discount factors and GAE parameters) were within typical ranges or needed to be adjusted according to the specific environment.

The algorithm was trained on each environment for a fixed number of episodes: 150 for Env 1 (dynamic pedestrians), 200 for Env 2 (multiple static obstacles), and 300 for Env 3 (multiple complex obstacles). This design with an increasing environment complexity and training time was intended to mimic curriculum learning, allowing the agent to consolidate foundational skills before tackling more difficult tasks. Each training episode terminated when the agent successfully reached the goal, collided with an obstacle, or reached the maximum step limit.

4.3. Test Results and Analysis

To comprehensively evaluate the performance of the proposed RADAR-BPO framework and quantitatively assess its effectiveness in mitigating collision risks while maintaining navigation efficiency, extensive simulations were conducted across the three distinct environments introduced in

Section 4.1 (

Figure 7). The evaluation employed a three-stage progressive training paradigm: Stage 1 (

Figure 7a) utilized the dynamic pedestrian environment (Env 1), Stage 2 (

Figure 7b) progressed to the multi-static obstacle environment (Env 2), and Stage 3 (

Figure 7c) took place in the complex hybrid environment containing both static obstacles and dynamic pedestrians (Env 3). This staged approach rigorously tested the algorithm to determine its fundamental obstacle avoidance ability.

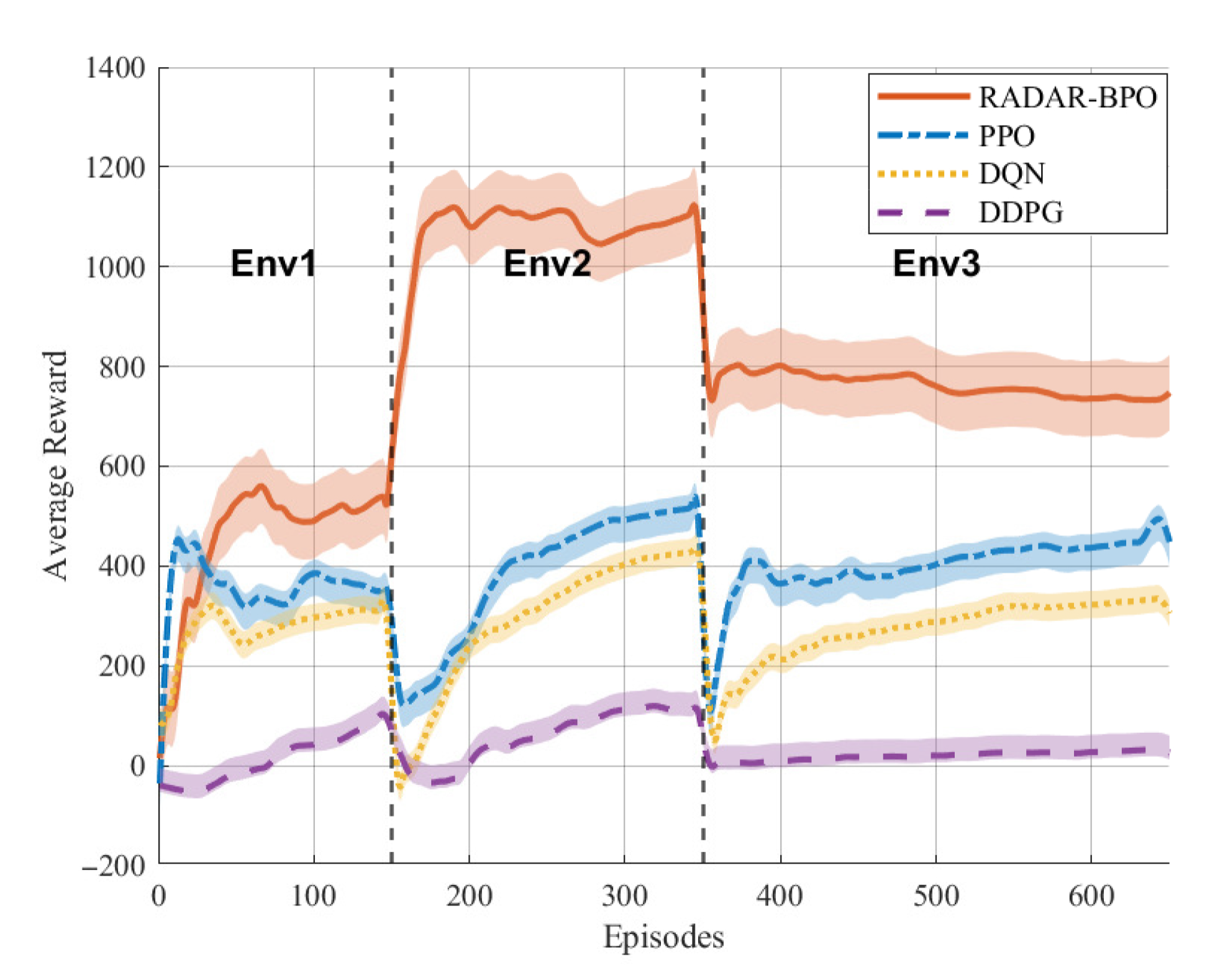

The core performance metrics included the learning stability, safety performance, and navigation efficiency. Crucially, the cumulative reward curves from throughout the training process were analyzed and compared against those of the baseline PPO algorithm. This direct comparison highlighted the impact of integrating the real-time CBF safety filter within the RADAR-BPO framework. The corresponding segmented training reward curve is shown in

Figure 8.

As shown in

Figure 8, during the progressive training process implemented across three stages (Env 1, Env 2, and Env 3), the RADAR-BPO algorithm significantly outperformed all the baseline algorithms (PPO, DQN, and DDPG) in terms of the average reward in all the environments. This overall performance advantage was demonstrated by the following: in the relatively simple Env 1 stage, while all the algorithms initially experienced low rewards, RADAR-BPO quickly escaped this low-reward zone and stabilized at a higher level. Entering the more complex Env 2 stage, RADAR-BPO exhibited a burst of performance growth, with its reward values rapidly exceeding 1000 and stabilizing at approximately 1100. In contrast, PPO slowly climbed to around 400, while the DQN and DDPG lagged significantly behind. In the most complex Env 3 stage, the challenging environment caused performance degradation for all the algorithms. However, RADAR-BPO maintained an absolute advantage, stabilizing at around 760 with a smooth, volatility-resistant curve. PPO fluctuated violently below 400 and showed weak growth. The DQN and DDPG’s performance remained sluggish, failing to improve significantly, and the gap between their performance and RADAR-BPO’s was the largest.

Comparing the two curves at the beginning of the Env 3 phase reveals that PPO’s reward values exhibited a larger upward gradient and oscillation amplitude, while the rise in RADAR-BPO’s values was more stable. This phenomenon reveals the different learning modes of the two algorithms: The PPO strategy almost failed after an environment switch, requiring a painstaking learning process to recover from an extremely high collision rate, resulting in an unstable learning process. In contrast, RADAR-BPO benefited from the real-time safety guarantees provided by the CBF. Its strategy retained its core obstacle avoidance capabilities after an environment switch, and its learning process involved stable fine-tuning based on a higher performance baseline to adapt to new dynamic obstacles. Therefore, RADAR-BPO sacrificed a seemingly larger learning amplitude in exchange for a higher, more stable, and safer final performance.

This result clearly shows that the RADAR-BPO framework integrated with real-time CBF security filtering can not only achieve higher task returns in complex dynamic environments (based on the higher success rate and average reward in

Table 3) but also significantly improve the convergence speed of the learning process, the final performance ceiling, and the training stability, effectively solving the core problems of low exploration efficiency, large policy fluctuations, and limited performance of traditional PPO in safety-critical scenarios.

According to the results listed in

Table 3, RADAR-BPO outperformed the PPO algorithm in most metrics. In Env 1, RADAR-BPO’s collision rate was 68.67%, lower than PPO’s 82.00%; in Env 3, RADAR-BPO’s collision rate further decreased to 10.67%, compared to PPO’s 30.67%. Furthermore, RADAR-BPO achieved 47, 164, and 268 successes in the three different environments, respectively, all exceeding PPO’s 27, 134, and 208. In terms of the average reward, RADAR-BPO also generally outperformed PPO, with these algorithms achieving 1070.09 and 377.01, respectively, in Env 2. These results demonstrate that RADAR-BPO maintains high task completion efficiency and a high reward yield while reducing the collision rate.

In contrast, the DDPG and DQN algorithms generally underperformed in comparison to RADAR-BPO and PPO. The DDPG’s collision rate was above 75% across all the environments, and its average reward was significantly lower than that of the other algorithms. The DQN had zero successes and a 100% collision rate in Env 1. In Env 2 and Env 3, its collision rates were 55.50% and 68.00%, respectively. While its average reward was higher than that of the DDPG, it was still lower than that of RADAR-BPO and PPO.

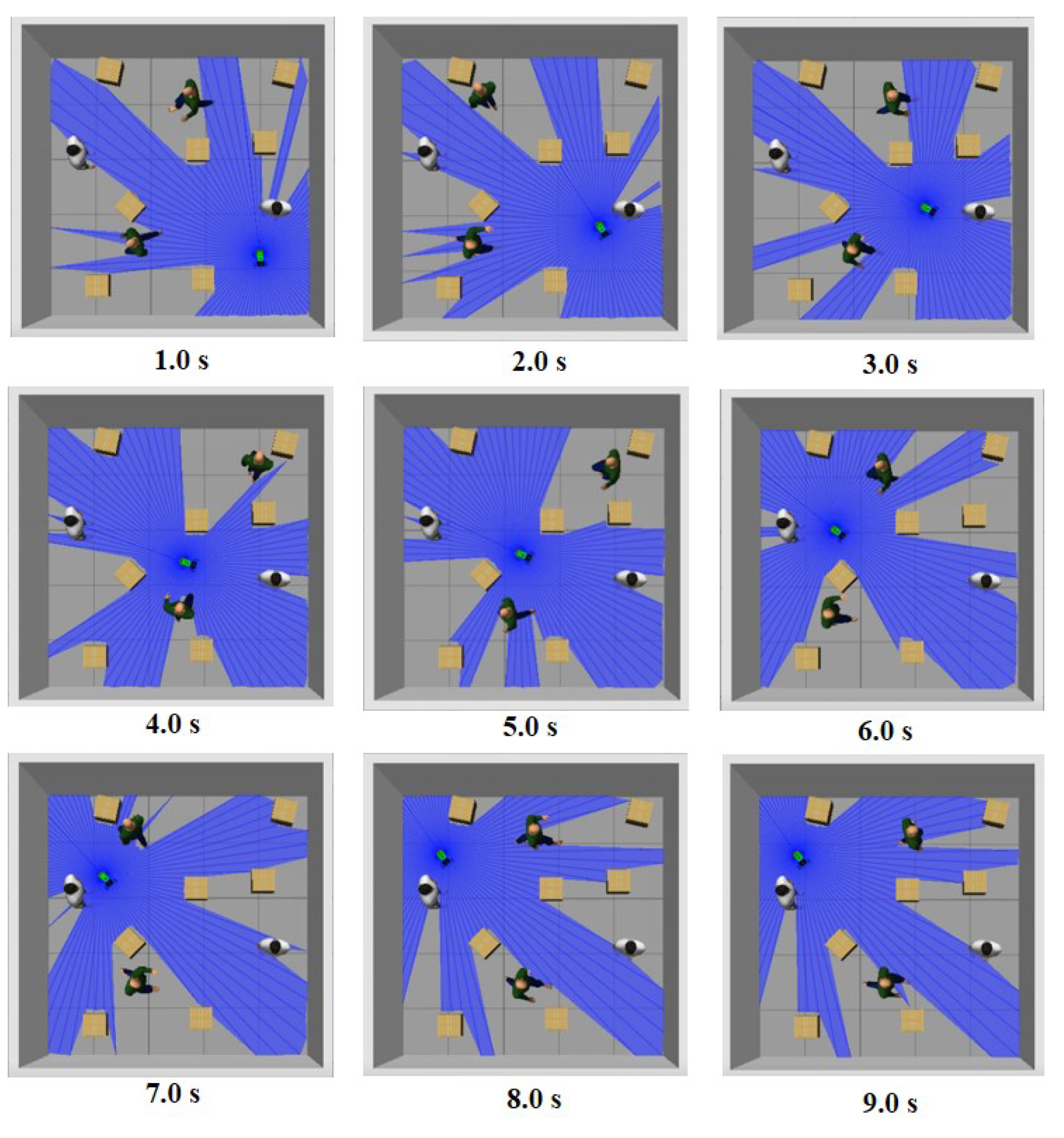

To provide an intuitive visualization of the algorithm’s real-time decision-making and safety assurance capabilities in the most challenging scenario, the trajectory evolution of the robot navigating across Env 3 over a critical 9 s interval (from t = 1 s to t = 9 s) is presented and discussed. This sequence illustrates how RADAR-BPO dynamically adjusted the robot’s path to safely avoid both static obstacles and moving pedestrians while progressing towards the target.

In

Figure 9, the robot starts from the starting position (0.0, 0.0), initially accelerates to bypass the stationary pedestrian in front, and turns left toward the target direction; when it encounters Pedestrian 1 (0.5, 2.5 → 1.5) moving horizontally to the right, it suddenly turns at a sharp angle to achieve emergency avoidance and simultaneously and smoothly avoids walls and nearby obstacles. Under the combined threat of Pedestrian 2 (3.0, 2.0 → 0.0) moving horizontally and dense obstacles, the robot flexibly adjusts the path to squeeze through and finally arrives at the end point (3.0, 3.0) accurately. The entire path maintains the straight-line efficiency of PPO in open areas and forms a smooth and conservative contour using CBF constraints when approaching obstacles. The collision-free nature of the entire process verifies RADAR-BPO’s seamless integration of exploration and safety in dynamic and dense scenes.

5. Conclusions and Future Work

This paper proposes RADAR-BPO (risk-aware, dynamic, adaptive regulation barrier policy optimization), a novel safe reinforcement learning framework integrating PPO with CBF-based safety filters to mitigate collision risks in mobile robot navigation. The framework leverages PPO for exploratory policy generation while employing the CBF as a real-time safety filter, formulated as a quadratic programming problem, to minimally modify risky actions and ensure collision avoidance. Implemented on a mecanum wheel robot within the ROS Gazebo simulation environment, the method demonstrated significant improvements in safety performance across diverse dynamic and complex scenarios. Comparative experiments against baseline PPO, DQN, and DDPG algorithms confirmed that RADAR-BPO achieves higher success rates, lower collision rates, and superior average rewards while maintaining navigation efficiency, highlighting its effectiveness in balancing exploration with formal safety guarantees.

In future work, the framework could be extended to address more complex real-world challenges. Potential directions include validating the approach on physical robotic platforms to assess its real-time performance and robustness under sensor noise and hardware limitations. Additionally, exploring the use of adaptive or learned CBF parameters to handle heterogeneous obstacle shapes and uncertain dynamics, integrating multi-robot collision avoidance scenarios, and extending the method to unstructured outdoor environments would further enhance this approach’s applicability.