Abstract

Gallstone disease affects approximately 10–20% of the global adult population, and early diagnosis is essential for effective treatment and management. While image-based machine learning (ML) models have shown high accuracy in gallstone detection, tabular data approaches remain less explored. In this study, we have proposed a Random Forest (RF) classifier optimized using the Sand Cat Swarm Optimization (SCSO) algorithm for gallstone prediction based on a tabular dataset. Our experiments have been conducted across four frameworks: RF only without cross-validation (CV), RF with CV, RF-SCSO without CV, and RF-SCSO with CV. Using all 38 features, the RF-only model without CV has achieved an accuracy, F-score, precision, and recall of 81.25%, 79.07%, 85%, and 73.91%, respectively, while the RF model with CV has obtained a 10-fold cross-validation accuracy of 78.42% on the same feature set. With SCSO-based feature reduction, the RF-SCSO models without and with CV have delivered comparable accuracies of 79.17% and 78.32%, respectively, using only 13 features, indicating effective dimensionality reduction. SHAP analysis has identified CRP, Vitamin D, and AAST as the most influential features, and DiCE has further illustrated the model’s behavior by highlighting corrective counterfactuals for misclassified instances. These findings demonstrate the potential of interpretable, feature-optimized ML models for gallstone diagnosis using structured clinical data.

1. Introduction

Gallstones are solid and crystalline deposits in the gallbladder or bile ducts caused by high levels of cholesterol or bilirubin in bile [1,2]. About 10–20% of adults worldwide have gallstones. Gallstones are mainly classified by composition: over 90% are cholesterol stones, and under 10% are pigment stones (black or brown), and by location, either in the gallbladder or bile ducts (extrahepatic or intrahepatic) [1]. Gallstones develop when bile contains excess cholesterol or lacks enough bile salts, combined with poor gallbladder movement. Common risk factors are female gender, pregnancy, aging, obesity, specific ethnic groups, unhealthy eating habits, quick weight loss, and certain illnesses [2]. In Western countries, most gallstones (75–80%) are cholesterol stones, meaning they are made of more than 50% cholesterol [3]. The rest are pigment stones, made of less than 30% cholesterol, and these are further divided into black pigment stones (10–15%) and brown pigment stones (5–10%). Gallstone diagnosis usually begins with assessing pain in the upper right abdomen, although this can also be caused by other conditions [4]. Blood tests such as Complete Blood Count (CBC), Liver Function Test (LFT), and measurements of enzymes like lipase and amylase support the diagnosis. However, imaging techniques are the most accurate, with Ultrasonography (US) being the gold standard due to its high sensitivity and specificity. In unclear cases, additional imaging methods such as Computed Tomography (CT), Magnetic Resonance Cholangiopancreatography (MRCP), or Endoscopic Retrograde Cholangiopancreatography (ERCP) are used. Figure 1 shows the presence of gallstones in the gallbladder.

Figure 1.

Gallstones in the gallbladder.

Early detection of gallstones enables the initiation of timely treatment and significantly reduces the burden on patients. In this context, machine learning (ML) techniques offer a promising solution. Previous studies have demonstrated the effectiveness and growing acceptance of ML methods for accurate gallstone detection and localization. Bozdag et al. have used Content-Based Image Retrieval (CBIR) systems to classify gallbladder diseases, including gallstones [5]. They have achieved an accuracy of 94.4%. Wang et al. have proposed an ultrasound (US) image-based model that has gained an Area under the Curve (AUC) of 0.995 using the XGBoost-US radiomics model [6]. Obaid et al. have used images to identify gallbladder diseases with an accuracy of 98.35%, where the gallstone classification accuracy has been recorded as 98% [7]. Pang et al. have used the You Only Look Once (YOLO) algorithm on CT scan images to classify gallstones and have succeeded in attaining 86.5% accuracy [8]. Hong et al. have used the Random Forest (RF) classifier on US images and shown 96.33% accuracy [9]. The previously discussed research studies have primarily focused on image-based approaches. However, unlike the previously mentioned image-based approaches, Esen et al. utilized a tabular dataset and achieved an accuracy of 85.42% in predicting the presence of gallstones using a Gradient Boosting Classifier [10].

This research focuses on evaluating the performance of the Sand Cat Swarm Optimization (SCSO) algorithm in combination with the Random Forest (RF) classifier for gallstone classification using a tabular dataset. We have used a publicly available dataset, Gallstone, from the UC Irvine Machine Learning Repository. The dataset contains 39 columns (38 features and a binary target class) and 319 subjects; it is described in detail in Section 2.1. Most of the previous studies [5,6,7,8,9] have relied on image-based approaches, which typically involve complex models and slower identification processes compared with tabular dataset-based models. In contrast, Esen et al. [10] proposed a tabular dataset approach using 38 features. This high number of features presents practical limitations, as it requires extensive clinical testing, which can be both time-consuming and costly. Overall, the existing studies face several limitations, including high model complexity, a large number of required features, and a lack of in-depth analysis, particularly regarding the nature of misclassification. An ML model that does not provide insights into its decision-making process is often considered a “black box”. In previous studies, most models have remained black boxes, as they did not explain the rationale behind individual predictions, such as why a particular case was classified as gallstone positive or negative.

To overcome the limitations of prior studies, we have proposed a feature-reductive ML approach leveraging metaheuristic algorithms (MHAs). MHAs are optimization techniques inspired by natural phenomena, such as animal hunting strategies and particle interactions, and are widely applied for both feature selection and hyperparameter tuning [11,12]. Various MHAs have been applied to medical datasets for feature selection and hyperparameter tuning. Stephan et al. have used an artificial bee colony with whale optimization on breast cancer datasets to improve classification performance [13]. Li et al. (2021) have demonstrated that integrating particle swarm optimization with neural networks can significantly improve classification performance on medical data [14]. Previous studies have also demonstrated the applicability of the SCSO algorithm in diverse domains, such as biomedical applications [15] and cancer classification [16]. Refs. [17,18] have used the gray wolf optimization algorithm to classify heart-related conditions such as coronary artery disease and heart failure.

In this research, we have focused on assessing the effectiveness of MHAs in identifying gallstone cases using a minimal set of diagnostic features, thereby reducing the need for extensive and costly clinical testing. Moreover, we have prioritized reducing the computational complexity and execution time of the models to enhance their practicality in real-world clinical settings. To address the black-box nature of many ML models, we have incorporated explainable artificial intelligence (XAI) techniques. Specifically, we employed Shapley Additive Explanations (SHAP) and Diverse Counterfactual Explanations (DiCE) to interpret model decisions and provide transparency into feature contributions and prediction logic. These XAI techniques have been increasingly adopted in recent healthcare studies. Ref. [19] has used SHAP and DiCE to enhance the diagnosis of mild cognitive impairment and Alzheimer’s disease. Su et al. have visualized feature importance using SHAP, highlighting the features most influential to the model [20]. AlJalaud et al. have applied DiCE to four different datasets, including a breast cancer dataset [21]. In addition to model interpretation, we have conducted an in-depth analysis of misclassified instances, aiming to understand the underlying reasons for incorrect predictions and to explore how they can be corrected through model refinement. To assess the robustness and generalizability of our approach, we have implemented a customized 10-fold cross-validation strategy, allowing the model to be evaluated comprehensively on unseen data. The performance of the model has been evaluated using accuracy, F1-score, precision, recall, the Receiver Operating Characteristic (ROC) curve, and the Area Under the ROC Curve (AUC). Our main contributions are as follows:

- We have used the Sand Cat Swarm Optimization (SCSO) algorithm for simultaneous feature selection and hyperparameter tuning within the Random Forest (RF) framework for gallstone classification;

- We have introduced a metaheuristic-based optimization pipeline that reduces the number of required features while maintaining comparable classification accuracy, lowering the clinical testing burden;

- The proposed model incorporates interpretable AI techniques, including SHAP for detailed feature contribution analysis and DiCE for generating counterfactual explanations, enabling a clinically meaningful understanding of predictions;

- In this study, the model’s performance has been systematically evaluated across multiple metrics, including accuracy, F1-score, precision, recall, AUC, ROC, and execution time, demonstrating both effectiveness and efficiency.

The remainder of this paper is organized into Materials and Methods, Results, Discussion, and Conclusion. In Section 2, we describe the dataset, the ML algorithms, and the development of the proposed frameworks. In Section 3, we present and explain the findings of this work. In Section 4, we compare our models with existing works. Finally, in Section 5, we conclude the paper and highlight the key findings.

2. Materials and Methods

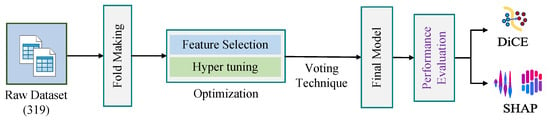

In this section, we present the dataset used in this work and describe the methods employed, namely SCSO, RF, SHAP, and DiCE, together with the equations of the evaluation metrics. Figure 2 shows the overall methodology of this research.

Figure 2.

Research methodology.

2.1. Dataset

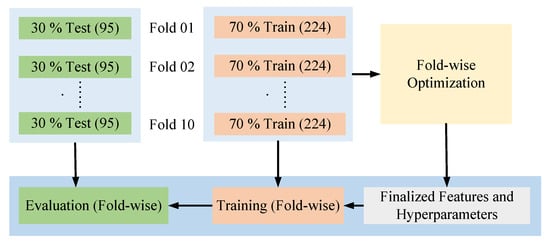

In this study, we utilized the gallstone dataset publicly available from the UC Irvine Machine Learning Repository (Gallstone-UCI dataset) [10], accessible at https://archive.ics.uci.edu/dataset/1150/gallstone-1 (accessed on 19 July 2025). This dataset comprises 319 records (161 gallstone patients and 158 healthy controls, i.e., a balanced class distribution) collected from the Internal Medicine Outpatient Clinic of Ankara VM Medical Park Hospital, each represented by 38 attributes in addition to a binary target label indicating the presence (0) or absence (1) of gallstones. The dataset is complete, with no missing values, so no imputation is required. Additionally, all categorical and binary features are already encoded, eliminating the need for further preprocessing. We have prepared ten folds from the dataset with random shuffling to facilitate the effective implementation of the machine learning algorithms. In each fold, the dataset was split into two distinct sets: 70% for training and 30% for testing.
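A minimal sketch of this fold preparation is given below. It assumes, as our reading of the text, that each of the ten folds is an independent shuffled 70/30 partition of the 319 records, so the test sets of different folds can overlap (unlike classic k-fold CV). The function name `make_shuffled_splits` and the seed are hypothetical; flooring 30% of 319 yields the 224 training and 95 testing samples per fold used later for the per-sample timing.

```python
import random


def make_shuffled_splits(n_samples, n_folds=10, test_fraction=0.3, seed=42):
    """Return n_folds independent (train_idx, test_idx) partitions of range(n_samples).

    Assumption: every fold is a fresh random 70/30 split, so test sets of
    different folds may overlap (this is not classic k-fold CV).
    """
    rng = random.Random(seed)
    n_test = int(n_samples * test_fraction)  # 319 * 0.3 -> 95 test samples
    splits = []
    for _ in range(n_folds):
        indices = list(range(n_samples))
        rng.shuffle(indices)  # new shuffle for every fold
        test_idx = sorted(indices[:n_test])
        train_idx = sorted(indices[n_test:])
        splits.append((train_idx, test_idx))
    return splits


splits = make_shuffled_splits(319)
train_idx, test_idx = splits[0]
print(len(splits), len(train_idx), len(test_idx))  # 10 224 95
```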

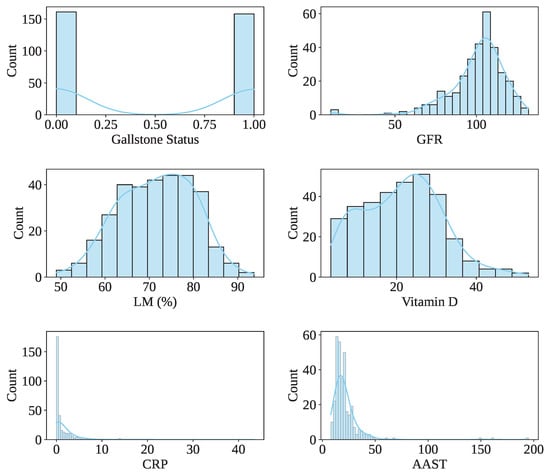

These attributes are derived from routine clinical laboratory evaluations and include hematological parameters (e.g., white blood cell count, red blood cell indices, platelet count), hepatic function biomarkers (e.g., alanine transaminase [ALT], aspartate transaminase [AST], alkaline phosphatase [ALP], total and direct bilirubin), lipid profile components (e.g., total cholesterol, HDL, LDL, triglycerides), and renal function indicators (e.g., creatinine, urea), among others. Demographic variables such as age and gender are also included. Table 1 presents the description of the Gallstone-UCI dataset. In the dataset, 91.54% (292) of the subjects are aged between 30 and 70 years, indicating that most of them are middle-aged. The male-to-female ratio is 162:157. Figure 3 shows the value distributions of several other features, including the target class.

Table 1.

Feature overview of the gallstone dataset (Fet. = Feature; Bin. = Binary; Cat. = Categorical; Con. = Continuous; Int. = Integer).

Figure 3.

Distribution of dataset values of some features, including the target class.

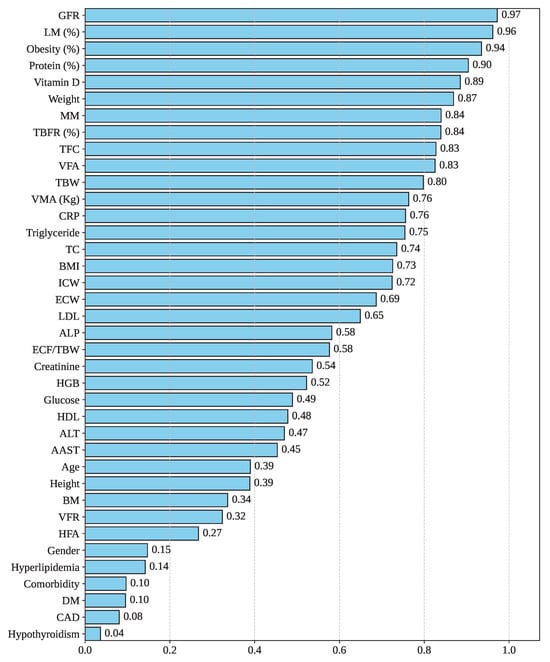

Figure 4 illustrates the Cramér’s V association between Gallstone Status and the other features in the dataset. Cramér’s V is a statistical measure of the strength of association between categorical variables and is particularly suitable for contingency tables larger than 2 × 2 [22]. As depicted in the figure, GFR exhibits the strongest association with Gallstone Status, with a score of 0.97. It is followed by LM, Obesity, Protein, and Vitamin, with Cramér’s V values of 0.96, 0.94, 0.90, and 0.89, respectively. In contrast, Hyperlipidemia, Comorbidity, DM, CAD, and Hypothyroidism have the lowest scores of 0.14, 0.10, 0.10, 0.08, and 0.04, respectively. Thus, GFR, LM, and Obesity are most strongly associated with gallstone status, whereas DM, CAD, and Hypothyroidism show only weak associations.
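For reference, the standard (uncorrected) Cramér’s V is computed from the chi-square statistic of the contingency table as $V = \sqrt{\chi^2 / (n \cdot (\min(r, c) - 1))}$, where $r$ and $c$ are the numbers of categories of the two variables. The sketch below assumes both inputs are already categorical; continuous features such as GFR would first need to be binned, and the binning behind Figure 4 is not specified here. The function name `cramers_v` is our own.

```python
import math
from collections import Counter


def cramers_v(x, y):
    """Cramér's V between two categorical sequences (no bias correction)."""
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("x and y must be non-empty and of equal length")
    observed = Counter(zip(x, y))  # contingency table cells
    row_totals = Counter(x)        # marginal counts of x categories
    col_totals = Counter(y)        # marginal counts of y categories
    chi2 = 0.0
    for r, r_count in row_totals.items():
        for c, c_count in col_totals.items():
            expected = r_count * c_count / n
            chi2 += (observed[(r, c)] - expected) ** 2 / expected
    k = min(len(row_totals), len(col_totals)) - 1
    if k == 0:
        return 0.0  # a constant variable carries no association
    return math.sqrt(chi2 / (n * k))


print(cramers_v([0, 0, 1, 1], ["a", "a", "b", "b"]))  # 1.0
print(cramers_v([0, 0, 1, 1], ["a", "b", "a", "b"]))  # 0.0
```

Perfectly associated variables give 1.0 and independent ones give 0.0, which bounds the interpretation of the scores in Figure 4.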

Figure 4.

Feature correlation with gallstone status.

2.2. Random Forest Classifier

The Random Forest (RF) classifier, introduced by Breiman [23], is an ensemble-based machine learning method that constructs multiple decision trees from randomly sampled subsets of the data and features, which enhances predictive accuracy and mitigates overfitting. RF combines bootstrap aggregation (bagging) with random feature selection during tree construction [24]. The ensemble of base learners (decision trees) can be represented as in Equation (1) [23]:

$$\{h(\mathbf{x}, \Theta_k),\; k = 1, \ldots, K\} \tag{1}$$

Here, $K$ is the number of trees, and $\Theta_k$ is a randomly drawn parameter vector that guides the learning of the $k$-th base learner $h(\mathbf{x}, \Theta_k)$, which identifies the class of the input vector $\mathbf{x}$. The quality of each split within a tree is evaluated using the Gini impurity, defined in Equation (2) [24]:

$$\mathrm{Gini}(s) = 1 - \sum_{m=1}^{M} p(m \mid s)^2 \tag{2}$$

In this expression, $s$ denotes a split or node in the tree, $M$ is the total number of classes, $m$ indexes the class labels, and $p(m \mid s)$ is the proportion of samples at $s$ that belong to class $m$. The final output is determined by majority voting among all individual trees. The generalization error of an RF classifier, given in Equation (3), is the probability that the margin function $mg$ is less than zero over the joint distribution of the input vector $\mathbf{X}$ and its true label $Z$:

$$PE^{*} = P_{\mathbf{X},Z}\big(mg(\mathbf{X}, Z) < 0\big) \tag{3}$$

where $mg(\mathbf{X}, Z) = \operatorname{av}_k I\big(h(\mathbf{X}, \Theta_k) = Z\big) - \max_{j \neq Z} \operatorname{av}_k I\big(h(\mathbf{X}, \Theta_k) = j\big)$, $I(\cdot)$ is the indicator function, and $\operatorname{av}_k$ denotes the average over the trees.
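The Gini impurity and the margin-based generalization error can be illustrated in a few lines of plain Python. This is an illustrative sketch with hypothetical helper names, not the internals of any RF library: `margin` takes the class votes of all trees for one sample, and `empirical_error` counts the samples whose margin is negative, a simple plug-in estimate of $PE^{*}$.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini(s) = 1 - sum_m p(m|s)^2 for the class labels reaching node s."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def margin(tree_votes, true_label):
    """Share of tree votes for the true class minus the largest share for any other class."""
    n = len(tree_votes)
    shares = {label: count / n for label, count in Counter(tree_votes).items()}
    best_other = max((s for label, s in shares.items() if label != true_label), default=0.0)
    return shares.get(true_label, 0.0) - best_other


def empirical_error(all_votes, true_labels):
    """Fraction of samples with a negative margin (a plug-in estimate of PE*)."""
    wrong = sum(margin(votes, z) < 0 for votes, z in zip(all_votes, true_labels))
    return wrong / len(true_labels)


print(gini_impurity([0, 0, 1, 1]))  # 0.5 (maximally mixed binary node)
print(gini_impurity([1, 1, 1]))     # 0.0 (pure node)
```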

RF is widely recognized for its high predictive accuracy, robustness against outliers, scalability to high-dimensional data, interpretability, and computational efficiency in parallel processing environments [25].

2.3. Sand Cat Swarm Optimization

Sand Cat Swarm Optimization (SCSO) is a nature-inspired metaheuristic algorithm developed by Seyyedabbasi and Kiani in 2022, which simulates the unique hunting behavior of sand cats in arid desert regions [26]. Sand cats possess a highly evolved ability to detect underground prey using low-frequency hearing. Upon identifying the location of prey, they perform a strategic and rapid leap from an optimal angle to capture it. This natural hunting strategy has been abstracted into two main algorithmic phases: Exploration and Exploitation.

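The switching between exploration and exploitation is controlled by the value of $R$, given in Equation (4), where $\mathrm{rand}(0,1)$ is a random number between 0 and 1 and $r_G$ decreases linearly from 2 to 0 according to Equation (5) [26]. Here, $s_M$ (set to 2) is the hearing characteristic of the sand cat, $iter_c$ is the current iteration, and $iter_{Max}$ is the maximum number of iterations. When $|R| \le 1$, exploitation occurs; $|R| > 1$ triggers exploration.

$$R = 2 \times r_G \times \mathrm{rand}(0,1) - r_G \tag{4}$$

$$r_G = s_M - \frac{s_M \times iter_c}{iter_{Max}} \tag{5}$$

The individual sensitivity of each sand cat is calculated by Equation (6), which assigns each cat its own search radius $r$ by scaling $r_G$ with randomness [26]. In the exploration phase, positions are updated using Equation (7): the agents move relative to the best candidate position ($\vec{Pos}_{bc}$) and their current position ($\vec{Pos}_c$), with $r$ scaling the step size and $\mathrm{rand}(0,1)$ ensuring comprehensive coverage of the search space.

$$r = r_G \times \mathrm{rand}(0,1) \tag{6}$$

$$\vec{Pos}(t+1) = r \cdot \big(\vec{Pos}_{bc}(t) - \mathrm{rand}(0,1) \cdot \vec{Pos}_c(t)\big) \tag{7}$$

In the exploitation phase ($|R| \le 1$), which models the prey-attack behavior, the sand cats update their positions according to Equation (8) [26]. $\vec{Pos}_{rnd}$ computes the movement direction toward the global best position ($\vec{Pos}_b$), while $\cos(\theta)$, with $\theta$ chosen via roulette-wheel selection, directs the angled pounce.

$$\vec{Pos}_{rnd} = \big|\mathrm{rand}(0,1) \cdot \vec{Pos}_b(t) - \vec{Pos}_c(t)\big|, \qquad \vec{Pos}(t+1) = \vec{Pos}_b(t) - r \cdot \vec{Pos}_{rnd} \cdot \cos(\theta) \tag{8}$$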
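One SCSO iteration over Equations (4) to (8) can be sketched as follows. Two simplifications are assumptions of this sketch, not part of the description above: the same best-so-far vector is used as both the best candidate position and the global best position, and $\theta$ is drawn uniformly from $[0, 2\pi)$ instead of by roulette-wheel selection. Positions are clipped to hypothetical bounds `lb` and `ub`.

```python
import math
import random


def scso_step(positions, best, iter_c, iter_max, lb, ub, rng, s_m=2.0):
    """One SCSO position update (sketch of Eqs. (4)-(8) with the simplifications above)."""
    r_g = s_m - s_m * iter_c / iter_max          # Eq. (5): decays from s_M = 2 to 0
    new_positions = []
    for pos in positions:
        r = r_g * rng.random()                   # Eq. (6): agent-specific sensitivity
        big_r = 2 * r_g * rng.random() - r_g     # Eq. (4): phase switch
        new = []
        for d, x in enumerate(pos):
            if abs(big_r) <= 1:                  # exploitation, Eq. (8)
                theta = rng.uniform(0.0, 2 * math.pi)
                pos_rnd = abs(rng.random() * best[d] - x)
                value = best[d] - r * pos_rnd * math.cos(theta)
            else:                                # exploration, Eq. (7)
                value = r * (best[d] - rng.random() * x)
            new.append(min(max(value, lb), ub))  # keep the agent inside the search box
        new_positions.append(new)
    return new_positions


rng = random.Random(0)
population = [[rng.random() for _ in range(5)] for _ in range(4)]
updated = scso_step(population, [0.5] * 5, iter_c=10, iter_max=100, lb=0.0, ub=1.0, rng=rng)
```

At the final iteration ($iter_c = iter_{Max}$), $r_G = 0$ and therefore $r = 0$, so every agent collapses onto the best position, which makes a convenient sanity check.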

2.3.1. Justification of Choosing SCSO

The Sand Cat Swarm Optimization (SCSO) algorithm has been selected due to its proven effectiveness in balancing exploration and exploitation during optimization [26]. Extensive benchmarking on 30 test functions, including both classical and complex CEC2019 functions, has shown that SCSO achieved the best solution in 63.3% of cases, demonstrating superior convergence and robustness compared with other metaheuristic algorithms. Furthermore, SCSO has been successfully applied to various complex engineering design problems, confirming its ability to locate local and global optima reliably. SCSO is stable, flexible, simple to implement, derivative-free, and computationally efficient, which makes it adaptable to a wide range of problems [27]. Previous studies have applied SCSO in diverse domains, such as power management [28], battery quality management [29], biomedical applications [15], and cancer classification [16]. These characteristics make SCSO particularly suitable for feature selection and hyperparameter optimization in the gallstone classification task, where efficient search and convergence are critical for reducing computational cost while maintaining high model performance.

2.3.2. Optimizer Problem Development

We have designed our framework so that the dataset is first transformed into ten cross-validation folds, each keeping 70% of the data for training and 30% for testing. The training set of each fold is then optimized separately. The optimization stage has two main tasks: (I) feature reduction and (II) hyperparameter tuning. We have integrated SCSO with the RF classifier to calculate the fitness of each search agent (cat) participating in the hunting process, using the F-score as the fitness function to be maximized. We have used 100 iterations (epochs) and a population size of 50. In each iteration, the RF classifier has evaluated the fitness of every agent, and the agent with the highest F-score has been recorded as the current best. After 100 epochs, SCSO has returned the global best fitness value together with the corresponding hyperparameter set and selected features. This whole process is detailed in Algorithm 1.
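The fitness evaluation of a single agent can be sketched as below. The encoding is an assumption for illustration: the first `n_features` coordinates of an agent in $[0, 1]$ act as a feature mask (a feature is selected if its coordinate exceeds 0.5), and the remaining four coordinates are mapped to integer hyperparameters. The names and ranges in `HP_BOUNDS` are hypothetical, since the text does not name the four tuned hyperparameters, and `train_and_predict` is a user-supplied callable that would wrap the RF classifier.

```python
# Hypothetical hyperparameter search ranges (not specified in the paper).
HP_BOUNDS = {
    "n_estimators": (10, 500),
    "max_depth": (1, 20),
    "min_samples_split": (2, 10),
    "min_samples_leaf": (1, 10),
}


def f1_score(y_true, y_pred, positive=1):
    """F1 = 2TP / (2TP + FP + FN) for the given positive label."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def decode_agent(agent, n_features, hp_bounds=HP_BOUNDS):
    """Split an agent vector into selected feature indices and integer hyperparameters."""
    mask = agent[:n_features]
    selected = [i for i, v in enumerate(mask) if v > 0.5]
    if not selected:  # guard: always keep at least the strongest feature
        selected = [max(range(n_features), key=lambda i: mask[i])]
    hparams = {}
    for v, (name, (lo, hi)) in zip(agent[n_features:], hp_bounds.items()):
        hparams[name] = int(round(lo + v * (hi - lo)))  # map [0, 1] onto [lo, hi]
    return selected, hparams


def fitness(agent, n_features, train_and_predict):
    """F-score fitness; train_and_predict(selected, hparams) -> (y_true, y_pred)."""
    selected, hparams = decode_agent(agent, n_features)
    y_true, y_pred = train_and_predict(selected, hparams)
    return f1_score(y_true, y_pred)
```

Thresholding a continuous position is one common way to let a continuous optimizer such as SCSO select features; other transfer functions are equally possible.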

Algorithm 1.

MHA-based optimization for each training fold using RF hyperparameters.

Since each training set in the 10-fold cross-validation has been optimized independently, we obtained 10 different sets of features and hyperparameters. To finalize the model configuration, we applied a majority voting strategy (taking, for each hyperparameter, the value that appeared in the highest number of folds) to determine the optimal hyperparameters, and we used the union of all selected feature subsets to form the final feature set. Figure 5 presents the main operational view of the process, from optimization to evaluation of each distinct fold set, while ensuring that no test data leak into the model before evaluation.
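The consolidation rule can be expressed compactly. In this sketch (function name hypothetical), the final feature set is the union of the per-fold subsets, and each hyperparameter takes its most frequent value across folds; ties are broken by first occurrence, which is an assumption because the text does not specify a tie-break rule.

```python
from collections import Counter


def finalize_configuration(fold_features, fold_hparams):
    """Union of per-fold feature subsets; per-hyperparameter majority vote (mode)."""
    final_features = sorted(set().union(*fold_features))
    final_hparams = {}
    for name in fold_hparams[0]:
        votes = Counter(h[name] for h in fold_hparams)
        # Counter.most_common keeps first-seen order among equal counts (assumed tie-break).
        final_hparams[name] = votes.most_common(1)[0][0]
    return final_features, final_hparams


features, hparams = finalize_configuration(
    [{6, 7}, {7, 8}, {12}],
    [{"max_depth": 10, "n_estimators": 100},
     {"max_depth": 10, "n_estimators": 200},
     {"max_depth": 5, "n_estimators": 200}],
)
print(features, hparams)  # [6, 7, 8, 12] {'max_depth': 10, 'n_estimators': 200}
```

Taking the union keeps every feature judged useful in at least one fold, at the cost of a larger final set than any single fold selected.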

Figure 5.

Operational task from optimization to evaluation.

2.3.3. Performance Evaluation

To assess the effectiveness of the developed models, five key evaluation metrics have been utilized: Accuracy, F1-score, Precision, Recall, and Area Under the ROC Curve (AUC). Furthermore, computational efficiency has been examined using two time-based measures: training time and testing time. Execution times have been evaluated on a per-fold and a per-sample basis. For each fold, we directly measured the total training and testing time. Since each fold contains 224 training samples and 95 testing samples, the average time per sample was obtained by dividing the total time by the corresponding number of samples. Finally, we report the average per-sample training and testing times across all folds. The formal definitions of the employed metrics are presented below:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

In these formulas, $TP$ (True Positives) refers to the number of instances where the model correctly identifies the positive class (e.g., patients with gallstones), while $TN$ (True Negatives) indicates the correct prediction of negative class instances. $FP$ (False Positives) refers to cases where the model incorrectly predicts a negative instance as positive, and $FN$ (False Negatives) captures the positive instances that were misclassified as negative.
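As a sanity check, the standard definitions of accuracy, precision, recall, and F1-score can be applied to the confusion-matrix counts of the RF-only model without CV from Section 3.1: 34 true positives, 44 true negatives, 6 false positives, and 12 false negatives (these labels follow from the reported precision and recall). The helper below is a plain-Python sketch with a hypothetical name.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1-score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


m = classification_metrics(tp=34, tn=44, fp=6, fn=12)
print({k: round(100 * v, 2) for k, v in m.items()})
# {'accuracy': 81.25, 'precision': 85.0, 'recall': 73.91, 'f1': 79.07}
```

The recovered values match the 81.25% accuracy, 85.00% precision, 73.91% recall, and 79.07% F1-score reported for this configuration.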

2.3.4. SHAP-Based Interpretability

SHapley Additive exPlanations (SHAP) is a popular interpretability framework proposed by Lundberg et al. [30], which builds on the concept of Shapley values from cooperative game theory, originally developed by Lloyd Shapley [31]. SHAP calculates the individual contribution $\phi_j$ of each feature $j$ to a particular prediction, as defined by the following equation:

$$\phi_j = \sum_{R \subseteq G \setminus \{j\}} \frac{|R|!\,\big(|G| - |R| - 1\big)!}{|G|!} \Big[f_{R \cup \{j\}}\big(x_{R \cup \{j\}}\big) - f_R\big(x_R\big)\Big]$$

Here, $G$ represents the complete set of input features, and $R$ is any subset of $G$ that does not include feature $j$. The term $f_R(x_R)$ denotes the model’s output when only the features in subset $R$ are considered, while $f_{R \cup \{j\}}(x_{R \cup \{j\}})$ reflects the prediction with feature $j$ included.

The final prediction for a given instance $x$ can be approximated by summing the expected model output $\phi_0 = \mathbb{E}[f(x)]$ and the SHAP values of all features:

$$f(x) \approx \phi_0 + \sum_{j=1}^{|G|} \phi_j$$

If the value of $f(x)$ exceeds the classification threshold (e.g., 0.5), the instance is classified as Class 1; otherwise, it is assigned to Class 0.
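For a handful of features, the Shapley formula can be evaluated exactly by enumerating all subsets, which makes the additivity property easy to verify. The sketch below uses a hypothetical two-feature toy model in which features outside $R$ are replaced by a baseline value of 0; practical SHAP explainers use much faster algorithms than this brute-force enumeration, so the code is illustrative only.

```python
from itertools import combinations
from math import factorial


def exact_shapley(value_fn, n_features):
    """Exact Shapley values: weighted average of v(R + {j}) - v(R) over subsets R of the other features."""
    g = n_features
    phis = []
    for j in range(g):
        others = [i for i in range(g) if i != j]
        phi = 0.0
        for size in range(g):
            weight = factorial(size) * factorial(g - size - 1) / factorial(g)
            for subset in combinations(others, size):
                r = frozenset(subset)
                phi += weight * (value_fn(r | {j}) - value_fn(r))
        phis.append(phi)
    return phis


# Hypothetical toy model; features outside R are set to a baseline of 0.
x = [1.0, 2.0]


def v(r):
    z = [x[i] if i in r else 0.0 for i in range(len(x))]
    return 2 * z[0] + 3 * z[1] + z[0] * z[1]


phi = exact_shapley(v, 2)
print(phi)  # [3.0, 7.0]
```

The interaction term $x_0 x_1$ contributes 2 to the output and is split equally between the two features, and $\phi_0 + \phi_1 = 10$, which equals the model output minus the baseline output.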

2.3.5. Diverse Counterfactual Explanations (DiCE)

Diverse Counterfactual Explanations (DiCE) is a model-agnostic technique that generates a set of diverse and plausible counterfactual instances to help users understand how modifications to input features can lead to different model predictions [32]. The DiCE optimization objective is formulated as in Equation (17), where $C(x)$ denotes the set of $k$ counterfactuals $\{c_1, \ldots, c_k\}$ generated for a given input $x$:

$$C(x) = \arg\min_{c_1, \ldots, c_k} \; \frac{1}{k} \sum_{i=1}^{k} \mathrm{yloss}\big(f(c_i), y\big) + \frac{\lambda_1}{k} \sum_{i=1}^{k} \mathrm{dist}(c_i, x) - \lambda_2 \, \mathrm{dpp\_diversity}(c_1, \ldots, c_k) \tag{17}$$

In this formulation, the term $\mathrm{yloss}(f(c_i), y)$ denotes the classification loss, driving each counterfactual toward the specified target class $y$. The proximity term $\mathrm{dist}(c_i, x)$ discourages counterfactuals that stray too far from the original instance $x$, thereby maintaining realism. Finally, $\mathrm{dpp\_diversity}(c_1, \ldots, c_k)$ leverages Determinantal Point Processes (DPPs) to encourage variety among the generated counterfactuals, ensuring that the returned set offers multiple distinct and actionable alternatives. The hyperparameters $\lambda_1$ and $\lambda_2$ control the trade-off between proximity and diversity. By optimizing this objective, DiCE provides a diverse set of actionable alternatives that can alter the model’s output, enabling users to explore multiple pathways to a desired prediction without assuming a single optimal feature modification [32].
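To make the three terms of the objective concrete, the sketch below evaluates it for a fixed candidate set rather than optimizing it. Several choices are assumptions of this sketch: a squared-error stand-in for `yloss`, an unscaled mean absolute distance for `dist` (the original formulation additionally scales distances per feature), the kernel $K_{ij} = 1/(1 + \mathrm{dist}(c_i, c_j))$ for the DPP term, and illustrative weights $\lambda_1 = 0.5$ and $\lambda_2 = 1.0$. All function names are hypothetical and unrelated to the API of the DiCE library.

```python
def mean_abs_distance(a, b):
    """Unscaled mean absolute difference between two feature vectors."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    n = len(m)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0  # singular matrix
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # a row swap flips the sign
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return det


def dpp_diversity(cfs):
    """det(K) with K_ij = 1 / (1 + dist(c_i, c_j))."""
    k = [[1.0 / (1.0 + mean_abs_distance(a, b)) for b in cfs] for a in cfs]
    return determinant(k)


def dice_objective(cfs, x, predict_proba, target, lam1=0.5, lam2=1.0):
    """Value of the Eq. (17) objective for a fixed set of counterfactuals."""
    k = len(cfs)
    yloss = sum((predict_proba(c) - target) ** 2 for c in cfs) / k  # stand-in loss
    proximity = sum(mean_abs_distance(c, x) for c in cfs) / k
    return yloss + lam1 * proximity - lam2 * dpp_diversity(cfs)


print(dpp_diversity([[0.0, 0.0], [2.0, 0.0]]))  # 0.75
```

Identical counterfactuals make $K$ singular, so the diversity term drops to 0; spreading them apart increases it and lowers the objective.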

3. Results

The Results section is structured to assess the performance of classifiers under multiple configurations, facilitating a comprehensive comparison. Initially, evaluations are conducted using classifiers with their default parameters, both without cross-validation and with cross-validation, to observe the baseline performance. Subsequently, the classifiers are re-evaluated after optimization, again considering both scenarios, with and without cross-validation. This layered approach enables a clear understanding of the impact of both parameter tuning and cross-validation on overall classification performance.

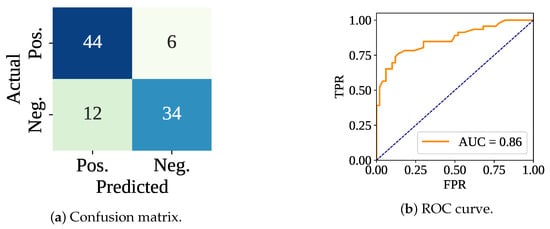

3.1. Only Classifier Without Cross-Validation

Table 2 shows both the training and testing performance, including the execution time. With default parameters and without cross-validation, the RF classifier has achieved 100% training accuracy. However, the test performance has been lower: the testing accuracy, F-score, precision, and recall have been recorded as 81.25%, 79.07%, 85.00%, and 73.91%, respectively. Figure 6 shows the confusion matrix and ROC curve: the model has made 78 correct predictions (44 TN and 34 TP) out of 96, together with 6 FP and 12 FN predictions. The ROC curve shows that the model has achieved an AUC value of 0.86. Training has taken 230.46 ms in total, i.e., 1.03 ms per sample, while the per-sample testing time has been 0.16 ms.

Table 2.

Performance using only RF classifier without cross-validation (Acc. = Accuracy; F1 = F-score; Pre. = Precision; Rec. = Recall; ms = milliseconds).

Figure 6.

Confusion matrix and ROC curve using only the classifier without cross-validation.

3.2. Only Classifier with Cross-Validation

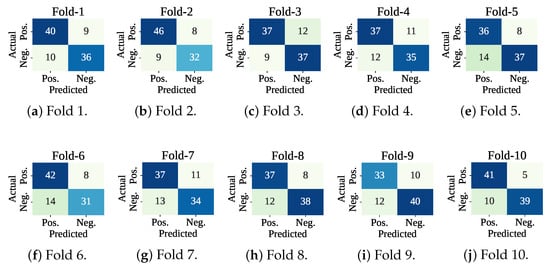

We have also performed a 10-fold cross-validation operation, using only the classifier. During this operation, we have kept the classifier with default parameters. Table 3 presents the results of a 10-fold cross-validation using only the Random Forest (RF) classifier with its default parameters. For each fold, it has reported the performance metrics—Accuracy, F1-score, Precision, Recall, and computation time—for both the training and testing phases. The classifier has consistently achieved perfect scores (100%) across all training folds, indicating that it has perfectly fitted the training data. However, the test performance has varied across folds, with accuracy ranging from 74.74% to 84.21%. On average, the RF classifier has achieved 78.42% accuracy, 77.75% F1-score, 80.01% precision, and 75.75% recall on the test sets, while maintaining a mean fold-wise training time of 252.93 ms and testing time of 9.82 ms. These results have demonstrated the classifier’s stable generalization capability and computational efficiency.

Table 3.

Performance using only RF classifier with 10-fold cross-validation (Acc. = Accuracy; F1 = F-score; Pre. = Precision; Rec. = Recall; ms = milliseconds).

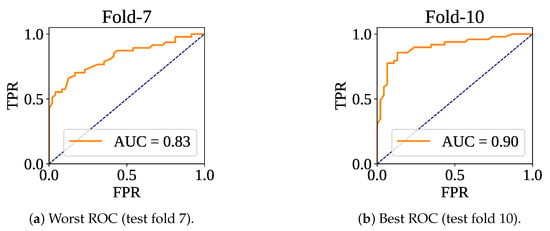

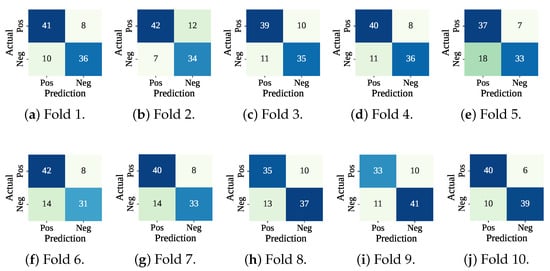

Figure 7 shows the confusion matrices of the folds. The lowest number of misclassifications has been recorded at fold 10, and the highest number of wrong predictions has been found at fold 7. At test fold 10, the model has made 15 wrong predictions (: 10 and : 5). At test fold 7, the classifier model has found 24 wrong predictions (: 13 and : 11). Figure 8 shows the ROC curve for the fold set 7 (worst AUC: 0.83) and fold set 10 (best AUC: 0.90).

Figure 7.

Confusion matrices using only the classifier with cross-validation.

Figure 8.

ROC curve for worst and best test fold.

3.2.1. Optimized Classifier with Cross-Validation

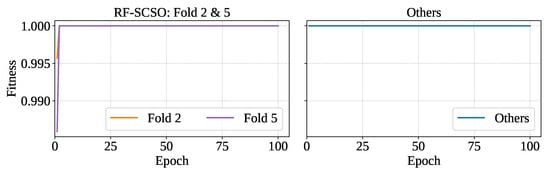

The RF classifier optimized with SCSO (RF-SCSO) on the training set of each fold separately has reduced the number of features and tuned the hyperparameters. RF-SCSO has reached the maximum fitness of 1 in every fold of optimization, although training folds 2 and 5 have converged more slowly: the optimizer has reached a fitness of 1 at the first epoch for all training sets except folds 2 and 5, which have reached it at epoch 2. Figure 9 shows the fitness vs. epoch plot for the optimized model.

Figure 9.

Fitness vs epoch for optimized model.

During the optimization process, each cross-validation fold has independently selected a distinct subset of features along with a corresponding set of hyperparameters. Table 4 presents the optimized feature subsets and hyperparameters obtained from each of the ten folds, including the finalized features and hyperparameters. To construct a robust and generalized model, the finalized feature set has been derived by taking the union of all features selected across the folds, thereby preserving any feature that was deemed important in at least one fold. In contrast, the finalized hyperparameters have been determined using majority voting, where the most frequently occurring values among the folds have been selected as the final configuration.

Table 4.

Fold-wise and finalized optimized hyperparameters and selected features.

The table provides a fold-wise breakdown of the selected feature indices and the optimized values of four hyperparameters, denoted as through . These hyperparameters may correspond to model-specific tuning variables such as the number of estimators, tree depth, or minimum sample split values, depending on the classifier used. Notably, fold-wise variations in both feature selection and hyperparameter values highlight the model’s sensitivity to different data partitions. The final row of the table consolidates the outcome of this optimization strategy: a unified feature set comprising 13 distinct features {6, 7, 8, 12, 16, 20, 25, 27, 30, 32, 33, 35, 37}, and a consistent set of hyperparameters (, , , ) chosen through statistical mode. This comprehensive strategy ensures that the final model benefits from the diverse insights captured across all folds while maintaining parameter stability for reproducibility and performance.

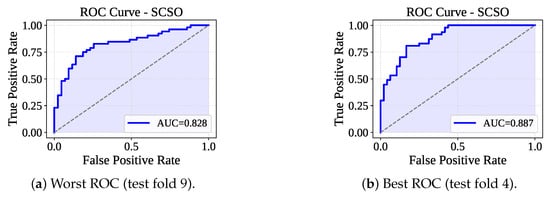

Table 5 presents the performance of the RF-SCSO model evaluated through 10-fold cross-validation. The model has consistently achieved perfect training performance across all folds, with 100% accuracy, F1-score, precision, and recall. On the test sets, the model has shown stable and competitive performance, with an average accuracy of 78.32%, F1-score of 77.44%, precision of 80.36%, and recall of 75.01%. The average fold-wise training and testing times were 736.09 ms and 16.95 ms, respectively. Figure 10 shows the confusion matrices obtained from RF-SCSO. Test fold set 5 has the most misclassifications (: 18 and : 7), and test fold set 10 has the fewest (: 10 and : 6). Figure 11 presents the ROC curves for test fold sets 9 (worst AUC) and 4 (best AUC), for which RF-SCSO has yielded AUC values of 0.828 and 0.887, respectively.

Table 5.

Performance using RF–SCSO with 10-fold cross-validation (Acc. = Accuracy; F1 = F-score; Pre. = Precision; Rec. = Recall; ms = milliseconds).

Figure 10.

Confusion matrices using RF-SCSO with cross-validation.

Figure 11.

ROC curve for worst and best test fold.

For a balanced comparison of the models, we have additionally evaluated RF-SCSO without CV. Table 6 presents the performance of RF-SCSO without CV, including the total training and testing time for 224 and 95 samples, respectively. The model achieved 100% accuracy, F1-score, precision, and recall on the training set with a training time of 518.94 ms, while on the test set it obtained 79.17% accuracy, 77.78% F1-score, 79.55% precision, and 76.09% recall with a testing time of 21.80 ms. The per-sample training and testing times are 2.32 ms and 0.23 ms, respectively.

Table 6.

Performance using RF-SCSO classifier without cross-validation (Acc. = Accuracy; F1 = F-score; Pre. = Precision; Rec. = Recall; ms = milliseconds).

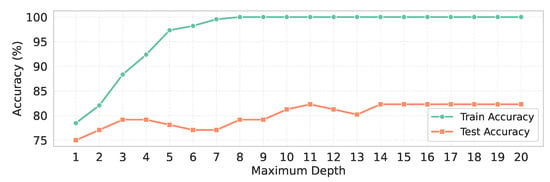

3.2.2. Controlling the Overfitting

Because of the notable gap between the training and test accuracy, we have conducted an additional experiment to examine overfitting in the RF-only model without CV, which has demonstrated the highest test performance. Specifically, we have trained the RF classifier without CV with the maximum depth varied from 1 to 20 to observe its effect on model generalization.

From Table 7, when the maximum depth has been set to lower values (e.g., depth = 1–3), the training accuracy has remained below 75%, and the test accuracy has been below 72%, with a very small gap of around 3%, indicating the absence of overfitting. However, as the maximum depth has increased, the training accuracy has gradually risen, reaching above 95% at higher depths, while the test accuracy has improved only marginally, peaking around 78%. Consequently, a substantial gap between training and test accuracy has emerged, indicating clear overfitting. A matter of consideration is that after the maximum depth has reached 14, both training and test accuracies have become saturated, with the gap stabilizing at approximately 17.71%, as shown in Figure 12.
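A depth sweep of this kind can be sketched as below. This is an illustrative sketch, not the authors' experiment: the data are synthetic (`make_classification` with label noise via `flip_y`), and the stratified 70/30 hold-out split (`test_size=0.3`) and `n_estimators=100` are assumptions rather than settings stated in the text.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split


def depth_sweep(X, y, depths=range(1, 21), test_size=0.3, seed=42):
    """Train one RF per max_depth; record train accuracy, test accuracy, and their gap."""
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=test_size, stratify=y, random_state=seed)
    rows = []
    for depth in depths:
        model = RandomForestClassifier(n_estimators=100, max_depth=depth, random_state=seed)
        model.fit(X_tr, y_tr)
        train_acc = accuracy_score(y_tr, model.predict(X_tr))
        test_acc = accuracy_score(y_te, model.predict(X_te))
        rows.append((depth, train_acc, test_acc, train_acc - test_acc))
    return rows


# Synthetic, deliberately noisy stand-in for a 38-feature table (NOT the gallstone data)
X, y = make_classification(n_samples=319, n_features=38, n_informative=8,
                           flip_y=0.1, random_state=0)
results = depth_sweep(X, y)
for depth, train_acc, test_acc, gap in results:
    print(f"depth={depth:2d}  train={train_acc:.3f}  test={test_acc:.3f}  gap={gap:.3f}")
```

Shallow trees keep the gap small at the cost of accuracy; deeper trees fit the training set almost perfectly, so the gap grows while test accuracy levels off.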

Table 7.

Performance of only RF without CV for different maximum depths (Acc. = Accuracy; F1 = F-score; Pre. = Precision; Rec. = Recall; Gap = Train-Test Accuracy Gap).

Figure 12.

Maximum depth vs. training and test accuracy.

3.2.3. Computational Complexity

Table 8 presents the computational time of the two models under 10-fold cross-validation. For the standard RF classifier, the fold-wise training time ranged from 206.77 ms to 499.75 ms and the per-sample training time from 0.923 ms to 2.231 ms, with averages of 252.93 ms and 1.129 ms, respectively. The fold-wise testing time ranged from 8.15 ms to 13.25 ms and the per-sample testing time from 0.086 ms to 0.139 ms, with averages of 9.82 ms and 0.103 ms, respectively.

Table 8.

Computational time complexity of the models (ms = milliseconds).

For the RF–SCSO model, the fold-wise training time has ranged from 506.59 ms to 1358.37 ms, and the training per sample has varied from 2.262 ms to 6.064 ms, with averages of 736.09 ms and 3.286 ms, respectively. The fold-wise testing time has ranged from 7.71 ms to 64.64 ms, and the testing per sample has varied from 0.081 ms to 0.680 ms, with averages of 16.95 ms and 0.178 ms, respectively.
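Fold-wise timings of this kind can be collected with `time.perf_counter`, as in the sketch below. It is a hedged illustration on synthetic data: absolute times depend on hardware, and dividing each fold's time by that fold's own sample count is a choice made here, since the exact normalization behind Table 8 is not spelled out in this section.

```python
import time

import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold


def fold_timings(X, y, n_splits=10, seed=0):
    """Per fold: [train ms, train ms/sample, test ms, test ms/sample]."""
    skf = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    rows = []
    for tr, te in skf.split(X, y):
        model = RandomForestClassifier(n_estimators=100, random_state=seed)
        t0 = time.perf_counter()
        model.fit(X[tr], y[tr])
        train_ms = (time.perf_counter() - t0) * 1000.0
        t0 = time.perf_counter()
        model.predict(X[te])
        test_ms = (time.perf_counter() - t0) * 1000.0
        rows.append([train_ms, train_ms / len(tr), test_ms, test_ms / len(te)])
    return np.array(rows)


# Synthetic stand-in data (NOT the gallstone data)
X, y = make_classification(n_samples=319, n_features=13, random_state=0)
timings = fold_timings(X, y)
summary = {"min": timings.min(axis=0), "max": timings.max(axis=0), "mean": timings.mean(axis=0)}
print(summary)
```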

3.2.4. Misclassifications

This subsection examines the misclassified samples. We compared the results of the three approaches used to classify the presence of gallstones and found that several records were misclassified by all of them: indices 73, 94, 116, 118, 147, 176, 177, 194, 195, 203, 211, 250, 299, and 316 were misclassified by the only RF classifier with and without cross-validation and by RF-SCSO with cross-validation. Tables 9 and 10 present the clinical profiles of these cases, split into false negatives (gallstones present but predicted absent) and false positives (gallstones absent but predicted present). The false negative samples generally exhibited lower levels of key clinical markers, including CRP (mean = 0.718), Creatinine (mean = 0.734), and AAST (mean = 15.4). None of these patients had diabetes (DM = 0); they had moderate obesity percentages (mean = 31.74%), lower Glucose (mean = 94.8), and a mean LDL of 161.2. These characteristics likely produced a subtler clinical presentation, which led the model to misclassify them as gallstone-negative.
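Finding the records misclassified by every approach is a set intersection over each model's errors, followed by a split into false negatives and false positives. The sketch below is a minimal illustration with hypothetical indices and predictions; `positive=0` follows the text's convention that class 0 is gallstone positive.

```python
import numpy as np


def misclassified(index, y_true, y_pred):
    """Set of sample indices whose prediction differs from the true label."""
    index, y_true, y_pred = (np.asarray(a) for a in (index, y_true, y_pred))
    return set(index[y_true != y_pred].tolist())


def common_errors(index, y_true, predictions, positive=0):
    """Indices misclassified by every model, split into false negatives and false positives."""
    common = set.intersection(*(misclassified(index, y_true, p) for p in predictions))
    label = dict(zip(np.asarray(index).tolist(), np.asarray(y_true).tolist()))
    fn = sorted(i for i in common if label[i] == positive)  # truly positive, predicted negative
    fp = sorted(i for i in common if label[i] != positive)  # truly negative, predicted positive
    return fn, fp


# Hypothetical example: five samples and three models' predictions
index = [10, 11, 12, 13, 14]
y_true = [0, 1, 0, 1, 0]
predictions = [
    [1, 0, 0, 1, 1],  # hypothetical model A
    [1, 0, 1, 1, 0],  # hypothetical model B
    [1, 0, 0, 0, 0],  # hypothetical model C
]
fn, fp = common_errors(index, y_true, predictions)
print(fn, fp)
```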

Table 9.

False negative predictions.

Table 10.

False positive predictions (In. = Index).

Conversely, the false positive group showed higher values for several metabolic and inflammatory features, such as Glucose (mean = 115.67) and ALP (mean = 81.89), with a mean LDL of 123.22; these profiles may have mimicked those of gallstone-positive cases. Two patients in this group had diabetes (DM = 1), and obesity levels were more variable (mean = 27.64%). Additionally, higher ICW (mean = 24.17) and Weight (mean = 82.71 kg) were observed, which may have biased the model toward a positive prediction.

3.3. SHAP Analysis

In this SHAP analysis, we present the mean SHAP plot and the summary plot to visualize the feature ranking and each feature’s contribution to the overall model. We then use waterfall plots for an in-depth analysis of individual predictions, especially misclassified ones.

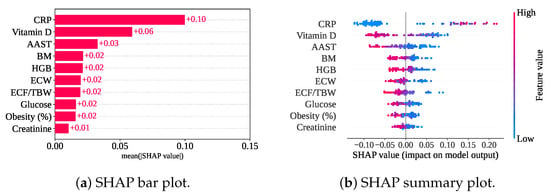

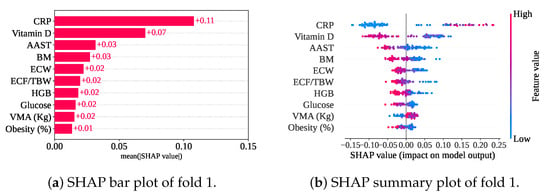

3.3.1. SHAP of Only Classifier Without Cross-Validation

Figure 13 shows the SHAP plots for the top ten features of the only RF classifier without cross-validation. CRP has the highest mean SHAP value (), indicating the largest contribution to the model. Vitamin D, AAST, BM, and HGB are the next four contributors, with mean SHAP values of , , , and , respectively, as shown in Figure 13b.
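Global SHAP bar plots, such as the `shap` library's bar plot, rank feature $j$ by its mean absolute SHAP value $\frac{1}{N}\sum_{i=1}^{N}\lvert\phi_{ij}\rvert$ over $N$ samples. The sketch below computes that ranking from an already-computed SHAP matrix; the numbers in the example are hypothetical and serve only to illustrate the computation.

```python
import numpy as np


def mean_abs_shap_ranking(shap_values, feature_names, top_k=10):
    """Rank features by mean |SHAP| over samples (rows = samples, columns = features)."""
    importance = np.abs(np.asarray(shap_values, dtype=float)).mean(axis=0)
    order = np.argsort(importance)[::-1][:top_k]  # descending importance
    return [(feature_names[j], float(importance[j])) for j in order]


# Hypothetical SHAP matrix for two samples and three features (illustrative numbers only)
phi = [[-0.20, 0.10, 0.02],
       [0.30, -0.06, -0.04]]
ranking = mean_abs_shap_ranking(phi, ["CRP", "Vitamin D", "AAST"])
print(ranking)
```

Taking absolute values before averaging matters: a feature whose contributions are large but split between positive and negative signs would otherwise appear unimportant.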

Figure 13.

SHAP plots for only the classifier without cross-validation.

Figure 13a shows the summary plot for the only classifier without cross-validation. Low CRP values (blue) mainly produce negative SHAP values, and a negative SHAP value pulls the decision toward class 0 (gallstone positive). Conversely, for other features, high values (pink) produce negative SHAP values, which likewise push the decision toward the gallstone-positive class.

3.3.2. SHAP of Only Classifier with Cross-Validation

Figure 14 shows the mean SHAP values of (CRP), (Vitamin D), (AAST and BM), (ECW, ECF/TBW, HGB, Glucose, and VMA), and (Obesity). Thus, CRP, Vitamin D, AAST, BM, and ECW are the top five features for this model as well. Compared with the only classifier without cross-validation, ECW replaces HGB in the fifth position. The summary plot for the cross-validated model shows a pattern similar to that of the model without cross-validation. In addition, lower VMA values yield negative SHAP values, pushing the prediction toward the gallstone-positive group.

Figure 14.

SHAP plots for only the classifier with cross-validation.

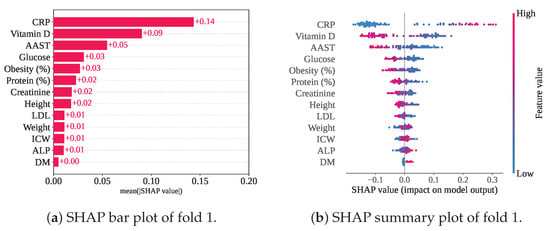

3.3.3. SHAP for RF-SCSO

Figure 15 illustrates the mean SHAP values and summary plot, highlighting the rankings of the selected features within the RF-SCSO framework. Among the 13 selected features, CRP has consistently emerged as the top-ranked contributor, as observed in previous frameworks. Notably, lower CRP levels have been associated with negative SHAP values, indicating a link with Class 0 (gallstone positive). Vitamin D and AAST have followed CRP in importance, with mean SHAP values of and , respectively, while CRP has maintained the highest mean SHAP value of . Additionally, higher levels of Vitamin D, AAST, Glucose, and Obesity have influenced the model towards classifying individuals as gallstone positive.

Figure 15.

SHAP plots for RF-SCSO with cross-validation.

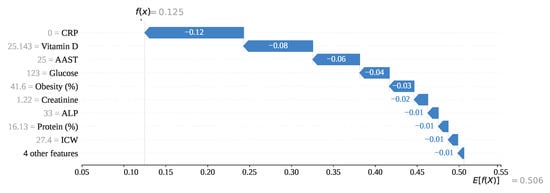

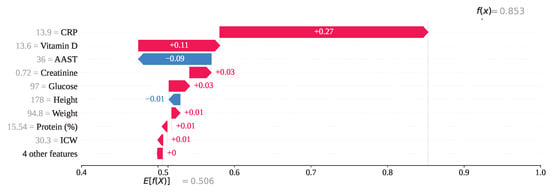

To understand the model at a more fundamental level, we analyzed individual predictions of each type: true positive (Index: 25), true negative (Index: 208), false positive (Index: 176), and false negative (Index: 73). Figure 16 illustrates how the prediction is formed for index 25 using a SHAP waterfall plot. In this case, CRP, with a feature value of 0, contributed the most to the prediction, with a SHAP value of . Additionally, Vitamin D (), AAST (), Glucose (), and Obesity (), along with the other contributing features, all exhibited negative SHAP values. Starting from the base value , these cumulative negative contributions led to a final output of , which is below the classification threshold of 0.5. Consequently, the model correctly predicted the instance as class 0 (gallstone positive).
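A waterfall plot rests on the additivity (efficiency) property of Shapley values: the base value plus all feature contributions equals the model output. The sketch below checks this by computing exact Shapley values by brute force for a hypothetical three-feature toy model (not the trained RF), where "absent" features are replaced by a single hypothetical background point. This is a simplified interventional variant; SHAP's `TreeExplainer` for forests computes the values differently, but the same additivity holds.

```python
from itertools import combinations
from math import exp, factorial

import numpy as np


def exact_shapley(f, x, background):
    """Exact Shapley values of f at x; 'absent' features take their background value."""
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    n = len(x)

    def value(subset):
        z = background.copy()
        idx = list(subset)
        z[idx] = x[idx]  # features in the coalition take the instance's values
        return f(z)

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                phi[i] += weight * (value(subset + (i,)) - value(subset))
    return value(()), phi


def toy_model(z):
    """Hypothetical probability of class 1 (gallstone negative) from CRP, Vitamin D, AAST."""
    return 1.0 / (1.0 + exp(-(0.4 * z[0] - 0.05 * z[1] - 0.03 * z[2])))


x = [0.0, 30.0, 30.0]           # hypothetical CRP, Vitamin D, AAST of one patient
background = [1.5, 21.0, 21.0]  # hypothetical reference values defining the base value
base, phi = exact_shapley(toy_model, x, background)
output = base + phi.sum()       # efficiency: base value + contributions = model output
predicted_class = 1 if output >= 0.5 else 0
print(base, phi, output, predicted_class)
```

Here every contribution is negative, so the output falls below the base value and below 0.5, and the toy model predicts class 0, mirroring the kind of reasoning a waterfall plot displays.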

Figure 16.

Individual prediction of a true positive (Index: 25).

For index 208, the feature CRP with a value of 13.9 has exhibited the highest SHAP value of , indicating a strong positive influence on the model’s output as shown in Figure 17. Vitamin D, with a feature value of 13.6, has also contributed positively with a SHAP value of . In contrast, AAST, having a value of 36, has contributed negatively with a SHAP value of . All other features, except Height, have shown a positive influence on the prediction outcome. Consequently, the model has classified this instance as class 1 (gallstone negative), with a final SHAP-based output value of , which exceeds the decision threshold of 0.5.

Figure 17.

Individual prediction of a true negative (Index: 208).

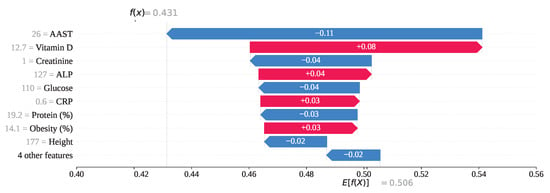

For index 176 in Figure 18, the feature AAST, with a value of 26, has ranked first in influence with a SHAP value of −0.11, strongly contributing to the prediction of gallstone positivity. Additionally, features such as Creatinine = 1, Glucose = 110, Protein = 19.2, and Height = 177 have also yielded negative SHAP values, collectively steering the model toward an incorrect prediction of class 0. In contrast, Vitamin D = 12.7, ALP = 127, CRP = 0.6, and Obesity = 14.1 have produced positive SHAP values, attempting to counterbalance the misclassification. Ultimately, the cumulative SHAP value for this instance has been computed as , which falls below the 0.5 threshold. Therefore, the model has predicted this individual as gallstone-positive, although the true label is gallstone-negative.

Figure 18.

Individual prediction of a false positive (Index: 176).

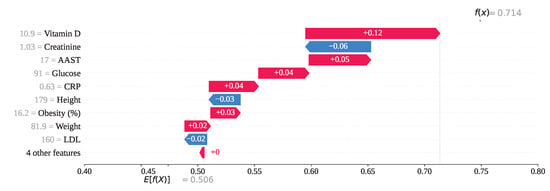

For index 73 in Figure 19, several features have positively influenced the model’s prediction. Specifically, Vitamin D, AAST, Glucose, CRP, Obesity, and Weight have contributed SHAP values of , , , , , and , respectively. As a result of these positive contributions, the overall SHAP output value has been calculated as , leading the model to predict class 1 (gallstone negative). However, this prediction is incorrect, as the individual has gallstones. On the other hand, Creatinine = 1.03, Height = 179, and LDL = 160 have exhibited negative SHAP values of , , and , respectively, attempting to steer the model toward the correct classification. Despite their influence, these features were insufficient to override the stronger positive contributions, resulting in a misclassification.

Figure 19.

Individual prediction of a false negative (Index: 73).

3.4. DiCE Analysis

In all of the developed frameworks, CRP, Vitamin D, and AAST have consistently emerged as the top three most influential features based on feature selection and SHAP analyses. To further understand their contribution to individual predictions, we have conducted a DiCE analysis focused specifically on these features. Table 11 illustrates ten counterfactual scenarios generated for the subject at index 176, who in reality belongs to the non-gallstone class (class 1). However, the model has incorrectly classified this individual as having gallstones (class 0), making it a false positive prediction. The goal of this counterfactual analysis is to determine how minimal, feasible changes to the input features could reverse the model’s incorrect decision and result in a correct classification.
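The idea behind such counterfactuals can be sketched with a simplified random-search generator that perturbs only the allowed features, keeps candidates that reach the desired class, and returns the closest ones. This is not the dice-ml implementation: it is written in the spirit of DiCE's model-agnostic random sampling but omits DiCE's diversity term. The classifier `toy_predict` and the feature bounds are hypothetical; only the query values (CRP = 0.6, Vitamin D = 12.7, AAST = 26.0) come from the text.

```python
import numpy as np


def random_counterfactuals(predict, x, vary, bounds, desired, k=10, n_samples=5000, seed=0):
    """Random-search counterfactuals that change only the features listed in `vary`."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    candidates = np.tile(x, (n_samples, 1))
    for j in vary:
        lo, hi = bounds[j]
        # Resample feature j in roughly half of the candidates, so some change few features
        mask = rng.random(n_samples) < 0.5
        candidates[mask, j] = rng.uniform(lo, hi, size=int(mask.sum()))
    valid = candidates[predict(candidates) == desired]
    # Proximity: range-normalized L1 distance over the varied features only
    dist = np.zeros(len(valid))
    for j in vary:
        lo, hi = bounds[j]
        dist += np.abs(valid[:, j] - x[j]) / (hi - lo)
    return valid[np.argsort(dist)][:k]


def toy_predict(Z):
    """Hypothetical classifier: class 1 (gallstone negative) when CRP dominates."""
    Z = np.atleast_2d(Z)
    return (0.4 * Z[:, 0] - 0.05 * Z[:, 1] - 0.03 * Z[:, 2] > 0).astype(int)


query = [0.6, 12.7, 26.0]                      # CRP, Vitamin D, AAST (index 176 in the text)
bounds = {0: (0.0, 20.0), 1: (5.0, 50.0), 2: (10.0, 150.0)}  # hypothetical ranges
cfs = random_counterfactuals(toy_predict, query, vary=[0, 1, 2], bounds=bounds, desired=1)
print(cfs)
```

Restricting `vary` to CRP, Vitamin D, and AAST mirrors the restriction described above; any other feature is held fixed at the query's value.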

Table 11.

Ten generated counterfactuals showing changes in CRP, Vitamin D, AAST, and Gallstone Status for index 176.

As the table shows, the most frequent and impactful change across all ten counterfactuals is the adjustment of CRP. In every counterfactual, the original CRP value of 0.6 was increased, to values between 3.0 and 15.2; alone or combined with slight adjustments to Vitamin D or AAST, this increase flips the model’s prediction from class 0 to class 1. For example, in CF#1, increasing CRP from 0.6 to 12.8 alone is sufficient to obtain the correct prediction, whereas CF#2 combines increases in both CRP (0.6 → 14.7) and AAST (26.0 → 144.9). Similarly, in CF#3 and CF#4, the prediction is corrected by moderate increases in both CRP and Vitamin D. CRP is the only feature altered in all ten counterfactuals, which strongly suggests that it is the most decisive feature in the model’s classification of this individual. Changes in Vitamin D and AAST occur less frequently and are often secondary, indicating supportive but less dominant roles.
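Counting how often each feature is altered across a set of counterfactuals, as done above, is a simple element-wise comparison with the query. In the sketch below, the first two rows mirror CF#1 and CF#2 as described in the text (with unchanged features assumed to keep their original values) and the third row is hypothetical.

```python
import numpy as np


def change_frequency(x, counterfactuals, names, tol=1e-9):
    """Number of counterfactuals in which each feature differs from the query instance."""
    diff = np.abs(np.asarray(counterfactuals, dtype=float) - np.asarray(x, dtype=float))
    changed = diff > tol
    return {name: int(changed[:, j].sum()) for j, name in enumerate(names)}


query = [0.6, 12.7, 26.0]   # CRP, Vitamin D, AAST of index 176 as quoted in the text
cfs = [
    [12.8, 12.7, 26.0],     # CRP only (mirrors CF#1 as described)
    [14.7, 12.7, 144.9],    # CRP and AAST (mirrors CF#2 as described)
    [5.0, 20.0, 26.0],      # hypothetical CRP + Vitamin D change
]
counts = change_frequency(query, cfs, ["CRP", "Vitamin D", "AAST"])
print(counts)
```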

We also conducted a DiCE-based counterfactual analysis for the individual at index 73, whose actual label is gallstone positive (class 0) but whom the models incorrectly classified as gallstone negative (class 1), a false negative. Table 12 presents the ten counterfactuals for this case. Notably, the CRP value remains unchanged at 0.63 in all counterfactuals, suggesting that CRP had minimal influence on this particular decision. Instead, the prediction is altered primarily through increases in Vitamin D and AAST. In most counterfactuals, Vitamin D, AAST, or both have been substantially increased. For example, in CF#1, Vitamin D is adjusted from 10.9 to 18.1 and AAST from 17.0 to 60.4, which corrects the model’s prediction. Similarly, CF#2 and CF#3 show strong upward shifts in both Vitamin D (to over 36.0) and AAST (to over 120.0), flipping the model output. In some cases (e.g., CF#5), only Vitamin D is changed (from 10.9 to 39.7), whereas in others, such as CF#6 and CF#9, only AAST is modified, indicating that either feature alone can sometimes suffice to reverse the prediction.

Table 12.

Ten generated counterfactuals showing changes in CRP, Vitamin D, AAST, and Gallstone Status for index 73.

This pattern suggests that, for index 73, the model places considerably more weight on AAST and Vitamin D than on CRP. The absence of any change in CRP across all ten counterfactuals reinforces this observation. We can therefore infer that the misclassification in this case can be reversed by appropriate adjustments to Vitamin D and AAST, highlighting their dominant influence on the prediction for this specific individual. Overall, the counterfactual explanations not only show how a false negative prediction could be corrected but also offer actionable insights into the features that govern the model’s decisions at the individual level.

To strengthen the DiCE explanation, we also examined how a correct prediction could be altered, using index 208, a gallstone-negative individual correctly classified by the RF-SCSO model. Table 13 presents five counterfactuals generated for this index to explore how the prediction could change class. Lowering CRP from 13.9 to values between 0.1 and 0.5 is sufficient to change the prediction from gallstone-negative (class 1) to gallstone-positive (class 0), while Vitamin D and AAST remain largely unchanged. In the fifth counterfactual, simultaneous changes in CRP and Vitamin D further illustrate how combined feature alterations influence the prediction. These results highlight the sensitivity of the RF-SCSO model to CRP and Vitamin D and demonstrate the practical utility of DiCE in identifying actionable feature-level changes that can reverse a prediction, including correcting misclassifications.

Table 13.

Five generated counterfactuals showing changes in CRP, Vitamin D, AAST, and Gallstone Status for index 208.

4. Discussion

In this study, we implemented three machine learning (ML) approaches to classify individuals as gallstone-positive or gallstone-negative using a tabular dataset. While numerous ML-based models exist for gallstone detection, most rely on image data. Table 14 compares our models with existing approaches. According to the literature [5,6,7,8], image-based classification models have achieved accuracies of up to 98%. In contrast, Esen et al. [10] used a tabular dataset and reported an accuracy of 85.42%, albeit with a comparatively large feature set of 38 variables.

Table 14.

Performance comparison (CV = Cross-validation).

In comparison, our proposed framework using only a Random Forest classifier without cross-validation (RF without CV) achieved an accuracy of 81.25%, an F-score of 79.07%, a precision of 85%, and a recall of 73.91% using all 38 features. These results are comparable to those of existing gallstone prediction models. To assess the generalizability of the model, we further employed 10-fold cross-validation (RF with CV), which yielded a mean accuracy of 78.42%, an F-score of 77.75%, a precision of 80.01%, and a recall of 75.75% using the same 38 features. Subsequently, we applied the SCSO algorithm to reduce the number of input features while maintaining competitive performance. RF-SCSO without CV achieved 79.17% accuracy, 77.78% F-score, 79.55% precision, and 76.09% recall using 13 features. The final framework, RF-SCSO with CV, achieved a mean accuracy of 78.32%, an F-score of 77.44%, a precision of 80.36%, and a recall of 75.01% using only 13 features. These findings indicate that the reduced feature set yields performance comparable to the full-feature RF with CV model, highlighting the effectiveness of the proposed optimization-based feature selection.
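For the two hold-out (without CV) frameworks, the reported F-scores are consistent with the harmonic mean of precision $P$ and recall $R$:

$$
F = \frac{2PR}{P + R}, \qquad \frac{2 \times 85 \times 73.91}{85 + 73.91} \approx 79.07\%, \qquad \frac{2 \times 79.55 \times 76.09}{79.55 + 76.09} \approx 77.78\%.
$$

For the cross-validated frameworks, the reported F-score is an average over folds, so it need not equal the harmonic mean of the averaged precision and recall.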

Moreover, this research identified CRP, Vitamin D, and AAST as the most influential features for gallstone prediction. CRP is associated with gallstone-related inflammation and disease severity and serves as a useful biomarker in gallbladder disorders [33]. A study of 4484 participants found that CRP levels are associated with a greater prevalence of gallstones [34], and Liu et al. reported that CRP is an independent risk factor for gallstone disease [35]. Another study of 6873 people showed that higher dietary vitamin D intake (D2 + D3) is positively associated with gallstone incidence [36]. AAST is a liver enzyme, and higher AAST levels are positively associated with gallstone formation [37]; a further study of 55,531 individuals showed that liver enzymes may increase the risk of developing gallstones [38]. Thus, previous studies support the association of CRP, Vitamin D, and AAST with gallstone disease, consistent with our finding that these are the three most important features.

Variations in CRP, Vitamin D, and AAST can significantly affect the model’s output and may even lead to misclassification, as demonstrated by the analyses of index 176 and index 73. Index 176 is a false positive with AAST = 26, Vitamin D = 12.7, and CRP = 0.6; here, AAST = 26 played a key role in the wrong prediction. Index 73 is a false negative, in which Vitamin D (10.9), AAST (17), and CRP (0.63) pushed the prediction toward class 1 (gallstone negative). Table 15 shows the mean values of the top three features separately for class 0 and class 1. In the original dataset, the mean values for individuals with gallstones are CRP = 0.46, Vitamin D = 24.90, and AAST = 23.91, while those for gallstone-negative individuals are 3.27, 17.83, and 19.41, respectively. Thus, CRP is lower in the gallstone-positive group than in the negative group, whereas Vitamin D and AAST are higher. For the misclassified indices predicted as class 1 (gallstone negative) in Table 9, the mean values of CRP, Vitamin D, and AAST are 0.718, 16.626, and 15.4, respectively.

Table 15.

Mean values of the selected features for gallstone-positive vs. gallstone-negative individuals.

On the other hand, for the misclassified indices predicted as class 0 (gallstone positive) in Table 10, the mean values of CRP, Vitamin D, and AAST are 0.279, 23.31, and 21.00, respectively. The two groups of misclassified indices therefore differ clearly, and for most of the three features, each group’s means lie closer to those of the class the model assigned than to those of its true class in the original dataset.
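The comparison between Table 15 and the error groups in Tables 9 and 10 amounts to computing feature means per true class and per error type. The sketch below does this for a hypothetical six-sample table (columns CRP, Vitamin D, AAST); `positive=0` follows the text's convention that class 0 is gallstone positive, and all values are illustrative.

```python
import numpy as np


def class_and_error_means(X, y_true, y_pred, positive=0):
    """Feature means per true class and for the FN / FP groups (binary labels)."""
    X = np.asarray(X, dtype=float)
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    fn = (y_true == positive) & (y_pred != positive)  # positive cases predicted negative
    fp = (y_true != positive) & (y_pred == positive)  # negative cases predicted positive
    out = {f"class_{c}": X[y_true == c].mean(axis=0) for c in np.unique(y_true).tolist()}
    out["FN"] = X[fn].mean(axis=0) if fn.any() else None
    out["FP"] = X[fp].mean(axis=0) if fp.any() else None
    return out


# Hypothetical rows: CRP, Vitamin D, AAST
X = [[0.2, 25, 24], [0.4, 26, 25], [0.8, 16, 15],
     [3.0, 18, 19], [3.5, 17, 20], [0.3, 23, 21]]
y_true = [0, 0, 0, 1, 1, 1]
y_pred = [0, 0, 1, 1, 1, 0]   # sample 2 is a false negative, sample 5 a false positive
res = class_and_error_means(X, y_true, y_pred)
print(res)
```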

In the dataset, gallstone-positive individuals have a mean CRP of 0.46, lower than that of negative individuals, and the false-positive indices have an even lower mean CRP of 0.279. Likewise, positive cases in the original dataset have higher mean Vitamin D and AAST, as do the false-positive cases. Because of these similarities in feature values, the models classified them as gallstone positive. We identified the same relation between the false-negative cases and the mean feature values of the gallstone-negative individuals in the original dataset. This analysis therefore indicates that, in this dataset, individuals with lower CRP and higher Vitamin D and AAST are more likely to have gallstones. The DiCE results in Tables 11 and 12 reflect the same relation.

Additionally, we showed that overfitting can be mitigated by reducing the maximum depth. At lower depths, accuracy was relatively low but the gap between training and test performance was minimal, whereas higher depths provided better accuracy at the cost of overfitting. Thus, the main contributions of this paper lie in feature reduction, mitigation of overfitting, and enhanced model interpretability, supported by SHAP-based feature importance analysis and DiCE-driven exploration of misclassifications and their correction.

In clinical practice, the findings from SHAP and DiCE can play an essential role. Because SHAP identified the most influential features (CRP, Vitamin D, and AAST), clinicians can place greater emphasis on these features during diagnosis. SHAP also shows how the model forms individual predictions, allowing clinicians to see which features play a key role for a particular patient. Reducing the original 38 features to 13 optimized features improves clinical and computational efficiency by reducing the number of required measurements, lowering the computational burden, and enabling faster clinical decision-making. Finally, DiCE explanations can help address misclassifications and anomalies in diagnosis, providing actionable insights for improving patient assessment.

Future Scope: This study has successfully classified gallstone presence using a tabular dataset and has shown commendable performance with the use of RF and SCSO. Beyond achieving reliable prediction, it has also provided important insights into feature rankings, causes of misclassification, and ways to correct incorrect predictions. These outcomes have the potential to contribute significantly to the medical field. In the future, this framework can be integrated into advanced medical technologies such as smart diagnosis systems, autonomous disease detection tools, and smartphone-based health monitoring applications. Such integration may enhance early detection, support clinical decision-making, and improve overall patient care.

Limitations: This study has several limitations that should be acknowledged. First, the dataset is relatively small (319 subjects), which restricts the statistical power and robustness of the findings. Second, as the data were collected from a single center, the results may be limited in their generalizability to broader populations with diverse demographic and clinical characteristics. Third, the absence of an external dataset with the same feature set prevented independent validation, which is critical for confirming the robustness and clinical applicability of our models. Finally, we observed a discrepancy between training and testing performance, suggesting a potential risk of overfitting, possibly driven by the limited sample size. Future work should focus on incorporating larger, multi-center datasets with external validation to improve the generalizability and reliability of gallstone prediction models.

5. Conclusions

Gallstones are hard crystalline formations in the gallbladder or bile ducts, primarily caused by excess cholesterol or bilirubin in bile, and affect approximately 10–20% of adults worldwide. Recent research shows growing acceptance of ML-based approaches for gallstone prediction using both image and tabular data. In this study, we proposed a Random Forest (RF) classifier optimized with the Sand Cat Swarm Optimization (SCSO) algorithm. Our only RF without CV framework achieved an accuracy of 81.25%, an F-score of 79.07%, a precision of 85%, and a recall of 73.91% using 38 features, while RF with CV obtained a 10-fold cross-validation mean accuracy of 78.42% with the same feature set. Furthermore, the optimized RF-SCSO frameworks without and with CV achieved accuracies of 79.17% and 78.32% (mean), respectively, using only 13 features, indicating that SCSO provides substantial feature reduction with minimal performance loss. To enhance interpretability, SHAP was employed to explain the model’s predictions, revealing that CRP, Vitamin D, and AAST are the three most influential features across all frameworks. Additionally, DiCE demonstrated how false positive (FP) and false negative (FN) predictions can be corrected. These findings underscore the potential of combining RF and SCSO for efficient and interpretable gallstone prediction.

Author Contributions

Conceptualization, P.S., J.-J.T., and A.-A.N.; Formal analysis, P.S. and A.-A.N.; Funding acquisition, J.-J.T.; Investigation, P.S. and A.-A.N.; Methodology, P.S. and A.-A.N.; Resources, P.S.; Software, P.S. and J.-J.T.; Supervision, J.-J.T. and A.-A.N.; Validation, A.-A.N.; Visualization, P.S., J.-J.T., and A.-A.N.; Writing—original draft, P.S.; Writing—review & editing, J.-J.T. and A.-A.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available from the UC Irvine Machine Learning Repository. Dataset name: Gallstone. https://doi.org/10.1097/md.0000000000037258.

Acknowledgments

We used large language models, namely ChatGPT (GPT-4) and DeepSeek (DeepSeek-V3), to improve sentence structure.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lammert, F.; Gurusamy, K.; Ko, C.W.; Miquel, J.; Méndez-Sánchez, N.; Portincasa, P.; van Erpecum, K.J.; Laarhoven, C.J.; Wang, D.Q.-H. Gallstones. Nat. Rev. Dis. Prim. 2016, 2, 16024. [Google Scholar] [CrossRef] [PubMed]

- Gurusamy, K.S.; Davidson, B.R. Gallstones. BMJ 2014, 348, g2669. [Google Scholar] [CrossRef] [PubMed]

- Cariati, A. Gallstone classification in western countries. Indian J. Surg. 2015, 77 (Suppl. 2), 376–380. [Google Scholar] [CrossRef] [PubMed]

- Thamer, S.J. Pathogenesis, diagnosis and treatment of gallstone disease: A brief review. Biomed. Chem. Sci. 2022, 1, 70–77. [Google Scholar] [CrossRef]

- Bozdag, A.; Yildirim, M.; Karaduman, M.; Mutlu, H.B.; Karaduman, G.; Aksoy, A. Detection of Gallbladder Disease Types Using a Feature Engineering-Based Developed CBIR System. Diagnostics 2025, 15, 552. [Google Scholar] [CrossRef]

- Wang, L.F.; Wang, Q.; Mao, F.; Xu, S.H.; Sun, L.P.; Wu, T.F.; Zhou, B.Y.; Yin, H.H.; Shi, H.; Zhang, Y.Q.; et al. Risk stratification of gallbladder masses by machine learning-based ultrasound radiomics models: A prospective and multi-institutional study. Eur. Radiol. 2023, 33, 8899–8911. [Google Scholar] [CrossRef]

- Obaid, A.M.; Turki, A.; Bellaaj, H.; Ksantini, M.; AlTaee, A.; Alaerjan, A. Detection of gallbladder disease types using deep learning: An informative medical method. Diagnostics 2023, 13, 1744. [Google Scholar] [CrossRef]

- Pang, S.; Ding, T.; Qiao, S.; Meng, F.; Wang, S.; Li, P.; Wang, X. A novel YOLOv3-arch model for identifying cholelithiasis and classifying gallstones on CT images. PLoS ONE 2019, 14, e0217647. [Google Scholar] [CrossRef]

- Hong, C.; Zafar, I.; Ayaz, M.M.; Kanwal, R.; Kanwal, F.; Dauelbait, M.; Bourhia, M.; Jardan, Y.A.B. Analysis of Machine Learning Algorithms for Real-Time Gallbladder Stone Identification from Ultrasound Images in Clinical Decision Support Systems. Int. J. Comput. Intell. Syst. 2025, 18, 73. [Google Scholar] [CrossRef]

- Esen, İ.; Arslan, H.; Esen, S.A.; Gülşen, M.; Kültekin, N.; Özdemir, O. Early prediction of gallstone disease with a machine learning-based method from bioimpedance and laboratory data. Medicine 2024, 103, e37258. [Google Scholar] [CrossRef]

- Rajwar, K.; Deep, K.; Das, S. An exhaustive review of the metaheuristic algorithms for search and optimization: Taxonomy, applications, and open challenges. Artif. Intell. Rev. 2023, 56, 13187–13257. [Google Scholar] [CrossRef]

- Sarker, P.; Ksibi, A.; Jamjoom, M.M.; Choi, K.; Nahid, A.A.; Samad, M.A. Breast cancer prediction with feature-selected XGB classifier, optimized by metaheuristic algorithms. J. Big Data 2025, 12, 78. [Google Scholar] [CrossRef]

- Stephan, P.; Stephan, T.; Kannan, R.; Abraham, A. A hybrid artificial bee colony with whale optimization algorithm for improved breast cancer diagnosis. Neural Comput. Appl. 2021, 33, 13667–13691. [Google Scholar] [CrossRef]

- Li, G.; Tan, Z.; Xu, W.; Xu, F.; Wang, L.; Chen, J.; Wu, K. A particle swarm optimization improved BP neural network intelligent model for electrocardiogram classification. BMC Med. Inform. Decis. Mak. 2021, 21 (Suppl. 2), 99. [Google Scholar] [CrossRef] [PubMed]

- Qtaish, A.; Albashish, D.; Braik, M.; Alshammari, M.T.; Alreshidi, A.; Alreshidi, E.J. Memory-based sand cat swarm optimization for feature selection in medical diagnosis. Electronics 2023, 12, 2042. [Google Scholar] [CrossRef]

- Anupama, C.S.S.; Yonbawi, S.; Moses, G.J.; Lydia, E.L.; Kadry, S.; Kim, J. Sand cat swarm optimization with deep transfer learning for skin cancer classification. Comput. Syst. Sci. Eng. 2023, 47, 2079–2095. [Google Scholar] [CrossRef]

- Al-Tashi, Q.; Rais, H.; Jadid, S. Feature selection method based on grey wolf optimization for coronary artery disease classification. In International Conference of Reliable Information and Communication Technology; Springer International Publishing: Cham, Switzerland, 2018; pp. 257–266. [Google Scholar]

- Le, T.M.; Pham, T.N.; Dao, S.V.T. A novel wrapper-based feature selection for heart failure prediction using an adaptive particle swarm grey wolf optimization. In Enhanced Telemedicine and e-Health: Advanced IoT Enabled Soft Computing Framework; Springer International Publishing: Cham, Switzerland, 2021; pp. 315–336. [Google Scholar]

- Vlontzou, M.E.; Athanasiou, M.; Dalakleidi, K.V.; Skampardoni, I.; Davatzikos, C.; Nikita, K. A comprehensive interpretable machine learning framework for mild cognitive impairment and Alzheimer’s disease diagnosis. Sci. Rep. 2025, 15, 8410. [Google Scholar] [CrossRef]

- Su, J.; Lu, H.; Zhang, R.; Cui, N.; Chen, C.; Si, Q.; Song, B. Cervical cancer prediction using machine learning models based on routine blood analysis. Sci. Rep. 2025, 15, 22655. [Google Scholar] [CrossRef]

- AlJalaud, E.; Hosny, M. Counterfactual explanation of AI models using an adaptive genetic algorithm with embedded feature weights. IEEE Access 2024, 12, 74993–75009. [Google Scholar] [CrossRef]

- Akoglu, H. User’s guide to correlation coefficients. Turk. J. Emerg. Med. 2018, 18, 91–93. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Fawagreh, K.; Gaber, M.M.; Elyan, E. Random forests: From early developments to recent advancements. Syst. Sci. Control Eng. Open Access J. 2014, 2, 602–609. [Google Scholar] [CrossRef]

- Sheykhmousa, M.; Mahdianpari, M.; Ghanbari, H.; Mohammadimanesh, F.; Ghamisi, P.; Homayouni, S. Support vector machine versus random forest for remote sensing image classification: A meta-analysis and systematic review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6308–6325. [Google Scholar] [CrossRef]

- Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2023, 39, 2627–2651.

- Anka, F.; Aghayev, N. Advances in sand cat swarm optimization: A comprehensive study. Arch. Comput. Methods Eng. 2025, 32, 2669–2712.

- Srinivasan, C.; Sheeba, J.C. Energy management of hybrid energy storage system in electric vehicle based on hybrid SCSO-RERNN approach. J. Energy Storage 2024, 78, 109733.

- Xiao, D.; Li, B.; Shan, J.; Yan, Z.; Huang, J. SOC estimation of vanadium redox flow batteries based on the ISCSO-ELM algorithm. ACS Omega 2023, 8, 45708–45714.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Shapley, L.S. A value for n-person games. In Contributions to the Theory of Games II; Princeton University Press: Princeton, NJ, USA, 1953.

- Mothilal, R.K.; Sharma, A.; Tan, C. Explaining machine learning classifiers through diverse counterfactual explanations. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 607–617.

- Rajab, I.M.; Majerczyk, D.; Olson, M.E.; Addams, J.M.; Choe, M.L.; Nelson, M.S.; Potempa, L.A. C-reactive protein in gallbladder diseases: Diagnostic and therapeutic insights. Biophys. Rep. 2020, 6, 49–67.

- Jiang, Z.; Jiang, H.; Zhu, X.; Zhao, D.; Su, F. The relationship between high-sensitivity C-reactive protein and gallstones: A cross-sectional analysis. Front. Med. 2024, 11, 1453129.

- Liu, T.; Siyin, S.T.; Yao, N.; Duan, N.; Xu, G.; Li, W.; Qu, J.; Liu, S. Relationship between high-sensitivity C reactive protein and the risk of gallstone disease: Results from the Kailuan cohort study. BMJ Open 2020, 10, e035880.

- Bin, C.; Zhang, C. The association between vitamin D consumption and gallstones in US adults: A cross-sectional study from the national health and nutrition examination survey. J. Formos. Med. Assoc. 2025, 124, 212–217.

- Olokoba, A.B.; Bojuwoye, B.J.; Olokoba, L.B.; Braimoh, K.T.; Inikori, A.K.; Abdulkareem, A.A. Relationship between gallstone disease and liver enzymes. Res. J. Med. Sci. 2009, 3, 1–3.

- Shi, A.; Xiao, S.; Wang, Y.; He, X.; Dong, L.; Wang, Q.; Lu, X.; Jiang, J.; Shi, H. Metabolic abnormalities, liver enzymes increased risk of gallstones: A cross-sectional study and multivariate mendelian randomization analysis. Intern. Emerg. Med. 2025, 20, 501–508.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).