Abstract

This paper proposes an optimal sliding mode fault-tolerant control scheme for multiple robotic manipulators subject to external disturbances and actuator faults. First, a quantitative prescribed performance control (QPPC) strategy is constructed, which relaxes the constraints on the initial conditions while strictly confining the trajectory within a preset range. Second, based on QPPC, adaptive gain integral terminal sliding mode control (AGITSMC) is designed to enhance the anti-interference capability of the robotic manipulators in complex environments. Third, a critic-only neural network optimal dynamic programming (CNNODP) strategy is proposed to learn the optimal value function and control policy. This strategy approximates the nonlinearities using only a critic network and drives the network updates with reinforcement learning residuals and historical samples, achieving optimal control at a lower computational cost. Finally, the boundedness and stability of the system are proven via the Lyapunov stability theorem. Compared with existing sliding mode control methods, the proposed method reduces the maximum position error by up to 25% and the peak control torque by up to 16.5%, effectively improving the dynamic response accuracy and energy efficiency of the system.

1. Introduction

With continuous advances in artificial intelligence, big data, cloud computing, and related technologies, robotic manipulators are evolving from single-function to multifunctional systems and from fixed scenes to flexible applications, and their use in intelligent manufacturing, medical care, logistics, and other fields will continue to expand [1,2,3]. Multiple robotic manipulator systems are therefore becoming increasingly intelligent and complex. In their development, fault-tolerant control is particularly important because of the complexity of, and interdependence among, the individual manipulators; it has also been one of the research focuses of cooperative control of multiple robotic manipulators in recent years [4,5,6].

Multiple robotic manipulators are critical for industrial and hazardous operations (e.g., nuclear waste handling [7,8]), but their control performance is limited by actuator/sensor failures, time-varying joint friction, and persistent disturbances [9]. High radiation in such environments directly causes sensor/actuator malfunctions, degrading system reliability. Therefore, enhancing the control performance of multiple robotic manipulators has become an urgent requirement. Sliding mode control (SMC) [10], known for its fast response and strong anti-interference capabilities, has emerged as a critical candidate for robotic manipulator system control [11,12]. However, existing SMC strategies face three fundamental contradictions in practical applications. First, there is a contradiction between robustness and precision. The traditional SMC is sensitive to parameter variations and external disturbances. For example, Wu et al.’s performance-constrained SMC method [13], when applied to complex terrain transportation tasks, experiences significant trajectory deviations due to inertial parameter fluctuations caused by load changes and wind disturbances. Although the boundary layer strategy [14,15] mitigates chattering, it compromises sliding mode stability, failing to meet the millimeter-level precision requirements for medical rehabilitation robotic manipulator end-effectors. Second, there is a conflict between computational complexity and real-time performance. Advanced SMC methods such as higher-order terminal SMC [16] rely on complex derivative calculations, significantly increasing the computational burden of the controller. In collaborative welding scenarios requiring a millisecond-level response, computational delays can lead to welding defects such as uneven seams or porosity [16,17]. Similarly, real-time performance degradation in multiple robotic manipulator collaborative grasping tasks causes asynchronous movements among manipulators. 
Third, adaptive capability is limited across different application scenarios. Existing adaptive SMC strategies perform well in specific scenarios but have deficiencies in collaborative robotic manipulator tasks: the terminal SMC in [18], which is effective for simple mobile robots, cannot adapt to dynamic parameter adjustments in industrial assembly; the nonsingular terminal strategy in [19] exhibits excessive complexity when handling strongly coupled disturbances; and the stiffness-scheduling method in [20] lacks a coordination mechanism for multiple robotic manipulators, hindering personalized treatment in rehabilitation scenarios. In summary, current SMC methods face significant limitations in robustness, real-time performance, and adaptive capability when applied to multiple robotic manipulators, as they fail to fully account for the complex characteristics and domain-specific requirements of these systems. Therefore, developing a novel SMC structure with high robustness, low computational complexity, and strong scenario adaptability is crucial for the stable application of multiple robotic manipulators in complex environments.

As the concept of sustainable development has become deeply rooted, the optimal control [21] of multiple robotic manipulators has become a key indicator for evaluating their performance. Reinforcement learning (RL), which obtains optimal strategies through continuous training, has attracted widespread attention in the field of multiple robotic manipulator control. To address the challenging problem of optimal control for multiple robotic manipulators under unknown nonlinear disturbances, references [22,23,24] pioneered the adoption of a neural-network-based actor–critic architecture. In this architecture, the critic computes the cost function to evaluate control performance, while the actor continuously optimizes its own strategy on the basis of feedback from the critic, thereby gradually approaching the optimal solution. When the dynamic characteristics of the system are unknown, an identifier becomes a crucial component for accurately estimating uncertainties. References [25,26] introduced neural networks and fuzzy logic systems, respectively, as identifiers, effectively enhancing the system’s adaptability to unknown environments. However, the model uncertainties inherent in multiple robotic manipulator systems, together with the nonlinear characteristics of reinforcement learning, impose heavy computational burdens on traditional optimal control methods in practical applications. To overcome this dilemma, refs. [27,28,29,30] proposed critic-only reinforcement learning frameworks. Reference [27] transformed the safety coordination problem into an optimal control problem by simplifying the structure of the critic neural network and introducing obstacle avoidance variables to increase system safety. Ma et al. [28] combined adaptive dynamic programming (ADP) algorithms with event-triggered mechanisms, using a critic-only neural network to efficiently solve event-triggered Hamilton–Jacobi–Bellman equations and achieve decentralized tracking control. Reference [29] further constructed a critic-only neural network on the basis of policy iteration and ADP algorithms, deriving an approximate fault-tolerant position–force optimal control strategy. Reference [30] targeted robotic manipulator systems with asymmetric input constraints and disturbances, achieving optimal control in complex environments by introducing a value function and approximately solving Hamilton–Jacobi–Isaacs equations online on the basis of ADP principles. Inspired by these studies, this paper focuses on multiple robotic manipulator systems in complex environments and designs an RL optimal control strategy based on a critic-only neural network. This strategy reduces computational complexity by optimizing the structure of the critic network and provides explicit solutions to the coupling between model uncertainties and nonlinear disturbances, offering a more efficient solution for practical deployment.

Prescribed performance control (PPC) [13,31] is an efficient control strategy that ensures that system states strictly adhere to predefined performance specifications. Its core principle lies in the careful design of performance functions, combined with error transformation mechanisms and controller design [32,33], enabling control systems to precisely meet established performance requirements. Owing to this significant advantage, PPC has been extensively researched and applied in the field of robotic manipulator control. In terms of PPC implementation, references [34,35,36] introduced performance functions to overcome the performance limitations associated with tracking errors and transformed the error constraint problem of multiple robotic manipulators into an unconstrained stability control problem through error transformation strategies, providing new insights for system performance optimization. To address the state and output constraints of robotic manipulators, references [37,38] proposed barrier Lyapunov functions within the PPC framework, effectively ensuring that system states remain within predefined constraint boundaries. Furthermore, Liu et al. [39] developed a dynamic threshold finite-time prescribed performance control (DTFTPPC) method, which dynamically adjusts performance thresholds when the system reaches predefined time points, continuously compressing errors into smaller ranges and significantly enhancing control precision. However, existing research still has limitations: the aforementioned multiple robotic manipulator systems require verification that the initial positions comply with the performance constraints before each operation, an additional step that increases operational complexity. Therefore, designing PPC schemes that do not rely on initial position checks, while maintaining control performance and simplifying operational procedures, has become a critical challenge in the field of multiple robotic manipulator control that warrants in-depth and systematic investigation.

Inspired by the above discussion, an ADP scheme that yields an approximate optimal solution is proposed for multiple robotic manipulators with actuator faults under external disturbances. The sliding mode variable is constructed on the basis of QPPC and is then incorporated into the value function to obtain an approximate optimal solution for the multiple robotic manipulators. The ADP scheme is constructed to improve the control performance of multiple robotic manipulators under external disturbances and actuator failures. The contributions of this paper are summarized as follows.

(1) To avoid the operational complexity caused by differing initial states, a quantitative prescribed performance control (QPPC) strategy is introduced that releases the initial state, realizing flexible performance constraints and global prescribed performance.

(2) Adaptive gain integral terminal sliding mode control (AGITSMC) is proposed on the basis of a gain parameter that varies with the magnitude of the error, which improves the sensitivity of the sliding mode variable in complex environments. The proposed AGITSMC not only improves the convergence velocity and tracking accuracy but also enhances stability under external disturbances and actuator faults.

(3) A critic-only neural network optimal dynamic programming (CNNODP) strategy is constructed by combining the gradient descent strategy, the parallel learning technique, and the experience replay technique. Unlike the traditional actor–critic or identifier–actor–critic NN structures, a critic-only NN is used to approximate the cost function, and the RL residuals and historical samples are employed to drive the update of the neural network, achieving optimal control with fewer computations.

The remaining parts of this paper are structured as follows: Section 2 presents the dynamic model of multiple robotic manipulators and the lemmas and assumptions employed in this study; Section 3 provides a detailed derivation of the proposed control algorithms, namely, QPPC, AGITSMC, CNNODP, and fault compensation control, along with a stability analysis of the closed-loop system; Section 4 introduces the simulation setup and presents simulation results that validate the effectiveness and robustness of the proposed algorithms; and finally, Section 5 summarizes the major findings of this paper and discusses potential future research directions.

2. Preliminaries

For ease of understanding, specific symbols are used to represent the state variables of the robotic manipulator; detailed explanations are provided in Table 1.

Table 1.

Abbreviated table.

2.1. System Model

The dynamic models of multiple robotic manipulator systems are represented in the Euler–Lagrange form. Here, we consider a multiple robotic manipulator system with degrees of freedom described by the following nonlinear differential equation:

Remark 1.

To deepen the understanding of multiple robotic manipulator system models, the kinematic and dynamic models of such systems are explained in detail below.

(1) Variables and models: The kinematic model links the joint space and the operational space of the manipulator. For an N-degree-of-freedom manipulator, the relationship between the joint position vector and the end-effector pose is nonlinear because of the complex link–joint geometry. Forward kinematics calculates the end-effector pose from given joint positions via coordinate transformations, whereas inverse kinematics finds the joint positions for a desired end-effector pose; this problem often has multiple solutions or none and requires specific algorithms. The dynamic model is key to understanding the forces acting on the manipulator. The vectors , , and represent the position, velocity, and acceleration, respectively. The inertia matrix relates acceleration to inertial forces and changes with joint position. The centripetal–Coriolis matrix accounts for velocity-related dynamic effects. The gravity vector shows that gravitational forces vary with pose. The input torque is the control signal, and the bounded disturbance vector represents the uncertainties. As in Newton’s second law, the left-hand side of the equation describes the internal dynamics, and the right-hand side represents the external forces.

(2) Assumptions and physical constraints: Links are assumed to be rigid to ease force calculations. Joints are ideal with no flexibility or clearance for direct motion transfer. Motion is assumed to be continuous without sudden impacts when smooth models are used. Dynamic parameters such as mass and inertia are considered known and constant, ignoring real-world variations. Nonlinear factors such as friction are often ignored initially, and the system is assumed to be linear or linearizable for applying linear control theory. The joint motion range is limited by mechanical design to prevent damage. Velocity is constrained by motor and structural performance, as high speed can cause vibration and stress. The input torque is limited by the motor capacity to avoid overloading. Acceleration is restricted by system inertia and motor capabilities. Manipulators must avoid collisions to prevent damage and follow physical laws such as energy conservation.

(3) Reasons for ignoring parameter uncertainty: Multiple robotic manipulator models are complex, and adding parameter uncertainty makes them even more complex, increasing the difficulty of analysis and controller design. In ideal scenarios such as well-controlled labs, accurate parameter knowledge is reasonable for focusing on basic system aspects. A phased research approach is common: first, a basic controller is designed without considering uncertainty for fundamental functions; then, robustness against uncertainty is enhanced later for an efficient research process.
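To make the terms in Remark 1 concrete, the following Python sketch evaluates the inertia matrix, the centripetal–Coriolis matrix, and the gravity vector of a planar two-link arm and solves M(q)q̈ + C(q, q̇)q̇ + G(q) = τ + d for the joint acceleration. It assumes point masses at the link tips, joint angles measured from the horizontal, and hypothetical parameter values (M1, M2, L1, L2); it is a textbook model for illustration, not necessarily the manipulator used in Section 4.

```python
import math

# Hypothetical parameters (not from the paper): point masses at the link tips.
M1, M2 = 1.0, 1.0      # link masses [kg]
L1, L2 = 1.0, 1.0      # link lengths [m]
GRAV = 9.81            # gravitational acceleration [m/s^2]

def inertia(q):
    """Inertia matrix M(q): symmetric and positive definite."""
    c2 = math.cos(q[1])
    m11 = (M1 + M2) * L1**2 + M2 * L2**2 + 2 * M2 * L1 * L2 * c2
    m12 = M2 * L2**2 + M2 * L1 * L2 * c2
    m22 = M2 * L2**2
    return [[m11, m12], [m12, m22]]

def coriolis(q, dq):
    """Centripetal-Coriolis matrix C(q, dq)."""
    h = M2 * L1 * L2 * math.sin(q[1])
    return [[-h * dq[1], -h * (dq[0] + dq[1])],
            [h * dq[0], 0.0]]

def gravity(q):
    """Gravity vector G(q), joint angles measured from the horizontal."""
    g2 = M2 * GRAV * L2 * math.cos(q[0] + q[1])
    g1 = (M1 + M2) * GRAV * L1 * math.cos(q[0]) + g2
    return [g1, g2]

def forward_dynamics(q, dq, tau, d):
    """Solve M(q) ddq = tau + d - C(q, dq) dq - G(q) for ddq (2x2 inverse)."""
    m, c, g = inertia(q), coriolis(q, dq), gravity(q)
    rhs = [tau[i] + d[i] - (c[i][0] * dq[0] + c[i][1] * dq[1]) - g[i]
           for i in range(2)]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [(m[1][1] * rhs[0] - m[0][1] * rhs[1]) / det,
            (-m[1][0] * rhs[0] + m[0][0] * rhs[1]) / det]
```

A forward-Euler step (q ← q + Δt q̇, q̇ ← q̇ + Δt q̈) turns this into a simple simulator. As sanity checks, holding τ = G(q) with q̇ = 0 gives zero acceleration, and Ṁ - 2C is skew-symmetric for this model, the property commonly exploited in Lyapunov analyses of manipulators.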

Combined with the dynamic Equation (1) of multiple robotic manipulators, one obtains

For simplicity, we let and . The expression for can be rewritten as . Let denote the diagonal matrix of matrix ; we obtain , where . Thus, can again be rewritten as follows:

where .

The following actuator fault model is constructed:

where and denote the input and output of the fault model, respectively; and denote the bias fault and additive fault of the actuator, respectively; and , where and denote the state vectors of the robotic manipulator, with a total of dimensions. denotes the degree of freedom of the robotic manipulator with dimensions.
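The specific form of the fault model above is not reproduced here. As a hedged illustration only, the sketch below assumes a commonly used form in which each actuator delivers a fraction (1 - rho_i) of the commanded input plus a bounded additive term f_i, consistent with the boundedness later required by Assumption 1. The function names, the onset time, and the sinusoidal fault profile are hypothetical.

```python
import math

def faulty_actuator(u, rho, f):
    """Hypothetical fault model: output_i = (1 - rho_i) * u_i + f_i.

    rho_i in [0, 1) is an assumed loss-of-effectiveness level and f_i an
    assumed bounded additive fault; this is an illustrative form, not the
    paper's exact fault model.
    """
    if any(not 0.0 <= r < 1.0 for r in rho):
        raise ValueError("each rho_i must lie in [0, 1)")
    return [(1.0 - r) * ui + fi for ui, r, fi in zip(u, rho, f)]

def additive_fault(t, onset=5.0, amplitude=0.5):
    """Hypothetical bounded fault, |f(t)| <= amplitude, appearing at t = onset."""
    return amplitude * math.sin(t) if t >= onset else 0.0
```

For example, a 50% loss of effectiveness on the first joint together with an additive term of 0.1 turns a commanded torque of 2.0 into 1.1, while the healthy second joint passes its command through unchanged.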

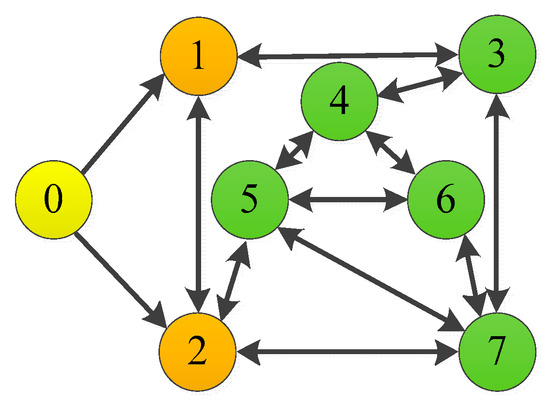

2.2. Graph Description

Define the followers set by . The digraph is used to represent the relation under which information about the state is exchanged among robotic manipulators, where represents the communication of followers. indicates that the robotic manipulator can obtain information from the robotic manipulator; otherwise, . The set of followers is defined as . and = diag{} are defined. The Laplacian matrix is defined as . The adjacency matrix of leaders is defined as = diag{}, which represents the communication among the leader and the followers. indicates that the robotic manipulator can obtain information from the leader; otherwise, . For later analysis, the information exchange matrix is defined as .
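The matrices of this subsection can be assembled directly from the adjacency weights. The sketch below (hypothetical function names, nested Python lists as matrices) builds the Laplacian L = D - A, with D the in-degree matrix, and, assuming the usual convention, the information exchange matrix H = L + B, with B the leader adjacency matrix; row i collects what follower i receives.

```python
def laplacian(adj):
    """L = D - A, where D = diag(row sums of A) and a_ij = 1 if i hears j."""
    n = len(adj)
    return [[(sum(adj[i]) if i == j else 0.0) - adj[i][j] for j in range(n)]
            for i in range(n)]

def info_matrix(adj, pinning):
    """H = L + B with B = diag(b_i); b_i = 1 if follower i hears the leader."""
    lap = laplacian(adj)
    n = len(adj)
    return [[lap[i][j] + (pinning[i] if i == j else 0.0) for j in range(n)]
            for i in range(n)]

# Example: a chain leader -> 1 -> 2 -> 3 -> 4 (only follower 1 is pinned).
chain = [[0, 0, 0, 0], [1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
H = info_matrix(chain, [1, 0, 0, 0])
```

Every row of L sums to zero. For a directed graph, a standard result is that all eigenvalues of H have positive real parts when the leader is the root of a directed spanning tree of the augmented graph; in the chain example H is lower triangular with unit diagonal, so every eigenvalue equals 1.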

2.3. Radial Basis Function Neural Network (RBFNN) Research

In current research on control systems, uncertain nonlinear terms in nonlinear systems can be approximated via the RBFNN.

In general, the RBFNN is utilized to approximate any unknown function . The RBFNN is denoted as follows:

where and indicate the unknown input vector and the weight vector, respectively. is the known basis function vector. is a continuous function defined on a compact set . Hence, for , there exists a neural network such that . Therefore, . The optimal constant weight vector is defined as .
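As a minimal sketch of the approximation W^T S(x), the Python code below assumes a scalar input, Gaussian basis functions with hypothetical centres and width, and a batch least-squares fit with a small ridge term. The batch fit is a swapped-in offline procedure chosen only to show that such a weight vector exists; in the controller the weights are instead updated online.

```python
import math

def gaussian_basis(x, centers, width):
    """S(x): Gaussian RBFs exp(-(x - c)^2 / width^2) for a scalar input x."""
    return [math.exp(-((x - c) ** 2) / width ** 2) for c in centers]

def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x

def fit_weights(f, xs, centers, width, ridge=1e-6):
    """Weights minimising sum (W^T S(x) - f(x))^2 + ridge * |W|^2."""
    rows = [gaussian_basis(x, centers, width) for x in xs]
    n = len(centers)
    gram = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    rhs = [sum(r[i] * f(x) for r, x in zip(rows, xs)) for i in range(n)]
    return solve(gram, rhs)

def rbf_eval(x, w, centers, width):
    """Network output W^T S(x)."""
    return sum(wi * si for wi, si in zip(w, gaussian_basis(x, centers, width)))
```

With nine centres spaced 0.75 apart on [-3, 3] and unit width, fitting sin(x) on a grid of 61 points yields a small approximation error on that compact set, which is the finite-approximation-error property assumed above.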

2.4. Lemmas and Assumptions

Lemma 1

([40]). For any constants and , the relationships below can be obtained:

Lemma 2

([41]). For any continuous functions and on that satisfy and , the relationships below can be obtained:

Assumption 1.

The bias fault and additive fault of the actuator are bounded; i.e., there exist constants and such that and .

Assumption 2

([27]). The activation function and its gradient are norm-bounded, i.e., and , where and are unknown positive parameters.

3. Main Results

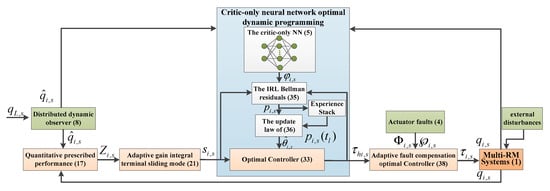

The main objective of this work is to satisfy the control performance requirements of multiple robotic manipulators with actuator faults and external disturbances. The overall control scheme is shown in Figure 1. First, a quantitative prescribed performance control (QPPC) strategy is designed using the signals generated by the observer. Then, QPPC is used to construct the adaptive gain integral terminal sliding mode control (AGITSMC). Third, the sliding mode variables are introduced into the value function to construct a critic-only neural network optimal dynamic programming (CNNODP) control strategy that approximates the optimal solution. Finally, adaptive fault compensation control is proposed to reconstruct the controllers affected by faults.

Figure 1.

Overall control scheme of the proposed method.

3.1. Quantitative Prescribed Performance Control (QPPC)

In general, only the robotic manipulators connected to the leader have access to its information, whereas the other robotic manipulators cannot obtain the leader’s information directly. Consequently, once a robotic manipulator connected to the leader becomes faulty, the other robotic manipulators are inevitably affected. To ensure that all the robotic manipulators can access the leader’s information directly without being affected by other robotic manipulators, the following dynamic distributed observer is designed:

where , , and denote the leader’s trajectory, velocity and acceleration, respectively; , , and denote the follower’s trajectory, velocity and acceleration, respectively; and () denote positive parameters. The observation errors of the observers are defined as , , and .

Lemma 3

([27]). For , and with any initial state satisfying and , the observer’s state converges exponentially to the leader’s trajectory, i.e., , and as .
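Lemma 3 states that the observer converges exponentially to the leader. The sketch below illustrates the same mechanism with a simpler, standard first-order distributed observer, assuming that the leader state (position, velocity) is generated by the known linear exosystem v0' = S v0 with S = [[0, 1], [-1, 0]]. It is not the observer designed above (which also uses the leader’s acceleration), and the function name, gain mu, step size, and graph are hypothetical.

```python
def simulate_observer(adj, pinning, mu=5.0, dt=1e-3, t_end=10.0):
    """Forward-Euler simulation of a first-order distributed observer.

    Leader:   v0' = S v0,  S = [[0, 1], [-1, 0]]  (unit-frequency sinusoid).
    Follower: vi' = S vi - mu * (sum_j a_ij (vi - vj) + b_i (vi - v0)).
    Returns the largest observation error |vi - v0| over all followers and
    both state components at t_end.
    """
    def s_times(x):
        return [x[1], -x[0]]                  # S x for S = [[0, 1], [-1, 0]]

    n = len(adj)
    v0 = [1.0, 0.0]                           # leader position and velocity
    v = [[0.0, 0.0] for _ in range(n)]        # observers start at zero
    for _ in range(int(round(t_end / dt))):
        new_v = []
        for i in range(n):
            drift = s_times(v[i])
            corr = [pinning[i] * (v[i][k] - v0[k]) for k in range(2)]
            for j in range(n):
                for k in range(2):
                    corr[k] += adj[i][j] * (v[i][k] - v[j][k])
            new_v.append([v[i][k] + dt * (drift[k] - mu * corr[k])
                          for k in range(2)])
        lead = s_times(v0)
        v0 = [v0[k] + dt * lead[k] for k in range(2)]
        v = new_v
    return max(abs(v[i][k] - v0[k]) for i in range(n) for k in range(2))
```

On a chain graph in which only the first follower hears the leader, the error becomes negligible within 10 s even for followers that never receive the leader’s signal directly; without any pinned follower, the observers synchronize with one another but not with the leader.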

The angle error is given by the following:

The velocity error is given by the following:

Here, by using the inverse tangent function, the error is quantified as follows:

On the basis of the inverse tangent function, .

To guarantee the prescribed performance of the position consensus errors of the multiple robotic manipulators, the prescribed performance function is designed as follows:

where and denote the initial state and the ultimate convergence domain, respectively, and where is a predefined convergence time.

Taking the time derivative of , one obtains the following:

To ensure PPC, by combining the prescribed performance Function (14) and quantified Function (13), the error transformation function is defined as follows:

Remark 2.

The error transformation function ensures that for . is a prescribed performance function of and . From the above, it follows that is a monotonically decreasing function. always holds under the constraints of , and enters and is always in the predefined domain . On the basis of the properties of the inverse tangent function, always satisfies . Thus, is unconstrained when , and is constrained as decreases and satisfies .

From the above, the constraint states of can be classified into two types.

- When the prescribed performance function , one can obtain the following:

- When the prescribed performance function , one can obtain the following:

Therefore, the PPC strategy designed in this paper releases the initial conditions of the multiple robotic manipulators: the initial state no longer needs to be known or checked in advance.
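The mechanism by which the arctangent quantization releases the initial condition (Remark 2) can be checked numerically. The sketch below assumes a polynomial finite-time performance function that decreases from rho0 to rho_inf at a convergence time t_conv, and the common log-type transformation 0.5 ln((rho + xi)/(rho - xi)); both forms and all numbers are hypothetical stand-ins for the performance function (14) and the error transformation function above. Because |arctan(e)| < pi/2 for every real e, choosing rho0 > pi/2 makes any initial error admissible, and the constraint only becomes active once rho(t) falls below pi/2.

```python
import math

def ppf(t, rho0=2.0, rho_inf=0.05, t_conv=3.0, power=2):
    """Hypothetical finite-time performance function: rho0 -> rho_inf at t_conv."""
    if t >= t_conv:
        return rho_inf
    return (rho0 - rho_inf) * (1.0 - t / t_conv) ** power + rho_inf

def quantize(e):
    """Arctangent quantization: maps any real error into (-pi/2, pi/2)."""
    return math.atan(e)

def transform(e, t, **ppf_kwargs):
    """Log-type transformation 0.5*ln((rho + xi)/(rho - xi)); finite iff |xi| < rho."""
    rho = ppf(t, **ppf_kwargs)
    xi = quantize(e)
    if abs(xi) >= rho:
        raise ValueError("quantized error violates the performance bound")
    return 0.5 * math.log((rho + xi) / (rho - xi))
```

With rho0 = 2.0 > pi/2, even an initial error of 1e6 gives a finite transformed error at t = 0, whereas a quantized error of about 0.1 violates the final bound rho_inf = 0.05 once t exceeds t_conv.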

Taking the time derivative of , one obtains the following:

where and .

Remark 3.

Combined with the quantization function, a new error transformation function is developed in this paper. Unlike traditional error transformation strategies [34,35,36,37,38,39], this strategy releases the initial condition and no longer requires the initial error to lie within a certain domain, realizing global PPC. In addition, with the quantization function, even a large error is mapped into a smaller bounded domain, which can greatly reduce the control energy and overshoot.

3.2. Adaptive Gain Integral Terminal Sliding Mode Control (AGITSMC)

To ensure that the system trajectory converges quickly and with good robustness, an AGITSMC control strategy is designed as follows:

where and , and where , , , , and are design parameters.

Thus, the derivative of is as follows:

where and .

Based on the SMC principle [42], when the system enters the sliding stage, i.e., , the following can be obtained:

Remark 4.

The SMC process consists of two stages [42]: the reaching stage and the sliding stage. However, under adverse conditions such as obstacle avoidance, external disturbances, actuator faults, and input saturation, the trajectory tends to depart from the sliding surface, which degrades the robustness of the system, especially in the reaching phase. Such robustness degradation severely affects the control performance. In this paper, AGITSMC is developed to suppress the chattering of the system. On this basis, the adaptive gain parameters and are introduced, which increase when the error increases and decrease when the error decreases. The adaptive gains therefore avoid excessive or insufficient gain, which not only improves the convergence velocity and control accuracy but also reduces chattering. Thus, the proposed AGITSMC strategy enables the system to maintain good control performance in complex environments.
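The exact sliding variable and adaptive gains are those defined above. As a hedged sketch of the qualitative behaviour described in Remark 4, the code below assumes an integral terminal sliding variable of the form s = de + k(e) e + k_i ∫ sig(e)^alpha dt, where sig(e)^alpha = |e|^alpha sign(e) and the gain k(e) grows with |e| and saturates. The tanh gain schedule, the class and function names, and all parameter values are hypothetical.

```python
import math

def sig(x, alpha):
    """sig(x)^alpha = |x|^alpha * sign(x), a standard terminal sliding mode term."""
    return math.copysign(abs(x) ** alpha, x) if x != 0 else 0.0

def adaptive_gain(e, k_min=1.0, k_max=5.0, delta=0.5):
    """Hypothetical gain that increases with |e| and saturates at k_max."""
    return k_min + (k_max - k_min) * math.tanh(abs(e) / delta)

class IntegralTerminalSurface:
    """s = de + k(e) * e + k_i * integral(sig(e)^alpha dt) (illustrative form)."""

    def __init__(self, k_i=2.0, alpha=0.6):
        self.k_i, self.alpha, self.integral = k_i, alpha, 0.0

    def update(self, e, de, dt):
        self.integral += sig(e, self.alpha) * dt   # forward-Euler integral
        return de + adaptive_gain(e) * e + self.k_i * self.integral
```

Large errors receive a gain near k_max for fast reaching, while small errors receive a gain near k_min, which limits the control effort near the sliding surface; the fractional power alpha in (0, 1) gives the terminal (finite-time) character.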

3.3. Critic-Only Neural Network Optimal Dynamic Programming (CNNODP) Control

Next, to design an optimal sliding mode controller, we construct a critic-only neural network (NN) strategy with the RBFNN, where the critic-only NN is updated in real time via RL Bellman residuals and experience replay. Combining the sliding variables in (20) with the total cost of the control input energy, a performance index with an attenuation coefficient is designed as follows:

where denotes the cost function and denotes the controller. The attenuation coefficient and the term ensure that the cost function remains bounded even if the tracking errors do not eventually converge to 0.
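The role of the attenuation coefficient can be seen numerically. Assuming a scalar sliding variable s, a scalar input u, and the quadratic running cost q s^2 + r u^2 with hypothetical weights, the sketch below approximates the discounted cost with the trapezoidal rule over sampled trajectories. Even if s and u never decay, the value stays finite: for a constant integrand c it approaches c/gamma.

```python
import math

def discounted_cost(times, s_vals, u_vals, gamma, q=1.0, r=1.0):
    """Trapezoidal estimate of V(0) = int_0^T exp(-gamma*t) (q*s^2 + r*u^2) dt.

    A scalar sliding variable s and input u are assumed; q, r, and gamma are
    hypothetical weights, and times must be increasing.
    """
    integrand = [math.exp(-gamma * t) * (q * s * s + r * u * u)
                 for t, s, u in zip(times, s_vals, u_vals)]
    return sum(0.5 * (integrand[k] + integrand[k + 1]) * (times[k + 1] - times[k])
               for k in range(len(times) - 1))
```

For s = u = 1 held constant over 40 s with gamma = 0.5, the estimate is close to 2/0.5 = 4, whereas without discounting the same integral would grow linearly with the horizon.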

Remark 5.

The purpose of adaptive optimal control is to minimize a value function that encodes the desired performance characteristics. In nominal systems [22,23,24,25,26], the cost function is a quadratic function of the tracking error and control input. In this work, sliding mode variables are added to the value function of each robotic manipulator to achieve optimal sliding mode control that addresses the effects of environmental disturbances and actuator faults on control performance.

To address the optimal trajectory problem, a new adaptive optimal sliding mode controller is designed. The design process is as follows:

First, applying the Leibniz integral rule to the cost function yields the following Bellman equation (23):

where is the gradient of in the direction of .
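The step from the discounted cost to the Bellman equation can be written out explicitly. Assuming the cost has the standard discounted form below, with running cost r(s, u) = s^T Q s + u^T R u and attenuation coefficient gamma > 0 (the notation is assumed here, not copied from the paper’s equations), the Leibniz integral rule followed by the chain rule dV/dt = (∇V)^T ṡ gives:

```latex
\begin{aligned}
V\big(s(t)\big) &= \int_{t}^{\infty} e^{-\gamma(\tau - t)}\, r\big(s(\tau), u(\tau)\big)\, d\tau, \\
\frac{dV}{dt} &= -\,r\big(s(t), u(t)\big)
  + \gamma \int_{t}^{\infty} e^{-\gamma(\tau - t)}\, r\big(s(\tau), u(\tau)\big)\, d\tau
  = -\,r\big(s(t), u(t)\big) + \gamma V\big(s(t)\big), \\
0 &= r(s, u) - \gamma V(s) + (\nabla V)^{\top} \dot{s}.
\end{aligned}
```

The last line is the discounted Bellman equation; its right-hand side is the expression from which the Hamiltonian is built.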

Then, the Hamiltonian function can be defined as follows:

is defined as the set of admissible controls on , where denotes a compact set. The optimal cost function is as follows:

Thus, the Hamilton–Jacobi–Bellman (HJB) equation is given as follows:

where is the gradient of in the direction of .

is regarded as the optimal . By solving for , the optimal controller can be calculated as follows:

Obviously, the direct computation of the optimal controller is difficult because of the complex nonlinearities in . To achieve the optimal performance control objective, the term is constructed as , where a is a positive number.

Therefore, one can obtain the following:

Therefore, can be rewritten as follows:

Considering the unknown functions, the critic-only NN is used to approximate and . The cost function is approximated as and , where is the ideal NN parameter vector, is the activation function, and denotes the approximation error with . Since is unknown, the critic-only NN is calculated as follows:

where and denote the cost function and the critic-only NN weight estimate, respectively. and are the estimate errors.
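The structure of the optimal controller, and hence of its critic-based approximation, follows from the stationarity condition of the Hamiltonian. Assuming, for illustration, control-affine sliding dynamics ṡ = F(s) + G(s)u and the quadratic running cost with R positive definite (the symbols F, G, Q, and R are assumed here, not taken from the paper’s equations):

```latex
\begin{aligned}
H(s, u, \nabla V^{*}) &= s^{\top} Q s + u^{\top} R u - \gamma V^{*}(s)
  + (\nabla V^{*})^{\top}\big(F(s) + G(s)u\big), \\
\frac{\partial H}{\partial u} &= 2 R u + G(s)^{\top} \nabla V^{*} = 0
\;\;\Longrightarrow\;\;
u^{*} = -\tfrac{1}{2} R^{-1} G(s)^{\top} \nabla V^{*}.
\end{aligned}
```

Since the second derivative of H with respect to u is 2R > 0, this stationary point is the minimizer. The controller depends on ∇V*, which solves the nonlinear HJB equation and is generally unavailable in closed form; this motivates replacing it with the gradient of the critic-only NN.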

Finally, combining (30) and (31), the approximate optimal controller can be rewritten as follows:

To update the critic-only NN in real time with the RL Bellman residuals and experience replay, we define and . Using the RL algorithm, Equation (23) can be rewritten as follows:

Therefore, the RL Bellman residuals caused by the critic-only NN can be defined as follows:

where and .

Remark 6.

In this paper, the critic-only NN is constructed to obtain an approximate optimal controller instead of the actor–critic structure or the identifier–actor–critic structure. The proposed strategy offers several advantages over existing strategies.

First, in controller (32), the consensus control relies on the value function, and we use the critic-only NN to obtain the approximate optimal controller. Compared with the actor–critic structure [22,23,24], the critic-only NN structure adopted in this paper greatly reduces the control system complexity, which is favorable for practical application in engineering.

Next, compared with traditional adaptive optimal control [25,26], the RL Bellman residuals are adopted here to drive the update law of the critic-only NN. In traditional methods, the uncertainty of the system model often leaves unknown functions in the HJB equations, which requires an identifier network to estimate the unknown function in (3) and greatly increases the complexity of the system. To eliminate the identifier network, we use the experience replay technique to obtain the RL residuals (34) and then drive the update law with these residuals. As a result, there is no need to consider the effect of the unknown functions, which removes the need for an identifier network and greatly reduces the complexity of the system.

Third, unlike [27,28,29,30], the designed update law contains two terms. The first is driven by the RL residuals via the gradient descent method. The second uses historical samples to adjust the weight vector, which accelerates the convergence of the adaptive law [43].

Finally, compared with existing reinforcement learning algorithms [22,23,24,25,26,27,28,29,30], this paper introduces a feedback term to improve the convergence velocity of robotic manipulators.

To minimize the RL residual, the gradient descent method, parallel learning technique, and experience replay technique are utilized to obtain the update law of as follows:

where is the learning rate, whose value determines the training velocity of the critic-only NN, and where is the index that is used to mark the historical state of the storage.

According to , one can obtain the following:

where and .
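The update mechanism of the critic-only NN can be illustrated on a scalar toy problem. The sketch below is a swapped-in example, not update law (35): it assumes ds/dt = a s + b u, running cost q s^2 + R u^2, discount gamma, a single basis function phi(s) = s^2 (so that V(s) ≈ w s^2 and u = -(b w / R) s), and normalized gradient descent on the squared Bellman residual using the current sample plus replayed historical samples. All names and parameter values are hypothetical.

```python
import math

# Hypothetical scalar problem (not the paper's model): ds/dt = A*s + B*u,
# running cost Q*s^2 + R*u^2, discount GAMMA, critic V(s) ~ w * s^2.
A, B, Q, R, GAMMA = 1.0, 1.0, 1.0, 1.0, 0.1

def policy(w, s):
    """u = -(1/2) R^{-1} B dV/ds with dV/ds = 2 * w * s."""
    return -(B * w / R) * s

def residual(w, s):
    """Bellman residual e and regressor sigma at state s.

    e = r(s, u) + w * (dphi/ds * ds/dt - GAMMA * phi), with phi(s) = s^2.
    """
    u = policy(w, s)
    ds = A * s + B * u
    sigma = 2.0 * s * ds - GAMMA * s * s
    e = Q * s * s + R * u * u + w * sigma
    return e, sigma

def train_critic(w0, current, history, lr=1.0, iters=500):
    """Normalized gradient descent on e^2 / 2 over current + replayed samples."""
    w = w0
    for _ in range(iters):
        step = 0.0
        for s in [current] + history:   # current sample plus experience replay
            e, sigma = residual(w, s)
            step += sigma * e / (1.0 + sigma * sigma) ** 2
        w -= lr * step
    return w

# Positive root of (B^2/R) w^2 - (2A - GAMMA) w - Q = 0 (exact LQ solution).
W_STAR = ((2 * A - GAMMA) + math.sqrt((2 * A - GAMMA) ** 2 + 4 * B * B * Q / R)) * R / (2 * B * B)
```

Starting from w0 = 3.0 with current sample s = 1.0 and replayed samples 0.5 and 1.5, the weight converges to W_STAR ≈ 2.33, and the learned feedback gain stabilizes the open-loop-unstable toy system. In this linear-quadratic case the regressor sigma equals the derivative of the residual with respect to w, so each step is a genuine normalized gradient step.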

Remark 7.

To ensure that converges to , this paper combines the gradient descent method and the parallel learning technique to relax the persistence of excitation (PE) condition. The update law (35) therefore consists of two terms: the first is driven by the current data and the second by the historical data, both via the gradient descent algorithm. To minimize the RL residuals, the current data and historical data are used together to drive the update of (35) via the parallel learning technique. Define and as the historical states. To increase the convergence velocity of the critic-only neural network and relax the stringent PE condition required in several existing studies, the number of stored historical states is defined as , as in [43].
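The richness condition that the stored samples must satisfy in place of PE can be checked directly. As a minimal sketch, assuming a critic with two weights so that each stored regressor sigma_k is a 2-vector (the concrete condition in [43] may be stated differently), the hypothetical function below tests whether the sum of sigma_k sigma_k^T is nonsingular.

```python
def rank_condition_holds(regressors, tol=1e-9):
    """True if sum_k sigma_k sigma_k^T (2 x 2) is nonsingular.

    A nonsingular sum is the usual rank condition that replaces persistence
    of excitation in concurrent-learning-type update laws.
    """
    g00 = sum(s[0] * s[0] for s in regressors)
    g01 = sum(s[0] * s[1] for s in regressors)
    g11 = sum(s[1] * s[1] for s in regressors)
    return g00 * g11 - g01 * g01 > tol
```

Collinear samples fail the test no matter how many are stored, which is why the historical states should be recorded at sufficiently different points of the trajectory.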

3.4. Adaptive Fault Compensation Control

Adaptive fault compensation control is designed here to address the problem of multiple robotic manipulator actuator faults. To achieve the control objective, an adaptive fault compensation optimal controller is designed as follows.

Combining (32), the compensation controller is calculated as follows:

Combining (37)–(39), the adaptive fault compensation optimal controller is calculated as follows:

The adaptive parameters and are approximations of and , respectively, which can be calculated as follows:

where , , , and are positive constants; if and , it is simple to obtain and ; and .
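Adaptive laws of this kind typically contain a leakage term so that the estimates of the unknown fault bounds remain bounded. As a hedged sketch, assuming a scalar law of the sigma-modification form theta' = rate (|s| - leak theta) with hypothetical gains (not the paper’s exact adaptive laws), forward-Euler integration shows that for a constant |s| the estimate settles at |s| / leak instead of drifting.

```python
def adapt_bound(s_abs, rate=2.0, leak=0.5, theta0=0.0, dt=0.01, steps=2000):
    """Forward-Euler integration of theta' = rate * (|s| - leak * theta).

    s_abs is a constant |s|; the leakage (sigma-modification) term keeps
    theta bounded, and the equilibrium is theta = s_abs / leak.
    """
    theta = theta0
    for _ in range(steps):
        theta += dt * rate * (s_abs - leak * theta)
    return theta
```

Without the leakage term, the same law would integrate |s| indefinitely whenever the sliding variable does not vanish exactly, so the estimate could grow without bound under persistent disturbances.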

3.5. Stability Analysis

Step 1. On the basis of , . Taking the time derivative of , one obtains the following:

Step 2. On the basis of , . Taking the time derivative of , one obtains the following:

On the basis of Young’s inequality, one obtains the following:

Substituting (45) into (44), one can obtain the following:

Step 3. On the basis of , . Taking the time derivative of , one obtains the following:

On the basis of Young’s inequality, one obtains the following:

Substituting (48) into (47), one can obtain the following:

Combining (43), (46) and (49), one can obtain the following:

On the basis of Lemma 2, one can obtain the following:

Substituting (51) into (50), one can obtain the following:

From the above, we find that is an approximate optimal controller derived without considering actuator faults. Therefore, , where does not consider actuator faults.

Assumption 3

([31]). Let be a candidate term of a continuously differentiable Lyapunov function that satisfies the following:

Therefore, there exists a positive definite matrix satisfying the following:

Combining (52), (54) and Assumption 3, one can obtain the following:

Step 4. On the basis of , . Deriving from , one can obtain the following:

On the basis of Young’s inequality, one can obtain the following:

Combining (56)–(58), one can obtain the following:

Combining (56)–(60), one can obtain the following:

Step 5. On the basis of , . Deriving from , one can obtain the following:

Step 6. On the basis of . Deriving from , one can obtain the following:

where , and .

Finally, one can obtain the following:

On the basis of the standard Lyapunov extension lemma of [44], the trajectories of the multiple robotic manipulators are verified to be uniformly ultimately bounded (UUB).

Proof.

By Assumption 2 and the term, the approximated cost function remains finite in a real system even though the tracking error does not converge exactly to 0. By Section 2.4, the critic-only NN approximation error and its gradient are both bounded, so and follow directly. By Assumption 1, the effects of the actuator faults are bounded. For equation , Lemma 2 shows that is bounded; moreover, follows from , and follows from . Hence, is bounded. Since Assumptions 1 and 2 can be satisfied in practice, is bounded. Therefore, the multiple robotic manipulator system is UUB under the proposed control scheme. The proof ends. □
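The UUB conclusion rests on the standard comparison argument. Assuming the final inequality has the generic form below, where c > 0 and D > 0 are placeholder constants rather than symbols defined in this paper, integrating after multiplication by e^{ct} gives:

```latex
\dot V \le -cV + D
\;\Longrightarrow\;
\frac{d}{dt}\bigl(e^{ct}V\bigr) \le D\,e^{ct}
\;\Longrightarrow\;
V(t) \le V(0)\,e^{-ct} + \frac{D}{c}\bigl(1 - e^{-ct}\bigr)
\;\Longrightarrow\;
\limsup_{t\to\infty} V(t) \le \frac{D}{c}.
```

If, in addition, V ≥ (λ/2)‖z‖² for the closed-loop signal z and some λ > 0, then z ultimately enters the ball ‖z‖ ≤ √(2D/(λc)); a larger decay rate c or a smaller residual D shrinks this set.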

Remark 8.

Because the critic-only NN approximation always carries an estimation error, only UUB stability can be guaranteed for the cooperative control of multiple robotic manipulators. Nevertheless, the desired control performance can be obtained by adjusting the design parameters of the controller and choosing an appropriate neural network structure.

4. Simulations

4.1. Simulation Conditions

In this section, a multiple robotic manipulator system consisting of one leader and seven followers, each a two-degree-of-freedom (2-DOF) manipulator, is simulated. The dynamics model is given by the following:

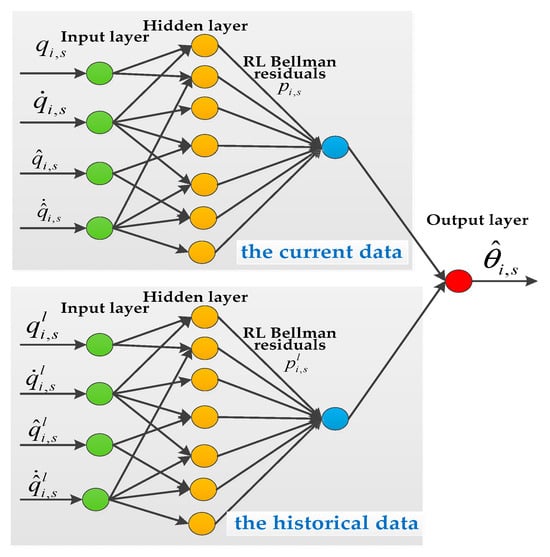

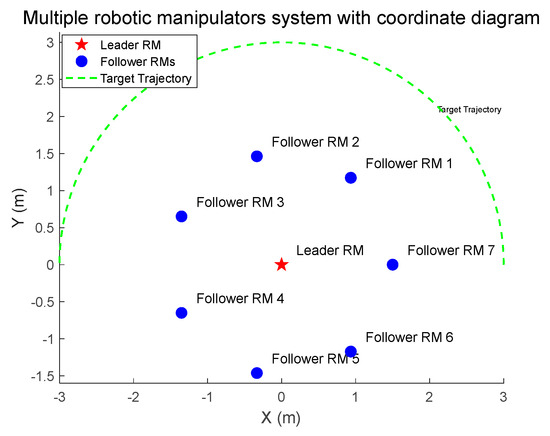

where ; ; ; and denote the masses of the two links; and denote their moments of inertia; and denote their lengths; and and denote the positions of their centers of mass. The critic-only NN structure is shown in Figure 2, the interaction topology of the leader and followers in Figure 3, and the multiple robotic manipulator system with its coordinate diagram in Figure 4. The model parameters of the manipulators are listed in Table 2, and the system parameters and initial conditions in Table 3.
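Because the dynamics above survive only as images, the sketch below writes out the textbook planar two-link model M(q)q̈ + C(q, q̇)q̇ + G(q) = τ in terms of the parameters just listed. It is an assumption that the paper's model takes this standard form; the gravity convention (joint angles measured from the horizontal) and the names `TwoLinkParams`, `inertia`, `coriolis`, `gravity`, and `torque` are hypothetical, and numerical values should come from Table 2.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence

Matrix = List[List[float]]


@dataclass
class TwoLinkParams:
    m1: float    # link masses
    m2: float
    i1: float    # link moments of inertia about their centers of mass
    i2: float
    l1: float    # link lengths (only l1 enters these dynamics)
    l2: float
    lc1: float   # joint-to-center-of-mass distances
    lc2: float
    g: float = 9.81


def inertia(p: TwoLinkParams, q: Sequence[float]) -> Matrix:
    c2 = math.cos(q[1])
    m11 = p.m1 * p.lc1**2 + p.m2 * (p.l1**2 + p.lc2**2 + 2 * p.l1 * p.lc2 * c2) + p.i1 + p.i2
    m12 = p.m2 * (p.lc2**2 + p.l1 * p.lc2 * c2) + p.i2
    m22 = p.m2 * p.lc2**2 + p.i2
    return [[m11, m12], [m12, m22]]


def coriolis(p: TwoLinkParams, q: Sequence[float], qd: Sequence[float]) -> Matrix:
    h = -p.m2 * p.l1 * p.lc2 * math.sin(q[1])
    return [[h * qd[1], h * (qd[0] + qd[1])], [-h * qd[0], 0.0]]


def gravity(p: TwoLinkParams, q: Sequence[float]) -> List[float]:
    c1, c12 = math.cos(q[0]), math.cos(q[0] + q[1])
    g1 = (p.m1 * p.lc1 + p.m2 * p.l1) * p.g * c1 + p.m2 * p.lc2 * p.g * c12
    g2 = p.m2 * p.lc2 * p.g * c12
    return [g1, g2]


def torque(p: TwoLinkParams, q: Sequence[float], qd: Sequence[float],
           qdd: Sequence[float]) -> List[float]:
    """Inverse dynamics: tau = M(q) qdd + C(q, qd) qd + G(q)."""
    M, C, G = inertia(p, q), coriolis(p, q, qd), gravity(p, q)
    return [sum(M[i][j] * qdd[j] + C[i][j] * qd[j] for j in range(2)) + G[i]
            for i in range(2)]


# Usage with illustrative (not Table 2) parameter values.
params = TwoLinkParams(m1=1.0, m2=1.0, i1=0.1, i2=0.1, l1=1.0, l2=1.0, lc1=0.5, lc2=0.5)
print(torque(params, [0.3, 0.7], [0.4, -1.1], [0.0, 0.0]))
```

This particular choice of C makes Ṁ − 2C skew-symmetric, a property commonly exploited in Lyapunov analyses of manipulators.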

Figure 2.

The critic-only NN structure.

Figure 3.

Interaction of multiple robotic manipulators with two degrees of freedom.

Figure 4.

Multiple robotic manipulator systems with a coordinate diagram.

Table 2.

Model parameters of multiple robotic manipulators.

Table 3.

Initial parameters and initial conditions of the system.

Remark 9.

To explain the effects of various parameter choices on the performance of the control system, the following basic suggestions for parameter adjustment are given:

(1) For the dynamic distributed observer (DDO), the parameter must balance observation accuracy against computational efficiency: increasing reduces the observation error of the robotic manipulators and improves control accuracy, but it increases the computational burden and degrades real-time performance. In practice, should be tuned to achieve sufficient accuracy without excessive consumption of computational resources.

(2) For the QPPC method, the initial value () determines the initial error bound and affects the transient performance; the final value () determines the ultimate convergence accuracy; and the convergence time () trades speed, which favors small values, against stability, which favors large values.

(3) For the AGITSMC method, and are base proportionality coefficients that set the nominal magnitudes of and : larger and increase the overall magnitudes of and , which changes the sliding surface and hence the dynamic performance of the control system. The small positive parameters and determine how quickly and change with and ; larger values make and more sensitive to these variations, so the gains adapt faster and the system responds more promptly to error changes. The positive parameter balances the influence of current and historical errors on the sliding surface.

(4) For the CNNODP method, the feedback gains () trade convergence speed against stability: larger gains accelerate convergence, but excessive gains cause oscillation. The discount factor ( > 0) weighs present performance against future outcomes and improves computational stability by preventing the unbounded accumulation of future costs or rewards. The learning rate () sets the step size of the gradient descent algorithm and thus trades convergence speed against accuracy. The interval () defines the integration window, balancing current and future performance and affecting the stability of the optimization results. The experience samples () provide diverse data that smooth the update process, improve computational efficiency, and prevent overfitting to recent experiences.

(5) For the fault compensation control method, the positive parameters (, , , and ) set the adaptation rate of the fault compensation law: larger values make the compensation respond faster to changes in actuator faults, whereas smaller values give slower, more conservative updates. These parameters must be tuned carefully to ensure stable and accurate fault compensation.
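Item (1) can be illustrated with a standard leader-following distributed observer, in which each follower estimates the leader state from its neighbors' estimates and, if pinned, from the leader itself. This is a sketch under assumptions: the leader is modeled as a harmonic oscillator, the chain topology is hypothetical (it is not the topology of Figure 3), and the gain `mu` only plays a role analogous to the DDO parameter discussed above; the paper's DDO may differ. The names `run_observer` and `mat_vec` are illustrative.

```python
from typing import Dict, List

S = [[0.0, 1.0], [-1.0, 0.0]]  # assumed leader dynamics: x0_dot = S x0


def mat_vec(a: List[List[float]], x: List[float]) -> List[float]:
    return [sum(a[i][j] * x[j] for j in range(len(x))) for i in range(len(a))]


def run_observer(neighbors: Dict[int, List[int]], pinned: List[int], n: int,
                 mu: float = 10.0, t_end: float = 30.0, dt: float = 1e-3) -> float:
    """Euler-integrates est_i_dot = S est_i + mu*(sum_j (est_j - est_i) + b_i (x0 - est_i))
    and returns the largest leader-estimation error over all followers at t_end."""
    x0 = [1.0, 0.0]                        # leader state
    est = [[0.0, 0.0] for _ in range(n)]   # followers' estimates of x0
    for _ in range(int(round(t_end / dt))):
        new_est = []
        for i in range(n):
            corr = [0.0, 0.0]
            for j in neighbors.get(i, []):          # consensus with neighbors
                corr = [c + ej - ei for c, ej, ei in zip(corr, est[j], est[i])]
            if i in pinned:                         # direct access to the leader
                corr = [c + l - ei for c, l, ei in zip(corr, x0, est[i])]
            drift = mat_vec(S, est[i])
            new_est.append([e + dt * (d + mu * c) for e, d, c in zip(est[i], drift, corr)])
        est = new_est                               # synchronous update
        x0 = [x + dt * d for x, d in zip(x0, mat_vec(S, x0))]
    return max(abs(e - l) for row in est for e, l in zip(row, x0))


# Usage: seven followers in a hypothetical chain, only follower 0 sees the leader.
chain = {i: [j for j in (i - 1, i + 1) if 0 <= j < 7] for i in range(7)}
print(run_observer(chain, pinned=[0], n=7))
```

Increasing `mu` speeds up convergence of the estimation error, but an explicit discretization then needs a smaller step for stability, which mirrors the accuracy versus computational cost trade-off in item (1).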

4.2. Simulation Analysis

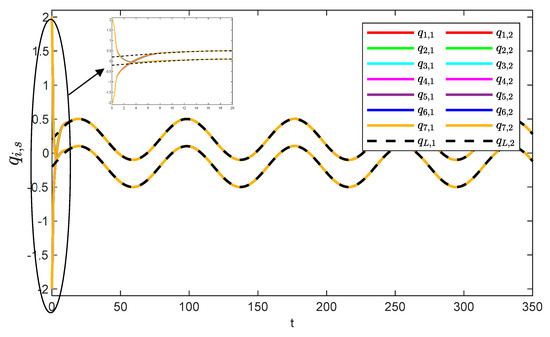

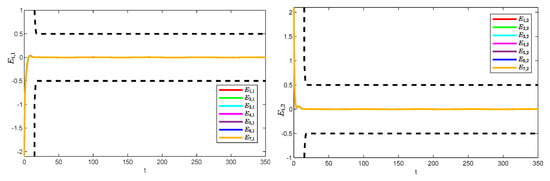

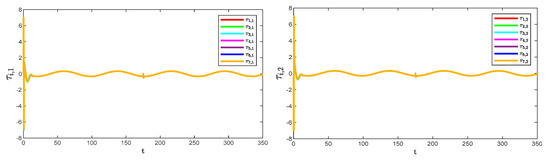

Figures 5 and 6 show the position tracking and tracking errors of the leader and seven followers under the QPPC strategy, and Figure 7 shows the control inputs of the robotic manipulators. Specifically, the system is unconstrained from the initial time until a certain instant, i.e., when , and the tracking error is constrained to a predefined domain when . Therefore, Figures 5 and 6 show that the designed controller realizes flexible performance constraints and maintains good tracking performance throughout. As Figure 7 shows, the control inputs eventually converge, remain bounded, and are stable without significant jitter.
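The two-phase behavior described above can be illustrated with a hypothetical piecewise envelope: no constraint before a release time, followed by a prescribed-time decay from an initial value to a final value, matching the roles of the initial value, final value, and convergence time in Remark 9 (2). The functional form, the exponent `power`, and the names `performance_bound`, `within_envelope`, and `t_free` are assumptions for illustration; the paper's QPPC function is not reproduced here.

```python
import math


def performance_bound(t: float, t_free: float, rho0: float, rho_inf: float,
                      t_conv: float, power: float = 2.0) -> float:
    """Hypothetical envelope: unconstrained before t_free, then a polynomial
    decay from rho0 to rho_inf over t_conv seconds, and rho_inf afterwards."""
    if t < t_free:
        return math.inf
    tau = t - t_free
    if tau >= t_conv:
        return rho_inf
    return (rho0 - rho_inf) * (1.0 - tau / t_conv) ** power + rho_inf


def within_envelope(t: float, error: float, **kw: float) -> bool:
    """True if the tracking error satisfies |e(t)| < rho(t)."""
    return abs(error) < performance_bound(t, **kw)


# Usage: a large initial error is tolerated before t_free = 1 s.
cfg = dict(t_free=1.0, rho0=1.0, rho_inf=0.05, t_conv=4.0)
print([performance_bound(t, **cfg) for t in (0.5, 1.0, 3.0, 10.0)])
```

Because the bound is infinite before the release time, no condition is imposed on the initial error; only the error at the release time must lie inside the initial value of the envelope.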

Figure 5.

Angular consistency effect.

Figure 6.

Trajectory errors of the system.

Figure 7.

Control inputs to the system.

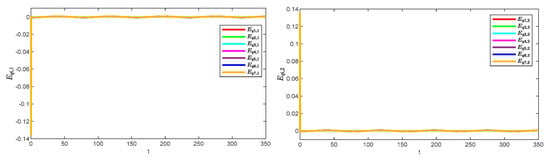

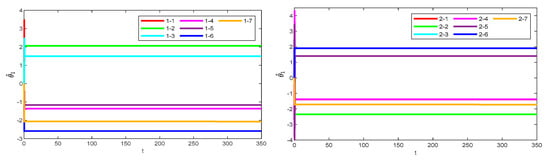

Figure 8 shows the observation errors of the multiple robotic manipulators, which eventually converge and remain bounded throughout. Figure 9 shows the convergence of the critic-only NN weight estimates for robotic manipulator 1, which ultimately remain stable. Optimal control is therefore achieved with the critic-only strategy, which trains a single network and thus requires approximately one-half the computation of the actor–critic strategy [22,23,24] and one-third that of the identifier–actor–critic strategy [25,26].

Figure 8.

Observation errors of the system.

Figure 9.

Learning process of the critic-only NN weights for robotic manipulator 1.

4.3. Comparative Simulation

4.3.1. Comparative Simulation for the AGITSMC Control Strategy

To highlight the advantages of the AGITSMC control strategy, it is compared with the strategies of [11,12,13].

- Case 1 ([13]). Here, , and are positive parameters, and is the prescribed performance function.

- Case 2 ([12]). Here, and are positive parameters.

- Case 3 ([11]). Here, , , and are positive parameters.

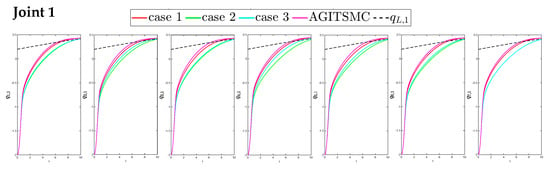

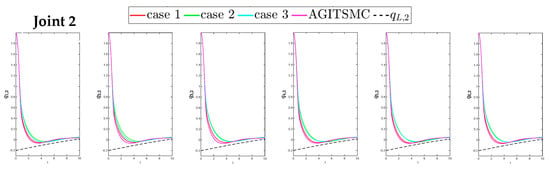

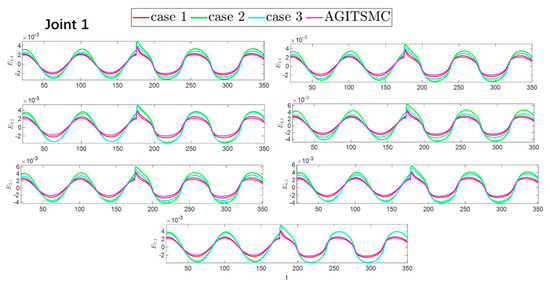

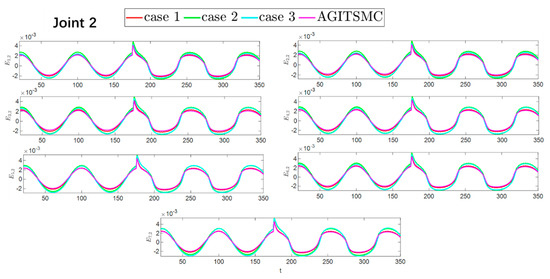

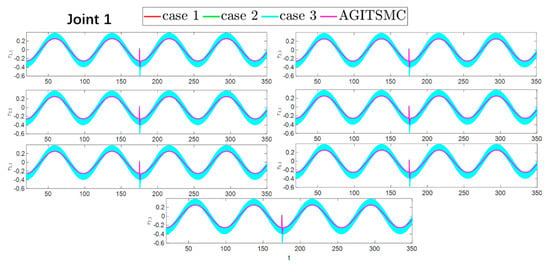

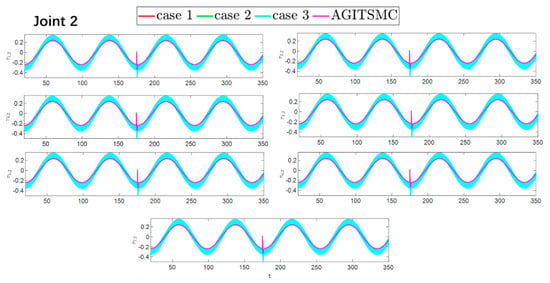

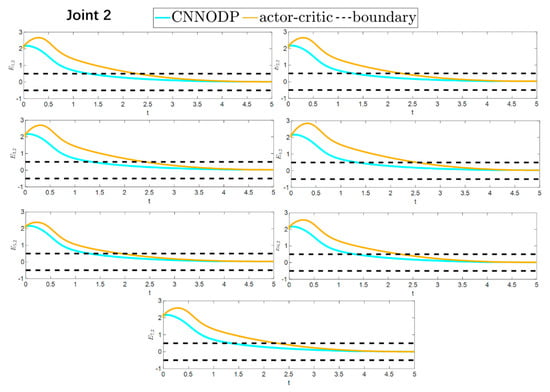

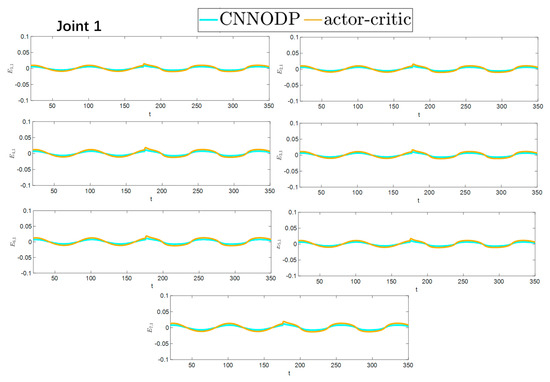

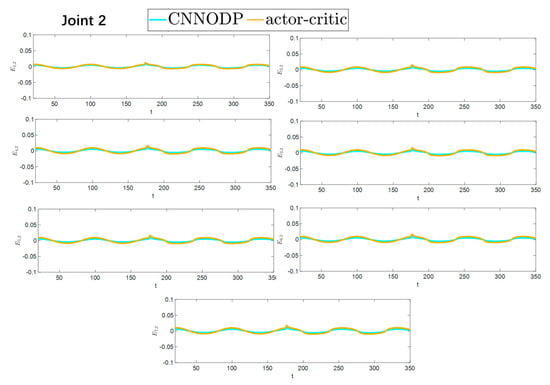

To better show the differences in control performance among the four strategies, local comparisons of the trajectory convergence velocity, error convergence domain, and control inputs are presented; the parameters of the comparative experiments are listed in Table 4. Figures 10 and 11 compare the trajectory convergence velocities of the four strategies from 0 to 10 s; the proposed AGITSMC strategy converges fastest. Figures 12 and 13 compare the error convergence domains of the four strategies over 20–350 s. The tracking errors of all four strategies remain within the predefined domain, including after the actuator faults occur, so all four strategies have good steady-state performance; among them, AGITSMC has the smallest error convergence domain and the best tracking effect. Figures 14 and 15 compare the control inputs of the four strategies over 20–350 s. The control inputs of the proposed AGITSMC strategy are much smoother and return to a smooth state even after actuator faults. In contrast, the control inputs of the other three strategies exhibit some jitter, most pronounced in Case 3, which clearly fails to suppress input jitter. In addition, the AGITSMC strategy requires the least control energy.

Table 4.

The parameters of the comparative experiment.

Figure 10.

Comparison of trajectory convergence velocities from 0 to 10 s for the four strategies.

Figure 11.

Comparison of trajectory convergence velocities from 0 to 10 s for the four strategies.

Figure 12.

Comparison of the error convergence domain at 20–350 s for the four strategies.

Figure 13.

Comparison of the error convergence domain at 20–350 s for the four strategies.

Figure 14.

Comparison of control inputs in the range of 20–350 s for the four strategies.

Figure 15.

Comparison of control inputs in the range of 20–350 s for the four strategies.

To fully evaluate the effectiveness of the four strategies, three indicators are used to quantify their performance: the integral of the absolute error (IAE), the integral of the time-weighted absolute error (ITAE), and the integral of the squared control input (ISV), defined as follows:
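On sampled simulation data, these indicators can be computed as sketched below, assuming the standard definitions IAE = ∫|e(t)|dt, ITAE = ∫t|e(t)|dt, and ISV = ∫u(t)²dt over the simulation horizon, trapezoidal integration, and scalar signals per manipulator; the paper's horizon, sampling period, and handling of multiple joints are not stated here, and the function names are hypothetical.

```python
from typing import Callable, List, Sequence


def trapezoid(t: Sequence[float], y: Sequence[float]) -> float:
    """Trapezoidal integral of samples y over sample times t."""
    return sum(0.5 * (t[k + 1] - t[k]) * (y[k + 1] + y[k]) for k in range(len(t) - 1))


def iae(t: Sequence[float], e: Sequence[float]) -> float:
    return trapezoid(t, [abs(v) for v in e])


def itae(t: Sequence[float], e: Sequence[float]) -> float:
    return trapezoid(t, [tk * abs(v) for tk, v in zip(t, e)])


def isv(t: Sequence[float], u: Sequence[float]) -> float:
    return trapezoid(t, [v * v for v in u])


def averaged(metric: Callable[[Sequence[float], Sequence[float]], float],
             t: Sequence[float], signals: List[Sequence[float]]) -> float:
    """Average one indicator over several manipulators, as done for Table 5."""
    return sum(metric(t, s) for s in signals) / len(signals)


# Usage on toy signals (not the paper's data).
t = [0.0, 0.5, 1.0, 1.5, 2.0]
print(iae(t, [-1.0] * 5), itae(t, [-1.0] * 5), isv(t, [2.0] * 5))
```

ITAE weights late errors more heavily than IAE, so it penalizes slow settling and persistent steady-state error, while ISV measures control effort rather than accuracy.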

To compare the four strategies, each indicator is averaged over the seven robotic manipulators, and the results are summarized in Table 5. Table 5 shows that the proposed AGITSMC achieves the smallest IAE and ITAE, indicating a smaller tracking error and higher dynamic tracking accuracy than the comparative strategies, and also the smallest ISV, indicating the lowest control cost. Thus, compared with existing SMC strategies, the proposed AGITSMC offers better tracking performance at a lower control cost.

Table 5.

Comparison of three control performance indicators for the four strategies.

4.3.2. Comparative Simulation for the CNNODP Control Strategy

To highlight the advantages of the CNNODP control strategy, comparisons with the actor–critic method [22,23,24] are given.

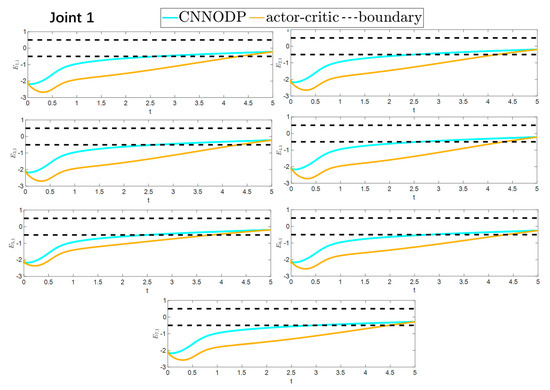

Figures 16 and 17 compare the convergence speeds of the two strategies and indicate that the CNNODP strategy converges significantly faster. This is because CNNODP optimizes only a single value network and thus avoids the coupled updates between the actor and critic networks. In simple state–action mapping scenarios, it can quickly learn effective control rules and reduce the error faster than the more complex actor–critic architecture. Figures 18 and 19 compare the error convergence domains of the two strategies and show that the convergence error of the CNNODP strategy is significantly smaller. The core reason is that the value-function design of CNNODP learns the optimal state–action mapping directly, without interaction between networks. Consequently, unlike the actor–critic strategy, it does not introduce additional deviations from network coupling, which allows CNNODP to maintain high tracking accuracy after the system stabilizes.

Figure 16.

Comparison of the convergence velocity for the two strategies.

Figure 17.

Comparison of the convergence velocity for the two strategies.

Figure 18.

Comparison of the convergence domain for the two strategies.

Figure 19.

Comparison of the convergence domain for the two strategies.

5. Conclusions

In this paper, adaptive optimal sliding mode fault-tolerant control is proposed for multiple robotic manipulators on the basis of quantitative prescribed performance control and critic-only dynamic programming. The QPPC strategy relaxes the initial-state requirement of the control system to realize global prescribed performance control. The AGITSMC strategy improves the convergence velocity and control accuracy while reducing system jitter. The CNNODP strategy achieves optimal control with reduced computational effort. The adaptive fault compensation control strategy addresses actuator faults. Simulations under external disturbances and actuator faults, together with comparisons against existing methods, demonstrate the effectiveness of the proposed strategy and show that it achieves very good control performance. Specifically, the QPPC strategy divides the error constraint into two phases: when , the system is unconstrained, and when , the system is constrained to a predefined domain; the control system maintains good tracking throughout the whole process. Compared with existing strategies, the AGITSMC strategy ensures faster convergence, lower control cost, and the strongest anti-interference capability. The learning law of the CNNODP strategy remains stable throughout the process, with a good learning effect. In conclusion, the proposed control strategy achieves optimal robust control of multiple robotic manipulators under external disturbances and actuator faults. Future work should extend testing of the method to other fields.
For example, testing the method on different types of multiple robotic manipulator systems in various industrial automation scenarios would further validate its robustness and versatility. In the aerospace field, where tasks are complex and high-precision and environmental conditions are harsh, such tests could reveal its performance under extreme circumstances. Tests across different fields would therefore enable a more comprehensive evaluation of the value and application prospects of this method.

Author Contributions

Conceptualization, X.Z. and H.L.; Methodology, X.Z. and H.L.; Software, X.Z. and H.L.; Validation, X.Z. and H.L.; Formal analysis, Z.Y. and H.L.; Investigation, X.H.; Resources, Z.Y. and X.H.; Data curation, Z.Y. and X.H.; Writing—original draft, X.Z. and Z.Y.; Writing—review & editing, X.Z. and Z.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Project of the Department of Education of Guangdong Province [grant number 2023ZDZX1005], the Science and Technology Innovation Team of the Department of Education of Guangdong Province [grant number 2024KCXTD041], the Shenzhen Science and Technology Program [grant number JCYJ20220530162014033], the Guangdong Basic and Applied Basic Research Foundation [grant number 2024A1515011345], and the Science and Technology Planning Project of Zhanjiang City [grant number 2021A05023].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

There are no potential commercial interests that require disclosure under the relevant guidelines.

References

1. Surya, P.S.K.; Shukla, A.; Pandya, N.; Jha, S.S. Automated Vision-based Bolt Sorting by Manipulator for Industrial Applications. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), Bari, Italy, 28 August–1 September 2024.

2. Lai, J.; Lu, B.; Ren, H. Kinematic concepts in minimally invasive surgical flexible robotic manipulators: State of the art. In Handbook of Robotic Surgery; Academic Press: Cambridge, MA, USA, 2025; pp. 27–41.

3. Dias, P.A.; Petry, M.R.; Rocha, L.F. The Role of Robotics: Automation in Shoe Manufacturing. In Proceedings of the 2024 20th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Genova, Italy, 2–4 September 2024.

4. Wang, L.M.; Jia, L.Z.; Zhang, R.D.; Gao, F.R. H∞ output feedback fault-tolerant control of industrial processes based on zero-sum games and off-policy Q-learning. Comput. Chem. Eng. 2023, 179, 108421.

5. Wang, L.M.; Li, X.Y.; Zhang, R.D.; Gao, F.R. Reinforcement Learning-Based Optimal Fault-Tolerant Tracking Control of Industrial Processes. Ind. Eng. Chem. Res. 2023, 62, 16014–16024.

6. Wang, L.M.; Jia, L.Z.; Zou, T.; Zhang, R.D.; Gao, F.R. Two-dimensional reinforcement learning model-free fault-tolerant control for batch processes against multi-faults. Comput. Chem. Eng. 2025, 192, 108883.

7. Visinsky, M.L.Z. Dynamic Fault Detection and Intelligent Fault Tolerance for Robotics; Rice University: Houston, TX, USA, 1994.

8. Aldridge, H.A.; Juang, J.N. Experimental Robot Position Sensor Fault Tolerance Using Accelerometers and Joint Torque Sensors. No. NASA-TM-110335. 1997. Available online: https://dl.acm.org/doi/pdf/10.5555/871365 (accessed on 22 July 2025).

9. Sun, W.W. Stabilization analysis of time-delay Hamiltonian systems in the presence of saturation. Appl. Math. Comput. 2011, 217, 9625–9634.

10. Liu, Z.; Zhang, J.Y.; Zhu, Q.M. Adaptive Secure Control for Uncertain Cyber-Physical Systems With Markov Switching Against Both Sensor and Actuator Attacks. IEEE Trans. Syst. Man Cybern. Syst. 2025, 55, 3917–3928.

11. Jouila, A.; Nouri, K. An adaptive robust nonsingular fast terminal sliding mode controller based on wavelet neural network for a 2-DOF robotic arm. J. Frankl. Inst. 2020, 357, 13259–13282.

12. Zhang, S.; Cheng, S.; Jin, Z. Variable Trajectory Impedance: A Super-Twisting Sliding Mode Control Method for Mobile Manipulator Based on Identification Model. IEEE Trans. Ind. Electron. 2025, 72, 610–619.

13. Wu, Y.; Wang, Y.Y.; Xie, X.P.; Wu, Z.G.; Yan, H.C. Adaptive Reinforcement Learning Strategy-Based Sliding Mode Control of Uncertain Euler–Lagrange Systems With Prescribed Performance Guarantees: Autonomous Underwater Vehicles-Based Verification. IEEE Trans. Fuzzy Syst. 2024, 32, 6160–6171.

14. Boiko, I.; Fridman, L. Analysis of chattering in continuous sliding-mode controllers. IEEE Trans. Autom. Control 2005, 50, 1442–1446.

15. Zhang, Z.; Guo, Y.; Zhu, S.; Liu, J.; Gong, D. Adaptive integral sliding-mode finite-time control with integrated extended state observer for uncertain nonlinear systems. Inf. Sci. 2024, 667, 120456.

16. Zha, M.X.; Wang, H.P.; Tian, Y.; He, D.X.; Wei, Y.C. A novel hybrid observer-based model-free adaptive high-order terminal sliding mode control for robot manipulators with prescribed performance. Int. J. Robust Nonlinear Control 2024, 34, 11655–11680.

17. Tran, X.T.; Kang, H.J. Adaptive Hybrid High-Order Terminal Sliding Mode Control of MIMO Uncertain Nonlinear Systems and Its Application to Robot Manipulators. Int. J. Precis. Eng. Manuf. 2015, 16, 255–266.

18. Baban, P.Q.; Ahangari, M.E. Adaptive terminal sliding mode control of a non-holonomic wheeled mobile robot. Int. J. Veh. Inform. Commun. Syst. 2024, 9, 335–356.

19. Alattas, K.A.; Vu, M.T.; Mofid, O.; El-Sousy, F.F.M.; Alanazi, A.K.; Awrejcewicz, J.; Mobayen, S. Adaptive Nonsingular Terminal Sliding Mode Control for Performance Improvement of Perturbed Nonlinear Systems. Mathematics 2022, 10, 1064.

20. Hu, K.X.; Ma, Z.J.; Zou, S.L.; Li, J.; Ding, H.R. Impedance Sliding-Mode Control Based on Stiffness Scheduling for Rehabilitation Robot Systems. Cyborg Bionic Syst. 2024, 5, 0099.

21. Tian, G.T.; Tan, J.; Li, B.; Duan, G.R. Optimal Fully Actuated System Approach-Based Trajectory Tracking Control for Robot Manipulators. IEEE Trans. Cybern. 2024, 54, 7469–7478.

22. Yin, Y.; Ning, X.; Xia, D. Adaptive output-feedback fault-tolerant control for space manipulator via actor-critic learning. Adv. Space Res. 2025, 75, 3914–3932.

23. Rahimi Nohooji, H.; Zaraki, A.; Voos, H. Actor–critic learning based PID control for robotic manipulators. Appl. Soft Comput. 2024, 151, 111153.

24. Liang, X.; Yao, Z.; Ge, Y.; Yao, J. Disturbance observer based actor-critic learning control for uncertain nonlinear systems. Chin. J. Aeronaut. 2023, 36, 271–280.

25. Fan, Y.; Yang, C.; Li, Y. Fixed-Time Neuro-Optimal Adaptive Control With Input Saturation for Uncertain Robots. IEEE Internet Things J. 2024, 11, 28906–28917.

26. Xie, Z.C.; Sun, T.; Kwan, T.; Wu, X.F. Motion control of a space manipulator using fuzzy sliding mode control with reinforcement learning. Acta Astronaut. 2020, 176, 156–172.

27. Guo, Y.; Huang, H. Approximate optimal and safe coordination of nonlinear second-order multirobot systems with model uncertainties. ISA Trans. 2024, 149, 155–167.

28. Ma, B.; Li, Y.C. Compensator-critic structure-based event-triggered decentralized tracking control of modular robot manipulators: Theory and experimental verification. Complex Intell. Syst. 2022, 8, 1913–1927.

29. Ma, B.; Dong, B.; Zhou, F.; Li, Y.C. Adaptive Dynamic Programming-Based Fault-Tolerant Position-Force Control of Constrained Reconfigurable Manipulators. IEEE Access 2020, 8, 183286–183299.

30. Duc, D.N.; Khac, L.L.; Tan, L.N. ADP-Based H∞ Optimal Control of Robot Manipulators With Asymmetric Input Constraints and Disturbances. IEEE Access 2024, 12, 67809–67819.

31. Liu, D.; Yang, X.; Wang, D.; Wei, Q. Reinforcement-Learning-Based Robust Controller Design for Continuous-Time Uncertain Nonlinear Systems Subject to Input Constraints. IEEE Trans. Cybern. 2015, 45, 1372–1385.

32. Liu, H.; Huang, H.; Tian, X.; Zhang, J. Distributed fixed-time formation control for UAV-USV multiagent systems based on the FEWNN with prescribed performance. Ocean Eng. 2025, 328, 120996.

33. Liu, H.; Feng, Z.; Tian, X.; Mai, Q. Adaptive predefined-time specific performance control for underactuated multi-AUVs: An edge computing-based optimized RL method. Ocean Eng. 2025, 318, 120048.

34. Wang, M.; Yang, A. Dynamic Learning From Adaptive Neural Control of Robot Manipulators With Prescribed Performance. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 2244–2255.

35. Golestani, M.; Chhabra, R.; Esmaeilzadeh, M. Finite-Time Nonlinear H∞ Control of Robot Manipulators With Prescribed Performance. IEEE Control Syst. Lett. 2023, 7, 1363–1368.

36. Xia, Y.; Yuan, Y.; Sun, W. Finite-Time Adaptive Fault-Tolerant Control for Robot Manipulators With Guaranteed Transient Performance. IEEE Trans. Ind. Inform. 2025, 21, 3336–3345.

37. Guo, Q.; Zhang, Y.; Celler, B.G.; Su, S.W. Neural Adaptive Backstepping Control of a Robotic Manipulator With Prescribed Performance Constraint. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3572–3583.

38. Zhu, C.; Jiang, Y.; Yang, C. Fixed-Time Neural Control of Robot Manipulator With Global Stability and Guaranteed Transient Performance. IEEE Trans. Ind. Electron. 2023, 70, 803–812.

39. Liu, X.; Zhang, H.; Sun, J.; Guo, X. Dynamic Threshold Finite-Time Prescribed Performance Control for Nonlinear Systems With Dead-Zone Output. IEEE Trans. Cybern. 2024, 54, 655–664.

40. Xu, Z.; Zhao, L. Distributed Adaptive Gain-Varying Finite-Time Event-Triggered Control for Multiple Robot Manipulators With Disturbances. IEEE Trans. Ind. Inform. 2023, 19, 9302–9313.

41. Ma, Z.; Ma, H. Adaptive Fuzzy Backstepping Dynamic Surface Control of Strict-Feedback Fractional-Order Uncertain Nonlinear Systems. IEEE Trans. Fuzzy Syst. 2020, 28, 122–133.

42. Mei, K.; Ding, S.; Dai, X.; Chen, C.C. Design of Second-Order Sliding-Mode Controller via Output Feedback. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 4371–4380.

43. Ma, Q.; Jin, P.; Lewis, F.L. Guaranteed Cost Attitude Tracking Control for Uncertain Quadrotor Unmanned Aerial Vehicle Under Safety Constraints. IEEE/CAA J. Autom. Sin. 2024, 11, 1447–1457.

44. Lewis, F.; Yesildirak, A.; Jagannathan, S. Neural Network Control of Robot Manipulators and Non-Linear Systems; Taylor & Francis: London, UK, 1998.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).