1. Introduction

In recent years, advances in artificial intelligence (AI) and microelectronics have driven the widespread adoption of wireless sensor networks (WSNs) in applications such as the Internet of Things (IoT), smart cities, and environmental monitoring [1]. WSNs support intelligent systems by collecting real-time data, including temperature, humidity, and chemical concentration, from distributed sensor nodes [2]. Moreover, in dynamic scenarios such as disaster response, military reconnaissance, or adaptive environmental monitoring, WSNs must rapidly adjust to changing conditions, which calls for mobile sensor nodes capable of dynamic deployment. However, coverage optimization remains a pivotal challenge in WSN design: how to maximize coverage of the monitoring area while ensuring connectivity and energy efficiency under constraints on node count, energy, and computational resources. This problem directly affects monitoring accuracy and resource efficiency, especially in dynamic and heterogeneous environments, and thereby limits the reliability of WSNs in intelligent networks [3]. Optimizing WSN coverage is therefore essential for improving network performance and enabling large-scale IoT deployments [4].

Conventional optimization methods often struggle with the intricacies of the WSN coverage problem. Static deployment strategies, while effective in controlled environments, cannot readily adapt to environmental changes or node failures, which limits their flexibility in real-world applications. In contrast, dynamic deployment lets sensor nodes reposition themselves, enhancing network flexibility and robustness under resource constraints. Swarm intelligence algorithms offer one way to address this challenge. Particle Swarm Optimization (PSO) optimizes node positions through swarm collaboration, but its performance is limited by its convergence speed [5]. Ant Colony Optimization (ACO) relies on colony collaboration to optimize node locations, but it incurs high computational overhead [6]. Deep reinforcement learning has proven effective in dynamic optimization, but its substantial resource requirements limit its practical use [7]. These deficiencies underscore the need for efficient and robust algorithms that can handle the high-dimensional search space and real-time constraints of WSN coverage problems.

To address these practical issues, we analyzed the particular requirements of WSN coverage optimization. This analysis indicated that the gray wolf optimization (GWO) algorithm offers an effective optimization framework by emulating the collaborative behavior of wolves. However, GWO has known limitations, including an uneven initial population distribution caused by random initialization, an imbalance between exploration and exploitation, and a tendency to become trapped in local optima [8]. We therefore propose a fusion multi-strategy gray wolf optimizer (FMGWO), which improves search efficiency and stability through electrostatic field initialization, dynamic parameter tuning, an elder council mechanism, head wolf tenure checking and a rotation mechanism, and a hybrid mutation strategy. This approach targets the inefficiency and instability of existing techniques in complex WSN scenarios and achieves higher coverage with fewer nodes, outperforming algorithms such as PSO, GWO, CSA, DE, GA, FA, OGWO, DGWO1, and DGWO2. Beyond improving the performance of WSN deployments, FMGWO provides a reference framework for optimization in the IoT, edge computing, and efficient smart systems, and shows potential for application to other optimization problems.

2. Related Work

Coverage is a fundamental challenge for WSNs across application areas including the IoT, smart cities, and environmental monitoring. In these contexts, the objective is to maximize the covered area while ensuring network connectivity and energy efficiency within the limits imposed by the number of nodes, energy resources, and computational capabilities [9]. Numerous studies have addressed this issue and proposed a variety of optimization methods, primarily built around two strategies: deterministic and random deployment [10].

Deterministic deployment places sensor nodes at predefined locations to ensure comprehensive area coverage and network connectivity. This approach is particularly well suited to scenarios where environmental information is known, such as industrial monitoring or smart buildings [11]. Boualem et al. proposed a minimum semi-deterministic deployment model for mobile wireless sensor networks based on Pick’s theorem. This model reduces node mobility and improves network lifetime and coverage efficiency [12]. Zrelli et al. investigated the k-coverage and connectivity of WSNs in border monitoring. They explored the optimal model under deterministic deployment to determine the minimum number of sensors, and they proposed a hybrid WSN scheme for Tunisian–Libyan border monitoring to achieve efficient connectivity and event detection [13]. However, deterministic deployment depends on detailed environmental information obtained in advance and is difficult to implement in dynamic or unknown environments, which limits its flexibility and practical application [14].

Conversely, random deployment requires no a priori environmental data, and the deployment process is straightforward and adaptable, making it well suited to large-scale or emergency scenarios such as forest fire monitoring or battlefield reconnaissance [15]. Randomly scattering nodes reduces deployment complexity, but the resulting uneven distribution often produces coverage holes or excessive node clustering, which in turn degrades coverage and connectivity [16]. To address these issues, researchers have devised post-deployment optimization techniques that improve coverage by adjusting node positions. They have also analyzed the best-case and worst-case coverage scenarios theoretically and proposed various improved algorithms, which alleviate uneven coverage to a certain extent [17]. However, random deployment still suffers from node redundancy and energy expenditure, so more efficient deployment optimization schemes are needed [18].

Beyond static placement problems, recent research has increasingly addressed dynamic coverage scenarios where sensors are mobile or environmental conditions change over time [19]. Approaches to dynamic coverage often rely on adaptive re-deployment, online control policies, or evolutionary algorithms that can handle time-varying objectives [20]. In particular, multi-objective evolutionary algorithms and multi-task learning frameworks have been proposed to handle conflicting goals and exploit knowledge transfers between related tasks or time instances [21]. Such methods can include interval-based uncertainty handling, inverse-mapping techniques for rapid re-initialization, or learning-based surrogates to accelerate solution updates [22]. While these dynamic and multi-objective methods are powerful for mobile or uncertain WSNs, they typically involve more complex objective formulations, additional communication for sensor mobility, or a higher computational burden [23].

In light of the limitations of conventional optimization methods, swarm intelligence algorithms offer an alternative approach to WSN coverage optimization because they generate complex intelligent behavior from local interactions among simple individuals. Song et al. proposed NEHPO, an enhanced version of the hunter–prey optimization (HPO) algorithm, to address HPO’s low search accuracy and its tendency to converge on local optima, with the objective of improving WSN coverage performance [24]. Although NEHPO improves the overall search capability, its hybrid structure introduces additional parameter-tuning complexity and increases computational overhead during each iteration. Furthermore, in large-scale WSN deployments, the algorithm may still converge unstably because it is sensitive to the initial population distribution, and the improvements in local exploitation do not fully eliminate the tendency to stagnate in multimodal search landscapes.

Wang et al. proposed a network coverage optimization algorithm based on an improved salp swarm algorithm (ATSSA). It strengthens global and local search through tent chaotic sequence initialization of the population, a t-distribution mutation, and an adaptive position-update formula, with the aim of improving WSN coverage and reducing the energy consumed by node movement [25]. Despite these enhancements, ATSSA’s reliance on chaotic sequence initialization may result in excessive randomness when the search space is very large, potentially causing inefficient early-stage exploration. The t-distribution mutation, while beneficial for avoiding premature convergence, can produce large and unpredictable position jumps that slow convergence as the search approaches near-optimal regions. Additionally, the adaptive update mechanism adds computational complexity and requires careful parameter calibration to maintain a suitable balance between exploration and exploitation across different network scales.

Chen et al. proposed a hybrid butterfly–predator optimization (HBPO) algorithm with a dynamic quadratic parameter adaptive strategy, as well as a hybrid butterfly–beluga optimization algorithm (NHBBWO) that combines the advantages of the beluga and butterfly optimization algorithms to increase the WSN node coverage area and reduce redundancy [26]. Although HBPO and NHBBWO benefit from hybridization by integrating complementary search mechanisms, this combination inherently increases algorithmic complexity, leading to longer computation times per iteration. The performance gain also depends heavily on appropriate parameter weighting between the two embedded metaheuristics, which reduces robustness in dynamically changing WSN environments. Moreover, hybrid algorithms may search redundantly when the optimization process lacks effective cooperation control, wasting computational effort and reducing scalability in large-scale deployments.

Despite the advances made by metaheuristic algorithms such as HPO and the salp swarm algorithm, persistent challenges remain across most swarm intelligence-based coverage optimization methods. Most suffer performance degradation in high-dimensional or large-scale networks, with slow convergence and increased computational overhead from complex parameter tuning. Their search tends to collapse prematurely toward local optima, especially in rugged or multimodal landscapes. In mobile node scenarios, frequent relocation amplifies energy consumption, limiting real-world applicability. Notably, population diversity often decreases rapidly in the early search stages, reducing global search capacity. These limitations impede effective node deployment and have prompted researchers to investigate more efficient swarm intelligence algorithms for WSN coverage optimization.

To address these limitations, GWO, introduced by Mirjalili et al., has emerged as a competitive alternative [27]. Inspired by the social hierarchy and hunting behavior of gray wolf packs, GWO provides an efficient metaheuristic optimization framework by simulating the collaboration mechanism of the pack [27]. Compared with algorithms such as HPO and the salp swarm algorithm, GWO is simpler and has a lower computational cost, which makes it effective at improving the coverage rate in WSN coverage optimization [28]. However, the performance of GWO is limited by the uneven distribution of the initial population, the inflexibility of its linear convergence strategy, and its tendency to fall into local optima in complex, high-dimensional search spaces [29].

Specifically, randomly initialized populations may cover the solution space inadequately [30], a linear convergence factor struggles to balance global exploration and local exploitation [31], and a single-head-wolf guidance mechanism may lead to convergence stagnation in multi-peak optimization scenarios [32]. These issues are especially prominent in the WSN coverage optimization problem, where they reduce the robustness of the algorithm and the quality of the actual deployment.

In summary, deterministic and random deployment strategies each offer advantages for WSN coverage optimization, but their limitations highlight the need for more advanced optimization techniques. Swarm intelligence algorithms are one such class of techniques and provide effective solutions for coverage optimization through global search and adaptive mechanisms.

We chose the GWO framework as the baseline for developing FMGWO for several pragmatic and theoretical reasons. First, GWO is a population-based metaheuristic whose leadership hierarchy and hunting mechanism provide an intuitive balance between exploration and exploitation, which aligns well with the continuous node-placement problems that arise in WSN coverage. Second, the canonical GWO has relatively few tunable parameters and a simple update scheme, which makes it straightforward to analyze and to hybridize with auxiliary mechanisms. Third, the literature shows that GWO performs competitively on many continuous optimization benchmarks and has been applied successfully to placement and coverage problems, so it is a suitable and well-understood starting point for targeted algorithmic improvements. At the same time, the standard GWO has known limitations, most notably a tendency toward premature convergence and diminished population diversity in later stages of optimization. These limitations motivate the targeted enhancements presented in this work, each designed to alleviate a specific weakness without substantially complicating the algorithm’s structure. The present study therefore proposes FMGWO, with the objective of substantially improving the coverage and resource efficiency of WSNs and addressing the challenges that arise in practical applications.

3. Wireless Sensor Network Coverage Problem and Standard GWO

3.1. Sensor Network Node Coverage Model

In WSNs, the sensing capability of the sensor nodes is pivotal to effective network monitoring. In this study, the sensing range of a node is defined as a circular area centered on the node with sensing radius R; only monitoring points within this area can be sensed. The sensing radius R therefore directly determines the coverage efficiency of the network. To keep the analysis tractable, this study simplifies the WSN coverage area to a two-dimensional plane and adopts the following idealized assumptions.

Assumption 1. The sensing range of all nodes is a perfect circular area and is not affected by any obstacles or environmental factors.

Assumption 2. All sensor nodes have the same hardware structure and sensing capability to ensure a consistent sensing range.

Assumption 3. All sensor nodes are mobile and can sense other nodes within their sensing range in real time and obtain the precise location information of these nodes.

Based on the above assumptions, the WSN coverage model is as follows.

In this paper, the monitoring area is a two-dimensional rectangular plane of length Y and width Z, with total area Y × Z. In the planar rectangular coordinate system, the four vertices of this rectangle are (0, 0), (0, Z), (Y, 0), and (Y, Z). During discretization, the monitoring area is divided into n equal-area, symmetric grid cells. The center point of each cell is designated as a monitoring point, and the set of monitoring points is

$$J = \{J_1, J_2, \ldots, J_n\}. \tag{1}$$

Next, v sensor nodes are randomly deployed in the monitoring area, forming the set

$$O = \{O_1, O_2, \ldots, O_v\}. \tag{2}$$

All nodes follow a Boolean perception model, and each node has a perception radius of R.

The Euclidean distance between sensor node $O_i$, located at $(x_i, y_i)$, and monitoring point $J_j$, located at $(x_j, y_j)$, is

$$d(O_i, J_j) = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2}. \tag{3}$$

In Equation (3), $d(O_i, J_j)$ denotes the Euclidean distance between sensor node $O_i$ and monitoring point $J_j$. The Boolean perception function $P(O_i, J_j)$ determines whether the sensor node can detect the target and is defined as

$$P(O_i, J_j) = \begin{cases} 1, & d(O_i, J_j) \le R \\ 0, & \text{otherwise.} \end{cases} \tag{4}$$

If this distance is less than or equal to the sensing radius R, the monitoring point $J_j$ lies within the sensing range of sensor node $O_i$, which means that the grid cell containing $J_j$ is covered by the WSN. Because the same monitoring point $J_j$ may be sensed by multiple sensor nodes, the joint probability that $J_j$ is sensed by the set of all sensor nodes is

$$P(O_{all}, J_j) = 1 - \prod_{i=1}^{v} \bigl(1 - P(O_i, J_j)\bigr). \tag{5}$$

In Equation (5), $P(O_{all}, J_j)$ denotes the joint probability that monitoring point $J_j$ is sensed by at least one node in the monitoring area, $O_{all}$ denotes all sensor nodes, and v is the total number of sensor nodes.

Evaluating WSN performance requires an appropriate metric, and coverage is among the most important. In this model, the coverage ratio is the ratio of the covered area to the total area of the monitoring area. The covered area is the sum of the joint perception probabilities of all monitoring points multiplied by the area of one grid cell. The coverage ratio is calculated as follows:

$$C_r = \frac{\kappa \sum_{j=1}^{n} P(O_{all}, J_j)}{Y \times Z}, \tag{6}$$

$$\kappa = \frac{Y \times Z}{n}. \tag{7}$$

In Equations (6) and (7), $C_r$ denotes the coverage ratio, Y × Z denotes the total area of the monitoring area, κ is the area of a single grid cell, and n is the number of grid cells. The coverage of the WSN is thus the ratio of the area of the covered grid cells to the total area of the monitoring area.
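The coverage model above can be sketched in a few lines of Python. This is a minimal illustration under the Boolean perception model, not the authors' implementation; the function names `monitoring_points` and `coverage_ratio` and the parameters `cells_x` and `cells_y` (the number of grid cells along each axis) are assumptions introduced here. Because every cell has the same area, the coverage ratio reduces to the fraction of monitoring points sensed by at least one node.

```python
import math

def monitoring_points(Y, Z, cells_x, cells_y):
    """Centres of a cells_x-by-cells_y grid over the rectangle [0, Y] x [0, Z]."""
    dx, dy = Y / cells_x, Z / cells_y
    return [((ix + 0.5) * dx, (iy + 0.5) * dy)
            for iy in range(cells_y) for ix in range(cells_x)]

def coverage_ratio(nodes, points, R):
    """Fraction of monitoring points within distance R of at least one node."""
    covered = 0
    for px, py in points:
        # Boolean model: a point counts once, however many nodes sense it.
        if any(math.hypot(nx - px, ny - py) <= R for nx, ny in nodes):
            covered += 1
    return covered / len(points)

# Hypothetical example: a 2 x 2 area split into four cells, one node.
points = monitoring_points(Y=2.0, Z=2.0, cells_x=2, cells_y=2)
print(coverage_ratio([(0.5, 0.5)], points, R=1.1))  # 3 of 4 centres in range -> 0.75
```

A finer grid gives a closer approximation of the covered area at the cost of more distance evaluations per fitness call.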

3.2. Coverage Optimization Model

To optimize the coverage performance of the WSN under dynamic deployment, this study takes the coverage ratio $C_r$ as the optimization objective and constructs the corresponding objective function:

$$\max f = C_r(O_i, J_j), \quad \text{s.t. } O_i, J_j \in G. \tag{8}$$

In Equation (8), $C_r$ denotes the coverage ratio of the WSN, which is determined by Equation (6). $O_i$ and $J_j$ represent the coordinates of the i-th sensor node and the j-th monitoring point in the monitoring area, respectively, and G is the monitoring area.

To solve this optimization problem, the present study introduces the GWO algorithm and uses the objective function $C_r$ as the fitness function of GWO. In the implementation, the monitoring area G is the search space, and the coordinates of all v sensor nodes are mapped to the dimensions of each gray wolf individual, so each individual has 2v dimensions: the first v dimensions hold the x-axis coordinates of the deployed nodes, and the last v dimensions hold their y-axis coordinates. GWO iteratively updates the population positions, and bound constraints on the search space keep the solutions feasible. The best individual obtained when the algorithm converges therefore corresponds to the node distribution scheme that maximizes coverage. Note that, as a metaheuristic, the proposed FMGWO does not guarantee finding the globally optimal placement of sensor nodes; it approximates optimal solutions to complex, NP-hard problems such as WSN coverage optimization. An ideal placement would cover the rectangle with minimal overlap and no gaps, but given the problem’s complexity, FMGWO provides near-optimal solutions, as validated through comparisons with other heuristics in the experiments.
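The encoding described above, with x coordinates in the first v dimensions and y coordinates in the last v, can be illustrated as follows. This is a sketch under the Boolean perception model; the helper names `decode`, `clip_to_area`, and `fitness` are hypothetical and do not come from the paper.

```python
import math

def decode(position, v):
    """Split a 2v-dimensional vector into v (x, y) node coordinates."""
    return list(zip(position[:v], position[v:2 * v]))

def clip_to_area(position, v, Y, Z):
    """Clamp x entries to [0, Y] and y entries to [0, Z] to keep a layout feasible."""
    xs = [min(max(x, 0.0), Y) for x in position[:v]]
    ys = [min(max(y, 0.0), Z) for y in position[v:2 * v]]
    return xs + ys

def fitness(position, v, points, R):
    """Coverage ratio of the layout encoded by `position` (to be maximised)."""
    nodes = decode(position, v)
    hit = sum(1 for px, py in points
              if any(math.hypot(nx - px, ny - py) <= R for nx, ny in nodes))
    return hit / len(points)

# Hypothetical example: two nodes, each sitting exactly on a monitoring point.
points = [(0.5, 0.5), (1.5, 1.5)]
wolf = [0.5, 1.5, 0.5, 1.5]          # x1, x2, y1, y2
print(decode(wolf, v=2))             # [(0.5, 0.5), (1.5, 1.5)]
print(fitness(wolf, 2, points, 0.1)) # 1.0
```

Keeping the x and y blocks contiguous means a single bound check per block is enough when the area is rectangular.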

3.3. Standard GWO

GWO is a metaheuristic optimization method inspired by the social behavior of gray wolves in nature. Its design simulates the hierarchical structure and hunting strategies of gray wolf packs. The algorithm divides the pack into four hierarchical roles: alpha (α), beta (β), delta (δ), and omega (ω), which represent leaders, sub-leaders, secondary decision makers, and ordinary members of the pack, respectively. The α, β, and δ wolves play the dominant role in the optimization process and guide the search direction, while the ω wolves adjust their behavior based on the position information of these dominant wolves.

The GWO algorithm is predicated on simulating the hunting behavior of gray wolves. This behavior can be divided into three phases: encircling the prey, tracking the prey, and executing the attack. The algorithm delineates the collaborative behavior of the wolf pack through a mathematical model and iteratively updates the positions of individuals step by step to approximate the global optimal solution. Within the search space, the position of each wolf individual denotes a potential solution. The following formula is employed to model hunting behavior in the GWO algorithm.
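Assuming the canonical GWO formulation of Mirjalili et al., the encircling model and its coefficients can be written as below. This is a reconstruction from the symbol definitions in the surrounding text, given without equation numbers because the numbering cannot be confirmed from the text alone.

```latex
\begin{aligned}
D &= \lvert C \cdot X_p - X_t \rvert, \\
X_{t+1} &= X_p - A \cdot D, \\
A &= 2a \cdot r_1 - a, \\
C &= 2 \cdot r_2, \\
a &= 2 - \frac{2t}{T}.
\end{aligned}
```

With this form, |A| > 1 pushes a wolf away from the prey (exploration), while |A| < 1 pulls it closer (exploitation), so the decay of a from 2 to 0 controls the transition between the two.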

In Equation (9), $X_{t+1}$ denotes the position of the next generation of wolves, $X_t$ denotes the position of the current generation, $X_p$ denotes the position of the prey, and A and D denote the coefficient vector and the distance, respectively.

In the formula, C is the perturbation parameter for correcting the prey position, which is determined via Equation (13). $r_1$ and $r_2$ are random numbers in (0, 1), and a is the convergence factor, which decreases linearly from 2 to 0 and is calculated through Equation (14). t denotes the current iteration number, and T denotes the total number of iterations.

In the GWO algorithm, the core of the leadership hierarchy is reflected in the role of wolves α, β, and δ in guiding the search direction of the entire wolf pack. Among them, wolf α, wolf β, and wolf δ represent the optimal solution, the second-best solution, and the third-best solution in the current iteration, respectively. During the iteration process, wolf ω dynamically adjusts its position based on the information provided by the three dominant wolves, and its trajectory is described by Equations (15) and (16). The following is the position update formula for the leadership level.
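Under the same assumption of the canonical GWO formulation, the leader-guided update can be sketched as follows, where X is the current position of the wolf being updated. The equations are a reconstruction from the symbol definitions in the text, not a verbatim copy of the source equations.

```latex
\begin{aligned}
D_\alpha &= \lvert C_1 \cdot X_\alpha - X \rvert, &
D_\beta &= \lvert C_2 \cdot X_\beta - X \rvert, &
D_\delta &= \lvert C_3 \cdot X_\delta - X \rvert, \\
X_1 &= X_\alpha - A_1 \cdot D_\alpha, &
X_2 &= X_\beta - A_2 \cdot D_\beta, &
X_3 &= X_\delta - A_3 \cdot D_\delta, \\
X_{t+1} &= \frac{X_1 + X_2 + X_3}{3}.
\end{aligned}
```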

In Equations (15) and (16), $D_\alpha$, $D_\beta$, and $D_\delta$ represent the distances between the given gray wolf and wolves α, β, and δ, respectively. $A_1$, $A_2$, and $A_3$ are the control vectors that determine the direction of motion of the individual gray wolf and are calculated using Equation (11), while $C_1$, $C_2$, and $C_3$ are the perturbation parameters that correct the positions of wolves α, β, and δ and are computed using Equation (13). $X_\alpha$, $X_\beta$, and $X_\delta$ are the position vectors of wolves α, β, and δ, and $X_1$, $X_2$, and $X_3$ are temporary positions.

During execution, the α, β, and δ wolves are responsible for locating the prey and moving toward it. At the same time, these three leading wolves direct the remaining wolves into position, so that the pack encircles and captures the prey through coordinated action; in optimization terms, the population converges toward the best solution found. The position update process of the wolf pack is governed by Equation (15). The time complexity of the standard GWO is O(N × D × T), where N is the population size, D is the problem dimension, and T is the number of iterations; the cost is dominated by the fitness evaluations and position updates in each iteration.
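One iteration of the standard GWO update can be sketched as below. The sketch assumes the canonical formulation and a maximization objective (as with the coverage ratio); the function name `gwo_step`, the scalar bounds `lower` and `upper`, and the per-dimension sampling of the random coefficients are choices made for this illustration, not details confirmed by the paper.

```python
import random

def gwo_step(wolves, fitness, t, T, lower, upper, rng=random):
    """One canonical GWO iteration for a maximisation problem.

    wolves: list of position vectors (lists of floats); returns the new positions.
    """
    a = 2.0 - 2.0 * t / T                       # linear convergence factor, 2 -> 0
    ranked = sorted(wolves, key=fitness, reverse=True)
    leaders = ranked[:3]                        # alpha, beta, delta
    new_wolves = []
    for x in wolves:
        new_x = []
        for dim, x_d in enumerate(x):
            candidates = []
            for leader in leaders:
                A = 2.0 * a * rng.random() - a  # step coefficient in [-a, a]
                C = 2.0 * rng.random()          # perturbation of the leader position
                D = abs(C * leader[dim] - x_d)  # distance to the perturbed leader
                candidates.append(leader[dim] - A * D)
            value = sum(candidates) / len(candidates)    # average of X1, X2, X3
            new_x.append(min(max(value, lower), upper))  # keep inside the bounds
        new_wolves.append(new_x)
    return new_wolves

# Hypothetical example: five wolves in four dimensions, toy objective.
random.seed(0)
pack = [[random.uniform(0.0, 10.0) for _ in range(4)] for _ in range(5)]
pack = gwo_step(pack, lambda w: -sum(c * c for c in w), t=0, T=10,
                lower=0.0, upper=10.0)
```

Each call updates N wolves in D dimensions, which matches the O(N × D × T) cost over T iterations when the fitness evaluation is counted separately.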

4. FMGWO

The standard GWO algorithm demonstrates considerable application potential in a variety of optimization problems, particularly in addressing the WSN coverage problem, exhibiting certain advantages over other swarm intelligence algorithms. However, its intrinsic mechanism still exhibits limitations in complex, high-dimensional, or multi-peak optimization scenarios. Specifically, GWO’s random initialization results in uneven node distributions, producing coverage gaps in WSNs. Its linear convergence factor lacks adaptability, hindering the balance between global exploration of diverse node layouts and local exploitation to minimize overlaps. The reliance on current alpha wolf information ignores historical high-quality node configurations, reducing robustness. The alpha wolf selection mechanism risks diversity loss, leading to convergence stagnation in multi-peak WSN landscapes. Additionally, GWO’s simplistic position update struggles to navigate the complex, multi-peak search space, often trapping the algorithm in local optima. These shortcomings collectively impair standard GWO’s performance in WSN coverage optimization. To address these limitations, this paper proposes an FMGWO that incorporates multiple strategies to effectively overcome the aforementioned shortcomings. The specific improvements are described as follows.

4.1. Electrostatic Field Initialization

Random node placement in WSNs tends to leave coverage holes, so an electrostatic field model is used to generate a uniform initial population and improve coverage efficiency. The standard GWO algorithm initializes its population randomly. This approach is straightforward but may produce an imbalanced distribution of initial solutions in high-dimensional or intricate search spaces. The resulting lack of initial diversity weakens global exploration, as nodes cluster in suboptimal regions and the coverage rate drops. A more principled initialization method is therefore needed to improve the diversity and spread of the population.

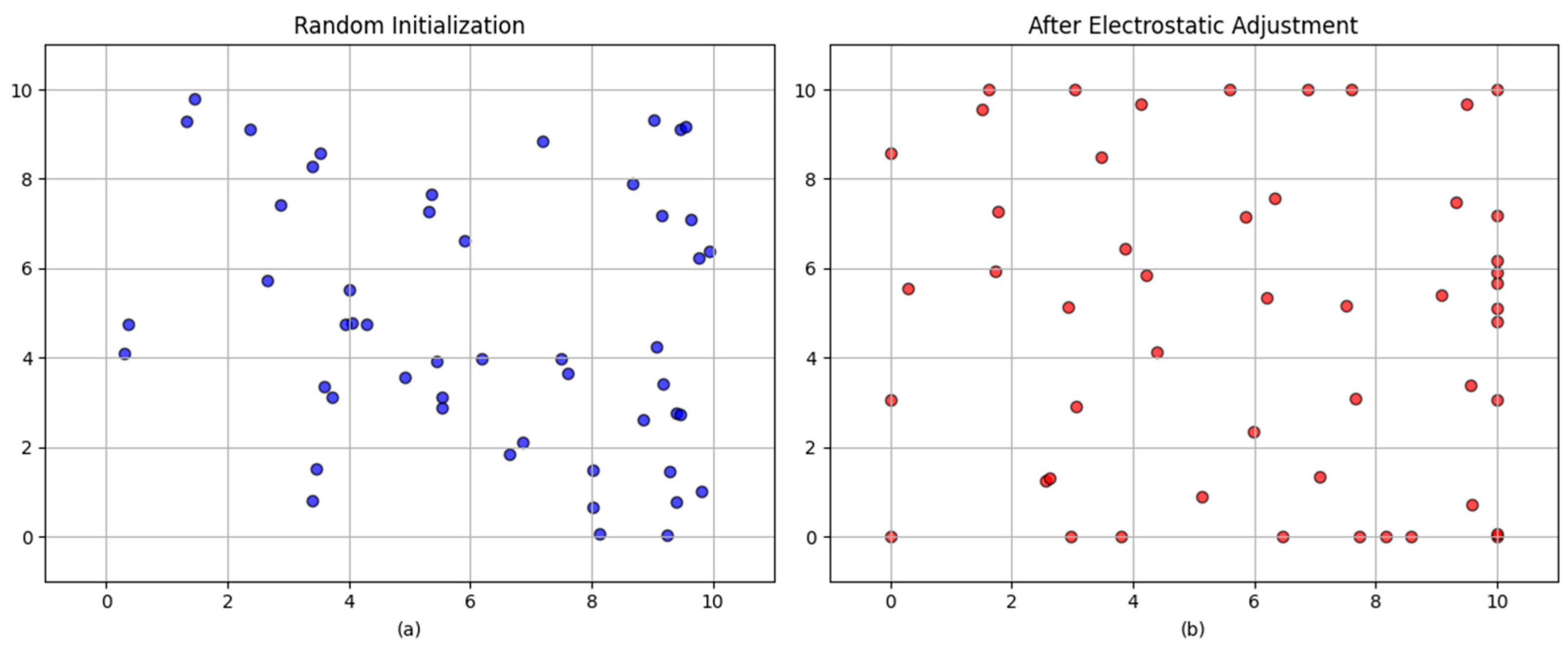

To address the imbalanced distribution of initial populations, this paper proposes an electrostatic field initialization strategy inspired by the theory of electrostatic fields in physics. When the number of charged particles is small, they tend to become uniformly distributed under their mutual repulsive forces. By emulating the electrostatic force between particles, the initial population can be dispersed naturally across the search space, providing a better starting point for subsequent optimization. Furthermore, compared with random initialization, electrostatic field initialization yields a more stable initial distribution. Figure 1 shows a schematic of the population after electrostatic field initialization (population size 50).

Electrostatic field initialization aims to produce an initial population that is more uniformly distributed across the search space and less likely to cluster prematurely. Because of the repulsion-like interactions during initialization, candidate solutions tend to avoid high-density clusters, which improves early exploration and provides better coverage of the solution space. The practical effect on coverage is that more diverse initial placements yield a higher probability of finding well-distributed sensor configurations that cover sparse regions of the area. The underlying principle is to adjust the positions of the population dynamically according to the distances and electrostatic forces between individuals. First, the initial population is generated from a uniform distribution within the lower and upper bounds of the search space, [L, U], with shape (N, m), where N denotes the population size and m denotes the problem dimension. For each pair of individuals $P_i$ and $P_j$ in the population, the Euclidean distance d between them is computed. To avoid division by zero in the subsequent force calculation, a small constant of $10^{-6}$ is added to the distance, which keeps the computation stable even when two individuals are extremely close. The formula is as follows.

Subsequently, in accordance with the inverse-square law of electrostatic force, the magnitude of the force F is calculated. The force is inversely proportional to the square of the distance between two individuals; therefore, as the distance decreases, the repulsive force increases and pushes the individuals apart. In addition, the direction vector of the force, σ, which is the unit vector pointing from $P_i$ to $P_j$, is calculated so that the force acts along the line connecting the two individuals. The formulas are as follows.

In Equations (17) and (18), F denotes the magnitude of the electrostatic force, and σ denotes the direction vector of the force.

The individual positions are updated through the application of electrostatic forces, and a regulation factor, designated as k, is incorporated to govern the step size.

In Equation (20), $P_i$ and $P_j$ represent the positions of the i-th and j-th individuals in the population, respectively. The two individuals move in opposite directions, which simulates the repulsion effect. The parameter k is the electrostatic force regulation coefficient, which balances the magnitude of movement against stability.

Finally, the updated position is clipped so that it does not leave the search space.

In Equation (21), L denotes the lower bound of the search space and U denotes the upper bound.

This strategy is particularly effective for WSN coverage optimization, as it ensures a uniform initial node distribution, reducing coverage gaps in large-scale monitoring areas.
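The procedure described in this subsection (uniform sampling, pairwise distance with a small constant, inverse-square repulsion along the connecting line, a step scaled by k, and clipping to the bounds) can be sketched as follows. This is an illustration only: the unit force constant, the default value of `k`, the number of repulsion passes `rounds`, and the function name `electrostatic_init` are assumptions, since the text does not specify them.

```python
import math
import random

def electrostatic_init(N, m, lower, upper, k=0.1, rounds=10, eps=1e-6, rng=random):
    """Spread N points in [lower, upper]^m by pairwise inverse-square repulsion."""
    pop = [[rng.uniform(lower, upper) for _ in range(m)] for _ in range(N)]
    for _ in range(rounds):
        for i in range(N):
            for j in range(i + 1, N):
                diff = [pj - pi for pi, pj in zip(pop[i], pop[j])]
                d = math.sqrt(sum(c * c for c in diff)) + eps  # avoid division by zero
                F = 1.0 / d ** 2                               # inverse-square magnitude
                sigma = [c / d for c in diff]                  # direction from P_i to P_j
                # Repulsion: P_i steps away from P_j, P_j steps away from P_i,
                # and both are clipped back into the search space.
                pop[i] = [min(max(p - k * F * s, lower), upper)
                          for p, s in zip(pop[i], sigma)]
                pop[j] = [min(max(p + k * F * s, lower), upper)
                          for p, s in zip(pop[j], sigma)]
    return pop

# Hypothetical example: 50 individuals in a 2-D search space [0, 50]^2.
random.seed(1)
population = electrostatic_init(N=50, m=2, lower=0.0, upper=50.0)
```

The pairwise loop costs O(N² × m) per pass, which is a one-off cost paid before the main optimization loop starts.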

4.2. Dynamic Parameter Adjustment

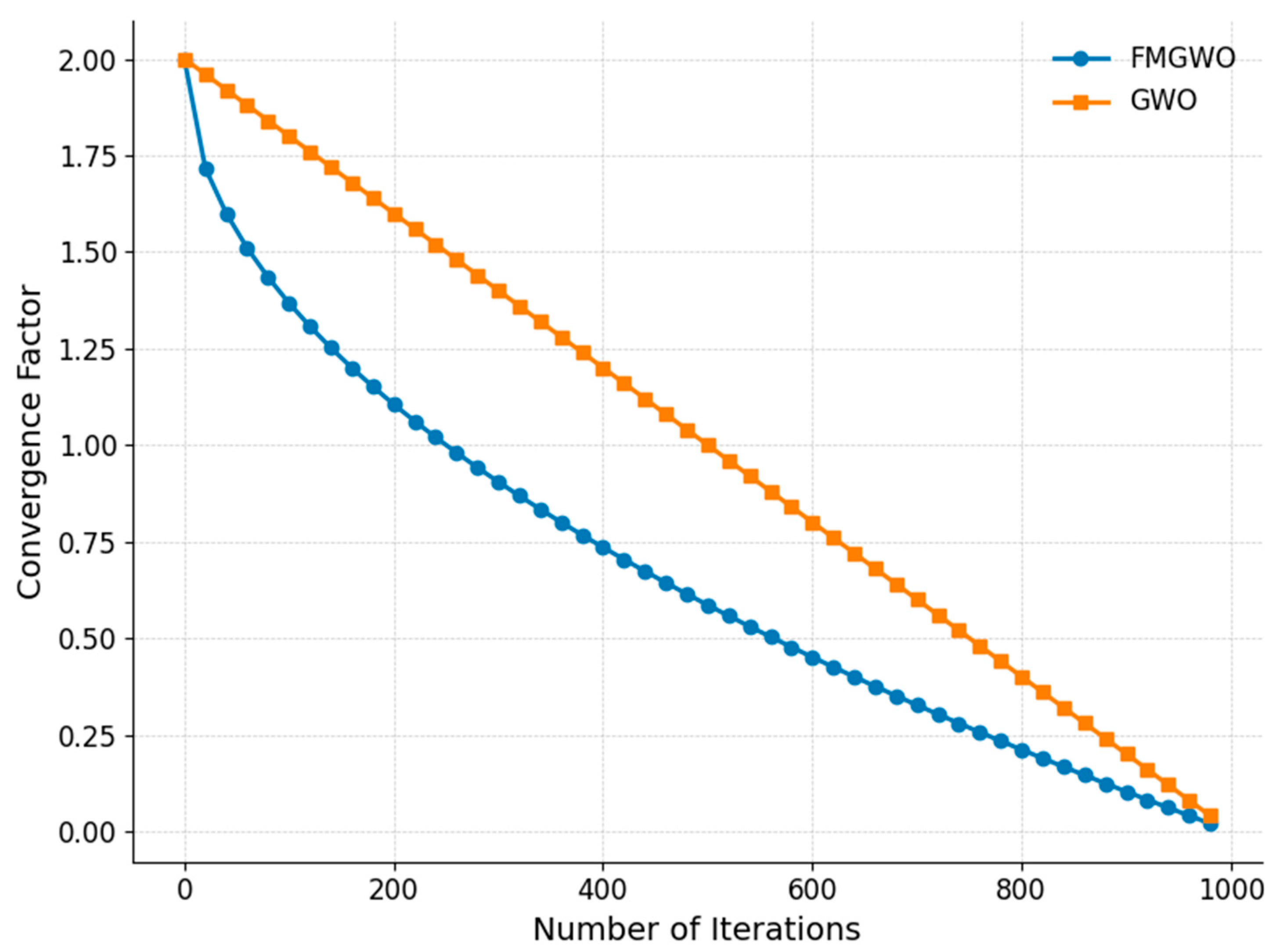

To accommodate the constrained computational and energy resources of WSNs, this study adopts a balanced optimization strategy that integrates a nonlinear convergence factor with a differential evolution scaling parameter. The conventional GWO employs a convergence factor (a) that decreases linearly from 2 to 0 over the iterations. Although simple, this approach adapts poorly to the complex and multimodal nature of WSN coverage optimization. A linear decay imposes a uniform reduction in search step size across all iterations, often resulting in excessive exploration in the early stages and insufficient exploitation toward the end of the search. This rigidity impairs the algorithm’s ability to adjust its search behavior to the problem landscape, adversely affecting convergence speed, precision, and the balance between exploration and exploitation [33].

In many biological systems, including the cooperative hunting of gray wolves, behavioral adjustments naturally exhibit nonlinear dynamics, where hunting speed, pursuit radius, and coordination strategies evolve according to environmental stimuli. Motivated by this observation, a nonlinear convergence factor is introduced to modulate the search radius more adaptively throughout the optimization process. At the early stage of iterations, the proposed nonlinear curve decreases more rapidly than its linear counterpart, enabling a swift contraction of the search range to intensify candidate solution refinement and accelerate convergence in promising regions. In the latter stage, the decay becomes gentler, preserving a certain degree of spatial exploration to reduce the probability of premature convergence to a local optimum. This nonlinear modulation effectively maintains a dynamic balance between intensification and diversification, thereby enhancing overall optimization accuracy, adaptability, and robustness in the presence of high-dimensional and irregular WSN topologies.

From a theoretical standpoint, this adjustment alters the search dynamics by providing a variable exploration radius proportional to both iteration progress and the curvature of the nonlinear decay function. This facilitates a more problem-aware transition from exploration to exploitation, which is particularly beneficial for multimodal landscapes with irregular objective value distributions. Comparative results against the standard GWO (see Figure 2) demonstrate that the proposed nonlinear convergence factor yields a more desirable trade-off profile between the convergence rate and the solution quality. The nonlinear convergence factor (a) is formulated as follows.

In Equation (22), “a” denotes the convergence factor, “t” indicates the number of current iterations, and “T” signifies the maximum number of iterations.

Furthermore, the differential evolution scaling factor was recalibrated in this study. The magnitude of weighting in the differential evolution variant is controlled, thereby achieving a balance between global exploration and local exploitation.

In Equation (23), the differential evolutionary scaling factor is denoted as .

The mathematically nonlinear nature of the square root function enables the algorithm to adaptively adjust the search behavior. This adaptability renders the algorithm more flexible and suitable for complex optimization scenarios compared to linear decay.

By adaptively balancing exploration and exploitation, this approach addresses the dynamic and resource-constrained nature of WSNs, enabling efficient node placement.
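To illustrate the qualitative shape described in this subsection, the sketch below compares the linear decay of the standard GWO with a square-root decay. Equation (22) is not reproduced here, so `nonlinear_a` is a hypothetical stand-in chosen only because it falls faster than the linear schedule early on and flattens later; it should not be read as the paper's exact formula.

```python
import math

def linear_a(t, T):
    """Convergence factor of the standard GWO: linear decay from 2 to 0."""
    return 2.0 * (1.0 - t / T)

def nonlinear_a(t, T):
    """Hypothetical square-root decay from 2 to 0 (assumed form).

    Drops quickly in early iterations and more gently in later ones.
    """
    return 2.0 * (1.0 - math.sqrt(t / T))

T = 500
for t in (0, 125, 250, 500):
    print(t, linear_a(t, T), round(nonlinear_a(t, T), 4))
```

Under this assumed form, a quarter of the way through the run the nonlinear factor has already fallen to 1.0 while the linear factor is still 1.5, so the search radius contracts sooner.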

4.3. Elder Council Mechanism

The standard GWO utilizes only the head wolf positions from the current iteration to guide the population update, with the alpha wolf serving as the principal leader in determining the search direction. However, high-quality solutions from earlier iterations, such as previous alpha positions with superior objective function values, are not preserved once replaced. This omission means that, if the current alpha is suboptimal, the algorithm is deprived of the ability to revert to historically superior solutions. As a result, the search process becomes more susceptible to premature convergence, potential quality degradation, and reduced robustness in WSN coverage optimization. In optimization theory, this represents a lack of temporal elitism retention, a concept well recognized in memory-based metaheuristics, where preservation of elite solutions can enhance both convergence stability and solution quality.

To address the loss of historical information and enhance the stability of the algorithm, the Council of Elders mechanism is proposed. The strategy draws inspiration from the elder system in human societies, wherein experienced elders are retained within the group to impart wisdom and guidance. In the context of wolf packs, this can be viewed as analogous to the experience of former alpha wolves, which serves as a reference point for the pack. By periodically storing historical head wolf positions, the Council of Elders provides a pool of candidate solutions for operations such as head wolf rotation, thereby avoiding the permanent loss of high-quality solutions.

The Council of Elders mechanism is implemented by periodically recording head wolf positions and limiting storage capacity. First, a storage set, initially empty, is created for each of alpha, beta, and delta to record historical positions. In each iteration, the algorithm checks whether the current iteration number meets the update condition (i.e., every three generations). If the condition is met, the current positions of alpha, beta, and delta are appended to the corresponding storage sets.

In Equation (24), the variables Ea, Eb, and Ed represent a set of historical alpha, beta, and delta positions, respectively, which are stored in the Council of Elders. Initially, this set is empty. The variables A, B, and D correspond to the position vectors of the current alpha, beta, and delta, respectively. The variable t denotes the current iteration count.

In order to circumvent the accumulation of excessive historical data, the quantity of head wolves retained is constrained to a maximum of three per category. In the event that the limit is exceeded subsequent to the addition of new locations, the three most recent locations are retained, while the earliest records are removed, in order to ensure the currency of historical information.

In Equation (25), x is the category identifier for elders, which takes values in the range of {α, β, δ} and corresponds to alpha, beta, and delta, respectively. |Ex| denotes the number of elements in the set Ex. ei denotes the ith element in the set Ex, sorted in the order of additions. m denotes the problem dimension.

The primary function of the Council of Elders is to supply historical head wolf positions to the head wolf rotation mechanism as part of the candidate solution set. The stored positions are not directly involved in the regular position updates.

This mechanism enhances the stability of WSN coverage optimization by preserving high-quality node layouts, preventing the loss of effective configurations in complex scenarios.
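The archive logic of this subsection (record every three generations, keep at most the three most recent positions per category) can be sketched with bounded queues. The class name `ElderCouncil`, its method names, and the choice of `t % period == 0` as the update condition are assumptions made for illustration.

```python
from collections import deque
import numpy as np

class ElderCouncil:
    """Bounded archive of historical alpha, beta, and delta positions."""

    def __init__(self, capacity=3, period=3):
        self.period = period
        # deque(maxlen=...) drops the oldest record automatically when full
        self.archive = {name: deque(maxlen=capacity)
                        for name in ("alpha", "beta", "delta")}

    def update(self, t, alpha, beta, delta):
        """Store copies of the current leaders every `period` iterations."""
        if t % self.period != 0:   # assumed form of the update condition
            return
        for name, pos in (("alpha", alpha), ("beta", beta), ("delta", delta)):
            self.archive[name].append(np.array(pos, dtype=float))

    def candidates(self):
        """All stored positions, for use by the rotation mechanism."""
        return [p for records in self.archive.values() for p in records]

    def clear(self):
        """Empty the council, e.g. after a rotation."""
        for records in self.archive.values():
            records.clear()
```

Storing copies rather than references matters here, because leader position arrays are typically overwritten in place during later iterations.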

4.4. Alpha Wolf Tenure Inspection and Rotation

To mitigate premature convergence and preserve population diversity, this study introduces a tenure check and rotation mechanism for leader wolves in the GWO. In the standard GWO, the alpha, beta, and delta wolves are selected purely based on the instantaneous fitness ranking of the current population. While this procedure is computationally efficient, it cannot detect temporal stagnation; if the alpha wolf’s position remains unchanged or exhibits negligible fitness improvement across successive iterations, the search direction becomes overly dependent on a single, potentially suboptimal reference. This overreliance accelerates population clustering, reduces positional variance, and shrinks the effective exploration radius, often causing the algorithm to be trapped in a local optimum—a limitation particularly pronounced in WSN coverage optimization. Therefore, a strategy is required to detect and resolve this stagnant state.

This strategy draws inspiration from the phenomenon of leadership turnover observed among wolves in their natural habitat. In a wolf pack, when the incumbent leader is unable to effectively guide the pack forward due to advanced age or diminished capabilities, a new, strong individual typically assumes the leadership role. This turnover mechanism is instrumental in ensuring the continuity of the group’s adaptation to its environment. In a similar vein, the diversity of the algorithm and the capacity to circumvent local optima can be augmented by monitoring the performance of the alpha wolf and opting for a new alpha wolf from historical information and random perturbations when the need arises. The mechanism for monitoring and regulating the tenure of the alpha wolf is implemented through the use of counters and triggers. This mechanism is designed to initiate a rotation when a period of stagnation reaches a predetermined threshold.

First, a counter is initialized to track the number of consecutive iterations in which the alpha fitness has not improved. In each iteration, the fitness of the current alpha is compared with that of the previous generation’s alpha. If no improvement is detected, the counter is incremented; conversely, if an improvement is detected, the counter is reset to zero. This process quantifies the degree of stagnation of the alpha wolf.

As indicated in Equations (26) and (27), nt is the counter value at the t-th iteration, denoting the number of consecutive unimproved generations of alpha scores, with an initial value of 0. signifies the objective function score of alpha at the t-th iteration, while denotes the objective function score of alpha in the previous generation, with an initial value of ∞.

The rotation mechanism is activated once the counter reaches a preset threshold, which is set to five generations. The set of candidate solutions is to be constructed, including three types of sources: first, historical alpha, beta, and delta positions stored in the Council of Elders, which represent past quality solutions; second, alpha, beta, and delta positions of the current iteration, which retains the existing optimal solutions; and third, randomly perturbed solutions generated based on the current alpha positions, which use the normal distribution to introduce randomness to enhance the diversity.

In Equation (28), C is the set of candidate solutions containing the historical head wolf, the current head wolf, and the randomly perturbed solutions. Ea, Eb, and Ed are the set of historical alpha, beta, and delta positions stored in the Council of Elders. A, B, and D are the position vectors of the current alpha, beta, and delta. is a normally distributed stochastic vector with a mean of 0, a standard deviation of convergence factor of α, and a dimension of m.

The objective function value is computed for each solution in the set of candidate solutions, and the solution with the best fitness is selected as the new alpha.

As illustrated in Equations (29)–(32), S denotes the set of objective function scores for the candidate solutions. An element, p, in the set of candidate solutions, C, denotes a specific candidate position vector. The index, i, indicates the candidate solution with the optimal score. The position vector of the alpha, A, and the objective function score of the alpha are also defined.

Once the rotation is complete, we reset the counters and empty the Council of Elders to ensure that the history is updated from the new alpha and to avoid old data interfering with subsequent optimizations.

This strategy mitigates the risk of local optima in WSN coverage problems, ensuring diverse node placements that maximize monitoring efficiency.
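A minimal sketch of the tenure check and rotation is given below. It assumes a minimization objective (consistent with the initial previous score of ∞) and a hypothetical number of perturbed candidates, `n_perturbed`, since the paper's Equations (26)–(32) and the exact candidate count are not reproduced in this section. The function names are illustrative.

```python
import numpy as np

def update_counter(counter, current_score, previous_score):
    """Reset on improvement (minimization assumed), otherwise count a stagnant generation."""
    return 0 if current_score < previous_score else counter + 1

def rotate_alpha(objective, elders, leaders, sigma, rng, n_perturbed=3):
    """Pick the best candidate among elders, current leaders, and perturbed alphas.

    leaders: [alpha, beta, delta] position vectors of the current iteration.
    sigma:   standard deviation of the normal perturbation (assumed input).
    """
    alpha = leaders[0]
    perturbed = [alpha + rng.normal(0.0, sigma, size=alpha.shape)
                 for _ in range(n_perturbed)]
    candidates = list(elders) + list(leaders) + perturbed
    scores = [objective(p) for p in candidates]
    best = int(np.argmin(scores))          # lowest score wins
    return candidates[best], scores[best]

# Rotation is triggered once the counter reaches the threshold of five.
THRESHOLD = 5
```

In a full loop, a rotation would be followed by resetting the counter to zero and clearing the archive, as described above.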

4.5. Hybrid Mutation Strategy

The position update of the standard GWO algorithm relies exclusively on the guidance of the head wolves (alpha, beta, delta) to compute the new position via a linear combination. This single strategy has limitations when facing multi-peak or high-dimensional optimization problems. Due to the lack of additional diversity generation mechanisms, the population tends to converge to the local optimum prematurely, leading to an insufficient search capability. Furthermore, the standard GWO’s simplistic position update mechanism struggles to navigate the multi-peak search space of WSN coverage optimization, often trapping the algorithm in local optima. Consequently, a more effective mutation strategy is required to promote population diversity and enhance global search capability.

In order to enhance the global search capability and the ability to escape local optima, this paper introduces a hybrid mutation strategy that combines population difference learning via differential evolution [34] and random perturbation through the Cauchy distribution [35]. The exploration and exploitation capability of the algorithm is enhanced through multi-level mutation.

The hybrid mutation strategy is realized via multi-stage position updating, which gradually enhances the diversity and adaptability of the population.

First, the position of each individual is calculated based on the positions of the head wolves. The distances to alpha, beta, and delta are calculated independently for each individual, and the step size is adjusted via random coefficients. The average of the three resulting positions is then taken as the preliminary solution. This stage preserves the leader-guided search behavior of GWO.

In Equations (35)–(37), Δα, Δβ, and Δδ represent the distance vectors between the gray wolf individuals and wolves α, β, and δ, respectively. C1, C2, and C3 are the ingestion parameters that correct the positions of wolves α, β, and δ. is the current position vector of the i-th individual. H1, H2, and H3 are the parameter control vectors that determine the direction of movement for the gray wolf individuals. Pα, Pβ, and Pδ denote the position vectors of wolf α, wolf β, and wolf δ, respectively. X1, X2, and X3 are the temporary position vectors. is the initial new position vector of the i-th individual in the t-th iteration.

Based on the preliminary position vector, the differential evolution mechanism is introduced in the t-th iteration. Two different individuals are randomly selected from the population, and the difference in their position vectors is calculated, weighted by a dynamic scaling factor, and superimposed on the preliminary position vector to form a variant position vector. This step utilizes inter-population differences to enhance diversity.

As indicated by Equations (38) and (39), denotes the variant position vector of the i-th individual in the t-th iteration, while signifies the differential evolutionary scaling factor in the t-th iteration, which is determined by Equation (23). The position vectors of two randomly selected individuals in the population in the t-th iteration are represented by and , respectively. The random indexes k1 and k2 are defined as such.

In the t-th iteration, a random perturbation is applied to the variant position vector. This perturbation is based on the Cauchy distribution, and it is applied with a certain probability. The long-tailed nature of the Cauchy distribution generates larger jump steps, which facilitate the escape from local optima. The magnitude of the perturbation is associated with the range of the search space, thereby ensuring that the stochasticity remains moderate.

In Equations (40) and (41), is defined as the Cauchy perturbation vector in the t-th iteration. μ is a uniformly distributed random number in the range [0, 1], which is employed to regulate the Cauchy perturbation probability. is a standard Cauchy-distributed random vector with a location parameter of 0, a scale parameter of 1, and a dimensionality of m. L denotes the upper bound of the search space, and U denotes the lower bound of the search space.

Prior to the conclusion of the t-th iteration, boundary constraints are imposed on the variant position vector. The fitness of the variant position vector is then compared with that of the preliminary position vector. The superior one is selected as the final position vector for that individual in this iteration. This process is designed to ensure that the quality of the individual is improved or maintained at each iteration.

As indicated by Equations (42) and (43), denotes the final updated position vector of the i-th individual in the (t + 1)-th iteration. The objective function, , is employed to assess the fitness of the solution.

The hybrid mutation strategy is tailored to the multi-peak nature of WSN coverage optimization, enabling the algorithm to explore diverse node arrangements and achieve higher coverage rates.
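The multi-stage update of this subsection can be sketched as below. The leader-guided stage uses the standard GWO update; the differential and Cauchy stages follow the verbal description above. Equations (35)–(43) are not reproduced here, so the perturbation probability `p_cauchy`, the range multiplier `cauchy_scale`, the clipping of the preliminary position, and all function names are assumptions. Minimization is assumed, and the bounds are passed as `lower` and `upper`.

```python
import numpy as np

def gwo_preliminary(x, leaders, a, rng):
    """Average of the three leader-guided moves (standard GWO update)."""
    moves = []
    for leader in leaders:
        A = 2.0 * a * rng.random(x.shape) - a   # step-control vector
        C = 2.0 * rng.random(x.shape)           # leader-weighting vector
        D = np.abs(C * leader - x)              # distance to this leader
        moves.append(leader - A * D)
    return np.mean(moves, axis=0)

def hybrid_mutation(objective, x, population, leaders, a, scale_f,
                    lower, upper, rng, p_cauchy=0.5, cauchy_scale=0.01):
    """One individual's update: GWO move, DE difference, Cauchy jump, greedy pick."""
    # Assumption: the preliminary position is also kept inside the bounds.
    prelim = np.clip(gwo_preliminary(x, leaders, a, rng), lower, upper)
    # Differential term from two distinct random individuals.
    k1, k2 = rng.choice(len(population), size=2, replace=False)
    variant = prelim + scale_f * (population[k1] - population[k2])
    # Heavy-tailed jump, applied with probability p_cauchy and scaled by the range.
    if rng.random() < p_cauchy:
        variant = variant + cauchy_scale * (upper - lower) * rng.standard_cauchy(x.shape)
    variant = np.clip(variant, lower, upper)
    # Greedy selection keeps whichever position has the better (lower) score.
    return variant if objective(variant) < objective(prelim) else prelim
```

The greedy selection at the end is what guarantees that the mutation stages never leave an individual worse off than its preliminary GWO position.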

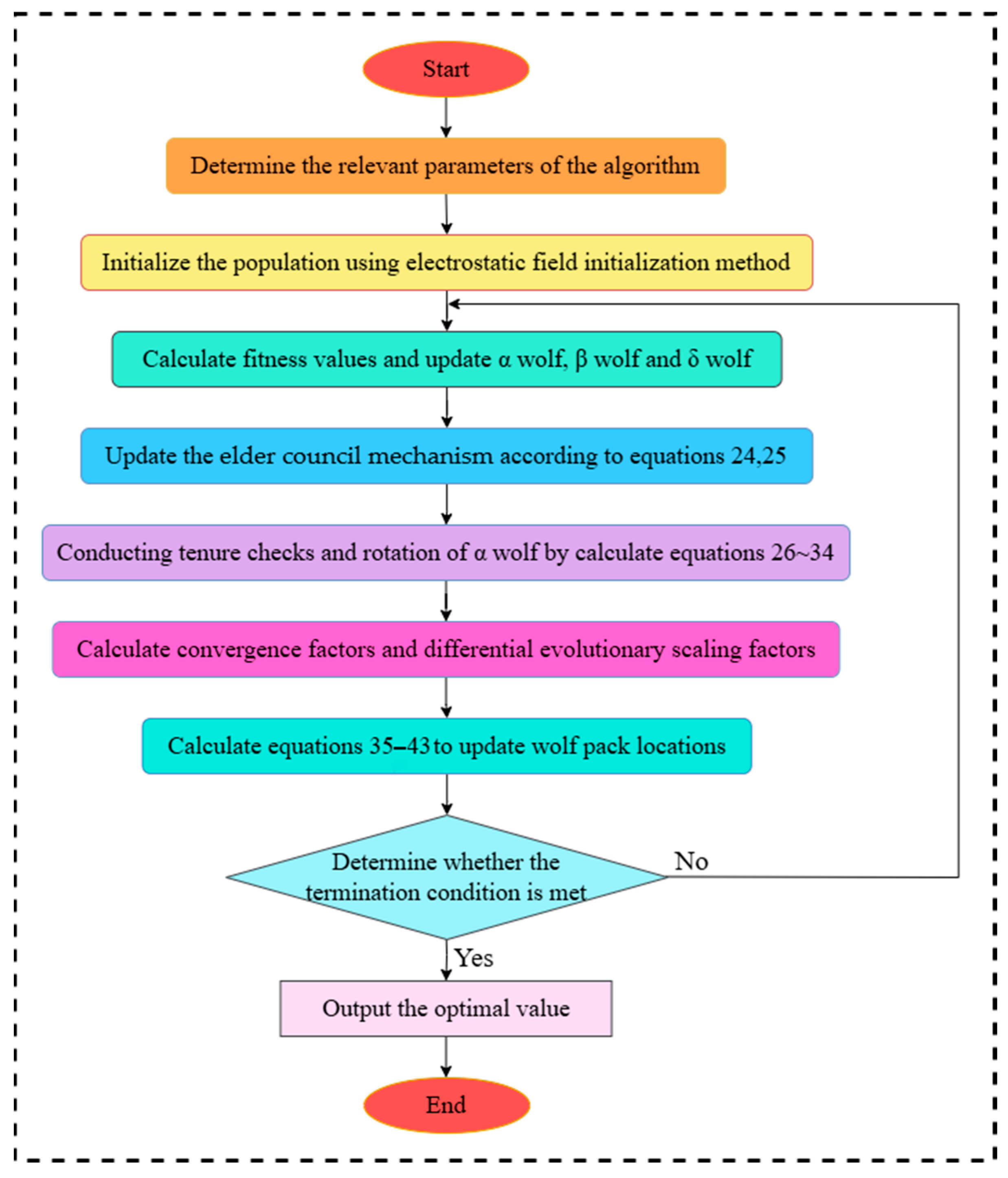

4.6. Algorithm Steps

Based on the above improvements, the implementation of the algorithm proposed in this paper can be divided into the following eight steps, as illustrated in Figure 3.

Step 1: determine the relevant parameters of the algorithm, including the population size, N, the maximum number of iterations T, and the search space.

Step 2: initialize the wolf pack and generate the initial position of the wolf pack in the search space using the electrostatic field initialization method.

Step 3: compute the fitness value and update wolf α, wolf β, and wolf δ.

Step 4: update the Council of Elders according to Equations (24) and (25).

Step 5: monitor the fitness of wolf α and perform tenure checking and the rotation of wolf α according to Equations (26)–(34).

Step 6: calculate the convergence factor and the differential evolutionary scaling factor.

Step 7: update the position of each individual using the hybrid mutation strategy according to Equations (35)–(43).

Step 8: determine whether the algorithm has reached the optimal solution or the maximum number of iterations. If so, terminate the algorithm and output the optimal solution. Otherwise, return to Step 3.

5. Experimental Design and Analysis

To verify the effectiveness of the algorithm improvements and their application performance in WSN coverage optimization, ablation experiments and application simulations were conducted. The experiments were run on an Intel(R) Core i9-12900H CPU at 2.90 GHz with 16 GB of RAM, under the 64-bit Windows 11 operating system, using Python 3.12.

5.1. Design of Ablation Experiments

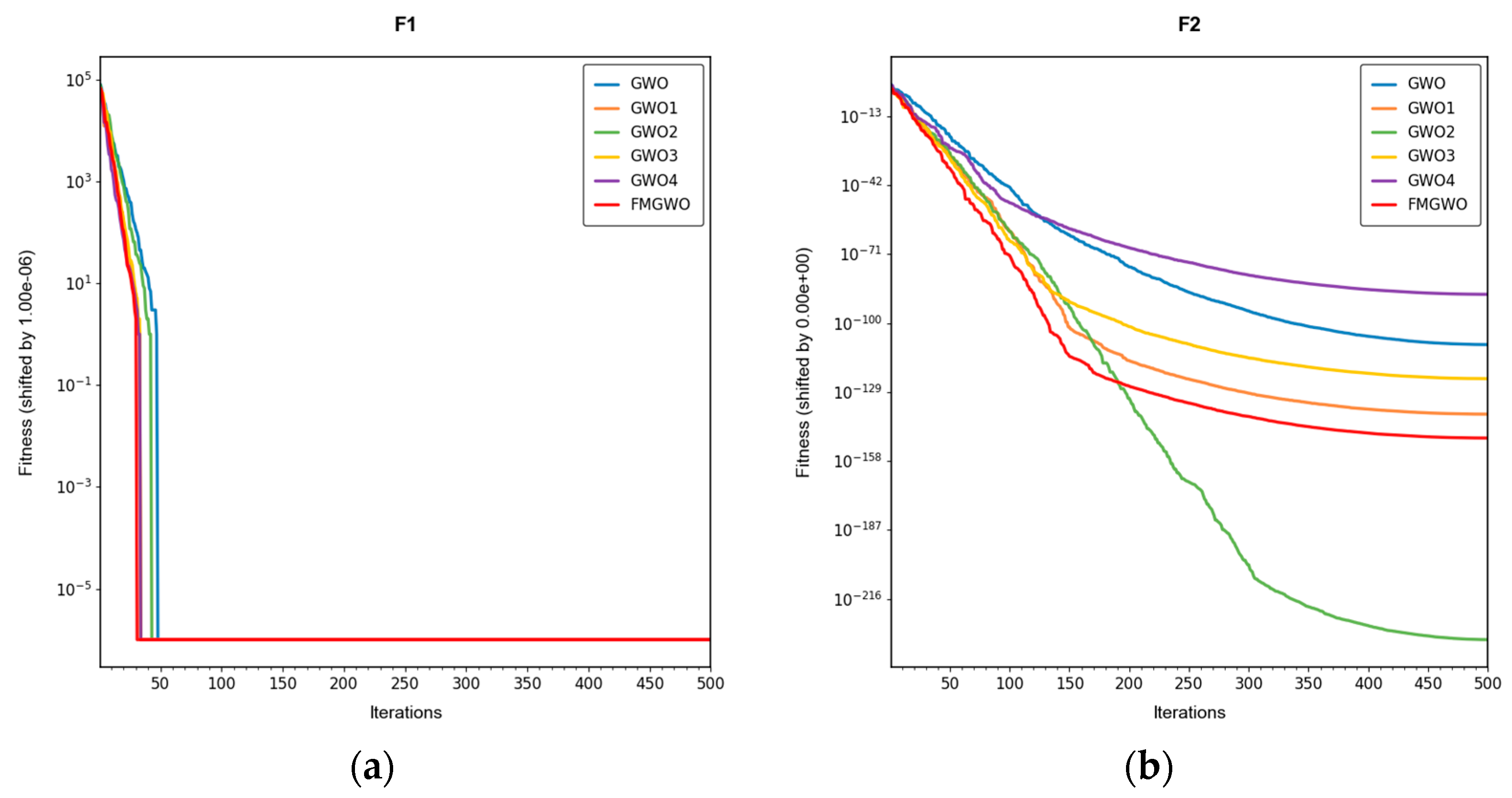

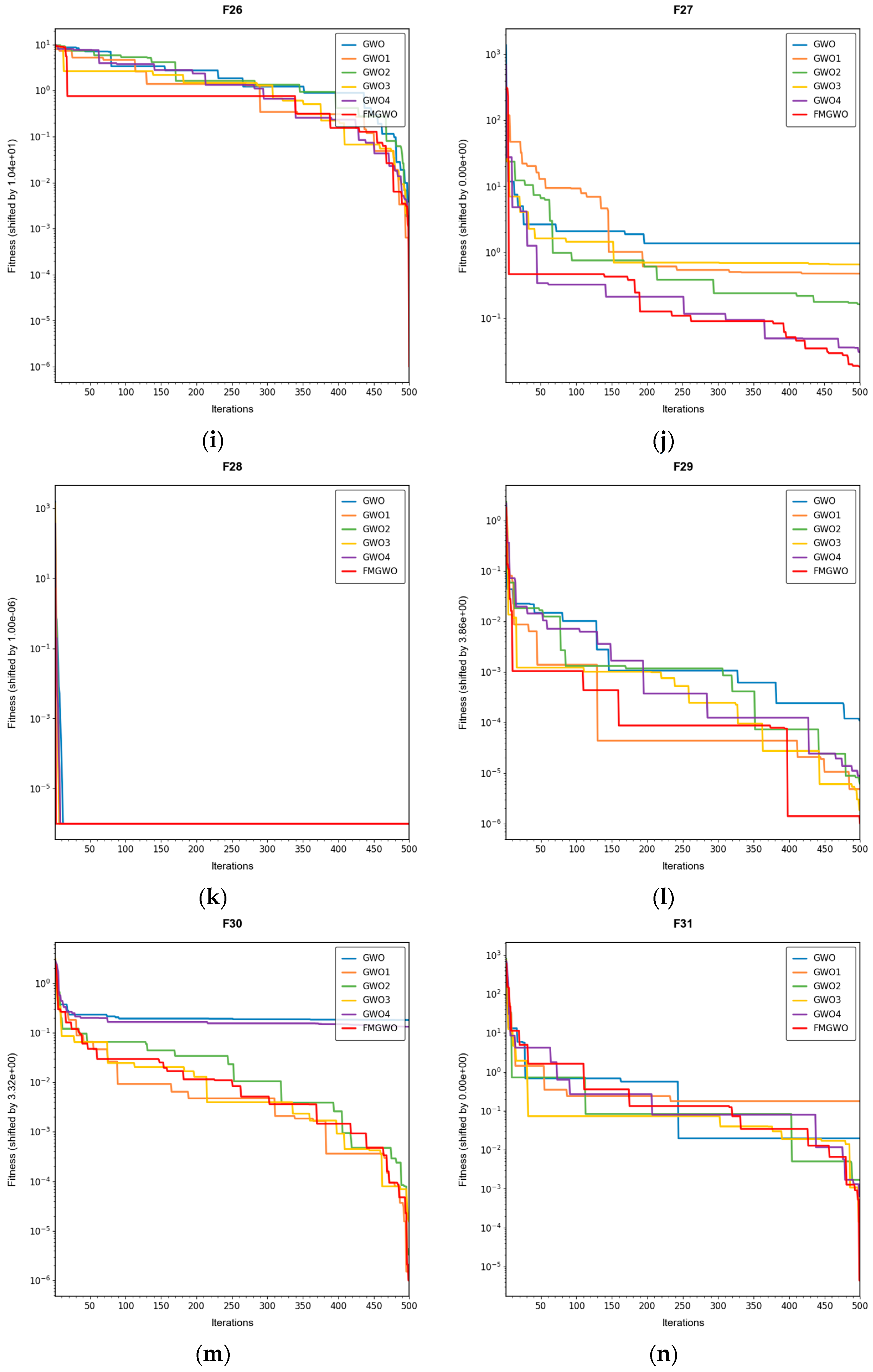

To verify the effectiveness of the improved strategy, ablation experiments were performed using the improved FMGWO algorithm. The experimental comparison algorithm is shown in

Table 1. A total of 33 benchmark functions were selected as test functions for the experiment in

Table 2,

Table 3 and

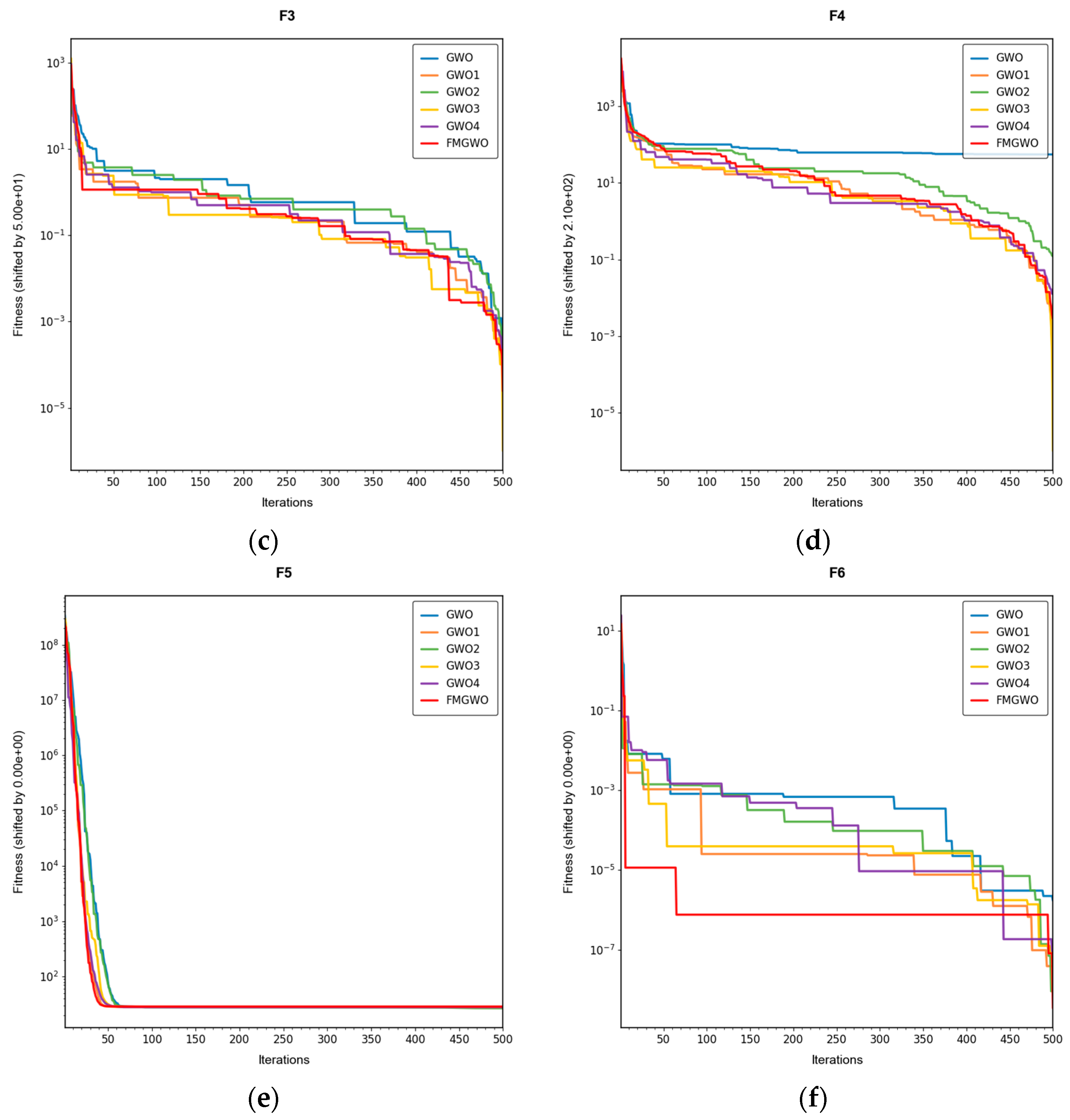

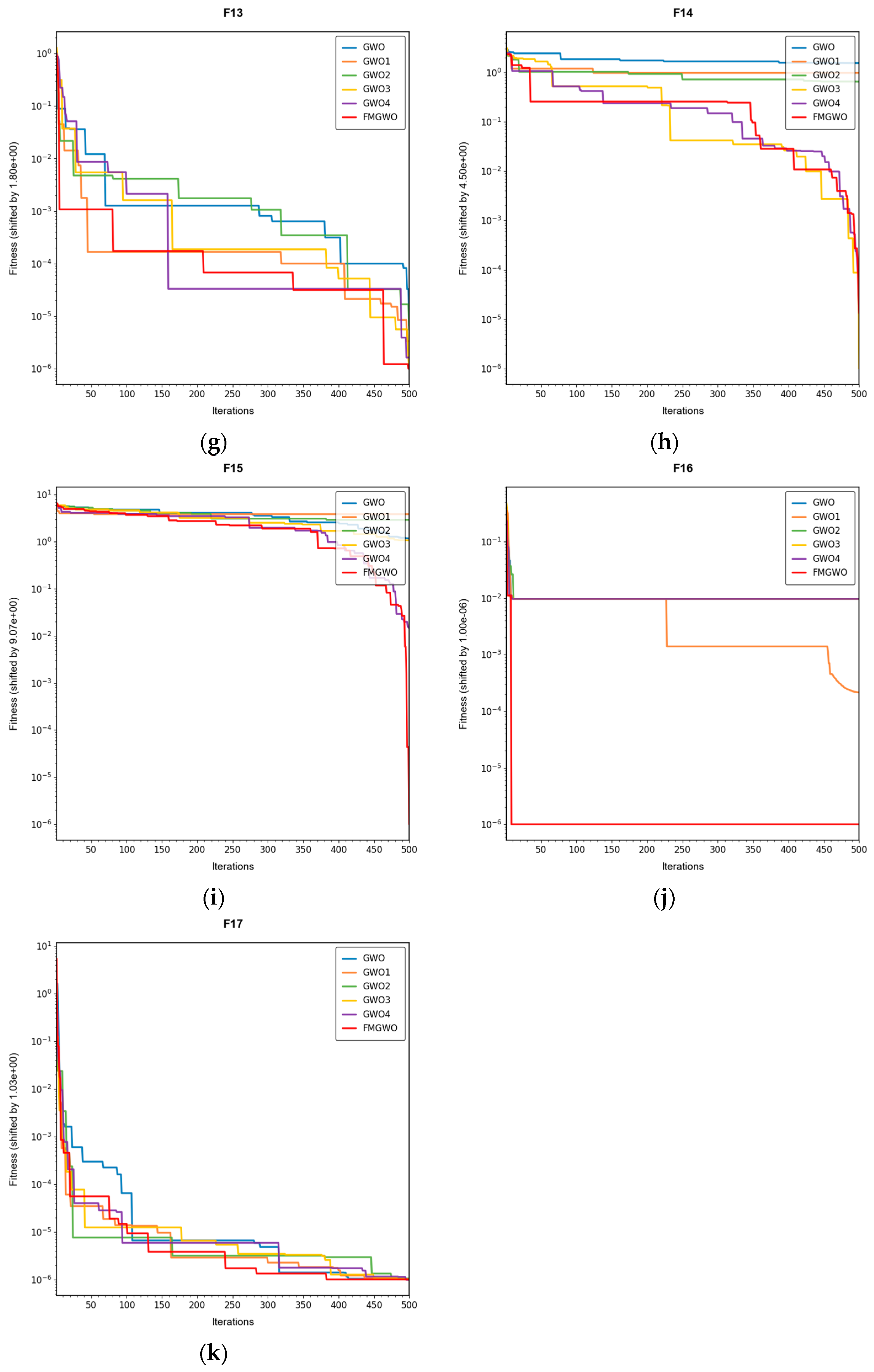

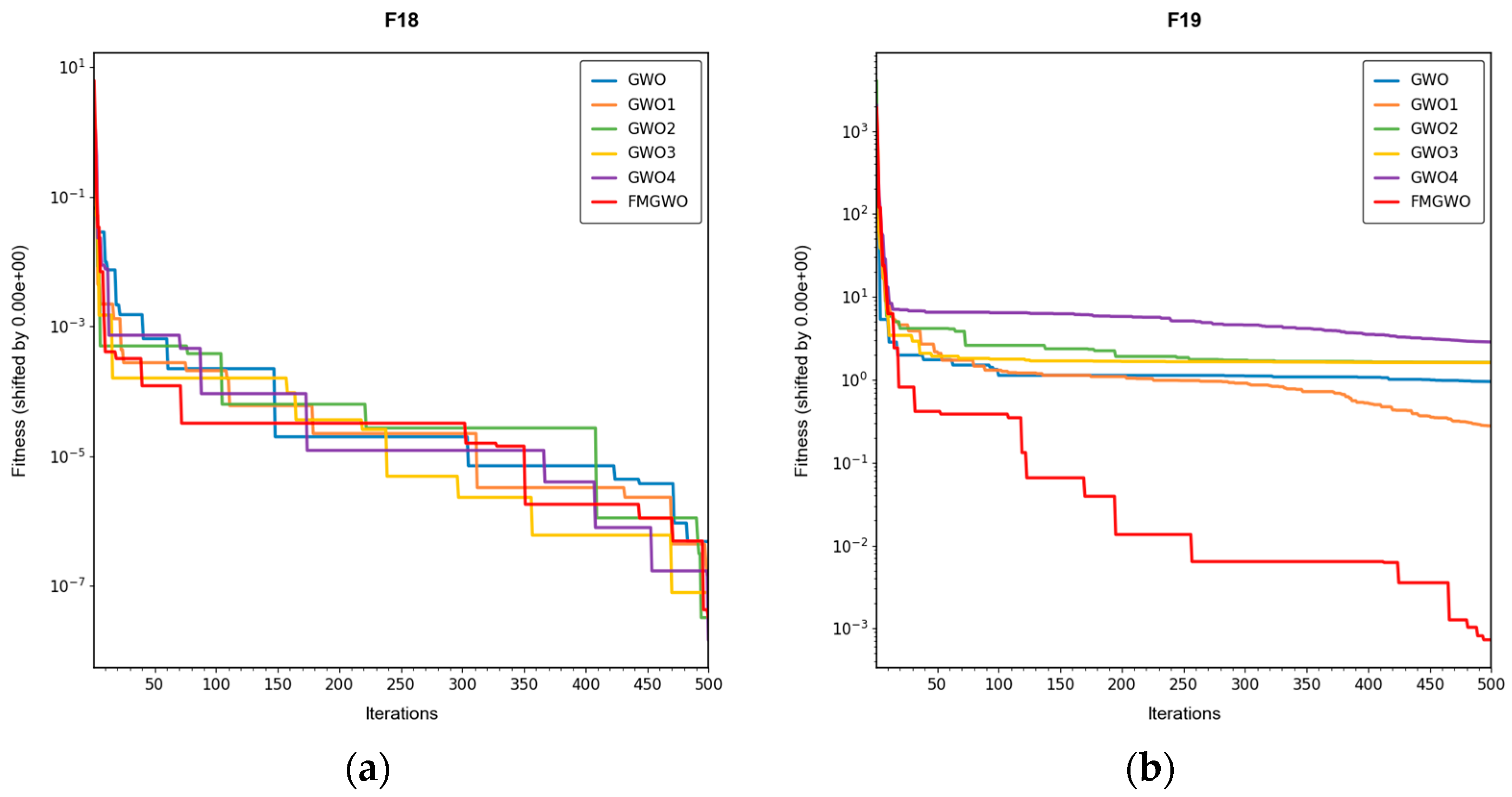

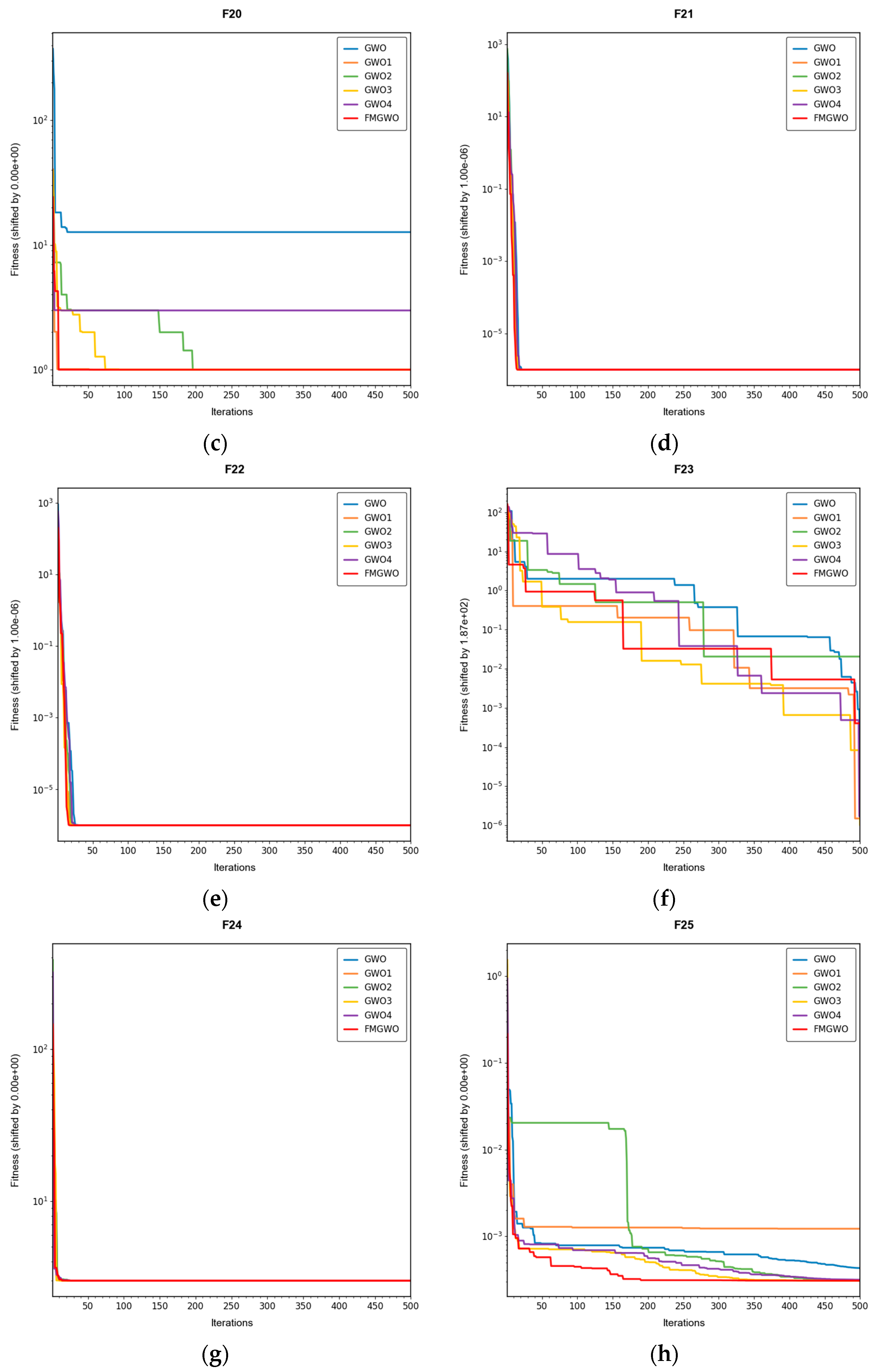

Table 4. The selection of these functions was driven by their capacity to showcase a range of optimization challenges, encompassing high dimensionality, multi-modal, and intricate search spaces. These characteristics emulate those encountered in the domain of WSN coverage problems. The employment of FMGWO in the context of these functions serves two primary objectives. Firstly, it ensures the system’s resilience in confronting high-dimensional, non-linear optimization challenges. Secondly, it validates the system’s aptitude in addressing the specific challenges posed by the WSN coverage problem in a domain-specific manner. The population size of the algorithms was set to 30, and the number of iterations was set to 500. To ensure the stability of the experimental data, each algorithm was run independently 30 times, and the optimal, average, standard deviation, and worst values were taken as the performance comparison indices. The experimental results are shown in

Table 5,

Table 6,

Table 7,

Table 8,

Table 9,

Table 10,

Table 11,

Table 12,

Table 13,

Table 14,

Table 15 and

Table 16. The convergence curves are shown in

Figure 4,

Figure 5 and

Figure 6.
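The four comparison indices can be computed from the final objective values of the independent runs as sketched below. The function name `summarize_runs` is illustrative, minimization is assumed (so the best value is the minimum), and the population standard deviation (`ddof=0`) is an assumption, since the section does not state which estimator was used.

```python
import numpy as np

def summarize_runs(final_values):
    """Best, mean, standard deviation, and worst final value over independent runs."""
    values = np.asarray(final_values, dtype=float)
    return {
        "best": float(values.min()),        # minimization assumed
        "mean": float(values.mean()),
        "std": float(values.std(ddof=0)),   # assumed population estimator
        "worst": float(values.max()),
    }

# Example with three hypothetical run results.
stats = summarize_runs([1.0, 2.0, 3.0])
```

Reporting the worst value alongside the mean and standard deviation helps expose occasional failed runs that an average alone would hide.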

During data processing, some values were found to be extremely close to a specific value. When Excel formulas were used for computation, these values were displayed as exactly that value, although in reality they were not strictly equal to it.

This behavior arises from both the characteristics of the data and the precision limits of Excel’s calculations. Excel applies its own numerical precision and rounding rules when evaluating formulas. When the difference between a data point and a specific value falls below Excel’s computational precision, the data point is displayed as that value.

Although such data appear as specific values in Excel, they are in fact extremely close to those values rather than strictly equal to them, as evidenced by standard deviations that are not equal to zero. This characteristic was taken into account in the subsequent analysis of the data and the discussion of the results.

When evaluating the convergence performance of the algorithms, it should be noted that some of the fitness values may be non-positive, so a logarithmic scale cannot be applied to them directly. Consequently, a constant shift is applied to the fitness values of the affected functions. This shift does not change the differences between fitness values or their ordering, and it preserves the trend of the convergence curve, so the iterative process of the algorithm is still shown faithfully. The shifted data are then used to plot the convergence curve on a logarithmic scale, with the specific amount of shifting indicated in the figure caption.
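The shift applied before log-scale plotting can be sketched as follows. The function name `shift_for_log` and the `margin` parameter are illustrative assumptions; the actual shift amounts used in the paper are those stated in the figure captions.

```python
import numpy as np

def shift_for_log(curve, margin=1.0):
    """Add a constant so that every value is positive before log-scale plotting.

    Returns the shifted curve and the shift that was applied (0 if none was needed).
    """
    curve = np.asarray(curve, dtype=float)
    lowest = curve.min()
    shift = 0.0 if lowest > 0 else margin - lowest
    return curve + shift, shift

# A curve that converges to a negative optimum.
shifted, shift = shift_for_log([3.0, 0.5, -2.0])
```

A constant shift leaves the differences between values and their ordering unchanged, although the ratios between values, and therefore the visual spacing on a logarithmic axis, do depend on the size of the shift.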

The experimental results demonstrate that the FMGWO algorithm outperforms the other algorithms across a range of test functions, including basic unimodal functions, classic multimodal functions, and complex composite functions. The contribution of each strategy is analyzed as follows.

All four strategies contribute to the performance of the FMGWO algorithm.

The strategy in Section 4.1 enhances the initialization stage by combining a uniform distribution with controlled random perturbations. This ensures that the initial population is evenly dispersed across the search space, preventing local aggregation that often occurs in the standard GWO. By using a more balanced initialization, a sufficient number of candidate solutions are distributed across most feasible regions, laying a solid foundation for subsequent global exploration. Experimental results on unimodal functions F2 and F6 indicate that the standard GWO’s initial distribution can result in insufficient search coverage. When the Section 4.1 strategy is removed, GWO1 shows larger fluctuations across independent runs and, in several cases, fails to reach the global optimum. In contrast, FMGWO with the Section 4.1 strategy preserves higher diversity at the start of the search, avoids early local traps, and achieves better optimal and mean fitness values. This confirms that improving uniformity and stochasticity at initialization significantly enhances stability, the breadth of early exploration, and overall optimization robustness.

The strategy described in Section 4.2 is intended to improve the precision of the local search while keeping the depth and breadth of the global search balanced. In practice, the search process often faces a trade-off between global exploration and local exploitation: focusing only on local search makes it easy to fall into a local optimum, whereas an overly dispersed search makes convergence difficult. By dynamically calibrating the search step size and implementing a local learning mechanism, this strategy enables FMGWO to quickly strengthen its search in the vicinity of the optimal solution region, while preserving some ability to escape local optima in the global stage. An examination of the experimental data, such as the F6 test function, reveals that the standard GWO exhibits diminished local convergence performance. Additionally, a discernible discrepancy is evident between the optimal value and the mean value of GWO2 and FMGWO on specific functions. For instance, the optimal value of GWO2 on the F6 function is 1.44 × 10⁻⁷, whereas that of FMGWO decreases to 1.67 × 10⁻⁸. A similar trend is observed in the complex composite functions. While GWO2 performs marginally better than FMGWO on some of the tested functions, FMGWO ultimately demonstrates superior convergence speed and higher accuracy in most cases through this strategy. The Section 4.2 strategy thus encourages individuals to seek out high-quality solutions within their local region. Additionally, its balancing mechanism mitigates the loss of diversity that can result from overly fast convergence, so that the sensitivity of the local search is not compromised while the effectiveness of the global search is maintained. Taken together, the mechanisms and data comparisons above indicate that this strategy offers substantial advantages in enhancing solution accuracy and reducing convergence time, thereby playing a pivotal role in the overall performance of FMGWO.

The strategies in Section 4.3 and Section 4.4 focus on addressing search stagnation by preserving high-quality solutions from historical iterations and integrating them into future searches. This prevents excessive reliance on the current best individual, thereby reducing the risk of local entrapment. The use of historical information gives the population a “memory effect,” guiding it toward unexplored yet promising regions. Comparative tests, particularly on F2, F11, and other functions, show that GWO3 consistently underperforms FMGWO across unimodal, multimodal, and composite function benchmarks. Without these “memory” capabilities, the algorithm is prone to forgetting good solutions and repeatedly converging to suboptimal areas in complex landscapes. The combined effect of Section 4.3 and Section 4.4 is twofold: accelerating early search through historical guidance, and maintaining adaptability to escape local optima later, as reflected in smoother convergence curves. This long-term perspective greatly enhances the algorithm’s resilience and supports fine-tuning accuracy in challenging optimization tasks.

Section 4.5 introduces mutations and perturbations to maintain population diversity, particularly vital in high-dimensional, multi-peak problems. Without sufficient diversity, traditional algorithms tend to concentrate in a few local regions while neglecting potentially optimal areas. Data from composite functions F32 and F33 show that the standard GWO’s best values stagnate earlier at higher levels, and in some cases GWO4 even underperforms the original GWO. In contrast, FMGWO with Section 4.5 maintains wide coverage early through random perturbations, and it prevents over-convergence later in the search. This ensures the continuous exploration of new regions and improves global search performance, as confirmed by convergence curves showing a gradual, stable descent on multi-peak problems. Overall, this strategy significantly delays premature convergence, enhances population diversity, and provides the algorithm with a stronger global perspective in complex search spaces.

5.2. Wireless Sensor Network Coverage Experiment

The effectiveness of FMGWO in optimizing the WSN coverage problem is evaluated by using the coverage Cr (see Equation (6)) as the fitness value. The experimental parameters are listed in Table 17. PSO [36], GWO [27], CSA [37], DE [34], GA [38], FA [39], OGWO [40], DGWO1 [41], DGWO2 [41], and FMGWO are each used to optimize the coverage of the WSN. The superiority of FMGWO over the other algorithms is verified by comparing the coverage Cr achieved by each algorithm. The parameter settings of the above algorithms are shown in Table 18.

For practical implementation, we assume that each sensor node is equipped with localization capabilities to determine its exact position, as is common in many modern WSN applications. The computation of FMGWO is performed centrally at a sink node or base station that has sufficient computational resources and energy. Sensor nodes periodically report their positions to the sink via multi-hop communication, assuming a connected network. The sink then computes the optimized positions and broadcasts repositioning commands back to the nodes. This centralized approach minimizes the computational burden on energy-constrained sensor nodes, though it requires reliable communication links and assumes nodes have mobility capabilities for repositioning. These requirements introduce additional energy overhead for communication and movement, which should be considered in resource-limited deployments.

In the simulation setup, sensor nodes are initially deployed at random locations within the 100 m × 100 m monitoring area, as per the random deployment strategy described in Section 4.1. The algorithms then iteratively optimize node positions to maximize coverage, with performance examined through metrics such as best coverage, mean coverage, and standard deviation over 30 independent runs, ensuring statistical reliability.
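To make the fitness evaluation concrete, the sketch below estimates a coverage rate on a grid of monitoring points. Equation (6) is defined outside this section, so the binary (disc) sensing model, the 1 m grid spacing, and the sensing radius used in the example are assumptions; the actual values are those in Table 17. The function name `coverage_rate` is illustrative.

```python
import numpy as np

def coverage_rate(nodes, radius, side=100.0, step=1.0):
    """Fraction of grid points within `radius` of at least one node.

    Assumes a binary sensing model on a square `side` x `side` area
    discretized with spacing `step` (all values in meters).
    """
    ticks = np.arange(0.0, side + step, step)
    gx, gy = np.meshgrid(ticks, ticks)
    points = np.column_stack([gx.ravel(), gy.ravel()])        # (M, 2) grid points
    nodes = np.asarray(nodes, dtype=float).reshape(-1, 2)      # (N, 2) node positions
    # Squared distance from every grid point to every node.
    d2 = ((points[:, None, :] - nodes[None, :, :]) ** 2).sum(axis=2)
    covered = (d2 <= radius ** 2).any(axis=1)                  # covered by any node
    return float(covered.mean())

# 20 randomly placed nodes with a hypothetical 10 m sensing radius.
rng = np.random.default_rng(0)
random_nodes = rng.uniform(0.0, 100.0, size=(20, 2))
rate = coverage_rate(random_nodes, radius=10.0)
```

An optimizer would treat the flattened node coordinates as one candidate solution and use this rate (or its negative, for a minimizer) as the fitness value.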

These parameters were selected based on standard practices in the swarm intelligence literature for similar optimization problems: a population size of 30 balances exploration and computational efficiency, 500 iterations ensure sufficient convergence without excessive runtime, and algorithm-specific values follow original proposals or empirical tuning for WSN scenarios to promote effective search. To compare how much coverage each algorithm achieves with a limited number of nodes, we conducted simulation experiments on three scenarios with 20, 25, and 30 nodes. Each comparison algorithm was run independently 30 times, and the optimum, mean, and standard deviation of the final coverage were used as the comparison data. The experimental results are shown in Table 19.

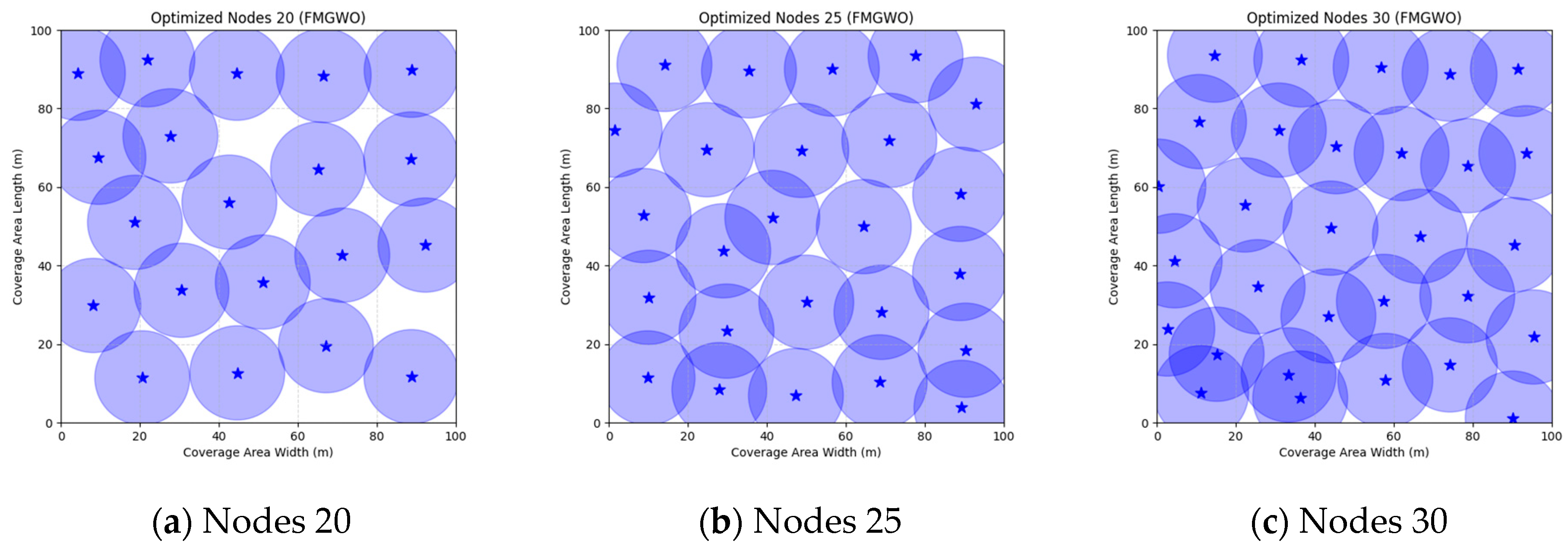

The FMGWO algorithm demonstrates notable efficacy across a range of node counts. When the number of nodes is 20, the optimal coverage is 84.39%, and the average coverage is 82.47%. These values are superior to those obtained via other algorithms, such as PSO and GWO. The standard deviation of 0.0092 demonstrates the stability of the algorithm. As the number of nodes is increased from 25 to 30, the optimal coverage rate increases from 94.85% to 98.63%. Concurrently, the average coverage rate continues to exceed the other rates, while the standard deviation experiences a slight increase. However, the high coverage rate persists. A comparative analysis of the FMGWO algorithm with other algorithms reveals its superiority in key performance indicators such as optimal coverage, average coverage, and stability. This reflects its ability to adaptively reposition nodes, ensuring connectivity and coverage. Furthermore, the FMGWO algorithm demonstrates a capacity for achieving high coverage with a reduced number of nodes.

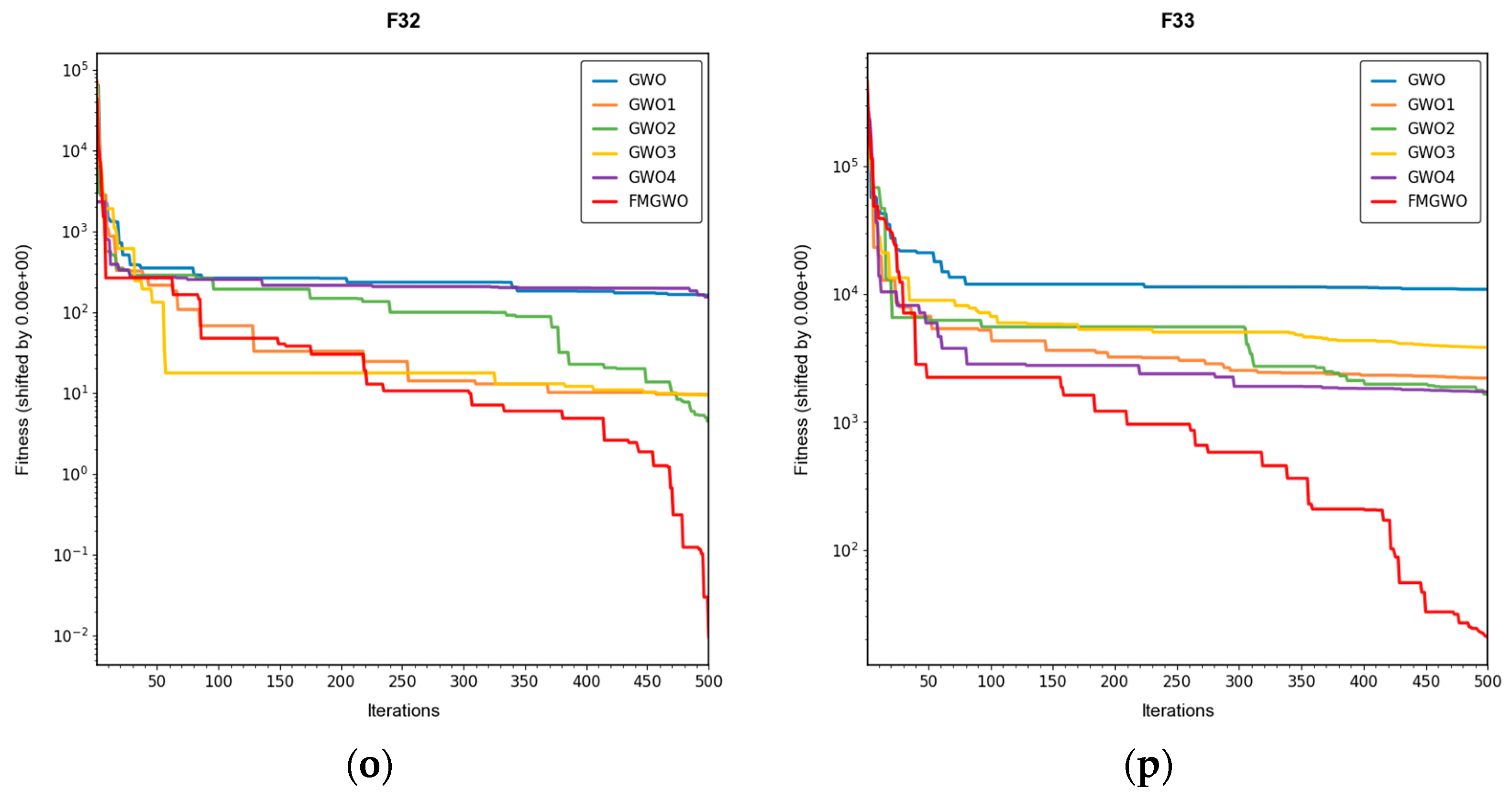

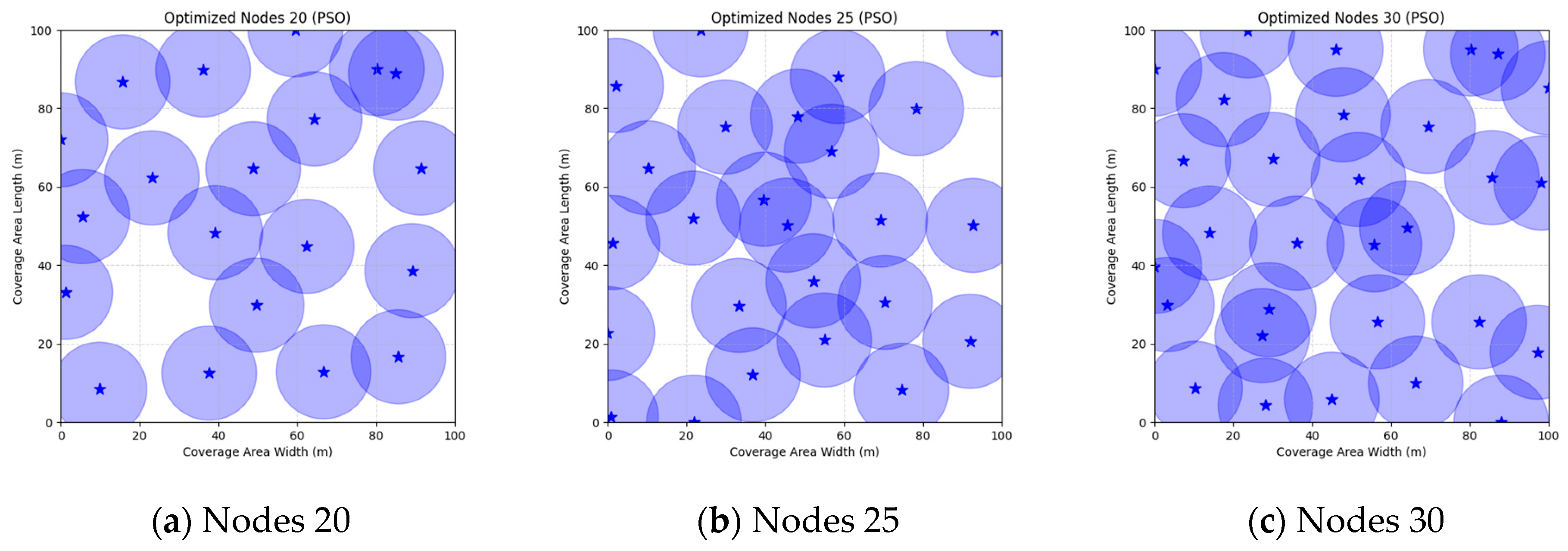

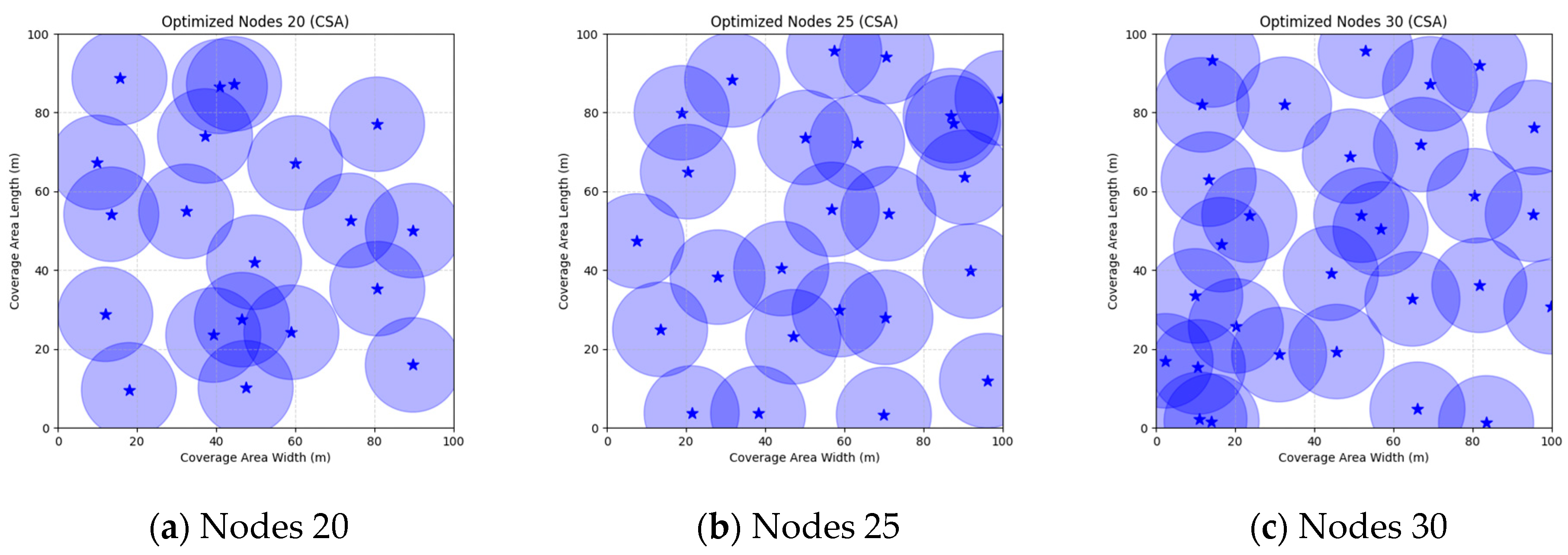

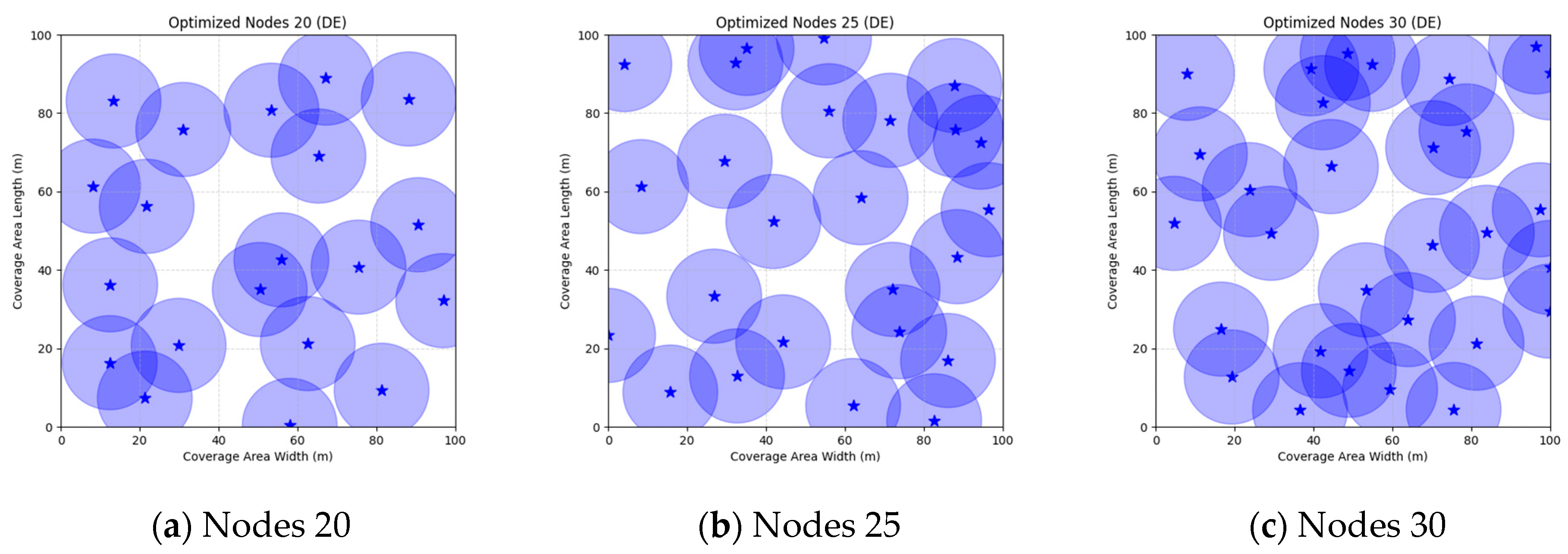

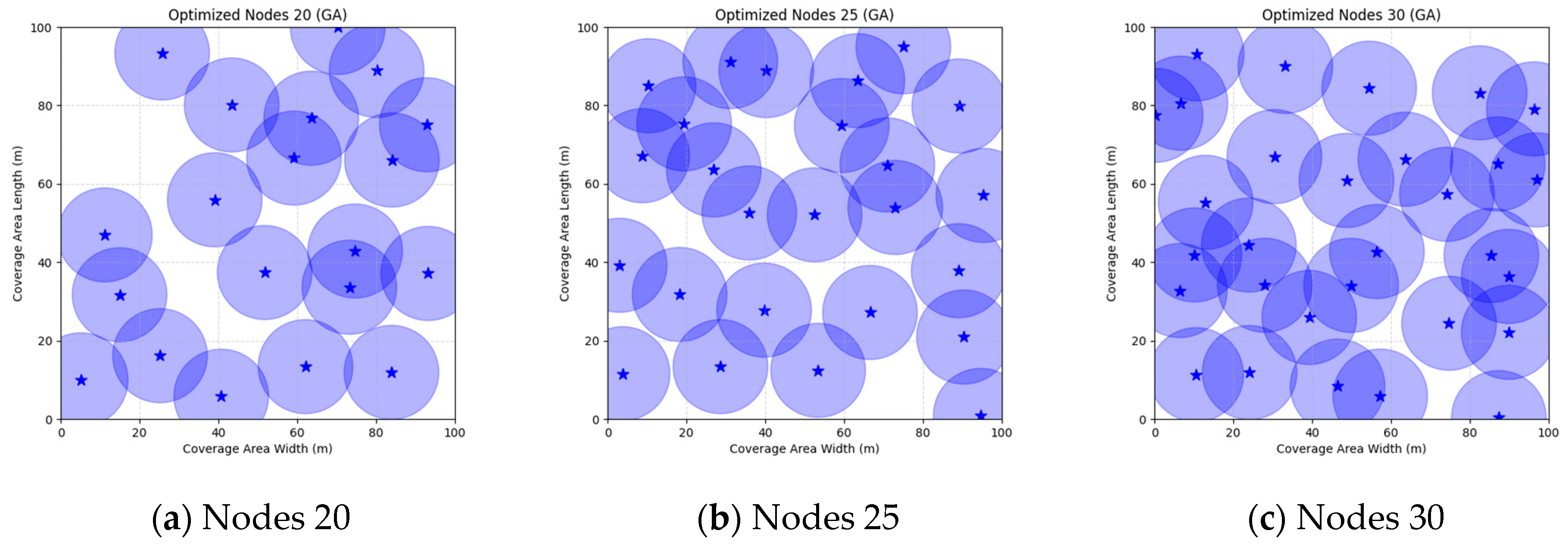

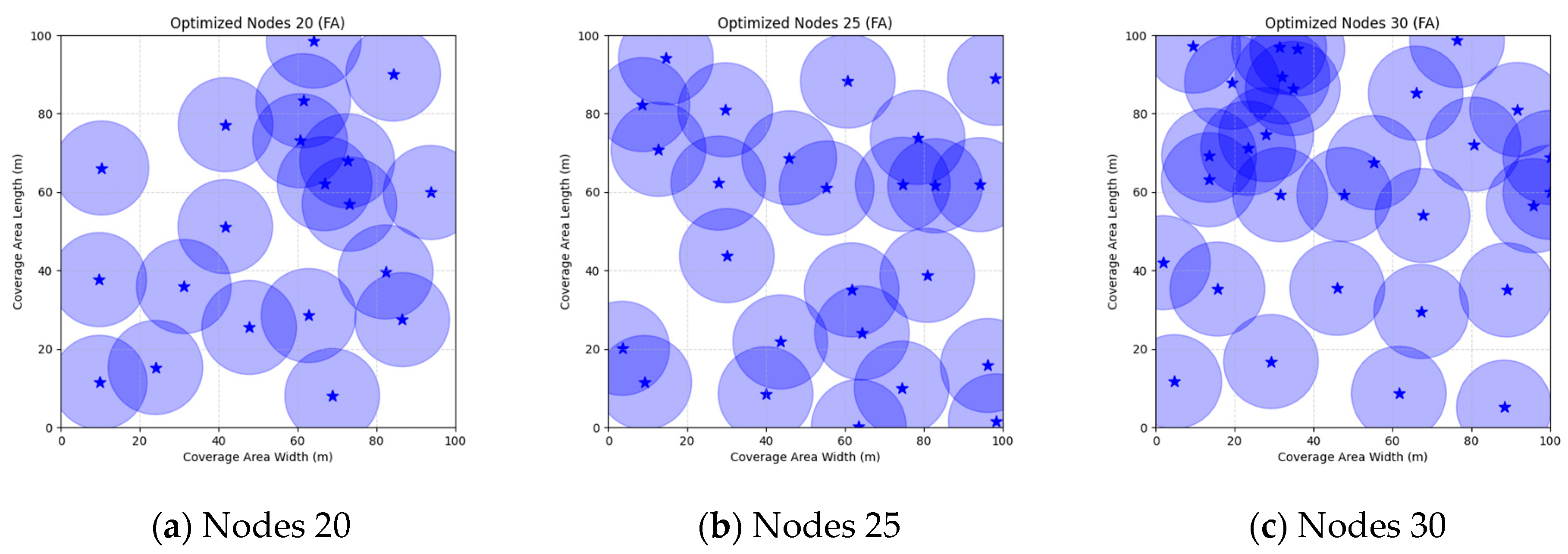

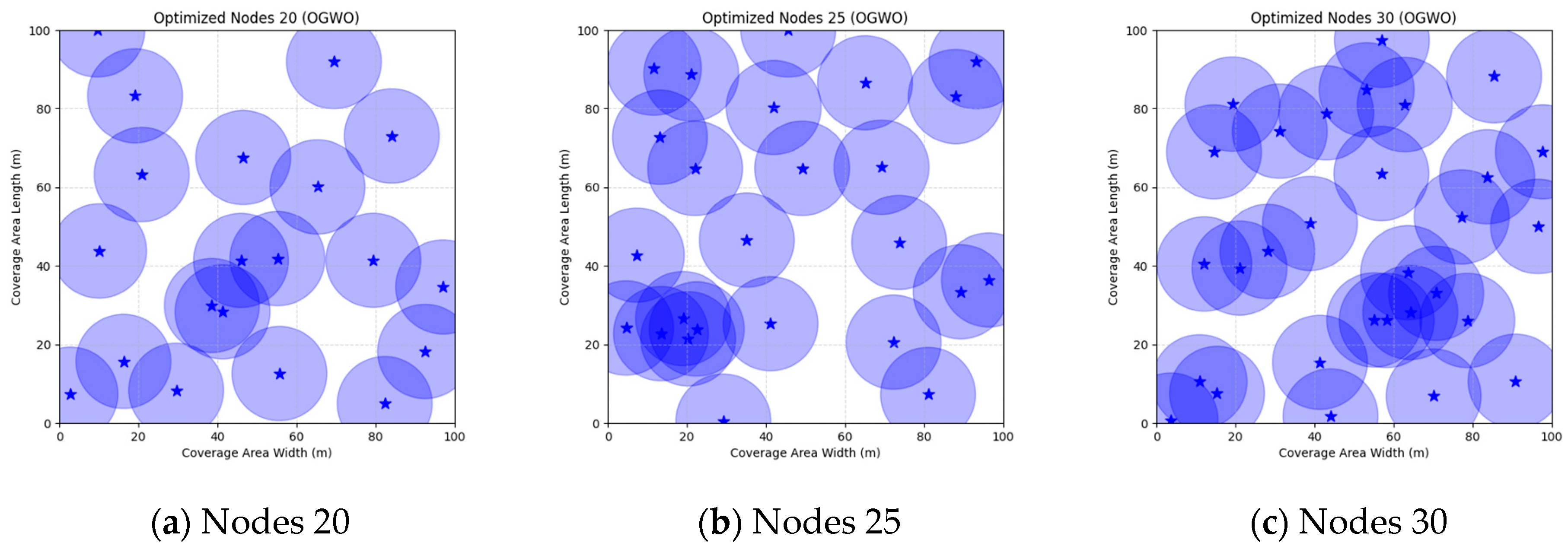

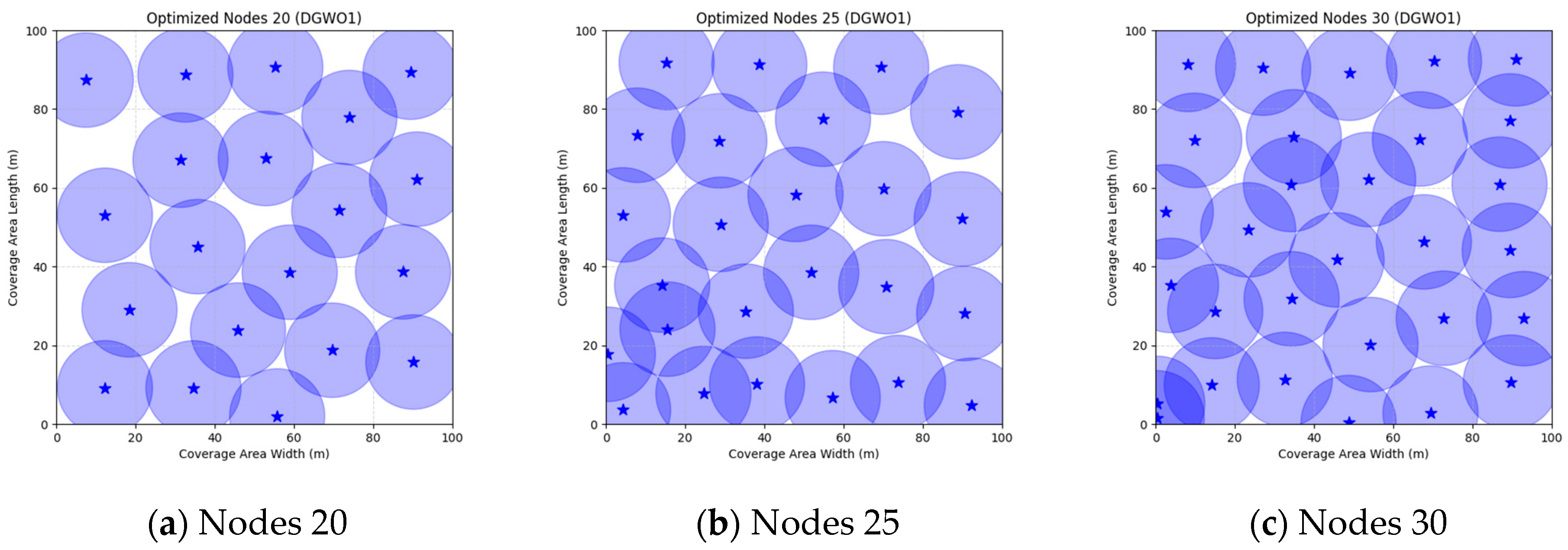

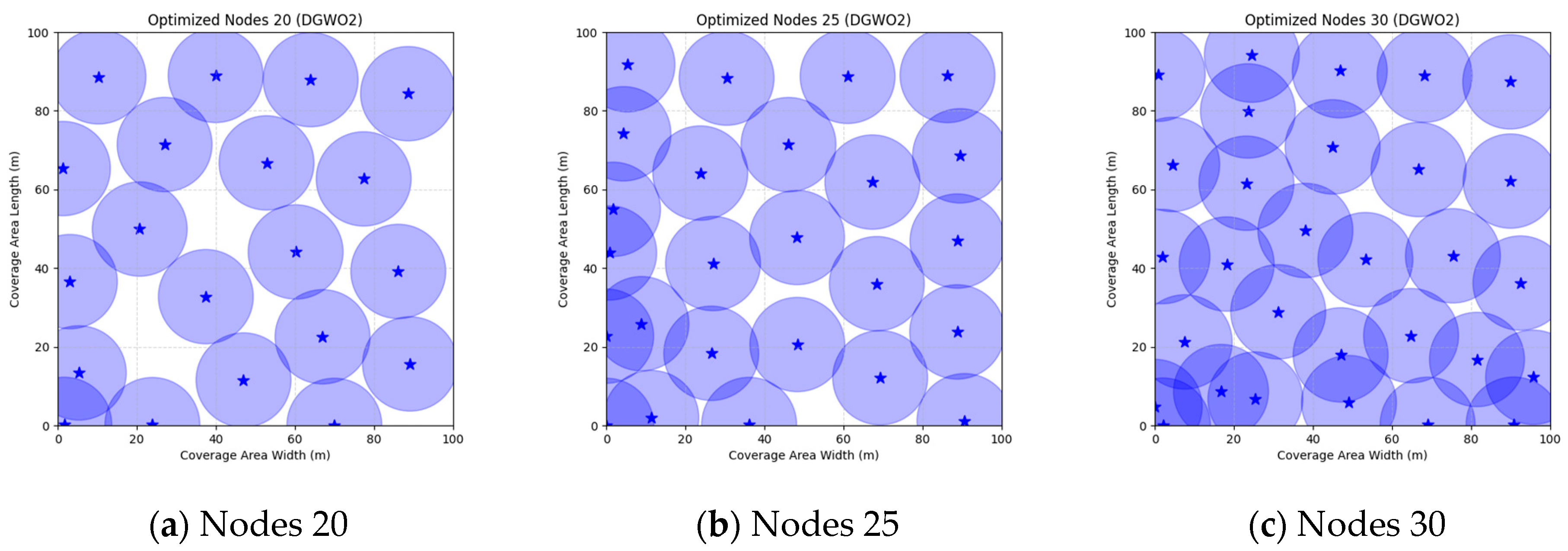

A comparison of FMGWO with the other algorithms reveals its superior performance. Compared with algorithms including PSO, CSA, FA, and GA, FMGWO achieves a higher coverage rate across a range of node numbers. This observation suggests that FMGWO improves on the standard GWO algorithm, providing a better balance between exploration and exploitation and reducing the risk of becoming trapped in local optima. When compared with advanced algorithms such as OGWO, DGWO1, and DGWO2, FMGWO with 30 nodes achieved an optimal coverage rate of 98.63% and an average coverage rate of 96.57%. These results substantiate the efficacy of the implemented improvements in enhancing the overall performance of the algorithm. In practical applications, achieving high coverage with fewer nodes reduces deployment costs, which gives the FMGWO algorithm significant application value. To provide a more intuitive demonstration of the efficacy of the FMGWO algorithm in addressing the WSN coverage problem, Figures 7–16 illustrate the node distributions obtained by each comparative algorithm for the WSN coverage problem. The asterisks (*) in the figures represent the locations of the sensor nodes, and the circular areas depict the sensing range of each sensor node.

As shown in Figures 7–16, FMGWO outperforms the PSO, GWO, CSA, DE, GA, FA, OGWO, DGWO1, and DGWO2 algorithms when the number of sensor nodes is 20, 25, and 30. The comparative algorithms generally suffer from two problems. First, they leave parts of the monitoring area uncovered. Second, and perhaps more significantly, the optimized deployments still contain redundantly monitored regions where sensing ranges overlap. In contrast, FMGWO distributes the nodes more uniformly, which reduces the redundantly monitored area and improves network coverage.
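The two problems noted above, uncovered regions and redundant monitoring, can be quantified for a given deployment. The following is a minimal sketch under stated assumptions: it assumes a Boolean disk sensing model (a point is covered if it lies within the sensing radius of a node) evaluated on a uniform grid over a square area. The function name `coverage_and_redundancy`, the grid step, and the example node positions, radius, and area size are hypothetical.

```python
def coverage_and_redundancy(nodes, radius, side, step=1.0):
    """Estimate coverage and redundancy of a deployment on a square area.

    nodes  : list of (x, y) node positions
    radius : sensing radius of every node (homogeneous nodes assumed)
    side   : side length of the square monitoring area
    step   : spacing of the evaluation grid

    Returns (covered_fraction, redundant_fraction), where a grid point is
    covered if at least one node senses it and redundant if two or more do.
    """
    n = int(side / step) + 1          # grid points per axis, both borders included
    r2 = radius * radius
    covered = 0
    redundant = 0
    for i in range(n):
        for j in range(n):
            x, y = i * step, j * step
            # Number of nodes whose sensing disk contains this grid point.
            hits = sum((x - nx) ** 2 + (y - ny) ** 2 <= r2 for nx, ny in nodes)
            covered += hits >= 1
            redundant += hits >= 2
    total = n * n
    return covered / total, redundant / total


# Hypothetical deployment: two nodes in a 50 x 50 area, sensing radius 10.
cov, red = coverage_and_redundancy([(10.0, 10.0), (40.0, 40.0)], 10.0, 50.0)
print(f"coverage={cov:.3f} redundancy={red:.3f}")
```

With these measures, a more uniform deployment appears as a higher covered fraction together with a lower redundant fraction for the same number of nodes.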

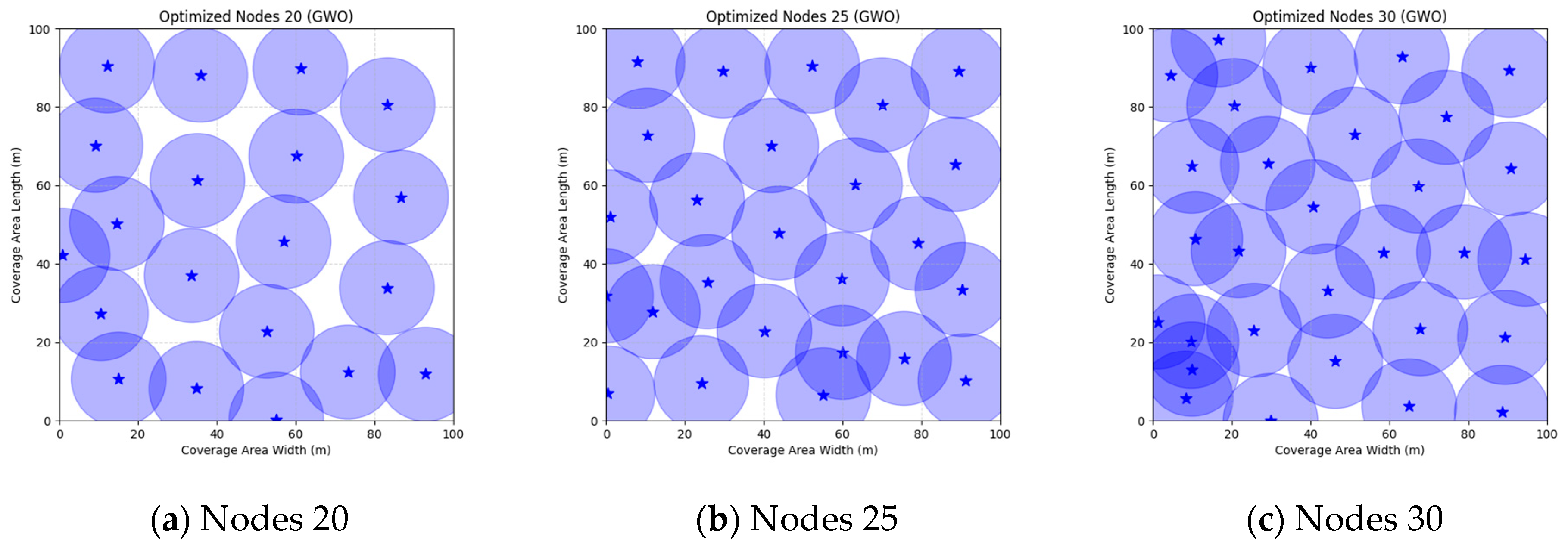

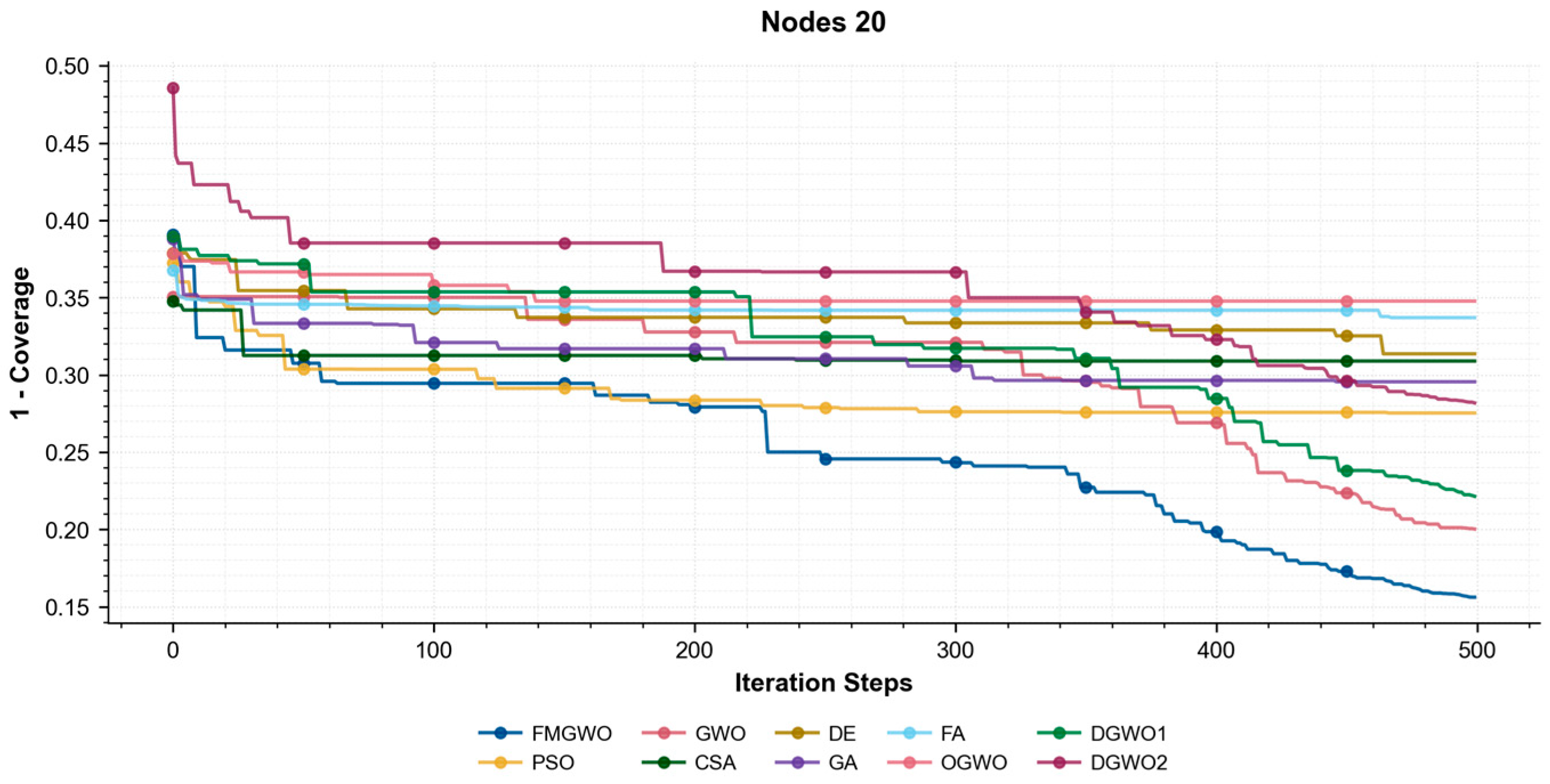

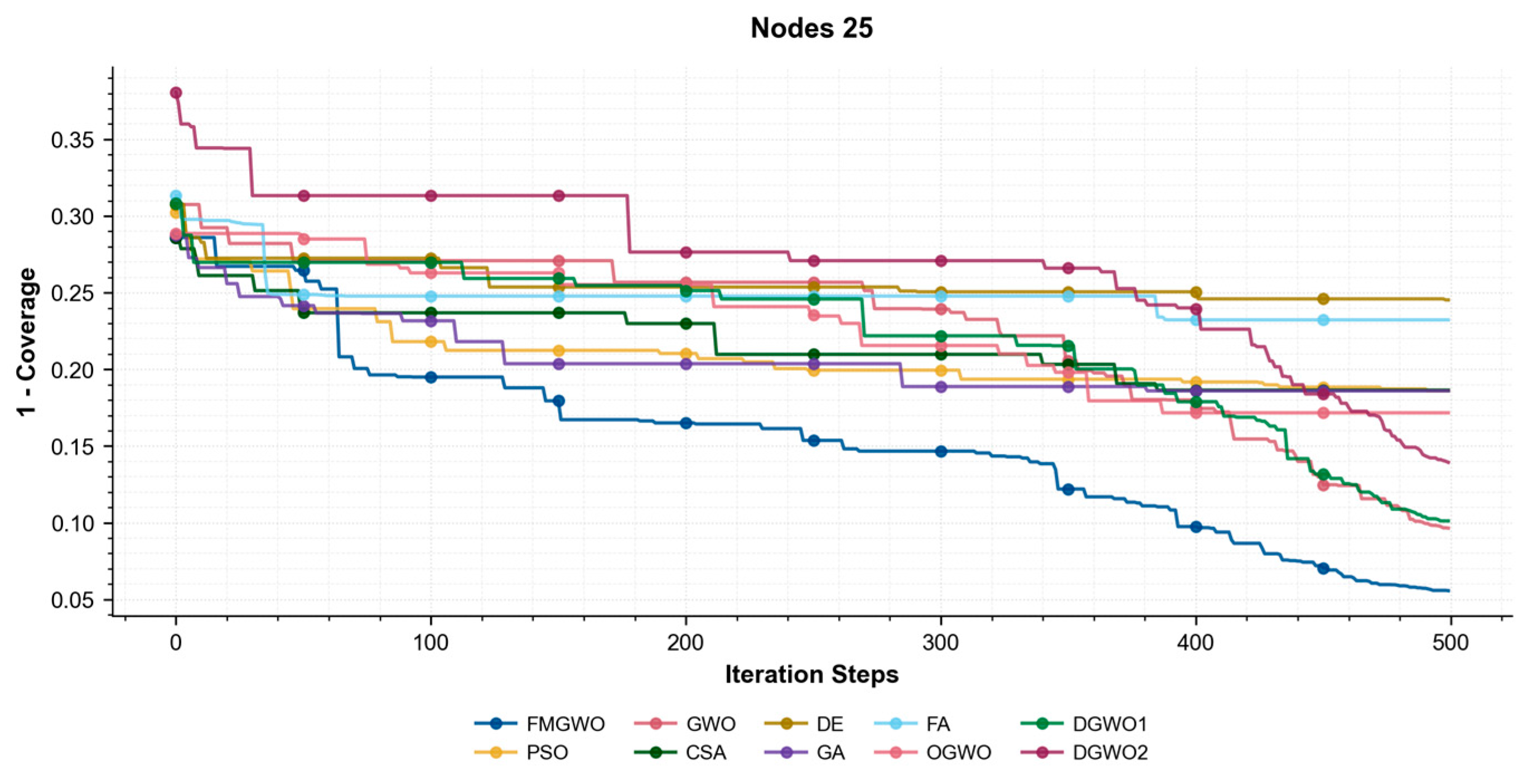

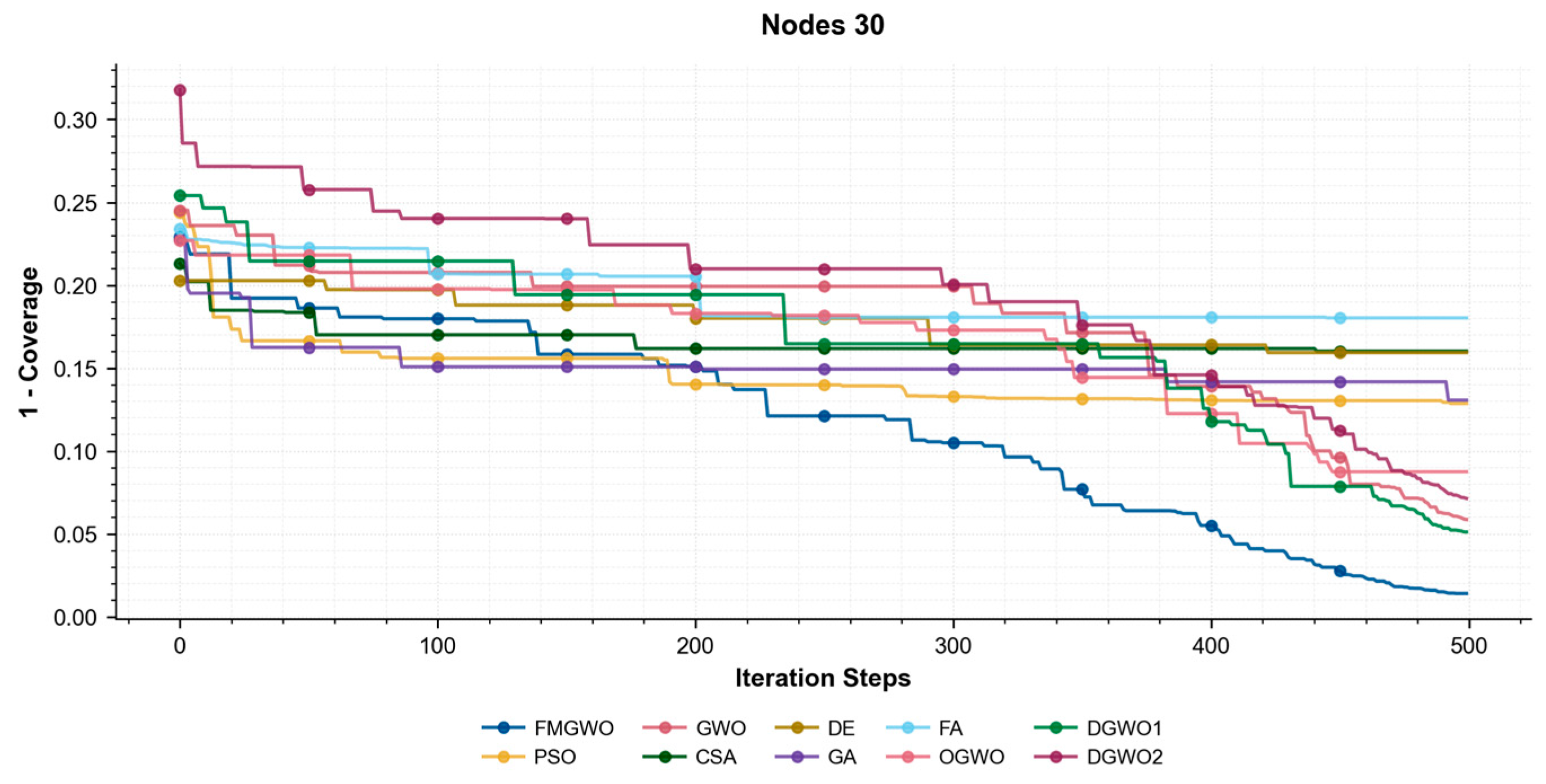

Figures 17–19 show the coverage convergence curves of each algorithm over 500 iterations for the three cases of 20, 25, and 30 nodes. The convergence accuracy and speed visible in these curves give further insight into how well each algorithm optimizes WSN coverage.

As shown in Figures 17–19, FMGWO improves both the early-stage convergence speed and the late-stage convergence accuracy of WSN optimization while preserving the benefits inherent to GWO. The curves stabilize after approximately 450–500 iterations, as evidenced by the flattening of the fitness values, indicating effective convergence to near-optimal solutions. The other three improved GWO algorithms, OGWO, DGWO1, and DGWO2, improve on GWO but are less effective than FMGWO in optimizing WSN coverage. Compared with the comparison algorithms, FMGWO is therefore more competitive overall in search accuracy, convergence speed, and stability.
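The point at which a convergence curve flattens can also be read off numerically rather than visually. The following is a minimal sketch under stated assumptions: the curve is taken to be the best-so-far coverage recorded at each iteration, and it is treated as stabilized once every later value stays within a tolerance of the final value. The function name `convergence_iteration`, the tolerance, and the example curve are hypothetical and are not taken from the experiments.

```python
def convergence_iteration(curve, tol=1e-4):
    """Return the 1-based iteration from which the curve stays within `tol`
    of its final value until the end of the run."""
    if not curve:
        raise ValueError("curve must contain at least one value")
    final = curve[-1]
    k = len(curve)
    # Walk backwards while the preceding value is still within tolerance.
    while k > 1 and abs(curve[k - 2] - final) <= tol:
        k -= 1
    return k


# Hypothetical best-so-far coverage values over five iterations.
curve = [0.50, 0.70, 0.90, 0.90, 0.90]
print(convergence_iteration(curve))
```

A smaller returned iteration indicates faster convergence, while the final value of the curve reflects the convergence accuracy.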