Railway Intrusion Risk Quantification with Track Semantic Segmentation and Spatiotemporal Features

Abstract

1. Introduction

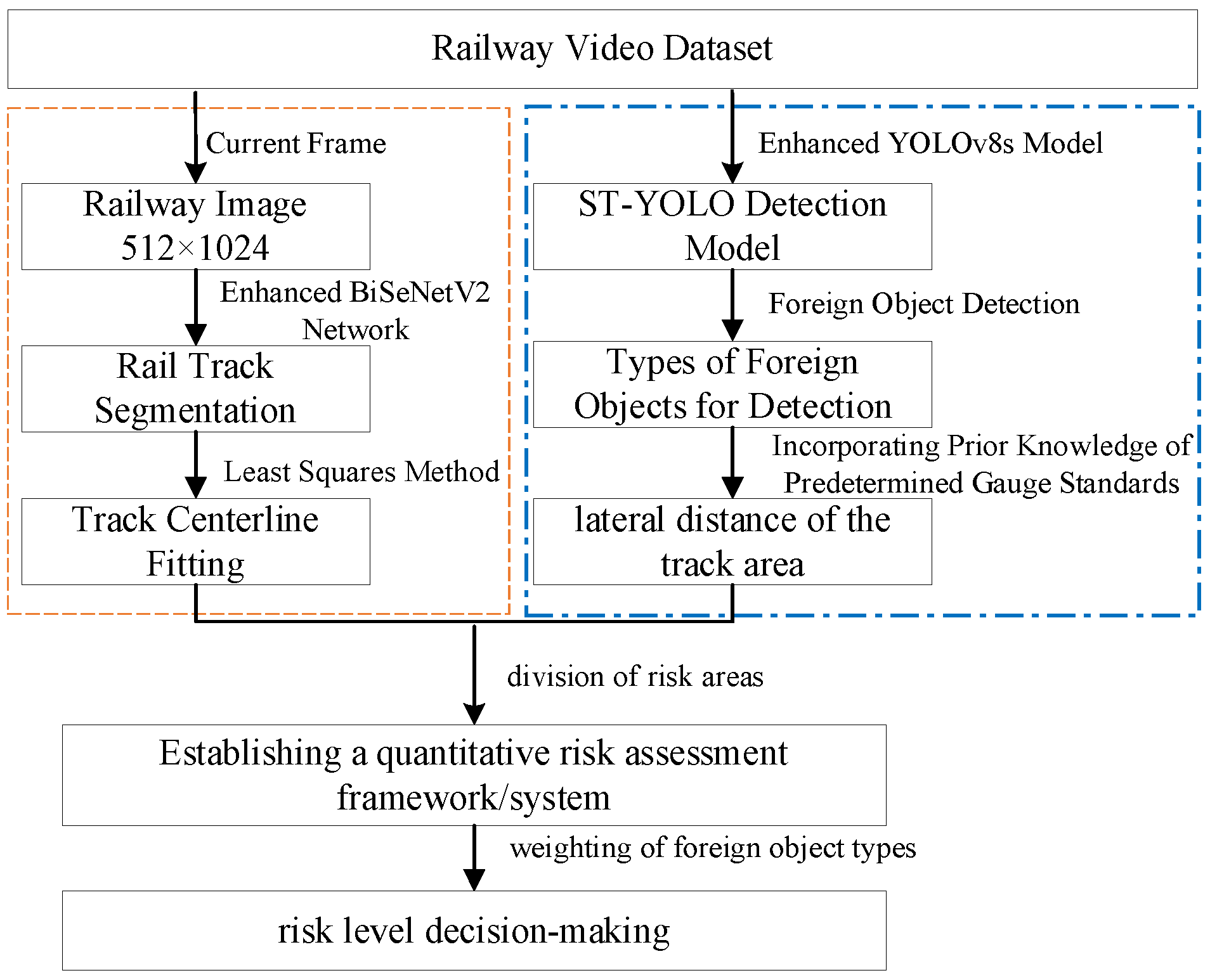

- A physically constrained track boundary model was developed by integrating railway clearance standards with an enhanced semantic segmentation network. This model incorporates track prior knowledge and enables spatial distance calculation of foreign objects through pixel transformation.

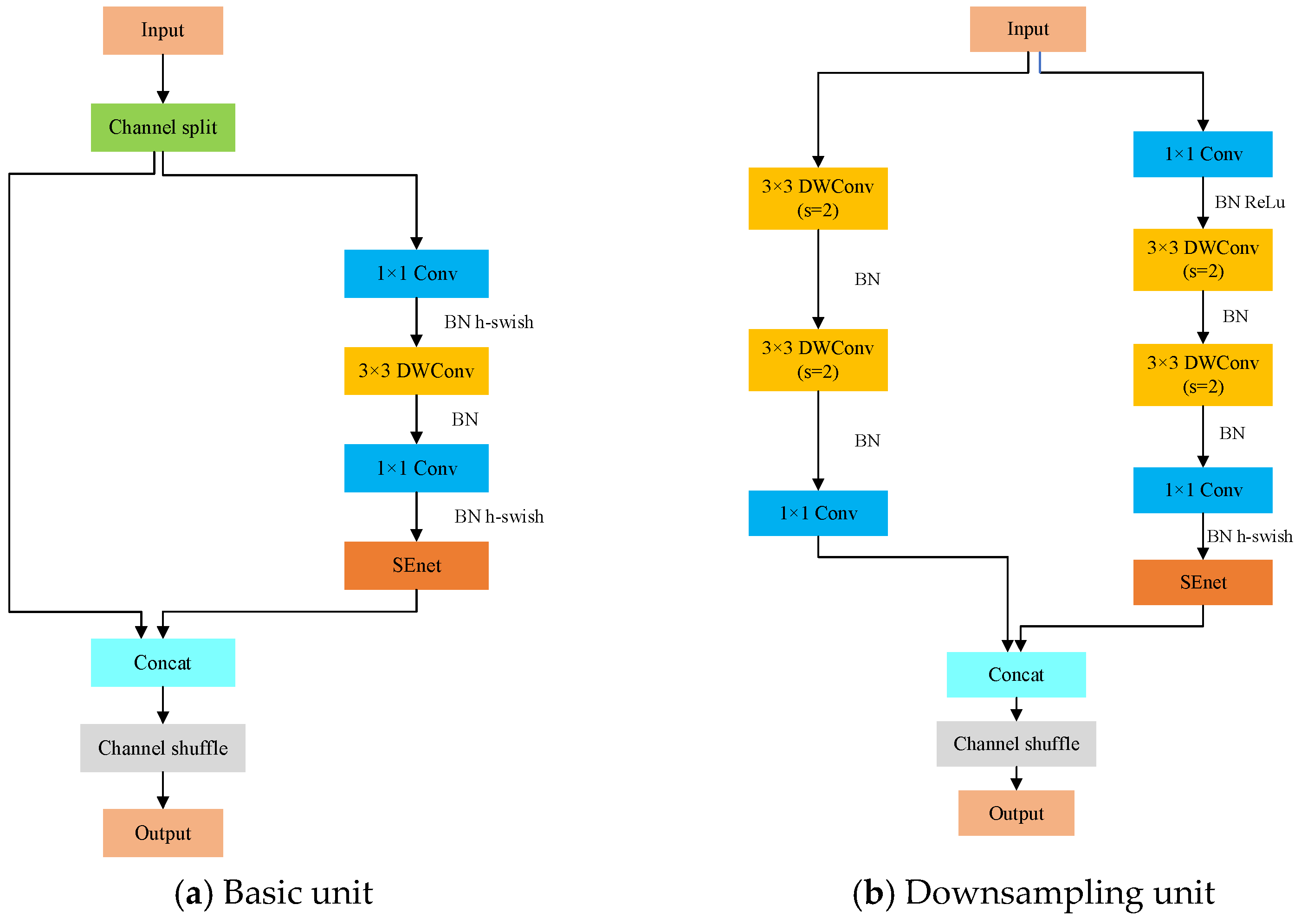

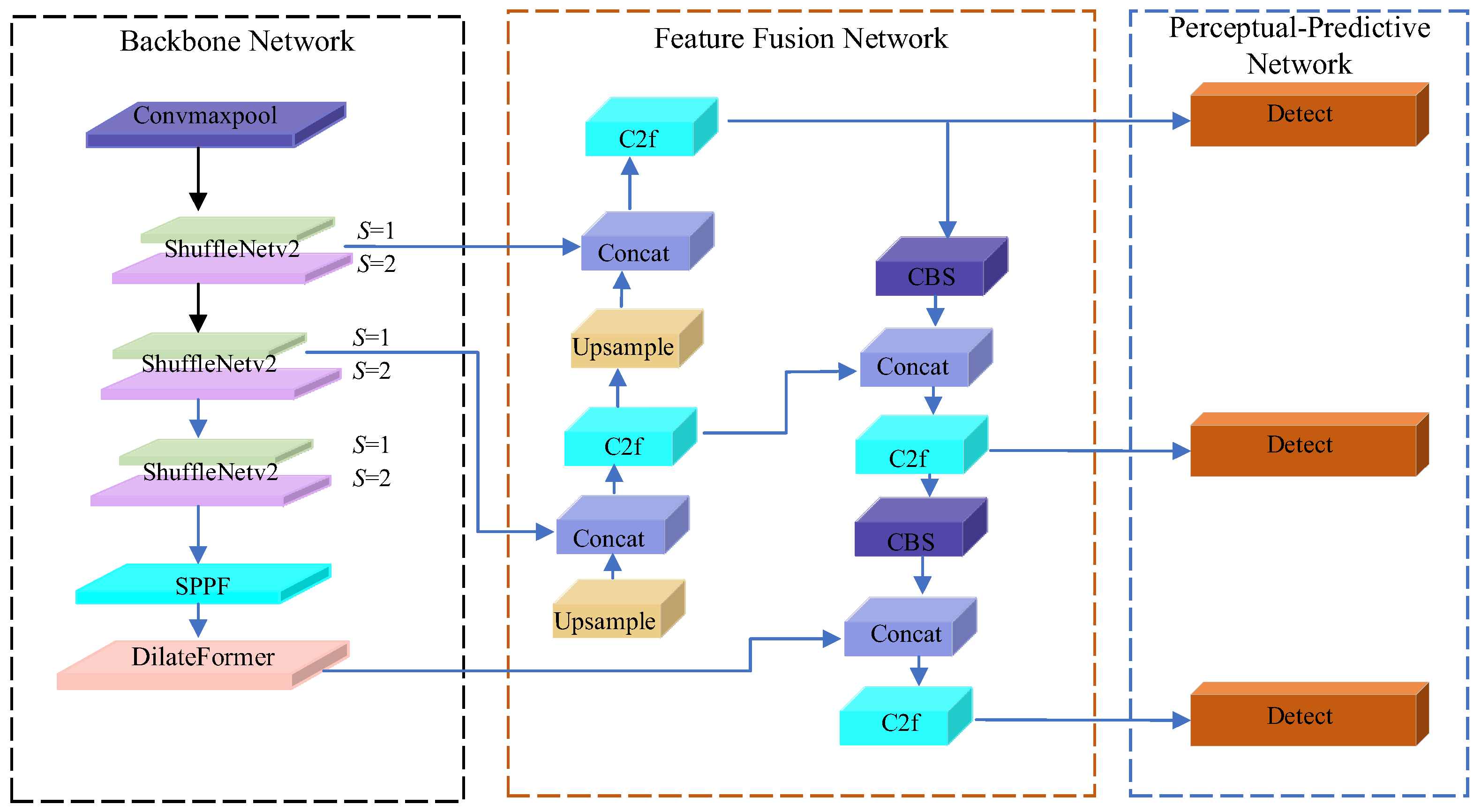

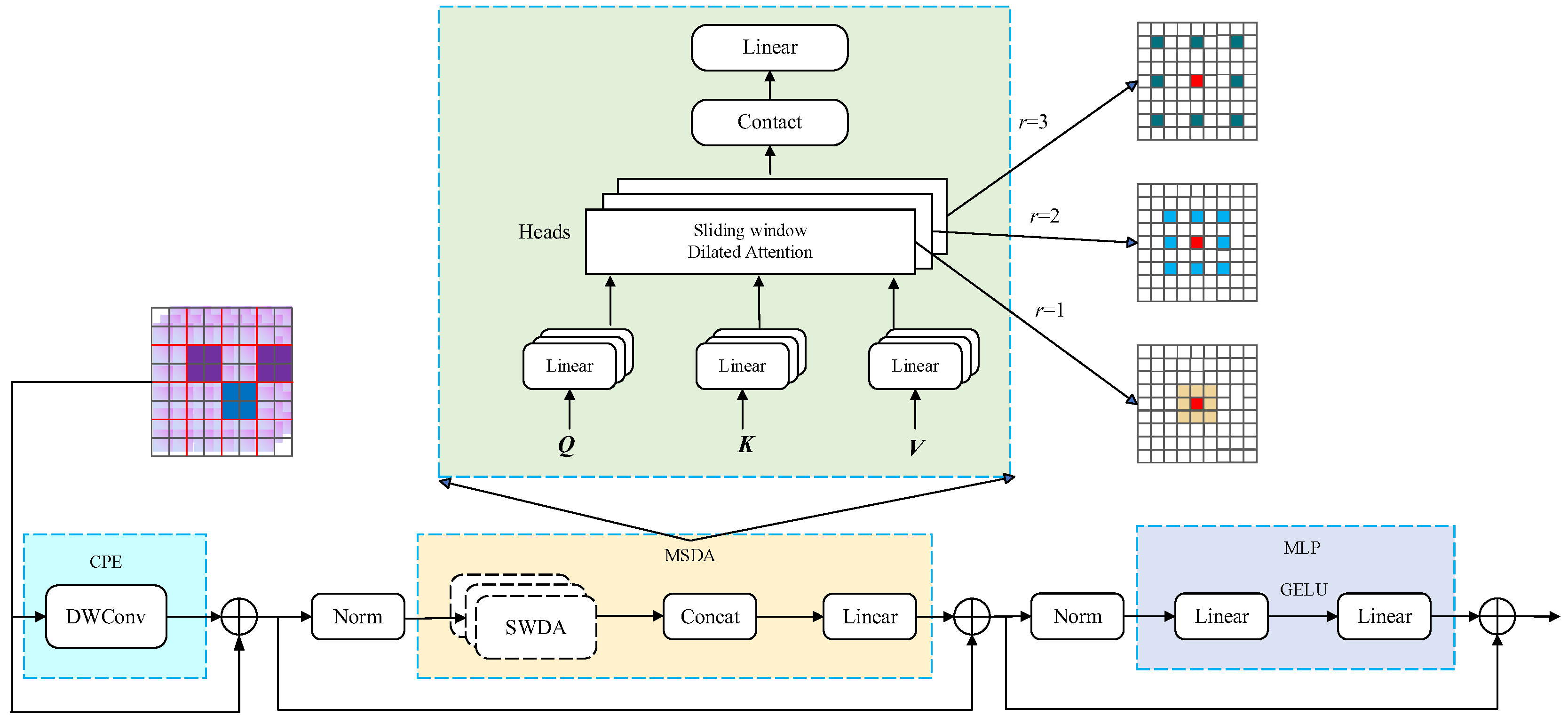

- An ST-YOLO-based heterogeneous feature fusion mechanism was proposed, in which the original YOLOv8s backbone network is replaced with an improved lightweight ShuffleNetV2 architecture and a DilateFormer (Dilated Transformer) module is added at the bottom of the backbone network to strengthen feature extraction.

- A dynamic risk assessment matrix was constructed from a three-component feature vector: intruding object category, intrusion zone weighting coefficient, and spatiotemporal distance threat value. This yields a quantitative risk evaluation for each foreign object intrusion.
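The pixel-to-distance step mentioned in the first contribution can be illustrated with a minimal Python sketch. It is not the authors' implementation: it assumes a standard gauge of 1435 mm as the physical reference, assumes the object's ground contact point and both rail pixels lie on the same image row (so one millimetre-per-pixel scale applies along that row), and ignores lens distortion. The function name and parameters are hypothetical.

```python
STANDARD_GAUGE_MM = 1435.0  # assumption: standard-gauge track used as the scale reference


def lateral_distance_mm(object_x_px, left_rail_x_px, right_rail_x_px,
                        gauge_mm=STANDARD_GAUGE_MM):
    """Estimate the distance from an object to its nearest rail, in millimetres.

    All x coordinates are pixel columns taken on the same image row.
    Returns a (distance_mm, nearest_rail) tuple.
    """
    gauge_px = right_rail_x_px - left_rail_x_px
    if gauge_px <= 0:
        raise ValueError("right rail must lie to the right of the left rail")

    # Local scale on this row: physical gauge divided by its width in pixels.
    mm_per_px = gauge_mm / gauge_px

    dist_left_px = abs(object_x_px - left_rail_x_px)
    dist_right_px = abs(object_x_px - right_rail_x_px)
    if dist_left_px <= dist_right_px:
        return dist_left_px * mm_per_px, "left"
    return dist_right_px * mm_per_px, "right"


if __name__ == "__main__":
    # Hypothetical row where the rails are 287 px apart (5 mm per pixel).
    print(lateral_distance_mm(380, 400, 687))
    print(lateral_distance_mm(700, 400, 687))
```

Because the scale is recomputed per image row, the same function can be applied at different depths in the frame, as long as the segmented rail edges are available on the row of interest.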

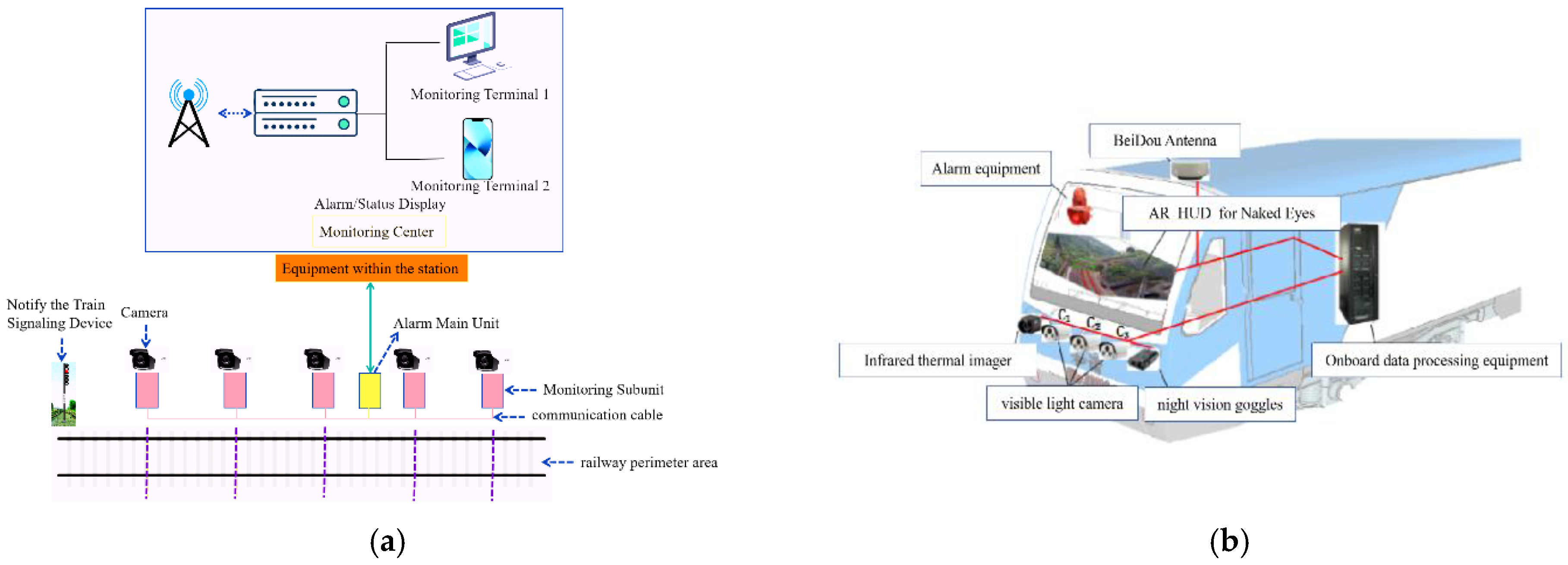

2. Foreign Object Detection and Risk Assessment

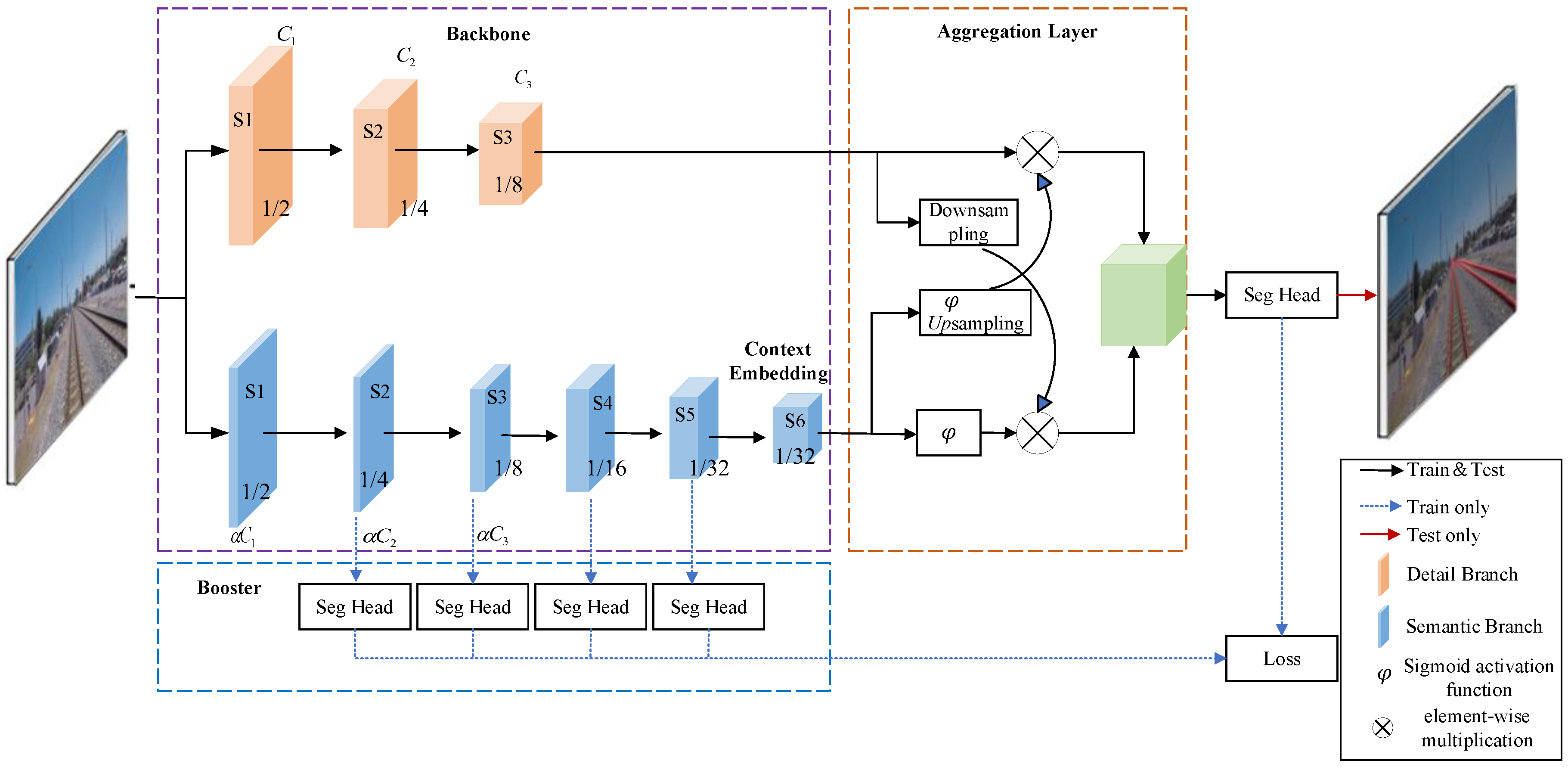

2.1. Track Detection Based on BiSeNetV2 Semantic Segmentation Network

2.2. ST-YOLO Foreign Object Detection Model

2.2.1. ShuffleNetV2 Network and Its Enhancements

2.2.2. Foreign Object Detection Model

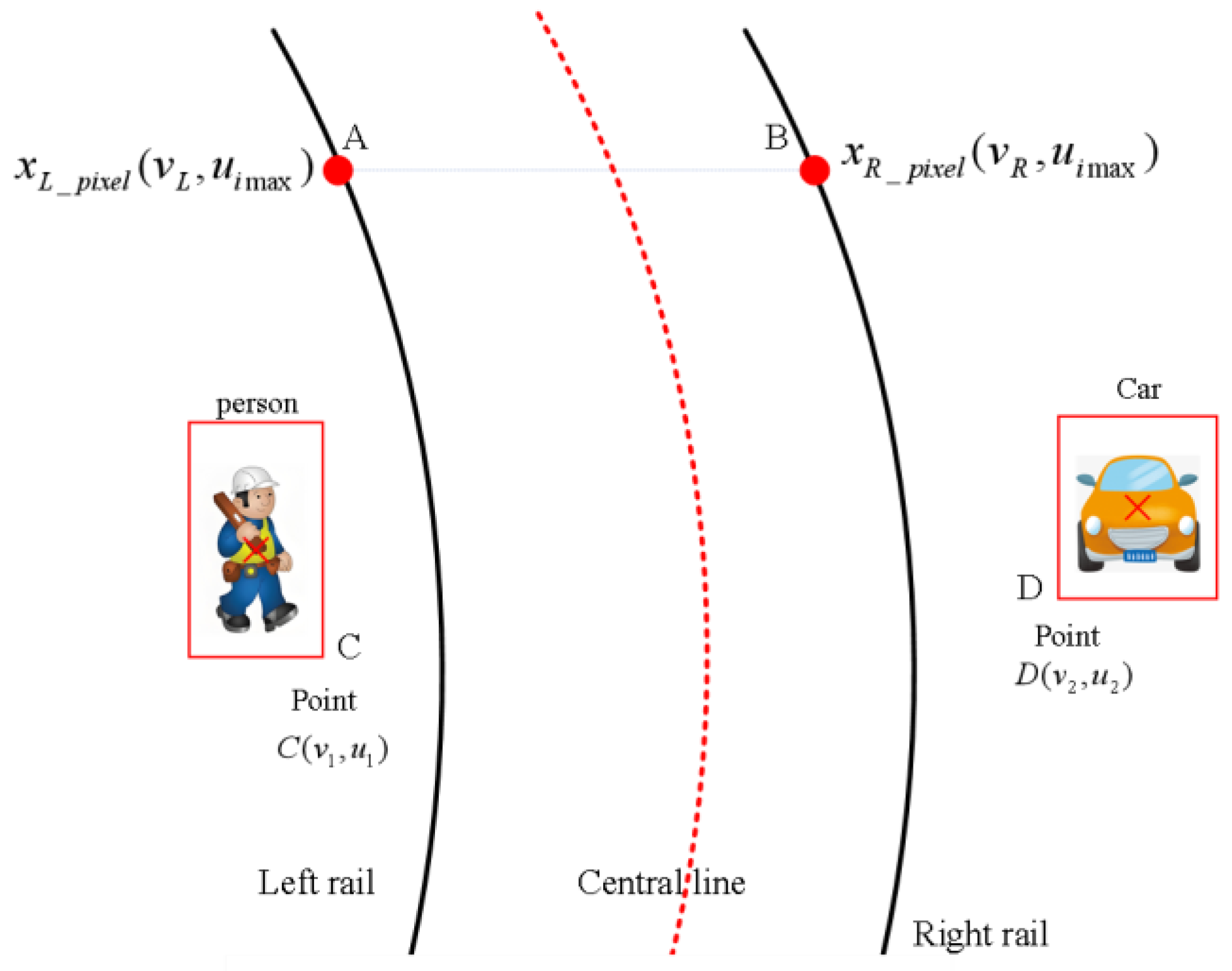

2.3. Spatiotemporal Distance-Based Risk Quantification for Foreign Object Intrusion

2.3.1. Lateral Distance Calculation Between Obstacles and Track Area

2.3.2. Construction of Risk Quantification Assessment System

3. Experiments

3.1. Rail Track Detection Experiments and Analysis

3.2. Foreign Object Detection Experiments and Analysis

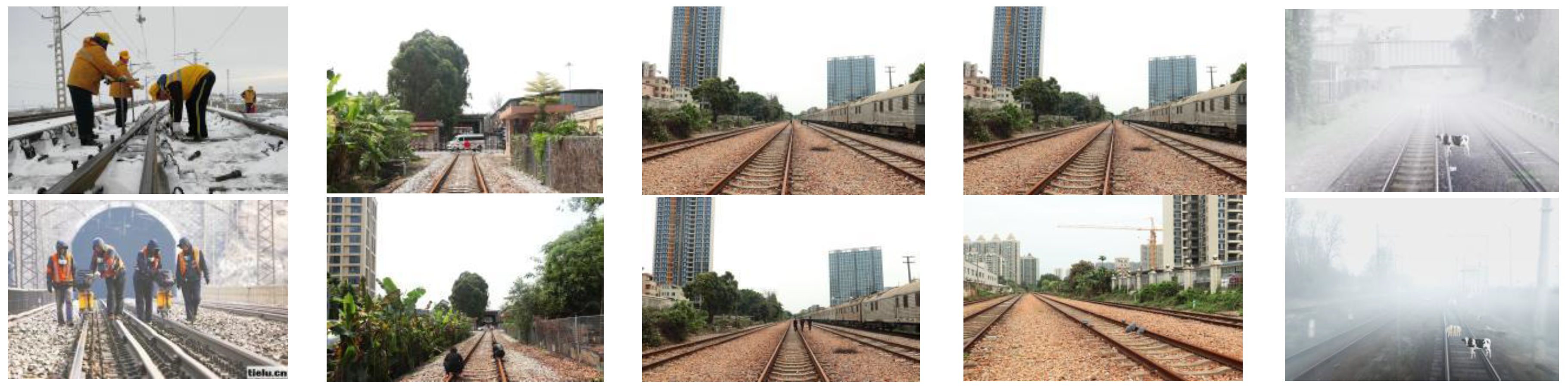

3.2.1. Description of Dataset and Evaluation Protocol

1. The mean Average Precision (mAP) serves as the primary metric for evaluating model performance in this study, representing the mean value of Average Precision (AP) scores across all detection categories.

2. Recall serves as the metric for evaluating the model’s capability to correctly detect target objects.

3. Precision measures the accuracy of the model’s predictions.

4. The detection speed was quantitatively compared using the frames-per-second (FPS) metric.
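The four metrics listed above can be sketched in a few lines of Python. This is a minimal illustration using the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN), mAP as the mean of per-class AP), not the evaluation code used in the study. The function names and the sample AP values are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def mean_average_precision(ap_per_class):
    """Average the per-class AP values (a dict mapping class name to AP)."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class.values()) / len(ap_per_class)


def frames_per_second(num_frames, elapsed_seconds):
    """Throughput of the detector over a timed run."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return num_frames / elapsed_seconds


if __name__ == "__main__":
    # Hypothetical counts and AP values, for illustration only.
    print(precision_recall(tp=80, fp=20, fn=20))
    print(mean_average_precision({"car": 0.9, "person": 0.8, "stone": 0.7, "animal": 0.8}))
    print(frames_per_second(300, 2.0))
```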

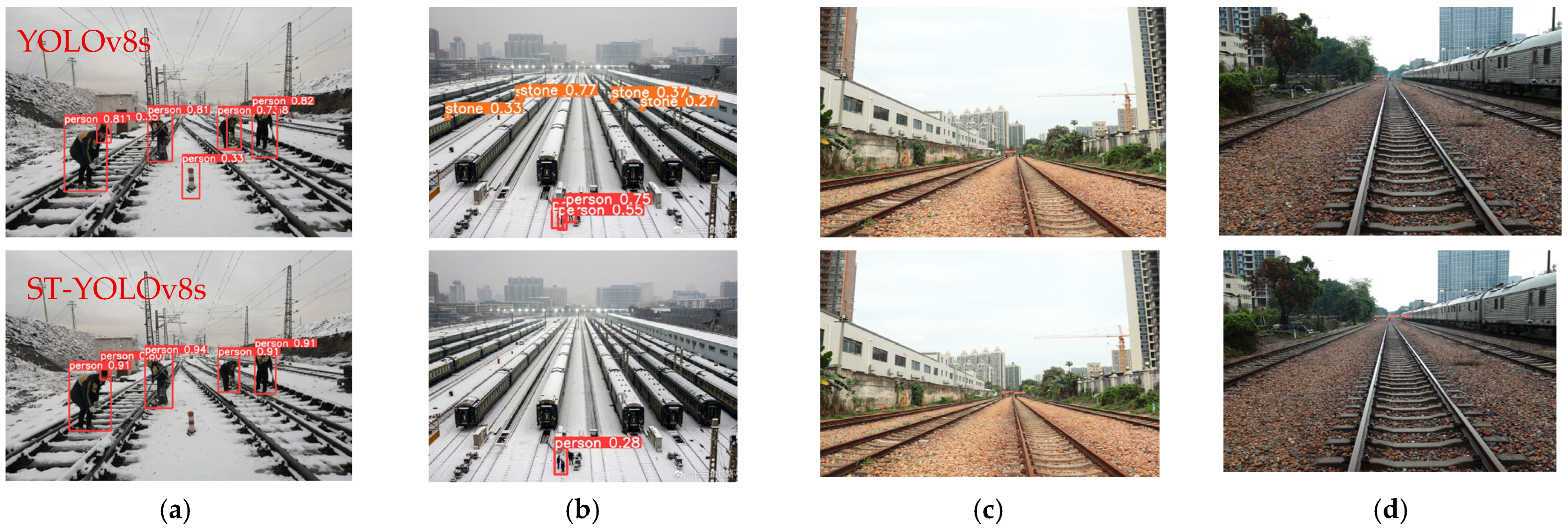

3.2.2. Experimental Analysis of Foreign Object Detection

3.3. Quantitative Risk Assessment Experiment for Foreign Object Intrusion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Stage | Detail Branch layer | k | c | s | r | Semantic Branch layer | k | c | e | s | r | Output Dimensions |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Input | | | | | | | | | | | | 512 × 1024 |
| S1 | Conv2d | 3 | 64 | 2 | 1 | Stem | 3 | 16 | - | 4 | 1 | 256 × 512 |
| | Conv2d | 3 | 64 | 1 | 1 | | | | | | | 256 × 512 |
| S2 | Conv2d | 3 | 64 | 2 | 1 | | | | | | | 128 × 256 |
| | Conv2d | 3 | 64 | 1 | 2 | | | | | | | 128 × 256 |
| S3 | Conv2d | 3 | 128 | 2 | 1 | GE | 3 | 32 | 6 | 2 | 1 | 64 × 128 |
| | Conv2d | 3 | 128 | 1 | 2 | GE | 3 | 32 | 6 | 1 | 1 | 64 × 128 |
| S4 | | | | | | GE | 3 | 64 | 6 | 2 | 1 | 32 × 64 |
| | | | | | | GE | 3 | 64 | 6 | 1 | 1 | 32 × 64 |
| S5 | | | | | | GE | 3 | 128 | 6 | 2 | 1 | 16 × 32 |
| | | | | | | GE | 3 | 128 | 6 | 1 | 3 | 16 × 32 |
| S6 | | | | | | CE | 3 | 128 | - | 1 | 1 | 16 × 32 |

| Zone Type | Lateral Range (mm) | Risk Base Value |
|---|---|---|
| High-risk area | 0 ≤ dx ≤ 717.5 | 1.0 |
| Medium-risk area | 717.5 ≤ dx ≤ 3157.5 | 0.7 |
| Safe area | dx ≥ 3157.5 | 0 |
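The zone table above amounts to a lookup on the lateral distance dx. A minimal Python sketch follows. The table lists 717.5 mm and 3157.5 mm in two rows each, so the sketch assumes a shared boundary value falls in the higher-risk zone. That tie-break, like the function name, is an assumption made for illustration and is not stated in the table.

```python
HIGH_RISK_LIMIT_MM = 717.5     # upper bound of the high-risk area, from the table
MEDIUM_RISK_LIMIT_MM = 3157.5  # upper bound of the medium-risk area, from the table


def zone_for_lateral_distance(dx_mm):
    """Map a lateral distance in millimetres to (zone_type, risk_base_value)."""
    if dx_mm < 0:
        raise ValueError("lateral distance must be non-negative")
    # Assumption: a value exactly on a boundary is assigned to the higher-risk zone.
    if dx_mm <= HIGH_RISK_LIMIT_MM:
        return "High-risk area", 1.0
    if dx_mm <= MEDIUM_RISK_LIMIT_MM:
        return "Medium-risk area", 0.7
    return "Safe area", 0.0


if __name__ == "__main__":
    for dx in (120.7, 717.5, 1280.0, 4000.0):
        print(dx, zone_for_lateral_distance(dx))
```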

| Category | Weight | Behavioral Characteristics |
|---|---|---|
| person | 1.1 | potential for sudden movement |
| car | 1.2 | bulky mass with high momentum |
| animal | 0.9 | erratic movement |
| stone | 0.7 | motionless |

| Risk Threshold Range | Risk Grade | Mitigation Measure |
|---|---|---|
| ≥1.2 | Level I: Extremely High Risk | Emergency Braking Application (EBA) |
| 0.8~1.2 | Level II: High Risk | Automatic Speed Constraint (ASC) |
| 0.5~0.8 | Level III: Elevated Risk | Multi-modal Warning and Brake Pre-activation (MWBP) |
| 0.2~0.5 | Level IV: Managed Risk | HUD Warning Alert |
| <0.2 | Level V: Controlled Risk | Non-intrusive Event Logging (NIEL) |
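The threshold table above can be expressed as a small grading function. The sketch below is illustrative only. Since the table's ranges share endpoints (0.8 and 0.5 each appear in two rows), it assumes a score equal to a threshold takes the more severe grade, which agrees with the explicit "≥1.2" and "<0.2" rows. The function name and the sample scores are hypothetical.

```python
# (lower bound, level, description, mitigation measure), ordered from most to least severe.
RISK_GRADES = [
    (1.2, "I", "Extremely High Risk", "Emergency Braking Application (EBA)"),
    (0.8, "II", "High Risk", "Automatic Speed Constraint (ASC)"),
    (0.5, "III", "Elevated Risk", "Multi-modal Warning and Brake Pre-activation (MWBP)"),
    (0.2, "IV", "Managed Risk", "HUD Warning Alert"),
]
LOWEST_GRADE = ("V", "Controlled Risk", "Non-intrusive Event Logging (NIEL)")


def risk_grade(score):
    """Return (level, description, mitigation) for a quantified risk score."""
    for lower_bound, level, description, mitigation in RISK_GRADES:
        # Assumption: a score exactly on a threshold takes the more severe grade.
        if score >= lower_bound:
            return level, description, mitigation
    return LOWEST_GRADE


if __name__ == "__main__":
    for score in (1.39, 0.95, 0.71, 0.3, 0.1):
        print(score, risk_grade(score))
```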

| Sub-Dataset Names | Car | Person | Stone | Animal | Total |
|---|---|---|---|---|---|
| Daylight Condition Dataset | 510 | 1270 | 390 | 612 | 2782 |
| Low-Light Condition Dataset | 310 | 750 | 260 | 170 | 1490 |
| Extreme Weather Application Dataset | 260 | 560 | 150 | 128 | 1098 |

| r | mAP/% | Params/10⁶ | FLOPs/10⁹ | FPS |
|---|---|---|---|---|
| Baseline Model | 81.6 | 13.2 | 31.5 | 118 |
| r = 1 | 84.2 | 14.7 | 29.8 | 112 |
| r = 2 | 83.9 | 14.7 | 29.8 | 107 |
| r = 3 | 83.5 | 14.7 | 29.8 | 104 |

| Model | mAP/% | Params/10⁶ | FLOPs/10⁹ | FPS |
|---|---|---|---|---|
| DarkNet-53 | 81.6 | 13.2 | 31.5 | 118 |
| ShuffleNetV2 | 81.1 | 7.9 | 23.1 | 206 |
| Proposed Method | 82.8 | 7.9 | 23.9 | 215 |

| Model | mAP/% | Params/10⁶ | FLOPs/10⁹ | FPS | P/% | R/% |
|---|---|---|---|---|---|---|
| YOLOv8s | 81.6 | 13.2 | 31.5 | 118 | 81.5 | 79.8 |
| YOLOv8s-S | 82.8 | 7.9 | 23.9 | 215 | 82.6 | 79.2 |
| YOLOv8s-T | 84.2 | 14.7 | 29.8 | 112 | 80.2 | 85.1 |
| ST-YOLOv8s | 84.9 | 9.6 | 22.7 | 154 | 82.1 | 81.2 |
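The trade-off in the rows above is easier to read as changes relative to the YOLOv8s row. The short Python sketch below does that arithmetic on the table's own figures. The dictionary keys and function names are hypothetical, and parameter and FLOP counts are taken in the table's units (10⁶ and 10⁹).

```python
# Figures copied from the YOLOv8s and ST-YOLOv8s rows of the table.
YOLOV8S = {"mAP": 81.6, "params_M": 13.2, "flops_G": 31.5, "fps": 118}
ST_YOLOV8S = {"mAP": 84.9, "params_M": 9.6, "flops_G": 22.7, "fps": 154}


def relative_change_percent(baseline, value):
    """Signed change of value relative to baseline, in percent."""
    return (value - baseline) / baseline * 100.0


def summarize(baseline, model):
    """Compare a model row against the baseline row, rounded to one decimal place."""
    return {
        "mAP_gain_points": round(model["mAP"] - baseline["mAP"], 1),
        "params_change_pct": round(
            relative_change_percent(baseline["params_M"], model["params_M"]), 1),
        "flops_change_pct": round(
            relative_change_percent(baseline["flops_G"], model["flops_G"]), 1),
        "fps_change_pct": round(
            relative_change_percent(baseline["fps"], model["fps"]), 1),
    }


if __name__ == "__main__":
    print(summarize(YOLOV8S, ST_YOLOV8S))
```

On these figures, ST-YOLOv8s gains 3.3 mAP points over YOLOv8s with about 27.3% fewer parameters, 27.9% fewer FLOPs, and roughly 30.5% higher FPS.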

| Model | mAP/% | Params/10⁶ | FLOPs/10⁹ | FPS |
|---|---|---|---|---|
| Faster R-CNN | 70.8 | 65.2 | 96.1 | 29 |
| SSD | 68.1 | 49.3 | 85.6 | 41 |
| YOLOv3 | 76.3 | 39.5 | 88.9 | 36 |
| YOLOv3-tiny | 53.6 | 8.4 | 19.5 | 148 |
| YOLOv4 | 58.5 | 31.6 | 71.7 | 52 |
| YOLOv4-tiny | 59.7 | 7.8 | 24.7 | 139 |
| YOLOv5s | 79.4 | 12.2 | 29.2 | 125 |
| YOLOv7 | 80.7 | 13.6 | 32.9 | 112 |
| YOLOv8s | 81.6 | 13.2 | 31.5 | 118 |
| YOLOv12s | 81.9 | 13.7 | 31.9 | 119 |
| YOLOv13s | 82.1 | 14.9 | 32.6 | 122 |
| ST-YOLOv8s | 84.9 | 9.6 | 22.7 | 154 |

| Figure | Object | Distance to Left (Right) Rail | | | | Risk Grade |
|---|---|---|---|---|---|---|
| Figure 16a | Person1 | 120.7 mm from the left rail | 0.48 | 1.49 | 0.95 | Level II: High Risk |
| Figure 16a | Person2 | 8.8 mm from the left rail | 0.71 | 1.49 | 1.39 | Level I: Extremely High Risk |
| Figure 16a | Person3 | 153 mm from the right rail | 0.47 | 1.49 | 0.92 | Level II: High Risk |
| Figure 16b | Person1 | 189 mm from the right rail | 0.78 | 0.94 | 0.98 | Level II: High Risk |
| Figure 16b | Person2 | 241 mm from the right rail | 0.45 | 0.94 | 0.71 | Level III: Elevated Risk |
| Figure 16c | Person1 | 332 mm from the left rail | 0.84 | 0.87 | 0.97 | Level II: High Risk |
| Figure 16d | Person1 | 1280 mm from the left rail | 0.76 | 0.82 | 0.83 | Level II: High Risk |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ning, S.; Ding, F.; Chen, B.; Huang, Y. Railway Intrusion Risk Quantification with Track Semantic Segmentation and Spatiotemporal Features. Sensors 2025, 25, 5266. https://doi.org/10.3390/s25175266
